Benefits of Learning Rate Annealing for

Tuning-Robustness in Stochastic Optimization

March 12, 2025

Abstract

The learning rate in stochastic gradient methods is a critical hyperparameter that is notoriously costly to tune via standard grid search, especially for training modern large-scale models with billions of parameters. We identify a theoretical advantage of learning rate annealing schemes that decay the learning rate to zero at a polynomial rate, such as the widely-used cosine schedule, by demonstrating their increased robustness to initial parameter misspecification due to a coarse grid search. We present an analysis in a stochastic convex optimization setup demonstrating that the convergence rate of stochastic gradient descent with annealed schedules depends sublinearly on the multiplicative misspecification factor \(\rho\) (i.e., the grid resolution), achieving a rate of \(O(\rho^{1/(2p+1)}/\sqrt{T})\) where \(p\) is the degree of polynomial decay and \(T\) is the number of steps, in contrast to the \(O(\rho/\sqrt{T})\) rate that arises with fixed stepsizes and exhibits a linear dependence on \(\rho\). Experiments confirm the increased robustness compared to tuning with a fixed stepsize, that has significant implications for the computational overhead of hyperparameter search in practical training scenarios.

1 Introduction↩︎

Stochastic Gradient Descent [1] is a cornerstone of modern machine learning. Starting at a point \(x_1\), the update step of SGD takes the form \(x_{t+1}=x_t-\eta_t g_t\), where \(\eta_t\) is the stepsize at step \(t\) and \(g_t\) is a stochastic gradient at \(x_t\). An effective stepsize sequence \(\eta_1,\eta_2,\ldots\) is critical for performance, yet it is notoriously hard to tune in many scenarios and applications [2], [3]. Furthermore, as models continue to scale, the computational burden of stepsize tuning becomes increasingly demanding.

A common approach to tuning the stepsize sequence is simply using a fixed stepsize, selecting the best fixed value by performing a geometric grid search [4]. In this method, the stepsize is selected based on its performance on a validation set, with the grid resolution determining the (multiplicative) proximity to the best stepsize within the specified range.

A primary approach to moving beyond fixed stepsize sequences is stepsize scheduling. In stepsize scheduling [5]–[7], the step at time \(t\) is determined by multiplying a baseline stepsize parameter with a parametric sequence. While the approach enables more versatile stepsize sequences and often leads to improved performance, it still requires tuning the baseline stepsize parameter, typically through grid search. Some stepsize schedules also exhibit theoretical benefits, such as anytime convergence guarantees and better last-iterate guarantees [8]–[11].

While stepsize tuning is a widely adopted practice, its theoretical foundations remain under-explored. One key question is how sensitive this procedure is to the grid resolution. Limited computational budgets restrict the resolution of grid searches, an issue that has become increasingly prominent with the emergence of modern models consisting of billions of parameters that take days—sometimes weeks—to train. In fact, at massive scales, it is often the case that any methodological tuning of the stepsize is prohibitive and therefore abandoned entirely.

Standard analyses of fixed stepsize SGD in the convex setting demonstrate a linear degradation in convergence rate as a function of the multiplicative misspecification of the stepsize, which can be significant when performing a coarse—or even absent—grid search. This work investigates to what extent stepsize schedules can mitigate this dependency, providing more robust performance at lower grid resolutions.

Focusing our analysis on stochastic convex optimization, we establish convergence guarantees for SGD with stepsize schedules that decay polynomially to zero, which reveals a key advantage of automatically adapting to multiplicative overestimation of the stepsize. For commonly used schedules, such as cosine annealing, our guarantees yield a sublinear dependence on the misspecification factor, in contrast to the linear dependence that arises with fixed stepsizes. We further validate our theoretical findings through experiments on synthetic and real data, demonstrating improved robustness to stepsize tuning using decaying schedules compared to tuning a constant stepsize using a grid-search.

1.1 Summary of Contributions↩︎

In more detail, we consider stochastic first-order convex optimization settings, where we aim to minimize a convex objective \(f : \domain \to \reals\), where \(\domain \subset \reals^d\) is a convex set with diameter \(\diam\), while accessing \(f\) only through a (sub-)gradient oracle \(g\) (i.e., \(\E[g(x)] \in \partial f(x)\) for all \(x \in \domain\)). Given an initial stepsize \(\eta > 0\), a schedule is specified by a function \(h : [0,1] \to [0,1]\) through \(\eta_t = \eta h(\frac{t-1}{T})\), where \(T\) is the total number of SGD update steps.

Our main results are the following:

Our first main result in the convex (non-smooth) case, where we assume that the second moment of the oracle is bounded, is a convergence guarantee of the last iterate of \(T\)-steps SGD using a decaying schedule \(h\) (which satisfies some mild assumptions), of the form \[\begin{align} O\brk*{\ratenonsmoothopt} \cdot \! \inf_{\usuf \in [0,1)} \brk[c]*{ \frac{1}{\rho \hsuf(\usuf)} + \rho \intsuf(\usuf) } , \end{align}\] where \(\ratenonsmoothopt\) is the convergence rate using a tuned stepsize, \(\hsuf\) and \(\intsuf\) are certain functions that depend on the schedule \(h\), and \(\rho=\ifrac{\eta}{\etastar} \geq 1\) is the multiplicative overestimation factor of \(\eta\) compared to the tuned stepsize \(\etastar\). The infimum above is at most \(O(\rho)\), but as we discuss below, may become sublinear in \(\rho\) depending on the particular schedule \(h\).

Our second main result deals with the convex smooth case, where we assume that \(f\) is \(\sm\)-smooth and that the oracle has a bounded variance. We obtain a similar convergence guarantee of \[\begin{align} O\brk*{\ratesmoothopt} \cdot \inf_{\usuf \in [\usuf_0,1)} \brk[c]*{ \frac{1}{\rho \hsuf(\usuf)} + \rho \intsuf(\usuf)} , \end{align}\] where \(\usuf_0\) is the fraction of steps with \(\eta_t > 1/2\sm\), \(\ratesmoothopt\) is the convergence rate with a tuned stepsize, and \(\rho \geq 1\) is the multiplicative overestimation factor compared to the tuned stepsize. The dependence on \(\usuf_0\) is unavoidable (up to constants), as convergence in smooth optimization requires step size smaller than \(\ifrac{2}{\sm}\). For sufficiently small \(\usuf_0\), the infimum is again \(O(\rho)\) in the worst case.

Applying our main result to the cosine annealing schedule in the convex Lipschitz case, we obtain that the last-iterate convergence rate of SGD is \(O(\ifrac{\rho^{0.2} \diam \lip}{\sqrt{T}})\). Similarly, applying the same result to the polynomially decaying schedule \((1-\frac{t-1}{T})^p\) for some constant degree \(p \geq 1\), we obtain that the last-iterate convergence rate of SGD is \(O(\ifrac{\rho^{1/(2p+1)} \diam \lip}{\sqrt{T}})\). In the convex smooth case, assuming \(\eta_1=\eta h(0) \leq 1/2\sm\), we obtain the same multiplicative sub-optimality of \(\rho^{0.2}\) and \(\rho^{1/(2p+1)}\) for cosine annealing and (degree \(p\)-)polynomially decaying schedules.

Additionally, we validate the robustness of various learning rate schedules to tuning in experiments, by performing grid search on two tasks: a synthetic logistic regression task with a linear model and the CIFAR-10 classification task with a deep neural network. We find that, when using a coarse grid, annealing schemes—specifically cosine annealing and linear decay—demonstrate greater robustness compared to a fixed step size schedule.

Our theoretical results show that polynomially decaying schedules, including cosine annealing, achieve convergence rates with a sublinear dependence on the misspecification factor, in contrast to the linear dependence observed in SGD with a fixed stepsize (which we demonstrate in detail in 9). This distinction is particularly striking since, while both fixed and annealed stepsizes are able to attain the optimal convergence rate when properly tuned, the latter exhibits significantly greater robustness to parameter misspecifications. When tuning the stepsize using a coarse grid search under a limited computational budget, this difference in robustness can significantly impact performance, as also seen in our synthetic and real-data experiments.

1.2 Additional Related Work↩︎

1.2.0.1 Adaptive and parameter-free methods.

Beyond learning rate scheduling, several approaches have been developed to minimize the need for extensive tuning in first-order optimization. These include adaptive methods, such as AdaGrad and Adam [12], [13], as well as recent theoretical advancements [14]–[21], which utilize gradient statistics to dynamically adjust learning rates. Additionally, parameter-free methods [22]–[28] primarily focus on automatically adapting to the problem’s complexity, such as the distance to the optimal solution. Recently, several parameter-free approaches demonstrated impressive practical performance, narrowing the gap to finely-tuned methods [29]–[31]. While these approaches take different paths to reduce tuning, adaptive methods and scheduling schemes are often used together in practice.

1.2.0.2 Theoretical analyses of stepsize annealing.

Several studies have analyzed different stepsize schedules. The influential work of [8] showed that the schedules \(\eta_t = \eta/t\) and \(\eta_t = \eta/\sqrt{t}\) yield suboptimal last-iterate guarantees and proposed a new schedule with optimal last-iterate performance. Later, [11] demonstrated that a linear decay schedule also achieves an optimal last-iterate guarantee. Additionally, [32] introduced "schedule-free" SGD, which eliminates the need to know the training length \(T\) in advance. While these works focus on optimality with well-tuned stepsizes and last-iterate guarantees, our work examines the robustness of these schedules when the step size is not finely tuned. Additionally, new scheduling schemes continue to emerge, such as those proposed by [33] and [34], which incorporate a cooldown phase to accommodate varying training durations. The robustness perspective we propose helps us better understand the benefits of different schedules and guides the design of more robust ones.

2 Preliminaries↩︎

2.1 Problem Setup↩︎

In this work, we are interested in first-order stochastic optimization over a bounded domain within the \(d\)-dimensional Euclidean space, \(\mathbb{R}^d\), equipped with the Euclidean norm, defined as \(\norm{\cdot} \eqdef \norm{\cdot}_2\). Let \(\domain \subset \reals^d\) be a convex set with diameter \(\diam\) (i.e., for all \(x,y \in \domain\), \(\norm{x-y} \leq \diam\)) and let \(f : \domain \to \reals\) be a convex function. Our goal is to find some \(\xout \in \domain\) such that \(f(\xout)-\min_{x \in \domain} f(x)\) is small, where we access \(f\) only through an unbiased sub-gradient oracle \(g : \domain \to \reals^d\) (i.e., \(\E[g(x)] \in \partial f(x)\) for all \(x \in \domain\), where we denote with a slight abuse of notation \(\nabla f(x) \eqdef \E[g(x)]\)). We consider two optimization scenarios:

Convex and Lipschitz setting. Here we assume \(g\) has a second moment bound, that is, for some \(\lip > 0\), \(\E\norm{g(x)}^2 \leq \lip^2\) for all \(x \in \domain\). This implies in particular that \(f\) is \(\lip\)-Lipschitz.

Convex and smooth setting. In this scenario we assume that \(f\) is \(\sm\)-smooth,3 and instead of a second moment bound we assume that \(g\) has a variance bound, that is, for some \(\sigma > 0\), \(\E[\norm{g(x)-\nabla f(x)}^2] \leq \sigma^2\) for all \(x \in \domain\).

2.1.0.1 Stochastic gradient descent.

We will analyze the (projected) Stochastic Gradient Descent (SGD) algorithm, which starts at some \(x_1 \in \domain\) and performs update steps of the form \(x_{t+1} = \proj{x_t - \eta_t g_t}\), where \(\eta_t\) is the stepsize at step \(t\), \(g_t=g(x_t)\) is a stochastic sub-gradient at \(x_t\), and \(\proj{\cdot}\) is the Euclidean projection to \(\domain\). The output of \(T\)-steps SGD is typically some average of the iterates or the last iterate. The convergence rate guarantee of the average iterate of fixed stepsize SGD with tuned stepsize is \(O(\ifrac{\diam \lip}{\sqrt{T}})\) in the convex Lipschitz case and \(O(\ifrac{\sm \diam^2}{T} + \ifrac{\diam \sigma}{\sqrt{T}})\) in the convex smooth case [35].

2.1.0.2 Stepsize scheduling.

Our focus will be on stepsizes of the form \(\eta_t = \eta h\brk{\frac{t-1}{T}}\), for some \(\eta > 0\) and \(h : [0,1] \to [0,1]\), where \(T \in \naturals\) is the number of SGD steps that are performed. Common schedules include \(h(u)=1\) (fixed stepsize), \(h(u)=\frac{1}{2}+\frac{1}{2} \cos(\pi u)\) (cosine annealing), and \(h(u)=(1-u)^p\) for some \(p \geq 1\) (polynomial decay). In particular, we will assume that \(h(u)\) is monotonically non-increasing, and satisfy \(h(u)=0 \Leftrightarrow u=1\); we will call such a schedule annealed for brevity. We additionally assume for technical reasons that the annealed schedules we consider are differentiable and \(p\)-Lipschitz. Using an annealed schedule, SGD with a properly tuned step size yields the same rate as optimally tuned fixed stepsize SGD, up to constant factors (where we treat \(p\) as a constant). See 10 for additional details. Notable annealed schedules include cosine annealing and polynomial decay.

2.1.0.3 Robustness to stepsize misspecification.

Fixing an initialization \(x_1 \in \domain\) and a stepsize schedule \(h(\cdot)\), it remains to tune the base stepsize \(\eta\). Considering a tuned stepsize \(\etastar\),4 we investigate the sensitivity of SGD when the stepsize is only tuned to a multiplicative misspecification factor \(\rho \geq 1\) (i.e., stepsize \(\eta = \rho \etastar\), where \(\rho\) is of course unknown to the algorithm). In this case, the convergence rate will likely degrade as \(\rho\) increases. For instance, the standard guarantee of fixed stepsize SGD degrades linearly in \(\rho\); we demonstrate this fact in the convex Lipschitz setting in 9.

Our main inquiry is to what extent stepsize schedules can mitigate this degradation, enabling more robust performance when the stepsize is crudely tuned (e.g., when tuned using a coarse grid search), and achieving convergence rates with sublinear dependence on \(\rho\), for \(\rho \geq 1\).

2.2 Convergence Analysis with Stepsize Schedules↩︎

Here we present convergence guarantees for SGD using an annealed schedule. The tuned stepsizes and respective convergence rates will serve as the baseline for establishing a sublinear dependence on the misspecification parameter. For their proofs, see 10.

Let \(h\) be a differentiable \(p\)-Lipschitz annealed schedule \(h\). We define the following two functions associated with \(h\): \[\begin{align} \label{eq:hsuf-intsuf} \hsuf(v) &\eqdef \int_u^1 h(u) du \qquad \text{ and } \qquad \intsuf(v) \eqdef \int_{v}^{1} \frac{\hsuf'(u)^2}{\hsuf(u)} du . \end{align}\tag{1}\] Throughout, convergence bounds will be expressed in terms of \(\hsuf\) and \(\intsuf\). We begin with the convex Lipschitz case.

lemmaAnnealedConvex Let \(\domain \subset \reals^d\) be a convex set with diameter \(\diam > 0\), \(f : \domain \to \reals\) a convex function, \(x^\star \in \argmin_{x \in \domain} f(x)\), and \(g : \domain \to \reals^d\) an unbiased first-order oracle of \(f\) with second-moment bounded by \(\lip^2 > 0\). Let \(x_1,x_2,\ldots,x_{T+1}\) be the iterates produced by \(T\)-steps SGD with stepsizes \(\eta_t=\eta h\brk{\frac{t-1}{T}}\) using the oracle \(g\), where \(h\) is a differentiable \(p\)-Lipschitz annealed schedule. Then it holds that \[\begin{align} \E[f(x_{T+1}) - f(x^\star)] &\leq \frac{\diam^2}{2 \eta T \hsuf(0)} + 2\eta \lip^2 \intsuf(0) + \frac{8 p \eta \lip^2}{T} . \end{align}\]

We denote the tuned stepsize and respective convergence guarantee (up to lower-order terms) by \[\begin{align} \etastar &\eqdef \frac{\diam}{2 \lip \sqrt{T \hsuf(0)\intsuf(0)}} \label{eq:rate-nonsmooth-opt} \qquad \text{and} \qquad \ratenonsmoothopt \eqdef\frac{2 \diam \lip}{\sqrt{T}} \sqrt{\intsuf(0) / \hsuf(0)} . \end{align}\tag{2}\]

We proceed to the convergence guarantee in the convex smooth case.

lemmaAnnealedSmooth Let \(\domain \subset \reals^d\) be a convex set with diameter \(\diam > 0\), \(f : \domain \to \reals\) a \(\sm\)-smooth convex function, \(x^\star \in \argmin_{x \in \domain} f(x)\), and \(g : \domain \to \reals^d\) an unbiased first-order oracle of \(f\) with variance bounded by \(\sigma^2 \geq 0\). Let \(x_1,x_2,\ldots,x_{T+1}\) be the iterates produced by \(T\)-steps SGD with stepsizes \(\eta_t=\eta h\brk{\frac{t-1}{T}}\) using the oracle \(g\), where \(h\) is a differentiable \(p\)-Lipschitz annealed schedule and \(\eta h(0) \leq \frac{1}{2\sm}\). Then it holds that \[\begin{align} \E[f(x_{T+1}) - f(x^\star)] &\leq \frac{\diam^2}{2 \eta T \hsuf(0)} + \eta \sigma^2 \intsuf(0) + \frac{4 p \eta \sigma^2}{T} . \end{align}\]

Similarly, we denote the tuned stepsize over \(\eta \in (0,\frac{1}{2\sm h(0)}]\) as \[\begin{align} \label{eq:etastarsm} \etastarsm &\eqdef \min\set*{\frac{1}{2\sm h(0)},\frac{\diam}{ \sigma \sqrt{2 T \hsuf(0)\intsuf(0)}}}, \end{align}\tag{3}\] and the respective convergence guarantee (up to lower-order terms) as \[\begin{align} \ratesmoothopt &\eqdef \frac{\diam^2}{2 \etastarsm T \hsuf(0)} + \etastarsm \sigma^2 \intsuf(0) \leq \frac{\sm \diam^2 h(0)}{T \hsuf(0)} + \frac{\diam \sigma}{\sqrt{T}} \sqrt{2 \intsuf(0) / \hsuf(0)} . \label{eq:smooth-opt} \end{align}\tag{4}\]

As we previously mentioned, under the mild assumption that \(p=\Theta(1)\) (the Lipschitz parameter of \(h\)), the guarantees match the rates of optimally tuned fixed stepsize SGD (see 10 for details). In both cases, a multiplicative overestimation of the optimal stepsize degrades the guarantee linearly (in the convex smooth case, for a large enough overestimation, \(\eta_1 > \frac{1}{2\sm}\) and the guarantee does not even hold).

3 Convex and Lipschitz Setting↩︎

This section considers a convex objective where the second moment of the sub-gradient oracle is bounded. The main result of this section is a convergence guarantee that mitigates the imbalance caused by overestimation by automatically adapting to the tails of \(\hsuf(v)\) and \(\intsuf(v)\). The key observation in obtaining this result is that any suffix of iterates \(x_\tsuf,\ldots,x_{T+1}\) can be viewed as a \((T-\tsuf+1)\)-steps SGD starting at \(x_\tsuf\), effectively ignoring the large stepsizes prior to step \(\tsuf\) that would otherwise degrade the convergence bound.

Next, we present the general guarantee, followed by corollaries for specific schedules.

Theorem 1. Let \(\domain \subset \reals^d\) be a convex set with diameter \(\diam > 0\), \(f : \domain \to \reals\) a convex function, \(x^\star \in \argmin_{x \in \domain} f(x)\), and \(g : \domain \to \reals^d\) an unbiased first-order oracle of \(f\) with second-moment bounded by \(\lip^2 > 0\). For any \(\rho \geq 1\), let \(x_1,x_2,\ldots,x_{T+1}\) be the iterates produced by \(T\)-steps SGD with stepsizes \(\eta_t=\eta h\brk{\frac{t-1}{T}}\) using the oracle \(g\), where \(\eta = \rho \cdot \etastar\) and \(h\) is a differentiable \(p\)-Lipschitz annealed schedule. Then it holds that \[\begin{align} \label{eq:main-non-smooth} \E[f(x_{T+1}) - f(x^\star)] \leq \frac{1}{2} \ratenonsmoothopt \cdot \inf_{\usuf \in [0,1)} \brk*{\frac{\hsuf(0)}{\rho \hsuf(\usuf)} + \frac{\rho \intsuf(\usuf)}{\intsuf(0)}} + O\brk*{\frac{p \rho \etastar \lip^2}{T}} , \end{align}\qquad{(1)}\] where \(\hsuf\), \(\intsuf\), \(\etastar\), and \(\ratenonsmoothopt\) are given in 1 2 . In particular, the optimal \(\usuf\) satisfies \(\hsuf(\usuf)\hsuf'(\usuf)=\ifrac{-\hsuf(0) \intsuf(0)}{\rho^2}\) (or \(\usuf=0\) if there is no solution).

First, note that for \(\rho=1\), 1 recovers \(\ratenonsmoothopt\) up to low order terms, as the infimum is at most \(2\). Furthermore, as \(\rho \geq 1\) and both \(\hsuf(v)\) and \(\intsuf(v)\) are decreasing and equal \(0\) at \(v=1\), the infimum adapts to the imbalance of the \(\frac{1}{\rho}\) and \(\rho\) terms which are introduced by the overestimation.

We defer the proof of 1 to 3.2. Following are corollaries for polynomially decaying and cosine annealing schedules which provide concrete examples for the power of 1.

Corollary 1. In the setting of 1, assuming \(h(u)=(1-u)^p\) for some \(p \geq 1\), \[\begin{align} \E[f(x_{T+1}) - f(x^\star)] &= \ratenonsmoothopt \cdot \rho^{\frac{1}{2p+1}} + O\brk*{\frac{p \rho \etastar \lip^2}{T}} , \end{align}\] where \(\ratenonsmoothopt=\frac{p+1}{\sqrt{p}} \cdot \frac{2 \diam \lip}{\sqrt{T}}= O\brk*{\frac{\sqrt{p} \diam \lip}{\sqrt{T}}}\).

We observe that for \(p=\Theta(1)\), the optimal rate is the same as tuned SGD with fixed stepsize (up to constants), while the dependence on \(\rho \geq 1\) is sublinear, as we aimed to achieve. The dependence \(\rho^{\frac{1}{2p+1}}\) might lead to the idea that a larger \(p\) is always better, but as \(p\) increases the optimal rate degrades at a rate of \(O(\sqrt{p})\). In particular, using \(p=\Theta(\log \rho)\) the convergence rate will be \(O\brk{\ifrac{\diam \lip \sqrt{\log \rho}}{\sqrt{T}}}\), and increasing beyond this point will not improve the final rate.

Proof of 1. First note that \(h(u)=(1-u)^p\) is non-increasing, differentiable, \(p\)-Lipschitz (since \(\abs{h'(u)}=p(1-u)^{p-1} \leq p\)) and satisfy \(h(u)=0 \Leftrightarrow u=1\). Hence, \(h\) is annealed and we can use 1. A simple integration yields that \(\hsuf(\usuf)=\frac{1}{p+1}(1-\usuf)^{p+1}\), and \(\hsuf'(\usuf)=-(1-\usuf)^{p}\). Thus, \[\begin{align} \intsuf(\usuf) &= \int_{\tau}^{1} \frac{\hsuf'(u)^2}{\hsuf(u)} du = \frac{p+1}{p} (1-\usuf)^p. \end{align}\] We proceed to solve the optimality equation of 1, \(\hsuf(\usufb)\hsuf'(\usufb)=\frac{-\hsuf(0)\intsuf(0)}{\rho^2}\): \[\begin{align} \frac{-(1-\usufb)^{2p+1}}{p+1} &= \frac{-1}{p\rho^2} \implies \usufb = 1-\brk*{\frac{p+1}{p\rho^2}}^{\frac{1}{2p+1}}. \end{align}\] While this value is optimal, it may be negative for small \(\rho\), so we select a slightly sub-optimal value of \(\usufb=1-\rho^{\frac{-2}{2p+1}} \in [0,1)\) which is always valid. Using this value, \[\begin{align} \label{eq:p-infimum} \frac{\hsuf(0)}{\rho \hsuf(\usufb)} + \frac{\rho \intsuf(\usufb)}{\intsuf(0)} = \frac{(1-\usufb)^{-(p+1)}}{\rho} + \rho (1-\usufb)^p & = \frac{\rho^{\frac{2(p+1)}{2p+1}}}{\rho} + \rho \rho^{\frac{-2p}{2p+1}} = 2\rho^{\frac{1}{2p+1}} . \end{align}\tag{5}\] Hence, using this value to bound the infimum of ?? , \[\begin{align} \E[f(x_{T+1}) - f(x^\star)] &\leq \ratenonsmoothopt \cdot \rho^{\frac{1}{2p+1}} + O\brk*{\frac{p \rho \etastar \lip^2}{T}} . \end{align}\] We conclude by plugging the values of \(\hsuf(0)\) and \(\intsuf(0)\) to 2 (and using \(p \geq 1\)), \[\begin{align} \ratenonsmoothopt &= \frac{2 \diam \lip}{\sqrt{T}} \sqrt{\frac{(p+1)^2}{p}} = O\brk*{\frac{\sqrt{p} \diam \lip}{\sqrt{T}}} . \qedhere \end{align}\] ◻

We proceed to the cosine annealing guarantee. Given its similarities to 1, the proof is deferred to 6.

Corollary 2. In the setting of 1, assuming \(h(u)=\frac{1}{2}(1+\cos\brk{\pi u})\), \[\begin{align} \E[f(x_{T+1}) - f(x^\star)] &\leq \ratenonsmoothopt \cdot 18 \rho^{\frac{1}{5}} + O\brk*{\frac{\rho \etastar \lip^2}{T}} , \end{align}\] where \(\ratenonsmoothopt = \frac{2 \diam \lip}{\sqrt{T}} \sqrt{2 \intsuf(0)} \leq \frac{10 \diam \lip}{\sqrt{T}}\).

Again we observe a sublinear dependence on \(\rho\) with an optimal rate of \(O(\frac{\diam \lip}{\sqrt{T}})\). Note that this is the same behavior as in 1 with \(p=2\), which arises from the tail behavior of \(h(u)\). To see that, one can verify that \((1-u)^2 \leq h(u) \leq \frac{5}{2} (1-u)^2\) for all \(u \in [0,1]\).

3.1 Tighter Constants using Numerical Analysis↩︎

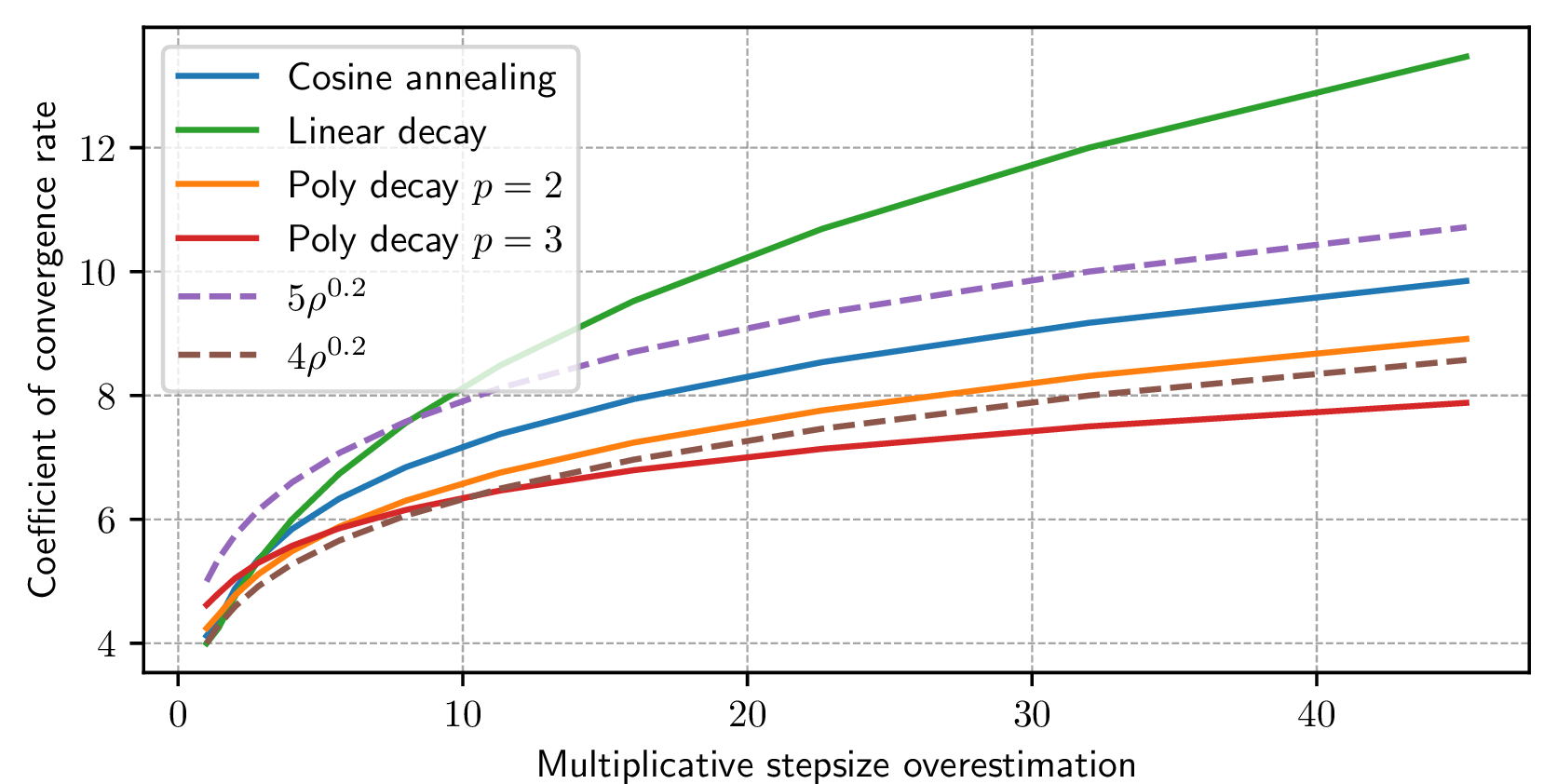

Figure 1: Numerically evaluating the coefficient of \(\ifrac{\diam \lip}{\sqrt{T}}\) for the convergence guarantee of 1 with different schedules and varying multiplicative misspecification parameter \(\rho\)..

The constants of 2 are not tight; in particular, the bound is established using crude (up to constants) bounds for \(\hsuf(u)\) and \(\intsuf(u)\). While a tighter bound can be obtained, the framework easily yields to numerical analysis as we demonstrate next.

The convergence guarantee of 1 is not posed as a closed-form equation but rather as a minimization over integrals that depend on the schedule. For a specific schedule and misspecification parameter, we use Scipy’s [36] quad integration to evaluate \(\hsuf,\intsuf\), and fsolve to solve the minimization of 1.

In 1 we provide a numerical analysis for the convergence guarantee of 1 with several decaying schedules, including the cosine annealing, showing in particular that the convergence rate of SGD with cosine annealing is bounded by \(5 \rho^{\frac{1}{5}} \frac{\diam \lip}{\sqrt{T}}\). We observe that the cosine annealing schedule and the quadratic decay schedule have similar convergence guarantees with a coefficient between \(4 \rho^{\frac{1}{5}}\) to \(5 \rho^{\frac{1}{5}}\). In addition, even for a somewhat large misspecification parameter of size \(50\), the difference between cosine annealing and the different polynomial decaying schedules is at most a factor of \(2\), which indicates that even mild decay might be sufficient if the grid is not too coarse.

3.2 Proof of 1↩︎

Before proving our main theorem, we first state a few lemmas we will require. The first is a last-iterate convergence guarantee, using the techniques of [9], [10] (proof appearing in 8).

lemmalastiterateconvex Let \(\domain \subseteq \reals^d\) be a convex set, \(f : \domain \to \reals\) a convex function, and \(g : \domain \to \reals^d\) an unbiased first-order oracle of \(f\) with second-moment bounded by \(\lip^2 > 0\). Let \(x_1,\ldots,x_{T+1}\) be the iterates produced by \(T\)-steps SGD with stepsizes \(\eta_1,\ldots,\eta_T\) using the oracle \(g\). Then for any \(\hat{x} \in \domain\), \[\begin{align} \E[f(x_{T+1}) - f(\hat{x})] &\leq \frac{\norm{x_1-\hat{x}}^2}{2 \sum_{s=1}^T \eta_s} + 2\lip^2 \sum_{t=1}^T \frac{\eta_t^2}{\sum_{s=t}^T \eta_s} . \end{align}\]

Next is a key lemma, translating the suffix of the last-iterate bound in [lem:last-iterate-convex] to one based on integrating the stepsize schedule (proof given later in the section).

Lemma 1. Let \(\tsuf \in [T]\), \(c_1,c_2,\eta > 0\), and \(\eta_t = \eta h\brk{\frac{t-1}{T}}\) for some differentiable \(p\)-Lipschitz annealed schedule \(h\). Then for any \(\usuf \in [\frac{\tsuf-1}{T},\frac{\tsuf}{T})\), \[\begin{align} \frac{c_1}{\sum_{s=\tsuf}^T \eta_s} + c_2 \sum_{t=\tsuf}^T \frac{\eta_t^2}{\sum_{s=t}^T \eta_s} & \leq \frac{c_1}{\eta T \hsuf(\usuf)} + c_2 \eta \int_{\usuf}^{1-\frac{1}{T}} \frac{h(u)^2}{\hsuf(u)} du + \frac{4 \eta c_2 p}{T} . \end{align}\]

We proceed to the proof of 1.

Proof of 1. Let \(\usuf \in [0,1)\) and let \(\tsuf = \floor{\usuf T} + 1 \in [T]\). Consider the suffix \(x_\tsuf,x_{\tsuf+1},\ldots,x_{T+1}\) as an SGD sequence starting at \(x_\tsuf\). By [lem:last-iterate-convex] with \(\hat{x}=x^\star\), \[\begin{align} \E[f(x_{T+1}) - f(x^\star)] &\leq \frac{\diam^2}{2 \sum_{s=\tsuf}^T \eta_s} + 2\lip^2 \sum_{t=\tsuf}^T \frac{\eta_t^2}{\sum_{s=t}^T \eta_s} . \end{align}\] As \(\usuf \in [\frac{\tsuf-1}{T},\frac{\tsuf}{T})\), by 1 with \(c_1 = \frac{\diam^2}{2}\) and \(c_2 = 2 \lip^2\), \[\begin{align} \E[f(x_{T+1}) - f(x^\star)] &\leq \frac{\diam^2}{2 \eta T \hsuf(\usuf)} + 2\eta \lip^2 \int_{\usuf}^{1} \frac{h(u)^2}{\hsuf(u)} du + \frac{8 p \eta \lip^2}{T} \\ &= \frac{1}{2} \ratenonsmoothopt \cdot \brk*{\frac{\hsuf(0)}{\rho \hsuf(\usuf)} + \frac{\rho \int_{\usuf}^{1} \frac{h(u)^2}{\hsuf(u)} du}{\int_{0}^{1} \frac{h(u)^2}{\hsuf(u)} du}} + \frac{8 p \rho \etastar \lip^2}{T} } \\&= \frac{1}{2} \ratenonsmoothopt \cdot \brk*{\frac{\hsuf(0)}{\rho \hsuf(\usuf)} + \frac{\rho \intsuf(\usuf)}{\intsuf(0)}} + \frac{8 p \rho \etastar \lip^2}{T} } . \end{align}\] This inequality holds for any \(\usuf \in [0,1)\), hence it holds for the infimum over all \(\usuf \in [0,1)\). It is left to find the \(\usuf\) which minimizes the bound. Let \[\begin{align} \label{eq:inf-func} g(v) = \frac{\hsuf(0)}{\rho \hsuf(v)} + \frac{\rho \intsuf(v)}{\intsuf(0)} . \end{align}\tag{6}\] By the fundamental theorem of calculus, \[\begin{align} \hsuf'(v) = \brk*{\int_0^1 h(u) du-\int_0^v h(u) du}' = -h(u) \end{align}\] and \[\begin{align} \intsuf'(v) &= \brk*{\int_{0}^{1} \frac{\hsuf'(u)^2}{\hsuf(u)} du-\!\int_{0}^{v} \frac{\hsuf'(u)^2}{\hsuf(u)} du}' = -\frac{\hsuf'(v)^2}{\hsuf(v)} . \end{align}\] Thus, \[\begin{align} g'(v) &= \frac{- \hsuf(0)\hsuf'(v)}{\rho \hsuf(v)^2} - \frac{\rho \frac{\hsuf'(v)^2}{\hsuf(v)}}{\intsuf(0)} = \frac{-\rho \hsuf'(v)}{\intsuf(0) \hsuf(v)^2} \brk*{\frac{\hsuf(0)\intsuf(0)}{\rho^2}+ \hsuf(v)\hsuf'(v)} \\& = \frac{\rho h(v)}{\intsuf(0) \hsuf(v)^2} \brk*{\hsuf(v)\hsuf'(v)+\frac{\hsuf(0)\intsuf(0)}{\rho^2}} . \end{align}\] Hence, when \(\usuf\) satisfy \(\hsuf(\usuf)\hsuf'(\usuf)=\frac{-\hsuf(0)\intsuf(0)}{\rho^2}\), \(g'(\usuf)=0\). For \(v > \usuf\), \[\begin{align} \hsuf(v)\hsuf'(v) &= -\hsuf(v) h(v) \geq -\hsuf(v) h(\usuf) > -\hsuf(\usuf) h(\usuf) = -\frac{\hsuf(0)\intsuf(0)}{\rho^2}, \end{align}\] so \(g'(v) > 0\) and \(g(v) > g(\usuf)\). Similarly, for \(v < \usuf\), \(g'(v) < 0\) and \(g(v) > g(\usuf)\). Hence, \(\usuf\) satisfying \(\hsuf(\usuf)\hsuf'(\usuf)=\frac{-\hsuf(0)\intsuf(0)}{\rho^2}\) is the minimizer. If no such \(\usuf\) exists, the derivative is always positive (as \(h\) is continuous and \(\hsuf(1)\hsuf'(1)=0\)), and the minimizer is at \(\usuf=0\). ◻

3.3 Proof of 1↩︎

. Let \(\usuf \in [\frac{k-1}{T},\frac{k}{T})\). As \(h\) is non-increasing, we can use integration to obtain the following bound, \[\begin{align} \frac{c_1}{\sum_{t=\tsuf}^T \eta_t} + c_2 \sum_{t=\tsuf}^T \frac{\eta_t^2}{\sum_{s=t}^T \eta_s} &\leq \frac{c_1}{\eta \int_{\tsuf}^{T+1} h\brk*{\frac{t-1}{T}} dt} + \frac{c_2}{\eta} \sum_{t=\tsuf}^T \frac{\eta_t^2}{\int_{t}^{T+1} h\brk*{\frac{s-1}{T}} ds} \\& = \frac{c_1}{\eta T \int_{\frac{\tsuf-1}{T}}^1 h\brk*{u} du} + \frac{c_2}{\eta T} \sum_{t=\tsuf}^T \frac{\eta_t^2}{\int_{\frac{t-1}{T}}^1 h\brk*{u} du}\\& = \frac{c_1}{\eta T \hsuf\brk*{\frac{\tsuf-1}{T}}} + \frac{c_2}{\eta T} \sum_{t=\tsuf}^T \frac{\eta_t^2}{\hsuf\brk*{\frac{t-1}{T}}} } . \end{align}\] Again bounding by integration and changing variables, \[\begin{align} \frac{c_2}{\eta T} \!\sum_{t=\tsuf}^T \frac{\eta_t^2}{\hsuf\brk*{\frac{t-1}{T}}} & \leq \frac{c_2 \eta}{T} \brk*{\frac{h\brk*{\frac{\tsuf-1}{T}}^2}{\hsuf(\frac{\tsuf-1}{T})} \!+\! \int_{\tsuf}^{T} \frac{h\brk*{\frac{t-1}{T}}^2}{\hsuf\brk*{\frac{t-1}{T}}} dt} = \frac{c_2 \eta}{T} \brk*{\frac{h\brk*{\frac{\tsuf-1}{T}}^2}{\hsuf(\frac{\tsuf-1}{T})} + T\! \int_{\frac{\tsuf-1}{T}}^{1-\frac{1}{T}} \frac{h\brk*{u}^2}{\hsuf\brk*{u}} du} . \end{align}\] As \(h(u)\) is differentiable, \(p\)-Lipschitz, and \(h(1)=0\), for any \(v \in [0,1)\), \[\begin{align} \label{eq:h-lipschitz} 2 p \hsuf(v) &= 2 p \int_v^1 h(u) du \geq 2 \int_v^1 h(u) (-h(u))' du = h(v)^2-h(1)^2=h(v)^2. \end{align}\tag{7}\] Hence, \(\frac{h\brk*{\frac{\tsuf-1}{T}}^2}{\hsuf(\frac{\tsuf-1}{T})} \leq 2p\) and since \(\abs{\usuf-\frac{k-1}{T}} \leq \frac{1}{T}\), \[\begin{align} \int_{\frac{k-1}{T}}^{1-\frac{1}{T}} \frac{h\brk*{u}^2}{\hsuf\brk*{u}} du & = \int_{\usuf}^{1-\frac{1}{T}} \frac{h\brk*{u}^2}{\hsuf\brk*{u}} du + \int_{\frac{k-1}{T}}^{\usuf} \frac{h\brk*{u}^2}{\hsuf\brk*{u}} du \leq \int_{\usuf}^{1-\frac{1}{T}} \frac{h\brk*{u}^2}{\hsuf\brk*{u}} du + \abs*{\int_{\frac{k-1}{T}}^{\usuf} 2p du} \\& \leq \int_{\usuf}^{1-\frac{1}{T}} \frac{h\brk*{u}^2}{\hsuf\brk*{u}} du + \frac{2p}{T} . \end{align}\] Plugging back, \[\begin{align} \frac{c_1}{\sum_{t=\tsuf}^T \eta_t} + c_2 \sum_{t=\tsuf}^T \frac{\eta_t^2}{\sum_{s=t}^T \eta_s} &\leq \frac{c_1}{\eta T \hsuf\brk*{\usuf}} + \eta c_2 \int_{\usuf}^{1-\frac{1}{T}} \frac{h\brk*{u}^2}{\hsuf\brk*{u}} du + \frac{4 \eta c_2 p}{T} . \qedhere \end{align}\] ◻

4 Convex and Smooth Setting↩︎

In the following section, we extend our robustness result to the convex smooth setting, in which we replace the second-moment gradient oracle assumption with the assumptions that the gradient oracle has bounded variance and that \(f\) is \(\sm\)-smooth. The core technique is the same as in 3, with some additional considerations due to the requirement in standard smooth analysis that the stepsizes satisfy \(\eta_1,\ldots,\eta_T \leq \frac{c}{\sm}\) for some constant \(c < 2\).

Next is the main result of this section, a convergence guarantee robust to a multiplicative misspecification of the stepsize.

Theorem 2. Let \(\domain \subset \reals^d\) be a convex set with diameter \(\diam > 0\), \(f : \domain \to \reals\) a \(\sm\)-smooth convex function, \(x^\star \in \argmin_{x \in \domain} f(x)\), and \(g : \domain \to \reals^d\) an unbiased first-order oracle of \(f\) with variance bounded by \(\sigma^2 \geq 0\). For any \(\rho \geq 1\), let \(x_1,x_2,\ldots,x_{T+1}\) be the iterates produced by \(T\)-steps SGD with stepsizes \(\eta_t=\eta h\brk{\frac{t-1}{T}}\) using the oracle \(g\), where \(\eta=\rho \cdot \etastarsm\) and \(h\) is a differentiable \(p\)-Lipschitz annealed schedule. Denote \(\usuf_0 \eqdef \min\set{\usuf \in [0,1) : \eta h\brk{\frac{\floor{\usuf T}}{T}} \leq \frac{1}{2\sm}}\). Then it holds that \[\begin{align} \label{eq:main-smooth-new} \E[f(x_{T+1}) - f(x^\star)] &\leq \ratesmoothopt \cdot \inf_{\usuf \in [\usuf_0,1)} \brk*{\frac{\hsuf(0)}{\rho \hsuf(\usuf)} + \frac{\rho \intsuf(\usuf)}{\intsuf(0)}} + O \brk*{\frac{p \rho \etastarsm \sigma^2}{T}}, \end{align}\qquad{(2)}\] where \(\hsuf\), \(\intsuf\), \(\etastarsm\), and \(\ratesmoothopt\) are given in 1 3 4 . In particular, the optimal \(\usuf\) satisfies \(\hsuf(\usuf)\hsuf'(\usuf)=\ifrac{-\hsuf(0)\intsuf(0)}{\rho^2}\) (or \(\usuf=\usuf_0\) if there is no solution).

As in 1, in 2 we observe a similar adaptivity to \(\rho\) using the tails of \(\hsuf\) and \(\intsuf\). One small yet important difference is that the infimum is limited to the range \([\usuf_0,1)\), where \(\usuf_0\) denotes the fraction of iterations in which the stepsize exceeds \(\frac{1}{2\sm}\). This dependency is somewhat unavoidable (up to constants) as stepsizes larger or equal to \(\frac{2}{\sm}\) do not converge. Additionally, note that the above guarantee holds even if we specify a stepsize that is larger than \(\frac{2}{\sm}\), which is not the case with fixed stepsize SGD.

Next are corollaries of 2 with polynomial decay and cosine annealing schedules. Due to space constraints and similarities to the convex Lipschitz case, we defer the proofs of 2 and of the corollaries to 7.

Corollary 3. In the setting of 2, let \(h(u)=(1-u)^p\) for some \(p \geq 1\) and \(\rho \leq \frac{T}{2p}\). Then if \(\rho^2 \geq (1-\usuf_0)^{-(2p+1)}\), \[\begin{align} \E[f(x_{T+1}) - f(x^\star)] = \ratesmooth(\etastarsm) \cdot O\brk*{\rho^{\frac{1}{2p+1}} } , \end{align}\] and if \(\rho^2 < (1-\usuf_0)^{-(2p+1)}\), \[\begin{align} \E[f(x_{T+1}) - f(x^\star)] = \ratesmooth(\etastarsm) \cdot O\brk*{\frac{1}{1-\usuf_0}} . \end{align}\] In addition, \(\ratesmoothopt = O\brk*{\frac{p \sm \diam^2}{T } + \frac{\sqrt{p}\diam \sigma}{\sqrt{T}}}\).

Corollary 4. In the setting of 2, let \(h(u)=\frac{1}{2} (1+\cos(\pi u))\) and \(\rho \leq \frac{2 T}{\pi}\). Then if \(\rho^2 \geq (1-\usuf_0)^{-5}\), \[\begin{align} \E[f(x_{T+1}) - f(x^\star)] &= \ratesmoothopt \cdot O\brk*{\rho^{\frac{1}{5}} } , \end{align}\] and if \(\rho^2 < (1-\usuf_0)^{-5}\), \[\begin{align} \E[f(x_{T+1}) - f(x^\star)] &= \ratesmoothopt \cdot O\brk*{\frac{1}{1-\usuf_0}} . \end{align}\] In addition, \(\ratesmoothopt=O\brk*{\frac{\sm \diam^2}{T } + \frac{\diam \sigma}{\sqrt{T}}}\).

Observing 3 4, a similar improved dependence on \(\rho\) as in 1 2 holds when \(\usuf_0\) is sufficiently small. When \(\usuf_0\) is large, we obtain the expected inverse dependence on the fraction of steps with small enough stepsizes, which is unavoidable as we explained above.

5 Experimental Evaluation↩︎

Our theory predicts that learning rate annealing schemes exhibit greater robustness to learning rate tuning compared to tuning a fixed learning rate. To support the prediction, we perform experiments to compare the performances of different scheduling strategies under varying grid search resolutions for learning rate tuning.

We conduct two types of experiments: the first involves a synthetic logistic regression task closely aligned with the theoretical setting, while the second involves training a neural network classifier.

5.1 Experimental setup↩︎

We consider common schedules, namely, fixed learning rate (as our baseline), in addition to the decaying cosine annealing, and linear decay schedules. To simulate varying grid resolutions, we train the models using a geometric grid of learning rates with a multiplicative factor of approximately \(\sqrt[3]{10} \approx 2.15\) (the values \(\{1,2.2,5\}\) multiplied by \(10^i\) with different \(i\)’s), and consider the different subsets with resolutions \(2.15,2.15^2,2.15^3\), etc. For example, with range \([0.01,5]\) and resolution of \(2.15^3\), we find the best model for each of the grids5 \(\{0.01,0.1,1\},\{0.022,0.22,2.2\},\{0.05,0.5,5\},\) and report the average test loss/top-1 error across grids.

5.1.0.1 Synthetic logistic regression.

In the synthetic experiment, we generate 100,000 samples of dimension 100, drawn from a normal distribution. Labels are assigned based on thresholding probabilities determined by a "true weights" vector of size 100, also sampled from a normal distribution. To introduce additional noise, we flip each label with a probability of 0.1. A test set of the same size is generated similarly. We train a linear classifier using binary cross-entropy loss, SGD without momentum, a batch size of 1,000, and a single epoch (updating the scheduler after each step). For the fixed learning rate scheduler, we report both the last iterate and the averaged iterate performances.

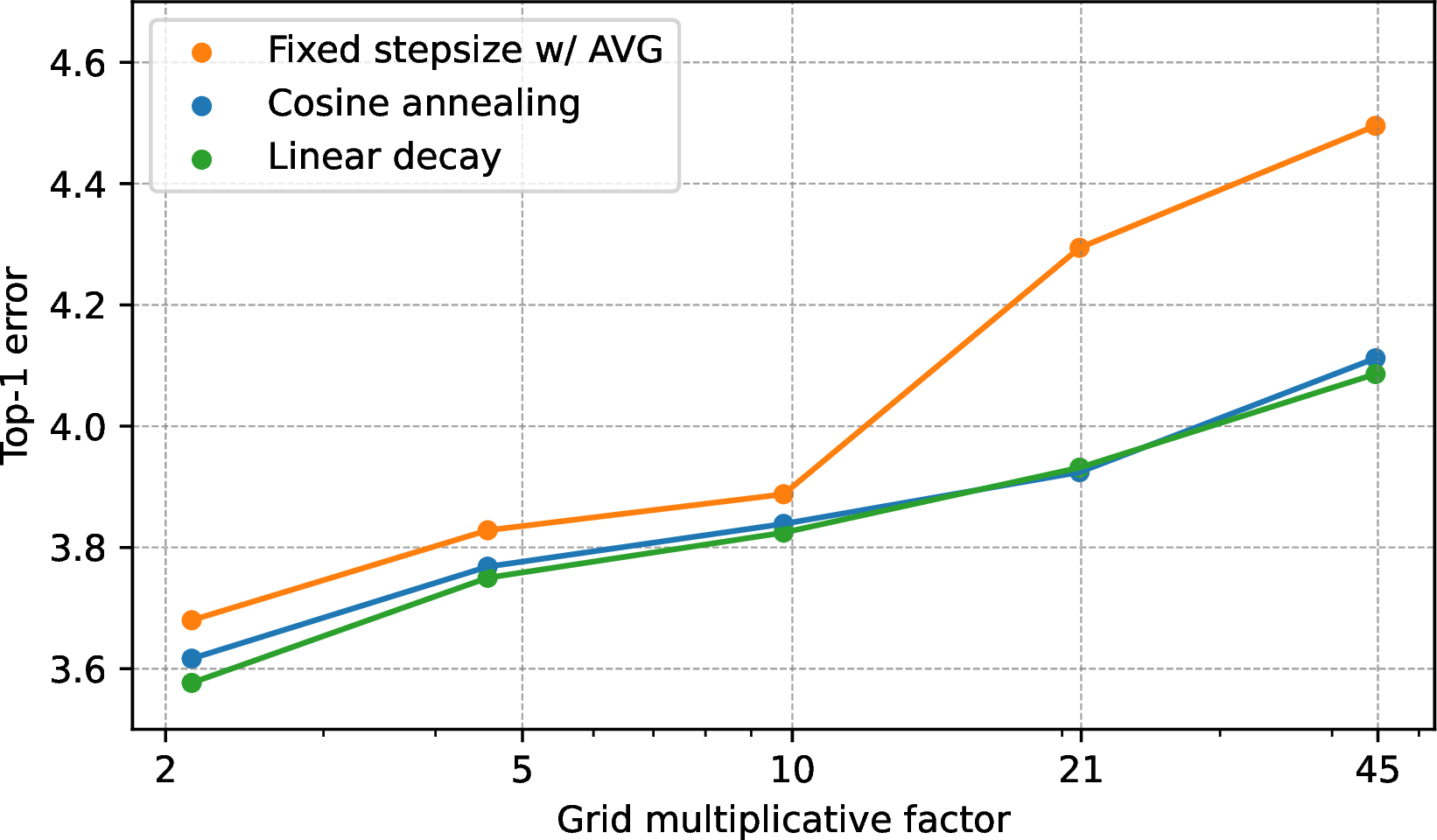

5.1.0.2 Wide ResNet on CIFAR-10.

We train a Wide ResNet 28-10 model6 [37] without dropout on the CIFAR-10 dataset [38]. We train for 200 epochs, using a batch size of \(128\), Nesterov momentum of \(0.9\), and weight decay of \(0.0005\). The scheduler is updated after each epoch. As the last iterate of fixed stepsize SGD is under-performing, we use polynomial averaging as proposed by [39], with parameter \(\gamma=8\), following [29].

5.2 Results↩︎

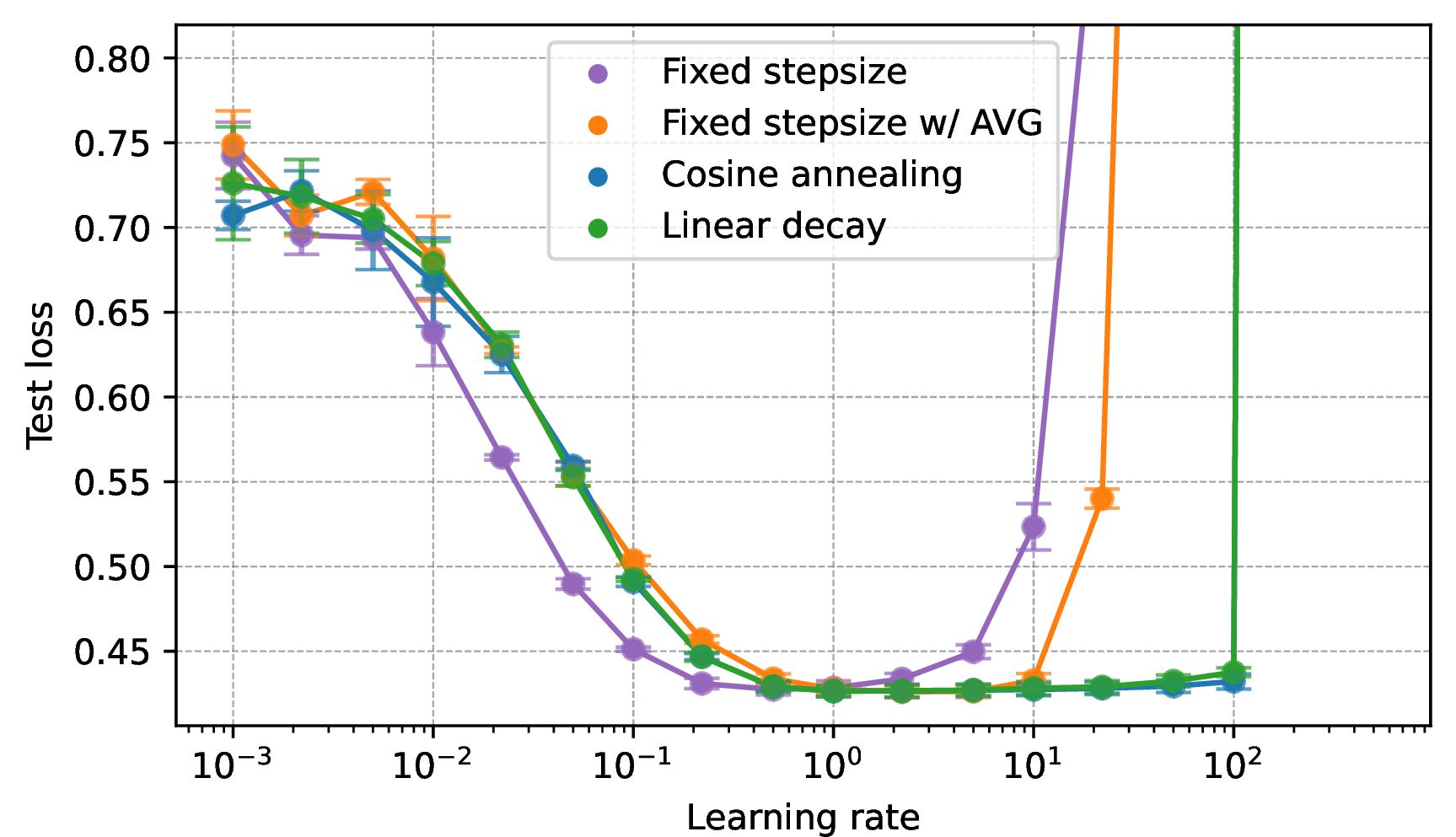

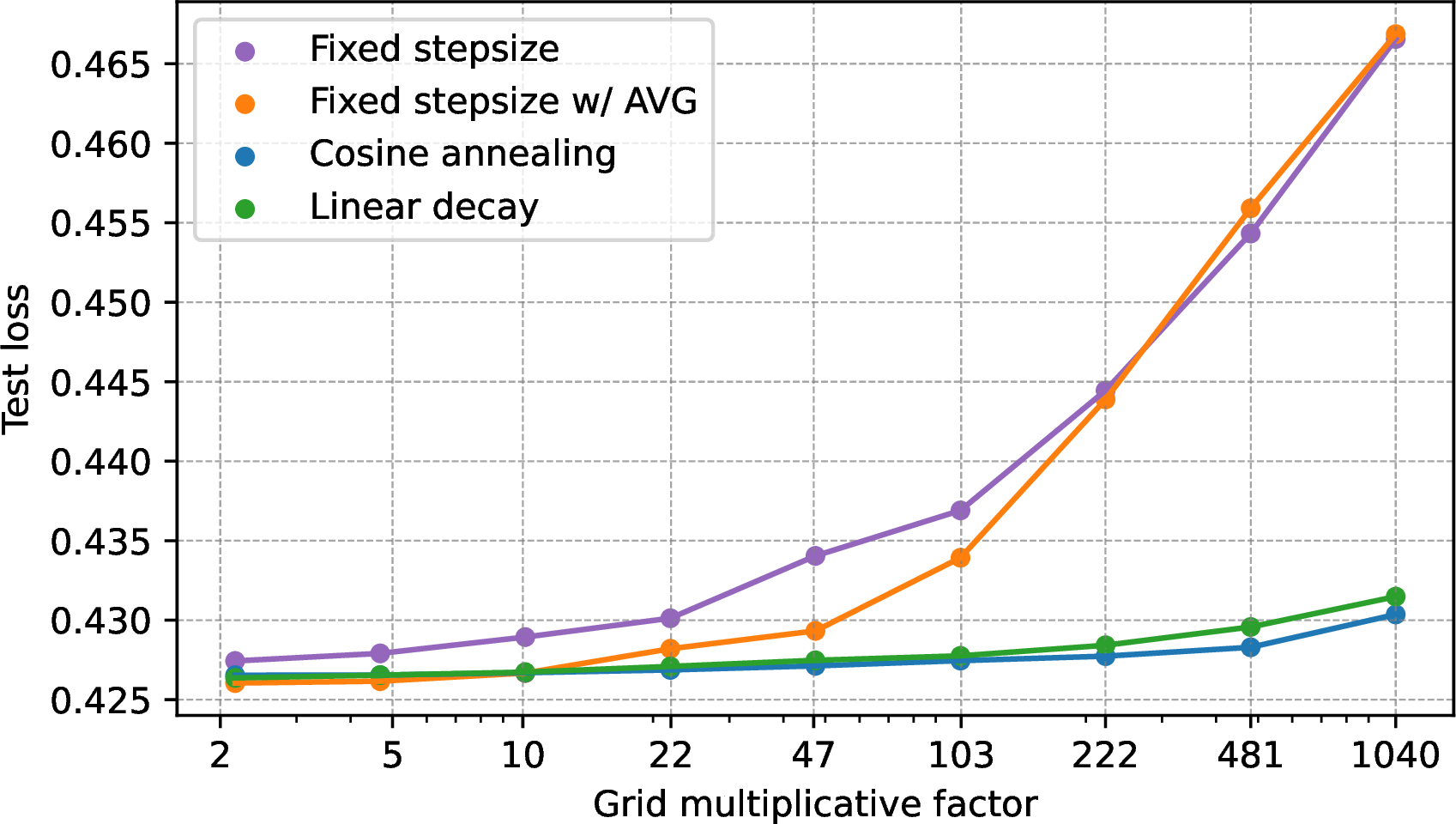

Figure 2: (a) Test loss for the logistic regression task with varying learning rates and different learning rate schedules. Each point represents 3 runs, reporting average and standard deviation. (b) Test loss of the best model in a sub-grid averaged over multiple sub-grids with the same multiplicative grid factor. The smallest multiplicative factor represents the full grid of (a). “Fixed stepsize w/ AVG” stands for fixed stepsize SGD with iterate averaging..

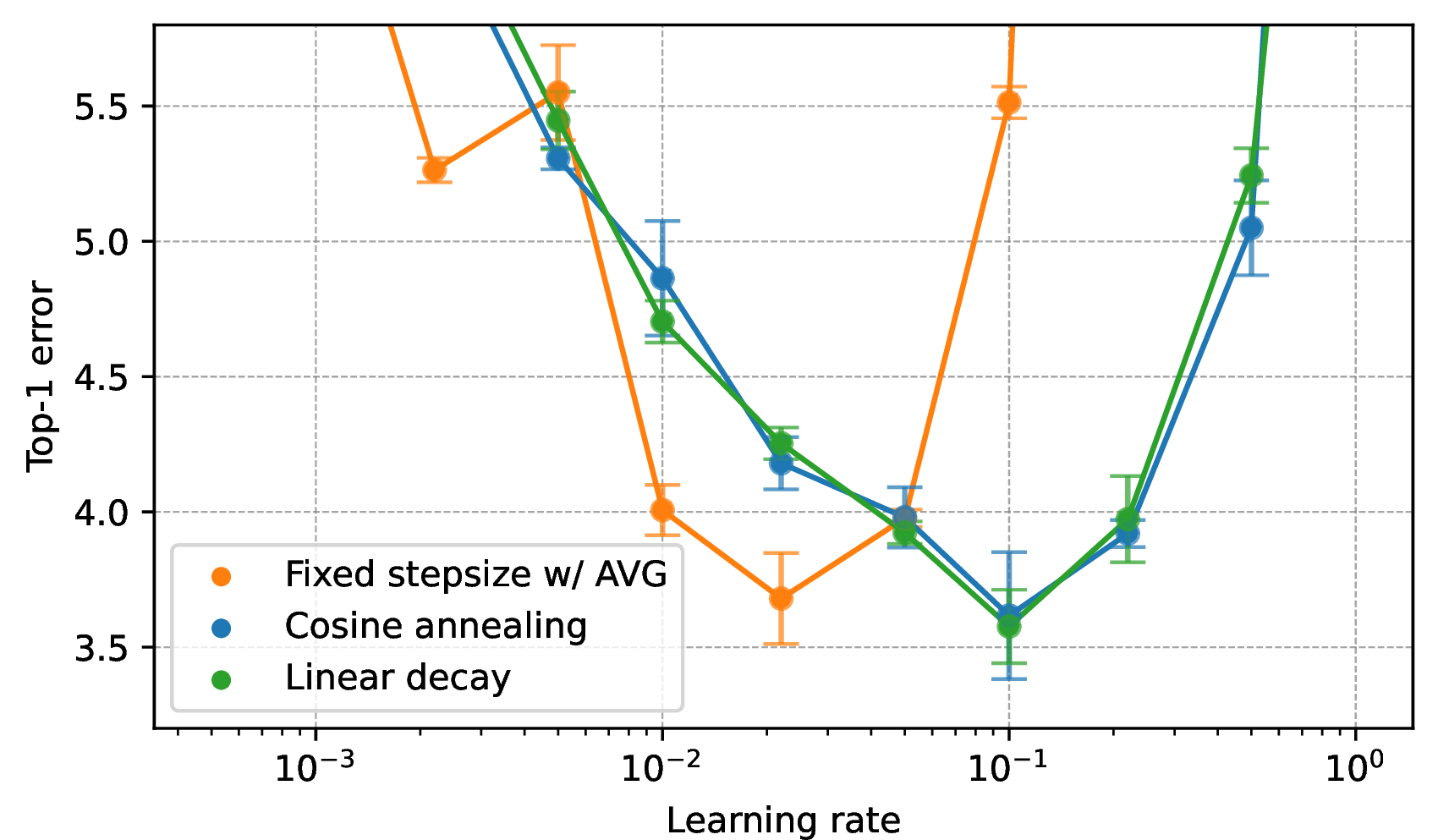

Figure 3: (a) CIFAR-10 top-1 test error of WideResNet28-10 with varying learning rates and different learning rate schedules. Each point represents 3 runs, reporting average and standard deviation. (b) Test error of the best model in a sub-grid averaged over multiple sub-grids with the same multiplicative grid factor. The smallest multiplicative factor represents the full grid of (a). “Fixed stepsize w/ AVG” stands for fixed stepsize SGD with polynomial iterate averaging..

The test loss per learning rate appears in 2 (a). For each resolution, 2 (b) illustrates the logistic regression test loss averaged across the best models for each sub-grid. At high resolutions (e.g., grid parameters up to 10), we observe a comparable performance degradation across different schedules (besides fixed stepsize without averaging which underperforms). However, as grid resolution decreases, the gap between the fixed learning rate schedule and the decaying schedules widens. For instance, with a grid factor of approximately 100, the performance of the fixed learning rate (with averaging) decreases by 0.08, whereas cosine annealing and linear decay schedules experience smaller drops of 0.01 and 0.014, respectively, with similar trends observed for grids with lower resolutions.

3 (b) shows the CIFAR-10 top-1 test error for each resolution, averaged over the best models per sub-grid, with the raw test error per learning rate appearing in 3 (a). Similar to the logistic regression task, degradation remains similar for high resolutions while the gap between the fixed learning rate schedule and the decaying schedules widens for large grid factors. With a grid factor of approximately 22, the performance of the fixed learning rate decreases by 0.61, with smaller drops of 0.3 and 0.35 observed for cosine annealing and linear decay schedules, respectively, and the trend continues for grids with lower resolutions.

5.3 Discussion↩︎

The experiments show that decaying schedules are more robust to coarse grids, while performance differences on fine grids remain minimal. These findings align with our theory, which suggests that all decaying schedules perform similarly to iterate averaging under small multiplicative misspecification but outperform it when misspecification is large. However, our theory also predicts robustness variations across decay rates, which are not observed in the real-data experiments. A possible explanation is the small difference in convergence rates among decaying schedules when misspecification is low, as illustrated in 1.

Acknowledgements↩︎

We are grateful to Noga Bar, Yair Carmon and Tomer Porian for helpful discussions. This project has received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation program (grant agreement No.). Views and opinions expressed are however those of the author(s) only and do not necessarily reflect those of the European Union or the European Research Council. Neither the European Union nor the granting authority can be held responsible for them. This work received additional support from the Israel Science Foundation (ISF, grant number 3174/23), a grant from the Tel Aviv University Center for AI and Data Science (TAD), and a fellowship from the Israeli Council of Higher Education.

6 Proofs of 3↩︎

6.1 Proof of 2↩︎

. Note that \(h(u)\) is non-increasing, differentiable (\(h'(u)=\frac{-\pi}{2} \sin(\pi u)\)), \(\frac{\pi}{2}\)-Lipschitz (as \(\abs{h'(u)}\leq \frac{\pi}{2}\)) and satisfy \(h(u)=0 \Leftrightarrow u=1\). Hence, \(h\) is annealed and by 1, \[\begin{align} \E[f(x_{T+1}) - f(x^\star)] &\leq \frac{1}{2} \ratenonsmoothopt \cdot \inf_{\usuf \in [0,1)} \brk*{\frac{\hsuf(0)}{\rho \hsuf(\usuf)} + \frac{\rho \intsuf(\usuf)}{\intsuf(0)}} + O\brk*{\frac{\rho \etastar \lip^2}{T}} . \end{align}\] Next, we will bound \(h(u),\hsuf(u)\) and \(\intsuf(u)\) using polynomials. As \(\cos(\pi-\theta) = -\cos(\theta)\) and \(\cos(\theta) \geq 1-\frac{\theta^2}{2}\), \[\begin{align} \label{eq:cos-p1} h(u) = \frac{1}{2} (1-\cos(\pi (1-u))) \leq \frac{\pi^2}{4} (1-u)^2 \leq \frac{5}{2} (1-u)^2. \end{align}\tag{8}\] On the other hand, for \(u \in [0,1)\), \[\begin{align} \brk*{\frac{h(u)}{(1-u)^2}}' = \frac{-\frac{\pi}{2} \sin(\pi u) (1-u)^2 + 2 (1-u)}{(1-u)^4} = \frac{4-\pi(1-u)\sin(\pi u)}{2(1-u)^3} \geq \frac{4-\pi}{2} > 0 . \end{align}\] Using the fundamental theorem of calculus, for all \(u \in [0,1)\), \[\begin{align} \label{eq:cos-p2} \frac{h(u)}{(1-u)^2} = \frac{h(0)}{(1-0)^2} + \int_0^u \brk*{\frac{h(v)}{(1-v)^2}}' dv \geq 1 + \int_0^u 0 \cdot dv=1 \implies h(u) \geq (1-u)^2. \end{align}\tag{9}\] Using integration, 8 9 also implies that \[\begin{align} \label{eq:cos-p3} \frac{1}{3} (1-u)^3 \leq \hsuf(u) \leq \frac{5}{6} (1-u)^3. \end{align}\tag{10}\] Using the above inequalities, \[\begin{align} \label{eq:cos-p4} \intsuf(v) &= \int_v^1 \frac{h(u)^2}{\hsuf(u)} du \leq \frac{75}{4} \int_v^1 (1-u) du = \frac{75}{8} (1-v)^2 \end{align}\tag{11}\] and \[\begin{align} \label{eq:cos-p5} \intsuf(v) \geq \int_v^1 \frac{6(1-u)^4}{5(1-u)^3} du = \frac{3}{5} (1-v)^2. \end{align}\tag{12}\] Using the bounds, setting \(\usufb=1-\rho^{-0.4} \in [0,1)\), and noting that \(\hsuf(0)=\frac{1}{2} \int_0^1 (1+\cos(\pi u))du = \frac{1}{2}\), \[\begin{align} \label{eq:cos-inf-bound} \frac{\hsuf(0)}{\rho \hsuf(\usufb)} + \frac{\rho \intsuf(\usufb)}{\intsuf(0)} \leq \frac{3}{2\rho (1-\usufb)^3} + \frac{\rho 125 (1-\usufb)^2}{8} = \frac{3}{2\rho \rho^{-1.2}} + \frac{125 \rho \rho^{-0.8}}{8 \intsuf(0)} \leq 18 \rho^{0.2} . \end{align}\tag{13}\] Thus, \[\begin{align} \E[f(x_{T+1}) - f(x^\star)] &\leq \ratenonsmoothopt \cdot 18 \rho^{\frac{1}{5}} + O\brk*{\frac{\rho \etastar \lip^2}{T}} . \end{align}\] Again noting that \(\hsuf(0)=\frac{1}{2}\) and using 11 , \[\begin{align} \ratenonsmoothopt &=\frac{2 \diam \lip}{\sqrt{T}} \sqrt{\intsuf(0) / \hsuf(0)} = \frac{2 \diam \lip}{\sqrt{T}} \sqrt{2 \intsuf(0)} \leq \frac{2 \diam \lip \sqrt{\frac{150}{8}}}{\sqrt{T}} \leq \frac{10 \diam \lip}{\sqrt{T}} . \qedhere \end{align}\] ◻

7 Proofs of 4↩︎

7.1 Proof of 2↩︎

In the proof, we use the following last-iterate guarantee for convex-smooth optimization, replacing [lem:last-iterate-convex] which we used in the convex Lipschitz case. The lemma is based on the technique introduced by [10] and the proof appears at 8.

lemmalastiteratesmooth Let \(\domain \subseteq \reals^d\) be a convex set, \(f : \domain \to \reals\) a convex function, and \(g : \domain \to \reals^d\) an unbiased first-order oracle of \(f\) with variance bounded by \(\sigma^2 \geq 0\). Let \(x_1,x_2,\ldots,x_{T+1}\) be the iterates produced by \(T\)-steps SGD with stepsizes \(\eta_1,\ldots,\eta_T\) (satisfying \(\eta_t \leq \frac{1}{2\sm}\) for all \(t \in [T]\)) and using the oracle \(g\). Then for any \(\hat{x} \in \domain\), \[\begin{align} \E[f(x_{T+1}) - f(\hat{x})] &\leq \frac{\norm{x_1-\hat{x}}^2}{2 \sum_{s=1}^T \eta_s} + \sigma^2 \sum_{t=1}^T \frac{\eta_t^2}{\sum_{s=t}^T \eta_s} . \end{align}\]

We proceed to the proof of 2.

Proof of 2. Let \(\usuf \in [\usuf_0,1)\) and let \(\tsuf = \floor{\usuf T} + 1 \in [T]\). Consider the suffix \(x_\tsuf,x_{\tsuf+1},\ldots,x_{T+1}\) as an SGD sequence starting at \(x_\tsuf\) and note that since \(h\) is non-increasing, \(\eta_\tsuf = \eta h\brk{\frac{\tsuf-1}{T}} \leq \eta h(\usuf_0) \leq \frac{1}{2\sm}\). Thus, by [lem:last-iterate-smooth] with \(\hat{x}=x^\star\), \[\begin{align} \E[f(x_{T+1}) - f(x^\star)] &\leq \frac{\diam^2}{2 \sum_{s=\tsuf}^T \eta_s} + \sigma^2 \sum_{t=\tsuf}^T \frac{\eta_t^2}{\sum_{s=t}^T \eta_s} . \end{align}\] As \(\usuf \in [\frac{\tsuf-1}{T},\frac{\tsuf}{T})\), invoking 1 with \(c_1 = \diam^2/2\) and \(c_2 = \sigma^2\), \[\begin{align} \E[f(x_{T+1}) - f(x^\star)] &\leq \frac{\diam^2}{2 \eta T \hsuf(\usuf)} + \eta \sigma^2 \int_{\usuf}^{1} \frac{h(u)^2}{\hsuf(u)} du + \frac{4 \eta p \sigma^2}{T} . \end{align}\] Substituting \(\eta=\rho \cdot \etastarsm\) and using 3 4 , \[\begin{align} \E[f(x_{T+1}) - f(x^\star)] &\leq \frac{1}{\rho \hsuf(\usuf)} \cdot \frac{\diam^2}{2 \etastarsm T} + \brk*{\rho \int_{\usuf}^{1} \frac{h(u)^2}{\hsuf(u)} du} \cdot \etastarsm \sigma^2 + \frac{4 p \rho}{T} \cdot \etastarsm \sigma^2 \\ &= \frac{\hsuf(0)}{\rho \hsuf(\usuf)} \cdot \frac{\diam^2}{2 \etastarsm T \hsuf(0)} + \frac{\rho \intsuf(\usuf)}{\intsuf(0)} \cdot \etastarsm \sigma^2 \intsuf(0) + \frac{4 p \rho \etastarsm \sigma^2}{T} \\ &\leq \ratesmooth \cdot \brk*{\frac{\hsuf(0)}{\rho \hsuf(\usuf)} + \frac{\rho \intsuf(\usuf)}{\intsuf(0)} } + \frac{4 p \rho \etastarsm \sigma^2}{T} . \end{align}\] This inequality holds for any \(\usuf \in [\usuf_0,1)\), hence it holds for the infimum over all \(\usuf \in [\usuf_0,1)\). It is left to find the \(\usuf\) which minimizes the right-hand side. Let \[\begin{align} g(v) = \frac{\hsuf(0)}{\rho \hsuf(\usuf)} + \frac{\rho \intsuf(\usuf)}{\intsuf(0)} . \end{align}\] This is the same function as in 6 , so the same solution to \(\hsuf(\usuf)\hsuf'(\usuf)=\frac{-\hsuf(0)\intsuf(0)}{\rho^2}\) is the minimizer of the function, and if there is no solution, the function is increasing (positive derivative) and the minimizer is at \(\usuf=\usuf_0\). ◻

7.2 Proof of 3↩︎

. As in the proof of 1, \(h(u)\) is annealed as \(h(u)\) is non-increasing, differentiable, \(p\)-Lipschitz and satisfy \(h(u)=0 \Leftrightarrow u=1\). Hence, we can use 2. In addition, \(\hsuf(\usuf)=\frac{1}{p+1}(1-\usuf)^{p+1}\), \(\hsuf'(\usuf)=-(1-\usuf)^p\) and \(\intsuf(\usuf) = \frac{p+1}{p}(1-\usuf)^p\), so \[\begin{align} \frac{\hsuf(0)}{\rho \hsuf(\usuf)} + \frac{\rho \intsuf(\usuf)}{\intsuf(0)} &= \frac{1}{\rho (1-\usuf)^{p+1}} + \rho (1-\usuf)^p . \end{align}\] If \(\rho^2 \geq (1-\usuf_0)^{-(2p+1)}\) we can pick \(\usufb = 1-\rho^{\frac{-2}{2p+1}}\), as \(\usufb \in [\usuf_0,1)\). In this case, \[\begin{align} \frac{\hsuf(0)}{\rho \hsuf(\usufb)} + \frac{\rho \intsuf(\usufb)}{\intsuf(0)} &= \frac{1}{\rho \cdot \rho^{\frac{-2(p+1)}{2p+1}}} + \rho \cdot \rho^{\frac{-2p}{2p+1}} = 2 \rho^{\frac{1}{2p+1}} . \end{align}\] If \(\rho^2 < (1-\usuf_0)^{-(2p+1)}\) and \(\usuf_0 > 0\), picking \(\usufb=\usuf_0\) and using the \(p\)-Lipschitz property of \(h\), \[\begin{align} \frac{1}{2\sm} &\leq \eta h\brk*{\usuf_0-\frac{1}{T}} = \rho \etastarsm h\brk*{\usuf_0-\frac{1}{T}} \leq \rho \etastarsm h(\usuf_0) + \frac{p \rho \etastarsm}{T} \leq \frac{\rho h(\usuf_0)}{2\sm h(0)} + \frac{p \rho}{2 \sm h(0) T} {2\sm}} \\&\implies \rho \geq \brk*{1-\frac{p \rho}{T}} (1-\usuf_0)^{-p} \end{align}\] and \[\begin{align} \frac{\hsuf(0)}{\rho \hsuf(\usuf_0)} + \frac{\rho \intsuf(\usuf_0)}{\intsuf(0)} &= \frac{1}{\rho (1-\usuf_0)^{p+1}} + \rho (1-\usuf_0)^p \leq \frac{1}{\brk*{1-\frac{p\rho}{T}}(1-\usuf_0)} + \sqrt{\frac{1}{1-\usuf_0}} = O\brk*{\frac{1}{1-\usuf_0}} , \end{align}\] where the last two transitions use \(\rho^2 < (1-\usuf_0)^{-(2p+1)}\) and the assumption \(\rho \leq \frac{T}{2p}\). Since \(\rho \geq 1\) there is no case where \(\rho^2 < (1-\usuf_0)^{-(2p+1)}\) and \(\usuf_0 = 0\). Bounding the infimum of ?? in the two cases with our choices of \(\usufb\), if \(\rho^2 \geq (1-\usuf_0)^{-(2p+1)}\), \[\begin{align} \E[f(x_{T+1}) - f(x^\star)] = \ratesmoothopt \cdot O\brk*{\rho^{\frac{1}{2p+1}} } + O \brk*{\frac{p \rho \etastarsm \sigma^2}{T}} , \end{align}\] and if \(\rho^2 < (1-\usuf_0)^{-(2p+1)}\), \[\begin{align} \E[f(x_{T+1}) - f(x^\star)] = \ratesmoothopt \cdot O\brk*{\frac{1}{1-\usuf_0}} + O \brk*{\frac{p \rho \etastarsm \sigma^2}{T}} . \end{align}\] Noting that by the assumption \(\rho \leq \frac{T}{2p}\), \[\begin{align} \frac{p \rho \etastarsm \sigma^2}{T} \leq \frac{\etastarsm \sigma^2}{2} \leq \frac{\ratesmoothopt}{2 \intsuf(0)} = O(\ratesmoothopt), \end{align}\] we obtain our final convergence guarantees. The bound of \(\ratesmoothopt\) follows from plugging \(\hsuf(0)=\frac{1}{p+1}\) and \(\intsuf(0)=\frac{p+1}{p}\) to 4 . ◻

7.3 Proof of 4↩︎

. As in the proof of 2, \(h\) is annealed as \(h(u)\) is non-increasing, differentiable, \(\frac{\pi}{2}\)-Lipschitz and satisfy \(h(u)=0 \Leftrightarrow u=1\). Hence, we can use 2. We already established at 13 of the proof of 2 that \[\begin{align} \frac{\hsuf(0)}{\rho \hsuf(\usuf)} + \frac{\rho \intsuf(\usuf)}{\intsuf(0)} &\leq \frac{3}{2 \rho (1-\usuf)^{3}} + \frac{125 \rho (1-\usuf)^2}{8 } . \end{align}\] If \(\rho^2 \geq (1-\usuf_0)^{-5}\) we can pick \(\usufb = 1-\rho^{\frac{-2}{5}}\), as \(\usufb \in [\usuf_0,1)\). In this case, \[\begin{align} \frac{\hsuf(0)}{\rho \hsuf(\usufb)} + \frac{\rho \intsuf(\usufb)}{\intsuf(0)} &\leq \frac{3}{2 \rho \cdot \rho^{\frac{-6}{5}}} + \frac{125 \rho \cdot \rho^{\frac{-4}{5}}}{8} = \frac{137 \rho^{\frac{1}{5}}}{8} \leq 18 \rho^{\frac{1}{5}} . \end{align}\] If \(\rho^2 < (1-\usuf_0)^{-5}\) and \(\usuf_0 > 0\), picking \(\usufb=\usuf_0\), using the definition of \(\usuf_0\) and the Lipschitz property of \(h\), \[\begin{align} \frac{1}{2\sm} &\leq \eta h\brk*{\usuf_0-\frac{1}{T}} = \rho \etastarsm h\brk*{\usuf_0-\frac{1}{T}} \leq \rho \etastarsm h(\usuf_0) + \frac{\pi \rho \etastarsm}{2 T} \leq \frac{\rho h(\usuf_0)}{2\sm h(0)} + \frac{\pi \rho}{4 \sm h(0) T}, {2\sm}} \end{align}\] implying (with \(h(0)=1\)) that \[\begin{align} \rho \geq \brk*{1-\frac{\pi \rho}{2 T}} h(\usuf_0) \geq \brk*{1-\frac{\pi \rho}{2 T}} (1-\usuf_0)^2. \end{align}\] In addition to the assumption \(\rho^2 < (1-\usuf_0)^{-5}\), \[\begin{align} \frac{\hsuf(0)}{\rho \hsuf(\usuf_0)} + \frac{\rho \intsuf(\usuf_0)}{\intsuf(0)} &\leq \frac{3}{2 \rho (1-\usuf_0)^3} + \frac{125 \rho (1-\usuf_0)^2}{8} \\&\leq \frac{3}{2\brk*{1-\frac{\pi\rho}{2T}}(1-\usuf_0)} + \frac{125}{8} \sqrt{\frac{1}{1-\usuf_0}} = O\brk*{\frac{1}{1-\usuf_0}} , \end{align}\] where the last transition uses the assumption \(\rho \leq \frac{2 T}{\pi}\). Since \(\rho \geq 1\) there is no case where \(\rho^2 < (1-\usuf_0)^{-(2p+1)}\) and \(\usuf_0 = 0\). Bounding the infimum of ?? in the two cases with our choices of \(\usufb\), if \(\rho^2 \geq (1-\usuf_0)^{-5}\), \[\begin{align} \E[f(x_{T+1}) - f(x^\star)] &= \ratesmoothopt \cdot O\brk*{\rho^{\frac{1}{5}} } + O \brk*{\frac{\rho \etastarsm \sigma^2}{T}} , \end{align}\] and if \(\rho^2 < (1-\usuf_0)^{-5}\), \[\begin{align} \E[f(x_{T+1}) - f(x^\star)] &= \ratesmoothopt \cdot O\brk*{\frac{1}{1-\usuf_0}} + O \brk*{\frac{\rho \etastarsm \sigma^2}{T}} . \end{align}\] We obtain our final convergence guarantees by noting that \(\rho \leq \frac{2 T}{\pi}\), which, together with the fact that \(\intsuf(0)=\Theta(1)\) implies \[\begin{align} \frac{\rho \etastarsm \sigma^2}{T} \leq \frac{2 \etastarsm \sigma^2}{\pi} \leq \frac{2 \ratesmoothopt}{\pi \intsuf(0)} = O(\ratesmoothopt), \end{align}\] and plugging back to the above bounds. The bound of \(\ratesmoothopt\) is immediate from 4 as \(\hsuf(0)=\frac{1}{2}\) and \(\intsuf(0)=\Theta(1)\) (as we established in 12 ). ◻

8 Last Iterate Guarantees for Stochastic Gradient Descent↩︎

A convergence analysis of Stochastic Gradient Descent (SGD) for convex Lipschitz and convex smooth functions follows. The technique, introduced by [9] and later refined by [10], is based on comparing the iterates of SGD \((x_1,x_2,\ldots)\) with iterates of the form \[\begin{align} \label{eq:z-def} z_t \eqdef \frac{v_{t-1}}{v_t} z_{t-1} + \brk*{1-\frac{v_{t-1}}{v_t}} x_t = \frac{v_0}{v_t} \hat{x} + \sum_{s=1}^t \frac{v_s-v_{s-1}}{v_t} x_s \end{align}\tag{14}\] for some non-increasing sequence \(v_0,v_1,v_2,\ldots\), starting at some \(z_0 =\hat{x} \in \domain\). Note that by Jensen’s inequality, for any \(t \geq 2\), \[\begin{align} \label{eq:z-jensen} f(z_t) \leq \frac{v_0}{v_t} f(\hat{x}) + \sum_{s=1}^t \frac{v_s-v_{s-1}}{v_t} f(x_s). \end{align}\tag{15}\] In particular, for any \(t \in [T]\), we will use \[\begin{align} \label{eq:v-def} v_t \eqdef \frac{\eta_T}{\sum_{s=t}^T \eta_s} \end{align}\tag{16}\] and \(v_0=v_1\), similarly to [10]. Next, we restate the convergence results. Their proofs follow.

8.1 Proof of [lem:last-iterate-convex] [lem:last-iterate-smooth]↩︎

To prove the last-iterate guarantees we need the following lemmas. Their proofs follow. The first translates from an average regret-like guarantee to a last-iterate guarantee.

Lemma 2. Let \(\domain \subseteq \reals^d\) be a convex set, \(x_1,\hat{x}\in\domain\), \(f : \domain \to \reals\) a convex function and \(T \in \naturals\). Then for any sequences \(g_1,\ldots,g_T \in \reals^d\) and \(\eta_1,\ldots,\eta_T > 0\), the iterates defined by \(x_{t+1}=x_t-\eta_t g_t\) satisfy \[\begin{align} \eta_T v_T (f(x_{T+1})-f(\hat{x})) \leq \sum_{t=1}^T \eta_t v_t (f(x_{t+1})-f(z_t)) , \end{align}\] where \(z_0,\ldots,z_T\) and \(v_0,\ldots,v_T\) are defined by 16 14 .

Lemma 3. Let \(\domain \subseteq \reals^d\) be a convex set, \(x_1,\hat{x}\in\domain\), \(f : \domain \to \reals\) a convex function and \(T \in \naturals\). Then for any \(t \in [T]\), the iterates of SGD satisfy \[\begin{align} \E[f(x_{t+1}) - f(z_t)] &\leq \E\brk[s]*{\frac{\norm{x_t-z_t}^2-\norm{x_{t+1}-z_t}^2 - \norm{x_{t+1}-x_t}^2}{2 \eta_t} + f(x_{t+1})-f(x_t) + g_t \dotp (x_t-x_{t+1})} , \end{align}\] where \(z_0,\ldots,z_T\) are defined by 14 .

We proceed to the proof.

Proof of [lem:last-iterate-convex] [lem:last-iterate-smooth]. By 3, \[\begin{align} \E[f(x_{t+1}) - f(z_t)] &\leq \E\brk[s]*{\frac{\norm{x_t-z_t}^2-\norm{x_{t+1}-z_t}^2}{2 \eta_t} + \Delta_t} , \end{align}\] where \(\Delta_t \eqdef f(x_{t+1})-f(x_t)+g_t \dotp(x_t-x_{t+1})-\frac{\norm{x_{t+1}-x_t}^2}{2\eta_t}\). By the definition of \(z_t\) and the fact that \(v_t \geq v_{t-1}\), \[\begin{align} \norm{x_t-z_t}^2 &= \frac{v_{t-1}^2}{v_t^2} \norm{x_{t-1}-z_t}^2 \leq \frac{v_{t-1}}{v_t} \norm{x_{t-1}-z_t}^2 . \end{align}\] Combining with our previous inequality multiplied by \(\eta_t v_t\), \[\begin{align} \E[\eta_t v_t (f(x_{t+1}) - f(z_t))] &\leq \E\brk[s]*{\frac{v_{t-1} \norm{x_t-z_{t-1}}^2-v_t \norm{x_{t+1}-z_t}^2}{2} + \eta_t v_t \Delta_t} . \end{align}\] Summing for \(t=1,\ldots,T\), and removing \(-v_T \norm{x_{T+1}-z_T}^2 \leq 0\), \[\begin{align} \E\brk[s]*{\sum_{t=1}^T \eta_t v_t (f(x_{t+1}) - f(z_t))} &\leq \E\brk[s]*{\frac{v_{0} \norm{x_1-z_{0}}^2}{2} + \sum_{t=1}^T \eta_t v_t \Delta_t} . \end{align}\] Combining with 2, and noting that \(z_0=\hat{x}\), \[\begin{align} \label{eq:pre-special} \eta_T v_T (f(x_{T+1})-f(\hat{x})) &\leq \E\brk[s]*{\frac{v_{0} \norm{x_1-\hat{x}}^2}{2} + \sum_{t=1}^T \eta_t v_t \Delta_t} . \end{align}\tag{17}\] Next, we assume a second-moment bound (as in [lem:last-iterate-convex]). From convexity, \[\begin{align} \E[f(x_{t+1})-f(x_t)] \leq \E[\nabla f(x_{t+1}) \dotp (x_{t+1} - x_t)] \leq \E\brk[s]*{\eta_t \norm{\nabla f(x_t)}^2 + \frac{\norm{x_{t+1}-x_t}^2}{4 \eta_t}} , \end{align}\] where we used the inequality \(2 u \dotp v \leq \norm{u}^2 + \norm{v}^2\). Similarly, \(g_t \dotp (x_t-x_{t+1}) \leq \eta_t \norm{g_t}^2 + \frac{\norm{x_{t+1}-x_t}}{4 \eta_t}\). Hence, using the second-moment bound, \(\E \Delta_t \leq 2 \eta_t \lip^2\). Plugging the bound of \(\E[\Delta_t]\) to 17 concludes the proof of [lem:last-iterate-convex]. Next we assume that \(f\) is \(\sm\)-smooth, a variance bound, and that \(\eta_t \leq \frac{1}{2\sm}\) for all \(t \in [T]\) (as in [lem:last-iterate-smooth]). By smoothness, \[\begin{align} f(x_{t+1})-f(x_t) &\leq \nabla f(x_t) \dotp (x_{t+1}-x_t) + \frac{\sm}{2} \norm{x_{t+1}-x_t}^2 \\&\leq \nabla f(x_t) \dotp (x_{t+1}-x_t) + \frac{1}{4 \eta_t} \norm{x_{t+1}-x_t}^2 {2\sm}} . \end{align}\] By the inequality \(2 u \dotp v \leq \norm{u}^2 + \norm{v}^2\), \[\begin{align} \nabla f(x_t) \dotp (x_{t+1}-x_t) &= (\nabla f(x_t)-g_t) \dotp (x_{t+1}-x_t) + g_t \dotp (x_{t+1}-x_t) \\&\leq \eta_t \norm{\nabla f(x_t)-g_t}^2 + \frac{\norm{x_{t+1}-x_t}^2}{4 \eta_t} + g_t \dotp (x_{t+1}-x_t) . \end{align}\] Hence, using the variance bound, \[\begin{align} \E \Delta_t &\leq \E\brk[s]*{\eta_t \norm{\nabla f(x_t)-g_t}^2 + \frac{\norm{x_{t+1}-x_t}^2}{4 \eta_t} + \frac{\norm{x_{t+1}-x_t}^2}{4 \eta_t} - \frac{\norm{x_{t+1}-x_t}^2}{2 \eta_t}} \leq \eta_t \sigma^2 . \end{align}\] Plugging the bound of \(\E[\Delta_t]\) to 17 concludes the proof of [lem:last-iterate-smooth]. ◻

8.2 Proof of 2↩︎

Proof. By 15 , \[\begin{align} \sum_{t=1}^T \eta_t v_t (f(x_{t+1})-f(z_t)) &\geq \sum_{t=1}^T \eta_t v_t \brk*{f(x_{t+1})-\brk*{\frac{v_0}{v_t} f(\hat{x}) + \sum_{s=1}^t \frac{v_s-v_{s-1}}{v_t} f(x_s)}} \\ &= \sum_{t=1}^T \eta_t \brk*{v_t f(x_{t+1})-\brk*{v_0 f(\hat{x}) + \sum_{s=1}^t \brk{v_s-v_{s-1}} f(x_s)}} \\ &= \sum_{t=1}^T \eta_t \brk*{v_t f(x_{t+1})-v_t f(\hat{x}) - \sum_{s=1}^t \brk{v_s-v_{s-1}} (f(x_s)-f(\hat{x}))} \\ &= \eta_T v_T (f(x_{T+1})-f(\hat{x})) \\ &+ \sum_{t=1}^T \brk*{\eta_{t-1} v_{t-1} - (v_t-v_{t-1}) \sum_{s=t}^{T} \eta_s} (f(x_t)-f(\hat{x})) . \end{align}\] Note that \(v_1=v_0\) and for \(2 \leq t \leq T\), \[\begin{align} \eta_{t-1} v_{t-1} - (v_t-v_{t-1}) \sum_{s=t}^{T} \eta_s &= \eta_T \brk*{\frac{\eta_{t-1}}{\sum_{s=t-1}^T \eta_s} - \frac{\eta_{t-1} \sum_{s=t}^{T} \eta_s}{\sum_{s=t-1}^T \eta_s \cdot \sum_{s=t}^T \eta_s}} = 0 . \end{align}\] Thus, \[\begin{align} \eta_T v_T (f(x_{T+1})-f(\hat{x})) &\leq \sum_{t=1}^T \eta_t v_t (f(x_{t+1})-f(z_t)) . \qedhere \end{align}\] ◻

8.3 Proof of 3↩︎

Proof. Using the convexity of \(f\), \[\begin{align} \label{eq:xt1-zt} \E[f(x_{t+1}) - f(z_t)] &= \E[f(x_t) - f(z_t) + f(x_{t+1}) - f(x_t)] \leq \E[\nabla f(x_t) \dotp (x_t-z_t) + f(x_{t+1}) - f(x_t)] . \end{align}\tag{18}\] Focusing on the first term, as \(z_t\) does not depend on \(g_t\), \[\begin{align} \E[\nabla f(x_t) \dotp (x_t-z_t)] &= \E[g_t \dotp (x_t-z_t)] = \E[g_t \dotp (x_{t+1}-z_t) + g_t \dotp (x_t-x_{t+1})] . \end{align}\] Note that the update step is \[\begin{align} x_{t+1} = \argmin_{x \in \domain}\set*{f(x_t) + g_t \dotp (x-x_t) + \frac{1}{2 \eta_t} \norm{x-x_t}^2}. \end{align}\] From the first-order optimality condition, \[\begin{align} \frac{1}{\eta_t}(x_{t+1}-x_t+\eta_t g_t) \dotp (z_t-x_{t+1}) \geq 0. \end{align}\] Rearranging, \[\begin{align} g_t \dotp (x_{t+1}-z_t) &\leq \frac{\norm{x_t-z_t}^2-\norm{x_{t+1}-z_t}^2-\norm{x_{t+1}-x_t}^2}{2 \eta_t} . \end{align}\] Thus, \[\begin{align} \E[\nabla f(x_t) \dotp (x_t-z_t)] &\leq \E\brk[s]*{\frac{\norm{x_t-z_t}^2-\norm{x_{t+1}-z_t}^2-\norm{x_{t+1}-x_t}^2}{2 \eta_t} + g_t \dotp (x_t-x_{t+1})} . \end{align}\] Returning to 18 , we conclude that \[\begin{align} \E[f(x_{t+1}) - f(z_t)] &\leq \E\brk[s]*{\frac{\norm{x_t-z_t}^2-\norm{x_{t+1}-z_t}^2 - \norm{x_{t+1}-x_t}^2}{2 \eta_t} + f(x_{t+1})-f(x_t) + g_t \dotp (x_t-x_{t+1})} . \qedhere \end{align}\] ◻

9 Sensitivity of Fixed Stepsize Gradient Descent to Misspecification of the Stepsize↩︎

Given a \(\lip\)-Lipschitz function \(f : \domain \to \reals\), where \(\domain \subset \reals^d\) is a convex set with diameter \(\diam\), the standard average-iterate convergence guarantee of \(T\)-steps Gradient Descent (GD) with a fixed stepsize \(\eta>0\) is \[\begin{align} f\brk*{\frac{1}{T} \sum_{t=1}^T x_t} - \min_{x \in \domain} f(x) \leq \ratenonsmoothconstant(\eta) \eqdef \frac{\diam^2}{2 \eta T} + \frac{\eta \lip^2}{2}. \end{align}\] The optimal \(\etastar=\frac{\diam}{\lip\sqrt{T}}\) satisfy \(\ratenonsmoothconstant(\etastar)=\frac{\diam \lip}{\sqrt{T}}\). Given a multiplicative overestimation of the optimal stepsize, \(\eta=\rho \etastar\) for \(\rho \geq 1\), the convergence guarantee is \[\begin{align} \ratenonsmoothconstant(\rho \etastar) = \ratenonsmoothconstant(\etastar) \brk*{\frac{1}{2\rho} + \frac{\rho}{2}} = \Omega(\rho \ratenonsmoothconstant(\etastar)). \end{align}\] A natural follow-up question is whether this linear dependence on \(\rho\) is simply an artifact of the analysis or a true degradation in the convergence rate of GD. Next, we show that for any weights \(w_1,\ldots,w_T\), the worst-case convergence rate of the (weighted) average iterate is \(\Omega(\rho \ratenonsmoothconstant(\etastar))\).

Let \(T \in \naturals\), \(\diam > 0\), \(\lip > 0\), \(0 < \rho < \frac{1}{2} \sqrt{T}\) and \(w_1,\ldots,w_T > 0\). First we will assume that \(w_1+w_3+\ldots+w_{2\floor{(T-1)/2}+1} \geq w_2+w_4+\ldots+w_{2\floor{T/2}}\). Let \(\eta = \frac{\rho \diam}{\lip \sqrt{T}}\) for some \(\rho \geq 1\), \(f(x) = \lip \abs{x}\) defined over the domain \([-\frac{\diam}{2},\frac{\diam}{2}]\), and let \(x_1=\frac{3}{4}\lip \eta\). After a single gradient step, \(x_2 = x_1 - \eta \lip = -\frac{1}{4} \lip\eta\). After another update step, \(x_3=x_2 + \eta \lip = \frac{3}{4} \lip \eta = x_1\). Hence, the iterates will move back and forth between \(\frac{3}{4}\lip\eta\) and \(-\frac{1}{4}\lip\eta\), and the average iterate \(\xout\) will satisfy \[\begin{align} \xout = \frac{1}{\sum_{t=1}^T w_t} \sum_{t=1}^T w_t x_t &= \frac{\lip \eta \brk*{3 \sum_{t=1,3,\ldots} w_t - \sum_{t=2,4,\ldots} w_t}}{4 \sum_{t=1}^T w_t} \geq \frac{\lip \eta \brk*{2 \sum_{t=1,3,\ldots} w_t}}{8 \sum_{t=1,3,\ldots} w_t} = \frac{\eta \lip}{4} , \end{align}\] where we used our assumption that \(w_1+w_3+\ldots+w_{2\floor{(T-1)/2}+1} \geq w_2+w_4+\ldots+w_{2\floor{T/2}}\). Hence, \[\begin{align} f(\xout) \geq \frac{\eta \lip^2}{4} = \frac{\rho \diam \lip}{4 \sqrt{T}} = \Omega(\rho \ratenonsmoothconstant(\etastar)). \end{align}\] If, on the other hand, it holds that \(w_1+w_3+\ldots+w_{2\floor{(T-1)/2}+1} < w_2+w_4+\ldots+w_{2\floor{T/2}}\), we can initialize \(x_1 = -\frac{\lip \eta}{4}\) and mirroring the same argument will conclude the proof.

Hence, the worst-case convergence rate of fixed stepsize GD degrades linearly in a multiplicative misspecification of the stepsize. As GD is a private case of SGD, the lower bound also holds for SGD with a second-moment bound \(\lip^2\).

10 Convergence Analysis with Stepsize Schedules↩︎

In this section, we provide convergence guarantees for SGD with an annealed schedule in the convex Lipschitz and convex smooth settings. The guarantees are established by combining a last-iterate guarantee with 1, which translates the sums of stepsizes to integrals that depend on the schedule. The proofs follow.

Note that when we tune \(\eta\) according to 2 , we obtain a convergence rate of \[\begin{align} \frac{2 \diam \lip}{\sqrt{T}} \sqrt{\intsuf(0) / \hsuf(0)} + O\brk*{\frac{p \diam \lip / \sqrt{\hsuf(0)\intsuf(0)}}{T^{3/2}}}. \end{align}\]

Similarly, when we tune \(\eta\) according to 3 , we obtain a convergence rate of \[\begin{align} \frac{\sm \diam^2 h(0)}{T \hsuf(0)} + \frac{\diam \sigma}{\sqrt{T}} \sqrt{2 \intsuf(0) / \hsuf(0)} + O\brk*{\frac{p \diam \sigma / \sqrt{\hsuf(0)\intsuf(0)}}{T^{3/2}}}. \end{align}\]

Note that using the fact that \(h\) is non-increasing and the Lipschitz condition, \[\begin{align} h(0) \geq \hsuf(0) = \int_0^1 h(u) du \geq \int_0^{\min\{1,h(0)/2p\}} \frac{1}{2} h(0) du = \frac{1}{2} h(0) \min\{1,h(0)/2p\}. \end{align}\] Additionally, \[\begin{align} \intsuf(0) = \int_0^1 \frac{h(u)^2}{\hsuf(u)} du \geq \int_0^1 h(u) du = \hsuf(0) \geq \frac{1}{2} h(0) \min\{1,h(0)/2p\} \end{align}\] and using 7 , \[\begin{align} \intsuf(0) = \int_0^1 \frac{\hsuf'(u)^2}{\hsuf(u)} du \leq 2p . \end{align}\] Hence, assuming \(h(0)=\Theta(1)\) and \(p=\Theta(1)\), \(\hsuf(0)\) and \(\intsuf(0)\) are \(\Theta(1)\), and the rates above match those of optimally tuned fixed stepsize SGD up to constant factors.

10.1 Proofs of [lem:annealed-nonsmooth] [lem:annealed-smooth]↩︎

Proof of [lem:annealed-nonsmooth]. By [lem:last-iterate-convex] with \(\hat{x}=x^\star\), \[\begin{align} \E[f(x_{T+1}) - f(x^\star)] &\leq \frac{\diam^2}{2 \sum_{s=1}^T \eta_s} + 2\lip^2 \sum_{t=1}^T \frac{\eta_t^2}{\sum_{s=t}^T \eta_s} . \end{align}\] Using 1 with \(c_1 = \diam^2/2\), \(c_2 = 2 \lip^2\), \(k=1\) and \(\usuf=0\), \[\begin{align} \E[f(x_{T+1}) - f(x^\star)] & \leq \frac{\diam^2}{2 \eta T \hsuf(0)} \! + \! 2\eta \lip^2 \int_{0}^{1-\frac{1}{T}} \frac{h(u)^2}{\hsuf(u)} du \!+\! \frac{8 p \eta \lip^2}{T} \leq \frac{\diam^2}{2 \eta T \hsuf(0)} + 2\eta \lip^2 \intsuf(0) + \frac{8 p \eta \lip^2}{T} , \end{align}\] where that last inequality follows by the fact that \(h(u)\) and \(\hsuf(u)\) are non-negative and the definition of \(\intsuf(u)\). ◻

Proof of [lem:annealed-smooth]. As \(\eta_1 = \eta h(0) \leq \frac{1}{2\sm}\) and \(h\) is non-increasing, \(\eta_t \leq \frac{1}{2\sm}\) and we can use [lem:last-iterate-smooth] with \(\hat{x}=x^\star\), obtaining \[\begin{align} \E[f(x_{T+1}) - f(x^\star)] &\leq \frac{\diam^2}{2 \sum_{s=\tsuf}^T \eta_s} + \sigma^2 \sum_{t=\tsuf}^T \frac{\eta_t^2}{\sum_{s=t}^T \eta_s} . \end{align}\] Invoking 1 with \(c_1 = \diam^2/2\), \(c_2 = \sigma^2\), \(k=1\) and \(\usuf=0\), \[\begin{align} \E[f(x_{T+1}) - f(x^\star)] &\leq \frac{\diam^2}{2 \eta T \hsuf(0)} + \eta \sigma^2 \int_{0}^{1-\frac{1}{T}} \frac{h(u)^2}{\hsuf(u)} du + \frac{4 \eta p \sigma^2}{T} \leq \frac{\diam^2}{2 \eta T \hsuf(0)} + \eta \sigma^2 \intsuf(0) + \frac{4 p \eta \sigma^2}{T} , \end{align}\] where that last inequality follows by the fact that \(h(u)\) and \(\hsuf(u)\) are non-negative and the definition of \(\intsuf(u)\). ◻

References↩︎

Blavatnik School of Computer Science, Tel Aviv University;

amitattia@mail.tau.ac.il.↩︎Blavatnik School of Computer Science, Tel Aviv University, and Google Research Tel Aviv;

tkoren@tauex.tau.ac.il.↩︎A function \(f : \domain \to \reals\) is said to be \(\sm\)-smooth if \(\norm{\nabla f(x)-\nabla f(y)} \leq \sm \norm{x-y}\) for all \(x,y \in \domain\). In particular, this implies that \(\abs{f(y) - f(x) - \nabla f(x) \dotp (y-x)} \leq \frac{\sm}{2} \norm{y-x}^2\) for all \(x,y \in \domain\).↩︎

By tuned we mean a stepsize that minimize a corresponding convergence guarantee that depend on \(\eta\), possibly ignoring lower-order terms for simplicity.↩︎

We average the performance over 3 runs per learning rate.↩︎

We use the PyTorch implementation of Wide ResNet at https://github.com/bmsookim/wide-resnet.pytorch.↩︎