Measure Twice, Cut Once: Grasping Video Structures and Event Semantics with LLMs for Video Temporal Localization

March 12, 2025

Abstract

Localizing user-queried events through natural language is crucial for video understanding models. Recent methods predominantly adapt video LLMs to generate event boundary timestamps for temporal localization tasks, an approach that struggles to leverage LLMs’ powerful semantic understanding. In this work, we introduce MeCo, a novel timestamp-free framework that enables video LLMs to fully harness their intrinsic semantic capabilities for temporal localization tasks. Rather than outputting boundary timestamps, MeCo partitions videos into holistic event and transition segments based on the proposed structural token generation and grounding pipeline, derived from video LLMs’ temporal structure understanding capability. We further propose a query-focused captioning task that compels the LLM to extract fine-grained, event-specific details, bridging the gap between localization and higher-level semantics and enhancing localization performance. Extensive experiments on diverse temporal localization tasks show that MeCo consistently outperforms boundary-centric methods, underscoring the benefits of a semantic-driven approach for temporal localization with video LLMs.

1 Introduction

Localizing important temporal events based on user interests is an essential capability for video recognition systems to handle practical video tasks such as moment retrieval [1]–[4], action localization [5]–[8], video summarization [9]–[12], and dense video captioning [13]–[16]. Though specialist models used to be designed to handle each specific task, recent efforts have started leveraging video LLMs [17]–[24] to integrate these temporal localization tasks into a single framework [25]–[30].

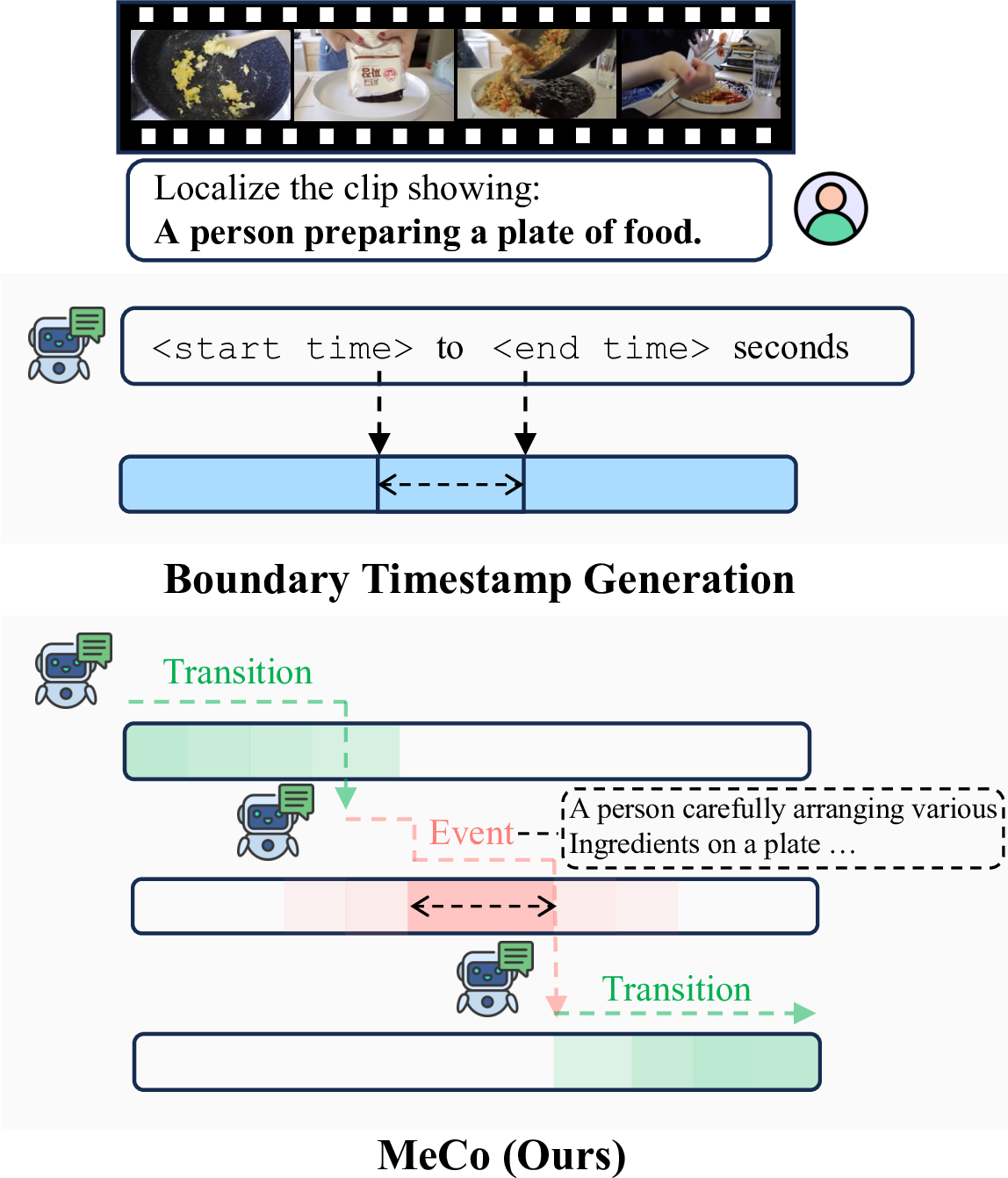

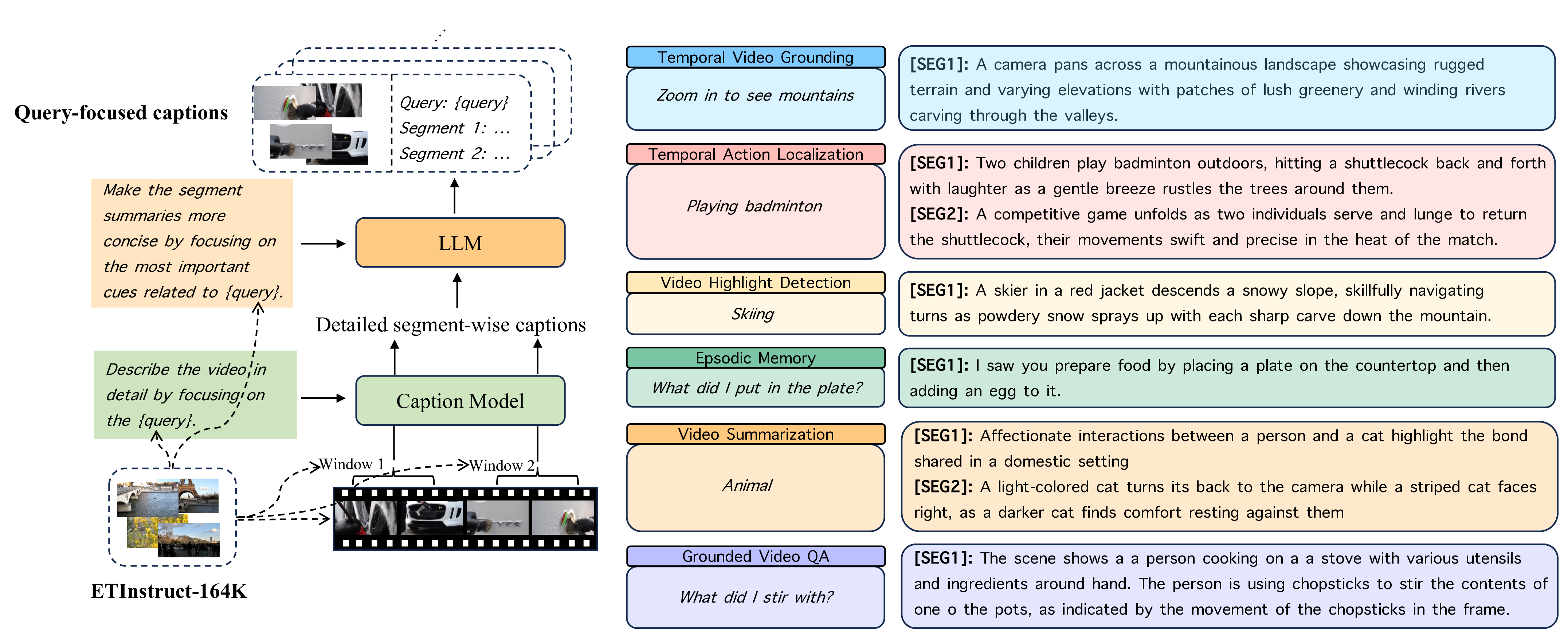

Figure 1: As opposed to previous semantic-poor boundary-centric approaches [25]–[30], MeCo leverages video LLMs to capture the temporal structure and segment the video into transition and event segments. We also tune the model to perform query-focused captioning to scrutinize the detailed event semantics for more precise localization.

To enable video LLMs to perform temporal localization, current efforts primarily focus on adapting them to capture event boundaries through intricately designed timestamp representations and compatibility mechanisms [25]–[28], [30], [31]. However, this one-shot boundary timestamp generation approach overlooks the holistic temporal structures of videos, which provide essential context for forming event segments [32]–[34]. Moreover, it neglects a detailed analysis of the targets’ semantic content, which is crucial for successful visual search [35], [36]. Technically, LLMs are intrinsically limited in generating highly uninformative outputs, such as boundary timestamps in temporal localization or mask polygons in image segmentation [37], and thus cannot fully address the target tasks unless they produce more semantically driven outputs [38]–[42]. In this work, we enhance temporal localization for video LLMs by leveraging their semantic understanding to capture the holistic temporal structures and inspect the target events for precise localization rather than merely adapting them to output boundary timestamps. An intuitive illustration of the differences between our methodology and previous works is provided in Figure 1.

Specifically, we propose structural tokens that leverage video LLMs’ temporal structure understanding [17], [21], [23] for holistic segmentation. In our approach, the LLM generates two types of tokens, an event token and a transition token, in the temporal order of significant event segments and background transitions. This process requires the model to distinguish semantic differences between events and transitions and to capture the overall narrative flow for accurate event localization. To map the structural tokens to their corresponding video segments, we propose a structural token grounding module to directly manipulate the semantic similarities between their LLM hidden states via contrastive learning [43]–[45], building on recent findings that LLM hidden states contain rich discriminative information [40], [41], [46], [47].

While the structural tokens partition the video into consecutive segments, enabling straightforward localization of queried events, we hypothesize that making the model aware of the fine-grained semantic details of such events can further enhance performance. Moreover, many downstream tasks, such as grounded question answering, require deep semantic understanding as well. To facilitate this, we design a query-focused captioning task that requires the model to generate detailed captions for the queried event segments to refine structural token grounding. These captions capture rich, query-specific semantics that not only improve temporal localization but also bolster the LLM’s complex reasoning capabilities.

The proposed framework, named MeCo, enables video LLMs to Measure twice, by considering both holistic video structure and fine-grained event semantics, before Cutting out once for all the queried event segments. This design fundamentally differs from boundary-centric approaches, making MeCo the first video LLM-based temporal localization framework that eliminates the need for generating any boundary-related information. Extensive comparisons show that MeCo outperforms previous video LLM-based temporal localization methods across grounding [4], [9], [10], [48]–[53], dense video captioning [54]–[57], and complex reasoning [4], [28], [50], [58] tasks.

2 Related Work

Video Temporal Localization Tasks. Video temporal localization tasks such as moment retrieval [4], video summarization [9], [10], [12], [59], action localization [5]–[8], and dense video captioning [13], [16], require localizing salient event segments in response to a user-specified query or prompt, often with the need for precise event boundary timestamps. Furthermore, tasks such as dense video captioning and grounded video question answering [58], [60]–[62] also involve generating captions and performing complex reasoning about these localized events. Traditionally, these tasks have been addressed by specialist models with task-specific designs and domain-specific training data. Although unified models for localization-only tasks have been proposed [1]–[3], they cannot handle generative tasks like captioning.

Video LLMs. Early efforts to enable LLMs to perform video-level tasks used LLMs as agents built on chain-of-thought reasoning and tool-use mechanisms [63]–[66]. Advances in end-to-end multimodal pretraining [43], [67], [68] and instruction tuning [69]–[72] have led to the development of powerful video LLMs [17]–[24], [73]–[76]. Recent studies have shown that these models excel at temporal reasoning over very long videos, benefiting from LLMs’ long-context semantic retrieval and structural understanding abilities [21]–[24]. However, while they are effective for general video understanding tasks such as captioning and question answering, they do not address tasks that require event temporal localization.

Temporal Localization Video LLMs. Recent developments in temporal localization video LLMs have enabled unified approaches for both localization and generation tasks. Models such as TimeChat [25] and VTimeLLM [29] fine-tune pre-trained video LLMs to output numeric tokens that represent event boundary timestamps. Subsequent works [26], [27], [30], [31] augment the LLM’s vocabulary with learnable timestamp tokens. For example, VTG-LLM unifies the timestamp token lengths via padding [27]. Building on this, TRACE utilizes a specialized timestamp encoder and decoder [30]. Observing that LLMs struggle with numeric tokens and a large number of newly introduced tokens, E.T.Chat [28] instead fine-tunes LLMs on a boundary embedding matching task using a single newly introduced token. As a result, current methods invariably concentrate on event segment boundaries, which, especially for longer events, fail to capture the rich semantic content essential for precise localization.

Building on these insights, we propose a novel framework that leverages LLMs’ intrinsic semantic understanding to overcome the limitations of boundary-centric methods. Instead of generating explicit timestamp boundaries, the proposed structural tokens partition videos into consecutive segments, which are further refined by query-focused captioning. This dual strategy, capturing both the overall temporal structure and fine-grained event semantics, represents a significant departure from existing approaches and delivers superior temporal localization performance.

3 Preliminary

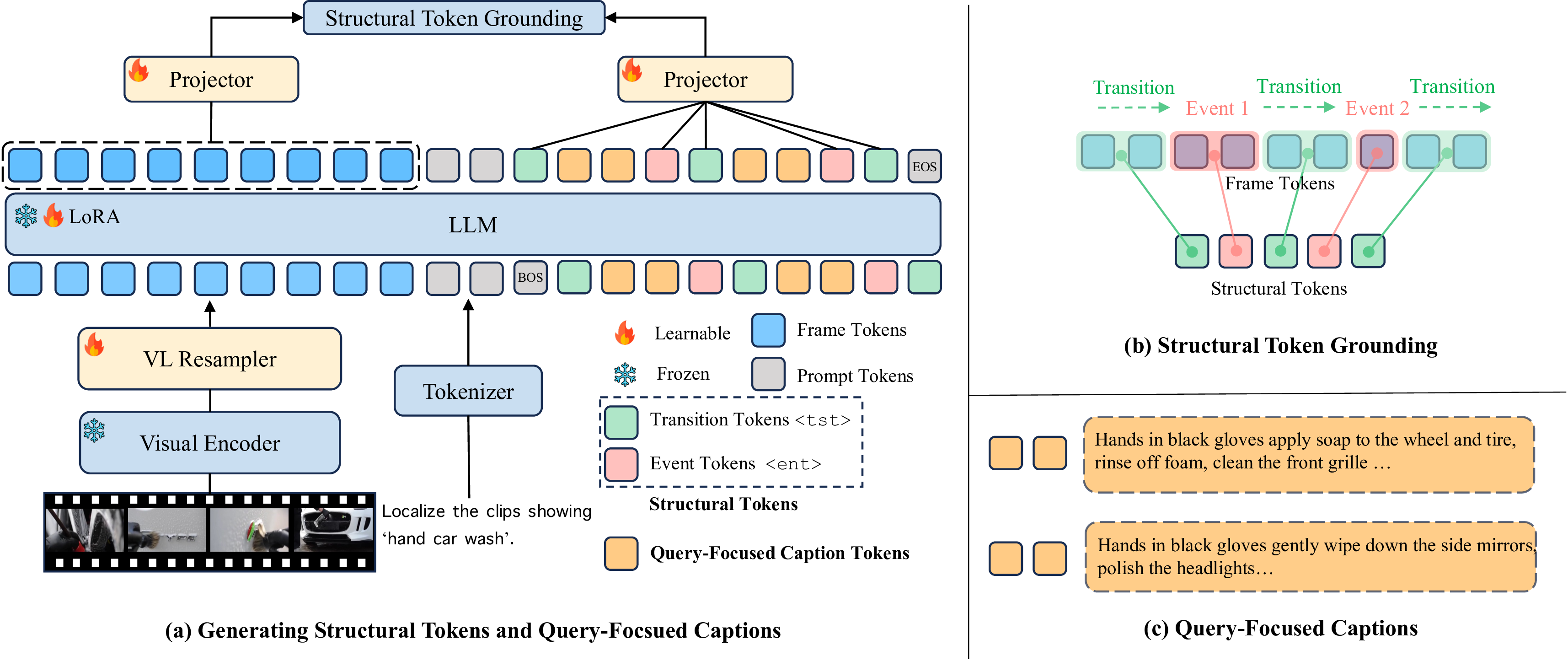

Figure 2: An overview of the proposed MeCo framework. Given an input video and a localization-aware user prompt, MeCo generates structural tokens, including the event token <ent> and the transition token <tst>, to facilitate holistic temporal segmentation via structural token grounding. MeCo also generates query-focused captions, right before generating the <ent> token, to retrieve the semantic details in the queried segments for improving structural token-based localization performance.

Video temporal localization involves understanding user-specified events and determining their temporal boundaries. In simpler tasks [4], [6], [53], the model outputs start and end timestamps for each event, denoted as \(\xtime = \{(t_{i}^{s}, t_{i}^{e})\}^{M}_{i=1}\), where the value of \(M\) varies by task and dataset; e.g., some temporal grounding tasks [48] require \(M=1\), while extractive video summarization [9], [10] usually requires \(M>1\). More complicated tasks like dense video captioning [13] require the model to reason about which events to localize and generate responses \(\xtext = \{\vx_n\}_{n=1}^{N}\) to the user prompt [16], [62], where \(N\) is the number of tokens in the response.

Traditionally, \(\xtime\) and \(\xtext\) are handled by their corresponding specialized models. With the advent of video LLMs designed for temporal localization tasks [16], [25]–[31], \(\xtime\) can be generated in the form of LLM tokens via numeric or specific timestamp tokens and can thus share the same output space with \(\xtext\). Specifically, such temporal localization video LLMs usually start with a visual encoder and a resampler that extract a set of frame feature maps \(\{\mF_t\}_{t=1}^{T}\) from a \(T\)-frame video, where a frame feature map \(\mF_t \in \Rbb^{P \times C}\) has \(P\) token embedding vectors, each of dimension \(C\). An LLM decoder takes \(\{\mF_t\}_{t=1}^{T}\) and the tokenized user prompt \(\{\vq_l\}_{l=1}^{L}\) as input and generates timestamp tokens as well as textual tokens \(\mX=\{\xtime, \xtext\}\). Given temporal localization tuning data, a pre-trained video LLM will be fine-tuned with the language modeling loss: \[\cL_{\text{LM}}(\mX) = -\frac{1}{N}\sum_{n=1}^{N} \log p(\mX_n| \{\mF_t\}_{t=1}^{T}, \{\vq_l\}_{l=1}^{L}, \mX_{<n}), \label{eq:lm}\tag{1}\] where \(\mX_n\) is the \(n\)-th token in \(\mX\), \(\mX_{<n} = \{\mX_{n'}\}^{n-1}_{n'=1}\), and \(N\) is now the total number of tokens in the combined sequence of timestamp and textual tokens.
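As a small worked illustration of Eq. (1), the sketch below computes the mean negative log-likelihood from per-token probabilities. The function name `lm_loss` and the probability values are hypothetical; in practice the probabilities come from the LLM's softmax output conditioned on the video, the prompt, and the preceding tokens.

```python
import math

def lm_loss(token_probs):
    """Mean negative log-likelihood of the target tokens, as in Eq. (1).

    token_probs[n] is the probability assigned to the n-th ground-truth
    token given the video features, the prompt, and all earlier tokens.
    """
    n = len(token_probs)
    return -sum(math.log(p) for p in token_probs) / n

# Hypothetical probabilities for a 4-token target sequence.
probs = [0.9, 0.5, 0.8, 0.25]
loss = lm_loss(probs)
```

Confident predictions (probabilities near 1) contribute almost nothing to the loss, while low-probability target tokens dominate it.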

4 Method

LLMs struggle with numeric tokens [28], [77], [78] and require extensive pre-training to adapt to new tokens [16], [41], [42]. Moreover, fixating on event boundaries, which lack inherent semantic content, fails to leverage LLMs’ semantic understanding.

To fully exploit video LLMs’ potential in temporal localization, we propose to fine-tune them on a structural token generation task that induces video LLMs’ temporal structure understanding. The generated structural tokens enable holistic segmentation through a structural token grounding step, which readily yields the queried event segments. However, generating structural tokens alone does not capture the fine-grained semantics of the events, potentially bottlenecking the localization performance. To overcome this limitation, we introduce a query-focused captioning task that compels the LLM to extract detailed event semantics for more precise localization. An overview of the proposed pipeline is shown in Figure 2.

4.1 Structural Token Generation

While watching a video, we naturally segment its narrative into distinct events, which facilitates efficient retrieval of the content we care about [32]–[36]. This holistic structural understanding, especially when transition segments that carry only background information are explicitly modeled, has been crucial for specialist action localization methods [79]–[82]. However, these approaches often represent all transition segments with a single prototype due to limited model capacity, thereby overlooking their temporal dynamics and the subtle semantic differences from the key events. Although video LLMs have demonstrated excellent temporal structure understanding for general video tasks [21]–[24], current video LLM-based temporal localization methods still fail to fully exploit this capability.

To induce the holistic structural understanding from video LLMs for temporal localization tasks, we propose to fine-tune them on the structural token generation task, which enables them to distinguish between event and transition segments, represented by their corresponding structural tokens <ent> and <tst>, which are newly introduced into the LLM vocabulary. Specifically, during training, given a \(T\)-frame video together with a set of \(M\) ground-truth queried event segments depicted by their boundary timestamps \(\{(t_{i}^{s}, t_{i}^{e})\}^{M}_{i=1}\), we can augment them with their neighboring transition segments to form an augmented set of segments \(\{(t_{i}^{s}, t_{i}^{e})\}^{M'}_{i=1}\), where \(M'\) is the total number of both the event and the transition segments. It holds that \(t_{1}^{s}=1\), \(t^{e}_{M'}=T\) and \(t^{e}_{i}+1=t^{s}_{i+1}\), such that the segments cover the entire video. Let \(\cI_{\text{ent}}\) be a set of indices of the queried event segments; the sequence of structural tokens can be defined as \(\xst=\{\text{ST}(i)\}_{i=1}^{M'}\) with \[\text{ST}(i) = \begin{cases} \texttt{<ent>} & \text{if } i \in \cI_{\text{ent}}, \\ \texttt{<tst>} & \text{otherwise }, \end{cases}\] and we call <ent> the event token and <tst> the transition token from now on.
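The augmentation described above can be sketched as follows. This is a minimal illustration, assuming the ground-truth events are given as sorted, non-overlapping, 1-indexed inclusive frame ranges; the helper name `build_structural_tokens` is hypothetical and not taken from the paper.

```python
def build_structural_tokens(events, num_frames):
    """Augment event segments with transition segments covering frames 1..T.

    events: sorted, non-overlapping (start, end) frame pairs,
            1-indexed and inclusive (an assumption of this sketch).
    Returns (segments, tokens), where tokens[i] labels segments[i].
    """
    segments, tokens = [], []
    cursor = 1  # first frame not yet assigned to any segment
    for start, end in events:
        if start > cursor:  # the gap before this event is a transition
            segments.append((cursor, start - 1))
            tokens.append("<tst>")
        segments.append((start, end))
        tokens.append("<ent>")
        cursor = end + 1
    if cursor <= num_frames:  # trailing transition up to the last frame
        segments.append((cursor, num_frames))
        tokens.append("<tst>")
    return segments, tokens

segments, tokens = build_structural_tokens([(3, 5), (8, 10)], num_frames=12)
```

By construction the first segment starts at frame 1, the last ends at frame \(T\), and each segment starts right after the previous one ends, matching the coverage conditions stated above.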

4.2 Structural Token Grounding

To ground the structural tokens to their corresponding video segments, we maximize the log-likelihood of the structural tokens with respect to their corresponding segment frames. Formally, given the projected LLM hidden states of the segment frames \(\{\mH_{t}\}_{t=1}^{T}\) and the structural tokens \(\{\mathbf{s}_{i}\}_{i=1}^{M'}\) from two learnable MLP projectors [28], where \(\mH_{t}\in\Rbb^{P \times C}\) and \(\mathbf{s}_{i} \in \Rbb^{C}\), the structural token grounding loss can be formulated as: \[\begin{align} \cL_{\text{ST}} = -\frac{1}{M'}\sum_{i=1}^{M'} \displaystyle\sum_{t=t_{i}^{s}}^{t_{i}^{e}} \frac{\log p(\vh_{t}| \mathbf{s}_{i})}{t_{i}^e - t_{i}^s},\label{eq:st} \end{align}\tag{2}\] where \(\vh_{t}\in \Rbb^{C}\) is spatially average-pooled from \(\mH_{t}\), and both \(\vh_{t}\) and \(\mathbf{s}_{i}\) are normalized to the unit sphere following [43], [44]. \(p(\vh_{t}| \mathbf{s}_{i})\) is the conditional probability of frame \(t\) given the structural token \(i\) and is computed as: \[p(\vh_{t}| \mathbf{s}_{i}) = \frac{\exp(\mathbf{s}_{i} {\cdot} \vh_{t} / \tau)}{\sum_{t'=1}^{T} \exp( \mathbf{s}_{i} {\cdot} \vh_{t'} / \tau)}, \label{eq:cond}\tag{3}\] where \(\tau\) is a learnable temperature parameter [43]. This essentially makes Eq. (2) a contrastive learning objective [43], [44] that pulls together the structural tokens and the corresponding segment frames. We also preliminarily attempted the symmetric version of Eq. (2) by including \(p(\mathbf{s}_{i}|\vh_{t})\), similar to [43], but observed unstable training, likely due to the problem setting and the optimization factors, which we leave for future exploration.
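To make Eqs. (2) and (3) concrete, the following is a minimal pure-Python sketch of the grounding loss. It is illustrative only: the temperature is fixed at a hypothetical value of 0.07 rather than learned, the inputs are plain lists standing in for the projected hidden states, and the `max(..., 1)` guard for single-frame segments is an assumption of this sketch, not something stated in the text.

```python
import math

def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def log_p_frames_given_token(s, frames, tau):
    """log p(h_t | s_i) for every frame t: a log-softmax over frames (Eq. 3)."""
    logits = [sum(a * b for a, b in zip(s, h)) / tau for h in frames]
    m = max(logits)
    log_z = m + math.log(sum(math.exp(l - m) for l in logits))
    return [l - log_z for l in logits]

def grounding_loss(tokens, frames, segments, tau=0.07):
    """Structural token grounding loss (Eq. 2).

    tokens[i]:   projected embedding of the i-th structural token.
    frames[t-1]: pooled, projected embedding of frame t.
    segments[i]: (start, end) frames of segment i, 1-indexed and inclusive.
    tau:         fixed here for simplicity; learnable in the paper.
    """
    frames = [normalize(h) for h in frames]
    total = 0.0
    for s, (start, end) in zip(tokens, segments):
        log_p = log_p_frames_given_token(normalize(s), frames, tau)
        seg_sum = sum(log_p[t - 1] for t in range(start, end + 1))
        # Eq. (2) divides by t_e - t_s; guarding against a zero divisor
        # for one-frame segments is an assumption of this sketch.
        total += seg_sum / max(end - start, 1)
    return -total / len(tokens)

frames = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
segments = [(1, 2), (3, 4)]
aligned = grounding_loss([[1.0, 0.0], [0.0, 1.0]], frames, segments)
swapped = grounding_loss([[0.0, 1.0], [1.0, 0.0]], frames, segments)
```

In this toy setup, token embeddings that point toward their own segment's frames give a much lower loss than swapped embeddings, which is the behavior the contrastive objective is meant to encourage.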

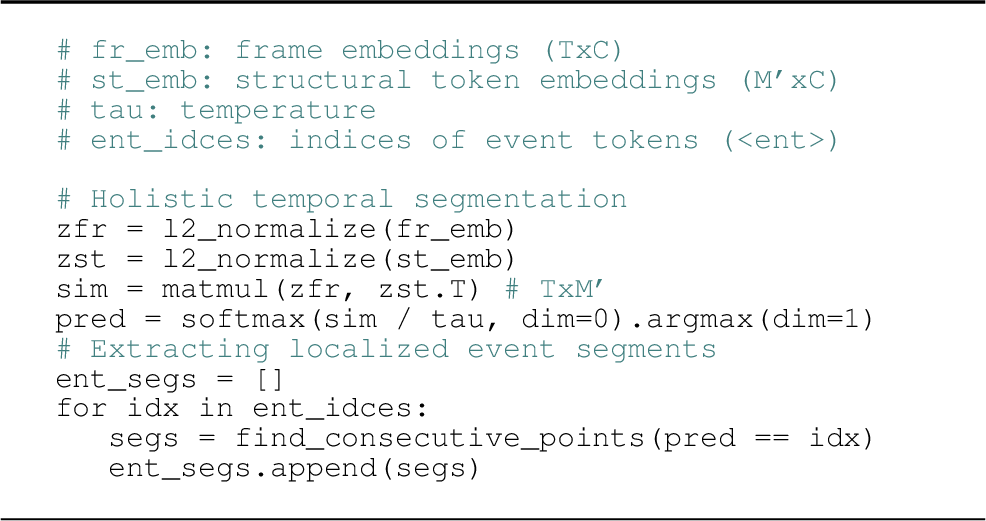

During inference, we compute Eq. (3) for all frames with respect to each structural token. We then obtain holistic temporal segmentation by assigning each frame to the structural token that leads to the highest conditional probability, which directly yields the queried event segments via the event tokens <ent>. The pseudocode of MeCo inference is provided in Figure 3. Consequently, our approach enables video LLMs to perform temporal localization by leveraging semantic structure: the LLM never needs to process uninformative boundary timestamps, which arise only as by-products of structural token-based localization.

Figure 3: Pseudocode of MeCo Inference.
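The inference procedure described above can be sketched in a few lines of Python. This is an illustrative reading of the text, not the authors' pseudocode: the function name `localize`, the fixed temperature, and the choice to report each event as the span from its first to its last assigned frame are all assumptions.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    z = sum(exps)
    return [e / z for e in exps]

def localize(token_embs, token_types, frame_embs, tau=0.07):
    """Assign each frame to a structural token and return event segments.

    token_embs, frame_embs: unit-normalized embeddings (lists of floats).
    token_types: "<ent>" or "<tst>" for each structural token, in order.
    Returns (start, end) frame indices, 1-indexed and inclusive.
    """
    # probs[i][t] = p(h_t | s_i): a softmax over frames for token i (Eq. 3).
    probs = [
        softmax([sum(a * b for a, b in zip(s, h)) / tau for h in frame_embs])
        for s in token_embs
    ]
    num_frames = len(frame_embs)
    # Each frame goes to the token under which it is most probable.
    owner = [
        max(range(len(token_embs)), key=lambda i: probs[i][t])
        for t in range(num_frames)
    ]
    segments = []
    for i, kind in enumerate(token_types):
        if kind != "<ent>":
            continue  # transition tokens only shape the segmentation
        hits = [t + 1 for t in range(num_frames) if owner[t] == i]
        if hits:
            # Reporting first-to-last assigned frame is an assumption.
            segments.append((hits[0], hits[-1]))
    return segments

token_embs = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
token_types = ["<tst>", "<ent>", "<tst>"]
frame_embs = [[1.0, 0.0, 0.0]] * 2 + [[0.0, 1.0, 0.0]] * 3 + [[0.0, 0.0, 1.0]]
events = localize(token_embs, token_types, frame_embs)
```

Note that boundaries are never generated by the model here; they fall out of the frame-to-token assignment.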

4.3 Query-focused Captioning

Just as humans re-watch a clip to pinpoint details of interest, we believe that relying solely on structural tokens without scrutinizing event semantics hinders the model’s localization and reasoning capabilities. While “think step by step” strategies have boosted performance in tasks like math and coding [38], [83], how to leverage them to enhance temporal localization-related tasks remains an open problem.

To overcome this limitation, we introduce a query-focused captioning task that trains the model to generate detailed captions focusing on the queried segments. The task applies to a suite of temporal localization tasks, such as temporal grounding [4] and extractive video summarization [9], [10], to exploit the synergistic benefits of their respective data. An example of query-focused captions is provided in Figure 2, and more are available in the supplementary material.

Essentially, query-focused captioning enables LLMs to extract detailed semantic information from the queried events, which we consider the key to improving temporal localization performance. We leverage query-focused captioning by having the LLM generate the detailed captions for a queried event right before it produces its corresponding event token (i.e., <ent>). This effectively guides the <ent> token to encode more semantic information about the event, so that it can more precisely localize the event segment.

The overall tokens that the LLM needs to generate now become the interleaved sequence of the structural tokens and the query-focused caption tokens \(\mX_{\text{MeCo}}=\{\text{QFC}(i), \text{ST}(i)\}_{i=1}^{M'}\) with \[\text{QFC}(i) = \begin{cases} \texttt{[Cap]}_{i} & \text{if } i \in \cI_{\text{ent}}, \\ \varnothing & \text{otherwise}, \end{cases}\] where \(\texttt{[Cap]}_{i}\) encloses all the query-focused caption tokens for the \(i\)-th event segment, and \(\varnothing\) means no token is placed (we left out the end-of-sequence token for notation clarity). Overall, the LLM training objective is the combination of the structural token grounding loss and the auto-regressive generation loss, i.e., \(\mathcal{L}_{\text{ST}} + \mathcal{L}_{\text{LM}}(\mX_{\text{MeCo}})\), where \(\mathcal{L}_{\text{LM}}\) is defined in Eq. (1).
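The interleaving of captions and structural tokens can be illustrated with a short sketch. The helper `build_target`, the space-separated output format, and the example caption are hypothetical; the actual response template used for training is not specified here.

```python
def build_target(tokens, captions):
    """Interleave query-focused captions with structural tokens.

    tokens:   structural tokens in temporal order ("<ent>" or "<tst>").
    captions: one caption per "<ent>" token, in the same order.
    """
    caps = iter(captions)
    parts = []
    for tok in tokens:
        if tok == "<ent>":
            parts.append(next(caps))  # the caption precedes its event token
        parts.append(tok)  # transition tokens carry no caption
    return " ".join(parts)

target = build_target(
    ["<tst>", "<ent>", "<tst>"],
    ["A person pours milk into a bowl."],
)
```

Placing the caption immediately before <ent> means that, under autoregressive decoding, the hidden state of the event token can attend to the caption it has just generated.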

Interestingly, we find that although the query-focused captioning task prominently contributes to the structural-token-based temporal localization, it can barely be exploited by boundary-centric timestamp generation methods [25], [28]. This indicates the importance of focusing on the semantic understanding capabilities of LLMs to explore their full potential in temporal localization tasks.

5 Experiments

[TABLE]

Table 1: Zero-shot performance comparisons on E.T.Bench [28] with previous methods. The full names of each task appear in Section 5.1. For general video LLMs, the reported results come from timestamp-aware prompting in [28]. “I.T. Data” refers to instruction tuning data used in the temporal localization tuning stage, which may include both localization-specific and other datasets. For temporal localization video LLMs, methods marked with \(\dagger\) are evaluated using their officially released checkpoints, while those marked with \(\ddag\) are fine-tuned on E.T.Instruct [28] (LoRA rank 128) for one epoch. When trained on E.T.Instruct, all models start from their official checkpoints at the final pre-training stage, before any temporal localization tuning. Metrics shown in gray are not zero-shot results, indicating that the model accessed the training data of the corresponding evaluation dataset in E.T.Bench. The best metrics are highlighted in green, and the second-best metrics in blue.

5.1 Benchmarks

We focus on evaluating MeCo’s zero-shot performance on three benchmarks: E.T.Bench [28], Charades-STA [48] and QVHighlights [4].

E.T.Bench is a comprehensive benchmark comprising a suite of event-level and time-sensitive tasks, spanning four domains: referring, grounding, dense captioning, and complex temporal reasoning. Since the referring domain does not involve temporal localization, we focus on the other three domains where temporal localization serves either as the primary or an auxiliary task. Specifically, the grounding domain includes five tasks: Temporal Video Grounding (TVG) [4], [48], Episodic Memory (EPM) [49], Temporal Action Localization (TAL) [50]–[52], Extractive Video Summarization (EVS) [9], [10], and Video Highlight Detection (VHD) [9], [10]. The dense captioning domain consists of two tasks: Dense Video Captioning (DVC) [54], [55] and Step Localization and Captioning (SLC) [56], [57]. Finally, the complex temporal reasoning domain involves two tasks: Temporal Event Matching (TEM) [4], [50] and Grounded Video Question Answering (GVQ) [58]. We directly apply the evaluation metrics provided in E.T.Bench.

Charades-STA and QVHighlights are widely adopted benchmarks for evaluating temporal moment retrieval and video highlight detection. Although E.T.Bench tasks such as TVG, VHD, and TEM include data from these two benchmarks, we report additional results directly on the original benchmarks to facilitate comparisons with existing methods. Specifically, the simplified single-segment evaluation used by E.T.Bench reduces difficulty for tasks such as temporal grounding/moment retrieval and highlight detection, especially on QVHighlights, which often contains samples with multiple ground-truth event segments.

We adopt the standard metrics for these benchmarks: for Charades-STA [48], we use recall at temporal Intersection over Union thresholds of 0.5 (R@1\(_{0.5}\)) and 0.7 (R@1\(_{0.7}\)); for QVHighlights, we use mean Average Precision (mAP) for moment retrieval (MR) and highlight detection (HL), along with HIT@0.1, indicating the accuracy of retrieving the top relevant highlights.
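For reference, the recall metric on Charades-STA can be sketched as below: a prediction counts as a hit when its temporal IoU with the ground truth reaches the threshold. The function names and the example intervals are hypothetical, and this sketch is not the official evaluation code of either benchmark.

```python
def temporal_iou(pred, gt):
    """IoU of two (start, end) intervals, e.g. in seconds."""
    inter = max(0.0, min(pred[1], gt[1]) - max(pred[0], gt[0]))
    union = (pred[1] - pred[0]) + (gt[1] - gt[0]) - inter
    return inter / union if union > 0 else 0.0

def recall_at_1(preds, gts, threshold):
    """Fraction of queries whose top-1 prediction reaches the IoU threshold."""
    hits = sum(temporal_iou(p, g) >= threshold for p, g in zip(preds, gts))
    return hits / len(gts)

# Hypothetical top-1 predictions and ground-truth segments for two queries.
preds = [(2.0, 8.0), (0.0, 5.0)]
gts = [(3.0, 9.0), (4.0, 10.0)]
r_05 = recall_at_1(preds, gts, threshold=0.5)
r_07 = recall_at_1(preds, gts, threshold=0.7)
```

In this example the first prediction overlaps its target with an IoU of 5/7 and the second with an IoU of 1/10, so only the first query counts as a hit at either threshold.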

5.2 Implementation Details

Fine-tuning dataset. Though different works often collect their own data for temporal localization instruction fine-tuning [7], [25]–[27], [29], [30], we choose to utilize the E.T.Instruct dataset with 164K samples [28] as it covers a wide range of temporal localization tasks, including grounding, dense captioning, and complex reasoning tasks. More importantly, training on E.T.Instruct allows us to guarantee zero-shot evaluations on E.T.Bench, as data leakage has been avoided in the data collection process for E.T.Instruct.

Query-focused caption generation. As query-focused captioning is a novel task and there is currently no such dataset available, we leverage the ground-truth event timestamps in E.T.Instruct to extract event clips, which are then sent to a pre-trained video captioning model, MiniCPM-V-2.6 [75], to generate detailed clip captions. Because these initial captions are often very detailed and contain redundant information, we employ GPT-4o-mini [84] to summarize them into concise versions that preserve key details not mentioned in the original queries (if provided). Further details regarding the generation pipeline are presented in the supplementary material.

Model Architecture. We develop and evaluate MeCo using the E.T.Chat architecture [28], which employs a pre-trained ViT-G/14 from EVA-CLIP [85] as the visual encoder, and a resampler consisting of a pre-trained Q-Former [68] followed by a frame compressor [28] that produces one token per video frame. When using Phi-3-Mini-3.8B [86] as the base LLM, we build on the pre-trained E.T.Chat-Stage-2 model [28] for instruction fine-tuning. We also adopt the Qwen2 model [73] from MiniCPM-V-2.6 [75] as the base LLM, following Stage 1 & 2 training in [28] to pre-train it before temporal localization fine-tuning. During fine-tuning, we follow [28] to apply LoRA adapters [87] to the LLM and train them together with the resampler for one epoch, while freezing all other parameters. Additionally, we test MeCo on other architectures, such as those used in TimeChat [25] and VTG-LLM [27], for controlled comparisons. Only minimal changes are made to replace the original timestamp generation modules with MeCo without further developmental enhancements. Additional details about the E.T.Chat-based model and other architectures appear in the supplementary material.

5.3 Main Results

E.T.Bench: Comprehensive comparisons. As shown in Table 1, although previous temporal localization video LLMs demonstrate promising zero-shot results compared to general video LLMs after temporal localization fine-tuning, they still underperform on most tasks compared to MeCo. Specifically, MeCo (3.8B) achieves substantial gains in all domains: for example, 59.1% vs. 44.3% on TVG\(_{F1}\) (grounding), 43.4% vs. 39.7% on DVC\(_{F1}\) (dense captioning), and 9.6% vs. 3.7% on GVQ\(_{Rec}\) (complex reasoning). Notably, many competing models use larger base LLMs (e.g., 7B or 13B) and train for considerably more steps (e.g., VTG-LLM [27] on 217K samples for 10 epochs, TRACE [30] on 900K samples for 2 epochs with full-parameter fine-tuning). This outcome demonstrates that MeCo better leverages video LLMs’ semantic understanding for temporal localization than boundary-centric methods. Furthermore, when MeCo uses a more powerful base LLM (Qwen2-7B), its performance consistently improves on most tasks, reinforcing its scalability and effectiveness.

E.T.Bench: Comparisons with E.T.Instruct fine-tuning. When fine-tuned on E.T.Instruct, VTG-LLM [27] retains performance levels comparable to its original setting. Although TimeChat [25] shows notable gains on most tasks, it still underperforms MeCo by a substantial margin. Meanwhile, TRACE [30] suffers the greatest performance drop, likely because its specialized timestamp encoder/decoder and newly introduced timestamp tokens demand extensive tuning for effective adaptation with the LLM. In contrast, MeCo relies only on two structural tokens, <ent> and <tst>, and emphasizes semantic understanding, an area where LLMs naturally excel, making it more amenable to efficient fine-tuning. Although E.T.Chat’s boundary matching mechanism does not require the LLM to generate timestamps, it overemphasizes uninformative boundaries and therefore fails to sufficiently capture the semantic information of events, resulting in inferior performance compared to MeCo.

[TABLE]

Table 2: Zero-shot performance on Charades-STA and QVHighlights. Detailed information on the E.T.Instruct fine-tuning setting, as well as the meanings of \(\dagger\) and \(\ddag\), can be found in the caption of Table 1. “MR” denotes Moment Retrieval, and “HL” stands for Highlight Detection.

Moment retrieval. As shown in Table 2, MeCo outperforms most existing methods on the Charades-STA benchmark, with only R@1\(_{0.7}\) on par with E.T.Chat [28] and TRACE [30]. However, Charades-STA contains relatively short videos (an average duration of 30 seconds [48]) and each video features only a single ground-truth segment, making it less challenging.

In contrast, QVHighlights consists of longer videos (about 150 s) and may contain one or more ground-truth segments per video. As MeCo is trained on E.T.Instruct, which does not include multi-segment moment retrieval data, we generalize MeCo to multi-segment retrieval by thresholding the semantic similarities between <ent> and frame tokens (Eq. (3)) to produce one or more connected segments. As seen in Table 2 (E.T.Instruct fine-tuning setting), previous methods that have not encountered multi-segment retrieval data fail to generalize to this task, as evidenced by their low mAP\(_{\text{MR}}\), because they generate discrete timestamp tokens rather than leveraging semantic similarities.
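The thresholding step used for multi-segment retrieval can be sketched as follows. The function name `segments_from_scores`, the example scores, and the threshold value 0.5 are hypothetical; the threshold actually used is not stated in this section.

```python
def segments_from_scores(scores, threshold):
    """Merge contiguous frames whose score exceeds the threshold.

    scores[t-1] is the similarity between the <ent> token and frame t.
    Returns (start, end) frame indices, 1-indexed and inclusive.
    """
    segments, start = [], None
    for t, score in enumerate(scores, start=1):
        if score > threshold and start is None:
            start = t  # a new segment opens at this frame
        elif score <= threshold and start is not None:
            segments.append((start, t - 1))  # the segment closed one frame ago
            start = None
    if start is not None:  # a segment runs to the end of the video
        segments.append((start, len(scores)))
    return segments

scores = [0.1, 0.8, 0.9, 0.2, 0.7, 0.75]
segments = segments_from_scores(scores, threshold=0.5)
```

Because the scores are continuous, a single <ent> token can yield several disjoint segments, which is what allows generalization to multi-segment queries without multi-segment training data.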

Highlight detection. For the QVHighlights highlight detection evaluation, we directly use the continuous semantic similarities derived from Eq. (3) for scoring. It is therefore unsurprising that MeCo achieves much higher performance in mAP\(_{\text{HL}}\) and HIT@0.1 than previous methods, which generate numeric tokens to approximate highlight scores without leveraging the underlying semantic information.

[TABLE]

Table 3: Zero-shot comparisons between contrastive vision–language models and video LLMs. For each contrastive model, we compute the cosine similarities between the localization query feature and the frame features (sampled at 1 fps). We then apply a threshold to these similarity scores and merge contiguous points above the threshold as localized segments.

[TABLE]

Table 4: Replacing boundary-centric localization with MeCo. The original boundary-centric strategies in representative methods are replaced with MeCo for a more controlled comparison. Both the MeCo-adapted models and the original models are trained on E.T.Instruct for one epoch with LoRA adapters.

[TABLE]

Table 5: Ablations on the necessity of the <tst> token and query-focused captioning (QFC). Here, “Query Copying” indicates that the model is trained to replicate the localization query from the prompt instead of performing QFC. Whenever <tst> is omitted, we identify segments by applying a fixed threshold (same for all tasks) to the cosine similarities between <ent> and frame tokens, and then merging contiguous points above this threshold.

5.4 Detailed Analysis

In this section, all experiments are conducted on E.T.Bench, and for all related analyses we use the MeCo (3.8B) variants trained on E.T.Instruct, unless otherwise specified. Except in Table 3, all metrics are reported as the average across all tasks within the corresponding domain.

Semantic-based methods excel; LLMs amplify. MeCo leverages LLMs’ semantic understanding to capture video temporal structure and fine-grained semantics for localization. However, contrastively trained vision-language models without generation capability, such as CLIP [43] and EVA-CLIP [85], also exhibit strong semantic discriminative power. As shown in Table 3, these models perform impressively on grounding tasks without any additional training, even surpassing the previous best temporal localization video LLMs on the EPM and EVS tasks while remaining competitive with them on other tasks. This provides strong evidence that semantic-based approaches are highly effective for temporal localization, and by harnessing LLMs’ capabilities, MeCo further amplifies this strength.

Replacing boundary-centric methods with MeCo yields consistent benefits. To isolate the benefits of MeCo, we compare it against boundary-centric methods under each of their settings. As shown in Table 4, MeCo consistently outperforms the original methods across all tasks. Note that aside from the E.T.Chat setting, we have not yet fully explored MeCo’s potential in each configuration; we leave the investigation of its compatibility with various base LLMs for future work.

The necessity of both holistic and localized understanding. As shown in Table 5, optimizing the structural token grounding loss without the transition tokens (<tst>), with segments derived via thresholding, yields significantly poorer performance than when <tst> is used. Notably, <ent> tokens begin to take effect once query-focused captioning is introduced; however, replacing query-focused captioning with an uninformative query copying task [30] reduces performance to the level achieved using only <ent> tokens. By combining holistic structural information via <tst> tokens with localized details from query-focused captioning, MeCo achieves the best performance.
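The role of <tst> tokens can be illustrated with a small sketch of threshold-free segmentation. It assumes, as a simplification of the grounding pipeline, that each frame is assigned to its most similar structural token and that consecutive frames sharing a token form one segment; the function name and the toy similarity values are hypothetical.

```python
def segments_from_structural_tokens(sim, token_types):
    """sim[k][t] is the similarity between structural token k and frame t;
    token_types[k] is "ent" or "tst". Each frame goes to its most similar
    token (argmax), and runs of frames sharing a token become one segment."""
    num_tokens, num_frames = len(sim), len(sim[0])
    assign = [max(range(num_tokens), key=lambda k: sim[k][t])
              for t in range(num_frames)]
    segments, start = [], 0
    for t in range(1, num_frames + 1):
        # Close the current run at the end of the video or when the token changes.
        if t == num_frames or assign[t] != assign[start]:
            segments.append((token_types[assign[start]], start, t - 1))
            start = t
    return segments

# Toy similarities for a transition, event, transition layout over 6 frames.
token_types = ["tst", "ent", "tst"]
sim = [
    [0.9, 0.8, 0.2, 0.1, 0.1, 0.2],  # first <tst>
    [0.1, 0.3, 0.9, 0.8, 0.3, 0.1],  # <ent>
    [0.0, 0.1, 0.1, 0.3, 0.8, 0.9],  # second <tst>
]
segments = segments_from_structural_tokens(sim, token_types)
events = [(s, e) for kind, s, e in segments if kind == "ent"]
print(segments, events)
```

Because every frame must belong to either an event or a transition, the event boundaries fall out of the competition between tokens rather than from a hand-tuned threshold on a single <ent> score.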

Boundary-centric methods fail to leverage query-focused captioning. As shown in Table 6, boundary-centric methods that focus solely on generating numeric timestamps cannot exploit the rich semantic cues contained in the query-focused captions. In contrast, the structural tokens of MeCo effectively leverage this detailed information to enhance performance in both localization and complex reasoning. The success of MeCo therefore demonstrates that discriminative and generative learning can interact effectively to facilitate temporal localization tasks.

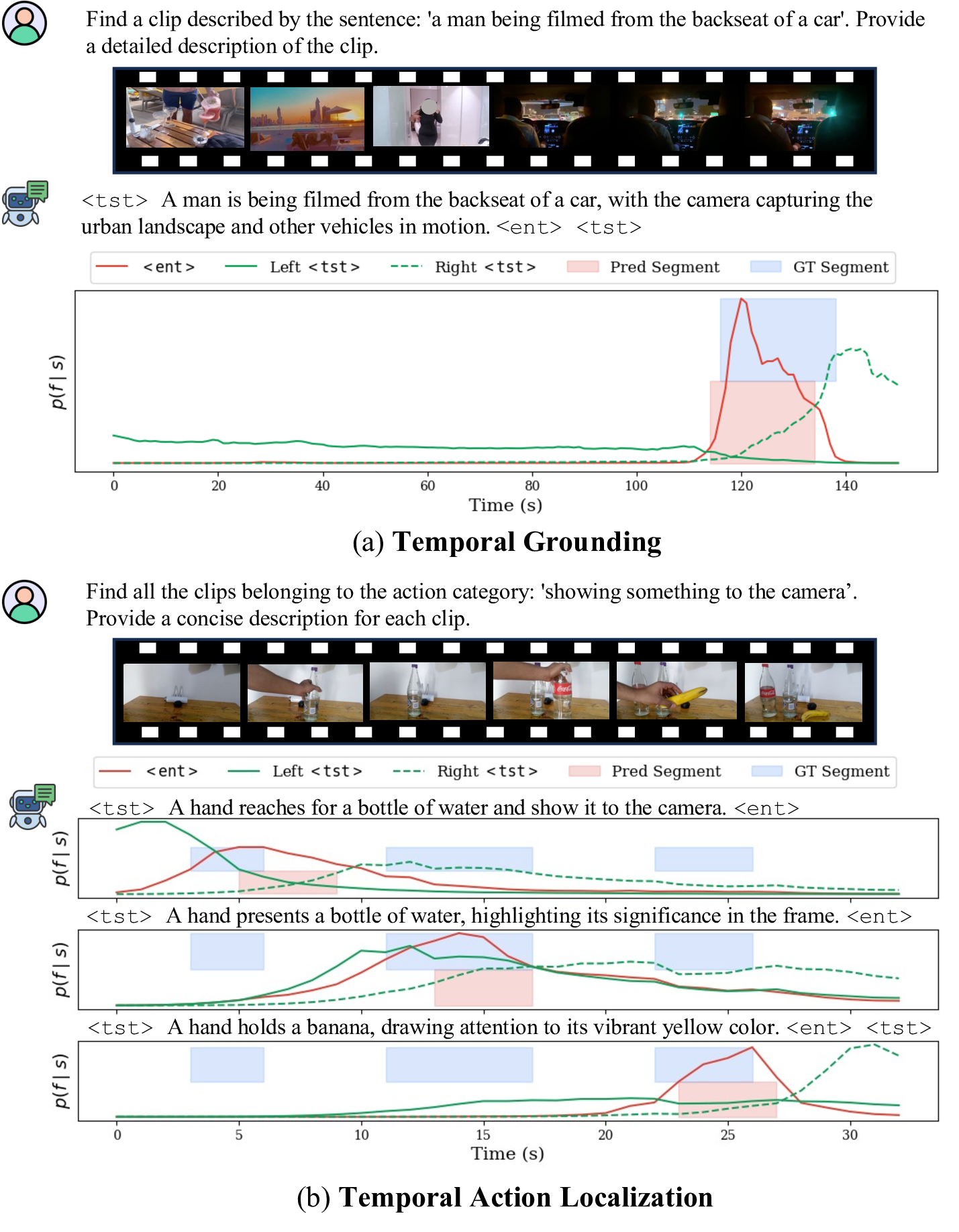

Figure 4: Visualizations of MeCo’s temporal localization results.

Qualitative analysis. As shown in Figure 4, MeCo can generate detailed query-focused captions and accurately localize the event segments in both single-event and multi-event cases. However, the examples also show that there is still considerable room for improvement.

6 Conclusion↩︎

In this work, we introduce MeCo, a novel timestamp-generation-free framework that equips video LLMs with temporal localization capabilities. By leveraging structural tokens to capture holistic video structure and query-focused captioning to extract fine-grained semantic details, MeCo outperforms traditional boundary-timestamp-centric methods across a suite of temporal localization tasks. Our results demonstrate that exploiting LLMs’ intrinsic semantic understanding can be a more effective approach for temporal localization. For future work, it could be interesting to explore how MeCo can scale with synthetic localization data.

Figure 5: Query-focused captioning pipeline and examples.

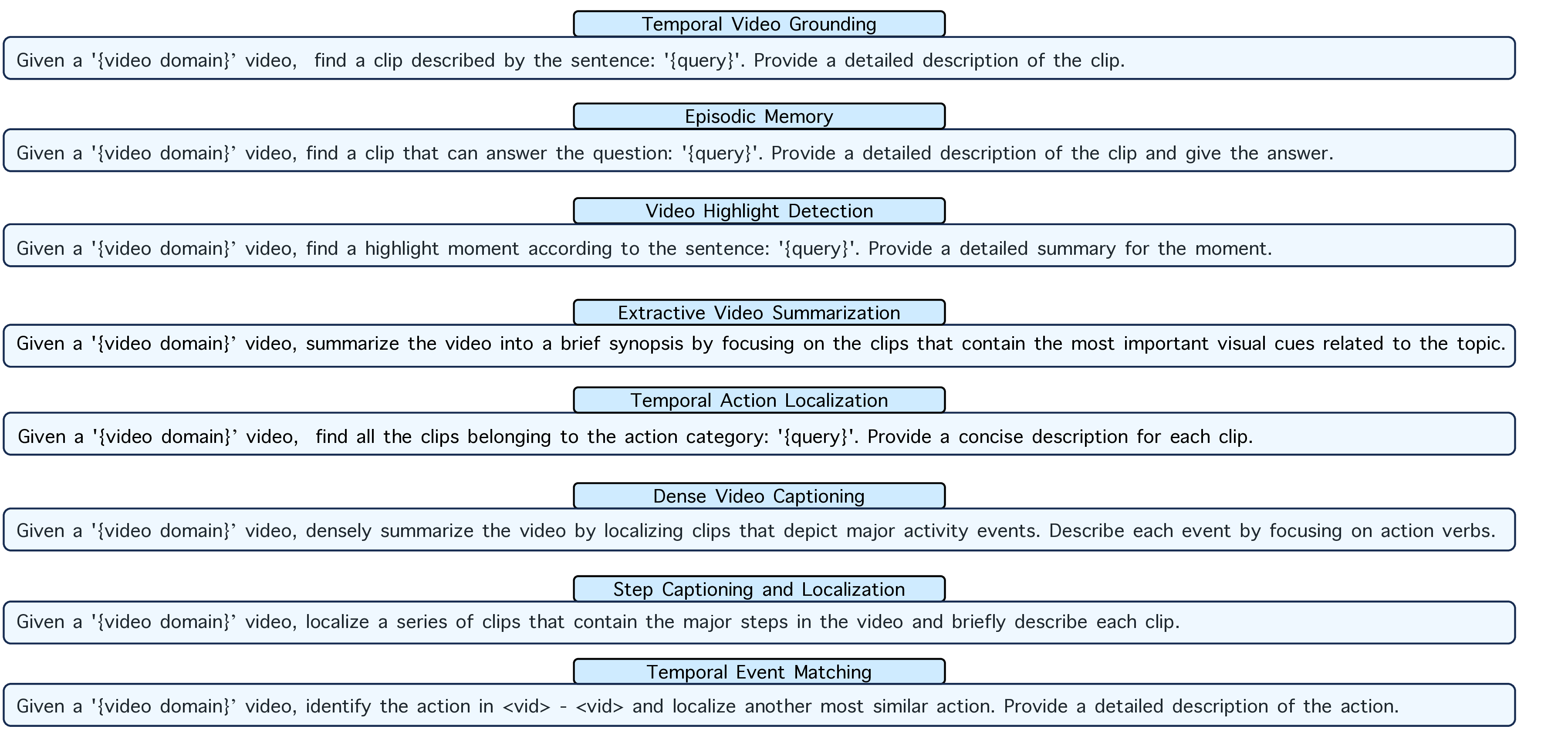

Figure 6: Evaluation prompt templates.

A.1. Detailed Implementation Details↩︎

In Table 7, we provide the hyperparameters used for the MLP projectors for the structural tokens, LoRA, and model training. All models were trained on 4 NVIDIA A100 (80G) GPUs.

| Hyperparameter | Value |
|---|---|
| **MLP Projectors** | |
| Number of Layers | 2 |
| Hidden Size | 1536 |
| Output Size | 3072 |
| **Large Range LoRA** | |
| LoRA \(r\) | 128 |
| LoRA \(\alpha\) | 256 |
| LoRA Dropout | 0.05 |
| LoRA Modules | QVO Layers |
| **Model Training** | |
| Max Number of Tokens | 2048 |
| Number of Epochs | 1 |
| Batch Size | 2 |
| Learning Rate for LoRA | 5e-5 |
| LR Decay Type | Cosine |
| Warmup Ratio | 0.03 |
| Optimizer | AdamW |
| AdamW \(\beta_1, \beta_2\) | 0.9, 0.997 |
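As a reading aid, the sketch below collects the LoRA and training values listed above and derives two quantities they imply: the standard LoRA scaling factor \(\alpha / r\) and a learning-rate curve. The linear warmup shape, the decay to zero, and the `total_steps` value are assumptions; the table specifies only a cosine decay with a 0.03 warmup ratio.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class TrainConfig:
    # Values copied from the hyperparameter table.
    lora_r: int = 128
    lora_alpha: int = 256
    lora_dropout: float = 0.05
    max_tokens: int = 2048
    epochs: int = 1
    batch_size: int = 2
    learning_rate: float = 5e-5
    warmup_ratio: float = 0.03
    adam_betas: tuple = (0.9, 0.997)

    @property
    def lora_scaling(self):
        # Standard LoRA scales the low-rank update by alpha / r.
        return self.lora_alpha / self.lora_r

def lr_at(step, total_steps, cfg):
    """Learning rate at `step`: linear warmup, then cosine decay to zero.
    Both the warmup shape and the zero floor are assumptions."""
    warmup_steps = int(cfg.warmup_ratio * total_steps)
    if step < warmup_steps:
        return cfg.learning_rate * step / max(1, warmup_steps)
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return cfg.learning_rate * 0.5 * (1.0 + math.cos(math.pi * progress))

cfg = TrainConfig()
total_steps = 1000  # hypothetical; the actual step count depends on dataset size
print(cfg.lora_scaling, lr_at(0, total_steps, cfg), lr_at(30, total_steps, cfg))
```

With \(r = 128\) and \(\alpha = 256\), the low-rank update is scaled by 2. Under the assumed schedule, the learning rate peaks at 5e-5 once the first 3% of steps have elapsed.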

A.2. Details of Query-Focused Captioning↩︎

Based on the temporal localization data in E.T.Instruct [28], we extract event segments and feed them into a video captioning model, MiniCPM-V-2.6 [75], to generate detailed captions. Because these captions often contain redundant information, we summarize them using GPT-4o-mini [84]. Some QFC examples are shown in Figure 5.
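The data flow described here can be sketched as a two-stage loop over annotated events. The captioner and summarizer are passed in as plain callables because the actual model calls and prompts are not specified in this section; `build_qfc_annotations` and the two stand-in functions are hypothetical names used only for illustration.

```python
def build_qfc_annotations(events, caption_segment, summarize):
    """events: list of (query, start_sec, end_sec) taken from localization data.
    caption_segment(start, end) -> detailed caption for that clip.
    summarize(caption) -> condensed caption with redundancy removed."""
    annotations = []
    for query, start, end in events:
        detailed = caption_segment(start, end)  # stage 1: caption the event clip
        concise = summarize(detailed)           # stage 2: remove redundant detail
        annotations.append({"query": query, "span": (start, end), "caption": concise})
    return annotations

# Stand-ins for the video captioner and the text summarizer (assumptions).
def fake_captioner(start, end):
    return f"detailed caption for {start}-{end}"

def fake_summarizer(caption):
    return caption.replace("detailed ", "")

anns = build_qfc_annotations([("pour milk", 3.0, 9.5)], fake_captioner, fake_summarizer)
print(anns)
```

Keeping the two stages behind callables makes it possible to swap the captioning or summarization model without touching the loop.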

A.3. Adapting TimeChat and VTGLLM to Work with MeCo↩︎

In Table 3b of the main text, we show the results of plugging MeCo into the TimeChat [25] and VTGLLM [30] models. TimeChat and VTGLLM share the same architecture, with a ViT-G/14 from EVA-CLIP [85] as the visual encoder, a pre-trained Q-Former [68] as the visual resampler, and a base LLM initialized from the pre-trained VideoLLaMa [20]. They differ in how visual tokens are compressed: TimeChat applies a sliding video Q-Former to reduce the number of visual tokens to 96, whereas VTGLLM applies a slot-based visual compressor to obtain 256 tokens. Both use 96 as the maximum number of sampled frames.

To plug MeCo into this architecture, we replace the video Q-Former in TimeChat with a standard image Q-Former, which resamples 32 tokens into 1 token per frame. Moreover, we apply bi-directional self-attention to the visual tokens, following [28]. Other components remain unchanged. We then train TimeChat, VTGLLM, and the adapted MeCo on E.T.Instruct with the same hyperparameters as in [25], except that LoRA \(r\) and \(\alpha\) are both set to 128.
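A minimal sketch of the attention pattern implied by this change is given below. It assumes the visual tokens occupy one contiguous span of the input sequence and that all other positions keep the usual causal mask; the function name and the toy sequence layout are illustrative and not taken from [28].

```python
def build_attention_mask(seq_len, visual_start, visual_end):
    """Return mask[i][j] == True when position i may attend to position j.
    Text positions are causal; visual tokens in [visual_start, visual_end)
    additionally attend to each other in both directions."""
    mask = [[j <= i for j in range(seq_len)] for i in range(seq_len)]
    for i in range(visual_start, visual_end):
        for j in range(visual_start, visual_end):
            mask[i][j] = True  # full attention inside the visual block
    return mask

# Toy layout: 1 text token, 3 visual tokens (one per frame), 2 text tokens.
mask = build_attention_mask(seq_len=6, visual_start=1, visual_end=4)
for row in mask:
    print("".join("1" if allowed else "0" for allowed in row))
```

With one token per frame, the visual block has one row per sampled frame, so each frame representation can draw on both earlier and later frames while text generation stays autoregressive.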

A.4. Evaluation and Training Prompt Templates↩︎

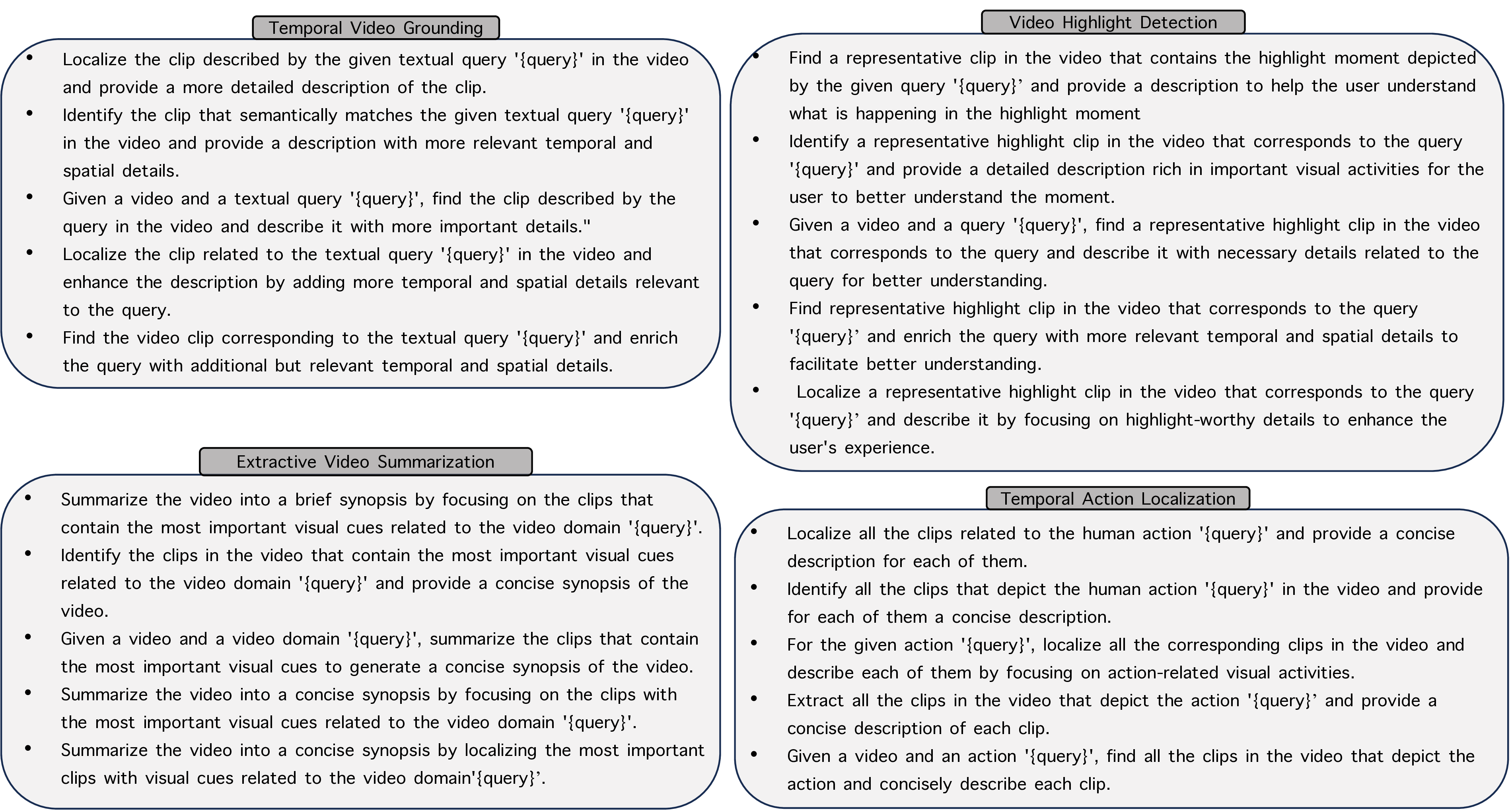

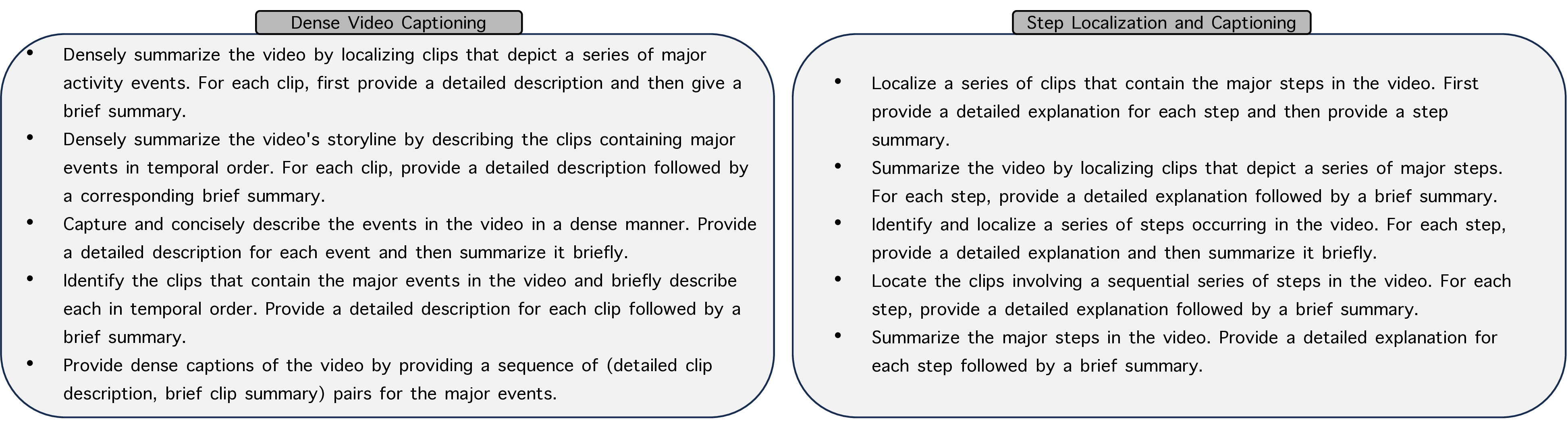

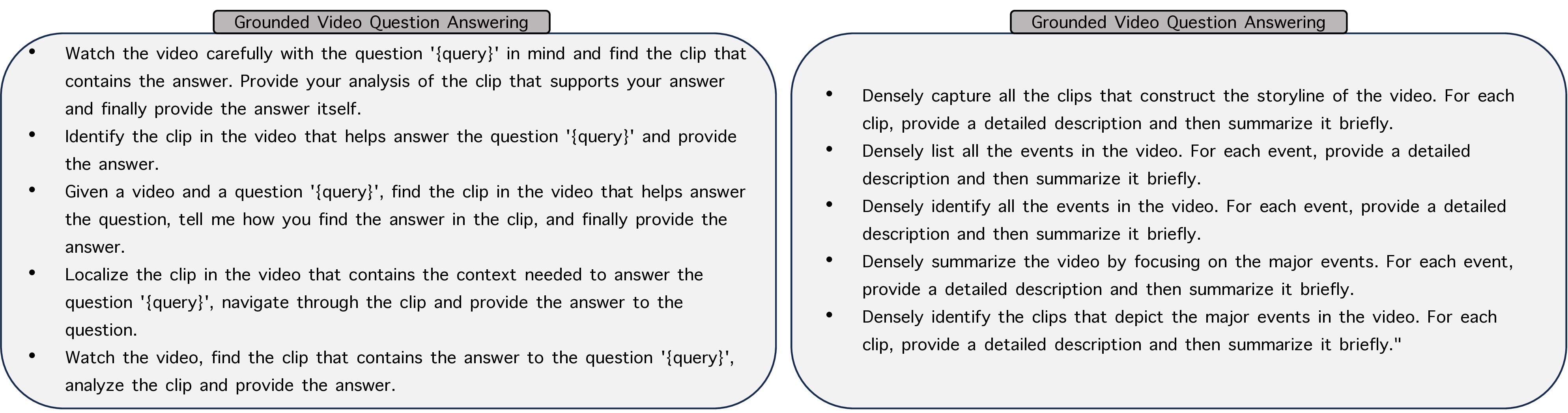

For evaluation, we modify the E.T.Bench templates to work with MeCo; example templates are provided in Figure 6. For training, we manually craft a query-focused captioning-aware instruction template for each task domain in E.T.Instruct and diversify it with GPT-4o [84] to obtain four more templates. The instruction templates for all domains are provided in Figure 7.

Figure 7: Instruction templates for different task domains: (a) temporal grounding, (b) dense video captioning, and (c) complex reasoning.

References↩︎

Code available at https://github.com/pangzss/MeCo↩︎

Work done during an internship at CyberAgent, Inc.↩︎