Performance Models for a Two-tiered Storage System

March 12, 2025

Abstract

This work describes the design, implementation and performance analysis of a distributed two-tiered storage software. The first tier functions as a distributed software cache implemented using solid-state devices (NVMes) and the second tier consists of multiple hard disks (HDDs). We describe an online learning algorithm that manages data movement between the tiers. The software is hybrid, i.e. both distributed and multi-threaded. The end-to-end performance model of the two-tier system was developed using queuing networks and behavioral models of storage devices. We identified significant parameters that affect the performance of storage devices and created behavioral models for each device. The performance of the software was evaluated on a many-core cluster using non-trivial read/write workloads. The paper provides examples to illustrate the use of these models.

Queing theory, multi-threading, parallel IO, cooperative caching, online learning, distributed computing

1 Motivation↩︎

Scientific computing applications [1], [2] have shown good scalability on High-Performance Computing (HPC) machines with many-core processors. These machines designed using heterogeneous nodes (CPUs and GPUs) are capable of several teraflops/sec or exaflops/sec throughput for computations. Their performance drops with IO intensive workloads such as streaming [3] and archived data applications. In this article, we discuss a system that is suitable for processing archived data. Parallel IO performance on clusters depends on factors such as layout of files on storage devices (number of devices, file sizes, file partition sizes), read/write bandwidth and load distribution across storage devices, communication network and number of processes (readers/writers). It is difficult for storage systems to match the compute throughput of new machines which generate high volumes of IO requests at fast rates due to their increased concurrency. Storage system designs with shared HDDs are not sufficient to match these new request rates in spite of MPI-IO optimizations that reduce the number of IO requests. Parallel IO on such systems is affected by data transfer costs over the network and load from other users [4]. Tiered storage designs which cache frequently used data on faster solid-state devices (tier 1) and use HDDs for permanent storage (tier 2) have higher throughput [5]. HPC machines use the tiered approach due to economic reasons and for fault-tolerance. Distributed caching and tiering storage systems have been areas of active research [6], [7], [8], [9]. Caching remote files on local clients differs from caching files on solid-state devices in clusters. File transfers from remote servers would take seconds, while IO accesses (KB/MB) from disks in a cluster can be performed in milliseconds. We address the second problem here that is restricted to HPC clusters. There are several unknowns in using tiered storage such as request distribution, cache size, cache line size, number of processes and data eviction policies.

In this work we describe performance models that are useful in analyzing and configuring a two-tiered storage system based on application requirements. The two storage devices differ in speeds due to mechanical differences, concurrency and read/write bandwidth gaps. Our models help to determine the best way to compose these two device types to match the overall requirements of an application. We modeled the flow control of the system using quantities such as arrival rates, response times, waiting times and service times. The two devices were modeled by defining parameters for load distribution, synchronization, concurrency and communication costs. Most of the recent available performance data for tiered HPC storage systems are post data staging [9]. In this paper, we used tier 1 as an inclusive cache and forwarded misses to tier 2. The measured performance includes the cost of page misses. We used NVMes [10] as tier 1 solid-state devices and the HDF5 parallel IO library [11] for tier 2 file accesses from HDDs. We developed an Online Learning (OL) algorithm for cache replacement. This algorithm learned the popularity and temporal locality of the IO traffic and minimized total number of IO requests. Our hypothesis is that a mix of cache replacement algorithms will perform better for complex IO traffic. We considered two categories of IO request prefetchers in our implementation : stream identifiers and Markov chains [12]. Related work is described in detail in section 2, the software architecture and OL page replacement in section 3. A short discussion of machine architectures is provided in section 4 and the performance models are discussed in section 5. Experiments and conclusions are described in sections 6 and 7. The contributions of this paper are listed below :

An end-to-end performance model for two-tiered storage systems based on queuing networks.

Behavioral models of two types of storage devices based on their load distribution and parallelism.

Implementation of OL algorithm for cache replacement in tiered storage and its performance evaluation using different types of traffic models.

Performance evaluation of the full software benchmark on a recent cluster.

The results of experiments, observations and conclusions drawn from them will be useful for the HPC community.

2 Related Work ↩︎

Previous work on data movement in multi-tiered storage architectures can be found in [13]. They haven’t addressed approaches for minimizing data movement between tiers. Other designs for tiered storage such as those described in [14] and [15] have used novel replacement policies. A general approach for such designs is to use priority queues for storing data in the tiers. Data movement between tiers depends on the IO traffic and the priority function. There were efforts within the scientific community to model common page access patterns, referred to as traffic models [16], [17]. Majority of this work was meant for sequential storage systems in which multiple clients use a single interface to access data. Caching or tiering in distributed systems with independent caches was first described by Dahlin et al. [6], [18], [8]. Global page replacement algorithms are described in [7], [19]. An evaluation of remote caching algorithms for different workload types is discussed by Leff et al. [20]. We did not consider global replacement algorithms in this work. Recent work in multi-tiered storage includes the use of solid-state devices as distributed cache [21], [22]. The authors of [22] and [23] have developed performance models for NVMes. But their models do not fully address the overheads from accessing random file offsets. [24] has also used NVMes as distributed cache, but their design has a centralized metadata manager which creates overheads. Solid-state devices are being widely used as fast storage in HPC clusters, such as Proactive Data Containers [25], DAOS [26]. They are commonly used for buffering [9] and rarely as demand-paged caches.

Data movement or page replacement algorithms have been studied in depth [27], [28], [29], [30], [31], [32], [33]. These papers include both theoretical and experimental results. Besides LRU and LFU, some of the widely discussed page replacement algorithms are LRU-K [34],random, MIN,FIFO [28] and WS [35]. The optimal page replacement algorithm is MIN, described in [28]. That LRU is as good as optimal for slow evolving sequences was proved by [36], [28]. Eviction algorithms based on scoring functions were discussed by [37]. For any access sequence, an ideal page replacement algorithm is one that has full knowledge of the sequence. A page replacement algorithm can peek into the short-term future for evicting pages that are least likely to be used in the near future [28]. For simple strided sequences, near future page accesses can be predicted using stream identifiers or prefetchers [27]. Online learning algorithms for page replacement were explored by [38], [39]. The implementation described by [38] is for two experts only. [39] uses online convex optimization, which is more flexible. But they have not addressed the computation of time-varying popularity and utility functions. Complex page access sequences can be modeled using Markov chains [40]. The most probable next states are predicted using the current state and transition probabilities. Markov chains are better at recognizing non-trivial sequences than stream identifiers that compute differences between address offsets. [41] used unsupervised learning to predict page accesses from complex Markov chains.

IO performance modeling is a challenging problem. Analytical models of storage systems were created to understand quantities such as rotational delay, seek time and IO bandwidths for different IO sequences, request sizes and hardware. Poisson processes were used to model IO requests and queuing theory to analyze average waiting time and service rate [42]. But with complex IO systems used in shared environments, these models are inadequate. Service times can show significant variation with load on shared disks. Recent work on storage systems has used supervised learning to model quantities such as IO read/write times and server utilization [43], [44]. Parallel IO libraries such as HDF5 have used supervised learning models for predicting better file layouts for applications [45]. Performance models for NVMes are recent [22], [46]. These models have captured some features of NVMe performance. [5], [23] and [47] have developed performance models for IO resource management in multi-tenant environments. But the models, metrics and QOS requirements are not fully developed.

3 Software Architecture↩︎

This section describes the software architecture of our IO benchmark that was used for the experiments in section 6. We used MPI for spawning processes and for collective communication. Every MPI process has a corresponding posix file functioning as tier 1 cache located in its nearest NVMe. Each posix file is divided into \(N\) \(m\)-byte cache lines (pages); the values of \(N\) and \(m\) being configurable. The posix files are accessed by mapping their pages to CPU memory. Processes transferred data to their NVMe files using PCIe network. Each cache line has index, tag, valid and dirty bits. Our implementation is a demand-driven, fully-associative, write-back cache and transitions between cache states are similar to those found in CPUs [27]. The cache lines are multi-reader, single-writer and mutual exclusion is guaranteed by using read-write locks on the states. To reduce lookup time, cache states are stored in CPU memories and data on NVMe files. Tier 1 can also be described as a single copy inclusive distributed cache because we do not allow data replication. Replication would have required the implementation of a cache-coherency protocol and metadata management. Page migration between caches is also not allowed in our current implementation.

Pages are distributed across processes using a suitable mapping policy. We implemented widely used mapping policies such as round-robin, random, block and block-cyclic [48]. A combination of file number and \(page\_no= \frac{FILE\_OFFSET}{CACHE\_LINE\_SIZE}\) were used as index and tag fields. The random policy maps page numbers to processes using hash functions. The definitions of other mapping policies are consistent with their descriptions in literature. A suitable mapping function may be chosen based on the correlation between page accesses in a distributed application. For example, if all processes share a common set of pages, and if requests are uniformly distributed, random mapping will provide good load balance. Block mapping will minimize inter process communication for exclusively accessed pages.

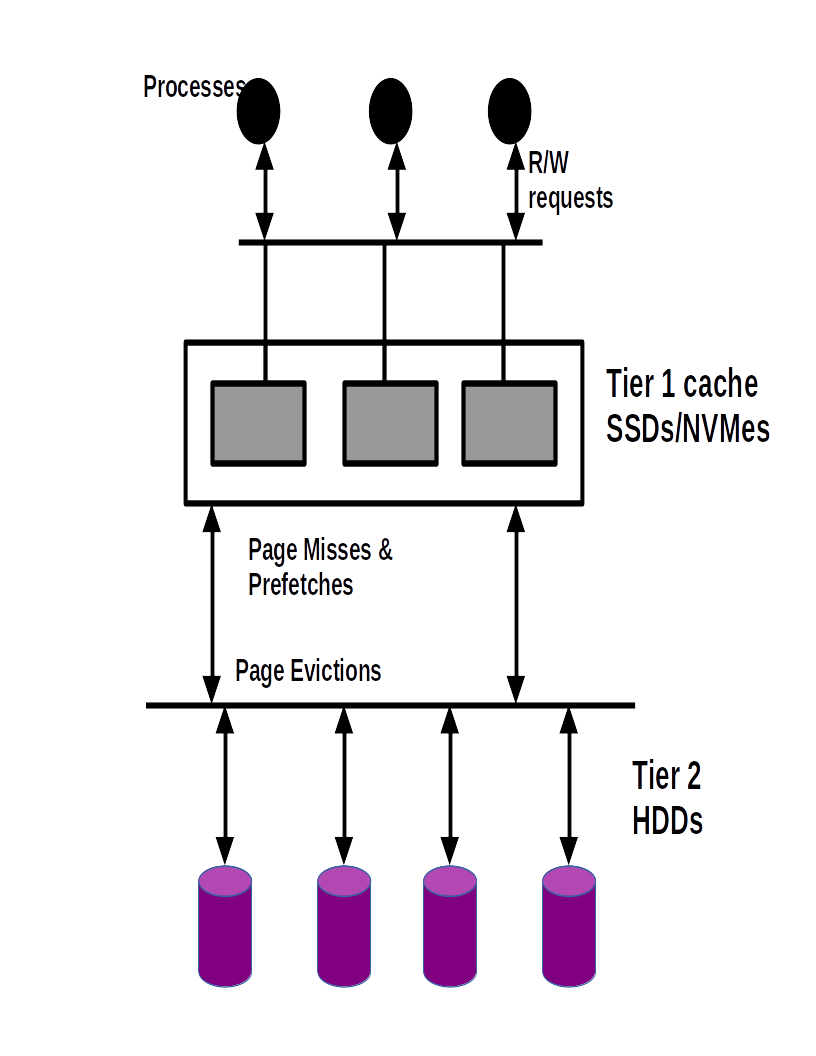

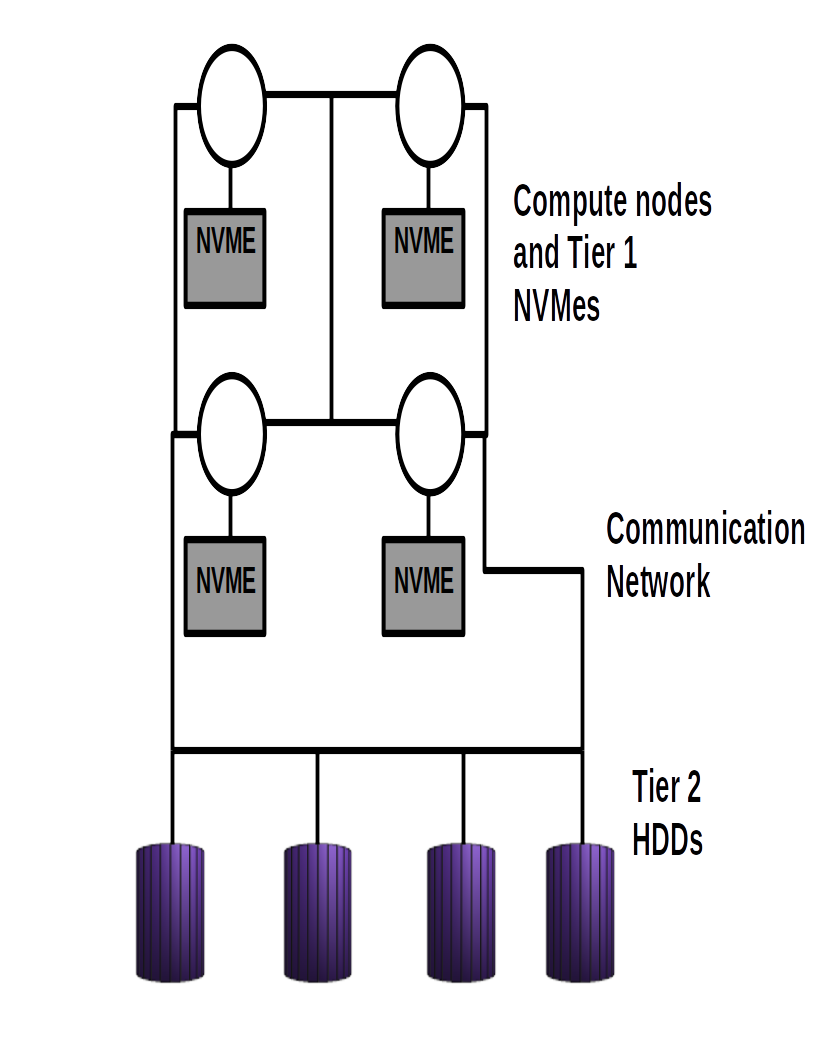

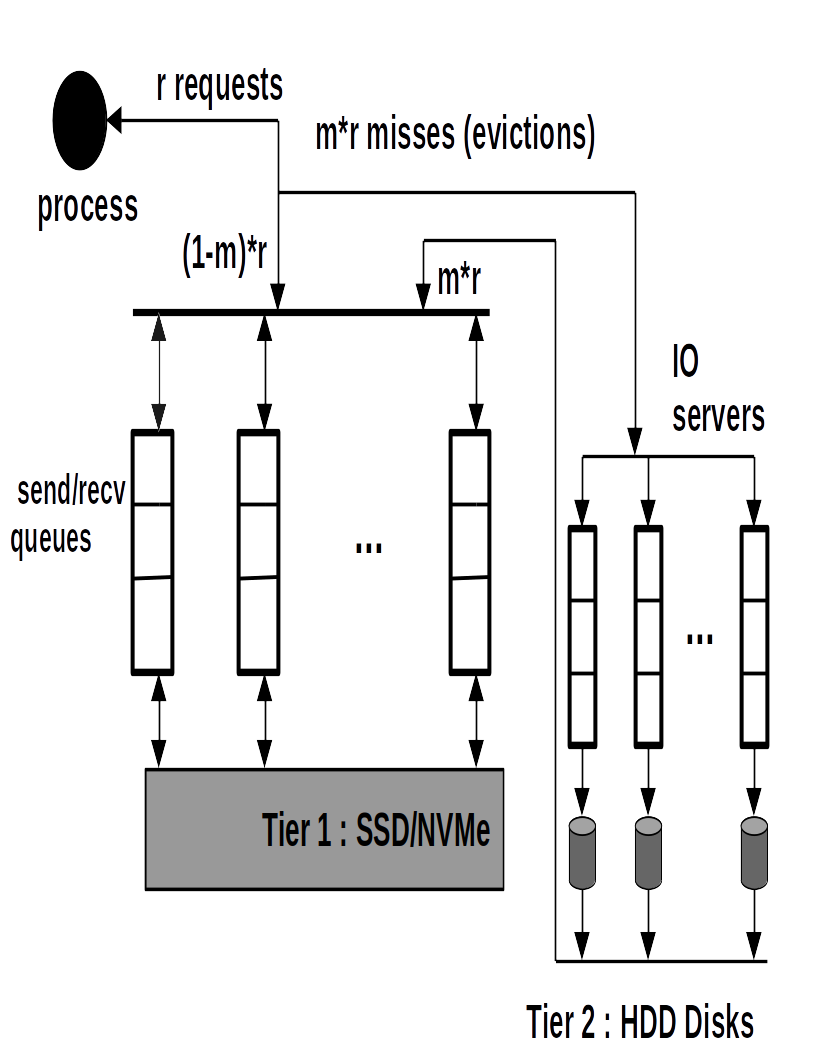

Figure 1: Two tier Storage : Software Architecture.

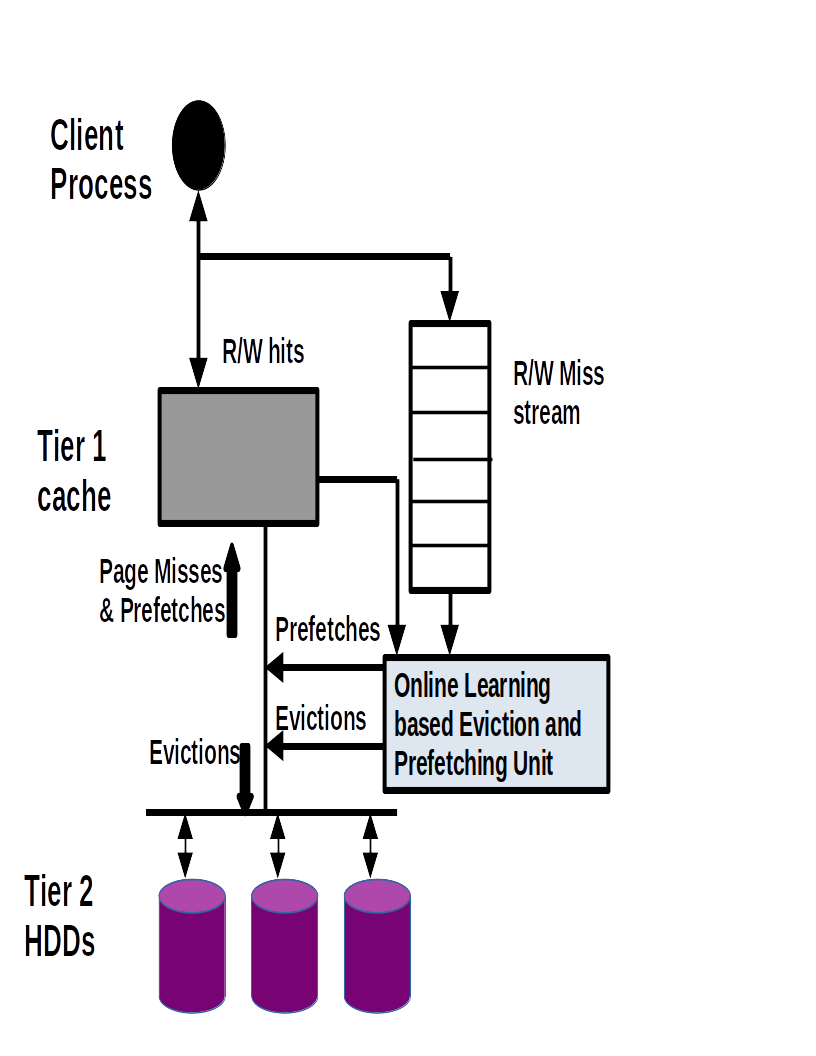

Figure 2: Two-tier Storage : Software Architecture and Data Movement.

The tier 2 implementation consists of an interface for submitting IO requests generated by tier 1 page misses. MPI threads generate read/write requests of \(s\) bytes each. In our implementation \(t+s \leq CACHE\_LINE\_SIZE~(page size)\), where \(t\) is the base address of an IO request. These requests are forwarded to the distributed cache via multi-threaded RPCs provided by Mercury [49]. Every process has a dedicated IO thread and an IO queue. Page misses in tier 1 are forwarded to IO queues which are shared between client and IO threads. IO threads poll the IO queues, and distribute page requests across processes according to the mapping functions described earlier in this section. Page misses are serviced by IO threads and pages are placed in tier 1 slots. The OL [50] cache replacement algorithm is activated when caches are full. The software architecture is described in figures 1 and 2. Figure 1 shows the tier 1 distributed cache and the tier 2 HDDs, and figure 2 shows the components involved in the movement of data between tiers for a single process. Eviction decisions depend on the observed page miss streams and the most recent cache states. Every cache line has frequency counter and timestamp fields associated with its state. These fields are updated during cache accesses. In our design, page misses are prioritized over prefetches. The most recent miss stream is used to generate prefetch requests using a stream identifier. Prefetched pages are stored in separate prefetch buffers and follow the same mapping function as the cache. On a miss, a page is first located in the prefetch buffer. If found, it is removed and promoted to the cache. In our current implementation, prefetching is performed only if there are empty slots in the prefetch buffer. The width of the buffer decides the maximum number of prefetches in any iteration. In our current implementation, cache sizes are fixed. Resizing was useful in implementations where remote caches were located in CPU RAMs [51]. It will be relevant in multi-tenant implementations which cache several files.

3.1 Online Learning for Data Movement↩︎

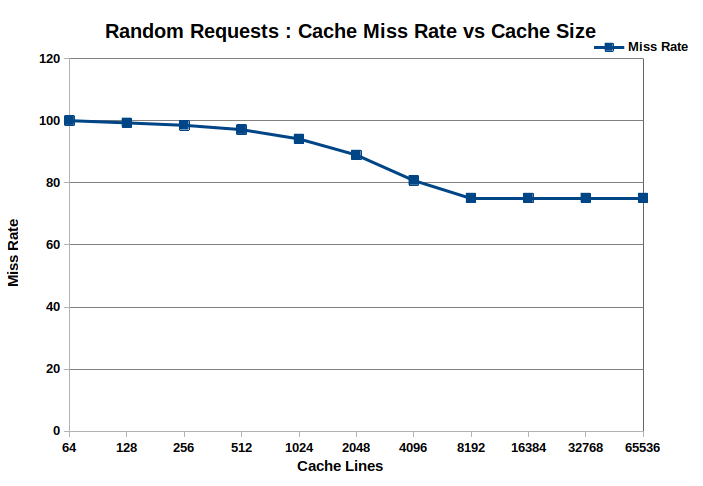

Assuming all page requests are the same size, data movement can be reduced by minimizing [52] page misses. Evictions and prefetches modify the page miss stream sequence. A good page eviction algorithm and a prefetcher that accurately identifies data access patterns will minimize the total number of page misses. If prefetching is performed when IO threads are idling (no page misses), it will reduce the total waiting time for IO requests. Since caching is similar to promote/demote operations in splay trees, the effectiveness of eviction algorithms and prefetching can be studied using amortized analysis. Figure 3 shows the plot of cache miss rate with increasing cache size for 1 MPI process using a random read workload. These experiments were performed on the Delta Supercomputer [53] using our IO benchmark. The cache miss rate function matches expected behavior observed in CPU caches and other cooperative cache implementations.

Figure 3: Capacity Misses : Miss Rate vs Cache Size for 1 MPI process.

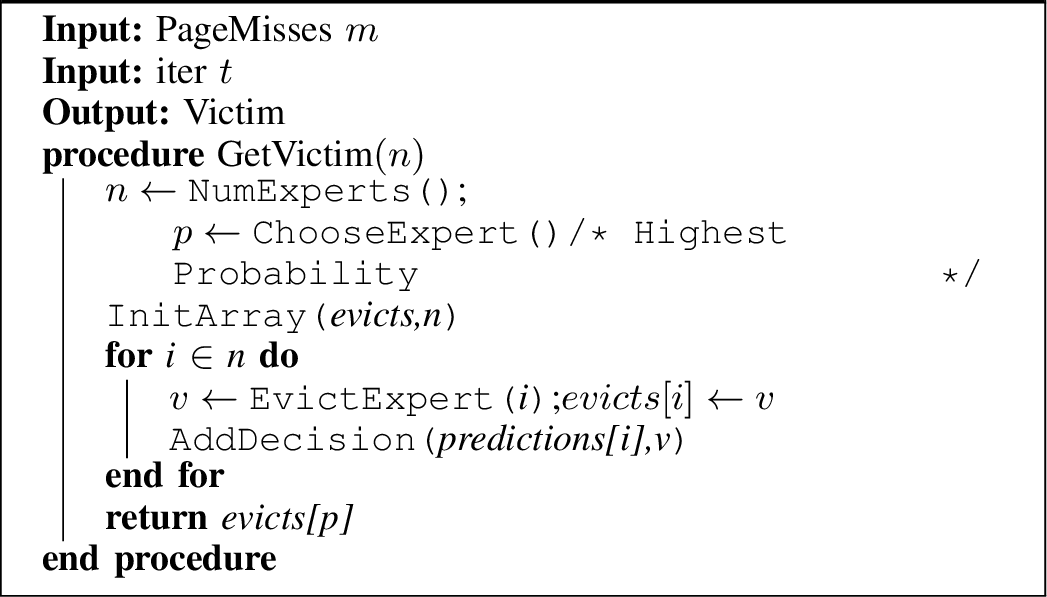

In our IO benchmark, we divided the polling iterations of IO threads into epochs and set epoch width equal to \(4\) iterations. We implemented \(3\) cache replacement policies : Least Recently Used (LRU), Least Frequently Used (LFU) and Random and used them as experts in a weight-sharing OL algorithm [50], [54]. If a page miss was generated for a page that was previously evicted in the same epoch, it is considered as a misprediction and the algorithm is penalized for its decisions. Penalties are computed and the weights associated with the eviction experts are adjusted. These weights are converted to probabilities and the expert with the highest probability is chosen. Since the OL algorithm described here is as fast as the experts, we chose low-overhead policies as experts. Their decisions can be computed by reading the current cache state. We used timestamps for LRU and frequency counters for LFU. Like discussed in the previous sections, all experts are local replacement algorithms without the need for global consensus.

Figure 4: GetVictim.

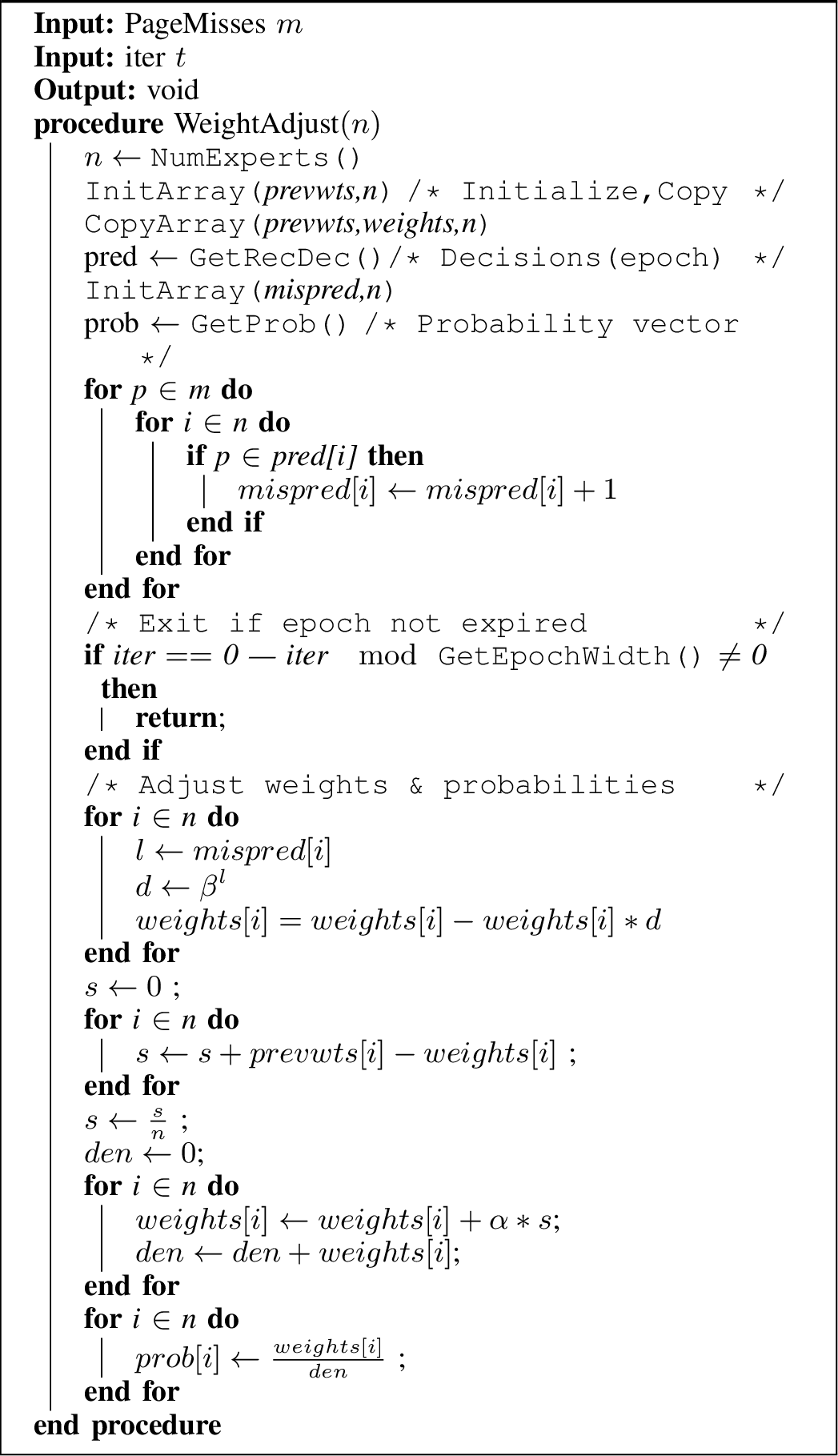

Figure 5: WeightSharing : Weight Adjust.

The weight-sharing algorithm used for evictions is described in algorithms [alg:alg1] and [alg:alg2]. Algorithm [alg:alg1] describes the weight adjustment function that is based on counting the number of mispredictions made by each expert. The predictions made by experts are stored in a prediction vector (algorithm [alg:alg2]). The prediction vector is cleared every \(EPOCH\_WIDTH\) iterations to avoid mixing history from distant past. If the number of mispredictions is less than \(THRESHOLD*miss\_count\), then they are ignored. \(miss\_count\) is the number of page misses in an epoch. In our experiments, we set \(THRESHOLD=0.25\). The algorithm chooses between experts according to their probabilities. Weights are adjusted in intervals of duration \(EPOCH\_WIDTH\),to avoid swift changes between experts. We found page replacement to be a problem suitable for OL with experts. OL was previously used for cache replacement by [39], [33]. But they did not show the benefits of learning using multiple experts with different traffic models.

4 System Architecture ↩︎

Figure 6: Example of a cluster with two-tiered storage .

Increasingly production HPC clusters are adding tiers to their storage systems [21] for improving IO performance. Some of these machines have NVMes attached to every compute node along with slower HDDs and tapes. Compute nodes may use PCI or RDMA to communicate with their local NVMes. This may not be an economical design for large clusters and will lead to low utilization of NVMes for most compute intensive HPC applications. Some large HPC clusters have special nodes with attached NVMes to aggregate IO requests. Remote aggregation of short requests on a network of NVMes is an economical design for two-tiered storage. Sharing of NVMes by multiple HPC applications improves storage utilization. But this design has to pay the overhead of transferring large volumes of IO requests over the network for IO workloads. In this paper, we have restricted ourselves to medium-sized clusters in which every compute node has an attached NVMe, shown in the diagram in figure 6. These NVMes across compute nodes constitute the tier 1 distributed cache. Processes can access data stored on remote NVMes using the high-speed communication network. The example in figure 6 has used HDDs for tier 2 and they are shared among all the nodes of the cluster. The data aggregated in NVMes are transferred to HDDs as large messages over the network. For large machines with several users and running high throughput concurrent applications, inter process communication can become an issue even with tiered storage. Although the discussion in this paper has used HDDs in tier 2, they may be substituted by another tier of NVMes. The design of a storage architecture for a machine is usually made after considering several factors including machine size, types of targeted applications, communication network, topology, processor architecture and budget [21], [55].

5 Performance Models ↩︎

Figure 7: Two-Tiered Storage : Queuing Network per process .

The two tiers can be modeled using a network of queues [56] [57], shown in figure 7 where the inputs to tier 1 queues are read/write requests. Tier 1 hits exit the system and misses enter tier 2 queues. They are serviced by tier 2 and re-enter tier 1. Additional requests generated by tier 1 such as evictions and prefetches are serviced by tier 2. Pages evicted from tier 1 are serviced by tier 2 and exit the system. The arrival of requests to tier 1 can be modeled using a random variable, with expected arrival rate \(E(\lambda)\) and variance \(\sigma^{2}\) [57]. There are two types of devices (servers) with mean service rates \(\mu_{1}\) and \(\mu_{2}\) respectively. We have ignored cold misses and analyzed using capacity misses with evictions.

\[T_{h_i} = n_{i_r}*\frac{1}{\mu_{1_r}}+n_{i_w}*\frac{1}{\mu_{1_w}}, \forall 1\leq i\leq P \label{eq:eq1}\tag{1}\]

\[T_{m_i} = n_{i_{m}}*\frac{1}{\mu_{2}} \label{eq:eq2}\tag{2}\]

\[T_i = \max(T_{h_i},T_{m_i}), \forall 1\leq i\leq P \label{eq:eq3}\tag{3}\]

\[T = \max(T_i), \forall 1\leq i\leq P \label{eq:eq4}\tag{4}\]

The total service time for the two-tier storage system for a workload with \(n_{i_r}\) read requests and \(n_{i_w}\) write requests per process is described using equations 1 to 4 . We have used service rates \(\mu_{1_r}\) and \(\mu_{1_w}\) for tier 1 read and write hits respectively. These values can be computed using the performance models for NVMes (subsection 5.1) and RPC communication costs. \(T_{h_i}\) is the minimum time for servicing hits by process \(i\) using \(k\) RPC threads per process. Let \(n_{i_m}\) be the number of capacity misses per process. Let \(\mu_{2}\) be the miss service rate. The values of \(\mu_{2}\) can be computed using the performance models for parallel IO using HDDs (subsection 5.2). \(T_{m_i}\) is the miss penalty per process. Since the IO thread and RPC service threads execute concurrently, \(T_i\), the total time for process \(i\) to empty all queues is defined by equation 3 . The total time across all processes is the maximum \(T_i, \forall 1\leq i\leq P\), where \(P\) is the number of processes.

But, equations 1 to 4 do not model other quantities of interest such as response rates and waiting times in the tiers. Let \(\lambda\) be the rate at which read/write requests are generated by a workload. To simplify equations, let \(E(\mu_1)\) and \(E(\mu_2)\) be the expected service rates of tiers 1, 2 and let \(p_{12}\) be the miss rate of the workload. The model description provided here is for a single process. The queuing network model of the system depends on its implementation. If hits and misses are serviced by the same set of \(k\) threads per process, then the system can be modeled using a single \(M/G/k\) queue, where arrivals from two populations enter the system with arrival rates \(\lambda*p_{12}\) and \(\lambda*(1-p_{12})\). These two types of requests have different service times \(\frac{1}{E(\mu_1)}\) and \(\frac{1}{E(\mu_2)}\). The expected service time for the system is provided in equation 5 . \[\frac{1}{\mu} = (1-p_{12})*\frac{1}{E(\mu_1)}+p_{12}*\frac{1}{E(\mu_2)} \label{eq:eqn5}\tag{5}\]

The expected arrival rate is denoted by \(\lambda\). The utilization ratio of hits is \(\rho_1 = \frac{(1-p_{12})*\lambda}{E(\mu_1)}\) and misses is \(\rho_2 = \frac{p_{12}*\lambda}{E(\mu_2)}\). The utilization ratio for the system is \(\rho = \frac{\lambda}{\mu}\). The number of requests in service and waiting in the queue can be computed using the values of arrival rates, service rates and utilization ratios for each population and also for the entire system [42]. The implementation described in this paper uses a separate IO thread for page misses, refer to figure 7. In this case, an \(M/G/k\) queue is used for servicing hits and an \(M/M/1\) queue for misses. The arrival and service rates of the miss queue are \(p_{12}*\lambda\) and \(E(\mu_2)\) respectively. On exiting the miss queue, requests enter the \(k\)-server queue. Therefore, the \(k\)-server queue has two types of traffic entering it at different rates ; hits at rate \((1-p_{12})*\lambda\) and misses at rate \(E(\mu_2)\) from the single server queue. Requests exit the system via the \(k\)-server queue. The effective arrival rate at the \(k\)-server queue is \((1-p_{12})*\lambda+E(\mu_2)\). The utilization ratio of the \(k\)-server queue is \(\rho_1 = \frac{(1-p_{12})*\lambda+E(\mu_2)}{E(\mu_1)}\). The utilization ratio of the IO queue is \(\rho_2 = \frac{p_{12}*\lambda}{E(\mu_2)}\).

This system can be analyzed at equilibrium to compute values of expected queue lengths (\(L_{1}\),\(L_{2}\)) and waiting times (\(W_{1}\),\(W_{2}\)) per process for the queues using equations 6 and 7 . Equation 6 describes an \(M/M/k\) queue with the same expected service rate for reads and writes, where \(P_{0}\) (probability of the queue being empty) can be computed using the full \(M/M/k\) model described in [42]. Similar equations can be derived for an \(M/G/k\) queue using the mean and variance of the read/write service (hit) time distribution. Equation 7 describes an \(M/M/1\) queue for IO misses.

\[L_{1} = \frac{P_{0}*\rho_{1}^{(k+1)}}{(k-1)!(k-\rho_{1})^2}, W_{1} = \frac{L_{1}}{(1-p_{12})*E(\lambda)+E(\mu_2)} \label{eq:eq6}\tag{6}\] \[L_{2} = \frac{\rho_{2}^{2}}{(1-\rho_{2})}, W_{2} = \frac{L_2}{E(\lambda)*p_{12}} \label{eq:eq7}\tag{7}\]

The analysis using queuing networks is useful when the system is at equilibrium, i.e. all utilization ratios are \(<1\). The configuration of the two-tier system can be adjusted for desired service rates while maintaining the equilibrium of the system. If not in equilibrium, equations 1 to 4 can be used to estimate minimum execution times. For example, consider a workload of \(n=10000\) read requests distributed over \(2000\) pages (\(page size=524288\) bytes) run using \(4\) MPI processes. Suppose each process has a \(32GB\) tier 1 cache. Assume the pages and requests are uniformly distributed across processes. Let \(p_{12}=0.2\) be the miss rate per process. Let \(\lambda=100\)reqs/sec, \(\mu_{1}=1000\)reqs/sec and \(\mu_{2}=33\)reqs/sec. The value of \(\mu_{1}\) is lower than NVMe throughput rates because it includes RPC and synchronization costs. The effective arrival rate \(\lambda = 0.8*100+0.2*0.33 = 86.6\)reqs/sec. The utilization ratios \(\rho_{1} = \frac{86.6}{1000} = 0.0866\) and \(\rho_{2} = \frac{20}{33} = 0.6\). This system is in equilibrium, because the utilization ratios of both queues are \(<1\). The expected length of the tier 1 queue is almost \(0\). The number of requests per process = \(2500\) and the number of misses per process = \(500\). The expected time taken for \(2500\) arrivals is \(\lambda*T=2500\), \(86.6*T=2500\), \(T=28.8\) sec. The total response time per process = \(\frac{2500}{1000}=2.5\) sec. We haven’t included RPC communication costs in our performance models, but they can be computed from LogP communication model [58] or by profiling RPC benchmarks. For the analysis, we have assumed that the request address stream is random. If a stream contains consecutive addresses to the same page, they can be grouped into a single request and forwarded to the miss queue. This will increase the mean service rate of the miss queue. We have assumed that all processes have the same request arrival and miss rates and that all NVMes are the same. If arrival rates and the probability distributions of requests to processes (mapping) are known, then the input distributions of processes can be modeled using separate random variables. If the load is not equally distributed, then processes will have different miss rates. Since HDDs are shared, \(\mu_{2}\) is a global variable. The \(M/G/k\) queues have to be replaced by \(G/G/k\) queues for such general cases. Quantities such as expected queue lengths and waiting times will be different for each process and the mean or maximum values may be chosen as system-wide global values. Harder quantities to model are \(n_{m_i}\), the number of misses because it depends on workloads and cache sizes. All the performance models in this section were built using linear regression [59]. We used R [60] for modeling and analysis. We used cross-validation [59] to reduce over-fitting. All training experiments were performed on the Delta Supercomputer[53]. Delta is described in detail in the evaluation section 6.

5.1 Solid-State Devices (NVMes) ↩︎

In this section, we describe models for predicting the performance of NVMes from a set of parameters. We used NVMes by mapping posix files to virtual memory. Read/write operations on these files are transferred over the network (PCIe here) and submitted to concurrent IO queues on the NVMes. Let \(n\) be the number of read/write requests issued to a file stored on an NVMe. NVMes can be modeled using multi-server \(G/G/k\) queues [57]. Using NVMes via page mapping adds overheads from page lookups and page faults. Highly correlated read requests benefit from mapping because they have fewer NVMe accesses. To utilize the concurrency provided by NVMes, they should be used directly by submitting requests asynchronously to their IO queues. Libraries such as libaio [61] or spdk [62] can be used for direct access of NVMes. These libraries also provide APIs for high throughput communication protocols such as RDMA for transferring requests [10]. Our performance models do not include the communication protocol or the mode of use of NVMes as parameters. Therefore, the same model can be trained for different systems. The total cost of a set of IO operations on a file stored on an NVMe depends on the mean request size, communication costs, NVMe configuration, workload size and the concurrency in the workload. The concurrency in a workload depends on the request type (read/write), number of IO queues in use, number of requests, total address range in use and the request generation rate.

We identified the following parameters to model the total time (training set is provided in brackets):

Number of client threads (\(X_{1}\)) : \([8,16,32,64]\)

Number of distinct block addresses (\(X_{2}\))

IO request size (\(X_{sec:3}\)) : \([512,4096,8192,65536,262144]\)

Number of IO requests (\(X_{sec:4}\)) : \([1000-4000000]\)

Total address range in use (\(X_{sec:5}\)) : \(500MB-500GB\)

Let \(Y\) be the total read/write time for any workload. Our performance model is provided by equation 8 . \[Y = X_1*X_3*X_4+X_5*X_4*X_3 \label{nvmeperfen}\tag{8}\]

| Estimate | Std. Error | t value | \(Pr(>|t|)\) | |

|---|---|---|---|---|

| (Intercept) | -5.941e+00 | 1.560e+01 | -0.381 | 0.70353 |

| x1 | 6.252e-01 | 4.387e-01 | 1.425 | 0.15490 |

| x3 | -6.326e-05 | 2.143e-04 | -0.295 | 0.76801 |

| x4 | 3.726e-05 | 1.860e-05 | 2.003 | 0.04580 |

| x5 | 6.213e-11 | 5.174e-11 | 1.201 | 0.23053 |

| x1:x3 | 1.667e-06 | 6.784e-06 | 0.246 | 0.80598 |

| x1:x4 | -8.464e-07 | 5.005e-07 | -1.691 | 0.09158 |

| x3:x4 | -1.650e-09 | 5.655e-10 | -2.917 | 0.00373 |

| x4:x5 | 2.029e-16 | 8.570e-17 | 2.368 | 0.01834 |

| x3:x5 | -6.564e-16 | 1.541e-15 | -0.426 | 0.67030 |

| x1:x3:x4 | 1.973e-10 | 1.510e-11 | 13.061 | \(< 2e-16\) |

| x3:x4:x5 | 1.103e-20 | 2.343e-21 | 4.706 | 3.46e-06 |

We trained separate performance models for reads and writes because read/write bandwidths are different. Our performance model includes singleton terms as well as interactions between parameters. Our hypothesis is that interactions between parameters are useful for modeling load distributions and concurrency in the performance models of hardware devices and concurrent software [63]. The write performance model is tabulated in table 1 with parameters and significance (column \(Pr(>|t|)\)) values. Lower the values of \((Pr(>|t|)\), higher the significance of the corresponding terms in the performance model. The significant terms of this model are \(X_1:X_3:X_4\) and \(X_3:X_4:X_5\) which have the least probabilities. The total write time depends on the number of client threads, IO request size and the number of requests because these parameters (\(X_1:X_3:X_4\)) model the load distribution. The term \(X_3:X_4:X_5\) models page faults and NVMe write costs such as garbage collection [64]. Since the NVMe configuration is fixed, garbage collection intervals depend on the number of blocks in use, which can be modeled using \(X_5\), \(X_4\) and \(X_3\). Concurrency of the workload is captured by \(X_4:X_5\), i.e. the total number of requests and their address range. Terms which did not have affect on the model are \(X_1\), \(X_3\) and \(X_5\) in isolation along with \(X_1:X_3\) and \(X_3:X_5\). Request size and number of client threads affect write performance only when combined with \(X_4\), i.e. the total number of requests which is evident from the observations of probability values in table 1.

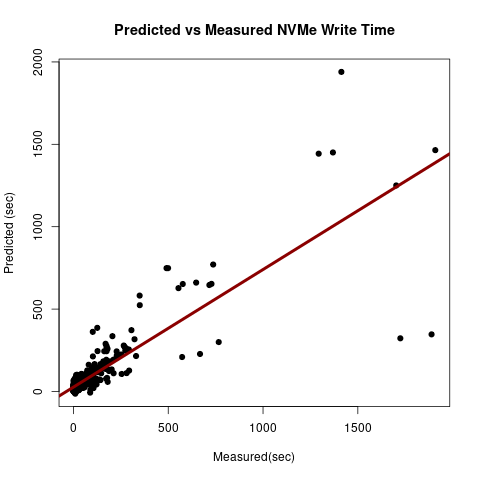

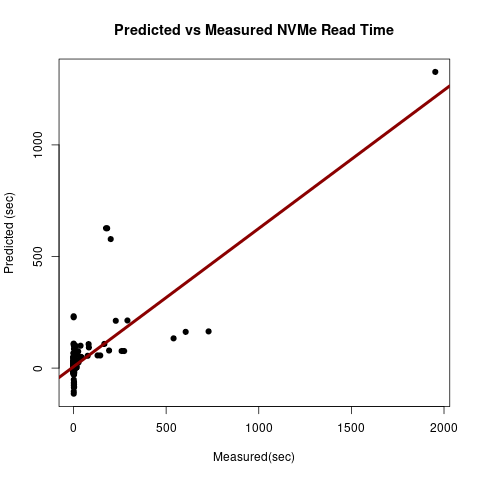

This model captures the effects of dependent parameters on the output accurately and verifies our initial hypothesis about NVMe write performance. It was trained using 400 experiments. The cross-validation parameter was \(K=20\). The AIC score for the linear regression model was 5267.4 and the goodness of fit is shown in figure 8. We chose the best model after comparing it with similar models using Anova [60].

Figure 8: NVMe Write Performance Model Fit .

| Estimate | Std. Error | t value | \(Pr(>|t|)\) | |

|---|---|---|---|---|

| (Intercept) | -6.059e+00 | 8.802e+00 | -0.688 | 0.491565 |

| x1 | 2.182e-02 | 2.475e-01 | 0.088 | 0.929812 |

| x3 | 1.009e-04 | 1.209e-04 | 0.835 | 0.404440 |

| x4 | -3.566e-06 | 1.049e-05 | -0.340 | 0.734131 |

| x5 | 6.963e-11 | 2.920e-11 | 2.385 | 0.017533 |

| x1:x3 | -2.066e-07 | 3.828e-06 | -0.054 | 0.956978 |

| x1:x4 | -1.165e-08 | 2.824e-07 | -0.041 | 0.967125 |

| x3:x4 | -4.060e-10 | 3.191e-10 | -1.272 | 0.203981 |

| x4:x5 | 1.259e-16 | 4.835e-17 | 2.603 | 0.009572 |

| x3:x5 | -2.984e-15 | 8.693e-16 | -3.433 | 0.000658 |

| x1:x3:x4 | -6.675e-12 | 8.522e-12 | -0.783 | 0.433929 |

| x3:x4:x5 | 1.896e-20 | 1.322e-21 | 14.340 | \(< 2e-16\) |

Figure 9: NVMe Read Performance Model Fit .

We used the same set of parameters for both read and write performance models. The performance model for reads was also selected after comparing with other models using Anova. The terms of the read performance model are tabulated in table 2. Unlike writes, read operations have no contention. Similar to writes, the work per thread is modeled using \(X_3:X_4:X_1\) and page faults are modeled by terms containing \(X_{sec:5}\). \(X_1\) has lower significance in this model compared to the write performance model, because of page mapping and zero contention. Pages that can be stored in page tables are reused during reads. Terms containing \(X_3\) have higher significance because they affect page boundaries and page faults, which lead to NVMe accesses. The AIC score of this model was 4786.4. The goodness of fit of the read performance model is provided in figure 9. We found regression to be a useful technique for modeling load distribution, contention and hardware features such as block garbage collection in NVMes, memory controllers and page table sizes. It was also a useful tool for inferring the significance of terms and for verifying hypotheses about expected behavior. We did not parameterize request rate in these models. The ratio of reads to writes may be added as a parameter to create a single model. Instead we chose to create separate models and determine the performance of a mixed workload by adding individual costs. The actual cost of a mixed workload is likely to be lower than the sum. If NVMes are accessed directly instead of page mapping, the relative significance of terms in these models will change. \(X_1\) may be replaced by the number of IO queues in this case. Our observations about the write performance model are not likely to differ. The read performance model is likely to have different significance values for its terms because of the absence of page tables.

5.2 Hard Disk Drives (HDDs)↩︎

Performance models for shared HDDs were built by the HPC community for exploring file layouts that minimized IO time. The objectives in those models were to determine the best file layout (stripe size, stripe count) that minimized IO time for an application, given a certain process count [45]. There have been other efforts to model IO performance overheads and variance using machine learning [45], [43], [65], [66] using data center workloads. Our objective was to model the total time for reading/writing a file, given its layout, and to compute the mean read/write time per stripe from total time. The costs of cache misses, evictions and prefetches can be computed as functions of the number of stripes. Separate models were created for reads and writes because of bandwidth differences. We chose the following parameters and training set values to model the total IO access time :

Number of processes (\(X_1\)) : \([4,8,16,32,64,128,200]\)

Number of disks (Stripe count \(X_2\)) : \([1,2,4,8]\)

Number of stripes per disk (\(X_3\))

Stripe size (\(X_4\)) : \([64KB-64MB]\)

File size (\(X_5\)) : \([100MB-350GB]\)

Let \(Y\) be the total read/write time for a file. Our performance model for hard disk drives is provided by equation 9 . \[Y = X_3*X_4+X_5*X_1*X_2 \label{diskperfmodel}\tag{9}\]

| Estimate | Std. Error | t value | \(Pr(>|t|)\) | |

|---|---|---|---|---|

| (Intercept) | 7.297e+00 | 5.837e+01 | 0.125 | 0.90066 |

| x3 | 4.318e-04 | 1.776e-04 | 2.432 | 0.01605 |

| x4 | -4.354e-06 | 1.464e-06 | -2.974 | 0.00336 |

| x5 | 1.002e-08 | 1.321e-09 | 7.586 | 1.90e-12 |

| x1 | 3.869e-01 | 8.273e-01 | 0.468 | 0.64059 |

| x2 | 6.664e+00 | 1.060e+01 | 0.629 | 0.53045 |

| x3:x4 | 2.007e-11 | 1.820e-09 | 0.011 | 0.99122 |

| x5:x1 | -7.486e-11 | 1.208e-11 | -6.196 | 4.07e-09 |

| x5:x2 | -9.269e-10 | 2.033e-10 | -4.560 | 9.61e-06 |

| x1:x2 | -9.916e-02 | 1.444e-01 | -0.687 | 0.49310 |

| x5:x1:x2 | 8.344e-12 | 1.890e-12 | 4.416 | 1.76e-05 |

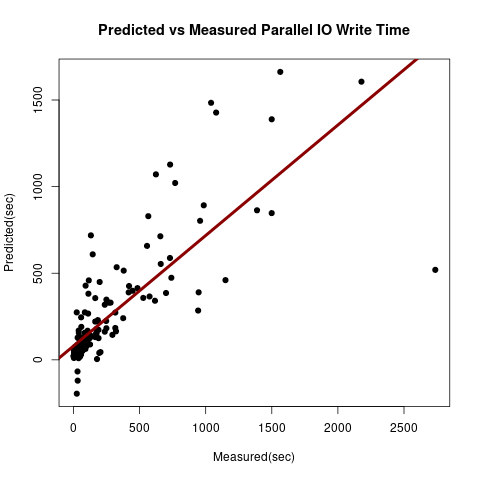

Figure 10: Parallel IO Write Performance Model for HDD fitness.

We used observations from 200 separate experiments for reads and writes. Each experiment accessed the entire file once in parallel. The best models were chosen after comparing with similar models using Anova. We used interaction terms to model IO request distribution on disks and data transfer costs between processes and disks. We used parallel HDF5 on Lustre file system for these experiments. The terms of the write performance model are tabulated in table 3. Stripe count (\(X_2\)), stripe size (\(X_4\)) and number of requests per disk (\(X_3\)) were found to be significant factors in determining the parallel IO time. HDF5 IO requests are broken down into Lustre requests not exceeding stripe size. These requests are queued and serviced independently by disks. Since we accessed the entire file, \(X_5:X_2\) and \(X_3:X_4\) model the load per disk. Between the two terms, \(X_5:X_2\) models the entire load as a single large contiguous request, while \(X_3:X_4\) treats the load as a function of the number of requests and stripe size. In our model described in table 3, \(X_5:X_2\) was significant, but \(X_3:X_4\) had low significance. We used \(X_5:X_1:X_2\) to model the total communication time for transferring \(X_5\) bytes by \(X_1\) processes to \(X_2\) disks or vice versa. \(X_5:X_1\) models the communication cost incurred by \(X_1\) processes. \(X_1:X_2:X_5\) has more significance compared to \(X_1:X_2\) because it incudes the total size of data transferred over the network. Depending on the file size, data may be transferred between processes and disks as variable length messages over multiple iterations. Therefore, we modeled the communication cost of the entire data transfer instead of adding a parameter for message size. The goodness of fit is provided in the figure 10 and the AIC score for this model was 2566.5.

| Estimate | Std. Error | t value | \(Pr(>|t|)\) | |

|---|---|---|---|---|

| (Intercept) | -3.771e-01 | 8.013e+01 | -0.005 | 0.99625 |

| x3 | 5.913e-04 | 2.106e-04 | 2.808 | 0.00573 |

| x4 | -1.584e-06 | 1.729e-06 | -0.916 | 0.36136 |

| x2 | 8.933e+00 | 1.326e+01 | 0.673 | 0.50180 |

| x1 | -2.563e+00 | 1.400e+00 | -1.830 | 0.06944 |

| x5 | 6.274e-10 | 2.154e-09 | 0.291 | 0.77131 |

| x3:x4 | 1.715e-08 | 2.718e-09 | 6.312 | 3.75e-09 |

| x2:x1 | 3.694e-01 | 2.113e-01 | 1.749 | 0.08262 |

| x2:x5 | -2.272e-10 | 2.550e-10 | -0.891 | 0.37452 |

| x1:x5 | -4.751e-11 | 2.038e-11 | -2.332 | 0.02121 |

| x2:x1:x5 | 5.167e-12 | 2.662e-12 | 1.941 | 0.05435 |

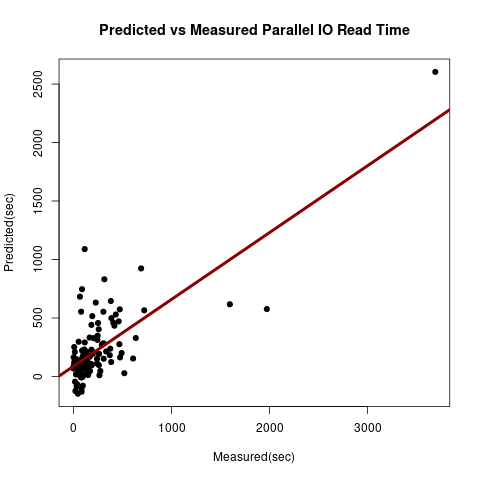

Figure 11: Parallel IO Read Performance Model for HDD fitness .

The same parameters were used to model the parallel read performance of HDDs. The terms of the read performance model and their significance are tabulated in table 4. The read model terms have different significance values. Both \(X_5:X_2\) and \(X_3:X_4\) are significant terms in this model. The differences between the two models for these terms is likely to be caused by insufficent training sets in terms of size and range. The AIC score of this model was 2035.1 and its goodness of fit is provided in figure 11.

6 Evaluation↩︎

All experiments described in this paper were performed on the Delta Supercomputer [53] at the National Center for Supercomputing Applications (NCSA). Delta has AMD Milan CPUs (8 NUMA nodes, 64 cores). Each CPU node has a local NVMe (0.7TB) attached to it via PCIe. Delta has Lustre PFS with upto 8 disks capable of 6PB storage. Delta has 128 CPU nodes in total. The communication network is high-speed cray slingshot [67]. The two-tier storage software was implemented in C++ using GCC and MPI. The other libraries we used are Mercury [49] for multi-threaded RPCs, Intel-TBB [68] for memory allocation, read-write locks and concurrent data structures, Intel-PMDK [69] for mapping NVMe posix files to CPU memories and HDF5 for parallel IO [11]. The NVMes were used exclusively, while the HDDs were shared with other users on the cluster.

6.1 Online Learning↩︎

We used two IO traffic models to test the weight-sharing cache replacement algorithm. The traffic models used are Poisson [57] and IRM [70]. In the Poisson model, the probability of a page request decreases exponentially with time since its arrival. It defines the temporal locality of a page in the workload. We chose suitable Poisson functions to ensure that the temporal locality of the workload is slow evolving [28]. In our traffic models, spatial locality is defined w.r.t. to a page. In the Independent Reference Model (IRM), pages have fixed lifetimes and popularities (maximum requests). The popularity distribution of pages follows a Zipf distribution [16]. A page expires when its number of requests have exceeded its allowed maximum. Most IO traffic fall into one of these two models. Once a page is fetched into the cache, its reuse depends on its lifetime or popularity. In the Poisson model, pages with longer lifetimes have higher chances of reuse while in the IRM model, majority of IO requests are distributed across the most popular pages. IRM workloads can have sharp changes in temporal locality. IO accesses common in HPC workloads [17] is closest to a Poisson model with the same lifetimes for all pages. We chose these two traffic models because their miss streams differ for LRU and LFU. In a Poisson model, LRU evicts pages with expired lifetimes with high probability. LFU is more suitable for the IRM model, because it evicts least popular pages with high probability. We compared the number of cache misses using both types of traffic models for LRU, LFU and weight-sharing online learning (WS) cache replacement policies. The observations from the Poisson model and the IRM model experiments are tabulated in tables [tab:table1] and [tab:table2]. We used 1 MPI process, \(64\) cache lines with line size \(8192\) bytes for these experiments. The results for LRU and LFU in tables [tab:table1] and [tab:table2] match our speculations about the temporal locality of these models. The WS replacement algorithm could learn the traffic models and switch between experts. The number of cache misses using WS is comparable to LRU for Poisson traffic and LFU for IRM traffic. In some cases, WS performed better than both because it could adapt to variations by choosing the most appropriate expert at a particular instant in time. The time taken by WS for OL decisions is presented in the tables under the column labeled WS (sec). We have used OL to learn the IO traffic from past accesses and restricted ourselves to LRU and LFU. It has been shown that these algorithms perform as well as clairvoyant MIN (within a constant factor) [29]. The OL algorithm can be extended by adding a farthest-in-future expert for improving cases which have easily identifiable sequences. We found WS to be a low overhead cache replacement technique suitable for IO workloads.

- [TABLE]

-

Poisson IO traffic Model

6.2 Performance↩︎

In this section we evaluate the parallel performance of the storage benchmark using different workloads. We tested the full implementation (including OL eviction algorithm and stride identifiers) for communication bottlenecks in request processing at scale.

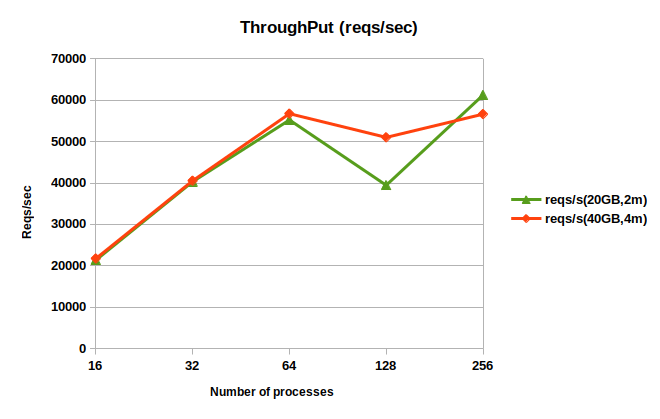

The graph in figure 12 shows the throughput of the two-tier storage system for a read workload with increasing number of MPI processes. The input file size was 500GB. This workload has two test cases :

\(2\)million \(128\)-byte read requests distributed over 20GB

\(4\)million \(128\)-byte read requests distributed over 40GB

Each MPI process had \(32GB\) cache allocated on tier 1 NVMes. We placed \(4\) MPI processes per node and used \(64\) threads per process to service RPC requests. \(16\) client threads were spawned per process for submitting requests. The request arrival rate was \(100\)reqs/sec. The page size and stripe size were \(524288\) bytes for these experiments and stripe count was \(8\). These experiments used block partitioning to partition pages across caches. Read requests were processed by accessing data from local NVMes without inter process communication. From the observations in the graph in figure 12, throughput increases with increasing processes. The drop in performance is due to page misses which may be affected by congestion in the network or by interference from other IO workloads on the cluster.

Figure 12: Read Throughput Vs Number of Processes.

We performed strong scaling experiments using random mapping and read/write workloads to understand the inter process communication costs of multi-threaded remote RPCs and also to evaluate dependencies between the quantities defined in equation 3 and response time. The two workloads used are described below :

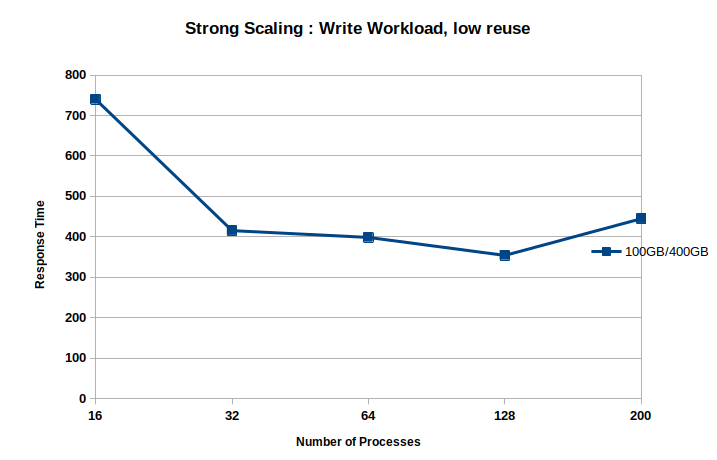

Workload1 : This is a low reuse workload, with \(5\)million requests distributed over \(229376\) pages. Page size was \(524288\) bytes and request size was \(512\) bytes. The workload accessed approximately \(100GB\) data from a \(400GB\) file. The request arrival rate was \(100\)reqs/sec. This workload and cache configuration includes systems that are not in equilibrium.

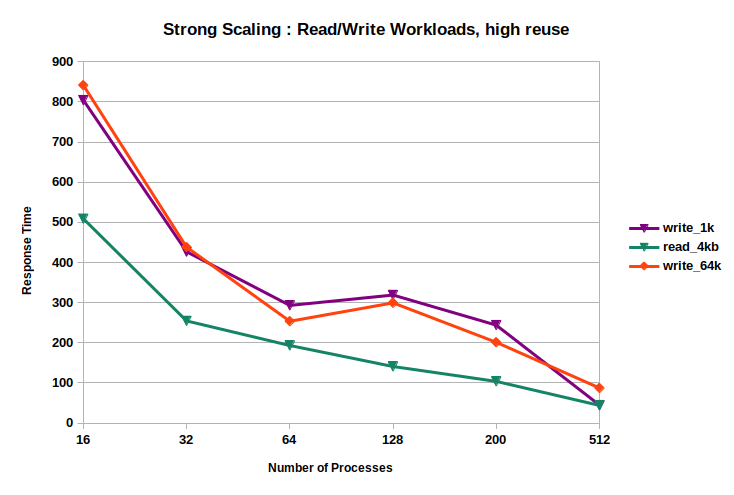

Workload2 : This is a high reuse workload, with \(8\)million requests spread over \(32768\) pages. Page size was \(524288\) bytes. It used approximately \(20GB\) data from a \(400GB\) file. The request arrival rate was \(100\)reqs/sec.

Each MPI process has \(32GB\) cache allocated on tier 1 NVMes. We placed \(4\) MPI processes per node and used \(16\) threads per process to service RPC requests. \(16\) client threads were spawned per process for submitting requests. Stripe count was \(8\) and the stripe size was equal to page size for both workloads.

| #procs | response time(s) | Mean Idle Time(s) | HDD time(s) |

|---|---|---|---|

| 16 | 740.063 | 1.12738 | 637.148 |

| 32 | 415.31 | 1.47583 | 373.875 |

| 64 | 398.438 | 3.0563 | 447.962 |

| 128 | 353.945 | 4.90604 | 473.151 |

| 200 | 444.919 | 12.6391 | 989.649 |

Figure 13: Strong Scaling of Two-tier Storage for Read-Modify-Write Workload1 .

Table 6 has tabulated the observations for Workload1 with read-modify-write requests. Response time was measured as the maximum time taken to complete request processing across all processes and client threads. The response time scales with increasing number of processes until \(128\) processes. Performance dropped at \(200\) processes because of the high IO miss time (HDD). The communication time of IO misses depends on the cluster design, such as number of IO servers, HDDs and network topology. This workload could cause congestion because there may be several large messages (stripe size) in transit on the network. Miss service time also depends on the interference caused by other workloads sharing HDDs. In this experiment, \(T_{m_i} > T_{h_i}\) (equation 3 ) and the completion time (\(\max(T_{h_i},T_{m_i}\)) is mostly dominated by miss penalty. The strong scaling graph of these experiments is plotted in the figure 13.

| #procs | response time(s) | HDD time(s) | completion time(s) |

|---|---|---|---|

| 16 | 509.089 | 70.9043 | 509.089 |

| 32 | 254.459 | 51.3207 | 254.459 |

| 64 | 193.333 | 96.5852 | 193.333 |

| 128 | 140.96 | 102.139 | 140.96 |

| 200 | 103.914 | 78.7909 | 103.914 |

| 512 | 44.051 | 36.574 | 44.051 |

| #procs | response time(s) | HDD time(s) | completion time(s) |

|---|---|---|---|

| 16 | 841.333 | 53.9377 | 841.333 |

| 32 | 438.327 | 54.6357 | 438.327 |

| 64 | 253.772 | 50.4423 | 253.772 |

| 128 | 299.586 | 319.854 | 319.854 |

| 256 | 201.257 | 195.658 | 201.257 |

| 512 | 87.4437 | 174.346 | 174.346 |

Figure 14: Strong Scaling Performance of Two-tier Storage with Read/Read-Modify-Write Workloads2 .

From the observations in tables 7 and 8 and figure 14, we find that workload2 scales strongly with increasing number of processes, because \(T_{h_i} > T_{m_i}\). We ran three experiments using workload2, one read workload with 4KB requests, and two read-modify-write workloads with 1KB and 64KB requests. Writes are more expensive than reads for the same number of hits and misses. Although the test cases accessed data from random processes in the cluster we do not observe sharp increase in response time due to inter process communication or runtime overheads in the software implementation. The high-speed slingshot network on Delta is one of the reasons for strong scaling of short messages in spite of the high volume of inter process communication. These experiments were used to evaluate the software design. To summarize, tiering benefits both types of workloads, but scalability is a challenge for workload1. For workload1, one option to improve scalability is to reduce the request arrival rate which will decrease the values of \(\rho\) and also request queue lengths at both tiers. If that is not possible, another option is to increase the unit of data transfer between tiers 1 and 2. A real application will have computation kernels in addition to IO which will reduce the mean arrival rates of IO requests.

7 Conclusions and Future Work↩︎

We could identify parameters that affect the performance of a tiered storage system. For required arrival and service rates, these performance models can be used to configure cache size (miss rate), number of processes and data sizes at each tier. We found it important to model the behavior of the system for supporting applications with different requirements, e.g. high throughput GPU applications and slower checkpointing. To conclude, tiering is a useful design choice for storage systems in large clusters, refer to IO communication times for large messages, network congestion and server load imbalance. The storage devices may be homogeneous or mixed. We would like to improve the performance models, the behavioral models of devices as well as evaluate prefetching. Other directions for future work are to design learning algorithms for data migration between caches w.r.t. IO request distribution. It is also worthwhile to investigate IO prefetchers which do not require offline training.

8 Acknowledgements↩︎

This research was funded by DOE grant #DE-SC0023263 and used the Delta advanced computing and data resource which is supported by the National Science Foundation (award #OAC 2005572) and the State of Illinois.