ResBench: Benchmarking LLM-Generated FPGA Designs with Resource Awareness

March 11, 2025

Abstract

Field-Programmable Gate Arrays (FPGAs) are widely used in modern hardware design, yet writing Hardware Description Language (HDL) code for FPGA implementation remains labor-intensive and complex. Large Language Models (LLMs) have emerged as a promising tool for automating HDL generation, but existing benchmarks for LLM HDL code generation primarily evaluate functional correctness while overlooking the critical aspect of hardware resource efficiency. Moreover, current benchmarks lack diversity, failing to capture the broad range of real-world FPGA applications. To address these gaps, we introduce ResBench, the first resource-oriented benchmark explicitly designed to differentiate between resource-optimized and inefficient LLM-generated HDL. ResBench consists of 56 problems across 12 categories, covering applications from finite state machines to financial computing. Our evaluation framework systematically integrates FPGA resource constraints, with a primary focus on Lookup Table (LUT) usage, enabling a realistic assessment of hardware efficiency. Experimental results reveal substantial differences in resource utilization across LLMs, demonstrating ResBench’s effectiveness in distinguishing models based on their ability to generate resource-optimized FPGA designs.

1 Introduction

Field-Programmable Gate Arrays (FPGAs) are widely used in reconfigurable computing, offering adaptable and high-performance hardware implementations for applications like artificial intelligence acceleration, financial computing, and embedded systems. However, FPGA development requires manually coding in hardware description languages (HDLs), a time-consuming and error-prone process. Large Language Models (LLMs) have emerged as a promising solution for automating HDL generation, potentially streamlining hardware design workflows. While recent studies, such as VeriGen [1] and RTLLM [2], have explored the feasibility of LLM-generated Verilog, existing benchmarks primarily focus on functional correctness, overlooking critical resource constraints essential for FPGA implementations.

Unlike traditional software programs, FPGA designs are highly constrained by hardware resource utilization, including lookup tables (LUTs), flip-flops (FFs), digital signal processors (DSPs), and block RAMs (BRAMs). Even when HDL code passes simulation tests, its hardware efficiency can vary significantly depending on how logic is optimized and mapped to FPGA hardware. This distinction is crucial in reconfigurable computing, where resource-aware optimizations determine whether a design can be practically deployed. Existing benchmarks fail to address this aspect, evaluating LLM-generated Verilog solely based on syntax and functional correctness while neglecting hardware efficiency.

To address this limitation, we introduce ResBench, the first FPGA-resource-focused benchmark explicitly designed to evaluate LLM-generated Verilog in terms of both functional correctness and hardware resource efficiency. Unlike previous benchmarks that emphasize syntax and logic accuracy, ResBench assesses how effectively LLMs generate Verilog optimized for FPGA resource constraints, providing a more realistic evaluation of AI-driven HDL generation. Our key contributions are:

A resource-centric benchmark comprising 56 manually curated problems across 12 diverse categories, covering combinational logic, state machines, AI accelerators, financial computing, and more, ensuring broad applicability to real-world FPGA workloads.

An automated evaluation framework that integrates functional correctness validation, FPGA synthesis, and resource analysis. The framework systematically benchmarks LLM-generated Verilog, enabling consistent and reproducible evaluation across FPGA design tasks.

A detailed performance analysis of LLMs based on both correctness and FPGA resource efficiency. Our findings reveal significant variations in how different models optimize resource utilization.

By incorporating FPGA resource awareness into benchmarking, ResBench provides a practical assessment of LLM-generated HDL and offers insights into how well current models generalize to FPGA designs. This benchmark serves as a foundation for advancing AI-driven Verilog generation, encouraging the development of more hardware-efficient models tailored for reconfigurable computing.

2 Background

This section explores the evolution of large language models (LLMs) from general code generation to hardware description languages (HDLs), focusing on their adaptation for FPGA design. We review their capabilities, limitations, and existing benchmarks, which primarily assess functional correctness while neglecting FPGA-specific constraints like resource efficiency. This gap highlights the need for resource-aware evaluation to better assess LLM-generated HDL for real-world FPGA deployment.

2.1 Code-specialized and HDL-specialized LLMs

Language models have evolved significantly, especially since the introduction of the Transformer architecture [3]. Building on this foundation, large-scale pre-trained models such as BERT [4], the GPT series [5]–[7], and PaLM 2 [8] have advanced text understanding and generation, enabling applications across a variety of tasks, including code generation. Surveys such as [9]–[11] provide detailed overviews of the latest techniques and model architectures in this field.

Code-specialized LLMs can be obtained with two approaches. The first approach trains models from scratch on large-scale open-source code datasets across multiple programming languages, as seen in CodeGen [12], InCoder [13], and StarCoder [14]. The second approach fine-tunes general-purpose LLMs for coding tasks, as with Codex [15] from GPT-3 and Code Llama [16] from Meta’s Llama.

While significant research has focused on using language models for general software code generation, the application of LLMs to hardware description has not received comparable attention. Existing efforts primarily fine-tune code-generation or general-purpose LLMs on HDL datasets to obtain specialized models capable of generating HDL code. In particular, these works expose LLMs to HDL-specific datasets, fine-tuning methods, and dedicated benchmarking frameworks to improve their understanding and performance in hardware description tasks. For instance, VeriGen [17] fine-tunes CodeGen (2B, 6B, 16B) on a Verilog dataset sourced from GitHub repositories and textbooks. It employs supervised fine-tuning with problem-code pairs and accelerates training using parallel computing, while functional correctness is validated using a benchmark set comprising custom problems and HDLBits [18]. MEV-LLM [19] uses 31104 GitHub files labeled by GPT-3.5 to fine-tune CodeGen (2B, 6B, 16B) and GEMMA (2B, 7B) models, leading to an improvement of up to 23.9% in the Pass@k metric over VeriGen. AutoVCoder [20] curates Verilog modules from GitHub, filtering high-quality samples using ChatGPT-3.5 scoring. It applies a two-stage fine-tuning process to improve generalization, with final evaluation conducted on a curated real-world benchmark.

Beyond direct fine-tuning, reinforcement learning approaches like Golden Code Feedback [21] incorporate user feedback to iteratively refine model outputs, ensuring that generated code better aligns with practical hardware design principles. Similarly, multi-modal techniques such as VGV [22] integrate circuit diagrams with textual data during training to allow models to understand spatial and parallel aspects of circuit design.

2.2 Benchmarks for LLM-Generated Software and Hardware

The research community has recognized the need for robust and standardized benchmarks to rigorously evaluate model performance. Among existing benchmarks, HumanEval [15] and MBPP (Mostly Basic Python Problems) [23] are widely used in evaluating LLMs for code generation. HumanEval consists of 164 hand-crafted Python programming tasks, each with a function signature and corresponding test cases to validate correctness. It is relatively small in scope but carefully curated, making it well suited to quick functional correctness evaluations of Python-based code generation. Several extensions have been introduced to improve its comprehensiveness, including HumanEval+ [23], which increases the number of test cases by 80×, and HumanEvalPack [24], which expands the benchmark to six programming languages. Similarly, MBPP comprises a collection of approximately 974 short Python programming tasks, each with a task description prompt, a code solution, and three automated test cases. It emphasizes the correctness and clarity of solutions and is often used for training and inference because of its compact size. Its enhanced version, MBPP+ [23], corrects flawed implementations and augments test cases. While HumanEval and MBPP serve as fundamental benchmarks, they primarily consist of entry-level programming tasks, which do not always reflect the complexity of real-world coding challenges. To address this, benchmarks with more complicated problems have been introduced. For example, DS-1000 [25] includes 1,000 data science programming tasks spanning seven Python libraries, incorporating diverse contexts and multi-criteria evaluation metrics for a more realistic assessment of code generation models.

The benchmarks share a common evaluation framework aimed at assessing whether generated code is both syntactically valid and functionally correct. In particular, each benchmark generally includes four key components: (1) prompts, which can be presented as a natural language description [26], [27] or both description and function signature [15], [23], guiding the model on what to generate; (2) a reference solution, which serves as the correct implementation for comparison; (3) test cases, which are predefined inputs and expected outputs used to validate correctness; and (4) performance metrics, like Pass@k [15] and Code Similarity Scores [28], which quantify how effectively an LLM-generated solution satisfies the given problem constraints. The most widely used metric is Pass@k, which measures the likelihood that at least one of the top-k generated solutions passes all test cases.

Existing works on HDL generation benchmarks often build upon lessons from software code benchmarks, including strategies for curating diverse problem sets, defining standardized test cases, and developing automated evaluation frameworks. Additionally, performance metrics often adopt Pass@k, similar to their software evaluation counterparts. However, benchmarking Verilog differs due to the simulation-based nature of hardware verification [29]. Unlike software benchmarks, which typically evaluate correctness by directly executing code, Verilog requires custom testbench scripts that simulate hardware behavior to validate functionality. The evaluation process involves generating the reference Verilog code, requesting code from the LLM, executing them within a hardware simulation environment, and comparing the outputs to determine correctness.

| Benchmark | Size | PL | Type | Features |
|---|---|---|---|---|
| VerilogEval [30] | 156 | Verilog | Verilog code generation tasks | Diverse tasks from simple combinational circuits to finite state machines; automatic functional correctness testing. |
| HDLEval [31] | 100 | Multiple | Language-agnostic HDL evaluation | Evaluates LLMs across multiple HDLs using standardized test benches and formal verification; categorizes problems into combinational and pipelined tests. |
| PyHDL-Eval [32] | 168 | Python-embedded DSLs | Specification-to-RTL tasks | Focuses on Python-embedded DSLs for hardware design; includes Verilog reference solutions and test benches; evaluates LLMs’ ability to handle specification-to-RTL translations. |
| RTLLM [33] | | Verilog, VHDL, Chisel | Design RTL generation | Supports evaluation in various HDL formats; covers a wide range of design complexities and scales; includes automated evaluation framework. |
| VHDL-Eval [34] | | VHDL | VHDL code generation tasks | Aggregates translated Verilog problems and publicly available VHDL problems; utilizes self-verifying testbenches for functional correctness assessment. |
| GenBen [35] | | Verilog | Fundamental hardware design tasks, debugging tasks | Evaluates synthesizability, power consumption, area utilization, and timing performance to ensure real-world applicability. |

Some benchmarks have emerged to address these challenges, as presented in Table 1. Among them, VerilogEval [30] is widely adopted to evaluate LLMs in the context of Verilog code generation [20], [36], [37]. It comprises an evaluation dataset of 156 problems sourced from the HDLBits instructional website, covering a diverse range of Verilog code generation tasks from simple combinational circuits to complex finite state machines. The framework enables automatic functional correctness testing by comparing the simulation outputs of generated designs against golden solutions. Another example is HDLEval [31]. Unlike benchmarks confined to specific languages like Verilog, HDLEval adopts a language-agnostic approach, allowing the same set of problems, formulated in plain English, to be tested across different HDLs. The benchmark consists of 100 problems, systematically categorized into combinational and pipelined designs, covering fundamental hardware components such as logic gates, arithmetic operations, and pipelined processing units. It incorporates formal verification over traditional unit tests, ensuring that generated HDL code is functionally correct and meets logical equivalence with reference implementations. Additionally, PyHDL-Eval [32] is a framework for evaluating LLMs on specification-to-Register Transfer Level (RTL) tasks within Python-embedded domain-specific languages (DSLs). It includes 168 problems categorized into 19 subclasses, covering combinational logic, sequential logic, and parameterized designs. The evaluation process involves executing the generated code in Python-embedded HDLs (e.g., PyMTL3, PyRTL) and measuring functional correctness based on pass rates, ensuring the model’s ability to generate valid and synthesizable RTL implementations.

2.3 Challenges in LLM Benchmarking for FPGA Design

Despite advancements in LLM-driven Verilog generation, existing models primarily focus on producing syntactically and functionally correct HDL but fail to address critical hardware constraints essential for FPGA deployment, such as resource efficiency, timing constraints, and power consumption. Unlike ASIC design, FPGA-based development demands careful consideration of resource usage, including lookup tables (LUTs), flip-flops (FFs), block RAM (BRAM), and digital signal processing (DSP) blocks. However, current LLMs for Verilog generation lack an understanding of FPGA-specific requirements, often producing designs that are functionally correct but inefficient and impractical for real-world FPGA deployment.

The inability to evaluate and optimize LLM-generated Verilog for FPGA resource constraints highlights the need for advancing resource-aware Verilog generation and motivates this study. To establish LLMs as a practical solution for HDL automation, it is essential to equip them with a deeper understanding of FPGA design constraints. Achieving this requires developing new training datasets, designing robust evaluation frameworks, and refining LLM training strategies to enhance their capability in hardware-aware code generation. This paper focuses on constructing a benchmark that evaluates LLMs’ performance in generating HDL that is both functionally correct and optimized for FPGA-specific constraints. The next section presents the design of this benchmark.

3 Design of ResBench

This section introduces the design of ResBench, detailing its guiding principles and structured problem set for evaluating LLM-generated Verilog. We define key criteria for assessing both functional correctness and FPGA resource efficiency, ensuring the benchmark effectively differentiates models based on optimization capabilities and application diversity.

3.1 Design Principles and Benchmark Problems

ResBench is designed to systematically evaluate LLM-generated Verilog across a diverse set of FPGA applications. The benchmark consists of 56 problems categorized into 12 domains, each reflecting a key aspect of FPGA-based hardware design. The design of problems follows two fundamental principles to ensure both meaningful evaluation and alignment with real-world FPGA applications.

The Principle of Hardware Efficiency Differentiation ensures that ResBench captures differences in LLMs’ ability to optimize FPGA resource usage. The benchmark includes problems that allow multiple implementation strategies and require algebraic simplifications, making it possible to distinguish models that generate Verilog with lower hardware costs. The Principle of FPGA Application Diversity acknowledges FPGAs’ versatility, particularly in computational acceleration and edge computing. ResBench spans application domains such as financial computing, climate modeling, and signal processing, ensuring that LLMs are tested across a broad range of real-world FPGA workloads.

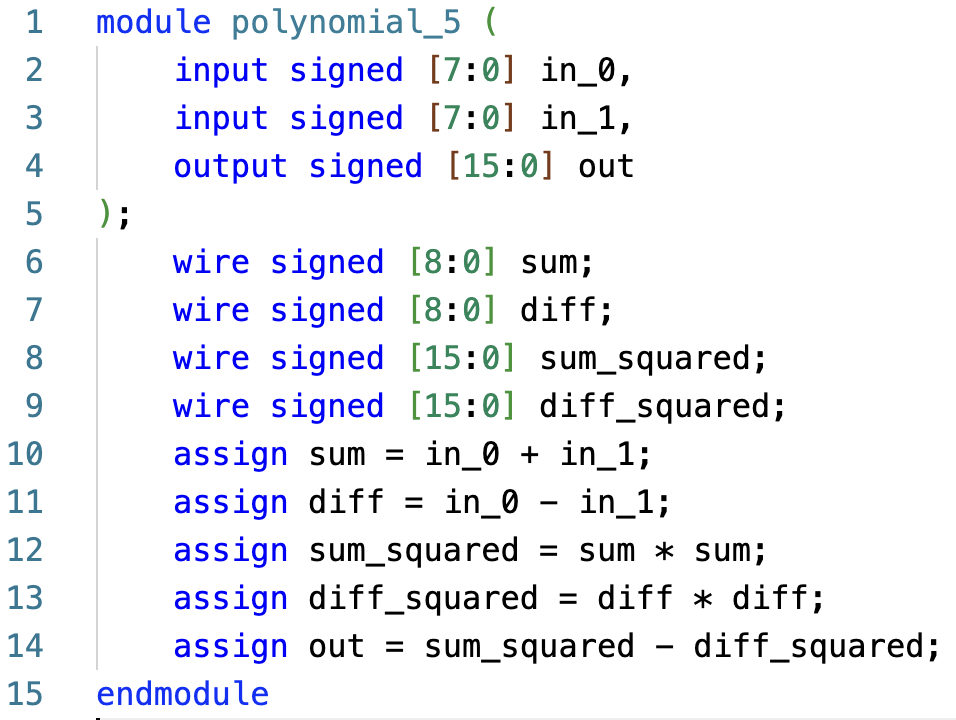

(a) Response from Qwen-2.5 (213 LUTs)

(b) Response from GPT-4 (0 LUTs + 1 DSP)

Figure 1: Benchmark example illustrating HDL optimization capability using the expression \((a+b)^2 - (a-b)^2\). (a) Qwen-2.5 computes the full expression directly, leading to high LUT usage. (b) GPT-4 simplifies the expression to \(4ab\), significantly reducing resource usage by using a single DSP unit instead of LUTs. This example demonstrates ResBench’s ability to differentiate LLMs based on resource optimization.

| Category | # Problems | Example Problems |
|---|---|---|
| Combinational Logic | 8 | parity_8bit, mux4to1, bin_to_gray |
| Finite State Machines | 4 | fsm_3state, traffic_light, elevator_controller |
| Mathematical Functions | 5 | int_sqrt, fibonacci, mod_exp |
| Basic Arithmetic Operations | 5 | add_8bit, mult_4bit, abs_diff |
| Bitwise and Logical Operations | 4 | bitwise_ops, left_shift, rotate_left |
| Pipelining | 5 | pipelined_adder, pipelined_multiplier, pipelined_fir |
| Polynomial Evaluation | 5 | \((x+2)^2 + (x+2)^2 + (x+2)^2\), \((a+b)^2 - (a-b)^2\) |
| Machine Learning | 5 | matrix_vector_mult, relu, mse_loss |
| Financial Computing | 4 | compound_interest, present_value, currency_converter |
| Encryption | 3 | caesar_cipher, modular_add_cipher, feistel_cipher |
| Physics | 4 | free_fall_distance, kinetic_energy, wavelength |
| Climate | 4 | carbon_footprint, heat_index, air_quality_index |
| Total | 56 | – |

Each problem in ResBench is carefully structured to evaluate both functional correctness and resource efficiency across different FPGA applications. The dataset includes problems from digital logic, mathematical computation, control logic, and application-driven domains such as machine learning, cryptography, and financial computing. Table 2 provides an overview of problem categories and representative examples.

ResBench effectively enforces the principle of hardware efficiency differentiation by incorporating problems that demand advanced optimization techniques beyond basic logic synthesis. Fig. 1 illustrates this with the polynomial evaluation benchmark \((a+b)^2 - (a-b)^2\), where algebraic simplification enables a more efficient Verilog implementation using a single DSP block. LLMs that fail to recognize this optimization generate designs with excessive LUT consumption, highlighting disparities in resource efficiency.
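The simplification behind this example follows from expanding both squares: \((a+b)^2 - (a-b)^2 = (a^2 + 2ab + b^2) - (a^2 - 2ab + b^2) = 4ab\). As a minimal sketch (written in Python rather than Verilog, and assuming unsigned 8-bit operands, which the benchmark text does not specify), the two forms can be checked for equivalence exhaustively. The function names `direct` and `simplified` are illustrative only.

```python
from itertools import product


def direct(a: int, b: int) -> int:
    """Evaluate (a+b)^2 - (a-b)^2 literally: two squarings and a subtraction."""
    return (a + b) ** 2 - (a - b) ** 2


def simplified(a: int, b: int) -> int:
    """Evaluate the reduced form 4ab: one multiplication and a left shift by 2."""
    return (a * b) << 2


# Compare both forms over every pair of unsigned 8-bit operands
# (the 8-bit width is an assumption made for this sketch).
mismatches = [
    (a, b)
    for a, b in product(range(256), repeat=2)
    if direct(a, b) != simplified(a, b)
]
print("mismatching operand pairs:", len(mismatches))
```

The reduced form needs a single multiplier, which is consistent with the single-DSP implementation reported in Fig. 1, whereas the literal form requires two squaring operations.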

ResBench also ensures FPGA application diversity by encompassing traditional and emerging workloads. Foundational FPGA tasks such as combinational logic, finite state machines, and arithmetic operations are included, alongside computational acceleration problems involving pipelining, polynomial evaluations, and mathematical functions. Additionally, application-driven categories such as machine learning, encryption, and financial computing highlight the adaptability of FPGAs in AI acceleration, security, and high-speed data processing. By spanning this broad range of applications, ResBench provides an in-depth evaluation of LLMs’ ability to generate Verilog optimized for real-world FPGA use cases.

3.2 Comparison with Existing Benchmarks

| Benchmark | Year | Hardware | Optimization Awareness | Problem Diversity |
|---|---|---|---|---|
| VerilogEval [17] | 2023 | General HDL | No | Logic, FSMs, arithmetic |
| HDLEval [31] | 2024 | General HDL | No | Digital circuits, control logic |
| PyHDL-Eval [32] | 2024 | General HDL | No | Python-based HDL, small designs |
| RTLLM [2] | 2024 | General HDL | No | RTL, bus protocols, DSP |
| VHDL-Eval [34] | 2024 | General HDL | No | VHDL logic, sequential circuits |
| GenBen [35] | 2024 | General HDL | No | Application-driven tasks |
| ResBench (Ours) | 2025 | FPGA | Resource Opt. | Broad scope across 12 domains |

Table 3 highlights key differences between ResBench and existing HDL benchmarks, particularly in FPGA resource optimization awareness and problem diversity. Most existing benchmarks, including VerilogEval, HDLEval, and GenBen, primarily assess functional correctness and HDL syntax quality but do not explicitly evaluate FPGA resource efficiency. These benchmarks lack mechanisms to measure how effectively LLMs optimize hardware costs, making them unsuitable for assessing FPGA-specific constraints. In contrast, ResBench explicitly incorporates optimization-aware problems and reports FPGA resource usage, ensuring that generated Verilog is not only functionally correct but also hardware-efficient.

Another key distinction of ResBench is its problem diversity. Existing benchmarks tend to focus on fundamental HDL constructs such as basic logic, FSMs, and arithmetic operations, without considering the broad application landscape of FPGAs. For instance, VerilogEval centers on control logic and arithmetic, while HDLEval primarily evaluates digital circuits and state machines. GenBen introduces application-driven problems but lacks structured evaluation for optimization strategies. ResBench, by contrast, covers a significantly wider spectrum of FPGA workloads, including applications such as machine learning, encryption, financial computing, and physics-based modeling. These domains represent real-world FPGA use cases, where resource efficiency is critical. By encompassing both fundamental and high-performance FPGA tasks, ResBench ensures a more representative and practical assessment of LLM-generated Verilog for FPGA hardware design.

4 Evaluation Framework

To systematically assess LLM-generated Verilog for FPGA design with ResBench, we implement a structured framework that evaluates both functional correctness and hardware efficiency. The framework defines \(\text{LUT}_{\min}\) as a key resource metric to measure optimization quality and incorporates an automated benchmarking system that streamlines Verilog generation, functional verification, FPGA synthesis, and result analysis, ensuring consistency and scalability in evaluation.

4.1 Resource Usage Metric

LUT utilization is a fundamental metric for assessing FPGA resource efficiency, as LUTs serve as the primary logic resource for combinational operations and small memory elements. While FPGAs also contain DSP blocks, BRAM, and flip-flops, these components are often application-specific. DSPs and BRAMs are essential for arithmetic-intensive and memory-heavy designs, but they are not universally required across FPGA workloads. In contrast, LUTs are always present, making them a reliable baseline for evaluating different HDL implementations. Furthermore, high LUT consumption frequently correlates with inefficient logic synthesis, making LUT usage a practical indicator of design efficiency.

Each LLM-generated design \(d_i\) is evaluated based on its lookup table (LUT) consumption. If a design successfully passes both synthesis and functional verification, its LUT count is recorded as \(\text{LUT}(d_i)\). Otherwise, it is assigned \(\infty\) to signify an invalid or non-synthesizable result:

\[\text{LUT}(d_i) = \begin{cases} \text{LUT count from synthesis}, & \text{if } d_i \text{ is synthesizable and correct}, \\ \infty, & \text{otherwise}. \end{cases}\]

Given \(k\) generated designs for a problem, we determine the most resource-efficient implementation by selecting the minimum LUT usage among all valid designs:

\[\text{LUT}_{\min} = \min \left( \text{LUT}(d_1), \text{LUT}(d_2), \dots, \text{LUT}(d_k) \right).\]

By using \(\infty\) for failed designs, our approach naturally excludes non-functional implementations, maintaining computational consistency and eliminating the need for explicit filtering.
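The two definitions above can be sketched in a few lines of Python. This is an illustrative reading of the metric, not the framework's actual code: the names `lut_cost` and `lut_min`, the use of `None` to mark a failed design, and the sample LUT counts are all assumptions.

```python
import math
from typing import Optional, Sequence


def lut_cost(lut_count: Optional[int]) -> float:
    """Map one design to LUT(d_i): its LUT count, or infinity if it failed.

    `None` stands for a design that failed synthesis or functional
    verification (an encoding chosen for this sketch).
    """
    return math.inf if lut_count is None else float(lut_count)


def lut_min(lut_counts: Sequence[Optional[int]]) -> float:
    """Return LUT_min over k designs; infinity if none of them is valid."""
    return min((lut_cost(c) for c in lut_counts), default=math.inf)


# Hypothetical results for k = 4 generated designs of one problem.
sample = [None, 93, 79, None]
print(lut_min(sample))
print(lut_min([None, None]))
```

Because failed designs map to infinity, a plain `min` never selects them, and a problem with no valid design reports infinity without any separate filtering step.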

4.2 Open-Source Software for Automated Benchmarking

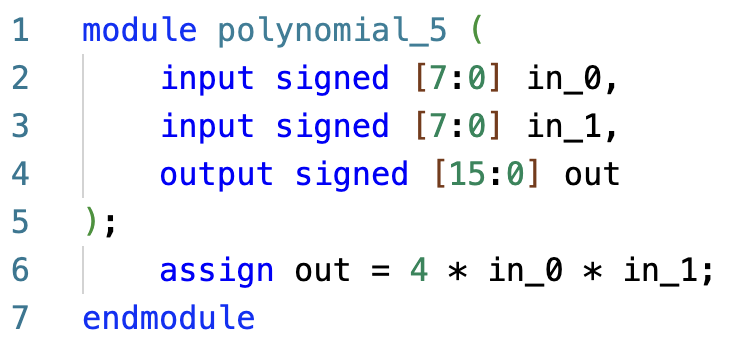

The software for ResBench automates the evaluation of LLM-generated Verilog, systematically measuring both functional correctness and FPGA resource efficiency with minimal manual intervention. An overview of the software’s workflow is shown in Fig. 2.

The software accepts a user-specified LLM model, which can be either a pre-integrated option or a custom model, and generates Verilog solutions based on structured problem definitions. It produces detailed evaluation reports, indicating whether each design passes synthesis and functional correctness checks, along with a resource utilization summary. The primary metric reported is \(\text{LUT}_{\min}\), representing the smallest LUT usage among successfully synthesized designs. By automating the full evaluation pipeline, the framework supports large-scale benchmarking and comparative assessment of different LLMs for HDL code generation.

To maintain consistency, each problem follows a structured format consisting of three essential components: a natural language problem description in plain English, a standardized Verilog module header, and a predefined testbench. The problem description specifies input-output behavior and functional constraints, ensuring that LLM-generated code aligns with real-world hardware design requirements. The module header provides a consistent Verilog interface with defined input and output signals but omits internal logic. The testbench validates functional correctness through simulation by applying predefined test cases and comparing outputs against a manually verified reference solution.
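One way to picture this three-part format is as a small record type. The sketch below is a hypothetical representation: the class name `Problem`, its field names, the prompt layout, and the example description and module header for `add_8bit` are illustrative assumptions, not the benchmark's actual files.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Problem:
    """One benchmark problem with its three components (field names assumed)."""

    name: str
    description: str    # plain-English behavior and constraints
    module_header: str  # Verilog interface with ports but no internal logic
    testbench: str      # simulation testbench source

    def prompt(self) -> str:
        """Combine the description and module header into an LLM prompt."""
        return (
            f"{self.description}\n\n"
            f"Complete the following Verilog module:\n{self.module_header}"
        )


# Hypothetical contents for a problem named after Table 2's add_8bit entry.
example = Problem(
    name="add_8bit",
    description="Add two unsigned 8-bit inputs a and b and output the 9-bit sum.",
    module_header="module add_8bit(input [7:0] a, input [7:0] b, output [8:0] sum);",
    testbench="// testbench source omitted in this sketch",
)
print(example.prompt())
```

Keeping the module header separate from the description fixes the port names and widths, so a single testbench can drive every generated design for that problem.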

Figure 2: Overview of the software workflow. The process begins with Verilog generation using an LLM, followed by functional verification through testbenches. Functionally correct designs undergo FPGA synthesis to extract resource usage metrics, and the framework compiles performance reports comparing functional correctness and resource efficiency.

The evaluation framework follows a structured pipeline to assess LLM-generated designs. First, the framework queries the selected LLM model to generate Verilog code for each problem. These generated solutions are stored with references to their corresponding problem descriptions. Next, functional verification is conducted using predefined testbenches. Designs that pass all test cases proceed to FPGA synthesis, while failed designs have their error logs recorded.

FPGA synthesis is performed to extract resource usage metrics such as LUT count, DSP utilization, and register count. LUT usage for successfully synthesized designs is stored, while failed synthesis attempts are assigned \(\infty\) for consistency in numerical processing. The key reported metric, \(\text{LUT}_{\min}\), reflects the smallest LUT usage among functionally correct designs, identifying the most resource-efficient implementation.

In the final stage, the framework consolidates results across all models and problems, producing structured reports that summarize pass rates, synthesis success rates, and resource usage statistics. Users can visualize model performance through automatically generated comparisons of functional correctness and resource efficiency. By following this structured evaluation process, the framework offers a fully automated benchmarking solution that systematically assesses LLM-generated Verilog across a diverse range of FPGA applications, ensuring relevance to real-world hardware design challenges.
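The per-problem flow described above (generate, simulate, synthesize, aggregate) can be sketched as follows. The three stages are passed in as plain callables because the real LLM, simulator, and synthesis interfaces are not shown here. The function name `evaluate_problem`, its parameters, and the stub stages in the demo are assumptions made for illustration.

```python
import math
from typing import Callable, Dict, List, Optional


def evaluate_problem(
    prompt: str,
    k: int,
    generate: Callable[[str], str],
    simulate: Callable[[str], bool],
    synthesize: Callable[[str], Optional[int]],
) -> Dict[str, float]:
    """Run k candidates through the pipeline and summarize the outcome."""
    luts: List[float] = []
    passed = 0
    for _ in range(k):
        code = generate(prompt)
        if not simulate(code):
            # Functionally incorrect designs never reach synthesis.
            luts.append(math.inf)
            continue
        passed += 1
        count = synthesize(code)  # None models a failed synthesis run
        luts.append(math.inf if count is None else float(count))
    return {"n": k, "passed": passed, "lut_min": min(luts, default=math.inf)}


# Stub stages standing in for the LLM, the simulator, and the synthesis tool.
candidates = iter(["bad", "ok-large-design", "ok-small"])
report = evaluate_problem(
    prompt="demo",
    k=3,
    generate=lambda _prompt: next(candidates),
    simulate=lambda code: code.startswith("ok"),
    synthesize=lambda code: len(code),
)
print(report)
```

Injecting the stages as callables keeps the control flow testable without a simulator or synthesis tool installed; in a real run they would wrap the tool invocations.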

5 Evaluation

This section evaluates LLM-generated FPGA designs with ResBench, focusing on functional correctness and FPGA resource efficiency. We detail the experimental setup, using Pass@k to measure correctness and LUT usage to measure resource efficiency. Model performance is analyzed across benchmarks, highlighting differences in correctness and resource efficiency, with a focus on distinguishing optimized from inefficient designs.

5.1 Experimental Setup and Metrics

We assess functional correctness using the widely adopted Pass@k metric, which quantifies the probability that at least one of the top-\(k\) generated solutions passes all functional test cases. The metric is formally defined as follows:

\[\text{Pass@k} = \mathbb{E}_{Q \sim \mathcal{D}} \left[ 1 - \frac{\binom{n - c_Q}{k}}{\binom{n}{k}} \right]\]

where \(Q\) is a problem sampled from the benchmark dataset \(\mathcal{D}\), \(n\) represents the total number of independently generated solutions for \(Q\), and \(c_Q\) is the number of solutions that pass all functional correctness tests. The binomial coefficient \(\binom{n}{k}\) represents the number of ways to choose \(k\) solutions from \(n\).
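The formula can be evaluated directly with integer binomial coefficients. The sketch below is illustrative: the function names are hypothetical, the expectation over problems is taken as a plain mean, and the sample values (\(n = 15\) solutions per problem) are assumptions rather than the paper's reported configuration.

```python
from math import comb
from typing import Sequence, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Per-problem term 1 - C(n - c, k) / C(n, k)."""
    if not (0 <= c <= n and 1 <= k <= n):
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    # comb(n - c, k) is 0 when k > n - c, so the term is then exactly 1.
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: Sequence[Tuple[int, int]], k: int) -> float:
    """Average the per-problem term over (n, c_Q) pairs, one per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical outcomes for three problems with n = 15 samples each.
outcomes = [(15, 3), (15, 0), (15, 15)]
print(benchmark_pass_at_k(outcomes, k=1))
print(benchmark_pass_at_k(outcomes, k=15))
```

With \(k = n\), the per-problem term is 1 whenever at least one sample passes and 0 otherwise, so Pass@k reduces to the fraction of problems with at least one correct solution.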

For the evaluation, we employ Vivado 2023.1 for both simulation and synthesis. During simulation, the testbenches provided for each problem are compiled and executed to verify the functional correctness of the generated Verilog modules. Upon successful simulation, Vivado’s synthesis tool is used to assess resource utilization and generate detailed reports.

For LLM evaluation, we set the temperature parameter to 1.5 to encourage diverse code generation. The evaluation includes three types of models to ensure meaningful comparisons: general-purpose LLMs, code-specialized LLMs, and HDL-specialized LLMs. The tested general-purpose models include GPT-3.5 [7], GPT-4o [38], GPT-4 [39], GPT-o1-mini [40], Llama3.1-405B [41], Qwen-Max [42], and Qwen-Plus [43]. The code-specialized models include Qwen2.5-Coder-32B-Instruct [44] and Codestral [45], while VeriGen [17] represents the HDL-specialized category. However, during evaluation, VeriGen did not generate meaningful code for any problem, making it impossible to extract or assess functional results; consequently, VeriGen’s results are omitted from further discussion.

Our experiments evaluate the ability of LLMs to generate both correct and hardware-efficient Verilog code by examining trends in Pass@k as the number of candidate solutions increases, comparing the performance of different model types in terms of syntax and functional correctness, and evaluating resource utilization. In particular, we focus on how well different models optimize FPGA resources such as LUTs, and we analyze the variations in resource usage across diverse problem categories. We also investigate the impact of specially designed "trick" problems—those requiring algebraic simplifications—on resource consumption, providing insights into the optimization strategies employed by the models.

5.2 Functional Correctness

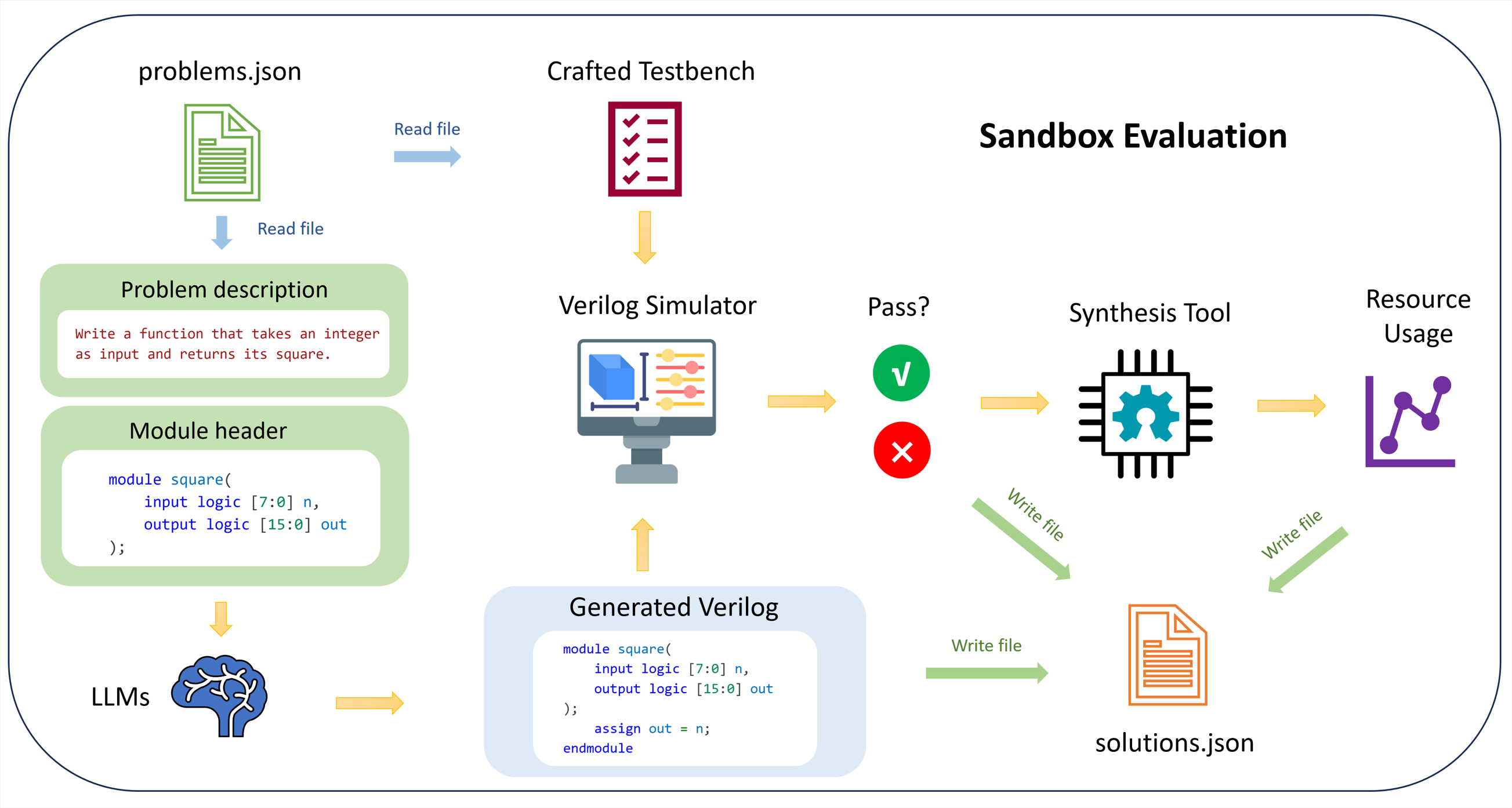

Figure 3 presents a heatmap illustrating Pass@k across 12 problem categories with \(k=15\), while the pass-count table details the pass counts of various LLMs in generating Verilog code, including a “Number of Wins” metric that indicates the number of categories in which each LLM achieved the highest pass count.
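The “Number of Wins” metric can be computed from per-category pass counts as sketched below. How ties are credited is not stated, so this sketch assumes every model tied for the highest count in a category receives a win. The function name, the model names, and the counts are hypothetical placeholders, not reported results.

```python
from typing import Dict


def number_of_wins(pass_counts: Dict[str, Dict[str, int]]) -> Dict[str, int]:
    """Count, per model, the categories where it has the highest pass count.

    `pass_counts[category][model]` is the number of problems passed.
    Ties credit every top-scoring model (an assumption of this sketch).
    """
    wins: Dict[str, int] = {}
    for per_model in pass_counts.values():
        for model in per_model:
            wins.setdefault(model, 0)
        if not per_model:
            continue
        best = max(per_model.values())
        for model, count in per_model.items():
            if count == best:
                wins[model] += 1
    return wins


# Placeholder data: two categories and two models.
demo_counts = {
    "category_1": {"model_a": 3, "model_b": 2},
    "category_2": {"model_a": 1, "model_b": 1},
}
print(number_of_wins(demo_counts))
```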


Analyzing the number of wins, GPT-o1-mini emerges as the leading model, achieving the highest pass counts in most categories as also evidenced by Figure 3. This suggests that reasoning-optimized models have an advantage in Verilog code generation, potentially due to enhanced reasoning capabilities that contribute to more accurate outputs. A notable trend is observed in fundamental hardware design problems such as combinational logic, finite state machines, and pipelining. In cases where LLMs fail to produce correct solutions, the generated code is often syntactically correct but functionally incorrect, indicating that while LLMs grasp basic syntax, they struggle with complex logic control. Conversely, for more intricate problems with complex contexts, such as encryption or mathematical functions, LLMs tend to produce syntactically incorrect code, reflecting challenges in understanding and reasoning within these contexts.

According to Figure 3, most categories include at least one LLM that achieves a Pass@k score of 1, meaning that this LLM produced at least one correct solution for every problem in the category. However, in categories such as pipelining, financial computing, and encryption, LLMs tend to underperform and exhibit higher variability. For example, while GPT-3.5 produced no passing solutions in pipelining, LLaMA achieved a Pass@k score of 0.8. Similar patterns are observed in the mathematical functions category, underscoring the importance of evaluating both functional correctness and resource optimization in complex design scenarios.
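As a reading aid for these scores, the sketch below computes Pass@k with the widely used unbiased estimator \(1 - \binom{n-c}{k}/\binom{n}{k}\), where \(n\) is the number of generated candidates and \(c\) the number that pass. It is an assumption that this matches the exact definition used earlier in the paper, and the per-problem pass counts in the usage note are hypothetical, not taken from the experiments.

```python
from math import comb

def pass_at_k(n, c, k):
    """Unbiased Pass@k estimate for one problem.

    n: candidates generated, c: candidates that pass, k: sample budget.
    """
    if not 0 <= c <= n:
        raise ValueError("c must satisfy 0 <= c <= n")
    if not 1 <= k <= n:
        raise ValueError("k must satisfy 1 <= k <= n")
    # comb(n - c, k) is 0 when fewer than k candidates fail,
    # so the estimate becomes exactly 1.0 in that case.
    return 1.0 - comb(n - c, k) / comb(n, k)

def category_pass_at_k(correct_counts, n, k):
    """Average the per-problem Pass@k over all problems in a category."""
    scores = [pass_at_k(n, c, k) for c in correct_counts]
    return sum(scores) / len(scores)
```

Under the assumption that \(n = k = 15\), each problem contributes either 0 or 1, so a category score is simply the fraction of problems with at least one passing candidate. For instance, `category_pass_at_k([3, 0, 15, 1, 7], 15, 15)` evaluates to 0.8 for a hypothetical five-problem category in which one problem has no passing candidate.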

Figure 3: Pass@15 heatmap for each LLM across categories

5.3 Resource Usage

Table 4: LUT usage per problem for each LLM

| Problem | gpt-3.5-turbo | gpt-4 | gpt-4o | gpt-o1-mini | llama3.1-405B | qwen-max | qwen-plus | qwen2.5-coder-32B | codestral |
|---|---|---|---|---|---|---|---|---|---|
| fsm 3state | 1 | **0** | **0** | **0** | **0** | **0** | **0** | **0** | **0** |
| traffic light | 1 | 1 | 2 | **0** | **0** | 2 | 3 | 2 | \(\infty\) |
| elevator controller | 3 | 3 | **2** | **2** | **2** | **2** | **2** | **2** | **2** |
| vending machine | **1** | **1** | 2 | **1** | 2 | **1** | **1** | 2 | **1** |
| int sqrt | \(\infty\) | \(\infty\) | 68 | 177 | \(\infty\) | **64** | 229 | 173 | \(\infty\) |
| fibonacci | \(\infty\) | 56 | **1** | 56 | 56 | 56 | \(\infty\) | \(\infty\) | \(\infty\) |
| mod exp | \(\infty\) | \(\infty\) | 4466 | 4669 | \(\infty\) | 1911 | **1678** | \(\infty\) | \(\infty\) |
| power | \(\infty\) | **79** | 93 | 93 | \(\infty\) | 93 | 93 | 93 | \(\infty\) |
| log2 int | \(\infty\) | \(\infty\) | \(\infty\) | **10** | 20 | \(\infty\) | \(\infty\) | 12 | \(\infty\) |
| abs diff | **12** | **12** | 14 | **12** | **12** | \(\infty\) | **12** | **12** | **12** |
| modulo op | **82** | **82** | **82** | **82** | 111 | \(\infty\) | \(\infty\) | \(\infty\) | \(\infty\) |
| left shift | **10** | **10** | **10** | **10** | **10** | 12 | 12 | **10** | **10** |
| pipelined adder | \(\infty\) | **0** | 16 | \(\infty\) | **0** | \(\infty\) | **0** | 15 | \(\infty\) |
| pipelined multiplier | \(\infty\) | \(\infty\) | 77 | 70 | **56** | \(\infty\) | 70 | \(\infty\) | \(\infty\) |
| pipelined max finder | \(\infty\) | **0** | 24 | **0** | 24 | 24 | 24 | 24 | 24 |
| \(x^3 + 3x^2 + 3x + 1\) | 49 | 49 | **0** | 91 | **0** | 91 | **0** | 91 | 49 |
| \((x+2)^2 + (x+2)^2 + (x+2)^2\) | 64 | 33 | 96 | **11** | 108 | 108 | 26 | 18 | 33 |
| \((a+b)^2 - (a-b)^2\) | \(\infty\) | **0** | 213 | 59 | 16 | 213 | 16 | 16 | 16 |
| relu | **8** | **8** | **8** | **8** | **8** | 16 | **8** | **8** | 16 |
| mse loss | \(\infty\) | 216 | **64** | **64** | 216 | **64** | 216 | **64** | **64** |
| compound interest | \(\infty\) | 13060 | 10135 | 10135 | 52950 | **9247** | \(\infty\) | 10135 | 52950 |
| currency converter | \(\infty\) | \(\infty\) | **0** | **0** | 25 | **0** | \(\infty\) | \(\infty\) | \(\infty\) |
| free fall distance | **6** | **6** | 64 | **6** | **6** | 64 | 67 | 64 | **6** |
| kinetic energy | 70 | 70 | **54** | **54** | **54** | **54** | **54** | **54** | **54** |
| potential energy | 6 | 6 | 84 | **0** | 6 | 6 | 6 | 6 | 6 |
| carbon footprint | 174 | 121 | 110 | **92** | 121 | 121 | 110 | 110 | 110 |
| heat index | **16** | **16** | 201 | **16** | 195 | **16** | 124 | 201 | 201 |
| air quality index | \(\infty\) | \(\infty\) | 128 | **104** | \(\infty\) | **104** | 116 | 128 | 128 |
| Number of wins | 7 | 12 | 10 | 19 | 11 | 10 | 9 | 7 | 8 |

We focus primarily on LUT usage, as detailed in Table 4. Problems for which all LLMs yield identical LUT usage are excluded from the table. For each problem, the cell with the lowest LUT usage is marked in bold, and each LLM's bold cells are tallied as its number of wins, i.e., the number of problems on which it achieves the lowest resource usage.
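The win tally can be sketched in a few lines. The example below uses three rows copied from Table 4 and treats \(\infty\) as "no usable design", which is our reading of the table rather than a definition stated here; the helper name `count_wins` is hypothetical and not part of the released benchmark software. Ties are counted as a win for every model that reaches the minimum.

```python
from math import inf

MODELS = [
    "gpt-3.5-turbo", "gpt-4", "gpt-4o", "gpt-o1-mini", "llama3.1-405B",
    "qwen-max", "qwen-plus", "qwen2.5-coder-32B", "codestral",
]

# Three rows from Table 4 (LUT counts in column order); inf stands for the
# table's infinity entries, assumed to mean that no usable design exists.
LUTS = {
    "traffic light": [1, 1, 2, 0, 0, 2, 3, 2, inf],
    "fibonacci": [inf, 56, 1, 56, 56, 56, inf, inf, inf],
    "potential energy": [6, 6, 84, 0, 6, 6, 6, 6, 6],
}

def count_wins(luts, models):
    """Count, per model, the problems on which it reaches the minimum LUT usage."""
    wins = {model: 0 for model in models}
    for row in luts.values():
        best = min(row)
        if best == inf:
            continue  # no model produced a usable design for this problem
        for model, value in zip(models, row):
            if value == best:
                wins[model] += 1
    return wins

wins = count_wins(LUTS, MODELS)
```

On this three-row subset, `wins["gpt-o1-mini"]` is 2, while `wins["gpt-4o"]` and `wins["llama3.1-405B"]` are each 1; the totals in the last row of Table 4 come from applying the same rule to all listed problems.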

The observed variations in resource usage across different problem types confirm that LLM-generated Verilog can lead to different hardware resource demands. For simple tasks such as combinational logic and basic arithmetic operations (omitted from Table 4), resource usage is nearly uniform, suggesting that for straightforward problems, LLMs tend to generate optimized solutions with minimal variance due to reliance on well-established standard implementations.

Analyzing the wins in Table 4, GPT-o1-mini leads with 19 wins, achieving the lowest LUT usage on 19 of the 28 listed problems. This suggests that its reasoning capabilities may offer a deeper understanding of Verilog and the associated challenges, leading to superior resource optimization. In contrast, code-specialized LLMs, while achieving high precision in generating functionally correct code, may lack the overall reasoning ability required to address practical FPGA design challenges.

6 Conclusion and Future Work

LLMs offer a promising approach to HDL generation but struggle to meet practical FPGA design requirements. Existing benchmarks focus on functional correctness while neglecting FPGA-specific constraints such as resource usage and optimization potential. They also lack problem diversity, limiting their ability to evaluate real-world applicability. To address this, we introduced ResBench, the first resource-centric benchmark for LLM-generated HDL. It includes 56 problems across 12 categories, integrating FPGA resource constraints to assess both correctness and efficiency. Experimental results show that different LLMs exhibit distinct optimization behaviors, validating the benchmark’s effectiveness.

Future work will extend ResBench by incorporating additional FPGA resource metrics, such as power consumption and timing, and improving the benchmarking software with an enhanced interface to facilitate community contributions and dataset expansion.


Code repository: https://github.com/jultrishyyy/ResBench