Multimodal-to-Text Prompt Engineering in Large Language Models Using Feature Embeddings for GNSS Interference Characterization


January 09, 2025

Abstract

Large language models (LLMs) are advanced AI systems applied across various domains, including NLP, information retrieval, and recommendation systems. Despite their adaptability and efficiency, LLMs have not been extensively explored for signal processing tasks, particularly in the domain of global navigation satellite system (GNSS) interference monitoring. GNSS interference monitoring is essential to ensure the reliability of vehicle localization on roads, a critical requirement for numerous applications. However, GNSS-based positioning is vulnerable to interference from jamming devices, which can compromise its accuracy. The primary objective is to identify, classify, and mitigate these interferences. Interpreting GNSS snapshots and the associated interferences presents significant challenges due to the inherent complexity, including multipath effects, diverse interference types, varying sensor characteristics, and satellite constellations. In this paper, we extract features from a large GNSS dataset and employ LLaVA to retrieve relevant information from an extensive knowledge base. We employ prompt engineering to interpret the interferences and environmental factors, and utilize t-SNE to analyze the feature embeddings. Our findings demonstrate that the proposed method is capable of visual and logical reasoning within the GNSS context. Furthermore, our pipeline outperforms state-of-the-art machine learning models in interference classification tasks.

Code: https://gitlab.cc-asp.fraunhofer.de/darcy_gnss

Large Language Models, LLaVA, Multimodal-to-Text, Prompt Engineering, In-context Learning, Global Navigation Satellite System, Interference Characterization

1 Introduction

Humans interact with the world through various channels, such as vision and language, each offering distinct advantages for representing and communicating specific concepts. The aim is to develop a versatile assistant capable of effectively following multimodal vision-and-language instructions, aligned with human intent, to execute a wide range of real-world tasks in dynamic environments [1]. Language-augmented foundation vision models [2] have demonstrated strong performance in open-world visual understanding tasks, including classification [3], object detection [4], and semantic segmentation [5], as well as visual generation and editing [6]. LLMs serve as a universal interface for a general-purpose assistant, enabling various task instructions to be represented explicitly in language. The recent success of ChatGPT [7] exemplifies the power of aligned LLMs in following human instructions [1]. Pre-trained language models [8] are task-agnostic: models such as recurrent neural networks or transformers learn a hidden embedding space by pre-training on web-scale unlabeled text corpora for general tasks and are subsequently fine-tuned for specific tasks. LLMs, characterized by their larger model size and enhanced language comprehension, are capable of in-context learning [9], in which they acquire new tasks at inference time from a small set of examples provided in the prompt [10]. Through advanced application and augmentation techniques, LLMs can be deployed as AI agents, i.e., artificial entities that perceive their environment, make decisions, and take actions. Such agents often augment LLMs with access to up-to-date information from external knowledge bases and with the ability to verify whether system actions yield the desired results [11].

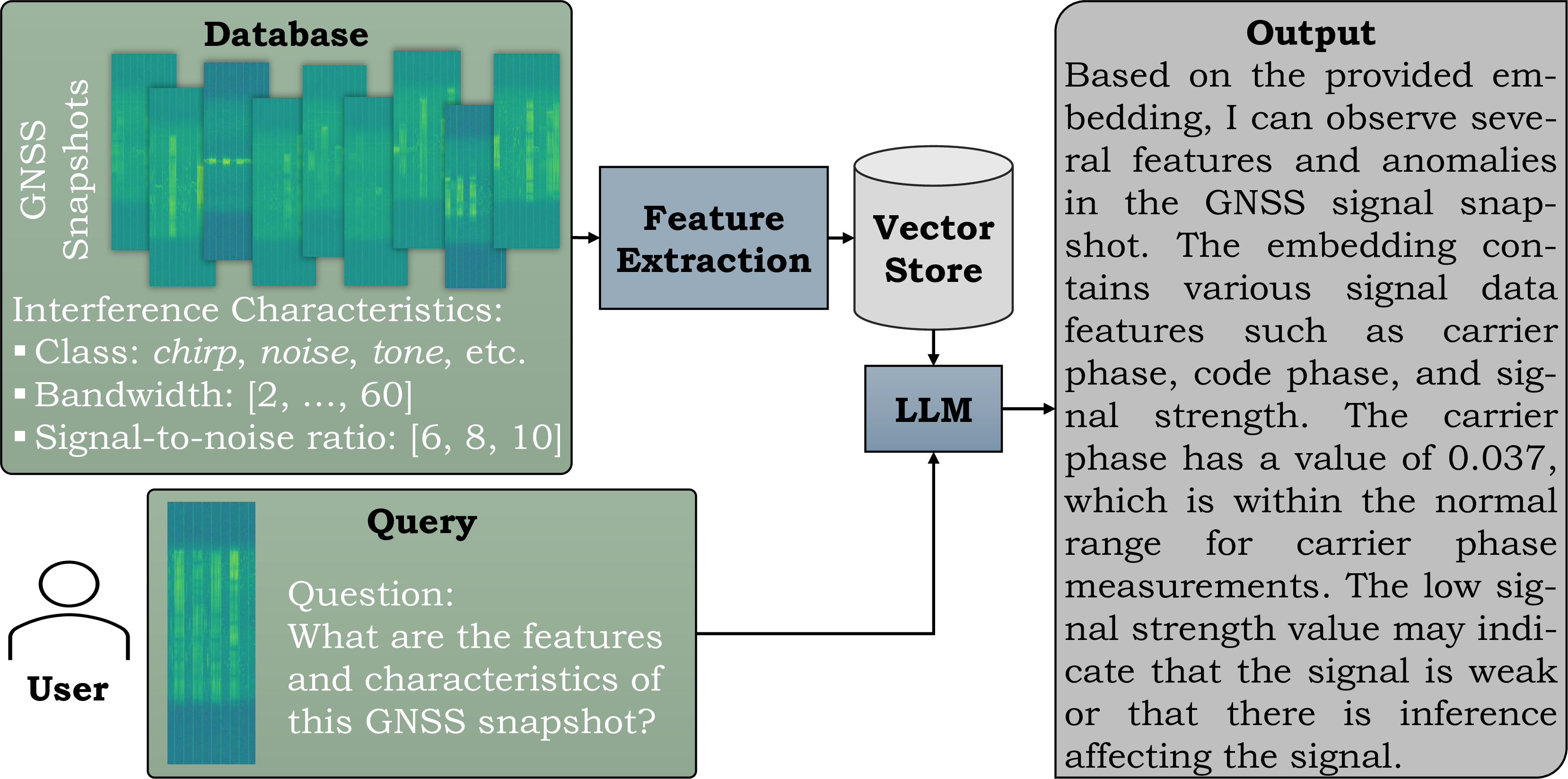

Figure 1: Based on feature embeddings extracted from GNSS snapshots, which include associated interference characteristics, a large language model (LLM) generates a description in response to a contextual query provided by a user.

Previous research has primarily focused on developing agents tailored to specific tasks and domains. However, in the context of GNSS interference monitoring [12]–[18], no studies have yet explored the use of language models for analyzing GNSS signals [19], [20], which have potential applications in crowdsourcing [21], aerospace systems, and toll collection management for highway trucking [22], [23]. The application of LLMs in these domains therefore remains at an early stage of research. The accuracy of GNSS receivers is significantly compromised by interference from jamming devices [24], a problem exacerbated by the increasing availability of affordable jammers [25]. Mitigating these interference signals is therefore crucial and requires the detection, classification, and localization of the interference source [26]. However, analyzing GNSS signals and characterizing interference present substantial challenges due to the wide variability in interference bandwidths, signal-to-noise ratios, antenna characteristics, and environmental factors such as multipath effects [26]–[28]. Further challenges lie in the multimodal vision-and-text approach to interference characterization, the transferability of techniques from image analysis to GNSS tasks [29], and the analysis of snapshots through feature extraction. LLMs hold promise for GNSS interference monitoring because they can process and interpret complex, multivariate data in real time; they can potentially detect interference events while delivering explainable insights that strengthen system resilience and adaptability. Figure 1 illustrates our objective: to retrieve information from GNSS snapshots, characterize features using LLMs, and present a descriptive output to the end user, who may be a decision-maker, such as a non-expert interpreting the model’s output in real-world operations [30]. We evaluate the LLM output by examining prompt engineering in the context of GNSS interference monitoring systems.

Contributions. The primary objective of this work is to provide a detailed characterization of GNSS interferences using language models and prompt engineering. The novelty lies in adapting LLM capabilities to the specialized domain of GNSS interference monitoring by addressing key challenges; this includes adapting LLMs to process GNSS signal data, which fundamentally differs from text data. The key contributions of this research are as follows: (1) We introduce a method that leverages feature extraction and LLaVA [1] to generate descriptive outputs in response to user queries. (2) We present a comprehensive description of a GNSS dataset, focusing on its characteristics, including interference class, bandwidth, signal power, and multipath effects. (3) We explore prompt engineering by manually evaluating hundreds of query-output pairs with varying levels of detail. (4) We assess feature embeddings using t-SNE analysis. (5) We demonstrate that our proposed method outperforms traditional machine learning (ML) approaches in the task of interference classification.

2 Related Work

First, we provide a summary of related work that has evaluated prompt engineering for language models (see Section 2.1). Following this, we introduce the methods used for GNSS interference classification (see Section 2.2).

2.1 Prompt Engineering with Language Models

In the context of signal processing, Verma & Pilanci [31] compare classical Fourier transforms with learnable time-frequency representations for each intermediate activation signal within an LLM. Nguyen et al. [32] examine the vulnerabilities of LLMs in the context of 6G technology, focusing on their susceptibility to malicious exploitation by analyzing known security weaknesses. Lin et al. [33] highlight the potential of deploying LLMs at the 6G edge, emphasizing its ability to reduce long response times, high bandwidth costs, and data privacy violations. Yu et al. [34] investigate the application of LLMs to diagnosing cardiac diseases and sleep apnea from ECG signals, incorporating expert knowledge to guide the models beyond their inherent capabilities. Go et al. [35] provide a comprehensive survey of prompt engineering, a technique that augments a large pre-trained model with task-specific prompts to adapt it to new tasks. This approach allows predictions based solely on the prompt, without updating the model parameters, which aligns with our proposed method: we extract features from a large database without fine-tuning the language model. However, prompt engineering requires complex reasoning to identify model errors, hypothesize about gaps or misleading aspects in the current prompt, and communicate the task clearly. Its potential is limited by a lack of sufficient guidance for complex reasoning, as well as by the need for detailed descriptions, context specification, and a structured reasoning framework [36]. To our knowledge, prompt engineering and language models have not yet been applied to GNSS interference monitoring; the methods used for GNSS interference classification are reviewed in the next section.

2.2 GNSS Interference Classification

Several recent methods have focused on the classification of GNSS interferences. For instance, Swinney et al. [12] represented jamming signals as power spectral density, spectrogram, raw constellation, and histogram images to apply transfer learning from the imagery domain. Ferre et al. [13] employed support vector machines (SVMs) and convolutional neural networks to classify jammer types in GNSS signals, whereas Li et al. [14] and Xu et al. [15] adopted a twin SVM-based approach. Ding et al. [16] applied ML models in a single static (line-of-sight) propagation environment; in contrast, we extend this work by considering multipath environments. Gross et al. [17] utilized a maximum likelihood method to determine whether a synthetic signal is compromised by multipath or jamming. Heublein et al. [27] highlighted the challenges associated with data discrepancies in the GNSS context. Few-shot learning [22] has been applied in the GNSS context to integrate new classes into a support set, aiming for a more continuous representation between positive and negative interference pairs. Raichur et al. [29] adapted to novel interference classes through continual learning. Brieger et al. [26] incorporated both spatial and temporal relationships between samples by utilizing a joint loss function and a late fusion technique. Furthermore, Raichur et al. [21] introduced a crowdsourcing approach that leverages smartphone-based features to localize the source of detected interference. For a comprehensive overview of recent adaptive GNSS applications, refer to Ott et al. [23]. Gaikwad et al. [37] proposed a federated learning approach that combines few-shot learning with aggregation of the model weights on a global server. They introduced a dynamic early stopping method that balances out-of-distribution classes based on representation learning, specifically using the maximum mean discrepancy of feature embeddings between local and global models. Heublein et al. [38] proposed an ML approach that achieves high generalization in classifying interference through orchestrated monitoring stations deployed along highways. Their semi-supervised approach is coupled with an uncertainty-based voting mechanism that combines Monte Carlo and Deep Ensembles, reducing the requirement for labeled training samples to less than 5% of the dataset while improving adaptability across varying environments.

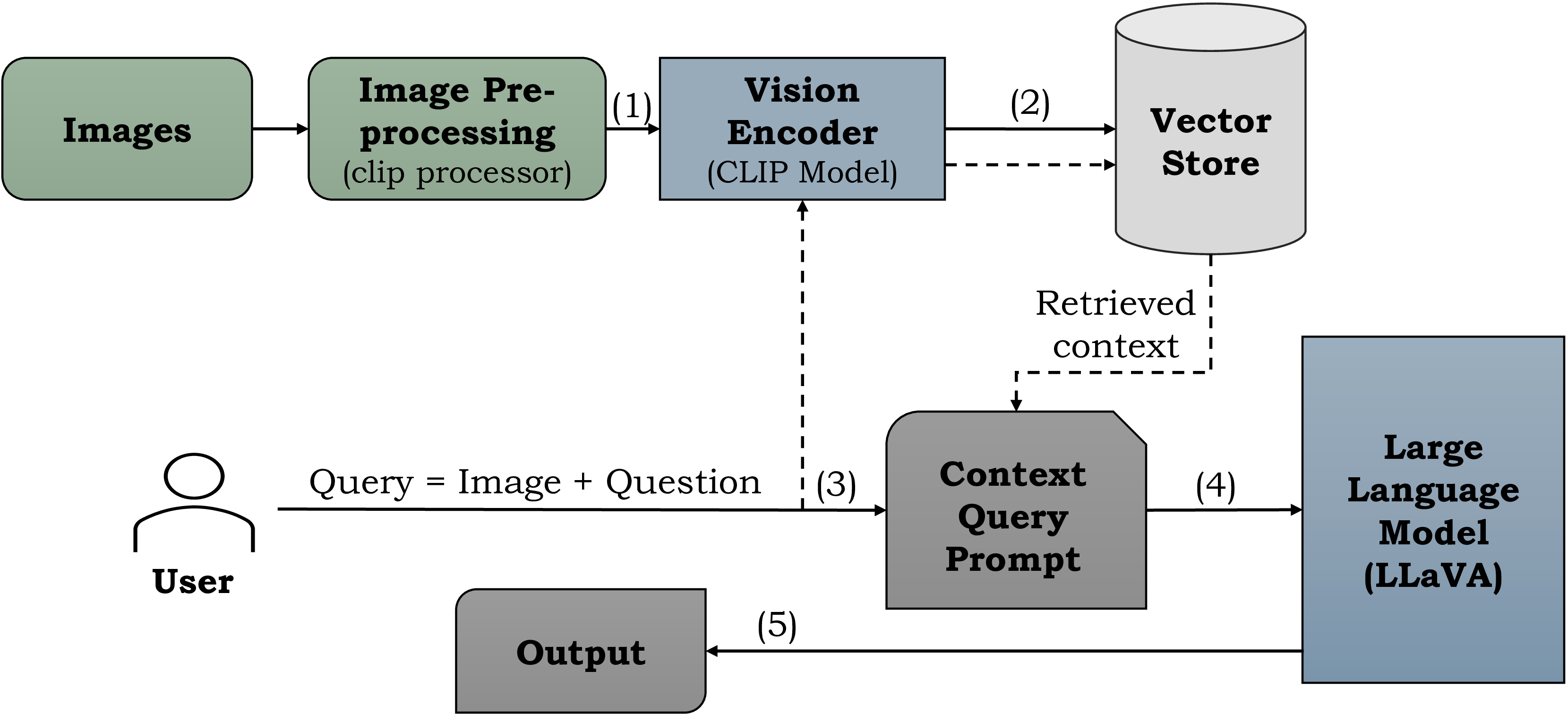

Figure 2: Method overview. GNSS snapshots are processed (1), and the resulting image embeddings are stored as vectors (2). When the user submits a context query prompt (3), the LLM (4) provides the corresponding output (5).

Figure 3: Overview of CLIP with an image-text pair as input [39].

3 Methodology

First, we provide an overview of the method. We then introduce the vision encoder, the vector store, and the language model. Lastly, we present the specifics of our prompt engineering approach.

Method Overview. Figure 2 presents a detailed overview of the proposed pipeline. The input image has dimensions of \(1,024 \times 34\), and the dataset comprises a total of 42,592 GNSS snapshots. Initially, the images are processed using the CLIP (contrastive language-image pre-training) visual encoder ViT-L/14 [39]. The features extracted by the CLIP model are stored as embeddings in a vector store. The process continues when the user submits a query, which includes an image (a GNSS snapshot) and a related question. Using a context query prompt, we instruct the LLM on its task. The LLM employed is based on LLaVA [1], and the output is constrained to a maximum of 500 tokens.

Vision Encoder. We employ the CLIP ViT-L/14 vision encoder (footnote 2; refer to Figure 3), as proposed by Radford et al. [39]. CLIP is a neural network trained on a diverse set of (image, text) pairs. It is designed to predict the most relevant text snippet for a given image based on natural language instructions, without direct task-specific optimization. This approach facilitates zero-shot transfer of the model to downstream tasks.
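To make the zero-shot mechanism concrete, the following minimal NumPy sketch scores one image embedding against several text-prompt embeddings in the way CLIP does: both are L2-normalized, their cosine similarities are multiplied by a logit scale, and a softmax turns the scores into probabilities. The random vectors stand in for real CLIP outputs, and the logit scale of 100 is an assumed constant standing in for CLIP's learned temperature; this is an illustration, not the encoder code used in our pipeline.

```python
import numpy as np


def l2_normalize(x, axis=-1):
    # Scale vectors to unit length so that dot products equal cosine similarities.
    return x / np.linalg.norm(x, axis=axis, keepdims=True)


def zero_shot_probs(image_emb, text_embs, logit_scale=100.0):
    """CLIP-style zero-shot scoring of one image against N text prompts.

    image_emb: (d,) image embedding; text_embs: (N, d) prompt embeddings.
    logit_scale is an assumed constant standing in for CLIP's learned temperature.
    """
    logits = logit_scale * (l2_normalize(text_embs) @ l2_normalize(image_emb))
    logits = logits - logits.max()  # subtract the maximum for numerical stability
    exp = np.exp(logits)
    return exp / exp.sum()  # probability of each prompt matching the image


# Illustration with random stand-in embeddings (not real CLIP outputs).
rng = np.random.default_rng(0)
prompt_embs = rng.normal(size=(4, 512))  # e.g. four hypothetical class prompts
image_emb = prompt_embs[2] + 0.1 * rng.normal(size=512)  # image close to prompt 2
print(zero_shot_probs(image_emb, prompt_embs).argmax())  # prints 2
```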

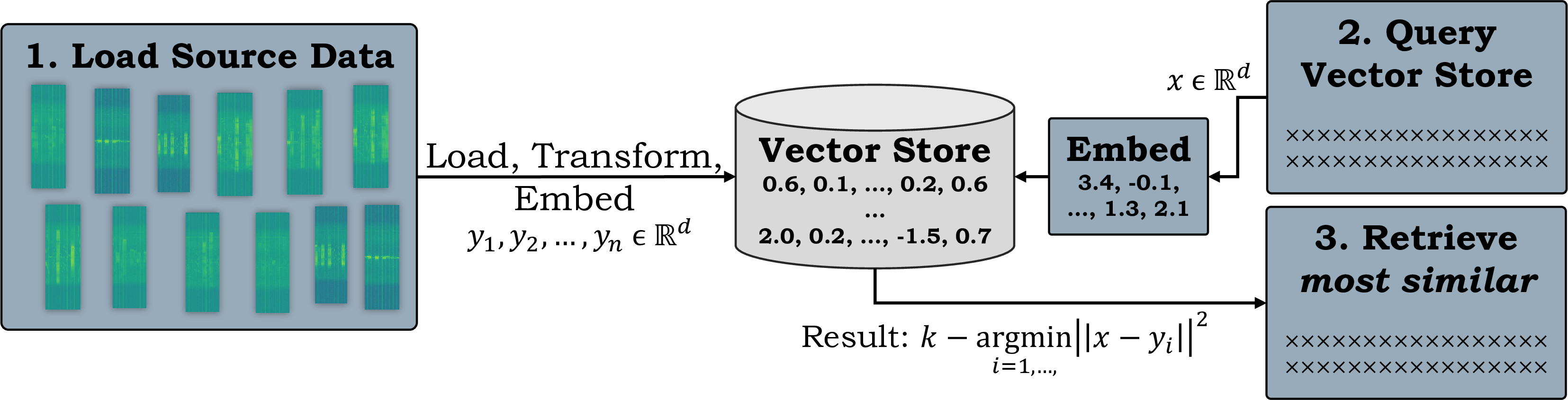

Vector Store. A prevalent method for storing and searching unstructured data involves embedding the data, storing the resulting embedding vectors, and then embedding the unstructured query at query time to retrieve the vectors that are most similar to the embedded query. A vector store manages the storage of embedded data and executes vector searches (refer to Figure 4). In this work, we utilize the Facebook AI similarity search (FAISS) vector database [40] to store embeddings of size \((1, 512)\).
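The exact search performed by a flat FAISS index can be sketched in a few lines of NumPy. The class below is a hypothetical stand-in that mimics the add/search pattern of `faiss.IndexFlatL2`, which returns squared L2 distances together with neighbour indices; with FAISS installed, the equivalent calls would be `index = faiss.IndexFlatL2(512)`, `index.add(xb)` and `D, I = index.search(xq, k)`. The random 512-dimensional vectors are placeholders for the CLIP embeddings, and the sketch does not claim to reproduce the index type used in our experiments.

```python
import numpy as np


class FlatL2Index:
    """Hypothetical brute-force vector store mimicking faiss.IndexFlatL2."""

    def __init__(self, dim):
        self.dim = dim
        self.vectors = np.empty((0, dim), dtype=np.float32)

    def add(self, x):
        # Append embeddings of shape (n, dim); a single (1, 512) embedding also works.
        x = np.asarray(x, dtype=np.float32).reshape(-1, self.dim)
        self.vectors = np.vstack([self.vectors, x])

    def search(self, queries, k):
        # Exact squared L2 distance from every query to every stored vector.
        q = np.asarray(queries, dtype=np.float32).reshape(-1, self.dim)
        d2 = ((q[:, None, :] - self.vectors[None, :, :]) ** 2).sum(axis=-1)
        idx = np.argsort(d2, axis=1)[:, :k]  # k closest stored vectors per query
        return np.take_along_axis(d2, idx, axis=1), idx


# Store 1,000 random stand-in embeddings, then query with stored vector no. 42.
rng = np.random.default_rng(0)
index = FlatL2Index(dim=512)
index.add(rng.normal(size=(1000, 512)))
D, I = index.search(index.vectors[42:43], k=3)
print(I[0, 0], D[0, 0])  # nearest neighbour is vector 42 itself, at distance 0
```

A flat index compares the query with every stored vector, which keeps results exact at the cost of linear search time in the number of stored snapshots.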

Figure 4: Overview of the vector store [40].

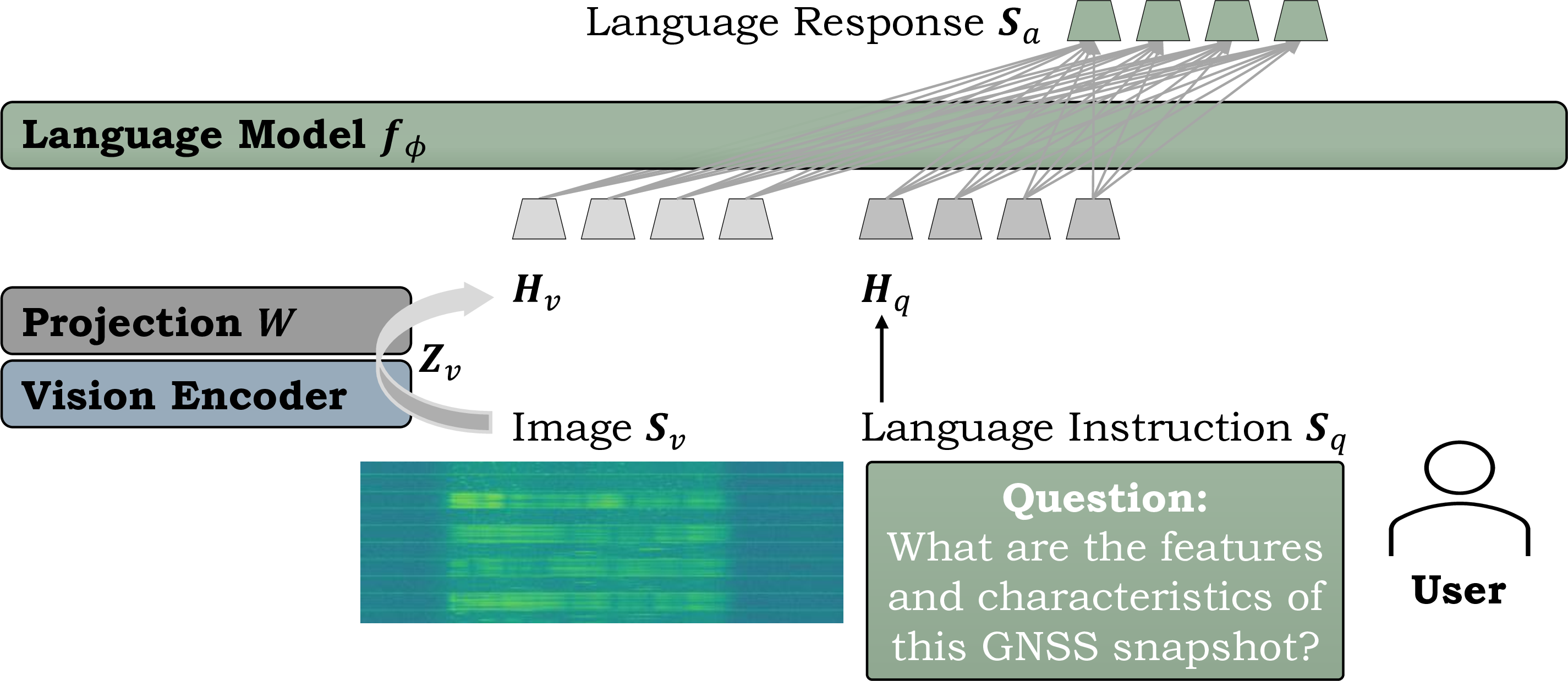

Figure 5: Network architecture of LLaVA [1].

Figure 6: Exemplary snapshot samples (concatenation of 10 samples) of the non-interference class (a) and all six interference types (b to g), a signal with chirp interference with different bandwidths (BW) (h to j) and signal powers (k to m), and a chirp interference from scenario 1 (open environment), scenario 7, and scenario 8. Figures from Heublein et al. [18].

Language Model. The objective is to effectively harness the capabilities of both the pre-trained LLM and the visual model. We employ the LLaVA model [1] (footnote 3), which is based on Vicuna [41], as the language model \(f_\phi(\cdot)\), parameterized by \(\phi\). Although LLaVA-NeXT [10] is too large for our current hardware configuration (footnote 4), it remains a potential architecture for future work. Figure 5 illustrates the network architecture, where a GNSS snapshot is used as input and user queries serve as language instructions. Given an input image snapshot \(\mathbf{S}_v\), the visual feature \(\mathbf{Z}_v = g(\mathbf{S}_v)\) is extracted using the pre-trained CLIP visual encoder ViT-L/14 [39]. A linear layer maps the image features into the word embedding space; specifically, the visual features \(\mathbf{Z}_v\) are projected via the matrix \(\mathbf{W}\) into language embedding tokens \(\mathbf{H}_v = \mathbf{W} \cdot \mathbf{Z}_v\) [1]. In LLaVA's training phase, multi-turn conversation data \((\mathbf{S}_q^1, \mathbf{S}_a^1, \ldots, \mathbf{S}_q^T, \mathbf{S}_a^T)\) is generated for each image \(\mathbf{S}_v\), where \(T\) is the total number of turns, and the LLM is instruction-tuned on the prediction tokens with an auto-regressive training objective. For a sequence of length \(L\), the probability of the target answers \(\mathbf{S}_a\) is computed as \[\label{equ_instruct} p(\mathbf{S}_a | \mathbf{S}_v, \mathbf{S}_{\text{instruct}}) = \prod_{i=1}^{L} p_{\theta}(s_i | \mathbf{S}_v, \mathbf{S}_{\text{instruct},<i}, \mathbf{S}_{a,<i}),\tag{1}\] where \(\mathbf{S}_{\text{instruct},<i}\) and \(\mathbf{S}_{a,<i}\) are the instruction and answer tokens, respectively, in all turns before the current prediction token \(s_i\), and \(\theta\) denotes the trainable parameters [1].
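The two computations in this paragraph can be illustrated with a small NumPy sketch: a linear projection \(\mathbf{H}_v = \mathbf{W} \cdot \mathbf{Z}_v\) of visual features into the word-embedding space, and Eq. (1) evaluated in log space, where the product over answer tokens becomes a sum of per-token log-probabilities. The dimensions and logits are hypothetical toy values; in LLaVA, the per-step logits come from the language model conditioned on the image tokens, the instruction, and the previous answer tokens.

```python
import numpy as np


def project_visual_features(z_v, W):
    """H_v = W · Z_v: map visual features (one column per image patch) to token embeddings."""
    return W @ z_v


def log_softmax(logits):
    shifted = logits - logits.max()
    return shifted - np.log(np.exp(shifted).sum())


def answer_log_likelihood(step_logits, answer_ids):
    """log p(S_a | S_v, S_instruct) = sum_i log p(s_i | S_v, S_instruct,<i, S_a,<i).

    step_logits[i] holds the model's next-token logits at answer position i
    (assumed to be already conditioned on image, instruction and earlier answer tokens).
    """
    return float(sum(log_softmax(logits)[token] for logits, token in zip(step_logits, answer_ids)))


# Toy dimensions (hypothetical): 8-dim visual features, 16-dim word embeddings, 5 patches.
rng = np.random.default_rng(0)
Z_v = rng.normal(size=(8, 5))
W = rng.normal(size=(16, 8))
H_v = project_visual_features(Z_v, W)  # shape (16, 5): five visual "tokens"

# With uniform logits over a 10-token vocabulary, each of 3 answer tokens has p = 0.1.
ll = answer_log_likelihood(np.zeros((3, 10)), [4, 1, 7])
print(np.exp(ll))  # product of per-token probabilities, 0.1 ** 3 up to rounding
```

Working in log space avoids numerical underflow when the product in Eq. (1) runs over hundreds of answer tokens.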

Prompt Engineering. Visual LLMs have the potential to provide a more comprehensive understanding of multimodal data by combining textual and visual cues, thereby generating outputs that more closely align with human-like reasoning and perception. This fusion allows the model to capture interdependencies and interactions between textual and visual elements, leading to more accurate and contextually grounded outputs. For instance, when the user provides additional textual information about the GNSS data, the model can integrate this input into its answer. A well-designed fusion module is crucial for capturing interactions and relationships between modalities, avoiding semantic mismatches, and mitigating biases [35]. Task instruction prompting [42] uses carefully designed prompts that provide explicit task-related instructions to guide the model’s behavior. It is defined as \(x_{\text{input}} = \mathcal{H}(\mathbf{S}_v, t)\), where the task instruction function \(\mathcal{H}\) takes the image \(\mathbf{S}_v\) and text \(t\) as inputs and produces a modified input representation \(x_{\text{input}}\). In-context learning [43], [44] presents the model with a sequence of related examples or prompts, enabling it to learn and generalize from the provided context. It is defined as \(x_{\text{input}} = \mathcal{H}(\mathcal{C}, \mathbf{S}_v, t)\), where \(\mathcal{H}\) integrates the given context \(\mathcal{C}\) with the image \(\mathbf{S}_v\) and text \(t\) inputs [35]. In our approach, we utilize in-context learning by incorporating the retrieved context from the vector store into the user query (refer to Figure 2).
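As a minimal sketch of the two prompting schemes, the hypothetical function below plays the role of \(\mathcal{H}\): without context it yields a task instruction prompt \(\mathcal{H}(\mathbf{S}_v, t)\), and with a list of retrieved descriptions it yields an in-context prompt \(\mathcal{H}(\mathcal{C}, \mathbf{S}_v, t)\). The image is represented by a placeholder token that the model's processor later replaces with the projected visual tokens. The `[INST] ... [/INST]` wrapper and the `<image>` placeholder are assumptions based on the Mistral-style template shown on the llava-v1.6-mistral-7b-hf model card and should be checked against the model actually used; the instruction wording is illustrative, not the exact prompt from our experiments.

```python
def build_prompt(question, context=None, image_token="<image>"):
    """Hypothetical H(C, S_v, t). Without context this reduces to H(S_v, t)."""
    parts = [f"[INST] {image_token}", "You are analysing a GNSS snapshot for interference."]
    if context:
        # In-context learning: prepend descriptions of similar, already-labelled snapshots.
        parts.append("Descriptions of similar snapshots retrieved from the vector store:")
        parts.extend(f"- {c}" for c in context)
    parts.append(f"Question: {question} [/INST]")
    return "\n".join(parts)


# Hypothetical retrieved context; the labels are placeholders, not dataset entries.
print(build_prompt(
    "Which interference type is present, and what are its characteristics?",
    context=["chirp interference, wide bandwidth, high power", "no interference"],
))
```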

4 Dataset

Figure 7: Overview of different multipath scenarios where large black absorber walls are placed between and around the signal generator and the antenna (from Heublein et al. [18]).

We employ a GNSS snapshot-based dataset as introduced by Ott et al. [18]. The primary objective is to develop ML models that are robust against various types of jammers, interference characteristics, antenna variations, and environmental changes. Data collection takes place within the Fraunhofer IIS L.I.N.K. center, a controlled environment measuring \(1,320\,\text{m}^2\), which facilitates the incorporation of multipath effects. A receiver antenna is positioned at one end of the hall, while an MXG vector signal generator is placed at the opposite end. The signal generator is capable of producing high-quality radio frequency signals across a wide range of frequencies. The signals received by the antenna are recorded as snapshots that include various interferences introduced by the signal generator. A comprehensive dataset is gathered under different configurations, including scenarios in an empty environment and setups where absorber walls are placed between the antenna and the generator (as shown in Figure 7). The antenna captures snapshots at a frequency of \(100\,\text{MHz}\) with a duration of \(10\,\mu\text{s}\). Each snapshot consists of 1,024 channels, resulting in dimensions of \(1,024 \times 34\). Figure 6 presents a selection of snapshots, with each image showing 10 randomly selected samples. Six distinct types of interference are introduced. Furthermore, the jammer types are characterized by parameters such as bandwidth (BW), ranging from 0.1 to 60, and signal power, ranging from -10 to 10. Increased multipath effects correspond to a reduction in interference intensity. The dataset contains a total of 42,592 snapshots, of which 576 are free from any interference [18].

5 Evaluation

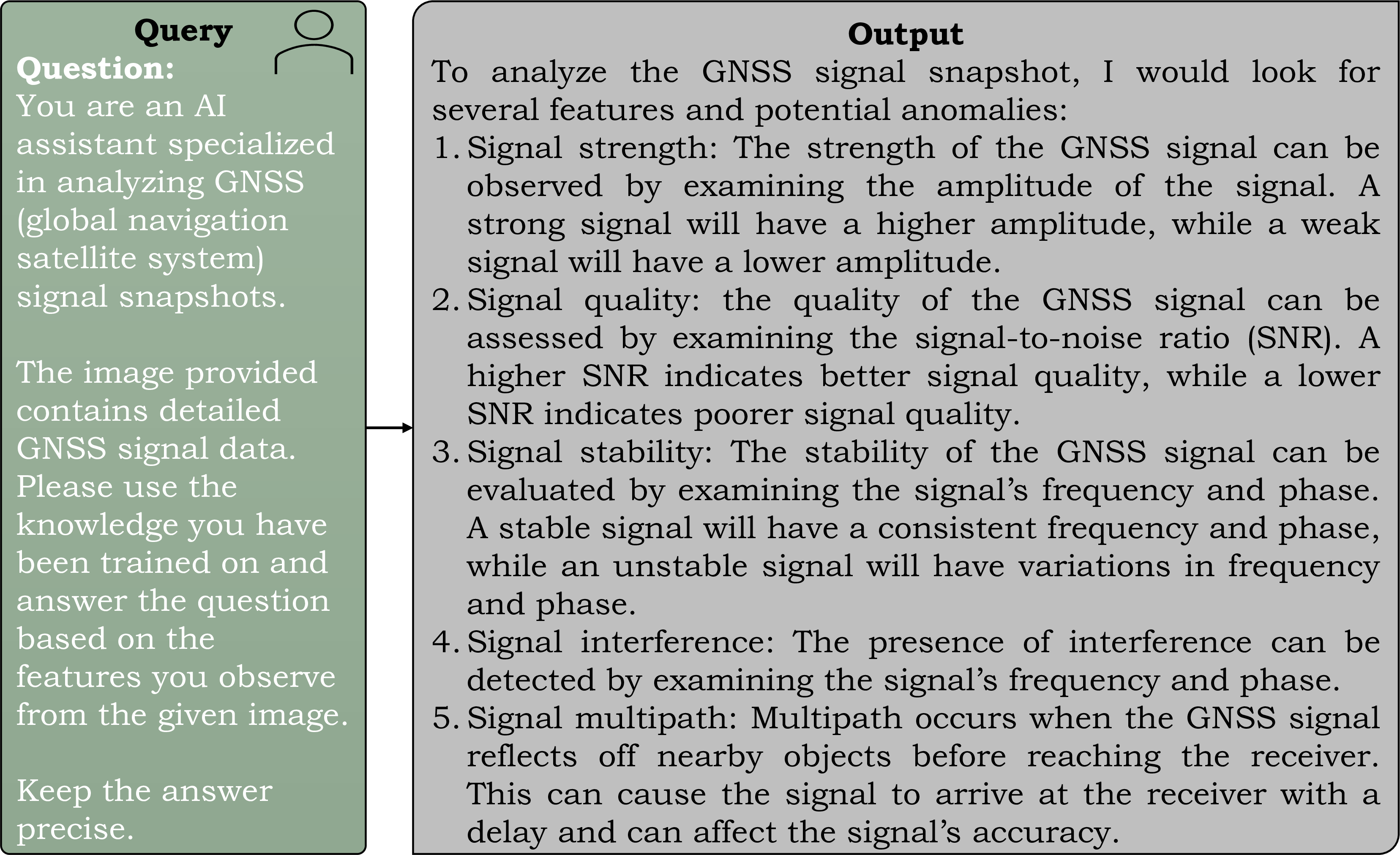

Figure 8: General user query.

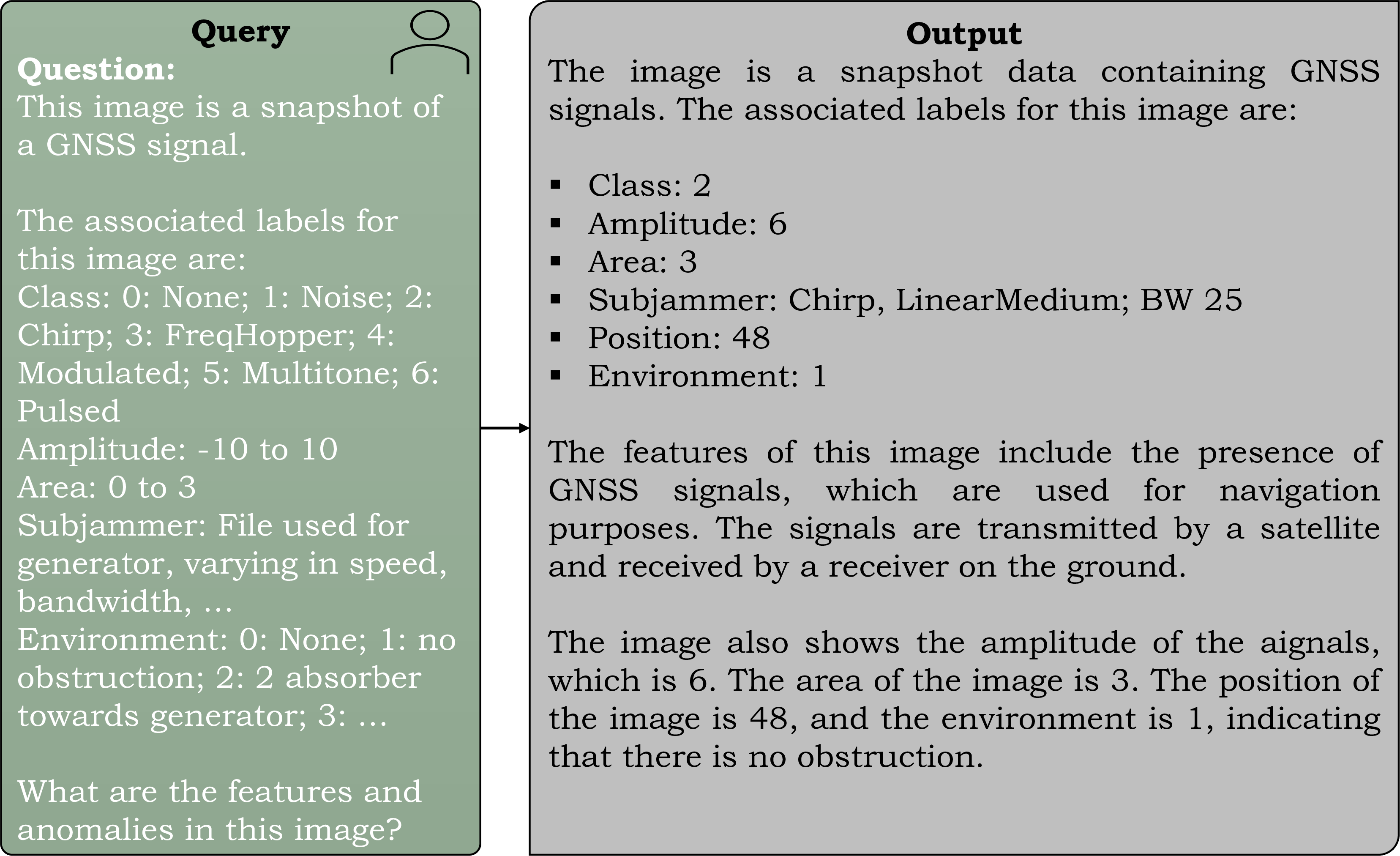

Figure 9: Signal information with general user query.

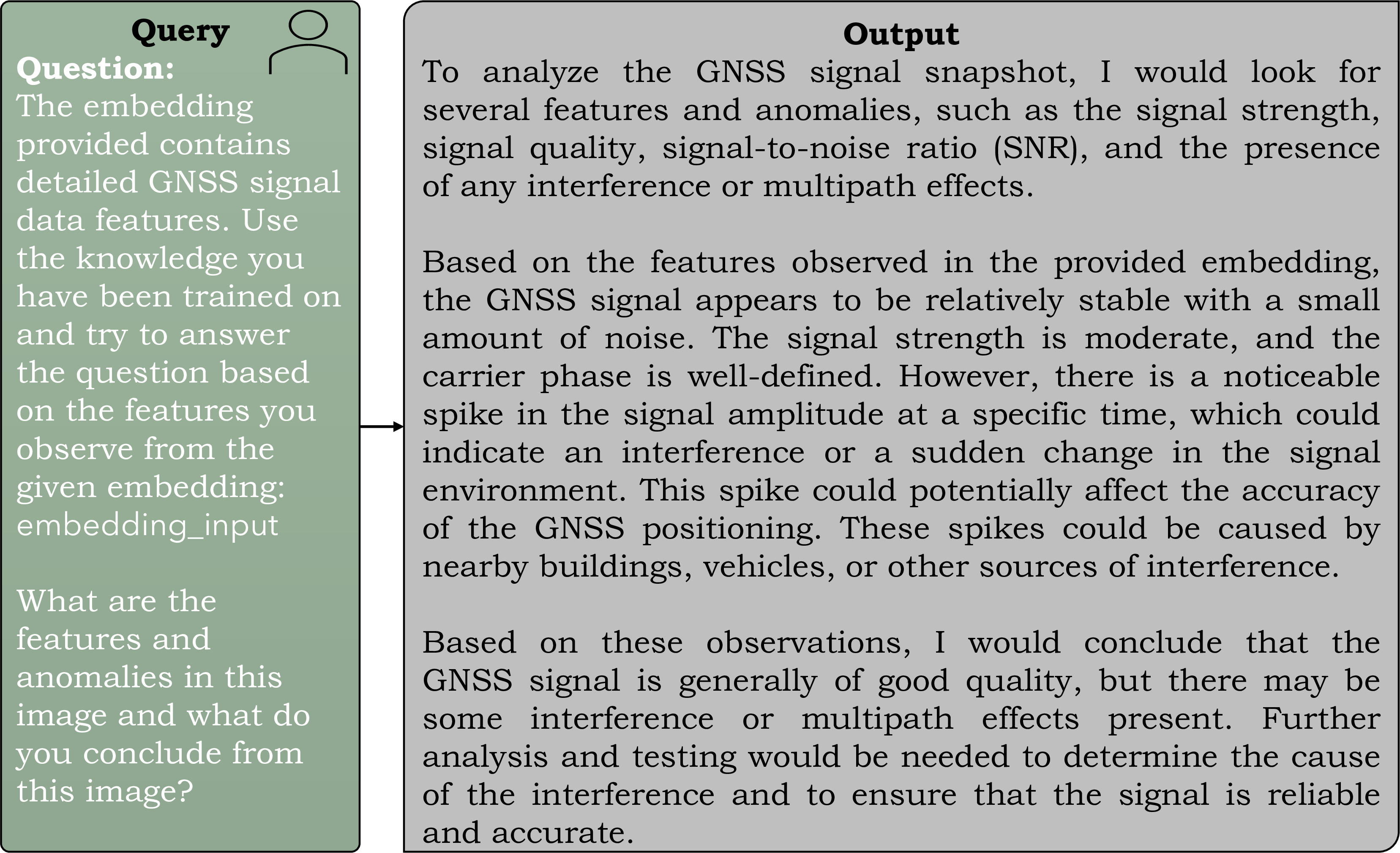

Figure 10: Signal information with detailed user query.

Figure 11: General user query with interpretation.

Prompt Engineering. Evaluating predictions of language models is challenging because their outputs are inherently descriptive, even for tasks like classification, which makes it harder to align predictions with rigid evaluation metrics. In general, the analysis suggests that the model can interpret the image dimensions without additional information about the GNSS signal, as demonstrated by the following response: “The size of the snapshot is 32 times 1024, with 32 being the length of the snapshot and 1024 being the number of channels.” The channel count is correct, whereas the length is slightly underestimated (the actual snapshot size is \(1,024 \times 34\)). When an example of chirp interference is provided (see Figure 6), the model accurately describes the snapshot, stating: “The signal appears to be a chirp signal, which is a type of modulated signal used in GNSS systems and are characterized by a linear frequency sweep, which means that the frequency of the signal increases or decreases linearly over time.” Figures 8 to 11 present four user queries with varying levels of detail and their corresponding outputs. In Figure 8, where the prompt lacks in-context learning, the model’s output is general, describing potential features of possible anomalies without interpreting the actual image input. With in-context learning (as shown in Figures 9, 10, and 11), the model interprets the given image input more accurately and provides a detailed description. In Figure 9, when all possible interference characteristics are provided together with an embedding input, the model successfully classifies the interference and offers detailed characteristics, such as amplitude and bandwidth, while correctly recognizing that the image contains no multipath effects. In Figure 10, the user query is modified to instruct the model to utilize the knowledge of the CLIP model. The resulting output is more descriptive, yet still correctly identifies the interference characteristics. However, when all possible label information is removed (as seen in Figure 11), the model describes the image input and its anomalies, noting, for instance, “with a small amount of noise” and “there is a noticeable spike in the signal amplitude”.

Hyperparameter Evaluation. We next evaluate the impact of the LLaVA hyperparameters on the model’s output. The temperature parameter (ranging from 0 to 1) rescales the probabilities of the next token, thereby controlling the randomness and creativity of the model’s predictions. As the temperature decreases, the model’s output becomes more deterministic and repetitive. We observe that with values between 0.6 and 1.0, the model generates more diverse and potentially creative outputs. The \(\text{top}_{\text{k}}\) parameter, where \(1 < \text{top}_{\text{k}} < 100\), restricts sampling to the k most probable tokens, from which the next token is drawn according to their respective probabilities. A \(\text{top}_{\text{k}}\) value of 100 results in more explanatory but less accurate responses (as illustrated in Figure 8). In contrast, \(\text{top}_{\text{k}} = 40\) yields a more accurate model output.
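The interplay of the two parameters can be sketched as follows. This hypothetical NumPy function keeps only the \(\text{top}_{\text{k}}\) highest-scoring tokens, divides their logits by the temperature, and samples from the resulting softmax; a temperature of 0 is treated as greedy decoding. It illustrates the standard technique rather than LLaVA's internal implementation; in Hugging Face transformers, comparable behaviour is typically requested through `generate(..., do_sample=True, temperature=..., top_k=..., max_new_tokens=500)`.

```python
import numpy as np


def sample_next_token(logits, temperature=1.0, top_k=40, rng=None):
    """Temperature-scaled top-k sampling over a vector of next-token logits."""
    rng = np.random.default_rng() if rng is None else rng
    logits = np.asarray(logits, dtype=np.float64)
    if temperature <= 0:
        return int(np.argmax(logits))  # temperature 0: deterministic greedy choice
    k = min(top_k, logits.size)
    top = np.argpartition(logits, -k)[-k:]  # indices of the k largest logits
    scaled = logits[top] / temperature  # lower temperature sharpens the distribution
    probs = np.exp(scaled - scaled.max())
    probs /= probs.sum()
    return int(rng.choice(top, p=probs))


logits = [0.1, 2.0, 1.5, -1.0]
rng = np.random.default_rng(0)
draws = [sample_next_token(logits, temperature=0.8, top_k=2, rng=rng) for _ in range(200)]
print(sorted(set(draws)))  # only the two most likely tokens (indices 1 and 2) can appear
```

A small \(\text{top}_{\text{k}}\) bounds how far sampling can stray from the most likely tokens, whereas the temperature controls how evenly probability is spread among the tokens that remain.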

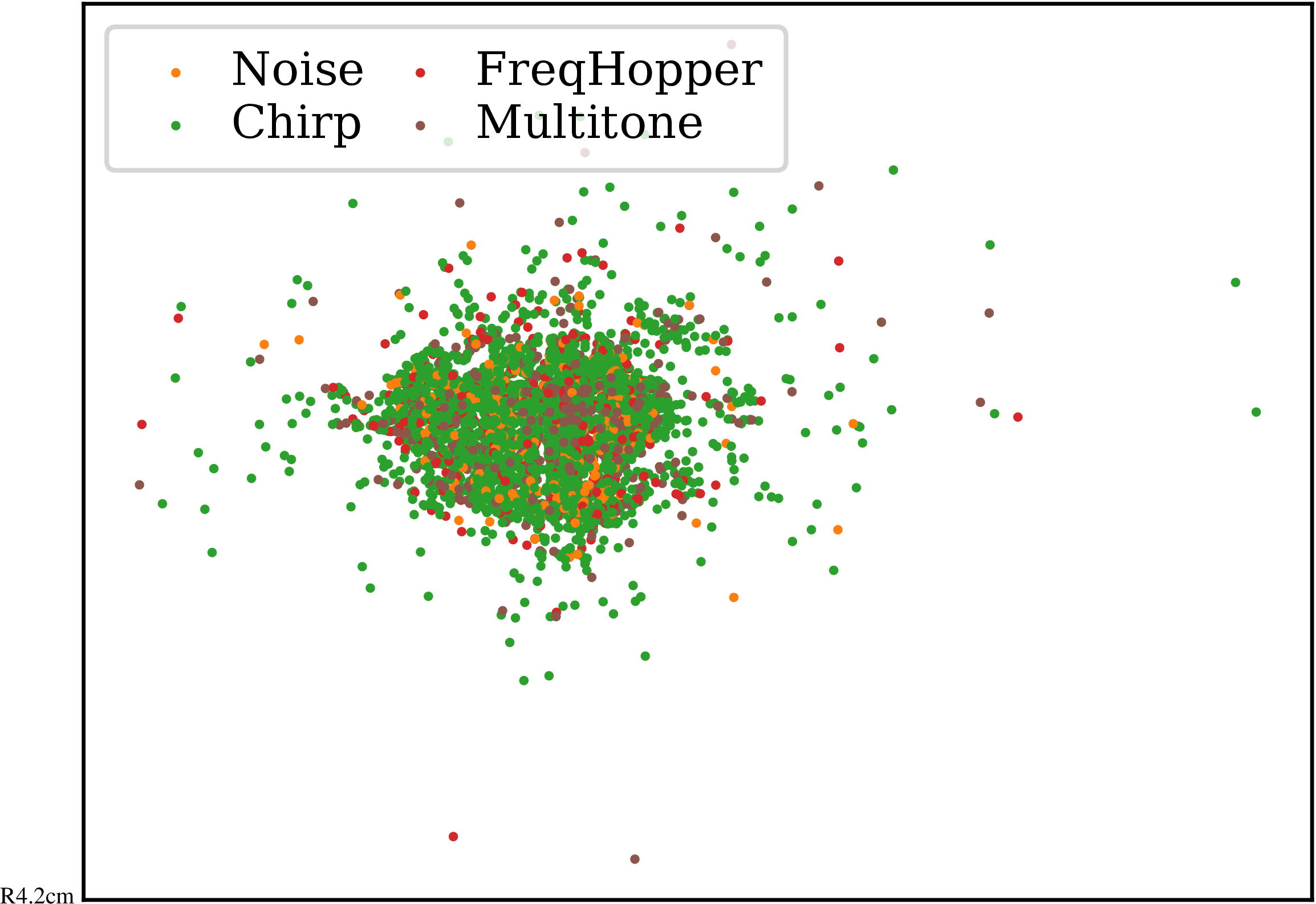

Figure 12: Embeddings of four classes using t-SNE [45].

Embedding Analysis. Figure 12 illustrates the feature embeddings stored in the vector store for four different labels; these embeddings are produced by the vision encoder, i.e., the CLIP model, with an output size of 512. We utilize t-distributed stochastic neighbor embedding (t-SNE) [45] with a perplexity of 30, an initial momentum of 0.5, and a final momentum of 0.8. The classes are difficult to distinguish: no distinct clusters form, and the scatter plots overlap. Nevertheless, the LLM is still capable of accurately interpreting the embeddings. This observation motivates fine-tuning the model in future work to achieve improved feature embeddings.
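A complementary, quantitative check of the overlap seen in Figure 12 (not part of the reported evaluation) is a leave-one-out k-nearest-neighbour label agreement computed directly in the 512-dimensional embedding space rather than in the 2-D t-SNE projection. Because the pipeline retrieves context by similarity search, this score also approximates how often retrieved neighbours share the query's label; for four balanced classes, chance level is about 0.25. The sketch below uses cosine similarity and hypothetical toy data.

```python
import numpy as np


def knn_label_agreement(embeddings, labels, k=5):
    """Mean fraction of each sample's k nearest neighbours (cosine) sharing its label."""
    x = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    sim = x @ x.T
    np.fill_diagonal(sim, -np.inf)  # leave-one-out: a sample is not its own neighbour
    neighbours = np.argsort(-sim, axis=1)[:, :k]
    labels = np.asarray(labels)
    return float((labels[neighbours] == labels[:, None]).mean())


# Toy check: two tight, well-separated clusters should give perfect agreement.
rng = np.random.default_rng(0)
cluster_a = np.ones(16) + 0.05 * rng.normal(size=(10, 16))
cluster_b = -np.ones(16) + 0.05 * rng.normal(size=(10, 16))
emb = np.vstack([cluster_a, cluster_b])
lab = [0] * 10 + [1] * 10
print(knn_label_agreement(emb, lab, k=3))  # 1.0 for perfectly separated classes
```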

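Table 1: Multi-task benchmark of vision models on the snapshot dataset. Interference type and subjammer type are classification accuracies (%); power and bandwidth are regression errors (lower is better).

| Model | Intf. Type (%) | Subjammer (%) | Power (error) | Bandwidth (error) |
|---|---|---|---|---|
| ResNet18 | 81 | 47 | 0.3720 | 0.1993 |
| BEiT | 99 | 80 | 0.0461 | 0.0217 |
| DeiT | 76 | 26 | 0.4252 | 0.3948 |
| Swin | 99 | 75 | 0.1071 | 0.0259 |
| CLIP | 81 | 34 | 0.3352 | 0.2742 |
| ViT | 95 | 67 | 0.1630 | 0.0862 |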

Benchmark of Vision Models. Table 1 compares state-of-the-art vision models on the snapshot dataset for a multi-task problem encompassing interference classification, subjammer type identification, signal-to-noise ratio estimation, and bandwidth prediction. The models included in the comparison are ResNet18 [18], BEiT [46], DeiT [47], the Swin Transformer [48], CLIP [39], and ViT [49]. For GNSS interference detection methods, see [27]. Notably, BEiT, Swin, and ViT achieve substantially better results than the ResNet18 baseline across all four tasks. Heublein et al. [27] achieved an accuracy of 96.15% on the independent single-task test dataset using a ResNet18 model. In comparison, our LLM-based pipeline surpasses this state-of-the-art result, attaining an accuracy of 96.87%. In addition, our proposed method leveraging LLaVA allows these results to be interpreted and analyzed in natural language.

Time. The inference time per GNSS snapshot is less than \(50\,\text{ms}\), making it suitable for real-time applications. However, the primary latency arises during the initial loading phase of the vision encoder and the LLaVA preprocessor. Once these are loaded, per-snapshot inference remains efficient.

6 Conclusion

We proposed a pipeline for GNSS interference monitoring, integrating the vision encoder CLIP, the vector store FAISS, and the language model LLaVA. The model’s predictions are evaluated based on user queries consisting of multimodal image-text inputs. Specifically, the image input is a GNSS snapshot containing interference, and the accompanying text is a question designed to identify the snapshot’s characteristics. Through the evaluation of task instruction prompting, we demonstrated that providing detailed contextual information within the query leads to more accurate and comprehensive model outputs. Additionally, by employing in-context learning, we showed that a sequence of related examples is essential for the model to generalize effectively from the provided context. In conclusion, our proposed framework enables non-expert users to effectively evaluate GNSS snapshots.

In future work, we will incorporate retrieval-based prompting, a method that involves selecting prompts or context using retrieval techniques. In this approach, the model retrieves relevant prompts or context from a prompt pool or an external knowledge base to guide its generation or decision-making process. This can be formally defined as \(\mathcal{C} = \mathcal{R}(\mathbf{S}_v, t)\), where \(\mathcal{R}\) represents the retrieval method that identifies pertinent prompts or context based on the image \(\mathbf{S}_v\) and text \(t\) inputs. The external knowledge base may include data from various experts who have manually labeled and described GNSS samples with interferences. The retrieved context \(\mathcal{C}\) is subsequently used to guide the model’s generation or decision-making process. It is important to note that the retrieval method \(\mathcal{R}\) can vary depending on the specific approach and the available prompt pool or knowledge base. This method allows the model to leverage existing information, thereby enhancing its performance by incorporating relevant prompts or context during the generation process.
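The retrieval step \(\mathcal{C} = \mathcal{R}(\mathbf{S}_v, t)\) could, for example, be realized as a similarity search over an expert-annotated knowledge base. The hypothetical sketch below ranks stored entries by cosine similarity to the query image embedding, optionally blends in a text-embedding similarity with an assumed weight `alpha`, and returns the top-k expert descriptions as the context \(\mathcal{C}\). The weighting scheme and all names are illustrative assumptions, not a design that has been evaluated.

```python
import numpy as np


def cosine_scores(query, matrix):
    # Cosine similarity between one query vector and every row of `matrix`.
    q = query / np.linalg.norm(query)
    m = matrix / np.linalg.norm(matrix, axis=1, keepdims=True)
    return m @ q


def retrieve_context(img_emb, kb_img_embs, kb_descriptions, k=3,
                     txt_emb=None, kb_txt_embs=None, alpha=0.5):
    """Hypothetical R(S_v, t): return the k expert descriptions most similar to the query."""
    scores = cosine_scores(img_emb, kb_img_embs)
    if txt_emb is not None and kb_txt_embs is not None:
        # Blend image and text similarity; alpha is an assumed, untuned weight.
        scores = alpha * scores + (1 - alpha) * cosine_scores(txt_emb, kb_txt_embs)
    best = np.argsort(-scores)[:k]
    return [kb_descriptions[i] for i in best]


# Toy knowledge base of five entries with placeholder expert descriptions.
rng = np.random.default_rng(1)
kb = rng.normal(size=(5, 512))
notes = [f"expert description {i}" for i in range(5)]
print(retrieve_context(kb[3] + 0.01 * rng.normal(size=512), kb, notes, k=2))
```

The returned list can then be passed as the context \(\mathcal{C}\) to the prompt function \(\mathcal{H}(\mathcal{C}, \mathbf{S}_v, t)\) defined for in-context learning.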

Footnotes

1. This work has been carried out within the DARCII project, funding code 50NA2401, sponsored by the German Federal Ministry for Economic Affairs and Climate Action (BMWK) and supported by the German Aerospace Center (DLR), the Bundesnetzagentur (BNetzA), and the Federal Agency for Cartography and Geodesy (BKG).

2. CLIP encoder: https://huggingface.co/zer0int/CLIP-GmP-ViT-L-14

3. LLaVA model: https://huggingface.co/llava-hf/llava-v1.6-mistral-7b-hf

4. All experiments were conducted using Nvidia Tesla V100-SXM2 GPUs with 32 GB VRAM, alongside Core Xeon CPUs and 192 GB RAM.