Quantum-enhanced causal discovery for a small number of samples

January 09, 2025

Abstract

The discovery of causal relationships from observed data has attracted significant interest from disciplines such as economics, social sciences, epidemiology, and biology. In practical applications, considerable knowledge of the underlying systems is often unavailable, and real data are often associated with nonlinear causal structures, which make the direct use of most conventional causality analysis methods difficult. This study proposes a novel quantum Peter-Clark (qPC) algorithm for causal discovery that does not assume any underlying model structures. Based on the independence conditional tests in a class of reproducing kernel Hilbert spaces characterized by quantum circuits, the proposed qPC algorithm can explore causal relationships from the observed data drawn from arbitrary distributions. We conducted extensive and systematic experiments on fundamental graph parts of causal structures, demonstrating that the qPC algorithm exhibits a significantly better performance, particularly with smaller sample sizes compared to its classical counterpart. Furthermore, we proposed a novel optimization approach based on Kernel Target Alignment (KTA) for determining hyperparameters of quantum kernels. This method effectively reduced the risk of false positives in causal discovery, enabling more reliable inference. Our theoretical and experimental results demonstrate that the proposed quantum algorithm can empower classical algorithms for robust and accurate inference in causal discovery, supporting them in regimes where classical algorithms typically fail. Additionally, the effectiveness of this method was validated using the Boston Housing dataset as a real-world application. These findings demonstrate the new potential of quantum circuit-based causal discovery methods in addressing practical challenges, particularly in small-sample scenarios where traditional approaches have shown limitations.

1 Introduction↩︎

Deciphering causal relationships among observed variables is a crucial problem in the social and natural sciences. Historically, interventions or randomized experiments have been employed as standard approaches to evaluate causality among observed variables [1]. For example, randomized controlled trials have been commonly used in clinical research to assess the potential effects of drugs. However, conducting such interventions or randomized experiments is often difficult because of ethical constraints and high costs. Alternatively, causal discovery offers effective methods for inferring causal relationships among variables from passively observed data beyond correlation analysis [2]–[6]. The Peter–Clark (PC) algorithm [2], a widely accepted algorithm for causal discovery, yields an equivalence class of directed acyclic graphs (DAGs) that captures causal relationships (see Fig. 1 (a) for an overview of the PC algorithm). The PC algorithm does not assume any specific statistical models or data distributions, unlike the other methods, including the linear non-Gaussian acyclic model (LiNGAM) [7], [8], NOTEARs [9], the additive noise model [10], the post nonlinear causal model [11], and the GES algorithm [12]. Thus, applications of the PC algorithm and its variants have elucidated causal relationships from various observed data spanning from natural science to engineering [13]–[16]. In the PC algorithm, kernel methods can be used for conditional independent tests, a process known as kernel-based conditional independence test (KCIT) [17], [18]. This approach enables applications to various types of data, including those characterized by nonlinearity and high dimensionality [14], [18], [19].

Although the PC algorithm using KCIT can be applied to both linear and nonlinear data without any assumption of the underlying models, its performance depends on the choice of kernels. Empirically, kernels are often chosen from representative classes such as Gaussian, polynomial, and linear kernels [20]. Alternatively, quantum models that embed data in an associated RKHS have been developed recently, providing a class of algorithms called quantum kernel methods [21]–[25] (Fig. 1 (b)). Among them, the kernel-based LiNGAM extended with quantum kernels [25] demonstrates potential advantages over classical methods, such as accurate inference with small sample sizes [26], as suggested in supervised learning contexts [27]. However, the quantum LiNGAM (qLiNGAM) [25] assumes linear causal relationships, which limits its applicability to real-world problems.

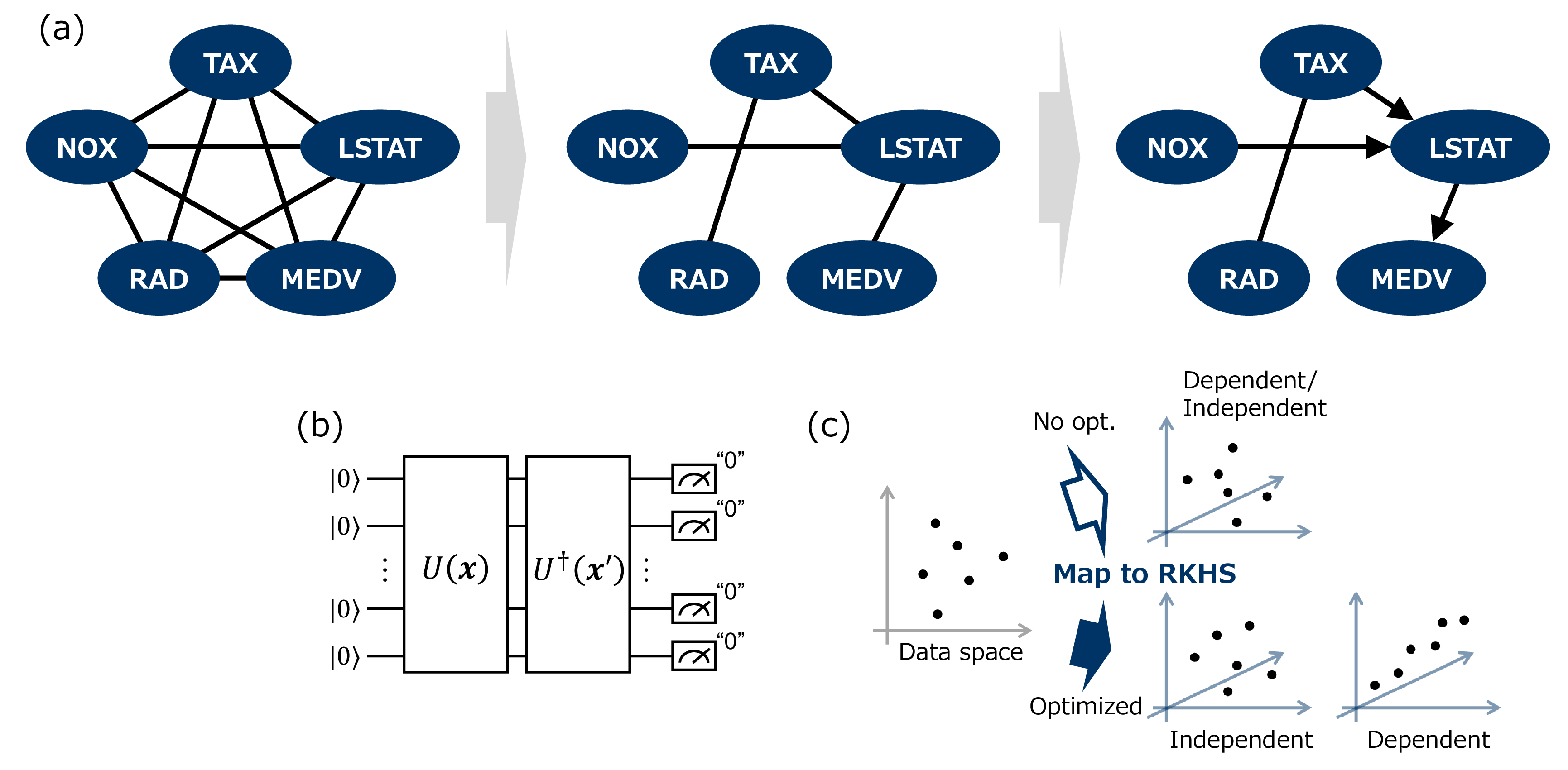

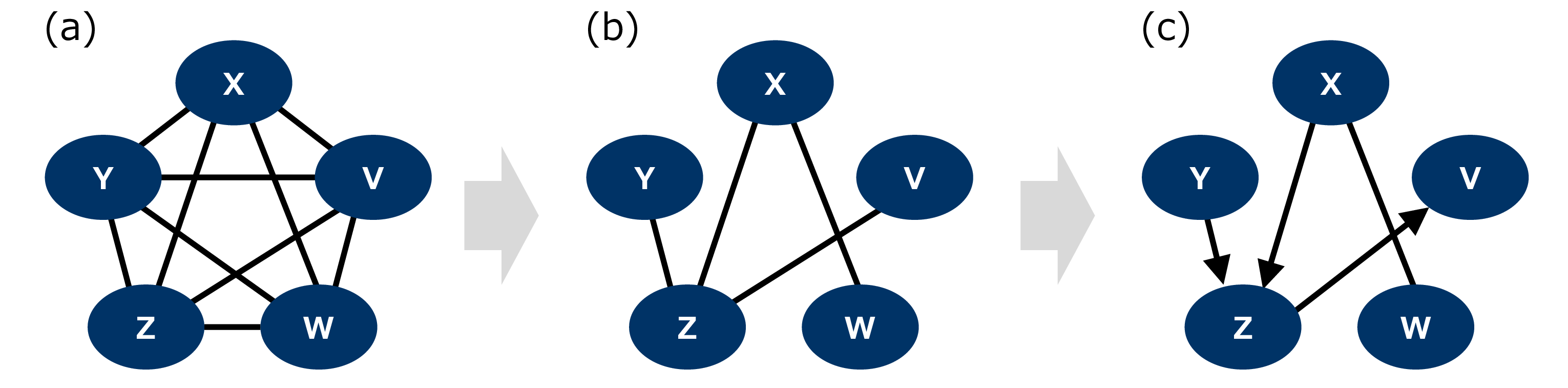

Figure 1: Schematic of the proposed quantum Peter–Clerk (qPC) algorithm and our optimization method based on kernel target alignment (KTA). (a) Overview of the qPC algorithm. Left: The graph representation of an initial input. The qPC algorithm identifies causal relationships among random variables and represents them as complete partially directed acyclic graphs (CPDAGs). The qPC algorithm begins with a complete undirected graph, where each node represents a random variable, and each edge represents the correlation between two random variables. The middle: The graph of the (conditional) independence among the random variables. The algorithm prunes redundant edges by performing the (conditional) independence test between two random variables conditioned on other random variables. Note that when performing the conditional independence test between any two random variables, the set of random variables used for conditioning is recorded. Right: The resulted causal graph. The edges can be oriented following the rules (the details are described in Appendix 6). (b) Quantum circuit for a kernel. We defined the kernel, \(k(x, y)\), for the KCIT as the inner product of quantum states \(U_{\theta}(\mathbf{x})\ket{0}^{\otimes n}\) and \(U_{\theta}(\mathbf{x'})\ket{0}^{\otimes n}\) generated from the parameterized unitary \(U_{\theta}\). (c) Overview of kernel optimization for independence test in causal discovery. If the kernel that is inappropriate and not optimized is used for independence test, it fails to detect the dependent or independent relation between variables accurately. The optimized kernel can disentangle complex relations between variables, which allows for the accurate discrimination of dependent or independent relation in statistical tests.

While quantum causal inference for small sample data presents a promising alternative, it still faces challenges. First, existing quantum models have failed to address nonlinear causal relationships. Second, similar to classical kernels, the performance of quantum kernel methods depends critically on the choice of quantum circuits used [28], and systematic approaches for selecting appropriate quantum kernels in causal discovery are still lacking. In most previous studies that used classical methods, kernel parameters such as the median strategy were often selected heuristically. Moreover, no established methods exist for setting quantum circuit hyperparameters. Finally, it remains unclear why causal inference using quantum kernels outperforms classical methods for small sample data.

To address these challenges, we propose the quantum PC (qPC) algorithm by leveraging the quantum kernel in independence tests of the PC algorithm (Fig. 1). We then propose a novel method based on kernel target alignment (KTA) to determine the appropriate hyperparameters in quantum kernels for causal discovery. The proposed method enables the setting of kernels with objective criteria and eliminates arbitrariness in kernel method applications. Furthermore, we discuss how the qPC algorithm can enhance inference accuracy in small sample sizes. Using KTA, we demonstrate that the quantum models we used can effectively learn to produce kernels with high independence detection capabilities. To demonstrate that our optimization method based on the KTA facilitates accurate casual discovery by the qPC algorithm through the selection of appropriate kernels, we used simulations based on three-node causal graphs (Fig. 2 (a)), which are the fundamental blocks of general causal graphs.

Our first simulation, motivated by the superiority of quantum kernels in small sample regimes, employs quantum circuit models to generate data from which causal discovery methods infer the underlying causal relations. Although the data from quantum models can highlight the characteristics of the qPC algorithm, it is desirable to use classical data to estimate the typical performance of the quantum method using the proposed kernel choice process in practical applications. Thus, we assessed the situation in which we observed data drawn from classical systems. The optimization method based on the KTA bridges the gap between the qPC algorithm and realistic data. Using the kernel choice method, we demonstrate the applicability of the qPC algorithm to real and synthetic data. The real data is on the Boston housing price [29]. The results obtained by the qPC algorithm provide insights unavailable through classical methods, highlighting the usefulness of the quantum method, particularly for small datasets.

2 Results↩︎

2.1 Overview of the qPC algorithm↩︎

We propose the qPC algorithm for causal discovery that employs quantum kernel methods [21], which embed classical data into quantum states (Fig. 1 (c)). The qPC algorithm is an extension of the PC algorithm for causal inference. It utilizes a conditional independence test implemented via the KCIT with quantum kernels composed of data-embedded quantum states as a natural extension of the Gaussian kernel. The PC algorithm [2], [30] offers CPDAGs that capture the causal relationships between variables from their observed data (Appendix 6). This algorithm is a nonparametric method that does not consider underlying statistical models. The KCIT is introduced because of its powerful capacity to infer causality in data with nonlinearity and high dimensionality.

The qPC algorithm is twice the original PC algorithm: discrimination of unconditional/conditional independence and orientation of causality relations (see the overview of the PC algorithm in Appendix 6). The qPC algorithm outputs CPDAGs, which capture the causal relations among the observed variables with directed and undirected edges between them (Fig. 1 (a)). It relies on the KCIT framework (see Appendix 5 for the details of the KCIT), where the original data are embedded into feature spaces to detect independence (Fig. 1 (b)). Appropriate embedding in KCIT facilitates the disentangling of complex nonlinear relations in the original data space, which often leads to accurate results in statistical hypothesis tests, especially when dealing with high-dimensional or nonlinear data [17], [18]. The qPC algorithm leverages quantum kernels associated with the quantum state to embed data into the RKHS defined by quantum circuits. Quantum kernels are defined by \(k_Q(\mathbf{x}, \mathbf{x}')=\mathrm{Tr}[\rho(\mathbf{x})\rho(\mathbf{x}')]\), where input \(\mathbf{x}\) is encoded into the quantum circuits generating state \(\rho(\mathbf{x})\). It defines a method for encoding data into quantum circuits. Our proposed quantum circuit has hyperparameters analogous to the widths of the Gaussian kernels.

2.2 Detection of fundamental causal graph structures↩︎

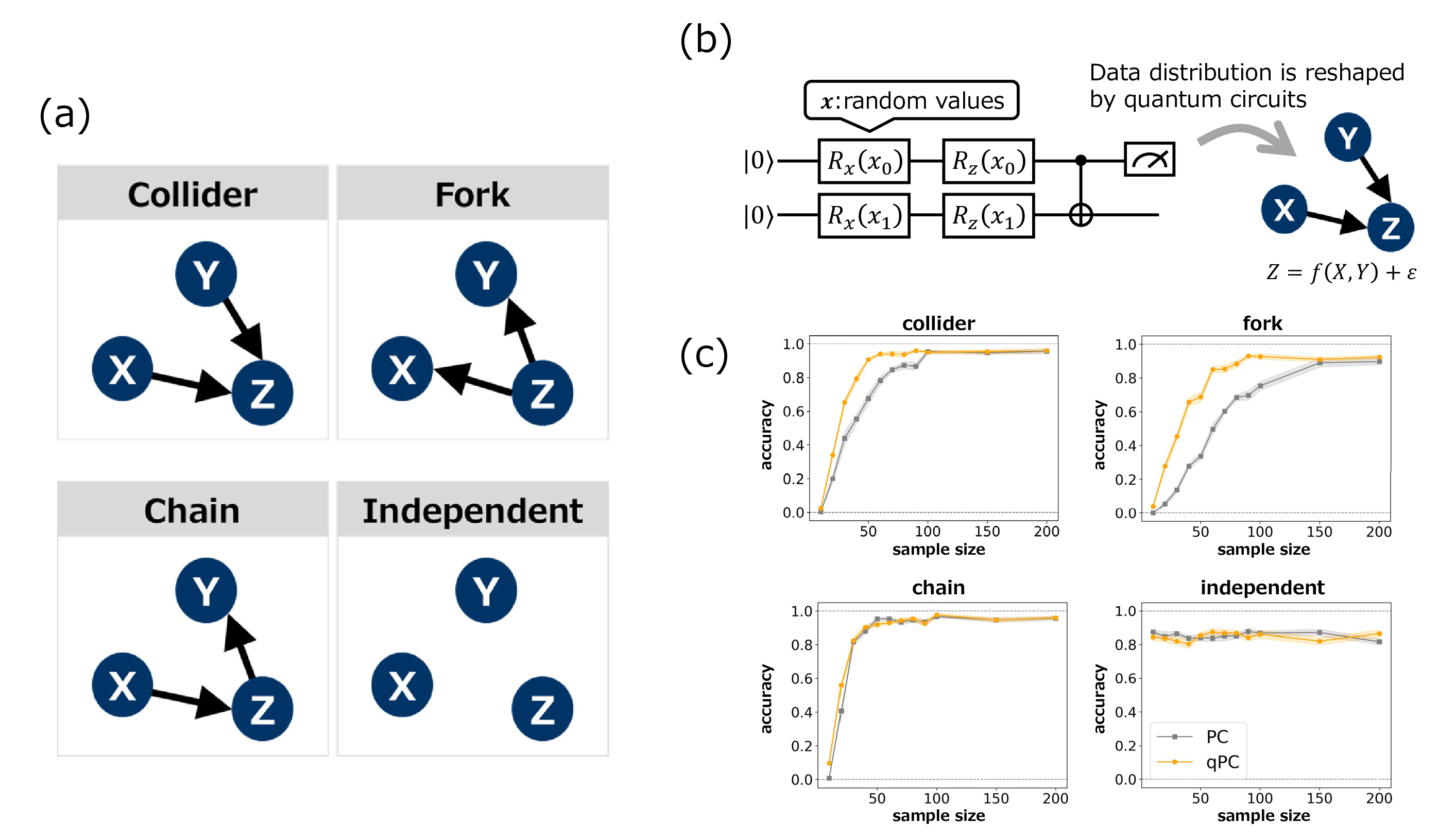

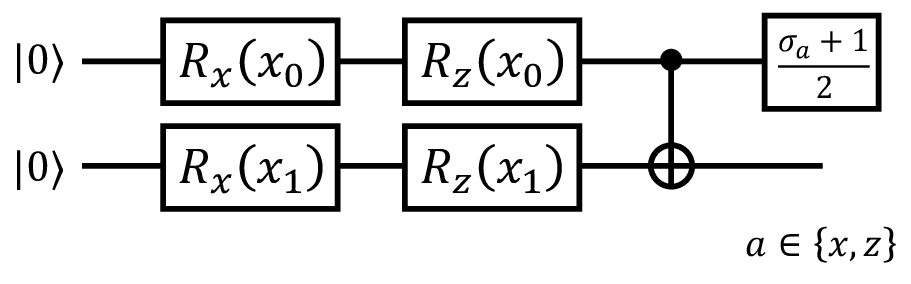

To demonstrate how the qPC algorithm can effectively retrieve the underlying causal structures, we applied it to synthetic data from fundamental causal relations with three nodes, collider, fork, chain, and independent structures (Fig. 2 (a)) [1]. These elements capture any local part of the general causal graphs, and thus provide a summarized assessment of causal discovery methods. In particular, we assume that source random variables are generated through observations in quantum circuits with random variable inputs and that the other nodes receive their inputs through a relation defined by the function \(f\) and noise, such as \(Z =f(X, Y)+\epsilon\) (Fig. 2 (b)). Specifically, random values \(\mathbf{x}_i\) sampled from Gaussian distributions were used as inputs to the data embedder of the quantum circuit, and we measured observables \(M_a\), that is, \(M_a=\mathrm{Tr}[O_a\rho(\mathbf{x}_i)]\), \(O_a=(\sigma_a +1)/2\), \(a \in \{x, z\}\). We then prepared a dataset for causal discovery using an algebraic operation of the measured values. Consequently, the data distribution is far from a typical probability distribution such as a Gaussian distribution (see Fig. 2 (b)). This setting aims to highlight that under such data generation processes, the quantum kernel can be typically superior to classical kernels in accurately reproducing the underlying causal structures. Because the qPC or PC algorithm yields CPDAGs, we evaluate the accuracy by considering Markov equivalence; the fork and chain should not be distinguished in this case.

Figure 2: Characteristic performance of the qPC algorithm. (a) Basic causal graphs under three variables with their corresponding dependent and independent relations. (b) Data generation with quantum models. The source variables were drawn from quantum circuits with random-variable inputs and the other variables were given by a causal structure. (c) Accuracy of the PC and qPC algorithms for the four causal patterns with different sample sizes. Shaded regions represent the standard errors over 10 different simulations.

Comparisons of the performances of the classical PC and qPC algorithms for causal junctions are shown in Fig. 2 (c). For chain or independent structures, we observe no significant differences between the classical and quantum methods. However, for the collider or fork, the quantum kernel outperformed the classical kernel for small sample sizes. The results of the performance comparison may be questionable because the fork and chain are Markov equivalent. However, because random variable \(Z\) constructed from the quantum circuit occupies different positions in the fork and chain, the difficulty of the independence and conditional independence tests in the PC algorithm varies between the two cases. The superior performance of the qPC algorithm may have resulted from the inductive bias of the models. The data generation process is based on the observation of quantum circuits, which can be related to the quantum kernels used. In the following sections, we investigate more general cases using datasets unrelated to quantum models.

2.3 Optimization of quantum circuits via KTA↩︎

In the previous subsection, we experimentally confirmed that quantum kernels with small sample sizes are effective for causal discovery. We used artificial data generated from quantum circuits that are considered suitable for quantum kernels. However, naïve quantum kernels are not suitable for classical data in general. Specifically, the qPC algorithm has one main challenge: in contrast to the classical Gaussian kernel, which has several established guidelines for determining the kernel hyperparameters, the quantum kernel method lacks a standardized approach for selecting its hyperparameters for inference [28]. Thus, we propose a systematic method for adjusting the hyperparameters in quantum circuits for datasets. To demonstrate the applicability of the qPC algorithm to a wide range of data, we compare the performance of the two methods using artificial datasets with classical settings.

Herein, we briefly explain an optimization method for determining the hyperparameters of quantum circuits for kernels based on the normalized Hilbert-Schmidt inner product (HSIP). Its expectation value is zero if and only if random variables \(X\) and \(Y\) are independent. This property allows for the use of HSIP as test statistics in statistical hypothesis tests [17], [18]. The hypothesis test should be improved by selecting a kernel that minimizes the HSIP for uncorrelated data samples while maximizing the HSIP for correlated data samples; in principle, HSIP approaches zero in the uncorrelated case and nonzero otherwise. The normalized HSIP 1 , which measures the distance between the feature vectors in which two data samples are embedded, is called KTA [31]. From the perspective of statistical hypothesis testing, KTA minimization for uncorrelated data reduces the false-positive (FP) risk, whereas KTA maximization for correlated data reduces the false-negatives (FN) risk. Thus, KTA minimization can be interpreted as enhancing the identifiability of two independent random variables, thereby reducing the likelihood of Type-I errors. In contrast, KTA maximization reduces the identifiability of dependent random variables, thereby lowering the likelihood of Type-II errors. Here, we focus on KTA minimization for uncorrelated data because the actual relationships behind the data are often unavailable, making it difficult to employ the KTA maximization strategy.

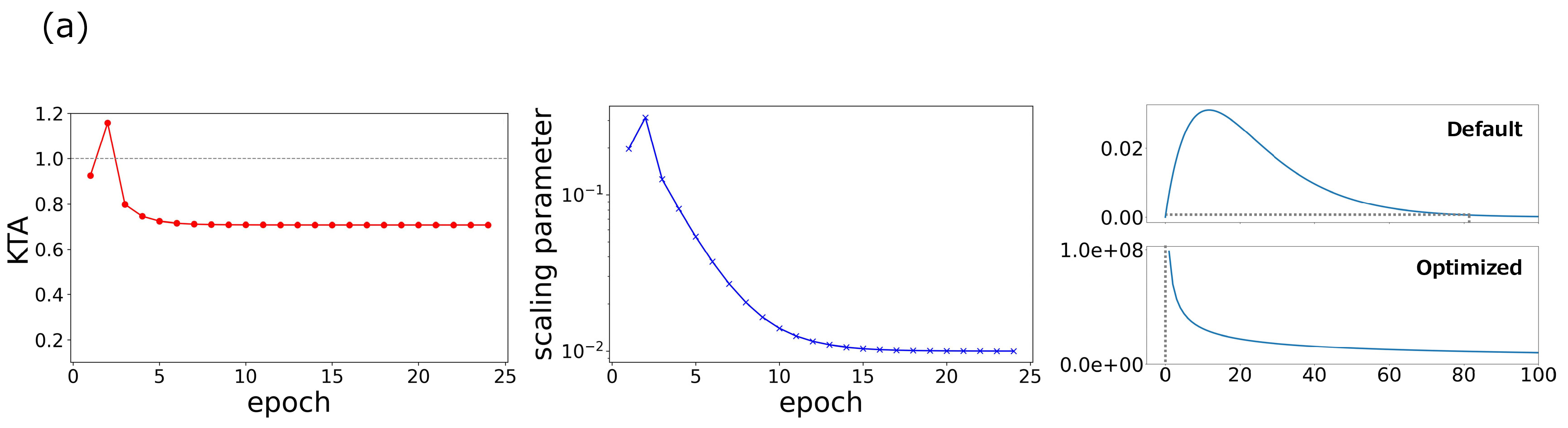

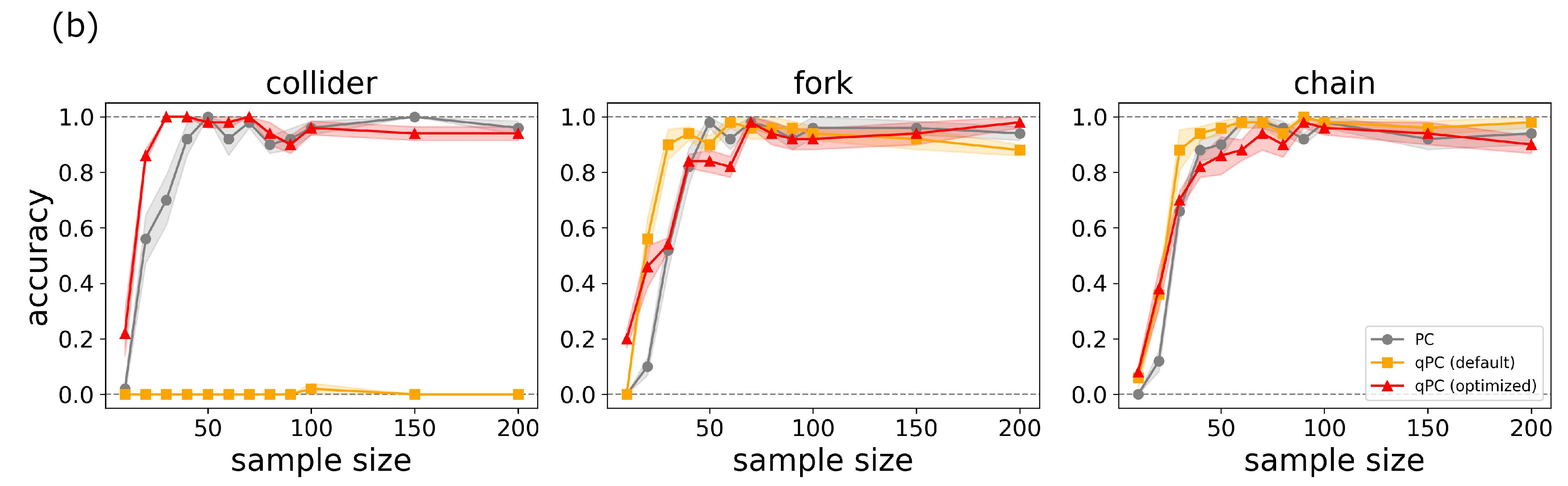

To evaluate the performance of the qPC algorithm using our optimization method, we conducted an experiment in which the data were drawn from a classical setting with the same three fundamental causal graphs as those in Fig. 2. Figure. 3 (a) shows the typical behaviors of the KTA and quantum scaling parameter during the optimization process and the difference in statistics between the default and optimized kernels. Through optimization, the KTA was minimized for the independent data, and correspondingly, the scaling parameter approached the optimal value. A comparison of the statistics indicates that the FP probability was significantly suppressed. Figure 3 (b) shows the accuracy over different sample sizes for three cases: the PC with Gaussian kernels of heuristic width choice and the qPC algorithms with quantum kernels of default and optimized scaling parameters. The qPC algorithm with the default scaling parameters collapses into the collider structure. However, the optimization of the scaling parameters drastically improved its performance. The qPC algorithm with the optimized parameters performed better than the PC algorithm in the small size regime. Figure. 3 (c) shows the ROC curves for three causal patterns with a sample size \(50\). This suggests that the qPC algorithm with the optimized scaling parameters can achieve the best performance if the level of significance is appropriately set. These results indicate that a reduction in the FP risk yields quantum kernels that surpass classical kernels even for classical datasets with small sample sizes.

Figure 3: Optimization of the hyperparameters in quantum circuits in the qPC algorithm. (a) Changes of the KTA and quantum scaling parameter during optimization, and the statistics before and after the optimization process. A typical example was chosen from the simulation in (b). (b) Accuracy of the PC and qPC with default and optimized hyperparameters with different sample sizes for the three junction patterns. (c) ROC curves obtained by the three methods for the junction patterns with sample size \(50\). Shaded regions represent the standard errors over 10 different simulations..

2.4 Application of the qPC algorithm to real-world data↩︎

Here, we demonstrate the application of the qPC algorithm and our optimization method to real-world data. We used the datasets on the Boston housing price [29]. In the optimization, we sought a suitable scaling parameter by minimizing the KTA for the independent distributions obtained by shuffling the original data.

The results of applying the classical PC and qPC algorithms to the Boston housing data are presented in Fig. 4. Panel (a) shows the marginal distributions for the selected variables, most of which deviate from Gaussian or other conventional distributions. Using the classical PC with KCIT for the full sample data (\(N=?\)), we obtained the CPDAG shown in Fig. 4 (b), which captures reasonable causal relations among the variables. However, the small sample size obscures the causal relations between them, and the PC algorithm failed to reconstruct the CPDAG under the same conditions, such as the level of significance, as shown in Fig. 4 (c). The qPC algorithm with the optimized scaling parameters is still capable of providing a more comprehensive estimate of causality, as shown in Fig. 4 (d), where it detected the potential causes of the price denoted as the MEDV node. The closeness between the results of the PC with full samples and those of the qPC with a small part of the whole sample set is consistent with our artificial data experiment.

Figure 4: Application to the Boston housing data. (a) Marginal distributions for the variables. (b) CPDAG obtained from the PC algorithm using the Gaussian kernel. The algorithm was executed for the full samples with \(N = 394\). (c) CPDAG obtained from the PC with a small part of the dataset with \(N=50\). (d) CPDAG obtained from the qPC using a quantum kernel with the same data as in (c). For all cases, the levels of significance were set as \(\alpha=0.01.\)

3 Discussion↩︎

We proposed the qPC algorithm for causal discovery by leveraging quantum circuits that generate the corresponding RKHS. Our simulations demonstrated that the qPC algorithm can surpass the classical method in reconstructing the underlying causal relations, particularly with a small number of samples. Furthermore, with no existing method for determining the hyperparameters of quantum kernels, we proposed a method for choosing quantum kernels adaptively for the data. In the proposed method for kernel choice, we employed the KTA to set quantum kernels suitable for causal discovery, thereby decreasing the FP risk for independent cases. We numerically demonstrated that the optimization method can improve the inference results for both synthetic and real data. Our experimental results indicate that even for small sizes, quantum kernels can facilitate accurate causal discovery. This finding indicate that quantum circuits can enhance the performance of existing causal discovery methods and widen the range of their applications in real-world problems.

Although our experiments on artificial and real data suggest the superiority of the qPC algorithm for causal discovery with small datasets compared to the classical PC algorithm, it could be further discussed to unveil the principle behind this phenomenon. For small sample datasets, we cannot apply the asymptotic theory of the test statistics shown in the KCIT, making it difficult to expect the independence test to perform as theoretically predicted. Therefore, optimization via KTA could improve the performance of the hypothesis test considerably. However, because such an improvement should be achievable with any kernel, it is reasonable to infer that the success of the quantum kernel with the dataset used is owing to its inductive bias in quantum models [32]. Although we demonstrated that the qPC algorithm exhibits high accuracy for data generated from quantum circuits, even with default hyperparameters, it fails to capture causal relations from classical data without adjusting the hyperparameters. Optimization raises the capacity of the qPC algorithm significantly, making it superior to classical heuristics. Investigating the properties of quantum kernels, for example, in terms of the eigenvalues of the kernel matrices, could provide insights into the underlying mechanisms. Moreover, the change in the properties of the RKHS associated with the quantum models through optimization and its effect on the independence tests could be studied.

The proposed optimization method based on the KTA increases the applicability of quantum methods. Our result in Fig. 3 connects the quantum method with realistic data. Remarkably, the optimal values of the scaling parameters obtained in our cases are highly compatible with previous results in a supervised learning setting [28]. This implies that there are parameter regions in which the computational capacity of the quantum kernels is maximized. Our results could also be used for building a procedure of heuristic parameter choice for quantum kernels, similar to that for Gaussian kernels. While we chose kernels by minimizing the KTA to decrease the FP probability in this study, other strategies for choosing kernels in independence tests or causal discovery exist. A study designed kernels for independence tests to maximize test power [33]–[35]. The main difference is that our method chooses kernels to reduce the probability of Type-I errors while their methods aim to decrease Type-II errors. Another study minimized mutual information [36], where ridge regression was assumed. In their method, the mutual information is calculated for the obtained causal structures.

Finally, we describe the promising extensions of this study. First, for simplicity, we assume that no hidden variables affect the causality of the visible variables. Such confounding factors may change the inferred causal structures. An extended version that incorporates their existence, the FCI algorithm, has been developed [37]. Our algorithm can be used for independence tests within the framework of the FCI algorithm. In addition, while we focus on static situations in which data are drawn from static distributions, causal discovery has been applied to real-world problems associated with dynamic systems. Our approach with quantum kernels can be utilized to analyze time-series data with straightforward modifications following the PCMCI algorithm [38], which expands the applicability of the qPC algorithms to real-world problems such as meteorology or financial engineering. In addition, it is possible to develop a more elaborate kernel choice, such as the multiple kernel method [39], where a combination of multiple kernels is employed, and the optimal solution is obtained via convex optimization. These developments will make the qPC algorithm more applicable to various real-world applications.

The present work showed that the quantum-inspired algorithm can enhance the accuracy of the causal discovery method, especially for a small number of samples. Our numerical investigation revealed that the quantum method reconstructed the causal fundamental structures more accurately from small datasets than the classical one. The introduction of KTA optimization allows us to evaluate optimal quantum kernels without using the underlying causal relations. While the KTA metric provides insights on what types of kernels yield accurate inference by reducing false positive ratio for independent data, it is not fully understood how quantum nature elevates the performance of classical methods. Furthermore, we primarily analyzed the linear cases of causal relations in numerical demonstrations as the initial assessment of the quantum algorithm. Future work on data with more complicated causal relations or various distributions could offer fundamental insights for practical applications.

4 Methods↩︎

4.1 Details of the quantum kernel-based conditional tests for the qPC algorithm↩︎

The KCIT [17], [18] is a hypothesis test for null hypothesis \(X \perp \!\!\!\! \perp Y \;| \;Z\) between random variables \(X\) and \(Y\) given \(Z\). It was developed as a conditional independence test by defining a simple statistic based on HSIP of two centralized conditional kernel matrices and deriving its asymptotic distribution under the null hypothesis (see Appendix 5 for details). Unconditional independence statistic \(T_{UI}\) is defined as \[\begin{align} T_{UI} := \frac{1}{n} {\rm Tr} \bigl[ \widetilde{\mathbf{K}}_X \widetilde{\mathbf{K}}_Y \bigr], \end{align}\] where \(\widetilde{\mathbf{K}}_X\) and \(\widetilde{\mathbf{K}}_Y\) are the centralized kernel matrices i.i.d. of size \(n\) for \(X\) and \(Y\). Under the null hypothesis that \(X\) and \(Y\) are statistically independent, it follows that the Gamma distribution \[\begin{align} p(t) = t^{k-1} \frac{e^{-t/\theta}}{\theta^k \Gamma (k)}, \end{align}\] where shape parameter \(k\) and scale parameter \(\theta\) are estimated by \[\begin{align} k &=& \frac{{\rm Tr} \bigr[ \widetilde{\mathbf{K}}_X \bigl]^2 {\rm Tr} \bigr[ \widetilde{\mathbf{K}}_Y \bigl]^2}{2 {\rm Tr} \bigr[ \widetilde{\mathbf{K}}_X^2 \bigl] {\rm Tr} \bigr[ \widetilde{\mathbf{K}}_Y^2 \bigl]}, \\ \theta &=& \frac{2 {\rm Tr} \bigr[ \widetilde{\mathbf{K}}_X^2 \bigl] {\rm Tr} \bigr[ \widetilde{\mathbf{K}}_Y^2 \bigl]}{n^2 {\rm Tr} \bigr[ \widetilde{\mathbf{K}}_X \bigl] {\rm Tr} \bigr[ \widetilde{\mathbf{K}}_Y \bigl]}. \end{align}\] The conditional independence statistic, \(T_{CI}\), is defined as \[\begin{align} T_{CI} := \frac{1}{n} \mathrm{Tr} \bigl[ \widetilde{\mathbf{K}}_{\ddot{\mathbf{X}} | \mathbf{Z}} \widetilde{\mathbf{K}}_{\mathbf{Y} | \mathbf{Z}} \bigr], \end{align}\] where \(\ddot{X} = (X, Z)\), \(\widetilde{\mathbf{K}}_{\ddot{X}|Z} = \mathbf{R}_Z \widetilde{\mathbf{K}}_{\ddot{X}} \mathbf{R}_Z\) and \(\mathbf{R}_Z = \mathbf{I} - \widetilde{\mathbf{K}}_Z (\widetilde{\mathbf{K}}_Z + \epsilon \mathbf{I})^{-1} = \epsilon (\widetilde{\mathbf{K}}_Z + \epsilon \mathbf{I})^{-1}\). We constructed \(\widetilde{\mathbf{K}}_{Y|Z}\) similarly. Although \(T_{CI}\) approximately follows the Gamma distribution under the null hypothesis, parameters \(k\) and \(\theta\) are described by a matrix defined by a \(3\)rd-order tensor based on the eigenvectors \(\widetilde{\mathbf{K}}_{\ddot{X}|Z}\) and \(\widetilde{\mathbf{K}}_{Y|Z}\).

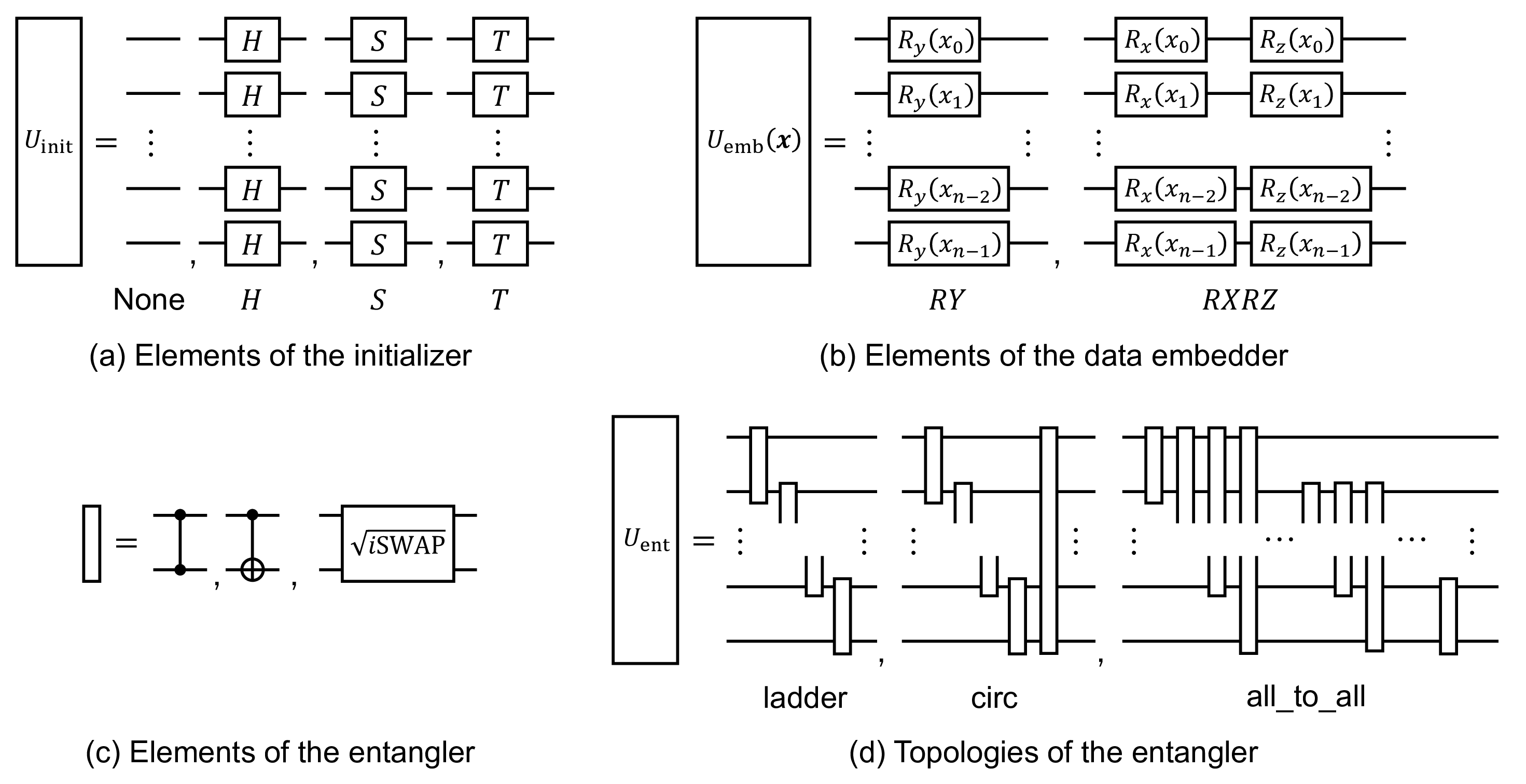

We employed a quantum kernel to design the kernel matrices. The most basic quantum kernel is calculated using the fidelity of two quantum states: the embedded data \(\mathbf{x}\) and \(\mathbf{x'}\), \(k(\mathbf{x, x'})=\mathrm{Tr}[\rho(\mathbf{x})\rho(\mathbf{x'}) ]\) [40]. Data-embedded quantum states are generated using a parameterized quantum circuit. As shown in Fig. 5, data \(\mathbf{x}\) is mapped into the quantum state via the unitary operation \(U(\mathbf{x})=\Pi^{n_{\mathrm{dep}}}_{i}U_i(\mathbf{x})U_{\mathrm{init}}\ket{0}^{\otimes n}\), where \(n\) is the number of qubits and \(n_{\mathrm{dep}}\) is the number of data reuploading. This operation offers the effect of superposition and entanglement between qubits. Here, if we design an appropriate quantum circuit, the data will be effectively mapped onto the RKHS suitable for the KCIT. The details of the quantum circuits tested in this study are described in Appendix 9. The key to designing an effective quantum circuit is the selection of the components of the unitary operation and the pre- and post-processing of the data. Preprocessing includes the scaling and affine transformations of the embedding data, and postprocessing includes the design of the ovservables. In this study, we introduced only scaling for preprocessing and employed fidelity as the observable parameter for simplicity.

Figure 5: Structure of the quantum circuit for data generating the quantum state.

4.2 KTA↩︎

Here, we discuss kernel selection for unconditional independence test and propose optimization heuristics based on KTA [31]. We rely on the fact that the statistic is constructed from the HSIP, which measures the discrepancy between feature vectors. \(X\) and \(Y\) are independent if and only if the feature vectors of the embedded data in RKHS are orthogonal. Intuitively, this leads to the selection of a kernel that minimizes (resp. maximizes), the HSIP for independent (resp. dependent) of the data samples.

We define the normalized HSIP i.e., the KTA \[\begin{align} {\rm KTA}(X, Y) := \frac{\mathrm{Tr} \bigl[ \widetilde{\mathbf{K}}_{\mathbf{X}} \widetilde{\mathbf{K}}_{\mathbf{Y}} \bigr]}{\sqrt{\mathrm{Tr} \bigl[ \widetilde{\mathbf{K}}_{\mathbf{X}}^2 \bigr] \mathrm{Tr} \bigl[ \widetilde{\mathbf{K}}_{\mathbf{Y}}^2 \bigr]}}, \label{eq:kta95loss} \end{align}\tag{1}\] as the evaluation function. The normalized HSIP can be interpreted as the signal-to-noise ratio \({\rm S/N}\) of the asymptotic gamma distribution under the null hypothesis. This is demonstrated by Theorem 7 (Proposition 5 of ref. [17], [18]) as follows: \[\begin{align} {\rm S/N} &:=& \frac{\mathbb{E}\left[ \breve{T}_{UI} \mid \mathcal{D} \right]}{\sqrt{\mathbb{V}ar\left[ \breve{T}_{UI} \mid \mathcal{D} \right]}} \\ &=& \frac{\mathrm{Tr} \bigl[ \widetilde{\mathbf{K}}_{\mathbf{X}} \widetilde{\mathbf{K}}_{\mathbf{Y}} \bigr]}{\sqrt{\mathrm{Tr} \bigl[ \widetilde{\mathbf{K}}_{\mathbf{X}}^2 \bigr] \mathrm{Tr} \bigl[ \widetilde{\mathbf{K}}_{\mathbf{Y}}^2 \bigr]}} \\ &=& {\rm KTA}(X, Y). \label{eq:signal95to95noise95ratio} \end{align}\tag{2}\]

The derivatives of Eq. 1 for minimization is expressed as follows:

Lemma 1. For parameterized kernels \((\mathbf{K}_X)_{xx'} = k_X(x, x' | \theta)\) and \((\mathbf{K}_Y)_{yy'} = k_Y(y, y' | \phi)\), consider the following function: \[\begin{align} f(\theta, \phi) &=& -\log \left( \frac{{\rm Tr}[\mathbf{K}_X \mathbf{K}_Y]}{\sqrt{{\rm Tr}[\mathbf{K}_X^2]{\rm Tr}[\mathbf{K}_Y^2]}} \right) = -\log \left( {\rm KTA} \left(\mathbf{K}_X, \mathbf{K}_Y \right) \right). \end{align}\] The derivatives of the function are then given by \[\begin{align} \frac{\partial f}{\partial \theta} &=& -\frac{{\rm Tr}[ (2 \mathbf{K}_Y - \mathbf{K}_Y \circ \mathbf{I}) \partial_{\theta} \mathbf{K}_X ]}{{\rm Tr}[ \mathbf{K}_X \mathbf{K}_Y ]} + \frac{{\rm Tr}[ (2 \mathbf{K}_X - \mathbf{K}_X \circ \mathbf{I}) \partial_{\theta} \mathbf{K}_X ]}{{\rm Tr}[ \mathbf{K}_X^2 ]}, \\ \frac{\partial f}{\partial \phi} &=& -\frac{{\rm Tr}[ (2 \mathbf{K}_X - \mathbf{K}_X \circ \mathbf{I}) \partial_{\phi} \mathbf{K}_Y ]}{{\rm Tr}[ \mathbf{K}_X \mathbf{K}_Y ]} + \frac{{\rm Tr}[ (2 \mathbf{K}_Y - \mathbf{K}_Y \circ \mathbf{I}) \partial_{\phi} \mathbf{K}_Y ]}{{\rm Tr}[ \mathbf{K}_Y^2 ]}, \end{align}\] where \((\partial_{\theta} \mathbf{K}_X)_{xx'} = \partial_{\theta} k_X(x, x'|\theta)\) and \((\partial_{\theta} \mathbf{K}_Y)_{yy'} = \partial_{\phi} k_Y(y, y'|\phi)\).

Proof 1. See Appendix 7.

4.3 Kernel optimization↩︎

We now explain the actual optimization of classical or quantum kernels. As mentioned in the previous subsection, we minimize KTA in Eq. 1 for the independent data samples. One natural method is to eliminate the correlation between two random variables by random shuffling of given data samples. We then minimize KTA using the gradient descent. The random shuffling method generates independent data while preserving the marginal distribution, and minimizing the KTA for such data reduces the signal-to-noise ratio in Eq. 2 under the null hypothesis. From the perspective of statistical hypothesis testing, the KTA minimization reduces the false-positive (FP) risk. We present the pseudocode for the gradient-based KTA minimization in Algorithm 6.

An alternative method is to sample the assumed marginal distributions in advance, whose moments are estimated using the given data samples. Sampling from modeled marginal distributions has the advantage of allowing the generation of large data samples, whereas the random shuffle method does not require prior knowledge of the marginal distribution. In our experiments, we adopted the random shuffling method for small data samples. To minimize the KTA, we used a sampling-based method, such as branch and bound [41]–[43], instead of a differentiation-based method.

Figure 6: KTA Minimization

4.4 Experimental details↩︎

Experimental results were generated using the python package causal-learn [20]. We built our quantum models based on the package emulating quantum models with Qiskit [44] and Qulacs [45]. In the classical method, we used the KCIT with the heuristic choice of the Gaussian kernel width already implemented in causal-learn, which is one of the methods with the best performance in classical kernels.

In Section 2.2, our simulations were run with noise ratios \(0.05\) for the following relations, where the source variables were drawn from the Gaussian distributions. In details, we used the relations of the collider, \(z=z_1, x=(z+y)/2, y =x_1^2\), the chain, \(z=(z_1+x_1)/2, x=y^2, y=0.5z\), and the fork \(z=0.5x, x=(z_1+x_1)/2, y=x^2.\) To estimate accuracy, we run 30 iterations for each simulation. The scaling parameters of the quantum models were fixed to \(1.0\). The significance level was set to \(\alpha=0.05\).

In Section 2.3, we run our simulation for linear relations with Gaussian variables, unless otherwise described. For optimization, we created the independent data by shuffling the original data and applied the optimizer to decrease the KTA value of the shuffled data. We changed the single scaling parameter and searched for its optimal value within the range \([0.01,0.5]\) starting from the initial value of \(0.1\). All data were standardized before applying the causal discovery methods. In the default quantum models, we used the scaling parameters equivalent to \(1\). In the ROC curves, we changed the level of significance in the set \(\{0.999999, 0.9, 0.75, 0.5, 0.25, 0.2, 0.1, 0.05, 0.01, 0.001, 0.0001, 0.00001\}\). The ROC curves require calculation of the true positive ratio (TPR) and false positive ratio (FPR). We focused on the skeltons of the CPDAGs considering only the existence or absence of edges between the variables to evaluate the TPR and FPR. If an edge exists between the two variables, it is judged positive; otherwise, it is judged negative. If the estimate and ground-truth match, it is called a TP if an edge is present and a TN if no edge is present. Conversely, if the estimate implies that an edge is present and the ground truth does not have an edge, it is called a FP. If no edge is inferred in the estimate and an edge is present in the ground truth, it is called a FN. Using the scores for TP, TN, FP, and FN, TPR and FPR are calculated as \(\mathrm{TPR}=\mathrm{TP}/(\mathrm{TP}+\mathrm{FN})\) and \(\mathrm{FPR}=\mathrm{FP}/(\mathrm{FP}+\mathrm{TN})\), respectively.

In Section 2.4, we used the classical and quantum kernels, which are the same as those used in the previous sections. For Boston housing data, we used the data source [46].

Contributions↩︎

Y. Maeda and H. Tezuka contributed to the study conception and design. Y. Tanaka and Y. Terada contributed to manuscript preparation. H. Tezuka, K. Arai, Y. Maeda, Y. Tanaka and Y. Terada commented on and revised the previous versions of the manuscript. K. Arai, Y. Terada and H. Tezuka developed the base computation system and conducted experiments to collect and analyze data. Y. Tanaka, Y. Terada and H. Tezuka created all images and drawings. All the authors have read and approved the final manuscript.

Corresponding author↩︎

Correspondence to Hiroyuki Tezuka.

5 Review of the kernel-based conditional independence test↩︎

This section briefly reviews the KCIT [17], [18]. Let us begin with given continuous random variables \(X, Y\), and \(Z\) with domains \(\mathcal{X}, \mathcal{Y}\), and \(\mathcal{Z}\), respectively. The probability law for \(X\) is denoted by \(P_X\). We introduce a measurable, positive definite kernel \(k_{\mathcal{X}}\) on \(\mathcal{X}\) and denote the corresponding RKHS as \(\mathcal{H}_{\mathcal{X}}\). The space of the square integrable functions of \(X\) is denoted by \(L^2_X\). \(\mathbf{K}_X\) is then the kernel matrix of the i.i.d. sample \(\mathbf{x} = \{ x_1, ..., x_n \}\) of \(X\), and \(\widetilde{\mathbf{K}}_X = \mathbf{H} \mathbf{K}_{\mathbf{x}} \mathbf{H}\) is the centralized kernel, where \(\mathbf{H} := \mathbf{I} - \frac{1}{n} \mathbf{1} \mathbf{1}^T\) with \(\mathbf{I}\) and \(\mathbf{1}\) being the \(n \times n\) identity matrix and the vector of 1’s, respectively. Similarly, we define \(P_Y, P_Z, k_{\mathcal{Y}, k_{\mathcal{Z}}}, \mathcal{H}_{\mathcal{Y}}, \mathcal{H}_{\mathcal{Z}}, L^2_Y, L^2_Z, \mathbf{K}_Y, \mathbf{K}_Z, \widetilde{\mathbf{K}}_Y, \widetilde{\mathbf{K}}_Z\) as well.

The problem here is to perform the test for conditional independence (CI), i.e., test the null hypothesis \(X \perp \!\!\!\! \perp Y \;| \;Z\), between \(X\) and \(Y\) given \(Z\) from their i.i.d. samples. In Refs. [17], [18], a CI test was developed by defining a simple statistic based on two characterizations of the CI [47], [48] and deriving its asymptotic distribution under the null hypothesis.

One characterization of the CI is provided in terms of cross-covariance operator \(\Sigma_{XY}\) in the RKHS [47]. For random vector \((X, Y)\) on \(\mathcal{X} \times \mathcal{Y}\), cross-covariance operator \(\Sigma_{XY}\) is defined by the following relation: \[\begin{align} \langle f, \Sigma_{XY}f \rangle = \mathbb{E}_{XY} \left[ f(X) g(Y) \right] - \mathbb{E}_X \left[ f(X) \right] \mathbb{E}_Y \left[ g(Y) \right] \end{align}\] for all \(f \in \mathcal{H}_{\mathcal{X}}\) and \(g \in \mathcal{H}_{\mathcal{Y}}\).

Lemma 2 (Theorem 3 (ii) of Ref. [47]). Denote \(\ddot{X} = (X, Z)\) and \(k_{\ddot{\mathcal{X}}} = k_{\mathcal{X}} k_{\mathcal{Z}}\). Assume that \(\mathcal{H}_{\mathcal{X}} \subset L^2_X, \mathcal{H}_{\mathcal{Y}} \subset L^2_Y\), and \(\mathcal{H}_{\mathcal{Z}} \subset L^2_Z\). Furthermore, assume that \(k_{\ddot{\mathcal{X}}} k_{\mathcal{Y}}\) is a characteristic kernel on \((\mathcal{X} \times \mathcal{Z}) \times \mathcal{Y}\) and \(\mathcal{H}_{\mathcal{Z}} + \mathbb{R}\) is dense in \(L^2(P_Z)\). Then, \[\begin{align} \Sigma_{\ddot{X}Y | Z} = 0 \Leftrightarrow X \perp \!\!\!\! \perp Y \;| \;Z. \end{align}\]

The other characterization of CI is given by explicitly enforcing the uncorrelatedness of functions in suitable spaces.

Lemma 3 ([48]). The following conditions are equivalent to each other: \[\begin{align} X \perp \!\!\!\! \perp Y \;| \;Z \Leftrightarrow \mathbb{E}\left[ f' g' \right] = 0, \forall f' \in \mathcal{E}_{XZ}, \forall g' \in \mathcal{E}'_{XZ}, \nonumber \\ \end{align}\] where \[\begin{align} \mathcal{E}_{XZ} &:=& \left\{ f' \in L^2_{\ddot{X}} \;| \;\mathbb{E}\left[ f' | Z \right] = 0 \right\}, \\ \mathcal{E}'_{YZ} &:=& \left\{ g' \;| \;g' = g(Y) - \mathbb{E}\left[ g | Z \right], g \in L^2_Y \right\}. \end{align}\]

These functions are constructed from the corresponding \(L^2\) spaces. For instance, for arbitrary \(f \in L^2_{XZ}\), function \(f'\) is given by \[\begin{align} f'(\ddot{X}) = f(\ddot{X}) - \mathbb{E}\left[ f | Z \right] = f(\ddot{X}) - h_f^{\ast}(Z), \label{eq:reg95fun} \end{align}\tag{3}\] where \(h_f^{\ast} \in L^2_Z\) denotes regression function \(f(\ddot{X})\) on \(Z\).

Refs. [17], [18] established that if functions \(f\) and \(g\) are restricted to spaces \(\mathcal{H}_{\ddot{X}}\) and \(\mathcal{H}_Y\), respectively, then Lemma 3 is reduced to Lemma 2. Specifically, they used kernel ridge regression to estimate regression function \(h_f^{\ast}\) in Eq. 3 ; that is, \[\begin{align} \hat{h}_f^{\ast} (\mathbf{z}) = \widetilde{\mathbf{K}}_Z (\widetilde{\mathbf{K}}_Z + \epsilon \mathbf{I})^{-1} \cdot f(\ddot{\mathbf{x}}), \label{eq:k95ridge} \end{align}\tag{4}\] where \(\epsilon\) denotes a small positive regularization parameter. From Eq. 4 , we can construct a centralized kernel matrix corresponding to function \(f'(\ddot{X})\), \[\begin{align} \widetilde{\mathbf{K}}_{\ddot{X}|Z} = \mathbf{R}_Z \widetilde{\mathbf{K}}_{\ddot{X}} \mathbf{R}_Z, \end{align}\] where \(\mathbf{R}_Z = \mathbf{I} - \widetilde{\mathbf{K}}_Z (\widetilde{\mathbf{K}}_Z + \epsilon \mathbf{I})^{-1} = \epsilon (\widetilde{\mathbf{K}}_Z + \epsilon \mathbf{I})^{-1}\). Similarly, we construct centralized kernel matrix \(\widetilde{\mathbf{K}}_{Y|Z}\) corresponding to function \(g'(Y)\).

Furthermore, to propose the statistic for CI, they provided general results on the asymptotic distributions of some statistics defined in terms of kernel matrices under uncorrelated-ness between functions in specific spaces. Let us consider the eigenvalue decompositions of the centralized kernel matrices of \(\widetilde{\mathbf{K}}_{X}\) and \(\widetilde{\mathbf{K}}_{Y}\), i.e., \(\widetilde{\mathbf{K}}_{X} = \mathbf{V}_{X} \mathbf{\Lambda}_{X} \mathbf{V}_{X}^T\) and \(\widetilde{\mathbf{K}}_{Y} = \mathbf{V}_{Y} \mathbf{\Lambda}_{Y} \mathbf{V}_{Y}^T\), where \(\mathbf{\Lambda}_{X}\) and \(\mathbf{\Lambda}_{Y}\) are diagonal matrices containing the non-negative eigenvalues \(\lambda_{\mathbf{x}, i}\) and \(\lambda_{\mathbf{y}, j}\), respectively. Furthermore, we define that \(\boldsymbol{\psi}_{\mathbf{x}} = \left[ \psi_{\mathbf{x}, 1}(\mathbf{x}), ..., \psi_{\mathbf{x}, n}(\mathbf{x}) \right] := \mathbf{V}_{X} \mathbf{\Lambda}_{X}^{1/2}\) and \(\boldsymbol{\phi}_{\mathbf{y}} = \left[ \phi_{\mathbf{y}, 1}(\mathbf{y}), ..., \phi_{\mathbf{y}, n}(\mathbf{y}) \right] := \mathbf{V}_{Y} \mathbf{\Lambda}_{Y}^{1/2}\), i.e., \(\psi_{\mathbf{x}, i}(x_k) = \lambda_{\mathbf{x}, i}^{1/2} V_{\mathbf{x}, ik}\) and \(\phi_{\mathbf{y}, j}(y_k) = \lambda_{\mathbf{y}, j}^{1/2} V_{\mathbf{y}, jk}\). Then, defining tensor \(\mathbf{T}\) and matrix \(\mathbf{T}^{\ast}\) by \[\begin{align} T_{ijk} &:=& \frac{1}{\sqrt{n}}\psi_{\mathbf{x}, i}(x_k) \phi_{\mathbf{y}, j}(y_k) \\ &=& \sqrt{\frac{\lambda_{\mathbf{x}, i} \lambda_{\mathbf{y}, j}}{n}} V_{\mathbf{x}, ik} V_{\mathbf{y}, jk}, \\ T^{\ast}_{ij}(X,Y) &:=& \sqrt{\lambda^{\ast}_{X,i} \lambda^{\ast}_{Y,j}} u_{X,i}(X) u_{Y,j}(Y), \end{align}\] where \(\lambda^{\ast}_{X,i}, \lambda^{\ast}_{Y,j}\) and \(u_{X,i}(X) u_{Y,j}(Y)\) are the eigenvalues and eigenfunctions of kernel \(k_{\mathcal{X}}\) with regard to the probability measure with the density \(p(x)\), respectively, we define matrices \(\mathbf{M}\) and \(\mathbf{M}^{\ast}\) by \[\begin{align} M_{ij, i'j'} &=& \sum_{k=1}^n T_{ijk} T_{i'j'k}, \label{eq:M} \\ M^{\ast}_{ij, i'j'} &=& T^{\ast}_{ij}(X, Y) T^{\ast}_{i'j'}(X, Y). \end{align}\tag{5}\] Note that \(\mathbf{M}\) and \(\mathbf{M}^{\ast}\) for the conditional kernels are defined similarly. The main technical results presented in Ref. [17], [18] are as follows:

Theorem 4 (Theorem 3 of Ref. [17], [18]). Suppose that we are given arbitrary centred kernels \(k_{\mathcal{X}}\) and \(k_{\mathcal{Y}}\) with discrete eigenvalues and the corresponding RKHS’s \(\mathcal{H}_{\mathcal{X}}\) and \(\mathcal{H}_{\mathcal{Y}}\) for sets of random variables \(X\) and \(Y\), respectively. We make the following three statements:

Under the condition that \(f(X)\) and \(g(Y)\) are uncorrelated for all \(f \in \mathcal{H}_{\mathcal{X}}\) and \(g \in \mathcal{H}_{\mathcal{Y}}\), for any \(L\) such that \(\lambda^{\ast}_{X,L+1} \neq \lambda^{\ast}_{X,L}\) and \(\lambda^{\ast}_{Y,L+1} \neq \lambda^{\ast}_{Y,L}\), we have \[\begin{align} \sum_{i,j = 1}^L M_{ij, ij} \overset{d}{\longrightarrow} \sum_{i,j=1}^L \mathring{\lambda}^{\ast}_{ij} z^2_{ij},\quad {\rm as}\;n \to \infty, \label{eq:con1} \end{align}\qquad{(1)}\] where \(z_{ij}\) are i.i.d.* standard Gaussian variables (i.e., \(z_{ij}^2\) are i.i.d. \(\chi^2_1\)-distributed variables), and \(\mathring{\lambda}^{\ast}_{ij}\) are the eigenvalues of \(\mathbb{E}[\mathbf{M}^{\ast}]\).*

In particular, if \(X\) and \(Y\) are further independent, we have \[\begin{align} \sum_{i,j = 1}^L M_{ij, ij} \overset{d}{\longrightarrow} \sum_{i,j=1}^L \lambda^{\ast}_{X, i} \lambda^{\ast}_{Y, j} z_{ij}^2,\quad {\rm as}\;n \to \infty, \label{eq:con2} \end{align}\qquad{(2)}\] where \(z_{ij}^2\) are i.i.d. \(\chi^2_1\)-distributed variables.

The results of Eqs. ?? and ?? hold for \(L = n \to \infty\).

Based on these considerations, the authors in Ref. [17], [18] proposed statistics defined by the HSIP for unconditional and conditional independence tests.

Theorem 5 (Theorem 4 of Ref. [17], [18]). Under the null hypothesis that \(X\) and \(Y\) are statistically independent, statistic \[\begin{align} T_{UI} := \frac{1}{n} {\rm Tr} \bigl[ \widetilde{\mathbf{K}}_X \widetilde{\mathbf{K}}_Y \bigr] \end{align}\] has the same asymptotic distribution as \[\begin{align} \breve{T}_{UI} := \frac{1}{n^2} \sum_{i,j=1}^n \lambda_{\mathbf{x},i} \lambda_{\mathbf{y},j} z_{ij}^2, \label{eq:asymp} \end{align}\qquad{(3)}\] i.e., \(T_{UI} \overset{d}{=} \breve{T}_{UI}\) as \(n \to \infty\), where \(z_{ij}\) are i.i.d.* standard Gaussian variables, \(\lambda_{\mathbf{x},i}\) are the eigenvalues of \(\widetilde{\mathbf{K}}_X\), and \(\lambda_{\mathbf{y},i}\) are the eigenvalues of \(\widetilde{\mathbf{K}}_Y\).*

The statistic for the unconditional independence test closely relates to those based on the Hilbert-Schmidt independence criterion (HSIC) [49]. The difference between these statistics is that they exhibit different asymptotic distributions. Eq. ?? depends on the eigenvalues of \(\widetilde{\mathbf{K}}_X\) and \(\widetilde{\mathbf{K}}_Y\), whereas the HSIC\(_b\) in Eq. (4) in Ref. [49] depends on the eigenvalues of an order-four tensor. The following is the statistic for CI.

Theorem 6 (Theorem 5 of Ref. [17], [18]). Under the null hypothesis that \(X\) and \(Y\) are conditionally independent, given \(Z\), we obtain the statistic \[\begin{align} T_{CI} := \frac{1}{n} \mathrm{Tr} \bigl[ \widetilde{\mathbf{K}}_{\ddot{\mathbf{X}} | \mathbf{Z}} \widetilde{\mathbf{K}}_{\mathbf{Y} | \mathbf{Z}} \bigr] \end{align}\] has the same asymptotic distribution as \[\begin{align} \breve{T}_{CI} := \frac{1}{n} \sum_{k=1}^{n^2} \lambda_k z_k^2, \end{align}\] where \(\lambda_k\) are the eigenvalues of matrix \(\mathbf{M}\) in Eq. 5 , which is constructed by \(\widetilde{\mathbf{K}}_{\ddot{\mathbf{X}} | \mathbf{Z}}\) and \(\widetilde{\mathbf{K}}_{\mathbf{Y} | \mathbf{Z}}\), and \(z_k\) are i.i.d.* standard Gaussian variables.*

Clearly, we can construct the unconditional and conditional independence tests by generating approximate null distribution using the Monte Carlo simulation. In practice, we can approximate the null distribution with a Gamma distribution whose two parameters are related to the mean and variance. Under the null hypothesis, the distribution of \(\breve{T}_{UI}\) can be approximated by the \(\Gamma(k, \theta)\) distribution \[\begin{align} p(t) = t^{k-1} \frac{e^{-t/\theta}}{\theta^k \Gamma(k)}, \end{align}\] where \(k = \mathbb{E}^2 \bigl[\breve{T}_{UI} \bigr] / \mathbb{V}ar \bigl[\breve{T}_{UI} \bigr]\) and \(\theta = \mathbb{V}ar \bigl[\breve{T}_{UI} \bigr] / \mathbb{E} \bigl[\breve{T}_{UI} \bigr]\). In the unconditional case, the two parameters can be similarly defined in the same way. The mean and variance are estimated as follows:

Theorem 7 (Proposition 5 of Ref. [17], [18]).

Under the null hypothesis that \(X\) and \(Y\) are independent, on the given sample \(\mathcal{D}\), we have that \[\begin{align} \mathbb{E} \bigr[ \breve{T}_{UI} | \mathcal{D} \bigl] &=& \frac{1}{n^2} {\rm Tr} \bigr[ \widetilde{\mathbf{K}}_X \bigl] {\rm Tr} \bigr[ \widetilde{\mathbf{K}}_Y \bigl], \label{eq:expectation} \\ \mathbb{V}ar \bigr[ \breve{T}_{UI} | \mathcal{D} \bigl] &=& \frac{2}{n^4} {\rm Tr} \bigr[ \widetilde{\mathbf{K}}_X^2 \bigl] {\rm Tr} \bigr[ \widetilde{\mathbf{K}}_Y^2 \bigl]. \label{eq:variance} \end{align}\] {#eq: sublabel=eq:eq:expectation,eq:eq:variance}

Under the null hypothesis that \(X\) and \(Y\) are conditionally independent given \(Z\), we have that \[\begin{align} \mathbb{E} \bigr[ \breve{T}_{CI} | \mathcal{D} \bigl] &=& {\rm Tr} \bigr[ \mathbf{M} \bigl], \\ \mathbb{V}ar \bigr[ \breve{T}_{CI} | \mathcal{D} \bigl] &=& 2 {\rm Tr} \bigr[ \mathbf{M}^2 \bigl], \end{align}\] where \(\mathbf{M}\) is the matrix of Eq. 5 , which is constructed by \(\widetilde{\mathbf{K}}_{\ddot{\mathbf{X}} | \mathbf{Z}}\) and \(\widetilde{\mathbf{K}}_{\mathbf{Y} | \mathbf{Z}}\).

6 PC algorithm↩︎

Figure 7: PC algorithm

Here, we summarize the PC algorithm [2], [30] and highlight our contribution by emphasizing the difference between the qPC and conventional PC algorithms. Historically, the PC algorithm [30] was introduced as a computationally efficient version of the Spirtes–Glymour–Scheines algorithm and has been widely used because of its efficiency and effectiveness, given that it can perform a number of tests that grow exponentially with the number of variables. The PC algorithm includes (conditional) independence test and orientation of the edges to provide the CPDAGs from observed data under the assumptions of causal faithfulness and causal sufficiency. A CPDAG with directed and undirected edges describes an equivalence class of DAGs and a set of DAGs with the same skeleton and collider structures. This equivalence class is called a Markov equivalence class. The causal faithfulness condition states that if two variables are statistically independent, there should be no direct causal path between them in the causal model. Causal sufficiency assumes that there are no unobserved variables. The PC algorithm assumes acyclicity in the causal graphs. We also assume that the observed data are collected independently and were identically distributed. In contrast to causal model-based algorithms and gradient-based algorithms using statistical models, such as LiNGAM [7] and NOTEARS [9], the PC algorithm does not require any specific functional assumptions on causal relations. Additionally, the PC algorithm employs statistical tests but does not assume their specific types. Thus, it is applicable to discrete and continuous variables, with suitable tests. We describe the PC algorithm procedure for obtaining CPDAGs below.

The PC algorithm starts with a complete undirected graph and performs three steps to obtain the CPDAG. As the first part of the PC algorithm, the skeleton, that is, the undirected graph for the corresponding CPDAG, was inferred through statistical tests. In this step, we select two variables from the set of all variables, \(X\) and \(Y\). Thereafter, for \(X\) and \(Y\), we perform an independence test to investigate whether \(X \mathpalette{\independenT}{\perp}Y\). If the two variables are independent, we remove the edge between them. For \(X\) and \(Y\) with a still existing edge and another variable \(Z_1\), we perform the conditional independent test to investigate whether \(X \mathpalette{\independenT}{\perp}Y | Z_1\). For \(X\) and \(Y\) with a still existing edge and a set of other variables such as \(Z_1\) and \(Z_2\), we perform the conditional independence test to investigate whether \(X \mathpalette{\independenT}{\perp}Y | Z_1, Z_2\). The above process continues until the number of other variables \(Z_1,Z_2,\cdots\) becomes the total number adjacent to \(X\) or \(Y\). This process was performed for each ordered pair of variables. In the second part, one seeks for v-structures are sought and oriented as colliders. In the obtained skelton graph, if there are edges between \(X\) and \(Z\) as well as \(Y\) and \(Z\) but no edge exists between \(X\) and \(Y\), such as \(X-Z-Y\), we investigate whether \(X \not\!\mathpalette{\independenT}{\perp}Y | Z\). If this holds true, we call this triplet a v-structure and orient it as a collider, where \(X\to Z\gets Y\). Finally, the remaining parts of the graph were oriented using orientation propagation. If we find structures such as \(X\to Z-Y\), we orient them as \(X\to Z\to Y\), given that a v-structure \(X\to Z\gets Y\) contradicts \(X \mathpalette{\independenT}{\perp}Y | Z\), as confirmed in the first part. If we find a structure \(X-Y\) with a directed path from \(X\) to \(Y\), we orient it as \(X\to Y.\)

Figure 8: Process of the PC algorithm.

Although the PC algorithm is generally and widely applicable, it has inherent limitations associated with its assumptions. One of the most crucial limitations of this study is the presence of confounders. In most real-world problems, the effects of hidden variables cannot be avoided, which break the assumptions of the PC algorithm and can thus produce unreliable results. The FCI algorithm [50] is a variant of the PC algorithm, and is applicable to cases with confounders. In contrast to the PC algorithm, the FCI algorithm determines the directions of arrows when they can be an arrow or tail. Consequently, the FCI algorithm yields partial ancestral graphs, which may include not only directed and undirected edges but also bidirected edges representing latent confounders. Although the FCI algorithm sacrifices the computational cost, it can be used in broader situations. Another problem can arise from the assumption of static data properties. The real data that we analyze often have temporal structures that we call time-series data. In such cases, the PC algorithm can be applied by expanding the causal graphs in the temporal direction. In both cases, the qPC algorithm can be applied with modifications to the PC algorithm.

7 Proof of Lemma 1↩︎

For a given differentiable scalar-valued function \(f(\mathbf{A})\) of matrix \(\mathbf{A}\), it should be noted that \[\begin{align} \frac{d f}{d z} = \sum_{kl} \frac{\partial f}{\partial A_{kl}} \frac{\partial A_{kl}}{\partial z} = {\rm Tr} \left[ \left[ \frac{\partial f}{\partial \mathbf{A}} \right]^T \frac{\partial \mathbf{A}}{\partial z} \right]. \end{align}\] Furthermore, if matrix \(\mathbf{S}\) is symmetric, we derive \[\begin{align} \frac{\partial \mathbf{S}}{\partial S_{ij}} = J^{ij} + J^{ji} - J^{ij}J^{ij}, \end{align}\] where \(J^{ij}\) denotes a single-entry matrix. Thus, for a given scalar function \(f(\mathbf{S})\), we derive \[\begin{align} \frac{d f}{d \mathbf{S}} = \left[ \frac{\partial f}{\partial \mathbf{S}} \right] + \left[ \frac{\partial f}{\partial \mathbf{S}} \right]^T - {\rm diag} \left[ \frac{\partial f}{\partial \mathbf{S}} \right]. \label{eq:d95f95sym} \end{align}\tag{6}\] In particular, for matrix \(\mathbf{A}\) and symmetric matrix \(\mathbf{S}\), Eq. 6 results in \[\begin{align} \frac{\partial {\rm Tr}[ \mathbf{AS} ]}{\partial \mathbf{S}} = \mathbf{A} + \mathbf{A}^T - (\mathbf{A} \circ \mathbf{I}). \end{align}\]

Using the above equations, we can calculate the following: \[\begin{align} \frac{\partial}{\partial \theta} {\rm Tr} \left[ \mathbf{K}_X \mathbf{K}_Y \right] &=& {\rm Tr} \Biggl[ \left( \frac{\partial {\rm Tr}[\mathbf{K}_X \mathbf{K}_Y]}{\partial \mathbf{K}_X} \right)^T \frac{\partial \mathbf{K}_X}{\partial \theta} + \left( \frac{{\partial \rm Tr}[\mathbf{K}_X \mathbf{K}_Y]}{\partial \mathbf{K}_Y} \right)^T \frac{\partial \mathbf{K}_Y}{\partial \theta} \Biggr] \\ &=& {\rm Tr} \left[ \left( \frac{\partial {\rm Tr}[\mathbf{K}_X \mathbf{K}_Y]}{\partial \mathbf{K}_X} \right)^T \frac{\partial \mathbf{K}_X}{\partial \theta} \right] \\ &=& {\rm Tr} \left[ \left( 2 \mathbf{K}_Y - \mathbf{K}_Y \circ \mathbf{I} \right) \partial_{\theta} \mathbf{K}_X \right], \tag{7} \\ \frac{\partial}{\partial \theta} {\rm Tr} \left[ \mathbf{K}_X^2 \right] &=& {\rm Tr} \left[ \left( \frac{\partial {\rm Tr}[\mathbf{K}_X^2]}{\partial \mathbf{K}_X} \right)^T \frac{\partial \mathbf{K}_X}{\partial \theta} \right] \\ &=& {\rm Tr} \left[ \left( 4 \mathbf{K}_X - 2\mathbf{K}_X \circ \mathbf{I} \right) \partial_{\theta} \mathbf{K}_X \right]. \tag{8} \end{align}\] Therefore, we derive that \[\begin{align} \frac{\partial f}{\partial \theta} &=& - \frac{ \partial_{\theta} {\rm Tr}[ \mathbf{K}_X \mathbf{K}_Y ]}{{\rm Tr}[ \mathbf{K}_X \mathbf{K}_Y ]} + \frac{ \partial_{\theta} {\rm Tr}[ \mathbf{K}_X^2 ]}{2{\rm Tr}[ \mathbf{K}_X^2 ]} + \frac{ \partial_{\theta} {\rm Tr}[ \mathbf{K}_Y^2 ]}{2{\rm Tr}[ \mathbf{K}_Y^2 ]} \\ &=& - \frac{{\rm Tr} \left[ \left( 2 \mathbf{K}_Y - \mathbf{K}_Y \circ \mathbf{I} \right) \partial_{\theta} \mathbf{K}_X \right]}{{\rm Tr}[ \mathbf{K}_X \mathbf{K}_Y ]} + \frac{{\rm Tr} \left[ \left( 2 \mathbf{K}_X - \mathbf{K}_X \circ \mathbf{I} \right) \partial_{\theta} \mathbf{K}_X \right]}{{\rm Tr}[ \mathbf{K}_X^2 ]}. \end{align}\] The case of \(\partial_{\phi} f\) can be derived similarly.

8 Comparison of the proposed optimization method with median heuristic in classical cases↩︎

Here, we discuss the applicability of our kernel choice method to classical kernels. As mentioned in the main text, the proposed method for determining the kernel hyperparameters can be applied to both classical and quantum kernels. Here, we used KTA-based optimization to adjust the Gaussian kernel bandwidths.

Figure shows the comparison of the heuristics and optimized results under the conditions considered in Fig. 3. The Gaussian kernels with optimized bandwidth succeeded in reconstructing the causal structures for the three junction patterns more accurately than the kernels with heuristic widths. These results suggest the utility of the proposed kernel choice method for classical kernels as well as for causal discovery.

9 Details of quantum circuits↩︎

Here, we describe the quantum circuit candidates used in this study. As described in Sec. 4.1, the structure of quantum circuit \(U(\mathbf{x})\), called as “ansatz," is composed of three parts: the initialization \(U_\mathrm{init}\), data embedding \(U_{\mathrm{emb}}(\mathbf{x})\), and entangling \(U_{\mathrm{enc}}\) parts, as shown in Fig. 5. In addition, the amount of data re-uploaded, called the depth \(n_{\mathrm{dep}}\), is a major degree of freedom in quantum circuits. We compared the performance of the causal discovery problems with various combinations of components. This lineup is illustrated in (Fig. 9) as follows: \(U_{\mathrm{init}} \in \{\mathrm{None}, H, S, T\}\), \(U_{\mathrm{emb}}(\mathbf{x}) \in \{RY, RXRZ\}\), \(U_{\mathrm{ent}} \in \{CX, CZ, \sqrt{\mathrm{iSWAP}}\}\{\mathrm{ladder, circ, all\_to\_all}\}\), and \(n_{\mathrm{dep}} \in \{1, 4, 16\}\) for junction pattern experiments and \(n_{\mathrm{dep}} \in \{5\}\) for real world data experiments. These candidates were partially selected based on the expressibility reported by [51] and [52]; however, we did not observe a clear correlation between ansatz expressibility and causal discovery performance.

Finally, we describe the quantum circuit used to generate the dataset in Sec. 2.2 in Fig. 10. Using this data generator, input vector \(\mathbf{x} \in [0, \pi]^2\) is mapped to \([0, 1]^2\) via quantum operation. We found that analyzing the dataset generated by this procedure is difficult for classical methods such as the Gaussian kernel, but can be handled effectively by quantum kernel methods.

Figure 9: Elements of the quantum circuit

Figure 10: Quantum circuit of the data generator used in Sec. 2.2