Hidden Data Privacy Breaches in Federated Learning

November 27, 2024

Abstract

Federated Learning (FL) emerged as a paradigm for conducting machine learning across broad and decentralized datasets, promising enhanced privacy by obviating the need for direct data sharing. However, recent studies show that attackers can steal private data through model manipulation or gradient analysis. Existing attacks recover only small amounts of data or only low-resolution data, and they are often detected through anomaly monitoring of gradients or weights. In this paper, we propose a novel data-reconstruction attack leveraging malicious code injection, supported by two key techniques: a distinctive and sparse encoding design, and block partitioning. Unlike conventional methods that require detectable changes to the model, our method stealthily embeds a hidden model using parameter sharing to systematically extract sensitive data. The Fibonacci-based index design ensures efficient, structured retrieval of memorized data, while the block partitioning method enhances our method’s capability to handle high-resolution images by dividing them into smaller, manageable units. Extensive experiments on 4 datasets confirmed that our method is superior to 5 state-of-the-art data-reconstruction attacks under 5 detection methods. Our method can handle large-scale and high-resolution data without being detected or mitigated by state-of-the-art data reconstruction defense methods. In contrast to baselines, our method can be directly applied to both FedAvg and FedSGD scenarios, underscoring the need for developers to devise new defenses against such vulnerabilities. We will open-source our code upon acceptance.

1 Introduction

Federated Learning (FL) [1], [2] has emerged as a solution to the increasing concerns over user data privacy, allowing clients to partake in large-scale model training without sharing their private data. In a typical FL cycle, the server dispatches the model to clients for local training using their private data, after which the clients return their updates. These updates are then aggregated by the server to refine the global model, setting the stage for subsequent iterations. Despite its privacy-centric design, recent studies have revealed that servers, even when operating passively, can infer information about client data from their shared gradients [3]–[9], thus compromising the fundamental privacy guarantee of federated learning.

In the field of data reconstruction attacks, server attacks in FL can be categorized into passive and active attacks. Passive attacks analyze transmitted data without modifying the FL protocol, typically using iterative optimization or analytical methods to reconstruct training data [10]–[13]. Iterative optimization treats reconstructed data as trainable parameters, aiming to match the generated gradients with the true gradient values [10]–[12], [14]–[17]. Analytical methods derive original training data directly from gradients using mathematical formulas [13], [18], [19]. Although effective, passive attacks struggle with large batch sizes and high-resolution datasets, depend on specific model structures, and are mitigated by secure aggregation methods [20], [21]. Active server attacks, in contrast, manipulate the training process by altering model structures or weights to extract private data [22], [23].

| Attack | Malicious module inserted\(^\dagger\) | | | Stolen / maximum per round\(^\mathsection\) | High-resolution\(^\$\) | FedAvg\(^*\) |
|---|---|---|---|---|---|---|
| RtF [24] | YES | NO | YES | 256 / 256 | YES | YES |
| Boenisch et al. [25] | NO | YES | YES | 2 / 2 | NO | NO |
| Fishing [26] | NO | YES | YES | 1 / 256 | NO | NO |
| Inverting [12] | NO | YES | YES | 1 / 100 | NO | NO |
| LOKI [22] | YES | YES | YES | 436 / 512 | YES | YES |
| Boenisch et al. [27] | NO | YES | YES | 50 / 100 | NO | NO |
| Zhang et al. [28] | NO | YES | YES | 64 / 128 | NO | NO |
| Zhao et al. [29] | YES | YES | YES | 50 / 64 | YES | NO |
| SEER [21] | NO | NO | NO | 1 / 512 | NO | NO |
| Ours | NO | NO | NO | 512 / 512 | YES | YES |

\(^\dagger\) indicates that the attack requires inserting a malicious module into the architecture, e.g., placing a large dense layer in front or inserting customized convolutional kernels into the FL model.

\(^\mathsection\) denotes the corresponding amount of data stolen given the maximum amount of private training data that can be processed in each round.

\(^\$\) signifies that the attacks are also capable of recovering high-resolution images, making them applicable for targeting models trained on high-resolution datasets.

\(^*\) indicates the attack is effective in the FedAvg federated learning scenario.

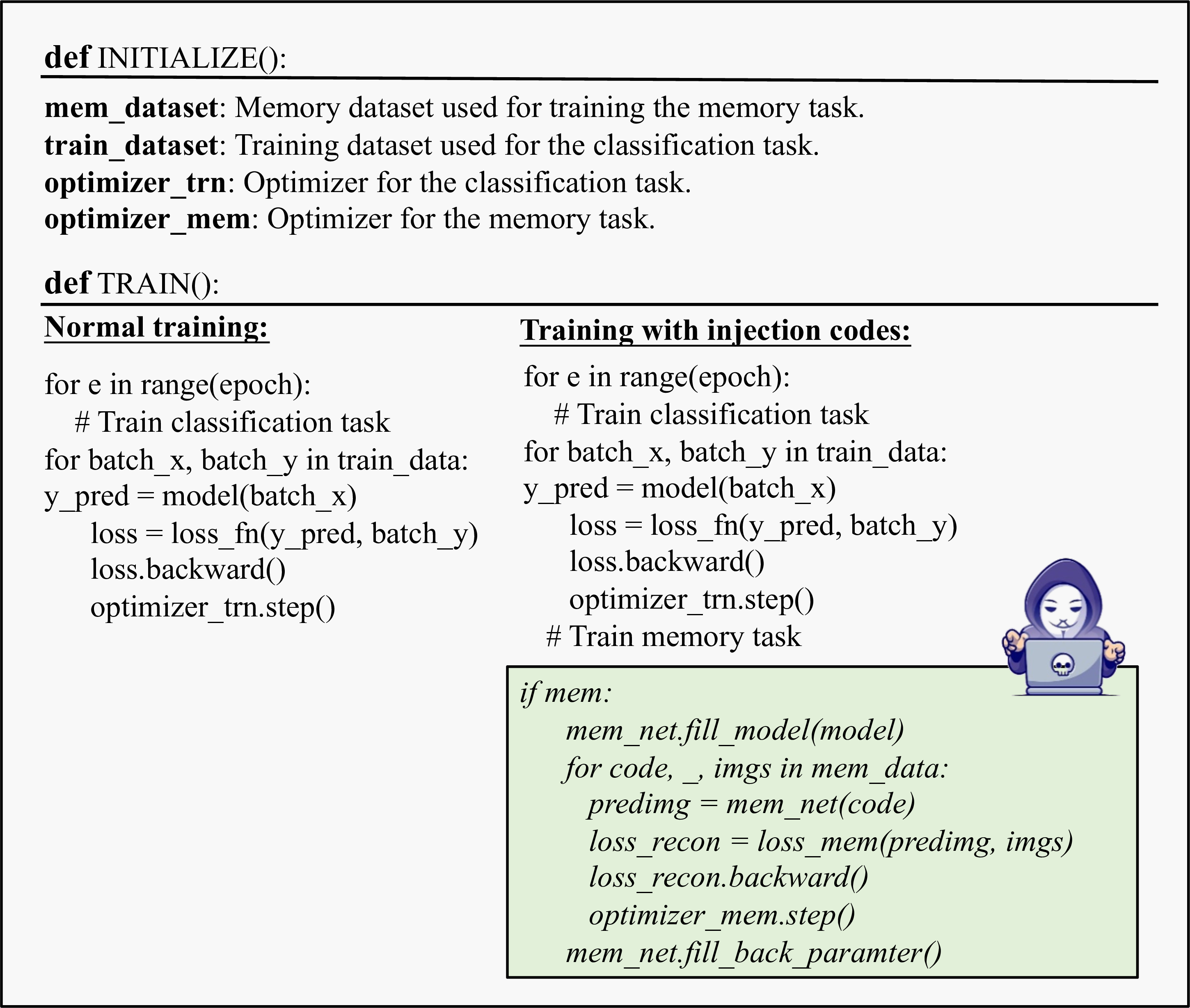

Figure 1: Examples of injected malicious code in our method. The green boxes highlight the sections where the malicious code needs to be injected.

We list the state-of-the-art active server attacks in Table 1. Most of the existing active server attacks focus on manipulating the model’s weights [23], [25]–[28] and structures [22], [24], [29] to conduct data reconstruction attacks. Parameter modification-based attacks rely on maliciously altering model parameters, such as weights and biases, to enhance the gradient influence of targeted data while diminishing that of other data. Structure modification-based attacks usually demand unusual changes to the architecture, like adding a large dense layer at the beginning or inserting customized convolutional kernels. However, the success of these attacks might heavily depend on the specific architecture and parameters of the model, limiting their applicability across different settings. To enhance the attack performance, some works even require the additional ability to introduce Sybil devices [25], send different updates to different users [22], [23], [29], or control the user sampling process [25], which makes the attacks more easily detectable. Besides, most of the existing works are ineffective for reconstructing high-resolution data, especially in scenarios involving large batches [21]–[23], [25]–[29]. Moreover, only a few attacks [22], [24] can also be applied to the FedAvg training scenario.

In this paper, we introduce a novel data reconstruction attack based on malicious code poisoning in FL. As shown in Figure 1, by injecting just a few lines of code into the training process, our method covertly manipulates the model’s behavior to extract private data while maintaining normal operation. This approach leverages vulnerabilities in shared machine learning libraries [30]–[32], which often lack rigorous integrity checks, allowing attackers to introduce subtle modifications that evade detection. Many machine learning frameworks depend on third-party libraries that are not thoroughly vetted, making them susceptible to covert malicious modifications. Existing works show that attackers can introduce backdoors that execute the malicious code during the training process, without affecting the primary training objective [33].

To launch the attack, our method introduces a secret model that shares parameters with the local model, making it indistinguishable from the local model in terms of both structure and behavior. Unlike existing methods requiring significant changes to the model architecture, our method uses parameter sharing to memorize sensitive client data while preserving the model’s normal appearance. To enhance the attack performance, we also introduce a distinctive index design leveraging Fibonacci coding to efficiently retrieve memorized data, and a block partitioning strategy that enhances our method’s capacity to handle high-resolution images. Specifically, the distinctive index design ensures efficient and structured retrieval of memorized data, allowing the hidden model to systematically extract sensitive information based on unique codes. The block partitioning strategy allows our method to overcome the challenges of handling high-resolution data by dividing the data into smaller, manageable units, which are then processed in a way that maintains the effectiveness of the attack while minimizing the detection risk.

Our method consistently outperformed 5 state-of-the-art data-reconstruction attacks under 5 detection methods across 4 datasets. Our method is able to steal nearly 512 high-quality images per attack on CIFAR-10 and CIFAR-100, and nearly 64 high-quality images on ImageNet and CelebA, significantly higher than state-of-the-art baseline methods. These results demonstrate that our method exhibits robustness in handling high-resolution images and large-scale data theft scenarios.

To conclude, we make the following key contributions.

We propose a novel data reconstruction attack paradigm based on malicious code poisoning. Unlike previous approaches that require conspicuous modifications to architecture or parameters, which are easily detected, our method covertly trains a secret model within the local model through parameter sharing. This secret model is designed to memorize private data and is created by carefully selecting a few layers from the local model. Moreover, our method is model-agnostic, enabling seamless integration with various architectures without modifying their core structures.

To enhance the extraction performance, we propose a novel distinctive indexing method based on Fibonacci coding, which meets three key requirements: sparsity, differentiation, and label independence. We also propose a novel block partitioning strategy to overcome the limitations of existing optimization-based extraction methods when dealing with high-resolution datasets or large-scale theft scenarios.

Extensive experiments on 4 datasets confirm that our method outperforms 5 state-of-the-art data-reconstruction attacks in terms of both leakage rate and image quality. Our method is capable of handling large-scale and high-resolution data without being detected by 5 advanced defense techniques. Moreover, our method can also be easily transferred to an FL framework equipped with secure aggregation.

2 Background

2.1 Malicious Code Poisoning

Malicious code poisoning involves the stealthy injection of harmful code into the training process of machine learning models, enabling attackers to alter model behavior while remaining difficult to detect [33], [34]. Unlike traditional attack vectors that exploit vulnerabilities in model architecture or parameters, code poisoning specifically targets the training process, embedding malicious functionality at the code level.

One common approach to malicious code poisoning is through the exploitation of vulnerabilities in package management systems such as npm, PyPI, and RubyGems. Attackers upload malicious packages to public repositories, often using the same names as internal libraries but with higher version numbers. This tricks dependency managers into downloading the malicious version instead of the intended internal one. These attacks are particularly challenging to detect because they exploit trusted sources, such as public repositories, which developers often assume to be reliable. For example, attackers may target widely used libraries like TensorFlow, embedding malicious code into critical functions such as train_step. Since these libraries are highly trusted, developers often skip thorough code reviews, unknowingly installing compromised versions. Once executed, these packages can introduce backdoors, manipulate model behavior, or exfiltrate sensitive data. Recent research has shown that even widely used machine learning repositories, such as FastAI [30], Fairseq [31], and Hugging Face [32], despite having thousands of forks and contributors, often rely on basic tests, such as verifying output shapes and basic functionality checks, which makes such code poisoning attacks more impactful and feasible.

Despite the privacy-focused design of federated learning, shared model updates can inadvertently expose sensitive information. We show that a malicious server can manipulate the model training code to reconstruct users’ training data, leading to significant security vulnerabilities.

2.2 Data Reconstruction Attacks against FL

In this section, we focus on providing an in-depth introduction to active server attacks. Unlike passive server attacks, the server can modify its behavior, such as the model architecture and model parameters sent to the user, to obtain training dataset information of the victim clients. Existing active server attacks can be categorized into three classes, i.e., parameter modification-based attacks, structure modification-based attacks, and handcrafted-modification-free attacks.

Parameter modification-based attacks. Wen et al. [26] introduce two “fishing” strategies, i.e., class fishing and feature fishing, to recover user data from gradient updates. Rather than altering the model architecture, they manipulate the model parameters sent to users by maliciously adjusting the weights in the classification layer, magnifying the gradient contribution of a target data point and reducing the gradient contribution of other data. The class fishing strategy amplifies bias for non-target neurons in the last classification layer, reducing the model’s confidence in target class predictions and thus boosting the target data’s gradient impact. When dealing with batches containing several target class samples, feature fishing modifies weights and biases for these targets, adjusting the decision boundary to further isolate and emphasize the target data’s gradient. However, a single attack of [26] can recover only one sample, which makes it easy for users to detect. Pasquini et al. [23] proposed a gradient suppression attack based on model inconsistency, reducing the aggregated gradient to the target user’s gradient and thereby breaking secure aggregation. Specifically, they send normal model weights to the target user, producing normal local gradients. For non-target users, they exploit the fact that ReLU neurons produce zero gradients when not activated, sending malicious model weights that generate zero gradients. The attack is independent of the number of users participating in the secure aggregation. However, such methods can easily be detected by users with a strong awareness of prevention. Zhang et al. [28] proposed reconstruction attacks based on the direct data leakage in the FC (fully-connected) layer [35]. However, gradient obfuscation within a batch significantly hinders the effectiveness of this leakage. To address this challenge, Zhang et al. maliciously changed the model parameters to diminish the obfuscation in shared gradients. This strategy effectively compromises privacy in large-batch FL scenarios. However, this method assumes the server owns auxiliary data that is independently and identically distributed with users’ private training sets, which is not practical in the real world.
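For context, the direct leakage in a fully connected layer that these attacks build on can be written out for a single sample. The notation below (\(W\), \(b\), \(x\), \(z\), \(\mathcal{L}\), and the row index \(j\)) is introduced here for illustration only and is not taken from the cited works.

\[z = Wx + b, \qquad \frac{\partial \mathcal{L}}{\partial W_{j}} = \frac{\partial \mathcal{L}}{\partial z_j}\, x^\top, \qquad \frac{\partial \mathcal{L}}{\partial b_j} = \frac{\partial \mathcal{L}}{\partial z_j} \quad\Longrightarrow\quad x^\top = \frac{\partial \mathcal{L}}{\partial W_{j}} \Big/ \frac{\partial \mathcal{L}}{\partial b_j} \quad \text{whenever } \frac{\partial \mathcal{L}}{\partial b_j} \neq 0,\]

where \(W_j\) denotes the \(j\)-th row of \(W\). With a batch of several samples, the shared gradient is an average over the batch, so each row yields a weighted mixture of inputs rather than a single input, which is the obfuscation referred to above.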

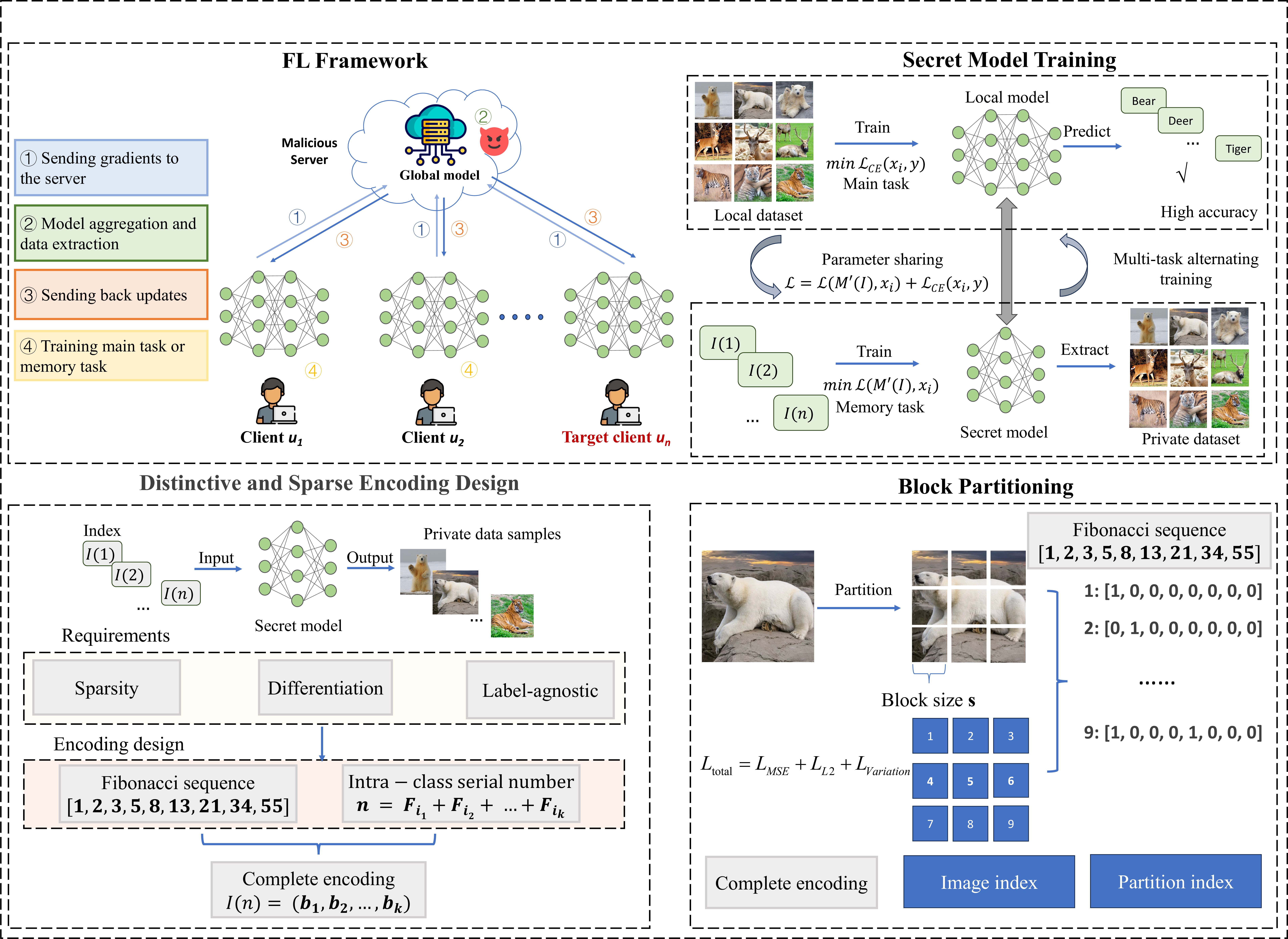

Figure 2: Overview of our method. Our method features an active server attack designed to extract the training samples of victim clients. It is composed of three key modules: secret model training, distinctive and sparse encoding design, and block partitioning. Note that \(b_i\) represents the Fibonacci coding bits of \(n\).

Structure modification-based attacks. Fowl et al. [24] introduced a method to compromise user privacy by making small but harmful changes to the model architecture, allowing the server to directly obtain a verbatim copy of user data from gradient updates, bypassing complex inverse problems. This method involves manipulating model weights to isolate gradients in linear layers. Specifically, they estimate the cumulative distribution function for a basic dataset statistic like average brightness, then add a fully connected layer with ReLU activation and \(k\) output neurons, called the imprint module, at the beginning of the model. It is shown that even when user data is aggregated in large batches, it can be effectively reconstructed. Further, Zhao et al. [29] improved [24] by introducing an additional convolutional layer before the imprint module and assigning unique malicious convolutional kernel parameters to different users. This setup allows for an identity mapping of training data from different users to distinct output positions of the convolutional layer. By setting non-zero connection weights only for the current user’s training data output, they effectively isolate the weight gradients produced in the imprint module by different users. Consequently, the size of the imprint module is determined by the batch size rather than the number of users, significantly reducing the computing cost.

Recently, Zhao et al. [22] proposed LOKI, which is specifically designed to defeat FL with FedAvg and secure aggregation. By manipulating the FL model architecture through the insertion of customized convolutional kernels for each client, LOKI enables the malicious server to separate and reconstruct private client data from aggregated updates. Each client receives a model with slightly different convolutional parameters (identity mapping sets), ensuring that the gradients reflecting their data remain distinct even in aggregated updates.

Handcrafted-modification-free attacks. Different from the above attacks, modification-free attacks do not rely on conspicuous parameter and structure modifications. Garov et al. [21] introduced SEER, an attack framework designed to stealthily extract sensitive data from federated learning systems. The key of SEER is the use of a secret decoder, which is trained in conjunction with the shared model. This secret decoder is composed of two main components: a disaggregator and a reconstructor. The disaggregator pinpoints and isolates the gradient of a specific data point according to a secret property, such as the brightest image in a batch, effectively nullifying the gradients of all non-matching samples. This isolated gradient is then passed to the reconstructor, which reconstructs the original data point. SEER is also an elusive attack that does not visibly alter the model’s structure or parameters, making it harder to detect than other methods. However, it requires training a complex decoder, which can be resource-intensive. The attack’s success also relies on choosing a secret property that uniquely identifies one sample in a batch, making this selection crucial for its effectiveness. Additionally, in a single batch, the attacker can recover only one image, which limits the attack’s scalability.

In this paper, we propose a novel data reconstruction attack which leverages malicious code injection to covertly extract sensitive data. Unlike prior methods that require conspicuous modifications to the model architecture or parameters, our method embeds a secret model via parameter sharing, ensuring minimal detection risk. It introduces a block partitioning strategy for handling high-resolution data, while also employing a Fibonacci-based distinctive indexing method to streamline data retrieval and improve attack performance. Our method also operates without relying on auxiliary devices or user sampling manipulation, making it both more practical and less detectable in real-world federated learning settings.

2.3 Data Reconstruction Defenses against FL

A range of defensive strategies have been introduced to counter data reconstruction attacks, such as differential privacy, gradient compression, and feature disruption techniques. Additionally, secure aggregation has also demonstrated effectiveness in protecting against a subset of these attacks. Differential privacy (DP) [36] is a critical approach for quantifying and curtailing the exposure of individual-level information. With local DP, clients can apply a randomized mechanism to the gradients before uploading them to the server [37], [38]. DP can provide a worst-case information-theoretic guarantee on the information an adversary can glean from the data. However, DP-based methods often require adding substantial noise, which degrades the model’s utility. Gradient compression is another method shown to mitigate information leakage from gradients. A gradient sparsity rate of over 20% proves effective in resisting [10]. However, such methods are only effective against a small subset of passive server attacks. Sun et al. [39] pointed out that the privacy leakage problem caused by gradient inversion mainly comes from feature leakage. Therefore, they perturb the network’s intermediate features by cropping, adding as little disturbance to the features as possible while making the input reconstructed from the perturbed features as different from the real input as possible, thus maintaining model performance while reducing the leakage of private information. However, this method is mainly designed for passive server attacks and has been shown to be ineffective against advanced passive attacks [16].
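To make the two gradient-side defenses above concrete, the following is a minimal sketch of clipping plus Gaussian noise (the usual building block of local DP mechanisms) and of magnitude-based gradient sparsification. The function names, the flat-list gradient representation, and the parameter values are illustrative assumptions; the sketch does not compute a privacy budget and is not the mechanism of any cited work.

```python
import math
import random

def clip_and_add_noise(grad, clip_norm, noise_std, rng):
    """Scale grad so its L2 norm is at most clip_norm, then add Gaussian noise."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [g * scale + rng.gauss(0.0, noise_std) for g in grad]

def sparsify(grad, sparsity):
    """Zero out the `sparsity` fraction of entries with the smallest magnitude."""
    n_drop = int(len(grad) * sparsity)
    order = sorted(range(len(grad)), key=lambda i: abs(grad[i]))
    dropped = set(order[:n_drop])
    return [0.0 if i in dropped else g for i, g in enumerate(grad)]

grad = [0.5, -2.0, 0.1, 1.5, -0.05]
print(sparsify(grad, 0.4))  # the two smallest-magnitude entries become 0.0
print(clip_and_add_noise(grad, clip_norm=1.0, noise_std=0.1, rng=random.Random(0)))
```

Both functions act only on the update a client uploads, which is why they target attacks that read private data out of gradients.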

The secure aggregation protocol [20] is a sophisticated multi-party computation (MPC) technique that enables a group of users to collectively compute the summation (a.k.a. aggregation) of their respective inputs. This protocol ensures that the server is only privy to the collective aggregate of all client updates, without access to the individual model updates from any specific client. Such a system is designed to preserve privacy during the federated learning process. It has been demonstrated that a range of attacks [26], [28] are rendered ineffective under secure aggregation. Recently, Garov et al. [21] presented D-SNR to effectively detect data reconstruction attacks. D-SNR measures the signal-to-noise ratio in the gradient space, identifying when a gradient from a single example dominates the aggregate gradient of a batch. It works by defining a property \(P\) to single out target examples and comparing individual gradients to the batch average. High D-SNR values indicate potential privacy leaks, allowing clients to opt out of training rounds that may compromise their data. This method provides a principled and cost-effective way to assess and safeguard against privacy breaches in federated learning setups. This method is shown to be effective for detecting attacks such as [23], [26].
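The core idea of secure aggregation described above, that the server learns only the sum of client updates, can be illustrated with pairwise masks that cancel on aggregation. This is a toy sketch under strong assumptions: updates are already quantized to integers, no client drops out, and a single seeded generator stands in for the pairwise secrets that a real protocol such as [20] would establish through key agreement. All names are hypothetical.

```python
import random

MODULUS = 2 ** 32  # assumption: updates are quantized to integers modulo 2^32

def mask_updates(updates, seed=0):
    """Add pairwise masks to each client's update; the masks cancel in the sum."""
    rng = random.Random(seed)  # stand-in for secrets agreed between client pairs
    n, dim = len(updates), len(updates[0])
    masked = [list(u) for u in updates]
    for i in range(n):
        for j in range(i + 1, n):
            mask = [rng.randrange(MODULUS) for _ in range(dim)]
            for d in range(dim):
                masked[i][d] = (masked[i][d] + mask[d]) % MODULUS
                masked[j][d] = (masked[j][d] - mask[d]) % MODULUS
    return masked

def aggregate(masked):
    """Server-side sum of the masked updates."""
    dim = len(masked[0])
    return [sum(m[d] for m in masked) % MODULUS for d in range(dim)]

updates = [[1, 2, 3], [10, 20, 30], [100, 200, 300]]
masked = mask_updates(updates)
print(aggregate(masked))  # [111, 222, 333]
```

Each masked update on its own looks random to the server, while the aggregate equals the true sum.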

In this paper, we will assess the resilience of our method against state-of-the-art defenses.

3 Detailed Construction

3.1 Threat Model

We assume there are two parties in federated learning, i.e., the server \(S\) and the users \(U\). The users train the global model on their local datasets and return the updates to the server. The server’s objective is to clandestinely reconstruct the private training data of the target client, all while adhering to the standard federated learning protocol and avoiding detection by sophisticated defenses. We assume the following capabilities for the active server attacker.

Capability to aggregate client updates. The server can aggregate the updates submitted by clients. These updates may be processed by secure aggregation. We will discuss the effectiveness of our method in both cases.

Capability to distribute training codes to clients. The server can distribute the necessary training code or model parameters to the clients so they can perform local computations and updates before sending them back to the server.

We set the following limitations for the server.

No introduction of Sybil devices. The server is prohibited from integrating manipulated devices into the FL protocol. While these devices might return arbitrary gradients that could potentially assist the attacker in inferring target data gradients, such actions are easily detectable.

No control over user sampling. The server is not allowed to manipulate the user sampling process. Additionally, it is incapable of sending distinct updates to different users.

No unusual modifications to parameters and structure. The attacker is barred from making unusual modifications to the model’s structure, such as adding an excessively large dense layer at the beginning or integrating custom convolutional kernels into the model. Additionally, the attacker is prohibited from making unusual handcrafted modifications to the parameters of the shared model to evade detection.

3.2 Overview of our method

Our goal is not only to steal private data samples from the victim participants but also to systematically retrieve them based on their indices. By injecting just a few lines of malicious training code, we aim to embed a hidden model within the victim’s local model. This secret model, \(M'\), shares parameters \(\theta\) with the victim’s local model, making it indistinguishable from a normal local model in both appearance and memory usage. Unlike in multi-task learning, the main task and the memorization task are entirely separate, ensuring the memorization remains undetectable without prior knowledge of the secret model. To systematically retrieve the stolen data, we introduce a novel Fibonacci-based encoding algorithm that assigns a unique number to each memorized sample. Additionally, to address the parameter limitations of the secret model, we implement a block partitioning technique, splitting large images into smaller blocks for processing.
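The exact index construction is not given in this overview. As a point of reference, the standard Fibonacci (Zeckendorf) representation writes a number as a sum of non-adjacent Fibonacci numbers, so the resulting bit vector is unique per number and never contains two neighboring ones. The sketch below assumes a fixed-length vector with the least significant bit first; the function name, bit order, and length are illustrative assumptions rather than the layout used by our method.

```python
def zeckendorf_bits(n, length):
    """Return bits b_0..b_{length-1} with n = sum(b_i * F_i) for F = 1, 2, 3, 5, 8, ...

    The greedy choice below yields the Zeckendorf representation, in which
    no two adjacent bits are both 1.
    """
    fibs = [1, 2]
    while len(fibs) <= length:
        fibs.append(fibs[-1] + fibs[-2])
    if not 0 <= n < fibs[length]:
        raise ValueError("n is not representable with the requested length")
    bits = [0] * length
    remaining = n
    for i in range(length - 1, -1, -1):  # largest Fibonacci number first
        if fibs[i] <= remaining:
            bits[i] = 1
            remaining -= fibs[i]
    return bits

print(zeckendorf_bits(4, 6))   # [1, 0, 1, 0, 0, 0]  (4 = 1 + 3)
print(zeckendorf_bits(11, 6))  # [0, 0, 1, 0, 1, 0]  (11 = 3 + 8)
```

With length 6, the numbers 0 through 20 each receive a distinct code.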

In conclusion, our method mainly contains three key modules, as shown in Figure 2.

Secret model training. Rather than introducing a foreign model to memorize private data, we hide a secret model within the local model through parameter sharing. The secret model shares the same memory space and consists of selected parameters from the local model. When the local model’s parameters are sent to the server, the server reconstructs the secret model and extracts the client’s training data. We consider several parameter selection methods and propose to use systematic sampling to select parameters for the secret model, ensuring even distribution across layers.

Distinctive and sparse encoding design. A distinctive index is used to systematically retrieve memorized data samples. The key is to design an encoding algorithm that assigns a unique number to each stolen data sample. We propose a novel, label-agnostic Fibonacci-based coding method that ensures clear differentiation between samples while reducing computational overhead to accelerate training.

Block partitioning. Due to the parameter limitations of memory models, extracting high-resolution images can be difficult. To overcome this, we use a block partitioning approach, where large images are split into smaller blocks. Each block is treated as a separate input for the memory task. Additionally, we adjust the encoding design to align with the block partitioning scheme.
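The block partitioning idea can be sketched as follows: split an image into non-overlapping blocks in row-major order, then reassemble it from those blocks. This sketch assumes a single-channel image stored as a list of rows whose size is an exact multiple of the block size. The function names are hypothetical, and this overview does not specify how blocks are tied to indices.

```python
def split_into_blocks(image, block_h, block_w):
    """Split a 2D image (list of rows) into non-overlapping blocks, row-major."""
    height, width = len(image), len(image[0])
    if height % block_h or width % block_w:
        raise ValueError("image size must be a multiple of the block size")
    blocks = []
    for top in range(0, height, block_h):
        for left in range(0, width, block_w):
            blocks.append([row[left:left + block_w]
                           for row in image[top:top + block_h]])
    return blocks

def merge_blocks(blocks, height, width):
    """Inverse of split_into_blocks for blocks given in row-major order."""
    block_h, block_w = len(blocks[0]), len(blocks[0][0])
    per_row = width // block_w
    image = [[None] * width for _ in range(height)]
    for idx, block in enumerate(blocks):
        top, left = (idx // per_row) * block_h, (idx % per_row) * block_w
        for r, row in enumerate(block):
            image[top + r][left:left + block_w] = row
    return image

image = [[r * 4 + c for c in range(4)] for r in range(4)]
blocks = split_into_blocks(image, 2, 2)
print(len(blocks))  # 4
print(blocks[1])    # [[2, 3], [6, 7]]
```

Each block is small enough to serve as a separate memorization target, and the server can reassemble the image once all blocks are retrieved.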

3.3 Secret Model Training

We first flatten the local model’s parameters \(w\) into a parameter vector \(p = \{p_1, p_2, p_3, \ldots, p_n\}\). We then select parameters from this vector to populate the secret model \(M'\). There are five methods to construct the secret model from the local model structure:

Random sampling. A straightforward approach is random sampling, where parameters are selected from the vector until the threshold number is met. However, this method may result in an uneven distribution of selected parameters across different layers, potentially impacting the model’s main task accuracy.

Random sampling with constraints. Another option is random sampling with constraints, which involves randomly selecting parameters from \(p\) while ensuring that no single layer is overrepresented in \(M'\). By setting limits on the number of parameters that can be taken from each layer, we achieve a more balanced parameter vector \(p'\).

Systematic sampling. A more systematic method is systematic sampling, where every \(k\)-th parameter from the original vector \(p\) is chosen. This ensures that the parameters are evenly distributed across the layers of the local model. For instance, if \(k = 2\), the parameter vector for \(M'\) would be \(p' = \{p_1, p_3, p_5, \ldots\}\).

Layer-wise sampling. Layer-wise sampling involves selecting parameters from specific layers based on their importance or contribution to the overall model performance. This method prioritizes critical layers while minimizing the impact on less important ones.

Importance-based sampling. Importance-based sampling selects parameters based on their significance to the model’s performance. By analyzing the importance distribution of model parameters, we select those that contribute most to the decrease in the loss function, \(\Delta L = L(p) - L(p')\). This ensures that \(M'\) contains the most representative and impactful parameters, capable of effectively memorizing the training data.

In our evaluation, we assess the effectiveness of these five methods, with a default preference for the systematic sampling method.

Given the predefined structure, the secret model \(M'\) is optimized as: \[\min \mathcal{L}_{dist}(M'(I), x_i)\] where \(I\) denotes the index of the data, and \(dist\) represents the distance function. The input to \(M'\) is the index \(I\), and the output is the stolen data. During training, the distance between the stolen data \(M'(I)\) and \(x_i\) is minimized. For images, the distance function \(dist\) could be either the \(L_1\) or \(L_2\) distance. In our work, we set \(\mathcal{L}_{dist}(M'(I), x_i)\) as follows: \[\mathcal{L}_{dist}(M'(I), x_i) = \mathcal{L}_{1}(M'(I), x_i) + \mathcal{L}_{2}(M'(I), x_i)\tag{1}\]
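As a small numerical illustration of Eq. (1), the sketch below evaluates the combined loss on flattened pixel lists. It assumes mean reduction over pixels and takes the \(L_2\) term to be the mean squared error; the text does not state the reduction, so both choices are assumptions, and the function names are hypothetical.

```python
def l1_loss(pred, target):
    """Mean absolute error over pixels (assumed reduction)."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)

def l2_loss(pred, target):
    """Mean squared error over pixels (assumed reduction)."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)

def memorization_loss(pred, target):
    """Sum of the L1 and L2 terms, following the form of Eq. (1)."""
    return l1_loss(pred, target) + l2_loss(pred, target)

reconstructed = [0.5, 0.0, 1.0]  # stands in for M'(I)
original = [0.0, 0.0, 1.0]       # stands in for x_i
print(memorization_loss(reconstructed, original))
```

The loss is zero exactly when the reconstruction matches the original pixel for pixel.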

Since \(M'\) and \(M\) (local model) share parameters, their gradient updates are also linked. However, because transferring parameters from \(M\) to \(M'\) is non-differentiable, joint optimization in a single step is not feasible. Therefore, we iteratively optimize \(M\) and \(M'\) to approximate simultaneous optimization. Specifically, we first fine-tune the local model \(M\) on the training data using \(\mathcal{L}_{CE}\), and then train the secret model \(M'\) to memorize these samples using \(\mathcal{L}_{dist}\).

After receiving the updates from the clients, the server first reconstructs the secret model \(M'\) through the pre-designed parameter selection algorithm and then extracts the client’s training data by inputting the index. \(M'\) is reconstructed as: \[M' = \{p_i \mid i \equiv r \;(\text{mod} \;k)\}\] where \(p_i\) represents the \(i\)-th parameter from the parameter vector \(p\), \(r\) is a fixed offset \((1 \leq r < k)\) that determines the starting position for selecting parameters, and \(k\) is the systematic sampling interval. In our method, \(r=1\) and \(k=2\). This allows the server to systematically reconstruct the secret model from the shared parameters and subsequently retrieve the memorized data using the index \(I\).
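The selection rule \(i \equiv r \;(\text{mod}\; k)\) can be sketched on a flattened parameter list as follows. Positions are 1-based to match \(p_1, p_2, \ldots\) in the text. The function names are hypothetical, and the sketch leaves out how the selected values are reshaped into the layers of \(M'\), which is not specified here.

```python
def secret_indices(num_params, k=2, r=1):
    """1-based positions i with i congruent to r modulo k."""
    return [i for i in range(1, num_params + 1) if i % k == r % k]

def extract_secret_params(flat_params, k=2, r=1):
    """Server side: read the secret model's parameters out of the shared vector."""
    return [flat_params[i - 1] for i in secret_indices(len(flat_params), k, r)]

def write_back(flat_params, secret_params, k=2, r=1):
    """Client side: store updated secret-model values into the shared vector."""
    updated = list(flat_params)
    for value, i in zip(secret_params, secret_indices(len(flat_params), k, r)):
        updated[i - 1] = value
    return updated

p = ["p1", "p2", "p3", "p4", "p5", "p6", "p7"]
print(extract_secret_params(p))  # ['p1', 'p3', 'p5', 'p7']
```

Because the rule depends only on \(k\) and \(r\), the server needs no extra information from the client to locate the secret model's parameters.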

3.4 Distinctive and Sparse Encoding Design

We aim to uniquely index each stolen data sample, assigning a distinct number to every sample. This helps the secret model \(M'\) learn and retrieve data more effectively. Let indexer \(I: \mathbb{N}_0 \to \mathbb{R}^n\) be a function that maps a natural number to a point in an \(n\)-dimensional Euclidean space, where \(I(i) \neq I(j)\) for all \(i \neq j\). The outputs of \(I(\cdot)\) are spatial indices. With an indexer, a user can systematically find every indexed point in \(\mathbb{R}^n\) by following the sequence \([I(0), I(1), \ldots]\). Previous data memorization attacks [40] leverage the intuition that neural networks can capture similarities using Euclidean distance internally. They combine one-hot encoding and Gray code to build a spatial index, calculated as follows: \[I(i, c) = \text{Gray}(i) + E(c)\] where \(I(i, c)\) is the spatial index for the \(i\)-th item in class \(c\), \(\text{Gray}(i)\) is the \(n\)-bit Gray code of \(i\), and \(E(c)\) is a vector representing the class encoding. One implementation of \(E(c)\) is to use one-hot encoding for each class multiplied by \(n\), where \(n\) is the value used in the \(n\)-bit Gray code. This ensures that the indices between classes are orthogonal and non-repetitive.

While effective in most cases, this approach struggles when multiple stolen data samples belong to the same class, resulting in insufficient distinction between codes. For example, a 10-dimensional code for the first sample in class 0 is: \[\begin{align} (0, 0, 0, 0, 0, 0, 0, 0, 0, 1) + (0, 0, 0, 0, 0, 0, 0, 0, 0, 1) \times 10 = \\ (0, 0, 0, 0, 0, 0, 0, 0, 0, 11) \end{align}\] and the second sample in class 0 is: \[\begin{align} (0, 0, 0, 0, 0, 0, 0, 0, 0, 2) + (0, 0, 0, 0, 0, 0, 0, 0, 0, 1) \times 10 = \\ (0, 0, 0, 0, 0, 0, 0, 0, 0, 12) \end{align}\] In this case, the two encodings differ in only one position, yet the secret model is required to reconstruct two completely different images. Since the secret model is a fully connected neural network (FCNN), this presents a challenge: the FCNN performs linear transformations through weight matrices and nonlinear transformations through activation functions, so similar inputs produce similar intermediate feature representations. As a result, the FCNN struggles to learn sufficient discrimination during training, leading to confusion in the output images. Therefore, we need a better indexing method that keeps codes sufficiently distinct even when the samples belong to the same class.
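The closeness of neighbouring codes follows from the defining property of Gray codes: consecutive integers map to codes that differ in exactly one bit. The sketch below checks this with the standard reflected binary Gray code `i ^ (i >> 1)`; the helper names and the 10-bit width are illustrative assumptions.

```python
def gray(i):
    """Reflected binary Gray code of a non-negative integer."""
    return i ^ (i >> 1)


def to_bits(value, width):
    """Most-significant-bit-first list of `width` bits."""
    return [(value >> (width - 1 - b)) & 1 for b in range(width)]


def hamming(a, b):
    """Number of positions in which two equal-length bit lists differ."""
    return sum(x != y for x, y in zip(a, b))


width = 10
codes = [to_bits(gray(i), width) for i in range(1, 6)]
dists = [hamming(codes[j], codes[j + 1]) for j in range(len(codes) - 1)]
print(dists)  # [1, 1, 1, 1]
```

Within one class the class offset is identical for every sample, so this single-bit difference is all that separates neighbouring samples.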

Additionally, since we use FCNN, using sparse and distinctive vectors as input can improve the model’s learning efficiency and representation capacity. Sparse vectors, where most elements are zero, allow FCNNs to compute only the parts corresponding to non-zero elements, reducing parameter updates and gradient calculation complexity, thereby accelerating the training process.

Moreover, in prior reconstruction attacks [40], adversaries relied on local data labels to infer encoding schemes, limiting attack effectiveness. This requires the server to either know or accurately estimate the local data distribution for a successful attack. To simplify the encoding process and make attacks more practical, a label-agnostic encoding scheme is also required.

We summarize our requirements for the encoding as follows:

Differentiation: Encodings for different samples must be sufficiently distinct, even when their indices are close. This ensures that the linear model can map each input to a unique output, minimizing errors in retrieval.

Sparsity: Sparse codes, where only a small fraction of bits are non-zero, reduce the computational load during training. This allows the model to focus on meaningful elements, speeding up convergence and improving training efficiency.

Label Independence: The server no longer needs to know or estimate the local data labels to understand the encoding scheme. This makes the attack more practical and robust against varying local data distributions. With knowledge of the total number of images in the local dataset, the server can effortlessly determine the encoding scheme. This simplifies the server’s inference process and reduces the computational overhead.

To achieve these three requirements, we design a novel distinctive indexing method based on Fibonacci coding [41]. Fibonacci coding is a universal code that uses only the digits 0 and 1, where each digit position corresponds to a Fibonacci number and a 1 indicates that this number is part of the sum. Because the representation follows the Fibonacci sequence, even two decimal numbers that are very close can have Fibonacci codes that differ in many positions, providing excellent distinction. By Zeckendorf’s theorem [42], every positive integer can be uniquely represented as a sum of non-consecutive Fibonacci numbers. The properties of Fibonacci coding therefore satisfy our requirements for code distinction and sparsity. To eliminate the reliance on labels, we simply assign sequential indices to images (e.g., from 1 to 500), which simplifies the encoding process and makes the attacks more practical.

The encoding process of our method can be described in the following steps. First, we define the Fibonacci sequence for encoding: \[F = (1, 2, 3, 5, 8, 13, 21, 34, 55).\] This sequence allows us to encode indices up to 142, and it can be extended further if needed to support larger datasets.

Next, for a given index \(n\), we express it as a sum of non-consecutive Fibonacci numbers: \[n = F_{i_1} + F_{i_2} + \cdots + F_{i_k}\] where \(F_{i_j}\) are Fibonacci numbers from the defined sequence. We set the corresponding bit positions in the encoding vector to 1.

For example, the index 49 can be represented as: \[49 = 34 + 13 + 2\] Thus, the Fibonacci code for 49 is: \[(0, 1, 0, 0, 0, 1, 0, 1, 0)\]

Each sample is assigned a unique binary code based on its index. This encoding is independent of any label information, ensuring that the process is purely index-based. The complete encoding function \(E\) for a given index \(n\) is defined as: \[E(n) = (b_1, b_2, \ldots, b_k)\] where \(b_i\) represents the Fibonacci coding bits of \(n\).
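The encoding steps can be sketched as a greedy decomposition. This is a minimal illustration that assumes the nine-term sequence 1, 2, 3, 5, 8, 13, 21, 34, 55 with bits ordered from the smallest to the largest term, which reproduces the code given for index 49; the name `fibonacci_code` is hypothetical.

```python
# Assumed encoding sequence; bit j of the code corresponds to FIB[j].
FIB = [1, 2, 3, 5, 8, 13, 21, 34, 55]


def fibonacci_code(n, fib=FIB):
    """Greedy decomposition of index n into distinct terms of `fib`."""
    if n < 0:
        raise ValueError("index must be non-negative")
    bits = [0] * len(fib)
    remaining = n
    # Walk from the largest term down, taking every term that still fits.
    for j in range(len(fib) - 1, -1, -1):
        if fib[j] <= remaining:
            bits[j] = 1
            remaining -= fib[j]
    if remaining != 0:
        raise ValueError("index too large for the chosen sequence")
    return tuple(bits)


print(fibonacci_code(49))  # (0, 1, 0, 0, 0, 1, 0, 1, 0)
```

Under this assumed sequence, the greedy procedure yields codes without adjacent 1s for indices up to 88; indices from 89 to 142 remain encodable, but only by using consecutive terms, so extending the sequence is the safer choice for larger datasets.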


CIFAR-10 dataset

| Baselines | Metrics\(^\dagger\) | \(N=16\) | \(N=32\) | \(N=64\) | \(N=128\) | \(N=256\) | \(N=512\) |
|---|---|---|---|---|---|---|---|
| Transpose | Leakage (\(\uparrow\)) | 8.2 \(\pm\) 4.0 | 2.4 \(\pm\) 2.0 | 0.6 \(\pm\) 0.8 | 0.2 \(\pm\) 0.4 | 0.0 \(\pm\) 0.0 | 0.0 \(\pm\) 0.0 |
| | SSIM (\(\uparrow\)) | 0.506 \(\pm\) 0.034 | 0.394 \(\pm\) 0.023 | 0.306 \(\pm\) 0.037 | 0.250 \(\pm\) 0.035 | 0.197 \(\pm\) 0.019 | 0.151 \(\pm\) 0.010 |
| | PSNR (\(\uparrow\)) | 15.994 \(\pm\) 0.441 | 14.882 \(\pm\) 0.385 | 14.378 \(\pm\) 0.387 | 13.983 \(\pm\) 0.559 | 13.504 \(\pm\) 0.319 | 13.103 \(\pm\) 0.330 |
| | LPIPS (\(\downarrow\)) | 0.457 \(\pm\) 0.010 | 0.490 \(\pm\) 0.021 | 0.518 \(\pm\) 0.013 | 0.547 \(\pm\) 0.010 | 0.548 \(\pm\) 0.007 | 0.557 \(\pm\) 0.009 |
| RtF | Leakage (\(\uparrow\)) | 11.5 \(\pm\) 0.3 | 11.2 \(\pm\) 1.1 | 4.2 \(\pm\) 0.6 | 1.6 \(\pm\) 0.2 | 0.7 \(\pm\) 0.3 | 1.4 \(\pm\) 0.3 |
| | SSIM (\(\uparrow\)) | 0.670 \(\pm\) 0.018 | 0.407 \(\pm\) 0.023 | 0.198 \(\pm\) 0.013 | 0.070 \(\pm\) 0.007 | 0.195 \(\pm\) 0.049 | 0.280 \(\pm\) 0.036 |
| | PSNR (\(\uparrow\)) | 19.931 \(\pm\) 0.417 | 13.115 \(\pm\) 0.456 | 8.848 \(\pm\) 0.293 | 6.358 \(\pm\) 0.116 | 7.885 \(\pm\) 0.939 | 8.601 \(\pm\) 0.688 |
| | LPIPS (\(\downarrow\)) | 0.205 \(\pm\) 0.015 | 0.390 \(\pm\) 0.018 | 0.521 \(\pm\) 0.007 | 0.577 \(\pm\) 0.007 | 0.504 \(\pm\) 0.042 | 0.485 \(\pm\) 0.023 |
| LOKI | Leakage (\(\uparrow\)) | 13.8 \(\pm\) 0.4 | 27.5 \(\pm\) 0.5 | 55.6 \(\pm\) 0.9 | 110.0 \(\pm\) 0.7 | 219.080 \(\pm\) 0.9 | 435.6 \(\pm\) 4.6 |
| | SSIM (\(\uparrow\)) | 0.870 \(\pm\) 0.019 | 0.849 \(\pm\) 0.015 | 0.800 \(\pm\) 0.015 | 0.739 \(\pm\) 0.006 | 0.696 \(\pm\) 0.006 | 0.700 \(\pm\) 0.015 |
| | PSNR (\(\uparrow\)) | 32.095 \(\pm\) 1.32 | 31.400 \(\pm\) 0.960 | 29.471 \(\pm\) 0.890 | 27.269 \(\pm\) 0.127 | 25.903 \(\pm\) 0.198 | 26.173 \(\pm\) 0.725 |
| | LPIPS (\(\downarrow\)) | 0.071 \(\pm\) 0.011 | 0.086 \(\pm\) 0.010 | 0.114 \(\pm\) 0.009 | 0.151 \(\pm\) 0.004 | 0.177 \(\pm\) 0.004 | 0.173 \(\pm\) 0.008 |
| Ours | Leakage (\(\uparrow\)) | 16.0 \(\pm\) 0.0 | 32.0 \(\pm\) 0.0 | 64.0 \(\pm\) 0.0 | 128.0 \(\pm\) 0.0 | 256.0 \(\pm\) 0.0 | 512.0 \(\pm\) 0.0 |
| | SSIM (\(\uparrow\)) | 1.000 \(\pm\) 0.000 | 1.000 \(\pm\) 0.000 | 1.000 \(\pm\) 0.000 | 0.999 \(\pm\) 0.001 | 0.934 \(\pm\) 0.015 | 0.785 \(\pm\) 0.021 |
| | PSNR (\(\uparrow\)) | 68.734 \(\pm\) 0.465 | 67.565 \(\pm\) 0.446 | 64.764 \(\pm\) 0.711 | 59.978 \(\pm\) 1.394 | 30.394 \(\pm\) 0.778 | 23.122 \(\pm\) 0.130 |
| | LPIPS (\(\downarrow\)) | 0.000 \(\pm\) 0.000 | 0.000 \(\pm\) 0.000 | 0.000 \(\pm\) 0.000 | 0.001 \(\pm\) 0.001 | 0.093 \(\pm\) 0.019 | 0.295 \(\pm\) 0.021 |

CIFAR-100 dataset

| Baselines | Metrics\(^\dagger\) | \(N=16\) | \(N=32\) | \(N=64\) | \(N=128\) | \(N=256\) | \(N=512\) |
|---|---|---|---|---|---|---|---|
| Transpose | Leakage (\(\uparrow\)) | 3.8 \(\pm\) 2.6 | 3.8 \(\pm\) 3.4 | 3.4 \(\pm\) 2.1 | 5.4 \(\pm\) 2.4 | 9.4 \(\pm\) 2.3 | 7.2 \(\pm\) 1.9 |
| | SSIM (\(\uparrow\)) | 0.388 \(\pm\) 0.053 | 0.301 \(\pm\) 0.047 | 0.248 \(\pm\) 0.018 | 0.224 \(\pm\) 0.014 | 0.215 \(\pm\) 0.016 | 0.190 \(\pm\) 0.009 |
| | PSNR (\(\uparrow\)) | 15.582 \(\pm\) 0.921 | 14.463 \(\pm\) 0.494 | 13.896 \(\pm\) 0.383 | 13.725 \(\pm\) 0.186 | 13.590 \(\pm\) 0.223 | 13.348 \(\pm\) 0.070 |
| | LPIPS (\(\downarrow\)) | 0.475 \(\pm\) 0.018 | 0.499 \(\pm\) 0.017 | 0.528 \(\pm\) 0.007 | 0.533 \(\pm\) 0.004 | 0.549 \(\pm\) 0.009 | 0.559 \(\pm\) 0.012 |
| RtF | Leakage (\(\uparrow\)) | 11.3 \(\pm\) 0.3 | 11.6 \(\pm\) 1.3 | 5.0 \(\pm\) 0.3 | 2.7 \(\pm\) 0.4 | 0.9 \(\pm\) 0.2 | 1.6 \(\pm\) 0.2 |
| | SSIM (\(\uparrow\)) | 0.655 \(\pm\) 0.028 | 0.421 \(\pm\) 0.027 | 0.209 \(\pm\) 0.010 | 0.106 \(\pm\) 0.012 | 0.229 \(\pm\) 0.026 | 0.297 \(\pm\) 0.034 |
| | PSNR (\(\uparrow\)) | 19.656 \(\pm\) 0.779 | 13.784 \(\pm\) 0.588 | 8.860 \(\pm\) 0.261 | 6.820 \(\pm\) 0.245 | 7.446 \(\pm\) 0.820 | 9.075 \(\pm\) 1.139 |
| | LPIPS (\(\downarrow\)) | 0.213 \(\pm\) 0.016 | 0.371 \(\pm\) 0.018 | 0.521 \(\pm\) 0.006 | 0.570 \(\pm\) 0.006 | 0.522 \(\pm\) 0.011 | 0.475 \(\pm\) 0.007 |
| LOKI | Leakage (\(\uparrow\)) | 13.6 \(\pm\) 1.0 | 26.6 \(\pm\) 1.4 | 54.8 \(\pm\) 2.4 | 113.6 \(\pm\) 3.4 | 221.8 \(\pm\) 5.9 | 432.4 \(\pm\) 8.6 |
| | SSIM (\(\uparrow\)) | 0.856 \(\pm\) 0.074 | 0.854 \(\pm\) 0.046 | 0.794 \(\pm\) 0.037 | 0.740 \(\pm\) 0.042 | 0.701 \(\pm\) 0.024 | 0.705 \(\pm\) 0.024 |
| | PSNR (\(\uparrow\)) | 33.282 \(\pm\) 6.744 | 31.572 \(\pm\) 3.341 | 27.907 \(\pm\) 2.039 | 26.638 \(\pm\) 2.045 | 26.026 \(\pm\) 1.137 | 5.810 \(\pm\) 0.888 |
| | LPIPS (\(\downarrow\)) | 0.073 \(\pm\) 0.043 | 0.083 \(\pm\) 0.024 | 0.125 \(\pm\) 0.021 | 0.155 \(\pm\) 0.029 | 0.182 \(\pm\) 0.014 | 0.180 \(\pm\) 0.016 |
| Ours | Leakage (\(\uparrow\)) | 16.0 \(\pm\) 0.0 | 32.0 \(\pm\) 0.0 | 64.0 \(\pm\) 0.0 | 128.0 \(\pm\) 0.0 | 256.0 \(\pm\) 0.0 | 505.8 \(\pm\) 5.19 |
| | SSIM (\(\uparrow\)) | 1.000 \(\pm\) 0.000 | 1.000 \(\pm\) 0.000 | 1.000 \(\pm\) 0.000 | 0.999 \(\pm\) 0.001 | 0.920 \(\pm\) 0.014 | 0.702 \(\pm\) 0.034 |
| | PSNR (\(\uparrow\)) | 68.520 \(\pm\) 0.887 | 65.489 \(\pm\) 0.431 | 62.697 \(\pm\) 0.230 | 56.803 \(\pm\) 0.815 | 29.916 \(\pm\) 0.619 | 22.281 \(\pm\) 0.436 |
| | LPIPS (\(\downarrow\)) | 0.000 \(\pm\) 0.000 | 0.000 \(\pm\) 0.000 | 0.000 \(\pm\) 0.000 | 0.001 \(\pm\) 0.001 | 0.102 \(\pm\) 0.016 | 0.340 \(\pm\) 0.021 |

\(^\dagger\) (\(\uparrow\)) signifies that a higher value is preferable, while (\(\downarrow\)) indicates that a lower value is more desirable.

3.5 Block Partitioning↩︎

Given the parameter limitations of memory models, extracting high-resolution images can be challenging. To address this, we adopt a block partitioning scheme, in which large images are divided into smaller blocks. Each block is then used as an individual input for the memory task.

To ensure smoothness between adjacent blocks, we introduce a variation loss into the loss function. This variation loss helps maintain continuity and coherence across blocks. Specifically, let \(\mathbf{I}\) be the input image with dimensions \(B \times C \times H \times W\), where \(B\) is the batch size, \(C\) is the number of channels, \(H\) is the height, and \(W\) is the width. Let \(s\) be a hyperparameter representing the block size. The variation loss \(L_{\text{var}}\) is given by: \[\begin{gather} L_{\text{var}} = \frac{\lambda}{B \cdot C \cdot H \cdot W} \Bigg( \sum_{i,j} \left( \mathbf{I}_{i,j,s-1:-1:s,:} - \mathbf{I}_{i,j,s::s,:} \right)^2 \\ + \sum_{i,j} \left( \mathbf{I}_{i,j,:,s-1:-1:s} - \mathbf{I}_{i,j,:,s::s} \right)^2 \Bigg), \end{gather}\] where \(\lambda\) is a weighting factor that controls the importance of the variation loss, and the subscripts use slice notation (start:stop:step), so that the last row or column of each block is compared with the first row or column of the next block. With the addition of the variation loss, the loss function for the memory task can be further extended from Eq. 1 as follows: \[\mathcal{L}_{dist}(M'(I), x_i) = \mathcal{L}_{1}(M'(I), x_i) + \mathcal{L}_{2}(M'(I), x_i) + L_{\text{var}}\] In this way, we not only relax the memory constraint but also ensure that the reconstructed image maintains a high degree of quality and smoothness across block boundaries.
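The boundary term can be made concrete with a small sketch. It handles a single image with one channel (\(B = C = 1\)) stored as a nested list, which is a simplification assumed here for readability; the function name `variation_loss` is hypothetical.

```python
def variation_loss(image, s, lam=1.0):
    """Squared differences across block boundaries of an H x W image.

    Assumption: one sample and one channel, so the normaliser is H * W.
    """
    h, w = len(image), len(image[0])
    total = 0.0
    # Horizontal boundaries: last row of a block vs. first row of the next.
    for top in range(s - 1, h - 1, s):
        for x in range(w):
            total += (image[top][x] - image[top + 1][x]) ** 2
    # Vertical boundaries: last column of a block vs. first column of the next.
    for left in range(s - 1, w - 1, s):
        for y in range(h):
            total += (image[y][left] - image[y][left + 1]) ** 2
    return lam * total / (h * w)


# A 4 x 4 image split into 2 x 2 blocks, with a jump at the vertical boundary.
image = [[0, 0, 1, 1] for _ in range(4)]
print(variation_loss(image, s=2))  # 0.25
```

Only pixels that touch a block boundary contribute, so the term does not penalise detail inside a block.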

However, since the previous spatial index was limited to indexing entire images, we need to extend it to accommodate block-wise indexing within each image. To achieve this, we append additional digits to the original sample code to represent the block number for each sample, while continuing to use the Fibonacci coding method. For high-resolution images, the updated encoding function \(E_h\) is defined as: \[E_h(n, c, p) = (b_1, b_2, \ldots, b_k, c, p_1, \ldots, p_m),\] where \(p_1, \ldots, p_m\) are the bits of the Fibonacci encoding of the block number \(p\). After extracting each block, the blocks can be concatenated to reconstruct the entire image. This extension allows us to handle large-scale images efficiently, ensuring precise indexing and smooth reconstruction.
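The partition-and-concatenate step can be sketched as follows. The row-major block numbering and the helper names `split_blocks` and `merge_blocks` are assumptions made for illustration; the text above does not fix a particular block order.

```python
def split_blocks(image, s):
    """Split an H x W image (nested lists) into s x s blocks, row-major."""
    h, w = len(image), len(image[0])
    blocks = []
    for top in range(0, h, s):
        for left in range(0, w, s):
            blocks.append([row[left:left + s] for row in image[top:top + s]])
    return blocks


def merge_blocks(blocks, h, w, s):
    """Inverse of split_blocks: place block number p back at its position."""
    image = [[None] * w for _ in range(h)]
    per_row = (w + s - 1) // s  # number of blocks per image row
    for p, block in enumerate(blocks):
        top, left = (p // per_row) * s, (p % per_row) * s
        for dy, row in enumerate(block):
            for dx, value in enumerate(row):
                image[top + dy][left + dx] = value
    return image


image = [[r * 4 + c for c in range(4)] for r in range(4)]
blocks = split_blocks(image, 2)
print(len(blocks), blocks[1])  # 4 [[2, 3], [6, 7]]
print(merge_blocks(blocks, 4, 4, 2) == image)  # True
```

The block number \(p\) used in the index is simply the position of a block in this list, so the same order must be used when the blocks are put back together.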

4 Experiment↩︎

4.1 Experiment Setup↩︎

Datasets and Models. We conduct experiments on vision tasks covering four datasets: CIFAR-10 [43], CIFAR-100 [43], MINI-ImageNet [44], and CelebA [45]. We train a ResNet-18 model on each of these datasets. More details are shown in the appendix.

Baseline Data Reconstruction Attacks. We compare our method with 5 state-of-the-art data reconstruction attacks, including Transpose Attack [40], Robbing the Fed (RtF) [24], LOKI [22], SEER [21], and Inverting [12]. We run these baselines according to their open-sourced codes.

Evaluation Metrics. We evaluate the effectiveness of our method through four metrics: leakage (or leakage rate), SSIM, PSNR, and LPIPS.

We evaluate both FedAvg and FedSGD frameworks. In FedAvg, each client trains on its entire local dataset for 10 epochs before sending the updated model parameters to the server. In FedSGD, each client trains on a single batch and sends the gradient updates directly to the server. We set the number of participating clients to 20, using a Dirichlet distribution with parameter 0.6 to simulate unbalanced data across clients.
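One common way to realise such a non-IID split, assumed here for illustration since the exact procedure is left to the appendix, is to draw per-class client proportions from a Dirichlet distribution and divide each class accordingly. The sketch uses only the standard library; `partition_by_dirichlet` is a hypothetical helper name.

```python
import random


def dirichlet(alpha, size, rng):
    """Sample a symmetric Dirichlet vector via normalised Gamma draws."""
    draws = [rng.gammavariate(alpha, 1.0) for _ in range(size)]
    total = sum(draws)
    return [d / total for d in draws]


def partition_by_dirichlet(labels, num_clients=20, alpha=0.6, seed=0):
    """Assign sample indices to clients, class by class (assumed scheme)."""
    rng = random.Random(seed)
    clients = [[] for _ in range(num_clients)]
    by_class = {}
    for idx, y in enumerate(labels):
        by_class.setdefault(y, []).append(idx)
    for y in sorted(by_class):
        idxs = by_class[y]
        rng.shuffle(idxs)
        props = dirichlet(alpha, num_clients, rng)
        start, cum = 0, 0.0
        for c, p in enumerate(props):
            cum += p
            # The last client takes whatever remains of this class.
            end = len(idxs) if c == num_clients - 1 else round(cum * len(idxs))
            clients[c].extend(idxs[start:end])
            start = end
    return clients


labels = [i % 10 for i in range(1000)]  # toy labels for 10 classes
clients = partition_by_dirichlet(labels)
print(len(clients), sum(len(c) for c in clients))  # 20 1000
```

Smaller values of the concentration parameter give more skewed class mixes per client, while larger values approach a uniform split.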

More details of the datasets, models, and evaluation metrics are shown in the appendix. All experiments were conducted on an Ubuntu 20.04 system with a 20-core Intel CPU. The models were trained on a single NVIDIA RTX 4090 GPU.

4.2 Comparison with Baselines↩︎

We compare our method with 5 state-of-the-art data reconstruction attacks. We mainly target the FedAvg scenario, where each client trains on its entire local dataset in each round. We show that our method can also be applied to the FedSGD scenario. Note that for schemes based on leakage through linear layers, such as LOKI and RtF, their target is to steal all images in the training set, so in experiments, \(N\) directly represents the size of the local dataset of each user. In contrast, for Transpose Attack and our method, \(N\) represents the specific target number of images to be stolen, which is less than the total size of the local dataset, presenting a higher level of attack difficulty. Since Fishing, SEER, and Inverting rely on gradient disaggregation and are designed for the FedSGD scenario, they are excluded from the FedAvg context. The comparison results are shown in Table 2, Table 9 (appendix), and Table 10 (appendix).

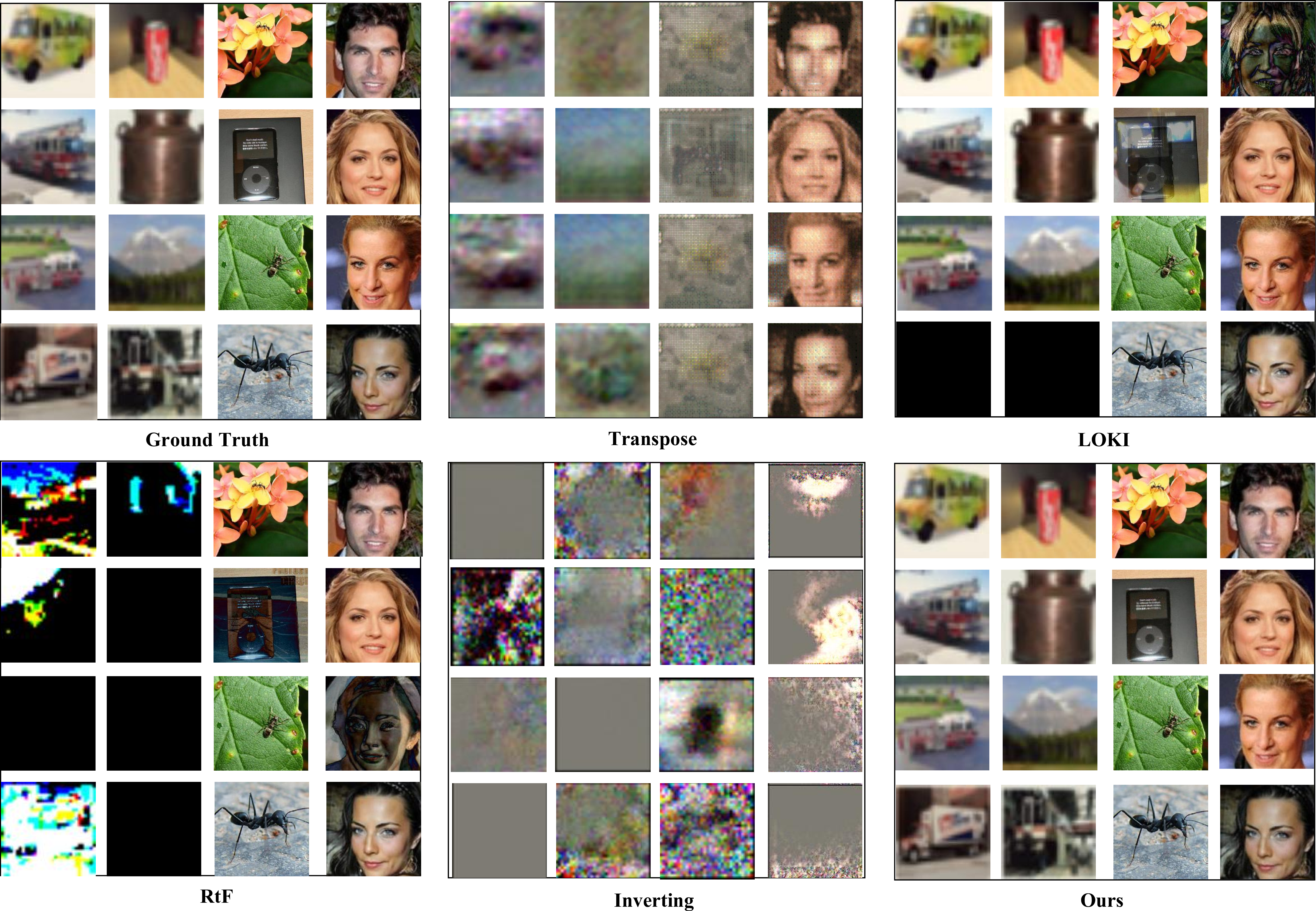

Figure 3: Comparison of our method with baselines in terms of the reconstruction sample quality.

Our method consistently outperforms the baselines in terms of the number of leaked samples across all datasets in the FedAvg scenario. For instance, with \(N=16\), our method achieves a leakage of 16 on CIFAR-10 and CIFAR-100 datasets, while the best-performing baseline, LOKI, reaches only 13.8 and 13.6, respectively. Additionally, our method surpasses baselines in image quality metrics, showing superior fidelity with significantly higher SSIM and PSNR scores and lower LPIPS values across most settings. Specifically, our method achieves an SSIM close to 1 when extracting 128 samples, with a PSNR of about 60 and LPIPS of 0.001 on CIFAR-10, whereas LOKI reaches only 0.739 SSIM, 27.269 PSNR, and 0.151 LPIPS, followed by Transpose at 0.250 (SSIM), 13.983 (PSNR), and 0.547 (LPIPS), and RtF with 0.07 (SSIM), 6.358 (PSNR), and 0.577 (LPIPS).

In the FedSGD scenario, our method also achieves a higher leakage rate and superior image quality compared to baselines, especially for high-resolution datasets. For example, on the CelebA dataset, our method can extract 64 samples when \(N=64\), whereas the baselines SEER and Inverting prove ineffective under the same conditions. This robust performance underscores our method’s effectiveness in maintaining high-quality data reconstruction while delivering greater data recovery precision than existing methods.

We also visualize the extracted samples from both the baselines and our method across all datasets, as shown in Figure 3. We set \(N = 512\) for CIFAR-10 and CIFAR-100 datasets, and set \(N = 16\) for high-resolution datasets. For Inverting, the grayscale result likely stems from optimization-based gradient averaging, which leads to detail loss. For RtF and LOKI, images that are accurately restored exhibit high quality. However, other images suffer from kernel collision issues, resulting in low quality and diminished effectiveness. For Transpose, due to the limited number of training epochs, the Transpose attack fails to converge, resulting in poor performance. Since SEER does not allow direct specification of target samples, we did not include it in the visualization comparison. The results reveal that the images extracted by our method are noticeably clearer and more vivid compared to those from the baselines, highlighting our method’s superior extraction quality.

4.3 Ablation Study↩︎

Impact of parameter selection algorithms. There are five methods for constructing the secret model from the local model structure: Random, Random with Constraints, Systematic, Layer-wise, and Importance-based selection. Their performance comparisons are presented in Table 8 (appendix). Among these, the systematic method performs consistently well across all datasets. Although it may not be the best on every dataset, it delivers the highest overall results, especially on MINI-ImageNet, where it achieves over 90% leakage, while the other methods reach a maximum of 40%. Given its simplicity, ease of implementation, and time efficiency, we select this method as the default. Note that attackers can choose the optimal approach based on each dataset’s specifics.

Impact of block partitioning. For high-resolution datasets, we use a block partitioning approach, where large images are divided into smaller blocks. To assess the impact of different block sizes on extraction effectiveness and time, we set the leakage sample size to 40 and varied the block size. The results are presented in Table 7 (appendix). It can be observed that as the block size decreases, the extraction time increases. This is because smaller block sizes generate a larger number of segmented images, leading to longer training times for the secret model and more complex optimization. Regarding extraction effectiveness, it tends to improve with increasing block size up to a certain point, after which it starts to decline. In our experiments, block sizes of 16 or 28 strike a good balance between extraction effectiveness and time efficiency.

Impact of different encoding methods. We investigate the impact of different encoding methods in index design, considering three types: Binary, Gray, and Fibonacci encoding. The results are presented in Table 6 (appendix). For a fair comparison, all three methods incorporate intra-class serial numbers and append the class number to form the complete sample code. The results show that, in most cases, Fibonacci encoding achieves the best extraction performance. The potential reason is that Fibonacci encoding generates codes with high sparsity, meaning that during the encoding process, only a few bits are set to 1 while the rest are 0. In contrast, both binary encoding and Gray code exhibit weaker sparsity. Additionally, Fibonacci encoding avoids the occurrence of adjacent 1s, which enhances the distinction between codes and reduces the likelihood of interference.

| Dataset | Metrics\(^\dagger\) | With label | Without label |
|---|---|---|---|
| CIFAR-10 | Leakage Rate (\(\uparrow\)) | 90.2% | 99.4% |
| | SSIM (\(\uparrow\)) | 0.618 | 0.703 |
| | PSNR (\(\uparrow\)) | 20.566 | 21.867 |
| | LPIPS (\(\downarrow\)) | 0.409 | 0.358 |
| CIFAR-100 | Leakage Rate (\(\uparrow\)) | 1.4% | 98.6% |
| | SSIM (\(\uparrow\)) | 0.199 | 0.663 |
| | PSNR (\(\uparrow\)) | 14.244 | 21.514 |
| | LPIPS (\(\downarrow\)) | 0.604 | 0.371 |
| MINI-ImageNet | Leakage Rate (\(\uparrow\)) | 31.3% | 89.8% |
| | SSIM (\(\uparrow\)) | 0.424 | 0.635 |
| | PSNR (\(\uparrow\)) | 18.617 | 23.850 |
| | LPIPS (\(\downarrow\)) | 0.569 | 0.412 |
| CelebA-Subset | Leakage Rate (\(\uparrow\)) | 30.5% | 100% |
| | SSIM (\(\uparrow\)) | 0.472 | 0.794 |
| | PSNR (\(\uparrow\)) | 15.206 | 28.290 |
| | LPIPS (\(\downarrow\)) | 0.541 | 0.292 |

Impact of label information in encoding. In our method, we introduce a novel label-agnostic image encoding scheme that separates the encoding process from local label information. We investigate the influence of label information on our method, with the results presented in Table 3. Notably, our method without label information achieves a leakage rate of 89.8% and high image quality on MINI-ImageNet, whereas our method with label information achieves only a 31.3% leakage rate with lower image quality. The results indicate that the reliance on label information limits performance, as the server must either possess or accurately estimate the local data distribution for an effective attack.

4.4 Time Cost↩︎

To evaluate the efficiency of our method, we calculate its time cost, which consists of two components: training cost and memory cost. The training cost refers to the time required for the main task training of the local model, while the memory cost represents the time taken for training the secret model. The results are presented in Tables 4 and 5.

These two tables show the time costs for training and memory tasks separately, reflecting their independence and respective dependencies. Training time is influenced by the local dataset size \(M\). With fixed training epochs and batch size, training time increases almost linearly with dataset size. For example, in the CIFAR-10 dataset, with \(M = 2000\), training time is 16.4s, doubling to 32.8s when \(M\) is 4000.

In contrast, memory task time depends on the target theft quantity \(N\). Since we set the batch size equal to \(N\) for memory tasks, the time cost does not follow a strict linear relationship. For instance, with \(N = 512\) in CIFAR-10, memory time is 19.2s.

In a practical theft scenario, specific configurations can make the task less noticeable. For instance, in CIFAR-10 with a local dataset size of 4000 and a theft of 512 images, training and memory times are 32.8s and 19.2s, respectively. The additional time for the memory task is therefore about 59% of the original training time (19.2s versus 32.8s), making the theft less detectable.


| Dataset | \(M=2000\) (s) | \(M=4000\) (s) | \(M=6000\) (s) |
|---|---|---|---|
| CIFAR-10 | 16.4 | 32.8 | 48.3 |
| CIFAR-100 | 16.2 | 32.1 | 47.6 |
| ImageNet | 21.5 | 43.7 | 63.2 |
| CelebA | 20.2 | 41.8 | 60.9 |


| Dataset | \(N=128\) (s) | \(N=256\) (s) | \(N=512\) (s) |
|---|---|---|---|
| CIFAR-10 | 12.4 | 14.8 | 19.2 |
| CIFAR-100 | 11.6 | 15.7 | 20.8 |

| Dataset | \(N=32\) (s) | \(N=48\) (s) | \(N=64\) (s) |
|---|---|---|---|
| ImageNet | 22.1 | 24.3 | 26.8 |
| CelebA | 18.2 | 19.7 | 21.2 |

Robustness of our method to various advanced defenses.

| Dataset | Metrics\(^\dagger\) | Gradient Pruning (\(\tau\) = 1e-6) | Gradient Pruning (\(\tau\) = 1e-5) | Gradient Clipping (max_norm = 5) | Gradient Clipping (max_norm = 1) | Noise Perturbation (\(\epsilon\) = 1e-3) | Noise Perturbation (\(\epsilon\) = 4e-3) | D-SNR Detection |
|---|---|---|---|---|---|---|---|---|
| CIFAR-10 | Leakage (\(\uparrow\)) | 511.0 | 448.0 | 511.0 | 511.0 | 511.8 | 431.4 | 512.0 |
| | SSIM (\(\uparrow\)) | 0.746 | 0.590 | 0.748 | 0.748 | 0.760 | 0.593 | 0.788 |
| | PSNR (\(\uparrow\)) | 22.637 | 20.186 | 22.692 | 22.675 | 22.537 | 19.043 | 23.687 |
| | LPIPS (\(\downarrow\)) | 0.325 | 0.428 | 0.324 | 0.323 | 0.317 | 0.427 | 0.264 |
| CIFAR-100 | Leakage (\(\uparrow\)) | 510.0 | 387.0 | 511.0 | 511.0 | 505.8 | 502.8 | 506.4 |
| | SSIM (\(\uparrow\)) | 0.699 | 0.568 | 0.712 | 0.724 | 0.689 | 0.685 | 0.712 |
| | PSNR (\(\uparrow\)) | 22.080 | 20.248 | 22.304 | 22.523 | 21.966 | 21.899 | 22.486 |
| | LPIPS (\(\downarrow\)) | 0.345 | 0.427 | 0.335 | 0.325 | 0.349 | 0.352 | 0.183 |
| MINI-ImageNet | Leakage (\(\uparrow\)) | 32.0 | 32.0 | 32.0 | 31.0 | 32.0 | 11.4 | 32.0 |
| | SSIM (\(\uparrow\)) | 0.829 | 0.823 | 0.830 | 0.779 | 0.735 | 0.485 | 0.805 |
| | PSNR (\(\uparrow\)) | 28.361 | 28.157 | 28.373 | 26.845 | 26.039 | 21.356 | 27.311 |
| | LPIPS (\(\downarrow\)) | 0.222 | 0.230 | 0.222 | 0.281 | 0.331 | 0.520 | 0.248 |
| CelebA Subset | Leakage (\(\uparrow\)) | 32.0 | 32.0 | 32.0 | 32.0 | 32.0 | 13.8 | 32.0 |
| | SSIM (\(\uparrow\)) | 0.907 | 0.903 | 0.914 | 0.919 | 0.849 | 0.489 | 0.911 |
| | PSNR (\(\uparrow\)) | 32.659 | 32.486 | 33.085 | 33.421 | 30.744 | 22.423 | 33.041 |
| | LPIPS (\(\downarrow\)) | 0.127 | 0.131 | 0.117 | 0.110 | 0.231 | 0.557 | 0.123 |

5 Robustness to State-of-the-art Defenses↩︎

In this section, we investigate the effectiveness of our method under state-of-the-art data reconstruction defenses, including D-SNR [21], noise perturbation, gradient pruning, and gradient clipping. Additionally, we monitor loss changes as a defense mechanism to assess our method’s resilience against detection.

5.1 D-SNR Detection↩︎

Disaggregation signal-to-noise ratio (D-SNR) is a novel metric for detecting vulnerabilities in federated learning, specifically against disaggregation attacks. D-SNR signals a potential attack when the gradient of a single example outweighs the batch gradient, suggesting an adversary has isolated an individual example from the batch. This metric is essential for evaluating the risk of data leakage by analyzing gradients, bypassing the need for costly optimization-based attack simulations. By ensuring that any layer with potential disaggregation has a high D-SNR, clients can detect and skip vulnerable training rounds.

When applying D-SNR, we observe that our method can still succeed by avoiding dominant gradients within any single training round. The success of our method lies in its multi-round, incremental data embedding approach, which encodes small portions of sensitive data across multiple rounds. This strategy distributes the data over several rounds and merges gradients with shared parameters, keeping gradient magnitudes low enough to evade D-SNR detection. Moreover, our method’s sparse encoding minimizes gradient impact per round, effectively bypassing the D-SNR threshold.

5.2 Noise Perturbation↩︎

Defenders can enhance a model’s robustness by adding noise to the gradients, which involves applying small, continuous perturbations. A common method is to introduce Gaussian noise after each backpropagation step, referred to as the diffusion term [46]. This technique generates random variations in gradient values, thereby mitigating the risk of data leakage during updates.

To assess the impact of noise-based defenses, we applied Gaussian noise directly to the gradients in our experiments, with noise levels set at \(1 \times 10^{-3}\) and \(4 \times 10^{-3}\). Despite these relatively high noise levels, the results indicate that our method remains effective. When the noise is set to \(1 \times 10^{-3}\), the effectiveness of data theft is nearly unaffected. With an increased noise level of \(4 \times 10^{-3}\), our method still achieves a leakage of 431.4 on CIFAR-10 with a target theft of 512 images, and for CelebA, with a target of 32 images, our method successfully steals 13.8 images. Our method succeeds due to its low-magnitude, multi-round embedding strategy, which enables it to accumulate data across multiple rounds while maintaining effectiveness even with added noise.
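As a minimal sketch of this defense, the snippet below adds zero-mean Gaussian noise to a flattened gradient. Interpreting the noise level as the standard deviation is an assumption, and `add_gaussian_noise` is a hypothetical helper rather than code from the evaluated defenses.

```python
import random


def add_gaussian_noise(grad, sigma, rng=None):
    """Return a copy of `grad` with N(0, sigma^2) noise added per entry.

    Assumption: the reported noise level is the standard deviation sigma.
    """
    rng = rng or random.Random()
    return [g + rng.gauss(0.0, sigma) for g in grad]


grad = [0.5, -0.2, 0.0, 0.03]
noisy = add_gaussian_noise(grad, sigma=1e-3, rng=random.Random(0))
# Each entry moves by a small random amount on the order of sigma.
print(max(abs(n - g) for n, g in zip(noisy, grad)) < 1e-2)  # True
```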

5.3 Gradient Pruning↩︎

Gradient pruning [47] reduces computational complexity in neural networks by selectively pruning channels based on the importance of their gradients. It evaluates the significance of each channel using the mean gradient of the feature maps, pruning those with lower mean gradients that have less impact on the loss function. It can decrease the network’s size and floating-point operations (FLOPs). In the experiments, we selected two threshold values for gradient pruning, \(\tau = 1 \times 10^{-6}\) and \(\tau = 1 \times 10^{-5}\), to control the level of pruning applied. The threshold \(\tau\) determines the minimum mean gradient magnitude required for a channel to be retained. Lower values of \(\tau\) (e.g., \(1 \times 10^{-6}\)) result in fewer channels being pruned, preserving more information, while higher values (e.g., \(1 \times 10^{-5}\)) increase pruning aggressiveness, removing more channels with lower gradient contributions. Despite the use of gradient pruning, our method remains effective due to its multi-round, low-intensity embedding strategy. Our method does not rely on high-gradient channels in any single round but instead distributes data across multiple rounds and channels, with each round embedding minimal information. By leveraging sparse encoding and parameter sharing, our method disperses data across numerous channels, allowing it to circumvent pruning. Even if some channels are pruned, the cumulative effect of the attack remains intact, ensuring successful data leakage.
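The thresholding rule can be sketched as follows. Representing each channel as a flat list of gradient values and zeroing pruned channels are simplifying assumptions for illustration; `prune_channels` is a hypothetical name.

```python
def prune_channels(channel_grads, tau):
    """Zero every channel whose mean gradient magnitude is below tau.

    Assumption: `channel_grads` is a list of channels, each a flat list
    of gradient values.
    """
    pruned = []
    for channel in channel_grads:
        mean_mag = sum(abs(g) for g in channel) / len(channel)
        if mean_mag >= tau:
            pruned.append(list(channel))
        else:
            pruned.append([0.0] * len(channel))
    return pruned


grads = [[1e-3, -2e-3], [4e-6, -6e-6], [1e-7, -3e-7]]
print(prune_channels(grads, tau=1e-6))
# [[0.001, -0.002], [4e-06, -6e-06], [0.0, 0.0]]
print(prune_channels(grads, tau=1e-5))
# [[0.001, -0.002], [0.0, 0.0], [0.0, 0.0]]
```

Raising \(\tau\) from \(10^{-6}\) to \(10^{-5}\) removes the middle channel as well, which mirrors the more aggressive pruning setting used in the experiments.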

5.4 Gradient Clipping↩︎

Gradient clipping [48] is a defense technique that limits the norm of gradients during backpropagation to prevent excessively large updates that could destabilize training, especially when dealing with exploding gradients. When the gradient norm exceeds a predefined threshold, it is rescaled to match the threshold. This helps maintain training stability by controlling the gradient magnitude, allowing the model to navigate non-smooth regions of the loss landscape more effectively, leading to faster convergence and potentially improved generalization. In the experiments, we applied gradient clipping with two threshold values, \(\text{max\_norm} = 1\) and \(\text{max\_norm} = 5\), to control the magnitude of the gradients during backpropagation. These thresholds help limit the gradient updates, with \(\text{max\_norm} = 1\) enforcing stricter clipping and \(\text{max\_norm} = 5\) allowing slightly larger updates. By constraining the gradient norm, we aim to prevent excessively large updates that could destabilize training or signal potential data leakage.

However, we can see that our method remains effective under gradient clipping because it does not rely on large gradient values in a single round. Instead, it employs a multi-round, low-magnitude embedding strategy, where each round only contributes a small amount of data, keeping the gradient norm within the clipping limits.
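Norm-based clipping as described above can be sketched in a few lines. The flattened-gradient representation and the name `clip_by_norm` are assumptions for illustration; deep learning frameworks provide their own utilities for this.

```python
import math


def clip_by_norm(grad, max_norm):
    """Rescale `grad` so that its L2 norm does not exceed `max_norm`."""
    norm = math.sqrt(sum(g * g for g in grad))
    if norm <= max_norm or norm == 0.0:
        return list(grad)  # already within the limit
    scale = max_norm / norm
    return [g * scale for g in grad]


grad = [3.0, 4.0]  # L2 norm is 5
print(clip_by_norm(grad, max_norm=5))  # [3.0, 4.0]
clipped = clip_by_norm(grad, max_norm=1)
print(round(math.sqrt(sum(g * g for g in clipped)), 6))  # 1.0
```

An update whose norm already lies below the threshold passes through unchanged, which is why low-magnitude updates are unaffected by this defense.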

5.5 Loss Change Monitor↩︎

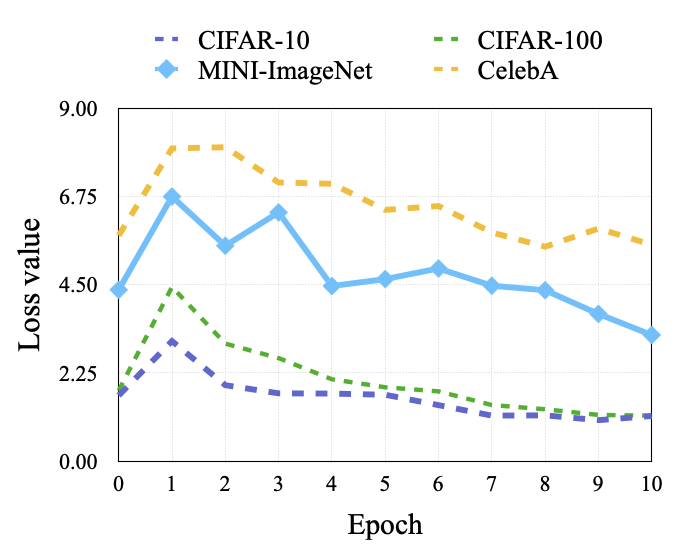

During model training, clients may monitor the change in loss to detect anomalies. To prevent detection, the loss should appear normal and remain within expected fluctuations throughout training. The loss change is shown in Figure 4 (appendix). We can see that there is a slight increase in the first round when the client trains the local model alongside the memory task. However, the loss quickly stabilizes and gradually decreases, resembling the pattern of standard training, thereby reducing the risk of drawing the client’s attention. In normal training, minor increases in loss can occur due to factors such as model adjustments and data variability. Thus, this subtle rise is unlikely to raise suspicion, as it resembles natural training fluctuations, demonstrating the evasiveness of our method.

6 Discussion↩︎

6.1 Secure Aggregation↩︎

Secure aggregation presents a significant challenge for data reconstruction attacks, as it only allows the server to access aggregated updates from all clients, blocking access to individual updates [20]. Many attacks rely on reconstructing high-quality images from individual client updates, which makes them largely ineffective when secure aggregation is in place [26]–[28].

Federated Learning (FL) also faces communication bottlenecks due to the limited bandwidth of edge devices and their resource constraints [49]–[51]. To address this, methods like reducing the number of clients per round [52], [53] and applying gradient compression techniques (e.g., quantization, sparsification, low-rank approximation) [54]–[56] have been developed. Gradient sparsification is especially useful, as it filters out less important gradients, reducing the amount of data transmitted during updates.
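As an illustration of gradient sparsification, the sketch below keeps only the largest-magnitude entries of a gradient and zeroes the rest. Magnitude-based top-k selection is one common variant and is assumed here for concreteness; the cited works may use different importance criteria, and the function name and values are hypothetical.

```python
def top_k_sparsify(grad, k):
    """Keep the k largest-magnitude entries of a flat gradient; zero the rest."""
    if k <= 0:
        return [0.0] * len(grad)
    # Indices sorted by absolute value, largest first.
    order = sorted(range(len(grad)), key=lambda i: abs(grad[i]), reverse=True)
    keep = set(order[:k])
    return [g if i in keep else 0.0 for i, g in enumerate(grad)]


# Toy gradient (hypothetical values); only two entries are transmitted.
grad = [0.02, -1.5, 0.3, 0.0, 0.9, -0.05]
sparse = top_k_sparsify(grad, k=2)
```

Because each client selects entries based on its own gradient, the set of transmitted coordinates generally differs from client to client.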

We discovered that by combining our approach with communication acceleration strategies, it is possible to bypass secure aggregation. These strategies can create model inconsistencies across clients due to differences in data distribution and gradient sparsification. Attackers can exploit these inconsistencies to extract gradient information. For instance, in APF [51], a global frozen mask is used to synchronize parameters across clients. By manipulating this mask with malicious code (e.g., setting it to zero or inverting it), attackers can expose the target user’s gradient information.

6.2 Generalizing Our Method to NLP↩︎

Although our method was originally designed for applications in computer vision, its principles can be adapted to natural language processing (NLP) tasks with slight modifications. In NLP, the secret model can be embedded within transformers or similar architectures by selecting and masking specific layers or attention heads. Instead of handling images, the model memorizes token sequences, using techniques like tokenization for indexing to distinguish between different samples.

To process longer text sequences, block partitioning can be applied by splitting the text into smaller segments. These segments are treated as individual inputs for memorization, with token-level information used to reassemble them during reconstruction. The loss function would focus on minimizing the difference between predicted and actual token sequences, allowing the model to covertly learn and store sensitive text data. In the future, we will explore the effectiveness and scalability of our method in NLP tasks.
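The segmentation and reassembly step can be sketched as follows. This is only an illustration of splitting a sequence into indexed blocks and restoring the order afterwards; the whitespace tokenizer, the block size, and the use of a plain integer block index are all assumptions, not the indexing scheme of our method.

```python
def partition_tokens(tokens, block_size):
    """Split a token list into (block_index, block) pairs of at most block_size tokens."""
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    return [
        (start // block_size, tokens[start:start + block_size])
        for start in range(0, len(tokens), block_size)
    ]


def reassemble(blocks):
    """Rebuild the sequence by concatenating blocks in index order."""
    tokens = []
    for _, block in sorted(blocks, key=lambda pair: pair[0]):
        tokens.extend(block)
    return tokens


# Hypothetical example sentence, tokenized by whitespace for simplicity.
tokens = "federated learning keeps raw data on the client device".split()
blocks = partition_tokens(tokens, block_size=4)
```

The last block may be shorter than `block_size`; since each block carries its index, the blocks can be handled in any order and still be reassembled correctly.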

6.3 Potential Countermeasures↩︎

To defend against the covert data-reconstruction mechanisms in our method, two potential countermeasures can be applied.

First, regularly verifying the integrity of model parameters before and after training is crucial. Techniques such as hash-based verification can help detect unauthorized modifications by comparing model snapshots across different training rounds. This approach can flag suspicious changes that might suggest data-stealing activities early on. However, it may not be effective if our method introduces subtle changes that blend with normal updates. These changes can be distributed across numerous parameters, making them difficult to detect through simple integrity checks. Specifically, malicious code injections can modify the model in ways that appear innocuous when viewed in isolation but collectively enable a stealthy attack.
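A minimal sketch of hash-based verification is given below, assuming the parameters are available as a dictionary mapping layer names to flat lists of floats. The layer names, values, and the `fingerprint` helper are hypothetical; a real client would hash the serialized tensors of its own framework.

```python
import hashlib
import struct


def fingerprint(params):
    """SHA-256 digest over named parameter vectors, independent of dict order."""
    digest = hashlib.sha256()
    for name in sorted(params):
        digest.update(name.encode("utf-8"))
        # Pack the values as little-endian float64 so the bytes are well defined.
        digest.update(struct.pack(f"<{len(params[name])}d", *params[name]))
    return digest.hexdigest()


# Hypothetical snapshot of the model as received from the server.
received = {"conv1.weight": [0.10, -0.20, 0.05], "fc.bias": [0.0, 0.01]}
baseline = fingerprint(received)

# Same values in a different key order, and a copy with one altered value.
untouched = {"fc.bias": [0.0, 0.01], "conv1.weight": [0.10, -0.20, 0.05]}
tampered = {"conv1.weight": [0.10, -0.20, 0.05], "fc.bias": [0.0, 0.010001]}
```

A digest of this kind changes on any bit-level difference, so it is only informative at points where the parameters are expected to be identical. It cannot by itself separate a legitimate training update from a malicious one, which is the limitation noted above.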

Second, gradient noise injection techniques, such as differential privacy, can make it more difficult for attackers to recover sensitive data from model updates. By adding controlled noise to the gradients during training, the accuracy of extracted data is reduced, limiting our method’s ability to reconstruct sensitive token sequences. However, excessive noise may degrade the model’s performance and reduce its utility for legitimate users, so balancing security and accuracy remains challenging.
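The noise-injection idea can be sketched as a clip-then-perturb step, in the style of the Gaussian mechanism used by DP-SGD. The parameter names `clip_norm` and `noise_multiplier`, the toy gradient, and the seed are assumptions for illustration, and the privacy accounting that a full differential-privacy implementation requires is omitted.

```python
import math
import random


def noisy_gradient(grad, clip_norm, noise_multiplier, rng):
    """Clip a flat gradient to clip_norm, then add zero-mean Gaussian noise.

    The noise standard deviation is noise_multiplier * clip_norm.
    """
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    sigma = noise_multiplier * clip_norm
    return [g * scale + rng.gauss(0.0, sigma) for g in grad]


rng = random.Random(0)  # fixed seed only to make the sketch repeatable
grad = [3.0, 4.0]       # hypothetical gradient with L2 norm 5

# noise_multiplier = 0 reduces to plain clipping; larger values add more noise.
clipped_only = noisy_gradient(grad, clip_norm=1.0, noise_multiplier=0.0, rng=rng)
perturbed = noisy_gradient(grad, clip_norm=1.0, noise_multiplier=1.0, rng=rng)
```

Raising `noise_multiplier` makes individual updates less informative but also perturbs the signal that legitimate training depends on, which is the security and accuracy trade-off described above.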

In the future, designing more effective defenses against our method will be necessary to mitigate the risks of such attacks.

7 Conclusion↩︎

In this paper, we introduced a novel data reconstruction attack against federated learning. Unlike traditional methods that rely on conspicuous changes to architecture or parameters, our method injects malicious code during training, enabling undetected data theft. By covertly training a hidden model through parameter sharing, our method efficiently extracts private data. To improve performance, we proposed a Fibonacci-based indexing and a block partitioning strategy that enhances the attack’s ability to handle high-resolution datasets and large batch sizes. Extensive experiments show that our method can bypass state-of-the-art detection methods while effectively handling high-resolution datasets and large-scale theft scenarios.

A. Datasets and Models↩︎

CIFAR-10. CIFAR-10 [43] contains 60,000 images belonging to 10 classes. Each sample has a dimension of 32 \(\times\) 32. We randomly select 50,000 samples as the training set, and the remaining 10,000 samples as the test set. In the experiments, we employ the ResNet-18 architecture to train the victim model. The local training process spans 10 epochs, with a learning rate initially set to 0.1. We use the SGD optimizer with a weight decay of 0.001 to prevent overfitting. To refine training over time, we apply a learning rate scheduler that decays the rate by a factor of 0.9 every epoch. The training batch size is set to 32.
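For reference, the learning-rate schedule described above can be written out explicitly. The sketch assumes zero-indexed epochs with the decay applied once after each epoch, so the first epoch uses the initial rate of 0.1; the function name is illustrative.

```python
def lr_at_epoch(epoch, base_lr=0.1, gamma=0.9):
    """Learning rate used during a zero-indexed epoch under per-epoch exponential decay."""
    return base_lr * gamma ** epoch


# Learning rates for the 10 local training epochs.
schedule = [lr_at_epoch(epoch) for epoch in range(10)]
```

Under this assumption the rate in the final (tenth) epoch is \(0.1 \times 0.9^{9} \approx 0.0387\).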

CIFAR-100. The CIFAR-100 dataset [43] closely resembles CIFAR-10 but comprises 100 classes, each containing 600 images. The dataset is split into 500 training images and 100 testing images per class. In the experiments, we employ the ResNet-18 architecture to train the victim model. The local training details for the CIFAR-100 dataset are consistent with those of CIFAR-10.

MINI-ImageNet. MINI-ImageNet [44] is a subset of ImageNet, widely used in the research community [57], [58]. It consists of 100 classes, each containing 600 images. For our experiments, we selected 40,000 images from the dataset, with 400 images per class across 100 classes, to ensure balanced representation across categories. Each image has a high resolution with a dimension of 224 \(\times\) 224. In the experiments, we train a ResNet-18 model for 10 epochs as the victim model. The local training details for the MINI-ImageNet dataset are consistent with those of CIFAR-10.

CelebA. CelebA [45] is a large-scale face attributes dataset containing 10,177 identities with 202,599 face images. Each image includes annotations for 5 landmark locations and 40 binary attributes, and has a high resolution of 224 × 224 pixels. In our experiments, we selected 1,000 identities from the dataset, with 20 images per identity, totaling 20,000 images. For local training, we kept the training parameters consistent with those used for CIFAR-10.

B. Evaluation Metrics↩︎

Leakage: The leakage quantifies the number of images that can be extracted from the total dataset in the given attack rounds.

Leakage Rate: The leakage rate quantifies the proportion of images that can be extracted from the total dataset in the given attack rounds. \[\text{Leakage Rate} = \frac{E}{N},\] where \(E\) is the number of extracted images, and \(N\) is the total number of images in the target dataset.

SSIM. SSIM is a commonly-used Quality-of-Experience (QoE) metric [59] that quantifies the differences in luminance, contrast, and structure between the original image and the distorted image. \[SSIM = A(x,x')^{\alpha} B(x,x')^{\beta} C(x,x')^{\gamma},\] where \(A(x,x'), B(x,x')\), and \(C(x,x')\) quantify the luminance similarity, contrast similarity, and structure similarity between the original image \(x\) and the distorted image \(x'\). \(\alpha, \beta\), and \(\gamma\) are parameters in the range \([0, 1]\).

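PSNR. PSNR is computed from the mean squared error (MSE) relative to the signal energy. \[\begin{aligned} \text{PSNR} &= 10\log_{10} \frac{E}{\text{MSE}},\\ \text{MSE} &= \frac{1}{N} \sum_i (x_i' - x_i)^2, \end{aligned}\] where \(E\) here denotes the maximum signal energy, \(x_i\) and \(x_i'\) are the \(i\)-th pixel values of the original and distorted images, and \(N\) here denotes the number of pixels.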
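The PSNR definition can be checked on a small example. The sketch below takes \(E\) to be the square of the peak pixel value, which is a common convention and an assumption here; the pixel values and the default peak of 255 are likewise hypothetical.

```python
import math


def mse(original, distorted):
    """Mean squared error between two equally sized flat pixel lists."""
    if len(original) != len(distorted) or not original:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((d - o) ** 2 for o, d in zip(original, distorted)) / len(original)


def psnr(original, distorted, peak=255.0):
    """PSNR in dB, with the maximum signal energy E taken as peak ** 2 (assumption)."""
    err = mse(original, distorted)
    if err == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / err)


# Four hypothetical 8-bit pixel values and a slightly distorted copy.
x = [52, 55, 61, 59]
x_prime = [54, 55, 60, 57]
error = mse(x, x_prime)      # (4 + 0 + 1 + 4) / 4 = 2.25
quality = psnr(x, x_prime)
```

A smaller MSE yields a higher PSNR, and identical images give an infinite value under this definition.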

LPIPS. LPIPS [60] measures the similarity between two images based on the idea that the human visual system processes images in a hierarchical manner, where lower-level features, e.g., edges and textures, are processed before higher-level features, e.g., objects and scenes. The LPIPS metric uses a deep neural network to calculate the similarity between the two images. \[\text{LPIPS}(A,B) = \sum_i w_i \, \lVert F_i(A) - F_i(B) \rVert_2^2,\] where \(F_i(A)\) and \(F_i(B)\) are the feature representations of images \(A\) and \(B\) at layer \(i\) of the pre-trained neural network, \(\lVert \cdot \rVert_2\) denotes the L\(_2\) norm, and \(w_i\) is a weight that controls the relative importance of each layer. The smaller the value, the more similar the two images are.

Figure 4: Impact of the loss change.

| Dataset | Metrics\(^\dagger\) | Binary | Gray | Fibonacci |
|---|---|---|---|---|
| | Leakage Rate (\(\uparrow\)) | 84.4% | 77.3% | 85.9% |
| | SSIM (\(\uparrow\)) | 0.604 | 0.567 | 0.607 |
| | PSNR (\(\uparrow\)) | 28.037 | 26.736 | 28.105 |
| | LPIPS (\(\downarrow\)) | 0.401 | 0.425 | 0.401 |
| | Leakage Rate (\(\uparrow\)) | 83.6% | 79.7% | 84.4% |
| | SSIM (\(\uparrow\)) | 0.829 | 0.781 | 0.827 |
| | PSNR (\(\uparrow\)) | 32.918 | 29.873 | 32.142 |
| | LPIPS (\(\downarrow\)) | 0.143 | 0.130 | 0.147 |
| MINI-ImageNet | Leakage Rate (\(\uparrow\)) | 77.8% | 80.0% | 84.4% |
| | SSIM (\(\uparrow\)) | 0.598 | 0.572 | 0.612 |
| | PSNR (\(\uparrow\)) | 23.179 | 22.239 | 23.535 |
| | LPIPS (\(\downarrow\)) | 0.464 | 0.490 | 0.451 |
| CelebA-Subset | Leakage Rate (\(\uparrow\)) | 93.8% | 81.3% | 93.8% |
| | SSIM (\(\uparrow\)) | 0.631 | 0.736 | 0.661 |
| | PSNR (\(\uparrow\)) | 21.565 | 25.307 | 22.667 |
| | LPIPS (\(\downarrow\)) | 0.441 | 0.323 | 0.412 |


| Dataset | Metrics\(^\dagger\) | Size = 4 | Size = 7 | Size = 8 | Size = 14 | Size = 16 | Size = 28 | Size = 32 |
|---|---|---|---|---|---|---|---|---|
| MINI-ImageNet | SSIM (\(\uparrow\)) | 0.766 | 0.837 | 0.834 | 0.819 | 0.819 | 0.827 | 0.800 |
| | PSNR (\(\uparrow\)) | 26.679 | 28.335 | 28.321 | 27.737 | 27.885 | 28.228 | 27.484 |
| | LPIPS (\(\downarrow\)) | 0.298 | 0.218 | 0.212 | 0.227 | 0.224 | 0.220 | 0.254 |
| CelebA-Subset | SSIM (\(\uparrow\)) | 0.854 | 0.894 | 0.919 | 0.929 | 0.937 | 0.913 | 0.891 |
| | PSNR (\(\uparrow\)) | 30.236 | 31.810 | 33.332 | 34.063 | 34.664 | 32.981 | 31.809 |
| | LPIPS (\(\downarrow\)) | 0.234 | 0.208 | 0.120 | 0.103 | 0.090 | 0.121 | 0.146 |
| | Parameters | 2.22M | 2.32M | 2.37M | 2.78M | 2.96M | 4.58M | 5.32M |
| | Memory time (s) | 178.95 | 71.73 | 71.69 | 41.07 | 34.22 | 26.78 | 27.64 |

| Dataset | Metrics\(^\dagger\) | Random | Random with Constraints | Systematic | Layer-wise | Importance-based |
|---|---|---|---|---|---|---|
| | Leakage Rate (\(\uparrow\)) | 100% | 100% | 100% | 100% | 99.4% |
| | SSIM (\(\uparrow\)) | 0.937 | 0.867 | 0.881 | 0.895 | 0.727 |
| | PSNR (\(\uparrow\)) | 28.251 | 25.499 | 25.812 | 26.391 | 21.820 |
| | LPIPS (\(\downarrow\)) | 0.083 | 0.195 | 0.175 | 0.162 | 0.330 |
| | Leakage Rate (\(\uparrow\)) | 100% | 99.6% | 100% | 98.0% | 100% |
| | SSIM (\(\uparrow\)) | 0.778 | 0.712 | 0.824 | 0.666 | 0.779 |
| | PSNR (\(\uparrow\)) | 23.656 | 22.401 | 24.376 | 21.567 | 23.637 |
| | LPIPS (\(\downarrow\)) | 0.275 | 0.329 | 0.232 | 0.367 | 0.279 |
| MINI-ImageNet | Leakage Rate (\(\uparrow\)) | 84.4% | 82.0% | 92.2% | 89.8% | 91.4% |
| | SSIM (\(\uparrow\)) | 0.619 | 0.603 | 0.659 | 0.636 | 0.646 |
| | PSNR (\(\uparrow\)) | 23.562 | 23.265 | 24.290 | 23.874 | 24.047 |
| | LPIPS (\(\downarrow\)) | 0.431 | 0.449 | 0.386 | 0.411 | 0.400 |
| CelebA-Subset | Leakage Rate (\(\uparrow\)) | 99.2% | 97.7% | 96.9% | 97.7% | 100% |
| | SSIM (\(\uparrow\)) | 0.621 | 0.625 | 0.617 | 0.620 | 0.657 |
| | PSNR (\(\uparrow\)) | 23.883 | 23.781 | 23.496 | 23.801 | 24.132 |
| | LPIPS (\(\downarrow\)) | 0.523 | 0.511 | 0.510 | 0.519 | 0.481 |


| MINI-ImageNet dataset | |||||||||

| Baselines | Metrics\(^\dagger\) | \(N=8\) | \(N=16\) | \(N=24\) | \(N=32\) | \(N=48\) | \(N=64\) | ||

| Transpose Attack | Leakage (\(\uparrow\)) | 0.0 \(\pm\) 0.0 | 0.2 \(\pm\) 0.4 | 0.0 \(\pm\) 0.0 | 0.0 \(\pm\) 0.0 | 0.0 \(\pm\) 0.0 | 0.0 \(\pm\) 0.0 | ||

| SSIM (\(\uparrow\)) | 0.301 \(\pm\) 0.028 | 0.247 \(\pm\) 0.030 | 0.246 \(\pm\) 0.014 | 0.247 \(\pm\) 0.011 | 0.215 \(\pm\) 0.018 | 0.193 \(\pm\) 0.020 | |||

| PSNR (\(\uparrow\)) | 14.957 \(\pm\) 1.698 | 13.700 \(\pm\) 1.204 | 13.737 \(\pm\) 0.992 | 13.842 \(\pm\) 0.986 | 13.085 \(\pm\) 0.783 | 12.508 \(\pm\) 0.357 | |||

| LPIPS (\(\downarrow\)) | 0.622 \(\pm\) 0.015 | 0.657 \(\pm\) 0.017 | 0.653 \(\pm\) 0.004 | 0.653 \(\pm\) 0.005 | 0.665 \(\pm\) 0.010 | 0.688 \(\pm\) 0.006 | |||

| RtF | Leakage (\(\uparrow\)) | 6.9 \(\pm\) 0.2 | 13.0 \(\pm\) 0.3 | 20.0 \(\pm\) 0.1 | 26.2 \(\pm\) 0.5 | 27.9 \(\pm\) 1.0 | 52.1 \(\pm\) 0.2 | ||

| SSIM (\(\uparrow\)) | 0.844 \(\pm\) 0.011 | 0.804 \(\pm\) 0.015 | 0.816 \(\pm\) 0.007 | 0.814 \(\pm\) 0.013 | 0.850 \(\pm\) 0.014 | 0.780 \(\pm\) 0.004 | |||

| PSNR (\(\uparrow\)) | 35.372 \(\pm\) 0.870 | 34.094 \(\pm\) 0.822 | 32.836 \(\pm\) 0.374 | 33.407 \(\pm\) 0.934 | 34.395 \(\pm\) 0.850 | 30.052 \(\pm\) 0.358 | |||

| LPIPS (\(\downarrow\)) | 0.124 \(\pm\) 0.005 | 0.161 \(\pm\) 0.009 | 0.153 \(\pm\) 0.005 | 0.156 \(\pm\) 0.009 | 0.124 \(\pm\) 0.008 | 0.182 \(\pm\) 0.002 | |||

| LOKI | Leakage (\(\uparrow\)) | 6.0 \(\pm\) 1.3 | 13.6 \(\pm\) 1.2 | 19.8 \(\pm\) 1.2 | 25.4 \(\pm\) 3.5 | 40.8 \(\pm\) 1.3 | 57.0 \(\pm\) 3.0 | ||

| SSIM (\(\uparrow\)) | 0.820 \(\pm\) 0.173 | 0.882 \(\pm\) 0.061 | 0.868 \(\pm\) 0.052 | 0.816 \(\pm\) 0.113 | 0.855 \(\pm\) 0.028 | 0.866 \(\pm\) 0.025 | |||