GLS: Geometry-aware 3D Language Gaussian Splatting

November 27, 2024

Abstract

Recently, 3D Gaussian Splatting (3DGS) has achieved strong performance in indoor surface reconstruction and open-vocabulary segmentation. This paper presents GLS, a unified framework for surface reconstruction and open-vocabulary segmentation based on 3DGS. GLS advances both tasks by exploiting the correlation between them. For indoor surface reconstruction, we introduce a surface normal prior as a geometric cue to guide the rendered normal, and use the normal error to optimize the rendered depth. For open-vocabulary segmentation, we employ 2D CLIP features to guide instance features and use DEVA masks to enhance their view consistency. Extensive experiments demonstrate the effectiveness of jointly optimizing surface reconstruction and open-vocabulary segmentation, with GLS surpassing state-of-the-art approaches for each task on the MuSHRoom, ScanNet++, and LERF-OVS datasets. Code will be available at https://github.com/JiaxiongQ/GLS.

1 Introduction

Surface reconstruction [1]–[6] and open-vocabulary segmentation [7]–[12] based on 3DGS [13] are widely applied in AR/VR [14] and embodied intelligence [15], [16], owing to the efficient training and real-time rendering of 3DGS. Recently, notable works have achieved significant progress in both areas.

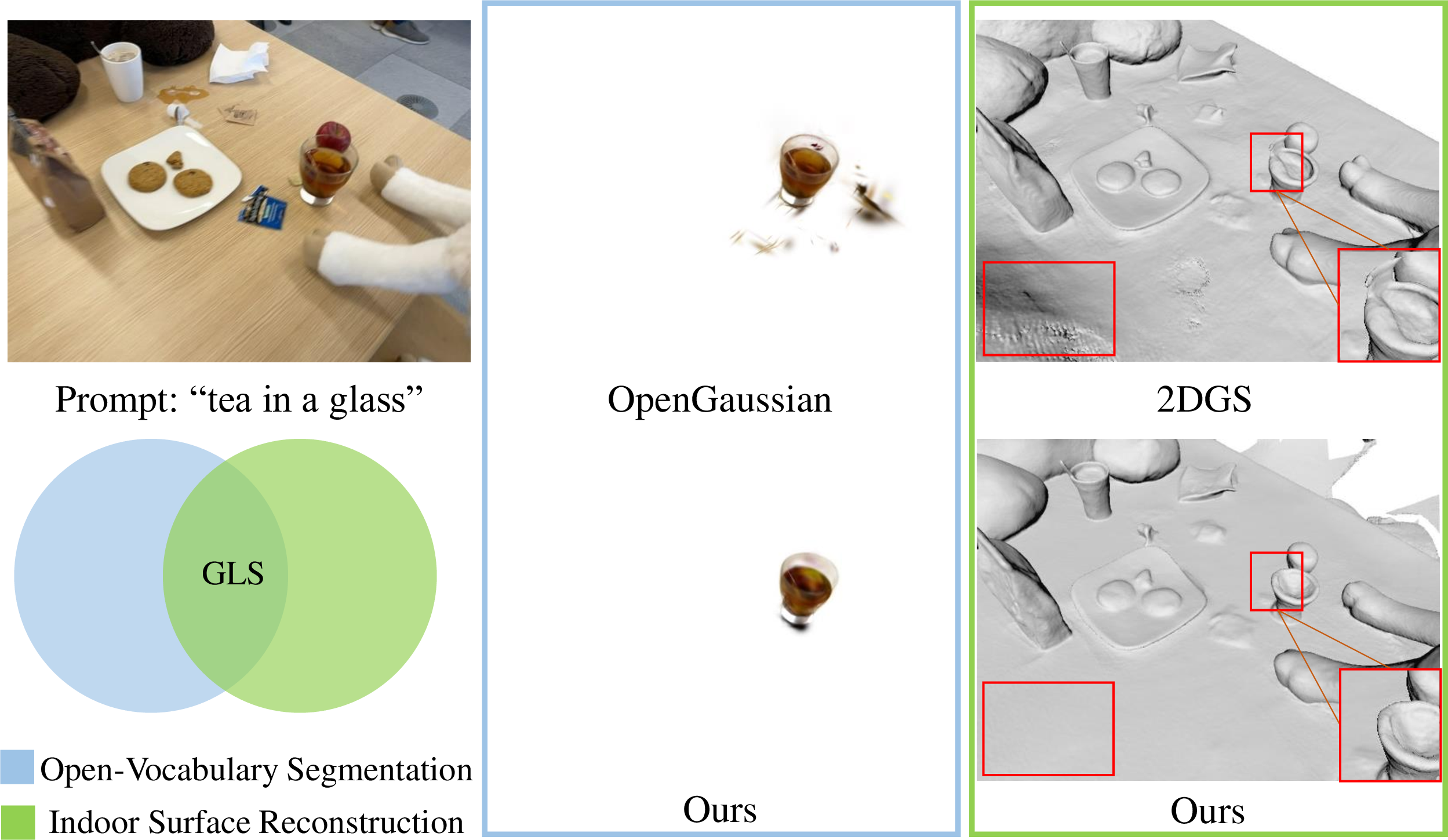

For surface reconstruction, SuGaR [1] proposes regularization terms to align Gaussians with the scene surface, then uses Poisson reconstruction [17] to extract a mesh from the Gaussians. For open-vocabulary segmentation, LangSplat [7] and OpenGaussian [12] successfully introduce SAM [18] and CLIP [19] into 3DGS. Gaussian Grouping [10] uses the universal temporal propagation model DEVA [20] to obtain consistent object masks across views and proposes a 3D regularization term to group Gaussians. However, these methods each focus on a single task and suffer from unstable performance in complex indoor scenes, as Fig. 1 shows.

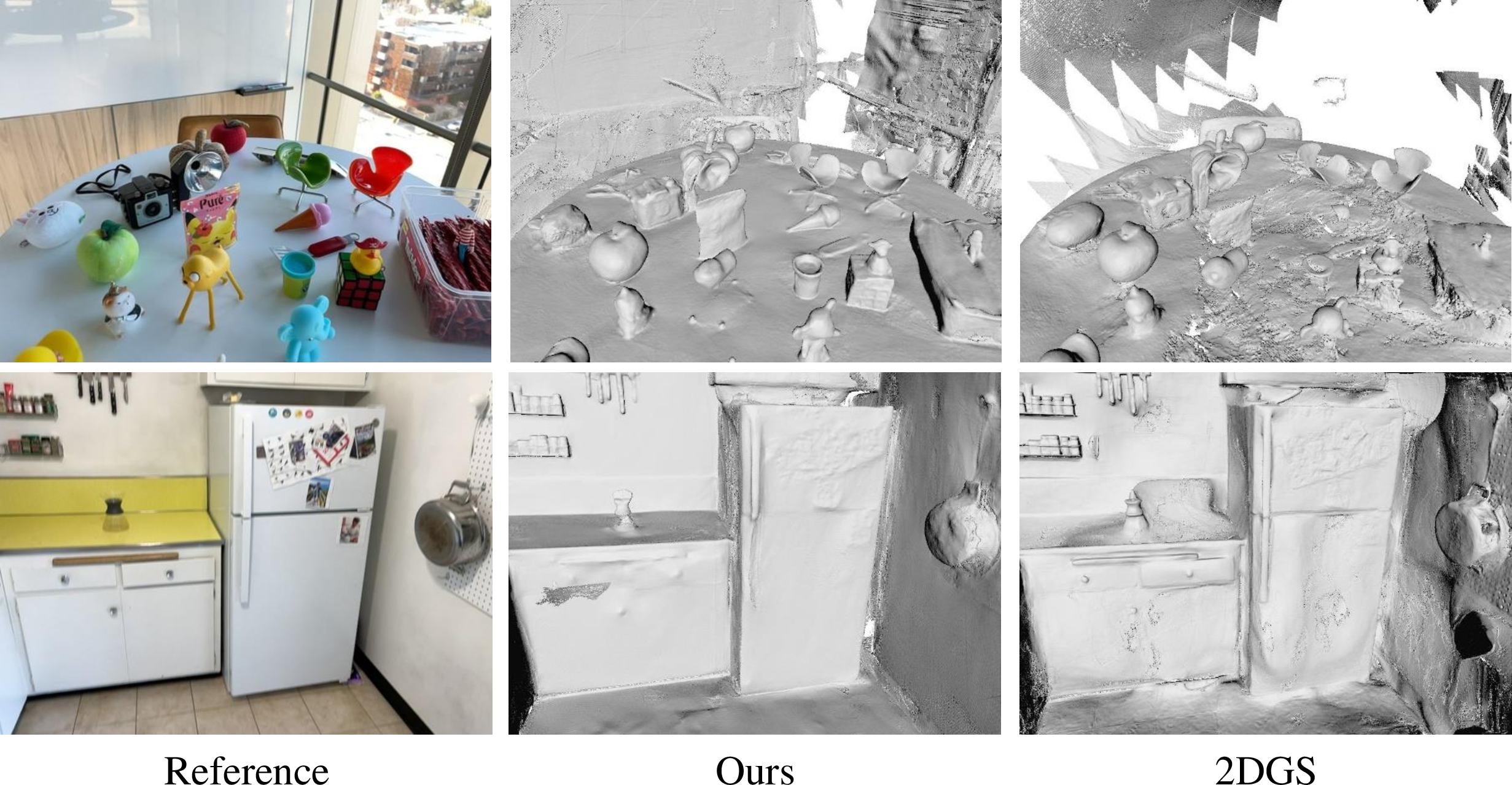

Figure 1: Indoor surface reconstruction and open-vocabulary segmentation. Shadows and highlights make state-of-the-art methods struggle in indoor scenes. Our proposed GLS jointly optimizes both tasks on top of 3DGS and achieves much better results than OpenGaussian [12] and 2DGS [2].

In this work, we aim to achieve efficient and robust indoor surface reconstruction and open-vocabulary segmentation based on 3DGS. This goal is motivated by two observations. On the one hand, 2D open-vocabulary supervision [18], [19] is inherently view-inconsistent, which easily leads to noisy interiors and blurred boundaries in objects segmented from Gaussians. An accurate scene surface consists of sharp and smooth object surfaces, which implies that the Gaussians are mainly distributed on objects and preserve object boundaries. This property can make object segmentation from Gaussians cleaner and sharper. On the other hand, due to the complex materials and lighting of indoor scenes, shadows and highlights on texture-less and reflective objects often produce noisy surfaces. Fortunately, accurate object masks suppress these distracting details on object surfaces, so object segmentation results can supply a smoothness prior that reduces the reconstruction noise of such objects. In this sense, the optimization goals of the two tasks can be regarded as consistent.

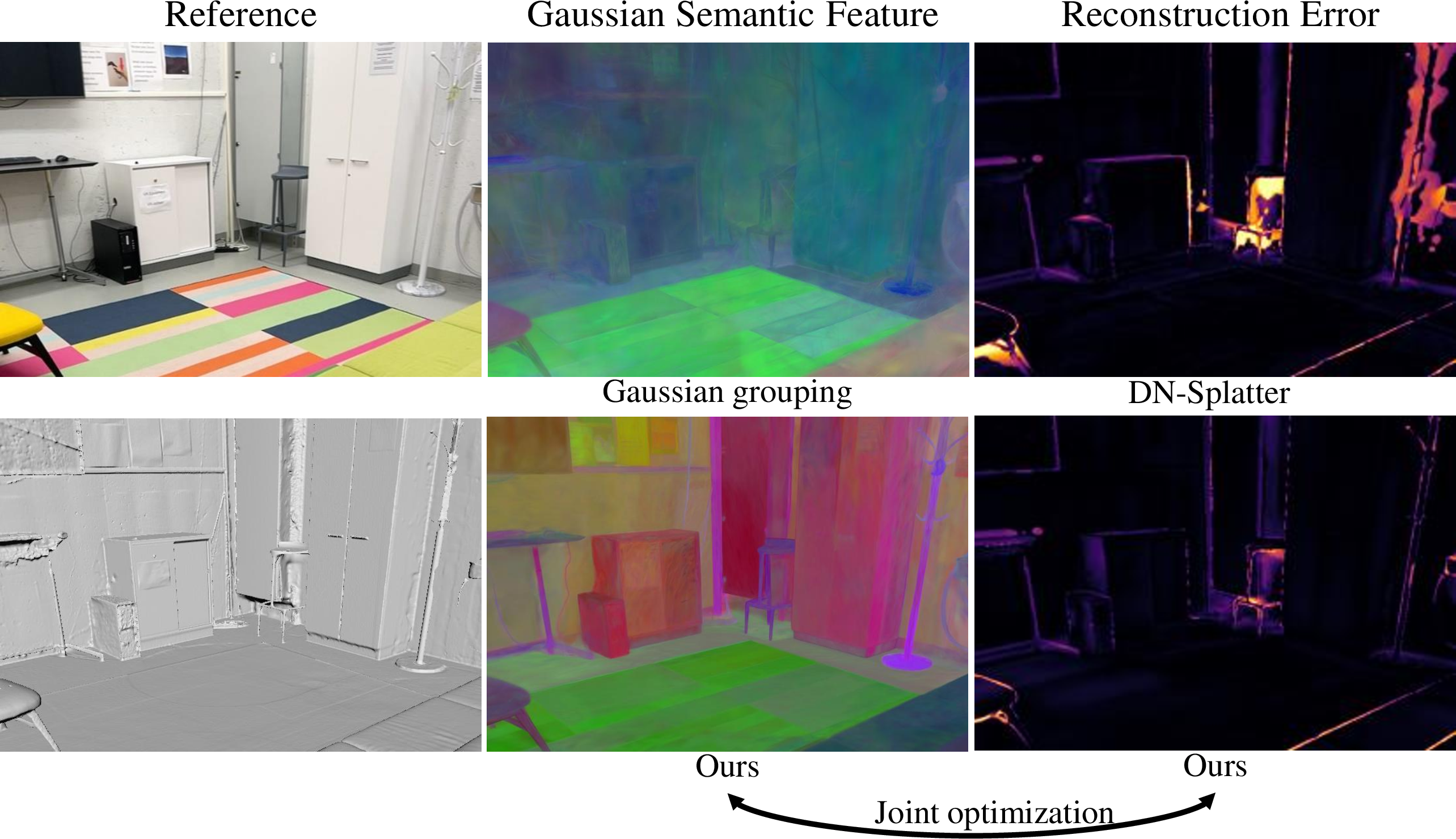

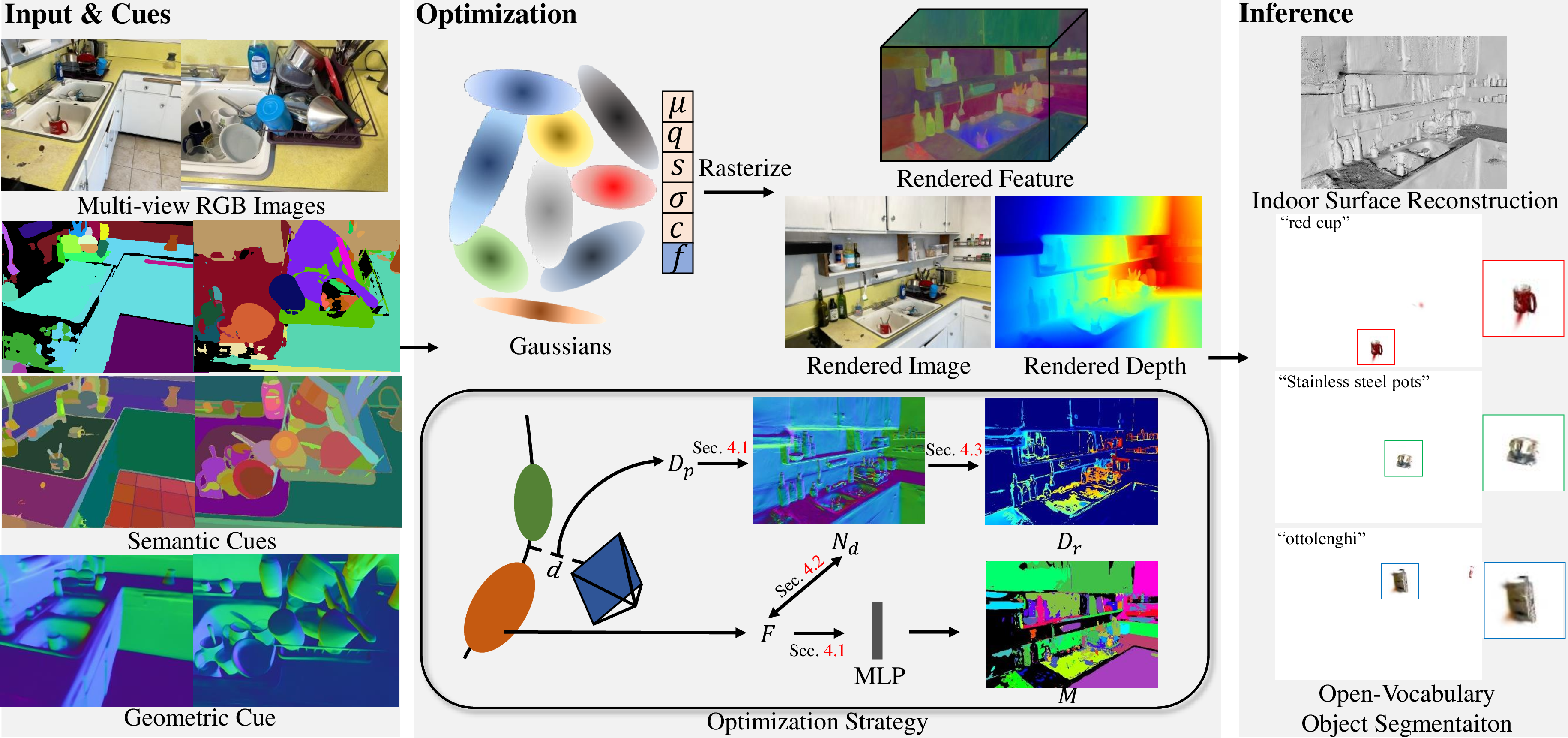

Based on the above motivations, we introduce GLS, which exploits the complementarity between surface reconstruction and open-vocabulary segmentation to boost the performance of both tasks. The framework is presented in Fig. 3. Specifically, we first introduce a normal prior [21] to regularize the surface normal estimated from the rendered depth. We then analyze the behavior of the rendered depth under different normal errors and propose a regularization term that enhances the sharpness of the scene surface. To integrate open-vocabulary information, we add semantic features to the Gaussians of vanilla 3DGS and supervise them with consistent object masks from DEVA and image features from CLIP. In addition, we use CLIP features as a smoothness prior to improve the accuracy of texture-less and reflective surfaces. Finally, we apply TSDF fusion [22] to the rendered depth to extract the scene mesh, and compute the similarity between input text embeddings and learned semantic embeddings to obtain object masks. We conduct extensive experiments on the MuSHRoom [23], ScanNet++ [24], and LERF-OVS [7], [25] datasets. As Fig. 2 shows, the superior performance of our model on both tasks demonstrates the effectiveness of connecting surface reconstruction and open-vocabulary segmentation in 3DGS.

In summary, our technical contributions can be listed as follows:

We design a novel 3DGS-based framework that jointly optimizes surface reconstruction and open-vocabulary segmentation in complex indoor scenes.

We propose two regularization terms, guided by geometric and semantic cues, that improve the sharpness and smoothness of reconstructed scene surfaces and segmented objects.

Our method inherits the training and rendering efficiency of 3DGS and achieves state-of-the-art accuracy on surface reconstruction and open-vocabulary segmentation tasks.

Figure 2: Effect of joint optimization between indoor surface reconstruction and open-vocabulary segmentation. Our method significantly improves the quality of Gaussian semantic features and surface reconstruction.

Figure 3: Overview of our framework. We use geometric and semantic cues produced by generalizable models to jointly strengthen the reconstruction and segmentation quality of 3DGS. Our framework achieves accurate indoor surface reconstruction and open-vocabulary segmentation through effective regularization terms, which encourage Gaussian primitives to be distributed along object surfaces.

2 Related Work

2.1 Neural Rendering

Neural Radiance Fields (NeRF) [26], based on implicit representations, have achieved remarkable advances in novel view synthesis. Some NeRF-based approaches [27]–[29] extend NeRF to more challenging scenes but remain limited by low training and rendering efficiency. Other NeRF-based methods [30]–[35] instead use explicit representations such as voxels, grids, point clouds, and meshes, which substantially reduce the computational cost of neural networks. More recent neural rendering techniques [13], [36], [37] optimize the attributes of explicit 3D Gaussians and render them by alpha blending, further improving rendering speed and quality. Our framework is built upon 3DGS and optimizes it to tackle both surface reconstruction and open-vocabulary segmentation.

2.2 3DGS-Based Surface Reconstruction

Surface reconstruction from multi-view images [38]–[41] is a challenging task in computer vision and graphics. Recently, extracting scene surfaces from Gaussian primitives has become a popular research topic. SuGaR [1] uses Poisson reconstruction [17] to extract a mesh from sampled Gaussians and introduces several regularization terms that encourage Gaussians to attach to the scene surface, improving reconstruction accuracy. However, because Gaussians are unordered and scenes are complex, fitting Gaussians to geometric surfaces is challenging. 2DGS [2] replaces 3D Gaussian primitives with 2D Gaussian disks for efficient training, and introduces two regularization terms, depth distortion and normal consistency, to reconstruct smooth scene surfaces. GOF [4] proposes the Gaussian opacity field, which extracts a holistic mesh by directly identifying the level set of the scene. PGSR [5] estimates unbiased depth to avoid the degeneration of the blending coefficient, and introduces a multi-view regularization term to achieve globally consistent reconstruction. DN-Splatter [42] and VCR-GauS [43] leverage normal or depth priors from generalizable models [21], [44], [45] to learn effective surfel representations. GaussianRoom [46] integrates an implicit SDF representation with an explicit Gaussian representation and uses normal and edge priors to improve reconstruction accuracy.

Because they lack object-level attributes, these methods struggle to reconstruct sharp objects under the complex lighting conditions of indoor scenes. To address this, we propose combining open-vocabulary segmentation and surface reconstruction. In contrast to previous related works [47]–[49], our method removes the need for ground-truth segmentation supervision and trains efficiently.

2.3 3DGS-Based Open-Vocabulary Segmentation

Recent works [50]–[52] on open-vocabulary scene understanding have made significant progress by integrating 2D foundation models with 3D point cloud representations. These methods project a 3D point cloud into 2D space for zero-shot learning of aligned features. LERF [25] designs a 3D open-vocabulary segmentation pipeline by distilling CLIP features into NeRF. 3DGS-based methods add a semantic attribute to 3D Gaussians, supervised by 2D scene priors [18], [19], to overcome the large computational cost of NeRF-based methods. LangSplat [7] trains a per-scene autoencoder to reduce the dimension of CLIP features in multi-level latent spaces; this scheme yields clear boundaries in the rendered features. LEGaussians [8] integrate uncertainty with CLIP and DINO [53] image features into Gaussians. To learn high-dimensional semantic features, Feature3DGS [9] develops a parallel Gaussian rasterizer. Gaussian Grouping [10] introduces view-consistent masks from DEVA and proposes a 3D local consistency regularization term to improve segmentation accuracy. OpenGaussian [12] focuses on 3D point-level open-vocabulary understanding via CLIP features and proposes a two-level codebook scheme with SAM masks to refine the rendered mask. Similar to these methods, we introduce CLIP features and SAM masks to supervise the semantic features of 3DGS.

3 Preliminary

Initialized with the point cloud and colors produced by SfM [38], 3DGS [13] explicitly represents a scene with differentiable 3D Gaussian primitives \(\{\boldsymbol{G}\}\). Each primitive is parameterized by the Gaussian function: \[\boldsymbol{G}(\boldsymbol{x}\mid\boldsymbol{\mu}, \boldsymbol{\Sigma}) = e^{-\frac{1}{2}(\boldsymbol{x}-\boldsymbol{\mu})^{\top}\boldsymbol{\Sigma}^{-1}(\boldsymbol{x}-\boldsymbol{\mu})}, \label{eq:Gaussian}\tag{1}\] where \(\boldsymbol{x}\) is a spatial point, and \(\boldsymbol{\mu} \in \mathbb{R}^3\) and \(\boldsymbol{\Sigma} \in \mathbb{R}^{3 \times 3}\) are the center and covariance matrix of the Gaussian, respectively. \(\boldsymbol{\Sigma}\) is parameterized by a scaling vector \(\boldsymbol{s} \in \mathbb{R}^3\) and a rotation quaternion \(\boldsymbol{q} \in \mathbb{R}^4\) as \(\boldsymbol{\Sigma} = \boldsymbol{R}\boldsymbol{S}\boldsymbol{S}^{\top}\boldsymbol{R}^{\top}\), where \(\boldsymbol{R}\) is the rotation matrix of \(\boldsymbol{q}\) and \(\boldsymbol{S} = \mathrm{diag}(\boldsymbol{s})\).
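To make Eq. (1) and the factorization \(\boldsymbol{\Sigma} = \boldsymbol{R}\boldsymbol{S}\boldsymbol{S}^{\top}\boldsymbol{R}^{\top}\) concrete, here is a minimal pure-Python sketch. It is not taken from the 3DGS or GLS code; we assume the quaternion is stored in \((w, x, y, z)\) order. Because \(\boldsymbol{\Sigma}^{-1} = \boldsymbol{R}\boldsymbol{S}^{-2}\boldsymbol{R}^{\top}\), the exponent can be evaluated in the Gaussian's local frame as \(\|\boldsymbol{S}^{-1}\boldsymbol{R}^{\top}(\boldsymbol{x}-\boldsymbol{\mu})\|^2\) without inverting \(\boldsymbol{\Sigma}\).

```python
import math


def quat_to_rotmat(q):
    """3x3 rotation matrix of a quaternion q = (w, x, y, z) (order assumed); q is normalized first."""
    w, x, y, z = q
    n = math.sqrt(w * w + x * x + y * y + z * z)
    w, x, y, z = w / n, x / n, y / n, z / n
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]


def covariance(s, q):
    """Sigma = R S S^T R^T with S = diag(s)."""
    R = quat_to_rotmat(q)
    M = [[R[i][j] * s[j] for j in range(3)] for i in range(3)]  # M = R S
    return [[sum(M[i][k] * M[j][k] for k in range(3)) for j in range(3)] for i in range(3)]


def gaussian(x, mu, s, q):
    """Unnormalized Gaussian of Eq. (1) evaluated at point x.

    Uses (x - mu)^T Sigma^{-1} (x - mu) = || S^{-1} R^T (x - mu) ||^2.
    """
    R = quat_to_rotmat(q)
    d = [x[k] - mu[k] for k in range(3)]
    local = [sum(R[i][j] * d[i] for i in range(3)) for j in range(3)]  # R^T d
    m = sum((local[j] / s[j]) ** 2 for j in range(3))
    return math.exp(-0.5 * m)
```

For example, a Gaussian stretched to scale 2 along its local x-axis and rotated 90° about z has \(\boldsymbol{\Sigma} = \mathrm{diag}(1, 4, 1)\), so a point 2 units along world y lies at one standard deviation and gets value \(e^{-1/2}\).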

3DGS enables an efficient alpha-blending procedure for real-time rendering. Given a camera view, the 3D Gaussian primitives are projected onto the image plane as 2D Gaussians, sorted by depth (z-buffer order), and alpha-composited with the volume rendering equation [26] to produce the pixel color \(\boldsymbol{C}\): \[\boldsymbol{C} = \sum_{i \in N}\boldsymbol{c}_i\alpha_i\prod_{j=1}^{i-1}(1-\alpha_j), \label{eq:volumn95rendering}\tag{2}\] where \(N\) is the set of depth-sorted Gaussians covering the pixel, and \(\boldsymbol{c}_i \in \mathbb{R}^3\) is the color of the \(i\)-th Gaussian, evaluated from its spherical harmonics and the viewing direction. \(\alpha_i\) is the blending coefficient, obtained by evaluating the projected 2D Gaussian at the pixel and scaling it by the opacity \(\sigma_i\). Analogously to \(\boldsymbol{C}\), the rendered alpha \(A\), depth \(D\), and semantic feature \(\boldsymbol{F}\) are computed as: \[\begin{aligned} A &= \sum_{i \in N}\alpha_i\prod_{j=1}^{i-1}(1-\alpha_j),\\ D &= \sum_{i \in N}d_i\alpha_i\prod_{j=1}^{i-1}(1-\alpha_j),\\ \boldsymbol{F} &= \sum_{i \in N}\boldsymbol{f}_i\alpha_i\prod_{j=1}^{i-1}(1-\alpha_j), \end{aligned} \label{eq:volumn95rendering2}\tag{3}\] where \(d_i\) is the distance between the \(i\)-th Gaussian and the camera center, and \(\boldsymbol{f}_i\) is the semantic feature of the \(i\)-th Gaussian.
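Eqs. (2) and (3) share the same front-to-back weights \(\alpha_i\prod_{j<i}(1-\alpha_j)\), so one routine can composite color, depth, and features alike. The following is a minimal per-pixel sketch in pure Python, assuming the \(\alpha_i\) are already computed and the Gaussians are already depth-sorted; it is illustrative, not the CUDA rasterizer of 3DGS.

```python
def composite(alphas, values):
    """Front-to-back alpha compositing of one pixel (Eqs. 2-3).

    alphas: blending coefficients alpha_i of the Gaussians, sorted near to far.
    values: one vector per Gaussian (color c_i, depth [d_i], or feature f_i).
    Returns (sum_i v_i * alpha_i * T_i, A) with T_i = prod_{j<i} (1 - alpha_j).
    """
    dim = len(values[0])
    out = [0.0] * dim
    T = 1.0  # transmittance left after the Gaussians in front
    A = 0.0  # rendered alpha, the sum of all blending weights
    for a, v in zip(alphas, values):
        w = a * T
        for k in range(dim):
            out[k] += w * v[k]
        A += w
        T *= 1.0 - a
    return out, A
```

Note that \(D\) in Eq. (3) is not divided by \(A\); with two Gaussians of \(\alpha = 0.5\) at distances 2 and 4, the weights are 0.5 and 0.25, giving \(D = 2\) and \(A = 0.75\).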

4 Method

Given multi-view RGB images of an indoor scene, our goal is to jointly reconstruct the scene and segment open-vocabulary objects. To this end, we introduce GLS, a novel framework based on 3DGS. As shown in Fig. 3, our framework consists of three stages. In the input stage, we use the generalizable models SAM [18], DEVA [20], and CLIP [19] to produce view-consistent 2D semantic masks \(\hat{M}\) and object-level features \(\hat{F}\), and a generalizable surface normal estimator [21] to obtain the geometric cue \(\hat{N}\). In the optimization stage, we use the semantic and normal priors for regularization. We first follow previous approaches to regularize the rendered color, depth, and semantic features (Sec. 4.1). We then propose a novel smoothness term to handle texture-less regions (Sec. 4.2), and a novel constraint derived from analyzing the normal error of Gaussians to refine object structures (Sec. 4.3). In the inference stage, our model simultaneously reconstructs the indoor surface and selects target objects from open-vocabulary text queries.

4.1 Leveraging 2D Semantic and Geometric Cues

Previous works either address only surface reconstruction [42] or only open-vocabulary segmentation [12]. We propose combining the two tasks to jointly reconstruct the scene surface and segment the objects in the scene.

For indoor surface reconstruction, unlike DN-Splatter [42], we use TSDF fusion [22], [54] to extract the mesh, so we focus on optimizing the rendered depth \(D\). As shown in 2DGS [2], local smoothness of the rendered depth is both important and difficult to achieve in 3DGS-based surface reconstruction. To smooth the rendered depth, we introduce the normal prior \(\hat{N}\). Following PGSR [5], we compute the local surface normal \(N_d\) from the gradients of the 3D points back-projected from \(D\), and regularize it with \(\hat{N}\): \[\mathcal{L}_n = \sum_{i,j}A(1-N_d^{\top}\hat{N}), \label{eq:depth2normal95loss}\tag{4}\] where the sum runs over all pixels \((i,j)\).
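The depth-to-normal step and Eq. (4) can be sketched as follows. This is a hypothetical illustration, not PGSR's implementation: we assume a pinhole camera with intrinsics `fx, fy, cx, cy`, use forward differences of neighboring back-projected points (PGSR's exact stencil may differ), flip each normal to face the camera, and assume \(\hat{N}\) is given in the same camera frame.

```python
import math


def backproject(D, fx, fy, cx, cy):
    """Camera-space 3D points P[v][u] from a depth map D[v][u] (pinhole model, assumed)."""
    return [[((u - cx) * D[v][u] / fx, (v - cy) * D[v][u] / fy, D[v][u])
             for u in range(len(D[0]))] for v in range(len(D))]


def depth_normals(D, fx, fy, cx, cy):
    """Local surface normal N_d from forward differences of back-projected points.

    The last row and column get None. Normals are flipped to face the camera (n . P < 0).
    """
    P = backproject(D, fx, fy, cx, cy)
    H, W = len(D), len(D[0])
    N = [[None] * W for _ in range(H)]
    for v in range(H - 1):
        for u in range(W - 1):
            p = P[v][u]
            dx = [P[v][u + 1][k] - p[k] for k in range(3)]
            dy = [P[v + 1][u][k] - p[k] for k in range(3)]
            n = [dx[1] * dy[2] - dx[2] * dy[1],   # cross(dx, dy)
                 dx[2] * dy[0] - dx[0] * dy[2],
                 dx[0] * dy[1] - dx[1] * dy[0]]
            norm = math.sqrt(sum(c * c for c in n))
            if norm == 0.0:
                continue
            n = [c / norm for c in n]
            if sum(n[k] * p[k] for k in range(3)) > 0:
                n = [-c for c in n]
            N[v][u] = n
    return N


def normal_loss(A, N_d, N_hat):
    """Eq. (4): sum over pixels of A * (1 - N_d^T N_hat); pixels without a normal are skipped."""
    loss = 0.0
    for v, row in enumerate(N_d):
        for u, n in enumerate(row):
            if n is None:
                continue
            dot = sum(a * b for a, b in zip(n, N_hat[v][u]))
            loss += A[v][u] * (1.0 - dot)
    return loss
```

On a fronto-parallel depth map every normal is \((0, 0, -1)\), so a prior pointing the same way gives zero loss and the opposite prior costs \(2A\) per pixel.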

For open-vocabulary segmentation, we directly use the view-consistent segmentation results of DEVA [20] to supervise the rendered mask \(M\). Specifically, given the rendered features \(F\), we first apply an MLP layer that maps them to a dimension equal to the total number of categories, followed by a softmax and a standard cross-entropy loss \(\mathcal{L}_m\) for classification. To enhance the open-vocabulary capability of our model, we further use the CLIP features \(\hat{F}\) to supervise \(F\): \[\mathcal{L}_{clip} = \| F - \hat{F} \|_1, \label{eq:clip95loss}\tag{5}\]
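The per-pixel form of the two semantic losses can be sketched in pure Python. The single linear layer `W, b` below is a hypothetical stand-in for the MLP head, whose size the text does not specify; averaging the per-pixel values over the image is likewise our assumption.

```python
import math


def linear(W, b, f):
    """Hypothetical one-layer head: logits = W f + b, with W of shape (num_categories, C)."""
    return [sum(w * x for w, x in zip(row, f)) + bk for row, bk in zip(W, b)]


def cross_entropy(logits, label):
    """Softmax cross-entropy for one pixel, using a stable log-sum-exp."""
    m = max(logits)
    lse = m + math.log(sum(math.exp(z - m) for z in logits))
    return lse - logits[label]


def l1(F, F_hat):
    """Eq. (5) for one pixel: L1 distance between rendered and CLIP feature vectors."""
    return sum(abs(a - b) for a, b in zip(F, F_hat))
```

Here `label` would be the DEVA mask ID at that pixel; subtracting the maximum logit before exponentiating keeps the softmax numerically stable without changing its value.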

4.2 Semantic-feature Guided Normal Smoothing

Large surfaces such as floors and desktops are generally smooth in indoor scenes. Although \(\mathcal{L}_n\) supplies local smoothness for reconstruction, it still struggles in shadowed and highlighted regions of these surfaces, as Fig. 5 shows. A high weight on \(\mathcal{L}_n\) causes over-smoothing that makes some small objects disappear, while a low weight fails to provide sufficient local smoothness of the rendered depth.

To resolve this issue, we propose using CLIP features to help smooth large surfaces, so that \(\mathcal{L}_n\) can be applied with a low weight to protect small objects. Essentially, CLIP features supply a high-dimensional, object-level smoothness cue that implicitly reduces the interference on large surfaces. Concretely, we first use SAM to select the objects occupying the top-\(k\) largest areas, denoted by the mask \(M_o\), and design a novel regularization term \(\mathcal{L}_s\) to smooth them: \[\mathcal{L}_s = M_o\left(|\partial_x N_d|\,e^{-\|\partial_x \hat{\boldsymbol{F}}\|_1} + |\partial_y N_d|\,e^{-\|\partial_y \hat{\boldsymbol{F}}\|_1}\right), \label{eq:smooth95loss}\tag{6}\] where \(\partial_x\) and \(\partial_y\) denote image-space gradients.
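Eq. (6) is an edge-aware smoothness term: normal gradients are penalized except where the CLIP features change sharply. A minimal pure-Python sketch follows, under stated assumptions: forward differences for \(\partial_x, \partial_y\), the L1 norm for \(|\partial N_d|\) on the 3-vector normal, and a sum over masked pixels. None of these choices is confirmed by the text.

```python
import math


def _l1(a, b):
    """L1 distance between two equal-length vectors."""
    return sum(abs(x - y) for x, y in zip(a, b))


def smoothness_loss(N_d, F_hat, M_o):
    """Eq. (6), summed over pixels inside the top-k object mask M_o.

    N_d[v][u]: normal vector, F_hat[v][u]: CLIP feature vector, M_o[v][u]: 0/1 mask.
    """
    H, W = len(N_d), len(N_d[0])
    loss = 0.0
    for v in range(H - 1):
        for u in range(W - 1):
            if not M_o[v][u]:
                continue
            # Large feature gradients (object edges) shrink the weight toward 0.
            gx = _l1(N_d[v][u + 1], N_d[v][u]) * math.exp(-_l1(F_hat[v][u + 1], F_hat[v][u]))
            gy = _l1(N_d[v + 1][u], N_d[v][u]) * math.exp(-_l1(F_hat[v + 1][u], F_hat[v][u]))
            loss += gx + gy
    return loss
```

A normal discontinuity is penalized fully inside a region of constant CLIP features, but almost not at all when it coincides with a strong feature edge, which is what lets large surfaces be smoothed without erasing object boundaries.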

4.3 Normal-error Guided Depth Refinement

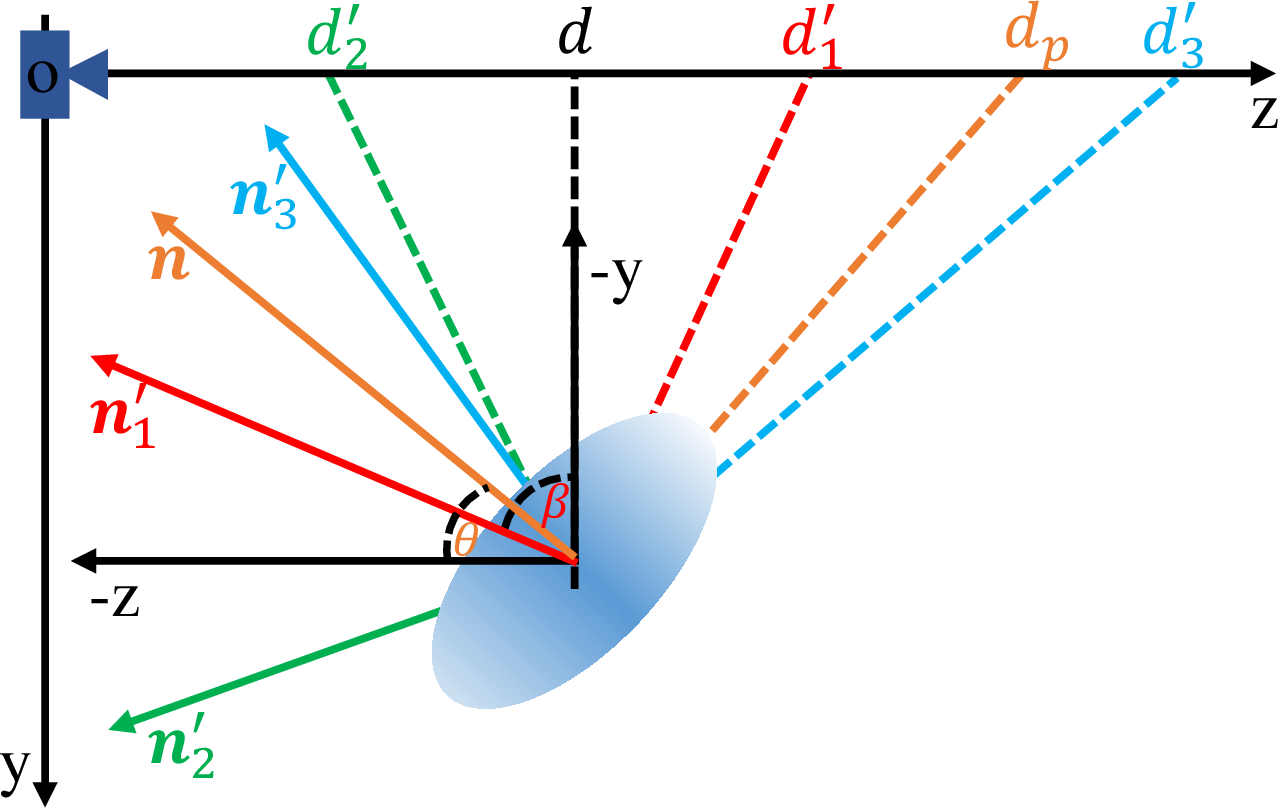

Figure 4: Depth refinement guided by the normal error between the rendered normal \(\boldsymbol{n}\) and the ideal normal \(\boldsymbol{n}'\). We analyze three conditions of \(\boldsymbol{n}'\). The ideal depth \(d'\) of each condition is the unbiased depth proposed by PGSR [5].

We replace the rendered depth with the unbiased depth \(D_p\) from PGSR [5] as the input to TSDF fusion, where \(D_p = \frac{D}{\cos\theta}\) and \(\theta\) is the angle between the direction of the intersecting ray and the rendered normal \(\boldsymbol{n}\) [55]. However, due to the ambiguity of the shortest Gaussian axis, \(\boldsymbol{n}\) often deviates substantially from the ideal normal \(\boldsymbol{n}'\). As Fig. 4 shows, given \(\boldsymbol{n}\) and the corresponding unbiased depth \(d_p\), there are three main conditions for the ideal normal \(\boldsymbol{n}'\):

\(\boldsymbol{n}'_{1}\) lies between \(\boldsymbol{n}\) and \(-\boldsymbol{z}\). The corresponding ideal depth is \(d'_{1} \in [d, d_p]\).

\(\boldsymbol{n}'_{2}\) lies between \(-\boldsymbol{z}\) and \(\boldsymbol{y}\). The corresponding ideal depth is \(d'_{2} \in [0, d]\).

\(\boldsymbol{n}'_{3}\) lies between \(\boldsymbol{n}\) and \(-\boldsymbol{y}\). The corresponding ideal depth is \(d'_{3} \in [d_p, d]\).

To determine which condition \(\boldsymbol{n}'\) falls into, we use the angle \(\alpha\) between \(\boldsymbol{n}'\) and \(-\boldsymbol{y}\), together with \(\theta' = 90^\circ - \theta\). Then \(\cos\alpha = \boldsymbol{n}'\cdot(-\boldsymbol{y})\) and \(\cos\theta' = \boldsymbol{n}\cdot(-\boldsymbol{y})\). Consequently, \(\boldsymbol{n}'_{1} \in \{\boldsymbol{n}' \mid \cos\alpha > \cos\theta' > 0\}\), \(\boldsymbol{n}'_{2} \in \{\boldsymbol{n}' \mid \cos\alpha < 0\}\), and \(\boldsymbol{n}'_{3} \in \{\boldsymbol{n}' \mid 0 < \cos\alpha \leq \cos\theta'\}\).
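The depth correction \(D_p = D/\cos\theta\) and the three-way case split can be sketched per pixel as below. These are hypothetical helpers, not PGSR's or the authors' code. We assume unit vectors, take \(|\cos\theta|\) so the sign convention of the normal does not matter, clamp it away from zero, and pass the \(-\boldsymbol{y}\) axis in explicitly because its frame is defined by Fig. 4.

```python
def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def unbiased_depth(D, ray_dir, n, eps=1e-6):
    """D_p = D / cos(theta); theta is the angle between the unit ray direction and unit normal n."""
    return D / max(abs(_dot(ray_dir, n)), eps)


def condition(n, n_ideal, neg_y):
    """Return 1, 2 or 3 for the three cases of the ideal normal n', or 0 if none applies.

    cos_alpha  = n' . (-y)
    cos_thetap = n  . (-y), with theta' = 90 deg - theta
    """
    cos_a = _dot(n_ideal, neg_y)
    cos_tp = _dot(n, neg_y)
    if cos_a > cos_tp > 0:
        return 1
    if cos_a < 0:
        return 2
    if 0 < cos_a <= cos_tp:
        return 3
    return 0
```

For a normal tilted 60° away from the ray, \(\cos\theta = 0.5\) and the depth doubles. The fall-through value 0 covers the boundary cases (\(\cos\alpha = 0\), or \(\cos\theta' \le 0\) with \(\cos\alpha > 0\)) that none of the three sets includes.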

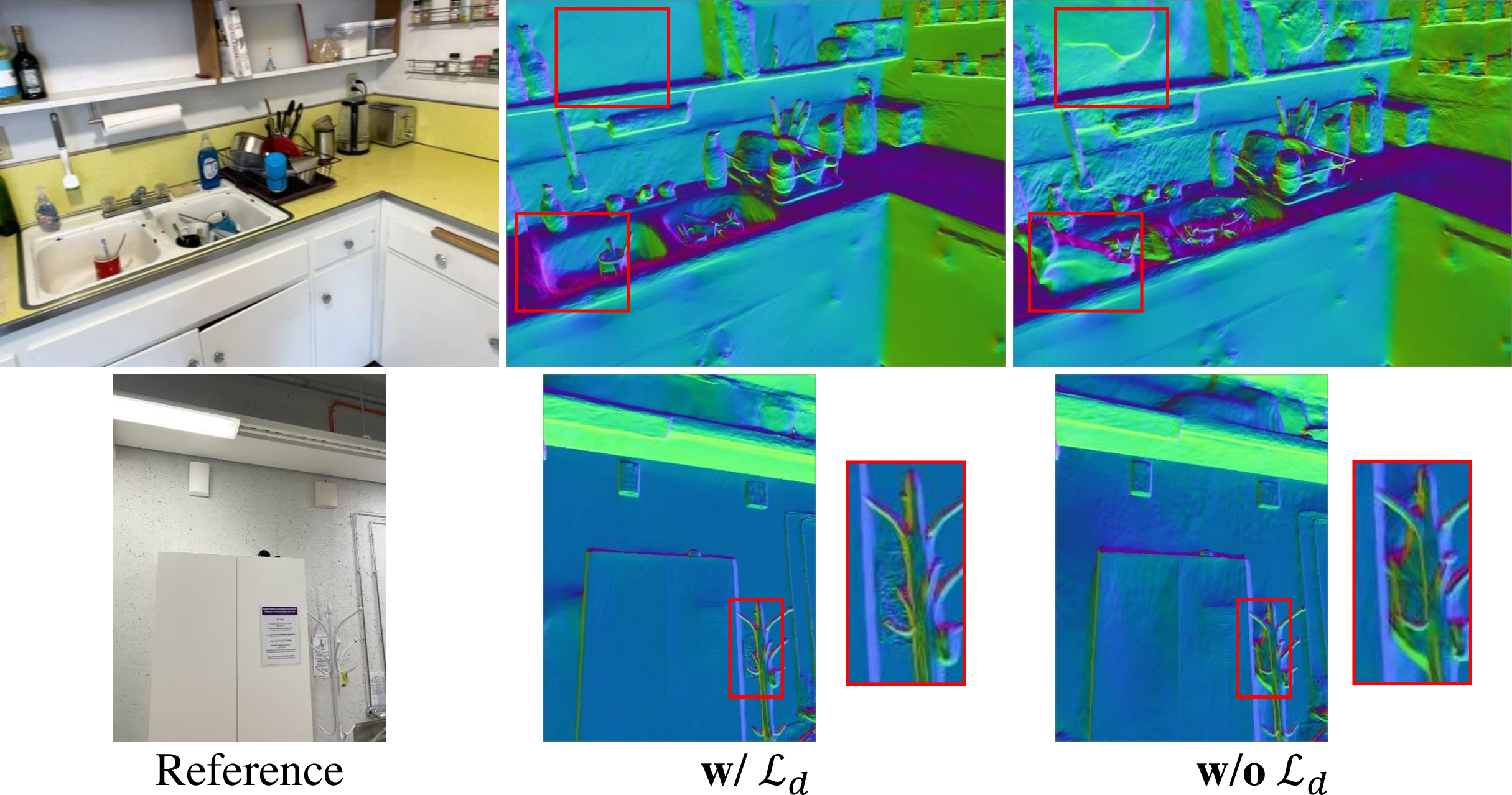

In practice, we use \(\hat{N}\) to approximate \(\boldsymbol{n}'\) in 2D image space, which yields three masks \(M_1\), \(M_2\), and \(M_3\) from the above conditions. To bring \(d_p\) close to \(d'\), we then refine \(D_p\) by recomposing the depth within each mask. For the first condition, we use the rendered alpha \(A\) to blend \(D\) and \(D_p\) in \(M_1\) as \(M_1(AD_p + D - AD)\), i.e., the convex combination \(M_1(AD_p + (1-A)D)\). For the second condition, we take \(M_2D\) as the target depth, and for the third, \(M_3D_p\). The overall target depth \(D_r\) is therefore: \[D_r = M_1(AD_p + D - AD) + M_2D + M_3D_p, \label{eq:target95depth}\tag{7}\] We then define a novel regularization term \(\mathcal{L}_d\) between \(D_r\) and \(D_p\): \[\mathcal{L}_d = 1 - e^{-\|D_p-D_r\|_1}, \label{eq:opt95d95loss}\tag{8}\] which is computed only over pixels satisfying \(N_d^{\top}\hat{N} < 0.9\). As Fig. 5 demonstrates, \(\mathcal{L}_d\) encourages the Gaussians to attach closely to object surfaces and thus improves the sharpness and smoothness of the reconstructed surface.
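Eqs. (7) and (8) with the \(N_d^{\top}\hat{N} < 0.9\) selection can be sketched over a flattened list of pixels. This is an illustrative sketch with stated assumptions: `cond` holds the condition index per pixel (1, 2, 3, or 0 for none), \(\mathcal{L}_d\) is applied per pixel and averaged over the selected pixels, and \(D_r\) is treated as a fixed target. The text does not specify the reduction or whether gradients flow through \(D_r\).

```python
import math


def target_depth(D, D_p, A, cond):
    """Eq. (7) at one pixel; cond is 1, 2 or 3 (masks M_1..M_3), 0 if no mask applies."""
    if cond == 1:
        return A * D_p + D - A * D  # = A * D_p + (1 - A) * D
    if cond == 2:
        return D
    if cond == 3:
        return D_p
    return None


def depth_refine_loss(D, D_p, A, cond, N_d, N_hat, tau=0.9):
    """Mean of 1 - exp(-|D_p - D_r|) over pixels with N_d^T N_hat < tau (per-pixel form assumed)."""
    terms = []
    for i in range(len(D)):
        if sum(a * b for a, b in zip(N_d[i], N_hat[i])) >= tau:
            continue  # normal already agrees with the prior: skip this pixel
        D_r = target_depth(D[i], D_p[i], A[i], cond[i])
        if D_r is None:
            continue
        terms.append(1.0 - math.exp(-abs(D_p[i] - D_r)))
    return sum(terms) / len(terms) if terms else 0.0
```

The \(1 - e^{-x}\) form saturates at 1, so pixels with very large depth discrepancies cannot dominate the gradient the way a plain L1 penalty would.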

Figure 5: Effect of \(\mathcal{L}_d\). The surface normal is estimated from the unbiased depth. \(\mathcal{L}_d\) enhances the sharpness and smoothness of the reconstructed surface.

4.4 Optimization

We adopt the photometric loss \(\mathcal{L}_c\) from vanilla 3DGS [13]. All loss terms are optimized jointly, training from scratch. The total loss \(\mathcal{L}\) is defined as: \[\label{eq:total95loss} \mathcal{L} = \mathcal{L}_c + \alpha_n \mathcal{L}_n + \alpha_m \mathcal{L}_m + \alpha_{clip} \mathcal{L}_{clip} + \alpha_d \mathcal{L}_d + \alpha_s \mathcal{L}_s.\tag{9}\] We set \(\alpha_n = 0.07\), \(\alpha_m = 0.3\), \(\alpha_{clip} = 1.0\), \(\alpha_d = 0.1\), and \(\alpha_s = 0.5\) in our experiments.
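As a quick check of Eq. (9), the weighting can be written as a small configuration plus a weighted sum. The weights are the ones stated above; the function and dictionary names are hypothetical.

```python
# Loss weights from Eq. (9) as reported in the text.
WEIGHTS = {"n": 0.07, "m": 0.3, "clip": 1.0, "d": 0.1, "s": 0.5}


def total_loss(L_c, L_n, L_m, L_clip, L_d, L_s, w=WEIGHTS):
    """Weighted sum of Eq. (9); the photometric term L_c has unit weight."""
    return (L_c + w["n"] * L_n + w["m"] * L_m + w["clip"] * L_clip
            + w["d"] * L_d + w["s"] * L_s)
```

With every term equal to 1, the total is 2.97, which shows that \(\mathcal{L}_{clip}\) carries the largest weight after \(\mathcal{L}_c\) and that \(\mathcal{L}_n\) is deliberately kept small, consistent with the low-weight choice motivated in Sec. 4.2.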

Table: Quantitative results of indoor surface reconstruction on the MuSHRoom dataset. Our method outperforms other methods on most metrics. The best metrics are **highlighted**.

| Methods | Sensor Depth | Algorithm | Accuracy\(~\downarrow\) | Completion\(~\downarrow\) | Chamfer-\(L_1\)\(~\downarrow\) | Normal Consistency\(~\uparrow\) | F-score\(~\uparrow\) |
|---|---|---|---|---|---|---|---|
| DN-Splatter [42] | ✔ | Poisson | 0.0402 | **0.0211** | 0.0306 | 0.8449 | 0.8590 |
| Ours | ✔ | TSDF | **0.0288** | 0.0254 | **0.0271** | **0.8640** | **0.8924** |
| 2DGS [2] | × | TSDF | 0.1728 | 0.1619 | 0.1674 | 0.7113 | 0.3433 |
| PGSR [5] | × | TSDF | 0.4957 | 0.7672 | 0.6315 | 0.5566 | 0.1178 |
| Ours | × | TSDF | **0.0903** | **0.0703** | **0.0870** | **0.7830** | **0.5153** |

Table: Quantitative results of indoor surface reconstruction on the ScanNet++ dataset. "MC" denotes the Marching Cubes algorithm of Lorensen and Cline. Our method achieves performance comparable to MonoSDF [56] while training about 30× faster. The best metrics are **highlighted**.

| Methods | Sensor Depth | Algorithm | Accuracy\(~\downarrow\) | Completion\(~\downarrow\) | Chamfer-\(L_1\)\(~\downarrow\) | Normal Consistency\(~\uparrow\) | F-score\(~\uparrow\) |
|---|---|---|---|---|---|---|---|
| MonoSDF [56] | ✔ | MC | **0.0536** | 0.0490 | 0.0513 | **0.9142** | 0.7124 |
| DN-Splatter [42] | ✔ | Poisson | 0.0977 | 0.0431 | 0.0704 | 0.8272 | 0.7094 |
| Ours | ✔ | TSDF | 0.0640 | **0.0272** | **0.0444** | 0.9064 | **0.8623** |
| 2DGS [2] | × | TSDF | 0.2440 | 0.4362 | 0.3401 | 0.6343 | 0.1838 |
| PGSR [5] | × | TSDF | 0.1670 | 0.2188 | 0.1929 | 0.7622 | 0.2227 |
| Ours | × | TSDF | **0.1001** | **0.1114** | **0.1057** | **0.8393** | **0.4711** |

5 Experiments

5.1 Settings

5.1.0.1 Datasets & Metrics.

For open-vocabulary segmentation, we follow LangSplat [7] and conduct experiments on the LERF-OVS dataset, adopting the evaluation metrics of Gaussian Grouping [10]. Specifically, we use each text query to select 3D Gaussians, then compute the mean IoU (mIoU) and mean boundary IoU (mBIoU) between the rendered masks and the annotated object masks. For indoor surface reconstruction, we conduct experiments on two RGB-D datasets, MuSHRoom [23] and ScanNet++ [24], and follow DN-Splatter [42] to evaluate mesh quality with five metrics: Accuracy, Completion, Chamfer-L1 distance, Normal Consistency, and F-score. For novel view synthesis, we use the standard PSNR, SSIM, and LPIPS metrics for rendered images.
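The five mesh metrics are commonly computed on points sampled from the predicted and ground-truth meshes. The sketch below uses those common definitions (mean nearest-neighbor distances in both directions, their average as Chamfer-L1, an F-score at a distance threshold, and the mean absolute cosine between matched normals); we assume, but have not verified, that DN-Splatter's evaluation follows them, and the 5 cm threshold `tau` is an assumption. Nearest neighbors are brute force, so this is only for small point sets.

```python
import math


def _nearest(p, pts):
    """Index and Euclidean distance of the point in pts closest to p (brute force)."""
    best_i, best_d = -1, math.inf
    for i, q in enumerate(pts):
        d = math.dist(p, q)
        if d < best_d:
            best_i, best_d = i, d
    return best_i, best_d


def mesh_metrics(pred, gt, pred_n, gt_n, tau=0.05):
    """pred/gt: sampled surface points; pred_n/gt_n: their unit normals; tau: F-score threshold."""
    p2g = [_nearest(p, gt) for p in pred]  # prediction -> ground truth
    g2p = [_nearest(g, pred) for g in gt]  # ground truth -> prediction
    acc = sum(d for _, d in p2g) / len(pred)
    comp = sum(d for _, d in g2p) / len(gt)
    prec = sum(d < tau for _, d in p2g) / len(pred)
    rec = sum(d < tau for _, d in g2p) / len(gt)
    f_score = 2 * prec * rec / (prec + rec) if prec + rec > 0 else 0.0
    nc_p = sum(abs(sum(a * b for a, b in zip(pred_n[k], gt_n[i])))
               for k, (i, _) in enumerate(p2g)) / len(pred)
    nc_g = sum(abs(sum(a * b for a, b in zip(gt_n[k], pred_n[i])))
               for k, (i, _) in enumerate(g2p)) / len(gt)
    return {"accuracy": acc, "completion": comp, "chamfer_l1": (acc + comp) / 2,
            "normal_consistency": (nc_p + nc_g) / 2, "f_score": f_score}
```

Accuracy penalizes spurious geometry (predicted points far from the ground truth), while Completion penalizes missing geometry, which is why a method can lead on one and trail on the other, as in the tables above.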

5.1.0.2 Baselines.

We compare our model against a series of baselines on the two tasks. a) Open-vocabulary segmentation: LangSplat [7], Gaussian Grouping [10], and OpenGaussian [12]. We adopt the scheme of OpenGaussian to render the selected objects: we compute the similarity between the 3D Gaussian semantic features and the text features, select the Gaussians with high similarity, and render them with the rasterizer. b) Indoor surface reconstruction: MonoSDF [56], 2DGS [2], PGSR [5], and DN-Splatter [42]. To align with the setting of DN-Splatter, we use sensor depth to supervise \(D_p\) with a mean absolute error (L1) loss.
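The text-query selection step can be sketched as a cosine-similarity filter over per-Gaussian features. This is a hypothetical illustration: it assumes the Gaussian features and the text embedding live in the same space and have the same dimension, and the `threshold` value is an assumption, since the text only says "high similarity".

```python
import math


def cosine(a, b):
    """Cosine similarity of two vectors (0.0 if either has zero norm)."""
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    if na == 0.0 or nb == 0.0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / (na * nb)


def select_gaussians(features, text_emb, threshold=0.8):
    """Indices of Gaussians whose semantic feature is similar enough to the text embedding."""
    return [i for i, f in enumerate(features) if cosine(f, text_emb) >= threshold]
```

The selected indices would then be passed to the rasterizer so that only those Gaussians are rendered. A fixed threshold is the simplest choice; a relative rule, such as keeping Gaussians near the maximum similarity, is an equally plausible alternative.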

5.1.0.3 Implementation details.

Our code is built on PGSR [5], and the densification strategy is adopted from AbsGS [57]. We train GLS for 30k iterations, which takes about 40 minutes on a single NVIDIA RTX 4090 GPU. For indoor surface reconstruction, we first render depth in each training view and then perform TSDF fusion [22], [54] to extract the mesh from the resulting TSDF volume. For open-vocabulary segmentation, we compute the similarity between the 3D Gaussian semantic features and the text features, then keep the Gaussians with high similarity to render and reconstruct the selected object.
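For readers unfamiliar with TSDF fusion, the following is a minimal, simplified sketch of the classic voxel update; it is not the implementation of [22], [54] used here. Assumptions: pinhole intrinsics `fx, fy, cx, cy`, world-to-camera poses given as `(R, t)`, nearest-pixel depth lookup, uniform per-view weights, and voxels far behind the observed surface are skipped. Mesh extraction from the resulting volume (e.g., Marching Cubes) is omitted.

```python
def tsdf_fuse(voxels, views, fx, fy, cx, cy, trunc=0.04):
    """Fuse depth maps into truncated signed distances at the given voxel centers.

    views: list of (R, t, D) with world-to-camera rotation R (3x3), translation t (3,),
           and z-depth map D[v][u].
    Returns (tsdf, weight) per voxel; unobserved voxels get (1.0, 0).
    """
    out = []
    for X in voxels:
        tsdf_sum, w_sum = 0.0, 0
        for R, t, D in views:
            Xc = [sum(R[i][k] * X[k] for k in range(3)) + t[i] for i in range(3)]
            if Xc[2] <= 0:
                continue  # voxel behind the camera
            u = round(fx * Xc[0] / Xc[2] + cx)
            v = round(fy * Xc[1] / Xc[2] + cy)
            if not (0 <= v < len(D) and 0 <= u < len(D[0])) or D[v][u] <= 0:
                continue  # outside the image or invalid depth
            sdf = D[v][u] - Xc[2]  # projective signed distance along the optical axis
            if sdf < -trunc:
                continue  # far behind the observed surface: occluded, no update
            tsdf_sum += min(1.0, sdf / trunc)
            w_sum += 1
        out.append((tsdf_sum / w_sum, w_sum) if w_sum else (1.0, 0))
    return out
```

The surface is the zero crossing of the fused values: voxels just in front of the depth map become positive and those just behind become negative, and averaging over views is what suppresses per-view depth noise.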

5.2 Indoor Surface Reconstruction

5.2.0.1 Quantitative Comparisons.

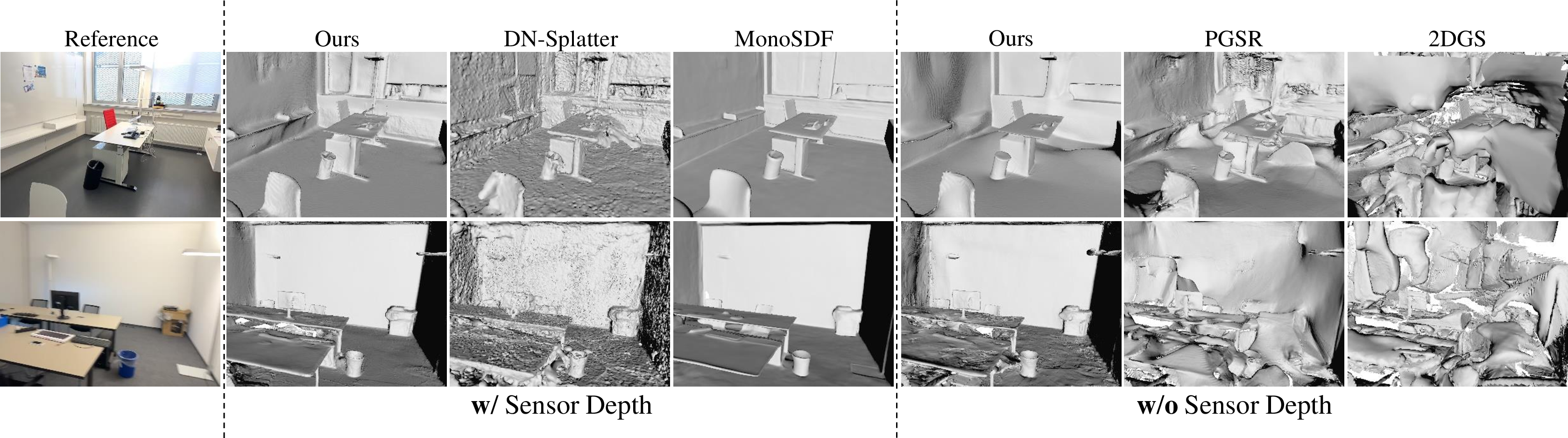

For indoor surface reconstruction, we conduct comparisons on two real-world datasets, MuSHRoom [23] and ScanNet++ [24], and report the metrics in the MuSHRoom and ScanNet++ tables above. Our method outperforms the other 3DGS-based approaches [2], [5] without sensor depth on all metrics. When sensor depth is used as a prior on scene scale, our model also produces sharper and smoother surfaces than DN-Splatter [42], as reflected by the Accuracy and Normal Consistency metrics. With the help of deep MLP networks, the SDF-based method MonoSDF [56] can learn the actual scale of each densely sampled 3D point, but it suffers from low training efficiency. Our model achieves comparable performance while reducing training time by about 30 times.

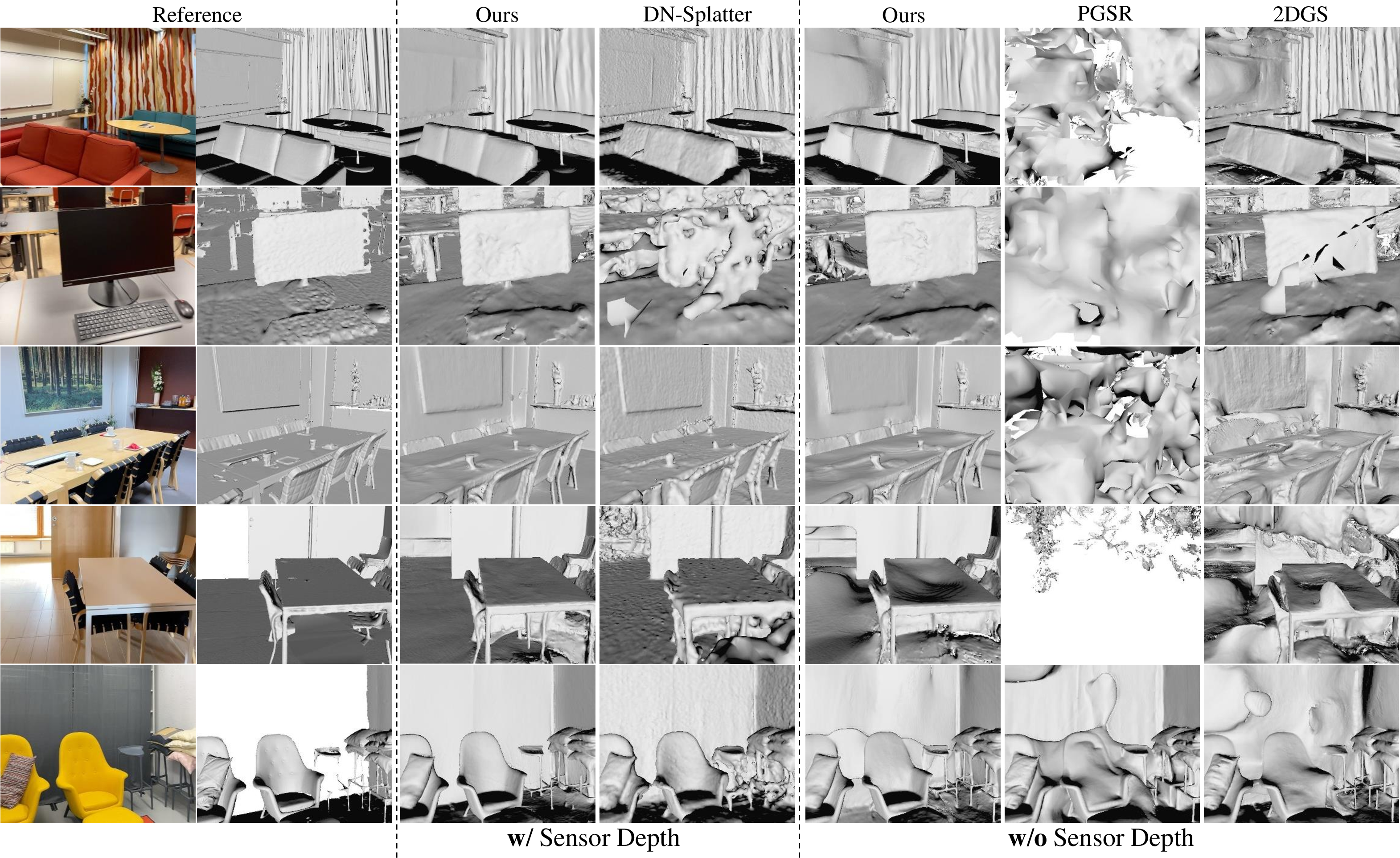

5.2.0.2 Qualitative Comparisons.

Fig. 6 shows surface reconstruction results of different methods on the MuSHRoom dataset. DN-Splatter [42] produces severe noise on scene surfaces and even destroys object structures in texture-less regions. In contrast, our model reconstructs smooth and sharp scene surfaces and recovers more thin structures than the ground-truth scene surfaces contain. This observation indicates that jointly optimizing surface reconstruction and open-vocabulary segmentation can significantly improve reconstruction quality. Among 3DGS-based approaches without sensor-depth supervision, PGSR is unstable and fails in most scenes due to complex camera motion and lighting conditions. Compared to 2DGS, our model handles shadows and highlights better and produces cleaner scene surfaces.

We further evaluate the generalization ability of all methods on the ScanNet++ dataset, as Fig. 7 shows. These scenes contain varied lighting conditions, which make reconstructing scene surfaces more challenging. Consequently, the results of DN-Splatter contain more noise, while ours remain sharp and smooth. MonoSDF suppresses lighting interference through over-smoothing, which causes several objects to be lost (e.g., the computer bracket and screen). Among 3DGS-based approaches without sensor-depth supervision, PGSR and 2DGS fail to produce meaningful surfaces, whereas our method handles these scenes well.

Figure 6: Qualitative comparisons of indoor surface reconstruction on the MuSHRoom dataset. PGSR produces unstable results with its default hyperparameters.

Figure 7: Qualitative comparisons of indoor surface reconstruction on the ScanNet++ dataset. The lighting conditions change drastically in these scenes, and MonoSDF loses scene details in its results.

5.3 Open-vocabulary Segmentation

5.3.0.1 Quantitative Comparisons.

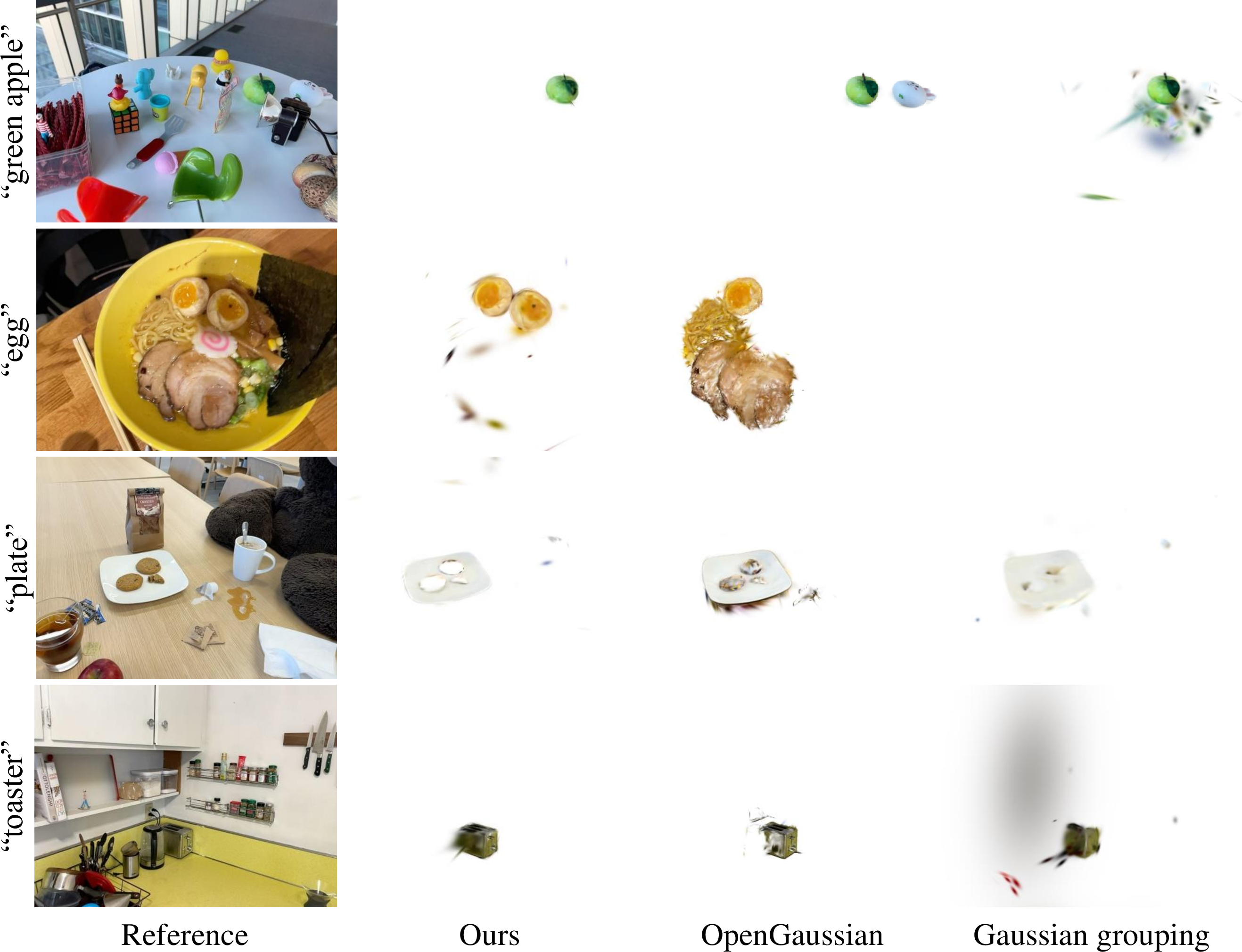

Open-vocabulary segmentation dispenses with predefined object labels and uses text prompts to segment target objects. We compare open-vocabulary segmentation performance on the widely used LERF-OVS dataset. Following Gaussian Grouping [10], we use mIoU and mBIoU as evaluation metrics, which measure the segmentation quality of the selected objects. The scores are reported in Tab. 1. Our model outperforms the state-of-the-art method OpenGaussian [12] by over 4 points in mean mIoU and exceeds Gaussian Grouping [10] by over 20 points in mean mBIoU. This suggests that jointly optimizing the two tasks improves the smoothness and sharpness of scene surfaces, which in turn makes segmentation results smoother and sharper.
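Both segmentation metrics compare binary masks; mIoU and mBIoU then average over the evaluated queries. Below is a minimal sketch under stated assumptions: boundary IoU follows the common construction of taking the pixels of each mask within distance `d` of its contour (mask minus its erosion, with pixels outside the image treated as background) and computing IoU on those bands. The band width `d` and the square (Chebyshev) structuring element are our assumptions, not settings confirmed by Gaussian Grouping.

```python
def iou(a, b):
    """IoU of two binary masks given as lists of 0/1 rows (1.0 if both are empty)."""
    inter = sum(1 for ra, rb in zip(a, b) for x, y in zip(ra, rb) if x and y)
    union = sum(1 for ra, rb in zip(a, b) for x, y in zip(ra, rb) if x or y)
    return inter / union if union else 1.0


def boundary(mask, d=1):
    """Mask pixels within Chebyshev distance d of the background (mask minus its erosion)."""
    H, W = len(mask), len(mask[0])

    def inside(v, u):
        return 0 <= v < H and 0 <= u < W and mask[v][u]

    return [[1 if mask[v][u] and not all(inside(v + dv, u + du)
                                          for dv in range(-d, d + 1)
                                          for du in range(-d, d + 1)) else 0
             for u in range(W)] for v in range(H)]


def boundary_iou(gt, pred, d=1):
    """IoU restricted to the boundary bands of the two masks."""
    return iou(boundary(gt, d), boundary(pred, d))
```

Because boundary IoU ignores mask interiors, it is far more sensitive to blurred or ragged object edges than plain IoU, which is why it is the metric that reflects the sharper boundaries discussed above.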

Table 1: Open-vocabulary segmentation results on the LERF-OVS dataset.

| Methods | mIoU\(~\uparrow\) figurines | mIoU\(~\uparrow\) ramen | mIoU\(~\uparrow\) teatime | mIoU\(~\uparrow\) waldo_kitchen | mIoU\(~\uparrow\) Mean | mBIoU\(~\uparrow\) figurines | mBIoU\(~\uparrow\) ramen | mBIoU\(~\uparrow\) teatime | mBIoU\(~\uparrow\) waldo_kitchen | mBIoU\(~\uparrow\) Mean | Time |
|---|---|---|---|---|---|---|---|---|---|---|---|
| LangSplat [7] | 10.16 | 7.92 | 11.38 | 9.18 | 9.66 | - | - | - | - | - | 2.2h |
| Gaussian Grouping [10] | 15.53 | 17.49 | 22.27 | 26.51 | 20.45 | 13.71 | 13.86 | 19.10 | 18.85 | 16.38 | 0.7h |
| OpenGaussian [12] | 39.29 | 31.01 | 60.44 | 22.70 | 38.36 | - | - | - | - | - | 1.0h |
| Ours | 49.73 | 31.21 | 58.01 | 32.15 | 42.78 | 47.97 | 27.90 | 52.96 | 23.93 | 38.19 | 0.7h |

5.3.0.2 Qualitative Comparisons.

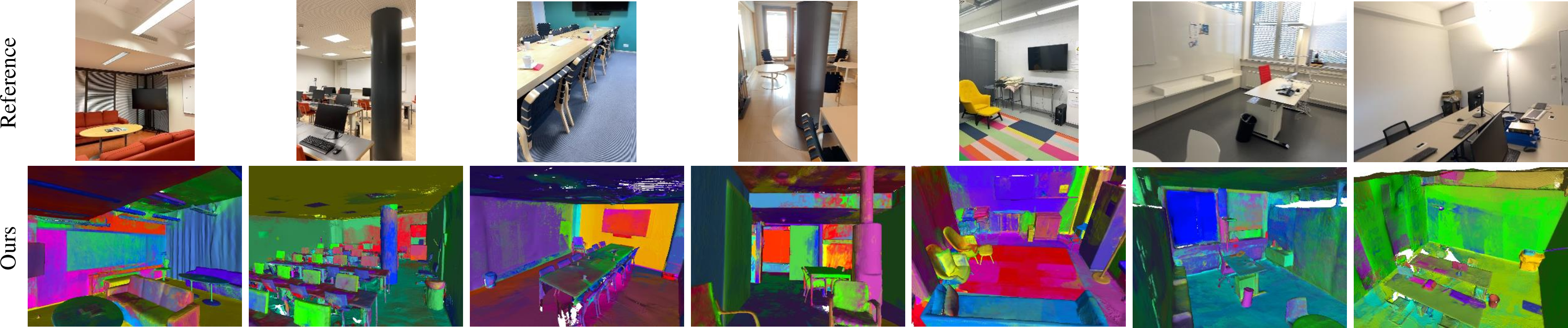

Fig. 8 shows open-vocabulary segmentation results of different methods. Without joint optimization of surface reconstruction, OpenGaussian [12] often mistakes other objects for the target, and Gaussian Grouping [10] produces noisy results. Our model correctly identifies the 3D Gaussians relevant to the query text and selects objects with clearer boundaries.

5.4 Ablation study

We conduct ablation experiments to study the effect of each regularization term, disabling one term at a time while keeping the others enabled. The results for indoor surface reconstruction and open-vocabulary segmentation are reported in the reconstruction ablation table and Tab. 2, respectively.

Table: Ablation study of indoor surface reconstruction on a scene of the MuSHRoom dataset. The best metrics are **highlighted**.

| Settings | Accuracy\(~\downarrow\) | Completion\(~\downarrow\) | Chamfer-\(L_1\)\(~\downarrow\) | Normal Consistency\(~\uparrow\) | F-score\(~\uparrow\) |
|---|---|---|---|---|---|
| No \(\mathcal{L}_n\) | 0.0864 | **0.0758** | 0.0811 | 0.7390 | 0.5559 |
| No \(\mathcal{L}_n\), No \(\mathcal{L}_d\) | 0.1031 | 0.0828 | 0.0929 | 0.7208 | 0.5247 |
| No \(\mathcal{L}_s\) | 0.0956 | 0.0852 | 0.0904 | 0.8035 | 0.5453 |
| No \(\mathcal{L}_{clip}\) | 0.1037 | 0.0985 | 0.1011 | 0.8113 | 0.5434 |
| No \(\mathcal{L}_m\) | 0.0731 | 0.0871 | 0.0801 | **0.8343** | 0.5695 |
| All | **0.0720** | 0.0828 | **0.0774** | 0.8267 | **0.5784** |

Table 2: Ablation study of open-vocabulary segmentation.

| Settings | mIoU\(~\uparrow\) | mBIoU\(~\uparrow\) |
|---|---|---|
| No \(\mathcal{L}_n\) | 30.80 | 18.99 |
| No \(\mathcal{L}_d\) | 28.12 | 19.76 |
| No \(\mathcal{L}_s\) | 30.84 | 20.87 |
| No \(\mathcal{L}_{clip}\) | 23.20 | 15.27 |
| No \(\mathcal{L}_m\) | 26.78 | 16.15 |
| All | 32.15 | 23.93 |

Figure 8: Qualitative comparisons of open-vocabulary segmentation on LERF-OVS dataset, between state-of-the-art methods (OpenGaussian [12] and Gaussian grouping [10]) and our model.

5.4.0.1 Effect of \(\mathcal{L}_n\).

The normal prior provides smoothness guidance for surface regions affected by shadows and highlights in indoor scenes. For indoor surface reconstruction, \(\mathcal{L}_n\) markedly improves surface smoothness, as shown by the Normal Consistency metric. For open-vocabulary segmentation, \(\mathcal{L}_n\) helps the full model produce accurate segmentation boundaries through joint optimization, as shown by the mBIoU metric.

5.4.0.2 Effect of \(\mathcal{L}_d\).

Fig. 5 illustrates the visual effect of \(\mathcal{L}_d\) on indoor surface reconstruction. For open-vocabulary segmentation, disabling \(\mathcal{L}_d\) degrades performance: mIoU drops from 32.15 to 28.12 and mBIoU from 23.93 to 19.76. Although \(\mathcal{L}_d\) is designed to refine the unbiased depth, it also improves segmentation quality through joint optimization.

5.4.0.3 Effect of \(\mathcal{L}_s\).

To determine whether \(\mathcal{L}_s\) is necessary for both tasks, we remove it from the full model. \(\mathcal{L}_s\) improves performance on all metrics of both tasks by connecting the surface normal estimated from the unbiased depth with the Gaussian semantic features.

5.4.0.4 Effect of \(\mathcal{L}_{clip}\).

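We then disable \(\mathcal{L}_{clip}\) to verify that it is a significant regularization term for both tasks. \(\mathcal{L}_{clip}\) uses CLIP features to strengthen the representation ability of the Gaussian semantic features, and it plays the most important role in open-vocabulary segmentation: without it, mIoU and mBIoU drop to 23.20 and 15.27, the lowest values among all settings. Through joint optimization, it also helps preserve the sharpness of reconstructed indoor surfaces; without it, Chamfer-\(L_1\) rises to 0.1011, the worst value in the reconstruction ablation.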

5.4.0.5 Effect of \(\mathcal{L}_m\).

We further examine \(\mathcal{L}_m\) by removing the DEVA segmentation results from supervision. As Tab. 2 reports, \(\mathcal{L}_m\) substantially improves the completeness and sharpness of the segmentation results, raising mIoU from 26.78 to 32.15 and mBIoU from 16.15 to 23.93.

5.5 Additional Results

5.5.0.1 Surface reconstruction on LERF-OVS dataset.

Fig. 9 shows additional reconstruction results of our model and 2DGS on the LERF-OVS dataset. Our model not only produces smooth and sharp object surfaces, but also successfully reconstructs the surfaces of a highly reflective whiteboard and transparent glass.

5.5.0.2 Novel view synthesis.

Finally, we evaluate our model on novel view synthesis. As Tab. 3 shows, it performs comparably to state-of-the-art methods: it achieves the best SSIM and LPIPS, while its PSNR is 0.04 dB below that of DN-Splatter [42].

| Methods | PSNR\(~\uparrow\) | SSIM\(~\uparrow\) | LPIPS\(~\downarrow\) |
|---|---|---|---|
| DN-Splatter [42] | 20.73 | 0.8476 | 0.1996 |
| 2DGS [2] | 15.60 | 0.7791 | 0.2581 |
| PGSR [5] | 19.47 | 0.8275 | 0.2060 |
| Ours | 20.69 | 0.8496 | 0.1709 |
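PSNR, the first metric in Tab. 3, follows directly from the mean squared error between a rendered image and the ground truth. A minimal sketch, assuming images scaled to [0, 1] (the evaluation's value range is not stated here); SSIM and LPIPS need dedicated implementations and are omitted:

```python
import numpy as np


def psnr(pred, gt, max_val=1.0):
    """Peak signal-to-noise ratio in dB for images with values in [0, max_val]."""
    pred = np.asarray(pred, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)
    mse = np.mean((pred - gt) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_val ** 2 / mse))
```

For a single image pair, a 0.04 dB PSNR gap corresponds to an MSE ratio of \(10^{0.004} \approx 1.009\), i.e. a difference of under 1%.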

Figure 9: Results of indoor surface reconstruction on the LERF-OVS dataset. Our model reconstructs sharper and smoother indoor surfaces than 2DGS [2].

6 Conclusion

In this work, we present GLS, a novel 3DGS-based framework that effectively combines indoor surface reconstruction and open-vocabulary segmentation. We leverage 2D geometric and semantic cues to jointly optimize 3DGS for both tasks. We design two novel regularization terms that enhance the sharpness and smoothness of the scene surface and, in turn, improve segmentation quality. Comprehensive experiments on both open-vocabulary segmentation and indoor surface reconstruction show that GLS outperforms state-of-the-art methods quantitatively and qualitatively. In addition, the ablation study examines the contribution of each regularization term to both tasks.

6.0.0.1 Limitation.

The proposed method inherits a natural limitation of TSDF fusion: its completeness depends on the number of captured views. As a result, our model leaves empty geometry for objects, or parts of objects, that are not observed in any captured view. In future work, we will introduce image-to-3D models to supply the unseen information.

7 Additional Algorithm Details

About \(N_d\). We follow PGSR [5] to estimate \(N_d\). Given a pixel \(p\) and its four neighboring pixels, we unproject these five pixels into 3D points \(\{P_i \mid i=0,\dots,4\}\) using \(D_p\), and then compute the local normal of \(p\) as \[ N_d(p) = \frac{(P_1-P_0)\times(P_3-P_2)}{\left\|(P_1-P_0)\times(P_3-P_2)\right\|}. \]
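The \(N_d\) estimate can be sketched for a whole depth map in NumPy. This assumes a pinhole camera with hypothetical intrinsics `fx, fy, cx, cy`, that \(D_p\) stores depth along the optical axis, and that \(P_0, P_1\) are the left/right and \(P_2, P_3\) the top/bottom neighbors of \(p\); the pairing is an assumption, not something stated above.

```python
import numpy as np


def unproject(depth, fx, fy, cx, cy):
    """Back-project a depth map (H, W) to camera-space points (H, W, 3)."""
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # pixel column / row grids
    x = (u - cx) / fx * depth
    y = (v - cy) / fy * depth
    return np.stack([x, y, depth], axis=-1)


def normal_from_depth(depth, fx, fy, cx, cy):
    """N_d(p) = (P1 - P0) x (P3 - P2) / ||...|| on interior pixels.

    Assumed neighbour layout: P0/P1 = left/right, P2/P3 = top/bottom of p.
    Border pixels have incomplete neighbourhoods and are left as zero vectors.
    """
    pts = unproject(depth, fx, fy, cx, cy)
    normals = np.zeros_like(pts)
    p0 = pts[1:-1, :-2]   # left neighbour of each interior pixel
    p1 = pts[1:-1, 2:]    # right neighbour
    p2 = pts[:-2, 1:-1]   # top neighbour
    p3 = pts[2:, 1:-1]    # bottom neighbour
    n = np.cross(p1 - p0, p3 - p2)
    norm = np.linalg.norm(n, axis=-1, keepdims=True)
    normals[1:-1, 1:-1] = n / np.maximum(norm, 1e-12)  # guard against zero-length vectors
    return normals
```

For a fronto-parallel plane this ordering yields \((0, 0, 1)\), i.e. normals pointing away from the camera; swapping either neighbor pair flips the sign.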

About \(\mathcal{L}_n\). Inspired by 2DGS [2], and to account for semi-transparent surfels, we use \(A\) to adaptively assign higher weights to actual surfaces in \(\mathcal{L}_n\).
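A sketch of this weighting, assuming \(A\) is the rendered accumulated opacity in [0, 1] and a cosine normal error; the exact form of \(\mathcal{L}_n\) is defined in the main method description, so the function below is illustrative only.

```python
import numpy as np


def weighted_normal_loss(rendered_normal, prior_normal, accum_opacity):
    """Opacity-weighted normal loss (assumed form, not the paper's exact definition).

    rendered_normal, prior_normal: (H, W, 3) unit normals.
    accum_opacity: (H, W) accumulated opacity A in [0, 1]. Pixels dominated by
    semi-transparent surfels get small weights; solid surfaces get weights near 1.
    """
    cos = np.sum(rendered_normal * prior_normal, axis=-1)  # per-pixel cosine
    err = 1.0 - cos                                        # 0 when normals agree
    return float(np.mean(accum_opacity * err))
```

With this form, misaligned normals on low-opacity pixels contribute little, so the prior mainly shapes pixels that lie on actual surfaces.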

About \(\mathcal{L}_s\). \(\mathcal{L}_s\) is a strong regularization term on \(N_d\) and can easily over-smooth small objects. In practice, we use \(M_o\) to adaptively select the three objects that occupy the largest areas in each view, and apply \(\mathcal{L}_s\) only to them.
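The top-3 selection can be sketched as follows, assuming \(M_o\) is an (H, W) map of integer object IDs with 0 as background; the exact format of \(M_o\) is not given here. The returned boolean mask would restrict \(\mathcal{L}_s\) to the selected objects.

```python
import numpy as np


def top_k_object_mask(instance_map, k=3, background_id=0):
    """Boolean (H, W) mask covering the k largest objects in one view.

    instance_map: (H, W) integer object IDs (assumed layout of M_o);
    pixels equal to background_id are ignored.
    """
    ids, counts = np.unique(instance_map, return_counts=True)
    keep = ids != background_id
    ids, counts = ids[keep], counts[keep]
    # Sort object IDs by pixel area, largest first, and keep the top k.
    largest = ids[np.argsort(-counts, kind="stable")[:k]]
    return np.isin(instance_map, largest)
```

Small objects fall outside the mask and are therefore never smoothed by \(\mathcal{L}_s\), which is the over-smoothing safeguard described above.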

About \(D_r\) and \(\mathcal{L}_d\). \(D_r\) is a probability-based refined depth rather than an actual depth. It is derived from an error analysis between the rendered normal and the ideal normal. Because the quality of the normal prior [21] is unstable, as noted by VCR-GauS [43], we treat it only as a reference normal rather than as the ideal normal. Since the ideal normal cannot be acquired, this error analysis is necessary. We use six loss functions in total during training. To balance their magnitudes and suppress abrupt values of \(D_r\), we generate \(\mathcal{L}_d\) with the bounded function \(y = 1-e^{-|x|}\).
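A sketch of the bounded mapping, assuming \(x\) is the per-pixel difference between the rendered depth and \(D_r\) (the argument of the function is not spelled out above) and that the per-pixel values are averaged:

```python
import numpy as np


def bounded_depth_loss(rendered_depth, refined_depth, valid=None):
    """L_d sketch: mean of 1 - exp(-|x|), with x = rendered depth - D_r (assumed).

    The mapping is 0 at x = 0, grows like |x| for small errors and saturates
    below 1 for large ones, so outliers in D_r cannot dominate the total loss.
    """
    x = rendered_depth - refined_depth
    y = 1.0 - np.exp(-np.abs(x))
    if valid is not None:
        y = y[valid]  # optional boolean mask of pixels to supervise
    return float(y.mean())
```

Because \(1-e^{-|x|} \approx |x|\) for small errors and stays below 1, the term behaves like an L1 loss near convergence while capping the influence of abrupt \(D_r\) values relative to the other five losses.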

8 Additional Experimental Details

Tab. 4 summarizes the datasets used in our experiments. Additional optimization hyperparameters are listed below:

    class OptimizationParams:  # class name assumed (3DGS/PGSR convention); only __init__ is given
        def __init__(self, parser):  # parser is not used by these assignments
            self.iterations = 30_000
            # Learning rates
            self.position_lr_init = 0.00016
            self.position_lr_final = 0.0000016
            self.position_lr_delay_mult = 0.01
            self.position_lr_max_steps = 30_000
            self.feature_lr = 0.0025
            self.opacity_lr = 0.05
            self.scaling_lr = 0.005
            self.rotation_lr = 0.001
            self.percent_dense = 0.01  # scene-extent fraction separating clone from split
            self.lambda_dssim = 0.2  # weight of the D-SSIM term in the photometric loss
            # Densification and pruning
            self.densification_interval = 100
            self.opacity_reset_interval = 3000
            self.densify_from_iter = 500
            self.densify_until_iter = 15_000
            self.densify_grad_threshold = 0.0006
            self.opacity_cull_threshold = 0.05
            self.densify_abs_grad_threshold = 0.0008  # AbsGS-style absolute-gradient threshold
            self.abs_split_radii2D_threshold = 20
            self.max_abs_split_points = 50_000
            self.max_all_points = 6_000_000
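The four `position_lr_*` values parameterize a learning-rate schedule. Assuming the code base keeps the exponential schedule of the original 3DGS implementation (its `get_expon_lr_func` helper), the position learning rate decays log-linearly from 1.6e-4 to 1.6e-6 over 30,000 steps. The following is a re-implementation sketch, not the authors' code, with the scene-extent scale factor that 3DGS applies to position learning rates taken as 1.

```python
import numpy as np


def get_expon_lr_func(lr_init, lr_final, lr_delay_steps=0, lr_delay_mult=1.0, max_steps=1_000_000):
    """Log-linear learning-rate decay in the style of the 3DGS code base."""
    def helper(step):
        if step < 0 or (lr_init == 0.0 and lr_final == 0.0):
            return 0.0
        if lr_delay_steps > 0:
            # Optional warm-up: the scale starts at lr_delay_mult and eases to 1.
            delay_rate = lr_delay_mult + (1 - lr_delay_mult) * np.sin(
                0.5 * np.pi * np.clip(step / lr_delay_steps, 0, 1))
        else:
            delay_rate = 1.0
        t = np.clip(step / max_steps, 0, 1)
        # Interpolate linearly in log space between lr_init and lr_final.
        return float(delay_rate * np.exp(np.log(lr_init) * (1 - t) + np.log(lr_final) * t))
    return helper


position_lr = get_expon_lr_func(lr_init=0.00016, lr_final=0.0000016,
                                lr_delay_mult=0.01, max_steps=30_000)
```

At the midpoint the rate is the geometric mean of the endpoints, 1.6e-5. In the original 3DGS call for positions no delay steps are passed, so `position_lr_delay_mult` has no effect there; whether GLS changes this is not stated.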

For open-vocabulary segmentation, we follow LangSplat [7] and use the encoder of an encoder-decoder network to reduce the last dimension of the original CLIP features from \(512\) to \(16\). We then use the decoder of the same network to map the last dimension of the Gaussian semantic features from \(16\) back to \(512\), so that their relevancy to the original CLIP features can be computed. The extraction scheme for SAM masks and CLIP features also follows LangSplat [7], except that, following OpenGaussian [12], we extract only the large-level SAM masks. The learning rate of the MLP layer is \(0.00005\).
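The relevancy computation is not spelled out here. LangSplat adopts the relevancy score of LERF, which compares a decoded language feature with the query embedding and with a set of canonical phrase embeddings (LERF uses "object", "things", "stuff" and "texture") through pairwise softmaxes and keeps the minimum. A NumPy sketch of that score, assuming L2-normalized 512-d CLIP embeddings; the random `decoder` matrix is a hypothetical stand-in for the trained decoder.

```python
import numpy as np


def relevancy(feature, query, canonicals):
    """LERF/LangSplat-style relevancy of one 512-d feature to a text query.

    feature: (512,) decoded semantic feature; query: (512,) CLIP text embedding;
    canonicals: (K, 512) embeddings of canonical phrases.
    All vectors are L2-normalised before taking dot products.
    """
    def unit(v):
        return v / np.linalg.norm(v, axis=-1, keepdims=True)

    f, q, c = unit(feature), unit(query), unit(canonicals)
    s_q = np.exp(f @ q)   # similarity to the query
    s_c = np.exp(c @ f)   # similarities to each canonical phrase, shape (K,)
    # Pairwise softmax of query vs. each canonical phrase; keep the worst case.
    return float(np.min(s_q / (s_q + s_c)))


rng = np.random.default_rng(0)
decoder = rng.normal(size=(512, 16))   # hypothetical stand-in for the trained decoder
gaussian_feat = rng.normal(size=16)    # 16-d Gaussian semantic feature
score = relevancy(decoder @ gaussian_feat, rng.normal(size=512), rng.normal(size=(4, 512)))
```

Taking the minimum over canonical phrases means a feature only scores high if it is closer to the query than to every generic phrase.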

For indoor surface reconstruction, we use the iPhone sequences with COLMAP-registered poses on both the MuSHRoom [23] and ScanNet++ [24] datasets. In addition, we adopt the densification strategy of AbsGS [57]. For a fair comparison, we disable the exposure compensation strategy of PGSR.
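AbsGS [57] replaces the view-space positional gradient used for densification with a homodirectional one: it sums the absolute values of the per-pixel gradient components, so pixel gradients pointing in opposite directions no longer cancel. A toy sketch for a single Gaussian in one view; the real pipeline accumulates these statistics over views and iterations before comparing them with thresholds such as `densify_abs_grad_threshold`.

```python
import numpy as np


def view_space_grad_norms(pixel_grads):
    """Standard vs. AbsGS-style (homodirectional) gradient norm for one Gaussian.

    pixel_grads: (P, 2) gradients of the loss w.r.t. the Gaussian's projected
    2D centre, one row per pixel the Gaussian covers in this view.
    """
    standard = np.linalg.norm(pixel_grads.sum(axis=0))                  # opposite signs cancel
    homodirectional = np.linalg.norm(np.abs(pixel_grads).sum(axis=0))  # no cancellation
    return float(standard), float(homodirectional)


grads = np.array([[0.001, 0.0], [-0.001, 0.0]])  # two pixels pulling in opposite directions
std, absg = view_space_grad_norms(grads)
# std is 0.0, so this view adds nothing to the standard densification statistic;
# absg is 0.002, above densify_abs_grad_threshold = 0.0008 listed above.
```

This is why large Gaussians covering regions with fine, conflicting detail get split under AbsGS but can be missed by the standard criterion.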

9 Additional Results

Per-scene quantitative results of GLS on the MuSHRoom and ScanNet++ datasets are reported in Tab. [tab:scanpp]. As Fig. 10 and Fig. 11 show, GLS can also attach semantic information to the reconstructed mesh by replacing the color image with the encoded semantic mask during TSDF fusion [22]. Note that our model is trained without any manual semantic annotations. Fig. 12 shows more open-vocabulary segmentation results of GLS: our model accurately segments the target objects specified by the corresponding text queries.
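The encoding of the semantic mask is not specified here. One hypothetical encoding maps each object ID to a distinct RGB color, so that the mask can be fed to a standard color TSDF integrator in place of the RGB image, and fused vertex colors are snapped back to IDs afterwards. A NumPy sketch of that encode/decode step (the TSDF integration itself is not shown):

```python
import numpy as np


def make_palette(num_ids):
    """Distinct uint8 RGB colour per object ID (hypothetical encoding); ID 0 -> black."""
    ids = np.arange(num_ids, dtype=np.uint64)
    # Multiplying by an odd constant is a bijection modulo 2**24, so colours are
    # distinct for up to 2**24 IDs and consecutive IDs get dissimilar colours.
    codes = (ids * np.uint64(2654435761)) % np.uint64(1 << 24)
    rgb = [(codes >> np.uint64(s)) & np.uint64(0xFF) for s in (16, 8, 0)]
    return np.stack(rgb, axis=-1).astype(np.uint8)


def encode_semantic_mask(id_map, palette):
    """(H, W) integer IDs -> (H, W, 3) uint8 image used in place of the RGB frame."""
    return palette[id_map]


def decode_mesh_colors(vertex_colors, palette):
    """Map fused vertex colours (V, 3) back to the nearest palette ID.

    TSDF fusion averages colours across views, so colours near object
    boundaries may be blends; nearest-colour lookup snaps them back.
    """
    d = np.linalg.norm(vertex_colors[:, None, :].astype(np.float64)
                       - palette[None, :, :].astype(np.float64), axis=-1)
    return d.argmin(axis=1)
```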

Table [tab:scanpp]: Per-scene quantitative results of GLS on the MuSHRoom and ScanNet++ datasets.

| Setting | Scene | Accuracy\(~\downarrow\) | Completion\(~\downarrow\) | Chamfer-\(L_1\)\(~\downarrow\) | Normal Consistency\(~\uparrow\) | F-score\(~\uparrow\) |
|---|---|---|---|---|---|---|
| w/ sensor depth | coffee_room | 0.0231 | 0.0275 | 0.0253 | 0.8702 | 0.9020 |
| | computer | 0.0394 | 0.0277 | 0.0336 | 0.8756 | 0.8445 |
| | honka | 0.0264 | 0.0284 | 0.0274 | 0.8742 | 0.9090 |
| | kokko | 0.0305 | 0.0272 | 0.0444 | 0.9064 | 0.8623 |
| | vr_room | 0.0244 | 0.0237 | 0.0241 | 0.8885 | 0.8802 |
| | 8b5caf3398 | 0.0936 | 0.0241 | 0.0588 | 0.8657 | 0.8741 |
| | b20a261fdf | 0.0335 | 0.0270 | 0.0303 | 0.9219 | 0.8841 |
| w/o sensor depth | coffee_room | 0.0684 | 0.0669 | 0.0676 | 0.7992 | 0.6103 |
| | computer | 0.0963 | 0.0874 | 0.0918 | 0.7708 | 0.4739 |
| | honka | 0.0810 | 0.0750 | 0.0780 | 0.7899 | 0.5503 |
| | kokko | 0.1102 | 0.1041 | 0.1072 | 0.7515 | 0.3969 |
| | vr_room | 0.0956 | 0.0852 | 0.0904 | 0.8035 | 0.5453 |
| | 8b5caf3398 | 0.0618 | 0.0580 | 0.0599 | 0.8732 | 0.6045 |
| | b20a261fdf | 0.1383 | 0.1647 | 0.1515 | 0.8054 | 0.3376 |

| Dataset | Scene | Resolution | Training views | Initial points |
|---|---|---|---|---|
| LERF-OVS [25] | figurines | 986 \(\times\) 728 | 299 | 65k |
| LERF-OVS [25] | ramen | 988 \(\times\) 731 | 131 | 27k |
| LERF-OVS [25] | teatime | 988 \(\times\) 730 | 177 | 23k |
| LERF-OVS [25] | waldo_kitchen | 985 \(\times\) 725 | 187 | 15k |
| MuSHRoom [23] | coffee_room | 738 \(\times\) 994 | 353 | 1000k |
| MuSHRoom [23] | computer | 738 \(\times\) 994 | 455 | 1000k |
| MuSHRoom [23] | honka | 738 \(\times\) 994 | 320 | 1000k |
| MuSHRoom [23] | kokko | 738 \(\times\) 994 | 348 | 1000k |
| MuSHRoom [23] | vr_room | 738 \(\times\) 994 | 418 | 1000k |
| ScanNet++ [24] | 8b5caf3398 | 1920 \(\times\) 1440 | 126 | 111k |
| ScanNet++ [24] | b20a261fdf | 1920 \(\times\) 1440 | 59 | 111k |

Figure 10: Semantic mesh results of GLS on LERF-OVS [25].

Figure 11: Semantic mesh results of GLS on MuSHRoom [23] and ScanNet++ [24].

Figure 12: More open-vocabulary segmentation results of GLS on LERF-OVS [25].