Deduction Game Framework and Information Set Entropy Search

July 30, 2024

Abstract

We present a game framework tailored for deduction games, enabling structured analysis from the perspective of Shannon entropy variations. Additionally, we introduce a new forward search algorithm, Information Set Entropy Search (ISES), which effectively solves many single-player deduction games. The ISES algorithm, augmented with sampling techniques, allows agents to make decisions within controlled computational resources and time constraints. Experimental results on eight games within our framework demonstrate the significant superiority of our method over the Single Observer Information Set Monte Carlo Tree Search(SO-ISMCTS) algorithm under limited decision time constraints. The entropy variation of game states in our framework enables explainable decision-making, which can also be used to analyze the appeal of deduction games and provide insights for game designers.

Information Set, Deduction Games, Information Entropy, Fun of Games, Game Design, Search Algorithm

1 Introduction↩︎

Deduction games represent an important category of games with a substantial user base engaging in regular play. Examples include single player games such as Black Box[1], Mastermind, and Wordle, and multiplayer games such as Cluedo, Scotland Yard, and The Resistance. While some poker games feature deduction elements, the term “deduction games” specifically denotes those where deductive reasoning is the fundamental mechanism[[2]][3]. The structure of single-player deduction games typically involves the concealment of a ‘secret code’ at the game’s start, prompting players to engage in a sequence of queries (actions). Following each player action, feedback is provided by the in-game ‘Oracle.’ Players must continually engage in action and reasoning processes until they identify the secret.

Our longer term goal is to better understand single and multi-player deduction games, and provide agents to play the games and tools to analyse the games. In this paper we focus on single-player games. The main contributions are:

A framework for implementing single-player deduction games and different kinds of information sets.

Information Set Entropy Search - ISES: a family of algorithms to play the games.

Entropy variation as measure of a game’s challenge or potential interest.

Results on eight deduction games.

2 Related Works↩︎

Deduction games are a class of imperfect information games, in which Information Set is a key concept: it is the set of all game states that are consistent with an agent’s knowledge at a point in the game. The Information Set Monte Carlo tree search(ISMCTS) algorithm specializes in sampling potential states within an information set, effectively tackling the complexities and strategic subtleties inherent in games where complete information is not universally accessible [4]. It exhibits superior performance in deduction games, as demonstrated by Reinhardt’s utilization of the SO-ISMCTS agent in Secret Hitler, outperforming rule-based approaches [5].

Our work is closely related to Downing et al (2019) [6], who used entropy considerations to derive a deduction game policy. Although the core principles of entropy-based policy are similar, the methods are significantly different.

Downing et al used a Domain-Specific Language (DSL) to describe deduction games.

In our work we offer an alternative approach that has significant advantages:

No need to learn a DSL.

Fast game solving in many cases, though this depends on the game and the variant of the algorithm used.

The anytime variants of our algorithm offer extremely rapid game solving which often remains close to optimal.

We envisage that ISES can be more easily adapted to multi-player and/or impure deduction games i.e. games with a deduction element combined with other mechanics.

Besides, methods similar to ours have also been applied in research on specific games. For instance, Neuwirth and Kooi have used entropy-based methods in Mastermind[[7]][8]. However, our work focuses more on the versatility of the method and provides a deeper analysis of deduction games. Our final goal is to explore how entropy-based methods can be used to evaluate game states in information-based gameplay to optimize existing search algorithms. This application of entropy differs completely from some past studies that used entropy to optimize policy iteration[9].

3 Deduction Game Framework↩︎

3.1 Structure of Single-player Deduction Games↩︎

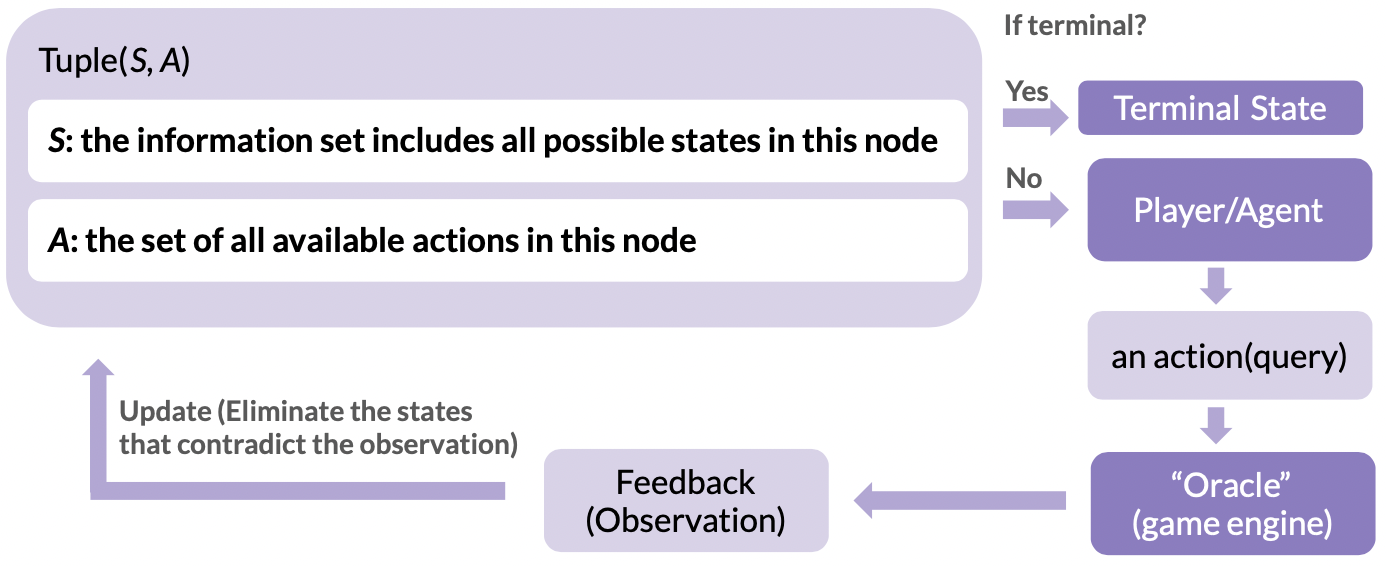

Figure 1: The structure of typical single player deduction games

Single-player deduction games usually start with a hidden ‘secret’, and players ask questions and receive feedback until they discover the truth. Unlike typical partially observable Markov processes represented by a 6-tuple \((S,A,T,R,\Omega,O)\)[10], in these games, the ‘Oracle’ is usually truthful, and both feedback and state transitions are deterministic. Thus, transition probabilities can be ignored, and the reward form differs from other games since the goal is often to uncover the secret in the fewest steps possible.

A simpler model can be used to represent this process, as shown in Fig. 1, where the game’s decision-making information is represented by a 2-tuple. The information set is the collection of all possible states at a decision point, and the action space is all legal actions at that point. Without termination conditions, players must continually choose actions (make queries) and exclude possibilities contradicted by observations to update the information set. Termination conditions vary by game, usually ending when the information set has only one possibility, though different rules may apply to some games.

3.2 Two representations of information sets↩︎

In our framework, the information entropy of game information sets is used to evaluate the degree of uncertainty in an agent’s incomplete information state within a game. Meanwhile, the goal of players in deduction games is to obtain certain state information, that is, to reduce the information entropy of the information set to a target value. We note that the information state of some games can be represented in table form, whereas for some games, due to the nature of combinatorial mathematics, their information states cannot be updated in a tabular form. The following two examples of games illustrate this point.

Fake Coin Game: In this game, among n identical-looking coins, one coin is slightly lighter or heavier. Players can infer the identity and weight of the fake coin by placing coins on both sides of a balance scale and observing its tilt. The game terminates when the fake coin and its weight are determined[11].

Mastermind: In its classic form, the secret comprises a code of four colored pegs, with each peg chosen from a set of six colors. Players receive feedback by proposing their own four-color code. The number of black pegs in the response indicates the number of pegs with correct colors and positions, while white pegs represent correct colors but incorrect positions. The game terminates when the correct code is provided[12].

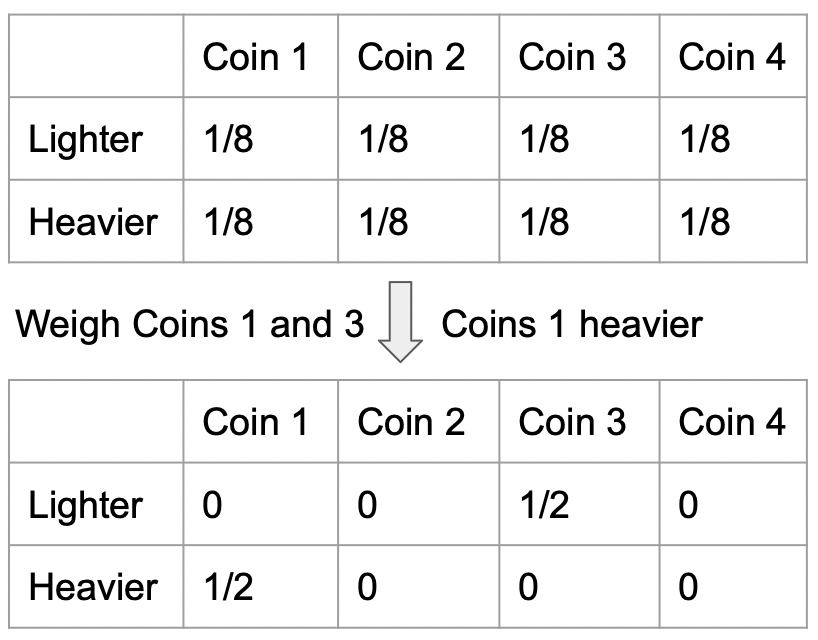

Figure 2: The change in the information state matrix of the 4-coin fake coin game after a weighing result.

As shown in Fig. 2, the information state of the fake coin game can be represented by a table, and the table can be accurately iterated at each step of the game to reflect the information currently held by the player. However, as an NP-complete problem, Mastermind cannot represent its information state as a two-dimensional matrix that can be iterated step-by-step due to its combinatorial nature[13]. Consequently, obtaining its optimal solution cannot be transformed into a directly solvable polynomial computation problem[14].

This leads to the need in our framework to calculate the entropy of information states in two ways. Based on Shannon’s entropy formula (1) [15], for games like the fake coin game, it is only needed to calculate the entropy of the probability table of the information state. For games like Mastermind, however, it is necessary to know the number of all possible codes in the current information set. Since the probability of all possible codes is equal, the calculation formula is as shown in formula (2) and n is the number of possible codes.

\[\begin{align} H(X) &= -\sum_{i=1}^{n} P(x_i) \log P(x_i) \end{align}\]

\[H(X) = -\sum_{i=1}^{n} \frac{1}{n} \log \frac{1}{n} = -n \cdot \frac{1}{n} \log \frac{1}{n} = \log n\]

3.3 Entropy analysis of games↩︎

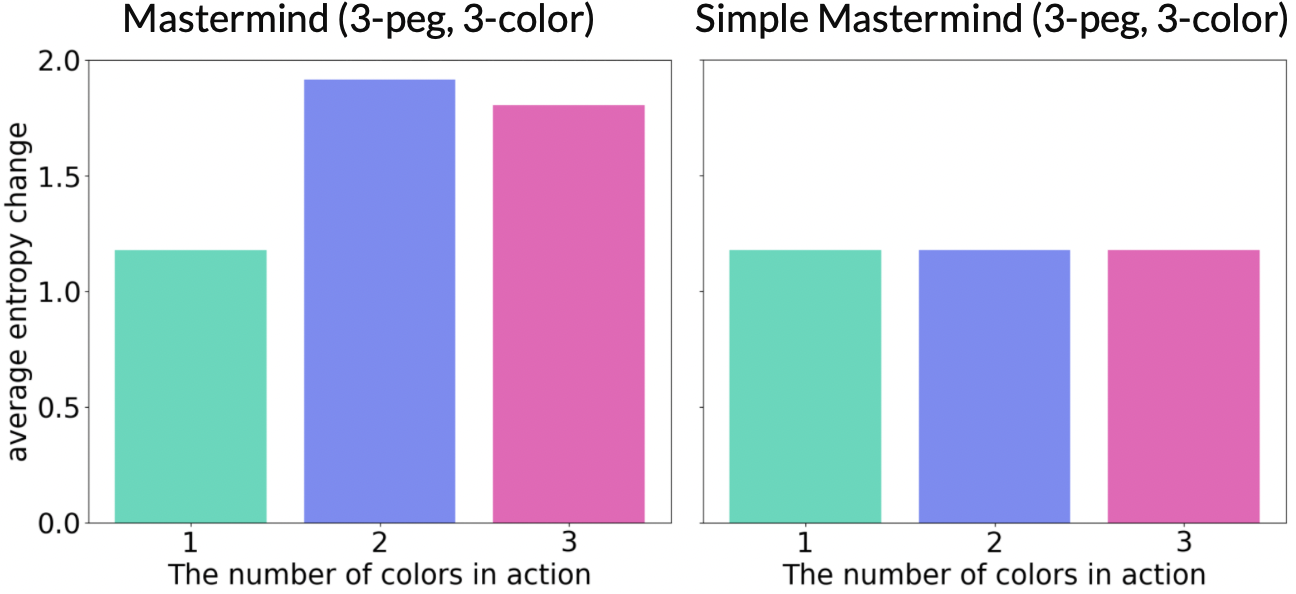

The use of state information entropy to evaluate game states not only confers a high level of interpretability upon strategies but also manifests the strategic depth of a game. For example, in Fig. 3, we demonstrate the mean value of the change in state information entropy induced by various actions at the initial state across all possible starting configurations in Mastermind and Simple Mastermind—games characterized by three pegs and colors.

Figure 3: The impact of different actions on the entropy change of game state information in the initial state.

The bar graph for Mastermind shows starting with two colors optimally reduces entropy and increases certainty. In Simple Mastermind, unlike the standard version of Mastermind, the feedback for guesses includes only black pegs, indicating correct colors in the correct positions, but no white pegs for correct colors in incorrect positions. Consequently, all first moves are similar, lacking strategic depth. Fig. 3 illustrates this difference, explaining why Mastermind is more intriguing.

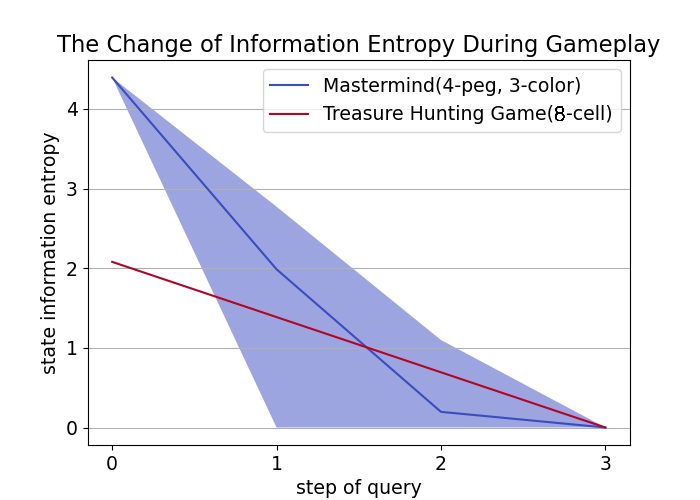

Is strategic superiority of some actions sufficient for an engaging deduction game? We visualized state information entropy changes through successive query rounds in Treasure Hunting Game and Mastermind Fig. 4. Results assume an agent invariably adopting the optimal average strategy, with algorithmic details in the next section.

Figure 4: The variation of state information entropy of two games over 20 experiments with the use of the optimal average entropy reduction strategy.

In Treasure Hunting Game, players select cells to locate a hidden treasure. The game ends when treasure’s location is narrowed to one cell. Fig. 4 shows entropy range over game iterations. For Treasure Hunting Game, entropy change with optimal strategy is a straight line, while Mastermind has a range. Binary search optimally locates treasure in 8 cells, consistently halving uncertainty. But Mastermind, an NP-complete problem[14], has uncertain outcomes even with the policy of minimizing entropy on average, lacking a polynomial solution like Treasure Hunt.

This explains why Mastermind is more interesting despite both being strategic games: Mastermind has uncertain entropy changes and no definitive resolution, while Treasure Hunt loses appeal once binary search is known. In conclusion, two factors make deduction games interesting: 1) Spatial - players’ choices at decision points aren’t equivalent. 2) Temporal - an optimal action’s outcome remains uncertain after exploration, as Mastermind’s entropy range in Fig. 4 shows. Same initial move could reduce entropy to zero or require more steps.

4 Information Set Entropy Search↩︎

In the previous section, we introduced a methodology for assessing game states using information entropy and discussed that the optimal action in single-player games should maximize average information gain. This idea has been expanded into a generalized search algorithm called the ISES algorithm.

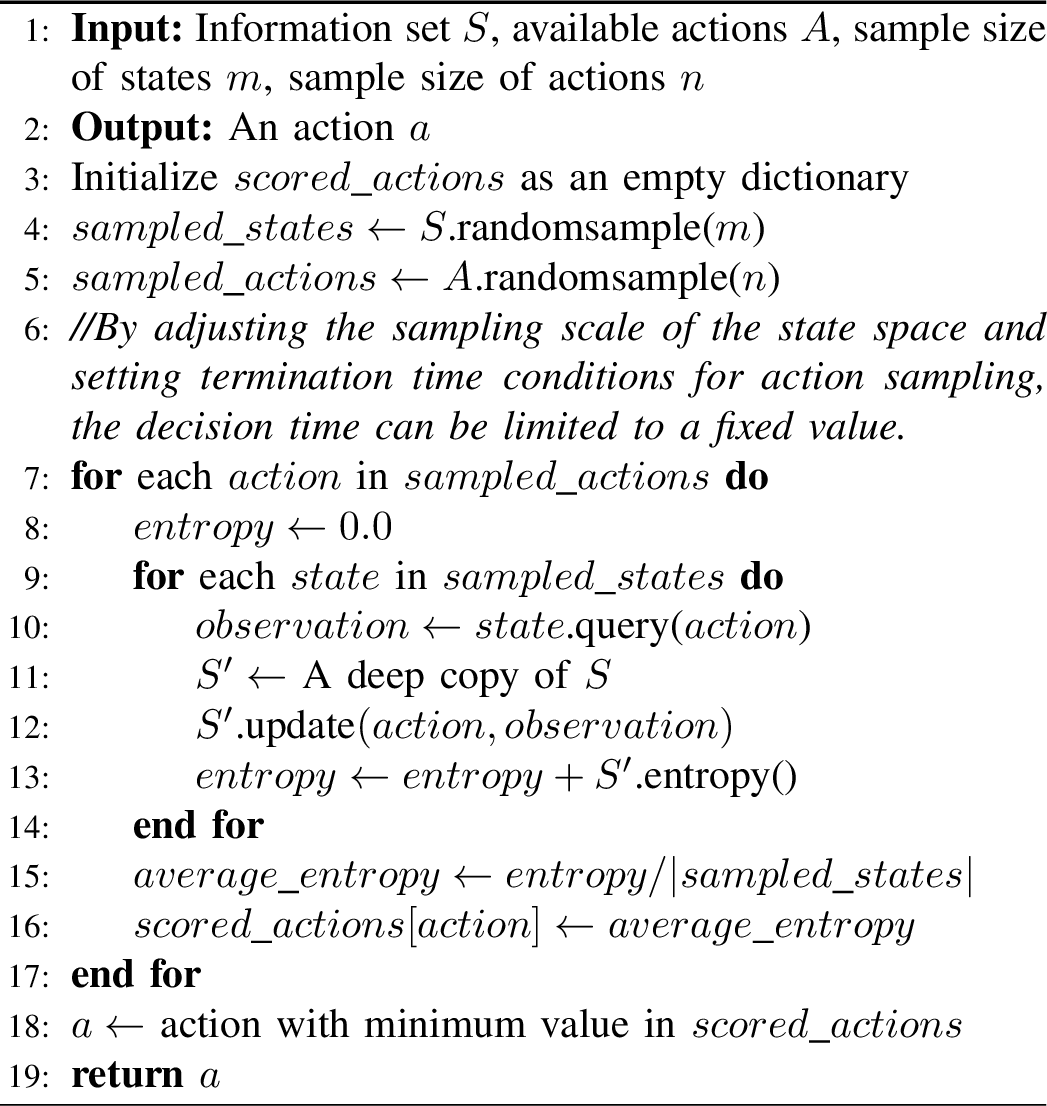

Due to the exhaustive nature of the original ISES algorithm, which requires significant computational resources, we propose a variant that allows for controlled sampling scale. The pseudocode below shows that state and action space sampling scales can be manually set. By establishing a termination time, sampling can be halted upon reaching the predefined time limit, enabling immediate decision-making. This ensures suboptimal yet advantageous decisions within a manageable timeframe.

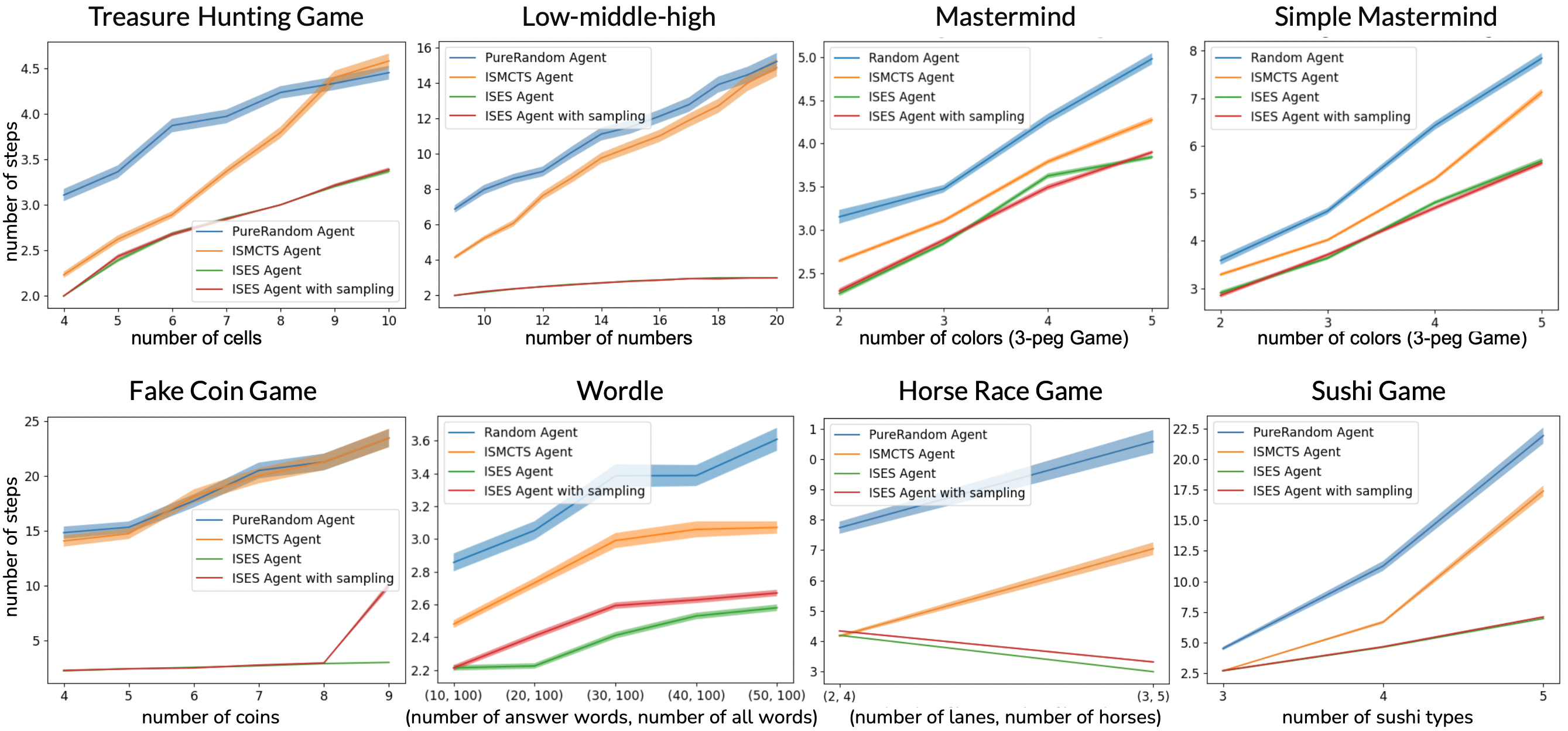

Figure 5: The performance of four agents in different game sizes across 8 games.

Figure 6: Sampling Information Set Entropy Search

5 Results and Conclusions↩︎

To assess the ISES algorithm’s effectiveness, we tested it on eight games and compared it with two agents: the Random Agent and the SO-ISMCTS, which is effective in many imperfect information games. The goal of these games is to decipher the game’s secret efficiently, so we designed the final reward to be inversely related to the number of steps taken.

For the eight games within our framework, we designed experiments across varying scales, with each of the four agents undergoing 500 trials for each variant. The results displayed in Fig. 5 encapsulate their average performance across these games. It is evident that the ISES algorithm consistently outperformed the others, achieving game completion in fewer average steps across all scales.

The sampled version of ISES, even with a 0.1s decision time limit, closely mirrored the original ISES’s efficacy, particularly in games with lower computational complexity. This indicates that for smaller-scale games, partial random sampling is sufficient to maintain performance because many actions and states are equivalent. However, as game complexity escalates, the sampled approach may falter, as seen in the Fake Coin Game results, where exponential complexity growth at nine coins challenged the sampled ISES algorithm.

The experimental findings also highlight the limitations of the ISMCTS algorithm, particularly in games with extensive search spaces where performance diminishes due to insufficient simulation within the constrained decision time. In our studies, the ISMCTS agent, like the sampled ISES agent, was allotted a 0.1s decision time cap, yet its performance was significantly inferior. This gap widened with increasing game scale due to the exponential growth of the search space, as clearly shown in the Treasure Hunt Game and Low-Middle-High.

In future we aim to expand our framework to include multiplayer deduction games such as Cluedo. The dynamics of multiplayer deduction games involve maximising one’s own information while minimising the information gain of our opponents, and entropy-based methods seem a natural way to approach this.