On the Regret of Recursive Methods for

Discrete-Time Adaptive Control with Matched Uncertainty

April 02, 2024

Abstract

Continuous-time adaptive controllers for systems with a matched uncertainty often comprise an online parameter estimator and a corresponding parameterized controller to cancel the uncertainty. However, such methods are often unimplementable, as they depend on an unobserved estimation error. We consider the equivalent discrete-time setting with a causal information structure. We propose a novel, online proximal point method-based adaptive controller, that under a weak persistence of excitation (PE) condition is asymptotically stable and achieves finite regret, scaling only with the time required to fulfill the PE condition. We show the same also for the widely-used recursive least squares with exponential forgetting controller under a stronger PE condition.

1 Introduction↩︎

Adaptive control studies controllers that can adapt to or learn unmodelled changes in the dynamics [1]. Adaptive controllers often guarantee perfect asymptotic tracking or regulation, and parameter convergence [2], [3]. Concurrently with the developments in adaptive control, the theory of online optimization was established to minimize an a priori unknown, sequentially revealed cost [4]. The connections between the two have recently been highlighted [5], [6], showing that adaptive controllers are closely linked to an online cost optimization problem and are often proportional to an online estimation cost gradient. In this work, we consider adaptive controllers that aim to minimize a cost function while controlling the system [7]. Given such a controller, we note that asymptotic stability alone does not automatically provide performance guarantees on the cost [8], [9]. Instead, we provide finite-time guarantees by characterizing the regret [4] of our adaptive controller, a concept borrowed from online learning to quantify the additional cost incurred by the controller over a horizon due to partial model knowledge.

We consider systems with matched uncertainty [2], where the unknown dynamics is parameterized by an unknown parameter vector and a known, state-dependant feature matrix. In this setting, the uncertainty can be exactly canceled by the control input given knowledge of the true parameter. We aim to find causal adaptive controllers that estimate this parameter recursively while stabilizing the system and minimizing regret. Most continuous-time adaptive controllers in this setting utilize Lyapunov theory and provide only asymptotic stability guarantees, see for example [1]–[3]. Using the same tools, recently, a finite \(\mathcal{O}(1)\) regret bound for linear systems is derived in [10], and for nonlinear time-varying systems in [11]. In [11], the authors also extend the results to a discrete-time setting, achieving a \(o(T)\), sublinear-in-time, bound with a causal formulation, and a \(\mathcal{O}(1)\) with a non-causal one. In contrast to our proposed method, the Lyapunov-based adaptive controllers in [10], [11] are not realizable in discrete time, as the parameter update depends on the current, unobserved error, making it non-causal. The online gradient descent-based algorithm in [11], although causal, achieves \({o}(T)\) regret even in the deterministic case. The difficulty is inherent in the discrete-time formulation of the problem, which introduces a one-step delay in the error observation. In discrete time, the convenient Lyapunov function cancellation [2] no longer holds and discrete-time Lyapunov functions are known to be harder to construct [12].

The recursive learning algorithm for the adaptive controller can be set up to relate to an online or “running" cost. This setting is studied in the time-varying optimization literature providing asymptotic tracking and convergence guarantees for online costs, e.g., [13], [14]. However, in this line of work, the problem setup considers no underlying dynamics for the state, and the regret generally is not studied.

We design causal, uncertainty-matching, recursive methods for discrete-time, nonlinear, time-varying systems. Our contributions are threefold. First, we introduce a recursive proximal learning (RPL) parameter estimation algorithm based on the proximal point method [15]. Under a weak notion of persistence of excitation (PE), RPL produces estimates of the unknown parameters that are contractive with respect to the true one. Second, we show that RPL-based adaptive control achieves finite regret scaling with the time required to achieve the weak PE condition. By contrast, in [11] finite regret is only achieved through access to a Lyapunov function or by a non-causal controller. Finally, we analyze the regret of the recursive least-squares with forgetting factor (RLSFF) [16] in this setting. We show that while both RPL and RLSFF achieve finite regret with similar bounds, this is established for RPL under a weaker PE condition. We demonstrate the algorithms on a discrete-time model reference adaptive control (MRAC) [17] numerical example.

Notation: The sets of positive real numbers, positive integers, and non-negative integers are denoted by \(\mathbb{R}_{+}\), \(\mathbb{N}_{+}\) and \(\mathbb{N}\), respectively. For a given vector \(x\), its Euclidean norm is denoted by \(\|x\|\). The spectral norm of a square matrix \(W\) is denoted by \(\|W\|\), and the largest and smallest singular values and eigenvalues by \(\sigma_{min}(W)\), \(\sigma_{max}(W)\), and \(\lambda_{min}(W)\), \(\lambda_{max}(W)\) respectively. For a positive scalar \(r\in \mathbb{R}_+\), we define the closed ball around the origin as \(\mathcal{B}_r:=\{x\in \mathbb{R}^n \;| \;\|x\|\leq r\}\).

2 Problem Statement↩︎

We consider nonlinear dynamical systems with matched uncertainty of the form \[\label{eq:nonlinear95matched} x_{k+1} = f_k(x_k) + B_k(x_k)\left(u_k - \alpha(x_k)\right),\tag{1}\] where \(f: \mathbb{N} \times \mathbb{R}^n \rightarrow \mathbb{R}^n\) models the known nominal dynamics and satisfies \(f_k({0}) = {0}\) for all \(k\in \mathbb{N}\), \(B_k: \mathbb{N}\times\mathbb{R}^n \rightarrow \mathbb{R}^{n\times m}\) is a known input matrix with a bounded norm, and \(\alpha: \mathbb{R}^n \rightarrow \mathbb{R}^m\) is an unknown mapping to the input space, capturing the uncertain dynamics of the model. We assume that \(\alpha\) can be exactly parameterized by possibly nonlinear, but known and bounded basis matrices, \(\phi: \mathbb{R}^n \rightarrow \mathbb{R}^{p\times m}\), that map the state to a feature in \(\mathbb{R}^{p\times m}\). The uncertainty is then given by \(\alpha(x_k) = \phi^\top(x_k)\theta^\star\), where \(\theta^\star \in \mathbb{R}^{p}\) is an unknown weighting vector5. We also assume \(\|B_k(x_k)\phi^\top(x_k)\| \leq b\) for all \(k\in \mathbb{N}\), \(x \in \mathbb{R}^n\) and some \(b \in \mathbb{R}_+\). The tracking problem under partial model knowledge can also be cast into this framework in the context of MRAC, as shown in 5.

We characterize the performance of a given controller for system 1 over a time horizon \(T\) by comparing it to a benchmark control signal \(u^\star=\begin{bmatrix} {u^\star_0}^\top & \ldots & {u^\star_{T-1}}^\top \end{bmatrix}^\top\) that cancels the uncertainty perfectly and is defined below. We use regret to quantify the controller performance \[\label{eq:regret} \mathcal{R}_T({u}) = \sum_{k=0}^{T-1} c(x_k)- c(x^\star_k),\tag{2}\] where \(u=\begin{bmatrix} u_0^\top & \ldots & u_{T-1}^\top \end{bmatrix}^\top\) denotes the control signal of a given controller, \(x_k\) and \(x^\star_k\) are state sequences due to the control signals \(u\) and \(u^\star\), respectively, and \(c:\mathbb{R}^n \rightarrow \mathbb{R}\) is a stage cost. We restrict our analysis to locally Lipschitz continuous costs and assume for any \(R\in \mathbb{R}_+\), there exists a \(L_c \in \mathbb{R}_+\), such that for all \(x,y \in \mathcal{B}_R\), \[\label{eq:lipschitz95cost} \|c(x) - c(y)\| \leq L_c \|x-y\|.\tag{3}\]

Assumption 1. (System Dynamics) The nominal dynamics \(x_{k+1} = f_k(x_k)\) are uniformly, globally ediss [18]. In other words, there exist \(c_0,c_w, r_w \in \mathbb{R}_+\) and \(\rho \in (0,1)\), such that for any \(x_0,y_0 \in\mathbb{R}^n\), \(w_k \in \mathcal{B}_{r_w}\), and all \(k \in \mathbb{N}\) the perturbed dynamics \(y_{k+1} = f_k(y_k)+w_k\) satisfy \[\|x_k-y_k\| \leq c_0 \rho^k\|x_0-y_0\|+c_w\sum_{i=0}^{k-1}\rho^{k-i-1}\|w_i\|.\]

The ediss property characterizes only the known and autonomous part of the dynamics. It is known that if \(f_k\) is smooth in the state and is contractive, then it is also ediss [11], [19]. If \(f_k\) is not smooth, then under milder conditions, we show in [20] that exponential stability implies ediss.

2.1 Online Estimation↩︎

We consider adaptive control laws that produce control inputs of the form \(u_k =\phi^\top(x_k)\theta_k\) where \(\theta_k \in \mathbb{R}^{p}\) is an online estimate of \(\theta^\star\) at time \(k\). The closed-loop dynamics for a controller of this form is given by \[\label{eq:suboptimal} x_{k+1} = f_k(x_k) + \underbrace{B_k(x_k)\phi(x_k)^\top\left(\theta_k-\theta^\star\right)}_{w_k(x_k)},\tag{4}\] where \(w_k(x_k)\) can be thought of as a state-dependant artificial disturbance introduced due to an inexact uncertainty matching. One can also consider a benchmark counterfactual control input \(u_k^\star: = \phi(x_k^{\star})^\top \theta^\star\) and the corresponding signal \(u^\star\) that perfectly cancels out the uncertainty in 1 leading to a benchmark state evolution \[\label{eq:optimal} x_{k+1}^\star = f_k(x_k^\star).\tag{5}\] Note that \(u^\star\) is a counterfactual policy that is not realizable, as \(\theta^\star\) is unknown. To simplify the analysis, we take \(x_0=x_0^\star\).

A standard setup in adaptive control design is to produce estimates \(\theta_k\) that minimize the magnitude of \(w_k(x_k)\), effectively steering the state of 4 to that of 5 thanks to the ediss property. This is often done by setting up a least squares estimation problem, minimizing the following online estimation cost for all \(k \in \mathbb{N}_+\) \[\begin{align} \label{eq:nominal95cost} \theta_k &= \mathop{\mathrm{arg\,min}}_\theta h_{k-1}(\theta), \\ h_{k-1}(\theta): \!&= \! \frac{1}{2}\sum_{i=0}^{k-1}\|B_i\phi_i^\top\left(\theta-\theta^\star\right)\|^2 = \frac{1}{2}\|\Phi_{k}{\theta} -Y_{k}\|^2,\notag \\ \nonumber Y_k &= \begin{bmatrix} y_0 \\ y_1 \\ \vdots \\ y_{k-1} \end{bmatrix}, \qquad \Phi_k = \begin{bmatrix} B_0\phi_0^\top\\ B_1\phi_1^\top\\ \vdots \\ B_{k-1}\phi^\top_{k-1} \end{bmatrix},\\ \nonumber y_k &= x_{k+1} - f_k(x_k) - B_k\phi_k^\top \theta_k = B_k\phi_k^\top \theta^\star. \end{align}\tag{6}\] Note that at time \(k\), the latest available online estimation cost is \(h_{k-1}\), as \(y_k\) is not yet available. Solving 6 directly requires computing an expensive matrix inverse at each time step and maintaining an increasing memory. In this work, we study two recursive methods that alleviate this issue, both optimizing an online cost related to 6 . In particular, we introduce and analyze a recursive proximal learning method in Section 3, and also study the well-established recursive least squares with forgetting factor [16] in Section 4.

2.2 Regret↩︎

Speed or velocity-gradient controllers are introduced in [7] to solve the continuous-time equivalent of the online estimation problem 6 in the framework of (integral) goal-oriented control. It is shown that these methods achieve finite estimation error in the limit, under the assumption that the controller at time \(t\) can depend on the estimation error \({\theta}_t-\theta^\star\). Recent works [10], [11] noted that if an exponential Lyapunov function exists for the nominal system, a speed-gradient descent-based uncertainty matching controller achieves finite quadratic cost on the state. In particular, the suboptimal closed-loop state trajectory \(x_t\) satisfies \[J_\mathrm{cont}(x_0,u):=\lim_{T\rightarrow \infty}\int_{0}^{T}\|x_t\|^2dt = \mathcal{O}(1).\] Moreover, the existence of the exponential Lyapunov function also implies that \(J_{\mathrm{cont}}(x_0,u^\star)\) for the optimal state evolution is finite. Defining continuous-time regret as \(\mathcal{R}_{\mathrm{cont}}(u):= J_{\mathrm{cont}}(x_0,u) - J_{\mathrm{cont}}(x_0,u^\star)\), an \(\mathcal{O}(1)\) bound and the extra cost accumulated by the adaptive control law as compared to the benchmark follows directly. In [11] a discrete-time version of the speed-gradient algorithm is introduced with a finite cost bound, but with the same assumption that at time \(k\) the error \(\theta_k-\theta^\star\) is available, making the controller non-causal. Without this assumption, the authors achieve \(o(T)\) sublinear regret using an online learning toolkit. In this work, we consider causal controllers that minimize 6 at time \(k\) with access only to \(y_{k-1}\). To characterize the performance of such an adaptive, uncertainty-matching controller, we analyze the regret 2 .

3 Recursive Proximal Learning↩︎

The recursive proximal learning algorithm corresponds to the \(\varepsilon\)-scaled proximal operator of 6 evaluated at the previous estimate \[\label{eq:proximal95update} \begin{align} \theta_{k} &= \mathrm{prox}_{\varepsilon h_{k-1}}\left(\theta_{k-1}\right)\\ &=\mathop{\mathrm{arg\,min}}_{\theta}\left(h_{k-1}(\theta) + \frac{\varepsilon}{2}\|\theta-\theta_{k-1}\|^2\right), \end{align}\tag{7}\] for some \(\theta_0 \in \mathbb{R}^p\) and \(\varepsilon \in \mathbb{R}_+\). From the online learning perspective [4], \(\theta_k\) can be thought of as the minimizer of the latest available cost \(g_{k-1}\) at time \(k\) \[\label{eq:proximal95cost} g_{k-1}(\theta) = h_{k-1}(\theta) + \frac{\varepsilon}{2}\|\theta-\theta_{k-1}\|^2.\tag{8}\] While a closed-form solution to 7 exists, it requires memory increasing with time. The following equivalent set of updates to 7 remedies both issues. In particular, for all \(k\in \mathbb{N}\), the following is equivalent to 7 \[\label{eq:rpl} \begin{align} P_{k+1}^{-1} &= P_k^{-1} + \phi_kB_k^\top B_k \phi_k^\top, \\ H_{k+1} &= H_{k} + \phi_k B_k^\top B_k\phi_k^\top\\ s_{k+1} &= s_{k} + \phi_kB_k^\top y_k\\ \theta_{k+1} &= \theta_k - P_{k+1}\left(H_{k+1} \theta_k - s_{k+1}\right), \end{align}\tag{9}\] with the initialization \(H_0=\boldsymbol{0}_{p \times p}\), \(s_0={0}\) and \(P_0^{-1} = \varepsilon I_p\).

3.1 Contraction in Parameter Space↩︎

We define \(T_s\)-weak PE as follows.

Definition 1. A matrix signal \(\phi_k:\mathbb{N} \rightarrow \mathbb{R}^{p \times n}\) is called \(T_s\)-weak persistently exciting if for a \(T_s \in \mathbb{N}\), there exists a \(\delta \in \mathbb{R}_+\) such that \[\begin{align} 0 <\delta I_p \leq \sum_{i=0}^{T_s}\phi_i\phi_i^\top. \end{align}\]

We show below that under a \(T_s\)-weak PE assumption, the RPL update 7 enjoys a contraction property on the parameter; moreover, under an additional assumption, the lifted input vector \(\Phi_k \theta_k\) is also contractive.

Assumption 2. There exists a \(\beta \in \mathbb{R}_+\) such that \(\lim_{T\rightarrow \infty}\sum_{i=0}^{T}\phi_i B_i^\top B_i\phi_i^\top\leq \beta I_p\)

Lemma 1. Assume \(B_k \phi_k^\top\) is \(T_s\)-weak persistently exciting, then the RPL estimate 7 satisfies \[\label{eq:theta95contraction} \begin{align} \|{\theta}_{k} - \theta^\star\| &\leq \eta\|{\theta}_{k-1} - \theta^\star\|, \qquad \forall k\geq T_s\\ \|{\theta}_{k} - \theta^\star\| &\leq \|{\theta}_{k-1} - \theta^\star\|, \qquad \forall 0< k < T_s \end{align}\qquad{(1)}\] where \(\eta = \frac{\varepsilon}{\delta+\varepsilon} \in (0,1)\). Moreover, if Assumption 2 holds, and \(\varepsilon < \frac{\delta\sqrt{\delta}}{\sqrt{\beta}-\sqrt{\delta}}\) then there exists a \(\gamma \in (0,1)\) such that \[\begin{align} \|\Phi_{k+1} \left({\theta}_{k}-\theta^\star\right)\| &\leq \gamma\|\Phi_{k} \left({\theta}_{k-1}-\theta^\star\right)\|, \qquad \forall k \geq T_s,\\ \|\Phi_{k+1} \left({\theta}_{k}-\theta^\star\right)\| &\leq \sqrt{\beta} \|\theta_0-\theta^\star\|, \qquad \forall 0\leq k < T_s. \end{align}\]

Proof. The proximal update 7 can equivalently be represented by a single online Newton step update [21] on 8 . At time \(k\), it is equivalently represented by \[\theta_{k} = \theta_{k-1} - \left(\nabla^2g_{k-1}(\theta)\Bigr|_{\theta=\theta_{k-1}}\right)^{-1}\nabla g_{k-1}(\theta)\Bigr|_{\theta=\theta_{k-1}}.\] Subtracting the true \(\theta^\star\) from both sides, denoting \(\tilde{\theta}_k:=\theta_k-\theta^\star\), noting that \(Y_k = \Phi_k \theta^\star\) and recalling the definition of \(h_{k-1}\) from 6 \[\begin{align} \tilde{\theta}_{k} &= \tilde{\theta}_{k-1}-\left(\Phi_{k}^\top \Phi_{k} + \varepsilon I_p\right)^{-1}\Phi_{k}^\top \left(\Phi_{k}\theta_{k-1}-Y_{k}\right)\\ &= \tilde{\theta}_{k-1}-\left(\Phi_{k}^\top \Phi_{k} + \varepsilon I_p\right)^{-1}\Phi_{k}^\top\Phi_{k}\tilde{\theta}_{k-1}\\ &= \left(I_p - \left(\Phi_{k}^\top \Phi_{k} + \varepsilon I_p\right)^{-1}\Phi_{k}^\top\Phi_{k} \right)\tilde{\theta}_{k-1}\\ &:= \left(I_p - M_{k}\right)\tilde{\theta}_{k-1}, \end{align}\] where \(M_k = \left(\Phi_{k}^\top \Phi_{k} + \varepsilon I_p\right)^{-1}\Phi_{k}^\top\Phi_{k}\). Using the orthonormality property of singular value decomposition of symmetric matrices and the weak persistence of excitation of \(B_k\phi_k\), for all \(k\geq T_s\) \[\begin{align} \sigma_{min}\left(M_{k}\right) &\geq \frac{\delta}{\varepsilon + \delta} \in (0,1),\\ \eta_k:= \|I_p - M_{k}\|&\leq \frac{\varepsilon}{\delta+\varepsilon} \in (0,1), \end{align}\] obtaining the contraction result for parameter since \(\sigma_{max}(M_k) \in(0,1)\). For all \(0< k < T_s\), if the \(T_s\)-weak PE condition is not fulfilled, hence \(\sigma_{min}(M_k) = 0\) and \(\|\tilde{\theta}_k\|\leq \| \tilde{\theta}_{k-1}\|\).

To prove the second part, note that for a full column rank matrix \(A\), \(\|A\tilde{\theta}_{k+1}\| = \|\tilde{\theta}_{k+1}\|_{A^\top A}\), and \({{\lambda_{min}(A^\top A)}} = {\sigma_{min}(A^\top A)}\) , and likewise for \(\lambda_{max}\). Then, for all \(k\geq T_s\) \[\begin{align} &\sigma_{min}\left(\left(\Phi_{k+1}^\top\Phi_{k+1}\right)^{-1}\right)\|\tilde{\theta}_{k}\|^2_{\Phi_{k+1}^\top\Phi_{k+1}} \leq \|\tilde{\theta}_{k}\|^2 \\ &\leq \eta^2 \|\tilde{\theta}_{k-1}\|^2 \leq \eta^2 \sigma_{max}\left(\left(\Phi_{k}^\top\Phi_{k}\right)^{-1}\right) \|\tilde{\theta}_{k-1}\|_{\Phi_{k}^\top\Phi_{k}}^2. \end{align}\] It follows that \[\begin{align} \|\Phi_{k+1}\tilde{\theta}_{k}\| \leq \eta \sqrt{\frac{\sigma_{max}\left(\Phi_{k+1}^\top\Phi_{k+1}\right)}{\sigma_{min}\left(\Phi_{k}^\top\Phi_{k}\right)}} \|\Phi_{k} \tilde{\theta}_{k-1}\|, \end{align}\] where we used the fact that \(\sigma_{max}\left((A^\top A)^{-1}\right) = 1/\sigma_{min}(A^\top A)\) and \(\sigma_{min}\left((A^\top A)^{-1}\right) = 1/\sigma_{max}(A^\top A)\). The proof is completed by noting that for all \(k\geq T_s\) \[\gamma:= \eta \sqrt{\frac{\sigma_{max}\left(\Phi_{k+1}^\top\Phi_{k+1}\right)}{\sigma_{min}\left(\Phi_{k}^\top\Phi_{k}\right)}} \leq \frac{\varepsilon\sqrt{\beta}}{\varepsilon\sqrt{\delta} +\delta\sqrt{\delta}}<1,\] given \(\varepsilon < \frac{\delta\sqrt{\delta}}{\sqrt{\beta}-\sqrt{\delta}}\). Finally, for all \(k \in \mathbb{N}\), \(\|\Phi_{k+1}\tilde{\theta}_k\|\leq\|\Phi_{k+1}\|\|\tilde{\theta}_k\|\leq \sqrt{\beta}\|\tilde{\theta}_0\|\). ◻

3.2 Closed-loop Analysis↩︎

Consider the suboptimal state evolution 4 under the RPL controller \(u_k^{\mathrm{RPL}} = \phi_k^\top\theta_k\), with \(\theta_k\) given in 7 . We can represent it equivalently as \[\label{eq:suboptimal95rpl} x_{k+1} = f_k(x_k)+ \underbrace{S_k\left(\Phi_{k+1}{\theta}_k-\Phi_{k+1}\theta^\star\right)}_{w_k(x_k)},\tag{10}\] where \(S_k:= \begin{bmatrix} \boldsymbol{0} & \ldots & \boldsymbol{0} & I_{n}, \end{bmatrix} \in \mathbb{R}^{n \times n(k+1)}\) is a selector matrix that selects the last component of \(\Phi_{k+1}\left({\theta}_k-\theta^\star\right)\), corresponding to \(B_k\phi_k^\top(\theta_k-\theta^\star)\). Similarly, the optimal state evolution under \(u^\star\) is given by \[\label{eq:optimal95rpl} x_{k+1}^\star =f_k(x^\star_k) +\underbrace{S_k\left(\Phi^\star_{k+1}\theta^\star-\Phi^\star_{k+1}\theta^\star\right)}_{\boldsymbol{0}},\tag{11}\] where \(\Phi^\star_{k+1}:= \Phi(x^\star_{[0,\ldots,k]})\). In the following theorem, we characterize the closed-loop system 10 in terms of asymptotic stability and regret. In particular, we show that the regret of the RPL controller scales with \(\mathcal{O}\left(\|\tilde{\theta}_0\| T_s\right)\).

Theorem 1. Assume that 1 satisfies Assumption 1, and \(B_k\phi_k\) is \(T_s\)-weak persistently exciting, then the RPL adaptive controller

renders the closed-loop system asymptotically stable,

achieves finite regret

\[\mathcal{R}_T(\mathrm{RPL})\leq c_w bL_c\|\tilde{\theta}_0\|\left(\frac{T_s}{1-\rho}\!+\!\frac{\rho^T\!+\!(1-\eta)\rho+\eta}{(1-\rho)^2(1-\eta)}\right),\] for the \(\eta\) established in Lemma 1. If in addition, \(B_k\phi_k\) also satisfy Assumption 2 and \(\varepsilon < \frac{\delta \sqrt{\delta}}{\sqrt{\beta} - \sqrt{\delta}}\), then \[\mathcal{R}_T(\mathrm{RPL})\leq c_w c_{p}L_c\|\tilde{\theta}_0\|\left(\frac{T_s}{1-\rho}\!+\!\frac{\rho^T\!+\!(1-\gamma)\rho+\gamma}{(1-\rho)^2(1-\gamma)}\right),\] where \(c_p := \|\Phi_{T_s}\| \leq \sqrt{\beta}\) and \(\gamma\) is defined in Lemma 1.

Proof. To show (i), recall the definition of ediss, and the fact that \(f_k({0}) = {0}\) for all \(k\in \mathbb{N}\), then consider the suboptimal state evolution of the nonlinear system under the RPL adaptive controller 10 for all \(k\in \mathbb{N}_+\), recalling that \(\tilde{\theta}_k = \theta_k - \theta^\star\) \[\label{eq:x95bounded} \begin{align} \|x_k\| &\leq c_0\rho^k\|x_0\| + c_w\sum_{i=0}^{k-1}\rho^{k-i-1}\|B_i \phi_i\tilde{\theta}_i\|\\ &< c_0\|x_0\|+\frac{c_w b\| \tilde{\theta}_0\|}{1-\rho} , \end{align}\tag{12}\] where we use the submultiplicativity property of the norms, the bound on \(B_k\phi_k\) and non-expansivity of \(\|\tilde{\theta}_k\|\) from Lemma 1. The asymptotic stability of the closed-loop then follows from \(\lim_{k \rightarrow \infty}\|x_k\| = 0\), since by Lemma 1 \(\lim_{k \rightarrow \infty}\|\tilde{\theta}_k\| = 0\), given the input-to-state stability [22] of the closed-loop system 10 .

Next, we show (ii), by first noting that since \(x_k\) and \(x_k^\star\) are bounded from 12 , it follows from 3 \[\begin{align} &\mathcal{R}_T(\mathrm{RLS})\leq \underbrace{L_c\sum_{k=T_s+1}^{T-1}\|x_k-x_k^\star\|}_{\text{I}} + \underbrace{L_c\sum_{k=0}^{T_s}\|x_k-x_k^\star\|}_{\text{ II}}, \end{align}\] where II represents the cost in the initial \(T_s\)-long non-PE phase, and I the cost improvement afterwards.

Using the ediss condition on the nominal system and noting that \(x_k\) and \(x_k^*\), given by 10 and \(\eqref{eq:optimal95rpl}\), respectively, are equal in the first time step, for all \(k>T_s\) \[\label{eq:x95bound95I} \begin{align} &\|x_k-x_k^\star\| \leq c_wb\sum_{i=0}^{k-1}\rho^{k-i-1}\|\tilde{\theta}_i\|\\ &\leq c_w b\sum_{i=1}^{T_s}\rho^{k-i}\|\tilde{\theta}_{i-1}\| + c_wb\sum_{i=T_s}^{k-1}\rho^{k-i-1}\|\tilde{\theta}_{i}\|\\ &\leq c_wb\rho^{k-T_s}\|\tilde{\theta}_{0}\|\sum_{i=1}^{T_s}\rho^{T_s-i} + c_wb\sum_{i=T_s}^{k-1}\rho^{k-i-1}\|\tilde{\theta}_{i}\|\\ &\leq \rho c_wc_{T_s}b\|\tilde{\theta}_0\|\rho^{k-T_s} + c_wb\|\tilde{\theta}_0\|\sum_{i=T_s}^{k-1}\rho^{k-i-1}\eta^{i-T_s+1}, \end{align}\tag{13}\] where the second inequality follows from the boundedness of \(B_k\phi_k\), the third from the non-expansivity of \(\|\tilde{\theta}_k\|\) from Lemma 1 and rearrangement of the constants, and the last one from the contraction of \(\|\tilde{\theta}_k\|\) and we define \(c_{T_s}= \frac{1-\rho^{T_s}}{1-\rho}\). For \(0<k\leq T_s\), we may only use the non-expansive result from Lemma 1 and hence \[\label{eq:x95bound95II} \|x_k-x_k^\star\| \leq c_w b\sum_{i=1}^{k}\rho^{k-i}\|\tilde{\theta}_{i-1}\|\leq c_wb\|\tilde{\theta}_0\|\sum_{i=1}^{k}\rho^{k-i}.\tag{14}\] Using the Cauchy Product inequality defined for two finite series \(\{a_i\}_{i=1}^T\) and \(\{b_i\}_{i=1}^T\) \[\label{eq:cauchy95product}\textstyle{ \sum_{i=0}^T\left|\sum_{j=0}^{i}a_jb_{i-j}\right| \leq \left(\sum_{i=0}^T|a_i|\right) \left(\sum_{j=0}^T|b_j|\right)},\tag{15}\] and summing 14 and 13 over \(T_s-1\) and \(T-T_s-2\), respecitvely, we get \[\begin{align} \text{ II}&\leq c_wbL_c\|\tilde{\theta}_0\|\sum_{k=0}^{T_s-1}\sum_{i=0}^k\rho^{k-i}\leq c_wc_{T_s}T_sbL_c\|\tilde{\theta}_0\|\\ \text{I} &\leq c_wbL_c\|\tilde{\theta}_0\|\left(\rho c_{T_s}\!\sum_{k=1}^{T-T_s-1}\rho^{k}\!+\!\sum_{k=0}^{T-T_s-2}\sum_{i=0}^{k}\rho^{k-i}\eta^{i+1}\right)\\ &\leq \rho c_wbL_c\|\tilde{\theta}_0\|\frac{\rho^T + \rho}{(1-\rho)^2}\\ &\quad + c_wb\eta L_c\|\tilde{\theta}_0\| \frac{\left(1-\rho^{T-T_s-1}\right)\left(1-\eta^{T-T_s-1}\right)}{\left(1-\rho\right)\left(1-\eta\right)}. \end{align}\] The first regret bound is achieved by combining the terms.

Now if Assumption 2 is satisfied and \(\varepsilon < \frac{\delta \sqrt{\delta}}{\sqrt{\beta} - \sqrt{\delta}}\), one can make use of the lifted input contraction in Lemma 1. In particular, for all \(k>T_s\), referring to 10 and 11 again \[\label{eq:x95contractive} \begin{align} &\|x_k-x_k^\star\| \leq c_w\sum_{i=0}^{k-1}\rho^{k-i-1}\|w_i(x_i)\|\\ &\leq c_w\|S_k\|\sum_{i=0}^{k-1}\rho^{k-i-1}\|\Phi_{i+1}\tilde{\theta}_{i}\|\\ &\leq c_w\sum_{i=1}^{T_s}\rho^{k-i}\|\Phi_{i}\tilde{\theta}_{i-1}\| + c_w\sum_{i=T_s}^{k-1}\rho^{k-i-1}\|\Phi_{i+1}\tilde{\theta}_{i}\|\\ &\begin{aligned} \leq c_w\sum_{i=1}^{T_s}\rho^{k-i}&\|\Phi_{i}\tilde{\theta}_{i-1}\| \\ &+c_w\|\Phi_{T_s}\tilde{\theta}_{T_s-1}\|\sum_{i=T_s}^{k-1}\rho^{k-i-1}\gamma^{i-T_s+1} \end{aligned} \\ &\begin{align} =c_w\rho^{k-T_s}\sum_{i=1}^{T_s}&\rho^{T_s-i}\|\Phi_{i}\tilde{\theta}_{i-1}\|\\ &+c_w\|\Phi_{T_s}\tilde{\theta}_{T_s-1}\|\sum_{i=T_s}^{k-1}\rho^{k-i-1}\gamma^{i-T_s+1} \end{align} \\ &\leq \rho c_wc_pc_{T_s}\|\tilde{\theta}_0\|\rho^{k-T_s}+c_wc_p\|\tilde{\theta}_0\| \sum_{i=T_s}^{k-1}\rho^{k-i-1}\gamma^{i-T_s+1}, \end{align}\tag{16}\] where the second inequality follows from the submultiplicative property of the norms, the third from the fact that \(\|S_k\|=1\), and the last two from Lemma 1 and by denoting \(c_{T_s}:= \frac{1-\rho^{T_s}}{1-\rho}\). Similarly, for \(0<k\leq T_s\) \[\label{eq:x95nonexpansive} \|x_k-x_k^\star\| \leq c_w\sum_{i=1}^{k}\rho^{k-i}\|\Phi_{i}\tilde{\theta}_{i-1}\|\leq c_wc_p\|\tilde{\theta}_0\|\sum_{i=1}^{k}\rho^{k-i}\tag{17}\]

We then obtain new bounds for \(\text{I}\) and \(\text{II}\) \[\begin{align} \text{II}&\leq c_wc_p\|\tilde{\theta}_0\|L_c\sum_{k=0}^{T_s-1}\sum_{i=0}^k\rho^{k-i}\leq T_s \|\tilde{\theta}_0\|c_wc_{T_s}c_pL_c, \end{align}\] where the first inequality follows from 17 and the second one from the Cauchy Product inequality. Using 16 \[\begin{align} &\text{I}\!\leq\! c_wc_p\|\tilde{\theta}_0\|L_c \left(\rho c_{T_s}\sum_{k=1}^{T-T_s-1}\rho^k\!+\! \sum_{k=0}^{T-T_s-2}\!\sum_{i=0}^{k}\rho^{k-i}\gamma^{i+1}\right) \\ &\leq \rho c_wc_pc_{T_s}\|\tilde{\theta}_0\|L_c\frac{\rho - \rho^{T-T_s}}{1-\rho}\\ &\qquad +c_wc_p\|\tilde{\theta}_0\| \gamma L_c \frac{\left(1-\rho^{T-T_s-1}\right)\left(1-\gamma^{T-T_s-1}\right)}{\left(1-\rho\right)\left(1-\gamma\right)}. \end{align}\] Noting that the first term in the above summation is upper bounded by \(\rho c_wc_p\|\tilde{\theta}_0\|L_c\left(\frac{\rho^T +\rho}{(1-\rho)^2}\right)\) and combining I and II results in the second stated bound. ◻

The theorem states that given the assumptions on the nominal system, both 10 and 11 state trajectories converge to the origin. It is important to note that no further restrictions are put on the stage costs \(c\), other than local Lipschitz continuity. Hence, the finite regret result of the theorem does not imply the cost \(\sum_{k=0}^{T-1}c(x_k)\) is minimized, but the relative cost performance is bounded. Moreover, it shows that this performance, in terms of regret scales with the initial exploratory time period \(T_s\), and cannot be avoided, as during these transients the parameter updates are only nonexpansive by Lemma 1. If the additional assumptions on \(\varepsilon\) and \(B_k\phi_k\) are satisfied, then the constant term is updated from a uniform bound \(b\) to \(c_p:=\|\Phi_{T_s}\|\), which relates to the regression matrix only in the initial period.

In the following lemma, we show that Lipschitz continuity of the basis matrices and \(\phi({0})=\boldsymbol{0}_{p \times m}\) is a sufficient condition to fulfill Assumption 2.

Lemma 2. Let \(B_k \phi_k\) be \(T_s\)-weak persistently exciting, \(\phi({0})=\boldsymbol{0}_{p \times m}\), and the basis matrices \(\phi\) be \(L-\)Lipschitz continuous, such that there exists a \(L\in \mathbb{R}_+\) satisfying \[\|\phi(x)-\phi(y)\| \leq L\|x-y\|. \quad \forall x,y \in \mathbb{R}^n,\] then Assumption 2 holds.

Proof. Given \(T_s\)-weak PE holds, we make use of ?? , summing the first bound in 12 over a horizon \(T\) and taking the limit we get \[\begin{align} &\mathop{\mathrm{\overline{lim}}}_{T\rightarrow \infty}\sum_{k=0}^T\|x_k\|\leq \frac{c_0\|x_0\|}{1-\rho}+c_w b\sum_{k=1}^{T_s}\sum_{i=1}^{k-1}\rho^{k-i}\|\tilde{\theta}_{i-1}\|\\ &<\mathop{\mathrm{\overline{lim}}}_{T\rightarrow \infty}c_w b\|\tilde{\theta}_0\| \left(\rho c_{T_s}\sum_{k=1}^{T-T_s-1}\rho^k +\sum_{k=0}^{T-T_s-2}\sum_{i=0}^{k}\rho^{k-i}\eta^{i+1}\right)\\ &< \frac{\rho^2 c_w c_{T_s}b\|\tilde{\theta}_0\|}{1-\rho}+ \frac{c_wb\|\tilde{\theta}_0\|\eta}{(1-\rho)(1-\eta)}, \end{align}\] where the first inequality follows from the ediss definition and geometric series, the second from Lemma 1 and the last from the Cauchy Product inequality. The result then follows from the boundedness of \(B_k\) and \(\phi_k\) and \(\|\phi(x)\|\leq L\|x\|\). ◻

4 RLS with Exponential Forgetting↩︎

The RLSFF algorithm minimizes the online cost \[\begin{align} \label{eq:rls95ff} g_{k-1}^f(\theta) = \frac{1}{2}\sum_{i=0}^{k-1}\lambda^{2(k-1-i)}&\|B_i\phi_i^\top \left(\theta-\theta^\star\right)\|\\ &+ \frac{\lambda^{2(k-1)} \varepsilon}{2}\|\theta_0 - \theta^\star\|^2, \end{align}\tag{18}\] for a fixed exponential forgetting factor \(\lambda^2 \in (0,1)\), where the first term can be thought of as the discounted version of 6 and the second, as a discounted regularizer on the initial parameter error. The update can be recursively implemented as follows [23] for all \(k \in \mathbb{N}\) \[\label{eq:rls95combined} \begin{align} P_{k+1}^{-1} &= \lambda^2 P_{k}^{-1} + \phi_{k}B_{k}^\top B_{k}\phi_{k}^\top,\\ \theta_{k+1} &= \theta_{k} - P_{k+1} \phi_{k} B_{k}^\top(B_k\phi_{k}^\top\theta_{k} - y_{k}), \end{align}\tag{19}\] where \(\theta_0 \in \mathbb{R}^p\) is an initial guess and \(P_0^{-1} = \varepsilon I_p\). The convergence of the above update requires a persistence of excitation condition in the sense of the following [16].

Definition 2. A matrix signal \(\phi_k:\mathbb{N} \rightarrow \mathbb{R}^{p \times n}\) is called persistently exciting if it is bounded and there exists a \(\delta > 0\) and \(T_s \in \mathbb{N}\) such that for all \(k_0 \in \mathbb{N}\) \[0 <\delta I_p \leq \sum_{i=k_0}^{k_0+T_s}\phi_i\phi_i^\top.\]

The PE condition in Definition 2, commonly found in the adaptive control literature [1], [16] is a stronger version of the \(T_s\)-weak PE condition in Definition 1. The latter requires the positive definiteness of the regressor matrix only in the initial phase of the estimation, rather than in all time intervals of length \(T_s\).

Lemma 3. Assume \(B_k\phi_k\) is persistently exciting then the RLSFF update 19 satisfies \[\begin{align} \|\theta_k-\theta^\star\| &\leq c_r\lambda^{k-T_s}\|\theta_0-\theta^\star\|, &&\forall k\geq T_s\\ \|\theta_k-\theta^\star\| &\leq \|\theta_0-\theta^\star\|, &&\forall 0<k<T_s \end{align}\] where \(c_r^2 = {\varepsilon\left(\lambda^{2T_s}-\lambda^{-2)}\right)}/\left(\delta\left(1-\lambda^{-2}\right)\right)\).

The non-expansive result follows by noting from 19 \[\tilde{\theta}_k = \left(I_p - P_{k+1}\phi_kB_k^\top B_k \phi_k^\top\right)\tilde{\theta}_{k-1}, \quad \forall k>0,\] and that \(\sigma_{min}\left(P_{k+1}\phi_kB_k^\top B_k \phi_k^\top \right)\geq 0\) and \(\sigma_{max}\left(P_{k+1}\phi_kB_k^\top B_k \phi_k^\top \right)<1\). The proof for the exponential stability for \(k\geq T_s\) can be found in [23] and is hence omitted.

Consider the RLSFF controller \(u_k^{\mathrm{RLS}} = \phi_k^\top\theta_k\) with \(\theta_k\) given by the recursive law 19 .

Theorem 2. Assume that 1 satisfies Assumption [assum:system], and \(B_k\phi_k\) is persistently exciting, then the RLSFF adaptive controller

renders the closed-loop system asymptotically stable

achieves finite regret

\[\mathcal{R}_T(\mathrm{RLS})\leq c_wbL_c\|\tilde{\theta}_0\|\left(\frac{T_s}{1-\rho}\!+\!\frac{c_r\left(\rho^T+1\right)}{(1-\rho)^2(1-\lambda)} \right).\]

Proof. Consider the benchmark state \(x_k^\star\) evolution in 5 under perfect uncertainty matching and the RLSFF closed-loop state given by 4 with \(\theta_k\) update in 19 . Using the ediss condition on the nominal system and noting that \(x_k\) and \(x_k^*\) are equal in the first time step, for all \(k>T_s\) \[\begin{align} &\|x_k-x_k^\star\| \leq c_wb\sum_{i=0}^{k-1}\rho^{k-i-1}\|\tilde{\theta}_i\|\\ &\leq \rho c_wc_{T_s}b\|\tilde{\theta}_0\|\rho^{k-T_s} + c_wc_rb\|\tilde{\theta}_0\|\sum_{i=T_s}^{k-1}\rho^{k-i-1}\lambda^{i-T_s}, \end{align}\] where the second inequality follows from the bound of \(B_k\phi_k\) and Lemma 3, and we define \(c_{T_s}:= \frac{1-\rho^{T_s}}{1-\rho}\). For \(0<k\leq T_s\) \[\|x_k-x_k^\star\| \leq c_w b\sum_{i=1}^{k}\rho^{k-i}\|\tilde{\theta}_{i-1}\|\leq c_wb\|\tilde{\theta}_0\|\sum_{i=1}^{k}\rho^{k-i}\] Similar to the proof of Theorem 1, the states, \(x_k\) and \(x_k^\star\) can both be shown to be bounded and, hence \[\begin{align} &\mathcal{R}_T(\mathrm{RLS})\leq \underbrace{L_c\sum_{k=T_s+1}^{T-1}\|x_k-x_k^\star\|}_{\text{I}} + \underbrace{L_c\sum_{k=0}^{T_s}\|x_k-x_k^\star\|}_{\text{ II}}, \end{align}\] where, as before, II represents the cost in the initial \(T_s\) long PE phase, and I the cost improvement afterward. Using the Cauchy Product inequality and the inequalities above \[\begin{align} \text{ II}&\leq c_wbL_c\|\tilde{\theta}_0\|\sum_{k=0}^{T_s-1}\sum_{i=0}^k\rho^{k-i}\leq c_wc_{T_s}T_sbL_c\|\tilde{\theta}_0\|\\ \text{I} &\leq c_wbL_c\|\tilde{\theta}_0\|\left(\rho c_{T_s}\!\sum_{k=1}^{T-T_s-1}\rho^{k}\!+\!\sum_{k=0}^{T-T_s-2}\sum_{i=0}^{k}\rho^{k-i}\lambda^{i}\right)\\ &\leq \rho c_wbL_c\|\tilde{\theta}_0\|\frac{\rho^T + \rho}{(1-\rho)^2}\\ &\quad + c_wc_rbL_c\|\tilde{\theta}_0\| \frac{\left(1-\rho^{T-T_s-1}\right)\left(1-\lambda^{T-T_s-1}\right)}{\left(1-\rho\right)\left(1-\lambda\right)}. \end{align}\] Combining the terms complestes the proof. The asymptotic stability proof parallels that of Theorem 1 and is omitted. ◻

4.1 Analysis of the Bounds↩︎

Both the RPL and the RLSFF adaptive controllers achieve a finite regret containing three separate terms of potential interest. A constant term, an exponentially decaying one with rate \(\rho\), and a linear term in the PE time \(T_s\). The latter is of most interest, which arises from the non-expansive properties of both estimators and signifies that no improvement in the worst-case can be expected until the time \(T_s\) when the PE condition is met. Comparing the regret bounds directly, when \(\gamma <\lambda\) and \(c_r>1\), i.e. \(\varepsilon > \delta\) for the RLSFF algorithm 19 , then the upper bound of the RLSFF regret is strictly larger than that of the modified RPL controller.

5 Numerical Simulation↩︎

In this section, we show that the MRAC problem can be cast into the considered setting and provide a numerical example to showcase the performance of discussed adaptive controllers. Consider the uncertainty-matched system \[\label{eq:linear95matched} x_{k+1} = Ax_k + B(u_k - \phi(x_k)^\top\theta^\star),\tag{20}\] where \(A \in \mathbb{R}^{n\times n}\), \(B \in \mathbb{R}^{n \times m}\), \(\phi (x_k)\) is defined as before, and \(\theta^\star \in \mathbb{R}^p\) is unknown. The MRAC goal is to track the reference dynamics \[\bar{x}_{k+1} = A_r \bar{x}_k + B_r r_k,\] where \(\bar{x}_k \in \mathbb{R}^n\), is the ideal reference vector to be tracked, \(r_k \in \mathbb{R}^m\), is the bounded input command, \(B_r\in \mathbb{R}^{n\times m}\) and \(A_r \in \mathbb{R}^{n \times n}\) is Schur stable. As in [17], we assume \((A,B)\) to be controllable and \(B\) to be full column rank, and consider the input \(u_k = -K_1x_k +K_2r_k + \phi_k^\top\theta_k\) with matrices \(K_1, K_2 \in \mathbb{R}^{m \times n}\) chosen such that \(A-BK_1 = A_r\) and \(BK_2 = B_r\) hold and \(\theta_k\) being the parameter estimate. Denoting \(e_k = x_k-\bar{x}_k\), the error dynamics are given by \[\label{eq:error95dynamics} e_{k+1} = A_r e_k + B\phi(x_k)^\top \tilde{\theta}_k,\tag{21}\] in the form of 1 . Since \(A_r\) is stable, the ediss condition is satisfied. For the cost we consider \(c(x):= \|x\|^2\) [11], which is locally Lipschitz since the closed-loop states are bounded.

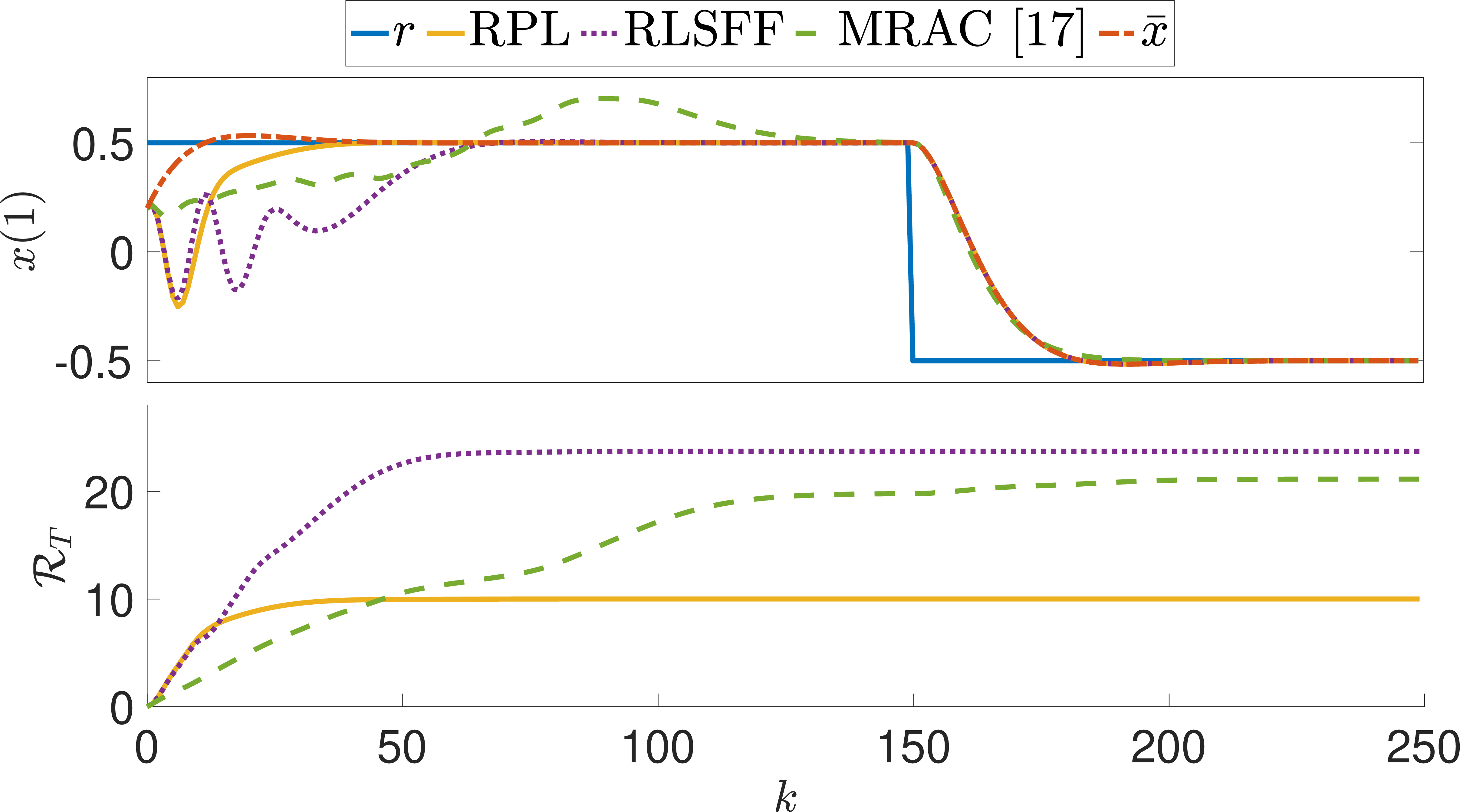

We consider the following numerical example [17] \[A = \begin{bmatrix} 1.0314& 0.2526\\ 0.2526& 1.0314 \end{bmatrix}, \; B = \begin{bmatrix} 0.0314\\ 0.2526 \end{bmatrix},\] with the reference model dynamics \[A_r = \begin{bmatrix} -0.9929& 0.2253\\ -0.0569& 0.8117 \end{bmatrix}, \; B_r = \begin{bmatrix} 0.0314\\ 0.2526 \end{bmatrix}.\] The true parameter is \(\theta^\star = \begin{bmatrix} -0.75& -0.50 \end{bmatrix}^\top\) and \(\phi(x_k) = x_k\). Figure 1 shows the tracking of the first component of state \(x_k\) for the above example under the RPL, RLSFF and the command governor-based MRAC controller proposed in [17] without regret guarantees.

Figure 1: Comparison of adaptive controllers for the MRAC example in terms of asymptotic tracking (on the top) and regret (on the bottom).

For all algorithms we initialize the estimate by \(\theta_0\!=\!\begin{bmatrix} 5.00& -1.00 \end{bmatrix}^\top\), take \(\varepsilon=1\) and start the simulation at \(x_0 = \begin{bmatrix} 0.2& 0.2 \end{bmatrix}^\top\). For RLSFF we used a forgetting factor of \(\lambda^2=0.99\), as lower values led to conditioning problems. For the controller of [17] we used the same parameters as in Example 1, [17] and a tuned saturation value of \(1.5\) for the command governor update for best results. The superior performance of RPL is evident from 1, despite a less stringent PE condition. The top figure shows the tracking performance of the controllers while the bottom the regret for the quadratic costs, which in this case is \(\mathcal{R}_T(u): = \sum_{k=0}^{T-1}\|e_k\|^2\), since by definition \(\Bar{x}_0={x}_0\) and therefore \(e^\star_k=0\) for all \(k\in \mathbb{N}\).

6 Conclusion↩︎

Two recursive learning algorithms were considered for the nonlinear adaptive control problem with matched uncertainty in the context of regret minimization. We showed that both the recursive least squares with forgetting factor and the novel recursive proximal learning algorithm are asymptotically stable and achieve finite regret scaling with the time required to achieve persistence of excitation. Possible extensions include the consideration of inexact basis matrices, bounded, non-stochastic noise, and the development of goal-oriented controllers that instead of the estimation cost aim to minimize the objective cost directly.

References↩︎

This work has been supported by the Swiss National Science Foundation under NCCR Automation (grant agreement \(51\text{NF}40\_180545\)), the European Research Council under the ERC Advanced grant agreement \(787845\) (OCAL) and by the German Research Foundation (DFG) under Germany’s Excellence Strategy - EXC 2075 – 390740016.↩︎

A. Karapetyan, A. Tsiamis and J. Lygeros are with the Automatic Control Laboratory, Swiss Federal Institute of Technology (ETH Zürich), 8092 Zürich, Switzerland (E-mails:

akarapetyan, atsiamis, jlygeros``@ethz.ch).↩︎E. C. Balta is with the Control and Automation Group, inspire AG, 8005 Zürich, Switzerland, and with the Automatic Control Laboratory

efe.balta@inspire.ch.↩︎A. Iannelli is with the Institute for Systems Theory and Automatic Control, University of Stuttgart, Stuttgart 70569, Germany (E-mail:

andrea.iannelli@ist.uni-stuttgart.de).↩︎We drop the explicit state dependence on state for \(B_k:=B_k(x_k)\) and \(\phi_k:= \phi(x_k)\), wherever required for readability.↩︎