Emergence of Chemotactic Strategies with Multi-Agent Reinforcement Learning

April 02, 2024

Abstract

Reinforcement learning (RL) is a flexible and efficient method for programming micro-robots in complex environments. Here we investigate whether reinforcement learning can provide insights into biological systems when trained to perform chemotaxis. Namely, whether we can learn about how intelligent agents process given information in order to swim towards a target. We run simulations covering a range of agent shapes, sizes, and swim speeds to determine if the physical constraints on biological swimmers, namely Brownian motion, lead to regions where reinforcement learners’ training fails. We find that the RL agents can perform chemotaxis as soon as it is physically possible and, in some cases, even before the active swimming overpowers the stochastic environment. We study the efficiency of the emergent policy and identify convergence in agent size and swim speeds. Finally, we study the strategy adopted by the reinforcement learning algorithm to explain how the agents perform their tasks. To this end, we identify three emerging dominant strategies and several rare approaches taken. These strategies, whilst producing almost identical trajectories in simulation, are distinct and give insight into the possible mechanisms behind which biological agents explore their environment and respond to changing conditions.

1 Introduction↩︎

Microswimmers have the unique privilege of having evolved over millions of years to learn how to optimally navigate noisy, Brownian motion-dominated environments in search of better living conditions. Most interactions humans are familiar with occur on

length and time scales that are not subject to this noise. Therefore, we do naturally have an understanding of how these microswimmers can perform this navigation. However, understanding the emergence of this behaviour is critical as scientists strive to

construct the artificial counterparts of biological microswimmers. Previous reviews have discussed the emergence and function of biological microswimmers in great detail [1], [2], elucidating the mechanisms and strategies behind their movement. One recurring form of navigation in microswimmers is the so-called run-and-tumble

motion exhibited by Escherichia coli (E-coli) wherein the bacteria will travel in a straight line for some extended period before spontaneously rotating into a random new direction [3]–[5]. One application of this navigation mechanism is bacterial chemotaxis [6], the biased movement of a bacteria towards regions with higher concentrations of beneficial chemicals or lower concentrations of harmful chemicals [7]. Bacteria achieve this biased motion through changes in run duration depending on changes in the concentration of the chemo-attractant or -repellant. Learning chemotaxis has been the focal point of several research

papers aimed at reproducing or better understanding biological microswimmers through the use of reinforcement learning (RL) [8]–[11]. In their 2021 study, [10] applied a genetic algorithm to the problem of learning shape

deformations for navigation in static and dynamic environments. They found that the neural networks learned a movement closely resembling that of run-and-tumble motion. In another 2021 study, [11] applied Q-learning to learning navigation strategies in self-thermophoretic particles from which they again see the emergence of run-and-tumble motion. They further investigated the

effects of temperature on the learning process, identifying that models trained at higher temperatures took longer to learn their emergent strategy. Finally, our previous work [8] directly addressed the role of temperature in the emergent strategy of RL-driven microswimmers by studying chemotaxis learning by the actor-critic reinforcement learning algorithm. It was found that, while the efficacy

of the chemotaxis changed with different temperatures, the same run-and-tumble motion arose from the majority of agents trained at different temperatures. While it is clear that RL algorithms can and, in fact, seemingly often do learn

run-and-tumble type motion for chemotaxis problems, what impact this has on our understanding of biological microswimmers and even optimal design of artificial swimmers is not clear. This study investigates natural limitations on emergent

chemotaxis by training actor-critic RL models using prolate, oblate, and spherical agents of different sizes and with different swim speeds in physically realistic fluid environments subject to translational and rotational Brownian motion. In this way, we

hope to identify how optimal RL algorithms are for the learning task and to identify, if any exist, optimal size/speed combinations of microswimmers in these environments, which may guide our interpretation of biological microswimmers as well as advise the

design of artificial ones. Furthermore, by investigating the deployment of the RL algorithms close to conditions where agents will be dominated by rotational and translational Brownian motion, we can explore the emergence of different navigation strategies

that may be leveraged in the treatment of biological or artificial swimmers, essentially peering into the minds of bacteria as they navigate environments.

The manuscript is structured as follows. We will first discuss the theory behind the investigation, explaining the mechanism and physical limitations of chemotaxis in biological systems. We then introduce deep actor-critic reinforcement learning and discuss its multi-agent realization. The simulation and training methods are discussed in detail before our results are presented, and a brief outlook is presented.

2 Theory↩︎

2.1 Biological Chemotaxis↩︎

As mentioned briefly in the introduction, chemotaxis is the biased movement of bacteria towards favourable regions in their environment [7].

Escherichia coli (e. coli) perform chemotaxis actively using run-and-tumble motion. In their running phase, they bundle their flagella together and rotate them anti-clockwise, during a tumble phase, one or more flagella change their

rotation direction, breaking apart the bundle and causing random rotation of the bacteria before the bundle reforms and translational swimming is resumed [12]. Utilizing a sensing mechanism, these bacteria can identify if they are moving towards or away from favourable regions of their environment, adjusting their tumble rates accordingly to maintain desired movement [13].

In order to capture the essential features of bacterial motility, we consider here active particles that can perform four distinct types of movement. Firstly, they can move forward along their intrinsic direction like bacteria in the run phase. Secondly, they can actively rotate clockwise or counterclockwise, like bacteria in the tumble phase. Our swimmers can choose the direction of rotation while bacteria rotate towards a random new orientation. Thirdly, the swimmers can opt to do nothing, that is, only move passively by Brownian motion.

In our investigations, interactions of the particles with their surrounding fluid is modelled by the over-damped Langevin equations \[\dot{\vb{r}}_i = \gamma_t^{-1} \left[F(t) \vb{e}_i(\Theta_i) - \nabla V(\vb{r}_i, \{\vb{r}_j\}) \right] + \sqrt{2 k_B T \gamma_t^{-1}} \vb{R}^t_i(t), \label{eq:brownian95pos}\tag{1}\] \[\dot{\Theta} = \gamma_r^{-1} m(t) + \sqrt{2 k_B T \gamma_r^{-1}} R^r_i(t). \label{eq:brownian95angle}\tag{2}\] where, \(\vb{r}_i\) is the (two-dimensional) position of particle \(i\), \(\Theta_i\) the angle describing the particle orientation, \(\gamma_{(t,r)}\) the translational (rotational) friction coefficient, \(F\) and \(m\) a force and torque corresponding to the respective type of active motion, \(\vb{e} = (\cos(\Theta), \sin(\Theta))^T\) the particle orientation, \(V\) an interaction potential between all particles in the system, \(k_B\) the Boltzmann constant, \(T\) the temperature and \(\vb{R}^{(t, r)}_i\) a noise term with zero mean and correlations according to \(\require{physics} \expval{R^{(t,r)}_i(t) R^{(t,r)}_j(t')} = \delta_{ij} \delta(t-t')\), where \(\require{physics} \expval{\cdot}\) denotes an ensemble average.

To quantify the relative importance of active and passive motion, we define translational and rotational Péclet numbers \[Pe^{trans, rot} = \frac{\tau^{diff}_{trans, rot}}{\tau^{act}_{trans, rot}}.\] Here, \[\tau_\text{rot}^\text{diff} = \frac{1}{2D_\text{rot}} = \frac{\gamma_r}{2 k_B T}, \quad \tau_\text{rot}^\text{act} = \frac{2\pi}{\omega^\text{act}}, \label{eqn:rot-scale}\tag{3}\] are the timescale of decorrelation of the particle director through rotational diffusion and the timescale for one active rotation, respectively. For the translational degrees of freedom we have \[\tau_\text{trans}^\text{diff} = \frac{a^2}{D_\text{trans}} = \frac{a^2 \gamma_t}{k_B T}, \quad \tau_\text{trans}^\text{act} = \frac{a}{v^\text{act}}, \label{eqn:tran-scale}\tag{4}\] as the timescale for diffusion of one particle radius and the timescale for swimming of one particle radius, respectively. In regimes where \(Pe^{trans, rot} \gg 1\) the dynamics will be dominated by active motion and when \(Pe^{trans, rot} \ll 1\), it will resemble passive diffusion.

2.2 Actor-Critic Reinforcement Learning↩︎

Reinforcement learning concerns itself with the interactions between an agent and its environment within which it gradually learns to achieve a desired task [14]. This agent is typically provided with a set of actions it may perform and uses a policy, \(\pi(a_{t}|s_{t})\) to decide at time \(t\), based on its current state, \(s_{t}\), what the best actions, \(a_{t}\) will be such that it maximises a reward, \(r(s_{t})\). Over the course of one or many simulations, this policy will be updated so that the agent becomes more efficient at accomplishing this task and maximising its reward, \[ \pi' = \mathop{\mathrm{arg\,max}}_{\pi} \langle r(s_{t}|\pi) \rangle\] Deep reinforcement learning accomplishes this task using deep neural networks as the policy, \(\pi\) [15]. During our investigations, the actor-critic approach to deep reinforcement learning has been adopted due to its flexibility and efficacy [16], [17]. In actor-critic reinforcement learning, the actor takes on the role of the policy, \(\pi_{\theta}\), parameterized by \(\theta\), taking as input the current state of the agent and returning oftentimes a distribution over possible actions from which one is selected. The critic then takes on the role of a value function, \(V^{\pi_{\theta}}_{\omega}\), the objective of which is to describe the expected return of an agent starting in state \(s_{t}\) and following policy \(\pi\). During training, the actor is tasked with maximising its finite-horizon return of its policy \[ J(\pi_{\theta}) = \left\langle \left [ \sum\limits_{t=0}^{T} \log \pi_{\theta}(a_{t}|s_{t})\cdot A^{\pi_{\theta}}(s_{t}, a_{t}) \right ] \right\rangle_{\tau}, \label{eqn:update-value}\tag{5}\] where \(A^{\pi_{\theta}}\) is the so-called advantage, computed by \[ A^{\pi_{\theta}}_{t} = G(s_{t}, a_{t}) - V^{\pi_{\theta}}_{\omega}(s_{t}),\] where \(G\) is an analytic expected returns function. In our studies, a simple decaying return function \[ G_{t} = \sum\limits_{t'=t}^{T}\epsilon^{t' - t}r_{t'},\] with decay factor \(\epsilon\) is used. \(J(\pi_{\theta})\) is maximised by way of gradient ascent on the actor parameters with updates taking the form \[ \theta' = \theta + \eta\cdot\nabla_{\theta}J(\pi_{\theta}),\] with learning rate \(\eta\). Recalling that the actor output is a distribution over actions, should the advantage be negative, i.e, the critic believes a better trajectory could have been chosen, the log probability of these action will be discouraged. If this number is positive, the actor has outperformed the expectation of the critic and the policy is reinforced. The critic network is trained directly on the chosen expected returns function via the Huber loss \[ L^{\delta}(y^{\text{true}}, y^{\text{predicted}}) = \begin{cases} \frac{1}{2}\cdot(y^{\text{true}} - y^{\text{predicted}})^{2} & \text{, for } | y^{\text{true}} - y^{\text{predicted}}| \leq \delta, \\ \delta\cdot (|y^{\text{true}} - y^{\text{predicted}}| - \frac{1}{2}\delta) & \text{, otherwise}, \end{cases}\] with \(\delta = 1\) in all studies. Such an update procedure is referred to as simple or vanilla policy gradient [18]. Whilst more sophisticated approaches exist, namely proximal policy optimization [19], for this particular study, the simpler approach sufficed.

2.3 Multi-Agent Reinforcement Learning↩︎

In our simulations, we work with not one, but many agents simultaneously, moving from the general concept of reinforcement learning into multi-agent reinforcement learning (MARL) [20]. In these cases, each agent shares a single actor and critic network and at the update time, also the experience that they have gathered. During the simulations, each agent asks the actor for an action to take individually and collects its own reward. At the time of the update, \(J(\pi_{\theta})\) becomes, \[J_{\text{MARL}} = \frac{1}{N}\sum\limits_{i}^{N} J_{i}(\pi_{\theta}),\] where \(i\) sums over the agents in the system and \(J_{i}\) is simply Equation 5 for a single agent. In this way, the experience of each agent is accumulated and updated together.

The field of MARL has a a vast set of definitions with respect to how individual agents interact and share knowledge in order to achieve the problem they are training on [21]. In this work, a decentralized Markov decision process is used to describe how the agents in the system interact. The system is considered decentralized as each agent receives only local information regarding its environment and a local reward for its own actions. During training, these rewards and local states are summed over and in doing so, the agents share the knowledge with one another.

3 Methods↩︎

3.1 SwarmRL↩︎

All reinforcement learning and simulation has been handled through the open-source software package, SwarmRL [22]. SwarmRL is a Python library built to combine molecular dynamics engines with classical control and reinforcement learning algorithms. All machine learning uses the JAX [23] and Flax [24] libraries.

3.2 ESPResSo Simulations↩︎

In this study, reinforcement learning is applied to training microswimmers in a physically realistic simulated environment. For this environment, we employ the ESPResSo simulation engine [25]. Trajectories of the particles are simulated using the over-damped Langevin equations of motion for both position and orientation described in Equations 1 and 2 . The friction coefficient of a spherical agent with radius \(r\) in a fluid with dynamic viscosity \(\mu\) is calculated according to Stokes’ law as \(\gamma_t = 6 \pi \mu r\) and \(\gamma_r = 8 \pi \mu r^3\). Interactions between the spherical agents are modelled with the two-body Weeks-Chandler-Anderson (WCA) potential [26], which can be seen as an almost-hard-sphere interaction \[V(r_{ij}) = \begin{cases} 4\cdot V_{0}\left[ \left(\frac{\sigma}{r_{ij}}\right)^{12} - \left(\frac{\sigma}{r_{ij}}\right)^{6} \right] + V_{0}, \quad &r_{ij}<2^{1/6}\sigma\, \\ 0, &\text{else}. \end{cases} \label{eqn:wca}\tag{6}\] Here, \(r_{ij} = || \vb{r}_i - \vb{r}_j ||_2\) is the Euclidean distance between the particles, and \(\sigma = 2a\) the colloid diameter. We choose the interaction strength \(V_0 = k_B T\). Details on the anisotropic particles’ friction coefficients and interaction potentials can be found in the supplementary information.

3.3 Reinforcement Learning Parameters↩︎

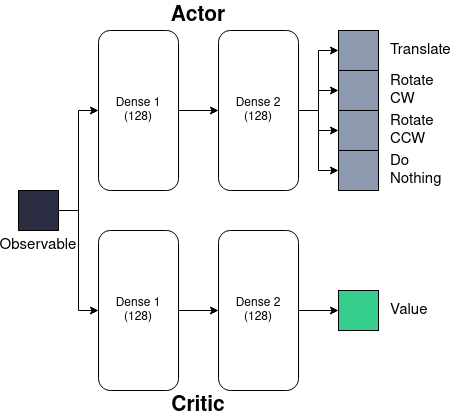

In our investigations, the actor-critic approach to reinforcement learning is utilised with a network architecture displayed in Figure 1. A two-layer network, each with 128 units, is deployed for both the actor and the critic, along with ReLU activation functions.

Figure 1: Representation of actor-critic reinforcement learning architectures.

During the training, each network is trained for 10000 episodes, each of which consists of 20 applications of the policy over 4000 simulation time steps. Each episode would be 2 s in real-time. Updates of the network are handled by the Adam optimizer [27] using a learning rate of 0.002. For each swim speed and agent size, 20 reinforcement learning runs were performed to collect statistics.

3.4 Agent Definition↩︎

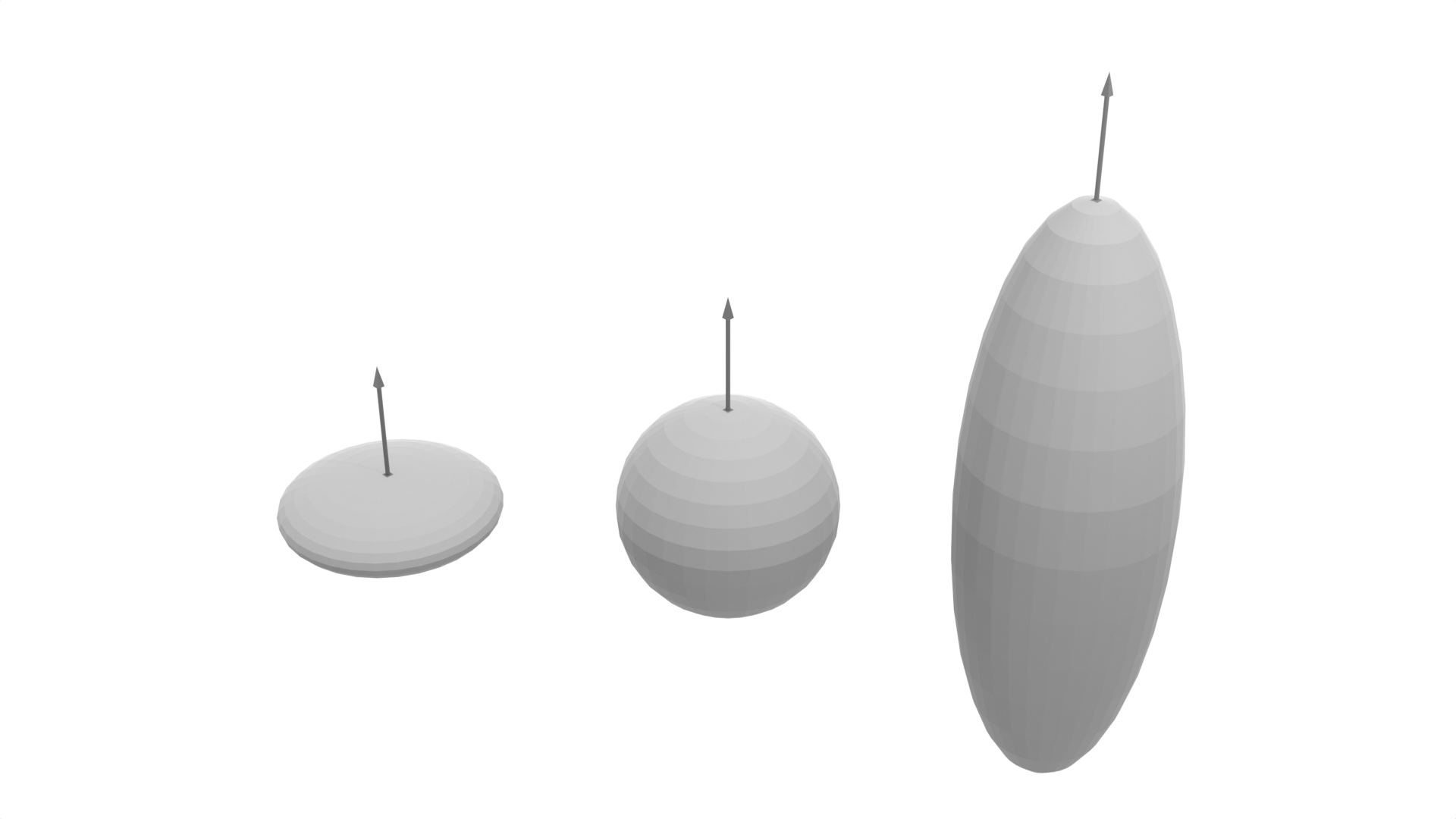

The study considered three agent shapes with a fixed set of actions. Agent shapes were designed to mimic some of those found in biology: oblate, prolate, and spherical bacteria or microswimmers. Figure 2 displays renderings of these agents for radius \(1 \mu m\) constructed using the Vedo Python package [28].

Figure 2: Graphical Representation of the three agent shapes considered in this study, the sphere (center), prolate (right), and oblate (left). In each case, the volume of the agent is kept equal for a given radius value.

As the agents are designed to mimic bacterial particles, we endow them with the ability to perform the four actions described in Section 2.1, \[\mathcal{A}= \begin{cases} \text{Translate:} & v = n\cdot d \mu m s^{-1}\text{, } \omega = 0.0 s^{-1} \\ \text{Rotate CCW:} & v = 0 \mu m s^{-1} \text{, } \omega = 10.472 s^{-1} \\ \text{Rotate CW:} & v = 0 \mu m s^{-1} \text{, } \omega = -10.472 s^{-1} \\ \text{Do Nothing:} & v = 0 \mu m s^{-1} \text{, } \omega = 0.0 s^{-1}, \end{cases}\] where \(d\) is the colloid diameter, \(n\) is a scaling factor that we vary during the experiment, and \(\omega\) is the angular velocity measured in radians per second. The rotation speed was chosen to be similar to that of Escherichia coli [29]. In line with [30], we argue that agent volume is proportional to its swimming speed. Therefore, the action is measured in body lengths, and all agents with the same radius will swim at the same speed. The agents receive a state description designed to resemble a bacterium sensing changes in its surroundings, defined mathematically by \[ o_{i}(t) = f\left(||\mathbf{r}_{i}(t) - \mathbf{r}_{s}(t)||_{2}\right) - f\left(||\mathbf{r}_{i}(t-\Delta t) - \mathbf{r}_{s}(t-\Delta t)||_{2}\right), \label{eqn:concentration-sensing}\tag{7}\] where \(o_{i}\) is the observable for the \(i^\text{th}\) agent, \(f\) is the field chosen to represent the chemical being sensed, in our study, \(\frac{1}{r_{is}}\), \(\mathbf{r}_{i}(t)\) is the position of the \(i^{\text{th}}\) agent at time \(t\), \(\Delta t\) is the amount of time since the last action was computed and \(\hat{\mathbf{r}}_{s}(t)\) denotes the position of the source of the field at time \(t\). To encourage chemotaxis, agents are rewarded using a similar function \[ r_{i}(t) = \begin{cases} o_{i} & \text{ if } o_{i} > 0 \\ 0 & \text{ else.} \end{cases}\] This way, movement towards the source is encouraged, but movement away is not explicitly discouraged. We further refrain from using an absolute measure of the field in this study as it would not resemble the natural sensing abilities of the bacteria [31]. The addition of such a reward might be used to encourage agents to form groups reminiscent of biofilms or to replicate the act of digesting the source of the field, both of which are left for future studies.

3.5 Computational Methods↩︎

Training and deployment of the reinforcement learning models was performed on the University of Stuttgart SimTech compute cluster. Each simulation and training routine utilised six threads of an AMD EPYC 7702 CPU node, and all simulations were run in parallel. Due to the system sizes and machine learning being performed, no GPUs were required for these experiments. Training of each model required approximately twenty-four hours, and the deployment simulations were approximately six hours. The simulations and models were analysed on the same cluster hardware.

4 Results↩︎

Due to the similarity in the results and the amount of analysis, only the plots for the spherical agent analysis are shown in the main manuscript. All other plots are included in the SI, and any deviations between results are mentioned here.

4.1 Probability of Emergent Chemotaxis↩︎

This investigation aims to identify limits on emergent chemotaxis in RL agents in the hope that such limits cross over into biology, allowing us to study natural biological processes using RL as a valid surrogate model. These limits suggest formulating a phase diagram with forbidden regions where this behaviour is strictly prohibited. To this end, all simulations were collected where the final 50 % of the deployment trajectory was below 15 μm from the source of the field. This distance was determined based on the visual observation that no model that had successfully learned chemotaxis was farther away from the source than this distance. The successful simulations were used to compute the probability of learning chemotaxis by rationing them against the total number of simulations performed for a single speed and agent size.

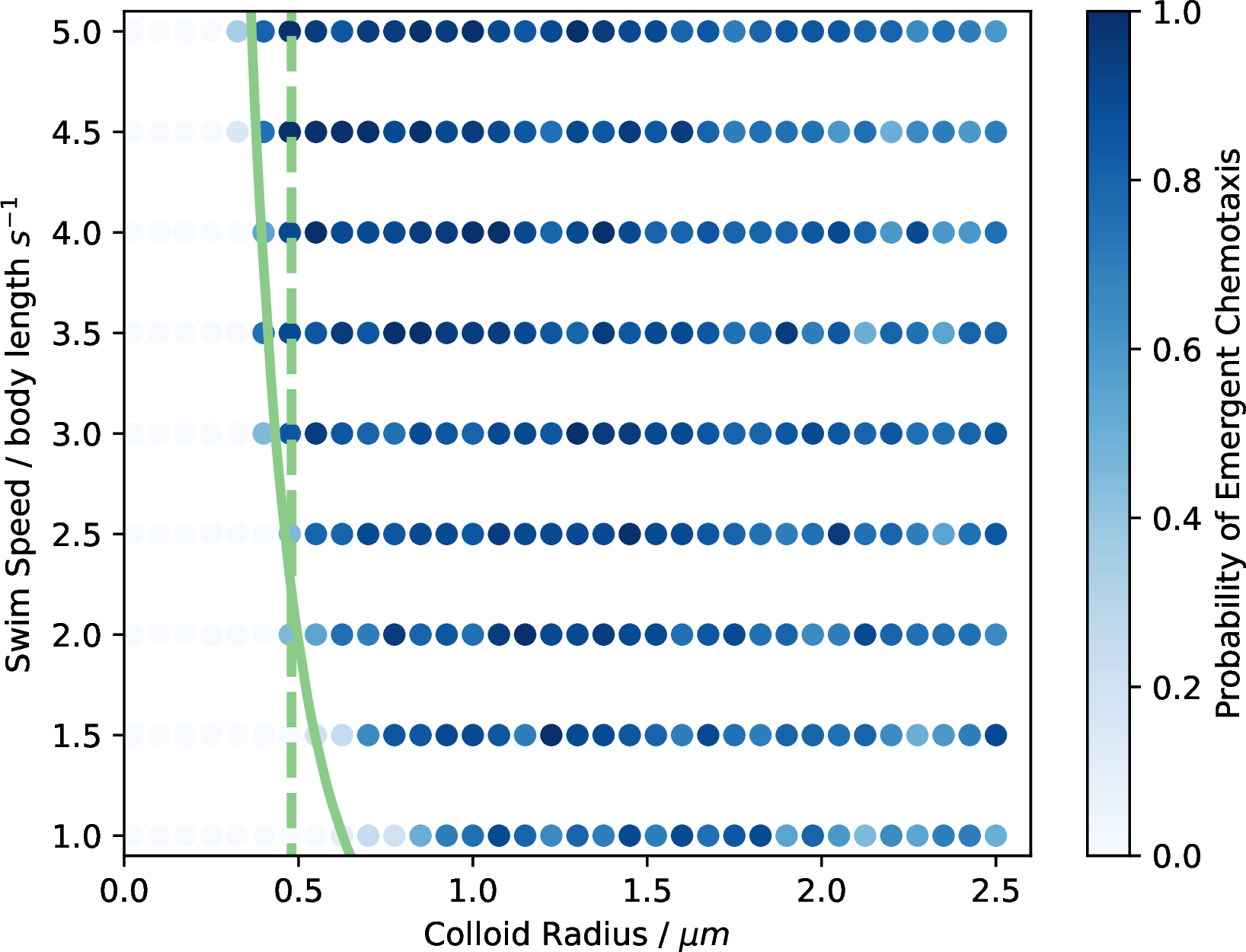

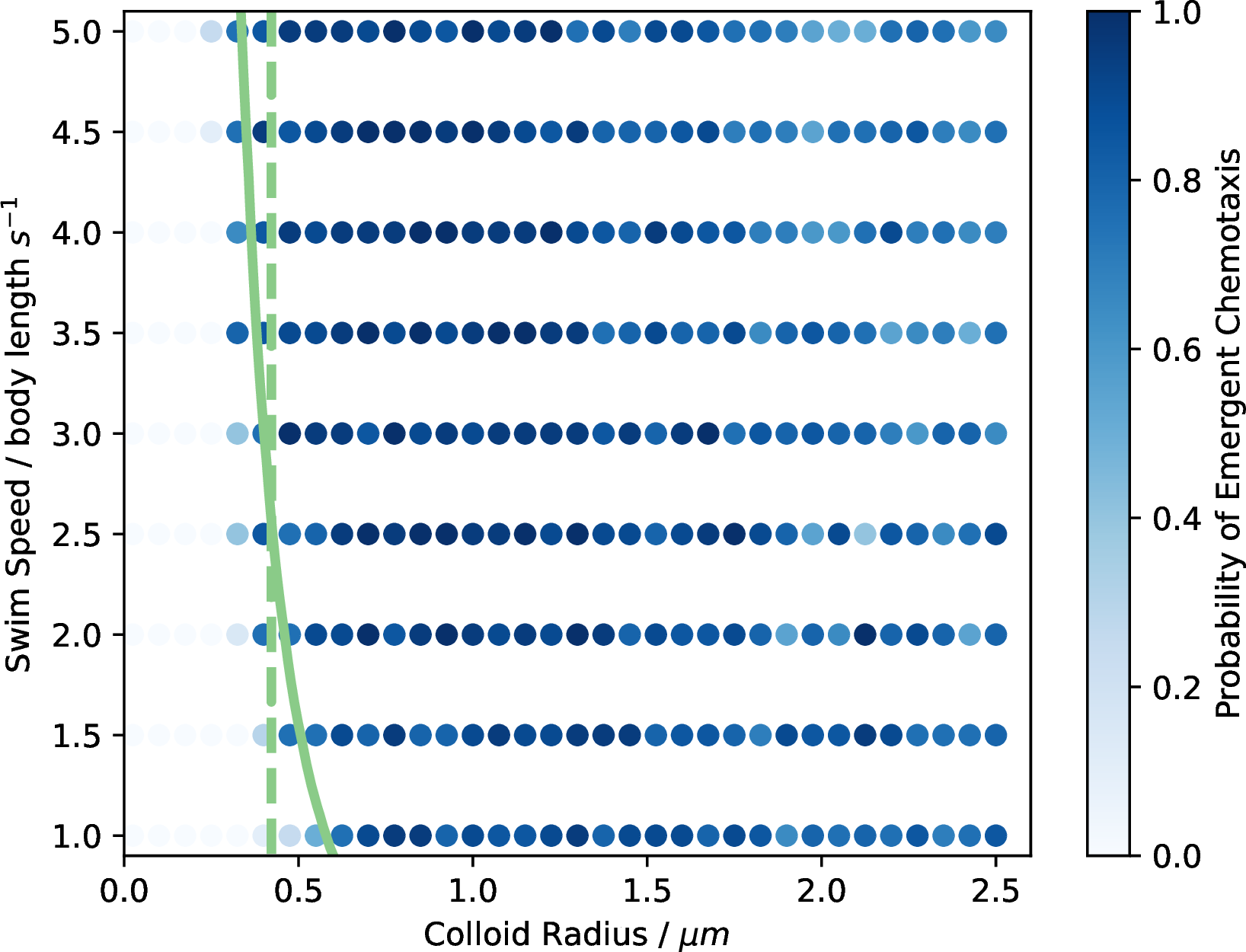

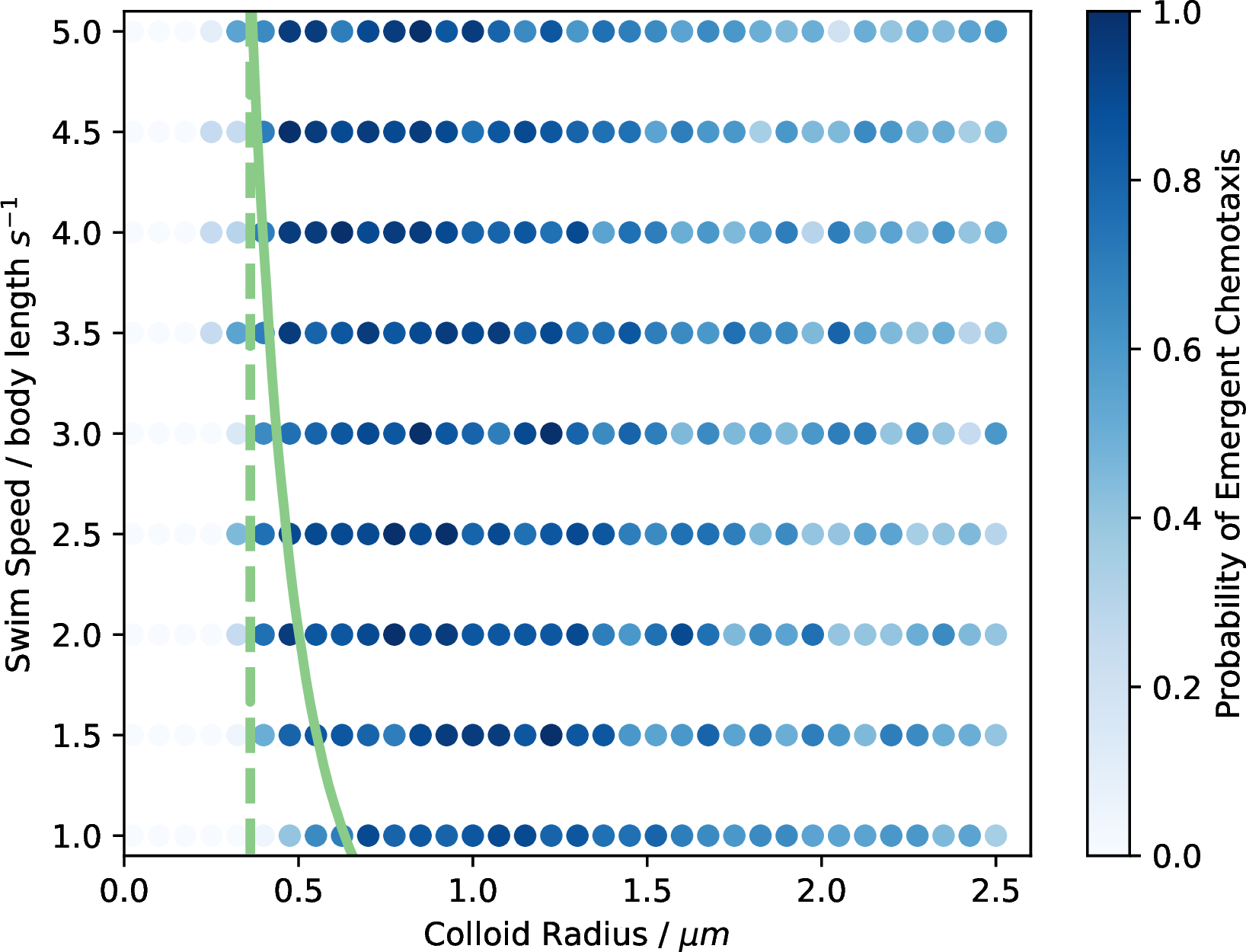

Figure 3: Probability of successful chemotaxis emerging from RL studies. Raw data from the experiment. The colour of each point corresponds to the number of RL simulations that successfully learned how to perform chemotaxis. The green lines indicate the theoretical values at which translational (solid) and rotational (dashed) diffusion becomes dominant compared to the active motion of the agents.

Figure 3 shows the computed phase diagram. The figure plots the sampled data points; the alpha value corresponds to the probability, with the more transparent points being less probable. One can identify a forbidden region in the size-speed space. Interestingly, it appears that smaller, faster colloids are more likely to learn an effective chemotaxis policy. Suppose we consider the difficulty the RL algorithm has in training a policy with the real-world problem of evolving a suitable structure for life. In that case, these results suggest a trade-off between speed and size when learning how to perform chemotaxis. The most critical component of Figure 3 is the theoretical boundaries formed by considering the ratio between Brownian motion and the active motion of the particles described by Equations 3 and 4 . The green lines in Figure 3 correspond to the colloid radius and speed values for which this ratio is \(1.0\) for translational (solid) and rotational (dashed) diffusion. The translation ratio forms a boundary where the RL agents can no longer learn successful chemotaxis. The rotational diffusion line appears less strict, particularly for the faster agents, where it appears that with enough translational activity, the agents can overcome having rotational diffusion dominate over active rotation. The alignment of our results with the theoretical values suggests that it does so as soon as it is physically possible for an RL agent to learn chemotaxis. Such a result encourages one to consider studying further features of the models to understand how these features might also arise in these agents’ biological or artificial counterparts. Interestingly, the onset of successful chemotaxis can take place far below this theoretical limit but very rarely.

4.2 Learning Efficiency↩︎

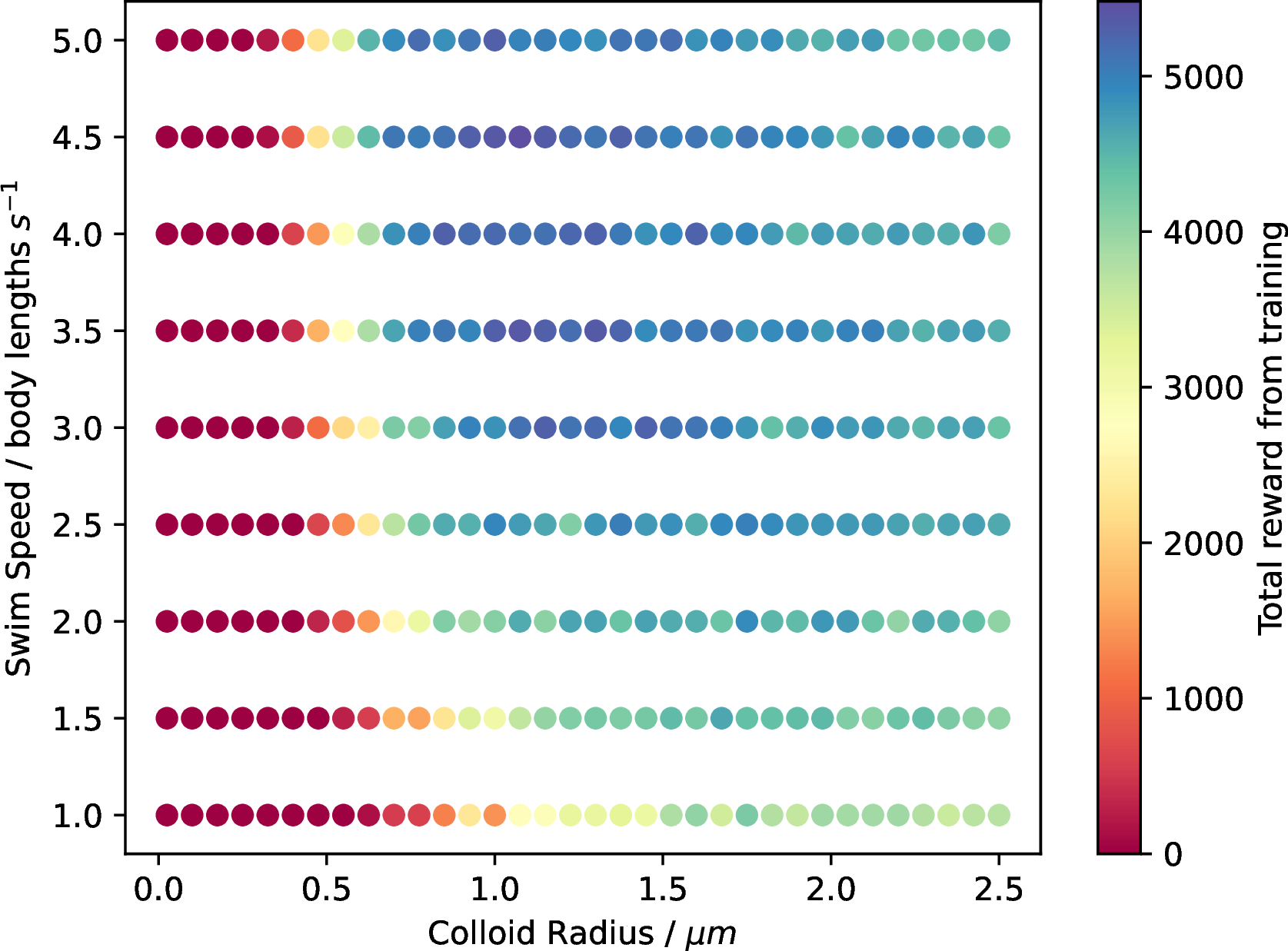

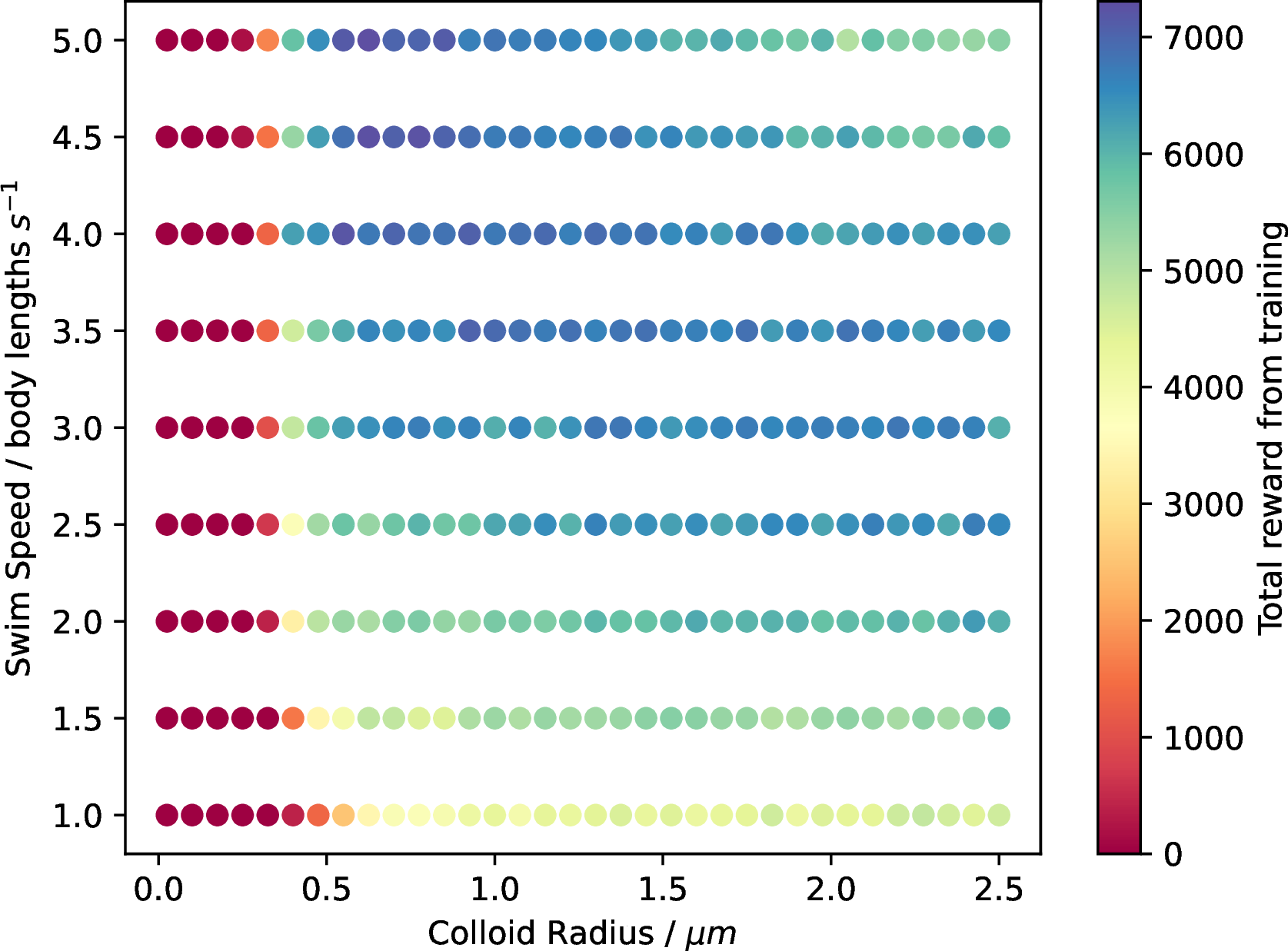

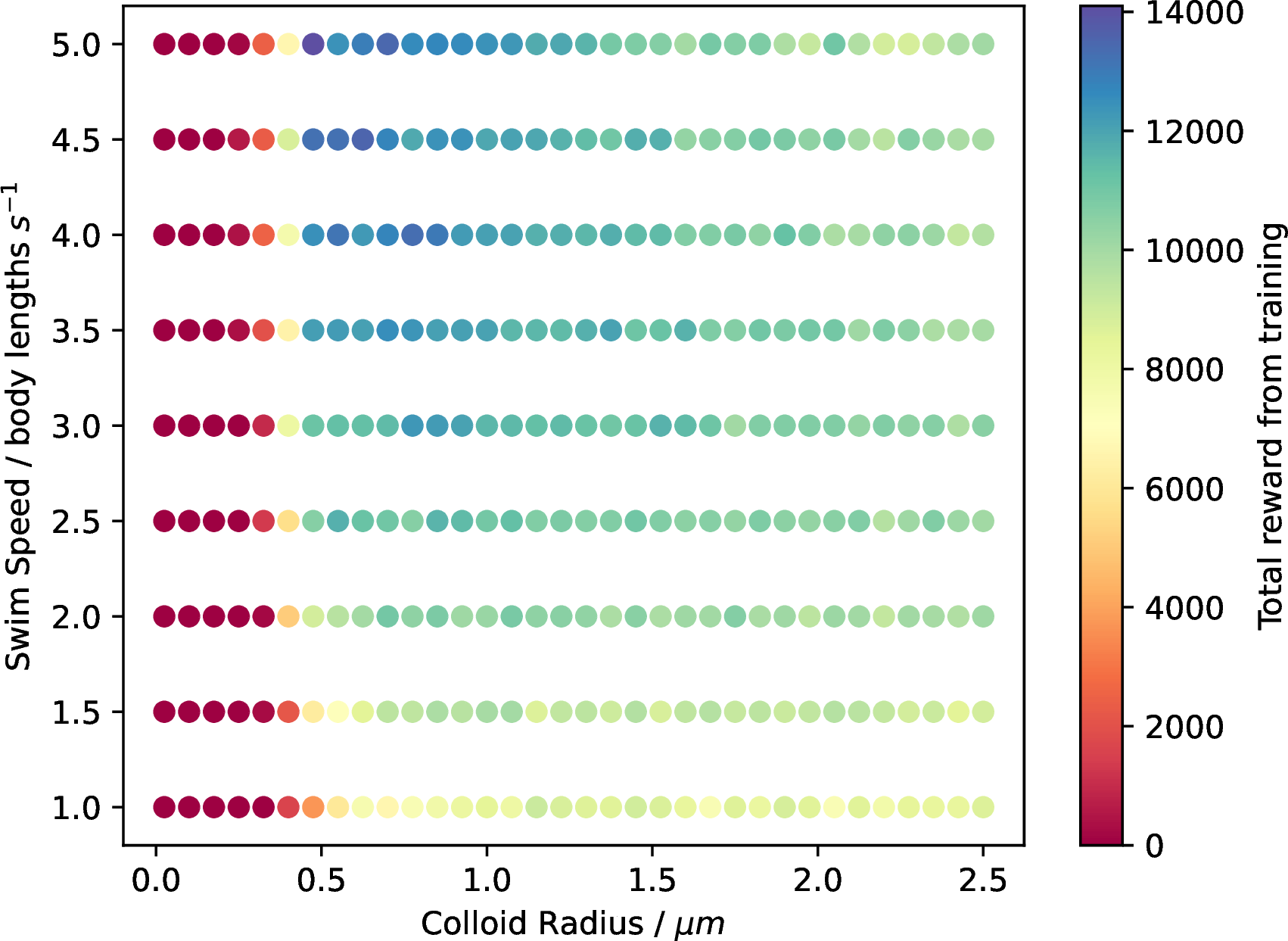

Next, we look at how the reward received from the reinforcement learning process changed depending on the size and speed of the colloids. This measure will indicate how easy it was for the model to learn the policy required to perform chemotaxis. Figure 4 outlines the results of this study in a similar manner to Figure 3. In the figure, the point’s colour corresponds to the total reward accumulated by the agents during all 10’000 training episodes.

Figure 4: Probability of successful chemotaxis emerging from RL studies. Raw data from the experiment. The colour of each point corresponds to the maximum reward achieved by the agents during the 10’000 episodes.

In order to compute the colour values in Figure 4, we corrected the size difference between the colloids. In the original simulations, an explicit distance to the source is used in the reward computation. However, this biases the results such that smaller colloids, no matter how successful they were, will achieve exponentially higher rewards as they can approach the source more closely. Therefore, the rewards in Figure 4 were computed by converting the reward from distance to the number of body lengths from the source. We can see that the reward diagrams roughly mirror the results shown in Figure 3 with larger discrepancies between the larger and smaller colloids. Namely, the rewards achieved by small and fast agents is noticeably larger than those of the bigger agents. This effect is particularly evident in the prolate and oblate simulations (SI Figures 10 and 14). It is likely due to their hopping over the intended target and inability to sit on it as accurately as the smaller agents.

4.3 Policy Efficiency↩︎

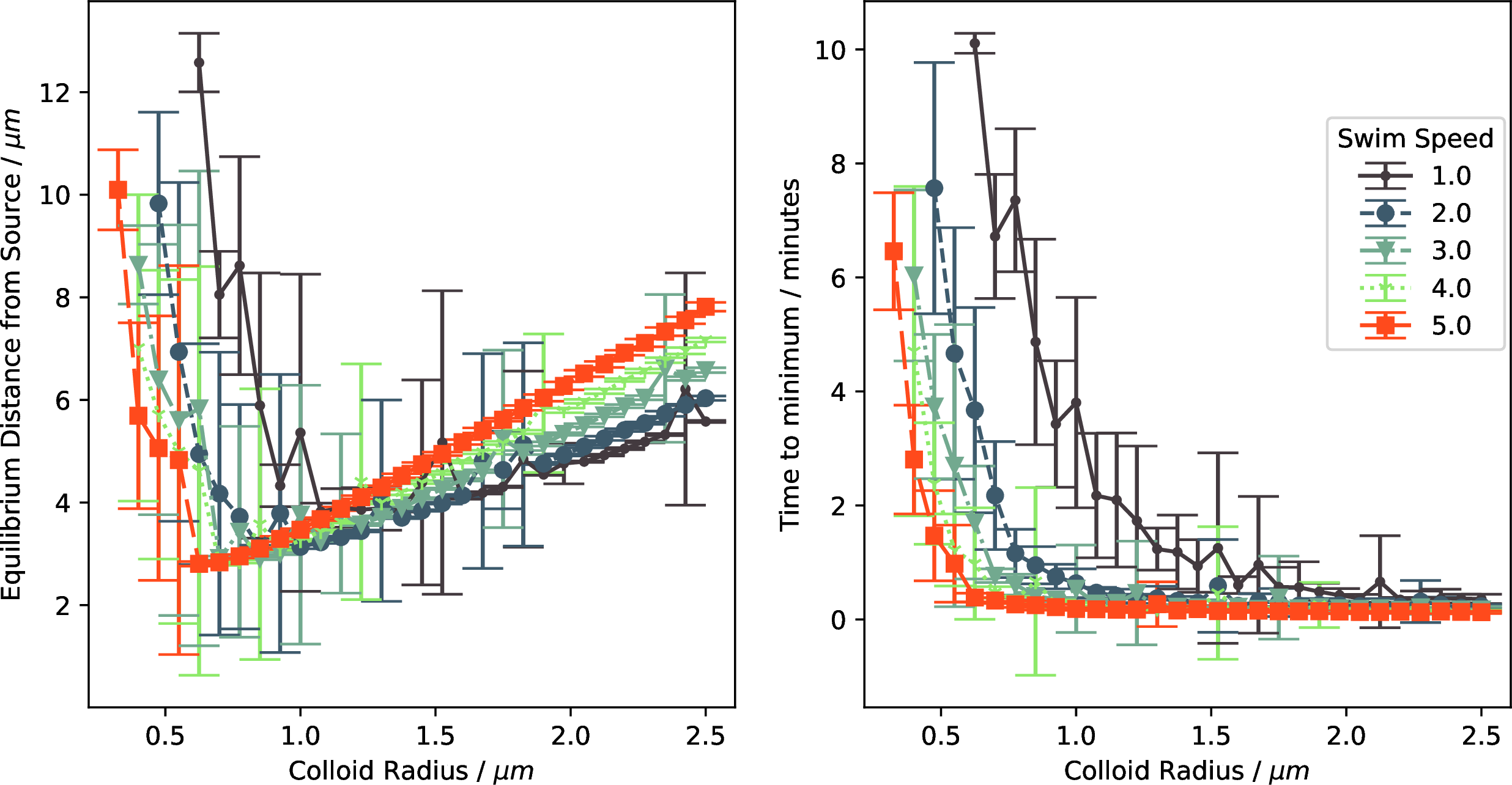

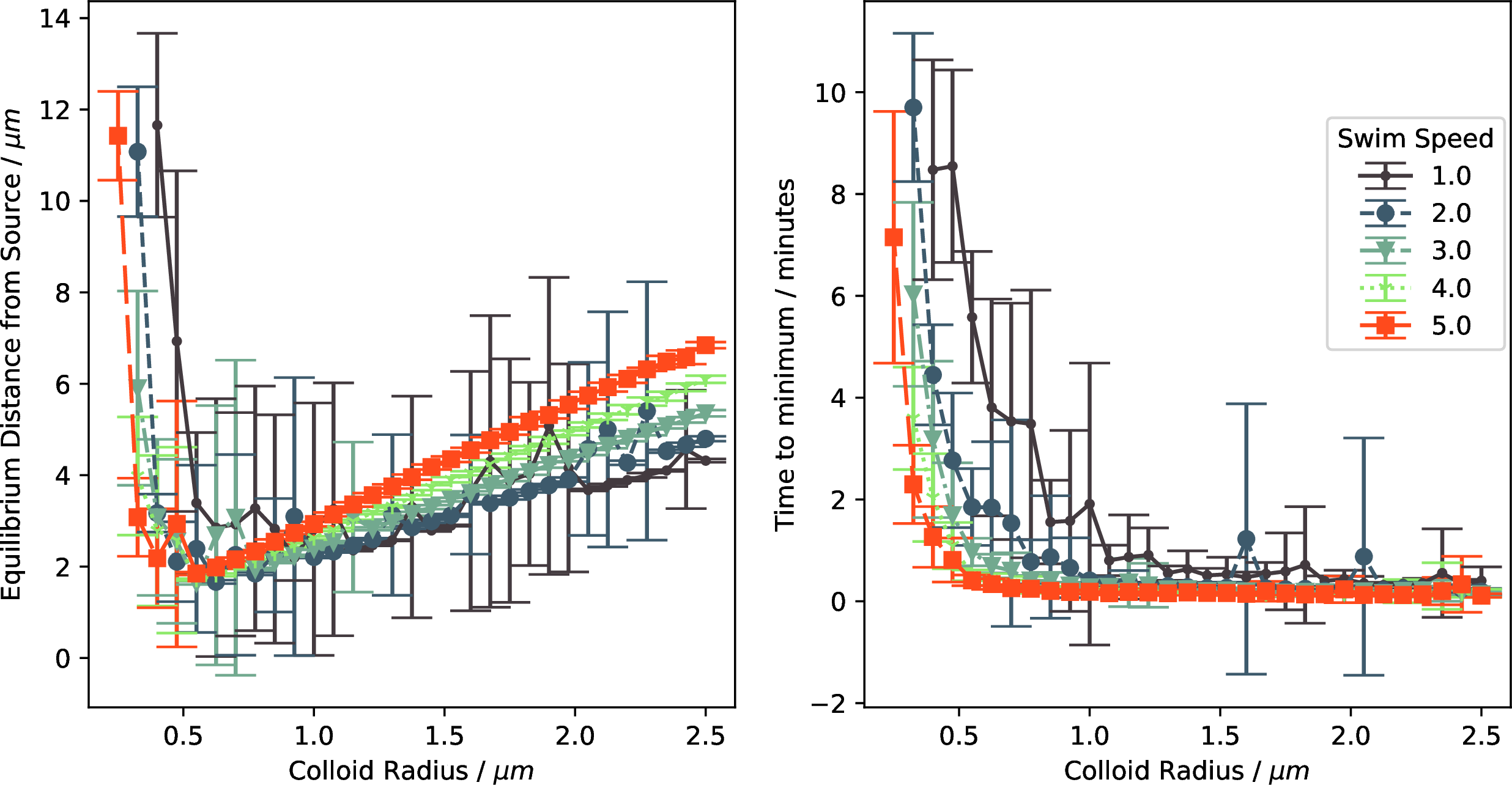

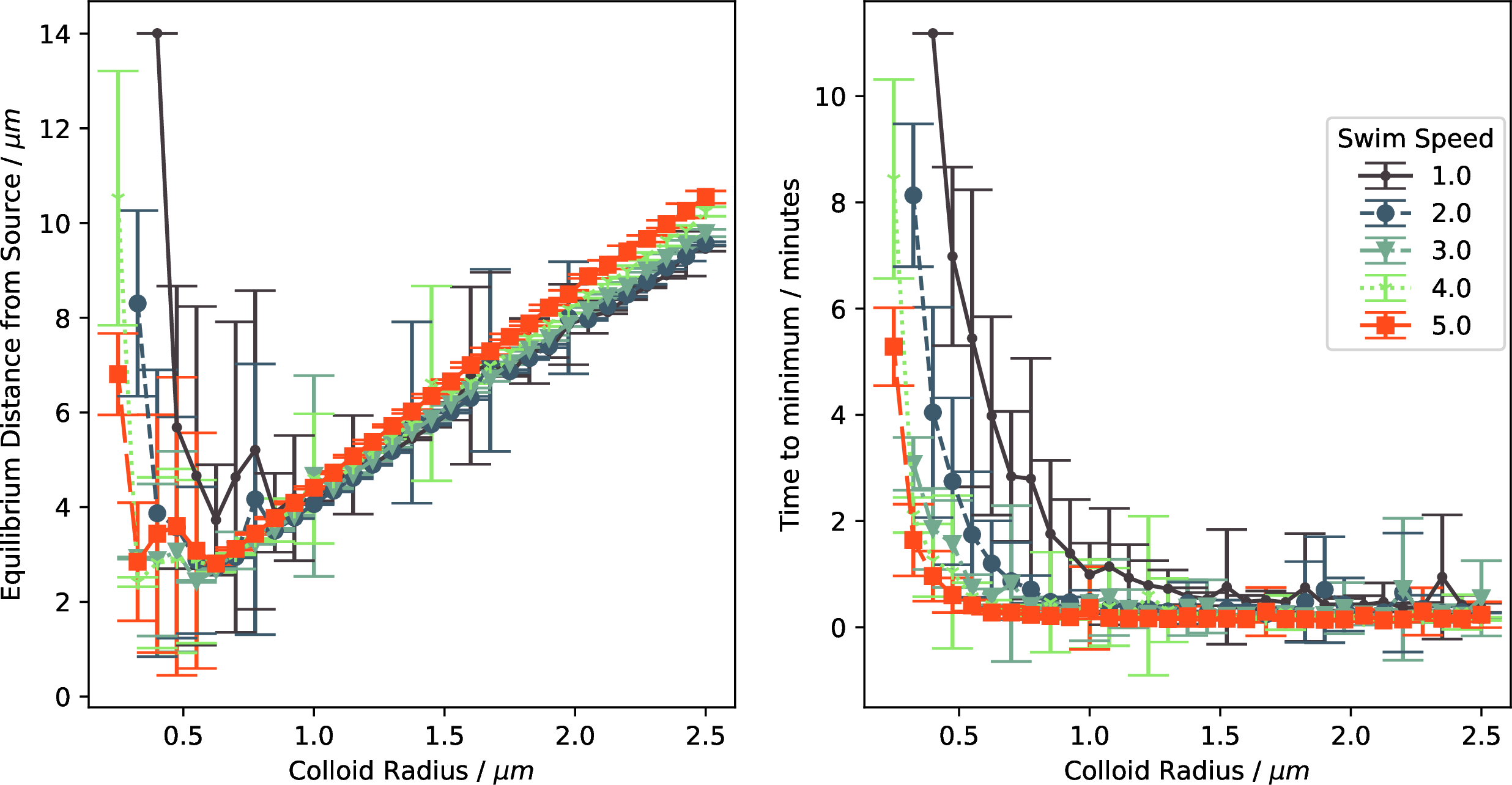

It is clear from the previous section that microswimmers of different sizes and speeds differ in their probability of emergent chemotaxis. However, what the differences are, if any, between their adopted strategy still needs to be determined. To identify these differences, the deployment simulations were analysed to compute the final equilibrium distance of the colloids around the source as well as after how many action updates they reached this distance. Figure 5 displays the results of this investigation.

Figure 5: (left) Mean distance from the source for each swim speed and colloid size. A clear minimum in each plot suggests an optimal size dependent on swim speed. (right) Rate of convergence to the source for different swim speeds and sizes. Interestingly, the convergence rate of larger colloids is relatively similar, suggesting some redundancy in larger body sizes and swim speeds.

Figure 5 (left) details the equilibrium distance of the agents as a function of radius for all studied swim speeds. On the right-hand side, we see a clear emergence of a linear trend as the limiting factor getting closer to the source becomes the size of the colloid and its speed. The left non-linear side of the plot also contains interesting features. Aside from the speed of one, the minimum distance from the source of the chemical field is achieved at a similar colloid size for all of the different speeds, with faster agents able to achieve slightly better equilibrium distances with smaller bodies. In Figure 5 (right), we see the average time the colloids reach the equilibrium distance. For the smaller agents, as is perhaps intuitive, the faster colloids can orient and move themselves to the source faster than their slower counterparts. However, this relationship fades for larger colloids as we see that after approximately 1 μm radii, all colloid sizes and speeds converge at similar times except for the slowest at one body length. The time to minimum converges slightly above 25 s. The results also suggest that after 0.5 μm radius, there is no conceivable benefit and, in fact, due to the larger equilibrium distance, perhaps even a detriment in being larger. Interestingly, the most unstable equilibrium distances, identified by large variance in mean value and distance from the source, occur close to or within the region displayed in Figure 3 where rotational diffusion overpowers the active rotation of the agents. This strong environmental effect could cause instability in these models as they must rely solely on their active translation to achieve chemotaxis.

4.4 Emergent Policy↩︎

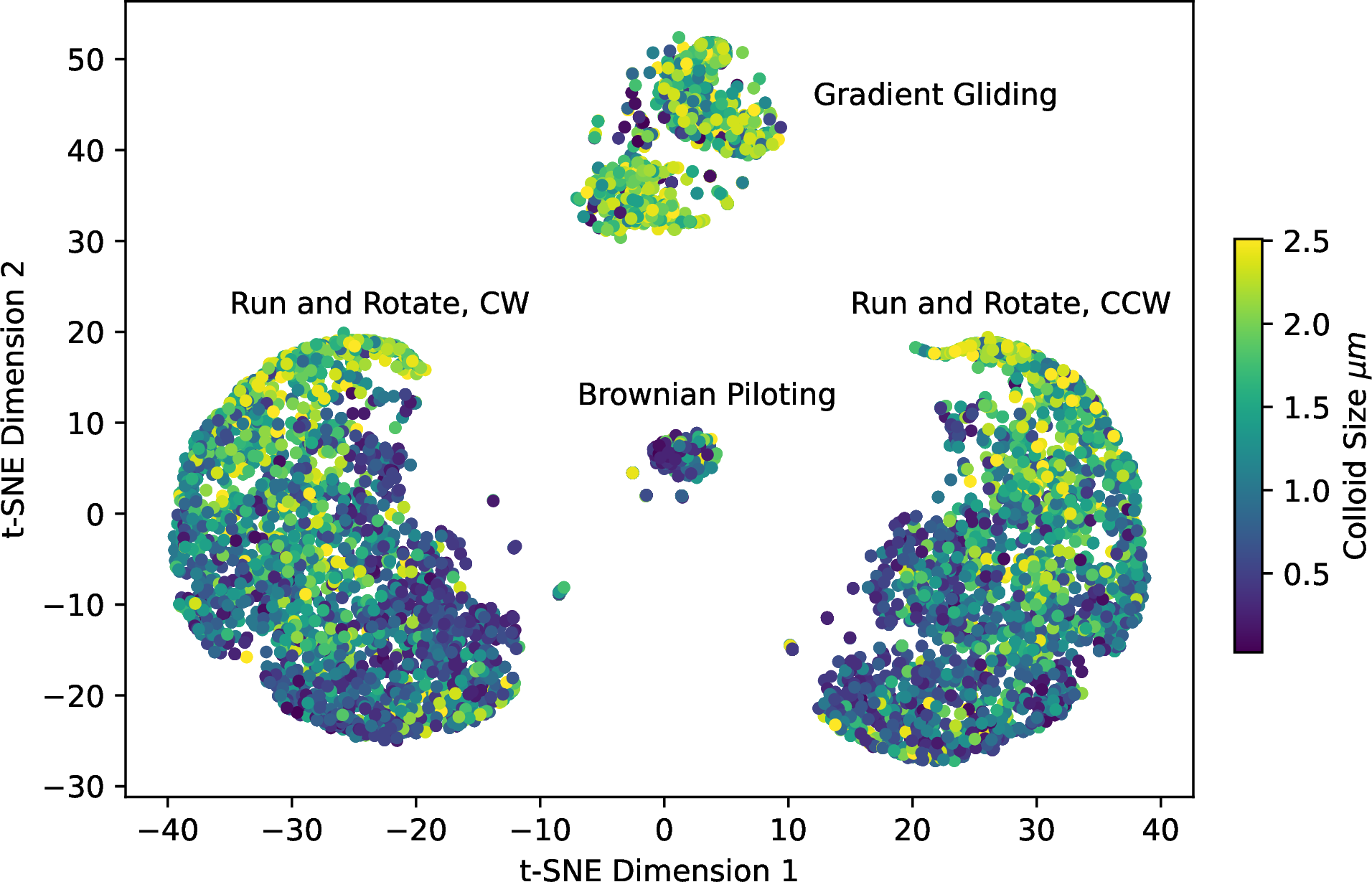

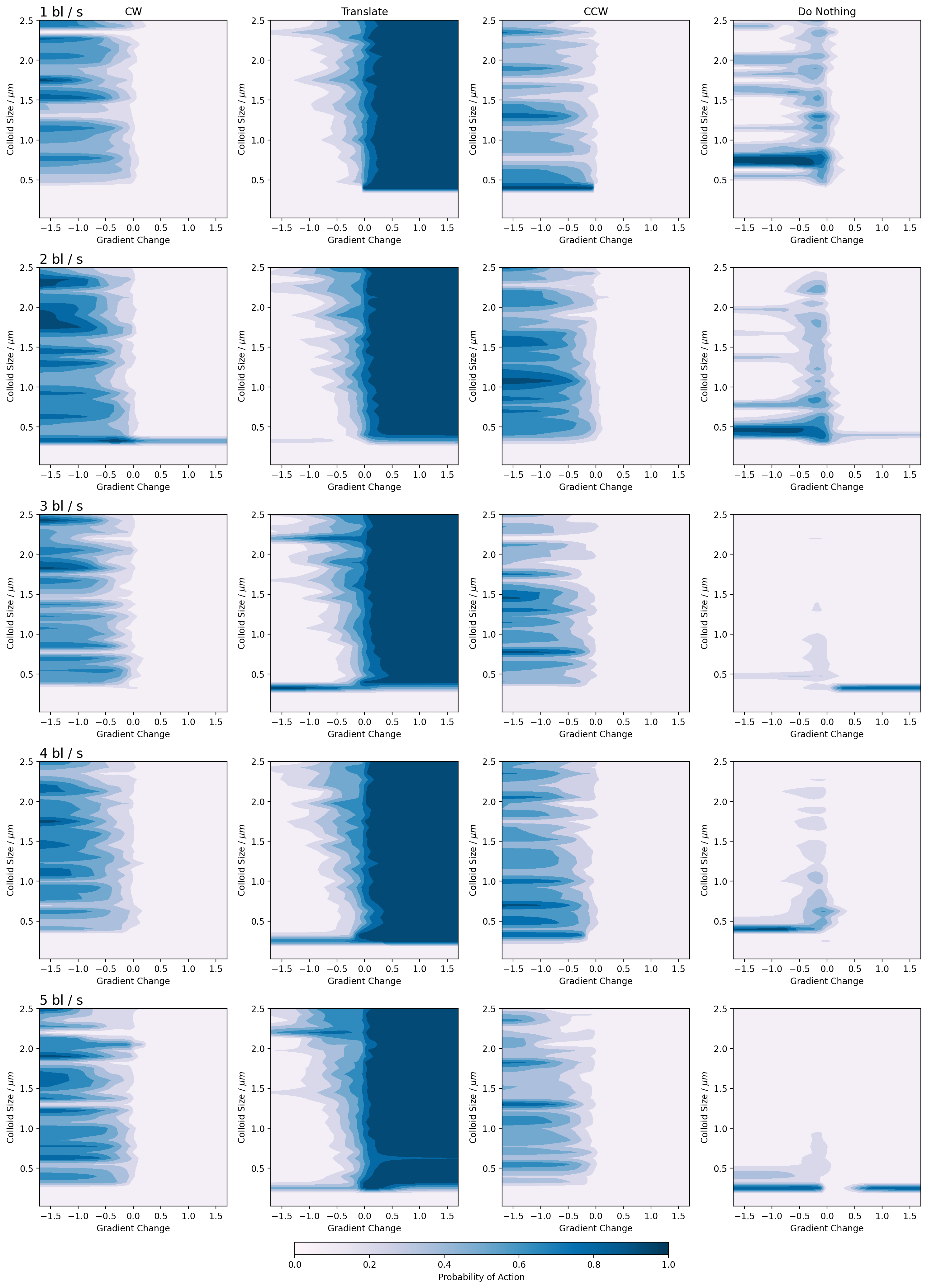

As a final investigation, we determine whether the emergent policy of the RL agent differs for changing physical properties and shapes. Studying the particles’ trajectory alone is almost impossible as they do not show large deviations from one another. Therefore, to do so, the trained models are given test data over a domain, \(x \in (-10.0, 10.0)\) and the probability of selecting each action is computed from the network outputs. The network output will be four numbers for each concentration value; therefore, before performing further analysis, these outputs are flattened into a single vector for each model, which we will refer to as the probability vectors of the network. In order to identify any structure in the data, we study the two-component t-distributed stochastic neighbourhood embedding (t-SNE) [32] of the probability vectors as implemented in the sklearn Python package [33]. Figure 6 outlines the results of the t-SNE for the policy data with a perplexity of 300 and principle-component-analysis (PCA) initialisation.

Figure 6: t-SNE embedding of the policy vectors for all successful agents in the study. Four large groups are formed, corresponding to the policies learned by the agents. The colour in these diagrams corresponds to the size of the studied agents.

Examining the t-SNE plots, we see the emergence of four groups, one of which is seemingly divided into two smaller subgroups. Using this information, we perform k-means clustering [34] on the probability vectors to split them into four clusters. The probability of the outcome of each policy is listed in Table 1 along with the explained variance from a PCA decomposition of the probability vectors. The probabilities are computed by examining the number of points clustered into each class by the k-means algorithm, which we assign to a policy by directly examining the action probabilities of the agents mapping into the class. The diagrams used to perform this mapping are included in the SI, where we show the probabilities of each action being taken for all agent sizes, shapes, and speeds. We also include smaller sample policy diagrams to demonstrate the actions taken by the agents for each strategy in SI Figure 7. However, as we only ask for four classes, this percentage will naturally ignore more convoluted and rare policies that the t-SNE and K-Means cannot sufficiently distinguish from others. We also perform PCA decomposition on the probability vectors to identify how much each policy explains the data distribution. In this approach, we see that 5.9 % of the data belongs to components with a smaller than one % impact on the variance of the PCA. When we examine the policies in this region, they are typically made up of weak combinations of the more dominant policies with very few exceptions. These policies may explain the splitting in the medium-sized group in Figure 6.

| Policy Name | Percentage Learned (K-Means) | Explained Variance (PCA) |

|---|---|---|

| Run and Rotate | 83.49 | 83.5 |

| Gradient Gliding | 12.88 | 7.1 |

| Brownian Piloting | 3.63 | 3.5 |

| Exotic Policies | 0.0 | 5.9 |

The remainder of the section will discuss each emergent policy in detail, including some policies poorly captured by the embedding methods.

4.4.0.1 Run and Rotate

In the vast majority of cases (83 %), the agents learned a policy strikingly similar to the run-and-tumble approach found in nature. In these cases, upon experiencing a negative input to the network, signifying a movement away from the

source of the gradient, the agents rotate either CW or CCW. Interestingly, once the agents chose a direction to rotate, they did not use the other one. Upon positive input to the network, i.e., movement towards the source of the gradient, the agents chose

to translate with probability 1. This policy can be seen clearly for the larger colloids in the top two rows of SI Figure 7. CW vs CCW selection was even throughout the simulations, with no preferred direction

discovered.

4.4.0.2 Gradient Gliding

For large colloids, some took translate for most inputs, only rotating for minimal changes in the gradient, such as those occurring when far away from the source or moving equipotential around it. Even in these cases, the strategy is inconsistent and

still has a high probability of translation. While this strategy might appear strange initially, it helps to consider regions where the gradient will be so slight. As the agents are initialised in the simulation, they will sit in a region with minimal

gradient. Upon moving around this area, they will likely rotate until they are dragged into a region where the gradient increases enough for it to begin translating. This was common amongst the chosen policies, with somewhere between 7 % and 12 % opting

for this approach. The discrepancy in percentage arises due to the spurious policies discussed in a later section. We label this policy Gradient Gliding as the colloids generally follow a translation path with very small adjustments made under

low gradient changes. An example plot of this strategy can be seen in SI Figure 7, row three.

4.4.0.3 Brownian Piloting

An alternative policy, referred to here as Brownian Piloting, was seen particularly in the case of smaller agents where rotational Brownian motion overcomes the active rotation. The agents learned to do nothing when experiencing negative

network inputs and to translate if they see a positive one. In this way, the agents do not fight against the Brownian forces when their active motion cannot overcome it. As this was only seen in the small agents, it is clear that such a policy could be

more optimal. However, it does demonstrate that small, weak agents can still successfully learn to navigate toward sources of nutrition. Overall, this policy was adopted in 3.6 % of cases. One can see Brownian Piloting in the fourth row of SI Figure 7.

4.4.0.4 Exotic policies (EP)

As was previously mentioned, approximately 5.9 % of the emergent policies were not well mapped into single classes. However, we can identify several so-called exotic policies by manually looking at the action probabilities.

EP 1: In these cases, the agents only translated when their input was negative. Otherwise, they chose to do nothing. This was only observed in small agents where random fluctuations significantly impacted their rotation more than active swimming.

EP 2: In this approach, we saw the agents choose the

Do Nothingaction at almost all times, except when the input to the network was a small positive gradient, at which point they would translate. This approach occurred for small colloids where all random forces outnumbered their active swimming. This indicates that microswimmers can survive in cases where their swimming is overpowered, effectively using their environment to perform successful chemotaxis. Whilst extremely inefficient, this swim strategy preserves energy and successfully allows the agents to perform chemotaxis despite an almost impossible condition.Combinations: We noticed combinations of other, more common approaches in many exotic policies. For example, some smaller colloids in Brownian-dominated regimes performed active rotation in both CW and CCW directions for negative inputs and translate for positive. We identify this policy as more or less equivalent to

Brownian Pilotingas the active rotation will not yield more than simply sitting still. In other cases, the onset of translation was delayed or accelerated, yielding slight variations ofRun and Rotate. As these points combined mixtures of the more dominant policies and yet did not occur often, the clustering algorithms could not successfully separate them into distinct classes.

The results tell us that in the cases where active translation and rotation are possible and dominate over Brownian effects, the agents often learn to perform a run-and-rotate trajectory. In cases where Brownian effects dominate active motion, the agents learn to adapt to this environment by performing only actions that move them into a new environment, by using the Brownian forces to their advantage. Interestingly, these policies are not well differentiated within the trajectories alone; only by looking at the neural networks can we see how the agents make decisions. Such an insight might guide us in understanding how real-world bacteria navigate their environments, and perhaps, how to disrupt, as in quorum quenching [35], or support, as in quorum enhancement [36], this navigation.

5 Conclusion↩︎

In this study, we have tested the role of size and swim speed on the emergent strategy of microscopic active agents learning chemotaxis via multi-agent actor-critic reinforcement learning. Our simulations demonstrated that intelligent agents can learn

chemotactic behaviour, even in environments where Brownian random forces begin to dominate their active motion. In such regimes, we found that the chemotaxis was not optimal in terms of their equilibrium distance from the source of the chemical gradient or

the speed at which they made it to the source. However, they could consistently reach their target. Interestingly, we saw that as the Péclet number grew above one and active motion dominated the Brownian forces and torques, the learned policies also

converged quickly to a similar equilibrium distance and time. After studying the policy efficiency, we looked into the strategies adopted by the agents to perform chemotaxis. We identified three dominant strategies which we named

Run and Rotate, Gradient Gliding, and Brownian Piloting. The first policy occurred most often (83.5 %) but predominantly in cases where the colloids were large enough to no longer be in regions where rotational or

translation Brownian motion overcame their active swimming. This strategy involved translating as long as the input to the agent was positive, i.e., moving towards the source and rotating if it was negative. The second most common strategy ( 7.1 %

emergence), also occurring in larger colloids, was to translate for most of the time whether the input was negative or positive, and only when the input to the network was small would they sometimes choose to rotate. This strategy meant that the colloids

spent a long time rotating when they were far away from the source but translating when they identified the direction of the source. The final common strategy occurred when the rotational and sometimes translational Brownian motion dominated the active

swimming. In these cases, the agents would perform the Do Nothing action while the input to the network was negative, and only when it was positive would they begin translating. We identified further policies, including a kind of

lazy swimming where the agents performed no actions except when they were far away from the source and the input to the network was weakly positive, as well as some cases where agents learned to rotate in both CW and CCW directions but often

in a regime where this was not useful. Overall, we have identified that reinforcement learning can replicate natural behaviour of organisms. However, it can also provide insight into biological swimmers’ possible strategies and may provide a path forward

for exploiting this knowledge. A further point of interest would be to identify natural biological swimmers who have evolved such swimming patterns or can outperform the emergent strategies of the RL agents.

6 Data and Availability↩︎

All data can be made available upon reasonable request to the authors and, upon publication, will be made publicly available through the DaRUS service.

7 Acknowledgements↩︎

C.H and S.T acknowledge financial support from the German Funding Agency (Deutsche Forschungsgemeinschaft DFG) under Germany’s Excellence Strategy EXC 2075-390740016, and S. T was supported by an LGF stipend of the state of Baden-Württemberg. C.H and S.T acknowledge financial support from the German Funding Agency (Deutsche Forschungsgemeinschaft DFG) under the Priority Program SPP 2363. C.H and C.L acknowledge funding by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) under Project Number 327154368-SFB 1313. The authors would like to acknowledge funding from the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) Compute Cluster grant no. 492175459.

7.1 Anisotropic Friction Coefficients↩︎

In the case of the anisotropic agents, the translational friction coefficients are more challenging. Consider a spheroidal agent with axial semiaxis, \(r_{ax}\) and equatorial semiaxis, \(r_{eq}\). Let \[a = \max(r_{ax}, r_{eq}), \quad e = \sqrt{1 - \left(\frac{r_{eq}}{r_{ax}}^{2}\right)}, \quad L = \log\left(\frac{1 + e}{1 - e} \right).\] The translational friction can now be defined following the approach taken by [37] where for a prolate particle \[\gamma^{ax}_{t} = 16\pi\mu a e^{3}\left[\left(1 + e^{2}\right)L - 2e \right]^{-1}\] is the axial friction coefficient and \[\gamma^{eq}_{t} = 32\pi\mu a e ^{3}\left[2e + (3e^{2} - 1)L \right]^{-1}\] the equatorial, and for an oblate particle \[\gamma^{ax}_{t} = 8\pi\mu a e^{3} \left[e(1 - e^{2})^{\frac{1}{2}} - (1 - 2e^{2})\sin^{-1} e\right]^{-1}\] \[\gamma^{eq}_{t} = 16\pi\mu a e^{3}\left[(1 + 2e^{2})\sin^{-1} e - e(1 - e^{2})^{\frac{1}{2}} \right]^{-1}\] are the axial and equatorial friction coefficients respectively. Rotational friction factors were compute using the Perrin factors [38]. To do so, we introduce further the aspect ratio \[p = \frac{r_{ax}}{r_{eq}},\] and \[\xi = \frac{\sqrt{|p^{2} - 1|}}{p}.\] With these two equations, we can derive the Perrin \(S\) factors for prolate \[S^{\text{prolate}} = 2\frac{\tanh^{-1} \xi}{\xi}\] and oblate \[S^{\text{oblate}} = 2\frac{\tan^{-1} \xi}{\xi}\] particles respectively. Finally, we define the generic rotational friction coefficient for an equivalent sphere \[\gamma_{\text{sphere}} = 8\pi\mu r_{ax} r_{eq}^{2}.\] With these definitions, the equatorial and axial friction coefficients for both prolate and oblate particles can be derived as \[\prescript{}{\text{prolate/oblate}}\gamma^{eq}_{r} = \frac{4}{3}\frac{\frac{1}{p^{2}} - p^{2}}{2 - S^{\text{prolate/oblate}} \left[2 - \left(\frac{1}{p}\right)^{2} \right]}\] and \[\prescript{}{\text{prolate/oblate}}\gamma^{ax}_{r} = \frac{4}{3}\frac{p^{2} - 1}{2p^{2} - S^{\text{prolate/oblate}}}.\] For anisotropic particles, the translational friction coefficient in Equation 1 becomes a tensorial quantity with \(\gamma_{t} = \text{diag}(\gamma_t^{eq}, \gamma_t^{ax})\) in the comoving frame of reference of each particle in which the second coordinate points along the director \(\vb{e}(t)\). Since particles are fixed to two dimensions, rotation happens only around an equatorial axis such that for anisotropic particles \(\gamma_r = \gamma_r^{eq}\) in 2 . The ESPResSo [25] simulation package is used to numerically solve Equations 1 and 2 with a time-step \(\delta t = \SI{0.005}{\second}\), the actions that determine \(F(t)\) and \(m(t)\) are updated every time slice \(\Delta t = \SI{0.1}{\second}\). In all cases, unless otherwise specified, when referring to time in this investigation, we refer to the time slice, i.e., the number of times an action is computed for each agent in the simulation.

7.2 Anisotropic Interaction Potential↩︎

For the prolate and oblate agents, we use the modified Gay-Berne potential for anisotropic particles [39], [40] \[ V\left(\vb{u}_{i}, \vb{u}_{j}, \vb{r}_{ij}\right) = \begin{cases} \epsilon\left(\vb{u}_{i}, \vb{u}_{j}, \vb{r}_{ij}\right) \left[ \left( \frac{\sigma_{0}}{r_{ij} - \sigma\left(\vb{u}_{i}, \vb{u}_{j}, \vb{r}_{ij}\right) + \sigma_{0}}\right)^{12} - \left( \frac{\sigma_{0}}{r_{ij} - \sigma\left(\vb{u}_{i}, \vb{u}_{j}, \vb{r}_{ij}\right) + \sigma_{0}}\right)^{6}\right], \quad &r_{ij}<4\sigma\max(l, l^{-1}),\\ 0, &\text{else}, \end{cases}\] where \(\vb{r}_{ij}\) is the vector distance between the center of mass of each particle, \(\vb{u}_{i}\) is the direction vector of the \(i^{\text{th}}\) particle, and \(\epsilon\left(\vb{u}_{i}, \vb{u}_{j}, \vb{r}_{ij}\right)\) and \(\sigma\left(\vb{u}_{i}, \vb{u}_{j}, \vb{r}_{ij}\right)\) are additional functions depending on the orientations of the particles. We use the augmented forms of these function where \[ \epsilon\left(\vb{u}_{i}, \vb{u}_{j}, \vb{r}_{ij}\right) = \epsilon_{0}\left[1 + \frac{d^{-1} - 1}{2} \left(||\vb{r}_{ij}\cdot\vb{u}_{1}||_{2} + ||\vb{r}_{ij}\cdot\vb{u}_{2}||_{2}\right)\right]\] and \[ \sigma\left(\vb{u}_{i}, \vb{u}_{j}, \vb{r}_{ij}\right) = \sigma_{0}\left[1 + \frac{l - 1}{2} \left(||\vb{r}_{ij}\cdot\vb{u}_{1}||_{2} + ||\vb{r}_{ij}\cdot\vb{u}_{2}||_{2}\right)\right],\] where \(d\) is ratio between the side-by-side binding energy and the end-to-end binding energy and \(l\) is the aspect ration, which, in this work, was set to \(3\) for the prolate particles and \(\frac{1}{3}\) for the oblates. We choose \(\sigma_0 = \sigma\) and \(\epsilon_0 =V_0\).

7.3 Policy Examples↩︎

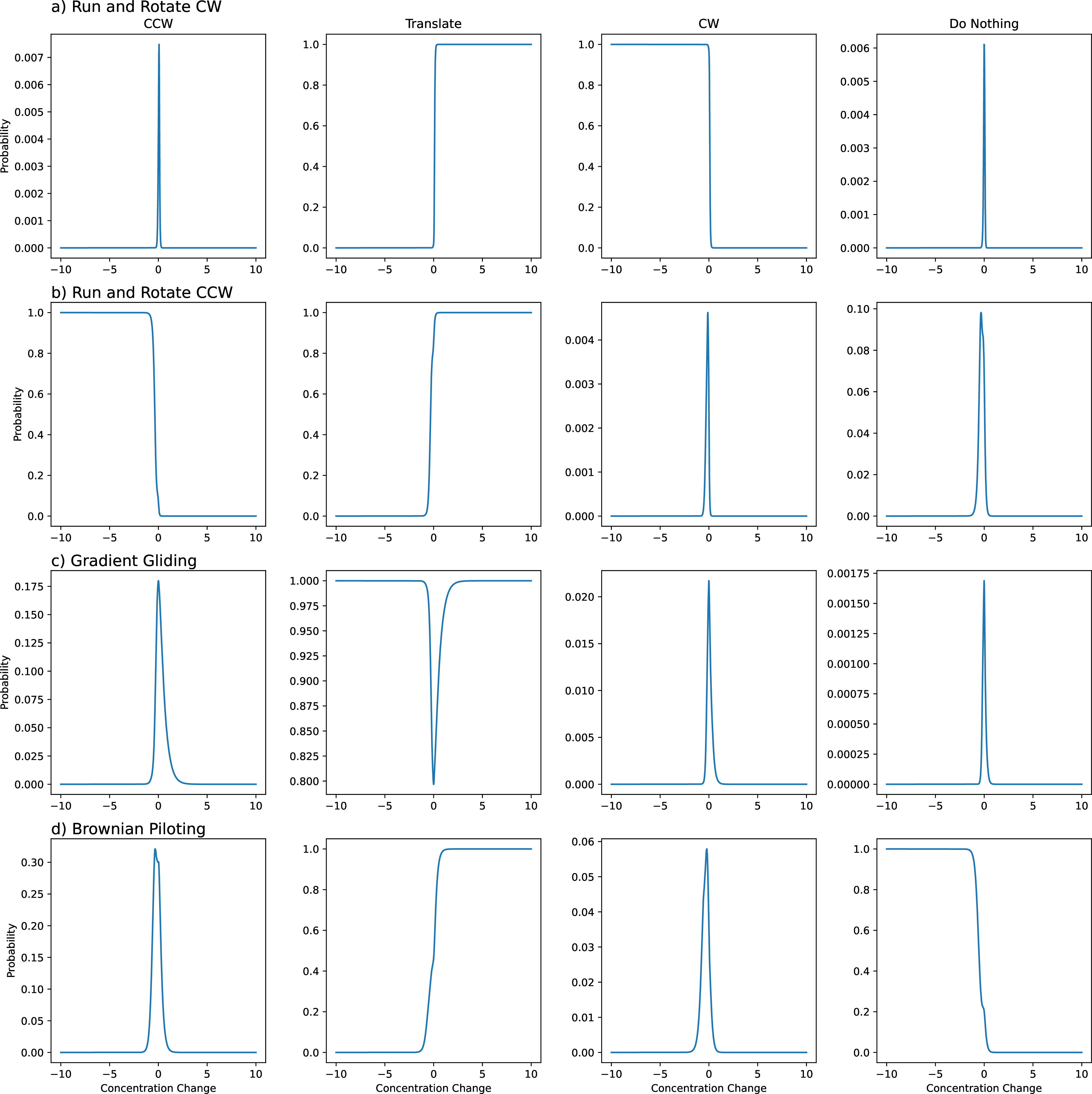

Here we show example policy diagrams for each policy discussed in the manuscript.

Figure 7: Examples of each emergent policy found during the investigation. a) and b) are the run and rotate policy for CW and CCW directions. You can see that as the input to the network becomes negative, the agents decide to rotate and as they are moving towards the source, they translate. c) The Just run, rarely rotate policy where for most inputs, the agent will translate, but far away from the source, when the input is small, the agent may also decide to rotate. d) Do nothing when negative and run when positive.

7.4 Shape Studies↩︎

As discussed in the manuscript, we performed the chemotaxis study for spherical, prolate, and oblate particles. Here we display and discuss the results not presented in the main manuscript.

7.4.1 Sphere↩︎

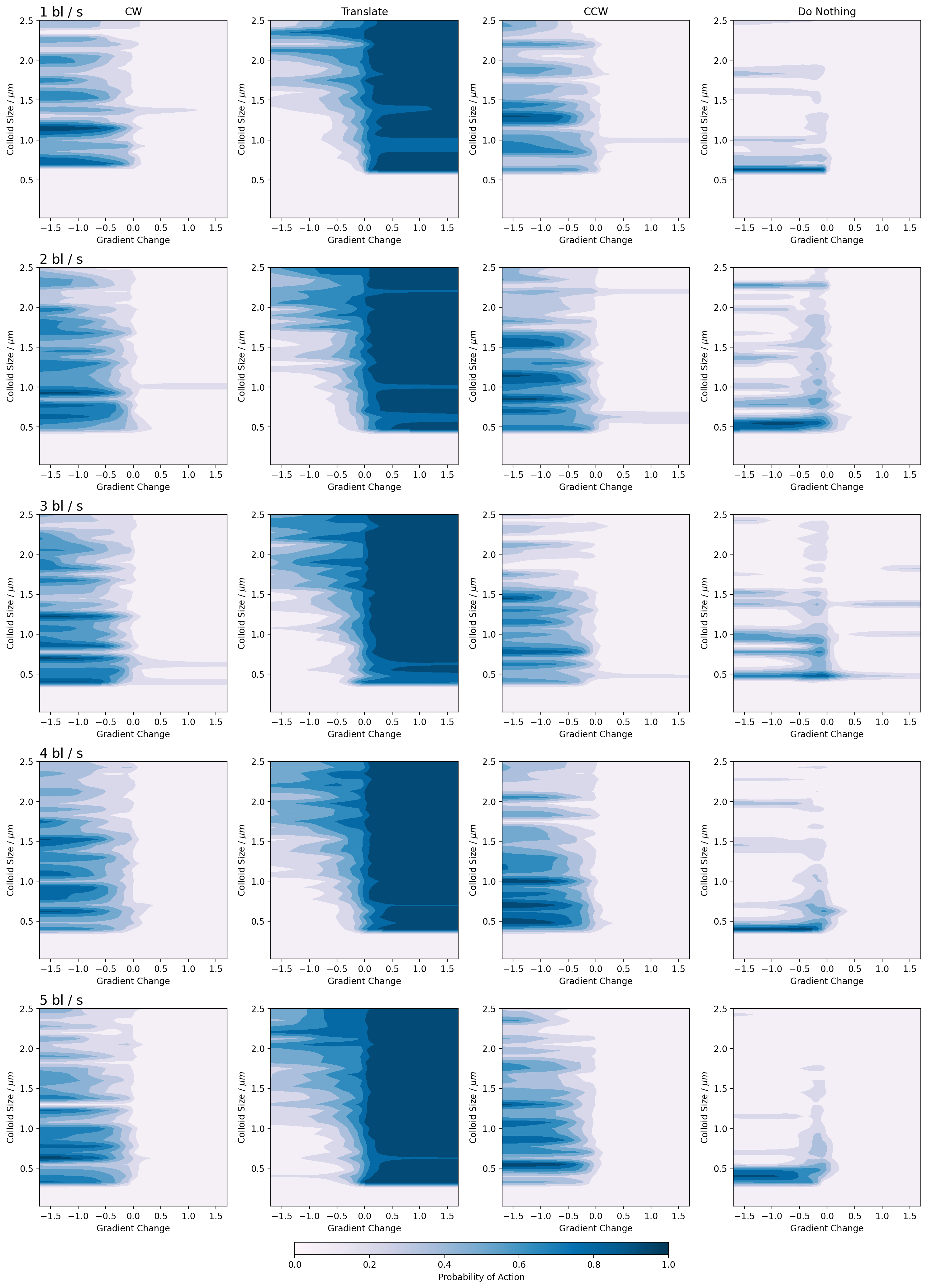

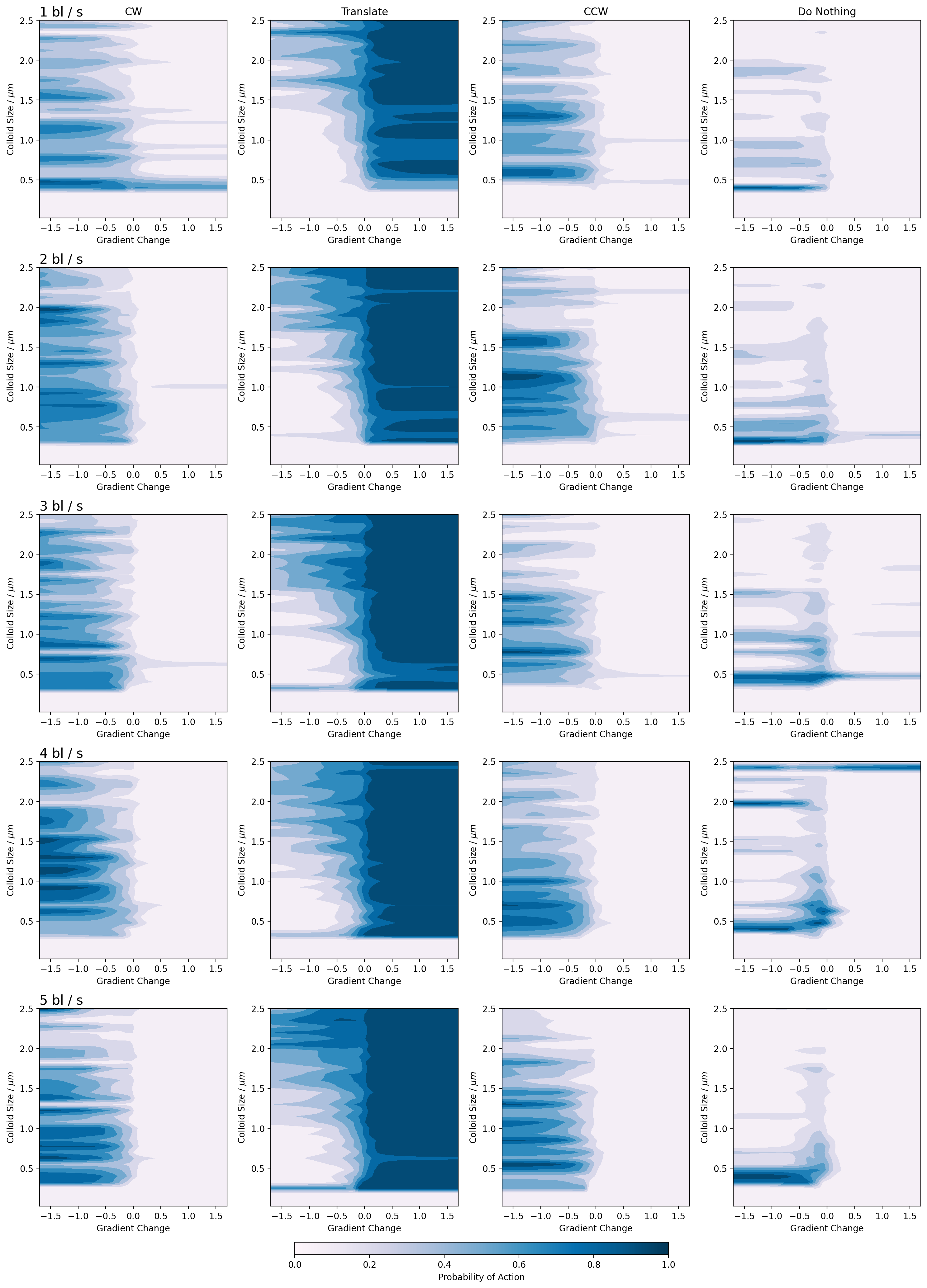

While most of the sphere results are presented in the main manuscript, the raw policy plots are presented here. Note, bl in the figure stands for body lengths.

Figure 8: Emergent policy of the spherical microswimmers for all speeds and sizes. We see the development of several strategies depending on body size, notably, a run-and-tumble type strategy where colloids will either rotate or translate depending on a decrease or increase in concentration, respectively. Interestingly, once a colloid has learned to rotate either CW or CCW, it does not change this direction at any point during the runs.

The sphere policy diagrams outline the majority of the policies discussed in the main text. On the x axis, the change in gradient is plotted and on the y, the colloid shape. The colour of the diagram represents the probability of an action being taken and each column corresponds to a single action. The rows are the different swim speeds descending from one to five. The diagrams show the forbidden region in the chemotaxis below approximately \(0.5 \mu m\). After this point, we see the emergence of non-zero probabilities as the networks have learned to perform chemotaxis.

7.4.2 Oblate↩︎

The oblate particles demonstrated similar behaviour to the spherical colloids, not showing any unique policy deviations.

Figure 9: The probability of emergent chemotaxis for the oblate particles along with the theoretical boundaries from the Péclet numbers.

Figure 10: Reward phase diagram computer from the oblate simulations. In this case, we see the best training emerges again in the smaller but fast region of the diagram. While the width of the colloids is corrected for, more complex geometric conditions may be impacting these results.

Figure 11: Policy efficacy for the oblate particles appears almost identical to the spherical particles.

Figure 12: Emergent policy diagram for the oblate particles.

7.4.3 Prolate↩︎

The prolate simulations were similar to both the spherical and oblate studies with the exception of one very unique policy. Mentioned in the main manuscript, we found an agent that appeared to perform no actions until the input to the network was small and positive. Upon receiving such an input, the agent would translate. More interestingly, this occured for a particle of size around \(0.3 \mu m\), far below the theoretical boundaries for random forces to begin dominating the motion. This was also the smallest agent in all simulations capable of achieving chemotaxis.

Figure 13: The probability of emergent chemotaxis for the prolate particles along with the theoretical boundaries from the Péclet numbers.

Figure 14: Reward phase diagram computer from the prolate simulations. In this case, we see the best training emerges again in the smaller but fast region of the diagram. While the width of the colloids is corrected for, more complex geometric conditions may be impacting these results.

Figure 15: Policy efficacy for the prolate particles appears almost identical to the spherical particles.

Figure 16: Emergent policy diagram for the prolate particles.