CARLOS: An Open, Modular, and Scalable Simulation Framework for the Development and Testing of Software for C-ITS

April 02, 2024

Abstract

Future mobility systems and their components are increasingly defined by their software. The complexity of these cooperative intelligent transport systems (C-ITS) and the ever-changing requirements placed on their software require continual software updates. The dynamic nature of the system and the practically innumerable scenarios in which different software components work together necessitate efficient and automated development and testing procedures that use simulations as one core methodology. The availability of such simulation architectures is a common interest among many stakeholders, especially in the field of automated driving. That is why we propose CARLOS - an open, modular, and scalable simulation framework for the development and testing of software in C-ITS that leverages the rich CARLA and ROS ecosystems. We provide core building blocks for this framework and explain how it can be used and extended by the community. Its architecture builds upon modern microservice and DevOps principles such as containerization and continuous integration. In our paper, we motivate the architecture by describing important design principles and showcasing three major use cases - software prototyping, data-driven development, and automated testing. We make CARLOS and example implementations of the three use cases publicly available at github.com/ika-rwth-aachen/carlos.

1 INTRODUCTION

Simulations play a vital role in the development and testing of automated driving functions [@Groh19]. Future cooperative intelligent transport systems (C-ITS) will make them even more necessary due to the large number of involved entities and their complex interactions. Whether software meets its security and safety requirements must be tested before it is deployed. Simulations help with this task by running vast numbers of relevant scenarios in which different software components may interact. Although simulations are affected by a reality gap, continuous advancements in their fidelity enable them to play a more central role in software development and testing [@Kloeker23][@Huch23][@Zhong21]. Thus, simulative testing represents an expanding topic in both research and development, as seen in research projects such as AUTOtech.agil [@VanKempen23].

Numerous stakeholders have therefore developed solutions for simulation frameworks and architectures [@Stepanyants23]. While progress has been made, current solutions often lack scalability, usability, maintainability, and other key aspects. Most importantly, existing solutions are often tailored to the specific needs of their developers, may not be open-source, or are not modular enough to be easily extended by the community.

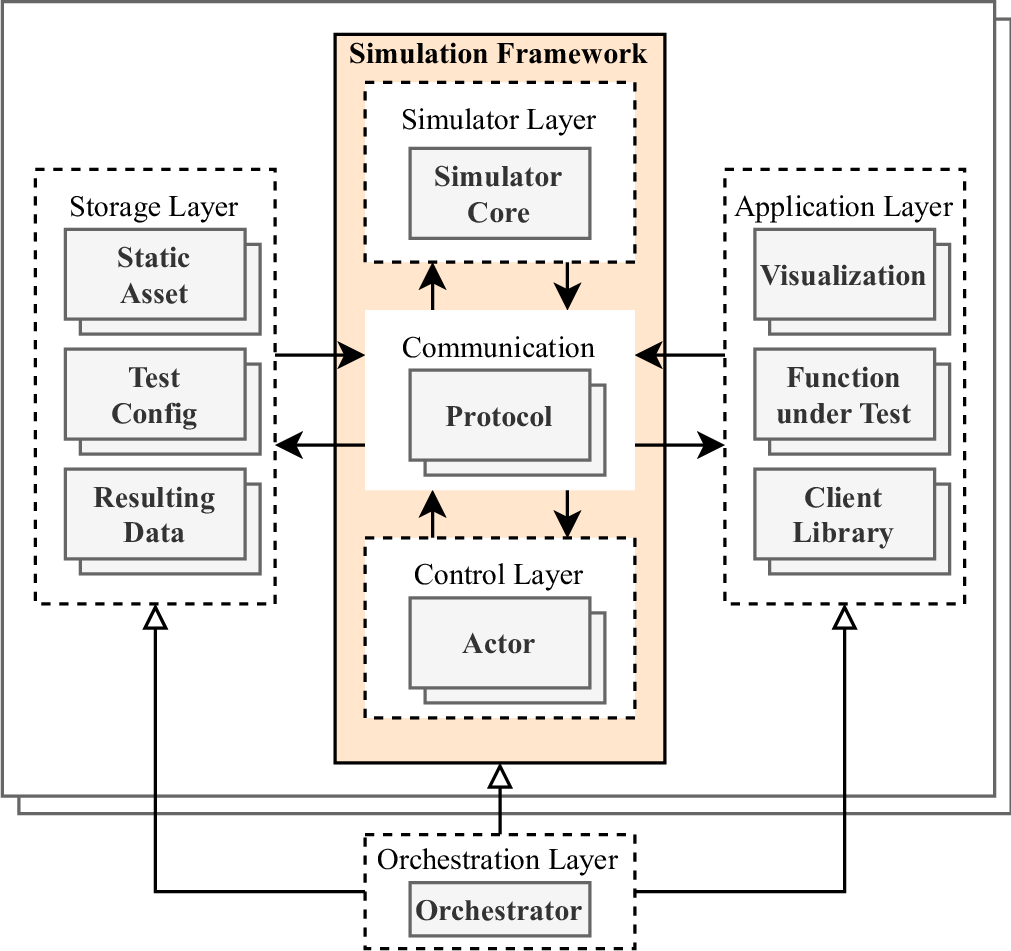

Figure 1: Overview of the proposed modular simulation architecture. It divides functionality into multiple layers, resulting in a generic microservice architecture that is flexible for multiple use cases. A managing orchestration layer enables automation and scalability of simulations.

Thus, an open, modular, and scalable simulation architecture is beneficial for the development and testing of automated driving software components. In this paper, we propose a generic architecture that is abstractly depicted in Fig. 1. Aligned with recent trends toward containerization and microservices, this novel architecture is characterized by modularity and flexibility. In detail, a separation into functional components enables dynamic adaptation to various simulation use cases and a seamless integration with DevOps workflows such as continuous integration (CI). Moreover, common orchestration tools can achieve the scalability of simulations to multiple concurrent runs, which significantly enhances testing throughput and efficiency.

As a reference implementation, we present CARLOS, a novel open-source framework incorporating the proposed simulation architecture within the CARLA ecosystem [@Dosovitskiy17]. CARLOS and comprehensive demonstrations for different use cases are made publicly accessible on GitHub. Consequently, we provide a strong foundation for further development driven by the CARLA and ROS community.

The main contributions can be summarized as follows:

Use case analysis for simulative testing in the context of automated driving.

Conceptualization of a modular simulation architecture using common standards.

Implementation of CARLOS, a containerized framework using the open-source CARLA ecosystem.

Illustrative provision of examples for specific use cases, allowing simple adaptation.

2 STATE OF THE ART

2.1 Microservice Architectures

Microservice architectures can be understood as a successor of the service-oriented architecture (SOA), a well-established architectural style that is often seen as the opposite and a competitor of the monolithic architecture. This is due to it solving many of the limitations and shortcomings of the monolith approach, some of those being maintainability, scalability, and reusability [@Amazon24]. More recently, the microservice architecture has gained in popularity, as it shares many of SOA’s strengths by following a similar architectural approach while also deviating at a few key points. For instance, it furthers independence and autonomy of services by splitting up the data store and allowing each service to be coupled with its own, contrasting the SOA approach where storage is shared between services. Additionally, instead of relying on a complex, shared service bus for communication, which is often seen in SOAs, microservices instead employ service-level APIs and gateways for external and inter-service communication [@Jamshidi18].

There are, of course, some major challenges that come with microservices. To facilitate the flexibility and reproducibility of deployments across a multitude of platforms, virtualization and containerization have emerged, the latter being more lightweight and thus a better fit for microservices [@Tasci18]. There is also the issue of increased complexity in orchestration and management, as well as the need for extensive monitoring of such often distributed systems [@Baskarada20]. For containerization and orchestration, Docker and Kubernetes, respectively, have established themselves as the de facto industry standards [@Hardikar21], although notable alternatives such as Podman and Docker Swarm exist.

The traits and technologies of microservices are highly desirable for many fields, especially in the booming space of cloud computing. The increasing shift towards cloud computing, and thus towards microservices and SOA, is also felt in robotics [@Lampe23][@Xia18][@Tasci18], where the C-ITS sector in particular shows interest. Besides potentially shifting to the cloud and utilizing containerization and orchestration for live systems, these tools have also proven to be exceptionally useful for the development and testing of C-ITS software [@White17][@Busch23][@RedHat24].

2.2 Test Design

Testing has historically been deeply connected to the process of developing software due to the close relationship between systems engineering and modern software engineering [@Forsberg92]. Besides more recent trends in the software development life cycle (SDLC) like agile development, the V model is part of the more fundamental results of systems engineering [@Akinsola20]. It introduces different levels of testing that changed and expanded over the years but classically breaks down to unit, integration, system, and acceptance testing.

These levels can also be found in SOA and microservices, where the high modularity due to a separation into smaller services leads to an increased need for and interest in integration and unit testing, as well as automation of tests [@Waseem20]. This is especially true for automated driving systems (ADS), in which highly critical software components need to be thoroughly tested to guarantee safety. In practice, this has proven to be exceptionally difficult, and not only because of the high risk and low reproducibility of tests on public roads: an excessive number of random testing kilometers would also be statistically required to guarantee a human-comparable safety level [@Wachenfeld2016]. To address these challenges, several other approaches for ADS testing have emerged, with some of the more promising being simulation and scenario-based testing [@Lou22][@Fremont20].
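The statistical argument can be made concrete with a short calculation. The sketch below assumes that critical events follow a Poisson process with a constant rate per kilometer, and it uses a purely illustrative target rate; neither the model nor the numbers are taken from [@Wachenfeld2016].

```python
import math


def required_failure_free_km(target_rate_per_km: float, confidence: float) -> float:
    """Distance that must be driven without a critical event to claim, with the
    given confidence, that the true event rate is below target_rate_per_km.

    Assumes a Poisson process: the probability of observing zero events over
    a distance d at rate r is exp(-r * d).
    """
    if target_rate_per_km <= 0:
        raise ValueError("target_rate_per_km must be positive")
    if not 0 < confidence < 1:
        raise ValueError("confidence must lie strictly between 0 and 1")
    # Solve exp(-r * d) <= 1 - confidence for d.
    return -math.log(1.0 - confidence) / target_rate_per_km


# Illustrative assumption: one critical event per 100 million kilometers.
ILLUSTRATIVE_RATE_PER_KM = 1e-8
required_km = required_failure_free_km(ILLUSTRATIVE_RATE_PER_KM, confidence=0.95)
print(f"{required_km:.3e} km without a critical event")
```

Under these assumptions, roughly 300 million event-free kilometers would be needed, and every software update would restart the count, which illustrates why distance-based testing alone does not scale.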

The latter abstracts actual or potential traffic into formalized scenario descriptions that can be tested systematically. Expert scenario approaches are handcrafted or systematically derived [@Weber23]. Additionally, scenario databases can be accumulated from real-world driving datasets [@Li23]. The scenario.center [@Ika24] combines both approaches and uses real trajectory data to analyze the occurrence and concrete expressions of a comprehensive set of scenarios. Subsequently, concrete scenarios are stored in scenario databases using standards such as OpenSCENARIO [@Asam24] or Scenic [@Fremont18].

A variety of test benches can be used to conduct scenario-based tests, including simulations, hardware-in-the-loop, proving ground, or field operational tests [@Steimle22]. Simulative testing offers a cost-effective and thus efficient method as it replicates the environment, drivers, vehicles, or sensors in a detailed manner [@Fremont20]. This ensures that tests are conducted in a controlled and safe environment without creating an actual hazard. In addition, simulation tests are renowned for their reproducibility and the ability to run a large number of tests in parallel [@Dona22].

2.3 Simulation Frameworks

Various simulation frameworks exist for conducting simulated tests, each focusing on certain aspects and different levels of detail [@Stepanyants23]. A widely used simulation framework is the CARLA simulator [@Dosovitskiy17], which features highly accurate graphics, realistic sensor modeling, and multiple standardized interfaces. Additionally, CARLA is open-source, actively developed, and supported by a large community. The CARLA ecosystem includes multiple additional components for executing scenarios or bridging to the widely used Robot Operating System (ROS) [@Macenski22]. It also supports harmonized standards, e.g., from the ASAM OpenX ecosystem [@Asam24]. Other simulation frameworks aiming at high simulation fidelity are Virtual Test Drive and CarMaker, the latter with a focus on vehicle dynamics. In addition, a powerful testing tool suite, VIRTO, exists to enable continuous simulative testing with CarMaker on a large scale in cloud environments. On the other hand, frameworks like OpenPASS [@Dobberstein2017], esmini, or CommonRoad [@Althoff17] focus on lightweight scenario execution in line with harmonized interfaces. This mainly increases the applicability for testing entire scenario databases in a continuous safety assurance process.

Many of the simulation frameworks mentioned above are limited in their capabilities or not open-source. In addition, most of them primarily offer a fundamental simulation core but not necessarily an entire architecture to perform effective simulations during development and testing. However, there are extended and more advanced frameworks enabling comprehensive testing processes. As an example, automatic adversarial testing approaches aim to identify potential failures systematically within simulations [@Ramakrishna22][@Tuncali18]. In order to cover a variety of simulation use cases apart from testing, a flexible and modular architecture is crucial, consisting of several powerful, interacting components [@Saigol18]. However, the existing modular simulation architectures cover only specific use cases and cannot be flexibly expanded or scaled, which makes it necessary to conceptualize a novel, more generic simulation architecture for the continuous development and testing process in automated driving.

3 ANALYSIS

The following examples of various simulation use cases motivate the necessity of a novel and generic simulation architecture. In addition, we derive explicit core design principles for the envisaged architecture to overcome existing shortcomings within other architectures.

3.1 Use Case Analysis

In automated driving, simulations have a wide range of use cases, covering early development phases as well as testing procedures. Hence, we describe and analyze three fundamental use cases, namely initial software prototyping, comprehensive data-driven development, and subsequent automated testing, visualized in Fig. 2.

Figure 2: Exemplary use cases within the software development process motivating the usage of a generic and modular simulation architecture. The number of scenarios and the level of abstraction vary across the different use cases.

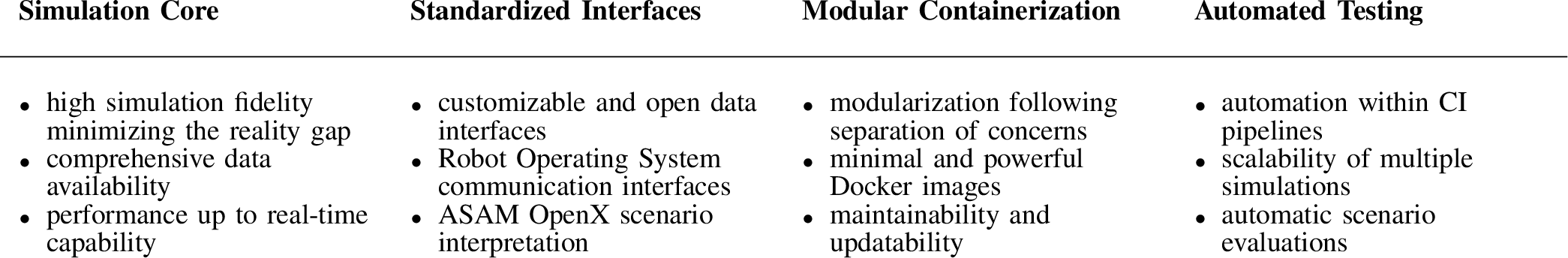

Figure 3: Derived requirements for a generic simulation architecture covering the selected use cases in development and testing of automated vehicles.

3.1.1 Software Prototyping

The first use case addresses the initial development phase of an automated driving function, where developers aim for simple and quick prototyping. It focuses on the flawless integrability of the component under test and ensures general operability within the overall system. An early integration facilitates interface harmonization, which is particularly important when assembling modules from different entities. In many cases, a single example scenario is sufficient to carry out initial tests. However, high simulation fidelity is required in many cases, which implies realistic simulation conditions, ranging from realistic environments to accurate sensor, vehicle, or driver models.

Some simple examples in initial development phases are open-loop tests of perception modules that check general functionality, or sensor placement experiments that produce a variety of possible sensor setups. Additionally, closed-loop tests can be performed with more advanced software stacks to verify interface implementations. Using multiple vehicle instances enables the development of cooperative functionalities. Thus, a variety of simulation possibilities exist even in early development, many of which demand comprehensive input data and harmonized interfaces. In addition, a simple integration of and flexible interaction with novel functionalities is crucial.

3.1.2 Data-Driven Development

The second use case covers development processes using large amounts of data, which are not effectively obtainable in real-world settings, thus motivating simulations. For many applications, simulative data can be sufficiently accurate to be integrated into the data-driven development process [@Bewley19]. This includes training data for machine learning algorithms but also closed-loop reinforcement learning. Data of interest includes raw sensor data as well as dynamic vehicle data, both in wide variety and at large scale. Simulations additionally enable data generation beyond the physical limits of vehicle dynamics or sensor configurations. To accumulate large amounts of data, relevant simulation parameters can be automatically sampled along different dimensions. Subsequently, automation and parallelization enable a cost-effective execution of multiple simulations, especially when using already established orchestration tools.
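Sampling parameters along several dimensions and executing the resulting runs concurrently can be sketched as follows. This is a minimal illustration only: the parameter names and ranges are hypothetical, and `run_simulation` is a placeholder for whatever mechanism actually launches a simulation (for example, starting a set of containers).

```python
import random
from concurrent.futures import ThreadPoolExecutor

# Hypothetical sampling dimensions with (low, high) bounds.
PARAMETER_RANGES = {
    "lidar_height_m": (1.5, 2.5),
    "rain_intensity": (0.0, 1.0),
    "ego_speed_mps": (5.0, 30.0),
}


def sample_configurations(count, seed=0):
    """Draw `count` configurations uniformly at random; seeded for reproducibility."""
    rng = random.Random(seed)
    return [
        {name: rng.uniform(low, high) for name, (low, high) in PARAMETER_RANGES.items()}
        for _ in range(count)
    ]


def run_simulation(config):
    """Placeholder for one simulation run; returns a summary record."""
    # A real implementation would start the simulator and record its output here.
    return {"config": config, "status": "finished"}


def run_all(configs, max_workers=4):
    """Execute all runs concurrently and return results in input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(run_simulation, configs))


configs = sample_configurations(8)
results = run_all(configs)
print(f"{len(results)} runs completed")
```

In practice, the thread pool would be replaced by an orchestration tool that distributes runs across hosts; the structure of sampling first and dispatching afterwards stays the same.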

3.1.3 Automated Testing

In the third use case, simulation is considered to systematically evaluate a large number of defined tests, potentially within the safety assurance process. A specific test configuration may encompass both a concrete scenario and well-defined test metrics for evaluation. Thus, a direct interface to a standardized scenario database is favorable, and custom pass-fail criteria need to be configurable to deduce objective test results. Scalability drastically improves efficiency when simulating multiple test configurations. Moreover, embedding the simulation architecture in a CI process further accelerates the entire safety assurance.
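A test configuration that pairs a concrete scenario with configurable pass-fail criteria might look like the following sketch. All class names, metric names, thresholds, and the scenario file name are hypothetical and chosen for illustration only.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Criterion:
    name: str                        # label of the check
    metric: str                      # key of the measured value to evaluate
    passes: Callable[[float], bool]  # predicate deciding pass or fail


@dataclass
class SimulationTestConfig:
    scenario_file: str               # path to a concrete scenario definition
    criteria: List[Criterion]


def evaluate(config: SimulationTestConfig, measured: Dict[str, float]) -> Dict[str, bool]:
    """Return one verdict per criterion; a missing metric counts as a failure."""
    verdicts = {}
    for criterion in config.criteria:
        value = measured.get(criterion.metric)
        verdicts[criterion.name] = value is not None and criterion.passes(value)
    return verdicts


config = SimulationTestConfig(
    scenario_file="example.xosc",  # hypothetical file name
    criteria=[
        Criterion("no_collision", "collision_count", lambda v: v == 0),
        Criterion("keeps_distance", "min_distance_m", lambda v: v >= 2.0),
    ],
)
measured = {"collision_count": 0, "min_distance_m": 1.4}
verdicts = evaluate(config, measured)
test_passed = all(verdicts.values())
print(verdicts, "PASS" if test_passed else "FAIL")
```

Keeping the criteria as data rather than hard-coded checks means the same evaluation routine can be reused for every scenario in a database, which is what makes the result usable as a CI gate.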

Typically, only a few rough scenarios are considered during initial prototyping, but the number of scenarios and their level of detail increase when it comes to automated test processes. This corresponds to the levels of abstraction established for scenarios within the PEGASUS [@DLR24] project, which can be mapped to the use cases in a similar manner. Fig. 2 illustrates how the number of scenarios and the level of detail vary across the use cases. Additionally, the use cases can be assigned to different temporal stages within the SDLC.

3.2 Requirement Analysis

Following the described use cases, specific requirements are derived, categorized, and incorporated into the conceptual design of the simulation architecture as well as for subsequent evaluations. Fig. 3 gives a brief overview of the gathered requirements.

Essential conditions are imposed on the central simulation core since it needs to model reality with optimal performance and high accuracy. This implies accurate data generation and direct provision in every simulation timestep. The usage of standardized interfaces can be simplified using the ROS ecosystem for data processing. In addition, compatibility with harmonized ASAM OpenX interfaces is a fundamental requirement, especially when evaluating scenario-based tests in simulations. Beyond that, a flexible and modular containerization is a key pillar of a generic simulation architecture and is in line with the trends towards SOAs and microservices, increasing both maintainability and the updateability of the system. Furthermore, containerization allows for orchestration, which not only improves automated testing but also enables efficient scaling to concurrent simulations, possibly even distributed across different hosts. Further, the implementation of automated evaluation procedures accelerates the overall simulation process of automated driving functions at large scale.

4 ARCHITECTURE

The analysis in Sec. 3 showed that the increased focus on particular types of use cases necessarily leads to specific requirements imposed upon the simulation architecture, which are summarized in Fig. 3. Features like modularity, scalability, and customizability are notably absent in many traditional C-ITS simulation frameworks [@Kirchhof19]. This necessitates an architecture that enriches a simulator core with said features to properly cover the use cases in focus. In this section, we propose and describe such an architecture that meets the aforementioned requirements and is widely applicable due to its level of abstraction and, thus, high flexibility.

Our architecture consists of several layers that encapsulate different components with aligned purposes. An overview of that architecture is shown in Fig. 1.

The main layers are the following:

Simulation layer - simulator combined with additional interfaces and capabilities;

Storage layer - persistent data used for or generated by the simulation layer;

Application layer - software/users interacting with the simulation layer to achieve certain goals.

Furthermore, the simulation layer is composed of sub-layers, namely the simulator layer housing the core that performs the simulation work, the interface layer containing protocols for interaction with the simulator, and the control layer, which includes a set of actors that extend the functionalities of the core. Letting each instance of the simulation have its own set of components inside these sub-layers improves customizability and flexibility, as it allows users to tailor it to their concrete use cases. By following a microservice approach and separating the simulation layer into different components, we increase modularity and thus gain the benefits discussed earlier, such as better maintainability. This is especially true if said components are containerized, which opens up the architecture to common orchestration tools and vastly simplifies a distributed simulation. The architecture captures this by including an orchestration layer with an orchestrator that dynamically manages all other layers.
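The layering can be illustrated with a small data model. This is only a conceptual sketch: the class names, component names, and container image references are hypothetical placeholders and do not reflect the actual implementation.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Component:
    name: str
    image: str  # container image reference (placeholder values below)


@dataclass
class SimulationInstance:
    simulator: Component                                           # simulator layer
    interfaces: List[str]                                          # interface layer
    control_actors: List[Component] = field(default_factory=list)  # control layer

    def containers(self) -> List[Component]:
        """All containerized components belonging to this instance."""
        return [self.simulator, *self.control_actors]


@dataclass
class Orchestrator:
    instances: List[SimulationInstance] = field(default_factory=list)

    def scale(self, factory: Callable[[int], SimulationInstance], count: int) -> None:
        """Replace the managed instances with `count` freshly built ones."""
        self.instances = [factory(index) for index in range(count)]

    def all_containers(self) -> List[Component]:
        return [c for instance in self.instances for c in instance.containers()]


def make_instance(index: int) -> SimulationInstance:
    # Every instance gets its own simulator and its own set of control actors.
    return SimulationInstance(
        simulator=Component(f"simulator-{index}", "example/simulator:latest"),
        interfaces=["rpc", "ros2"],
        control_actors=[
            Component(f"bridge-{index}", "example/bridge:latest"),
            Component(f"scenario-runner-{index}", "example/scenario-runner:latest"),
        ],
    )


orchestrator = Orchestrator()
orchestrator.scale(make_instance, count=3)
print([c.name for c in orchestrator.all_containers()])
```

Because each instance owns its components, scaling to several concurrent simulations amounts to building more instances from the same template, which is the property the orchestration layer relies on.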

4.1 Components

After establishing this abstract architecture, we now introduce CARLOS, a framework that implements this architecture to enhance CARLA as a simulator core and that has proven itself during extensive usage in our research and work. We chose CARLA as the core of our simulation framework due to the benefits described in Sec. 2, like its high maturity and simulation fidelity, as well as its rich ecosystem with additional components. Some of these components are crucial to our framework, thus a brief overview of them and our additional changes follows. All code modifications and prebuilt Docker images of the components are made publicly available on GitHub and Docker Hub.

1) Simulation Core: The CARLA server constitutes the central element of the framework and handles all graphical and dynamic calculations in the individual simulation time steps. Within our GitHub repository, we extend the pre-existing Dockerfiles to create enhanced Ubuntu-based container images of CARLA via novel CI pipelines.

2) Communication Actor: The ROS bridge is the component that facilitates the powerful combination of CARLA and ROS. It retrieves data from the simulation to publish it on ROS topics while simultaneously listening on different topics for requested actions, which are translated to commands to be executed in CARLA. This is realized by utilizing both RPC via the CARLA Python API, as well as the ROS middleware, in tandem.

Since CARLOS focuses on ROS 2 (from now on also referred to as ROS), DDS is the middleware used here. Additionally, we provide modernized container images through our CI, where docker-ros [@Busch23] enables a continual building of said images with recent versions of ROS, Python, and Ubuntu.

3) Control Actor: To enable scenario-based testing and evaluation, the Scenario Runner is used. It is a powerful engine that follows the OpenSCENARIO standard for scenario definitions. An additional ROS service allows other ROS nodes to dynamically trigger the execution of a specified scenario using the mentioned Scenario Runner. For the creation of more modern and lightweight container images, a custom Dockerfile is published alongside this paper.

4.2 Data Generation

In Sec. 3, we described data generation for data-driven development as one fundamental use case of simulations. While most simulators offer extensive configuration options, they often lack a methodology for systematically conducting a series of simulations with varying configurations to generate large datasets. This is particularly necessary for generating perception or driving data through simulation. Consequently, we have developed a data generation pipeline for CARLOS to address this need. The objective is to efficiently and easily simulate all selected permutations of parameters across a wide parameter space and store the resulting data. The framework is designed to be as flexible and scalable as possible in executing these simulations.

Our pipeline code is written in Python and uses ROS for the communication with the simulation core. This enables straightforward modifications of the pipeline and its adaptation to other simulation cores. Configuring the pipeline requires only a basic JSON file. The settings in this file are divided into two parts: general simulation settings and the parameter space for generating all permutations with which the simulations are conducted.

The general settings make it possible, among numerous other options, to specify the length of a simulation run, to determine which services to start, and to select which ROS topics to record. The pipeline also allows the execution of simulation-independent services if needed, such as converting ROS data into other formats.

The second part of the configuration file defines the parameter space from which all permutations are generated. This space is freely expandable and is only limited by the capabilities of the simulation core. Parameters might include sensor positioning, maps, scenarios, and more. It is also possible to provide concrete OpenSCENARIO definitions as a list of scenario files.
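How a two-part configuration of this kind expands into individual simulation runs can be sketched as follows. The JSON keys and values are hypothetical and do not reproduce the actual CARLOS configuration schema; the sketch interprets "all permutations" as every combination of the listed values, that is, their Cartesian product.

```python
import itertools
import json

# Hypothetical configuration with general settings and a parameter space.
CONFIG_JSON = """
{
  "settings": {
    "max_simulation_time_s": 60,
    "record_topics": ["/example/lidar"]
  },
  "parameter_space": {
    "map": ["Town01", "Town10HD"],
    "sensor_height_m": [1.6, 2.0],
    "scenario_file": ["a.xosc", "b.xosc", "c.xosc"]
  }
}
"""


def expand_parameter_space(space):
    """Return one dict per combination of the listed parameter values."""
    names = list(space)
    value_lists = [space[name] for name in names]
    return [dict(zip(names, values)) for values in itertools.product(*value_lists)]


config = json.loads(CONFIG_JSON)
runs = expand_parameter_space(config["parameter_space"])

# 2 maps x 2 sensor heights x 3 scenarios = 12 runs
print(len(runs))
for run in runs[:2]:
    # Each run combines the shared settings with its own parameter values.
    print({**config["settings"], **run})
```

Adding a further dimension to `parameter_space` multiplies the number of runs, so the size of the resulting dataset should be checked before a large sweep is started.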

A complete list of configuration options and the implementation itself can be found in the CARLOS GitHub repository.

5 EVALUATION

Our simulation architecture is designed based on fundamental requirements derived in Sec. 3. These requirements are now incorporated to evaluate the architecture in a structured manner. Subsequently, we evaluate a specific implementation of the architecture in an exemplary use case.

5.1 Architecture Evaluation

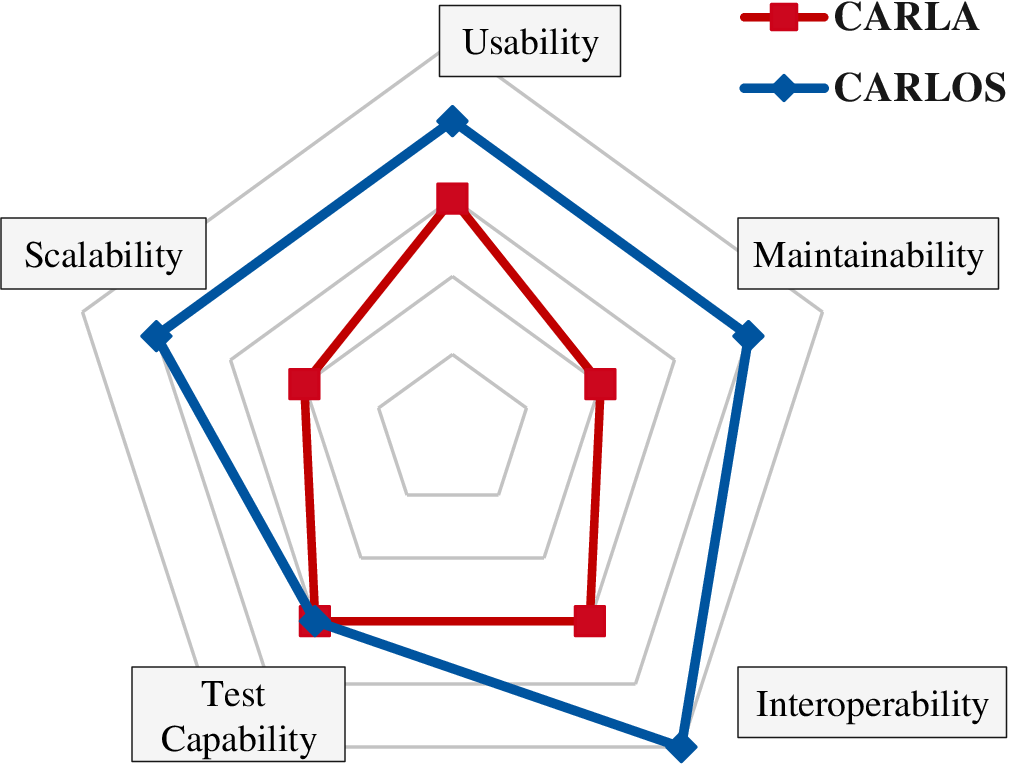

Figure 4: Qualitative evaluation of multiple capabilities by comparing our proposed simulation framework CARLOS with the native CARLA ecosystem. The detailed assessment is based on pre-defined metrics and in-house user assessments.

A number of derived requirements, in particular the simulation realism or real-time capability, depend on the simulation core itself. However, our general architectural design allows the core to be flexible and exchangeable, which is why the architecture itself is evaluated using only simulation core-independent evaluation metrics. Thus, we evaluate our open-source simulation framework CARLOS against essential capabilities and provide a qualitative comparison against the native CARLA ecosystem.

\(\bullet\) Usability describes the simplicity of interacting with a simulation architecture to achieve goals effectively and satisfactorily. Well-documented instructions, meaningful examples, and a large community generally enhance usability. All enrichments provided within the CARLA ecosystem remain available within our framework. Additionally, we offer well-documented demonstrations for all described use cases, assisting in the first steps when using the containerized framework and generally improving usability.

\(\bullet\) Maintainability refers to the ability to customize the architecture and exchange or update individual components independently. Thus, a modular and containerized structure is essential. Besides, open-source software bypasses potential shortcomings posed by license regulations. Thanks to modular containerization, our open framework offers an ideal basis for continual updates of an individual module. Additionally, all Docker images are built within GitHub CI pipelines, which enables increased maintainability compared to the native CARLA system.

\(\bullet\) Interoperability defines the capability to bind custom functions to the existing architecture. This includes support for standardized and custom interfaces but also a containerized integration. CARLA already offers a number of valuable interfaces, such as ROS or OpenX, which simplify the integration of custom functions. Nevertheless, the microservice architecture of CARLOS facilitates the integration of software functions even more by using existing container orchestration tools like Docker Compose and enabling the use of more sophisticated tools like Kubernetes. Thus, complex software systems composed of many different containers can be easily integrated into our proposed simulation framework.

\(\bullet\) Scalability and automation are further key challenges during development and testing. Compared to the native CARLA ecosystem and accelerated by the nature of a SOA, CARLOS focuses on the automation of simulations using common container orchestration tools and CI pipeline integrations. As an additional example, multiple simulations are conducted sequentially within the data generation pipeline. Parallel and distributed simulations become feasible when using powerful orchestration tools. Thus, the novel framework CARLOS significantly increases scalability.

\(\bullet\) Test Capability is a decisive factor in the automatic evaluation process of simulations within safety assurance. All powerful existing CARLA evaluation methods and metrics can still be used in our containerized framework design. In addition, custom evaluation modules can be integrated as novel components, depending on the corresponding simulation use case.

Fig. 4 visualizes the comparison of our new proposed architecture and the baseline CARLA ecosystem. It shows that CARLOS can substantially enhance maintainability, interoperability, and scalability, which is crucial in agile development and continuous testing processes.

5.2 Use Case Evaluation

We can demonstrate the core capabilities of CARLOS most effectively based on an exemplary use case. Specifically, we aim to evaluate a novel, containerized C-ITS perception module within a concrete simulation scenario. In detail, the module involves a sensor data processing algorithm that takes lidar point clouds as input to detect 3D objects. Thus, we aim to integrate such a point cloud object detection component within the simulation framework and evaluate the component’s performance by comparing the resulting object data with the simulated objects. For simplicity, the component is implemented within the ROS ecosystem, enabling a straightforward integration via harmonized data interfaces.

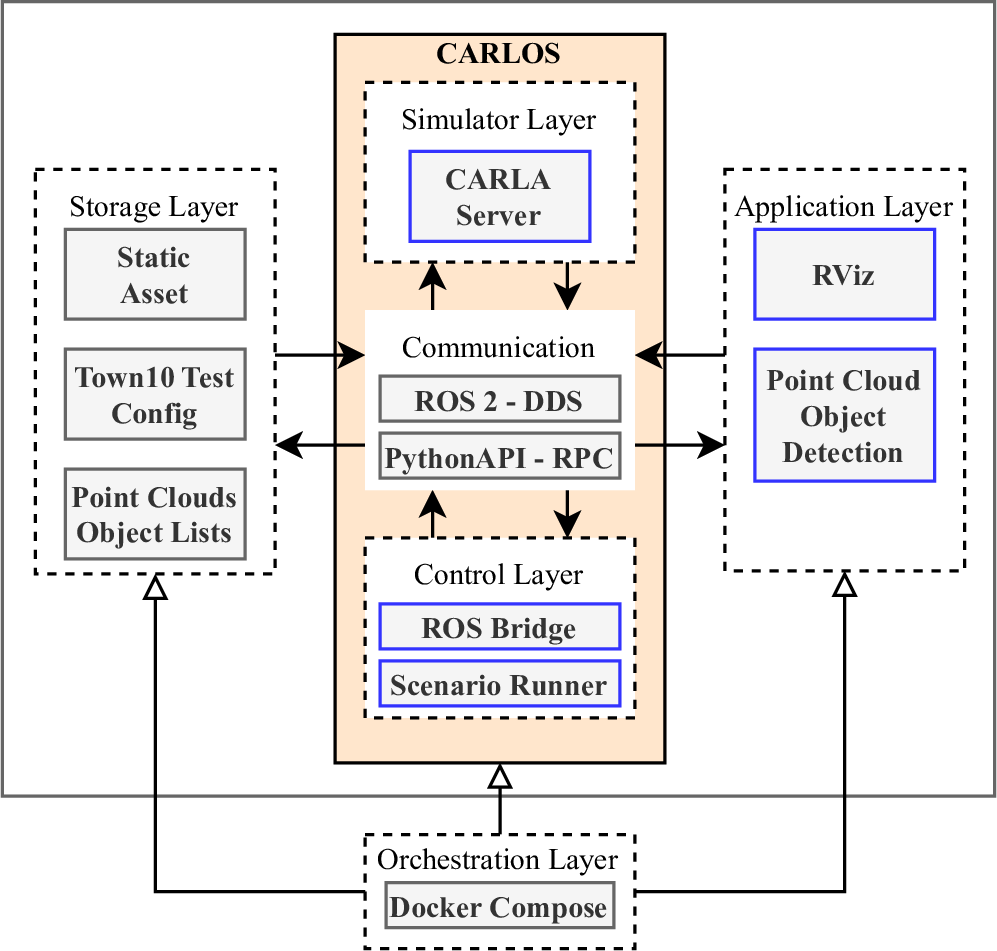

Figure 5: Specialized simulation framework CARLOS, focusing on the integration of a point cloud object detection module. Docker containers are indicated by blue frames.

For this specific use case, the framework comprises a CARLA server, a ROS bridge facilitating the transfer into the ROS ecosystem, and a Scenario Runner to control the scenario. Visualization and user interaction are achieved through the ROS tool RViz. All mentioned simulation components, including the novel point cloud object detection, run in dedicated Docker containers orchestrated through Docker Compose. The detailed architecture, including data connections, is depicted in Fig. 5.

Specifically, the test configuration encompasses a concrete OpenSCENARIO file but also general settings that define the simulation environment and sensor configuration. Thus, the two specific control layer components can interact with the CARLA server to simulate 3D lidar point cloud sensor data. These point clouds are transferred into the ROS ecosystem and subscribed by the point cloud object detection module. Resulting object detections can be visualized within RViz and stored alongside the generated raw sensor data. However, a quantitative performance assessment could be facilitated by additional components, resulting in meaningful evaluation metrics for the C-ITS module under test.
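Such an additional evaluation component is not part of the setup described here. As a minimal sketch of what it might compute, the following code matches detected object centers to simulated ground-truth centers by Euclidean distance and derives precision and recall. The greedy matching strategy, the distance threshold, and the example coordinates are assumptions made for illustration.

```python
import math


def match_detections(detections, ground_truth, max_distance_m=2.0):
    """Greedily match each detection to the nearest unmatched ground-truth object.

    Both inputs are lists of (x, y, z) object centers in a common frame.
    """
    unmatched = list(range(len(ground_truth)))
    true_positives = 0
    for detection in detections:
        best_index, best_distance = None, max_distance_m
        for index in unmatched:
            distance = math.dist(detection, ground_truth[index])
            if distance <= best_distance:
                best_index, best_distance = index, distance
        if best_index is not None:
            unmatched.remove(best_index)  # each ground-truth object matches once
            true_positives += 1
    return {
        "true_positives": true_positives,
        "false_positives": len(detections) - true_positives,
        "false_negatives": len(unmatched),
        "precision": true_positives / len(detections) if detections else 0.0,
        "recall": true_positives / len(ground_truth) if ground_truth else 0.0,
    }


# Hypothetical object centers in meters.
simulated_objects = [(10.0, 0.0, 0.0), (20.0, 3.0, 0.0), (35.0, -2.0, 0.0)]
detected_objects = [(10.3, 0.2, 0.0), (19.5, 3.1, 0.1), (50.0, 0.0, 0.0)]

metrics = match_detections(detected_objects, simulated_objects)
print(metrics)
```

A complete evaluation would typically also compare object dimensions, orientation, and class, and would aggregate the metrics over all frames of a scenario.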

Integrating such a custom, containerized module into the open simulation framework requires little effort and only minimal modifications to the dedicated Docker Compose setup, yet it directly demonstrates general interoperability and valid interfaces with a single demo scenario. Subsequently, a visualization of the results, as in Fig. 6, enables a qualitative evaluation of the tested point cloud object detection component.

6 CONCLUSION & OUTLOOK

In this paper, we have shown the benefits of an open and modular simulation framework consisting of multiple containerized building blocks which are arranged in a microservice architecture. We demonstrated the importance of simulations and scenario-based testing in all stages of the development of C-ITS software. We motivated software prototyping, data-driven development, and automated testing as essential use cases of simulations, from which we derived requirements for a generic simulation architecture. We propose usability, maintainability, interoperability, test capability, and scalability as the most essential characteristics. The result is our proposed architecture, which is implemented in the provided open-source framework CARLOS. It leverages the rich CARLA ecosystem to cover each presented use case. The framework was subsequently evaluated against the native CARLA simulator, highlighting its benefits, especially regarding scalability and maintainability.

By providing CARLOS as an open-source, easy-to-extend framework, we aim to provide utility to the ROS and CARLA communities, inviting others to contribute to this new framework. We designed CARLOS to be an open foundation for further exploration and development, especially regarding its potential for the orchestration of distributed large-scale simulations.

Figure 6: Visualization of simulated objects and sensor data, but also the detection results of the integrated point cloud object detection within RViz.

*This research is accomplished within the project “AUTOtech.agil” (FKZ 01IS22088A). We acknowledge the financial support for the project by the Federal Ministry of Education and Research of Germany (BMBF).

All authors are with the research area Vehicle Intelligence & Automated Driving, Institute for Automotive Engineering, RWTH Aachen University, 52074 Aachen, Germany

{firstname.lastname}@ika.rwth-aachen.de