M2SA: Multimodal and Multilingual Model for Sentiment Analysis of Tweets

April 02, 2024

1 Introduction

Social media platforms serve as conduits for the dissemination of information. Tweets have emerged as a popular medium through which individuals communicate and express their ideas and opinions. Twitter (now X) is a prominent micro-blogging platform and is widely used by researchers as a source of data. Sentiment analysis [1] is a well-studied topic in natural language processing (NLP) and has been addressed in both unimodal and multimodal settings. With the proliferation of social media platforms such as Twitter and YouTube, it has become common practice to analyse content across several modalities [2], [3], which provide additional context through spoken, non-verbal, and auditory cues. Much NLP research focuses on higher-resourced languages, and processing lower-resourced languages remains an open challenge.

Annotating supervised datasets for NLP tasks is labour-intensive and requires a significant investment of time, money, and effort. Recently, several shared tasks, including SemEval [4], [5], have introduced tasks on identifying the polarity of tweets, i.e., categorising them into predetermined classes. All datasets released for these shared tasks come with gold-standard labels. However, previous approaches [6]–[8] focused on text only, whereas posts shared on social media often include images, videos, etc. Approaches that incorporate multimodal information [9]–[11] for sentiment classification have predominantly focused on English.

This paper presents M2SA (Multimodal Multilingual Sentiment Analysis), a straightforward approach for enriching existing, publicly accessible Twitter datasets so that they can be used for multimodal (image and text) sentiment analysis. We collected existing datasets in 21 languages and enriched them so that each annotated post includes both text and an image, labelled as positive, negative, or neutral. We then trained a multimodal model that combines image and text embeddings to classify these labels.

Our contributions are as follows:

We curate, enrich, and analyse existing Twitter sentiment datasets in 21 languages.

We train model architectures that fuse textual and visual features, using pre-trained multilingual language models as text encoders and pre-trained vision models as image encoders.

We examine the effect of using machine-translated instances for lower-resourced languages.

All resources (pre-trained models, datasets) and the source code are shared publicly1. The subsequent sections of the paper are structured in the following manner: Section 2 provides an overview of the existing literature and research in the field. Section 3 provides a comprehensive overview of the processes involved in data collection, enrichment, and the statistical characteristics of the dataset. The methodology for classification is outlined in Section 4. The experimental setup and results are outlined in Sections 5 and 6. The paper concludes in Section 7.

2 Related Work

[9] presented a framework that uses CNNs to extract features from the visual and textual modalities of multimodal data. Visual features are extracted with a pre-trained CNN such as VGG16 or ResNet-50, and textual features with a CNN trained on a large text corpus. The extracted visual and textual features are combined and fed into a multiple kernel learning (MKL) classifier, which learns the optimal combination of kernels for distinguishing between emotions or sentiments. [11] used both feature-level and decision-level fusion to merge affective information extracted from multiple modalities. [10] evaluated various embedding features from both textual and visual content. [12] proposed a framework for multimodal sentiment analysis in realistic environments with two main components: a multimodal word refinement module and a cross-modal hierarchical fusion module. [13] used a skip-gram neural network to extract textual features and a denoising autoencoder [14] to extract image features. The autoencoder is trained to reconstruct an image from a corrupted version of it. The text and image features are then concatenated and fed to an SVM classifier.

Beyond modelling strategies for sentiment analysis, it is essential to identify the available benchmark datasets. A substantial number of multimodal datasets for sentiment and emotion analysis exist for English [15], [16]. TweetEval [17] evaluates language models on seven Twitter tasks, including emotion, emoji, irony, and sentiment, but its test sets are monolingual. [18] provides a comprehensive overview of multimodal datasets, including those for multimodal sentiment analysis.

3 Multimodal Multilingual Sentiment Analysis (M2SA)

Figure 1: The dataset’s distribution across different languages.

Our investigation found numerous datasets for both unimodal and multimodal sentiment analysis. However, unimodal datasets, particularly those derived from Twitter, have rarely been converted to a multimodal format. Our underlying hypothesis is that a gold-annotated unimodal Twitter dataset can be linked to the images attached to its tweets, which have not previously been examined or used for multimodal sentiment classification. We call the resulting resource M2SA (Multimodal Multilingual Sentiment Analysis).

3.1 Data Collection

The enrichment process begins with a manual search for existing Twitter sentiment datasets. We do not include datasets from other social media platforms, so that all data come from a single source and can be processed uniformly. The search uses search engines and data repositories such as HuggingFace Datasets2, European Language Grid3, and GitHub4, with keywords such as twitter sentiment analysis dataset, social media sentiment analysis dataset, and twitter sentiment shared tasks. Next, we query the tweet information for each dataset in the compiled list using the Twitter API and store the text and images associated with each tweet in JSON format. We then check the collected datasets manually to exclude tasks unrelated to sentiment analysis. Lastly, for datasets that did not already use the three sentiment categories positive, negative, and neutral, we converted the labels from a five-class to a three-class format. The preliminary search yielded approximately 100 datasets in multiple languages. However, the final version of our dataset consists of 56 distinct datasets covering 21 languages. This reduction was primarily due to most datasets not providing the tweet IDs linked to the text. Table 1 gives an overview of the languages and their respective datasets.
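The section does not state how the five-point labels were collapsed. The sketch below assumes the common convention that the two negative grades map to negative and the two positive grades map to positive. The label strings in `FIVE_TO_THREE` are hypothetical placeholders, because each source dataset uses its own names.

```python
# Hypothetical five-point label names. Each real source dataset would need
# its own mapping table keyed by the label strings it actually uses.
FIVE_TO_THREE = {
    "very_negative": "NEGATIVE",
    "negative": "NEGATIVE",
    "neutral": "NEUTRAL",
    "positive": "POSITIVE",
    "very_positive": "POSITIVE",
}


def to_three_class(label: str) -> str:
    """Collapse a five-point sentiment label into POSITIVE/NEGATIVE/NEUTRAL."""
    key = label.strip().lower()
    if key not in FIVE_TO_THREE:
        raise ValueError(f"unknown label: {label!r}")
    return FIVE_TO_THREE[key]


print([to_three_class(l) for l in ["very_positive", "neutral", "Negative"]])
# ['POSITIVE', 'NEUTRAL', 'NEGATIVE']
```

Raising an error on unknown labels is deliberate. It makes an unmapped label from a new dataset fail loudly instead of being silently dropped.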

3.2 Preprocessing

Preprocessing social media texts is imperative due to their inherent informality and noise. The preprocessing steps are delineated as follows:

Removal of all black and white images.

Normalisation of user mentions, URLs, and hashtags, e.g., @ElonMusk → <user_1>…, URLs → <URL_1>, and #tweet → <hashtag>tweet<hashtag/>.

Removal of tweets whose text is shorter than five characters, not counting USER and URL tags.

Deduplication is performed using tweet IDs.

Checking if the same tweet ID has more than one label assigned and employing a majority vote when needed.

Removal of tweets with corrupted or missing images, or with images smaller than 200 × 200 pixels.

Checking that the language tag in the tweet JSON matches the target language.

Translation of English tweets for lower-resourced languages using the NLLB5 machine translation (MT) model.
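The text-side steps above (normalisation, the five-character filter, and deduplication with majority-vote label resolution) can be sketched with the standard library alone. This is an illustrative sketch, not the released M2SA code. The regular expressions, the per-tweet numbering of tags, and the decision to drop tweets whose labels tie are our assumptions. The tag format follows the examples in the list.

```python
import re
from collections import Counter

URL_RE = re.compile(r"https?://\S+")
MENTION_RE = re.compile(r"@\w+")
HASHTAG_RE = re.compile(r"#(\w+)")
TAG_RE = re.compile(r"<user_\d+>|<URL_\d+>")


def normalise_tweet(text: str) -> str:
    """Replace URLs and mentions with numbered tags and wrap hashtags."""
    urls: dict = {}
    users: dict = {}
    # URLs go first so that '#' or '@' inside a link is not rewritten.
    text = URL_RE.sub(
        lambda m: urls.setdefault(m.group(0), f"<URL_{len(urls) + 1}>"), text)
    # A repeated mention of the same user reuses its tag.
    text = MENTION_RE.sub(
        lambda m: users.setdefault(m.group(0), f"<user_{len(users) + 1}>"), text)
    return HASHTAG_RE.sub(r"<hashtag>\1<hashtag/>", text)


def is_too_short(normalised: str, min_chars: int = 5) -> bool:
    """True if fewer than min_chars characters remain once user/URL tags are removed."""
    return len(TAG_RE.sub("", normalised).strip()) < min_chars


def resolve_labels(records):
    """Deduplicate (tweet_id, label) pairs, keeping the majority label per ID."""
    votes: dict = {}
    for tweet_id, label in records:
        votes.setdefault(tweet_id, Counter())[label] += 1
    resolved = {}
    for tweet_id, counter in votes.items():
        (label, count), *rest = counter.most_common()
        if rest and rest[0][1] == count:
            continue  # tie, so there is no majority: drop the tweet (assumption)
        resolved[tweet_id] = label
    return resolved


print(normalise_tweet("Great news @ElonMusk and @ElonMusk! #tweet https://t.co/abc"))
# Great news <user_1> and <user_1>! <hashtag>tweet<hashtag/> <URL_1>
```

The image-side filters (black-and-white images, corrupted files, the 200 × 200 minimum) would need an imaging library and are left out of this sketch.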

The complete preprocessed dataset is structured according to a schema that can be described as follows:

tweetid: unique identifier for the tweet.

normalised-text: text obtained after applying preprocessing steps.

language: the language of the text.

translated-text: text in the target language obtained using the NLLB model.

image-paths: list of images associated with the tweet.

label: POSITIVE|NEGATIVE|NEUTRAL
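One way to represent a record of this schema in Python is a small dataclass that serialises to the hyphenated field names listed above. The types are assumptions for illustration only, not a description of the released files: tweet IDs are stored as strings, `translated-text` is optional, and image paths are relative file paths.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Optional

LABELS = {"POSITIVE", "NEGATIVE", "NEUTRAL"}


@dataclass
class M2SARecord:
    tweetid: str
    normalised_text: str
    language: str
    label: str
    translated_text: Optional[str] = None  # assumed empty for original tweets
    image_paths: List[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        if self.label not in LABELS:
            raise ValueError(f"invalid label: {self.label!r}")

    def to_json(self) -> str:
        # Map Python attribute names back to the hyphenated schema keys.
        data = {k.replace("_", "-"): v for k, v in asdict(self).items()}
        return json.dumps(data, ensure_ascii=False)


rec = M2SARecord("123", "hello <user_1>", "en", "POSITIVE",
                 image_paths=["images/123_0.jpg"])  # hypothetical path
print(rec.to_json())
```

`ensure_ascii=False` keeps non-Latin scripts (for example Arabic or Chinese tweets) readable in the stored JSON.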

3.3 Dataset

Table 1: Source datasets collected for each language.

| Lang | Dataset name |
|---|---|
| ar | SemEval-2017 |
| ar | TM-Senti@ar |
| bg | Twitter-15@Bulgarian |
| bs | Twitter-15@Bosnian |
| da | AngryTweets |
| de | xLiMe@German, Twitter-15@German, TM-Senti@de |
| en | SemEval-2013-task2, SemEval-2015, SemEval-2016 |
| en | CB COLING2014 vanzo |
| en | CB IJCOL2015 ENG castellucci |
| en | RETWEET |
| es | xLiMe@spanish |
| es | Copres14 |
| es | mavis@tweets |
| es | Twitter-15@Spanish |
| es | JOSA corpus |
| es | TASS 2018, 2019, 2020 |
| es | TASS 2012, 2013, 2014, 2015 |
| fr | DEFT 2015 |
| hr | InfoCoV-Senti-Cro-CoV-Twitter |
| hr | Twitter-15@Croatian |
| hu | Twitter-15@Hungarian |
| it | CB IJCOL2015 ITA castellucci |
| it | xLiMe@Italian |
| it | sentipolc16 |
| it | TM-Senti@it |
| lv | Latvian tweet corpus |
| mt | Malta-Budget-2018, 2019, 2020 |
| pl | Twitter-15@Polish |
| pt | Twitter-15@Portuguese |
| pt | Brazilian tweet@tweets |
| ru | Twitter-15@Russian |
| sq | Twitter-15@Albanian |
| sr | doiserbian@tweet |
| sr | Twitter-15@Serbian |
| sv | Twitter-15@Swedish |
| tr | BounTi Turkish |
| zh | TM-Senti@zh-ids |

Figure 1 shows the distribution of the data across sentiment classes for all 21 languages. The final dataset consists of 143K data points.

The dataset covers the following languages: Arabic-ar [19], [20], Bulgarian-bg [21], Bosnian-bs [21], Danish-da [22], German-de [23], English-en [4], [5], [24]–[28], Spanish-es [29]–[38], French-fr [39], Croatian-hr [40], Hungarian-hu [21], Italian-it [41], Maltese-mt [42], Polish-pl [21], Portuguese-pt [43], Russian-ru [21], Serbian-sr [44], Swedish-sv [21], Turkish-tr [45], Chinese-zh [20], Latvian-lv [46], and Albanian-sq [21]. German, Spanish, English, Italian, Arabic, and Polish each have more than 10,000 instances, whereas each of the other languages has 5,000 or fewer instances of text and images. The average tweet length ranges from 4.25 to 5.94 tokens, where tokens are obtained by splitting on spaces. Tweets labelled as positive are more likely to be accompanied by images than tweets in the other categories. Figure 1 also shows that the data are imbalanced across languages.

4 Methodology

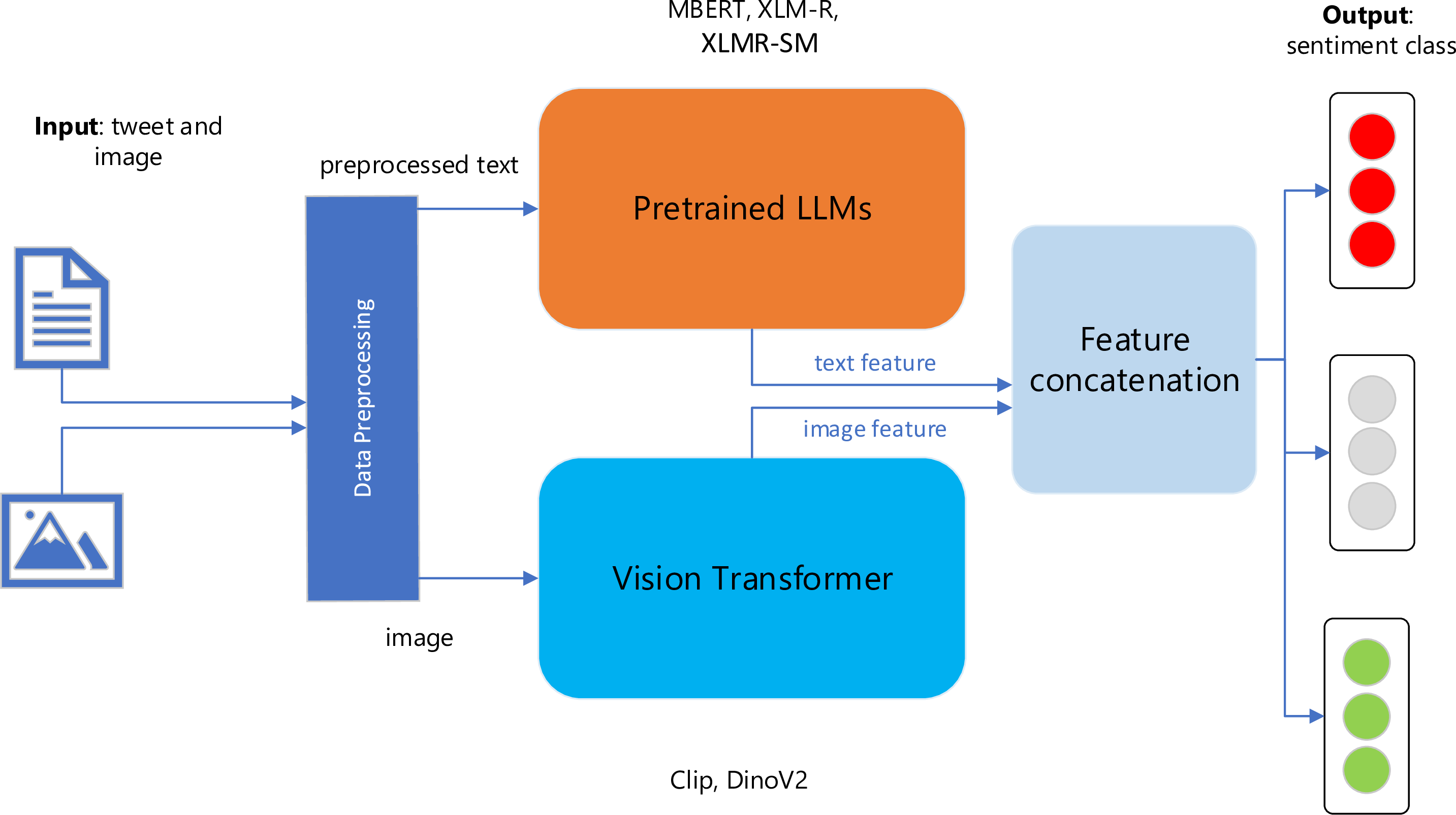

Figure 2: Model architecture of the M2SA.

4.1 Problem Definition

In unimodal sentiment analysis, the model receives a sequence \(X_{m}\) as input, where \(m\) is the length of the sequence. It outputs a single class from the closed set {positive, negative, neutral}. In multimodal sentiment analysis, the model receives inputs from multiple modalities, here a text sequence \(X^{1}_{m}\) of length \(m\) and an image input \(X^{2}_{n}\) of length \(n\), and the output is the same as in unimodal sentiment analysis. The objective of the models is to extract features from the inputs and learn to classify the sentiment accurately.

Figure 2 depicts the architecture of the overall sentiment classification system. We examine two scenarios for classifying the sentiment expressed in tweets: using textual data alone, and integrating textual and visual information. For the unimodal textual experiments, we use Multilingual-BERT [47], XLM-RoBERTa [48], and XLMR-SM, an XLM-R model fine-tuned specifically for sentiment analysis. For the multimodal systems, pre-trained vision models (CLIP and DINOv2) are used as image feature extractors, and the textual and visual features are fused by concatenation.
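The concatenation-based fusion and classification can be written out as follows. The symbols are our own notation, introduced only for illustration. \(\mathbf{h}_t \in \mathbb{R}^{d_t}\) is the sentence embedding from the text encoder (\(d_t = 768\) for the encoders in Section 4.2). \(\mathbf{h}_v \in \mathbb{R}^{d_v}\) is the image embedding from the vision encoder. \(W\) and \(\mathbf{b}\) are the parameters of the linear layer.

\[
\mathbf{h} = [\mathbf{h}_t ; \mathbf{h}_v] \in \mathbb{R}^{d_t + d_v}, \qquad
\hat{\mathbf{y}} = \operatorname{softmax}(W\mathbf{h} + \mathbf{b}), \qquad
W \in \mathbb{R}^{3 \times (d_t + d_v)},\; \mathbf{b} \in \mathbb{R}^{3}
\]

The predicted label is \(\arg\max_k \hat{y}_k\) over the three classes. In the text-only setting, \(\mathbf{h} = \mathbf{h}_t\) and \(W \in \mathbb{R}^{3 \times d_t}\).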

All datasets for a given language are treated as a single dataset. Where a source dataset provides train, validation, and test sets, these are used as provided. Otherwise, we partition it ourselves into train (85%), test (10%), and validation (5%) sets.
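When no predefined split exists, an 85/10/5 partition can be produced as in the sketch below. Shuffling with a fixed seed and computing the split sizes with integer arithmetic are our assumptions. The text does not say whether the partition was random or stratified by label.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_85_10_5(items: Sequence[T], seed: int = 42) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle deterministically and return (train, test, validation) at 85/10/5."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n = len(shuffled)
    n_train = n * 85 // 100  # integer arithmetic avoids float rounding surprises
    n_test = n * 10 // 100
    train = shuffled[:n_train]
    test = shuffled[n_train:n_train + n_test]
    validation = shuffled[n_train + n_test:]  # the remainder, roughly 5%
    return train, test, validation


train, test, validation = split_85_10_5(range(200))
print(len(train), len(test), len(validation))  # 170 20 10
```

A local `random.Random(seed)` instance keeps the split reproducible without affecting the global random state used elsewhere in training.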

Since the text has already been preprocessed, no further processing is applied. The input text is tokenised and then padded or truncated to the maximum length supported by the language model. The resulting input IDs and attention mask are passed to the language model to extract textual features for each instance. Images are preprocessed with the image preprocessor associated with the corresponding vision model, and the preprocessor’s output is fed into the vision encoder. The concatenated outputs of the text and vision encoders are passed through a linear layer followed by a softmax layer for classification. Each model is fine-tuned separately on a combined dataset containing samples from multiple languages.

Little data is available for languages such as Latvian and Albanian. We therefore use machine translation to translate existing text from other datasets into the languages with fewer than 10,000 tweets. Because the source datasets contain text in various languages, we first apply a language detection step using an existing model6 to identify the source language of each instance. The instances are then grouped by source language. Each group is passed to the machine translation pipeline together with its source and target language codes, which the MT model requires. The following subsections describe the model architectures and the relevant details of each model.
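The grouping step that precedes translation can be sketched as follows. The language detector is passed in as a function because the detection model (footnote 6) is not named here, and `toy_detect` is a hypothetical stand-in. `NLLB_CODES` lists FLORES-200 codes of the kind NLLB-200 expects. Only a handful are included, and they should be checked against the model's language list.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, List, Tuple

# ISO 639-1 -> FLORES-200 codes as used by NLLB-200 (partial, illustrative).
NLLB_CODES = {
    "en": "eng_Latn",
    "de": "deu_Latn",
    "es": "spa_Latn",
    "lv": "lvs_Latn",  # Standard Latvian
    "sq": "als_Latn",  # Tosk Albanian
}


def build_translation_jobs(
    texts: Iterable[str],
    detect: Callable[[str], str],
    target_lang: str,
) -> List[Tuple[str, str, List[str]]]:
    """Group texts by detected source language into (src_code, tgt_code, texts) jobs."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for text in texts:
        lang = detect(text)
        # Skip texts already in the target language or in unsupported languages.
        if lang != target_lang and lang in NLLB_CODES:
            groups[lang].append(text)
    tgt_code = NLLB_CODES[target_lang]
    return [(NLLB_CODES[src], tgt_code, batch) for src, batch in sorted(groups.items())]


def toy_detect(text: str) -> str:
    """Hypothetical stand-in for a real language-identification model."""
    return "de" if "ist" in text.split() else "en"


jobs = build_translation_jobs(["This is great", "Das ist toll"], toy_detect, "lv")
for src, tgt, batch in jobs:
    print(src, tgt, batch)
# deu_Latn lvs_Latn ['Das ist toll']
# eng_Latn lvs_Latn ['This is great']
```

Each job then carries exactly the source/target code pair that the MT model needs, so a whole batch can be translated in a single call.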

4.2 Text Encoders

To evaluate the models’ efficacy in the absence of visual features, we perform standard fine-tuning on the Transformer output. Specifically, we use the 768-dimensional contextualised sentence embedding. It is fed into a fully connected (FC) layer with three neurons, followed by a softmax layer for classification. The following models were used as text encoders.

4.2.1 Multilingual-BERT (M-BERT)

BERT [49] is a bidirectional Transformer pre-trained with the masked language modelling (MLM) and next sentence prediction (NSP) objectives. Its multilingual version is trained on the 104 languages with the largest Wikipedias. We chose this model for its multilingual coverage.

4.2.2 XLM-RoBERTa (XLM-R)

The XLM-R model [50] is a large multilingual language model trained on 2.5 TB of filtered Common Crawl data covering 100 languages. It was trained with the MLM objective, with 15% of the input tokens masked. The model performs well on downstream tasks when fine-tuned on supervised data. XLM-R does not require language tensors to identify the input language and can infer it from the input IDs alone. It has been shown to outperform M-BERT on various tasks.
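The masked language modelling objective can be illustrated with a small sketch. It selects each position independently with probability 0.15 and applies the 80/10/10 replacement rule introduced with BERT (mask token, random token, or unchanged). Several details are simplifying assumptions of this sketch rather than details stated in the text: applying that rule here, the `-100` ignore label, and the absence of special-token handling.

```python
import random
from typing import List, Optional, Tuple

IGNORE = -100  # common convention for positions excluded from the MLM loss


def mask_tokens(
    token_ids: List[int],
    mask_id: int,
    vocab_size: int,
    mask_prob: float = 0.15,
    seed: Optional[int] = None,
) -> Tuple[List[int], List[int]]:
    """Return (inputs, labels) for masked language modelling."""
    rng = random.Random(seed)
    inputs, labels = list(token_ids), [IGNORE] * len(token_ids)
    for i, tok in enumerate(token_ids):
        if rng.random() >= mask_prob:
            continue  # position not selected for prediction
        labels[i] = tok  # the model must recover the original token here
        r = rng.random()
        if r < 0.8:
            inputs[i] = mask_id  # 80%: replace with the mask token
        elif r < 0.9:
            inputs[i] = rng.randrange(vocab_size)  # 10%: random token
        # remaining 10%: keep the original token unchanged
    return inputs, labels


orig = list(range(5, 1005))
inputs, labels = mask_tokens(orig, mask_id=4, vocab_size=1000, seed=0)
print(sum(l != IGNORE for l in labels), "positions selected out of", len(orig))
```

The loss is computed only at the selected positions. Keeping some selected tokens unchanged stops the model from learning that only masked positions matter.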

4.2.3 XLM-RoBERTa-Sentiment-Multilingual (XLMR-SM)

The XLMR-SM model [51] is a version of XLM-T [52] fine-tuned on the tweet sentiment multilingual dataset (all). This dataset contains text in Arabic, English, French, German, Hindi, Italian, Portuguese, and Spanish. XLM-T is an XLM-R model further pre-trained on approximately 198 million multilingual tweets. We include this model to study the effect of sentiment knowledge in the pre-trained encoder. Because it builds on XLM-R, has been further trained on tweets, and has been fine-tuned on sentiment data, we expect it to perform better on the classification task.
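The classification head described at the start of Section 4.2 can be sketched in plain Python: a 768-dimensional sentence embedding fed into a three-neuron fully connected layer and a softmax. The weights are randomly initialised, so the outputs are only meaningful after fine-tuning. The label order and the 512-dimensional image embedding in the usage example are hypothetical. For the multimodal models, the same head is applied to the concatenation of text and image features.

```python
import math
import random
from typing import List

LABELS = ["NEGATIVE", "NEUTRAL", "POSITIVE"]  # label order is an assumption


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


class LinearSoftmaxHead:
    """Fully connected layer with one output per class, followed by softmax."""

    def __init__(self, in_dim: int = 768, n_classes: int = 3, seed: int = 0):
        rng = random.Random(seed)
        self.weights = [[rng.gauss(0.0, 0.02) for _ in range(in_dim)]
                        for _ in range(n_classes)]
        self.bias = [0.0] * n_classes

    def __call__(self, embedding: List[float]) -> List[float]:
        if len(embedding) != len(self.weights[0]):
            raise ValueError("embedding size does not match the layer")
        logits = [sum(w * x for w, x in zip(row, embedding)) + b
                  for row, b in zip(self.weights, self.bias)]
        return softmax(logits)


text_emb = [0.01] * 768
image_emb = [0.02] * 512  # hypothetical image-embedding size
head = LinearSoftmaxHead(in_dim=len(text_emb) + len(image_emb))
probs = head(text_emb + image_emb)  # list concatenation = feature fusion
print(LABELS[probs.index(max(probs))], [round(p, 3) for p in probs])
```

In the text-only setting, the head would be built with `in_dim=768` and called on the sentence embedding alone.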

4.3 Vision Encoders

The vision encoders divide an image into fixed-size patches and turn them into a sequence that the model can process. Much like Transformers do with text, the encoder models the relationships between these image patches to capture the overall meaning of the image. The visual features from the vision models are combined with those from the Transformer text models. The combined features are then projected into a shared latent space and fine-tuned on a supervised dataset. We employed the following vision encoder models:

4.3.1 CLIP

CLIP [53] is a multimodal framework that combines visual and linguistic information. It uses a Vision Transformer (ViT) to extract visual features and a causal (masked self-attention) Transformer language model to extract text features. The textual and visual features are then projected into a latent space of the same dimensionality. The similarity score is computed as the dot product between the L2-normalised projected image and text features.
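CLIP's similarity score can be sketched as a scaled cosine similarity. Both projected embeddings are L2-normalised before the dot product, and the result is multiplied by a learned temperature (the logit scale). The fixed value of 100 below is only illustrative, and the toy three-dimensional vectors stand in for real projected features.

```python
import math
from typing import List


def l2_normalise(v: List[float]) -> List[float]:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def clip_similarity(image_emb: List[float], text_emb: List[float],
                    logit_scale: float = 100.0) -> float:
    """Scaled cosine similarity between projected image and text embeddings."""
    a, b = l2_normalise(image_emb), l2_normalise(text_emb)
    return logit_scale * sum(x * y for x, y in zip(a, b))


image = [0.9, 0.1, 0.2]  # toy projected image embedding
captions = {"a happy photo": [0.8, 0.2, 0.1], "a sad photo": [0.1, 0.9, 0.3]}
best = max(captions, key=lambda c: clip_similarity(image, captions[c]))
print(best)  # a happy photo
```

Normalising first makes the score depend only on the direction of the embeddings, not their magnitude. This is why the score is bounded by the logit scale.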

4.3.2 DINOv2

The DINOv2 [54] model is a self-supervised learning approach that builds upon the DINO framework proposed by [55]. Its pre-training data are carefully curated to cover a diverse range of images from various domains and platforms, including natural images, social media images, and product images. This ensures that the learned features transfer to diverse practical scenarios.
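The patch tokenisation described at the start of Section 4.3 can be illustrated on a single-channel image stored as nested lists. Real encoders also project each flattened patch linearly, handle three colour channels, and add position embeddings. This sketch omits all three. For reference, DINOv2 models use 14 × 14 patches, so a 224 × 224 input yields 16 × 16 = 256 patches.

```python
from typing import List

Image = List[List[float]]  # a single-channel image as rows of pixel values


def to_patches(image: Image, patch: int) -> List[List[float]]:
    """Split an image into non-overlapping patch x patch blocks in row-major
    order and flatten each block into a vector (one 'token' per patch)."""
    h, w = len(image), len(image[0])
    if h % patch or w % patch:
        raise ValueError("image sides must be divisible by the patch size")
    patches = []
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            patches.append([image[r][c]
                            for r in range(top, top + patch)
                            for c in range(left, left + patch)])
    return patches


img = [[float(r * 224 + c) for c in range(224)] for r in range(224)]
print(len(to_patches(img, 14)), len(to_patches(img, 16)))  # 256 196
```

The sequence length grows quadratically as the patch size shrinks. This is the main compute trade-off between 14-pixel and 16- or 32-pixel patch variants.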

5 Experimental Setup

In this section, we provide details about the implementation and configurations that we used to train the model architecture.

5.1 Implementation

The neural networks are implemented in PyTorch, and the pre-trained models are loaded from the HuggingFace model hub. All monolingual models used a batch size of 8 and a learning rate of \(3 \times 10^{-5}\). All multilingual models used a learning rate of \(5 \times 10^{-5}\). All experiments were run on an NVIDIA V100 GPU with 16 GB of memory. The translation module used the NLLB-200-3.3B model [56], which covers all the lower-resourced languages in the dataset.

5.2 Model Configurations

We used the following configurations to train the model architecture and evaluate the results:

Unimodal vs. Multimodal: First, we experiment with training the unimodal model by using only the text. In another configuration, we train the model using tweets’ image and text content. Such a model considers both modalities and predicts the sentiment label jointly.

Original data vs. Inclusion of translations: In one configuration, only the extracted tweets are used as input to the text encoder. As shown in Figure 1, several languages in the curated dataset have few instances available for training. Therefore, in the other configuration, English tweets are machine-translated into the target language, and the original text is combined with the translations to train the models for lower-resourced languages.

Monolingual vs. Multilingual: In the monolingual setting, we train separate models for each language using data only from the respective language (either the original data or the addition of translations). In the multilingual setting, the data for all languages are merged, and we train a single model for all languages.
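The two settings differ only in how the training data are grouped. The minimal sketch below assumes that records are dictionaries with a `language` field, as in the schema of Section 3.2.

```python
from collections import defaultdict
from typing import Dict, List


def build_training_sets(records: List[dict], mode: str) -> Dict[str, List[dict]]:
    """Group records per language ('monolingual') or into one set ('multilingual')."""
    if mode == "multilingual":
        return {"all": list(records)}  # one model trained on every language
    if mode != "monolingual":
        raise ValueError(f"unknown mode: {mode!r}")
    by_lang: Dict[str, List[dict]] = defaultdict(list)
    for rec in records:
        by_lang[rec["language"]].append(rec)  # one model per language
    return dict(by_lang)


recs = [{"language": "de"}, {"language": "en"}, {"language": "de"}]
print({k: len(v) for k, v in build_training_sets(recs, "monolingual").items()})
```

In the monolingual setting, each key yields its own model. In the multilingual setting, the single `"all"` set trains one shared model.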

6 Evaluation

In this section, we analyse the results obtained with the configurations described above. Models are trained with early stopping based on the validation loss, and final scores are computed on the test set. Each experiment was run with five random seeds (42, 123, 777, 2020, 31337), and we report macro F1-scores.
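Macro F1 is the unweighted mean of the per-class F1-scores, so each of the three sentiment classes counts equally regardless of its frequency. The sketch below computes it directly. The `average_over_seeds` helper assumes that per-seed scores are averaged, which the text does not state explicitly. `run` is a hypothetical callable that trains and evaluates one model for a given seed. When all three classes occur in the data, the result should match scikit-learn's `f1_score(..., average="macro")`.

```python
from statistics import mean
from typing import Callable, Sequence

LABELS = ("POSITIVE", "NEGATIVE", "NEUTRAL")
SEEDS = (42, 123, 777, 2020, 31337)


def macro_f1(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Unweighted mean of per-class F1 over the three sentiment labels."""
    pairs = list(zip(y_true, y_pred))
    scores = []
    for label in LABELS:
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        scores.append(2 * precision * recall / denom if denom else 0.0)
    return mean(scores)


def average_over_seeds(run: Callable[[int], float], seeds=SEEDS) -> float:
    """Mean of a score computed once per random seed (run is hypothetical)."""
    return mean(run(seed) for seed in seeds)


y_true = ["POSITIVE", "POSITIVE", "NEGATIVE", "NEUTRAL"]
y_pred = ["POSITIVE", "NEGATIVE", "NEGATIVE", "NEUTRAL"]
print(round(macro_f1(y_true, y_pred), 4))  # 0.7778
```

Here positive and negative each score 2/3 and neutral scores 1, giving (2/3 + 2/3 + 1) / 3 = 7/9.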

6.1 Results

Table 2: Macro F1-scores (%) in the monolingual and multilingual settings. M = Multilingual-BERT, X = XLM-RoBERTa, X-SM = XLMR-SM, C = CLIP, D = DINOv2. "+" denotes text-image fusion. Rows with the suffix _mt use training data that include machine translations (monolingual setting only).

| Lang | Mono M | Mono X | Mono M+C | Mono X-SM | Mono M+D | Mono X-SM+C | Multi X-SM | Multi M+C | Multi X-SM+C |
|---|---|---|---|---|---|---|---|---|---|
| ar | 57.3 | 64.6 | 53.6 | 66.5 | 25.1 | 69.1 | 41.0 | 61.3 | 72.7 |
| bg | 51.9 | 38.0 | 53.7 | 63.1 | 11.1 | 60.5 | 53.5 | 57.8 | 60.8 |
| bs | 62.4 | 57.0 | 60.5 | 64.4 | 35.4 | 66.5 | 40.3 | 63.1 | 67.9 |
| da | 48.8 | 34.5 | 46.9 | 66.9 | 21.9 | 59.1 | 55.1 | 57.8 | 75.2 |
| de | 68.7 | 89.1 | 69.4 | 90.1 | 10.7 | 89.6 | 56.3 | 75.3 | 92.9 |
| en | 34.1 | 18.8 | 30.4 | 36.2 | 6.6 | 33.0 | 64.2 | 52.2 | 53.7 |
| es | 46.5 | 22.6 | 36.9 | 51.6 | 8.0 | 46.4 | 61.4 | 49.4 | 59.6 |
| fr | 51.1 | 40.2 | 50.9 | 64.5 | 18.5 | 64.9 | 65.8 | 41.0 | 51.5 |
| hr | 58.5 | 28.7 | 56.4 | 64.6 | 25.7 | 55.9 | 40.5 | 57.7 | 63.4 |
| hu | 50.9 | 43.1 | 50.5 | 62.5 | 17.8 | 66.3 | 47.3 | 56.1 | 63.7 |
| it | 40.3 | 29.8 | 24.0 | 55.8 | 4.4 | 60.2 | 54.4 | 56.6 | 63.1 |
| mt | 60.3 | 60.3 | 60.0 | 68.3 | 11.9 | 62.0 | 35.9 | 44.0 | 56.8 |
| pl | 67.8 | 45.3 | 46.2 | 68.7 | 12.7 | 69.5 | 51.2 | 63.8 | 72.3 |
| pt | 67.2 | 48.1 | 51.8 | 64.3 | 29.5 | 74.6 | 48.3 | 52.8 | 61.8 |
| ru | 65.5 | 43.9 | 70.6 | 73.1 | 27.1 | 75.3 | 64.9 | 65.7 | 82.3 |
| sr | 42.6 | 23.4 | 38.1 | 49.7 | 21.6 | 43.8 | 48.7 | 49.9 | 65.3 |
| sv | 68.2 | 43.0 | 59.2 | 73.1 | 28.7 | 73.3 | 54.5 | 66.0 | 80.2 |
| tr | 45.9 | 32.1 | 44.4 | 49.6 | 11.6 | 49.4 | 47.9 | 41.3 | 47.8 |
| zh | 57.6 | 98.9 | 64.9 | 99.0 | 26.3 | 98.4 | 43.9 | 68.7 | 98.4 |
| lv | 22.6 | 19.0 | 24.8 | 22.0 | 21.5 | 18.1 | 76.8 | 52.4 | 61.6 |
| sq | 20.7 | 20.7 | 20.5 | 20.5 | 7.8 | 20.5 | 33.7 | 43.5 | 45.4 |
| bg_mt | 26.1 | 23.5 | 25.8 | 23.5 | 9.1 | 29.4 | | | |
| bs_mt | 17.3 | 19.0 | 15.6 | 18.5 | 9.1 | 20.6 | | | |
| da_mt | 20.7 | 20.7 | 20.7 | 24.5 | 15.0 | 24.7 | | | |
| fr_mt | 23.1 | 23.1 | 23.1 | 25.8 | 13.6 | 23.4 | | | |
| hr_mt | 34.0 | 25.4 | 28.9 | 34.9 | 16.5 | 46.9 | | | |
| hu_mt | 28.7 | 21.2 | 22.8 | 28.6 | 10.3 | 28 | | | |
| mt_mt | 30.1 | 18.6 | 20.9 | 43.8 | 12.0 | 26.3 | | | |
| pt_mt | 16.4 | 8.7 | 10.5 | 22.9 | 23.4 | 21.9 | | | |
| ru_mt | 41.3 | 17.8 | 28.8 | 46.9 | 23.7 | 45.6 | | | |
| sr_mt | 18.8 | 18.8 | 18.6 | 25.5 | 17.8 | 23.0 | | | |
| sv_mt | 31.7 | 17.3 | 24.3 | 54.6 | 19.8 | 34.7 | | | |
| tr_mt | 33.7 | 30.8 | 32.5 | 31.5 | 13.8 | 30.8 | | | |
| zh_mt | 38.2 | 66.7 | 38.0 | 78.1 | 25.3 | 85.4 | | | |

The results (F1-score) for the model configurations are given in Table 2.

Unimodal vs. Multimodal: Among the unimodal models trained on textual features, XLM-RoBERTa-Sentiment-Multilingual yields higher F1-scores on average than Multilingual-BERT or XLM-RoBERTa-base. Among the multimodal models, the combination of XLM-RoBERTa-Sentiment-Multilingual with CLIP (X-SM+C) outperforms the others. For Bulgarian, Danish, German, Croatian, Maltese, and Chinese, the unimodal models outperform their multimodal counterparts. For the remaining higher-resourced languages, the multimodal model performs better.

Original data vs. Inclusion of translations: For lower-resourced languages, adding machine-translated instances from higher-resourced languages such as English did not yield significant performance improvements. For Chinese, including translated instances reduced overall performance. We hypothesise that a single tweet lacks the wider contextual frame found in product and movie reviews, which carry comprehensive context as a cohesive unit. Consequently, translations of tweets are of lower quality and can change the overall meaning.

Monolingual vs. Multilingual: Compared with the monolingual models, training a single model for all languages yielded, on average, the best performance for 17 languages. Croatian, Hungarian, Maltese, and Portuguese obtain higher results with monolingual models. This suggests that a single model, instead of 21 language-specific models, is adequate for many of the languages studied in this paper. In the multilingual configuration, the combination of XLM-RoBERTa-Sentiment-Multilingual with CLIP (X-SM+C) yielded the best performance across many languages. Thus, the model trained in the multimodal and multilingual configuration achieved the best scores for sentiment analysis of tweets that include both text and image content.

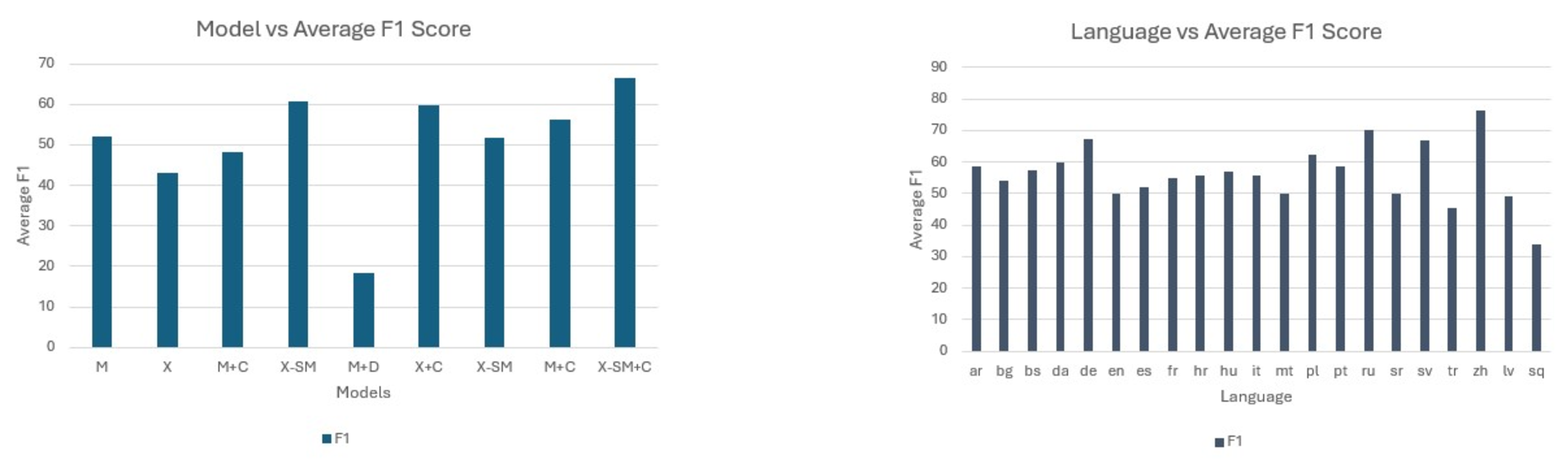

Figure 3 shows the average F1-scores for each combination of pre-trained models (left) and for each language (right). The left plot shows that X-SM+C achieves the best performance averaged across all languages, closely followed by XLM-RoBERTa-Sentiment-Multilingual (X-SM). These findings also highlight the importance of the choice of pre-trained model, particularly models that are specialised for sentiment tasks. The right plot shows that languages such as Chinese, Russian, Swedish, and German obtain better overall scores across all trained models.

Figure 3: The left plot shows the average F1-score for each model. The right plot shows the average F1-score for each language.

6.2 Error Analysis

We manually inspected the predictions of the best-performing unimodal and multimodal models. The observed errors fall into the following categories:

Missing Context: Some tweets are ambiguous, and determining their polarity requires external knowledge about the world. Tweets often capture only a fragment of a larger conversation and lack the necessary background. Classifying them accurately therefore requires information beyond the text itself. Most incorrect predictions of both the unimodal and multimodal models fall into this category.

Disputable: Not all labels in the datasets can be regarded as definitive ground truth. This applies particularly to [21], which has previously been identified as noisy and as having low inter-rater agreement [57]. We argue that such noisily labelled instances should be identified using established frameworks such as [58]. This observation suggests that there is still significant room for improvement, and it supports the value of collaborative assessment of multimodal data.

Figurative Language: Although the multimodal features help in the majority of cases, the models cannot handle phenomena such as sarcasm. In one such case, the textual model predicts the neutral class and the multimodal model predicts the positive class, whereas the gold label in the dataset is negative.

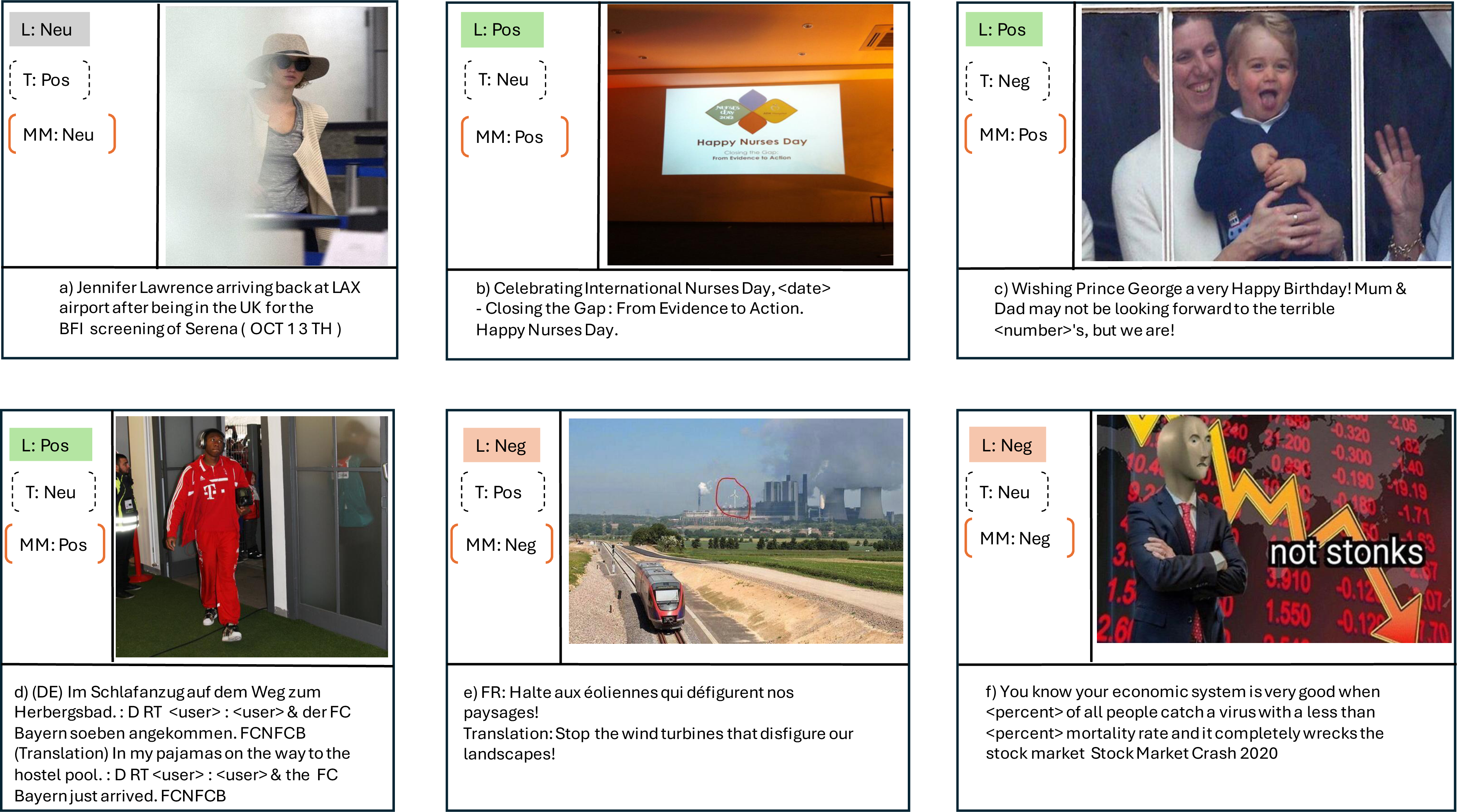

Figure 4 shows a few examples from X-SM+C in which the multilingual multimodal model predicts the correct label and the unimodal model does not. In example (c), the text model classifies the tweet "Wishing Prince George a very Happy Birthday! Mum & Dad may not be looking forward to the terrible <number>’s, but we are!" as negative. The multimodal multilingual model correctly predicts it as positive.

Figure 4: Examples where the multilingual multimodal model predicts the correct label and the text-only model fails.

7 Conclusion

This paper presents a model architecture for multimodal sentiment classification in a multilingual context, trained on a dataset compiled from various sources. To this end, we used a straightforward methodology to enrich existing unimodal Twitter datasets and transform them into multimodal ones. We trained numerous models on textual data alone and on a combination of textual and visual modalities. The primary conclusion of this study is that incorporating sentiment knowledge into Transformer-based models improves the accuracy of tweet sentiment classification. The efficacy of the same model settings varies across languages. Training a single model on multilingual and multimodal data for all languages yielded the best performance across many languages. In future work, we intend to use the tweets without images that were filtered out during preprocessing, and to extend the dataset with additional languages. Another potential avenue for research is using translated datasets derived from languages other than the target language.

Limitations

The performance of pre-trained models on highly specialised or domain-specific tasks may be limited due to the broad coverage of topics in their training data. Pre-trained models also learn from the data they are trained on, so any biases inherent in the training data can carry over into the models. Such bias can affect model outputs, particularly when the data do not represent all demographic, cultural, or social groups. In addition, the sentiment datasets used are biased towards particular topics, and this bias was introduced by the annotators when the datasets were labelled.

Acknowledgements

This work was partially funded by the EU Horizon 2020 Research and Innovation Programme under the Marie Sklodowska-Curie grant agreement no. 812997 (CLEOPATRA ITN). This work was also partially funded by the European Union’s Horizon Europe Research and Innovation Programme under Grant Agreement No 101070631 and by UK Research and Innovation (UKRI) under the UK government’s Horizon Europe funding guarantee (Grant No 10039436).