Upsample Guidance: Scale Up Diffusion Models without Training

April 02, 2024

Abstract

Diffusion models have demonstrated superior performance across various generative tasks including images, videos, and audio. However, they encounter difficulties in directly generating high-resolution samples. Previously proposed solutions to this issue involve modifying the architecture, further training, or partitioning the sampling process into multiple stages. These methods cannot use pre-trained models as-is and require additional work. In this paper, we introduce upsample guidance, a technique that adapts a pretrained diffusion model (e.g., \(512^2\)) to generate higher-resolution images (e.g., \(1536^2\)) by adding only a single term in the sampling process. Remarkably, this technique does not necessitate any additional training or reliance on external models. We demonstrate that upsample guidance can be applied to various models, such as pixel-space, latent-space, and video diffusion models. We also observe that proper selection of the guidance scale can improve image quality, fidelity, and prompt alignment.

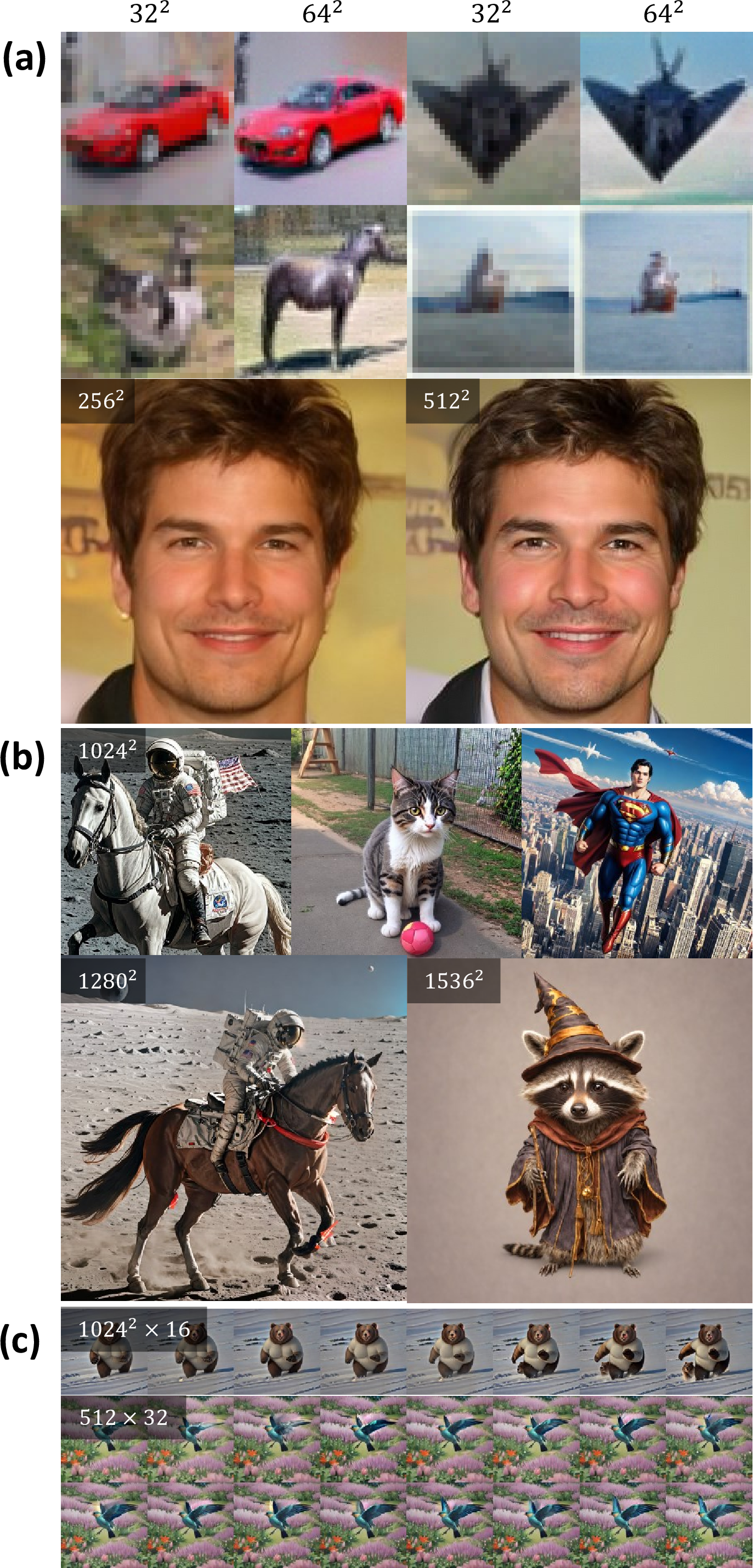

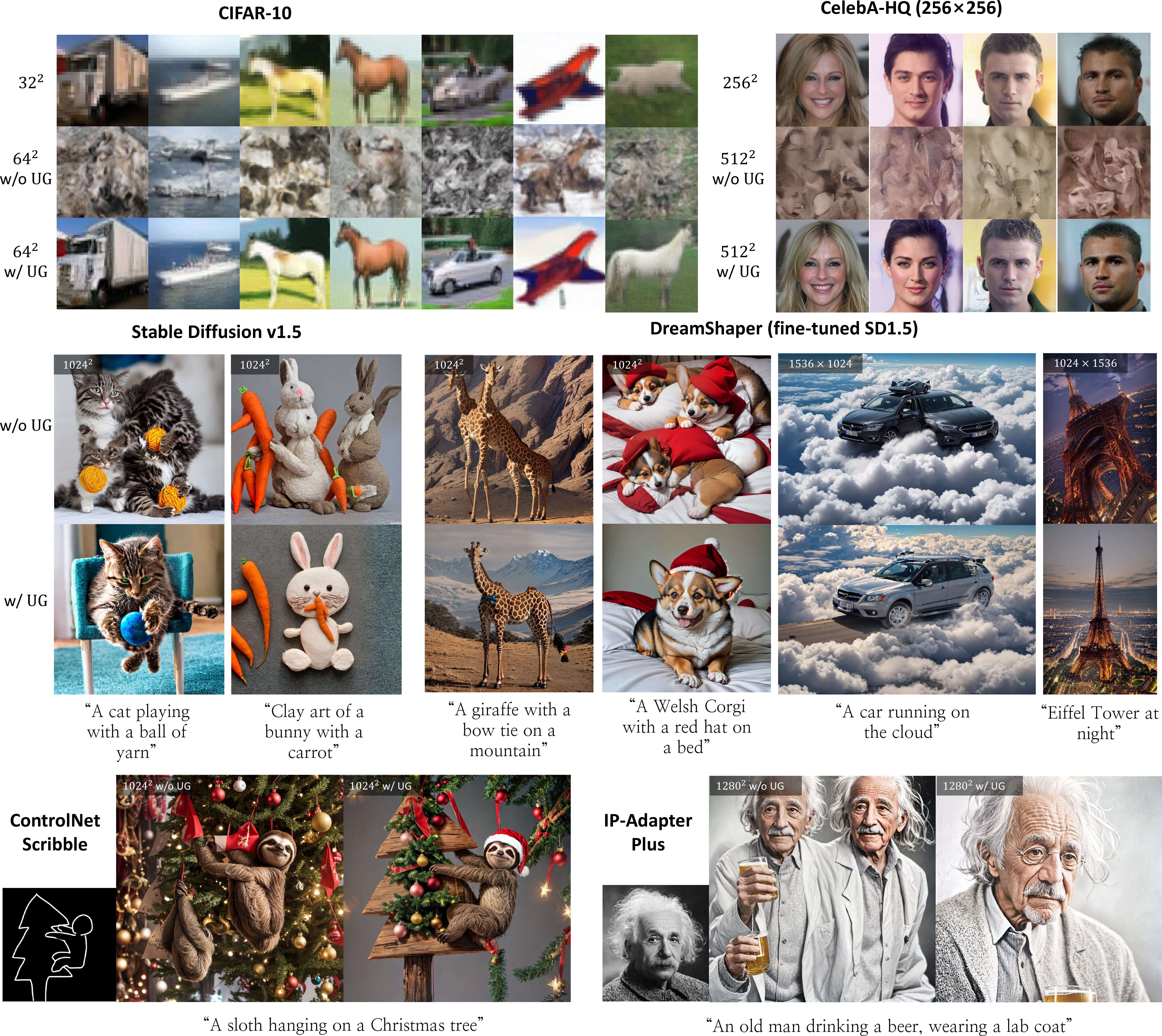

Figure 1: High-resolution samples with upsample guidance. The original trained resolution is increased (\(\geq 2\) times) through upsample guidance. (a) Images sampled at twice the resolution for the models trained on CIFAR-10 and CelebA-HQ datasets at \(32^2\) and \(256^2\) resolutions, respectively. The adjacent image pairs are sampled from the same initial noise. (b) High-resolution images of latent diffusion models using upsample guidance. (c) Upsampled snapshots of text-to-video models. The upper panel represents spatial upsampling, while the lower panel represents temporal upsampling.

1 Introduction

Diffusion models are generative models that generate samples by progressively restoring the original distribution from a prior noise distribution, by modeling the reverse process of data diffusion [1]–[3]. Recently, diffusion models have demonstrated state-of-the-art performance in various domains such as image [4]–[7], video [8]–[11], audio, and 3D generation [12].

Despite their effectiveness in image generation, generating high-resolution images remains a challenging problem. To circumvent this issue, researchers have proposed operating in a lower-dimensional latent space (latent diffusion models, LDMs) [6], or generating low-resolution images and then upscaling them with super-resolution diffusion models (cascaded diffusion model, CDM) [13] or mixtures-of-denoising-experts (eDiff-I) [14]. Recently, end-to-end high-resolution image generation has been proposed, either by improving the training loss [15] or by generating multiple resolutions simultaneously [16].

The aforementioned solutions require from-scratch training or fine-tuning, which incurs additional computational cost. In this paper, we introduce a novel technique, upsample guidance, which enables higher-resolution sampling without any additional training or external models, simply by adding a single term involving minimal computation.

As shown in Figure 1, upsample guidance can be applied to any type of diffusion model, including pixel-space, latent-space, and even video diffusion models. Moreover, it is fully compatible with previously proposed techniques that improve or control diffusion models, such as SDEdit [17], ControlNet [18], LoRA [19], [20], and IP-Adapter [21]. Surprisingly, our method can even generate images at resolutions higher than any in the training dataset, such as \(64^2\) images for the CIFAR-10 dataset [22], which contains only \(32^2\) images.

We demonstrate the results of applying upsample guidance across numerous pre-trained diffusion models and compare these with cases where our method was not applied. Additionally, we show the feasibility of spatial and temporal upsampling in video generation models. Finally, we conduct experiments on the selection of an appropriate guidance scale.

2 Related Works

Various ideas have been proposed for generating high-resolution samples using diffusion models. However, many of these require modifications to the architecture or training from scratch. Here, we focus on methods that leverage pre-trained models to generate at resolutions higher than their trained resolution.

2.1 Super-Resolution

An intuitive solution is to use pre-trained models to generate low-resolution samples and then upscale them to high resolution through a super-resolution model. Cascaded diffusion models (CDM) perform super-resolution using diffusion models that take low-resolution images as a condition [13]. This method has been applied to high-performance text-to-image models such as IMAGEN [4] and DeepFloyd-IF [23]. However, this approach involves multiple diffusion models and sampling processes, requiring additional training and heavy computational cost.

A similar method in practice involves upscaling an image generated by a diffusion model using a relatively lightweight super-resolution model, followed by applying SDEdit [17] with the same diffusion model to enhance details in the high-resolution image. This technique is implemented under the name "HiRes.fix" in a well-known web-based diffusion model UI [24]. However, a drawback is the additional encoding and decoding operations required when it is combined with LDMs.

2.2 Fine-Tuning

Even models trained at a fixed low resolution can generate higher resolution images better when fine-tuned on datasets with higher resolutions and various aspect ratios [25]. For instance, although the Stable Diffusion v1.5 model [6] was trained at a \(512^2\) resolution, several models fine-tuned near a \(768^2\) resolution are widely used. However, as the resolution increases, the computational cost required for fine-tuning also rises considerably, making it challenging to train on higher resolutions.

3 Background

In this section, we introduce the fundamental concepts of diffusion models and guidance, which are essential for understanding our method.

3.1 Diffusion Models

Diffusion models transform an original sample \(x_0\) from the dataset into a noised sample \(x_t\) through a forward diffusion process, eventually reaching pure noise \(x_T\) that can be easily sampled. Many diffusion models follow the formalization of denoising diffusion probabilistic models (DDPMs) [1] that use Gaussian noise. Specifically, the noised sample is given by: \[\label{eq:forward_process} x_t=\sqrt{\alpha_t} x_0 + \sqrt{1-\alpha_t} \epsilon_t,\tag{1}\] which represents a linear combination of the signal \(x_0\) and noise \(\epsilon_t \sim \mathcal{N}(0, I)\). The term \(\alpha_t\) is a noise schedule that affects the signal-to-noise ratio \(\textrm{SNR} = \frac{\alpha_t}{1 - \alpha_t}\) and monotonically decreases with respect to time \(t\).
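As a minimal sketch of Equation 1, the snippet below noises a toy sample with NumPy. The function names `forward_noise` and `snr`, the array shape, and the random seed are illustrative assumptions and are not taken from any released implementation.

```python
import numpy as np

def forward_noise(x0, alpha_t, rng):
    """Return (x_t, eps) with x_t = sqrt(alpha_t) * x0 + sqrt(1 - alpha_t) * eps."""
    eps = rng.standard_normal(x0.shape)  # eps_t ~ N(0, I)
    x_t = np.sqrt(alpha_t) * x0 + np.sqrt(1.0 - alpha_t) * eps
    return x_t, eps

def snr(alpha_t):
    """Signal-to-noise ratio alpha_t / (1 - alpha_t), as defined in the text."""
    return alpha_t / (1.0 - alpha_t)

rng = np.random.default_rng(0)
x0 = rng.standard_normal((3, 32, 32))  # toy stand-in for a data sample
x_t, eps = forward_noise(x0, alpha_t=0.85, rng=rng)
```

Because \(\alpha_t\) decreases with \(t\), `snr(alpha_t)` decreases as well, which is the property the time adjustment in Section 4.1 relies on.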

The generation in DDPMs corresponds to a backward diffusion process, starting from \(x_T\) and approximately sampling the distribution \(p(x_{t-1}|x_t)\). The expected value of this conditional distribution is estimated by a noise predictor \(\epsilon(x_t,t)\), which takes the noised sample \(x_t\) and time \(t\) as inputs to predict \(\epsilon_t\). Note that the noise predictor has a U-Net architecture [26], allowing it to accept inputs at resolutions other than the trained resolution. This flexibility underscores the adaptability of the model to handle a variety of image sizes.

3.2 Guidances for Diffusion Models

Techniques have been proposed for conditionally sampling images corresponding to specific classes or text prompts by adding a guidance term to the predicted noise. Classifier guidance [27] adds the gradient of the log probability predicted by a classifier to \(\epsilon(x_t, t)\), enabling an unconditional diffusion model to generate class-conditioned images. Subsequently, classifier-free guidance (CFG) was proposed. Instead of using a classifier, the noise predictor’s architecture is modified to accept a condition \(c\) as an input. The following formula is then used as the predicted noise: \[\label{eq:cfg} \tilde{\epsilon}(x_t,t;c)= {\underbrace{\epsilon(x_t, t)}_{\text{denoise}}}+ w{\underbrace{[\epsilon(x_t, t; c) - \epsilon(x_t, t)]}_{\text{guidance}}}.\tag{2}\] Here, \(w\) represents the guidance scale. It has been commonly observed that proper adjustment of the scale improves the alignment with the condition and the fidelity of the generated images.
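Equation 2 is a single linear combination of two noise predictions, which the following sketch makes explicit. The helper name `cfg_noise` and the toy vectors are assumptions chosen for illustration; in practice the two inputs would come from the noise predictor evaluated with and without the condition \(c\).

```python
import numpy as np

def cfg_noise(eps_uncond, eps_cond, w):
    """Equation 2: unconditional prediction plus w times the guidance term."""
    return eps_uncond + w * (eps_cond - eps_uncond)

# Toy predictions standing in for eps(x_t, t) and eps(x_t, t; c).
eps_uncond = np.array([0.0, 1.0, -1.0])
eps_cond = np.array([1.0, 1.0, 0.0])
guided = cfg_noise(eps_uncond, eps_cond, w=2.0)
```

Setting `w=0` recovers the unconditional prediction and `w=1` the conditional one; values above 1 extrapolate past the conditional prediction.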

4 Method

In this section, we first introduce signal-to-noise ratio (SNR) matching, a core concept crucial for understanding our method, and then derive upsample guidance based on it. We also explain the considerations when applying it to LDMs that include an encoder-decoder structure.

4.1 SNR Matching

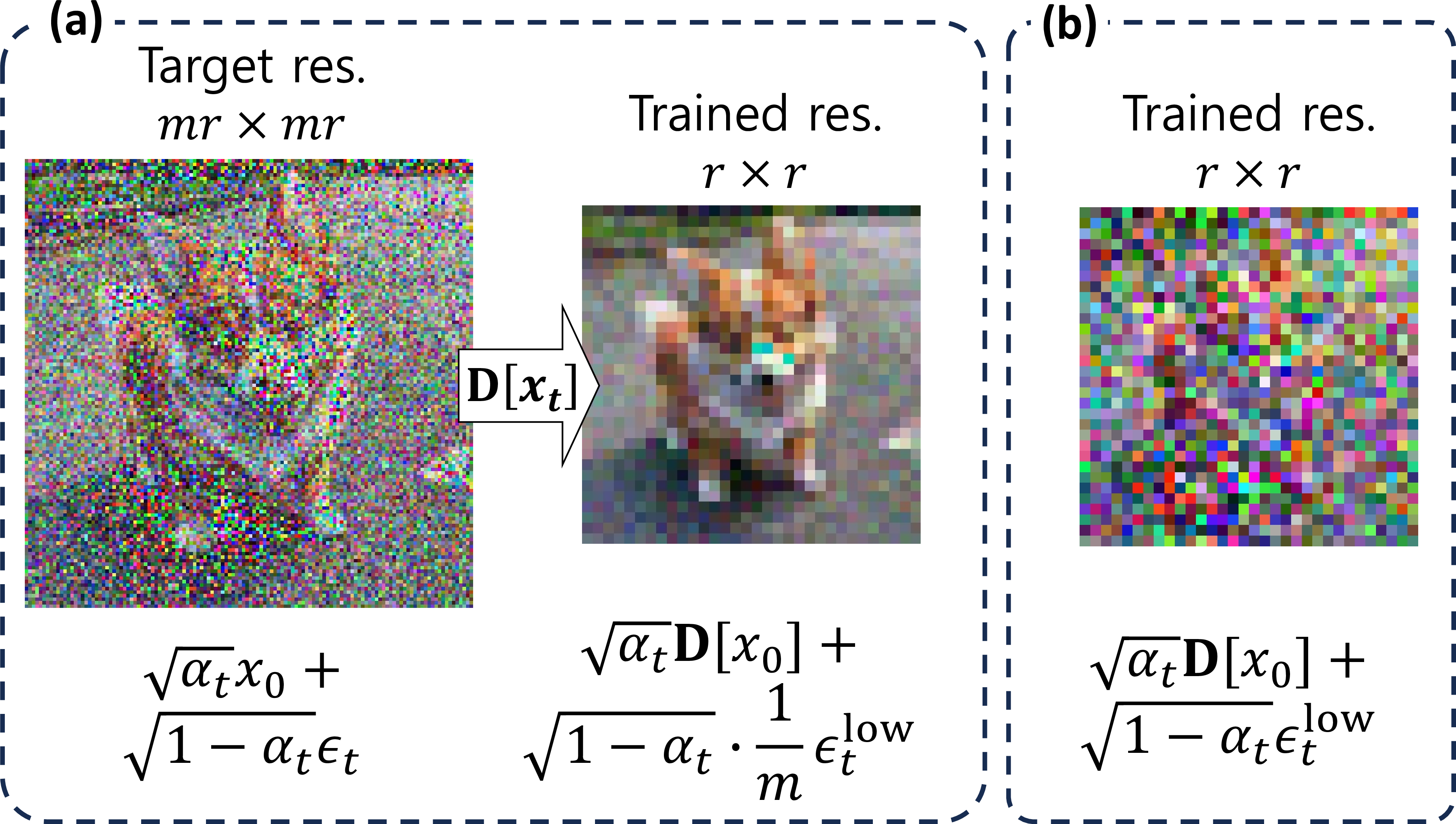

Figure 2: Consistency between different resolutions. (a) Downsampled image generated by the diffusion model at the target resolution. (b) Image generated at the trained resolution. The noise reduction due to downsampling creates a significant difference in the recognizability between the central and right images at the trained resolution, indicating a change in their signal-to-noise ratio. For this example, \(\alpha_t=0.85\) is used.

When a diffusion model is trained at a resolution of \(r \times r\), consider the scenario of generating images at a target (high) resolution that is \(m\) times higher, namely \(mr \times mr\). If images can be ideally generated at all resolutions, it is reasonable to expect that the result of downsampling the target resolution by a scale of \(1/m\) should resemble the outcome sampled at the trained (low) resolution. In this context, let’s assume that the same model takes a downsampled image \(\mathbf{D}[x_t]\) as input during the generation process. Here, \(\mathbf{D}\) represents a downsampling operator that takes the average of \(m\times m\) pixels.

As Figure 2 demonstrates, this downsampled image \(\mathbf{D}[x_t]\) (the second image) significantly differs from \(x^{\textrm{low}}_t\) (the third image) that was generated from the diffusion model trained at the low resolution. More specifically, the trained image follows \[x^{\textrm{low}}_t = \sqrt{\alpha_t} \mathbf{D}[x_0] + \sqrt{1-\alpha_t} \epsilon^{\textrm{low}}_t,\] where \(\epsilon^{\textrm{low}}_t\) represents standard Gaussian noise at the trained resolution. However, the downsampled image can be obtained from Equation 1 by applying the linear downsampling operator \(\mathbf{D}\), \[\mathbf{D}[x_t] = \sqrt{\alpha_t} \mathbf{D}[x_0] + \frac{1}{m} \sqrt{1-\alpha_t} \epsilon^{\textrm{low}}_t.\] Here, the standard deviation of the noise is reduced by a factor of \(1/m\), because \(\mathbf{D}\) averages every \(m\times m\) pixels: the mean of \(m^2\) independent standard Gaussian variables has variance \(1/m^2\). Therefore, some adjustments are necessary to make \(x^{\textrm{low}}_t\) and \(\mathbf{D}[x_t]\) equivalent [28]. This requires matching both SNR and overall power: \[\begin{align} &\mathrm{SNR}^{\mathrm{low}} = \frac{m^2\alpha_t}{1-\alpha_t} = m^2 \cdot \mathrm{SNR}, \\ &P = \alpha_t + \frac{1}{m^2} ( 1- \alpha_t). \end{align}\]
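The noise reduction caused by \(\mathbf{D}\) can be checked numerically. The sketch below is illustrative only: `downsample` is an assumed NumPy implementation of the \(m\times m\) averaging operator, and the array size and seed are arbitrary. With the SNR defined as the power ratio \(\alpha_t/(1-\alpha_t)\) from Section 3.1, dividing the noise standard deviation by \(m\) divides the noise power by \(m^2\), which is the same factor `m**2` used in the appendix code.

```python
import numpy as np

def downsample(x, m):
    """D: average every m x m block of a (..., H, W) array."""
    *lead, h, w = x.shape
    return x.reshape(*lead, h // m, m, w // m, m).mean(axis=(-3, -1))

rng = np.random.default_rng(0)
m = 2
eps = rng.standard_normal((512, 512))  # standard Gaussian noise at the target resolution
eps_down = downsample(eps, m)
std_down = eps_down.std()              # empirically close to 1 / m

alpha_t = 0.85
snr_high = alpha_t / (1 - alpha_t)            # SNR of x_t
snr_down = alpha_t / ((1 - alpha_t) / m**2)   # SNR of D[x_t]: same signal, less noise
```

For `m = 2` the downsampled sample is therefore four times "cleaner" in SNR terms than the model expects at time \(t\), which is what Figure 2 visualizes.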

Since the SNR is a function of time determined by the noise schedule \(\alpha_t\), we can find the adjusted time \(\tau\) such that \(\mathrm{SNR}(\tau)=\mathrm{SNR}^{\mathrm{low}}(t)=m^2\cdot \mathrm{SNR}(t)\). Furthermore, by multiplying by \(1/\sqrt{P}\), we can make the overall power equal to that of the target resolution. Therefore, the proper noise predictors of high and low resolutions are associated with time and power adjustments as follows \[\label{eq:eps_low} \mathbf{D}[\epsilon^\mathrm{adj}(x_t,t)] = \frac{1}{m} \epsilon\left( \frac{1}{\sqrt P} \mathbf{D}[x_t], \tau \right),\tag{3}\] where the factor of \(1/m\) rescales the unit-variance noise predicted at the trained resolution to the standard deviation \(1/m\) of the downsampled noise.
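The power adjustment can be illustrated with a short numerical check. This is a sketch under a stated assumption: the downsampled clean image \(\mathbf{D}[x_0]\) is modeled as unit-variance Gaussian data, which is what makes \(P\) the variance of \(\mathbf{D}[x_t]\). The helper name `power` and all array sizes are hypothetical.

```python
import numpy as np

def power(alpha_t, m):
    """P = alpha_t + (1 - alpha_t) / m**2 from Section 4.1."""
    return alpha_t + (1.0 - alpha_t) / m**2

rng = np.random.default_rng(0)
m, alpha_t = 2, 0.85
x0_low = rng.standard_normal((256, 256))   # assumed unit-variance stand-in for D[x_0]
eps_low = rng.standard_normal((256, 256))  # standard noise at the trained resolution

# D[x_t]: the noise term carries the extra factor 1 / m.
d_xt = np.sqrt(alpha_t) * x0_low + np.sqrt(1.0 - alpha_t) / m * eps_low

P = power(alpha_t, m)
rescaled = d_xt / np.sqrt(P)               # input passed to the model at the adjusted time tau
```

Rescaling changes only the overall power, not the ratio between signal and noise, so the time adjustment \(\tau\) is still needed to match the SNR.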

4.2 Upsample Guidance

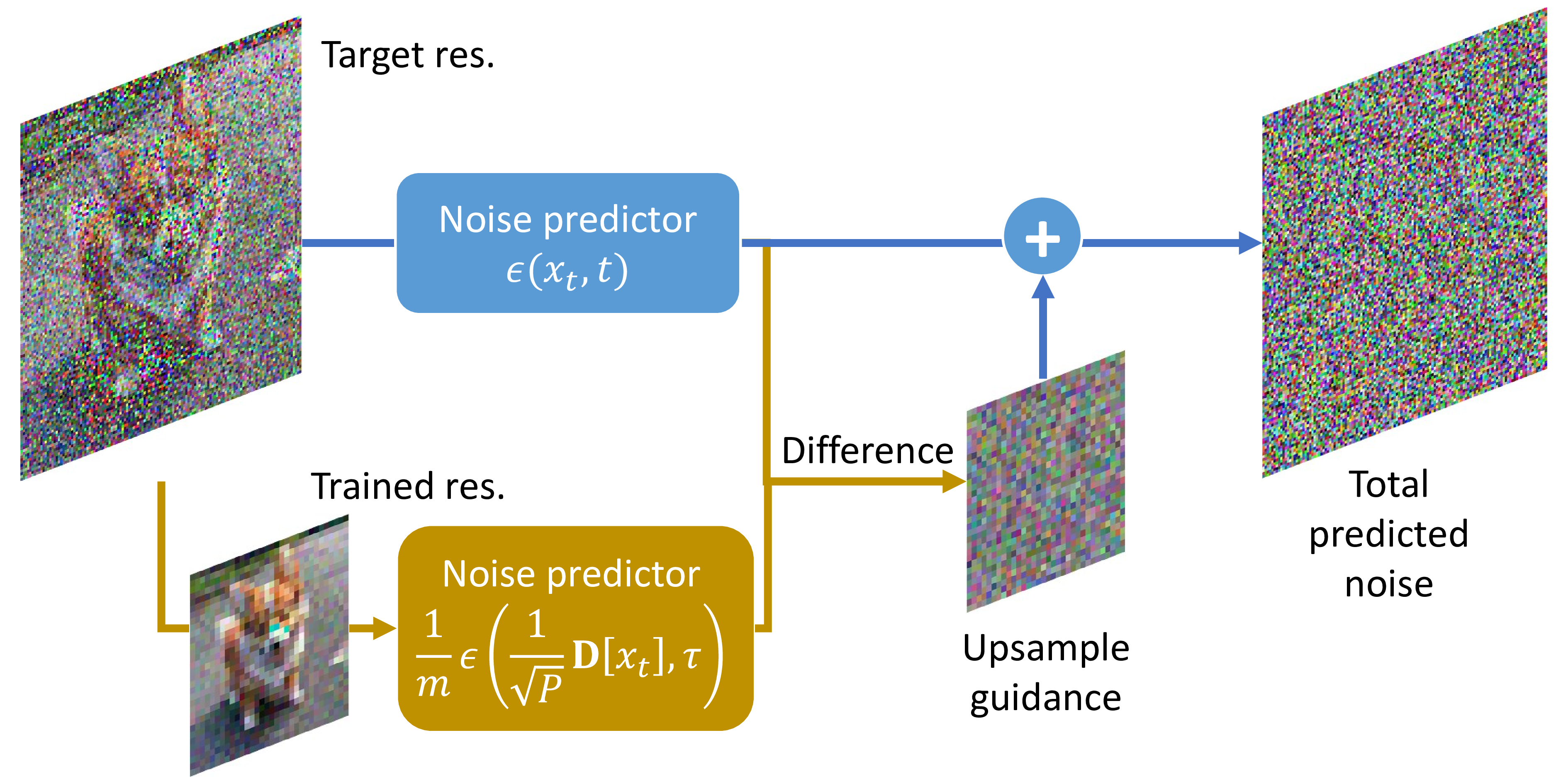

Figure 3: Conceptual illustration of upsample guidance. The model receives the same noised images at two different resolutions in parallel, but time and power are adjusted at the trained resolution. The difference between the two predicted noises then acts as guidance, which is added to the total noise.

Considering the consistency across diverse resolutions, we explore the decomposition of predicted noise at the target (high) resolution. Suppose that the target noise comprises both a component from the trained (low) resolution and its corresponding residual part: \[\epsilon(x_t,t) = \mathbf{U}\underbrace{\mathbf{D}[\epsilon(x_t,t)]}_{\textrm{trained resolution}} + \underbrace{\{\epsilon(x_t,t) - \mathbf{U}\mathbf{D}[\epsilon(x_t,t)]\}}_\textrm{target resolution}.\] In this context, \(\mathbf{U}\) represents the nearest-neighbor upsampling operator with a scale factor of \(m\), used to align dimensions between the target and trained noise predictors. The residual noise, \(\epsilon - \mathbf{U}\mathbf{D}[\epsilon]\), corresponds to the part that remains after removing the contribution of the low resolution.
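The decomposition above can be sketched with simple array operations. The implementations of `downsample` (block averaging) and `upsample` (nearest-neighbor repetition) below are assumptions that follow the verbal definitions of \(\mathbf{D}\) and \(\mathbf{U}\); the toy tensor stands in for a predicted noise map.

```python
import numpy as np

def downsample(x, m):
    """D: average every m x m block of a (..., H, W) array."""
    *lead, h, w = x.shape
    return x.reshape(*lead, h // m, m, w // m, m).mean(axis=(-3, -1))

def upsample(x, m):
    """U: nearest-neighbor upsampling, repeating each pixel m times along H and W."""
    return x.repeat(m, axis=-2).repeat(m, axis=-1)

rng = np.random.default_rng(0)
m = 2
eps = rng.standard_normal((3, 8, 8))          # toy predicted noise at the target resolution
low_part = upsample(downsample(eps, m), m)    # U D[eps]: trained-resolution component
residual = eps - low_part                     # eps - U D[eps]: target-resolution residual
```

Two properties are worth noting: \(\mathbf{D}\mathbf{U}\) is the identity, and the residual averages to zero over every \(m\times m\) block, so replacing the low-resolution component leaves the residual detail untouched.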

Now, recognizing the need for adjustments to ensure consistency among noise predictors at various resolutions, we substitute the trained-resolution term with the adjusted noise predictor in Equation 3, as follows: \[\begin{align} \epsilon^\mathrm{adj}(x_t,t) =& \mathbf{U}\underbrace{ \left[ \frac{1}{m} \epsilon\left( \frac{1}{\sqrt P} \mathbf{D}[x_t], \tau \right) \right]}_{\textrm{trained resolution}} \nonumber +\\& \underbrace{\{\epsilon(x_t,t) - \mathbf{U}\mathbf{D}[\epsilon(x_t,t)]\}}_\textrm{target resolution}. \end{align}\] The model thus sees the sample and predicts noise at both resolutions in parallel.

Finally, we consider interpolation between the naive sampling at the target resolution and the parallel sampling at the trained resolution, \[\begin{align} \label{eq:upsample_guidance} &\tilde{\epsilon}(x_t,t) =(1-w_t)\epsilon(x_t,t) + w_t\epsilon^\mathrm{adj}(x_t,t) =\nonumber \\ & \epsilon(x_t,t) + w_t \underbrace{\mathbf{U}\left[ \frac{1}{m} \epsilon\left( \frac{1}{\sqrt P} \mathbf{D}[x_t], \tau \right) - \mathbf{D}[\epsilon(x_t,t)] \right]}_\textrm {upsample guidance}. \end{align}\tag{4}\] This structure resembles Equation 2 and can be interpreted similarly as guidance. Consequently, we named it “upsample guidance (UG),” where \(w_t\) functions as the guidance scale, which may generally depend on time. Similar to how CFG incorporates the shift from unconditional to conditional noise, UG represents the influence pushing the model towards consistency with the trained low-resolution component.
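A single sampling step with Equation 4 might be sketched as follows. Everything here is illustrative: `toy_model` is a hypothetical stand-in for a trained noise predictor, the values `t=500`, `tau=400`, and `alpha_t=0.5` are placeholders, and `downsample`/`upsample` are assumed implementations of \(\mathbf{D}\) and \(\mathbf{U}\). In a real sampler, `tau` would come from the SNR matching described in Section 4.1.

```python
import numpy as np

def downsample(x, m):
    """D: average every m x m block of a (..., H, W) array."""
    *lead, h, w = x.shape
    return x.reshape(*lead, h // m, m, w // m, m).mean(axis=(-3, -1))

def upsample(x, m):
    """U: nearest-neighbor upsampling along H and W."""
    return x.repeat(m, axis=-2).repeat(m, axis=-1)

def upsample_guidance(eps_model, x_t, t, tau, alpha_t, m, w_t):
    """Equation 4: naive prediction plus w_t times the upsample-guidance term."""
    P = alpha_t + (1.0 - alpha_t) / m**2
    eps_high = eps_model(x_t, t)                                      # target resolution
    eps_low = eps_model(downsample(x_t, m) / np.sqrt(P), tau) / m     # trained resolution
    guidance = upsample(eps_low - downsample(eps_high, m), m)
    return eps_high + w_t * guidance

def toy_model(x, time):
    """Hypothetical noise predictor used only to exercise the function."""
    return 0.1 * x + 0.01 * time

rng = np.random.default_rng(0)
x_t = rng.standard_normal((4, 64, 64))
eps_tilde = upsample_guidance(toy_model, x_t, t=500, tau=400, alpha_t=0.5, m=2, w_t=1.0)
eps_plain = upsample_guidance(toy_model, x_t, t=500, tau=400, alpha_t=0.5, m=2, w_t=0.0)
```

With `w_t=0` the function returns the naive prediction, and with `w_t=1` the block averages of the output coincide with the adjusted low-resolution prediction, which is the consistency the derivation aims for.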

4.3 Adaptation on LDMs

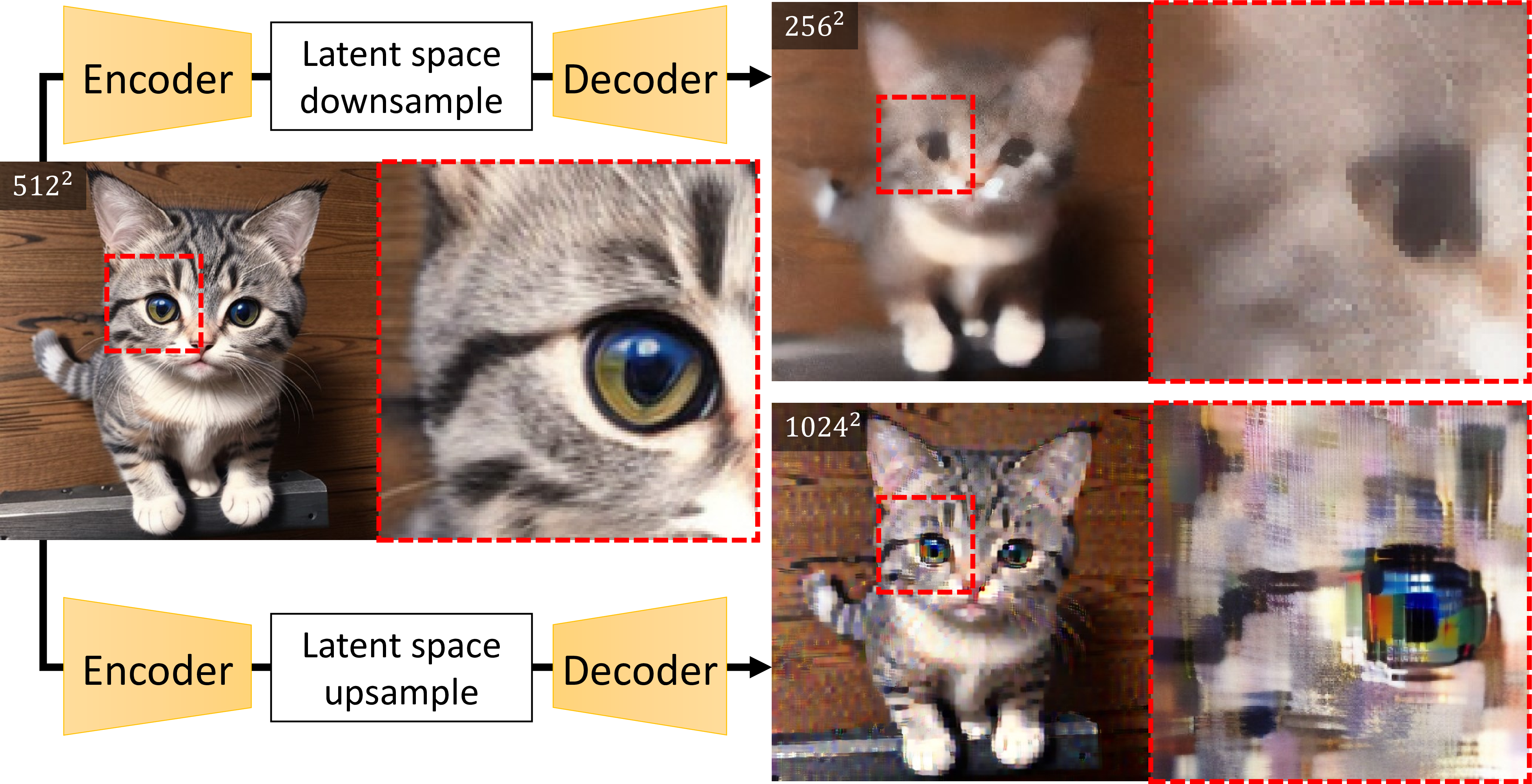

Figure 4: Artifacts of the encoder-decoder in an LDM. When an image is upsampled or downsampled in the latent space of an LDM and then decoded back into pixel space, artifacts are introduced. The variational autoencoder introduces nonlinearity in the implementation of upsample guidance, and significant degradation can be observed in both cases.

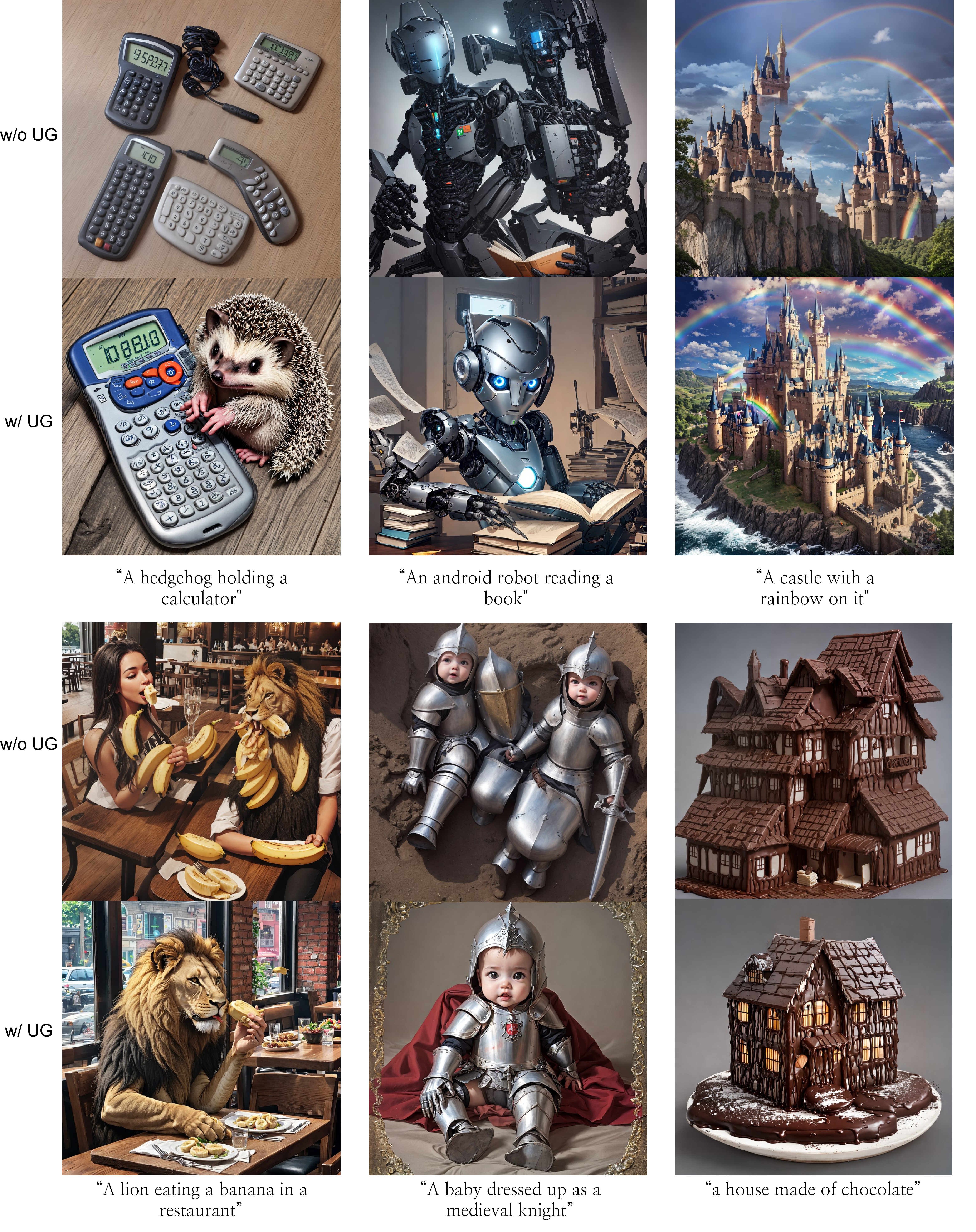

Figure 5: Upsampling across various image generation models, resolutions, and conditional generation methods. Unconditional image generation, such as CIFAR-10 and CelebA-HQ, was sampled in the pixel space. For the text-to-image models, the left side of the images represents results without UG, while the right side shows results with UG. We used DreamShaper [29] as an example of fine-tuned LDM. The paired images are all generated from the same initial noise. Across different models, resolutions, prompts, and conditioning, consistently better images were obtained with UG. Notably, our method effectively resolved artifacts where multiple subjects were generated or bad anatomy was present.

The aforementioned derivation heavily relies on the linearity of operators \(\mathbf{U}\) and \(\mathbf{D}\). Nonetheless, in the context of LDMs, the pixel space undergoes a transformation into the latent space using a nonlinear variational autoencoder (VAE) [30]. Consequently, it is crucial to proceed with caution, as the latent space of a downsampled image at the target resolution may not align with the latent space of the resolution it was originally trained on. The outcomes of downsampling in latent space and subsequently decoding back into pixel space are shown in Figure 4.

However, we discovered a viable solution to this challenge by heuristically tailoring \(w_t\) to be time-dependent. Specifically, we designed \(w_t\) to decrease or be set to zero when \(t\) is close to zero, preventing the upsample guidance from introducing artifacts. While various designs for \(w_t\) are conceivable, we adopt the most straightforward parameterized design, using the Heaviside step function \(H\), and investigate the influence of the scale magnitude \(\theta\) and time threshold \(\eta\) in Section 5.4. The formulation is expressed as follows:

\[\label{eq:kt} w_t = \theta \cdot H(t-(1-\eta) T).\tag{5}\]
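Equation 5 translates directly into a small helper. The sketch below is illustrative; the function names are hypothetical, and taking \(H(0)=1\) is a convention assumed here so that guidance is active for \(t\geq(1-\eta)T\).

```python
def heaviside(x):
    """H(x) = 1 for x >= 0 and 0 otherwise (value at x == 0 is an assumed convention)."""
    return 1.0 if x >= 0 else 0.0

def guidance_scale(t, T, theta, eta):
    """Equation 5: w_t = theta * H(t - (1 - eta) * T)."""
    return theta * heaviside(t - (1.0 - eta) * T)

T = 1000
# With eta = 0.6, guidance is switched on only for the noisier 60% of the time range.
scales = [guidance_scale(t, T, theta=1.0, eta=0.6) for t in (0, 300, 500, 999)]
# With eta = 1, the scale is constant over the whole trajectory.
constant = [guidance_scale(t, T, theta=1.3, eta=1.0) for t in (0, 300, 500, 999)]
```

Since sampling runs from \(t=T\) down to \(t=0\), this schedule applies guidance during the early, coarse stages and disables it near the end, where the text reports that latent-space artifacts would otherwise appear.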

5 Experiments

The core concept behind upsample guidance lies in the SNR matching during the downsampling process. As a result, it can be extended to diverse data generation tasks, not confined to images alone. Moreover, its compatibility extends to any pre-trained model, conditional generation, and application techniques. In this section, we showcase the outcomes when applied to various image generation models and applications. Specifically, we explore spatial and temporal upsampling in video generation. Subsequently, we conduct an ablation study to evaluate scenarios where the adjustments proposed in Section 4 are not implemented. Lastly, a quantitative analysis of the guidance scale is performed to inform the design of \(w_t\).

5.1 Image Upsampling

As upsample guidance requires only a straightforward linear operation on the predicted noise, it exhibits compatibility with a wide array of models and applications. In our study, we used pre-trained unconditional models trained on the CIFAR-10 and CelebA-HQ \(256^2\) [31] datasets to generate images at twice the resolution using a constant \(w_t\). We also sampled using UG with \(m=2\) for text-to-image models based on Stable Diffusion v1-5, and checked its capability on a fine-tuned model, different aspect ratios, and image conditioning techniques.

Figure 5 presents images that are slightly cherry-picked to aid in understanding the impact of upsample guidance. Each image pair contains images generated from the same initial noise. For images with different resolutions, the initial noise was resized and its variance was adjusted accordingly. Upon carefully examining some samples from CIFAR-10, UG sometimes alters coarse contents (overall colors and shapes) between \(32^2\) and \(64^2\) resolutions, with details emerging at higher resolutions that were not present at lower ones. This suggests that UG does more than just interpolation or sharpening; it actually generates new meaningful features. For the comparison between low and high resolution in LDMs and more extensive non-cherry-picked samples, please refer to Section 9.

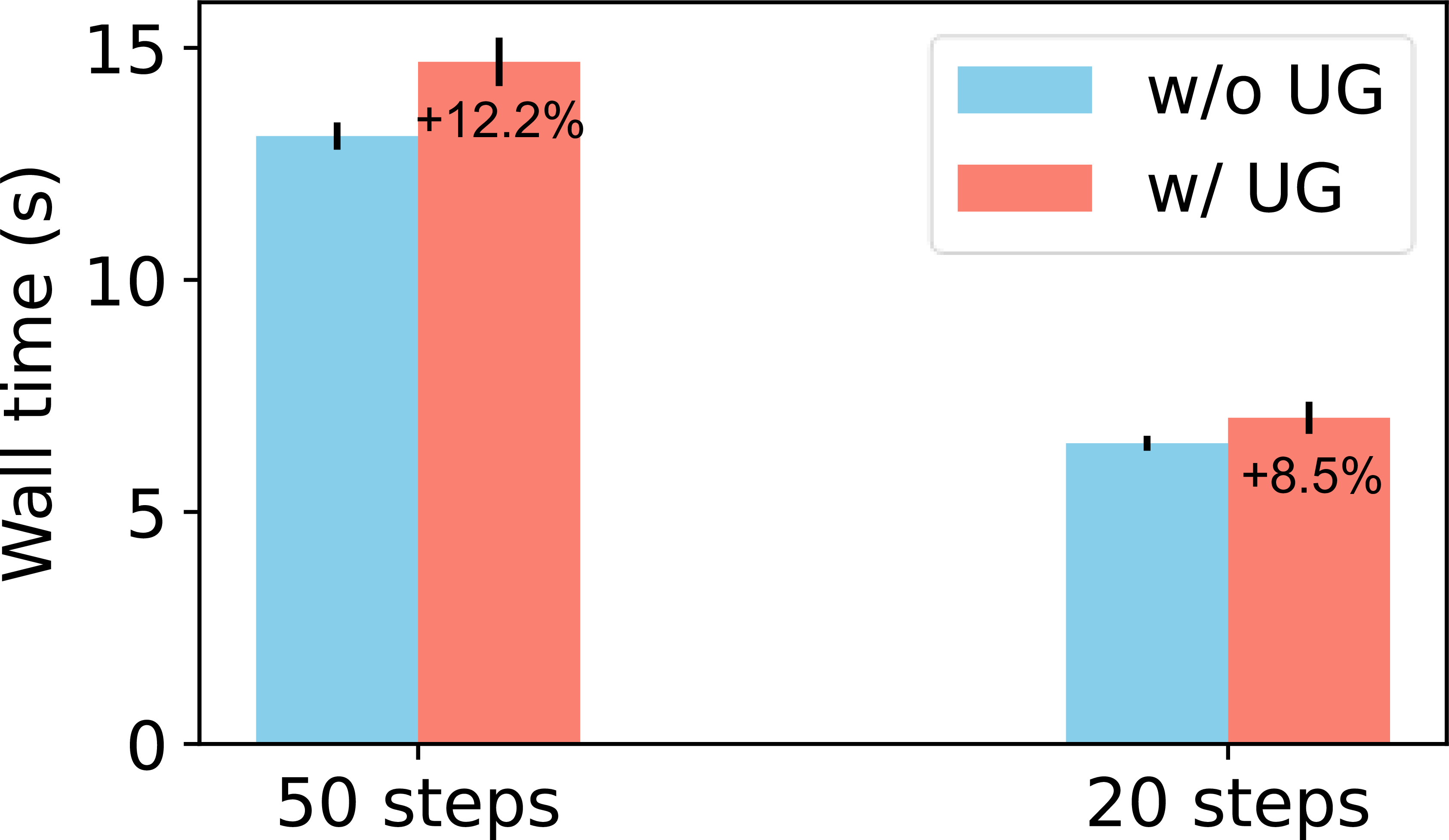

We roughly measured the additional computational time introduced by UG. In Stable Diffusion v1-5, using an RTX 3090 GPU at \(1024^2\) resolution with a scale factor of \(m=2\) and \(\eta=0.5\), we measured the wall time from sampling in the latent space to converting into an RGB image, as shown in Figure 6. The extra computation for UG is minimal, given that the dimension of the noise prediction \(\epsilon(\frac{1}{\sqrt P}\mathbf{D}[x_t], \tau)\) at the trained resolution is \(1/m^2\) times the dimension of \(\epsilon(x_t,t)\). Additionally, this supplementary computation is only applied when \(t\geq(1-\eta)T\). Therefore, the cost is less than \(\eta / m^2 = 1/8\) of the naive sampling time. However, in LDMs, decoding also takes time, so the share of the cost due to UG decreases as the number of sampling steps decreases. With the recent advancements in sampling methods [2], [32], [33] leading to a reduction in the number of inference steps, our method becomes more competitive, requiring only \(\leq10\%\) additional computation cost within 20 inference steps.
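The \(\eta/m^2\) estimate can be reproduced with a few lines of arithmetic. The sketch below is a back-of-the-envelope model rather than a measurement: it assumes the per-step cost of the noise predictor is proportional to the number of input elements and that sampling times are uniformly spaced, and the function names are hypothetical.

```python
def ug_step_fraction(num_steps, T, eta):
    """Fraction of uniformly spaced sampling times t with t >= (1 - eta) * T."""
    times = [T * (i + 0.5) / num_steps for i in range(num_steps)]
    active = sum(1 for t in times if t >= (1.0 - eta) * T)
    return active / num_steps

def ug_relative_cost(num_steps, T, eta, m):
    """Extra denoiser cost of UG relative to naive sampling, assuming cost ~ input size."""
    return ug_step_fraction(num_steps, T, eta) / m**2

fraction = ug_step_fraction(num_steps=20, T=1000, eta=0.5)
extra = ug_relative_cost(num_steps=20, T=1000, eta=0.5, m=2)
```

For the setting in the text (\(m=2\), \(\eta=0.5\)), half of the steps run the additional low-resolution pass at a quarter of the input size, giving the stated bound of \(1/8\) on the denoiser side; the measured overhead is lower still because decoding time is unaffected.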

Figure 6: Computational cost comparison for upsample guidance (UG). Wall time for computation is compared with and without the use of UG. The percentages on the bars indicate the proportion of additional time attributed to UG.

5.2 Video Upsampling

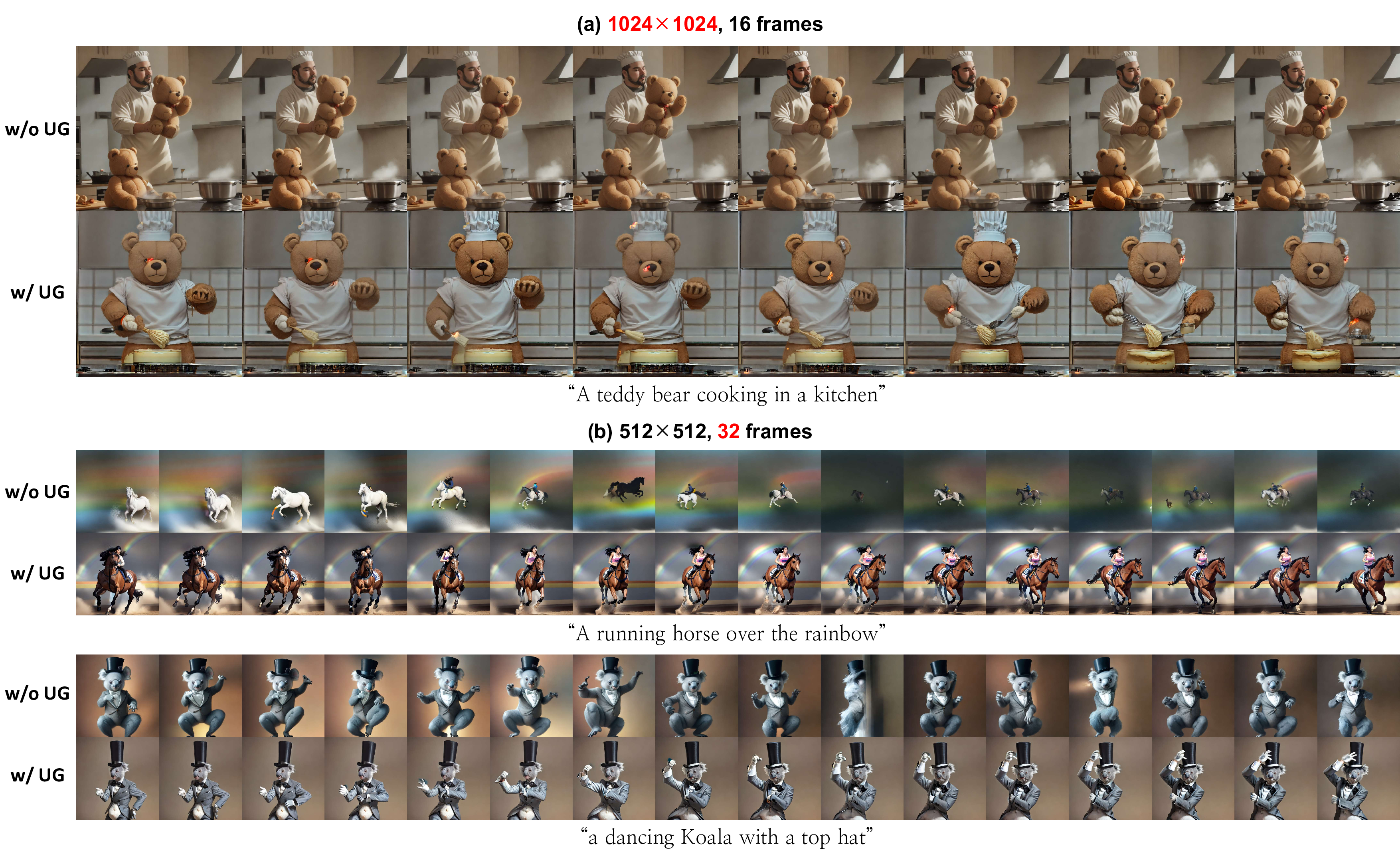

Figure 7: Spatial and temporal video upsampling. Frames of videos are generated using AnimateDiff with UG applied. (a) Spatial upsampling by a factor of 2, similar to images. (b) Temporal upsampling with the number of frames upsampled by a factor of 2. Note that for visibility, only odd-numbered frames from each sequence are displayed.

Upsample guidance can also enhance video upsampling by addressing both spatial and temporal resolution. To illustrate this, we employ AnimateDiff [9], a video generation model that integrates a motion module into a text-to-image model. In AnimateDiff, a video is represented as a sequence of color, time, width, and height in latent space, basically a tensor with the shape [C, T, W, H]. While we can upsample in the spatial dimensions [W, H] as above, it’s also possible to upsample in the temporal dimension T, increasing the number of frames by a factor of \(m\). Assuming UG gives robustness for temporal resolution, we expect an increase in frames per second rather than an extension of time length, similar to the case with images.
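For the temporal case, the operators \(\mathbf{D}\) and \(\mathbf{U}\) act along the frame axis instead of the spatial axes. The following sketch shows one way this could look on a `[C, T, W, H]` array; the function names, tensor sizes, and the choice of frame averaging are assumptions for illustration and are not taken from the AnimateDiff code base.

```python
import numpy as np

def downsample_time(x, m):
    """Average every m consecutive frames of a [C, T, W, H] array."""
    c, t, w, h = x.shape
    return x.reshape(c, t // m, m, w, h).mean(axis=2)

def upsample_time(x, m):
    """Nearest-neighbor upsampling along the frame axis T."""
    return x.repeat(m, axis=1)

rng = np.random.default_rng(0)
latent = rng.standard_normal((4, 32, 64, 64))  # 32 frames of standard Gaussian noise
low = downsample_time(latent, 2)               # 16 frames, matching the trained length
std_low = low.std()
```

One point to keep in mind with this sketch: averaging over \(m\) frames pools only \(m\) values rather than \(m\times m\), so the noise standard deviation drops to roughly \(1/\sqrt{m}\) here, and any SNR and power matching for the temporal axis would presumably need to account for that.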

Figure 7 shows the results of applying UG across these two dimensions. For spatial upsampling, issues like multiple subjects appearing and misalignment with text prompts were resolved thanks to UG, indicating that spatial UG works similarly in video generation as it does for images.

For temporal upsampling, we kept the spatial size constant and generated 32 frames, double the 16 frames AnimateDiff was trained on. Without UG, there was a complete failure in maintaining temporal consistency, and sometimes even adjacent frames lost continuity. However, with UG, the videos were overall consistent at a level similar to the trained temporal resolution, and greater continuity also appeared in the subject’s movements. This difference is more pronounced when viewing the videos in playback rather than as listed frames.

5.3 Ablation Study on Time and Power Adjustments

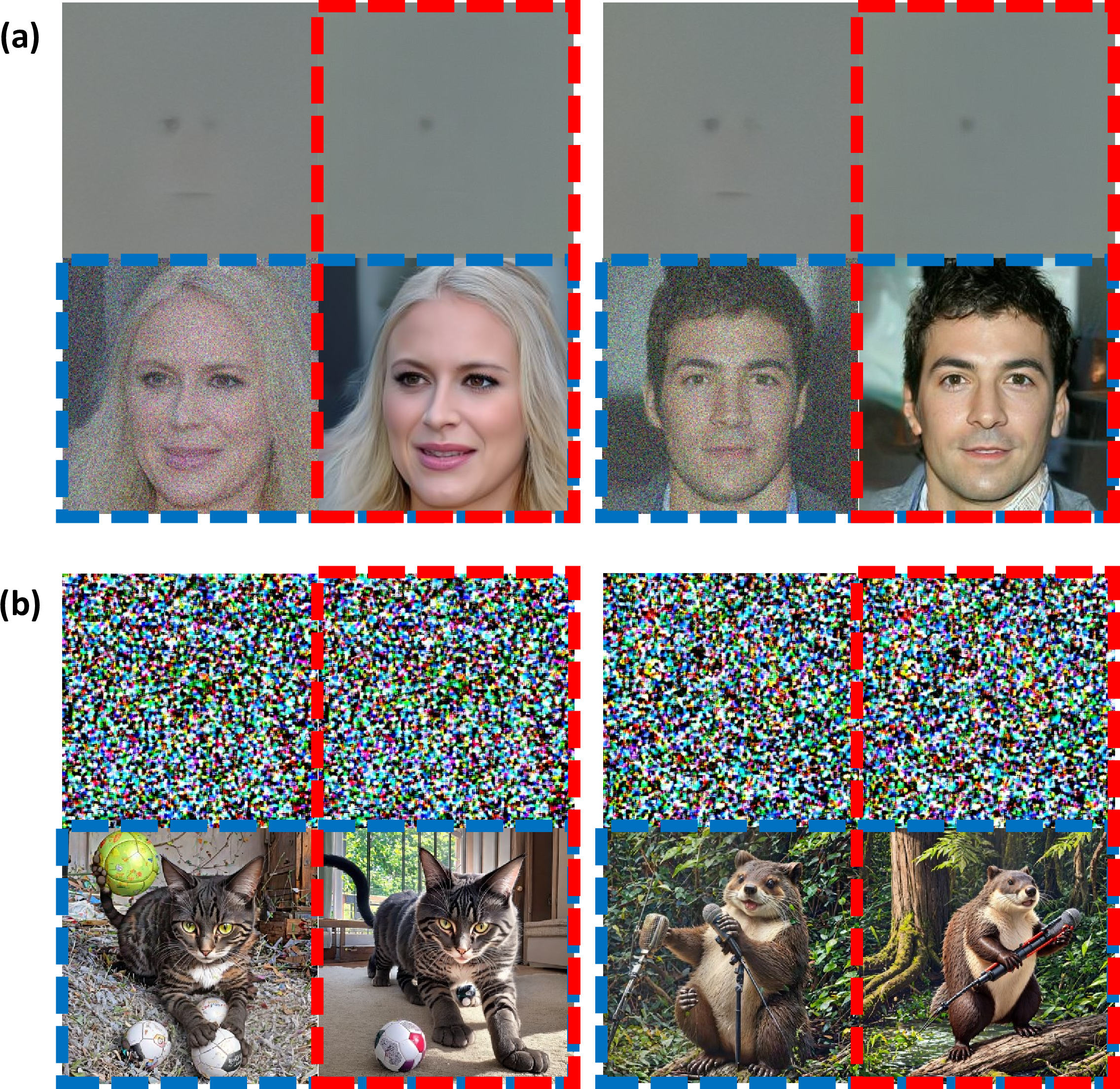

Figure 8: Effects of time and power adjustments in UG, indicated by red and blue dashed boxes, respectively. Images are generated from (a) the CelebA-HQ model and (b) the text-to-image model, with and without time adjustment, power adjustment, or both.

So far, we have seen that our method effectively suppresses artifacts that could occur at higher resolutions. However, some might question the necessity of the time and power adjustments presented in Equation 3. Therefore, we illustrate here that each adjustment is indeed essential, and how the images are ruined when either one or both adjustments are not made.

As illustrated in Figure 8, both adjustments are essential, and the absence of each leads to image degradation in different ways. Without \(\tau\) in Equation 3 (outside the red dashed boxes), the model fails in denoising due to the mismatch between the learned SNR at the time and the SNR of the input noised sample, resulting in residual noise. Without \(1/\sqrt P\) (outside the blue dashed boxes), the model confronts samples with variances it has never learned, resulting in complete failure. This suggests that the noise predictor \(\epsilon(x_t,t)\) is highly sensitive to time, variance, and SNR, making our method crucial.

5.4 Analysis on Guidance Scale

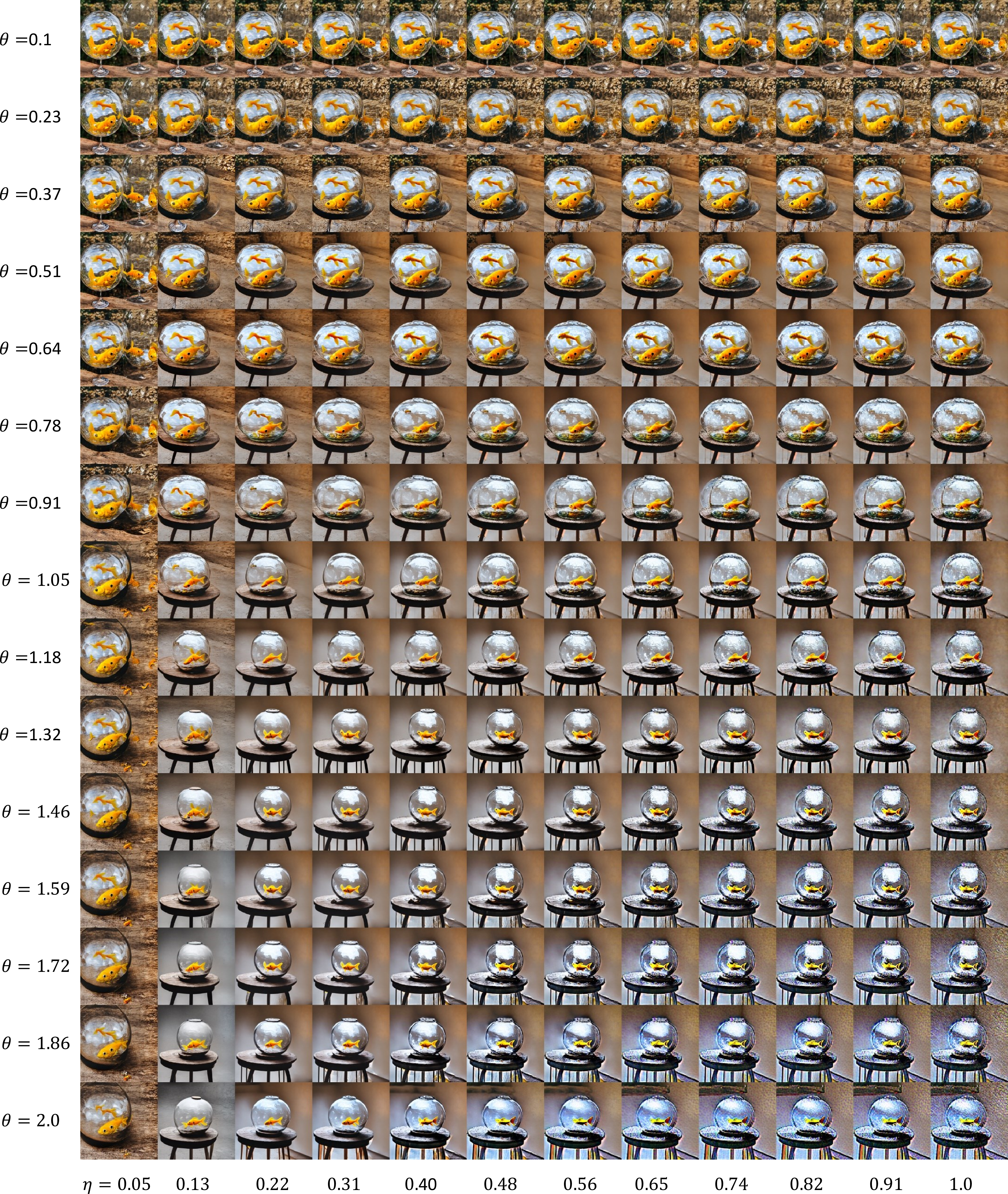

We observed that for diffusion models in pixel space, it is acceptable to keep the guidance scale constant, but for LDMs, the guidance scale needs to be reduced near \(t=0\) to eliminate artifacts as shown in Figure 4. To quantitatively analyze the impact of the guidance scale, we measured changes using a time-independent \(w_t\) for pixel-space models and the parameterization in Equation 5 for LDMs.

5.4.1 Sampling on Pixel Space

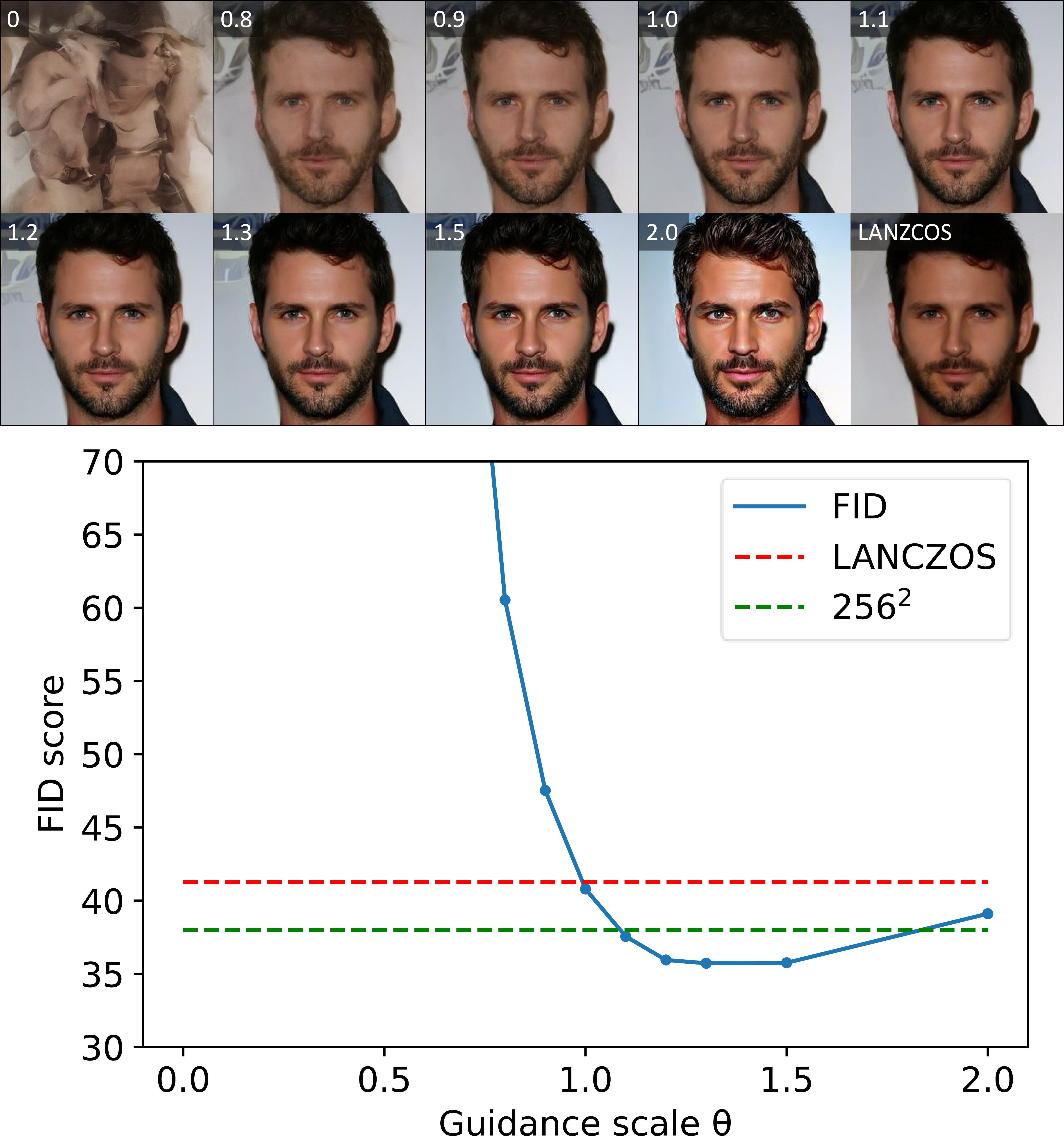

Figure 9: Fidelity of generated images across different guidance scales \(w_t\) (numbers in upper left corners) measured by the FID score (lower is better). The label “LANCZOS” refers to the images originally generated at a size of \(256^2\) by the model and then upsampled to \(512^2\) using Lanczos resampling. The green dashed line represents the FID between images generated by the model at \(256^2\) trained resolution and the CelebA-HQ \(256^2\) dataset.

We empirically found that for pixel-space diffusion models, keeping the guidance scale constant is effective. Thus, we recommend using a constant \(w_t\), with \(\eta=1\) and varying only \(\theta\) in Equation 5. After generating \(512^2\) resolution images from a model trained on CelebA-HQ \(256^2\) using UG, we measured the fidelity via Fréchet inception distance (FID) [34] to CelebA-HQ \(512^2\). Results showed that as the guidance scale increased, the features and contrast became clearer. Astonishingly, at the optimal point (\(\theta \approx 1.3\)), the model not only outperformed resized images from the trained resolution but also achieved a better FID than images generated at the originally trained size of \(256^2\), demonstrating that UG serves a role beyond simple interpolation or sharpening.

5.4.2 LDMs and Text-to-Image

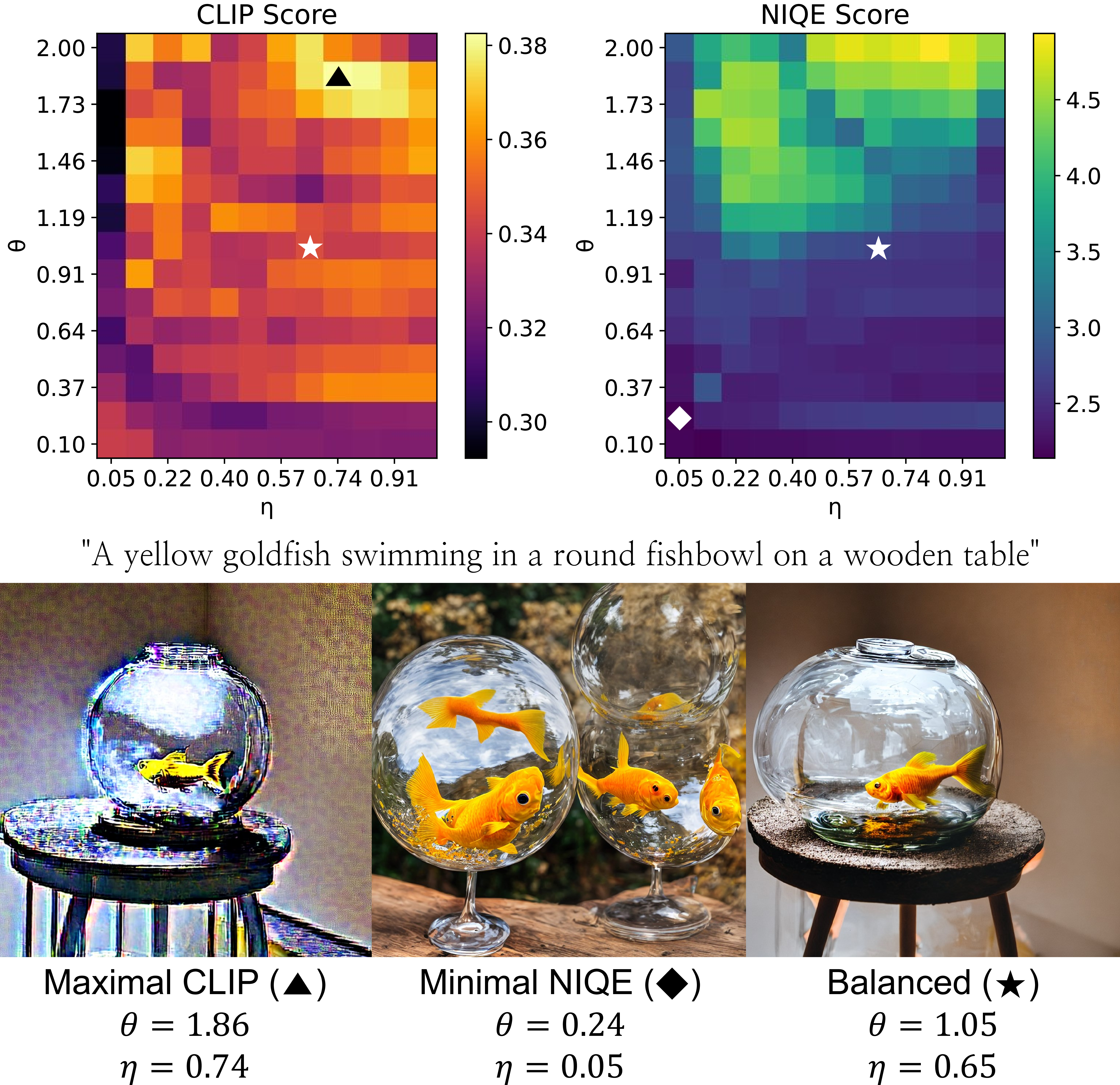

Figure 10: Impact of guidance scale with CLIP score and NIQE metric. Specific image examples at three parameter points are selected as Maximal CLIP (triangle), Minimal NIQE (diamond), and Balanced (star). More images are presented in Section 10.

For LDMs, it’s crucial to reduce the guidance scale to zero during the mid-stages of sampling to prevent artifacts as shown in Figure 4. However, if we tolerate the artifacts, the coarse structure of images can be better aligned with the text prompt. Therefore, choosing the guidance scale involves a trade-off between prompt alignment and image quality. To evaluate alignment and quality, we used CLIP score [35] and naturalness image quality evaluator (NIQE) [36], respectively.

As shown in Figure 10, the CLIP score tends to increase with stronger guidance, indicating better alignment with the prompt. Conversely, NIQE scores worsen with strong guidance. At the optimal point for CLIP, the image’s coarse features align well with the prompt but photorealism is lost. At NIQE’s optimal point, the image appears locally natural and realistic but deviates significantly from the prompt. This trend was consistent across samples and prompts. We heuristically recommend \((\theta, \eta)\approx (1, 0.6)\) as a balanced setting.

6 Conclusion

In conclusion, we introduced upsample guidance, a training-free technique enabling the generation of high-fidelity images at high resolutions not originally trained on, demonstrating its applicability across various models and applications. Our method, derived from the diffusion process and not dependent on architecture, holds synergistic potential with any other techniques for high-resolution image generation. Moreover, UG uniquely enables the creation of images for datasets like CIFAR-10 \(64^2\), where high-resolution data may not originally exist.

In our experiments, we used a simple design of the guidance scale for clarity, but there is room for improvement by replacing it with more elaborate functions. While we focused on spatial upsampling, further exploration into best practices for temporal upsampling in video and audio models is needed. For audio in particular, careful implementation is necessary, as temporal downsampling may shift pitch.

The computational cost of UG is marginal, and ongoing research aimed at reducing inference steps will further reduce the share of time consumed by UG in LDMs. We consider our method a universally beneficial add-on for generating high-resolution samples due to its ease of implementation and cost-effectiveness.

7 Impact Statements

This paper presents work whose goal is to advance the field of Machine Learning. There are many potential societal consequences of our work, none which we feel must be specifically highlighted here.

8 Calculation of Adjusted Time \(\tau\)

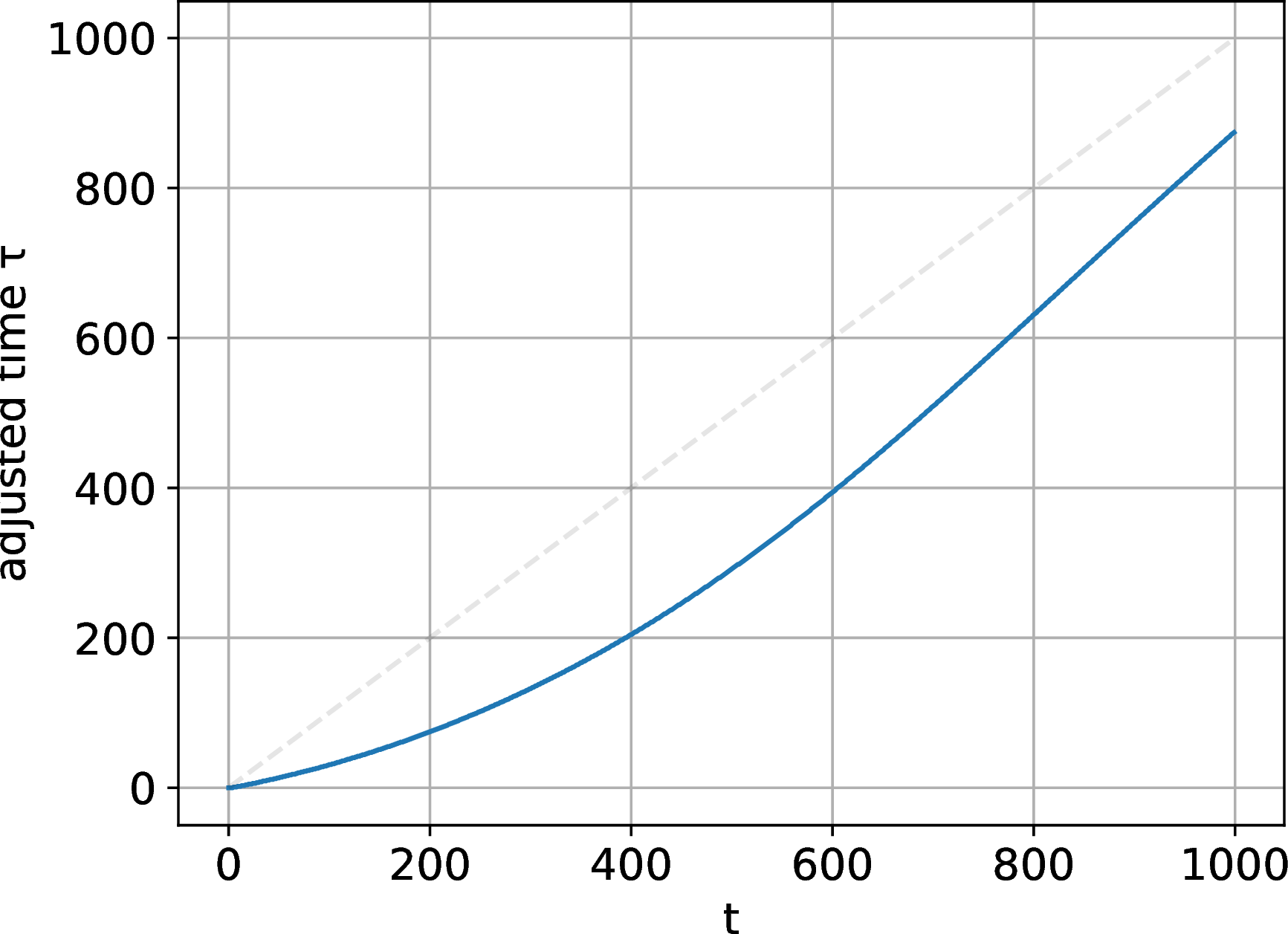

Time adjustment is for matching SNR, so \(\tau\) can be obtained analytically by inverting the SNR as a function of time. However, as most implementations encode time as an integer, it’s sufficient to numerically approximate values rather than compute exact ones. Below is Python code for numerically calculating the time adjustment for integer times, and Figure 11 visualizes results calculated from a real model’s \(\alpha_t\).

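```python
import numpy as np

def getTau(m, alphas):
    # m      : scale factor
    # alphas : sequence of alpha_t, indexed by integer time t
    alphas = np.asarray(alphas, dtype=float)
    snr = alphas / (1 - alphas)
    snr_low = snr * m**2  # SNR after averaging m x m pixels
    log_snr, log_snr_low = np.log(snr), np.log(snr_low)

    def getSingleMatch(t):
        # pick the time whose log-SNR is closest to the target log-SNR
        differences = np.abs(log_snr_low[t] - log_snr)
        return int(np.argmin(differences))

    return [getSingleMatch(t) for t in range(len(alphas))]
```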
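As a usage illustration, the same nearest-match search can be written in vectorized form and applied to a noise schedule. The schedule below (1000 linearly spaced betas with a cumulative product) is an assumption made purely for demonstration and is not claimed to be the Stable Diffusion schedule shown in Figure 11; the function name `match_tau` is likewise hypothetical.

```python
import numpy as np

def match_tau(m, alphas):
    """For each t, return the index tau minimizing |log(m^2 * SNR(t)) - log SNR(tau)|."""
    alphas = np.asarray(alphas, dtype=float)
    log_snr = np.log(alphas / (1.0 - alphas))
    target = log_snr + 2.0 * np.log(m)  # log of m^2 * SNR(t)
    # Pairwise distances between every target and every available log-SNR value.
    return np.abs(target[:, None] - log_snr[None, :]).argmin(axis=1)

# Assumed schedule for illustration only.
betas = np.linspace(1e-4, 2e-2, 1000)
alphas = np.cumprod(1.0 - betas)  # plays the role of alpha_t in Equation 1
tau = match_tau(2, alphas)
```

Because the SNR decreases with time and the target SNR is higher than the current one, the matched `tau` never exceeds `t`; with `m = 1` the mapping reduces to the identity.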

Figure 11: Time adjustment with scale factor \(m=2\) for the noise schedule of Stable Diffusion v1.5.

9 More Upsampling Examples

Figure 12: Images generated with and without UG, sampled at \(1280^2\) resolution with \(m=2\).

Figure 13: Comparison of samples generated at a resolution of \(512^2\) using different upscaling techniques, based on a model trained on the CelebA dataset at a resolution of \(256^2\). ‘Lanczos’ and ‘CodeFormer [37]’ refer to images upscaled from \(256^2\) using their respective methods, while ‘UG’ denotes samples generated with \(w_t=1.35\), optimally chosen based on the experiments described in Section 5.4.1.

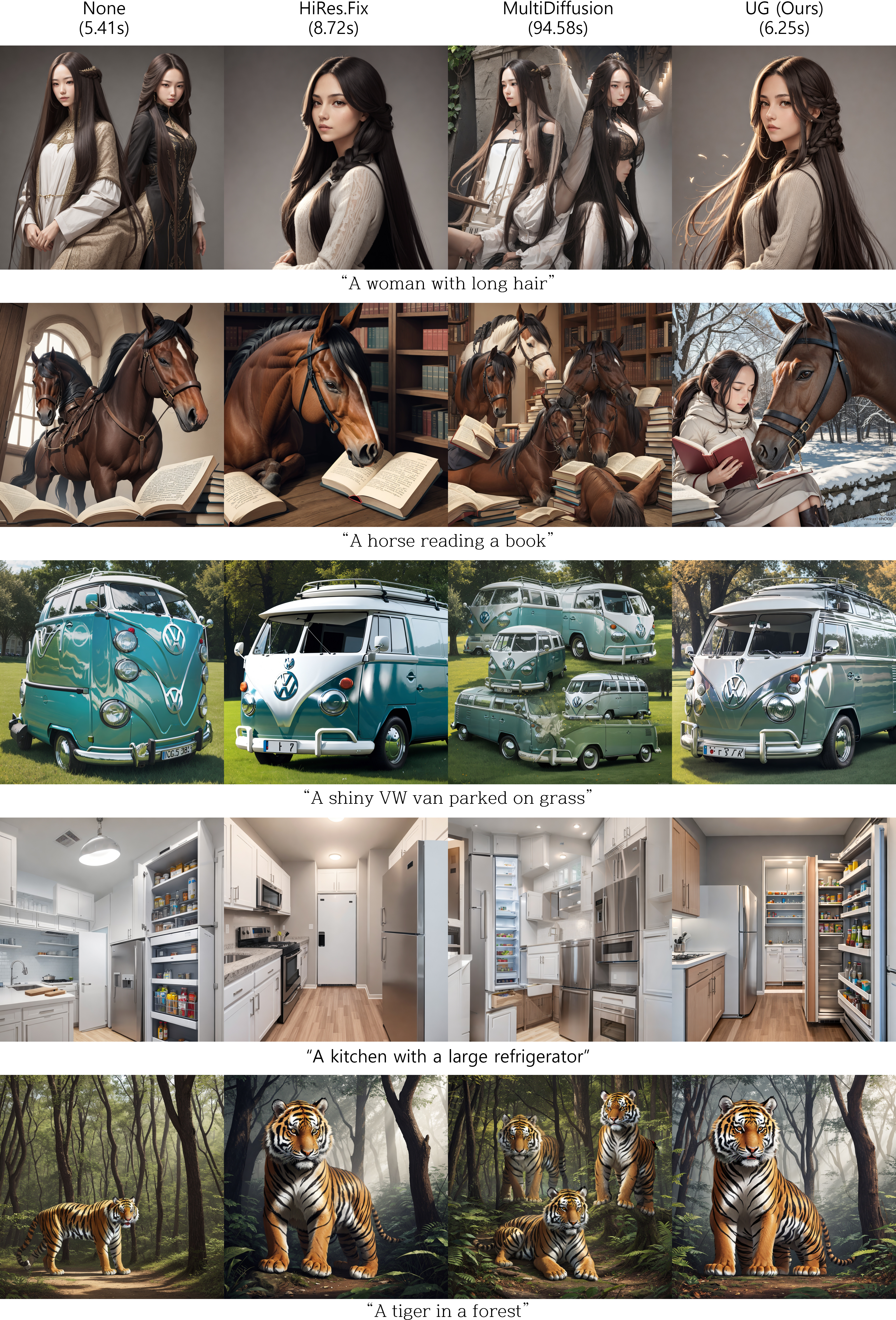

Figure 14: Samples generated at a resolution of \(1024^2\) using different upscaling methods applied to the same text-to-image model. ‘HiRes.Fix’ refers to the use of CodeFormer [37] as a super-resolution model, employing the method mentioned in Section 2.1. ‘MultiDiffusion [38]’ was used to generate samples via a panoramic approach. The numbers below each column title represent the average elapsed time to generate one sample on an RTX 3090 GPU. UG demonstrates not only competitive quality and fidelity but also relatively faster generation speeds.

10 Grid Images for Varying Guidance Scale

Figure 15: Full images obtained by varying the parameters involving the guidance scale in the experiments from Section 5.4.2.