Incentives in Private Collaborative Machine Learning

April 02, 2024

Abstract

Collaborative machine learning involves training models on data from multiple parties but must incentivize their participation. Existing data valuation methods fairly value and reward each party based on shared data or model parameters but neglect the privacy risks involved. To address this, we introduce differential privacy (DP) as an incentive. Each party can select its required DP guarantee and perturb its sufficient statistic (SS) accordingly. The mediator values the perturbed SS by the Bayesian surprise it elicits about the model parameters. As our valuation function enforces a privacy-valuation trade-off, parties are deterred from selecting excessive DP guarantees that reduce the utility of the grand coalition’s model. Finally, the mediator rewards each party with different posterior samples of the model parameters. Such rewards still satisfy existing incentives like fairness but additionally preserve DP and a high similarity to the grand coalition’s posterior. We empirically demonstrate the effectiveness and practicality of our approach on synthetic and real-world datasets.

1 Introduction↩︎

Collaborative machine learning (ML) seeks to build ML models of higher quality by training on more data owned by multiple parties [1], [2]. For example, a hospital can improve its prediction of disease progression by training on data collected from more and diversified patients from other hospitals [3]. Likewise, a real-estate firm can improve its prediction of demand and price by training on data from others [4]. However, parties have two main concerns that discourage data sharing and participation in collaborative ML: (a) whether they benefit from the collaboration and (b) privacy.

Concern (a) arises as each party would expect the significant cost that it incurs to collect and share data (e.g., the risk of losing its competitive edge) to be covered. Some existing works [1], [5], among other data valuation methods,1 have recognized that parties require incentives to collaborate, such as a guaranteed fair higher reward from contributing more valuable data than the others, an individually rational higher reward from collaboration than in solitude, and a higher total reward (i.e., group welfare) whenever possible. Often, parties share and are rewarded with information (e.g., gradients [7] or parameters [1] of parametric ML models) computed from the shared data. However, these incentive-aware reward schemes expose parties to privacy risks.

On the other hand, some federated learning (FL) works [8] have addressed the privacy concern (b) and satisfied strict data protection laws (e.g., European Union’s General Data Protection Regulation) by enforcing differential privacy (DP) [9], [10] during the collaboration. Each party injects noise before sharing information to ensure that its shared information would not significantly alter a knowledgeable collaborating party’s or mediator’s belief about whether a datum was input to the algorithm. Injecting more noise leads to a stronger DP guarantee. As raised in [11], adding DP can invalidate game-theoretic properties and hence affect participation. For example, in the next paragraph, we will see that adding DP may lead to the collaboration being perceived as unfair and a lower group welfare. However, to the best of our knowledge (and as discussed in Sec. 7 and Fig. 6), there are no works that address both concerns, i.e., ensure the fairness, individual rationality, and group welfare incentives (see Sec. 4), alongside privacy. Thus, we aim to fill in this gap and design an incentive-aware yet privacy-preserving reward scheme by addressing the following questions:

If a party (e.g., hospital) requires a stronger DP guarantee, what should the impact be on its valuation and reward? Our answer is that, on average, its valuation and reward should decrease. Intuitively, it is unfair when this party gets a higher valuation due to randomness in the DP noise. More importantly, parties require guaranteed higher rewards to consider a weaker privacy guarantee [11], [12] which will help maximize the utility of the collaboratively trained model(s). As observed in [13], [14], the weaker the DP guarantee, the smaller the loss in model accuracy from enforcing DP. Thus, we will (i) assign a value to each party to enforce a privacy-valuation trade-off and incentivize parties against unfetteredly selecting an excessively strong DP guarantee,2 and (ii) flexibly allow each party to enforce a different DP guarantee without imposing a party’s need for strong DP on others. This new perspective and its realization is our main contribution.

To enforce a privacy-valuation trade-off, how should DP be ensured and a party’s data be valued (Sec. 3)? Initially, valuation using validation accuracy seems promising as the works of [16], [17] have empirically shown that adding noise will decrease the valuation. However, parties may be reluctant to contribute validation data due to privacy concerns and disagree on the validation set as they prioritize accurate predictions on different inputs (e.g., patient demographics). So, we revert to valuing parties based on the quality of inference of the model parameters under DP. Bayesian inference is a natural choice as it quantifies the impact of (additional DP) noise. In Sec. 2, we will explain how each party ensures DP by only sharing perturbed sufficient statistic (SS) with the mediator. The mediator values the perturbed SS by the surprise it elicits relative to the prior belief of model parameters. Intuitively, noisier perturbed SS is less valuable as the posterior belief of the model parameters will be more diffuse and similar to the prior. As parties prioritize obtaining a model for future predictions and may face legal/decision difficulties in implementing monetary payments, we reward each party with posterior samples of the model parameters (in short, model reward) instead.

How should the reward scheme be designed to satisfy the aforementioned privacy, individual rationality, and fairness incentives (Sec. 4)? Our scheme will naturally satisfy the privacy incentive as any post-processing of the perturbed SS will preserve DP. To satisfy fairness and individual rationality, we set the target reward value for every party using \(\rho\)-Shapley value [1]. Lastly, to realize these target reward values, how should the model reward be generated for each party (Sec. 5)? Instead of rewarding all parties with samples from the same (grand coalition’s) posterior of the model parameters given all their perturbed SS (which would be unfair if their valuations differ), our reward control mechanism generates a different posterior for each party that still preserves a high similarity to the grand coalition’s posterior. Concretely, the mediator scales the SS by a factor between \(0\) and \(1\) before sampling to control the impact of data on the posterior (by tempering the data likelihood). Scaling the SS by a factor of \(0\), \(1\), and between \(0\) and \(1\) yield the prior, posterior, and their interpolation, respectively. We then solve for the factor to achieve the target reward value.

By answering the above questions, our work here provides the following novel contributions3:

\(\bullet\)

A new privacy-valuation trade-off criterion for valuation functions that is provably satisfied by the combination of our Bayesian surprise valuation function with DP noise-aware inference (Sec. 3);

New incentives including DP (while deterring excessive DP) and similarity to grand coalition’s model (Sec. 4);

Reward control mechanisms (Sec. 5) to generate posterior samples of the model parameters for each party that achieve a target reward value and the aforementioned incentives; one such mechanism tempers the likelihood of the data by scaling the SS and data quantity.

2 Collaborative ML Problem with Privacy Incentive↩︎

Our private collaborative ML problem setup comprises a mediator coordinating information sharing, valuation, and reward, and \(n\) parties performing a common ML task (e.g., predicting disease progression). Let the set \(N \triangleq \{1,\ldots,n\}\) denote the grand coalition of \(n\) parties. Each party \(i\) owns a private dataset \({\mathcal{D}}_i\) which cannot be directly shared with others, including the mediator. What alternative information should each party provide to the mediator for collaborative training of an ML model?

To ease aggregation, this work focuses only on Bayesian models with sufficient statistic (SS), such as exponential family models [18], Bayesian linear regression [19], and generalized linear models, including Bayesian logistic regression [20] (with approximate SS).

Definition 1 (Sufficient Statistic (SS) [21], [22]). The statistic \({\boldsymbol{s}}_i\) is a SS for the dataset \({\mathcal{D}}_i\) if the model parameters \(\theta\) and dataset \({\mathcal{D}}_i\) are conditionally independent given \({\boldsymbol{s}}_i\), i.e., \({p(\theta | {\boldsymbol{s}}_i, {\mathcal{D}}_i) = p(\theta | {\boldsymbol{s}}_i)}\).

We propose that each party \(i\) shares its SS \({\boldsymbol{s}}_i\) for and in place of its dataset \({\mathcal{D}}_i\) to protect the privacy of \({\mathcal{D}}_i\). We assume that the parties have agreed to adopt a common Bayesian model with the same prior \(p(\theta)\) of model parameters \(\theta\), and each party \(i\)’s dataset \({\mathcal{D}}_i\) is independently drawn from the likelihood \(p({\mathcal{D}}_i | \theta)\) that is conjugate to the prior \(p(\theta)\) (i.e., belonging to an exponential family). The mediator can compute the posterior belief \(p(\theta | \{{\mathcal{D}}_i\}_{i \in N})\) of model parameters \(\theta\) given the grand coalition \(N\)’s datasets using a function \(f_\theta\) of the sum over shared SS: \(p(\theta | \{{\mathcal{D}}_i\}_{i \in N}) \propto p(\theta)\;f_\theta(\sum_{i \in N} {\boldsymbol{s}}_i)\). We give a concrete example and the mathematical details of SS in Apps. 9.1 and 13, respectively.

Privacy Incentive. However, sharing the exact SS \({\boldsymbol{s}}_N \triangleq \{{\boldsymbol{s}}_i\}_{i \in N}\) will not ensure privacy as the mediator can draw inferences about individual datum in the private datasets \({\mathcal{D}}_N \triangleq \{{\mathcal{D}}_i\}_{i \in N}\). To mitigate the privacy risk, each party \(i\) should choose its required privacy level \(\epsilon_i\) and enforce \((\lambda, \epsilon_i)\)-Rényi differential privacy.4 In Def. 2, a smaller \(\epsilon_i\) corresponds to a stronger DP guarantee.4

Definition 2 (Rényi Differential Privacy (DP) [23]). A randomized algorithm \(\mathcal{R}: {\mathcal{D}}\rightarrow {\boldsymbol{o}}\) is \((\lambda, \epsilon)\)-Rényi differentially private if for all neighboring datasets \({\mathcal{D}}\) and \({\mathcal{D}}'\), the Rényi divergence4 of order4 \(\lambda>1\) is \(D_\lambda(\mathcal{R}({\mathcal{D}})\;||\;\mathcal{R}({\mathcal{D}}')) \leq \epsilon\).

Party \(i\) can enforce (example-level)4 \((\lambda, \epsilon_i)\)-Rényi DP by applying the Gaussian mechanism: It generates perturbed SS \({\boldsymbol{o}}_i \triangleq {\boldsymbol{s}}_i + {\boldsymbol{z}}_i\) by sampling a Gaussian noise vector \({\boldsymbol{z}}_i\) from the distribution \(p(Z_i)=\mathcal{N}(\boldsymbol{0},\;0.5\;({\lambda}/\epsilon_i)\; \Delta_2^2(g)\;\boldsymbol{I})\) where \(\Delta_2^2(g)\) is the squared \(\ell_2\)-sensitivity4 of the function \(g\) that maps the dataset \({\mathcal{D}}_i\) to the SS \({\boldsymbol{s}}_i\). We choose Rényi DP over the commonly used \((\epsilon, \delta)\)-DP as it gives a stronger privacy definition and allows a more convenient composition of the Gaussian mechanisms [23], as explained in App. 9.2.

Each party \(i\) will share (i) the number \(c_i \triangleq |{\mathcal{D}}_i|\) of data points in its dataset \({\mathcal{D}}_i\), (ii) its perturbed SS \({\boldsymbol{o}}_i\),5 and (iii) its Gaussian distribution \(p(Z_i)\) with the mediator. As DP algorithms are robust to post-processing, the mediator’s subsequent operations of \({\boldsymbol{o}}_i\) (with no further access to the dataset) will preserve the same DP guarantees. The mediator uses such information to quantify the impact of the DP noise and compute the DP noise-aware posterior6 \(p(\theta | \{{\boldsymbol{o}}_i\}_{i \in N})\) via Markov Chain Monte Carlo (MCMC) sampling steps outlined by [24]–[26].

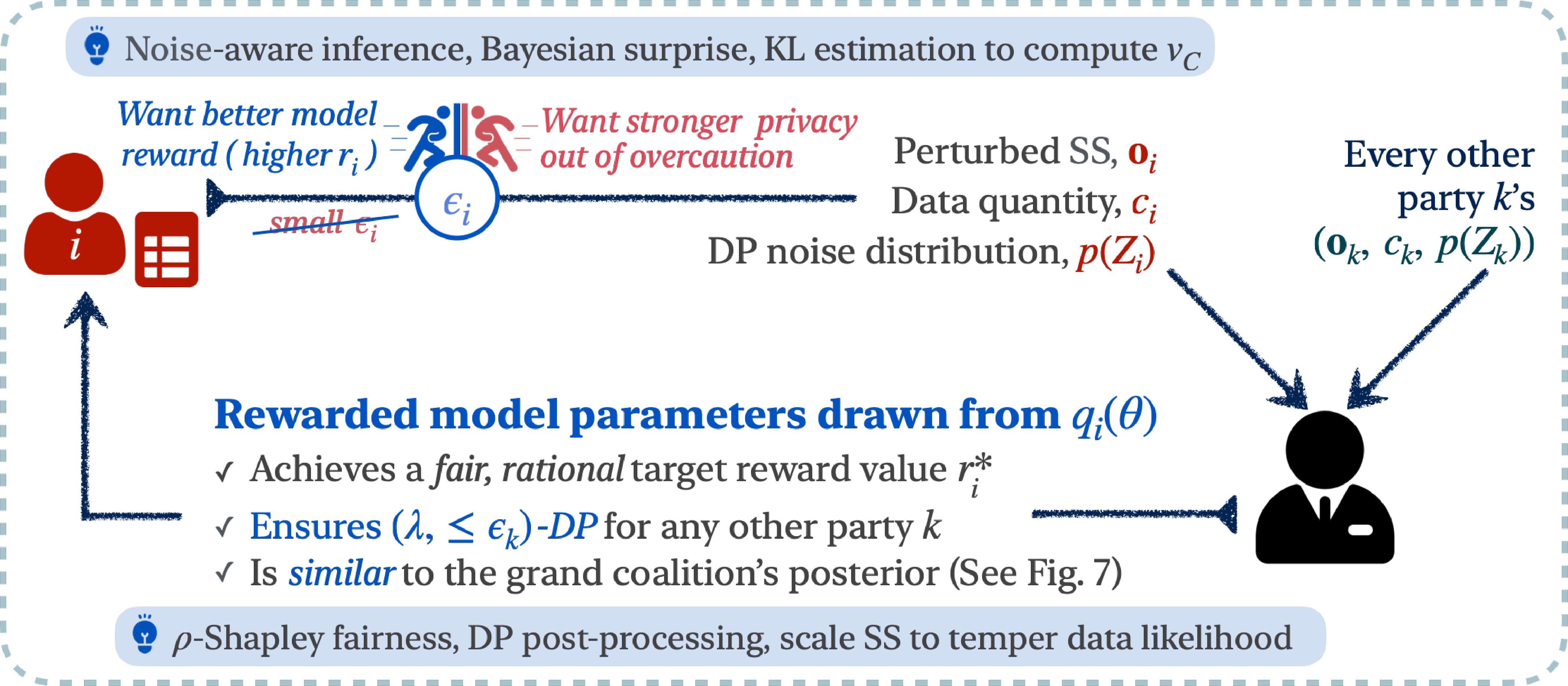

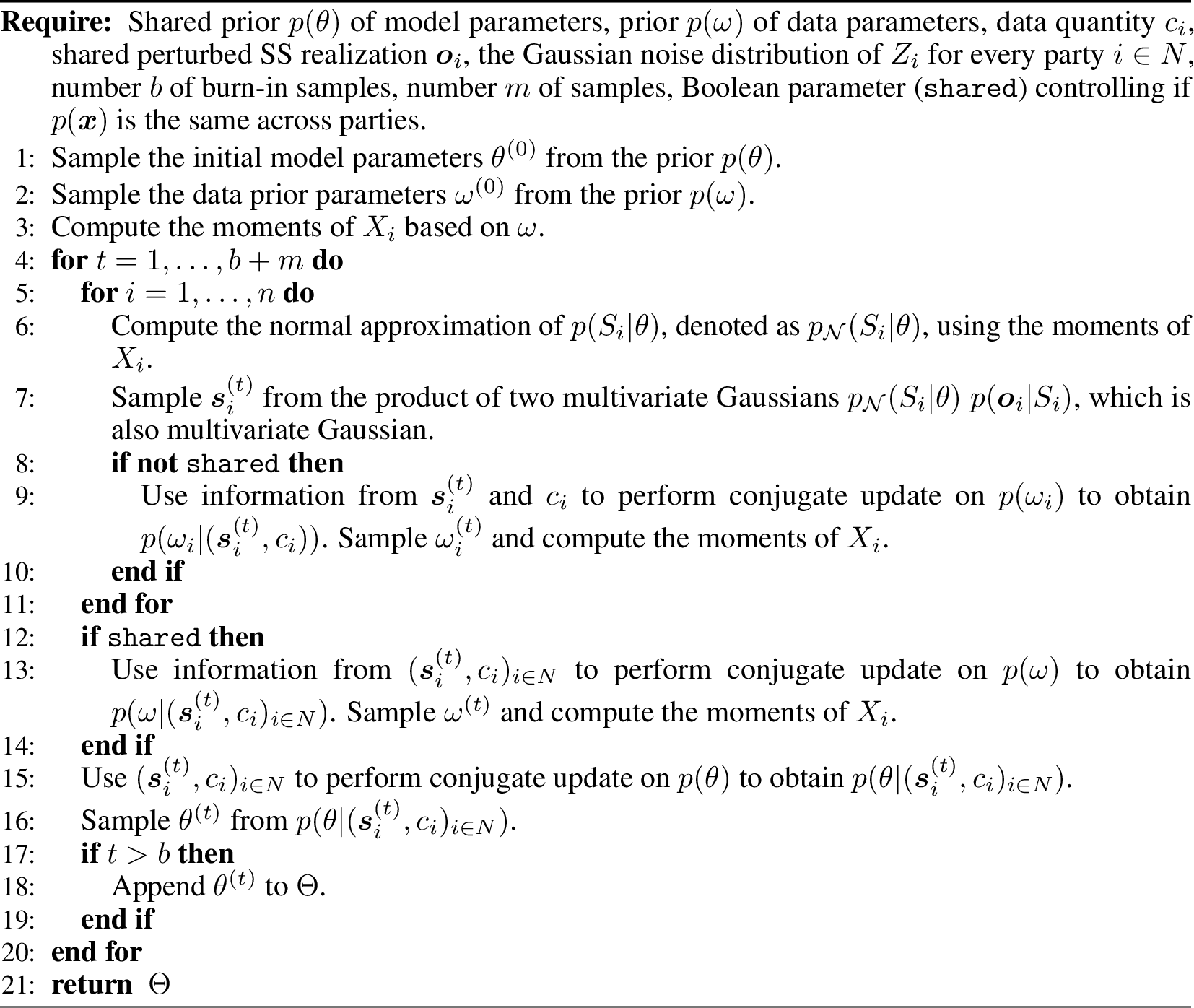

In this section, we have satisfied the privacy incentive. In Sec. 3, we assign a value \(v_C\) to each coalition \(C \subseteq N\)’s perturbed SS \({\boldsymbol{o}}_C \triangleq \{{\boldsymbol{o}}_i\}_{i \in C}\) that would decrease, on average, as the DP guarantee strengthens. In Secs. 4 and 5, we outline our reward scheme: Each party \(i\) will be rewarded with model parameters sampled from \(q_i(\theta)\) (in short, model reward) for future predictions with an appropriate reward value \(r_i\) (decided based on \((v_C)_{C \subseteq N}\)) to satisfy collaborative ML incentives (e.g., individual rationality, fairness). Our work’s main contributions, notations, and setup are detailed in Fig. 1. The main steps involved are detailed in Algo. [alg-overview].

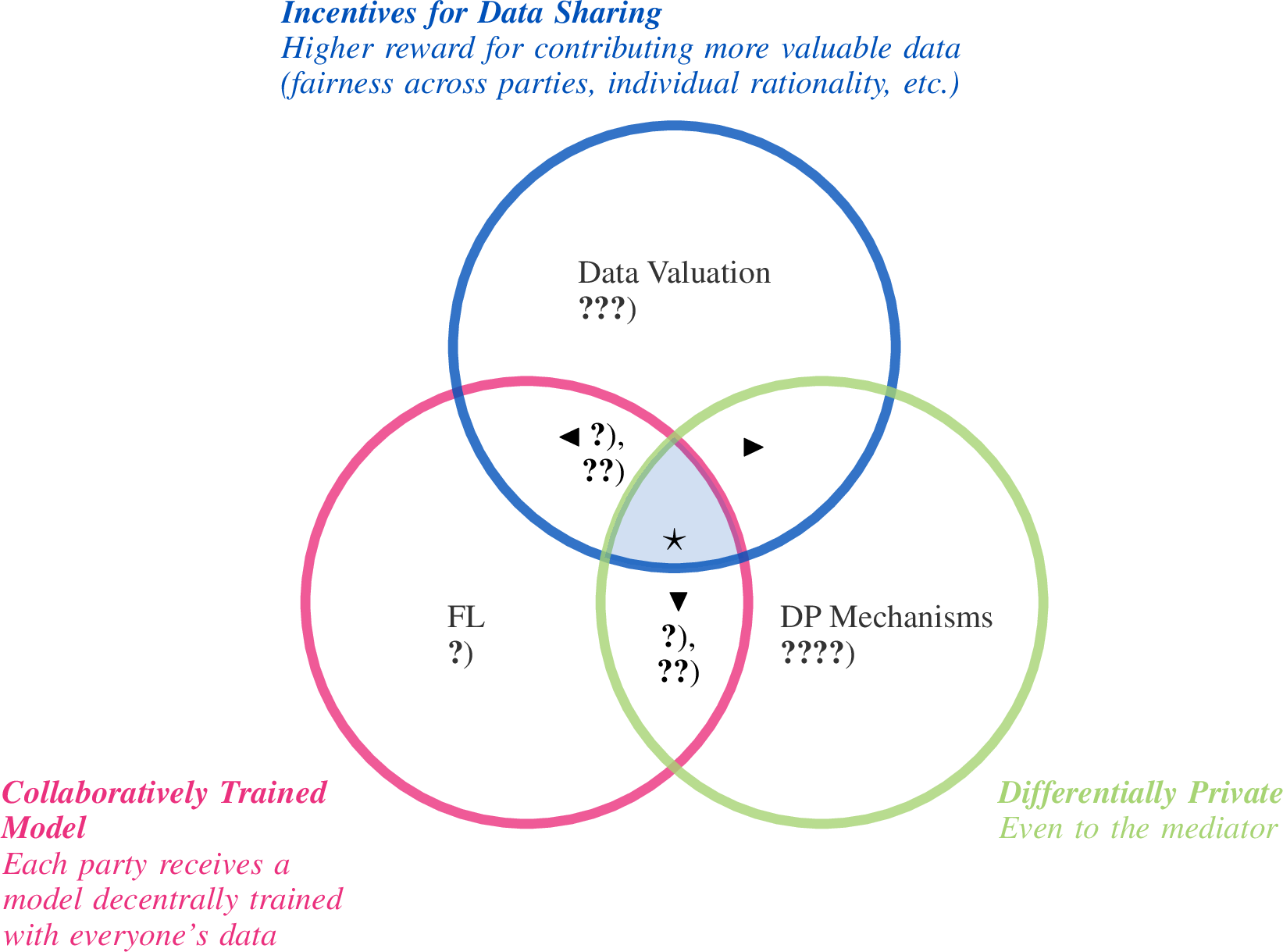

Figure 1: An overview of our private collaborative ML problem setup from party \(i\)’s perspective and our novel contributions (ideas in blue, novel combination of solutions in blue). We (i) enforce a privacy-reward trade-off (using each party \(i\)’s desire for a higher-quality model reward in collaborative ML) to deter party \(i\) from unfetteredly/overcautiously selecting an excessive DP guarantee (small \({\epsilon}_i\)), (ii) ensure DP in valuation and rewards, and (iii) preserve similarity of its model reward \(q_i(\theta)\) to the grand coalition \(N\)’s posterior \(p(\theta|{\boldsymbol{o}}_N)\) to achieve a high utility.

3 Valuation of Perturbed Sufficient Statistics↩︎

The perturbed SS \({\boldsymbol{o}}_C\) of coalition \(C\) is more valuable and assigned a higher value \(v_C\) if it yields a model (in our work here, the DP noise-aware posterior \(p(\theta | {\boldsymbol{o}}_C)\)) of higher quality. Most data valuation methods [16], [27], [28] measure the quality of an ML model by its performance on a validation set. However, it may be challenging for collaborating parties (e.g., competing healthcare firms) to create and agree on a large, representative validation set as they may prioritize accurate predictions on different inputs (e.g., patient demographics) [1]. The challenge increases when each firm requires privacy and avoids data sharing. Other valuation methods [1], [29] have directly used the private inputs of the data (e.g., design matrix). Here, we propose to value the perturbed SS \({\boldsymbol{o}}_C\) of coalition \(C\) based on the surprise [30] that it elicits from the prior belief of model parameters, as defined below:

Definition 3 (Valuation via Bayesian Surprise). The value of coalition \(C\) or its surprise \(v_C\) is the KL divergence \(D_{\mathrm{KL}}\left(p(\theta|{\boldsymbol{o}}_C); p(\theta)\right)\) between posterior \(p(\theta|{\boldsymbol{o}}_C)\) vs. prior \(p(\theta)\).

From Def. 3, a greater surprise would mean that more bits will be needed to encode the information in \(p(\theta|{\boldsymbol{o}}_C)\) given that others already know \(p(\theta)\). Otherwise, a smaller surprise means our prior belief has not been updated significantly. Moreover, as the valuation depends on the observed \({\boldsymbol{o}}_C\), the surprise elicited by the exact SS and data points will indirectly influence the valuation. Next, by exploiting the equality of the expected Bayesian surprise and the information gain on model parameters \(\theta\) given perturbed SS \({\boldsymbol{o}}_C\) (i.e., \(\mathbb{E}_{{\boldsymbol{o}}_C}[v_C] = \mathbb{I}\left(\theta; {\boldsymbol{o}}_C\right)\)), we can establish the following essential properties of our valuation function:

Non-negativity. \(\forall C\subseteq N \;\forall {\boldsymbol{o}}_C\;\;v_C \geq 0\;.\) This is due to the non-negativity of KL divergence.

Party monotonicity. In expectation w.r.t. \({\boldsymbol{o}}_C\), adding a party will not decrease the valuation: \(\forall C\subseteq C'\subseteq N\;\;\mathbb{E}_{{\boldsymbol{o}}_{C'}} [v_{C'}] \geq \mathbb{E}_{{\boldsymbol{o}}_C} [v_C]\;.\) The proof (App. 11.1) uses the “information never hurts” property.

Privacy-valuation trade-off. When the DP guarantee is strengthened from \(\epsilon_i\) to a smaller \(\epsilon_i^s\) and independent Gaussian noise is added to \({\boldsymbol{o}}_i\) to generate \({\boldsymbol{o}}^s_i\), in expectation, the value of any coalition \(C\) containing \(i\) will strictly decrease: Let \(v_C^s\) denote the value of coalition \(C\) with the random variable and realization of \({\boldsymbol{o}}_i\) replaced by \({\boldsymbol{o}}^s_i\). Then, \({(i \in C)} \land (\epsilon^s_i < \epsilon_i)\;\Rightarrow \mathbb{E}_{{\boldsymbol{o}}_C} [v_C] > \mathbb{E}_{{\boldsymbol{o}}_C^s} [v_C^s]\;.\)

The proof of [V3] (App. 11.1) uses the data processing inequality of information gain and the conditional independence between \(\theta\) and \({\boldsymbol{o}}_i^s\) given \({\boldsymbol{o}}_i\). Together, these properties address an important question of how to ensure DP and value a party’s data to enforce a privacy-valuation trade-off (Sec. 1). Additionally, in App. 11.2, we prove that in expectation, our Bayesian surprise valuation is equivalent to the alternative valuation that measures the similarity of \(p(\theta|{\boldsymbol{o}}_C)\) to the grand coalition \(N\)’s DP noise-aware posterior \(p(\theta|{\boldsymbol{o}}_N)\).

Implementation. Computing the Bayesian surprise valuation is intractable since the DP noise-aware posterior \(p(\theta | {\boldsymbol{o}}_C)\) and its KL divergence from \(p(\theta)\) do not have a closed-form expression. Nonetheless, there exist approximate inference methods like the Markov chain Monte Carlo (MCMC) sampling to estimate \(p(\theta | {\boldsymbol{o}}_C)\) efficiently, as discussed in App. 9.3. As our valuation function requires estimating the value of multiple coalitions and the posterior sampling step is costly, we prefer estimators with a low time complexity and a reasonable accuracy for a moderate number \(m\) of samples. We recommend KL estimation to be performed using the nearest-neighbors method [31], and repeated and averaged to reduce the variance of the estimate (see App. 11.3 for a discussion). The nearest-neighbor KL estimator is also asymptotically unbiased; drawing more samples would reduce the bias and variance of our estimates and is more likely to ensure fairness — for example, party \(i\)’s sampled valuation is only larger than \(j\)’s if \(i\)’s true valuation is higher.

Remark. Our valuation is based on the submitted information \(\{c_i, {\boldsymbol{o}}_i, p(Z_i)\}_{i \in N}\) without verifying or incentivizing their truthfulness. We discuss how this limitation is shared by existing works and can be overcome by legal contracts and trusted data-sharing platforms in App. 17.

4 Reward Scheme for Ensuring Incentives↩︎

After valuation, the mediator should reward each party \(i\) with a model reward (i.e., consisting of samples from \(q_i(\theta)\)) for future predictions. Concretely, \(q_i(\theta)\) is a belief of model parameters \(\theta\) after learning from the perturbed SS \({\boldsymbol{o}}_N\). As in Sec. 3, we value party \(i\)’s model reward as the KL divergence from the prior: \(r_i \triangleq D_{\mathrm{KL}}\left(q_i(\theta); p(\theta)\right)\). The mediator will first decide the target reward value \(r^*_i\) for every party \(i \in N\) using \(\{v_C\}_{C \subseteq N}\) to satisfy incentives such as fairness. The mediator will then control and generate a different \(q_i(\theta)\) for every party \(i\in N\) such that \(r_i = r^*_i\) using reward control mechanisms from Sec. 5. We will now outline the incentives and desiderata for model reward \(q_i(\theta)\) and reward values \(r_i\) and \(r^*_i\) for every party \(i \in N\)when the grand coalition forms7.

DP-Feasibility. In party \(i\)’s reward, any other party \(k\) is still guaranteed at least its original \((\lambda,{\epsilon}_k)\)-DP guarantee or stronger. The implication is that the generation of party \(i\)’s reward should not require more private information (e.g., SS) from party \(k\).

Efficiency. There is a party \(i \in N\) whose model reward is the grand coalition \(N\)’s posterior, i.e., \(q_i(\theta) = p({\theta | {\boldsymbol{o}}_N})\). It follows that \(r_i = v_N\;.\)

Fairness. The target reward values \((r^*_i)_{i \in N}\) must consider the coalition values \(\{v_C\}_{C \subseteq N}\) and satisfy properties [FAIR1] to [FAIR4] given in [1] and reproduced in App. 12.2. The monotonicity axiom [FAIR4] ensures using a valuation function which enforces that a privacy-valuation trade-off will translate to a privacy-reward trade-off and deter parties from selecting excessive DP guarantees.

Individual Rationality. Each party should receive a model reward that is more valuable than the model trained on its perturbed SS alone: \({\forall i \in N}\;\;r^*_i \geq v_i\;.\)

Similarity to Grand Coalition’s Model. Among multiple model rewards \(q_i(\theta)\) whose value \(r_i\) equates the target reward \(r^*_i\), we secondarily prefer one with a higher similarity \(r'_i = -D_{\mathrm{KL}}\left(p(\theta | {\boldsymbol{o}}_N);q_i(\theta)\right)\) to \(p(\theta | {\boldsymbol{o}}_N)\).8

Group Welfare. The reward scheme should maximize the total reward value \(\sum^n_{i=1} r_i\) to increase the utility of model reward for each party and achieve the aims of collaborative ML.

Choice of desiderata. We adopt the desiderata from [1] but make [dp-feasibility] and [efficiency] more specific (by considering each party’s actual reward \(q_i(\theta)\) over just its values \(r_i\) and \(v_N\)) and introduce [similarity]. Firstly, for our Bayesian surprise valuation function, the feasibility constraint of [1] is inappropriate as removing a party or adding some noise realization may result in \(r_i > v_N\),9 so we propose [dp-feasibility] instead. Next, we recognize that party \(i\) is not indifferent to all model rewards \(q_i(\theta)\) with the same target reward value as they may have different utility (predictive performance). Thus, we propose our more specific [efficiency] and a secondary desideratum [similarity]. As [similarity] is considered after other desiderata, it does not conflict with existing results, e.g., design for \((r^*_i)_{i \in N}\) to satisfy other incentives.

Remark on Rationality. In [rational], a party’s model reward is compared to the model trained its perturbed SS instead of its exact SS alone. This is because the mediator cannot access (and value the model trained on) the private exact SS. Moreover, with no restrictions on the maximum DP noise, the value of some party’s exact SS may exceed the grand coalition’s perturbed SS when parties require strong DP guarantees. [rational] is sufficient when parties require DP when alone to protect data from curious users of their ML model [9], [24], [26]. For example, a hospital may not want doctors to infer specific patients’ data. When parties do not require DP when alone, our reward scheme cannot theoretically ensure that the model reward from collaboration is better than using the exact SS. We further discuss this limitation in App. 12.3.

Design of \((r^*_i)_{i \in N}\). To satisfy the desiderata from [1] (including our fairness [fairness] and rationality [rational] incentives), we adopt their \(\rho\)-Shapley fair reward scheme with \(\rho\in(0, 1]\) that sets \(r^*_i = v_N (\phi_i / \max_{k\in N} \phi_k )^\rho\) with Shapley value10 \(\phi_i \triangleq \textstyle \left({1}/{n}\right) \sum_{C \subseteq N \setminus i} \left[ {{n-1} \choose |C|}^{-1} \left( v_{C \cup \{i\}} - v_C \right) \right]\). Shapley value’s consideration of marginal contribution (MC) to all coalitions is key to ensuring strict desirability [FAIR3] such that party \(i\) obtains a higher reward than party \(k\) (despite \(v_i = v_k\)) if \(i\)’s perturbed SS adds more value to every other non-empty coalition. Applying Theorem 1 of [1], the mediator should set \(\rho\) between \(0\) and \(\min_{i \in N}{{\log(v_{i}/v_N)}/{\log(\phi_i/\max_k\phi_k)}}\) to guarantee rationality. Selecting a larger \(\rho\) incentivizes a party with a high-quality perturbed SS to share by fairly limiting the benefits to parties with lower-quality ones. Selecting a smaller \(\rho\) reward parties more equally and increase group welfare [group-welfare]. Refer to Sec. 4.2 of [1] for a deeper analysis of the impact of varying \(\rho\). These results hold for any choice of \((\lambda, \epsilon_i)\).

After explaining the desiderata for model reward \(q_i(\theta)\) and reward values \(r_i\) and \(r^*_i\) for every party \(i \in N\), we are now ready to solve for \(q_i(\theta)\) such that \(r_i = r^*_i\).

5 Reward Control Mechanisms↩︎

This section discusses two mechanisms to generate model reward \(q_i(\theta)\) with different attained reward value \(r_i\) for every party \(i \in N\) by controlling a single continuous parameter and solving for its value such that the attained reward value equates the target reward value: \(r_i = r^*_i\). We will discuss the more obvious reward mechanism in Sec. 5.1 to contrast its cons with the pros of that in Sec. 5.2. Both reward mechanisms do not request new information from the parties; thus, the DP post-processing property applies, and every party \(k\) is still guaranteed at least its original DP guarantee or stronger in all model rewards (i.e., [dp-feasibility] holds).

5.1 Reward Control via Noise Addition↩︎

The work of [1] controls the reward values by adding Gaussian noise to the data outputs. We adapt it such that the mediator controls the reward value for party \(i \in N\) by adding Gaussian noise to the perturbed SS of each party \(k \in N\) instead. To generate the model reward for party \(i\) (superscripted), the mediator will reparameterize the sampled Gaussian noise vectors \(\{{\boldsymbol{e}}^i_k \sim \mathcal{N}(\mathbf{0}, {\boldsymbol{I}})\}_{k \in N}\) to generate the further perturbed SS11 \[{\boldsymbol{t}}^i_N \triangleq \left\{ {\boldsymbol{t}}_k^i \triangleq {\boldsymbol{o}}_k + \left(0.5\;\lambda\; \Delta_2^2(g_k) \;\tau_i\right)^{1/2}{\boldsymbol{e}}^i_k \right\}_{k \in N}\] where \(\Delta_2^2(g_k)\) is the squared \(\ell_2\)-sensitivity of function \(g_k\) that computes the exact SS \({\boldsymbol{s}}_k\) from dataset \({\mathcal{D}}_k\) (Sec. 2). Then, the mediator rewards party \(i\) with samples of model parameters \(\theta\) from the new DP noise-aware posterior \(q_i(\theta) = p(\theta|{\boldsymbol{t}}^i_N)\).

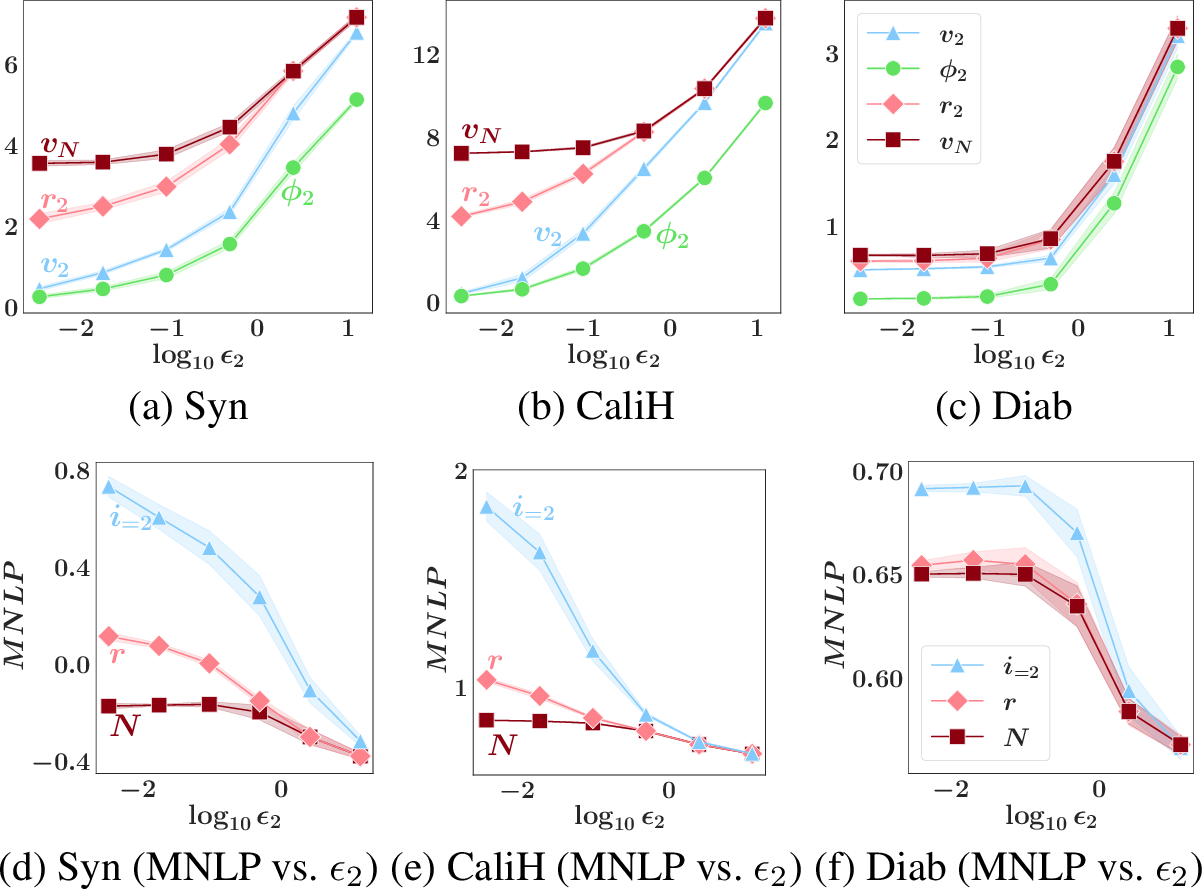

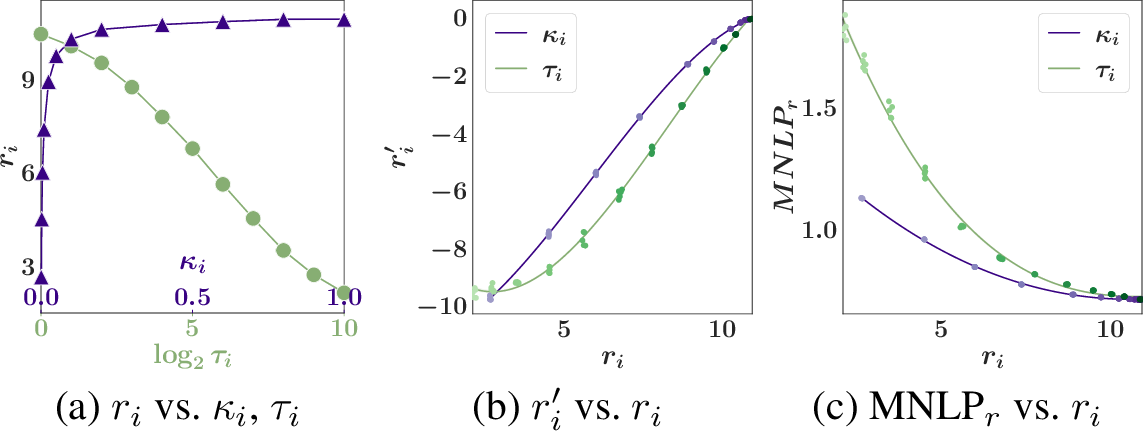

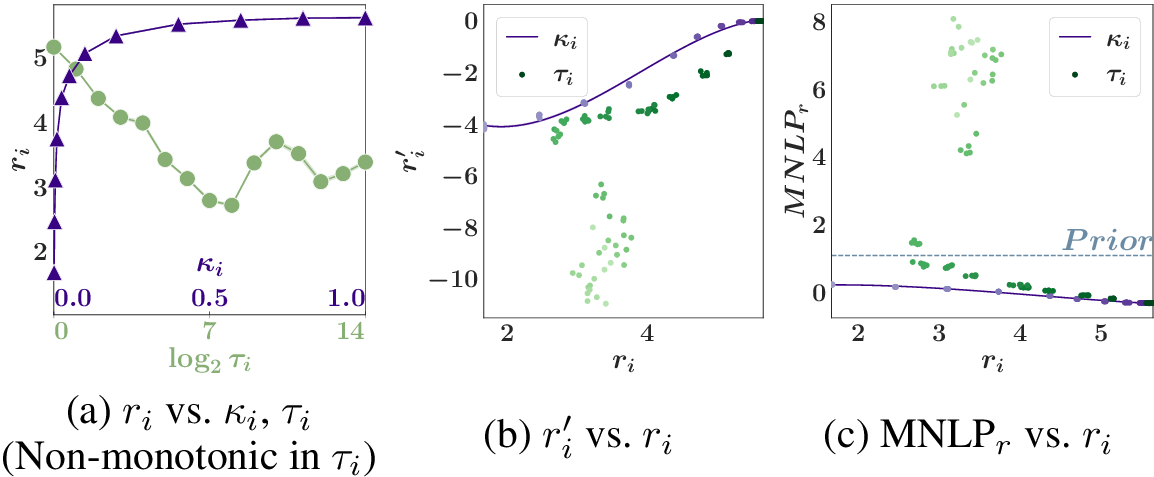

Here, the scalar \({\tau_i}\geq 0\) controls the additional noise variance and can be optimized via root-finding to achieve \(r_i = r^*_i\). The main advantage of this reward control mechanism is its interpretation of strengthening party \(k\)’s DP guarantee in all parties’ model rewards (see [dp-feasibility]). For example, it can be derived that if \({\epsilon}_k = \infty\), then party \(k\) will now enjoy \((\lambda, 1/ {\tau_i})\)-DP guarantee in party \(i\)’s reward instead. If \({\epsilon}_k < \infty\), then party \(k\) will now enjoy a stronger \((\lambda, {\epsilon}_k/(1+{\tau_i}{\epsilon}_k))\)-DP guarantee since \({\epsilon}_k/(1+{\tau_i}{\epsilon}_k) < {\epsilon}_k\). However, this mechanism has some disadvantages. Firstly, for the same scaled additional noise variance \({\tau_i}\), using different noise realizations \(\{{\boldsymbol{e}}^i_k\}_{k \in N}\) will lead to model reward \(q_i(\theta)\) with varying similarity \(r'_i\) to the grand coalition \(N\)’s posterior. The mechanism cannot efficiently select the best model reward with higher \(r'_i\) ([similarity]). Secondly, the value of \(r_i\) computed using such \({\boldsymbol{t}}^i_k\) may be non-monotonic12 in \(\tau_i\) (see Fig. [fig:reward-compare]d), which makes it hard to bracket the smallest root \({\tau_i}\) that solves for \(r_i = r^*_i\). To address these disadvantages, we will propose the next mechanism.

5.2 Reward Control via Likelihood Tempering↩︎

Intuitively, a party \(i\) who is assigned a lower target reward value \(r^*_i < v_N\) should be rewarded with posterior samples of model parameters \(\theta\) that use less information from the datasets and SS of all parties. Sparked by the diffuse posterior algorithm [32], we propose that the mediator can generate such “less informative” samples for party \(i\) using the normalized posterior13 \[q_i(\theta) \propto p(\theta) \left[p({\mathcal{D}}_N|\theta)\right]^{{\kappa_i}} \label{eq:m4}\tag{1}\] involving the product of the prior \(p(\theta)\) and the data likelihood \(p({\mathcal{D}}_N|\theta)\) to the power of (or, said in another way, tempered by a factor of) \({\kappa_i}\). Notice that setting \({\kappa_i}=0\) and \({\kappa_i}=1\) recover the prior \(p(\theta)\) and the posterior \(p(\theta|{\mathcal{D}}_N)\), respectively. Thus, setting \({\kappa_i}\in (0, 1)\) should smoothly interpolate between both. We can optimize \({\kappa_i}\) to control \(q_i(\theta)\) so that \(r_i = r^*_i < v_N\). But, how do we temper the likelihood? We start by examining the easier, non-private setting. In Sec. 2, we stated that under our assumptions, the posterior \(p(\theta|{\mathcal{D}}_N)\) can be computed by using the sum of data quantities \(\{c_k\}_{k \in N}\) and sum of exact SS \({\boldsymbol{s}}_N\). In App. 13, we further show that using the tempered likelihood \([p({\mathcal{D}}_N|\theta)]^{\kappa_i}\) is equivalent to scaling the data quantities and the exact SS \({\boldsymbol{s}}_N\) by the factor \({\kappa_i}\) beforehand. In the private setting, the mediator can similarly scale the data quantities, the perturbed SS in \({\boldsymbol{o}}_N\) (instead of the inaccessible exact SS), and the \(\ell_2\)-sensitivity by the factor \({\kappa_i}\) beforehand; see App. 13.3 for details. This likelihood tempering mechanism addresses both disadvantages of Sec. 5.1:

\(\bullet\)

There is no need to sample additional DP noise. We empirically show that tempering the likelihood produces a model reward that interpolates between the prior vs. posterior (in App. 15) and preserves a higher similarity \(r'_i\) to the grand coalition \(N\)’s posterior ([similarity] and hence, more group welfare [group-welfare]) and better predictive performance than noise addition (see Sec. 6).

Using a smaller tempering factor \({\kappa_i}\in [0,1]\) provably decreases the attained reward value \(r_i\) (see App. 13). Thus, as the relationship between \(r_i\) and \({\kappa_i}\) is monotonic, we can find the only root by searching the interval \([0,1]\).

Our discussion on improving the estimate of \(v_C\) in the paragraph on implementation in Sec. 3 also applies to the estimate of \(r_i\) in Secs. 5.1 and 5.2. Thus, solving for \({\tau_i}\) or \({\kappa_i}\) to achieve \(r_i = r^*_i\) using any root-finding algorithm can only be accurate up to the variance in our estimate.

6 Experiments and Discussion↩︎

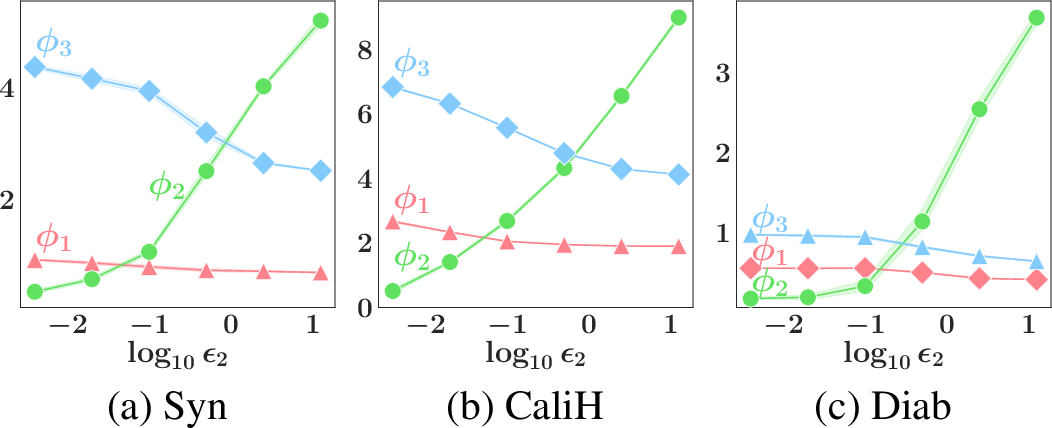

This section empirically evaluates the privacy-valuation and privacy-reward trade-offs (Sec. 6.1), reward control mechanisms (Sec. 6.2), and their relationship with the utility of the model rewards (Sec. 6.3). The time complexity of our scheme is analyzed in App. 14 and baseline methods are discussed in App. 16.3. We consider Bayesian linear regression (BLR) with unknown variance on the Syn and CaliH datasets, and Bayesian logistic regression on the Diab dataset with \(3\) collaborating parties (see App. 16.1 for details) and enforce \((2,{\epsilon}_i)\)-Rényi DP. For Synthetic BLR (Syn), we select and use a normal inverse-gamma distribution (i) to generate the true regression model weights, variance, and a \(2\)D-dataset and (ii) as our model prior \(p(\theta)\). We consider \(3\) parties with \(c_1=100\), \(c_2=200\), \(c_3=400\) data points, respectively. For Californian Housing dataset (CaliH) [34], as in [1], \(60\%\) of the CaliH data is deemed “public/historic” and used to pre-train a neural network without DP. Real estate firms may only care about the privacy of their newest transactions. As the parties’ features-house values relationship may differ from the “public” dataset, we do transfer learning and selectively retrain only the last layer with BLR using the parties’ data. Parties \(1\) to \(3\) have, respectively, \(20\%, 30\%\), and \(50\%\) of the dataset with \(6581\) data points and \(6\) features. For PIMA Indian Diabetes classification dataset (Diab) [35], we use a Bayesian logistic regression model to predict whether a patient has diabetes based on sensitive inputs (e.g., patient’s age, BMI, number of pregnancies). To reduce the training time, we only use the \(4\) PCA main components as features (to generate the approximate SS) [26]. Parties \(1, 2\), and \(3\) have, respectively, \(20\%\), \(30\%\), and \(50\%\) of the dataset with \(614\) data points. As we are mainly interested14 in the impact of one party controlling its privacy guarantee \({\epsilon}_i\), for all experiments, we only vary party \(2\)’s from the default \(0.1\). We fix the privacy guarantees of others (\({\epsilon}_1 = {\epsilon}_3 = 0.2\)) and \(\rho=0.2\) in the \(\rho\)-Shapley fair reward scheme, and analyze party \(2\)’s reward and utility. Note that as \({\epsilon}_2\) increases (decreases), party \(2\) becomes the most (least) valuable of all parties.

6.1 Privacy-valuation and Privacy-reward Trade-offs↩︎

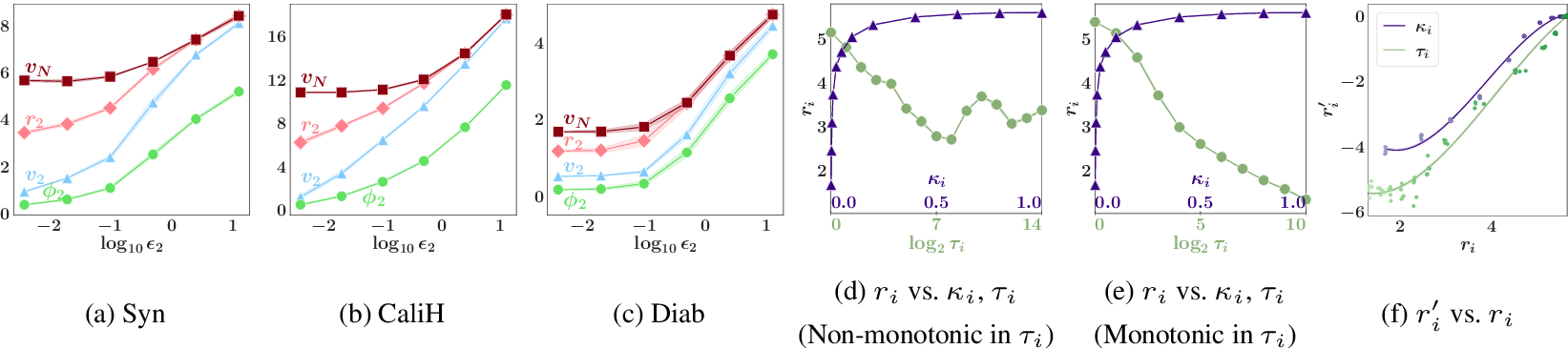

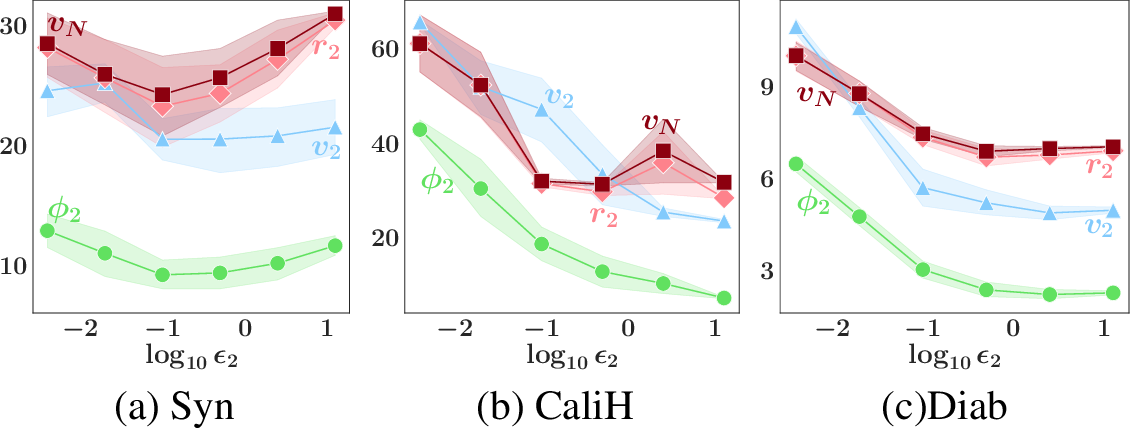

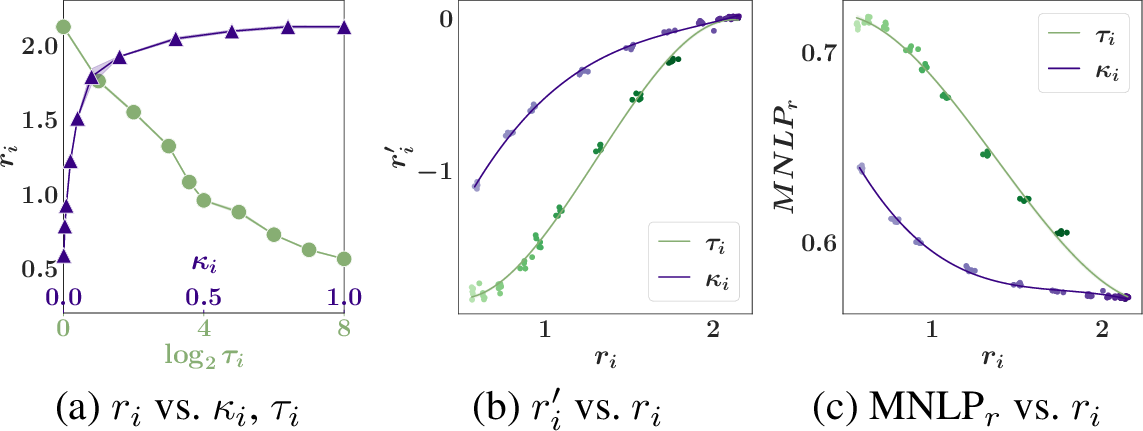

Figure 2: (a-c) Graphs of party \(2\)’s valuation \(v_2\), Shapley value \(\phi_2\), attained reward value \(r_2\) vs. privacy guarantee \({\epsilon}_2\) for various datasets. (d-e) Graphs of attained reward value \(r_i\) vs. \({\kappa_i}\) (Sec. 5.2) and \({\tau_i}\) (Sec. 5.1) for \(2\) different noise realizations. (f) Graph of similarity \(r'_i\) to grand coalition \(N\)’s posterior \(p(\theta | {\boldsymbol{o}}_N)\) vs. \(r_i\) for Syn dataset corresponding to (e).

For each dataset, we only vary the privacy guarantee of party \(i=2\) with \({\epsilon}_2 \in [0.004,0.02,0.1,0.5,2.5,12.5]\) and use the Gaussian mechanism and a fixed random seed to generate the perturbed SS \({\boldsymbol{o}}_2\) from the exact SS \({\boldsymbol{s}}_2\). Fig. 2a-c plot the mean and shades the standard error of \(v_i\), \(v_N\), \(\phi_i\), and \(r_i\) over \(5\) runs. The privacy-valuation and privacy-reward trade-offs can be observed: As the privacy guarantee weakens (i.e., \({\epsilon}_2\) increases), party \(2\)’s valuation \(v_2\), Shapley value \(\phi_2\), and attained reward value \(r_2\) increase. When \({\epsilon}_2\) is large, party \(2\) will be the most valuable contributor and rewarded with \(p(\theta|{\boldsymbol{o}}_N)\), hence attaining \(r_i = v_N\). App. 16.5.0.2 shows that the trade-offs do not hold for non-noise-aware inference.

6.2 Reward Control Mechanisms↩︎

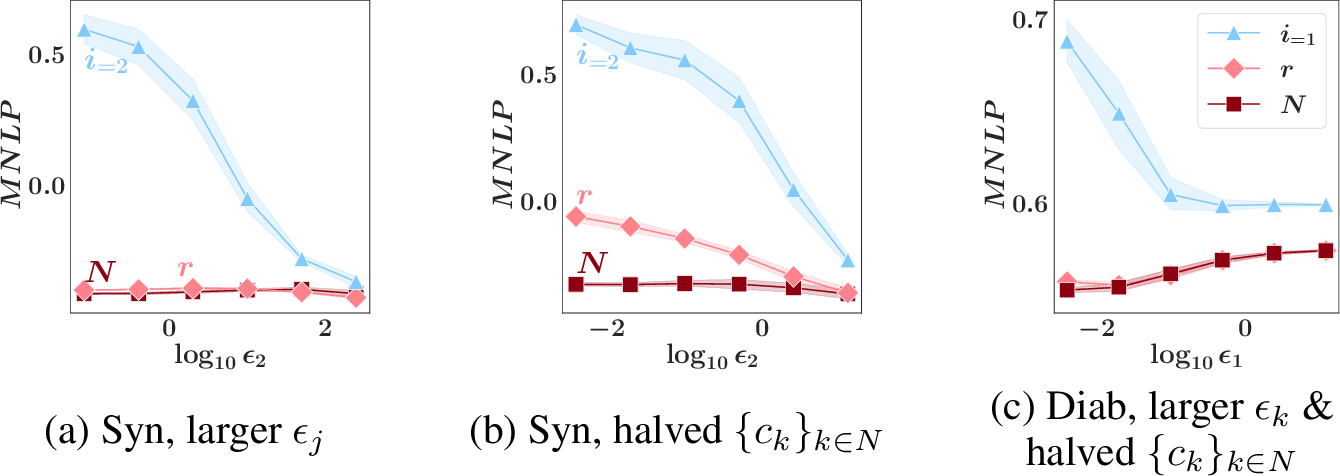

We use the Syn experiment to compare the reward mechanisms that vary the noise addition using \({\tau_i}\) (Sec. 5.1) vs. temper the likelihood using \({\kappa_i}\) (Sec. 5.2). The mechanisms control \(q_i(\theta)\) (i.e., used to generate party \(i\)’s model reward) to attain the target reward values. For each value of \({\tau_i}\) and \({\kappa_i}\) considered, we repeat the posterior sampling and KL estimation method \(5\) times. Figs. [fig:reward-compare]d and [fig:reward-compare]e-f use different sets of sampled noise \(\{{\boldsymbol{e}}_k^i\}_{k \in N}\) to demonstrate the stochastic relationship between \(r_i\) and \({\tau_i}\). In Fig. [fig:reward-compare]d, the non-monotonic disadvantage of noise addition can be observed: As \({\tau_i}\) increases, \(r_i\) does not consistently decrease, hence making it hard to solve for the smaller \({\tau_i}\) that attains \(r^*_i = 3\). In contrast, as \({\kappa_i}\) decreases from \(1\), \(r_i\) consistently decreases. Furthermore, in Fig. [fig:reward-compare]f, we demonstrate the other advantage of likelihood tempering: For the same \(r_i\), tempering the likelihood leads to a higher similarity \(r'_i\) to the posterior \(p(\theta | {\boldsymbol{o}}_N)\) than noise addition. In App. 16.6, we report the relationship between \(r_i\) vs. \({\kappa_i}\) and \({\tau_i}\) for the other real-world datasets.

6.3 Utility of Model Reward↩︎

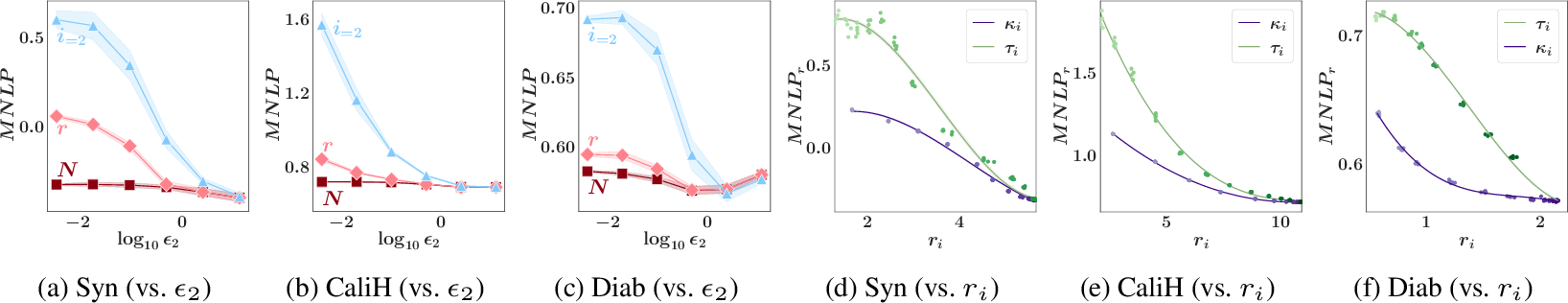

The utility (or the predictive performance) of both Bayesian models can be assessed by the mean negative log probability (MNLP) of a non-private test set.15 In short, MNLP reflects how unlikely the test set is given the perturbed SS and additionally cares about the uncertainty/confidence in the model predictions (e.g., due to the impact of DP noise). MNLP will be higher (i.e., worse) when the model is more uncertain of its accurate predictions or overconfident in inaccurate predictions on the test set; see App. 16.2 for an in-depth definition.

Privacy trade-offs. Figs. 3a-c illustrate the privacy-utility trade-off described in Sec. 1: As \({\epsilon}_2\) decreases (i.e., privacy guarantee strengthens), the MNLP\(_N\) of grand coalition \(N\)’s collaboratively trained model and the MNLP\(_i\) of party \(i=2\)’s individually trained model generally increase, so their utilities drop (). This motivates the need to incentivize party \(2\) against selecting an excessively small \({\epsilon}_2\) by enforcing privacy-valuation and privacy-reward trade-offs. From Figs. 3a-c, the impact of our scheme enforcing the trade-offs can be observed: As \({\epsilon}_2\) decreases, the MNLP\(_r\) of party \(i=2\)’s model reward increases.

Remark. In Fig. 3c, an exception to () is observed. The exception illustrates that the privacy-valuation trade-off may not hold for a valuation function based on the performance on a validation set.

Individual rationality. It can be observed from Figs. 3a-c that as \({\epsilon}_2\) decreases, the MNLP\(_r\) of party \(i=2\)’s model reward increases much less rapidly than the MNLP\(_i\) of its individually trained model. So, it is rational for party \(i=2\) to join the collaboration to get a higher utility.

Remark. Party \(i=2\)’s utility gain appears small when \({\epsilon}_2\) is large due to parties \(1\) and \(3\)’s selection of strong privacy guarantee \({\epsilon}= 0.2\). Party \(i\) can gain more when other parties require weaker privacy guarantees such as \({\epsilon}= 2\) instead (see App. 16.5.0.3).

Likelihood tempering is a better reward control mechanism. Extending Sec. 6.2, we compare the utility of party \(i\)’s model reward generated by noise addition vs. likelihood tempering in Figs. 3d-f. Across all experiments, likelihood tempering (with \({\kappa_i}\)) gives (i) a lower MNLP\(_r\) and hence a higher utility, and (ii) a lower variance in MNLP\(_r\) than varying the noise addition (with \({\tau_i}\)).

Figure 3: (a-c) Graphs of utility of party \(2\)’s model reward measured by MNLP\(_r\) vs. privacy guarantee \({\epsilon}_2\) for various datasets. (d-f) Graphs of utility of model reward measured by MNLP\(_r\) vs. attained reward value \(r_i\) under the two reward control mechanisms for various datasets.

7 Related Works↩︎

Fig. 6 in App. 10 gives a diagrammatic overview showing how our work fills the gap in existing works.

Data Valuation. Most data valuation methods are not differentially private and directly access the data. For example, computing the information gain [1] or volume [29] requires the design matrix. While it is possible to make these valuation methods differentially private (see App. 16.3) or value DP trained models using validation accuracy (on an agreed, public validation set), the essential properties of our valuation function ([V2]-[V3]) may not hold.

Privacy Incentive. Though the works of [36], [37] reward parties directly proportional to their privacy budget, their methods do not incentivize data sharing as a party does not fairly receive a higher reward value for contributing a larger, more informative dataset. While the work of [38] artificially creates a privacy-reward trade-off by paying each party \(i\) the product of its raw data’s Shapley value \(\phi_i\) and a monotonic transformation of \({\epsilon}_i\), it neither ensures DP w.r.t. the mediator nor fairly considers how a stronger DP guarantee may reduce party \(i\)’s marginal contribution to others (hence \(\phi_i\)). The work of [39] considers data acquisition from parties with varying privacy requirements but focuses on the mean estimation problem and designing payment and privacy loss functions to get parties to report their true unit cost of privacy loss. Our work here distinctively considers Bayesian models and fairness and allows parties to choose their privacy guarantees directly while explicitly enforcing a privacy-reward trade-off.

Difficulties ensuring incentives with existing DP/FL works. The one posterior sampling (OPS) method [32], [40] proposes that each party \(i\) can achieve DP by directly releasing samples from the posterior \(p(\theta|{\mathcal{D}}_i)\) (if the

log-likelihood is bounded). However, OPS is data inefficient and may not guarantee privacy for approximate inference [41]. It is unclear how we

can privately value a coalition \(C\) and sample from the joint posterior \(p(\theta | \{ {\mathcal{D}}_i\}_{i \in C})\). DP-FedAvg/DP-FedSGD [10] or DP-SGD [9] enable

collaborative but private training of neural networks by requiring each party \(i\) to clip and add Gaussian noise to its submitted gradient updates. However (in addition to the valuation function issue above), it is tricky

to ensure that the parties’ rewards satisfy data sharing incentives. In each round of FL, parties selected will receive the (same) latest model parameters to compute gradient updates. This setup goes against the fairness ([fairness]) incentive as parties who share less informative gradients should be rewarded with lower quality model parameters instead. Although the unfairness may potentially be corrected via

gradient-based [7] or monetary rewards, there is no DP reward scheme to guarantee a party better model reward from collaboration than in

solitude or a higher monetary reward than its cost of participation, hence violating individual rationality.

8 Conclusion↩︎

Unlike existing works in collaborative ML that solely focus on the fairness incentive, our proposed scheme further (i) ensures privacy for the parties during valuation and in model rewards and (ii) enforces a privacy-valuation trade-off to deter parties from unfetteredly selecting excessive DP guarantees to maximize the utility of collaboratively trained models.16 This involves novelly combining our proposed Bayesian surprise valuation function and reward control mechanism with DP noise-aware inference. We empirically evaluate our scheme on several datasets. Our likelihood tempering reward control mechanism consistently preserves better predictive performance.

Our work has two limitations which future work should overcome. Firstly, we only consider ML models with SS (see App. 9.1 for applications) and a single round of sharing information with the mediator as a case study to show the incentives and trade-offs can be achieved. Future work should generalize our scheme to ML models without an explicit SS.

Next, like the works of [1], [5], [16], [17], [42], [43] and others, we do not consider the truthfulness of submitted information and value data as-is. This limitation is acceptable for two reasons.) Parties such as hospitals and firms will truthfully share information as they are primarily interested in building and receiving a model reward of high quality and may additionally be bound by the collaboration’s legal contracts and trusted data-sharing platforms. For example, with the use of X-road ecosystem,17 parties can upload a private database which the mediator can query for the perturbed SS. This ensures the authenticity of the data (also used by the owner) and truthful computation given the uploaded private database.) Each party would be more inclined to submit true information as any party \(k\) who submits fake SS will reduce its utility from the collaboration. This is because party \(k\)’s submitted SS is used to generate \(k\)’s model reward and cannot be replaced locally as party \(k\) will only receive posterior samples. Future work should seek to verify and incentivize truthfulness.

Acknowledgments and Disclosure of Funding↩︎

This research/project is supported by the National Research Foundation Singapore and DSO National Laboratories under the AI Singapore Programme (AISG Award No: AISG\(2\)-RP-\(2020\)-\(018\)). This work is also supported by the National Natural Science Foundation of China (62206139) and the Major Key Project of PCL (PCL2023AS7-1).

9 Fundamental Concepts↩︎

In this section, we will elaborate on concepts from Sec. 2 in more detail.

9.1 Sufficient Statistics↩︎

Bayesian learning involves updating our belief of the likely values of the model parameters \(\theta\), captured in the prior \(p(\theta)\), to a posterior belief \(p(\theta|{\mathcal{D}}_i) \propto p(\theta) \times p({\mathcal{D}}_i|\theta)\). The posterior belief gets more concentrated (around the maximum likelihood estimate) after observing a larger dataset \({\mathcal{D}}_i\).

The statistic \({\boldsymbol{s}}_i\) is a SS for \({\mathcal{D}}_i\) if \(\theta\) and \({\mathcal{D}}_i\) are conditionally independent given \({\boldsymbol{s}}_i\), i.e., \({p(\theta | {\mathcal{D}}_i) = p(\theta | {\boldsymbol{s}}_i, {\mathcal{D}}_i) = p(\theta | {\boldsymbol{s}}_i)}\) [21], [22]. Knowing the dataset \({\mathcal{D}}_i\) does not provide any extra information about \(\theta\) beyond the SS \({\boldsymbol{s}}_i\). SS exists for exponential family models [18] and Bayesian linear regression [19]. Approximate SS has been proposed by [20] for generalized linear models. For more complex data such as images, we can use pre-trained neural networks like VGG-\(16\) as feature extractors and generate SS from the last hidden layer’s outputs.

9.1.0.1 Bayesian Linear Regression.

In linear regression, each datum consists of the input \({\boldsymbol{x}}\in \mathbb{R}^w\) and the output variable \(y \in \mathbb{R}\). Let \({\mathcal{D}}\) denote the dataset with \(c\) data points, and \({\boldsymbol{y}}\) and \({\boldsymbol{X}}\) be the corresponding concatenated output vector and design matrix in \(\mathbb{R}^{c \times w}\). Bayesian linear regression models the relationship as \({\boldsymbol{y}}= {\boldsymbol{X}}{\boldsymbol{w}}+ \mathcal{N}(0, \sigma^2{\boldsymbol{I}})\) where the model parameters \(\theta\) consists of the weight parameters \({\boldsymbol{w}}\in \mathbb{R}^w\) and the noise variance \(\sigma^2\). The likelihood \[\begin{align} p({\boldsymbol{y}}| {\boldsymbol{X}}, {\boldsymbol{w}}, \sigma^2) &= (2 \pi \sigma^2)^{-\frac{c}{2}} \exp{\left( -\frac{({\boldsymbol{y}}-{\boldsymbol{X}}{\boldsymbol{w}})^\top ({\boldsymbol{y}}-{\boldsymbol{X}}{\boldsymbol{w}})}{2\sigma^2}\right)} \\ &= (2 \pi \sigma^2)^{-\frac{c}{2}} \exp{\left[\frac{-1}{2\sigma^2}{\boldsymbol{y}}^\top {\boldsymbol{y}}+ \frac{1}{\sigma^2}{\boldsymbol{w}}^\top {\boldsymbol{X}}^\top {\boldsymbol{y}}- \frac{1}{2\sigma^2}{\boldsymbol{w}}^\top {\boldsymbol{X}}^\top {\boldsymbol{X}}{\boldsymbol{w}}\right]}\;. \end{align}\] only depends on data via the sufficient statistics \({\boldsymbol{s}}= ({\boldsymbol{y}}^\top {\boldsymbol{y}}, {\boldsymbol{X}}^\top {\boldsymbol{y}}, {\boldsymbol{X}}^\top {\boldsymbol{X}})\). Concretely, when the prior \(p(\theta)\) of the weights and variance follow a normal inverse-gamma distribution, \(\texttt{NIG}(\mathbf{0}, {\boldsymbol{V}}_0, a_0, b_0)\), the posterior \(p(\theta | {\mathcal{D}}_i)\) is the normal inverse-gamma distribution \(\texttt{NIG}(\mathbf{w}_i, {\boldsymbol{V}}_i, a_0 + c_i/2, b_i)\) where \(c_i\) is the number of data points and \[{\boldsymbol{w}}_i = {\boldsymbol{V}}_i \textcolor{blue}{{\boldsymbol{X}}_i^\top {\boldsymbol{y}}_i} \qquad {\boldsymbol{V}}_i = \left( {\boldsymbol{V}}_0^{-1} + \textcolor{blue}{{\boldsymbol{X}}_i^\top {\boldsymbol{X}}_i} \right)^{-1} \qquad b_i = b_0 + (1/2) \left[ \textcolor{blue}{{\boldsymbol{y}}_i^\top {\boldsymbol{y}}_i} - {\boldsymbol{w}}_i^\top {\boldsymbol{V}}_i^{-1} {\boldsymbol{w}}_i \right]\] can be computed directly from \({\boldsymbol{s}}_i\). The posterior belief \(p(\theta | {\mathcal{D}}_i, {\mathcal{D}}_j)\) given parties \(i\) and \(j\)’s dataset can be similarly computed using the SS of their pooled dataset, \({\boldsymbol{s}}_{ij}\). As the SS \({\boldsymbol{s}}_{ij}\) works out to \({\boldsymbol{s}}_i + {\boldsymbol{s}}_j\), we only need \({\boldsymbol{s}}_i\) and \({\boldsymbol{s}}_j\) from party \(i\) and \(j\) instead of their private datasets.

9.1.0.2 Generalized Linear Model (GLM).

A generalized linear model (GLM) generalizes a linear model by introducing an inverse link function \(\Upsilon\). The probability of observing the output \(y\) given input \({\boldsymbol{x}}= (x_{(1)}, \dots, x_{(w)})\) and model weights \(\theta\) depends on their dot product \[p(y | {\boldsymbol{x}}, \theta) = p(y | \Upsilon({\boldsymbol{x}}^\top \theta))\;.\] Next, we define the GLM mapping function \(\upsilon\) to the log-likelihood of observing \(y\) given the GLM model. Formally, \[\upsilon(y, {\boldsymbol{x}}^\top \theta) \triangleq \log p(y | \Upsilon({\boldsymbol{x}}^\top \theta))\;.\]

As an example, logistic regression is a GLM with \(\Upsilon\) defined as the sigmoid function and \(p(y = \pm 1 | \texttt{sigmoid}({\boldsymbol{x}}^\top \theta))\) follows a Bernoulli distribution. As the non-linearity of \(\Upsilon\) disrupts the exponential family structure, logistic regression and other GLMs do not have sufficient statistics. Logistic regression’s GLM mapping function \(\upsilon_{\texttt{log}}(y, {\boldsymbol{x}}^\top \theta) = -\log(1+\exp({- y {\boldsymbol{x}}^\top \theta}))\).

[20] propose to approximate the GLM mapping function \(\upsilon\) with an \(M\)-degree polynomial approximation \(\upsilon_M\). \(\upsilon_M\) is an exponential family model with sufficient statistics \(g(d) = \left\{ \prod_{i=1}^w (yx_{(i)})^{m_i} | \sum_i m_i \leq M, \forall i\;m_i \in \mathbb{Z}_0^+ \right\}\). These SS are the polynomial approximate sufficient statistics for GLMs. For example, when \(M=2\) and \({\boldsymbol{x}}= (x_{(1)}, x_{(2)})\), \(g(d) = \left[ 1, x_{(1)}y, x_{(2)}y, x_{(1)}^2 y^2, x_{(2)}^2 y^2, x_{(1)}x_{(2)} y^2 \right]\).

9.2 Differential Privacy↩︎

Remark 1. Our work aims to ensure example-level DP for each collaborating party: A party updating/adding/deleting a single datum will only change the perturbed SS visible to the mediator and the corresponding belief of the model parameters in a provably minimal way. We are not ensuring user-level DP: The belief of model parameters only changes minimally after removing a collaborating party’s (or a user/data owner’s) dataset, possibly with multiple data points [44].

Intuitively, a DP algorithm \(\mathcal{R}: {\mathcal{D}}\rightarrow {\boldsymbol{o}}\) guarantees that each output \({\boldsymbol{o}}\) is almost equally likely regardless of the inclusion or exclusion of a data point \({\boldsymbol{d}}\) in \({\mathcal{D}}\). This will allay privacy concerns and incentivize a data owner to contribute its data point \({\boldsymbol{d}}\) since even a knowledgeable attacker cannot infer the presence or absence of \({\boldsymbol{d}}\).

The works on noise-aware inference [25], [26] assume that the input \({\boldsymbol{x}}\) and output \(y\) of any data point have known bounded ranges. We will start by introducing our domain-dependent definitions:

Definition 4 (Neighboring datasets). Two datasets \({\mathcal{D}}\) and \({\mathcal{D}}'\) are neighboring if \({\mathcal{D}}'\) can be obtained from \({\mathcal{D}}\) by replacing a single data point. The total number of data points and all other data points are the same.

Definition 5 (Sensitivity [45]). The sensitivity of a function \(g\) that takes in dataset \({\mathcal{D}}_k\) quantifies the maximum impact a data point can have on the function output. The \(\ell_1\)-sensitivity \(\Delta_1(g)\) and \(\ell_2\)-sensitivity \(\Delta_2(g)\) measure the impact using the \(\ell_1\) and \(\ell_2\) norm, respectively. Given that \({\mathcal{D}}'_i\) must be a neighboring dataset of \({\mathcal{D}}_i\), \[\begin{align} \Delta_1(g) & \triangleq \max_{{\mathcal{D}}_i, {\mathcal{D}}_i'} \norm{g({\mathcal{D}}_i) - g({\mathcal{D}}_i')}_1\;, \\ \Delta_2(g) & \triangleq \max_{{\mathcal{D}}_i, {\mathcal{D}}_i'} \norm{g({\mathcal{D}}_i) - g({\mathcal{D}}_i')}_2\;. \end{align}\]

In our problem, \(g\) computes the exact SS \({\boldsymbol{s}}_i\) for \({\mathcal{D}}_i\). The sensitivity can be known/computed if the dataset is normalized and the feature ranges are bounded.

We start with the definition of \({\epsilon}\)-differential privacy. The parameter \(\epsilon\) bounds how much privacy is lost by releasing the algorithm’s output.

Definition 6 (Pure \({\epsilon}\)-DP [45]). A randomized algorithm \(\mathcal{R}: {\mathcal{D}}\rightarrow {\boldsymbol{o}}\) with range \({\mathcal{O}}\) is \(\epsilon\)-DP if for all neighboring datasets \({\mathcal{D}}\) and \({\mathcal{D}}'\) and possible output subset \({\mathcal{O}}\subset \mathop{Range}(\mathcal{R})\), \[\begin{align} P(\mathcal{R}({\mathcal{D}}) \in {\mathcal{O}}) \leq e^\epsilon P(\mathcal{R}({\mathcal{D}}') \in {\mathcal{O}}) \;. \end{align}\]

The Laplace mechanism [45] is an \({\epsilon}\)-DP algorithm. Instead of releasing the exact SS \({\boldsymbol{s}}_i\), the mechanism will output a sample of the perturbed SS \({\boldsymbol{o}}_i \sim \mathrm{Laplace}({\boldsymbol{s}}_i, (\Delta_1(g) /{\epsilon}) \;{\boldsymbol{I}})\).

A common relaxation of \({\epsilon}\)-differential privacy is \(({\epsilon}, \delta)\)-differential privacy. It can be interpreted as \({\epsilon}\)-DP but with a failure of probability at most \(\delta\).

Definition 7 (\(({\epsilon}, \delta)\)-DP). A randomized algorithm \(\mathcal{R}: {\mathcal{D}}\rightarrow {\boldsymbol{o}}\) with range \({\mathcal{O}}\) is \((\epsilon, \delta)\)-differentially private if for all neighboring datasets \({\mathcal{D}}\) and \({\mathcal{D}}'\) and possible output subset \({\mathcal{O}}\subset \mathop{Range}(\mathcal{R})\), \[\begin{align} P(\mathcal{R}({\mathcal{D}}) \in {\mathcal{O}}) \leq e^\epsilon P(\mathcal{R}({\mathcal{D}}') \in {\mathcal{O}}) + \delta\;. \end{align}\]

The Gaussian mechanism is an \(({\epsilon}, \delta)\)-DP algorithm. The variance of the Gaussian noise to be added can be computed by the analytic Gaussian mechanism algorithm [46].

In the main paper, we have also discussed another relaxation of \({\epsilon}\)-differential privacy that is reproduced below:

Definition 8 (Rényi DP [23]). A randomized algorithm \(\mathcal{R}: {\mathcal{D}}\rightarrow {\boldsymbol{o}}\) is \((\lambda, \epsilon)\)-Rényi differentially private if for all neighboring datasets \({\mathcal{D}}\) and \({\mathcal{D}}'\), the Rényi divergence of order \(\lambda > 1\) is \(D_\lambda(\mathcal{R}({\mathcal{D}})\;||\;\mathcal{R}({\mathcal{D}}')) \leq \epsilon\) where \[\begin{align} D_\lambda(\mathcal{R}({\mathcal{D}})\;||\;\mathcal{R}({\mathcal{D}}')) \triangleq \frac{\log{\mathbb{E}_{{\boldsymbol{o}}\sim \mathcal{R}({\mathcal{D}}')}\left[\displaystyle\frac{P(\mathcal{R}({\mathcal{D}}) = {\boldsymbol{o}})}{P(\mathcal{R}({\mathcal{D}}') = {\boldsymbol{o}})}\right]^\lambda}}{\lambda-1}\;. \end{align}\]

When \(\lambda=\infty\), Rényi DP becomes pure \({\epsilon}\)-DP. Decreasing \(\lambda\) emphasizes less on unlikely large values and emphasizes more on the average value of the privacy loss random variable \(\log\left[{{P(\mathcal{R}({\mathcal{D}}) = {\boldsymbol{o}})}/{P(\mathcal{R}({\mathcal{D}}') = {\boldsymbol{o}})}}\right]\) with \({\boldsymbol{o}}\sim \mathcal{R}({\mathcal{D}}')\).

The Gaussian mechanism is a \((\lambda, {\epsilon})\)-Rényi DP algorithm. Instead of releasing the exact SS \({\boldsymbol{s}}_i\), the mechanism will output a sample of the perturbed SS \({\boldsymbol{o}}_i \sim \mathcal{N}\left({\boldsymbol{s}}_i,\;0.5\;({\lambda}/{\epsilon}) \;\Delta_2^2(g)\;\boldsymbol{I} \right)\).

9.2.0.1 Post-processing.

A common and important property of all DP algorithms/mechanisms is their robustness to post-processing: Processing the output of a DP algorithm \(\mathcal{R}\) without access to the underlying dataset will retain the same privacy loss and guarantees [47].

Choosing Rényi-DP over \(({\epsilon}, \delta)\)-DP. In our work, we consistently use the Gaussian mechanism in all the experiments, like in that of [26]. We choose Rényi DP over \(({\epsilon}, \delta)\)-DP due to the advantages stated below:

\(\bullet\)

Rényi-DP is a stronger DP notion according to [23]: While \(({\epsilon}, \delta)\)-DP allows for a complete failure of privacy guarantee with probability of at most \(\delta\), Rényi-DP does not and the privacy bound is only loosened more for less likely outcomes. Additionally, [23] claims that it is harder to analyze and optimize \(({\epsilon}, \delta)\)-DP due to the trade-off between \({\epsilon}\) and \(\delta\). More details can be found in [23].

Rényi-DP supports easier composition: In a collaborative ML framework, each party \(i\) may need to release multiple outputs on the same dataset \({\mathcal{D}}_i\) such as the SS and other information for preprocessing steps (e.g., principal component analysis). Composition rules bound the total privacy cost \(\hat{{\epsilon}}\) of releasing multiple outputs of differentially private mechanisms. It is harder to keep track of the total privacy cost when using \(({\epsilon}, \delta)\)-DP due to advanced composition rules and the need to choose from a wide selection of possible \(({\epsilon}(\delta), \delta)\) [23]. In contrast, the composition rule (i.e., Proposition 1 in [23]) is straightforward: When \(\lambda\) is a constant, the \({\epsilon}\) of different mechanisms can simply be summed.

Note that the contribution of our work will still hold for \(({\epsilon}, \delta)\)-DP (using the Gaussian mechanism) and \({\epsilon}\)-DP (using the Laplace mechanism) with some modifications of the inference process and proofs.

Remark 2. Our work is in the same spirit as local DP (and we also think that no mediator can be trusted to directly access any party’s private dataset) but does not strictly satisfy the definition of local DP (see Def. 9). In the definition, the local DP algorithm takes in a single input/datum and ensures the privacy of its output — the perturbation mechanism is applied to every input independently. In contrast, in our case, a party may have multiple inputs and the perturbation mechanism is only applied to their aggregate statistics. Thus, a datum owner (e.g., a patient of a collaborating hospital) enjoys weaker privacy in our setting than the local DP setting.

Definition 9 (\({\epsilon}\)-Local DP [48]). A randomized algorithm \(\mathcal{R}\) is \({\epsilon}\)-local DP if for any pair of data points \(d, d' \in {\mathcal{D}}\) and for any possible output \({\mathcal{O}}\subset \mathop{Range}(\mathcal{R})\), \[\begin{align} P(\mathcal{R}(d) \in {\mathcal{O}}) \leq e^\epsilon P(\mathcal{R}(d') \in {\mathcal{O}}) \;. \end{align}\]

9.3 DP Noise-Aware Inference↩︎

DP mechanisms introduce randomness and noise to protect the output of a function. Noise-naive techniques ignore the added noise in downstream analysis. In contrast, noise-aware techniques account for the noise added by the DP mechanism.

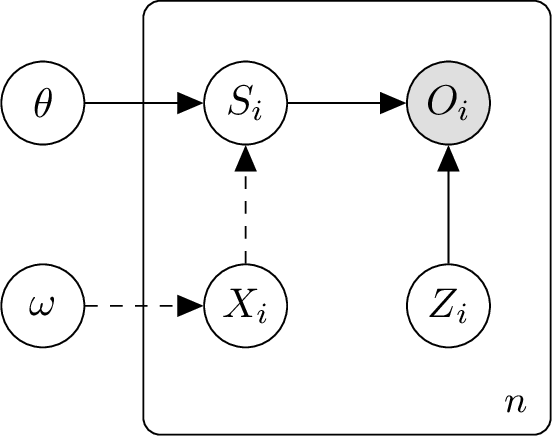

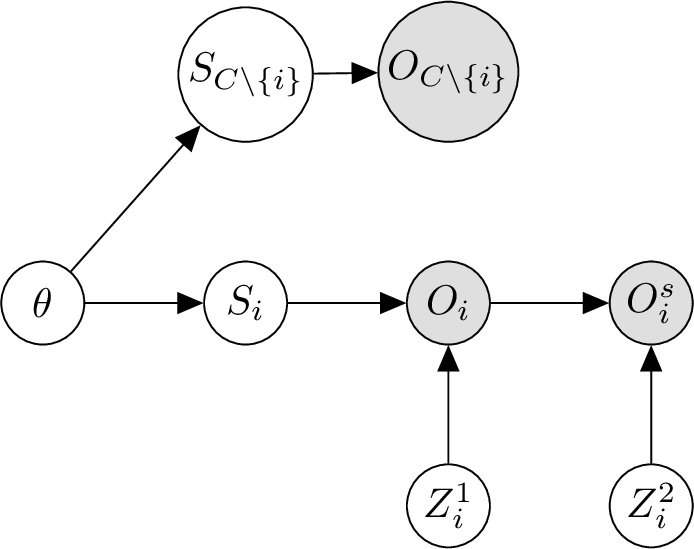

Consider a probabilistic model where the model parameters \(\theta\) generate the dataset \({\mathcal{D}}_i\) which then generates the exact and perturbed sufficient statistics of each party \(i\), which are modeled as random variables \(S_i\) and \(O_i\), respectively. The exact SS \({\boldsymbol{s}}_i\) and perturbed SS \({\boldsymbol{o}}_i\) computed by party \(i\) are realizations of \(S_i\) and \(O_i\), respectively. As the mediator cannot observe \(i\)’s exact SS, \(S_i\) is a latent random variable. Instead, the mediator observes \(i\)’s perturbed SS \(O_i\) which also contains noise \(Z_i\) added by the DP mechanism, i.e., \(O_i \triangleq S_i + Z_i\). The Gaussian mechanism to ensure \((\lambda,{\epsilon})\)-Rényi DP sets \(Z_i \sim \mathcal{N}\left(\mathbf{0},\;0.5\;({\lambda}/\epsilon)\; \Delta_2^2(g)\;\boldsymbol{I} \right)\). We depict the graphical model of our multi-party setting in Fig. 4.

Figure 4: In the graphical model above, all parties share the same prior belief \(p(\theta)\) of model parameters \(\theta\) and prior belief \(p(\omega)\) of data parameters \(\omega\). The mediator models its beliefs of the SS of each party separately and only observes the perturbed SS \({\boldsymbol{o}}_i\) of every party \(i \in N\) (thus, only \(O_i\) is shaded). The sufficient statistic \(S_i\) is generated from the model inputs \({\boldsymbol{X}}_i\) and the model output \({\boldsymbol{y}}_i\) (which depends on the model parameters \(\theta\)). We illustrate the relationship between \(\omega\), \(X_i\), and \(S_i\) as dashed lines as they may be modeled differently in the various DP noise-aware inference methods. See [25], [26] for their respective graphical models and details.

9.3.0.1 Differences between exact, noise-naive and noise-aware inference.

When the mediator observes the exact SS \({\boldsymbol{s}}_i\) from party \(i\), the exact posterior belief \(p(\theta|S_i = {\boldsymbol{s}}_i)\) can be computed in closed form based on App. 9.1. However, when the mediator only observes the perturbed SS \({\boldsymbol{o}}_i\), the mediator can only compute the noise-naive and noise-aware posterior beliefs instead. The noise-naive posterior belief \(p(\theta|S_i = {\boldsymbol{o}}_i)\) will neither reflect the unobservability of the exact SS random variable \(S_i\) accurately nor quantify the impact of the DP noise \(Z_i\). In contrast, for any party, the DP noise-aware posterior belief \(p(\theta|O_i = {\boldsymbol{o}}_i)\), conveniently abbreviated as \(p(\theta|{\boldsymbol{o}}_i)\), will quantify the impact of the noise added by the DP mechanism. (For a coalition \(C\) of parties, the DP noise-aware posterior belief is \(p(\theta|{\boldsymbol{o}}_C) \triangleq p(\theta|\{O_i = {\boldsymbol{o}}_i\}_{i \in C}))\), as described in Footnote 6.) The works of [24]–[26] have shown that DP noise-aware inference leads to a posterior belief that is better calibrated (i.e., lower bias and better quantification of uncertainty without overconfidence) and of higher utility (i.e., closer to the non-private posterior belief), thus a better predictive performance.

The main challenge of noise-aware inference lies in tractably approximating the integral \(p(O_i={\boldsymbol{o}}_i|\theta) = \int p({\boldsymbol{s}}_i|\theta)p({\boldsymbol{o}}_i|{\boldsymbol{s}}_i) \,\text{d}{\boldsymbol{s}}_i\) and \(p({\boldsymbol{s}}_i|\theta) = \int_{\{ {\mathcal{D}}=({\boldsymbol{X}}, {\boldsymbol{y}}): g({\mathcal{D}}) = {\boldsymbol{s}}_i \}} p({\boldsymbol{X}}, {\boldsymbol{y}}| \theta)\, \text{d}{\boldsymbol{X}}d{\boldsymbol{y}}\) over all datasets. [24]–[26] exploit the observation that as the SS sum \(c\) individuals, the central limit theorem guarantees that the distribution \(p({\boldsymbol{s}}_i|\theta)\) can be well-approximated by the Gaussian distribution \(\mathcal{N}(c\mu_g, c\Sigma_g)\) for large \(c\) [25]. Here, \(\mu_g\) and \(\Sigma_g\) are the mean and covariance of an individual’s SS. [24]–[26], [49] prescribe how to compute \(\mu_g\) and \(\Sigma_g\) in closed form from the sampled \(\theta\) parameters and moments of \({\boldsymbol{x}}\) and set the noise of the DP mechanism based on a sensitivity analysis. To approximate the posterior \(p(\theta|{\boldsymbol{o}})\), Markov Chain Monte Carlo (MCMC) sampling steps are needed. [25] propose to use Gibbs sampling, an algorithm that updates a group of parameters at a time and exploits conditional independence, for Bayesian linear regression models. [26] use the No-U-Turn [50] sampler and utilizes Hamiltonian dynamics to explore the parameter space more efficiently for generalized linear models. We describe the BLR Gibbs sampler adapted for our multi-party setting in Algo [algo:gibbs].

Figure 5: BLR Gibbs sampler [25] from noise-aware posterior \(p(\theta|O_N = {\boldsymbol{o}}_N) \propto \int \prod_{i \in N}\left[p({\boldsymbol{o}}_i|{\boldsymbol{s}}_i)\;p({\boldsymbol{s}}_i|\theta)\right]p(\theta) \;\,\text{d}{\boldsymbol{s}}_1\dotsi\,\text{d}{\boldsymbol{s}}_n\). The algorithm (repeatedly) sample the latent variables \(S_i\), \(\omega\) and \(\theta\) sequentially.

10 Key Differences with Existing Data Valuation, Collaborative ML, and DP/FL Works↩︎

Figure 6: Our work, \(\star\), uniquely satisfies all \(3\) desiderata.When parties share information computed from their data, we ensure that every party has at least its

required DP w.r.t. the mediator, receives a collaboratively trained model, and receives a higher reward for sharing higher-quality data than

the others.

It is not trivial to (i) add DP to \(\blacktriangleleft\) while simultaneously enforcing a privacy-valuation trade-off, (ii) add data sharing incentives to \(\blacktriangledown\) (i.e.,

design valuation functions and rewards),and (iii) achieve \(\blacktriangleright\) as access to a party’s dataset (or a coalition’s datasets) is still needed for its valuation in [51].

Difference with existing data valuation and collaborative ML works considering incentives. Our work aims to additionally (A) offer parties assurance about privacy but (B) deter them from selecting excessive privacy guarantees. We achieve (A) by ensuring differential privacy (see definitions in App. 9.2) through only collecting the noisier/perturbed version of each party’s sufficient statistics (see App. 9.1). To achieve (B), we must assign a lower valuation (and reward) to a noisier SS. Our insight is to combine noise-aware inference (that computes the posterior belief of the model parameters given the perturbed SS) with the Bayesian surprise valuation function. Lastly, (C) we propose a mechanism to generate model rewards (i.e., posterior samples of the model parameters) that attain the target reward value and are similar to the grand coalition’s model.

Difference with federated learning and differential privacy works. Existing FL works have covered learning from decentralized data with DP guarantees. However, these works may not address the question: Would parties want to share their data? How do we get parties to share more to maximize the gain from the collaboration? Our work aims to address these questions and incentivize (A) parties to share more, higher-quality data and (B) select a weaker DP guarantee. To achieve (A), it is standard in data valuation methods [6], [16], [17] to use the Shapley value to value a party relative to the data of others as it considers a party’s marginal contribution to all coalitions (subsets) of parties. This would require us to construct and value a trained model for each coalition \(C \subseteq N\): To ease aggregation (and to avoid requesting more information or incurring privacy costs per coalition), we consider sufficient statistics (see App. 9.1). To achieve (B), we want a valuation function that provably ensures a lower valuation for a stronger DP guarantee. Our insight is to combine noise-aware inference (that computes the posterior belief of the model parameters given perturbed SS) with the Bayesian surprise valuation function. Lastly, like the works of [1], [5], (C) we generate a model reward that attains a target reward value (which parties can use for future predictions). Our model reward is in the form of posterior samples of the model parameters instead. We propose a new mechanism to control/generate model rewards that work using SS and preserve similarity to the grand coalition’s model.

Fig. 6 shows how our work in this paper fills the gap in the existing works.

11 Characteristic/Valuation Function↩︎

11.1 Proofs of properties for valuation function↩︎

In this section, we will use the random variable notations defined in App. 9. Moreover, we abbreviate the set of perturbed SS random variables corresponding to a coalition \(C\) of parties as \(O_C \triangleq \{O_i\}_{i \in C}\).

Let \(\mathbb{H}\left(a\right)\) denote the entropy of the variable \(a\).

11.1.0.1 Relationship between KL divergence and information gain.

\[\begin{align} \mathbb{I}\left(\theta; O_C\right) &= \mathbb{E}_{{\boldsymbol{o}}_C \sim O_C}\left[D_{\mathrm{KL}}\left({p(\theta|{\boldsymbol{o}}_C)};{p(\theta)}\right)\right] \\ &= \mathbb{H}\left(\theta\right) - \mathbb{E}_{{\boldsymbol{o}}_C \sim O_C}\left[\mathbb{H}\left(\theta|O_C={\boldsymbol{o}}_C\right)\right]\;. \end{align}\]

11.1.0.2 Party monotonicity (V2).

Consider two coalitions \(C \subset C' \subseteq N\). By taking an expectation w.r.t. random vector \(O_{C'}\), \[\mathbb{E}_{{\boldsymbol{o}}_{C'} \sim O_{C'}}\left[v_{C}\right] = \mathbb{E}_{{\boldsymbol{o}}_C \sim O_C}\left[D_{\mathrm{KL}}\left({p(\theta|{\boldsymbol{o}}_C)};{p(\theta)}\right)\right] = \mathbb{I}\left(\theta; O_C\right) = \mathbb{H}\left(\theta\right) - \mathbb{H}\left(\theta|O_C\right)\] and \[\mathbb{E}_{{\boldsymbol{o}}_{C'} \sim O_{C'}}\left[v_{C'}\right] = \mathbb{E}_{{\boldsymbol{o}}_{C'} \sim O_{C'}}\left[D_{\mathrm{KL}}\left({p(\theta|{\boldsymbol{o}}_{C'})};{p(\theta)}\right)\right] = \mathbb{I}\left(\theta; O_{C'}\right) = \mathbb{H}\left(\theta\right) - \mathbb{H}\left(\theta|O_C, O_{C' \setminus C}\right) .\] Then, \(\mathbb{E}_{{\boldsymbol{o}}_{C'} \sim O_{C'}}\left[v_{C'}\right] > \mathbb{E}_{{\boldsymbol{o}}_{C'} \sim O_{C'}}\left[v_{C}\right]\) as conditioning additionally on \(O_{C' \setminus C}\) should not increase the entropy (i.e., \(\mathbb{H}\left(\theta|O_C, O_{C' \setminus C}\right) \leq \mathbb{H}\left(\theta|O_C\right)\)) due to the “information never hurts" bound for entropy [52].

11.1.0.3 Privacy-valuation trade-off (V3).

Figure 7: Graphical model to illustrate privacy-valuation trade-off (V3) where \(O_i \triangleq S_i + Z^1_i\) and \(O^s_i \triangleq O_i + Z^2_i\).

Let \({\epsilon}_i^s < {\epsilon}_i\), and \(Z^1_i\) and \(Z^2_i\) be independent Gaussian distributions with mean \(0\) and, respectively, variance \({a_i}/{{\epsilon}_i}\) and \(({a_i}/{{\epsilon}^s_i}) - ({a_i}/{{\epsilon}_i}) > 0\) where \(a_i \triangleq 0.5\;\lambda\;\Delta_2^2(g)\), function \(g\) computes the exact SS \({\boldsymbol{s}}_i\) from local dataset \({\mathcal{D}}_i\), and \(\Delta_2(g)\) denotes its \(\ell_2\)-sensitivity. Adding \(Z^1_i\) to \(S_i\) will ensure \((\lambda,{\epsilon}_i)\)-DP while adding both \(Z^1_i\) and independent \(Z^2_i\) to \(S_i\) is equivalent to adding Gaussian noise of variance \({a_i}/{{\epsilon}^s_i}\) to ensure \((\lambda,{\epsilon}^s_i)\)-DP.18 From the graphical model in Fig. 7 and the Markov chain \(\theta \rightarrow O_i \rightarrow O^s_i\), the following conditional independence can be observed: \(\theta \mathrel{\perp\mspace{-10mu}\perp}O^s_i \mid O_i\). By the data processing inequality, no further processing of \(O_i\), such as the addition of noise, can increase the information of \(\theta\). Formally, \(\mathbb{I}\left(\theta; O_i\right) \geq \mathbb{I}\left(\theta; O^s_i\right)\). Simultaneously, \(\theta \centernot{\mathrel{\perp\mspace{-10mu}\perp}}O_i \mid O^s_i\). Hence, \(\mathbb{I}\left(\theta; O_i\right) \neq \mathbb{I}\left(\theta; O^s_i\right) \Rightarrow (\mathbb{I}\left(\theta; O_i\right) > \mathbb{I}\left(\theta; O^s_i\right))\).

To extend to any coalition \(C\) containing \(i\), by the chain rule of mutual information, \[\begin{align} \mathbb{I}\left(\theta; O_i, O^s_i, O_{C \setminus \{i\}}\right) &= \mathbb{I}\left(\theta; O_i, O_{C \setminus \{i\}}\right) + \mathbb{I}\left(\theta; O^s_i | O_i, O_{C \setminus \{i\}}\right) \\ &= \mathbb{I}\left(\theta; O_i^s, O_{C \setminus \{i\}}\right) + \mathbb{I}\left(\theta; O_i | O_i^s, O_{C \setminus \{i\}}\right)\;. \end{align}\] As conditional independence \(\theta \mathrel{\perp\mspace{-10mu}\perp}O^s_i \mid O_i, O_{C \setminus \{i\}}\) and dependence \(\theta \centernot{\mathrel{\perp\mspace{-10mu}\perp}}O_i \mid O^s_i, O_{C \setminus \{i\}}\) still hold, \(\mathbb{I}\left(\theta; O^s_i | O_i, O_{C \setminus \{i\}}\right) = 0\) and \(\mathbb{I}\left(\theta; O_i | O_i^s, O_{C \setminus \{i\}}\right) > 0\), respectively. It follows from the above expression that \(\mathbb{I}\left(\theta; O_C\right) > \mathbb{I}\left(\theta; O_i^s, O_{C \setminus \{i\}}\right)\), which implies \(\mathbb{E}_{O_C} [v_C] > \mathbb{E}_{O_{C \setminus \{i\}}, O_i^s} [v_C^s]\;.\) For future work, the proof can be extended to other DP mechanisms.

11.2 Proof of Remark in Sec. 3↩︎

Let the alternative valuation of a coalition \(C\) be \(v'_C \triangleq D_{\mathrm{KL}}\left({p(\theta|{\boldsymbol{o}}_N)};{p(\theta)}\right) - D_{\mathrm{KL}}\left({p(\theta|{\boldsymbol{o}}_N)};{p(\theta|{\boldsymbol{o}}_C)}\right)\). Then, \(v'_\emptyset = 0\) and \(v'_N = D_{\mathrm{KL}}\left({p(\theta|{\boldsymbol{o}}_N)};{p(\theta)}\right)\). It can be observed that

\(\bullet\)

Unlike \(v_C\), \(v'_C\) may be negative.

Unlike \(v_N\), \(v'_N\) is guaranteed to have the highest valuation as the minimum KL divergence \(D_{\mathrm{KL}}\left({p(\theta|{\boldsymbol{o}}_N)};{q(\theta)}\right)\) is \(0\) only when \(q(\theta) = p(\theta|{\boldsymbol{o}}_N)\). This is desirable when we want the grand coalition to be more valuable than the other coalitions but odd when we consider the non-private posterior \(q(\theta) = p(\theta|{\boldsymbol{s}}_N)\): Intuitively, the model computed using \({\boldsymbol{s}}_N\) should be more valuable using \(v'\) than that computed using the perturbed SS \({\boldsymbol{o}}_N\).

By taking an expectation w.r.t. \({\boldsymbol{o}}_N\), \[\begin{align} \mathbb{E}_{p(O_N)}\left[v'_C\right] &= \mathbb{I}\left(\theta; O_N\right) - \mathbb{E}_{{\boldsymbol{o}}_C \sim p(O_C)}\left[\mathbb{E}_{{\boldsymbol{o}}_{N \setminus C} \sim p(O_{N \setminus C}|{\boldsymbol{o}}_C)}\left[D_{\mathrm{KL}}\left({p(\theta|{\boldsymbol{o}}_N = \{{\boldsymbol{o}}_{N \setminus C}, {\boldsymbol{o}}_C\})};{p(\theta|{\boldsymbol{o}}_C)}\right)\right]\right] \\ &= \mathbb{I}\left(\theta; O_N\right) - \mathbb{E}_{{\boldsymbol{o}}_C \sim p(O_C)}\left[ \mathbb{E}_{{\boldsymbol{o}}_{N \setminus C} \sim p(O_{N \setminus C}|{\boldsymbol{o}}_C)}\left[\mathbb{E}_{\theta \sim p(\theta| {\boldsymbol{o}}_{N\setminus C}, {\boldsymbol{o}}_C)}\left[ \log \frac{p(\theta|{\boldsymbol{o}}_{N \setminus C}, {\boldsymbol{o}}_C)}{p(\theta|{\boldsymbol{o}}_C)} \right]\right]\right] \\ &\overset{(i)}{=} \mathbb{I}\left(\theta; O_N\right) - \mathbb{E}_{{\boldsymbol{o}}_C \sim p(O_C)}\left[ \mathbb{E}_{\theta, {\boldsymbol{o}}_{N \setminus C} \sim p(\theta, O_{N \setminus C}|{\boldsymbol{o}}_C)}\left[ \log \frac{p(\theta, {\boldsymbol{o}}_{N \setminus C} | {\boldsymbol{o}}_C)}{p(\theta|{\boldsymbol{o}}_C)\;p({\boldsymbol{o}}_{N \setminus C}|{\boldsymbol{o}}_C)} \right]\right] \\ &= \mathbb{I}\left(\theta; O_N\right) - \mathbb{E}_{{\boldsymbol{o}}_C \sim p(O_C)}\left[D_{\mathrm{KL}}\left({p(\theta, O_{N \setminus C} |{\boldsymbol{o}}_C)};{p(\theta|{\boldsymbol{o}}_C)\;p(O_{N \setminus C}|{\boldsymbol{o}}_C)}\right)\right] \\ & \overset{(ii)}{=} \mathbb{I}\left(\theta; O_N\right) - \mathbb{I}\left(\theta; O_{N \setminus C} |O_C\right) \\ & = \mathbb{I}\left(\theta; O_C\right) = \mathbb{E}_{p(O_N)}\left[v_C\right]\;. \end{align}\] In equality (i) above, we multiply both the numerator and denominator within the log term by \(p({\boldsymbol{o}}_{N \setminus C}|{\boldsymbol{o}}_C)\) and consider the expectation of the joint distribution since by the chain rule of probability, \(p(\theta, O_{N \setminus C}|{\boldsymbol{o}}_C) = p(O_{N \setminus C}|{\boldsymbol{o}}_C)\;p(\theta|O_{N \setminus C}, {\boldsymbol{o}}_C)\). Equality (ii) is due to the definition of conditional mutual information.

11.3 KL Estimation of Valuation Function↩︎

KL estimation is only a tool and not the focus of our work. Our valuation will become more accurate and computationally efficient as KL estimation tools improve.

11.3.0.1 Recommended - nearest-neighbors [31], [53].

Given \(\Theta^{\text{post}}\) and \(\Theta^{\text{prior}}\) which consists of \(m\) samples of \(\theta\) (with dimension \(d\)) from, respectively, the posterior \(p(\theta|{\boldsymbol{o}}_C)\) and prior \(p(\theta)\), we estimate the KL divergence as \[\frac{d}{m} \sum_{\theta \in \Theta^{\text{post}}} \log \frac{\delta_k^{\text{prior}}(\theta)}{\delta_k^{\text{post}}(\theta)} + \log \frac{m}{m-1}\] where \(\delta_k^{\text{post}}(\theta)\) is the distance of the sampled \(\theta\) to its \(k\)-th nearest neighbor in \(\Theta^{\text{post}}\) (excluding itself) and \(\delta_k^{\text{prior}}(\theta)\) is the distance of the sampled \(\theta\) to the \(k\)-th nearest neighbor in \(\Theta^{\text{prior}}\).

The number \(k\) of neighbors is tunable and analyzed in the follow-up work of [54]. As the number \(m\) of samples increases, the bias and variance decrease. The convergence rate is analysed by [55]. Moreover, the estimate converges almost surely [31] and is consistent [53] for independent and identically distributed (i.i.d.) samples. Furthermore, as the KL divergence is invariant to metric reparameterizations, the bias can be reduced by changing the distance metric [53], [56].

To generate i.i.d. samples, we suggest the usage of the NUTS sampler or thinning (keeping only every \(t\)-th sample). We observe that if the samples from \(\theta\mid{\boldsymbol{o}}_C\) are non-independent, i.e., correlated and close to the previous sample, we may underestimate its distance to the \(k\)-th distinct neighbor in \(\theta\mid{\boldsymbol{o}}_C\), \(\delta_k^{\text{post}}(\theta)\), and thus overestimate the KL divergence. This is empirically verified in Table 2. We have also observed that the KL divergence may be underestimated when the posterior is concentrated at a significantly different mean from the prior.

11.3.0.2 Recommended for large \({\epsilon}\) - approximate \(p(\theta|{\boldsymbol{o}}_C)\) using maximum likelihood distribution from the \(p(\theta)\)’s exponential family.

When a small noise is added to ensure weak DP, we can approximate \(p(\theta|{\boldsymbol{o}}_C)\) with a distribution \(q\) from the same exponential family as \(p(\theta|{\boldsymbol{s}}_C)\). We can (i) determine \(q\)’s parameters via maximum likelihood estimation (MLE) from the Gibbs samples19 and (ii) compute the KL divergence in closed form.

However, the KL estimate is inaccurate (i.e., large bias) when the distribution \(q\) is a poor fit for the posterior \(p(\theta|{\boldsymbol{o}}_C)\). Future work can consider using normalizing flows as \(q\) to improve the fit, reduce the estimation bias, and work for a larger range of DP guarantees \({\epsilon}\). However, this KL estimation method may be computationally slow and risks overfitting.