FashionEngine: Interactive 3D Human Generation and Editing via Multimodal Controls

April 02, 2024

Abstract

We present FashionEngine, an interactive 3D human generation and editing system that creates 3D digital humans via user-friendly multimodal controls such as natural language, visual references, and hand-drawn sketches. FashionEngine automates 3D human production with three key components: 1) a pre-trained 3D human diffusion model that learns to model 3D humans in a semantic UV latent space from 2D image training data, which provides strong priors for diverse generation and editing tasks; 2) a Multimodality-UV Space that encodes the texture appearance, shape topology, and textual semantics of human clothing in a canonical UV-aligned space, which faithfully aligns the user's multimodal inputs with the implicit UV latent space for controllable 3D human editing (the multimodality-UV space is shared across different user inputs, such as texts, images, and sketches, which enables various joint multimodal editing tasks); and 3) a Multimodality-UV Aligned Sampler that learns to sample high-quality and diverse 3D humans from the diffusion prior. Extensive experiments validate FashionEngine's state-of-the-art performance on conditional generation and editing tasks. In addition, we present an interactive user interface for FashionEngine that supports both conditional and unconditional generation, as well as editing tasks including pose/view/shape control, text-, image-, and sketch-driven 3D human editing, and 3D virtual try-on, in a unified framework. Our project page is at: https://taohuumd.github.io/projects/FashionEngine.


1 Introduction

With the development of the gaming, virtual reality, and film industries, there is an increasing demand for high-quality 3D content, especially 3D avatars. Traditionally, producing 3D avatars requires days of work from highly skilled 3D content creators, which is both time-consuming and expensive. There have been some attempts to automate the avatar generation pipeline [1], [2]. However, these methods usually lack control over the generation process, which makes them difficult to use in practice, as shown in Tab. 1. To reduce the friction of using learning-based avatar generation algorithms, we propose FashionEngine, an interactive system that enables the generation and editing of high-quality, photorealistic 3D humans. The process is controlled by multiple modalities, e.g., texts, images, and hand-drawn sketches, making the system easy to use even for non-expert users.

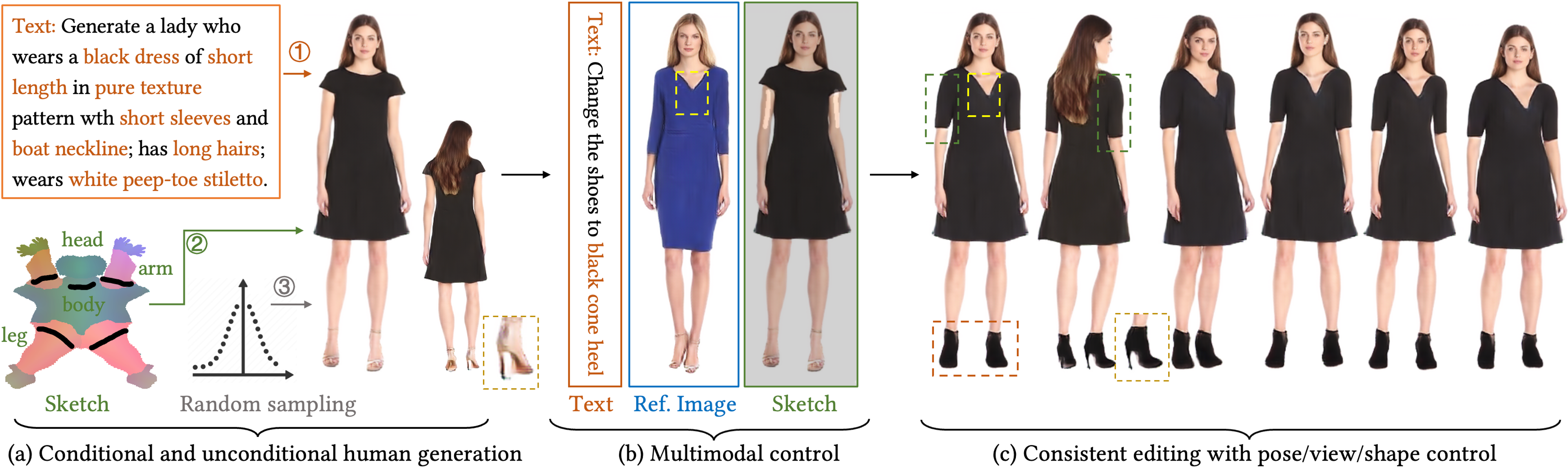

FashionEngine automates 3D human production in three steps, as shown in Fig. [fig:teaser]. In the first step, a candidate 3D human is generated either randomly or conditionally from text descriptions or hand-drawn sketches. Next, users can provide text or reference images, or simply draw sketches, to edit the appearance of the 3D human interactively. In the last step, final adjustments to pose and shape can be made before the human is rendered into images or videos. A key challenge for human editing lies in aligning the user inputs with the human representation space. FashionEngine addresses this challenge with three components.

Firstly, FashionEngine utilizes the 3D human priors learned by a pre-trained model [3] that models humans in a semantic UV latent space. The UV latent space preserves the articulated structure of the human body and enables detailed appearance capture and editing. On top of this latent space, a 3D-aware auto-decoder embeds the properties learned from the 2D training data and decodes the latents into 3D humans under different poses, viewpoints, and clothing styles. Furthermore, a 3D diffusion model [3] learns the distribution of the latent space for generative human sampling, which serves as a strong prior for different editing tasks.

Secondly, we construct a Multimodality-UV Space from the learned prior, which encodes the appearance, shape topology, and textual semantics of human clothing in a canonical UV space. The multimodal user inputs (e.g., texts, images, and sketches) are faithfully aligned with the implicit UV latent space for controllable 3D human editing. Notably, the multimodality-UV space is shared across different user inputs, which enables various joint multimodal editing tasks.

Thirdly, we propose Multimodality-UV Aligned Samplers that, conditioned on the user inputs, sample high-quality 3D humans from the diffusion prior. Specifically, a Text-UV Aligned Sampler and a Sketch-UV Aligned Sampler are proposed for text- and sketch-driven generation or editing, respectively. The key step is to search the Multimodality-UV Space for latents that are well aligned with the user inputs, which enables controllable generation and editing.

Quantitative and qualitative experiments are performed on different generation and editing tasks, including conditional generation and text-, image-, and sketch-driven 3D human editing. Experimental results illustrate the versatility and scalability of FashionEngine. In addition, our system renders \(512^2\)-resolution images at about 9.2 FPS on an NVIDIA V100 GPU, which enables interactive editing. In summary, our contributions are as follows.

1) We propose an interactive 3D human generation and editing system, FashionEngine, which enables easy and efficient production of high-quality 3D humans.

2) Making use of a pre-trained 3D human prior, we propose a 3D human editing framework in the UV-latent space, which effectively unifies controlling signals from multiple modalities, e.g., texts, images, and sketches, for joint multimodal editing.

3) Extensive experiments show the state-of-the-art performance of the UV-based FashionEngine system in 3D human editing.

2 Related Work

2.0.0.1 Human Image Generation and Editing.

Generative adversarial networks (GANs) [4]–[7] have achieved great success in single-category generation, e.g., human faces. However, generating the whole body with diverse clothing and poses is more challenging for GANs [8]–[15]. The disentanglement of the GAN latent space provides opportunities for image editing [16]–[21], and DragGAN [22] presents an intuitive interface for easy image editing. The recent success of diffusion models in general image generation has also motivated researchers to apply them to human generation [23], [24].

2.0.0.2 3D Human Generation and Editing.

With the advancement of volume rendering [25], some existing approaches train 3D-aware generative networks from 2D image collections [26]–[30], and 3D-aware human GANs have been studied that model garments and humans in a unified framework [1], [2], [31], [32]. These methods use a 1D latent code, which makes editing challenging. Beyond such data-driven methods, 2D priors [33], [34] can also be used to enable text-to-3D human generation [35]–[37]. Most recently, 3D diffusion models have also been employed for unconditional 3D human generation, such as PrimDiffusion [38] and StructLDM [3]. StructLDM models humans in a boundary-free UV latent space [39], in contrast to the traditional UV space used in [38], [40], [41], and learns a latent diffusion model for unconditional generation. In this work, making use of the UV-based latent space and the unconditional diffusion prior [3], we propose conditional 3D human editing with multimodal controls.

| Methods | Uncond. | Text | Image | Sketch | 3D-aware |
|---|---|---|---|---|---|
| EG3D [28] | ✔ | ✔ | |||
| StyleSDF [42] | ✔ | ✔ | |||
| EVA3D [1] | ✔ | ✔ | |||
| AG3D [2] | ✔ | ✔ | |||
| StructLDM [3] | ✔ | ✔ | |||
| DragGAN [22] | ✔ | ✔ | |||
| InstructP2P [43] | ✔ | ✔ | |||
| Text2Human [11] | ✔ | ||||
| Text2Performer [44] | ✔ | ||||
| FashionEngine (Ours) | ✔ | ✔ | ✔ | ✔ | ✔ |

2.0.0.3 3D Diffusion Models.

Diffusion models have proven able to capture complex distributions. Directly learning diffusion models on 3D representations has been explored in recent years. Diffusion models on point clouds [45]–[47], voxels [48], [49], and implicit models [50] have been used for coarse shape generation. As a compact 3D representation, the tri-plane [28] can be used with 2D network backbones for efficient 3D generation [51]–[54].

2.0.0.4 Garment Modeling.

Garments can also be modeled separately via 3D representations [55]–[58]. GarmentCode [59] designs synthetic parameterized garments. DressCode [60] learns text-driven 3D garment generation from synthetic 3D garments, and [61] learns garment shapes from 3D scans. In contrast, our system is built upon a generative model learned from diverse real-world internet videos, which enables photorealistic 3D human generation and editing with multimodal controls.

3 FashionEngine

FashionEngine learns to dress humans interactively and controllably, and it works in three stages. 1) In the first stage, a 3D human appearance prior \(\mathcal{Z}\) is learned from the training dataset by a latent diffusion model [3] (Sec. 3.1). 2) With the prior \(\mathcal{Z}\), users can generate a textured, clothed 3D human either by randomly sampling \(\mathcal{Z}\) or, for controllable generation, by providing text describing the human appearance or a sketch describing the clothing mask (Sec. 3.3). 3) Users can optionally edit the generated humans by providing the desired appearance styles in the form of texts, sketches, or reference images (Sec. 3.4).

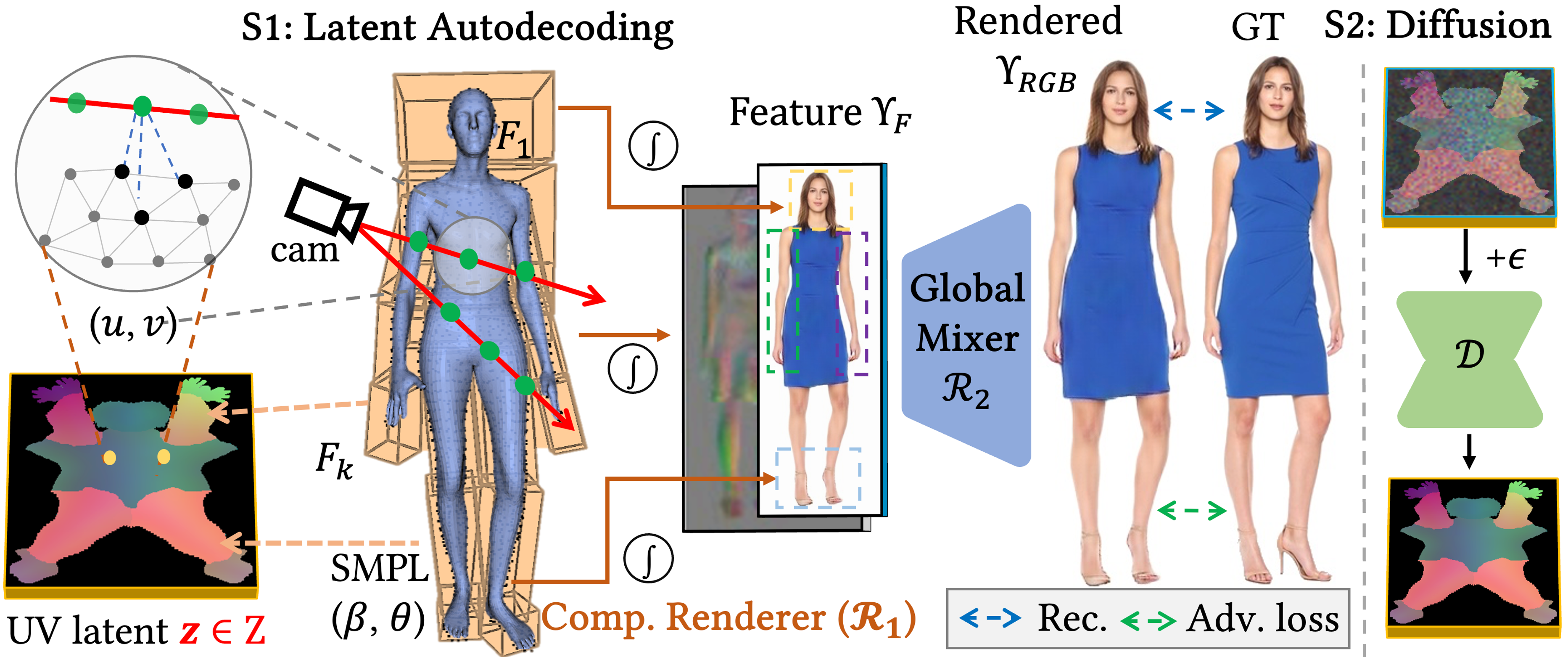

Figure 1: 3D human prior learning [3] in two stages (S1 and S2). S1 learns an auto-decoder containing a set of structured embeddings \({Z}\) corresponding to the human subjects in the training dataset. The embeddings \({Z}\) are then employed to train a latent diffusion model in the semantic UV latent space in the second stage.

3.1 3D Human Prior Learning

FashionEngine is built upon StructLDM [3], which models humans in a structured semantic UV-aligned space and learns 3D human priors in two stages. In the first stage, an auto-decoder is learned from a training dataset of human subject images with estimated SMPL and camera parameters, together with a set of structured embeddings \({Z}\) corresponding to the training subjects. In the second stage, a latent diffusion model is learned from the embeddings \({Z}\) in the semantic UV latent space, which provides strong priors for diverse and realistic human generation. The prior-learning pipeline is depicted in Fig. 1; we refer readers to StructLDM [3] for more details. We use the priors learned by StructLDM for the editing tasks that follow.

3.2 Multimodality-UV Space

With the human prior \(\mathcal{Z}\) learned in the template UV space, we construct a Multimodality-UV Space that is aligned with the latent space for multimodal generation.

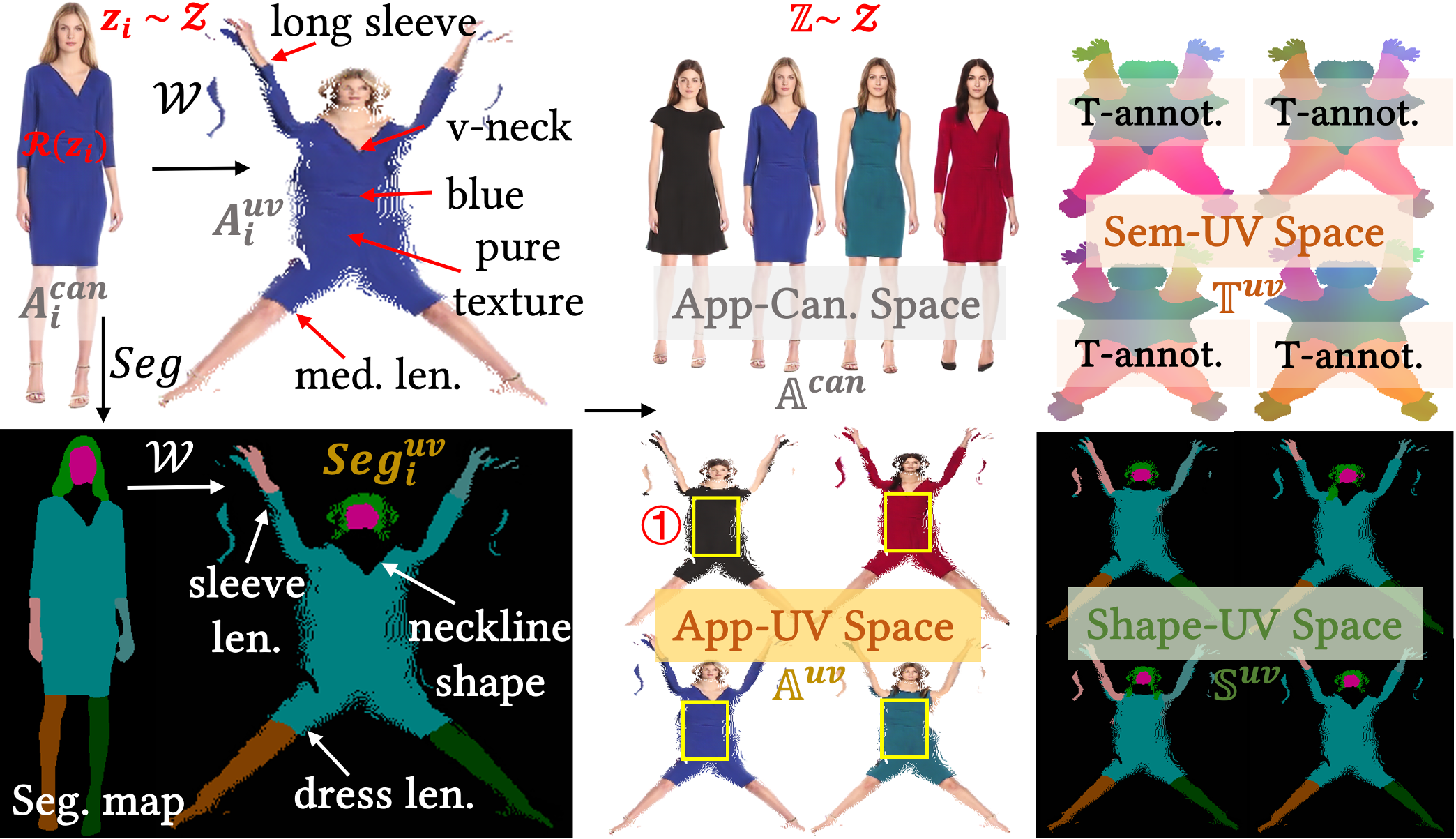

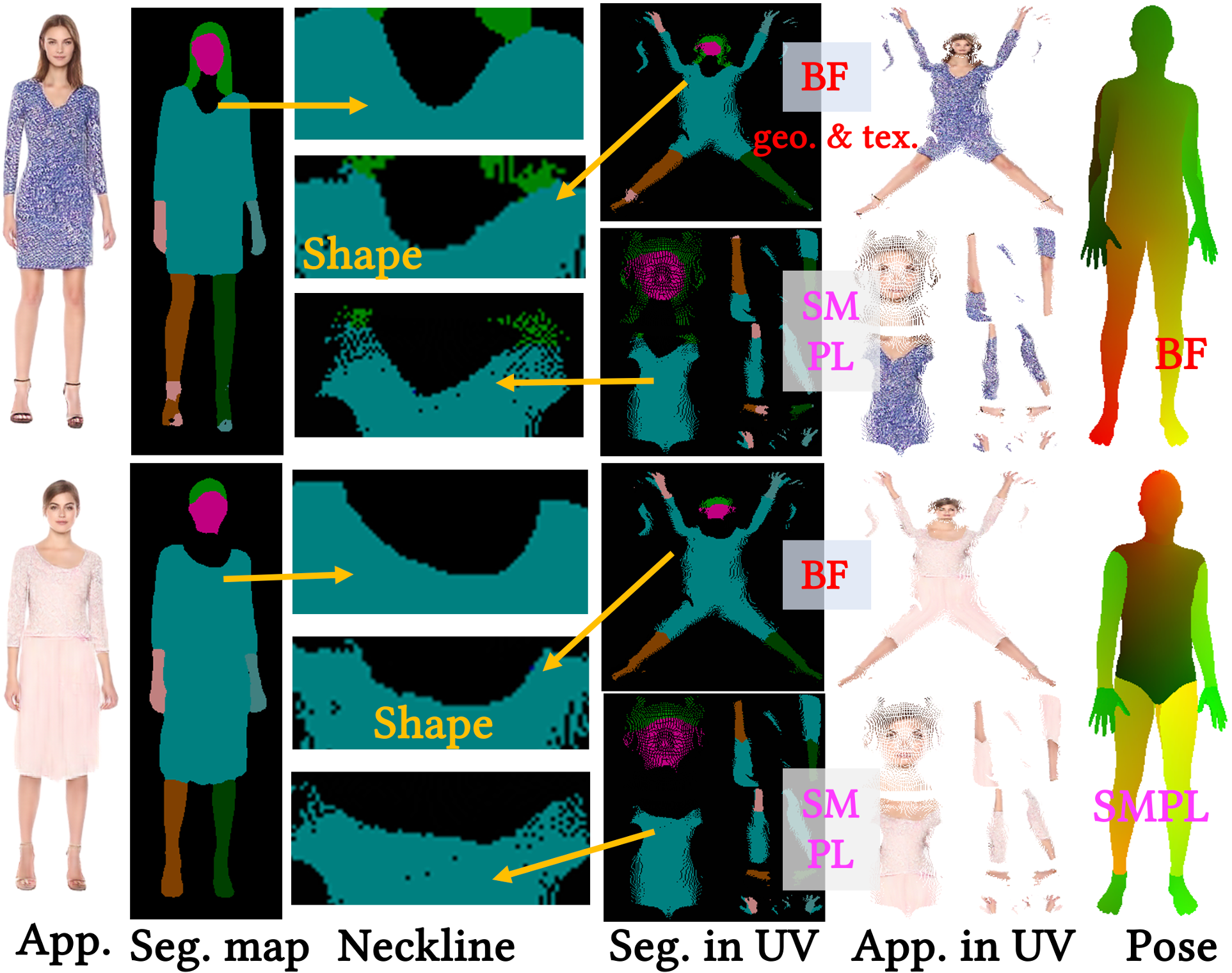

We sample a set of base latents \(\mathbb{Z} \sim \mathcal{Z}\) from the learned prior and construct a Multimodality-UV Space by rendering and annotating them. Each latent \(z_i \in \mathbb{Z}\) is rendered by the renderer \(\mathcal{R}\) into an image \(A^{can}_i\) in a canonical space, under a fixed pose, shape, and camera intrinsic/extrinsic parameters. The rendered image \(A^{can}_i\) is then warped by \(\mathcal{W}\) to UV space, yielding \(A^{uv}_i\), using the UV correspondences (the UV coordinate map in the posed space). Note that although \(A^{uv}_i\) is warped from only a single partial view, it preserves clothing topology attributes well and in a user-readable way, such as the length of sleeves, the neckline shape, and the length of lower clothing. In addition, each latent \(z_i\) is annotated with detailed text descriptions, as shown in Fig. 2. We also render a segmentation map for each latent using [62] and warp it to UV space as \({Seg}^{uv}_i\), which likewise preserves clothing shape attributes such as sleeve length, neckline shape, and the length of lower clothing.
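The warping operator \(\mathcal{W}\) can be pictured as a scatter from image pixels into a UV texture grid, driven by the per-pixel UV coordinate map. The sketch below is a minimal illustration under our own assumptions (nearest-texel scatter, UV coordinates in \([0,1]\), `None` marking background pixels); the function name `warp_to_uv` is hypothetical and not taken from the FashionEngine code. Texels that no pixel maps to stay empty, which mirrors why a single-view warp yields only a partial UV map.

```python
from typing import List, Optional, Sequence, Tuple, TypeVar

T = TypeVar("T")

def warp_to_uv(
    image: Sequence[Sequence[T]],
    uv_map: Sequence[Sequence[Optional[Tuple[float, float]]]],
    tex_size: int,
) -> List[List[Optional[T]]]:
    """Hypothetical nearest-texel warp of a rendered image into a tex_size x tex_size UV grid.

    uv_map[y][x] holds the (u, v) coordinate in [0, 1] seen at pixel (x, y),
    or None where the pixel shows background. Unseen texels remain None.
    """
    tex: List[List[Optional[T]]] = [[None] * tex_size for _ in range(tex_size)]
    for y, row in enumerate(uv_map):
        for x, uv in enumerate(row):
            if uv is None:  # background pixel: no body surface to warp
                continue
            u, v = uv
            # Map continuous UV coordinates to integer texel indices (clamped at the edge).
            tx = min(int(u * tex_size), tex_size - 1)
            ty = min(int(v * tex_size), tex_size - 1)
            tex[ty][tx] = image[y][x]  # later pixels overwrite earlier ones
    return tex

# Two visible pixels land in opposite corners of a 2x2 texture; the other texels stay unseen.
image = [["red", "blue"]]
uv_map = [[(0.1, 0.1), (0.9, 0.9)]]
print(warp_to_uv(image, uv_map, tex_size=2))  # [['red', None], [None, 'blue']]
```

A production renderer would more likely gather from the image for every texel and handle visibility explicitly; the scatter form is used here only because it makes the partial-coverage effect easy to see.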

We render all the latents in \(\mathbb{Z}\) to construct a multimodal space including an Appearance-Canonical Space (App-Can, \(\mathbb{A}^{can}\)), an Appearance-UV Space (App-UV, \(\mathbb{A}^{uv}\)), a textual Semantics-UV Space (Sem-UV, \(\mathbb{T}^{uv}\)), and a Shape-UV Space (\(\mathbb{S}^{uv}\)), as shown in Fig. 2.

Figure 2: Multimodality-UV Space (Sec. 3.2). Based on the learned prior \(\mathcal{Z}\), we construct a Multimodality-UV space including an Appearance-Canonical Space (App-Can, \(\mathbb{A}^{can}\)), an Appearance-UV Space (App-UV, \(\mathbb{A}^{uv}\)), a textual Semantics-UV Space (Sem-UV, \(\mathbb{T}^{uv}\)), and a Shape-UV Space (\(\mathbb{S}^{uv}\)).

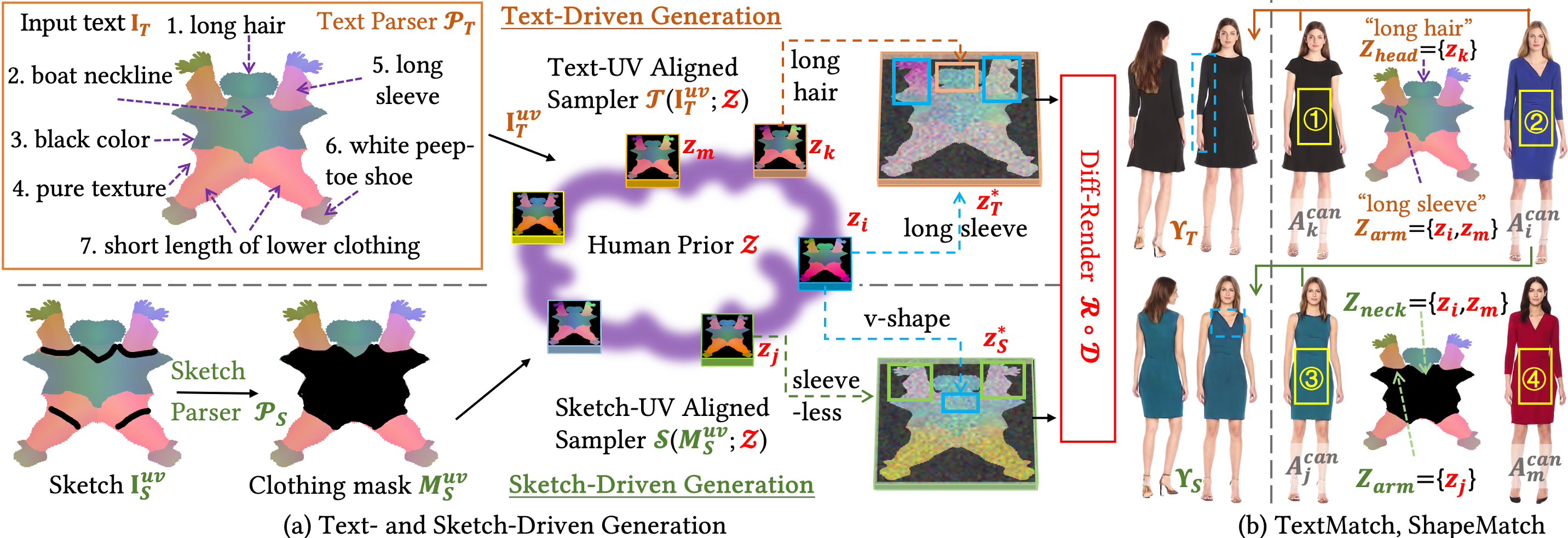

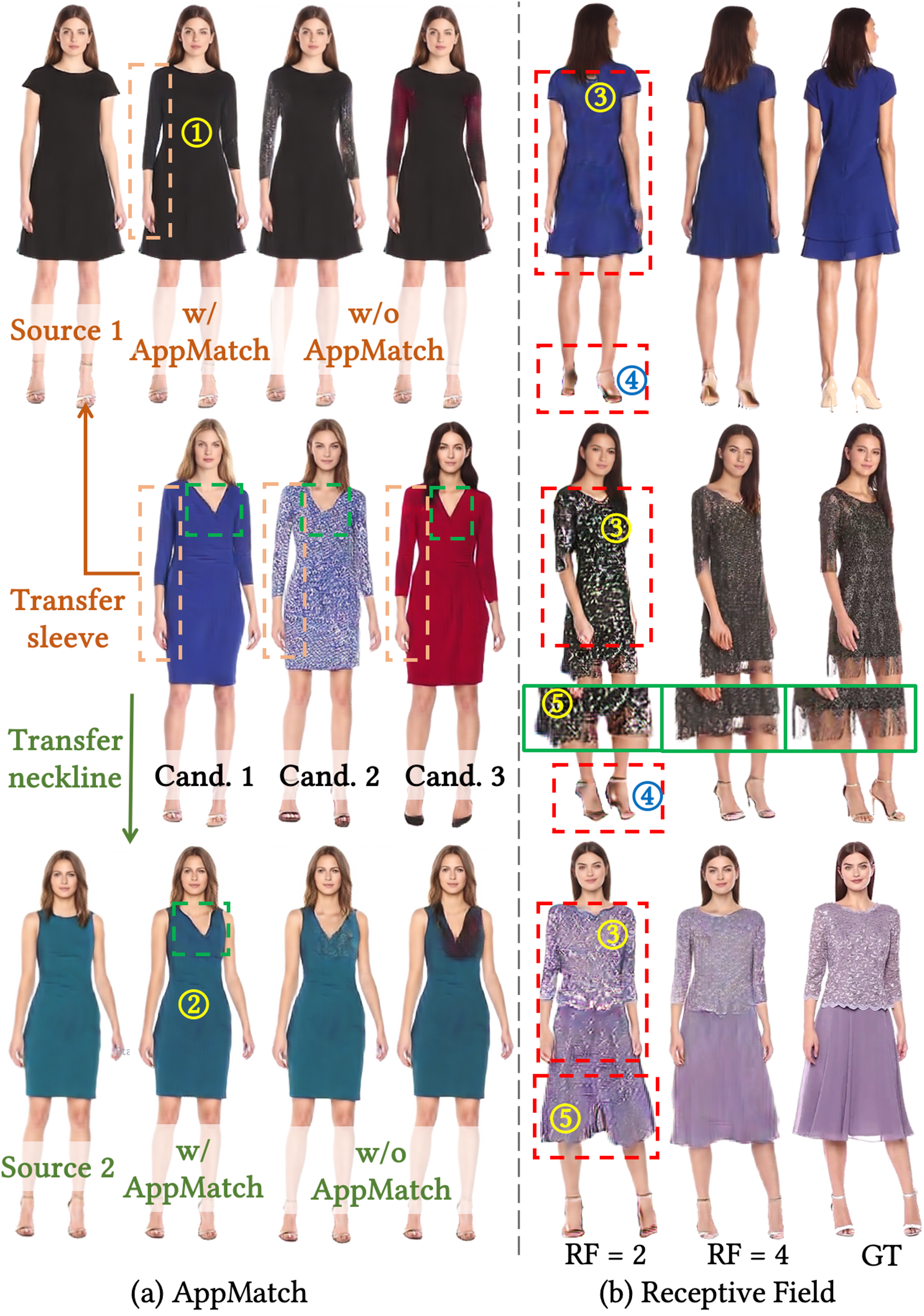

Figure 3: Pipeline of multimodal generation (Sec. 3.3). (a) Text- and sketch-driven generation: given text input \(\mathbf{I}_T\) or sketch input \(\mathbf{I}^{uv}_S\) in the template UV space, we present Text-UV Aligned Samplers and Sketch-UV Aligned Samplers to sample latents (\(z^{*}_{T}\) and \(z^{*}_{S}\)) from the learned human prior \(\mathcal{Z}\) (Sec. 3.1), respectively, which can be rendered into images by latent diffusion and rendering (Diff-Render) [3]. (b) Illustration of TextMatch and ShapeMatch: \(\{z_k, z_i\}\) (①②) and \(\{z_j, z_i\}\) (③②) are taken as the best matches to construct the target latents (\(z^{*}_{T}\) and \(z^{*}_{S}\)) for the text and sketch inputs, based on the TextMatch and ShapeMatch algorithms, respectively.

3.3 Controllable Multimodal Generation

FashionEngine generates human images given text input \(\mathbf{I}_T\) or sketch input \(\mathbf{I}^{uv}_S\) in the template UV space. We present UV-aligned samplers to sample the multimodal-UV space for controllable multimodal generation (Sec. 3.3.1, 3.3.2).

3.3.1 Text-Driven Generation.

Users can provide input text \(\mathbf{I}_T\) describing the appearance of the desired human, including the hairstyle and six clothing properties (clothing color, texture pattern, neckline shape, sleeve length, length of lower clothing, and shoe type), as shown in Fig. 3. These attributes semantically correspond to six parts \(P\) = {head, neck, body, arm, leg, foot} in the UV space. A Text Parser \(\mathcal{P}_T\) (Sec. 3.6) aligns the input text with the semantic body parts in UV space, yielding part-aware Text-UV aligned semantics \(\mathbf{I}^{uv}_T = \mathcal{P}_T(\mathbf{I}_T)\).

3.3.1.1 Text-UV Aligned Sampler.

We further propose a Text-UV Aligned Sampler \(\mathcal{T}\) that samples a latent \(z^{*}_{T} = \mathcal{T}(\mathbf{I}^{uv}_T; \mathcal{Z})\) from the learned prior \(\mathcal{Z}\), conditioned on the input text. The sampler works in two stages. In the first stage, for each body part \(p \in P\), we independently search the Sem-UV Space \(\mathbb{T}^{uv}\) for latents whose annotations semantically match the input text descriptions \(\mathbf{I}^{uv}_T\), using SemMatch: \[\label{eq:sem95match} Z_p = \underset{z_i \in \mathbb{Z}, t^{uv}_i \in \mathbb{T}^{uv}}{\arg\max} SemMatch(t^{uv}_i[p], \mathbf{I}^{uv}_T[p])\tag{1}\] where \(\mathbf{I}^{uv}_T[p]\) denotes the textual semantics of part \(p\). In practice, we keep the top-\(k\) matches, so Eq. 1 yields a set of candidate latents \(Z_p = \{z_0, ..., z_{k-1}\}\) for each body part \(p\).
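The scoring function inside SemMatch is not specified in detail. As a minimal sketch, assuming each latent in the Sem-UV Space stores one attribute phrase per body part and SemMatch is a simple token-overlap (Jaccard) score, the per-part top-\(k\) search of Eq. 1 could look like this; `sem_match` and `top_k_semantic` are hypothetical names, and the attribute phrases are illustrative.

```python
import heapq
from typing import Dict, List

def sem_match(annotation: str, query: str) -> float:
    """Hypothetical SemMatch: Jaccard overlap between the word sets of two phrases."""
    a, q = set(annotation.lower().split()), set(query.lower().split())
    union = a | q
    return len(a & q) / len(union) if union else 0.0

def top_k_semantic(
    sem_uv_space: Dict[str, Dict[str, str]],
    parsed_query: Dict[str, str],
    k: int,
) -> Dict[str, List[str]]:
    """Return Z_p, the k latent ids that best match the query phrase, for each queried part p."""
    return {
        part: heapq.nlargest(
            k,
            sem_uv_space,  # iterating a dict yields its latent ids
            key=lambda i: sem_match(sem_uv_space[i].get(part, ""), phrase),
        )
        for part, phrase in parsed_query.items()
    }

# Toy Sem-UV Space with per-part annotations for three latents.
sem_uv_space = {
    "z0": {"head": "short hair", "arm": "long sleeve"},
    "z1": {"head": "long hair", "arm": "sleeveless"},
    "z2": {"head": "long hair", "arm": "long sleeve"},
}
print(top_k_semantic(sem_uv_space, {"head": "long hair", "arm": "long sleeve"}, k=2))
```

Because each part is searched independently, a latent may appear in several \(Z_p\) sets (here `z2` matches both parts); resolving which candidate to use per part is left to the appearance-matching stage.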

In the second stage, we find the best-matching latent \(z^*_p\) for each part based on an appearance similarity score computed by AppMatch: \[\label{eq:m95gen95app95match} \max_{z^*_p \in Z_p, A^{uv}_p \subset \mathbb{A}^{uv}} AppMatch(\{A^{uv}_p[M_{body}] | p \in P\})\tag{2}\] where \(A^{uv}_p = \{A^{uv}_i | z_i \in Z_p\}\), and \(M_{body}\) denotes the body mask in UV space (Fig. 2 (b)). In this work, AppMatch computes the multichannel SSIM [63] score.
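Eq. 2 picks one latent per part so that the chosen UV appearance patches agree with one another. The following is a minimal sketch under assumptions that go beyond the text: each candidate's body-masked UV appearance is flattened into a list of intensities, SSIM is evaluated with a single global window (the standard formulation, including multichannel SSIM, averages over local windows instead), and the joint score is the sum of pairwise SSIM values over the selected parts. `global_ssim` and `select_parts` are hypothetical names.

```python
import itertools
import math
from typing import Callable, Dict, List, Sequence

def global_ssim(a: Sequence[float], b: Sequence[float], data_range: float = 1.0) -> float:
    """Single-window SSIM between two equally sized intensity vectors (simplified)."""
    n = len(a)
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    mu_a, mu_b = sum(a) / n, sum(b) / n
    var_a = sum((x - mu_a) ** 2 for x in a) / n
    var_b = sum((y - mu_b) ** 2 for y in b) / n
    cov = sum((x - mu_a) * (y - mu_b) for x, y in zip(a, b)) / n
    return ((2 * mu_a * mu_b + c1) * (2 * cov + c2)) / (
        (mu_a ** 2 + mu_b ** 2 + c1) * (var_a + var_b + c2)
    )

def select_parts(
    candidates: Dict[str, List[str]],
    uv_patches: Dict[str, Sequence[float]],
    sim: Callable[[Sequence[float], Sequence[float]], float] = global_ssim,
) -> Dict[str, str]:
    """Exhaustively choose one candidate latent per part, maximizing summed pairwise similarity."""
    parts = list(candidates)
    best: Dict[str, str] = {}
    best_score = -math.inf
    for combo in itertools.product(*(candidates[p] for p in parts)):
        score = sum(sim(uv_patches[a], uv_patches[b]) for a, b in itertools.combinations(combo, 2))
        if score > best_score:
            best, best_score = dict(zip(parts, combo)), score
    return best

# Mirrors the Fig. 3 example: z_k is the only "long hair" match; z_i and z_m both match "long sleeve".
patches = {
    "z_k": [0.20, 0.40, 0.60, 0.80],
    "z_i": [0.25, 0.45, 0.65, 0.85],  # appearance similar to z_k
    "z_m": [0.90, 0.10, 0.80, 0.05],  # dissimilar appearance
}
print(select_parts({"head": ["z_k"], "arm": ["z_i", "z_m"]}, patches))  # {'head': 'z_k', 'arm': 'z_i'}
```

Exhaustive search grows as \(k^{|P|}\), which stays small for six parts and a handful of candidates per part; larger candidate sets would call for a greedy or pruned search.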

With \(\{z^*_p \in Z_p\}\), an optimal latent \(z^{*}_{T}\) is constructed conditioned on input text \({\mathbf{I}_T}\): \[\label{eq:m95gen95construct} z^{*}_{T} = \sum_{ p \in P} z^*_{p} * M_p\tag{3}\]

where \(M_p\) is the mask of body part \(p\).
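Eq. 3 is a mask-weighted sum of part latents over the UV grid. Assuming the part masks \(M_p\) are binary and partition the grid, and representing a latent as a list of per-texel feature vectors (a toy stand-in for the real UV latent tensor), the composition reduces to the sketch below; `compose_latent` is a hypothetical name.

```python
from typing import Dict, List, Sequence

def compose_latent(
    part_latents: Dict[str, Sequence[Sequence[float]]],
    part_masks: Dict[str, Sequence[float]],
) -> List[List[float]]:
    """z*_T = sum_p z*_p * M_p, evaluated texel by texel on a flattened UV grid."""
    parts = list(part_latents)
    n_texels = len(part_masks[parts[0]])
    n_channels = len(part_latents[parts[0]][0])
    out = [[0.0] * n_channels for _ in range(n_texels)]
    for p in parts:
        z, m = part_latents[p], part_masks[p]
        for t in range(n_texels):
            for c in range(n_channels):
                out[t][c] += z[t][c] * m[t]  # mask 0 drops, mask 1 keeps this part's feature
    return out

# Two parts on a 4-texel grid with 2 feature channels (toy numbers).
z_k = [[1.0, 1.0]] * 4  # best "head" latent
z_i = [[2.0, 2.0]] * 4  # best "arm" latent
masks = {"head": [1, 1, 0, 0], "arm": [0, 0, 1, 1]}
print(compose_latent({"head": z_k, "arm": z_i}, masks))
# [[1.0, 1.0], [1.0, 1.0], [2.0, 2.0], [2.0, 2.0]]
```

If the masks overlapped, features would be summed rather than selected, which is why the partition assumption matters for this reading of Eq. 3.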

To illustrate this process, consider the example in Fig. 3. For clarity, we only consider two attributes: hairstyle and sleeve length. For the input text {"long hair", "long sleeve"}, we get \(\{ Z_{head}=\{z_k\}, Z_{arm}=\{z_i, z_m\} \}\), where \(z_k\) meets "long hair" and \(\{z_i, z_m\}\) meet "long sleeve", as shown in Fig. 3 (b). The group \(\{z^*_{head}=z_k, z^*_{arm} = z_i\}\) is ranked as optimal since AppMatch(①, ②) \(>\) AppMatch(①, ④) in terms of SSIM score. Note that instead of calculating the similarity in image space, we warp the patches in Fig. 3 to the compact UV space (Fig. 2) for efficiency, which also eliminates the effect of the tightness of different clothing types on the evaluation. We finally get \(z^{*}_{T} = z_k * M_{head} + z_i * M_{arm}\), which is rendered into images by Diff-Render, \(\mathbf{Y}_T = \mathcal{R} \circ \mathcal{D} (z^{*}_{T})\).

3.3.2 Sketch-Driven Generation.

Users can also design a human by simply sketching the clothing shape, including the neckline shape, sleeve length, and length of lower clothing, in the template UV space, \(\mathbf{I}^{uv}_S\), as shown in Fig. 3 (a). A Sketch Parser (Sec. 3.6) translates the raw sketch into a clothing mask \(M^{uv}_S\).

3.3.2.1 Sketch-UV Aligned Sampler.

We further propose a Sketch-UV Aligned Sampler \(\mathcal{S}\) that samples a latent \(z^{*}_{S} = \mathcal{S}(M^{uv}_S; \mathcal{Z})\) from the learned prior \(\mathcal{Z}\), conditioned on the sketch mask \(M^{uv}_S\), in two stages. In the first stage, for each body part \(p\), we search the Shape-UV Space \(\mathbb{S}^{uv}\) for latents that match the input sketch mask \(M^{uv}_S\) using ShapeMatch: \[\label{eq:sketch95match} Z_p = \underset{z_i \in \mathbb{Z}, s^{uv}_i \in \mathbb{S}^{uv}}{\arg\min} ShapeMatch(s^{uv}_i[p], M^{uv}_S[p])\tag{4}\] where \(M^{uv}_S[p]\) denotes the pixels of part \(p\). ShapeMatch evaluates the shape similarity between two binary masks based on their Hu moments [64]; the lower the value, the better the match. In practice, we keep the top-\(k\) matches, so Eq. 4 yields a set of candidate latents for each body part \(p\). We then employ AppMatch (Eq. 2) to find the optimal latents \(\{z^*_p | p \in P\}\), and the target latent \(z^*_S\) is constructed analogously via Eq. 3.
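ShapeMatch is described only as a Hu-moment-based [64] distance between binary masks, where lower is better. One concrete way to realize it, assumed here rather than taken from the paper, is the I1 distance documented for OpenCV's `matchShapes`: each Hu moment \(h_i\) is mapped to \(m_i = \mathrm{sign}(h_i)\log_{10}|h_i|\), and the distance is \(\sum_i |1/m^A_i - 1/m^B_i|\) over moments that are non-negligible in both masks (the \(10^{-5}\) threshold is our choice). The pure-Python sketch below computes the seven Hu moments directly; `hu_moments`, `shape_match`, and `top_k_by_shape` are hypothetical names.

```python
import heapq
import math
from typing import Dict, List, Sequence

Mask = Sequence[Sequence[int]]

def hu_moments(mask: Mask) -> List[float]:
    """Seven Hu invariant moments of a binary mask given as rows of 0/1."""
    pts = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    m00 = float(len(pts))
    if m00 == 0:
        return [0.0] * 7
    cx = sum(x for x, _ in pts) / m00
    cy = sum(y for _, y in pts) / m00

    def eta(p: int, q: int) -> float:
        # Scale-normalized central moment mu_pq / mu_00^(1 + (p + q) / 2).
        mu = sum((x - cx) ** p * (y - cy) ** q for x, y in pts)
        return mu / m00 ** (1 + (p + q) / 2)

    n20, n02, n11 = eta(2, 0), eta(0, 2), eta(1, 1)
    n30, n03, n21, n12 = eta(3, 0), eta(0, 3), eta(2, 1), eta(1, 2)
    a, b = n30 + n12, n21 + n03
    return [
        n20 + n02,
        (n20 - n02) ** 2 + 4 * n11 ** 2,
        (n30 - 3 * n12) ** 2 + (3 * n21 - n03) ** 2,
        a ** 2 + b ** 2,
        (n30 - 3 * n12) * a * (a ** 2 - 3 * b ** 2) + (3 * n21 - n03) * b * (3 * a ** 2 - b ** 2),
        (n20 - n02) * (a ** 2 - b ** 2) + 4 * n11 * a * b,
        (3 * n21 - n03) * a * (a ** 2 - 3 * b ** 2) - (n30 - 3 * n12) * b * (3 * a ** 2 - b ** 2),
    ]

def shape_match(mask_a: Mask, mask_b: Mask, eps: float = 1e-5) -> float:
    """I1-style Hu-moment distance; lower means more similar shapes (0 for identical masks)."""
    total = 0.0
    for ha, hb in zip(hu_moments(mask_a), hu_moments(mask_b)):
        if abs(ha) <= eps or abs(hb) <= eps:
            continue  # skip moments that vanish (e.g., by symmetry) in either mask
        ma = math.copysign(1.0, ha) * math.log10(abs(ha))
        mb = math.copysign(1.0, hb) * math.log10(abs(hb))
        if ma == 0.0 or mb == 0.0:
            continue  # |h| == 1 would make 1/m undefined
        total += abs(1.0 / ma - 1.0 / mb)
    return total

def top_k_by_shape(sketch_mask: Mask, shape_space: Dict[str, Mask], k: int) -> List[str]:
    """Z_p for one part: the k latent ids whose part masks best match the sketch mask."""
    return heapq.nsmallest(k, shape_space, key=lambda i: shape_match(shape_space[i], sketch_mask))

# Toy 8x8 masks: a 4x2 bar, the same bar shifted, and an L shape.
rect = [[1 if 2 <= x < 6 and 3 <= y < 5 else 0 for x in range(8)] for y in range(8)]
shifted = [[1 if 1 <= x < 5 and 1 <= y < 3 else 0 for x in range(8)] for y in range(8)]
ell = [[1 if (1 <= x < 3 and 1 <= y < 7) or (1 <= x < 6 and 5 <= y < 7) else 0
        for x in range(8)] for y in range(8)]
print(top_k_by_shape(rect, {"ell": ell, "rect_shifted": shifted}, k=1))  # ['rect_shifted']
```

Hu moments are invariant to translation, scale, and rotation, so the shifted bar scores a perfect match; in practice rotation invariance may even be undesirable for UV-aligned clothing masks, which is one reason the appearance stage still matters.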

We take Fig. 3 (a) as an example. For clarity, only two attributes are considered: neckline shape and sleeve length. For the input clothing mask \(M^{uv}_S\), which is parsed as "a dress shape with medium length of lower clothing, sleeves cut off, and V-shape neckline", we get the matched latents \(\{Z_{neck}=\{z_i, z_m\}, Z_{arm}=\{z_j\}\}\), as shown in Fig. 3 (b).

The group \(\{z^*_{neck}=z_i, z^*_{arm} = z_j\}\) is ranked as optimal since AppMatch(③, ②) \(>\) AppMatch(③, ④) in terms of SSIM score. We finally get \(z^{*}_S = z_i * M_{neck} + z_j * M_{arm}\), which is rendered into images by Diff-Render, \(\mathbf{Y}_S = \mathcal{R} \circ \mathcal{D} (z^{*}_S)\).

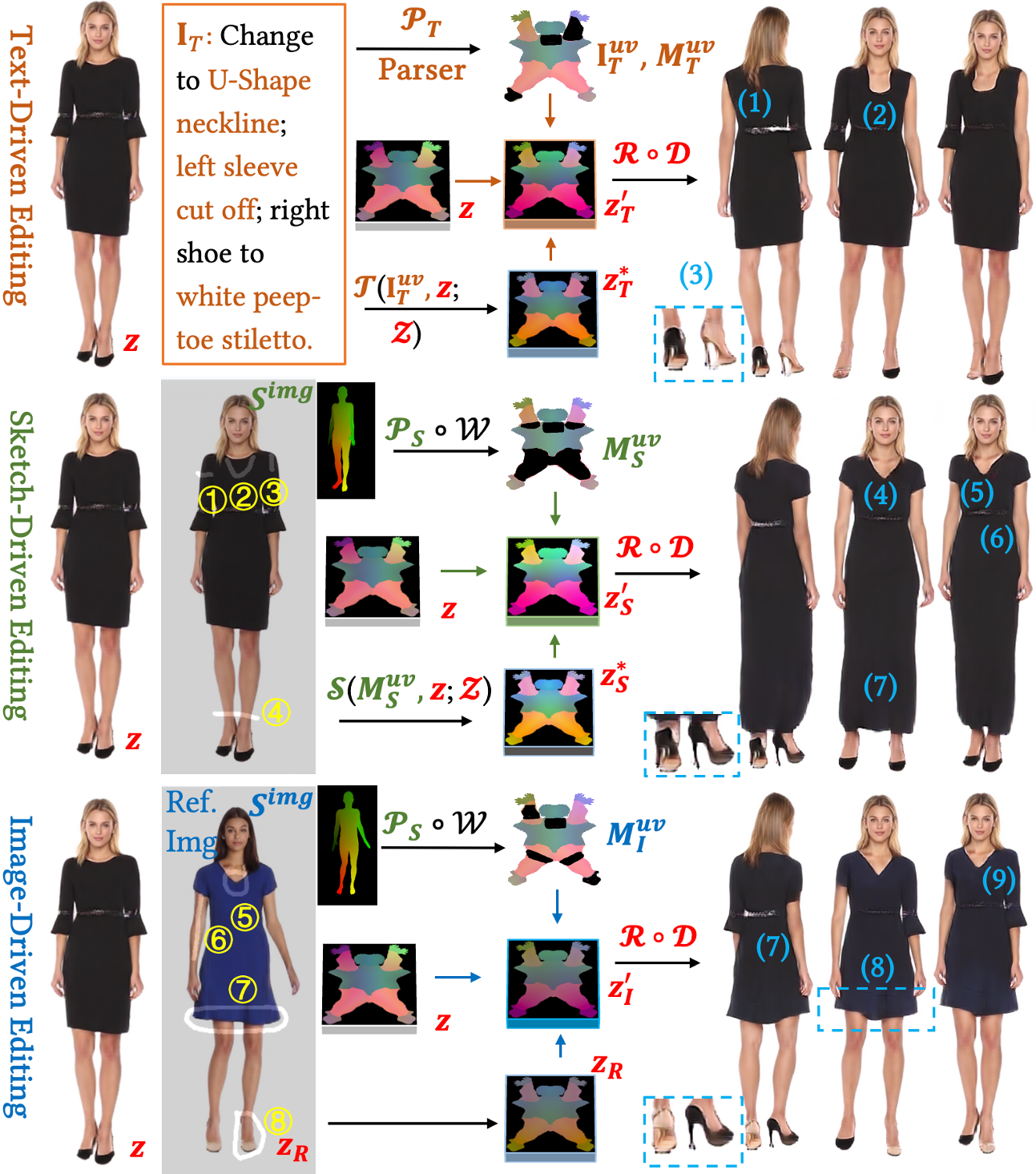

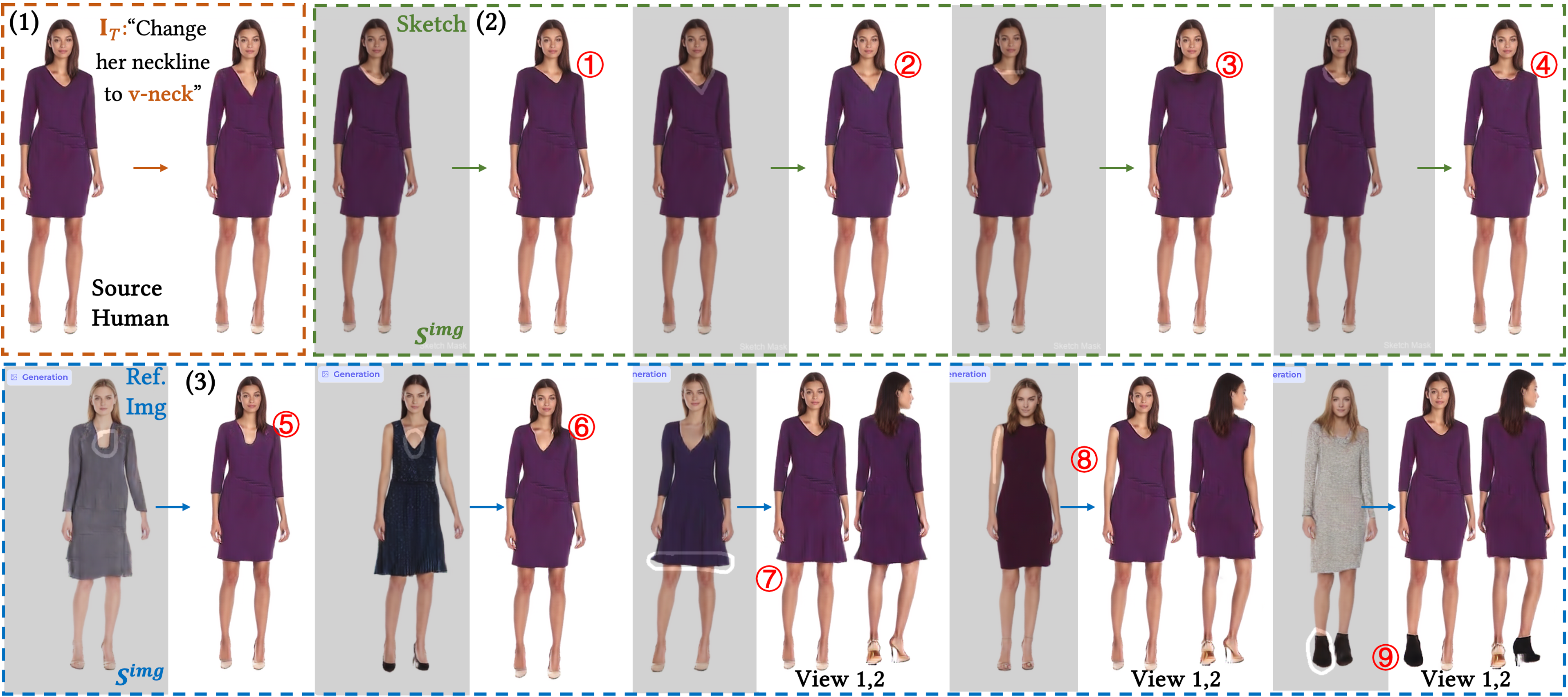

Figure 4: Text-, Sketch-, and Image-Driven Editing (Sec. 3.4). To edit a source human with latent \(z\), FashionEngine allows users to type texts \(\mathbf{I}_T\), draw sketches \(S^{img}\), or provide a reference image with sketch masks for style transfer; the target latents are constructed according to the user inputs. Note that a sketch can describe the length of sleeves in two different ways, or describe the clothing geometry.

3.4 Controllable Multimodal Editing

FashionEngine also allows users to edit the generated humans by providing texts, sketches, or reference images that describe the desired clothing appearance, as shown in Fig. 4.

3.4.1 Text-Driven Editing.

For a generated human with source latent \(z\), given input text \(\mathbf{I}_T\) describing the editing command, the Text Parser \(\mathcal{P}_T\) parses the input into UV space, yielding \(\mathbf{I}^{uv}_T\) and a mask \(M^{uv}_T\) that indicates which body parts to edit. The Text-UV Aligned Sampler \(\mathcal{T}\) searches the Sem-UV Space \(\mathbb{T}^{uv}\) for an optimal latent \(z^*_T\) that matches the editing text under SemMatch (Eq. 1) and has high appearance similarity under AppMatch (Eq. 2): \(z^{*}_T = \mathcal{T}(\mathbf{I}^{uv}_T, z; \mathcal{Z})\). As a preprocessing step, \(z\) is rendered to the canonical space as introduced in Sec. 3.2, yielding a warped image \(A^{uv}\) in UV space for appearance matching in AppMatch. Finally, the updated latent is calculated as \(z'_T = z^*_T * M^{uv}_T + (1 - M^{uv}_T) * z\), which can be rendered by Diff-Render [3].
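The final update \(z'_T = z^*_T * M^{uv}_T + (1 - M^{uv}_T) * z\) is a per-texel masked blend: inside the edit mask the matched latent replaces the source, and outside it the source latent is kept untouched, which is what lets unedited regions (and the identity) survive. The sketch below assumes latents are stored as lists of per-texel feature vectors and the mask holds one weight in \([0,1]\) per texel; `masked_blend` is a hypothetical name. The same operation covers the sketch-driven update \(z'_S\) and, with \(z_R\) in place of the matched latent, the image-driven update \(z'_I\).

```python
from typing import List, Sequence

def masked_blend(
    z_new: Sequence[Sequence[float]],
    z_src: Sequence[Sequence[float]],
    mask: Sequence[float],
) -> List[List[float]]:
    """z' = z_new * M + (1 - M) * z_src, applied texel by texel."""
    return [
        [m * a + (1.0 - m) * b for a, b in zip(new_feat, src_feat)]
        for new_feat, src_feat, m in zip(z_new, z_src, mask)
    ]

z_src = [[0.0, 0.0], [0.0, 0.0], [0.0, 0.0]]   # source human
z_star = [[5.0, 5.0], [5.0, 5.0], [5.0, 5.0]]  # matched latent for the edited part
mask = [1.0, 0.0, 1.0]                         # edit texels 0 and 2 only
print(masked_blend(z_star, z_src, mask))  # [[5.0, 5.0], [0.0, 0.0], [5.0, 5.0]]
```

With a binary mask this is a hard swap; a soft mask (values strictly between 0 and 1 along part boundaries) would interpolate features there instead.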

Fig. 4 shows the qualitative results, which suggest that given text descriptions, FashionEngine is capable of synthesizing view-consistent appearance editing results for sleeves (1), neckline (2), and shoes (3).

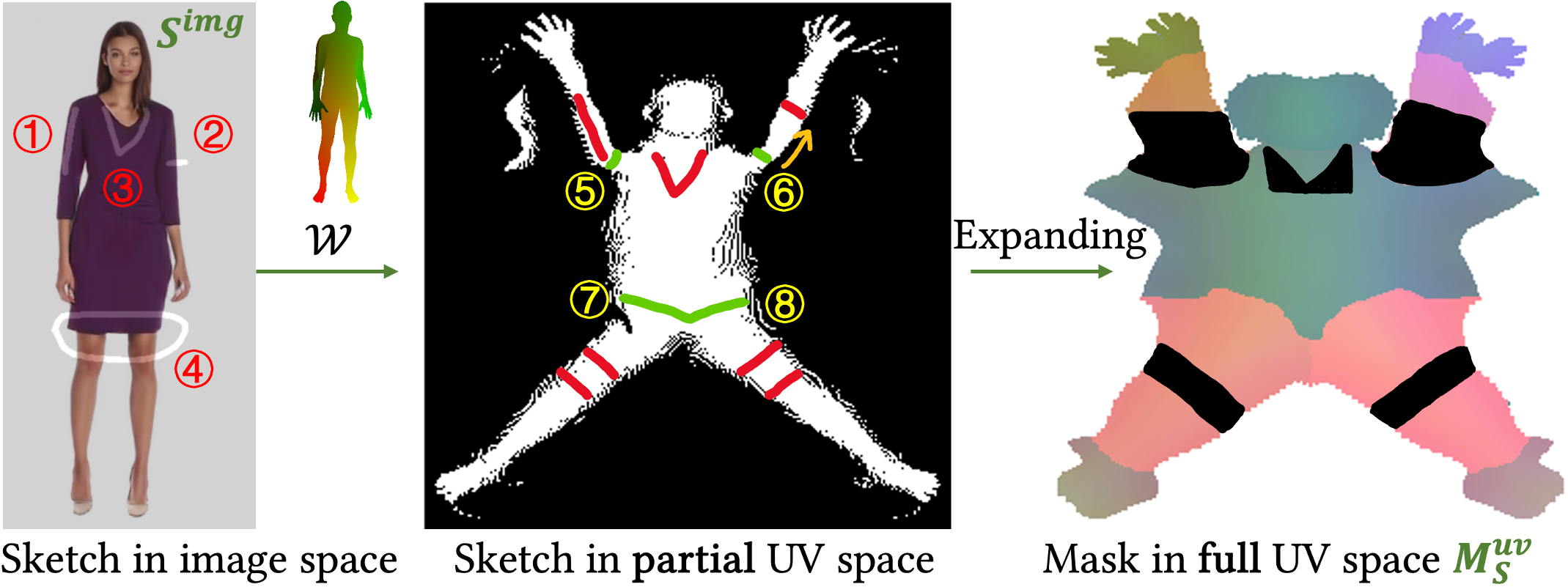

Figure 5: Illustration of the Sketch Parser. The Sketch Parser supports four types of sketch input: sleeve or dress length (drawn in two ways), neckline shape, or a closed area. Sketches are transformed from the image space to the unified partial UV space (warped from a single view, as shown in Fig. 2) and expanded into a mask in the full UV space based on a body topology prior.

3.4.2 Sketch-Driven Editing.

Users can also edit the clothing style by simply drawing sketches for finer-grained control. For a generated human image with source latent \(z\) and input sketches \(S^{img}\) in image space, users can extend the sleeves, change the neckline to a V-shape, and extend the length of lower clothing, as shown in Fig. 4. We first transform the input sketches from image space to UV space by \(\mathcal{W}\), and a Sketch Parser \(\mathcal{P}_S\) (Sec. 3.6) synthesizes clothing masks \(M^{uv}_S\) for editing. Conditioned on the source latent \(z\) and the mask, the Sketch-UV Aligned Sampler \(\mathcal{S}\) searches the Shape-UV Space \(\mathbb{S}^{uv}\) for an optimal latent \(z^*_S\) that matches the sketch mask under ShapeMatch (Eq. 4) and has high appearance similarity under AppMatch (Eq. 2): \(z^{*}_S = \mathcal{S}(M^{uv}_S, z; \mathcal{Z})\). As in text-driven editing, we render a canonical image of \(z\) for AppMatch. Finally, the updated latent is calculated as \(z'_S = z^*_S * M^{uv}_S + (1 - M^{uv}_S) * z\), which can be rendered by Diff-Render. Fig. 4 shows consistent clothing renderings that are well aligned with the input sketches, including a V-shape neckline, short left and right sleeves, and a dress.

3.4.3 Image-Driven Editing.

A picture is worth a thousand words: texts sometimes struggle to describe a specific clothing style, whereas images provide concrete references. Given a reference image of a dressed human, FashionEngine allows users to transfer any part of its clothing style to the source human through joint image- and sketch-driven editing, as shown in Fig. 4. Users sketch to mark which parts of the clothing style should be transferred, e.g., \(S^{img}\) in Fig. 4.

We transform the sketch from image space to UV space by \(\mathcal{W}\) and use the Sketch Parser \(\mathcal{P}_S\) to synthesize clothing masks \(M^{uv}_I\) for editing. Given the reference latent \(z_R\), the source latent \(z\) is updated to \(z'_I = z_R * M^{uv}_I + z * (1 - M^{uv}_I)\), which is rendered into images by Diff-Render. Fig. 4 shows that the selected styles are faithfully transferred to the source human, including the style of the right arm, the hemline structure, the neckline, and the shoes.

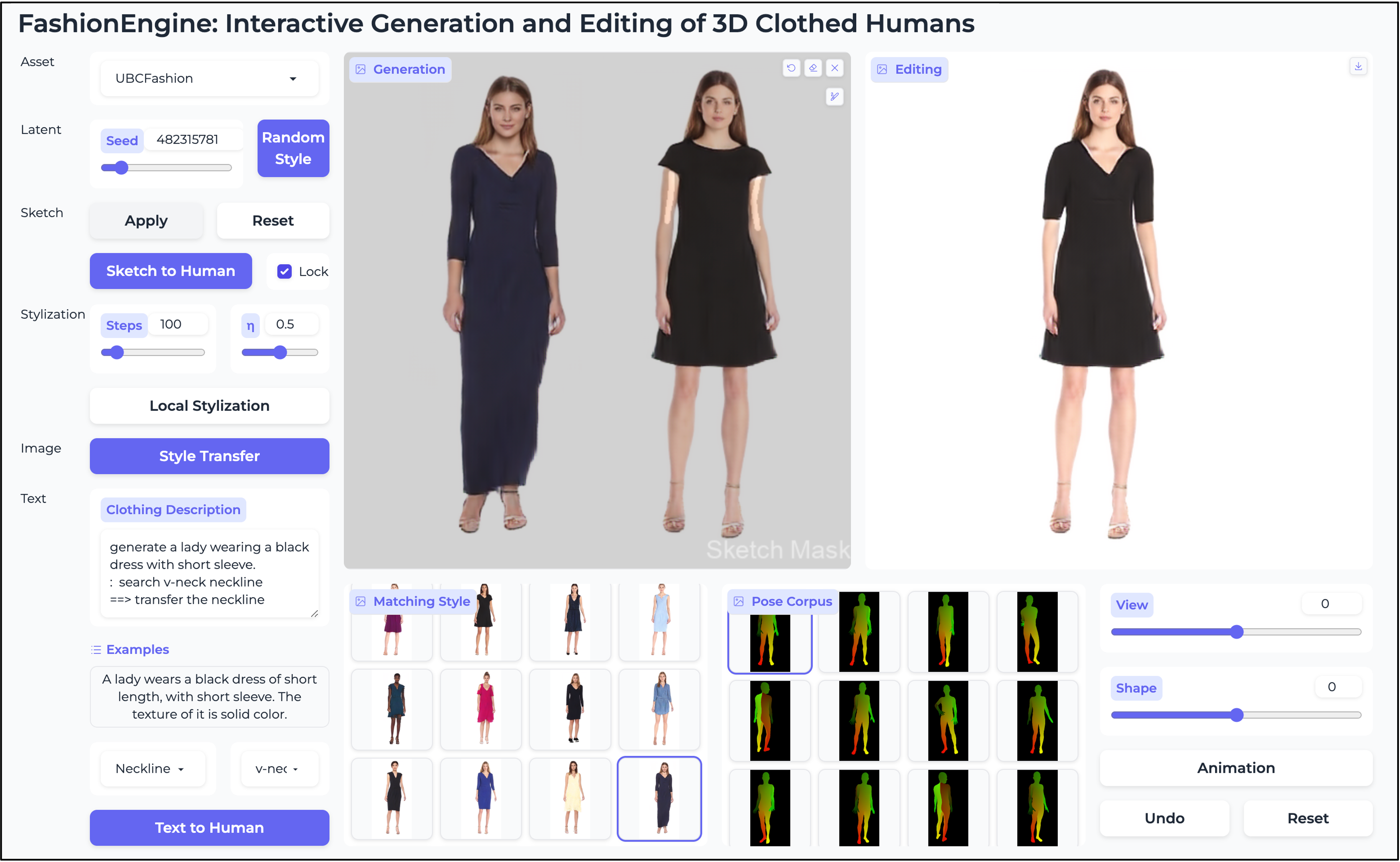

Figure 6: FashionEngine User Interface (Sec. 3.5). Users can generate humans by unconditional (‘Random Style’) or conditional generation (e.g., ‘Text to Human’), and edit the generated humans by sketch-based (‘Sketch to Human’), image-based (‘Style Transfer’), and text-based (‘Text-to-Human’) editing. For conditional sketch or text input, e.g., the text ‘search v-neck neckline’, ‘Matching Style’ is provided to search candidate styles that match the input (e.g., ‘v-neck neckline’), and users can select the desired styles for more flexible and generalizable style editing. Users can also inspect the generated humans under different poses by selecting a specific pose in ‘Pose Corpus’, and under different viewpoints or shapes by adjusting the ‘View’ or ‘Shape’ slider. The generated humans are also animatable. See the live demo for more details.

3.5 Interactive User Interface

We present an interactive user interface for FashionEngine, as shown in Fig. 6. FashionEngine provides unconditional and conditional human generation, as well as three editing modes: sketch-, image-, and text-based editing. Pose, view, and shape adjustments are also supported, and users can generate and export animation videos. Refer to the live demo for more details.

3.6 Method Appendix: Text and Sketch Parser

3.6.0.1 Text Parser

Due to the limited scale of the dataset, instead of learning a language model to encode the text inputs, the Text Parser uses keyword matching as in Text2Human [11], based on a template similar to that of [44].
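Keyword matching of this kind can be sketched as a lookup from known phrases to (body part, attribute) pairs. The vocabulary below is a small hypothetical example, not the template used in Text2Human [11] or [44]; `KEYWORDS` and `parse_text` are hypothetical names. The returned dictionary plays the role of the part-aware semantics \(\mathbf{I}^{uv}_T\), and its keys identify which parts an edit touches.

```python
from typing import Dict, Tuple

# Hypothetical keyword vocabulary: phrase -> (body part, attribute label).
KEYWORDS: Dict[str, Tuple[str, str]] = {
    "long hair": ("head", "long hair"),
    "short hair": ("head", "short hair"),
    "v-neck": ("neck", "v-neck"),
    "crew neck": ("neck", "crew neck"),
    "long sleeve": ("arm", "long sleeve"),
    "short sleeve": ("arm", "short sleeve"),
    "sleeveless": ("arm", "sleeveless"),
    "long pants": ("leg", "long pants"),
    "shorts": ("leg", "shorts"),
    "sneakers": ("foot", "sneakers"),
}

def parse_text(text: str) -> Dict[str, str]:
    """Map free text to {body part: attribute} by substring keyword matching."""
    text = text.lower()
    parsed: Dict[str, str] = {}
    # Try longer keywords first so that, per part, the longest matching keyword is kept.
    for kw in sorted(KEYWORDS, key=len, reverse=True):
        if kw in text:
            part, attr = KEYWORDS[kw]
            parsed.setdefault(part, attr)
    return parsed

print(parse_text("A woman with long hair in a V-neck dress with long sleeves"))
```

Plain substring matching tolerates simple plurals ("long sleeves" still hits "long sleeve") but cannot handle negation or paraphrase, which is the trade-off accepted when a learned language encoder is out of reach.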

3.6.0.2 Sketch Parser

An illustration is shown in Fig. 5: four types of sketch input can be used to design the clothing style, namely the length of sleeves or dresses (drawn in two ways), the shape of the neckline, and a closed area of clothing. Sketches in the image space are transformed to the unified partial UV space shown in Fig. 2. Note that the partial UV space preserves the body topology, e.g., the boundary of each body part, which allows partial sketches to be expanded into clothing masks in the full UV space that are aligned with the latent space for faithful editing.
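For the closed-area sketch type, one standard way to turn a closed stroke into a filled clothing mask is a background flood fill: every pixel reachable from the grid border without crossing the stroke is outside, and everything else is inside. This is our illustrative assumption of how such a mask could be obtained, not necessarily the authors' Sketch Parser; `fill_closed_stroke` is a hypothetical name, and the grid stands in for the partial UV space before expansion to the full UV space.

```python
from collections import deque
from typing import List, Sequence

def fill_closed_stroke(stroke: Sequence[Sequence[int]]) -> List[List[int]]:
    """Return a mask that is 1 on the stroke and on every pixel it encloses."""
    h, w = len(stroke), len(stroke[0])
    outside = [[False] * w for _ in range(h)]
    queue = deque()
    # Seed the search with every non-stroke pixel on the grid border.
    for y in range(h):
        for x in range(w):
            on_border = y in (0, h - 1) or x in (0, w - 1)
            if on_border and not stroke[y][x]:
                outside[y][x] = True
                queue.append((y, x))
    # 4-connected BFS: pixels reachable from the border without crossing the stroke are outside.
    while queue:
        y, x = queue.popleft()
        for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            ny, nx = y + dy, x + dx
            if 0 <= ny < h and 0 <= nx < w and not outside[ny][nx] and not stroke[ny][nx]:
                outside[ny][nx] = True
                queue.append((ny, nx))
    return [[0 if outside[y][x] else 1 for x in range(w)] for y in range(h)]

# A square outline drawn on a 6x6 grid; its interior should be filled.
stroke = [[1 if 1 <= x <= 4 and 1 <= y <= 4 and (x in (1, 4) or y in (1, 4)) else 0
           for x in range(6)] for y in range(6)]
for row in fill_closed_stroke(stroke):
    print(row)
```

Using 4-connectivity for the background means a stroke drawn with diagonal (8-connected) steps still seals its interior; a stroke with an actual gap, however, lets the fill leak inside, so a real parser would likely close small gaps first.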

4 Experiments

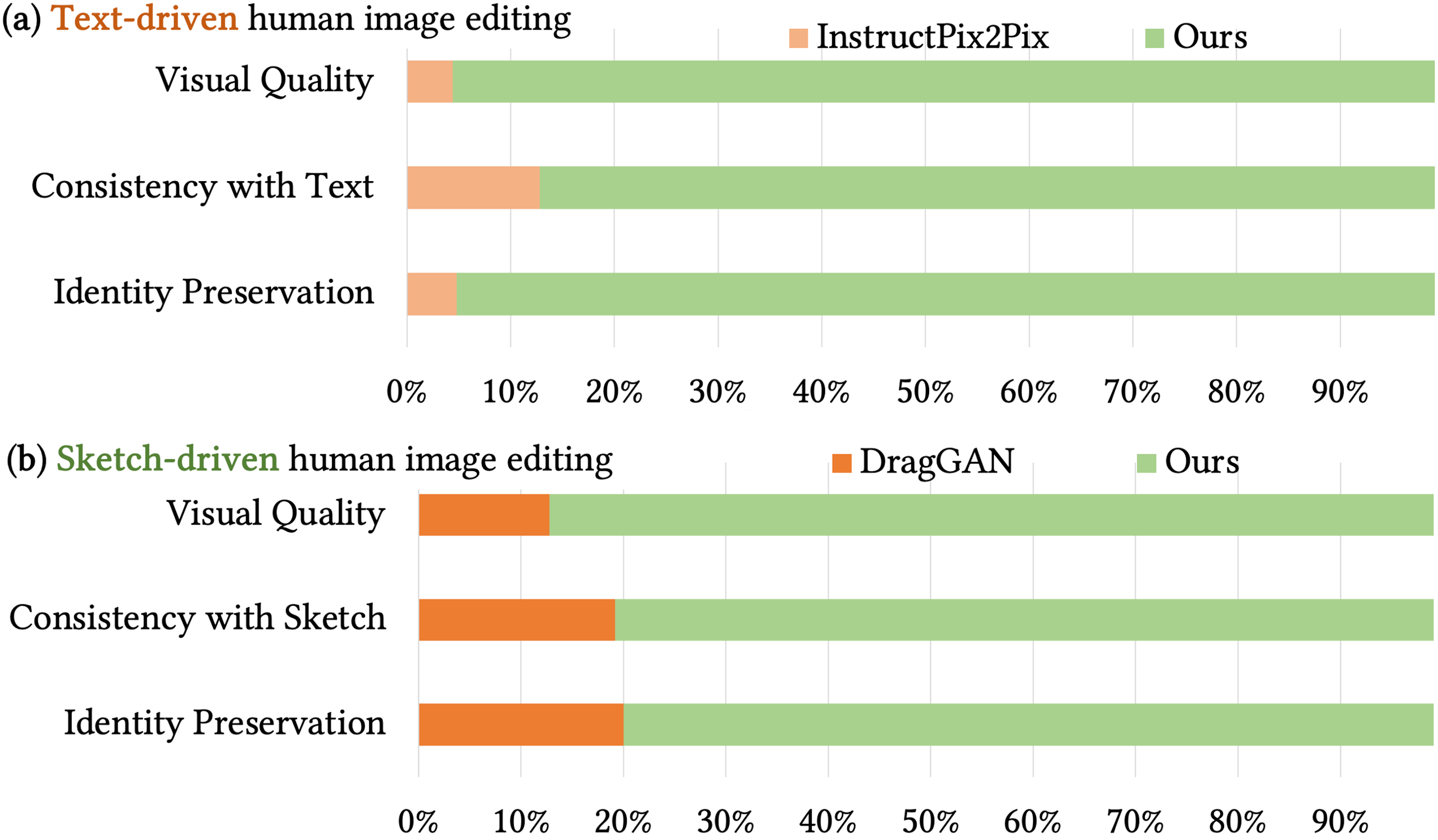

Figure 7: Qualitative comparisons with InstructPix2Pix [43] and DragGAN [22] for text-driven and sketch-driven editing. We generate high-quality images with faithful identity preservation, and the editing results are well-aligned with the text/sketch inputs.

4.1 Experimental Setup

4.1.0.1 Datasets and Metrics

We perform experiments on the monocular video dataset UBCFashion [65], which contains 500 monocular human videos with natural fashion motions. We conduct a perceptual user study and quantitative comparisons to evaluate visual quality, consistency with the input, and identity preservation across different human editing tasks.

4.2 Comparisons to State-of-the-Art Methods

4.2.0.1 Text-Driven Editing.

We compare against InstructPix2Pix for text-driven human image editing, as shown in Fig. 7 (a). Our method generates high-quality images, and the editing results are well aligned with the text inputs. In addition, it faithfully preserves the identity information, whereas InstructPix2Pix does not. These advantages are further confirmed by the user study in Fig. 8 (a), in which 25 participants were asked to select the images with better visual quality, better consistency with the text inputs, and better preservation of identity information. The study shows that more than 90% of our edited images are considered more realistic than those of InstructPix2Pix.

Quantitatively, we compute the CLIP [33] feature similarity between editing results and text prompts, dubbed the CLIP score, over 20 randomly generated pairs to measure the effectiveness of text-driven editing. As shown in Tab. 2, the CLIP score of our results consistently exceeds that of InstructPix2Pix, indicating the stronger instruction-following ability of our method. We also analyze identity preservation by evaluating the face similarity with the source images, defined as the cosine similarity of FaceNet [66] embeddings of the face images. Finally, we evaluate appearance preservation after local editing by measuring PSNR on unaffected areas. Tab. 2 demonstrates both the strong identity and appearance preservation ability of our method.
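Two of the metrics in Tab. 2 reduce to simple formulas once embeddings and images are available. The sketch below assumes the CLIP or FaceNet embeddings have already been extracted as plain vectors and that images are flattened intensities in \([0,1]\) with a binary edit mask; it shows a cosine similarity (used here for identity preservation, and the kind of quantity underlying a CLIP feature similarity) and PSNR restricted to unedited pixels. `cosine_similarity` and `masked_psnr` are hypothetical helper names, and the exact scaling of the reported CLIP score may differ.

```python
import math
from typing import Sequence

def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors (1 = same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm

def masked_psnr(
    src: Sequence[float], edited: Sequence[float], edit_mask: Sequence[int], max_val: float = 1.0
) -> float:
    """PSNR computed only over pixels outside the edit mask (edit_mask == 0)."""
    errs = [(a - b) ** 2 for a, b, m in zip(src, edited, edit_mask) if not m]
    mse = sum(errs) / len(errs)
    return math.inf if mse == 0 else 10.0 * math.log10(max_val ** 2 / mse)

src = [0.5, 0.5, 0.5, 0.5]
edited = [0.5, 0.6, 0.5, 0.9]  # the last pixel lies inside the edited region
edit_mask = [0, 0, 0, 1]
print(masked_psnr(src, edited, edit_mask))         # about 24.77 dB
print(cosine_similarity([1.0, 0.0], [3.0, 4.0]))   # 0.6
```

Excluding the edited pixels is what makes the PSNR a preservation measure: changes inside the mask are intended, so only drift outside it counts against the method.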

4.2.0.2 Sketch-Driven Editing.

We also compare against DragGAN [22] for sketch-driven human image editing, as shown in Fig. 7 (b). Our method supports local editing and faithfully preserves the identity information. In contrast, DragGAN often edits humans in a global latent space, so local edits generally affect the full-body appearance and the identity information is not well preserved. The user study in Fig. 8 (b) further confirms this: 25 participants were asked to select the images with better visual quality, better consistency with the sketch inputs, and better preservation of identity information. More than 85% of participants prefer our results in terms of visual quality, and about 80% prefer our method for consistency with the sketch input and identity preservation.

4.2.0.3 3D vs. 2D Human Diffusion.

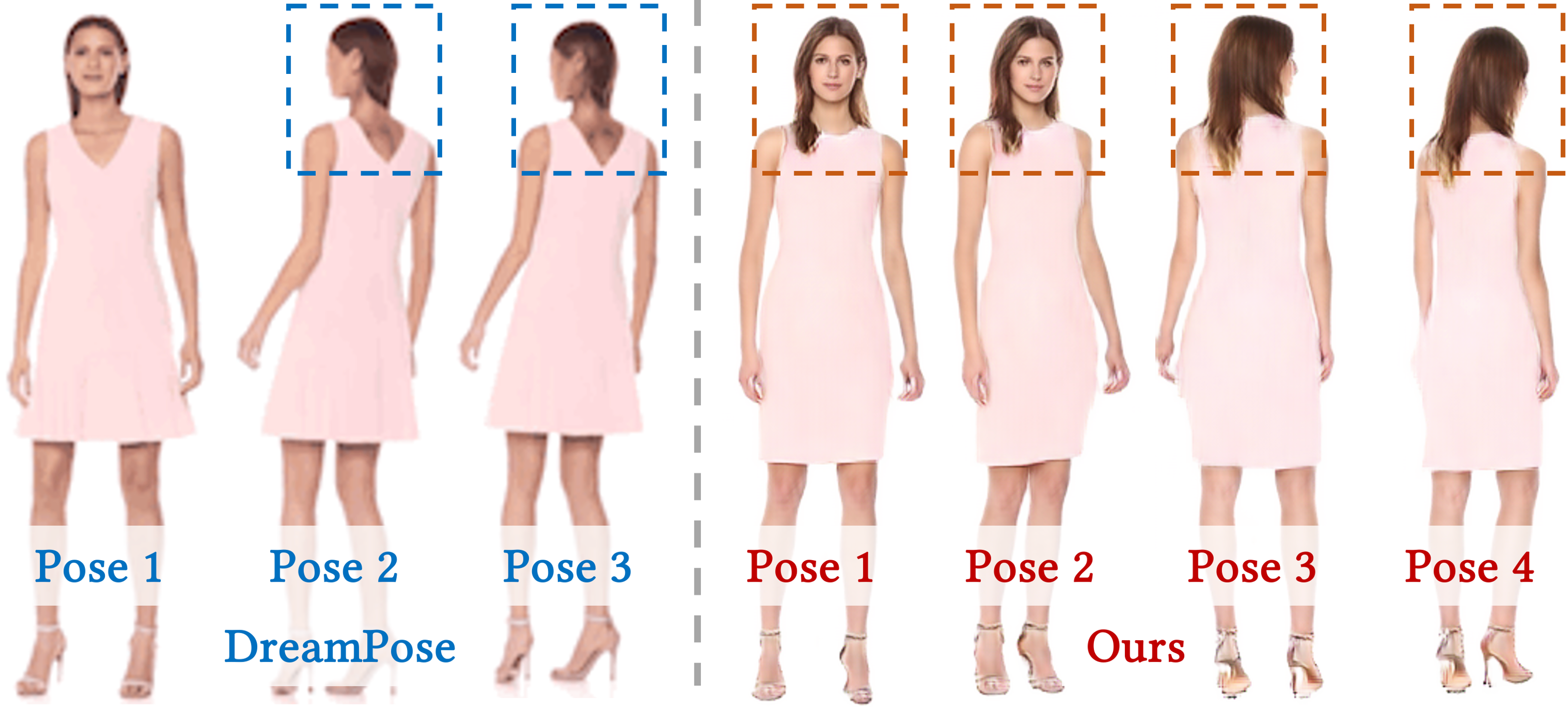

We compare against the 2D diffusion approach DreamPose [67] for generation under novel poses, as shown in Fig. 14. DreamPose fine-tunes a pre-trained Stable Diffusion model on the UBCFashion dataset for human generation. We select generated humans with similar appearances for comparison. Fig. 14 shows the advantage of the 3D diffusion approach over DreamPose in producing consistent human renderings (e.g., hair) under different poses or viewpoints. In addition, our system synthesizes higher-quality images than DreamPose.

Figure 8: User study on conditional human image editing, quantitatively showing the superiority of FashionEngine over the text-driven baseline InstructPix2Pix [43] (a) and the sketch-driven baseline DragGAN [22] (b) in three aspects: 1) visual quality, 2) consistency with input, and 3) identity preservation.

| Metrics \(\uparrow\) | InstructPix2Pix | Ours |
|---|---|---|
| CLIP Score (ViT-B/32) \(\times 100\) | 25.42 | 26.32 |
| CLIP Score (ViT-B/16) \(\times 100\) | 25.63 | 26.34 |
| CLIP Score (ViT-L/16) \(\times 100\) | 18.54 | 20.25 |
| Identity Preservation \(\times 100\) | 25.36 | 89.83 |
| Appearance Preservation (PSNR, dB) | 28.55 | 33.90 |

Figure 9: Ablation study of AppMatch (a) and the size of Receptive Field (b).

4.3 Ablation Study

| | LPIPS \(\downarrow\) | FID \(\downarrow\) | PSNR \(\uparrow\) |
|---|---|---|---|
| \(RF = 2\) | .067 | 14.880 | 23.575 |
| \(RF = 4\) | .060 | 12.077 | 23.803 |

4.3.0.1 Receptive Field (RF) in Global Style Mixer.

We use the architecture of StructLDM [3], in which a global style mixer learns the full-body appearance style. We evaluate how the size of the RF affects reconstruction quality by comparing reconstructions under RF=2 and RF=4 on about 4,000 images in the auto-decoding stage. The qualitative results in Fig. 9 (b) suggest that a larger receptive field (RF=4) captures global clothing styles better than RF=2 and also recovers more details, such as the high heels. In addition, RF=4 successfully reconstructs the hemline and the between-leg offsets of the dress, whereas the smaller RF fails. This conclusion is further confirmed by the quantitative comparisons in Tab. 3, where the larger RF achieves better LPIPS [68], FID [69], and PSNR. Note that the global style mixer also upsamples features during style mixing: RF=2 upsamples the original 2D feature maps from \(256^2\) to \(512^2\) image resolution, while RF=4 upsamples them from \(128^2\) to \(512^2\).

Figure 10: The capacity of multimodal editing. Designers can simply input texts to edit humans (1), or draw sketches to describe the clothing shape for finer-grained editing (2), or transfer any parts of the clothing style from a reference image (3) for view-consistent editing.

Figure 11: Comparisons of boundary-free (BF) UV and standard SMPL UV. BF UV faithfully preserves the shape of neckline after UV warping, and preserves both the geometry and texture in a more readable way than the standard SMPL UV for editing.

4.3.0.2 Appearance Match.

As presented in Sec. [sec:appMatch], an Appearance Match (AppMatch) technique is required to sample the desired latents for both the Text-UV Aligned Sampler and the Sketch-UV Aligned Sampler. For the cases in Fig. 3, we provide more intermediate details in Fig. 9 (a) to analyze the effect of AppMatch. For both the text-driven and sketch-driven generation tasks in Fig. 3, TextMatch or ShapeMatch yields several candidate latents, such as Cand. 1, Cand. 2, and Cand. 3, all of which meet the requirements of "long sleeves" and "V-shape neckline". With AppMatch, the sleeves of Cand. 1 are transferred to source identity 1 (Src1), since AppMatch(Src1, Cand. 1) \(>\) AppMatch(Src1, Cand. 2); this yields higher-quality generation than using Cand. 2 or Cand. 3. Similarly, AppMatch also improves the results of sketch-driven generation, such as the generation of the V-shape neckline.
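The selection logic described above (among candidates that already satisfy the text or sketch requirement, keep the one whose AppMatch score with the source is highest) can be sketched as follows. This is a hypothetical illustration: the actual AppMatch score is defined in the method section and is not reproduced here, cosine similarity between appearance feature vectors is only a stand-in, and `app_match`, `select_candidate`, and the toy vectors are made up.

```python
import math
from typing import Dict, Sequence, Tuple


def app_match(src: Sequence[float], cand: Sequence[float]) -> float:
    """Stand-in appearance score (hypothetical): cosine similarity of features."""
    dot = sum(a * b for a, b in zip(src, cand))
    norm = math.sqrt(sum(a * a for a in src)) * math.sqrt(sum(b * b for b in cand))
    return dot / norm if norm else 0.0


def select_candidate(
    src: Sequence[float], candidates: Dict[str, Sequence[float]]
) -> Tuple[str, float]:
    """Among candidates that passed TextMatch/ShapeMatch, keep the best AppMatch."""
    scores = {name: app_match(src, feat) for name, feat in candidates.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]


# Toy appearance features (hypothetical values, not from the paper).
src1 = [0.9, 0.1, 0.3]
cands = {
    "Cand. 1": [0.8, 0.2, 0.3],
    "Cand. 2": [0.1, 0.9, 0.2],
    "Cand. 3": [0.3, 0.3, 0.9],
}
print(select_candidate(src1, cands))  # picks "Cand. 1"
```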

4.3.0.3 Boundary-Free UV vs. Standard SMPL UV.

We employ a continuous boundary-free (BF) UV topology [39] for editing and compare it against the standard SMPL UV in Fig. 11. BF UV represents both geometry and texture in a more readable way than the standard SMPL UV, which offers a user-friendly interface for sketch-driven generation, as shown in Fig. [fig:teaser] and Fig. 3. In addition, BF UV faithfully preserves the clothing shape after UV warping, i.e., the neckline shape in BF UV is closer to that in the source image than the neckline in the standard SMPL UV. The BF UV mapping has also been shown in [39] to be better suited to CNN-based architectures than the standard discontinuous UV mapping.

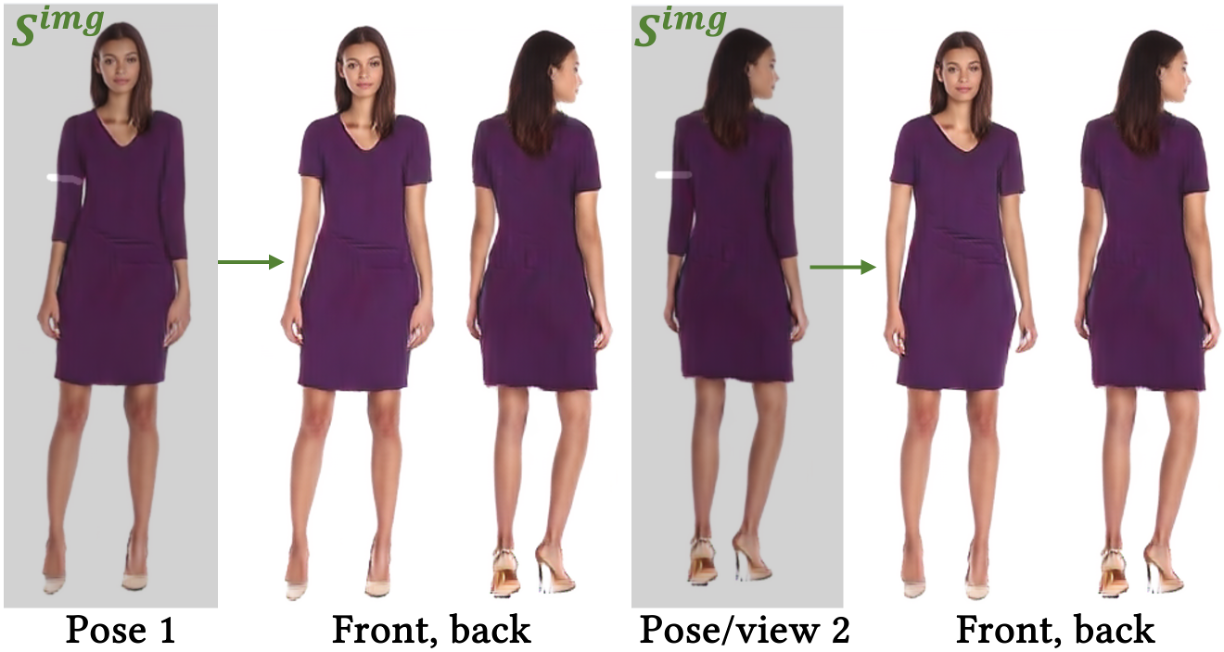

Figure 12: Ablation study of UV latent editing. Full UV latent editing (with the expansion step in Fig. 5) enables view-consistent human renderings.

4.3.0.4 Partial vs. Full UV Latent Editing.

We also compare editing humans in the partial versus the full UV space, as illustrated in Fig. 12. The main difference is whether the expansion step shown in Fig. 5 is included. Partial editing is similar to image-based editing and produces humans that are inconsistent across views, whereas our full UV latent editing enables view-consistent human renderings.

Figure 13: Pose-, view-agnostic editing. FashionEngine allows designers to edit humans in different poses or viewpoints by transforming the input signals from the image space to the unified UV space.

4.4 Controllable 3D Human Editing

4.4.0.1 Multimodal Editing.

Fig. 10 illustrates each modality (texts, sketches, and image-based style transfer) in human editing. Text is the easiest way to edit humans, e.g., "changing the neckline to v-neck", but it sometimes fails to convey the designer’s intent efficiently and accurately, such as the size and orientation of the v-neck. To address this issue, sketch-driven editing is provided for finer-grained control, e.g., precisely controlling the size of the v-neck or specifying a boat or round neckline by simply drawing sketches.

A picture is worth a thousand words. We further extend the editing capacity with image-based style transfer, as introduced in Sec. 3.4.3. Designers can freely transfer any part of the clothing style from a reference image to the source human, e.g., the neckline, wavy hem, sleeve style, and shoes. To obtain reference styles/images, designers can use the ‘Matching Style’ functionality, which searches for candidate styles based on rough text or sketch descriptions. Refer to Fig. 6 and the live demo for more details.
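‘Matching Style’ is described only as searching candidate styles from rough text or sketch descriptions. One common way to realize such a search, assumed here rather than taken from the paper, is nearest-neighbour retrieval in a shared embedding space: encode the query, score it against a bank of style embeddings, and return the top-k entries. The encoder is omitted, and `match_style`, the style bank, and its entries are hypothetical.

```python
import heapq
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def match_style(
    query_emb: Sequence[float], bank: Dict[str, Sequence[float]], k: int = 3
) -> List[Tuple[str, float]]:
    """Return up to k style entries whose embeddings are most similar to the query."""
    scored = ((cosine(query_emb, emb), name) for name, emb in bank.items())
    return [(name, score) for score, name in heapq.nlargest(k, scored)]


# Hypothetical style bank; in practice the embeddings would come from a
# text or sketch encoder that maps queries and styles into one space.
bank = {
    "v-neck dress": [0.9, 0.1, 0.0],
    "boat-neck top": [0.2, 0.9, 0.1],
    "wavy-hem skirt": [0.1, 0.2, 0.9],
    "round-neck dress": [0.7, 0.4, 0.1],
}
print(match_style([1.0, 0.2, 0.0], bank, k=2))
```

The retrieved entries would then serve as reference images for the style transfer step; ranking by a single similarity keeps the interaction fast, at the cost of relying entirely on the quality of the shared embedding.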

4.4.0.2 Pose-View-Shape Control.

FashionEngine also supports pose, viewpoint, and shape control for human rendering, which is achieved by changing the camera parameters or the human template parameters (e.g., SMPL). Refer to the live demo for this functionality.
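Pose, view, and shape control amount to changing renderer inputs rather than re-running generation. The sketch below is a hypothetical container for those inputs: the parameter sizes follow the standard SMPL model (10 shape coefficients and 72 axis-angle pose values for 24 joints, including the global orientation), while `RenderControls`, the orbiting camera, its radius, and its height are illustrative assumptions, not FashionEngine's actual interface.

```python
import math
from dataclasses import dataclass, field
from typing import List, Tuple

NUM_BETAS = 10      # SMPL shape coefficients
NUM_POSE = 24 * 3   # SMPL axis-angle pose, including global orientation


@dataclass
class RenderControls:
    """Hypothetical bundle of the parameters a viewer could expose."""
    betas: List[float] = field(default_factory=lambda: [0.0] * NUM_BETAS)
    pose: List[float] = field(default_factory=lambda: [0.0] * NUM_POSE)
    azimuth_deg: float = 0.0  # view control: camera angle around the body
    radius: float = 3.0       # camera distance (assumed value)
    height: float = 1.0       # camera height (assumed value)

    def camera_position(self) -> Tuple[float, float, float]:
        """Camera position on a circle around the vertical (y) axis.

        The camera is assumed to look at the origin, where the body stands.
        """
        a = math.radians(self.azimuth_deg)
        return (self.radius * math.sin(a), self.height, self.radius * math.cos(a))

    def set_shape(self, betas: List[float]) -> None:
        if len(betas) != NUM_BETAS:
            raise ValueError(f"expected {NUM_BETAS} shape coefficients")
        self.betas = list(betas)


ctrl = RenderControls()
ctrl.azimuth_deg = 90.0            # rotate the view to the subject's side
ctrl.set_shape([1.5] + [0.0] * 9)  # change only the first shape coefficient
print(ctrl.camera_position())
```

Keeping pose, shape, and camera in one structure means the UV latent stays fixed while only these inputs change, which is what allows re-rendering the same edited human under new controls.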

4.4.0.3 Pose-, View-Agnostic Editing.

FashionEngine allows designers to edit humans in different poses and viewpoints by transforming the input signals (e.g., sketches) from the image space to the unified UV space (illustrated in Figs. 4 and 5), which enables pose- and view-independent editing, as shown in Fig. 13.
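Moving a sketch from image space to UV space can be thought of as a scatter through a per-pixel correspondence. The sketch below assumes, hypothetically, that rendering the posed body template already yields a UV coordinate in \([0, 1]^2\) for every foreground pixel (and `None` for background); each stroke pixel is then written to its UV texel. Because the correspondence is tied to the template surface rather than the camera, the same stroke on the same body part lands in the same texel regardless of pose or view. `image_to_uv` is an illustrative stand-in, not the paper's exact procedure in Figs. 4 and 5.

```python
from typing import List, Optional, Tuple

UV = Optional[Tuple[float, float]]


def image_to_uv(
    sketch: List[List[int]], uv_of_pixel: List[List[UV]], uv_size: int
) -> List[List[int]]:
    """Hypothetical helper: scatter non-zero sketch pixels into a UV canvas."""
    canvas = [[0] * uv_size for _ in range(uv_size)]
    for y, row in enumerate(sketch):
        for x, stroke in enumerate(row):
            uv = uv_of_pixel[y][x]
            if not stroke or uv is None:
                continue  # empty pixel, or background with no body surface
            u, v = uv
            # Map [0, 1] UV coordinates to integer texel indices (clamped).
            # Assumed convention: u indexes columns, v indexes rows top-down.
            col = min(int(u * uv_size), uv_size - 1)
            row_idx = min(int(v * uv_size), uv_size - 1)
            canvas[row_idx][col] = stroke
    return canvas


# Toy 2x2 image: one background pixel, one empty pixel, two stroke pixels.
sketch = [[1, 1], [0, 1]]
uv_of_pixel = [[(0.1, 0.1), None], [(0.6, 0.9), (0.9, 0.4)]]
for r in image_to_uv(sketch, uv_of_pixel, uv_size=4):
    print(r)
```

A real system would also fill gaps between scattered texels (for example, by rasterizing strokes directly in UV space), since nearby image pixels need not map to adjacent texels.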

5 Discussion

We propose FashionEngine, an interactive 3D human generation and editing system that enables easy and efficient production of 3D digital humans with multimodal controls. FashionEngine models multimodal features in a unified UV space, which enables various joint multimodal editing tasks. We demonstrate the advantages of our UV-based editing system on different 3D human editing tasks.

5.0.0.1 Limitations.

Due to dataset bias, our synthesized humans are biased toward females wearing dresses, as in [3], [44], [67]. Including more diverse training data could alleviate this bias. Our system is capable of editing challenging skirts, which suggests potential for other clothing styles.

Figure 14: Comparisons with DreamPose [67] for generations on the UBCFashion dataset. Ours synthesizes high-quality and consistent humans (e.g., hair) under different poses or viewpoints.

Acknowledgement

This study is supported by the Ministry of Education, Singapore, under its MOE AcRF Tier 2 (MOET2EP20221-0012), NTU NAP, and under the RIE2020 Industry Alignment Fund – Industry Collaboration Projects (IAF-ICP) Funding Initiative, as well as cash and in-kind contribution from the industry partner(s).