Test-Time Model Adaptation with Only Forward Passes

April 02, 2024

Abstract

Test-time adaptation has proven effective in adapting a given trained model to unseen test samples with potential distribution shifts. However, in real-world scenarios, models are usually deployed on resource-limited devices, e.g., FPGAs, and are often quantized and hard-coded with non-modifiable parameters for acceleration. In light of this, existing methods are often infeasible since they heavily depend on computation-intensive backpropagation for model updating, which may not be supported. To address this, we propose a test-time Forward-Only Adaptation (FOA) method. In FOA, we seek to solely learn a newly added prompt (as the model’s input) via a derivative-free covariance matrix adaptation evolution strategy. To make this strategy work stably under our online unsupervised setting, we devise a novel fitness function by measuring test-training statistic discrepancy and model prediction entropy. Moreover, we design an activation shifting scheme that directly tunes the model activations for shifted test samples, making them align with the source training domain, thereby further enhancing adaptation performance. Without using any backpropagation or altering model weights, FOA running on a quantized 8-bit ViT outperforms gradient-based TENT on a full-precision 32-bit ViT, while achieving an up to 24-fold memory reduction on ImageNet-C. The source code will be released.

1 Introduction

Deep neural networks often struggle to generalize when testing data encounter unseen corruptions or are drawn from novel environments [1], [2], a phenomenon known as distribution shift. To address this, various methods have been extensively investigated in the existing literature, such as domain generalization [3], [4], data augmentation [5], [6] and unsupervised domain adaptation [7]–[9].

| | Prior TTA | Forward-Only Adaptation (ours) |
|---|---|---|
| Update model weights | Yes | No |
| Backward propagation | Yes | No |
| Model compatibility | Full precision models (32-bit) | Full precision models (32-bit); quantized models (8-bit, 6-bit, ...) |
| Device compatibility | High-performance GPU | High-performance GPU; low-power edge devices (smartphones, iPads, FPGAs, embodied robots, ...) |
| Accuracy | 59.6% (TENT, full precision, 32-bit) | 66.3% (full precision, 32-bit); 63.5% (quantized, 8-bit) |
| Memory usage | 5,165 MB (TENT, full precision, 32-bit) | 832 MB (full precision, 32-bit); 208 MB (quantized, 8-bit) |

Recently, test-time adaptation (TTA) [11]–[15] has emerged as a rapidly progressing research area, with the aim of addressing domain shifts during test time. By utilizing each data point once for immediate adaptation post-inference, TTA stands out for its minimal overhead compared to the prior areas above, making it well-suited for real-world applications. Depending on whether they involve backward propagation, existing TTA methods can generally be divided into the following two categories.

Gradient-free methods learn from test data by adapting the statistics in batch normalization layers [16]–[18], correcting the output probabilities [19], or adjusting the classifier [13], etc. These methods, which avoid backpropagation and do not alter the original model weights, inherently reduce the risk of forgetting on the source domain. However, their limited learning capacity, primarily stemming from the constraint of not updating the core model parameters, may lead to suboptimal performance on out-of-distribution test data (as shown in the full-precision ImageNet-C results table in Section 4).

Gradient-based methods [11], [20], [21] unleash the power of TTA by updating model parameters online through self-/un-supervised learning during testing. These methods encompass a variety of techniques including, but not limited to, rotation prediction [22], contrastive learning [14], [23], entropy minimization [20], etc. Although gradient-based TTA is effective in handling domain shifts, it still faces critical challenges when deployed in real-world scenarios, as shown in Table 1.

Firstly, deep models are usually deployed on various edge devices, e.g., smartphones and embedded systems. Unlike high-performance GPUs, these devices typically possess limited computational power and memory capacity, insufficient for the intensive computations required by TTA, which often needs one or multiple rounds of backpropagation for each test sample [20], [24].

Secondly, for resource or efficiency considerations, deep models often undergo quantization before deployment – a process of reducing precision, e.g., from 32-bit to 8-bit. However, the non-differentiability of the discrete quantizer would result in vanishing gradients when propagated through multiple layers [25]. This makes deployed models incapable of supporting backpropagation operations, which are essential for prior TTA methods.

Lastly, on some specialized computational chips that are tailored for specific models [26], [27], the model parameters are often hard-coded and non-modifiable. This rigidity of model parameters poses another barrier to the implementation of TTA.
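The second challenge above can be illustrated with a small numerical sketch. It assumes a plain uniform quantizer (the `quantize` helper and its `scale` value are hypothetical and are not the PTQ4ViT scheme used later in the paper) and shows that such a piecewise-constant function has a zero derivative almost everywhere, so a gradient passed through it carries no learning signal.

```python
def quantize(x: float, scale: float = 0.1, bits: int = 8) -> float:
    """Hypothetical uniform quantizer: snap x to the nearest multiple of
    `scale`, clipped to the signed `bits`-bit integer range."""
    qmin, qmax = -(2 ** (bits - 1)), 2 ** (bits - 1) - 1
    q = max(qmin, min(qmax, round(x / scale)))
    return q * scale


def finite_difference(f, x: float, eps: float = 1e-4) -> float:
    """Central finite-difference estimate of df/dx at x."""
    return (f(x + eps) - f(x - eps)) / (2 * eps)


# Away from a rounding boundary, small input changes do not change the
# quantized output, so the estimated derivative is exactly zero.
grad_inside_bin = finite_difference(quantize, 0.52)
```

In this sketch `grad_inside_bin` is zero because 0.52 lies strictly inside a quantization bin. Stacking many such layers is one way to picture why backpropagation through a quantized model is problematic.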

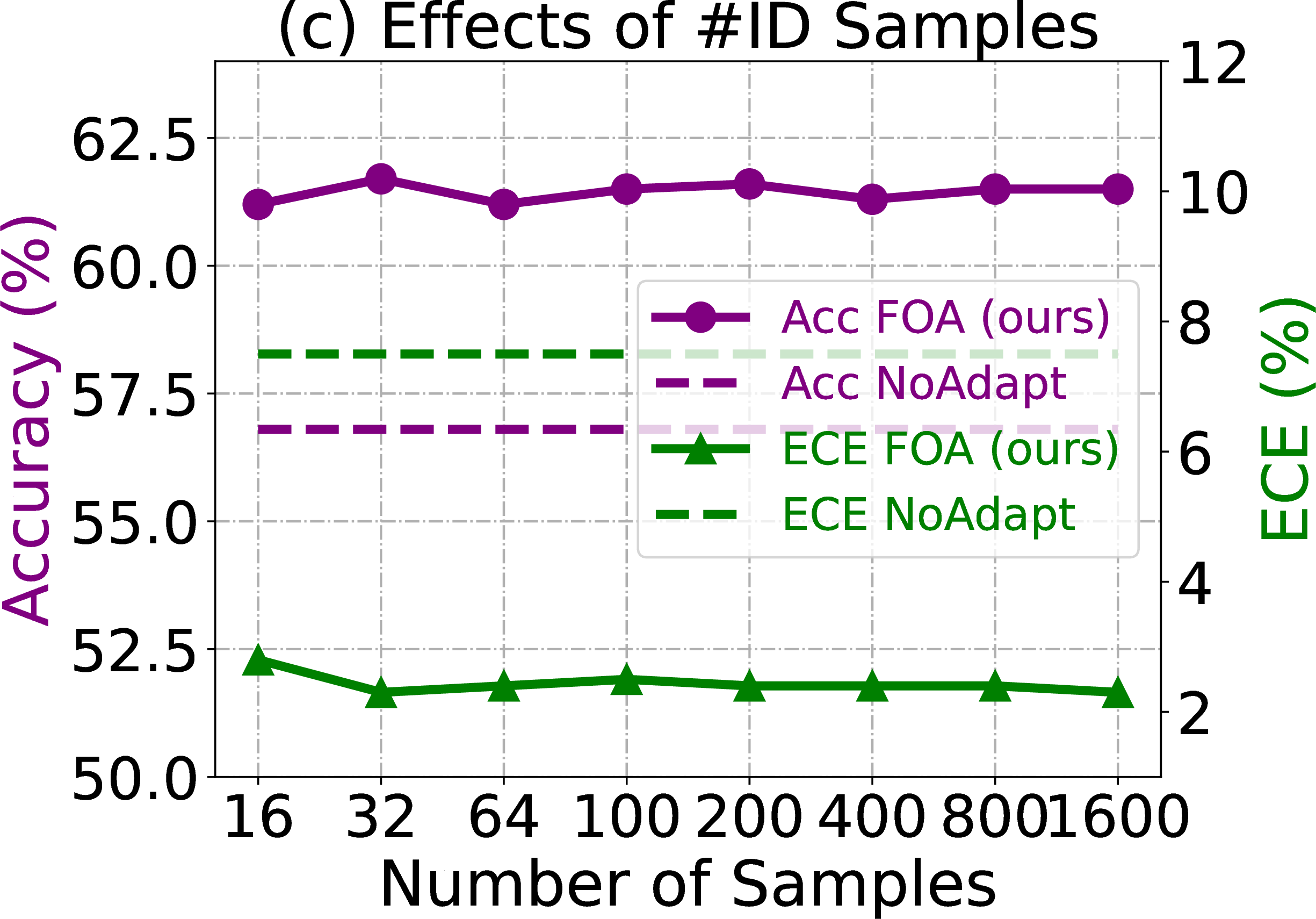

To address the above issues, we propose a test-time Forward-Only Adaptation (FOA) method. To be specific, we seek to explore a backpropagation-free optimizer, called covariance matrix adaptation (CMA) evolution strategy [28], for online test-time model adaptation. However, naively applying CMA in the TTA setting is infeasible, as it is hard for CMA to handle ultra-high dimensional optimization problems (e.g., deep model training) and it relies on supervised learning signals. Therefore, we propose to solely update a newly inserted prompt (as the model’s input, shown in Figure 1) at test time to reduce the dimension of solution space and meanwhile avoid altering model weights. Then, to make CMA work stably without supervised signals, we devise a novel unsupervised fitness function to evaluate candidate solutions, which comprises both model prediction entropy and the activation statistics discrepancy between out-of-distribution (OOD) testing samples and source in-distribution (ID) samples. Here, only a small number of ID samples is needed for source statistics estimation, i.e., 32 samples are sufficient for ImageNet (see Figure 3 (c)). Moreover, to further boost the adaptation performance, we devise a forward-only back-to-source activation shifting mechanism to directly adjust the activations of OOD testing samples, along with a dynamically updated shifting direction from the OOD testing domain to the ID source domain.

Main Novelty and Contributions. 1) We introduce a novel and practical paradigm to TTA, termed forward-only adaptation. This paradigm operates without depending on backpropagation and avoids modification to the model weights, significantly broadening the real-world applicability of TTA across various contexts, including smartphones, FPGAs, and quantized models. 2) We achieve the goal of forward-only adaptation by prompt adaptation and activation shifting, where we design a new fitness function that guarantees stable prompt learning using a covariance matrix adaptation-based optimizer under the online unsupervised setting, and efficiently align the sample’s activations in the testing domain with the source training domain. 3) Extensive experiments on four benchmarks and full precision/quantized models verify our effectiveness. Our method on 8-bit quantized ViT outperforms gradient-based TENT on full-precision 32-bit ViT, with up to 24-fold run-time memory reduction.

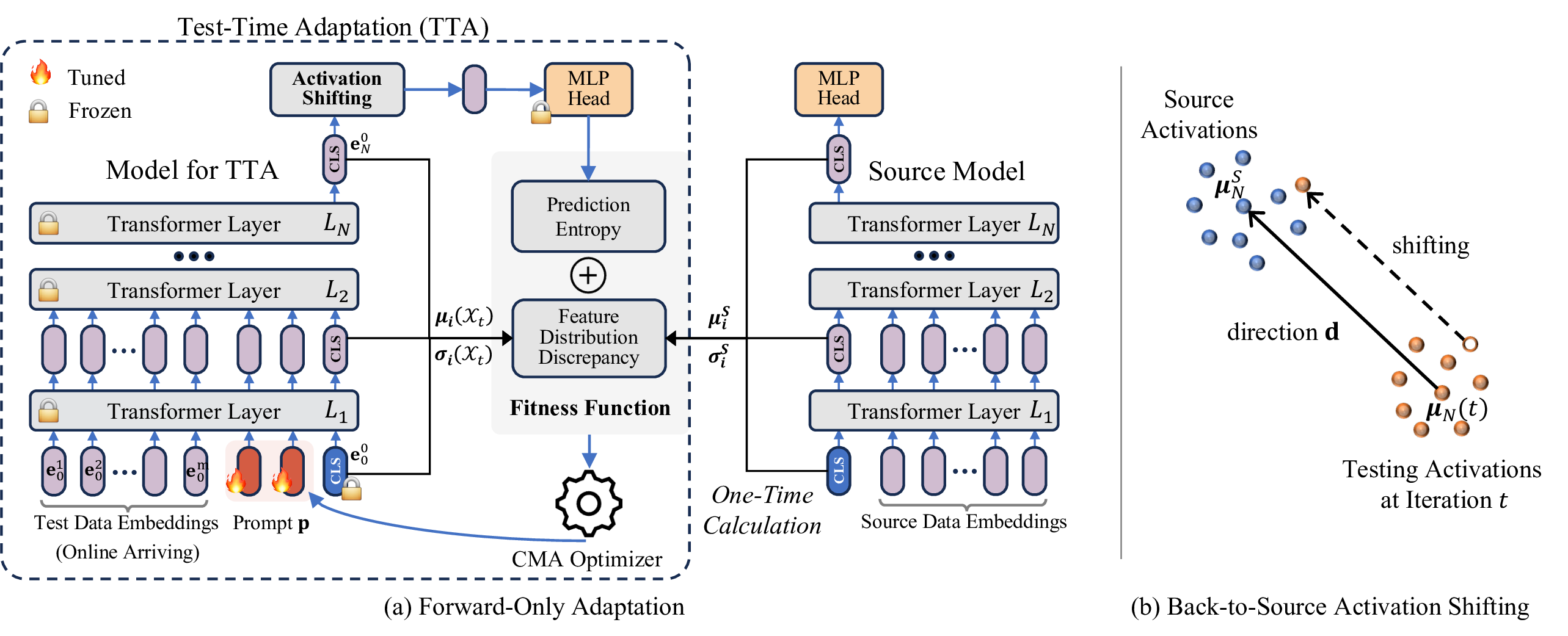

Figure 1: (a) An illustration of our proposed FOA. For each batch of online incoming test samples, we feed them alongside prompts \({\boldsymbol{p}}\) into the TTA model, and calculate a fitness value that serves as a learning signal, aiding the covariance matrix adaptation (CMA) optimizer in learning the prompts \({\boldsymbol{p}}\). This fitness function is derived from both the prediction entropy and the distribution discrepancy between the testing CLS activations and source CLS activations (calculated once). (b) We further boost the adaptation performance by directly adjusting the activations (before the final MLP head), guiding them from the testing distribution towards the source distribution.

2 Preliminary and Problem Statement

We briefly revisit ViT and TTA in this section for the convenience of our method presentation and put detailed related work discussions into Appendix 6 due to page limits.

Vision Transformer (ViT) [29]. In this paper, we mainly focus on transformer-based vision models that are widely used in practice and are also hardware-friendly. We first revisit ViT here for the presentation convenience of our method. Formally, for a plain ViT \(f_{\Theta}(\cdot)\) with \(N\) layers, let \({\boldsymbol{E}}_i=\{{\boldsymbol{e}}_i^j, j\in\mathbb{N}, 0\leq j \leq m\}\) be the patch embeddings as the input of the \((i+1)\)-th layer \(L_{i+1}\), where \(m\) is the number of image patches and \({\boldsymbol{e}}_i^0\) denotes an extra learnable classification token ([CLS]) of the \(i\)-th layer \(L_i\). The whole ViT is then formulated as: \[\begin{align} {\boldsymbol{E}}_i &= L_i({\boldsymbol{E}}_{i-1}), ~~~ i=1,...,N \tag{1}\\ \hat{{\boldsymbol{y}}} &= \texttt{Head}({\boldsymbol{e}}_N^0). \tag{2} \end{align}\]

Test-Time Adaptation (TTA) [11], [20]. Let \(f_{\Theta}(\cdot)\) be the model trained on a labeled training dataset \({\mathcal{D}}_{train} = \{({\boldsymbol{x}}_i,y_i)\}_{i=1}^{N}\) with \({\boldsymbol{x}}_i \sim P\left( {\boldsymbol{x}}\right)\). During testing, \(f_{\Theta}(\cdot)\) is expected to perform well on in-distribution (ID) test samples drawn from \(P\left( {\boldsymbol{x}}\right)\). However, given a set of out-of-distribution (OOD) testing samples \({\mathcal{D}}_{test} = \{{\boldsymbol{x}}_j\}_{j=1}^{M} \sim Q \left( {\boldsymbol{x}}\right)\) with \(Q\left( {\boldsymbol{x}}\right) \neq P\left( {\boldsymbol{x}}\right)\), the prediction performance of \(f_{\Theta}(\cdot)\) would decrease significantly. To address this, TTA methods often seek to update the model parameters by minimizing some unsupervised/self-supervised learning objective when encountering a testing sample: \[\min_{\tilde{\Theta}} {\mathcal{L}}({\boldsymbol{x}};\Theta),~ {\boldsymbol{x}}\sim Q \left( {\boldsymbol{x}}\right),\tag{3}\] where \(\tilde{\Theta} \subseteq \Theta\) denotes the model parameters involved in updating. In general, the TTA objective \({\mathcal{L}}(\cdot)\) can be formulated as rotation prediction [11], contrastive learning [14], entropy minimization [12], [20], etc.

Problem Statement. In practical applications, deep models are frequently deployed on devices with limited resources, such as smartphones and embodied agents, and sometimes are even deployed after quantization or hard coding with non-modifiable parameters. These devices typically lack the capability for backward propagation, especially with large-size deep models. However, for existing TTA methods, such as SAR [12] and MEMO [24], performing TTA necessitates one or more rounds of backward computation for each test sample. This process is highly memory- and computation-intensive, hindering the broad application of TTA methods in real-world scenarios.

3 Approach

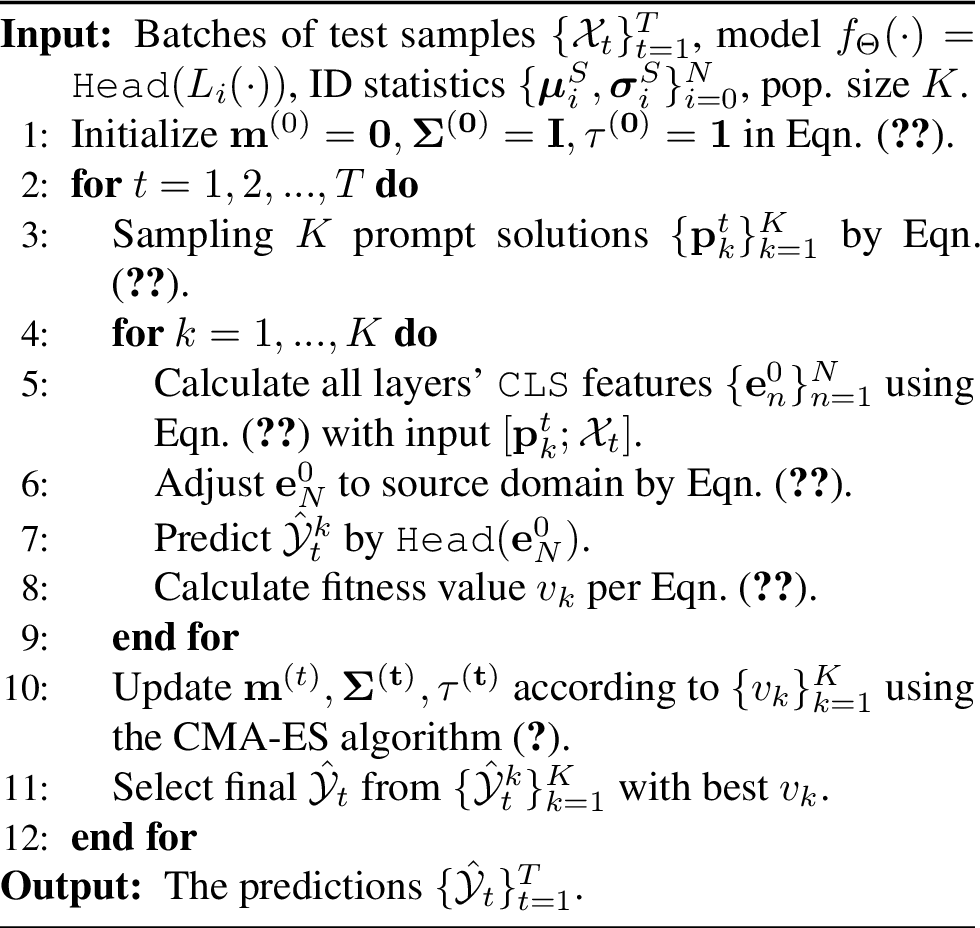

In this paper, we propose a novel test-time Forward-Only Adaptation (FOA) method, which is also free of model updating, to boost the practicality of test-time adaptation in various real-world scenarios. As shown in Figure 1, FOA conducts adaptation on both the input level and the output feature level. 1) Input level: FOA inserts a new prompt as the model’s input, and then solely updates this prompt online for out-of-distribution (OOD) generalization, employing a derivative-free optimizer coupled with a specially designed unsupervised fitness function (cf. Section 3.1). 2) Output feature level: a back-to-source activation shifting strategy further boosts adaptation by directly refining the activation features of the final layer, aligning them from the OOD domain back to the source in-distribution (ID) domain (cf. Section 3.2). We summarize the pseudo-code of FOA in Algorithm 2.

3.1 Forward-Only Prompt Adaptation

Unlike prior TTA methods that update model weights using backpropagation, we aim to achieve the goal of test-time out-of-distribution generalization in a backpropagation-free manner. To this end, we explore a derivative-free optimizer for TTA, namely the covariance matrix adaptation (CMA) evolution strategy [28]. However, naively applying CMA to our TTA context is infeasible for two reasons. 1) For TTA, the model parameters that need updating are high-dimensional (even for methods like TENT [20] that only update the affine parameters of normalization layers), since deep models often have millions of parameters. This makes CMA intractable for direct deep model adaptation. 2) Conventional CMA methods rely on supervised offline learning, i.e., using ground-truth labels to assess the candidate solutions. In contrast, TTA operates without ground-truth labels and typically in an online setting, rendering conventional CMA methods inapplicable. We empirically illustrate these issues in Table 5.

To make CMA work in TTA, we introduce a new prompt as the model’s input (as in Figure 1 (a)) for updating, thereby reducing the dimension of the solution space and lowering the complexity of CMA optimization, while avoiding altering model weights. Then, we devise an unsupervised fitness function to provide consistent and reliable learning signals for the CMA optimization. We describe both in the following.

Figure 2: Forward-Only Adaptation (FOA).

CMA-Based Prompt Adaptation. Inspired by the effectiveness of continuous prompt learning in natural language processing, we add prompt embeddings at the beginning of the model’s input (i.e., the first layer) for test-time updating, while keeping all other model parameters frozen. In this way, the dimension of learnable parameters is significantly reduced and thus becomes compatible with CMA optimization. Formally, given a test sample \({\boldsymbol{x}}\sim Q({\boldsymbol{x}})\) and a ViT model \(f_\Theta(\cdot)=\texttt{Head}(L_i(\cdot))\), our goal is to find an optimal prompt \({\boldsymbol{p}}^*\): \[{\boldsymbol{p}}^*=\mathop{\mathrm{arg\,min}}_{{\boldsymbol{p}}}{\mathcal{L}}(f_{\Theta}({\boldsymbol{p}};{\boldsymbol{x}})),\tag{4}\] where \({\mathcal{L}}(\cdot)\) is a fitness function and \({\boldsymbol{p}}\in\mathbb{R}^{d\times N_p}\) consists of \(N_p\) prompt embeddings, each of dimension \(d\). We solve this problem by employing the derivative-free CMA.
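As a minimal sketch of how a flat search vector of length \(d N_p\) could be turned into \(N_p\) prompt embeddings and added to the token sequence, consider the helpers below. The function names are hypothetical, tokens are represented as plain Python lists, and placing the prompts directly after the CLS token is an assumption made for illustration; the text above only states that prompts are added at the beginning of the model's input.

```python
from typing import List

Vector = List[float]


def unflatten_prompt(flat: Vector, num_prompts: int, dim: int) -> List[Vector]:
    """Reshape a flat vector of length num_prompts * dim into prompt embeddings."""
    if len(flat) != num_prompts * dim:
        raise ValueError("flat vector length must equal num_prompts * dim")
    return [flat[k * dim:(k + 1) * dim] for k in range(num_prompts)]


def insert_prompts(tokens: List[Vector], prompts: List[Vector]) -> List[Vector]:
    """Build the first-layer input: CLS token, then prompts, then patch tokens.

    tokens[0] is taken to be the CLS embedding (assumed ordering).
    """
    return [tokens[0]] + prompts + tokens[1:]


# Toy example: d = 4, N_p = 3, one CLS token plus two patch tokens.
dim, num_prompts = 4, 3
flat_prompt = [0.1 * k for k in range(dim * num_prompts)]
tokens = [[1.0] * dim, [2.0] * dim, [3.0] * dim]
model_input = insert_prompts(tokens, unflatten_prompt(flat_prompt, num_prompts, dim))
```

Only `flat_prompt` would be optimized at test time; the token embeddings and all model weights stay frozen.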

Fitness Function for CMA. To effectively solve Eqn. (4) using CMA, the primary challenge lies in developing an appropriate fitness \({\mathcal{L}}(\cdot)\) to evaluate a given solution \({\boldsymbol{p}}\). A direct approach might involve adopting existing TTA learning objectives, such as prediction entropy [20]. However, this approach encounters limitations when dealing with severely corrupted OOD samples, where model predictions are highly uncertain. In such cases, entropy-based measures struggle to provide consistent and reliable signals for CMA optimization. Moreover, focusing solely on optimizing entropy can lead the prompts towards degenerate and trivial solutions, as in Tables 2 and 5. To address these issues, we devise a new fitness to regularize the activation distribution statistics of OOD testing samples (forward propagated with optimized prompts), ensuring they are closely aligned with those from ID samples. This fitness operates at the distribution level, circumventing the noise inherent in uncertain predictions and thereby offering better stability.

Statistics calculation. Before TTA, we first collect a small set of source in-distribution samples \({\mathcal{D}}_S=\{{\boldsymbol{x}}_q\}_{q=1}^{Q}\) and feed them into the model to obtain the corresponding CLS tokens \(\{{\boldsymbol{e}}_i^0\}_{i=1}^N\). Then, we calculate the mean and standard deviations of CLS tokens \(\{{\boldsymbol{e}}_i^0\}_{i=1}^N\) over all samples in \({\mathcal{D}}_S\) to obtain source in-distribution statistics \(\{\boldsymbol{\mu}_i^S, {\boldsymbol{\sigma}}_i^S\}_{i=0}^N\). Note that we only need a small number of in-distribution samples without labels for calculation, e.g., 32 samples are sufficient for the ImageNet dataset. Please refer to Figure 3 (c) for the sensitivity analyses regarding this number. Similarly, we calculate the target testing statistics \(\{\boldsymbol{\mu}_i({\mathcal{X}}_t), {\boldsymbol{\sigma}}_i({\mathcal{X}}_t)\}_{i=0}^N\) over the current batch of testing samples \({\mathcal{X}}_t\).

Based on the above, the overall fitness function for the \(t\)-th test batch samples \({\mathcal{X}}_t\) is then given by: \[\begin{align} {\mathcal{L}}(f_{\Theta}({\boldsymbol{p}};{\mathcal{X}}_t)) = &\sum_{{\boldsymbol{x}}\in{\mathcal{X}}_t}\sum_{c\in{\mathcal{C}}}-\hat{y}_c\log \hat{y}_c \nonumber \\ &+ \lambda \sum_{i=1}^N ||{\boldsymbol{\mu}}_i({\mathcal{X}}_t)-{\boldsymbol{\mu}}_i^S||_2+||\boldsymbol{\sigma}_i({\mathcal{X}}_t)-\boldsymbol{\sigma}_i^S||_2, \tag{5} \end{align}\] where \(\hat{y}_c\) is the \(c\)-th element of \(\hat{{\boldsymbol{y}}}\) in Eqn. (2) w.r.t. sample \({\boldsymbol{x}}\), and \(\lambda\) is a trade-off parameter.
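The fitness in Eqn. (5) can be sketched in a few lines of plain Python. This is an illustrative sketch rather than the authors' implementation: the function names are hypothetical, features are nested lists indexed as `[layer][sample][dim]`, the standard deviation uses the population form (dividing by the batch size), and \(\lambda\) together with the sum over layers is assumed to apply to both the mean and the standard-deviation terms.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]


def mean_std(features: Sequence[Vector]) -> Tuple[Vector, Vector]:
    """Per-dimension mean and (population) standard deviation over samples."""
    n, d = len(features), len(features[0])
    mu = [sum(f[j] for f in features) / n for j in range(d)]
    sigma = [math.sqrt(sum((f[j] - mu[j]) ** 2 for f in features) / n) for j in range(d)]
    return mu, sigma


def l2_distance(a: Vector, b: Vector) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def entropy(probs: Vector, eps: float = 1e-12) -> float:
    """Shannon entropy of one predicted class distribution."""
    return -sum(p * math.log(p + eps) for p in probs)


def fitness(probs_batch, cls_per_layer, source_stats, lam: float = 0.4) -> float:
    """Entropy term plus lam times the activation statistics discrepancy.

    probs_batch:   softmax outputs, one vector per test sample
    cls_per_layer: CLS features of the test batch, one list of vectors per layer
    source_stats:  (mean, std) pair per layer, computed once on source samples
    """
    entropy_term = sum(entropy(p) for p in probs_batch)
    discrepancy = 0.0
    for feats, (mu_s, sigma_s) in zip(cls_per_layer, source_stats):
        mu_t, sigma_t = mean_std(feats)
        discrepancy += l2_distance(mu_t, mu_s) + l2_distance(sigma_t, sigma_s)
    return entropy_term + lam * discrepancy
```

A candidate prompt that yields confident predictions and test statistics close to the source statistics receives a low (good) fitness value; uncertain predictions or drifting statistics raise it.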

CMA Evolution Strategy. Instead of directly optimizing the prompt \({\boldsymbol{p}}\) (in Eqn. (4)) itself, we learn a multivariate normal distribution of \({\boldsymbol{p}}\) using a covariance matrix adaptation (CMA) evolution strategy [30], [31]. Here, we adopt CMA as it is one of the most successful and widely used evolutionary algorithms for non-convex black-box optimization in high-dimensional continuous solution spaces. To be specific, in each iteration \(t\) (the \(t\)-th batch of test samples \({\mathcal{X}}_t\)), CMA samples a set/population of new candidate solutions/prompts (also known as individuals in evolutionary algorithms) from a parameterized multivariate normal distribution: \[{\boldsymbol{p}}_k^{(t)} \sim {\boldsymbol{m}}^{(t)} + \tau^{(t)}{\mathcal{N}}(\boldsymbol{0}, \boldsymbol{\Sigma}^{(t)}).\tag{6}\] Here, \(k=1,...,K\) and \(K\) is the population size. \({\boldsymbol{m}}^{(t)}\in\mathbb{R}^{dN_p}\) is the mean vector of the search distribution at iteration step \(t\), \(\tau^{(t)}\in\mathbb{R}_+\) is the overall standard deviation that controls the step size, and \(\boldsymbol{\Sigma}^{(t)}\) is the covariance matrix that determines the shape of the distribution ellipsoid. Upon sampling the prompts \(\{{\boldsymbol{p}}_k^{(t)}\}_{k=1}^K\), we feed each \({\boldsymbol{p}}_k^{(t)}\) along with the test batch \({\mathcal{X}}_t\) into the model to yield a fitness value \(v_k\) associated with \({\boldsymbol{p}}_k^{(t)}\). Then, we update the distribution parameters \({\boldsymbol{m}}^{(t)}\), \(\tau^{(t)}\) and \(\boldsymbol{\Sigma}^{(t)}\) based on the ranking of \(\{v_k\}_{k=1}^K\) by maximizing the likelihood of previously successful candidate solutions (cf. [28] for more algorithm details).
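The sample, evaluate, rank, and update loop around Eqn. (6) can be sketched as follows. This is a deliberately simplified evolution strategy, not the full CMA-ES of [28]: it assumes a diagonal covariance, keeps the step size \(\tau\) fixed, and uses a basic rank-weighted update without evolution paths. All function names and the learning rate `lr` are hypothetical choices for illustration.

```python
import math
import random
from typing import Callable, List, Tuple

Vector = List[float]


def sample_population(mean: Vector, tau: float, diag_var: Vector,
                      pop_size: int, rng: random.Random) -> List[Vector]:
    """Draw candidates p_k = m + tau * N(0, diag(diag_var)), as in Eqn. (6)."""
    return [
        [m + tau * math.sqrt(v) * rng.gauss(0.0, 1.0) for m, v in zip(mean, diag_var)]
        for _ in range(pop_size)
    ]


def es_step(mean: Vector, tau: float, diag_var: Vector,
            fitness_fn: Callable[[Vector], float], pop_size: int,
            rng: random.Random, lr: float = 0.3) -> Tuple[Vector, Vector]:
    """One simplified iteration: sample, rank by fitness, update mean and variance."""
    population = sample_population(mean, tau, diag_var, pop_size, rng)
    ranked = sorted(population, key=fitness_fn)  # lower fitness is better
    elite = ranked[: max(1, pop_size // 2)]
    # Log-rank weights: better-ranked candidates contribute more.
    raw = [math.log(len(elite) + 0.5) - math.log(i + 1) for i in range(len(elite))]
    total = sum(raw)
    weights = [r / total for r in raw]
    new_mean = [sum(w * e[j] for w, e in zip(weights, elite)) for j in range(len(mean))]
    # Blend the old variance with the weighted second moment of successful steps.
    new_var = [
        (1.0 - lr) * v
        + lr * sum(w * ((e[j] - mean[j]) / tau) ** 2 for w, e in zip(weights, elite))
        for j, v in enumerate(diag_var)
    ]
    return new_mean, new_var
```

In the TTA setting, `fitness_fn` would wrap one forward pass of the frozen model on the current batch with the candidate prompt, so each iteration costs \(K\) forward passes and no backward pass. For real use, a maintained CMA-ES implementation would likely be preferable to this sketch.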

3.2 Back-to-Source Activation Shifting

In this section, we propose a “back-to-source activation shifting” mechanism to further boost the adaptation performance at the feature level, in cases where the above online prompt adaptation is inadequate. This shifting scheme directly alters the model’s activations during inference and is notable for not requiring backpropagation. Specifically, given a test sample \({\boldsymbol{x}}\), we move its corresponding \(N\)-th layer’s CLS feature \({\boldsymbol{e}}_N^0\) (as shown in Eqn. (2), this feature is the input of the final task head), shifting it along the direction from the out-of-distribution domain towards the in-distribution domain: \[{\boldsymbol{e}}_N^0 \leftarrow {\boldsymbol{e}}_N^0 + \gamma {\boldsymbol{d}}, \tag{7}\] where \({\boldsymbol{d}}\) is a shifting direction and \(\gamma\) is a step size. We define \({\boldsymbol{d}}\) as the vector extending from the center of out-of-distribution testing features to the center of source in-distribution features. In our online TTA setting, the center of testing features changes dynamically as more testing samples arrive. Thus, we update the shifting direction \({\boldsymbol{d}}\) online by: \[{\boldsymbol{d}}_t = {\boldsymbol{\mu}}_N^S - {\boldsymbol{\mu}}_N(t), \tag{8}\] where \({\boldsymbol{\mu}}_N^S\) is the mean of the \(N\)-th (final) layer CLS feature \({\boldsymbol{e}}_N^0\), calculated over source in-distribution samples \({\mathcal{D}}_S\) (the same ones used in Eqn. (5)). \({\boldsymbol{\mu}}_N(t)\) approximates the overall test set statistics by an exponential moving average of statistics computed on the sequentially arriving test samples. We define the mean estimate of \({\boldsymbol{e}}_N^0\) in iteration \(t\) (the \(t\)-th batch \({\mathcal{X}}_t\)) as: \[{\boldsymbol{\mu}}_N(t) = \alpha {\boldsymbol{\mu}}_N({\mathcal{X}}_t) + (1-\alpha){\boldsymbol{\mu}}_{N}(t-1), \tag{9}\] where \({\boldsymbol{\mu}}_N({\mathcal{X}}_t)\) is the mean of the \(N\)-th layer’s CLS feature calculated over the \(t\)-th test batch \({\mathcal{X}}_t\). \(\alpha\in[0,1]\) is a moving average factor and we set it to 0.1.
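Eqns. (7) to (9) amount to a running mean plus a vector addition, which the following sketch makes concrete. The function names are hypothetical, and initializing the running mean with the first batch mean is an assumption, since the text does not specify \({\boldsymbol{\mu}}_N(0)\).

```python
from typing import List, Optional

Vector = List[float]


def ema_update(prev_mean: Optional[Vector], batch_mean: Vector,
               alpha: float = 0.1) -> Vector:
    """Eqn. (9): exponential moving average of the test CLS feature mean.

    When no previous estimate exists, start from the batch mean (assumption).
    """
    if prev_mean is None:
        return list(batch_mean)
    return [alpha * b + (1.0 - alpha) * p for b, p in zip(batch_mean, prev_mean)]


def shift_activation(cls_feature: Vector, source_mean: Vector,
                     test_mean: Vector, gamma: float = 1.0) -> Vector:
    """Eqns. (7) and (8): move the final-layer CLS feature by gamma * (mu_S - mu_test)."""
    return [e + gamma * (s - t) for e, s, t in zip(cls_feature, source_mean, test_mean)]


# Toy example: the running test mean sits at 4, the source mean at 1.
running_mean = ema_update(None, [4.0, 4.0])
shifted = shift_activation([5.0, 5.0], [1.0, 1.0], running_mean, gamma=1.0)
```

The shifted feature is then fed to the unchanged classification head, so the operation needs neither gradients nor weight updates.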

4 Experiments

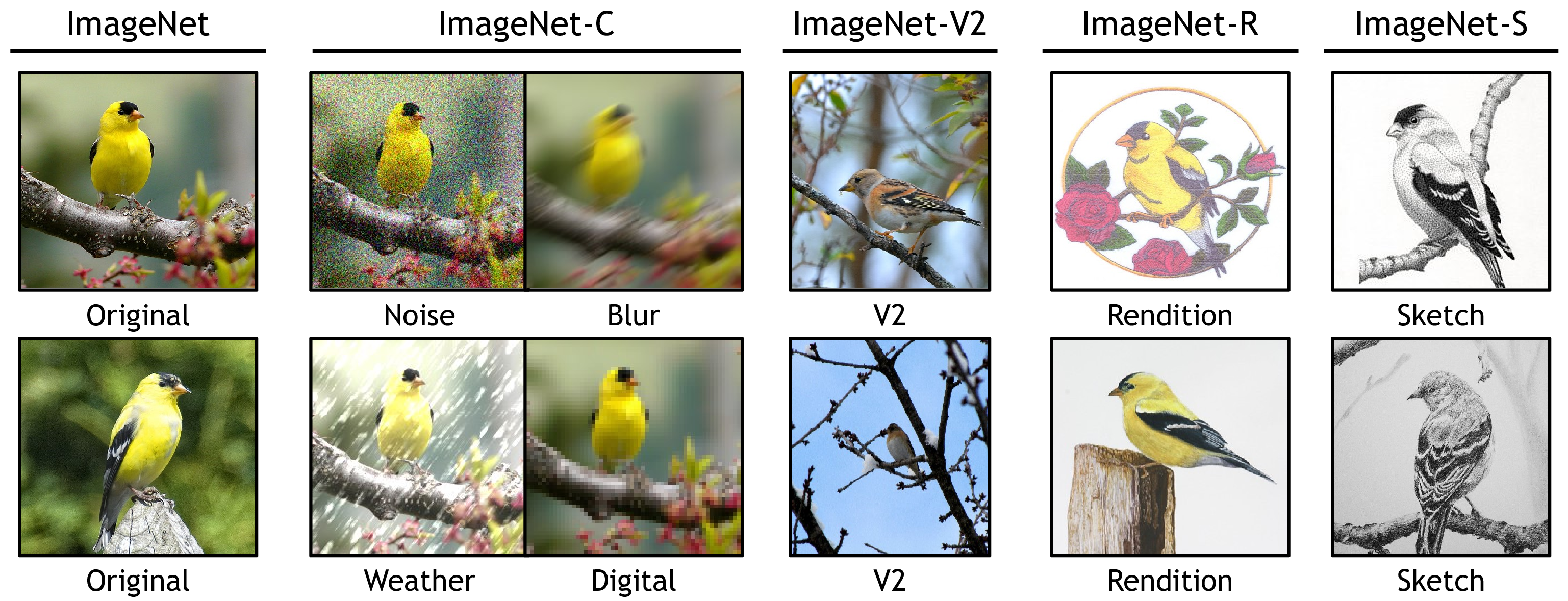

Datasets and Models. We conduct experiments on four benchmarks for OOD generalization, i.e., ImageNet-C [1] (contains corrupted images in 15 types of 4 main categories and each type has 5 severity levels), ImageNet-R (various artistic renditions of 200 ImageNet classes) [32], ImageNet-V2 [33], ImageNet-Sketch [34]. We use ViT-Base [29] as the source model for all experiments, including both full precision and quantized ViT models. The models are trained on the source ImageNet-1K training set and the model weights are obtained from the timm repository [35]. We adopt PTQ4ViT [36] for 8-bit and 6-bit model quantization. Unless stated otherwise, all ViT-Base models used in this paper are full precision with 32 bits.

Compared Methods. We compare our proposed FOA with two categories of TTA methods. 1) Gradient-free methods: LAME [19] is a post-training adaptation method by adjusting the model’s output probabilities; T3A [13] updates a prototype-based classifier during test time. 2) Gradient-based methods: TENT [20] optimizes the affine parameters of norm layers by minimizing the prediction entropy of test samples and SAR [12] further optimizes the prediction entropy via active sample selection and a sharpness-aware optimizer; CoTTA [37] adapts a given model via augmentation-based consistency maximization and a teacher-student learning scheme.

Implementation Details. We set the number of prompt embeddings \(N_p\) to 3 and initialize prompts with uniform initialization. We set the batch size (\(BS\)) to 64 by following TENT and SAR for fair comparisons. The population size \(K\) is set to \(28=4+3\times\log(\text{prompt dim})\) by following [28], and \(\lambda\) in Eqn. (5) is set to \(0.4\times BS/64\) on ImageNet-C/V2/Sketch and \(0.2\times BS/64\) on ImageNet-R to balance the magnitude of the two losses. We use the validation set of ImageNet-1K to estimate source ID statistics. The step size \(\gamma\) in Eqn. (7) is set to 1. The effect of each hyperparameter is investigated in Section 4.3 and Appendix 8. More details of the compared methods are given in Appendix 7.2.

Evaluation Metrics. 1) Classification Accuracy (%, \(\uparrow\)) on OOD testing samples, i.e., ImageNet-C/R/V2/Sketch. 2) Expected Calibration Error (ECE) (%, \(\downarrow\)) measures the difference between predicted probabilities and actual outcomes in a probabilistic model [38]. ECE is important for evaluating the trustworthiness of model predictions, such as in medical diagnostics and autonomous driving.
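For readers unfamiliar with ECE, the sketch below shows the commonly used binned estimator: predictions are grouped by confidence, and the gap between average confidence and accuracy in each bin is averaged with weights proportional to bin size. The number of bins (15) and the exact bin boundaries are assumptions; the paper does not state which variant it uses.

```python
from typing import List, Sequence


def expected_calibration_error(confidences: Sequence[float],
                               correct: Sequence[bool],
                               num_bins: int = 15) -> float:
    """Binned ECE as a fraction in [0, 1]; multiply by 100 for a percentage.

    confidences: predicted probability of the chosen class, one per sample
    correct:     whether each prediction matched the ground-truth label
    """
    n = len(confidences)
    bins: List[list] = [[] for _ in range(num_bins)]
    for conf, ok in zip(confidences, correct):
        # Equal-width bins over [0, 1]; a confidence of 1.0 goes to the last bin.
        idx = min(int(conf * num_bins), num_bins - 1)
        bins[idx].append((conf, ok))
    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        ece += (len(members) / n) * abs(accuracy - avg_conf)
    return ece
```

A model that reports 80% confidence and is right 80% of the time has an ECE near zero, whereas one that is always fully confident but right only half the time has an ECE of 0.5.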

Column groups: Noise (Gauss., Shot, Impul.), Blur (Defoc., Glass, Motion, Zoom), Weather (Snow, Frost, Fog, Brit.), Digital (Contr., Elas., Pix., JPEG), Average (Acc., ECE). BP indicates whether the method uses backward propagation.

| Method | BP | Gauss. | Shot | Impul. | Defoc. | Glass | Motion | Zoom | Snow | Frost | Fog | Brit. | Contr. | Elas. | Pix. | JPEG | Acc. | ECE |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| NoAdapt | No | 56.8 | 56.8 | 57.5 | 46.9 | 35.6 | 53.1 | 44.8 | 62.2 | 62.5 | 65.7 | 77.7 | 32.6 | 46.0 | 67.0 | 67.6 | 55.5 | 10.5 |
| LAME | No | 56.5 | 56.5 | 57.2 | 46.4 | 34.7 | 52.7 | 44.2 | 58.4 | 61.5 | 63.1 | 77.4 | 24.7 | 44.6 | 66.6 | 67.2 | 54.1 | 11.0 |
| T3A | No | 56.4 | 56.9 | 57.3 | 47.9 | 37.8 | 54.3 | 46.9 | 63.6 | 60.8 | 68.5 | 78.1 | 38.3 | 50.0 | 67.6 | 69.1 | 56.9 | 26.8 |
| TENT | Yes | 60.3 | 61.6 | 61.8 | 59.2 | 56.5 | 63.5 | 59.2 | 54.3 | 64.5 | 2.3 | 79.1 | 67.4 | 61.5 | 72.5 | 70.6 | 59.6 | 18.5 |
| CoTTA | Yes | 63.6 | 63.8 | 64.1 | 55.5 | 51.1 | 63.6 | 55.5 | 70.0 | 69.4 | 71.5 | 78.5 | 9.7 | 64.5 | 73.4 | 71.2 | 61.7 | 6.5 |
| SAR | Yes | 59.2 | 60.5 | 60.7 | 57.5 | 55.6 | 61.8 | 57.6 | 65.9 | 63.5 | 69.1 | 78.7 | 45.7 | 62.4 | 71.9 | 70.3 | 62.7 | 7.0 |
| FOA (ours) | No | 61.5 | 63.2 | 63.3 | 59.3 | 56.7 | 61.4 | 57.7 | 69.4 | 69.6 | 73.4 | 81.1 | 67.7 | 62.7 | 73.9 | 73.0 | 66.3 | 3.2 |

Accuracy (%, \(\uparrow\)) and ECE (%, \(\downarrow\)) on ImageNet-R (R), ImageNet-V2 (V2) and ImageNet-Sketch (Sketch). BP indicates whether the method uses backward propagation.

| Method | BP | Acc. R | Acc. V2 | Acc. Sketch | Acc. Avg. | ECE R | ECE V2 | ECE Sketch | ECE Avg. |
|---|---|---|---|---|---|---|---|---|---|
| NoAdapt | No | 59.5 | 75.4 | 44.9 | 59.9 | 2.5 | 5.6 | 7.9 | 5.3 |
| LAME | No | 59.0 | 75.2 | 44.4 | 59.6 | 2.5 | 5.0 | 9.7 | 5.7 |
| T3A | No | 58.0 | 75.5 | 48.5 | 60.7 | 25.9 | 23.4 | 37.4 | 28.9 |
| TENT | Yes | 63.9 | 75.2 | 49.1 | 62.7 | 7.2 | 4.5 | 22.8 | 11.5 |
| CoTTA | Yes | 63.5 | 75.4 | 50.0 | 62.9 | 2.8 | 3.4 | 17.9 | 8.0 |
| SAR | Yes | 63.3 | 75.1 | 48.7 | 62.4 | 3.0 | 2.7 | 16.5 | 7.4 |
| FOA (ours) | No | 63.8 | 75.4 | 49.9 | 63.0 | 2.7 | 3.2 | 7.8 | 4.6 |

4.1 Results on Full Precision Models

In this section, we compare our FOA with existing state-of-the-art TTA methods on the full precision ViT-Base model. From the ImageNet-C results table above, we have the following observations. 1) Our FOA achieves the best average accuracy and ECE over 15 different corruption types, suggesting its effectiveness. 2) Compared with NoAdapt, gradient-free methods LAME and T3A obtain slight performance gains or perform even worse, as they do not update core model weights and thus may suffer from limited learning capacity. Here, LAME performs worse than NoAdapt, because it only adjusts the model output logits and is not very effective when the OOD test sample stream does not suffer from prior label shifts, which is consistent with the results reported by LAME itself. 3) Compared with LAME and T3A, gradient-based methods (TENT, CoTTA and SAR) explicitly modify model parameters by optimizing unsupervised/self-supervised losses, and thus achieve much better performance, e.g., the average accuracy of 56.9% (T3A) vs. 62.7% (SAR). 4) Without using any backpropagation, our FOA outperforms gradient-based SAR by 3.6% in average accuracy and 3.8% in average ECE, demonstrating its advantage for deployment on lightweight devices (e.g., smartphones and FPGAs) and quantized models. 5) FOA achieves much lower average ECE compared with BP-based methods, e.g., 18.5% (TENT) vs. 3.2% (FOA). This mainly benefits from our activation discrepancy regularization (in Eqn. (5)), which alleviates the error accumulation issue of prior methods that may employ imprecise pseudo labels or entropy for learning. Finally, from the ImageNet-R/V2/Sketch results table above, our FOA achieves the best or comparable performance w.r.t. both accuracy and ECE, further suggesting its effectiveness.

4.2 Results on Quantized Models

Column groups: Noise (Gauss., Shot, Impul.), Blur (Defoc., Glass, Motion, Zoom), Weather (Snow, Frost, Fog, Brit.), Digital (Contr., Elas., Pix., JPEG), Average (Acc., ECE).

| Model | Method | Gauss. | Shot | Impul. | Defoc. | Glass | Motion | Zoom | Snow | Frost | Fog | Brit. | Contr. | Elas. | Pix. | JPEG | Acc. | ECE |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 8-bit | NoAdapt | 55.8 | 55.8 | 56.5 | 46.7 | 34.7 | 52.1 | 42.5 | 60.8 | 61.4 | 66.7 | 76.9 | 24.6 | 44.7 | 65.8 | 66.7 | 54.1 | 10.8 |
| 8-bit | T3A | 55.6 | 55.7 | 55.7 | 45.8 | 34.4 | 51.1 | 41.2 | 59.5 | 61.9 | 66.8 | 76.4 | 45.5 | 43.4 | 65.6 | 67.5 | 55.1 | 25.9 |
| 8-bit | FOA (ours) | 60.7 | 61.4 | 61.3 | 57.2 | 51.5 | 59.4 | 51.3 | 68.0 | 67.3 | 72.4 | 80.3 | 63.2 | 57.0 | 72.0 | 69.8 | 63.5 | 3.8 |
| 6-bit | NoAdapt | 44.2 | 42.0 | 44.8 | 39.8 | 28.9 | 43.4 | 34.7 | 53.2 | 59.8 | 59.0 | 75.1 | 27.4 | 39.0 | 59.1 | 65.3 | 47.7 | 9.9 |
| 6-bit | T3A | 43.3 | 41.3 | 42.7 | 29.1 | 23.4 | 38.9 | 30.0 | 49.4 | 58.3 | 60.2 | 73.8 | 31.0 | 36.3 | 58.0 | 65.2 | 45.4 | 30.1 |
| 6-bit | FOA (ours) | 53.2 | 51.8 | 54.6 | 49.6 | 38.8 | 51.0 | 44.8 | 60.3 | 65.0 | 68.8 | 76.7 | 39.5 | 46.6 | 67.3 | 68.6 | 55.8 | 5.5 |

In practical applications, deep models are often deployed on edge devices with efficiency considerations, undergoing a process known as quantization. These devices, constrained by limited resources, typically do not support backward propagation due to its high memory and computational demands. Consequently, traditional gradient-based TTA methods like TENT, CoTTA, and SAR are not viable in such settings. In contrast, our FOA is applicable to these quantized models. We demonstrate this by applying FOA to quantized ViT models and benchmarking it against T3A. As indicated in the quantized-model results table above, FOA outperforms T3A significantly in terms of both accuracy and ECE on 8-bit and 6-bit models. Notably, FOA with an 8-bit ViT surpasses the performance of the gradient-based TENT method using a full precision 32-bit ViT on ImageNet-C, achieving 63.5% accuracy (our FOA, 8-bit) vs. 59.6% (TENT, 32-bit). These results collectively underscore the advantage of our FOA in such quantized model deployment scenarios.

4.3 Ablation Studies

Effectiveness of Components in FOA. As noted above, naively applying CMA with entropy minimization in TTA is infeasible, and thus we propose an activation distribution discrepancy-based fitness function to guide the stable learning of CMA and an activation shifting scheme to boost the adaptation performance. We ablate them in Table 2. Firstly, CMA with the Entropy fitness alone performs worse than “NoAdapt”, which verifies the necessity of devising a new fitness function. Secondly, our Activation Discrepancy fitness provides stable learning signals for CMA and improves the adaptation accuracy on ImageNet-C from 55.5% to 63.4%. Thirdly, the Activation Shifting scheme alone also improves the accuracy from 55.5% to 59.1%, suggesting its effectiveness. Lastly, by incorporating Entropy and Activation Discrepancy into a single fitness function and adding the Activation Shifting scheme, our FOA achieves the best performance, i.e., 66.3% average accuracy and 3.2% average ECE on ImageNet-C.

| Entropy | Act. Discrepancy | Act. Shifting | Acc. (%, \(\uparrow\)) | ECE (%, \(\downarrow\)) |
|---|---|---|---|---|
| No (NoAdapt) | No | No | 55.5 | 10.5 |
| Yes | No | No | 44.9 | 36.8 |
| No | Yes | No | 63.4 | 9.4 |
| No | No | Yes | 59.1 | 12.7 |
| | | | 63.8 | 9.9 |
| | | | 65.4 | 3.3 |
| Yes | Yes | Yes | 66.3 | 3.2 |

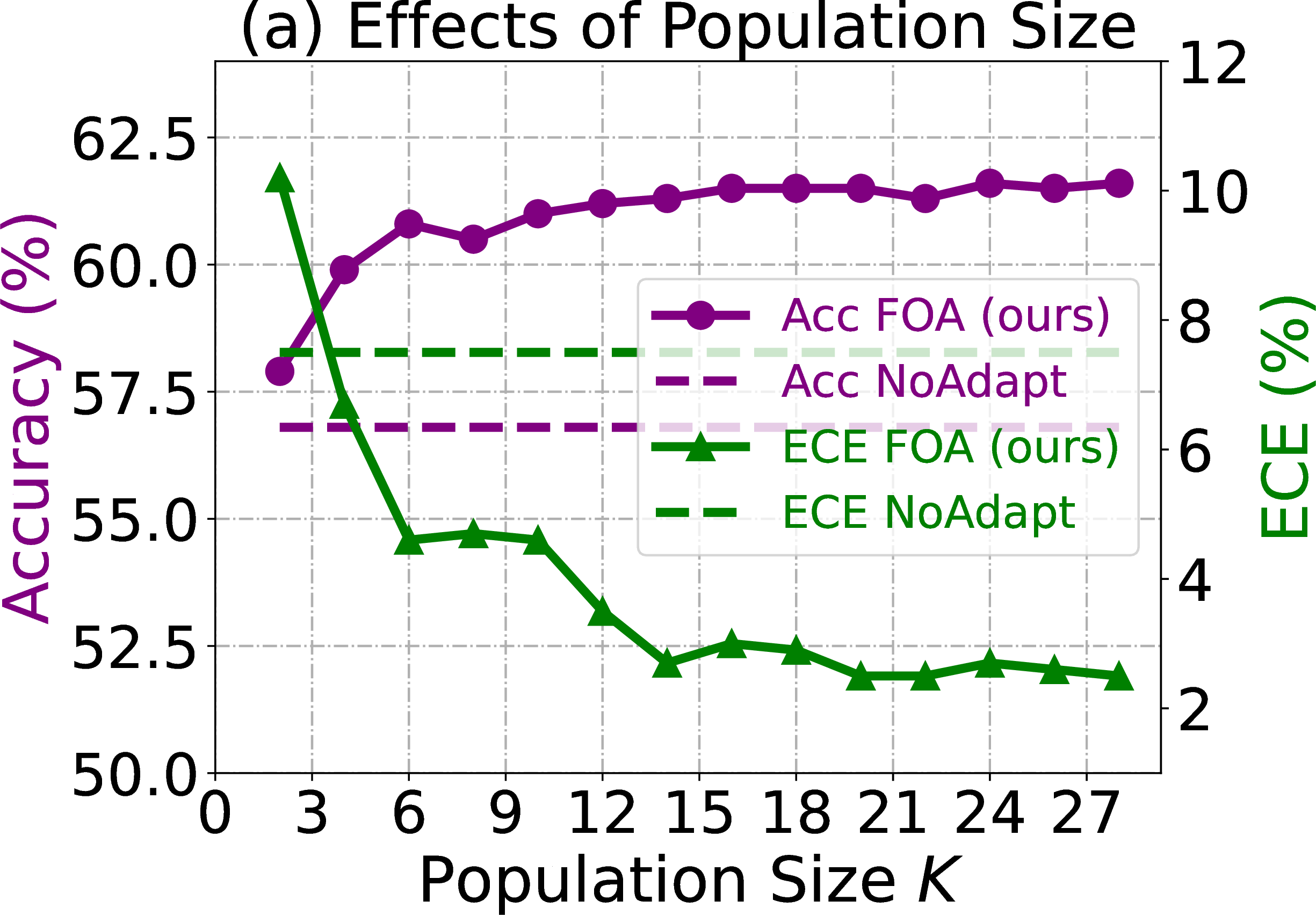

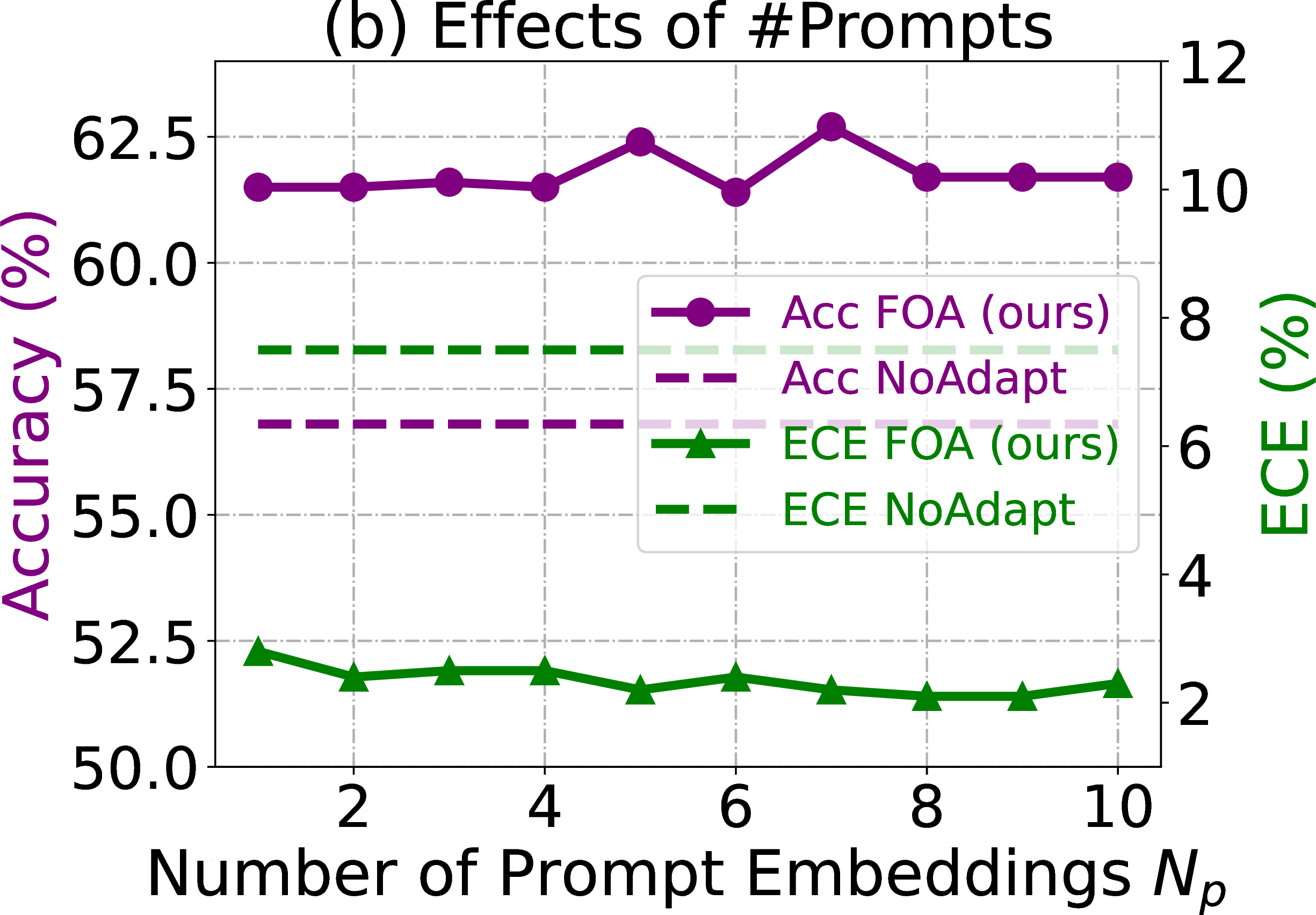

Figure 3: Parameter sensitivity analyses of our FOA. Experiments are conducted on ImageNet-C (Gaussian Noise, level 5) with ViT-Base.

Effects of Population Size \(K\) in CMA (Eqn. (6)). We evaluate our FOA with different \(K\) from {2, 3, ..., 28}. From Figure 3 (a), the performance of our FOA converges when \(K>15\). Notably, at \(K=2\), FOA outperforms both NoAdapt and gradient-free T3A, e.g., 57.9% (FOA) vs. 56.4% (T3A) regarding accuracy. With \(K=6\), FOA surpasses the gradient-based TENT, i.e., 60.8% accuracy (FOA) vs. 60.3% (TENT). These results demonstrate the effectiveness of FOA under small \(K\) values (the smaller \(K\) is, the higher the efficiency, since each candidate requires one forward pass per batch). Please refer to Table 4 for efficiency comparisons.

Effects of Number of Prompt Embeddings \(N_p\) in FOA. In our FOA, we add prompt embeddings in the input layer for CMA learning. Here, we evaluate FOA with different values of \(N_p\), selected from {1, 2, ..., 10}. In Figure 3 (b), we observe that the performance of FOA exhibits only minor variations across different \(N_p\), showing a low sensitivity to \(N_p\). Setting \(N_p\) to 5 or 7 results in marginally better performance. However, for all main experiments, we simply fix \(N_p\) at 3 and do not carefully tune it, as access to testing data for parameter tuning is typically unavailable in practice.

Effects of #Samples for Calculating \(\{\boldsymbol{\mu}_i^S, {\boldsymbol{\sigma}}_i^S\}_{i=0}^N\). As described in Section 3.1, the calculation of source training statistics \(\{\boldsymbol{\mu}_i^S, {\boldsymbol{\sigma}}_i^S\}_{i=0}^N\) involves a small set of unlabeled in-distribution samples, which can be collected via existing OOD detection techniques [39] or taken directly from the training samples. Here, we investigate the effect of the number of samples needed, selected from {16, 32, 64, 100, 200, 400, 800, 1600}. From Figure 3 (c), our FOA achieves stable performance once the number of samples exceeds 32, regarding both accuracy and ECE. These results show that our FOA needs only a small number of in-distribution samples, which are easy to obtain in practice.
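A minimal sketch of how such per-layer source statistics could be computed from a handful of in-distribution samples is given below. It assumes that each layer contributes one feature vector per sample (e.g., a CLS token feature) and that the population standard deviation is used; the helper names `layer_stats` and `source_statistics` are hypothetical.

```python
import math

def layer_stats(features):
    """Per-dimension mean and standard deviation for one layer.

    features: list of equal-length vectors, one per in-distribution sample.
    Assumption: population (biased) standard deviation.
    """
    n, dim = len(features), len(features[0])
    mean = [sum(f[d] for f in features) / n for d in range(dim)]
    var = [sum((f[d] - mean[d]) ** 2 for f in features) / n
           for d in range(dim)]
    return mean, [math.sqrt(v) for v in var]

def source_statistics(per_layer_features):
    """Return [(mu_i, sigma_i)] with one pair per layer i."""
    return [layer_stats(feats) for feats in per_layer_features]

# Toy example: one layer, two samples, two feature dimensions.
stats = source_statistics([[[1.0, 2.0], [3.0, 6.0]]])
print(stats)  # [([2.0, 4.0], [1.0, 2.0])]
```

Since these statistics are computed once and then kept fixed, the number of samples only affects how accurately they are estimated, not the cost of adaptation at test time.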

4.4 More Discussions

Results on Single Sample Adaptation (Batch Size = 1). In our FOA, the prompt is learned over a batch of test samples each time. This poses a challenge when the batch size is limited to one, since the fitness function requires the mean and variance of features, which may not be feasible to estimate from a single sample. Nonetheless, this issue is not significantly problematic in real-world applications. We propose an interval update strategy, referred to as FOA-I. Specifically, given an ongoing stream of test data, we update the prompts (i.e., perform CMA optimization) only after encountering a pre-defined number of samples, denoted as \(I\). During this interval, we temporarily store either the relevant features of all CLS tokens or the original images until the next update. From Table [tab:foa_on_single_sample_adaptation], our FOA-I is effective across various values of \(I\). Notably, FOA-I with an interval of \(I\small{=}4\) surpasses the accuracy of TENT (with batch size 64), suggesting its effectiveness. Interestingly, FOA-I with a smaller interval (e.g., \(I\small{=}4\)) performs better than with \(I\small{=}64\). This is because a smaller \(I\) leads to more CMA iteration steps for a given test data stream.
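The interval update strategy can be sketched as a small buffer that collects per-sample features and triggers one update every \(I\) samples. The class name `IntervalUpdater` and the `update_fn` hook (which would perform one CMA iteration in practice) are hypothetical and only illustrate the control flow.

```python
class IntervalUpdater:
    """Buffer single-sample features and trigger an update every I samples."""

    def __init__(self, interval, update_fn):
        self.interval = interval
        self.update_fn = update_fn  # hypothetical hook, e.g. one CMA iteration
        self.buffer = []
        self.num_updates = 0

    def observe(self, feature):
        """Store one sample's feature; update once the interval is reached."""
        self.buffer.append(feature)
        if len(self.buffer) == self.interval:
            self.update_fn(self.buffer)
            self.num_updates += 1
            self.buffer = []

def count_updates(stream_length, interval):
    """Number of updates triggered by a stream of the given length."""
    updater = IntervalUpdater(interval, update_fn=lambda feats: None)
    for i in range(stream_length):
        updater.observe([float(i)])
    return updater.num_updates

print(count_updates(64, 4))   # 16
print(count_updates(64, 64))  # 1
```

For the same stream of 64 samples, \(I=4\) yields 16 updates whereas \(I=64\) yields a single one, which illustrates why a smaller interval gives CMA more iteration steps.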

Run-Time Memory Usage. The memory usage during runtime is influenced by both the model and the batch size (\(BS\)). We detail the memory consumption of different methods with different \(BS\) in Table 3. Our FOA exhibits a marginally higher memory consumption than NoAdapt across different \(BS\), due to the need to maintain certain feature statistics, e.g., FOA requires 3 MB more memory than NoAdapt for \(BS=4\). Notably, FOA significantly lowers memory usage compared to existing gradient-based TTA methods, e.g., 832 MB (FOA) vs. 5,165 MB (TENT) / 16,836 MB (CoTTA) under \(BS=64\). Moreover, our FOA-I V1/V2, the variants introduced above for single sample adaptation (\(BS=1\)) with different update intervals \(I\), further decrease the memory footprint of FOA. Lastly, applying FOA to quantized models yields additional memory savings proportional to the quantization level. These results verify the efficiency of FOA, particularly for deployment on resource-constrained edge devices.

| Method | BP | \(BS\small{=}1\) | \(BS\small{=}4\) | \(BS\small{=}8\) | \(BS\small{=}16\) | \(BS\small{=}32\) | \(BS\small{=}64\) |
|---|---|---|---|---|---|---|---|
| NoAdapt | ✗ | 346 | 369 | 398 | 458 | 579 | 819 |
| TENT | ✓ | 426 | 648 | 948 | 1,550 | 2,756 | 5,165 |
| CoTTA | ✓ | 1,792 | 2,312 | 3,282 | 5,226 | 9,105 | 16,836 |
| FOA | ✗ | – | 372 | 402 | 464 | 587 | 832 |
| FOA (8-bit) | ✗ | – | 93 | 100 | 116 | 147 | 208 |
| | | \(BS\small{=}1\), but update prompt every \(I\) samples | | | | | |
| Method | BP | \(I\small{=}1\) | \(I\small{=}4\) | \(I\small{=}8\) | \(I\small{=}16\) | \(I\small{=}32\) | \(I\small{=}64\) |
| FOA-I V1 | ✗ | – | 352 | 356 | 373 | 406 | 473 |
| FOA-I V1 (8-bit) | ✗ | – | 88 | 89 | 93 | 102 | 118 |
| FOA-I V2 | ✗ | – | 351 | 353 | 358 | 368 | 388 |
| FOA-I V2 (8-bit) | ✗ | – | 88 | 88 | 89 | 92 | 97 |
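The memory reductions implied by the \(BS=64\) column of Table 3 can be worked out directly. The short calculation below only re-uses the reported numbers; the dictionary and helper names are illustrative.

```python
# Run-time memory in MB, taken from the BS = 64 column of Table 3.
memory_mb = {
    "NoAdapt": 819,
    "TENT": 5165,
    "CoTTA": 16836,
    "FOA": 832,
    "FOA (8-bit)": 208,
}

def reduction(baseline, method):
    """How many times less memory `method` uses than `baseline`."""
    return memory_mb[baseline] / memory_mb[method]

print(round(reduction("TENT", "FOA"), 1))          # 6.2
print(round(reduction("CoTTA", "FOA"), 1))         # 20.2
print(round(reduction("TENT", "FOA (8-bit)"), 1))  # 24.8
print(memory_mb["FOA"] - memory_mb["NoAdapt"])     # 13 MB overhead
```

The last ratio corresponds to the roughly 24-fold reduction of 8-bit FOA relative to full-precision TENT that is highlighted in the abstract.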

Computational Complexity Analyses. The primary computational demand of FOA stems from \(K\) (the population size in CMA) forward passes. Though FOA requires more forward passes than TENT, its independence from backward passes significantly reduces memory usage and potentially boosts overall efficiency. In Table 4, our Activation Shifting achieves almost the same efficiency as NoAdapt while outperforming T3A, i.e., 59.1% vs. 56.9% accuracy. Notably, our BP-free FOA (\(K\small{=}2\)) matches the accuracy of BP-based TENT with lower run time and memory, and FOA (\(K\small{=}6\)) further surpasses BP-based CoTTA in all aspects. Moreover, even at \(K\small{=}28\), FOA maintains much higher efficiency than augmentation-based methods like MEMO [24]. In FOA, \(K\) is a hyperparameter that one can adjust to trade off performance against efficiency. Here, we do not report MEMO’s accuracy and ECE, as it is too time-consuming and has lower accuracy than TENT per [12]. Note that TENT updates only norm layers, making it more efficient than full-model backpropagation.

| Method | BP | #FP | #BP | Avg. Acc. | Avg. ECE | GPU Time (s) | GPU Memory (MB) |
|---|---|---|---|---|---|---|---|
| NoAdapt | ✗ | 1 | 0 | 55.5 | 10.5 | 119 | 819 |
| T3A | ✗ | 1 | 0 | 56.9 | 26.8 | 235 | 957 |
| MEMO | ✓ | 65 | 64 | – | – | 40,428 | 11,058 |
| TENT | ✓ | 1 | 1 | 59.6 | 18.5 | 259 | 5,165 |
| SAR | ✓ | [1, 2] | [0, 2] | 62.7 | 7.0 | 517 | 5,166 |
| CoTTA | ✓ | 3 or 35 | 1 | 61.7 | 6.5 | 964 | 16,836 |
| Act. Shifting | ✗ | 1 | 0 | 59.1 | 12.7 | 120 | 821 |
| FOA (\(K\small{=}2\)) | ✗ | 2 | 0 | 59.6 | 9.7 | 255 | 830 |
| FOA (\(K\small{=}4\)) | ✗ | 4 | 0 | 60.9 | 5.8 | 497 | 830 |
| FOA (\(K\small{=}6\)) | ✗ | 6 | 0 | 62.7 | 4.6 | 740 | 830 |
| FOA (\(K\small{=}28\)) | ✗ | 28 | 0 | 66.3 | 3.2 | 3,386 | 832 |
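As a quick consistency check on the claim that the cost of FOA is dominated by \(K\) forward passes, the run times in Table 4 can be divided by \(K\). The snippet below only re-uses the reported numbers; the variable and function names are illustrative.

```python
# FOA rows of Table 4: population size K -> run time in seconds.
foa_time_s = {2: 255, 4: 497, 6: 740, 28: 3386}
noadapt_time_s = 119  # NoAdapt row: one forward pass per batch

def time_per_forward_pass(K):
    """Average run time attributable to each of the K forward passes."""
    return foa_time_s[K] / K

for K in foa_time_s:
    print(K, round(time_per_forward_pass(K), 1))
```

For every reported \(K\), the per-pass time stays between roughly 121 s and 128 s, close to the 119 s of NoAdapt, so the run time grows approximately linearly with \(K\) while the memory footprint stays nearly constant.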

Effects of Design Choice w.r.t. Learnable Parameters, Optimizer and Loss. From Table 5, directly replacing SGD with CMA for entropy-based TTA is infeasible, e.g., the average accuracy degrades from 55.5% to 0.1% (norm layers, exp5) and 44.9% (prompts, exp6). The reasons are that 1) CMA fails to handle ultra-high-dimensional optimization and 2) the entropy cannot provide stable learning signals and thus tends to result in collapsed trivial solutions. However, with our devised fitness function (Eqn. (5)) and learnable prompts, CMA performs effectively, surpassing the gradient-based TENT. Moreover, our proposed loss function achieves excellent performance in the context of SGD learning, e.g., a comparison between exp2 and TENT shows that the average accuracy improves significantly from 59.6% to 70.5%, underscoring the effectiveness of Eqn. (5).

| Method | Learnable Params | Optimizer | Loss | Acc. (\(\uparrow\)) | ECE (\(\downarrow\)) |
|---|---|---|---|---|---|
| NoAdapt | – | – | – | 55.5 | 10.5 |
| TENT | norm layers | SGD | entropy | 59.6 | 18.5 |
| exp1 | prompts | SGD | entropy | 50.7 | 18.4 |
| exp2 | norm layers | SGD | Eqn. (5) | 70.5 | 7.9 |
| exp3 | prompts | SGD | Eqn. (5) | 64.6 | 3.7 |
| exp4 | norm layers | CMA | Eqn. (5) | 0.1 | 5.8 |
| exp5 | norm layers | CMA | entropy | 0.1 | 99.0 |
| exp6 | prompts | CMA | entropy | 44.9 | 36.8 |
| Ours | prompts | CMA | Eqn. (5) | 65.4 | 3.3 |

5 Conclusion

In this paper, we aim to implement online test-time adaptation without backpropagation and without altering the model parameters. This greatly broadens the scope of TTA’s real-world applications, particularly in resource-limited scenarios such as smartphones and FPGAs, where backpropagation is often not feasible. To this end, we propose a test-time Forward-Only Adaptation (FOA) method. In FOA, we learn an input prompt online through a covariance matrix adaptation technique, paired with a designed unsupervised fitness function to provide stable learning signals. Moreover, we devise a “back-to-source” activation shifting scheme to directly shift the activations from the out-of-distribution domain to the source in-distribution domain, further boosting the adaptation performance. Extensive experiments on four large-scale out-of-distribution benchmarks with full-precision (32-bit) and quantized (8-bit/6-bit) models verify the superiority of FOA.

Impact Statements

This paper presents work whose goal is to advance the field of test-time adaptation for out-of-distribution generalization.

The societal impact of our work lies primarily in its potential to broaden the applicability of machine learning models in real-world scenarios, and facilitate their deployment on mobile computing, edge devices, and embedded systems, where power and processing capabilities are restricted. By making advanced machine learning more accessible to devices with lower specifications, our method can help democratize the benefits of AI technology.

Ethically, our work promotes more sustainable machine learning practices by reducing the computational overhead for model adaptation. This can contribute to lower energy consumption in machine learning deployments, aligning with environmental sustainability goals. Moreover, our method enables local model adaptation without the need to upload data to cloud servers, inherently enhancing data privacy and security.

6 Related Work

Test-Time Adaptation (TTA) is designed to enhance the performance of a model on unseen test data, which may exhibit distribution shifts, by directly learning from the test data itself. We categorize the related TTA works into the following two groups for discussion, differentiated by their dependence on backward propagation.

\(\bullet\) Backpropagation (BP)-Free TTA. In the early development of BP-free TTA, attention was primarily given to adjusting batch normalization (BN) layer statistics by computing mean and variance from testing data [16], [40]. This method, however, assumes multiple test samples for each prediction. To address this, later studies proposed single-sample BN adaptation techniques, such as using data augmentation [17] or mixing training and testing statistics [18], [41]. In addition to BN adaptation, other methodologies have been explored and shown to be effective, such as prototype-based classifier adjustment [13], predicted logits correction [19], diffusion-based input image adaptation [42] and test-time batch renormalization [43]. However, since BP-free TTA does not update the core model parameters, it might exhibit limited learning capabilities, often resulting in suboptimal performance when dealing with out-of-distribution testing data. Therefore, BP-based TTA emerged as an effective solution to significantly boost the out-of-distribution generalization performance.

\(\bullet\) Backpropagation-Based TTA. One pioneering work of BP-based TTA is Test-Time Training (TTT) [11]. TTT methods first train a source model using both supervised and self-supervised objectives, and then adapt the model at test time with the self-supervised objective, such as rotation prediction [11], contrastive learning [14], [23], or reconstruction learning [44], [45]. To avoid altering the model training process and accessing source data, Fully TTA methods update any given model via unsupervised learning objectives, such as entropy minimization [12], [20], [46], prediction consistency maximization [24], [47], [48] and feature distribution alignment [49], [50].

However, BP-based TTA methods typically require multiple backward propagations for each test sample, leading to computational inefficiency. To tackle this, recent works [12], [37], [51], [52] have proposed selecting confident or reliable samples for test-time model learning. This strategy significantly reduces the number of backward passes needed for an entire set of testing data, thereby enhancing adaptation efficiency and performance. Moreover, MECTA [53] proposes a series of techniques to reduce the run-time memory consumption of BP-based TTA, including reducing the batch size and stopping the BP caching heuristically. Nonetheless, these TTA methods still depend on BP, which poses challenges for resource-constrained edge devices like smartphones and FPGAs, especially those with quantized models. These devices often have limited memory and may not support backpropagation, thus hindering the broad real-world applications of BP-based TTA methods. In this context, we introduce a forward-only test-time adaptation method, aiming to achieve better performance than BP-based TTA methods but without using any backward propagation.

Derivative-Free Learning (DFL) achieves optimization solely through evaluating the function values \(f({\boldsymbol{x}})\) at sampled solutions \({\boldsymbol{x}}\), with minimal assumptions about the objective function (can be non-convex or black-box) and without the need for explicit derivatives. We review representative DFL methods below.

\(\bullet\) Evolutionary Algorithms. Covariance Matrix Adaptation Evolution Strategy (CMA-ES) [28] is one of the most successful evolutionary approaches. It updates a covariance matrix of the multivariate normal distribution used to sample new solutions and effectively learns a second-order model of the objective function, similar to approximating the inverse Hessian matrix in classical optimization. Based on CMA-ES, several recent works [54]–[56] have been proposed to improve CMA-ES’s time and memory complexity.

Nevertheless, CMA-ES still faces challenges in handling extremely high-dimensional optimization problems, such as deep model optimization. Recently, some efforts [57], [58] have been made to adapt CMA algorithms for the scenario of Model-as-Service applications in language and vision-language models. However, these methods run on a pre-collected annotated dataset for a specific downstream task, and perform supervised optimization offline. In contrast, our approach adapts a given model to out-of-distribution testing data in an online and unsupervised manner.

Figure 4: Visualizations of images in ImageNet and ImageNet-C/V2/R/Sketch, which are directly taken from their original papers.

\(\bullet\) Zeroth-Order Optimization methods estimate gradients by comparing the loss values of two forward passes [59]–[61], in which the model weights used in the second forward pass are altered from that used in the first forward pass through a random perturbation. One of the recent works [62] introduced this optimization scheme in fine-tuning large language models and demonstrated its effectiveness. However, these methods still operate in an offline setting with few-shot supervised samples and are theorized to have slow convergence for optimizing large models [62], which are not directly compatible with our online unsupervised TTA setting. Thus, in our FOA, we adopt the evolution-based CMA-ES method for prompt optimization. However, it is important to note that Zeroth-Order optimization also presents a promising avenue for developing backpropagation-free TTA methods. Exploring this potential is an objective we aim to pursue in the future.
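The two-forward-pass idea can be sketched as follows. This is a generic forward-difference estimator along one random direction, written as an illustration of the description above rather than the exact estimator of any cited work; the function names and the toy quadratic loss are assumptions.

```python
import random

def zo_gradient(loss_fn, weights, eps, rng):
    """Two-forward-pass gradient estimate along one random direction."""
    z = [rng.gauss(0.0, 1.0) for _ in weights]
    base = loss_fn(weights)                          # first forward pass
    perturbed = loss_fn([w + eps * d
                         for w, d in zip(weights, z)])  # second forward pass
    scale = (perturbed - base) / eps                 # directional derivative
    return [scale * d for d in z]

def quadratic(w):
    """Toy loss with true gradient 2 * w."""
    return sum(x * x for x in w)

rng = random.Random(0)
w = [1.0, -2.0, 0.5]
num_estimates = 2000
avg = [0.0] * len(w)
for _ in range(num_estimates):
    g = zo_gradient(quadratic, w, 1e-3, rng)
    avg = [a + gi / num_estimates for a, gi in zip(avg, g)]
print(avg)  # approaches the true gradient [2.0, -4.0, 1.0] on average
```

A single estimate is very noisy, and many estimates have to be averaged before the true gradient emerges. This is in line with the slow convergence noted above for large models.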

Activation Editing methods directly modify the internal activation representations of a model to control the final output [63], [64], which has recently been explored in the field of language models. For example, [64] and [65] exploit “steering vectors” for style transfer and [66] adjusts the attention heads to improve the truthfulness of large language models. In this work, inspired by this general idea, we propose a back-to-source activation shifting mechanism that adjusts the model’s activations online to enhance generalization to out-of-distribution testing data.

7 More Implementation Details

7.1 More Details on Dataset

In this paper, we conduct experiments on four ImageNet [67] variants to evaluate the out-of-distribution generalization ability, i.e., ImageNet-C [1], ImageNet-R [32], ImageNet-V2 [33], and ImageNet-Sketch [34].

ImageNet-C consists of various versions of corruption applied to 50,000 validation images from ImageNet. The dataset encompasses 15 distinct corruption types of 4 main groups, including Gaussian noise, shot noise, impulse noise, defocus blur, glass blur, motion blur, zoom blur, snow, frost, fog, brightness, contrast, elastic transformation, pixelation, and JPEG compression. Each corruption type is characterized by 5 different levels of severity, with higher severity levels indicating a more severe distribution shift. In our experiments, we specifically utilize severity level 5 for evaluation.

ImageNet-R contains 30,000 images featuring diverse artistic renditions of 200 ImageNet classes. These images are predominantly sourced from Flickr and subjected to filtering by Amazon MTurk annotators.

ImageNet-V2 is a newly collected test dataset extracted from the same test distribution as ImageNet. It comprises three test sets, each containing 10,000 new images and covering 1000 ImageNet classes. Following previous TTA methods [40], we utilize the Matched Frequency subset of ImageNet-V2 for evaluation, in which the images are sampled to match the class frequency distributions of the original ImageNet validation dataset.

ImageNet-Sketch consists of 50,899 images represented as black and white sketches, encompassing 1000 ImageNet classes. Each class contains approximately 50 images.

7.2 More Evaluation Protocols

We use ViT-Base [29] as the source model backbone for all experiments. The model is trained on the source ImageNet-1K training set and the model weights\(^{1}\) are directly obtained from the timm\(^{2}\) repository [35]. We adopt PTQ4ViT\(^{3}\) [36] for 8-bit and 6-bit model quantization with 32 randomly selected samples from the training set. We introduce the implementation details of all involved methods in the following.

FOA (Ours). For the default configuration of hyperparameters, the number of prompt embeddings \(N_p\) is set to 3 using uniform initialization. We use CMA-ES\(^{4}\) as the update rule, with a batch size \(BS\) of 64 and a population size \(K\) of \(28=4+3\times\log(\text{prompt dim})\), following [28]. The \(\lambda\) in Eqn. (5) is set to 0.4\(\times BS/64\) on ImageNet-C/V2/Sketch, and 0.2\(\times BS/64\) on ImageNet-R to balance the magnitude of the two losses. We use the validation set of ImageNet-1K to estimate source training statistics. The moving average factor \(\alpha\) in Eqn. (9) is set to 0.1. The step size \(\gamma\) in Eqn. (7) is set to 1. For updating prompts and normalization layers with the SGD optimizer in Table 5 with Eqn. (5), the entropy loss is divided by the batch size of 64 and \(\lambda\) is set to 30 so that the two losses have similar magnitude. We investigate the effects of these hyperparameters with different setups in Section 4.3 and Appendix 8. The source in-distribution statistics \(\{\boldsymbol{\mu}_i^S, {\boldsymbol{\sigma}}_i^S\}_{i=0}^N\) are calculated without using the newly inserted prompt.
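For reference, the default settings stated above can be gathered into a small configuration, together with the batch-size scaling of \(\lambda\). The dictionary keys and the function name are hypothetical; only the numerical values come from the text.

```python
# Default FOA hyperparameters as stated in the text (key names are illustrative).
FOA_DEFAULTS = {
    "num_prompts": 3,        # N_p
    "batch_size": 64,        # BS
    "population_size": 28,   # K
    "ema_alpha": 0.1,        # alpha in Eqn. (9)
    "shift_step_size": 1.0,  # gamma in Eqn. (7)
}

# Base value of lambda in Eqn. (5) at BS = 64, per dataset.
BASE_LAMBDA = {
    "ImageNet-C": 0.4,
    "ImageNet-V2": 0.4,
    "ImageNet-Sketch": 0.4,
    "ImageNet-R": 0.2,
}

def fitness_lambda(dataset, batch_size):
    """lambda = base * BS / 64, so it scales linearly with the batch size."""
    return BASE_LAMBDA[dataset] * batch_size / 64

print(fitness_lambda("ImageNet-C", 64))  # 0.4
print(fitness_lambda("ImageNet-R", 32))  # 0.1
```

Scaling \(\lambda\) with the batch size keeps the two terms of the fitness at a comparable magnitude when the batch size is changed.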

LAME\(^{5}\) [19]. For a fair comparison, we maintain a consistent batch size of 64 for LAME, the same batch size used by the other methods in our evaluation. We use the kNN affinity matrix with the value of k chosen from {1, 5, 10, 20}, and for all experiments, we consistently set it to 5 based on the optimal accuracy observed on ImageNet-C.

T3A\(^{6}\) [13]. We follow all hyperparameters set in T3A unless they are not provided. Specifically, the batch size is set to 64. The number of supports to restore \(M\) is chosen from {1, 5, 20, 50, 100}, and for all experiments, we consistently set it to 20 based on the optimal accuracy observed on ImageNet-C.

TENT\(^{7}\) [20]. We follow all hyperparameters set in TENT unless they are not provided. Specifically, we use SGD as the update rule, with a momentum of 0.9, a batch size of 64 and a learning rate of 0.001. The trainable parameters are all affine parameters of layer normalization layers (except for the experiments in Table 5). For updating prompts with the SGD optimizer in Table 5 with entropy loss, the learning rate is set to 0.01 and the number of inserted prompts is set to 3.

SAR\(^{8}\) [12]. We follow all hyperparameters set in SAR unless they are not provided. Specifically, we use SGD as the update rule, with a momentum of 0.9, a batch size of 64 and a learning rate of 0.001. The entropy threshold \(E_0\) is set to 0.4\(\times\ln{C}\), where \(C\) is the number of task classes. The trainable parameters are the affine parameters of the layer normalization layers from blocks1 to blocks8 for ViT-Base.
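As a worked example of the threshold \(E_0 = 0.4\times\ln C\): since \(\ln C\) is the entropy of a uniform prediction over \(C\) classes, \(E_0\) corresponds to 40% of the maximum possible entropy. The function name below is illustrative, and \(C=1000\) is used because the model is trained on ImageNet-1K.

```python
import math

def entropy_threshold(num_classes, factor=0.4):
    """E_0 = factor * ln(C), i.e. a fraction of the maximum entropy ln(C)."""
    return factor * math.log(num_classes)

print(round(entropy_threshold(1000), 3))  # 2.763
```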

CoTTA\(^{9}\) [37]. We follow all hyperparameters set in CoTTA unless they are not provided. Specifically, we use SGD as the update rule, with a momentum of 0.9, a batch size of 64 and a learning rate of 0.05. The augmentation threshold \(p_{th}\) is set to 0.1. For images below the threshold, we conduct 32 augmentations including color jitter, random affine, Gaussian blur, random horizontal flip, and Gaussian noise. The restoration probability is set to 0.01 and the EMA factor \(\alpha\) for the teacher update is set to 0.999. The trainable parameters are all the parameters in ViT-Base.

8 More Ablation Studies

Effects of Trade-off Parameter \(\lambda\) in Eqn. (5). In the main paper, we simply set the trade-off parameter \(\lambda\) in our fitness function (see Eqn. (5)) to 0.4 to balance the magnitude of the two terms. Here, we further investigate the sensitivity to \(\lambda\), selected from {0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 1.0}. From Table 6, FOA maintains comparable accuracy across different values of \(\lambda\) (between 61.1% and 61.8%), highlighting its insensitivity to changes in \(\lambda\). Nevertheless, FOA achieves better overall performance with \(\lambda\) selected from \(\{0.3, 0.4, 0.5\}\) in terms of both accuracy and ECE. The excellent performance in this range can be attributed to the balanced magnitude of the two terms in Eqn. (5).

| Metric | \(\lambda=0.1\) | \(\lambda=0.2\) | \(\lambda=0.3\) | \(\lambda=0.4\) | \(\lambda=0.5\) | \(\lambda=0.6\) | \(\lambda=0.7\) | \(\lambda=0.8\) | \(\lambda=0.9\) | \(\lambda=1.0\) |
|---|---|---|---|---|---|---|---|---|---|---|
| Acc. (%, \(\uparrow\)) | 61.1 | 61.8 | 61.7 | 61.5 | 61.3 | 61.4 | 61.5 | 61.3 | 61.2 | 61.2 |
| ECE (%, \(\downarrow\)) | 5.9 | 3.2 | 2.6 | 2.5 | 2.6 | 2.9 | 3.3 | 3.8 | 4.0 | 4.4 |

| Metric | Method | NoAdapt | \(BS=1\) | \(BS=2\) | \(BS=4\) | \(BS=8\) | \(BS=16\) | \(BS=32\) | \(BS=64\) |
|---|---|---|---|---|---|---|---|---|---|
| Acc. (%, \(\uparrow\)) | Act. Shifting w/o EMA | 55.5 | 0.1 | 56.9 | 58.4 | 58.8 | 59.0 | 59.1 | 59.1 |
| Acc. (%, \(\uparrow\)) | Act. Shifting with EMA (Ours) | 55.5 | 59.0 | 59.1 | 59.1 | 59.1 | 59.1 | 59.1 | 59.2 |
| ECE (%, \(\downarrow\)) | Act. Shifting w/o EMA | 10.5 | 0.0 | 52.4 | 37.5 | 24.5 | 18.0 | 15.1 | 13.8 |
| ECE (%, \(\downarrow\)) | Act. Shifting with EMA (Ours) | 10.5 | 12.7 | 12.7 | 12.7 | 12.7 | 12.7 | 12.7 | 12.7 |

| Dataset | Metric | \(\beta=0.1\) | \(\beta=0.2\) | \(\beta=0.3\) | \(\beta=0.4\) | \(\beta=0.5\) | \(\beta=0.6\) | \(\beta=0.7\) | \(\beta=0.8\) | \(\beta=0.9\) | \(\beta=1.0\) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| ImageNet-C | Acc. (%, \(\uparrow\)) | 61.0 | 61.4 | 61.5 | 61.4 | 61.5 | 61.7 | 61.7 | 61.2 | 61.9 | 61.5 |
| ImageNet-C | ECE (%, \(\downarrow\)) | 9.5 | 7.2 | 4.7 | 4.4 | 3.8 | 3.1 | 3.1 | 3.0 | 3.6 | 2.5 |
| ImageNet-R | Acc. (%, \(\uparrow\)) | 47.8 | 56.8 | 63.1 | 63.4 | 62.6 | 63.0 | 63.2 | 64.2 | 63.0 | 63.8 |
| ImageNet-R | ECE (%, \(\downarrow\)) | 19.2 | 8.4 | 2.8 | 2.8 | 3.0 | 2.8 | 2.8 | 2.4 | 3.1 | 2.7 |

| | | Noise | | | Blur | | | | Weather | | | | Digital | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Method | BP | Gauss. | Shot | Impul. | Defoc. | Glass | Motion | Zoom | Snow | Frost | Fog | Brit. | Contr. | Elas. | Pix. | JPEG | Avg. |
| NoAdapt | ✗ | 7.5 | 4.6 | 6.6 | 6.5 | 6.2 | 2.6 | 5.0 | 4.7 | 19.7 | 49.2 | 8.6 | 19.3 | 6.0 | 5.0 | 6.2 | 10.5 |
| LAME | ✗ | 6.5 | 3.6 | 5.6 | 5.1 | 9.4 | 2.2 | 6.2 | 5.6 | 18.1 | 46.3 | 7.7 | 29.0 | 10.6 | 3.9 | 5.0 | 11.0 |
| T3A | ✗ | 29.6 | 30.0 | 29.6 | 31.1 | 42.0 | 32.1 | 37.1 | 25.7 | 26.2 | 14.7 | 16.6 | 6.1 | 35.0 | 24.4 | 22.5 | 26.8 |
| TENT | ✓ | 13.7 | 13.0 | 12.9 | 14.7 | 15.9 | 12.7 | 15.3 | 24.9 | 12.1 | 93.5 | 6.0 | 10.7 | 14.4 | 8.7 | 9.3 | 18.5 |
| CoTTA | ✓ | 4.2 | 2.9 | 4.4 | 7.2 | 12.8 | 7.1 | 11.6 | 4.1 | 0.9 | 5.1 | 3.2 | 15.9 | 8.1 | 5.1 | 5.4 | 6.5 |
| SAR | ✓ | 7.9 | 7.4 | 7.3 | 9.0 | 9.5 | 7.7 | 9.7 | 6.1 | 6.0 | 9.2 | 2.3 | 7.5 | 7.0 | 4.3 | 4.4 | 7.0 |
| FOA (ours) | ✗ | 2.5 | 2.4 | 2.5 | 3.4 | 3.3 | 3.0 | 4.0 | 3.2 | 3.3 | 4.8 | 3.0 | 3.4 | 3.2 | 3.0 | 2.8 | 3.2 |

Effects of Exponential Moving Average (EMA) in Eqn. (9). In Table 7, we investigate the effectiveness of EMA in Eqn. (9), which is designed to estimate the center of activation features of OOD testing samples accurately. The results show that without Eqn. (9), Activation (Act.) Shifting suffers from notable performance degradation in both accuracy and ECE, particularly when using small batch sizes (e.g., \(BS<8\)) where batch statistics are less accurate. In contrast, incorporating EMA ensures stable performance even with a batch size of 1, suggesting its effectiveness.
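The role of the EMA can be illustrated with a minimal sketch of activation shifting. Eqns. (7) and (9) are defined in the main paper and are not reproduced here, so the exact update below (a running test-domain mean with weight \(\alpha\) on the current batch, and a shift of \(\gamma\) times the gap to the source mean) is an assumption, as are the class name and the initialization with the first batch.

```python
class ActivationShifter:
    """Shift features toward the source mean using an EMA test-domain mean."""

    def __init__(self, source_mean, alpha=0.1, gamma=1.0):
        self.source_mean = source_mean
        self.alpha = alpha      # moving average factor (assumed on batch mean)
        self.gamma = gamma      # step size of the shift
        self.test_mean = None   # running estimate of the test-domain center

    def update(self, batch_features):
        """Update the running test-domain mean with one batch."""
        n = len(batch_features)
        batch_mean = [sum(f[d] for f in batch_features) / n
                      for d in range(len(self.source_mean))]
        if self.test_mean is None:  # assumption: initialize with first batch
            self.test_mean = batch_mean
        else:
            self.test_mean = [self.alpha * b + (1 - self.alpha) * m
                              for b, m in zip(batch_mean, self.test_mean)]

    def shift(self, feature):
        """Move one feature by gamma * (source mean - estimated test mean)."""
        return [x + self.gamma * (s - m)
                for x, s, m in zip(feature, self.source_mean, self.test_mean)]

shifter = ActivationShifter(source_mean=[0.0, 0.0])
shifter.update([[2.0, 2.0]])   # batch size 1
shifter.update([[4.0, 4.0]])   # running mean becomes 0.1 * 4 + 0.9 * 2 = 2.2
print(shifter.shift([3.0, 3.0]))
```

Under this assumed form, using the batch mean alone with a batch size of 1 and \(\gamma=1\) would map every sample exactly onto the source mean and discard its content. This is one plausible reading of the collapse at \(BS=1\) without EMA in Table 7; the running mean avoids it by decoupling the shift from any single sample.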

Effects of Exponential Moving Average (EMA) in Calculating Eqn. (5). In the main paper, we consistently use the batch statistics \({\boldsymbol{\mu}}_i({\mathcal{X}}_{t})\) and \(\boldsymbol{\sigma}_i({\mathcal{X}}_{t})\) to calculate the Activation Discrepancy fitness. In Table 8, we further investigate the effects of using EMA to estimate the overall test set statistics for the fitness function calculation. The results indicate a degradation in performance when the balance factor \(\beta\) is notably small. This decline is attributed to a biased objective, which encourages batch statistics to compensate for alignment errors in historical overall statistics rather than converging towards the source in-distribution statistics. In contrast, using \({\boldsymbol{\mu}}_i({\mathcal{X}}_{t})\) and \(\boldsymbol{\sigma}_i({\mathcal{X}}_{t})\) (i.e., \(\beta=1.0\)) for Eqn. (5) achieves remarkable performance on both ImageNet-C and ImageNet-R, without requiring an additional hyperparameter.

9 More Experimental Results

More Results w.r.t. ECE. In Tables [tab:imagenet-c-full-precision] and [tab:imagenet-rv2asketch-full-precision] of the main paper, we only report the average ECE due to page limits. In this subsection, we provide detailed ECEs for both full-precision and quantized models. From Tables [suppl:tab:imagenet-c-full-precision-ece] and [suppl:tab:quantized_results-ece], our FOA outperforms the state-of-the-art methods on most corruptions and achieves a much lower average ECE, e.g., 3.2% vs. 7.0% compared with SAR on full-precision ViT-Base and 3.8% vs. 25.9% compared with T3A on 8-bit quantized ViT-Base. These results demonstrate the consistent effect of our FOA in mitigating the calibration error, further highlighting its effectiveness. Here, the excellent ECEs achieved by FOA mainly originate from the activation discrepancy regularization term in Eqn. (5), which alleviates the error accumulation issue of prior gradient-based TTA methods (e.g., TENT [20] and SAR [12]) that may employ imprecise pseudo labels or entropy for test-time model updating.

| | | Noise | | | Blur | | | | Weather | | | | Digital | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Model | Method | Gauss. | Shot | Impul. | Defoc. | Glass | Motion | Zoom | Snow | Frost | Fog | Brit. | Contr. | Elas. | Pix. | JPEG | Avg. |
| 8-bit | NoAdapt | 8.3 | 5.7 | 7.5 | 7.9 | 4.9 | 3.0 | 4.4 | 5.5 | 20.7 | 50.0 | 10.4 | 14.1 | 5.2 | 6.2 | 8.3 | 10.8 |
| 8-bit | T3A | 30.0 | 26.0 | 25.7 | 28.7 | 41.9 | 31.2 | 40.1 | 27.2 | 21.6 | 9.6 | 15.8 | 5.7 | 40.7 | 23.1 | 21.0 | 25.9 |
| 8-bit | FOA (ours) | 2.8 | 3.4 | 2.9 | 3.5 | 3.5 | 3.1 | 4.3 | 3.6 | 3.9 | 5.6 | 3.9 | 5.0 | 3.6 | 3.9 | 3.1 | 3.8 |
| 6-bit | NoAdapt | 10.1 | 7.0 | 10.5 | 5.8 | 4.2 | 4.2 | 4.5 | 2.8 | 18.0 | 32.5 | 12.4 | 17.3 | 4.2 | 6.6 | 7.8 | 9.9 |
| 6-bit | T3A | 31.3 | 27.3 | 26.2 | 43.2 | 50.4 | 35.6 | 49.3 | 33.9 | 22.8 | 14.6 | 16.9 | 7.7 | 44.2 | 26.6 | 22.2 | 30.1 |
| 6-bit | FOA (ours) | 5.6 | 4.9 | 6.7 | 3.1 | 2.4 | 5.0 | 3.5 | 6.6 | 7.1 | 7.0 | 6.0 | 7.7 | 3.3 | 5.8 | 7.7 | 5.5 |
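For readers less familiar with the metric reported in these tables, the following is a minimal sketch of the commonly used binned estimate of the expected calibration error (ECE). The number of bins and the equal-width binning convention are assumptions, since the evaluation settings are not restated in this section; the function name is illustrative.

```python
def expected_calibration_error(confidences, correct, num_bins=15):
    """Binned ECE: weighted average of |accuracy - confidence| per bin.

    confidences: predicted probability of the chosen class, in [0, 1].
    correct: booleans indicating whether each prediction was right.
    """
    n = len(confidences)
    bins = [[] for _ in range(num_bins)]
    for conf, ok in zip(confidences, correct):
        idx = min(int(conf * num_bins), num_bins - 1)  # equal-width bins
        bins[idx].append((conf, ok))
    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        acc = sum(1 for _, ok in members if ok) / len(members)
        ece += len(members) / n * abs(acc - avg_conf)
    return ece

# Four predictions made with 90% confidence, of which three are correct.
print(expected_calibration_error([0.9] * 4, [True, True, True, False]))
```

In this toy case the model is over-confident by 15 percentage points (90% confidence vs. 75% accuracy), so the estimate is approximately 0.15. An ECE of 3.2% therefore indicates that confidence and accuracy differ by only a few points on average.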