BERT-Enhanced Retrieval Tool for Homework Plagiarism Detection System

April 01, 2024

Abstract

Text plagiarism detection task is a common natural language processing task that aims to detect whether a given text contains plagiarism or copying from other texts. In existing research, detection of high level plagiarism is still a challenge due to the lack of high quality datasets. In this paper, we propose a plagiarized text data generation method based on GPT-3.5, which produces 32,927 pairs of text plagiarism detection datasets covering a wide range of plagiarism methods, bridging the gap in this part of research. Meanwhile, we propose a plagiarism identification method based on Faiss with BERT with high efficiency and high accuracy. Our experiments show that the performance of this model outperforms other models in several metrics, including 98.86%, 98.90%, 98.86%, and 0.9888 for Accuracy, Precision, Recall, and F1 Score, respectively. At the end, we also provide a user-friendly demo platform that allows users to upload a text library and intuitively participate in the plagiarism analysis.

1 Introduction↩︎

You are a teacher who is responsible for detecting plagiarism in assignments submitted by students. Plagiarism in assignments can be categorised into three types[1]:

word-by-word plagiarism: It’s the equivalent of copying;

Imitation plagiarism: Plagiarizing by rewriting words and grammar;

Creative plagiarism: Plagiarism is achieved by reusing and rewriting original ideas from the source text;

Obviously, for simple plagiarism like word-by-word plagiarism, we can detect it by some simple methods such as keyword search. For Imitation plagiarism and Creative plagiarism, two plagiarisms that are more difficult to recognize, fine-tuned Large Language Models (LLMs) are generally used for detection.

LLMs process a large amount of textual data in the pre-training phase, including a variety of literary works, academic papers, and web content. This enables them to learn and understand a variety of linguistic expressions, including different writing styles and language structures. As a result, they can recognize texts that have undergone significant changes at the lexical and grammatical levels. For example, during word embedding, the BERT model takes into account the context to the left and right of a given word in a sentence. This bi-directional contextual understanding allows the model to better capture the deeper semantics of the sentence.

Reasoning with LLMs consumes a lot of computational resources. Therefore, we need a fast retrieval method to filter out superficially similar texts that may involve plagiarism. FAISS[2] is a good choice for storing and retrieving embedding vectors for BERT.

Then, we can splice the retrieved results and let it go through an MLP to derive the probability of plagiarism occurrence.

However, the fact is that it is difficult to obtain a real dataset of plagiarized assignments. The reason is simple: no child who plagiarizes homework wants to be caught by the teacher.

Fortunately, the development of large-scale language models (LLMs) such as GPT-3.5 has opened up new avenues for natural language processing, especially in terms of data augmentation for low-resource classification tasks. So, we used Prompt to let GPT-3.5 plagiarize some AI papers on arxiv, and came up with a plagiarism compartmentalized diverse dataset.

Finally, we trained a model for identifying plagiarism categories and it performed far better than other models on this dataset.

These are some of our main contributions:

Dataset: We used GPT-3.5 to generate a plagiarized corpus with diverse plagiarism styles;

Accurate and efficient plagiarism identification: We propose a plagiarism identification framework to quickly and accurately retrieve and determine the type of plagiarism;

Our demo: We built a simple demo where users are able to upload text libraries and perform plagiarism analysis in a visual way;

2 Related works↩︎

2.1 Detection Tool↩︎

In the context of plagiarism detection using language models, we consider the BERT model as a black box. For initial screening, FAISS is used to identify potential plagiarized texts. These candidate texts are then input into the BERT model for a more in-depth semantic analysis to determine if plagiarism exists between two target texts.

FAISS provides crucial support in the preliminary screening of assignment submissions, employing efficient similarity search algorithms. Its GPU-accelerated searches optimize k-nearest neighbors searches based on L2 distance, essential for handling large-scale datasets.[2] FAISS’s product quantization code enhances this process, offering a more effective and memory-efficient method than binary codes. Its IVFADC index structure is particularly adept at managing large datasets, aligning well with the preliminary screening requirements in plagiarism detection tasks.

Sentence BERT, especially in its MPNet-based improved version[3], marks a significant advancement in natural language processing. It offers profound semantic understanding of text representation, which is key for identifying not only direct plagiarism but also complex rewrites and subtle textual modifications. Initially used for sentence-level comparisons, SentenceBERT’s capability to recognize deep semantic relationships within texts is crucial for the detailed analysis of cases flagged by FAISS. In its updated version, SentenceBERT also excels in generating semantic vectors for longer paragraph-level texts. In our research, SentenceBERT is used to generate semantic segments of FAISS assignment vectors during the preliminary screening phase. The BERT model is then fine-tuned on our dataset, enhancing its effectiveness in downstream tasks like plagiarism detection.

2.2 Dataset Construction↩︎

The majority of datasets constructed for plagiarism detection research employ a binary labeling approach for samples, categorizing them as either plagiarized or not, as discussed by Alvi et al., (2021)[4].

However, varying degrees of plagiarism are evident in different samples, depending on the methods used (such as synonym replacement, grammatical structure alteration, semantic sequence modification) and the frequency of these modifications. Clough et al., (2011)[1] created a plagiarism corpus reflecting different degrees of plagiarism, offering a model for how to categorize plagiarized samples in dataset construction. Their dataset, with approximately 500 samples, is available for download here (https://github.com/josecruzado21/plagiarism_detection).

Mehrafarin et al.,(2022)[5] examined the required dataset size for fine-tuning the BERT model, finding in experiments across five different classification tasks that beyond a dataset size of 7k, the cost-benefit ratio of enhancing model performance by increasing dataset size diminishes. This suggests that an appropriately sized dataset should be several thousand samples or more. Therefore, a larger classified dataset is needed. Due to ethical and legal considerations, methods for obtaining plagiarism corpus datasets are almost exclusively limited to machine construction and manual simulation, as noted by Alvi et al., (2021)[4].

The work of Møller et al., (2023)[6] demonstrates the feasibility of using GPT-4 for dataset construction, highlighting that for more complex tasks, designing intricate prompts allows GPT-4 constructed datasets to rival those created by humans. The study by Bsharat et al., (2023)[7] in December 2023 summarized 26 principles for writing GPT prompts, while Zhou et al., (2022) updated and summarized comprehensive text paraphrasing studies related to plagiarism, including 24 specific paraphrasing techniques. This provides detailed guidance for instructing GPT on how to paraphrase.

3 Data description↩︎

Based on Professor Li Mu’s recommendation, we downloaded PDF files of 78 AI papers from ARXIV. Through manual screening, we extracted an average of 60 text fragments for each paper (excluding parts such as figures, related work and appendices). Considering the 512-tokens limit of SBERT, we segmented the excessively long segments, and finally nearly 5,000 segments of text were used as the raw data for this work. (https://arxiv.org/)

4 Method↩︎

4.1 GPT-3.5-based data enhancement↩︎

Here, we have used five methods to enhance the data. Among them are negative sampling, chaotic rearrangement and three Prompt-based GPT-3.5 enhancement schemes.

The data were categorised into five types according to different methods. Specifically, these types include "plagiarism", which involves only a change in sentence order; "minor revision", which involves synonym substitution; "moderate revision", which combines synonym substitution and a change in grammatical structure; "viewpoint plagiarism", which involves synonym substitution, a change in the order of grammar and information, and stylistic modifications; and "no plagiarism", which involves texts from different authors on the same topic. The ‘plagiarism’ and ‘no plagiarism’ levels were compiled using an automated method, while the ‘mild’, ‘moderate’ and ‘opinion’ plagiarism categories were compiled using the GPT-3.5 API.

Eventually, we arrive at 32,927 text pairs of the form \((t_1,t_2,label)\). Where \(t_1\) is the original text, \(t_2\) is the text to be detected, and \(label\) denotes the type of plagiarism of \(t_2\) on \(t_1\).

Here, \(label\) has three values, which are:

No Plagiarism: Generated by negative sampling;

Imitation Plagiarism: Generated through GPT-3.5;

Shuffle Plagiarism: Generated through random disruption;

A more detailed Prompt methodology can be found in the Appendix.

4.2 Text plagiarism identification model↩︎

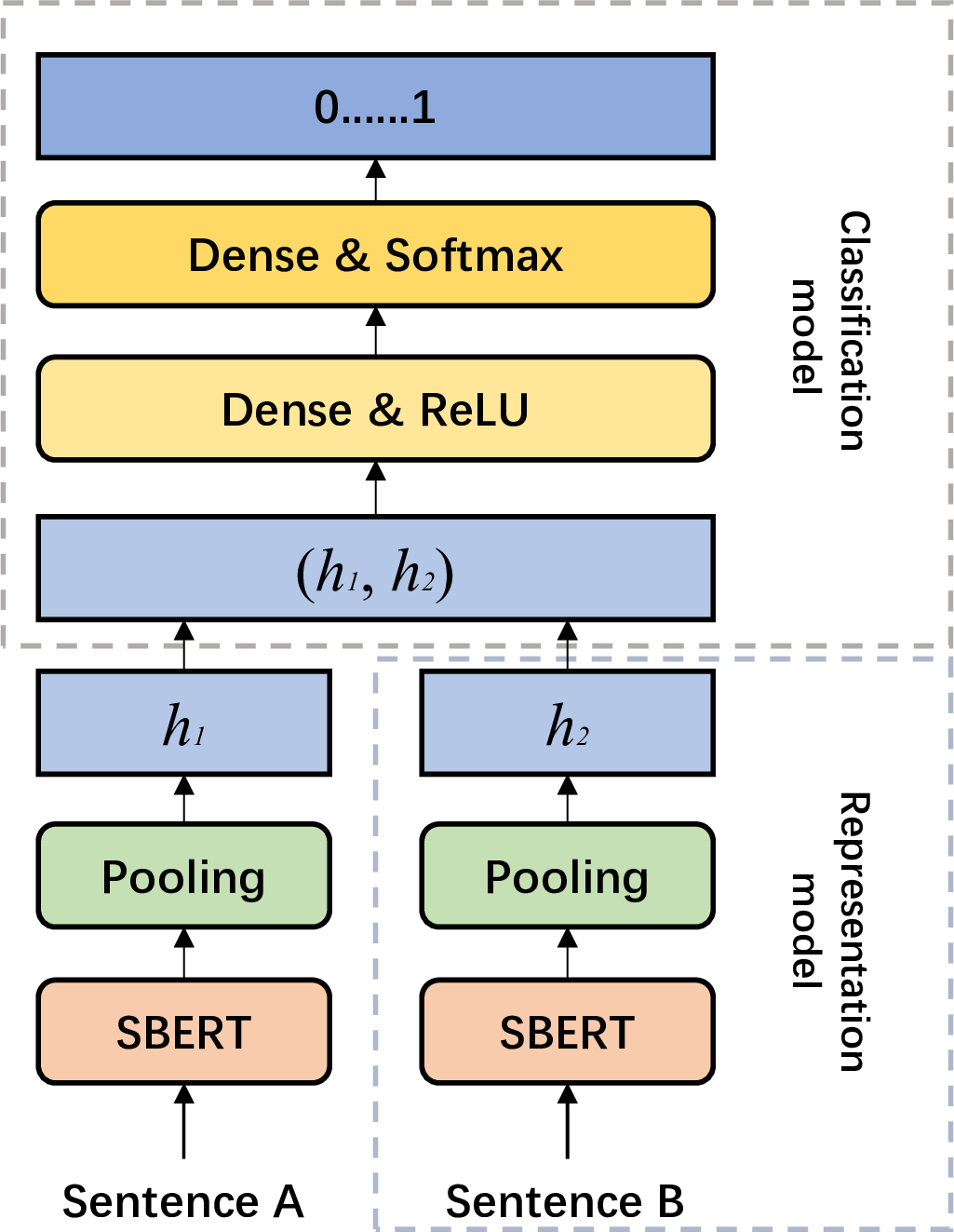

Our model is divided into two parts, the feature representation module and the classifier. See Figure 1 for details.

Figure 1: Our model chart

4.2.1 SBERT-based text feature representation↩︎

SBERT (Sentence-BERT) is an approach for textual feature representation based on an improved version of the BERT (Bidirectional Encoder Representations from Transformers) model specifically designed for generating embedding vectors at the sentence level.

The advantage of SBERT is that by encoding text as high-quality embedding vectors, it can provide better performance in a variety of natural language processing tasks.

Here, SBERT will act as a text feature extractor (Representation Model) to transform the input text \(t\) into a high latitude vector \(h \in R^{n}\) for subsequent plagiarism identification tasks. See equation (1)(2)(3) for details. \[\tilde{t}=tokenizer\left( t \right) \\ \label{eq:representation-model-tokenizer}\tag{1}\] \[e=embedding\left( \tilde{t} \right) \label{eq:representation-model-embedding}\tag{2}\] \[h=Pool_{[CLS]}\left( SBERT\left( e \right) \right) \label{eq:representation-model-pool}\tag{3}\]

where \(t\) is the input text, \(\tilde{t}\) is the result after tokeniser, \(e\) is the word embedding result and \(h\) is the [CLS] value of SBERT.

For ease of subsequent representation and understanding, the feature representation model is denoted as \(model_r\), where the input is \(t\) and the output is \(h\). Where \(t\) is the text and \(h\) is the feature vector of \(t\).

4.2.2 MLP-based text plagiarism classifier↩︎

Given \((t_1,t_2)\) , where \(t_1\) is the original text and \(t_2\) is the text to be detected, our task is to determine whether \(t_2\) plagiarised \(t_1\). First, the two texts are represented as feature vectors , denoted as \((h_1,h_2)\). For details, see Eq. (4). \[\left( h_1,h_2 \right) =model_r\left( \left( t_1,t_2 \right) \right) \label{eq:classification-model-represent}\tag{4}\]

Next, denote \(concat(h_1, h_2)\) as \(u\). \(u\) through a dense layer and ReLU layer, denoted as \(\tilde{u}\). For details, see Eq. (5). \[\tilde{u}=\mathrm{ReLU}\left( uW_1+b_1 \right) \label{eq:classification-model-mlp-1}\tag{5}\]

Finally, \(\tilde{u}\) through a dense layer and Softmax layer, denoted as \(p\). where \(p_i\) denotes the probability that the \(i_{th}\) plagiarism occurs. For details, see Eq. (6). \[p=\mathrm{Softmax} \left( \tilde{u}W_2+b_2 \right) \label{eq:classification-model-mlp-2}\tag{6}\]

where \(W_1\), \(W_2\), \(b_1\), \(b_2\) is a learnable parameter, Softmax is detailed in Eq. (7), and ReLU is detailed in Eq. (8). \[\mathrm{Softmax} \left( z_i \right) =\frac{\exp \left( z_i \right)}{\sum{z_j}} \label{eq:Softmax}\tag{7}\] where \(z_i\) is the \(i_{th}\) element of the input vector \(z\). \[\mathrm{ReLU} \left( x \right) = max(x,0) \label{eq:ReLU}\tag{8}\]

For ease of subsequent representation and understanding, the feature classification model is denoted as \(model_c\), where the input is \((h_1, h_2)\) and the output is \(p \in R^3\). where \(h_1\) and \(h_2\) are the feature vectors of the two texts, respectively, and \(p_i\) is the probability of occurrence of type \(i\) plagiarism.

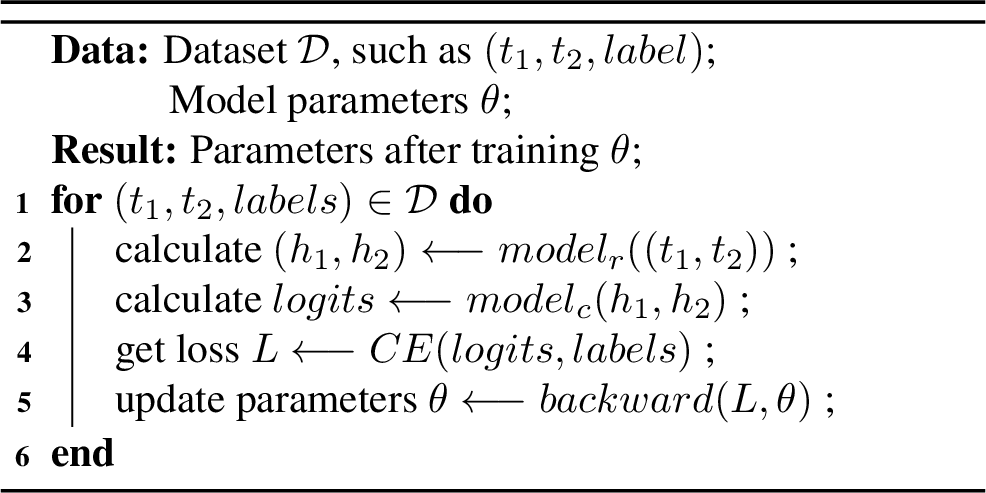

4.2.3 Our training algorithm↩︎

Cross-Entropy loss function is a metric used to measure the difference between two probability distributions. In deep learning, Cross-Entropy is commonly used to measure the difference between the model output and the true label, and is one of the common loss functions in classification tasks. We choose the cross-entropy loss function as the objective function for model training. For details, see Eq. (9). \[CE\left( p,q \right) =-\sum_{i=1}^C{q_i\log \left( p_i \right)} \label{eq:cross-entry-loss}\tag{9}\]

where \(q_i\) is the true label and \(p_i\) is the predicted probability.

Our training algorithm is detailed in Algorithm 1.

Figure 2: Training algorithm

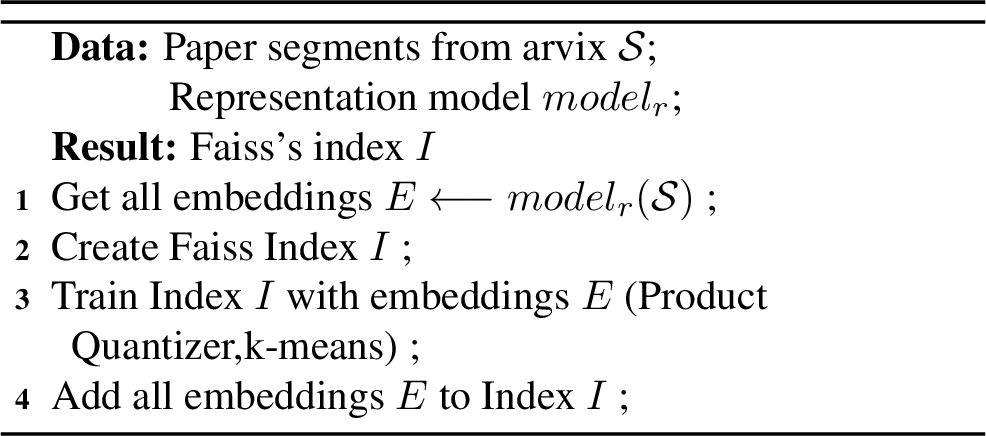

4.3 Faiss-based text retrieval↩︎

Given the text to be detected, denoted as \(t\). It is difficult to traverse a large number of texts for plagiarism detection. Therefore, we need a way to quickly retrieve some texts with a high likelihood of plagiarism. Then, we can use the plagiarism detection model to make a judgement.

FAISS (Facebook AI Similarity Search) is an open source library developed by Facebook for efficient similarity search. It is mainly used to process large-scale high-dimensional vectors, such as images, text and other embedded representations. At the same time, FAISS supports storage, retrieval and other functions. We can use the faiss framework to accelerate text retrieval and reduce the scope of plagiarism detection analysis.

For a retrieval task, Faiss can be divided into the following three steps: creating index table, clustering training, querying.

The core of faiss is the index, which helps to retrieve vectors efficiently. The vector dimension we choose is the output dimension 768 of SBERT, and the quantizer is the vector dot product.

We cannot accept the huge time consuming nature of traversal retrieval. Therefore, we use Faiss’s inverted file to speed up the search. Its idea is to use k-means algorithm to establish the clustering centre in advance, so that the search can query the nearest centre, and then compare all the vectors in the clusters to get the similar vectors, which reduces the search scope.

In addition, all the vector data of the index table is stored in memory, in order to handle more text data with smaller memory footprint, faiss provides a compression algorithm based on Product Quantizer.

Its algorithm steps are as follows:

Cut a feature vector into multiple segments;

Perform k-means clustering in the subspace represented by each segment;

Use the number of the closest clustering centre in each segment as the index value, i.e., the feature vector is compressed into a new vector consisting of the number of the clustering centre in each sub-segment;

Memory retains only the compressed vectors and cluster centres.

This reduces the memory footprint and speeds up the distance calculation. The whole process above can be described as Algorithm 2.

Figure 3: Faiss-based texts retrieve algorithm

After processing, we obtain a Product Quantizer optimized and k-means trained Faiss index table \(I\). For an input vector \(h\) , Faiss is able to retrieve the \(top-k\) alternative vectors that are most similar to \(h\) , denoted as \([v_1, v_2, \dots , v_k]\).

5 Experiments↩︎

5.1 Metrics↩︎

Some common metrics for classification task evaluation are used here, which are usually computed using four values in the confusion matrix: true positive (TP), true negative (TN), false positive (FP), and false negative (FN).

\(Accuracy\) is the number of samples correctly predicted by a classification model as a proportion of the total number of samples. It is an intuitive metric, but may not be a good performance measure in the case of unbalanced categories, as the model may tend to predict the majority of categories and ignore the minority. For details, see Eq. (10).

\[Accuracy=\frac{TP+TN}{TP+TN+FP+FN} \label{eq:accuracy}\tag{10}\]

\(Precision\) is the proportion of samples predicted by the model to be positive cases that are actually positive cases. It measures how many of the model’s predictions are correct and is an important metric for tasks where false positives are not tolerated. For details, see Eq. (11).

\[Precision=\frac{TP}{TP+FP} \label{eq:precision}\tag{11}\]

\(Recall\) is the proportion of samples that are actually positive examples that the model successfully predicts as positive. Recall is a key metric in tasks where the omission of positive examples cannot be tolerated. For details, see Eq. (12).

\[Recall=\frac{TP}{TP+FN} \label{eq:recall}\tag{12}\]

\(F1\) score is the reconciled mean of precision and recall, which provides a comprehensive consideration of the model’s performance.The F1 score is particularly useful when dealing with data from unbalanced categories, as it takes into account both false positives and omissions from the model. For details, see Eq. (13).

\[F1=2\times \frac{Precision\times Recall}{Precision+Recall} \label{eq:f1}\tag{13}\]

5.2 Training details↩︎

In this section, we’ll tell you more details about training.

Training-testing set division. We divide the dataset \(\mathcal{D}\) into \(\mathcal{D}_{train}\) and \(\mathcal{D}_{test}\) in the ratio of 8:2, where \(\mathcal{D}_{train}\) is the training set and \(\mathcal{D}_{test}\) is the test set.

Model parameter setting. In this experiment, we tried to train models with 4 different structures. For details, see table 1.

| Model | BERT | pool | hidden size |

|---|---|---|---|

| Ours-SBERT | sbert-based | [CLS] | \(\left[ \begin{array}{c} 1536\times 512\\ 512\times 3\\\end{array} \right]\) |

| Ours-BERT | bert-based | [CLS] | \(\left[ \begin{array}{c} 1536\times 512\\ 512\times 3\\\end{array} \right]\) |

| SBERT-COS | sbert-based | [CLS] | - |

| SBERT-DOT | sbert-based | [CLS] | - |

Optimizer setting. We used the Adam optimizer for model training, where the learning rate was set to 0.001, the batch size was set to 1024 and the maximum number of iteration rounds was set to 20.

In the end, Ours-SBERT reached convergence after 6 hours of training and Ours-BERT reached convergence after 5 hours of training on a single NVIDIA GeForce RTX 3070.

5.3 Evaluation results↩︎

5.3.1 Fine-tuning Model Evaluation↩︎

We evaluated four models. For details, see table 2. The performance of our proposed Ours-SBERT model is outstanding and outperforms other models.

| Model | Accuracy | Precision | Recall | F1 Score |

|---|---|---|---|---|

| Ours-SBERT | 98.86% | 98.90% | 98.86% | 0.9888 |

| Ours-BERT | 95.31% | 95.59% | 95.31% | 0.9545 |

| SBERT-COS | 78.14% | 78.16% | 78.14% | 0.7815 |

| SBERT-DOT | 80.04% | 78.84% | 80.04% | 0.7944 |

5.3.2 Faiss Performance Evaluation↩︎

Since the use of Product Quantizer(PQ) optimisation and k-means training acceleration affects the matching accuracy, we need to examine the index table and compare the different optimisation methods.

So we train Index with different optimizations which are:

no clustering and memory optimization;

k-means clustering acceleration (100 clustering centers, alternative 20 clustering centers);

k-means clustering acceleration and PQ memory optimization (100 clustering centers, alternative 20 clustering centers, each segmentation vector dimension is 16);

We train Index \(I\) with different optimisations and use the shuffle plagiarism processed text for match checking, if the returned \(top-10\) segments contain the original text, the match is considered successful, otherwise the match is considered failed. Then we calculate the matching success rate, see Table 3 for details.

| Strategy | Success Rate | Time(ms/vector) | Dim |

|---|---|---|---|

| Nothing | 99.69% | 0.1826 | 768 |

| K-Means | 99.60% | 0.0651 | 768 |

| K-Means&PQ | 99.53% | 0.2938 | 16 |

The results show that the index \(I\) accelerated by k-means training takes less time while guaranteeing the success rate of matching. It can meet our needs of text retrieval and reducing the scope of plagiarism detection analysis. When memory is scarce, Product Quantizer can also be used to optimize memory.

5.4 Visualisation↩︎

The following is a sample example, including inputs, intermediate results, and outputs.

Input: For these trials, we utilize the identical network framework as mentioned in [22]; that is, two VGG-M-2048 networks [3]. The combination layer is inserted at the final convolutional layer, following rectification, meaning its input comes from the result of ReLU5 from the two channels. This choice was made based on superior outcomes in initial trials compared to options such as the non-rectified output of conv5. At this juncture, the attributes are already significantly informative while also offering rough location details. Following the combination layer, a solitary processing channel is employed.

top-1: To be clear, our intention here is to fuse the two networks (at a particular convolutional layer) such that channel responses at the same pixel position are put in correspondence. To motivate this, consider for example discriminating between the actions of brushing teeth and brushing hair. If a hand moves periodically at some spatial location then the temporal network can recognize that motion, and the spatial network can recognize the location (teeth or hair) and their combination then discriminates the action.

top-2: For these experiments we use the same network architecture as in [22]; i.e. two VGG-M-2048 nets [3]. The fusion layer is injected at the last convolutional layer, after rectification, i.e. its input is the output of ReLU5 from the two streams. This is chosen because, in preliminary experiments, it provided better results than alternatives such as the non-rectified output of conv5. At that point the features are already highly informative while still providing coarse location information. After the fusion layer a single processing stream is used.

top-3: Our proposed architecture (shown in Fig. 4) can be viewed as an extension of the architecture in Fig. 2 (left) over time. We fuse the two networks, at the last convolutional layer (after ReLU) into the spatial stream to convert it into a spatiotemporal stream by using 3D Conv fusion followed by 3D pooling (see Fig. 4, left). Moreover, we do not truncate the temporal stream and also perform 3D Pooling in the temporal network (see Fig. 4, right). The losses of both streams are used for training and during testing we average the predictions of the two streams. In our empirical evaluation (Sec. 4.6) we show that keeping both streams performs slightly better than truncating the temporal stream after fusion.

top-4: In this section we report results using a 34-layer version of R(2+1)D, which we denote as R(2+1)D-34. The architecture is the same as that shown in the right column of Table 1, but with 3D convolutions decomposed spatiotemporally in (2+1)D. We train our R(2+1)D architecture on both RGB and optical flow inputs and fuse the prediction scores by averaging, as proposed in the two-stream framework [29] and subsequent work [4], [9]. We use Farneback’s method [8] to compute optical flow because of its efficiency.

top-5: This spatial correspondence is easily achieved when the two networks have the same spatial resolution at the layers to be fused, simply by overlaying (stacking) layers from one network on the other (we make this precise below). However, there is also the issue of which channel (or channels) in one network corresponds to the channel (or channels) of the other network.

The detailed judgment results are shown in Table 4.

| top-k | class | |||

|---|---|---|---|---|

| top-1 | No Plagiarism | |||

| top-2 | Imitation Plagiarism | |||

| top-3 | No Plagiarism | |||

| top-4 | No Plagiarism | |||

| top-5 | No Plagiarism |

6 Discussions↩︎

Initially, we expected SBERT to be able to distinguish three different degrees of plagiarism (synonym substitution, synonym substitution + grammatical structure modification, synonym substitution + grammatical structure modification + semantic order modification + linguistic style modification). However, the experimental results show that the SBERT model cannot distinguish these three kinds of plagiarism on our dataset.

After experimental analyses, we found that the semantic vectors of these three plagiarisms and their similarities make it difficult for the model to distinguish between them.

In a study by [8], we found out the reason. On the semantic search, SBERT extracts semantic kernel features that are synonymous with a series of nominal elements in the main clause, ignoring words that only serve a grammatical purpose. That is, the model focuses on what things the sentence describes rather than the predicate/comment of the sentence, i.e., what the sentence actually says about those things. Our first experimental attempts corroborate this idea. SBERT still has significant syntactic analytical power, though, leading the SBERT model for semantic search to view noun element synonymy as a semantic feature of the sentence mainly because of the nature of training these SBERT datasets.

7 Conclusions↩︎

We designed and implemented the entire system for plagiarism detection and provided visualization demos. the system consists of sentence bert for generating text vectors, Faiss framework for fast matching of vector libraries, fine-tuned bert model for analyzing plagiarism classification, and visualization demos.

We compare the classification accuracy of different bert models, and our evaluation shows that our model is better suited to the task of our dataset and more suitable for plagiarism classification evaluation. We also compared different acceleration schemes and effects of Faiss, and selected the optimal scheme and parameters to apply to our plagiarism detection system.

8 Team Contribution and Acknowledgement↩︎

8.1 Team Contribution↩︎

Our team division of labour and contribution is follow:

Jibao Yuan: Data enhancement, model construction and training, paper writing, 30%;

Jiarong Xian: Data collection, model construction and training, paper writing, 30%;

Peiwei Zheng: Project management, system framework, prompt design, data collection, paper writing, 25%;

Dexian Chen: Data collection, data processing and testing, paper writing, 15%;

8.2 Acknowledgement↩︎

Thanks to Mu Li for providing the list of papers, and to each team member who collected the data, spent a relatively large amount of time processing the PDF text, segmenting it, and using the GPT API for data enhancement. Thanks to Autodl platform for providing arithmetic resources, and thanks to the instructor for his patience.

9 Appendix↩︎

9.1 GPT-3.5 Prompt↩︎

9.1.1 slight prompt↩︎

instruction: I am building a dataset for plagiarism assignments and need your assistance. Please pretend to be a student who intends to plagiarize, and I will provide you with a plagiarism strategy and source text. Based on my plagiarism strategy, please modify the source text. Then output the modified text to me. Remember, to save your output time, you only need to output the modified text to me. Use \(<\)start\(>\) to indicate the start of the output, and \(<\)end\(>\) to indicate the end of the output.

plagiarism strategy: Replace various words in the source text with synonyms while maintaining the original sentence structure and information sequence.

example:

Their bank account balance reached the maximum insured amount \(\iff\) Their bank account balance was at least 250 thousand dollars

Mr. Smith just bought a new computer \(\iff\) Bob just bought a new computer

The program runs fast \(\iff\) The program does not run slowly

A spike in sales performance will save the company from bankruptcy \(\iff\) Only a spike in sales performance will halt the company’s bankruptcy.

I bought a plane ticket online \(\iff\) A plane ticket was sold to me online

Increase in salaries is often a great indicator of performance \(\iff\) Increase in salary is often a great indicator of performance.

Is this your own work? \(\iff\) Is that your own work?

There are many accounts of that hero’s legacy all differing in perspectives. \(\iff\) There are different versions of that hero’s legacy.

These numbers, interestingly, seem to appear in the world around us. \(\iff\) These numbers interestingly seem to appear in the world around us.

source text: Input your source text

9.1.2 obvious prompt↩︎

instruction: I am building a dataset for plagiarism in assignments, and now I need your help. Please pretend to be a student who intends to plagiarize an assignment. I will provide you with plagiarism strategies and source texts to copy from. You should modify the source text according to my plagiarism strategies, and then output the modified text to me.Remember, to save your output time, you only need to output the modified text to me.Use <start> to indicate the start of the output, and <end> to indicate the end of the output.

plagiarism strategy: **Use synonyms, change grammatical structures, and you may alter punctuation, but fundamentally maintain the order of information in the sentences.**

example: Synonym replacement example: Their bank account balance reached the maximum insured amount \(\iff\) Their bank account balance was at least 250 thousand dollars

Mr. Smith just bought a new computer \(\iff\) Bob just bought a new computer

The program runs fast \(\iff\) The program does not run slowly

A spike in sales performance will save the company from bankruptcy \(\iff\) Only a spike in sales performance will halt the company’s bankruptcy.

I bought a plane ticket online \(\iff\) A plane ticket was sold to me online

Increase in salaries is often a great indicator of performance \(\iff\) Increase in salary is often a great indicator of performance.

Is this your own work? \(\iff\) Is that your own work?

There are many accounts of that hero’s legacy all differing in perspectives. \(\iff\) There are different versions of that hero’s legacy.

Grammatical structure modification example:

Does working in that technology company pay well? Does it provide great 401k plans for its employees? \(\iff\) They will work at the company to get high pay or to obtain great 401k plans.

How he would stare! \(\iff\) He would surely stare!

Jacob programmed the game \(\iff\) the game’s programmer is Jacob

“You must finish the project by the end of today,” demanded my manager \(\iff\) My manager demanded that I must finish the project by the end of today

Alice presented the gift to Bob. \(\iff\) Alice presented Bob with the gift.

All spoken languages are natural languages \(\iff\) The English language is a natural language

Initially, we begin with the scientific method \(\iff\) we begin with the scientific method initially

source text: Input your source text

9.1.3 significant prompt↩︎

instruction: I am building a dataset for plagiarism in assignments, and now I need your help. Please pretend to be a student who intends to plagiarize an assignment. I will provide you with plagiarism strategies and source texts to copy from. You should modify the source text according to my plagiarism strategies, and then output the modified text to me.Remember, to save your output time, you only need to output the modified text to me.Use <start> to indicate the start of the output, and <end> to indicate the end of the output.

plagiarism strategy: Utilize alternative words, modify the structure of sentences, rearrange the order of ideas, and alter the style of grammar. You can also adjust punctuation. This process might include dividing the original sentence into several distinct sentences, or conversely, merging several sentences into a single one. There are no limitations on the methods of modification.

example: Synonym replacement example: Their bank account balance reached the maximum insured amount \(\iff\) Their bank account balance was at least 250 thousand dollars

Mr. Smith just bought a new computer \(\iff\) Bob just bought a new computer

The program runs fast \(\iff\) The program does not run slowly

A spike in sales performance will save the company from bankruptcy \(\iff\) Only a spike in sales performance will halt the company’s bankruptcy.

I bought a plane ticket online \(\iff\) A plane ticket was sold to me online

Increase in salaries is often a great indicator of performance \(\iff\) Increase in salary is often a great indicator of performance.

Is this your own work? \(\iff\) Is that your own work?

There are many accounts of that hero’s legacy all differing in perspectives. \(\iff\) There are different versions of that hero’s legacy.

Grammatical structure modification example:

Does working in that technology company pay well? Does it provide great 401k plans for its employees? \(\iff\) They will work at the company to get high pay or to obtain great 401k plans.

How he would stare! \(\iff\) He would surely stare!

Jacob programmed the game \(\iff\) the game’s programmer is Jacob

“You must finish the project by the end of today,” demanded my manager \(\iff\) My manager demanded that I must finish the project by the end of today

Alice presented the gift to Bob. \(\iff\) Alice presented Bob with the gift.

All spoken languages are natural languages \(\iff\) The English language is a natural language

Initially, we begin with the scientific method \(\iff\) we begin with the scientific method initially

Yesterday, we were able to complete our assignment and submit it on time \(\iff\) Yesterday evening at 12:30 PM, we were able to submit our assignment on time

Semantic order modification Grammar style modification example:

I am building a dataset for plagiarism assignments and need your assistance. Please pretend to be a student who intends to plagiarize, and I will provide you with a plagiarism strategy and source text. Based on my plagiarism strategy, please modify the source text. Then output the modified text to me. Remember, to save your output time, you only need to output the modified text to me. \(\iff\) Assistance is sought for the construction of a dataset tailored for plagiarism tasks. Imagine yourself in the shoes of a student poised to plagiarize. Your task is to apply this strategy, reshaping the given text accordingly. Once this transformation is complete, your sole responsibility is to present the revised text. The aim is to streamline the process; thus, only the altered text needs to be revealed. A strategy for such an act, along with the original text, will be furnished by me.

source text: Input your source text