Modality Translation for Object Detection Adaptation Without Forgetting Prior Knowledge

April 01, 2024

Abstract

A common practice in deep learning consists of training large neural networks on massive datasets to perform accurately across different domains and tasks. While this methodology works well in numerous application areas, it applies only partially across modalities because of the larger distribution shift between data captured by different sensors. This paper focuses on the problem of efficiently adapting a large object detection model to one or multiple modalities. To this end, we propose ModTr as an alternative to the common approach of fine-tuning large models. ModTr adapts the input with a small transformation network trained to directly minimize the detection loss. The original model can therefore work on the translated inputs without any further change or fine-tuning of its parameters. Experimental results on translating from IR to RGB images on two well-known datasets show that this simple approach provides detectors that perform comparably to or better than standard fine-tuning, without forgetting the original knowledge. This opens the door to a more flexible and efficient service-based detection pipeline in which, instead of using a different detector for each modality, a single unaltered server runs constantly and multiple modalities, each with its corresponding translation, can query it. Code: https://github.com/heitorrapela/ModTr.

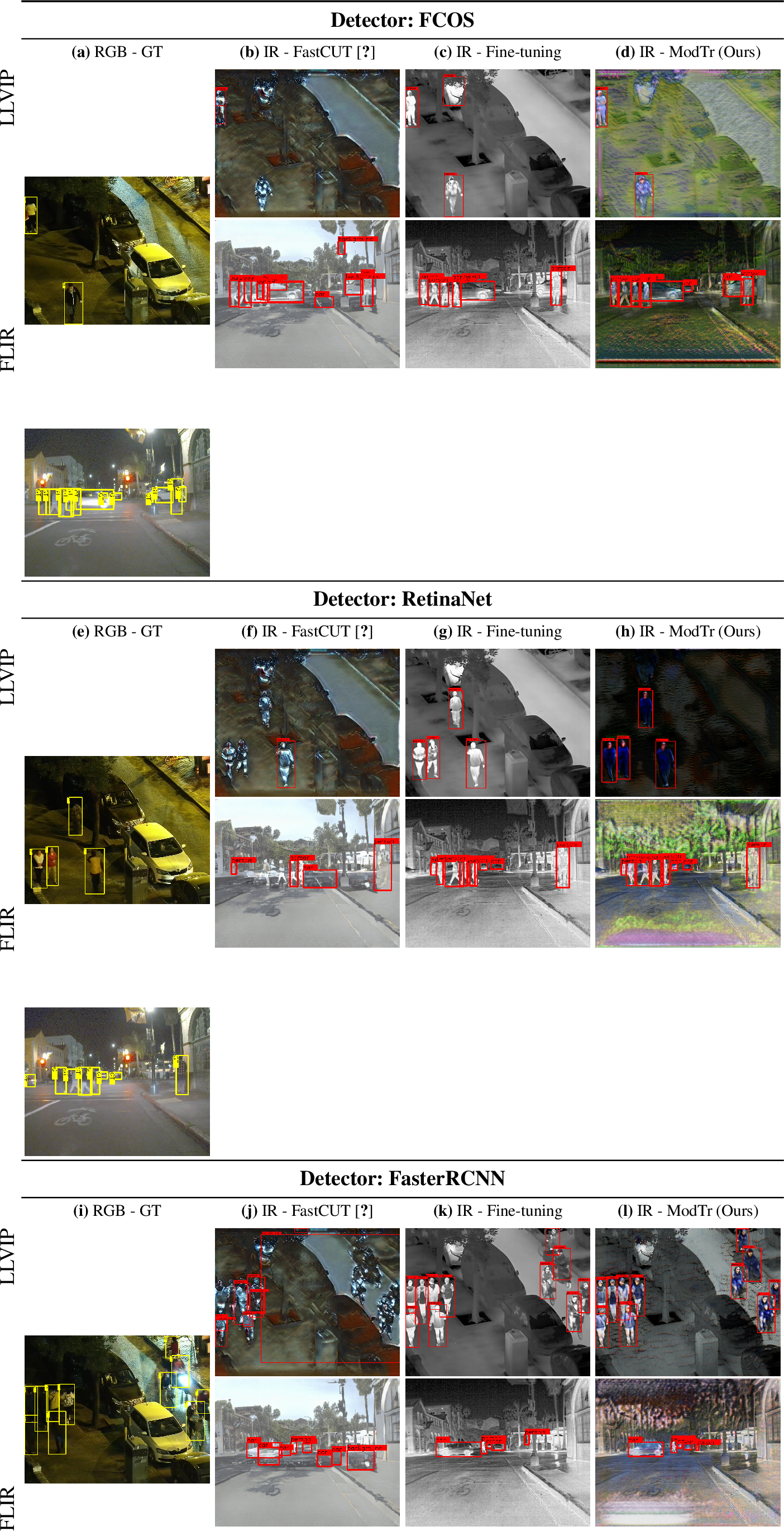

RGB - GT

IR - FastCUT [1]

IR - Fine-tuning

IR - ModTr (Ours)

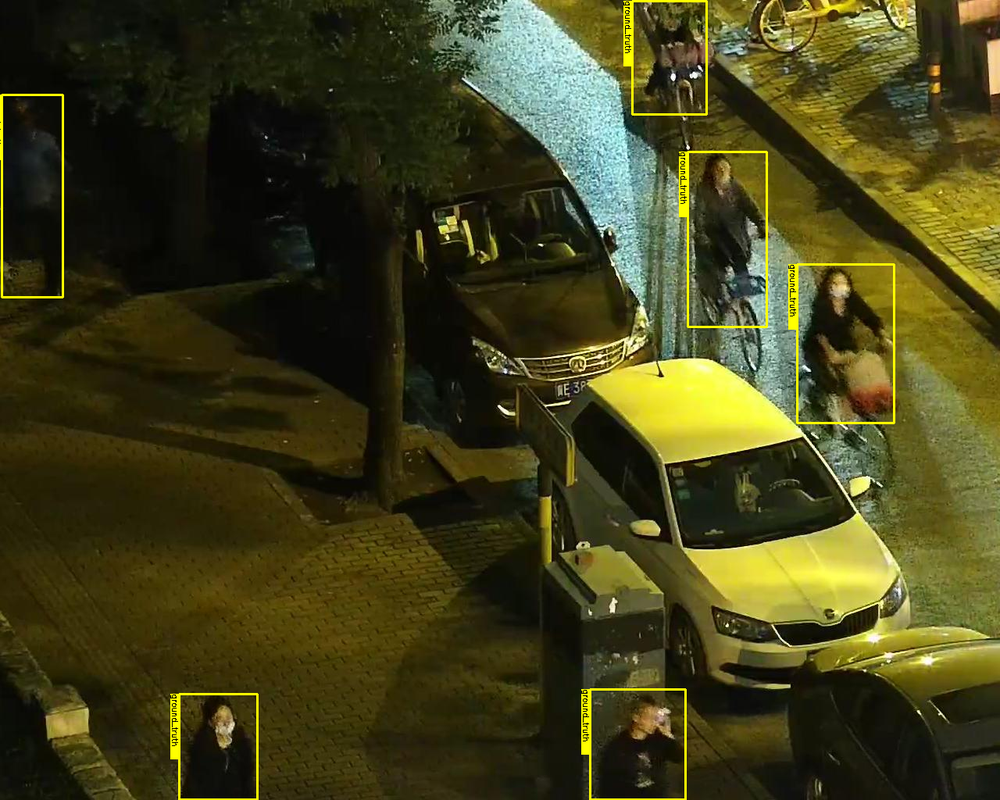

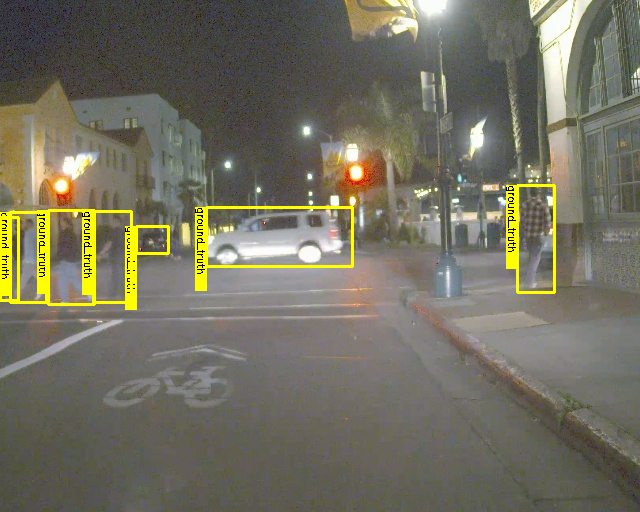

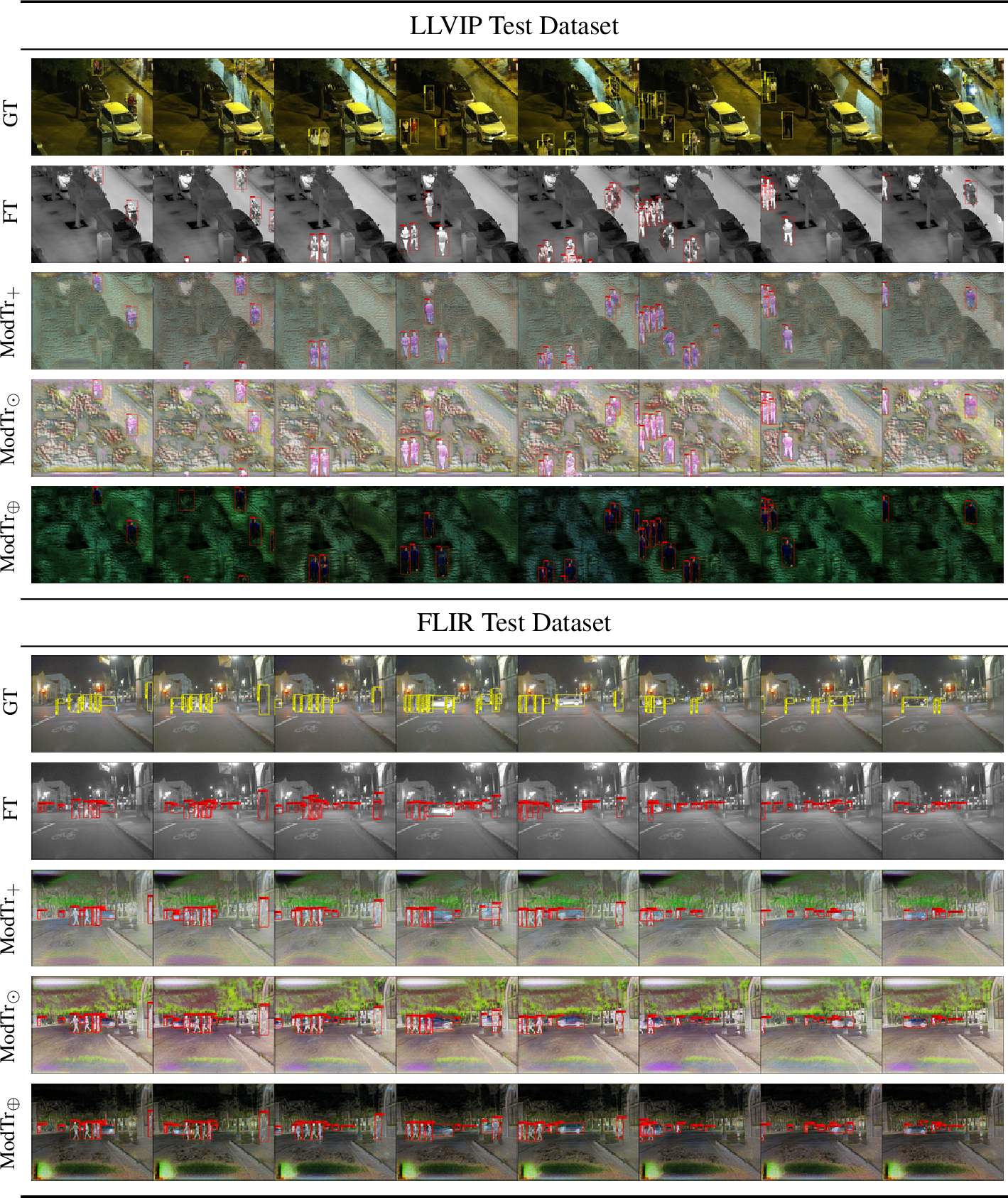

Figure 1: Bounding box predictions from different OD methods on infrared images from two benchmarks: LLVIP and FLIR. Yellow and red boxes show the ground truth and predicted detections, respectively. FastCUT is an unsupervised image translation approach that takes infrared (IR) images as input and produces pseudo-RGB images. It does not focus on detection and requires both modalities for training. Fine-tuning is the standard approach to adapting the detector to the new modality. It requires only IR data but forgets the original knowledge of the pre-trained detector. Finally, ModTr, our approach, focuses the translation on detection, requires only IR data, and does not forget the original knowledge, so the detector can be reused for other tasks.

1 Introduction

Powerful pre-trained models have become essential in the field of computer vision, particularly in object detection (OD) tasks [2], [3]. These OD models are typically pre-trained on extensive natural-image RGB datasets, such as COCO [4]. Moreover, the knowledge encoded by these models can be leveraged for various tasks in a zero-shot way or with additional fine-tuning for downstream tasks [5]. However, adding new modalities to these models, such as infrared (IR), without losing the intrinsic knowledge of the detector remains a challenge [6].

These additional modalities, though less common than RGB images, are still important in various applications, such as surveillance [7], autonomous driving [8], and robotics [9], which must remain robust to environmental changes such as varying illumination conditions [10]. The dominant way to adapt pre-trained detectors to these novel modalities is fine-tuning. However, fine-tuning often results in catastrophic forgetting and can destroy the intrinsic knowledge of the detector [11]. Ideally, we would like to adapt the detector to new modalities without changing the original model. This is most useful for server-side applications, where a single model runs uninterrupted and different inputs, possibly from different modalities, can query it. The main challenge is the significant distribution shift between the source and new modalities, such as the difference between the visual information in RGB images and the thermal information in IR images. Features learned from one modality may not be relevant to, or even present in, another, so applying the model directly to the new input can substantially degrade the final detection performance [12].

Image translation methods have emerged as a powerful tool to overcome the downsides of fine-tuning and narrow the gap between source and target modalities [13]. These methods do not operate on the weight space of the original detector but instead adapt the input values to reduce the discrepancy between the source and target modalities. However, such methods often require access to source data, or to some statistics about it, during training. Furthermore, they focus primarily on image reconstruction quality rather than on the final detection task, which can cause a significant drop in detection performance. Figure 1 illustrates some detections (in red) obtained on translated images (b and d) and with a fine-tuned detector (c). Results for additional methods are provided in the supplementary material.

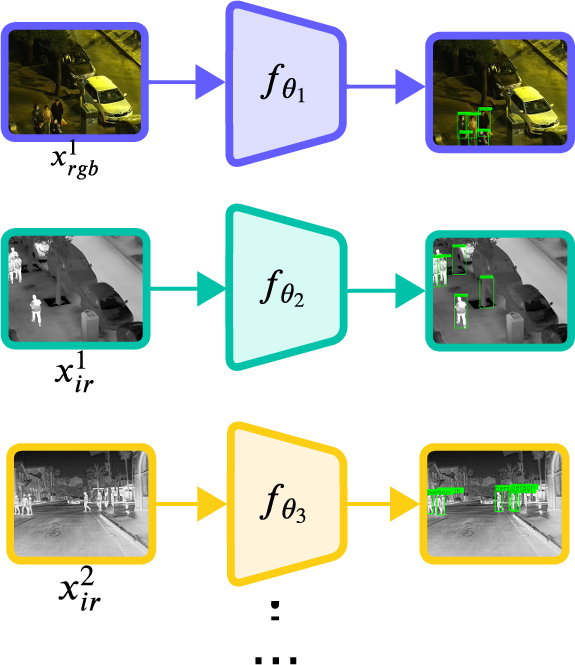

N-Detectors

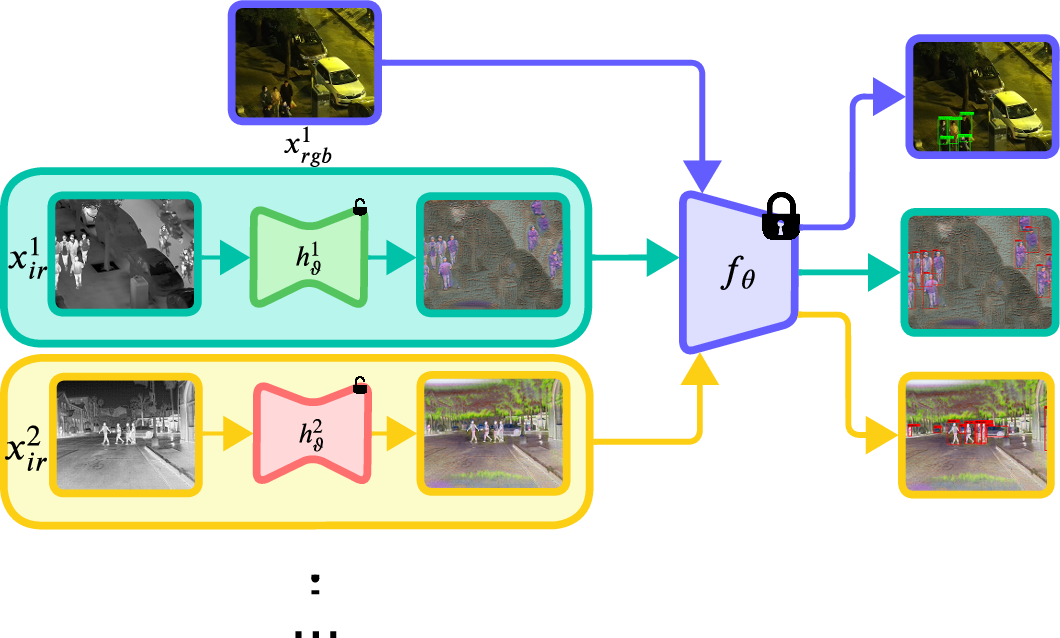

N-ModTr-1-Detector

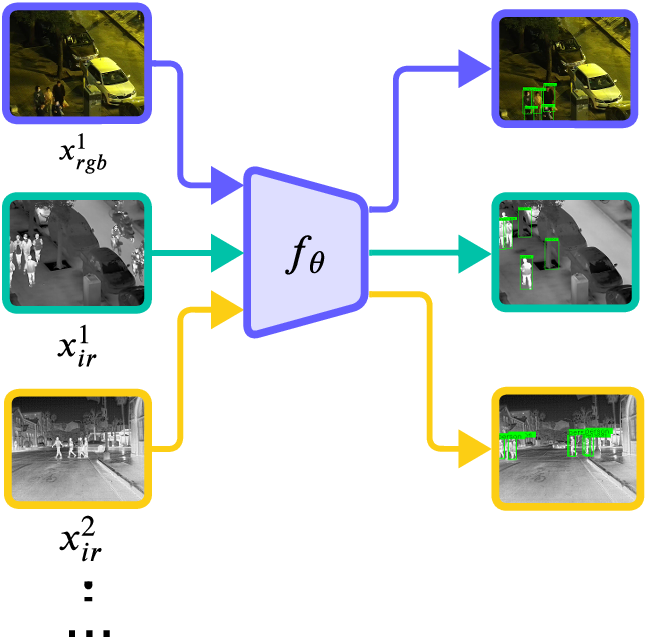

1-Detector

Figure 2: Different approaches to dealing with multiple modalities and/or domains. (a) The simplest approach is to use a different detector adapted to each modality. This can lead to high accuracy but requires storing multiple models in memory. (b) Our proposed solution uses a single pre-trained model, typically trained on the more abundant data (RGB), and adapts the input of each new modality through our ModTr model. (c) A single detector is trained on all modalities jointly. This allows using a single model but requires access to all modalities at once, which is often not possible, especially when dealing with large pre-trained models.

Our work aims to improve the image translation paradigm while addressing its limitations. Our proposed approach, Modality Translation for OD (ModTr), incorporates the detector's knowledge into the translation module by training directly for the final detection task. Unlike traditional image translation methods, ModTr does not require any source data. It is a simple approach that can be easily integrated with any detector, whether one-stage or two-stage. This approach also applies to many settings. Once new modalities are incorporated for a pre-trained detector, the detector can act as a server that receives images translated by a separate ModTr block trained for each modality. The detector then produces the desired output with performance comparable to full fine-tuning while requiring far fewer resources for the entire system. Figure 2 presents several options for integrating new modalities into such a system. Figure 2 (a) illustrates the N-Detectors approach, where a separate detector is trained for each modality. While this method is effective, it requires significant computational and memory resources. Alternatively, the N-ModTr-1-Detector approach in Figure 2 (b) is less resource-intensive, as it trains only a small translation model for each modality while sharing a single detector. Finally, Figure 2 (c) shows a single detector trained on joint modalities, which is not memory-intensive but may underperform the other approaches due to the challenges of multimodal learning. In this work, we focus on the effectiveness of our approach for the IR modality, which is commonly used in surveillance and robotics, and on the incremental-modality, server-based detection application, which is important for many settings that require uninterrupted detection.

Our main contributions can be summarized as follows:

(1) We present ModTr, a method for adapting ODs pre-trained on large RGB datasets to new, scarcer modalities such as IR by translating the input signal, without requiring access to any source dataset. (2) In contrast to standard fine-tuning, our approach does not modify the original detector weights. This allows the detector to retain the knowledge of the source data while adapting to a new modality. As a result, a single model can handle multiple modalities through different translators. For instance, the same model can process RGB images during the daytime and IR images at nighttime. (3) We evaluate ModTr in several scenarios, showcasing its advantages and flexibility. In particular, ModTr matches or exceeds the OD accuracy of image translation methods on two challenging visible/infrared datasets (LLVIP and FLIR).

2 Related Work

Object detection. OD is a computer vision task that aims to classify and localize the objects in an image [14]. OD methods fall into two main categories: two-stage and one-stage detectors. Two-stage detectors, exemplified by Faster R-CNN [15], first generate regions of interest and then use a second classifier to confirm object presence within those regions. One-stage detectors, on the other hand, streamline the detection process by eliminating the proposal generation stage, aiming for end-to-end training and real-time inference. RetinaNet [16] is a one-stage OD model that uses a focal loss to address class imbalance during training. Models such as FCOS [17] have also emerged in this category, eliminating predefined anchor boxes to potentially improve inference efficiency. This work investigates these three traditional and powerful detectors: the two-stage Faster R-CNN, which is widely used in benchmarks, and the one-stage RetinaNet and FCOS. We chose them for their simplicity of implementation and integration with other methods, as well as for the range of available pre-trained backbone weights, such as ResNet and MobileNet.

Image Translation. Image translation is a pivotal task in computer vision that aims to map images from a source domain to a target domain while preserving their inherent content [18]. The goal is to find a transformation function such that the distribution of translated images is aligned with the distribution of images in the target domain. Commonly used approaches for image translation are based on variational autoencoders (VAEs) [19] and generative adversarial networks (GANs) [18], [20]. Isola et al. developed Pix2Pix [21], which consists of a U-Net-based generator and an adversarial discriminator that work together to generate images conditioned on input data and labels. Zhu et al. then proposed CycleGAN [22], a GAN-based method for unsupervised domain translation. Even though CycleGAN can produce visually convincing results, it is hard to optimize due to its adversarial training and large memory footprint. In contrast, VAEs are easier to train than GANs but need more constraints during optimization to match the image quality of GAN-based approaches. Recent advances include diffusion models, known for their high-quality image generation, although they may not inherently suit domain translation tasks. To improve upon models such as CycleGAN, methods like Contrastive Unpaired Translation (CUT) [1] and its faster variant FastCUT [1] have been introduced. CUT maximizes the mutual information between corresponding input and output image patches, achieving competitive results with faster training. In the context of the RGB/IR modality, InfraGAN performs image-level adaptation for RGB-to-IR conversion [23]; it is distinct in its focus on optimizing image quality losses. Moreover, Herrmann et al. adapted IR images to RGB using traditional image preprocessing techniques, allowing RGB object detectors to be used without modifying their parameters [24]. Despite many recent advances in image translation, most methods do not target OD. Medeiros et al. proposed HalluciDet [6], which uses an image translation mechanism for OD; however, it assumes access to source data to first fine-tune the detector on the RGB domain of the dataset whose new modality it must adapt to.
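The histogram-equalization baseline attributed to [24] (the "Histogram Equal." row in Table 1) can be approximated as follows. This NumPy sketch is an assumption about the preprocessing, not Herrmann et al.'s exact pipeline: it applies standard global histogram equalization to an 8-bit single-channel IR image and replicates the result to three channels so that an unmodified RGB detector can consume it. `equalize_ir_to_rgb` is a hypothetical name.

```python
import numpy as np


def equalize_ir_to_rgb(ir: np.ndarray) -> np.ndarray:
    """Global histogram equalization of an 8-bit IR image, replicated to 3 channels.

    ir: (H, W) uint8 array. Returns an (H, W, 3) float32 array in [0, 1].
    """
    if ir.dtype != np.uint8 or ir.ndim != 2:
        raise ValueError("expected a 2-D uint8 image")
    hist = np.bincount(ir.ravel(), minlength=256)
    cdf = np.cumsum(hist)
    cdf_min = cdf[cdf > 0][0]  # cumulative count at the first non-empty bin
    total = cdf[-1]
    if total == cdf_min:
        # Constant image: there is no contrast to redistribute
        eq = np.zeros(ir.shape, dtype=np.float32)
    else:
        # Classic mapping (cdf(v) - cdf_min) / (N - cdf_min), scaled to [0, 1]
        lut = np.clip((cdf - cdf_min) / (total - cdf_min), 0.0, 1.0)
        eq = lut.astype(np.float32)[ir]
    # The detector expects 3 channels: repeat the single channel (pseudo-RGB)
    return np.repeat(eq[..., None], 3, axis=-1)
```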

Adapting without forgetting. Catastrophic forgetting (CF) is the tendency of a neural network, when trained sequentially on a different task, to forget previously acquired knowledge and replace it with knowledge tailored to the new objective [25]. CF can be harmful or beneficial. It is harmful when the original knowledge must be retained while adapting to a different task; in that case, it is imperative to mitigate the risk of CF. However, some forgetting can also be beneficial, for instance, to prevent privacy leakage from large pre-trained models, to enhance generalization, or to remove noisy information from the originally acquired knowledge that negatively affects the new tasks. In our case, forgetting is harmful. There are different ways to address this issue, including simple techniques such as decreasing the learning rate [26], using weight decay [27], [28], or applying mixout regularization [29] during fine-tuning, as well as more complex approaches such as Recall and Learn [30], Robust Information Fine-tuning [31], or CoSDA [32]. Some adaptation methods replay source data or use the weights of the initial model to retain prior information [33]. However, these methods may still lose knowledge since the original parameters are not frozen. Adapters, which use a frozen pre-trained backbone to generate a representation followed by a different classifier for each downstream task [25], can be seen as a powerful way of retaining knowledge. Although ModTr shares some similarities with adapters, it works in the input space to adapt to new modalities, addressing incremental modality adaptation by optimizing the translation directly for the final OD task.

3 Proposed Method

Preliminary Definitions. We denote the training set for the OD task as \(\mathcal{D}=\{(x, \mathcal{Y})\}\), where \(x\in\mathbb{R}^{W\times H\times C}\) represents an image with spatial dimensions \(W\times H\) and \(C\) channels. The object detection model aims to identify \(N\) regions of interest within each image, denoted as \(\mathcal{Y}=\{(b_i, c_i)\}_{i=1}^{N}\). Each region of interest \(b_i\) is defined by the coordinates of its top-left corner and the width and height of the object, and a classification label \(c_i\) indicates the class of the corresponding object within the dataset. In this study, the number of input channels for the detector is fixed at three, corresponding to RGB-like inputs. In terms of optimization, the primary goal is to maximize detection accuracy, typically measured with the average precision (AP) metric across all classes. An object detector is formally represented as a mapping \(f_{\theta}: \mathbb{R}^{W \times H \times C} \to \mathcal{Y}\), where \(\theta\) denotes its parameter vector. Since AP is not differentiable, detectors are trained by minimizing a differentiable surrogate, the detection cost \(\mathcal{L}_{det}(\theta)\), which is typically the average of the per-sample detection loss over the dataset \(\mathcal{D}\):

\[\begin{align} \mathcal{L}_{det}(\theta)=\frac{1}{|\mathcal{D}|} \sum_{(x, \mathcal{Y}) \in \mathcal{D}} \mathcal{L}_{det}[f_\theta(x), \mathcal{Y}]. \end{align} \label{eq:detection_loss}\tag{1}\]
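Eq. (1) is a plain average over the dataset. The NumPy sketch below makes this concrete; `per_sample_loss` is a hypothetical stand-in for \(\mathcal{L}_{det}[f_\theta(x), \mathcal{Y}]\), and the helper names are illustrative. Because the boxes \(b_i\) defined above are parameterized by their top-left corner, width, and height, while Torchvision detection models expect corner coordinates \((x_1, y_1, x_2, y_2)\), the sketch converts the boxes before evaluating the loss.

```python
from typing import Callable, Iterable, Tuple

import numpy as np

Sample = Tuple[np.ndarray, np.ndarray, np.ndarray]  # (image, boxes_xywh, labels)


def xywh_to_xyxy(boxes) -> np.ndarray:
    """Convert (x_top_left, y_top_left, width, height) boxes to (x1, y1, x2, y2)."""
    boxes = np.asarray(boxes, dtype=np.float64).reshape(-1, 4)
    x, y, w, h = boxes.T
    return np.stack([x, y, x + w, y + h], axis=1)


def dataset_detection_loss(
    per_sample_loss: Callable[[np.ndarray, np.ndarray, np.ndarray], float],
    dataset: Iterable[Sample],
) -> float:
    """Eq. (1): average of the per-sample detection loss over the dataset D."""
    total, count = 0.0, 0
    for image, boxes_xywh, labels in dataset:
        # per_sample_loss plays the role of L_det[f_theta(x), Y]
        total += per_sample_loss(image, xywh_to_xyxy(boxes_xywh), labels)
        count += 1
    if count == 0:
        raise ValueError("empty dataset")
    return total / count
```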

Modality Translation Module (ModTr). Our approach primarily consists of an image-to-image translation network that converts the input modality into an RGB-like space intelligible to the detector. Such networks typically adopt an encoder-decoder structure that encodes the input and reconstructs an output image pixel by pixel. While we employ a U-Net as the translation network in this work, our framework is general and not tied to a specific translation architecture. Formally, this mapping is denoted as \(h_{\vartheta}^d\colon \mathbb{R}^{W \times H \times C} \to \mathbb{R}^{W \times H \times 3}\), with one translation network assigned to each available input modality \(d\). Unlike for the detection network, the number of input channels varies with the modality; for instance, \(C=1\) for IR and depth images. Being a pixel-level architecture, the translation network produces an output with the same spatial resolution as its input, while the number of output channels is always fixed at three, corresponding to RGB-like images.
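The input/output contract of \(h_{\vartheta}^d\) can be illustrated with a toy stand-in. In this NumPy sketch, a 1x1 convolution (a per-pixel linear map from \(C\) to 3 channels) followed by a sigmoid replaces the U-Net; it only demonstrates that spatial resolution is preserved and that the output always has three channels in (0, 1). The class name is hypothetical, and arrays use an (H, W, C) layout. With Segmentation Models PyTorch, a U-Net with such a head could plausibly be configured as `smp.Unet(encoder_name="resnet34", in_channels=1, classes=3, activation="sigmoid")`, though this is not necessarily the authors' exact configuration.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


class PixelwiseTranslator:
    """Toy stand-in for h_vartheta^d: (H, W, C) -> (H, W, 3) with values in (0, 1).

    A 1x1 convolution (per-pixel linear map) plus a sigmoid head; a real ModTr
    translator is a U-Net, so this only illustrates the shape contract.
    """

    def __init__(self, in_channels: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.weight = rng.normal(scale=0.1, size=(in_channels, 3))  # vartheta
        self.bias = np.zeros(3)

    def __call__(self, x: np.ndarray) -> np.ndarray:
        if x.ndim != 3 or x.shape[-1] != self.weight.shape[0]:
            raise ValueError("expected an (H, W, C) input with matching C")
        logits = np.einsum("hwc,ck->hwk", x, self.weight) + self.bias
        return sigmoid(logits)  # same H and W as the input, 3 output channels
```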

Unlike other image-to-image translation approaches, we drive the translation process with the aforementioned detection cost. The underlying optimization problem is thus \(\vartheta^* = \arg\min_{\vartheta} \mathcal{L}_{det}(\vartheta)\), where the loss is evaluated on the output of the composition \((f_\theta \circ h_\vartheta^d)(x)\). To streamline learning, we use a residual learning strategy in which \(h_\vartheta^d\) focuses on capturing the small variations in the input that are necessary to solve the task. This strategy is similar to the one employed in diffusion models, which inspired our work. For simplicity, we separate the fusion step from the translation mapping in our notation, since we investigate several types of fusion. The proposed image-to-image translation loss function is then defined as: \[\label{eq:hallucidet_first_step} \begin{align} \mathcal{L}_{\text{ModTr}}(x, \mathcal{Y}; \vartheta) & = \mathcal{L}_{det}[f_\theta\left(\Phi(h_\vartheta^d(x),x)\right), \mathcal{Y}], \end{align}\tag{2}\] where \(\Phi(\cdot,\cdot)\) is a non-parametric fusion function. Note that the output of \(h_\vartheta^d(x)\) is an RGB-like image, whereas \(x\) may consist of a single channel, depending on the input modality. We adopt this definition to simplify the notation, but appropriate reshaping of \(x\) must be performed in practice to ensure compatibility.
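Eq. (2) composes three pieces: a trainable translator, a non-parametric fusion, and the frozen detector. The sketch below wires them together in NumPy with an (H, W, C) layout. The text only says that appropriate reshaping is needed for single-channel inputs; replicating the channel, as done here, is our assumption. All names (`modtr_forward`, `to_three_channels`) are hypothetical.

```python
from typing import Callable

import numpy as np

Array = np.ndarray


def to_three_channels(x: Array) -> Array:
    """Replicate a single-channel (H, W, 1) input to (H, W, 3); pass RGB-like input through."""
    if x.shape[-1] == 1:
        return np.repeat(x, 3, axis=-1)
    if x.shape[-1] == 3:
        return x
    raise ValueError("this sketch only handles 1- or 3-channel inputs")


def modtr_forward(
    x: Array,
    translator: Callable[[Array], Array],    # h_vartheta^d (trainable)
    fusion: Callable[[Array, Array], Array],  # Phi (non-parametric)
    detector: Callable[[Array], object],      # f_theta (frozen)
):
    """Compute f_theta(Phi(h(x), x)), the quantity passed to L_det in Eq. (2)."""
    translated = translator(x)                        # (H, W, 3), RGB-like
    fused = fusion(translated, to_three_channels(x))  # still (H, W, 3)
    return detector(fused)
```

Any of the fusion functions discussed in this section can be passed as `fusion`; the detector only ever sees a 3-channel image.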

In addition, note that while the detection loss is used to update the translation network, the detector's weight vector \(\theta\) remains fixed. This constraint is consistent with the premise of this study, in which a pre-trained detector is available only on the server side and remains unaltered. An overview of the proposed approach is shown in Figure 2 (b).
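The key property of this setup, that the detection loss updates \(\vartheta\) while \(\theta\) stays fixed, can be illustrated with a toy model. This NumPy sketch is not the authors' training code: the "detector" is a fixed linear read-out `theta` over three channels, the "translator" is a per-pixel linear map `W` standing in for \(\vartheta\), and a squared error replaces the detection loss; `train_translator_only` is a hypothetical name. In PyTorch, the same effect is usually obtained by calling `requires_grad_(False)` on the detector's parameters and passing only the translator's parameters to the optimizer.

```python
import numpy as np


def train_translator_only(x, y, theta, steps=200, lr=0.1, seed=0):
    """Toy gradient descent that updates the translator W but never theta.

    x: (n, C) per-pixel inputs, y: (n,) targets, theta: (3,) frozen detector.
    Translator h(x) = x @ W maps C channels to 3; the detector scores
    s = h(x) @ theta; the loss is the mean squared error between s and y.
    """
    rng = np.random.default_rng(seed)
    W = rng.normal(scale=0.1, size=(x.shape[1], 3))  # trainable (vartheta)

    def loss_and_grad(W):
        residual = (x @ W) @ theta - y  # (n,)
        loss = float(np.mean(residual ** 2))
        # dL/dW = (2/n) * outer(x^T residual, theta); no gradient step for theta
        grad_W = (2.0 / len(y)) * np.outer(x.T @ residual, theta)
        return loss, grad_W

    initial_loss, _ = loss_and_grad(W)
    for _ in range(steps):
        _, grad_W = loss_and_grad(W)
        W = W - lr * grad_W  # only the translator weights change
    final_loss, _ = loss_and_grad(W)
    return W, initial_loss, final_loss
```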

Fusion strategy. As mentioned above, we use a non-parametric fusion of the intermediate representation \(h_\vartheta^d(x)\) and the original input \(x\) to simplify the learning process of the translation network. We investigate three straightforward non-parametric fusion functions inspired by previous work. The first is element-wise summation, commonly used in ResNets and diffusion models. The second is the element-wise product, also known as the Hadamard product, which is common in attention mechanisms and has been used to re-calibrate feature maps according to their importance [34]. The third is based on DenseFuse [35]; however, instead of applying it at the feature level, we apply it between the input and the output of the translation network.

a) ModTr\(_{+}\): The addition mechanism forwards the input modality and sums it with the output of the translation network. This residual connection acts as a regularizer, helping the network learn only the information the detector is missing. The operator amplifies pixel values where the translation output tends toward \(1\) and preserves the original information where it tends toward \(0\). This range of values results from our modification of the U-Net, whose last layer we replaced with a sigmoid so that the generated image stays closer to a real RGB-like image: \[\label{vbhapxid} \begin{align} \mathcal{L}_{\text{ModTr}_{+}}(x, \mathcal{Y}; \vartheta) & = \mathcal{L}_{det}[f_\theta\left(h_\vartheta^d(x)+x\right), \mathcal{Y}]. \end{align}\tag{3}\]

b) ModTr\(_{\odot}\): The Hadamard product-based fusion acts as a gating mechanism that filters or highlights information from the input image. Here, the output of the translation network serves as a weight map for the input, and the two are fused by pixel-wise multiplication, \(\odot\). Consequently, the translation network keeps information from the input where its output tends toward \(1\) and discards it where the output approaches \(0\). The translated output can therefore be interpreted as an attention map: \[\label{etzvlfgu} \begin{align} \mathcal{L}_{\text{ModTr}_{\odot}}(x, \mathcal{Y}; \vartheta) & = \mathcal{L}_{det}[f_\theta\left(h_\vartheta^d(x)\odot x\right), \mathcal{Y}]. \end{align}\tag{4}\]

c) ModTr\(_{\oplus}\): The third fusion mechanism draws inspiration from DenseFuse [35], which uses the relative importance of pixels as an attention mechanism over both the output and the input of the translation network. This mechanism computes a pixel-wise weighted average of the two, with softmax weights derived from their values. Implementation details for this operator can be found in [35]. The proposed loss function is then given by: \[\label{yxlkwvqo} \begin{align} \mathcal{L}_{\text{ModTr}_{\oplus}}(x, \mathcal{Y}; \vartheta) & = \mathcal{L}_{det}[f_\theta\left(\Phi(h_\vartheta^d(x), x)\right), \mathcal{Y}], \end{align}\tag{5}\] with \[\Phi(u,v)= \frac{u\odot e^{u} + v\odot e^{v}}{e^{u}+e^{v}},\] where the exponentials and the division are applied element-wise.
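The three fusion functions in Eqs. (3) to (5) are short enough to sketch together. This NumPy version assumes images normalized to [0, 1] in an (H, W, C) layout, with a single-channel input broadcast across the three translated channels; the function names are hypothetical. The softmax-weighted fusion follows the formula for \(\Phi\) in Eq. (5), with the first argument \(u = h_\vartheta^d(x)\) and the second \(v = x\), and may differ in detail from the DenseFuse implementation in [35].

```python
import numpy as np


def _check(translated: np.ndarray, x: np.ndarray) -> None:
    if translated.shape[-1] != 3 or x.shape[-1] not in (1, 3):
        raise ValueError("expected a 3-channel translation and a 1- or 3-channel input")


def fuse_sum(translated: np.ndarray, x: np.ndarray) -> np.ndarray:
    """ModTr_+ (Eq. 3): h(x) + x. With both terms in [0, 1], the result lies in [0, 2]."""
    _check(translated, x)
    return translated + x  # broadcasting repeats a 1-channel x over 3 channels


def fuse_product(translated: np.ndarray, x: np.ndarray) -> np.ndarray:
    """ModTr_odot (Eq. 4): h(x) * x. A gate in [0, 1] keeps or attenuates a non-negative x."""
    _check(translated, x)
    return translated * x


def fuse_softmax(translated: np.ndarray, x: np.ndarray) -> np.ndarray:
    """ModTr_oplus (Eq. 5): (u e^u + v e^v) / (e^u + e^v), with u = h(x) and v = x."""
    _check(translated, x)
    u, v = np.broadcast_arrays(translated.astype(float), x.astype(float))
    m = np.maximum(u, v)  # subtracting the max leaves the ratio unchanged and avoids overflow
    eu, ev = np.exp(u - m), np.exp(v - m)
    return (u * eu + v * ev) / (eu + ev)
```

The text does not say whether the output of the sum fusion is rescaled before reaching the detector, so the sketch leaves it unchanged.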

We deliberately use these simple non-parametric functions to ease optimization while keeping inference costs low.

4 Results and Discussion

4.1 Experimental Methodology

4.1.1 (a) Datasets:

LLVIP: The LLVIP dataset is a surveillance dataset composed of \(30,976\) images, of which \(24,050\) (\(12,025\) IR and \(12,025\) RGB paired images) are used for training and \(6,926\) (\(3,463\) IR and \(3,463\) RGB paired images) for testing, with only pedestrians annotated. FLIR ALIGNED: For FLIR, we used the sanitized and aligned paired sets provided by Zhang et al. [36], which contain \(10,284\) images: \(8,258\) for training (\(4,129\) IR and \(4,129\) RGB) and \(2,026\) for testing (\(1,013\) IR and \(1,013\) RGB). The FLIR images are captured by a camera mounted at the front of a car, with a resolution of \(640\times 512\) pixels. The dataset contains bicycle, dog, car, and person classes. Since the dog class has too few annotations and has been found inadequate for training [37], we removed it.

4.1.2 (b) Implementation details:

In our experiments, we used \(80\%\) of the training set for training and the rest for validation. All reported results are on the test set. As starting weights for the detectors, we used Torchvision models pre-trained on COCO [4]. For the U-Net translation network, we used PyTorch Segmentation Models [38] and replaced the last layer with a 3-channel (RGB-like) output followed by a sigmoid, so that the network performs translation, producing image-like values between \(0\) and \(1\), rather than traditional segmentation. ResNet34 is our default translation backbone; in the study on reducing parameters, we also explore other ResNet and MobileNet backbones. All the code is available on GitHub for reproducibility. To ensure fairness, we trained all detectors with the same library version and the same experimental design, i.e., data order, augmentations, etc. We trained with the PyTorch Lightning [39] framework, evaluated APs with TorchMetrics [40], and logged all experiments with WandB [41]. Additional AP metrics are reported in the supplementary material.
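The 80/20 train/validation split can be reproduced with a seeded permutation of item indices. This is a sketch under stated assumptions: the shuffling procedure and rounding rule are not specified in the text, and `train_val_split` is a hypothetical helper. For paired datasets such as LLVIP, the split should be drawn over image pairs so that the RGB and IR views of a scene fall in the same subset. With LLVIP's 12,025 training pairs, it yields 9,620 training and 2,405 validation items.

```python
import numpy as np


def train_val_split(n_items: int, train_fraction: float = 0.8, seed: int = 0):
    """Seeded random split of item indices into train and validation subsets."""
    if not 0.0 < train_fraction < 1.0:
        raise ValueError("train_fraction must be in (0, 1)")
    rng = np.random.default_rng(seed)  # one seed per run, e.g. one of three seeds
    perm = rng.permutation(n_items)
    n_train = int(round(n_items * train_fraction))  # rounding rule is an assumption
    return perm[:n_train], perm[n_train:]
```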

4.2 Comparison with Translation Approaches

In this section, we compare ModTr with image-to-image translation methods that use different learning strategies. These include basic image processing [24]; reconstruction-based methods such as CycleGAN [42], CUT [43], and FastCUT [43], the latter two of which use contrastive learning; and HalluciDet [6], which uses a detection-based loss. As outlined in Table 1, we evaluate the methods by their final detection performance with three commonly used detectors: FCOS, RetinaNet, and FasterRCNN. The reported results are computed on the infrared test set and averaged over three seeds, which mitigates the impact of randomness across runs and training/validation splits.

For each method, we also indicate its dependency on source data (RGB) and on ground-truth bounding boxes for the IR images (Box). Reconstruction-based methods do not require box annotations on IR images, but their translations are not optimized for detection. HalluciDet and ModTr, in contrast, require box annotations to adjust the input image in a discriminative manner. The main difference between HalluciDet and ModTr is the use of source images: HalluciDet requires RGB images for an initial fine-tuning of the detector, while our approach works without it by reusing the detector's zero-shot knowledge.

The proposed ModTr method is robust across the three detectors and consistently improves results on two datasets: LLVIP [44] and FLIR aligned [45]. Note that the algorithms in Table 1 use different forms of supervision. For instance, CycleGAN employs an adversarial mechanism with both RGB and infrared modalities in an unpaired setting. Similarly, CUT and FastCUT operate on positive and negative patches in an unpaired setting. In contrast, HalluciDet does not require both modalities simultaneously during training and, like our approach, uses a detection loss. Our approach requires only examples from the target modality. In this section, we report the performance of our best variant, \(\text{ModTr}_{\odot}\); additional results are provided in the supplementary material.

Test Set IR (Dataset: LLVIP)

| Image translation | RGB | Box | FCOS | RetinaNet | FasterRCNN |
|---|---|---|---|---|---|
| Histogram Equal. [24] | | | 31.69 ± 0.00 | 33.16 ± 0.00 | 38.33 ± 0.02 |
| CycleGAN [42] | ✔ | | 23.85 ± 0.76 | 23.34 ± 0.53 | 26.54 ± 1.20 |
| CUT [43] | ✔ | | 14.30 ± 2.25 | 13.12 ± 2.07 | 14.78 ± 1.82 |
| FastCUT [43] | ✔ | | 19.39 ± 1.52 | 18.11 ± 0.79 | 22.91 ± 1.68 |
| HalluciDet [6] | ✔ | ✔ | 28.00 ± 0.92 | 19.95 ± 2.01 | 57.78 ± 0.97 |
| ModTr\(_{\odot}\) (ours) | | ✔ | 57.63 ± 0.66 | 54.83 ± 0.61 | 57.97 ± 0.85 |

Test Set IR (Dataset: FLIR)

| Image translation | RGB | Box | FCOS | RetinaNet | FasterRCNN |
|---|---|---|---|---|---|
| Histogram Equal. [24] | | | 22.76 ± 0.00 | 23.06 ± 0.00 | 24.61 ± 0.01 |
| CycleGAN [42] | ✔ | | 23.92 ± 0.97 | 23.71 ± 0.70 | 26.85 ± 1.23 |
| CUT [43] | ✔ | | 18.16 ± 0.75 | 17.84 ± 0.75 | 20.29 ± 0.48 |
| FastCUT [43] | ✔ | | 24.02 ± 2.37 | 22.00 ± 2.73 | 26.68 ± 2.59 |
| HalluciDet [6] | ✔ | ✔ | 23.74 ± 2.09 | 22.29 ± 0.45 | 29.91 ± 1.18 |
| ModTr\(_{\odot}\) (ours) | | ✔ | 35.49 ± 0.94 | 34.27 ± 0.27 | 37.21 ± 0.46 |

As shown in Table 1, ModTr significantly improved detection performance on the LLVIP dataset. Specifically, it surpassed HalluciDet, the other detection-driven method, by more than \(29\) AP with both the FCOS and RetinaNet architectures, while obtaining comparable results with FasterRCNN. This gap can be attributed to the loss of prior knowledge inherent in HalluciDet, which requires fine-tuning the detector on the source modality beforehand. Performance also improved on the FLIR dataset, although its inherent challenges, such as the constantly changing background captured from a moving car, make detection more difficult. Nonetheless, our proposal consistently improves over HalluciDet, by more than \(11\) AP for FCOS and RetinaNet and by over \(7\) AP for FasterRCNN. We also observed improvements in AP\(_{50}\) and AP\(_{75}\), which are reported in the supplementary material due to space constraints. These results indicate that our proposal can effectively translate images from the original IR modality into an RGB-like representation close enough to the source data to be usable by the detector.
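The margins quoted above can be re-derived from the mean AP values in Table 1 with a few lines of Python. This is only an arithmetic check on the reported means: it ignores the standard deviations across seeds, and it compares against HalluciDet rather than against the strongest competitor for every detector (histogram equalization, for example, scores higher than HalluciDet with FCOS and RetinaNet on LLVIP). The dictionary and function names are illustrative.

```python
# Mean AP on the IR test sets, copied from Table 1.
MODTR = {
    "LLVIP": {"FCOS": 57.63, "RetinaNet": 54.83, "FasterRCNN": 57.97},
    "FLIR": {"FCOS": 35.49, "RetinaNet": 34.27, "FasterRCNN": 37.21},
}
HALLUCIDET = {
    "LLVIP": {"FCOS": 28.00, "RetinaNet": 19.95, "FasterRCNN": 57.78},
    "FLIR": {"FCOS": 23.74, "RetinaNet": 22.29, "FasterRCNN": 29.91},
}


def ap_margins(ours, baseline):
    """AP difference (ours minus baseline) per dataset and detector."""
    return {
        ds: {det: ours[ds][det] - baseline[ds][det] for det in ours[ds]}
        for ds in ours
    }


margins = ap_margins(MODTR, HALLUCIDET)
for ds, per_det in margins.items():
    print(ds, {det: round(gap, 2) for det, gap in per_det.items()})
```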

4.3 Fine-Tuning of the Translator and Detector

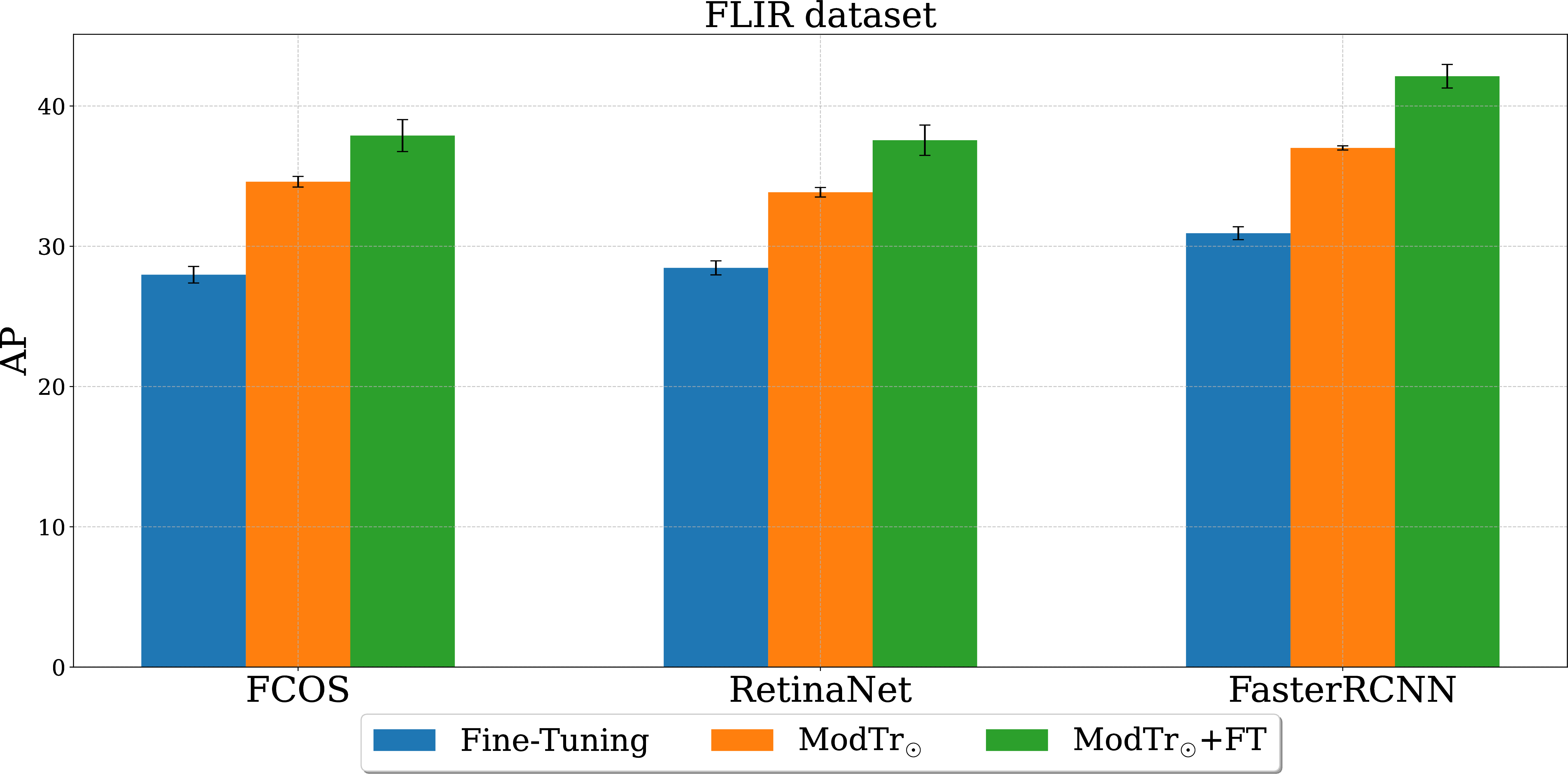

In the following ablation study, we further show that the proposed approach can also be trained jointly with the detector in a full fine-tuning setting, which does not preserve the detector's knowledge but significantly improves performance. We compare the three proposed fusion functions with standard fine-tuning of the detector.

As shown in Table 2, for each fusion function we include variants with and without fine-tuning. On LLVIP, all fusion variants perform comparably to standard fine-tuning across the three detectors (FCOS, RetinaNet, and FasterRCNN). On FLIR, all fusion techniques improve over standard detector fine-tuning, both with and without fine-tuning of the detector. Overall, our approach matches or surpasses standard fine-tuning while preserving the detector's performance on the original modality. Our method also improves the localization-oriented metrics AP\(_{50}\) and AP\(_{75}\) compared to fine-tuning alone; detailed results are provided in the supplementary material.

Test Set IR (Dataset: LLVIP)

| Method | FCOS | RetinaNet | FasterRCNN |
|---|---|---|---|
| Fine-Tuning (FT) | 57.37 ± 2.19 | 53.79 ± 1.79 | 59.62 ± 1.23 |
| ModTr\(_{+}\) | 56.44 ± 0.75 | 53.18 ± 1.03 | 57.14 ± 0.50 |
| ModTr\(_{\oplus}\) | 57.01 ± 0.71 | 54.43 ± 0.35 | 56.95 ± 0.37 |
| ModTr\(_{\odot}\) | 57.63 ± 0.66 | 54.83 ± 0.61 | 57.97 ± 0.85 |

Test Set IR (Dataset: FLIR)

| Method | FCOS | RetinaNet | FasterRCNN |
|---|---|---|---|
| Fine-Tuning (FT) | 27.97 ± 0.59 | 28.46 ± 0.50 | 30.93 ± 0.46 |
| ModTr\(_{+}\) | 34.63 ± 0.24 | 33.70 ± 0.59 | 37.09 ± 0.74 |
| ModTr\(_{\oplus}\) | 34.94 ± 0.52 | 33.72 ± 0.22 | 37.16 ± 0.47 |
| ModTr\(_{\odot}\) | 34.60 ± 0.38 | 33.85 ± 0.34 | 37.01 ± 0.15 |

4.4 Different Backbones for ModTr

Here, we evaluate the ModTr architecture by examining the trade-off between performance and parameter count. Increasing the number of parameters can improve performance, but this relationship is not strictly linear, and models with fewer parameters can still perform well. For example, MobileNet\(_{v2}\), which has fewer parameters than ResNet\(_{18}\), sometimes outperformed it. This trade-off highlights the versatility of the approach, which can be deployed with MobileNet-based architectures on low-cost devices. As shown in Table 3, we reduced the number of translator parameters from the default \(24.4\)M (ResNet\(_{34}\)) to \(6.6\)M with MobileNet\(_{v2}\) while maintaining similar performance. For instance, on LLVIP, MobileNet\(_{v2}\) achieved a mean AP of \(56.15\), comparable to the \(56.35\) AP of ResNet\(_{34}\) (other AP metrics and detectors are reported in the supplementary material).

This opens up new possibilities, particularly in scenarios where using one translation network and one detector (e.g., one ModTr and one detector for thermal/RGB) is advantageous. For FasterRCNN, this setup requires a total of \(44.9\)M parameters, compared to \(83.6\)M when employing two detectors, one for each modality. Similar reductions were observed for FCOS (from \(66.4\)M to \(36.3\)M) and RetinaNet (from \(68\)M to \(37.1\)M) when using one detector for both modalities, which preserves the knowledge of the previous modality while incorporating a new one. These numbers are based on MobileNet\(_{v3s}\), which strikes a balance between performance and the number of parameters, making it suitable for memory-restricted systems. The complete evaluations for FCOS and RetinaNet are included in the supplementary material.
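The parameter counts above follow from simple bookkeeping. A minimal sketch, assuming (as in the text) that RGB inputs go directly to the frozen detector and each additional modality gets its own translator of equal size; `parameter_budget` is a hypothetical helper. The single-detector sizes for FCOS (33.2M) and RetinaNet (34.0M) used in the check are obtained by halving the two-detector totals quoted above. In PyTorch, such counts are usually obtained with `sum(p.numel() for p in model.parameters())`.

```python
def parameter_budget(detector_m: float, translator_m: float, n_modalities: int):
    """Parameters (in millions) needed to serve n_modalities, RGB included.

    separate: one detector per modality (N-Detectors, Figure 2 (a)).
    shared:   one frozen RGB detector plus one translator per non-RGB modality
              (N-ModTr-1-Detector, Figure 2 (b)); RGB skips the translator.
    """
    if n_modalities < 1:
        raise ValueError("need at least one modality")
    separate = n_modalities * detector_m
    shared = detector_m + (n_modalities - 1) * translator_m
    return separate, shared


# Faster R-CNN (41.8M) with a MobileNet_v3s translator (3.1M), RGB + IR
print(parameter_budget(41.8, 3.1, 2))
```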

| Method | Params. | AP\({}\uparrow\) |
|---|---|---|
| Test Set IR (Dataset: LLVIP) | | |
| Faster R-CNN | 41.8 M | |
| MobileNet\(_{v3s}\) | + 3.1 M | 54.51 ± 0.28 |
| MobileNet\(_{v2}\) | + 6.6 M | 56.15 ± 0.51 |
| ResNet\(_{18}\) | + 14.3 M | 55.53 ± 1.14 |
| ResNet\(_{34}\) | + 24.4 M | 56.35 ± 0.65 |
| Test Set IR (Dataset: FLIR) | | |
| Faster R-CNN | 41.8 M | |
| MobileNet\(_{v3s}\) | + 3.1 M | 32.06 ± 0.75 |
| MobileNet\(_{v2}\) | + 6.6 M | 36.77 ± 0.67 |
| ResNet\(_{18}\) | + 14.3 M | 36.68 ± 0.22 |
| ResNet\(_{34}\) | + 24.4 M | 37.21 ± 0.46 |
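One way to act on this trade-off is a simple selection rule: pick the translation backbone with the fewest parameters whose AP is within a chosen tolerance of the best one. The sketch below is a hypothetical helper, not part of the ModTr code base; the (parameters, AP) pairs are the Faster R-CNN values from Table 3, and the tolerance of \(0.5\) AP is an arbitrary assumption.

```python
# Hypothetical helper: choose the cheapest ModTr translation backbone whose AP
# is within `tolerance` AP points of the best backbone.
# Values: (added parameters in millions, mean AP), Faster R-CNN, Table 3.
LLVIP = {
    "MobileNet_v3s": (3.1, 54.51),
    "MobileNet_v2": (6.6, 56.15),
    "ResNet_18": (14.3, 55.53),
    "ResNet_34": (24.4, 56.35),
}
FLIR = {
    "MobileNet_v3s": (3.1, 32.06),
    "MobileNet_v2": (6.6, 36.77),
    "ResNet_18": (14.3, 36.68),
    "ResNet_34": (24.4, 37.21),
}


def cheapest_within(results, tolerance):
    """Return the backbone with the fewest parameters whose AP is at most
    `tolerance` points below the best AP in `results`."""
    best_ap = max(ap for _, ap in results.values())
    candidates = [
        (params, name)
        for name, (params, ap) in results.items()
        if best_ap - ap <= tolerance
    ]
    return min(candidates)[1]  # smallest parameter count wins


for dataset, table in (("LLVIP", LLVIP), ("FLIR", FLIR)):
    choice = cheapest_within(table, tolerance=0.5)
    params = table[choice][0]
    saving = 1 - params / table["ResNet_34"][0]
    print(f"{dataset}: {choice} ({params} M, {saving:.0%} fewer params than ResNet_34)")
```

With a tighter tolerance the rule falls back to ResNet\(_{34}\); with a looser one it picks MobileNet\(_{v3s}\), which is the setting used for the memory estimates above.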

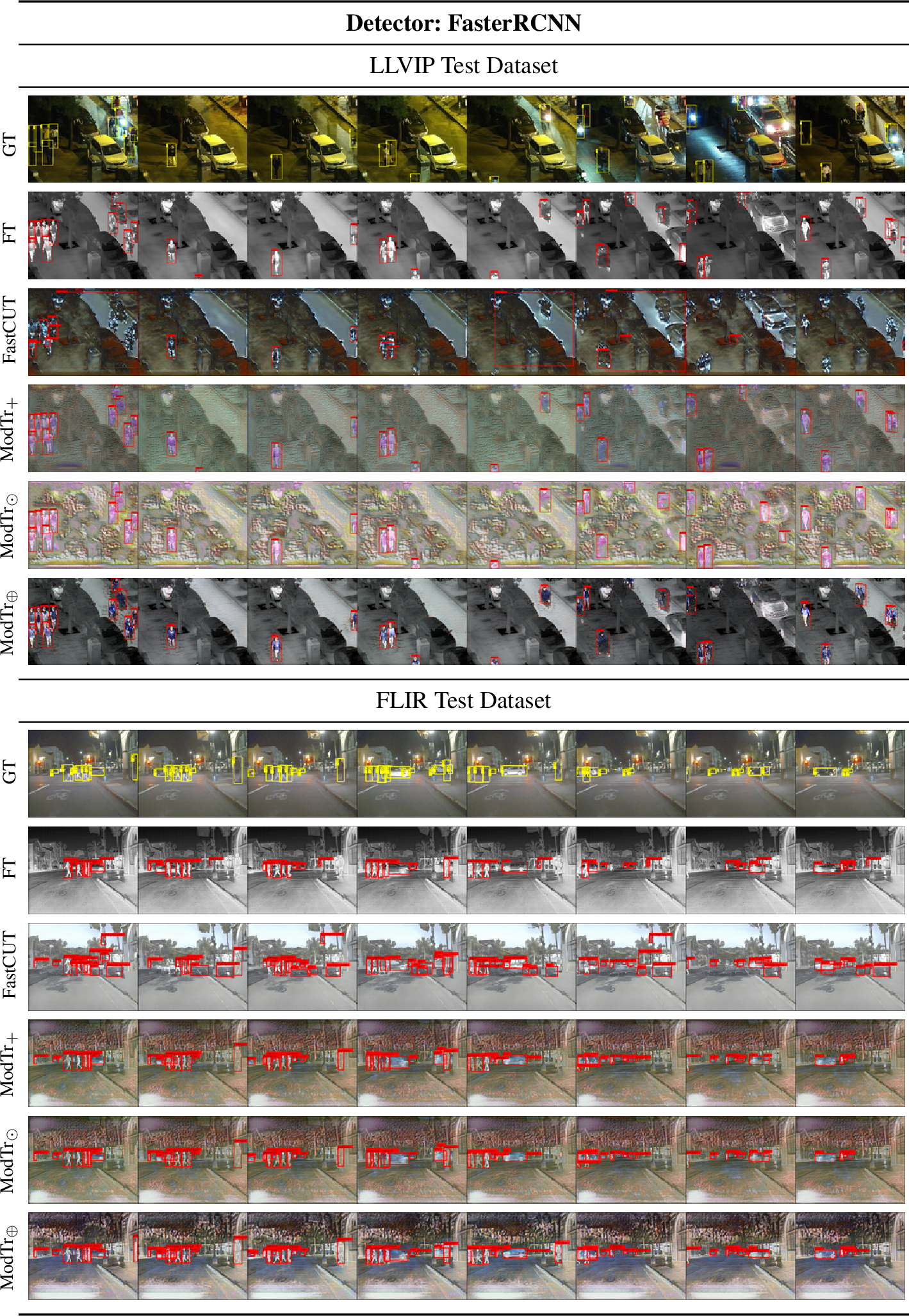

Figure 3: Illustration of a sequence of \(8\) images from the LLVIP and FLIR datasets for Faster R-CNN. For each dataset, the first row shows the RGB modality, followed by the IR modality, FastCUT, and the different representations created by ModTr and its variants. For visualizations with other detectors, see the supplementary material.

4.5 Knowledge Preservation through Input Modality Translation

In this section, we examine how various adaptation paradigms can solve the final task while preserving the detector's intrinsic knowledge. We compare our proposed method, ModTr, with two fine-tuning baselines. The first baseline consists of N detectors, each fine-tuned individually on one target modality. The second baseline is a single detector trained jointly on all modalities with balanced sampling.
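The balanced sampling used by the single-detector baseline can be sketched as follows. This is a minimal illustration under an assumption: "balanced" is taken to mean that every mini-batch contains the same number of samples from each modality, drawn with replacement. The paper does not specify the exact sampler, and `balanced_batches` is a hypothetical name.

```python
import random
from collections import Counter


def balanced_batches(datasets, batch_size, n_batches, seed=0):
    """Yield mini-batches with an equal number of samples per modality.

    `datasets` maps a modality name (e.g. "rgb", "ir") to a list of samples.
    Samples are drawn with replacement, so a small modality is oversampled
    instead of being outnumbered by a large one.
    """
    rng = random.Random(seed)
    names = sorted(datasets)
    if batch_size % len(names) != 0:
        raise ValueError("batch_size must be a multiple of the number of modalities")
    per_modality = batch_size // len(names)
    for _ in range(n_batches):
        batch = [(name, rng.choice(datasets[name]))
                 for name in names
                 for _ in range(per_modality)]
        rng.shuffle(batch)  # mix modalities inside the batch
        yield batch


# Usage: 1000 RGB images vs. only 50 IR images (toy identifiers).
data = {"rgb": [f"rgb_{i}" for i in range(1000)],
        "ir": [f"ir_{i}" for i in range(50)]}
counts = Counter(name for batch in balanced_batches(data, 8, 100) for name, _ in batch)
print(counts)  # each modality contributes 400 of the 800 samples
```

Without such balancing, a detector trained on the union of datasets would see the larger modality far more often.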

The table below reports the final performance (AP). While the N-detector and single-detector baselines perform similarly to each other, ModTr stands out as the only method that preserves knowledge while remaining competitive on LLVIP and achieving the best results on FLIR. Specifically, both fine-tuning baselines destroy the detector's zero-shot capability: their AP on COCO drops below \(0.5\). This trend is consistent across detectors and datasets.

| Detector | Dataset | N-Detectors | 1-Detector | N-ModTr-1-Det. |
|---|---|---|---|---|
| FCOS | LLVIP | 57.37 ± 2.19 | 58.55 ± 0.89 | 57.63 ± 0.66 |
| | FLIR | 27.97 ± 0.59 | 26.70 ± 0.48 | 35.49 ± 0.94 |
| | COCO | 00.18 ± 0.01 | 00.33 ± 0.04 | 38.41 ± 0.00 |
| RetinaNet | LLVIP | 53.79 ± 1.79 | 53.26 ± 3.02 | 54.83 ± 0.61 |
| | FLIR | 28.46 ± 0.50 | 25.19 ± 0.72 | 34.27 ± 0.27 |
| | COCO | 00.22 ± 0.02 | 00.29 ± 0.01 | 35.48 ± 0.00 |
| FasterRCNN | LLVIP | 59.62 ± 1.23 | 62.50 ± 1.29 | 57.97 ± 0.85 |
| | FLIR | 30.93 ± 0.46 | 28.90 ± 0.33 | 37.21 ± 0.46 |
| | COCO | 00.31 ± 0.01 | 00.40 ± 0.00 | 39.78 ± 0.00 |
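The size of the forgetting effect is easier to see as a fraction of the original COCO performance. The sketch below assumes that, because ModTr leaves the detector untouched, the N-ModTr-1-Det. COCO column (with zero standard deviation) equals the original detector's COCO AP and can serve as the reference; the values are the means from the table above.

```python
# Mean COCO AP from the knowledge-preservation table.
# Assumption: the N-ModTr-1-Det. column is the unaltered detector's COCO AP,
# so it is used as the 100% reference.
COCO_AP = {
    "FCOS": {"N-Detectors": 0.18, "1-Detector": 0.33, "N-ModTr-1-Det.": 38.41},
    "RetinaNet": {"N-Detectors": 0.22, "1-Detector": 0.29, "N-ModTr-1-Det.": 35.48},
    "FasterRCNN": {"N-Detectors": 0.31, "1-Detector": 0.40, "N-ModTr-1-Det.": 39.78},
}


def retained(detector):
    """Fraction of the original COCO AP kept by each adaptation strategy."""
    reference = COCO_AP[detector]["N-ModTr-1-Det."]
    return {strategy: ap / reference for strategy, ap in COCO_AP[detector].items()}


for det in COCO_AP:
    shares = ", ".join(f"{k}: {v:.1%}" for k, v in retained(det).items())
    print(f"{det}: {shares}")
```

Both fine-tuning baselines keep roughly 1% or less of the original COCO AP with every detector.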

4.6 Visualization of ModTr Translated Images

In Figure 3, we present qualitative results for LLVIP and FLIR, alongside a comparison with fine-tuning. Panel a) displays the ground-truth RGB, while b) shows the results of fine-tuning on IR. Then, c) presents ModTr with addition-based fusion, d) ModTr with attention-based fusion, and e) ModTr with Hadamard product-based fusion, for each dataset with the Faster R-CNN detector. Due to space constraints, additional visualizations for the other detectors and competing methods are provided in the supplementary material. Notably, the detector fine-tuned on IR produces some false positives, especially when detected objects overlap, which our method mitigates. As described, the product-based fusion yields a darker image, in contrast to the additive character of the other fusion strategies. The supplementary material provides further insights into the visualizations, revealing how our method blurs or removes objects that do not belong to the target classes, thereby making detection easier for the OD. Although the resulting intermediate representations are not visually pleasing, they are more effective at conveying the information the OD needs. We also experimented with additional loss terms aimed at improving the visual quality of the image, but they did not conclusively improve detection performance.
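Why product-based fusion looks darker can be shown with a few pixel values. The sketch below covers only the addition and Hadamard-product fusions (attention-based fusion is not sketched because its details are not given here). It assumes that input intensities and the translator output both lie in \([0, 1]\) and that fused values are clipped to that range; plain lists stand in for image tensors, and all names are hypothetical.

```python
def clip(value, low=0.0, high=1.0):
    """Keep a fused intensity inside the valid [low, high] range (assumed)."""
    return max(low, min(high, value))


def additive_fusion(image, translation):
    """Addition-based fusion: pixel-wise sum of input and translator output."""
    return [clip(x + t) for x, t in zip(image, translation)]


def product_fusion(image, translation):
    """Hadamard product-based fusion: pixel-wise product of input and output."""
    return [clip(x * t) for x, t in zip(image, translation)]


def mean(values):
    return sum(values) / len(values)


ir = [0.2, 0.5, 0.9]  # toy IR intensities in [0, 1]
t = [0.5, 0.8, 0.3]   # toy translator output in [0, 1]
print(f"input    mean: {mean(ir):.3f}")
print(f"additive mean: {mean(additive_fusion(ir, t)):.3f}")
print(f"product  mean: {mean(product_fusion(ir, t)):.3f}")
```

Multiplying by a factor in \([0, 1]\) can never increase a pixel, whereas adding a non-negative term can never decrease it, which matches the darker versus brighter appearance described above.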

4.7 Fine-tuning ModTr and the detector

The main reason to use ModTr is to avoid fine-tuning the detector for a specific task, so that the detector preserves its knowledge and can be used for multiple modalities. In this section, however, we examine what happens if ModTr and the detector weights are learned jointly. Results are reported in Figure 4. Fine-tuning the detector together with ModTr can further boost performance. Thus, ModTr could also be used to improve the fine-tuning of a detector at a small additional computational cost.
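The difference between the two training regimes is which parameters receive gradient updates. The toy sketch below is not the ModTr training code: a scalar "translator" weight and a scalar "detector" weight stand in for the two networks, and a squared error stands in for the detection loss. With `joint=False` only the translator is updated (the ModTr setting); with `joint=True` both are updated (the ModTr + FT setting).

```python
def train(x, target, w_t, w_d, joint, lr=0.05, steps=200):
    """Toy gradient descent on a scalar translator w_t and detector w_d.

    Prediction: y = w_d * (w_t * x); loss: (y - target) ** 2, a stand-in for
    the detection loss. With joint=False only w_t is updated (detector frozen);
    with joint=True both weights are updated.
    """
    for _ in range(steps):
        z = w_t * x                  # translated input
        err = w_d * z - target       # residual of the detector output
        grad_t = 2 * err * w_d * x   # dL/dw_t
        grad_d = 2 * err * z         # dL/dw_d
        w_t -= lr * grad_t
        if joint:
            w_d -= lr * grad_d
    return w_t, w_d, (w_d * w_t * x - target) ** 2


modtr_only = train(x=1.0, target=2.0, w_t=1.0, w_d=0.5, joint=False)
modtr_ft = train(x=1.0, target=2.0, w_t=1.0, w_d=0.5, joint=True)
print("ModTr (detector frozen):", modtr_only)
print("ModTr + FT (joint):     ", modtr_ft)
```

In the frozen regime the detector weight is returned exactly as it was initialized, which is what lets the original detector keep serving its original modality; the joint regime trades that property for extra capacity.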

Figure 4: Comparison between standard fine-tuning and fine-tuning ModTr jointly with the detector on the FLIR dataset for three detectors (FCOS, RetinaNet, and FasterRCNN). Blue: fine-tuning; orange: ModTr\(_{\odot}\); green: ModTr\(_{\odot}+\text{FT}\).

5 Conclusion

In this work, we presented ModTr, a novel method for adapting ODs without changing their parameters. The proposed approach performs well in different settings and outperforms powerful image-to-image translation models and previous competitors. ModTr was evaluated on different tasks, such as detection based on image translation, comparison with traditional fine-tuning, and incremental modality application. Experimental results showed the high performance and versatility of our method. Our approach also preserves the full knowledge of the detector, which makes it possible to use the translation network as a node that changes the modality for an unaltered detector. This is much more convenient, in terms of flexibility and computation, than having a specialized OD for each modality. Future work will expand the knowledge of the OD by incorporating additional text embeddings to help ModTr perform few-shot and zero-shot learning in the image translation space, targeting detection.

Acknowledgments: This work was supported by Distech Controls Inc., the Natural Sciences and Engineering Research Council of Canada, the Digital Research Alliance of Canada, and MITACS.

In this supplementary material, we provide additional information to reproduce our work. The source code is provided alongside the supplementary material, and the official repository will also be made available. This supplementary material is organized as follows: detailed diagrams (Section 6); quantitative results (Section 7), which provide additional numerical results in terms of APs; and qualitative results (Section 8), which provide additional visualizations.

6 Detailed Diagrams

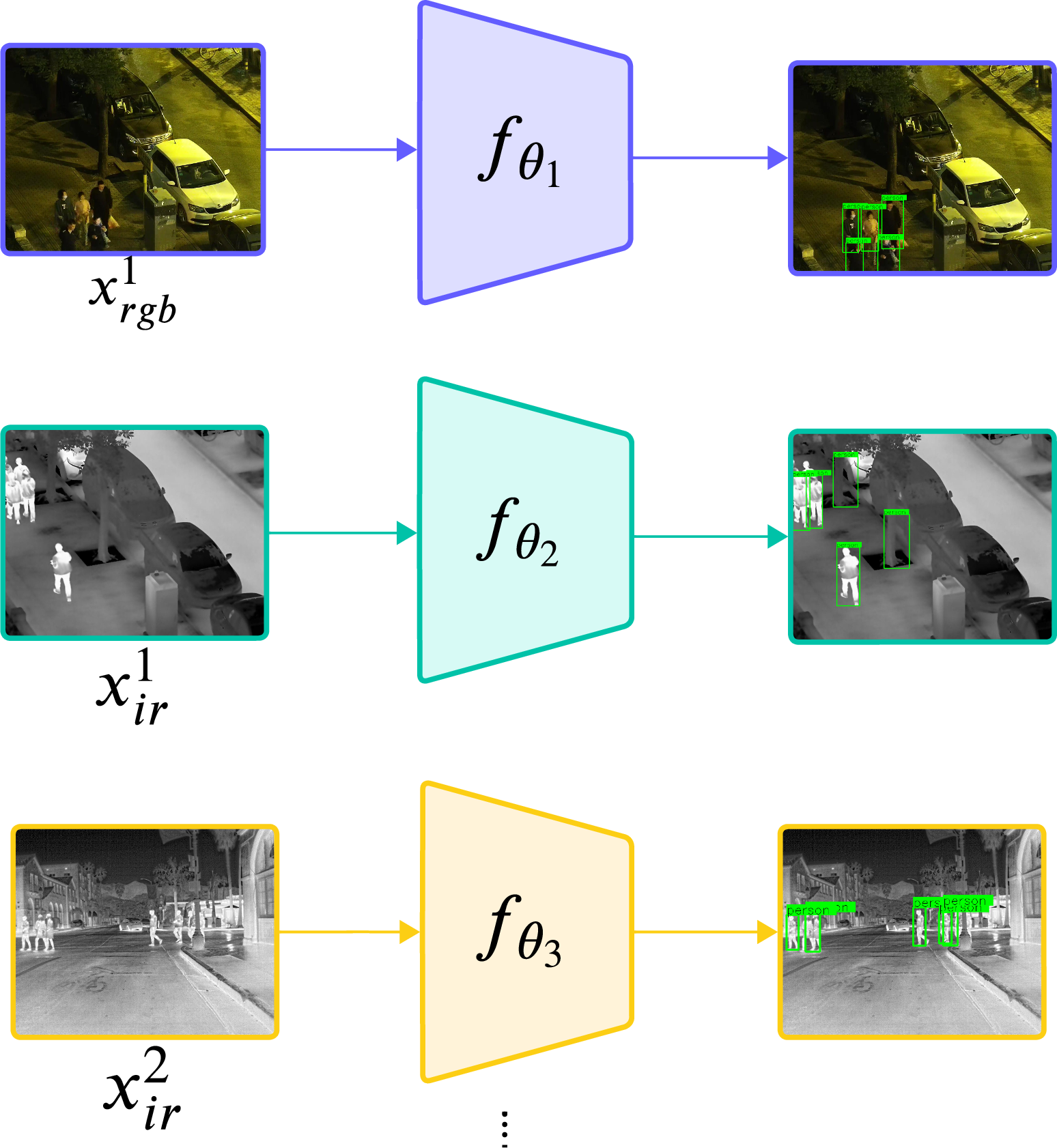

In this section, we expand on the diagrams provided in the main manuscript. Figure 5 describes the traditional approach of employing a specialized detector for each modality. For example, we depict an RGB detector (highlighted in purple) and two IR detectors (highlighted in green and yellow), each trained on a different dataset.

Figure 5: The simplest approach is to use a different detector adapted to each modality. This can lead to high accuracy but requires storing multiple models in memory. In purple is the RGB detector, in green is an IR detector for one dataset, and in yellow is another IR detector for a second IR dataset.

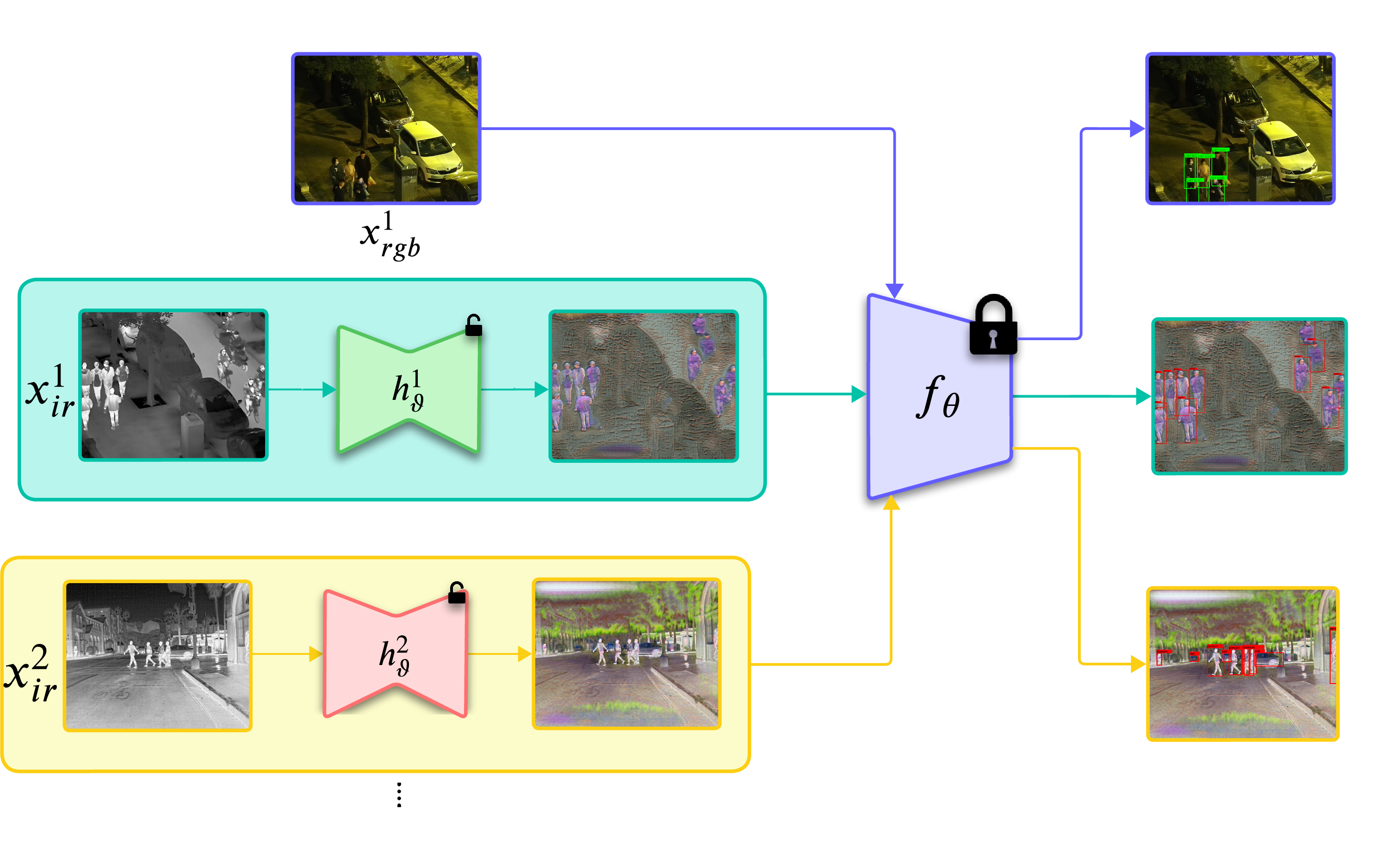

Figure 6 illustrates the proposed ModTr. This method uses a single pre-trained detector, typically trained on the more prevalent data (i.e., RGB), and adapts the input of each new modality through the input adaptation network. For clarity, the RGB modality (along with the RGB detector) is depicted in purple, while a ModTr adaptation block is shown in green for one IR modality and in red for another IR modality with a different distribution.
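The deployment pattern in Figure 6 can be sketched as a small routing layer in front of one shared detector: images in the detector's native modality go straight through, and every other modality is first passed through its own ModTr block. The sketch below is an illustration only; `make_service`, the modality keys, and the toy detector and translators are hypothetical stand-ins for real networks.

```python
from typing import Callable, Dict, List

Image = List[float]      # toy stand-in for an image tensor
Detections = List[str]   # toy stand-in for predicted boxes


def make_service(detector: Callable[[Image], Detections],
                 translators: Dict[str, Callable[[Image], Image]],
                 native: str = "rgb") -> Callable[[Image, str], Detections]:
    """Build a query function around one shared, unaltered detector.

    Images of the detector's native modality go straight to the detector;
    any other modality is first passed through its registered translator.
    """
    def query(image: Image, modality: str) -> Detections:
        if modality != native:
            if modality not in translators:
                raise KeyError(f"no translator registered for {modality!r}")
            image = translators[modality](image)
        return detector(image)

    return query


# Usage with toy components: the "detector" just reports mean intensity.
def toy_detector(image: Image) -> Detections:
    return [f"mean={sum(image) / len(image):.2f}"]


service = make_service(toy_detector, {
    "ir_llvip": lambda img: [min(1.0, 2 * p) for p in img],  # hypothetical translator A
    "ir_flir": lambda img: [0.5 * p for p in img],           # hypothetical translator B
})
print(service([0.2, 0.4], "rgb"), service([0.2, 0.4], "ir_llvip"), service([0.2, 0.4], "ir_flir"))
```

Adding a modality only means registering another translator; the detector itself is never modified or duplicated.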

Figure 6: Our proposed solution is based on using a single pre-trained model normally trained on the more abundant data (RGB) and then adapting the input through our ModTr block.

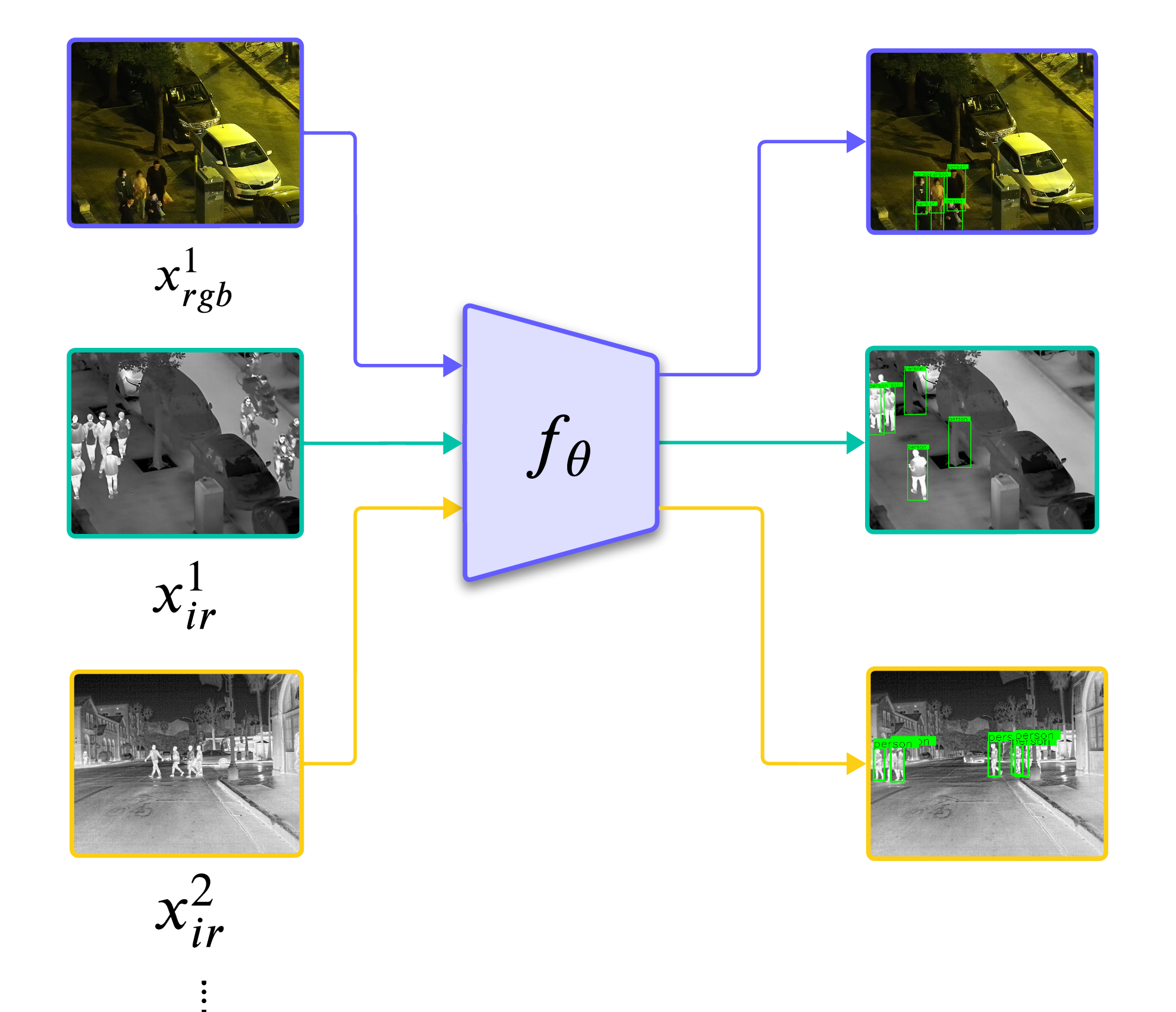

Lastly, Figure 7 depicts a detector trained on the joint distribution of all modalities. The detector, shown in purple, is trained with all available modalities. While this approach enables the model to learn shared features, it may not be optimal. Nevertheless, it incurs a lower memory cost than employing one detector per modality.

Figure 7: A single detector is trained on all modalities jointly. This allows the use of a single model but requires access to all modalities jointly, which is often not possible, especially when dealing with large pre-trained models.

7 Quantitative Results

In this section, we provide further details on the different AP metrics for the experiments in the main manuscript. In the tables below, we compare the best ModTr with different image translation strategies. Some of the competitors use RGB data for the translation, while others use bounding box annotations. ModTr outperforms the competitors across detectors and AP metrics, indicating gains in both classification and localization. For instance, on LLVIP with FCOS, our method improves AP\(_{50}\) by approximately \(28\) points over HalluciDet, the second-best method in that metric, with a similar trend for RetinaNet (approximately \(31\) points). The gap is narrower with FasterRCNN, with an improvement of only around \(2\) AP\(_{50}\). On FLIR, the gaps over HalluciDet are smaller for FCOS and RetinaNet but remain consistent across all APs and detectors: approximately \(6\) AP\(_{50}\) for FasterRCNN, \(14\) AP\(_{50}\) for RetinaNet, and \(12\) AP\(_{50}\) for FCOS. In terms of localization (AP) on FLIR, the improvements over HalluciDet are around \(7\) AP for FasterRCNN and \(12\) AP for both RetinaNet and FCOS. The improvements over methods that do not rely on bounding boxes are also substantial; for instance, on FLIR, ModTr exceeds Histogram Equalization, CycleGAN, CUT, and FastCUT by more than \(10\) AP with every detector.

Test Set IR (Dataset: LLVIP)

| Image translation | RGB | Box | FCOS AP\(_{50}\uparrow\) | FCOS AP\(_{75}\uparrow\) | FCOS AP\({}\uparrow\) | RetinaNet AP\(_{50}\uparrow\) | RetinaNet AP\(_{75}\uparrow\) | RetinaNet AP\({}\uparrow\) | FasterRCNN AP\(_{50}\uparrow\) | FasterRCNN AP\(_{75}\uparrow\) | FasterRCNN AP\({}\uparrow\) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Histogram Equal. [24] | | | 53.74 ± 0.00 | 32.57 ± 0.00 | 31.69 ± 0.00 | 59.93 ± 0.00 | 33.04 ± 0.00 | 33.16 ± 0.00 | 65.70 ± 0.04 | 39.02 ± 0.11 | 38.33 ± 0.02 |
| CycleGAN [42] | ✔ | | 41.72 ± 1.63 | 23.83 ± 0.78 | 23.85 ± 0.76 | 43.17 ± 1.52 | 22.34 ± 0.88 | 23.34 ± 0.53 | 45.44 ± 1.89 | 26.82 ± 1.59 | 26.54 ± 1.20 |
| CUT [43] | ✔ | | 26.48 ± 2.88 | 13.68 ± 2.72 | 14.30 ± 2.25 | 25.64 ± 3.77 | 11.74 ± 2.33 | 13.12 ± 2.07 | 27.96 ± 1.70 | 13.59 ± 2.77 | 14.78 ± 1.82 |
| FastCUT [43] | ✔ | | 34.92 ± 3.63 | 19.07 ± 1.33 | 19.39 ± 1.52 | 35.73 ± 2.53 | 16.36 ± 0.44 | 18.11 ± 0.79 | 42.09 ± 3.51 | 21.44 ± 1.57 | 22.91 ± 1.68 |
| HalluciDet [6] | ✔ | ✔ | 64.17 ± 0.61 | 18.80 ± 1.45 | 28.00 ± 0.92 | 60.38 ± 3.59 | 06.75 ± 1.38 | 19.95 ± 2.01 | 90.07 ± 0.72 | 51.23 ± 1.81 | 57.78 ± 0.97 |
| ModTr\(_{\odot}\) (ours) | | ✔ | 92.04 ± 0.47 | 63.84 ± 0.93 | 57.63 ± 0.66 | 91.56 ± 0.64 | 59.49 ± 1.11 | 54.83 ± 0.61 | 91.82 ± 0.49 | 62.51 ± 0.87 | 57.97 ± 0.85 |

Test Set IR (Dataset: FLIR)

| Image translation | RGB | Box | FCOS AP\(_{50}\uparrow\) | FCOS AP\(_{75}\uparrow\) | FCOS AP\({}\uparrow\) | RetinaNet AP\(_{50}\uparrow\) | RetinaNet AP\(_{75}\uparrow\) | RetinaNet AP\({}\uparrow\) | FasterRCNN AP\(_{50}\uparrow\) | FasterRCNN AP\(_{75}\uparrow\) | FasterRCNN AP\({}\uparrow\) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Histogram Equal. [24] | | | 52.09 ± 0.00 | 16.44 ± 0.00 | 22.76 ± 0.00 | 53.13 ± 0.00 | 16.50 ± 0.00 | 23.06 ± 0.00 | 56.50 ± 0.10 | 17.62 ± 0.04 | 24.61 ± 0.01 |
| CycleGAN [42] | ✔ | | 49.01 ± 1.28 | 21.16 ± 0.71 | 23.92 ± 0.97 | 49.04 ± 1.71 | 19.93 ± 0.54 | 23.71 ± 0.70 | 54.48 ± 2.09 | 23.08 ± 1.54 | 26.85 ± 1.23 |
| CUT [43] | ✔ | | 38.70 ± 1.05 | 14.85 ± 0.49 | 18.16 ± 0.75 | 39.08 ± 1.42 | 13.69 ± 0.61 | 17.84 ± 0.75 | 43.34 ± 1.53 | 16.09 ± 0.38 | 20.29 ± 0.48 |
| FastCUT [43] | ✔ | | 45.19 ± 4.46 | 22.93 ± 2.09 | 24.02 ± 2.37 | 43.04 ± 4.95 | 19.82 ± 2.78 | 22.00 ± 2.73 | 49.98 ± 4.57 | 25.52 ± 2.85 | 26.68 ± 2.59 |
| HalluciDet [6] | ✔ | ✔ | 54.20 ± 2.50 | 17.36 ± 2.23 | 23.74 ± 2.09 | 52.06 ± 1.47 | 16.21 ± 0.31 | 22.29 ± 0.45 | 63.11 ± 1.54 | 23.91 ± 1.10 | 29.91 ± 1.18 |
| ModTr\(_{\odot}\) (ours) | | ✔ | 65.99 ± 0.78 | 33.73 ± 1.74 | 35.49 ± 0.94 | 66.31 ± 0.93 | 31.22 ± 0.69 | 34.27 ± 0.27 | 69.20 ± 0.36 | 34.58 ± 0.56 | 37.21 ± 0.46 |
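The per-detector gaps discussed in this section can be recomputed from the mean values in the two tables above. The sketch below copies the AP\(_{50}\) and AP means for HalluciDet, FastCUT, and ModTr\(_{\odot}\); the `gaps` helper is hypothetical and simply subtracts the baseline from ModTr.

```python
# Mean (AP50, AP) values copied from the image-translation comparison tables.
RESULTS = {
    "LLVIP": {
        "HalluciDet": {"FCOS": (64.17, 28.00), "RetinaNet": (60.38, 19.95), "FasterRCNN": (90.07, 57.78)},
        "FastCUT": {"FCOS": (34.92, 19.39), "RetinaNet": (35.73, 18.11), "FasterRCNN": (42.09, 22.91)},
        "ModTr": {"FCOS": (92.04, 57.63), "RetinaNet": (91.56, 54.83), "FasterRCNN": (91.82, 57.97)},
    },
    "FLIR": {
        "HalluciDet": {"FCOS": (54.20, 23.74), "RetinaNet": (52.06, 22.29), "FasterRCNN": (63.11, 29.91)},
        "FastCUT": {"FCOS": (45.19, 24.02), "RetinaNet": (43.04, 22.00), "FasterRCNN": (49.98, 26.68)},
        "ModTr": {"FCOS": (65.99, 35.49), "RetinaNet": (66.31, 34.27), "FasterRCNN": (69.20, 37.21)},
    },
}
METRIC_INDEX = {"AP50": 0, "AP": 1}


def gaps(dataset, baseline, metric):
    """ModTr minus `baseline`, per detector, rounded to two decimals."""
    i = METRIC_INDEX[metric]
    table = RESULTS[dataset]
    return {det: round(table["ModTr"][det][i] - table[baseline][det][i], 2)
            for det in table["ModTr"]}


for dataset in RESULTS:
    for baseline in ("HalluciDet", "FastCUT"):
        for metric in METRIC_INDEX:
            print(dataset, baseline, metric, gaps(dataset, baseline, metric))
```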

In the tables below, we show that ModTr is competitive with, and often better than, fine-tuning (FT), even without modifying the parameters of the detector. It therefore preserves the detector's knowledge for further tasks while delivering strong performance. For instance, on LLVIP, ModTr\(_{\odot}\) matches or exceeds FT in terms of localization (AP\(_{75}\) and AP) for FCOS and RetinaNet, while remaining comparable in AP\(_{50}\). On FLIR, all fusion strategies outperform FT with every detector and for every AP metric.

Test Set IR (Dataset: LLVIP)

| Method | FCOS AP\(_{50}\uparrow\) | FCOS AP\(_{75}\uparrow\) | FCOS AP\({}\uparrow\) | RetinaNet AP\(_{50}\uparrow\) | RetinaNet AP\(_{75}\uparrow\) | RetinaNet AP\({}\uparrow\) | FasterRCNN AP\(_{50}\uparrow\) | FasterRCNN AP\(_{75}\uparrow\) | FasterRCNN AP\({}\uparrow\) |
|---|---|---|---|---|---|---|---|---|---|
| Fine-Tuning | 93.14 ± 1.06 | 62.08 ± 3.37 | 57.37 ± 2.19 | 93.61 ± 0.59 | 56.20 ± 3.78 | 53.79 ± 1.79 | 94.64 ± 0.84 | 62.50 ± 0.79 | 59.62 ± 1.23 |
| ModTr\(_{+}\) | 90.57 ± 1.46 | 62.38 ± 0.31 | 56.44 ± 0.75 | 91.09 ± 0.73 | 55.06 ± 1.81 | 53.18 ± 1.03 | 91.89 ± 0.39 | 61.44 ± 0.72 | 57.14 ± 0.50 |
| ModTr\(_{\oplus}\) | 91.11 ± 0.84 | 62.69 ± 1.53 | 57.01 ± 0.71 | 90.49 ± 1.11 | 58.73 ± 0.55 | 54.43 ± 0.35 | 91.20 ± 0.46 | 61.31 ± 0.73 | 56.95 ± 0.37 |
| ModTr\(_{\odot}\) | 92.04 ± 0.47 | 63.84 ± 0.93 | 57.63 ± 0.66 | 91.56 ± 0.64 | 59.49 ± 1.11 | 54.83 ± 0.61 | 91.82 ± 0.49 | 62.51 ± 0.87 | 57.97 ± 0.85 |

Test Set IR (Dataset: FLIR)

| Method | FCOS AP\(_{50}\uparrow\) | FCOS AP\(_{75}\uparrow\) | FCOS AP\({}\uparrow\) | RetinaNet AP\(_{50}\uparrow\) | RetinaNet AP\(_{75}\uparrow\) | RetinaNet AP\({}\uparrow\) | FasterRCNN AP\(_{50}\uparrow\) | FasterRCNN AP\(_{75}\uparrow\) | FasterRCNN AP\({}\uparrow\) |
|---|---|---|---|---|---|---|---|---|---|
| Fine-Tuning | 60.22 ± 0.97 | 21.94 ± 0.42 | 27.97 ± 0.59 | 61.77 ± 1.02 | 22.37 ± 0.45 | 28.46 ± 0.50 | 66.15 ± 0.94 | 24.48 ± 0.71 | 30.93 ± 0.46 |
| ModTr\(_{+}\) | 64.90 ± 0.48 | 32.78 ± 0.27 | 34.63 ± 0.24 | 65.30 ± 0.66 | 30.00 ± 0.81 | 33.70 ± 0.59 | 68.64 ± 0.77 | 34.96 ± 0.90 | 37.09 ± 0.74 |
| ModTr\(_{\oplus}\) | 65.46 ± 0.61 | 33.21 ± 0.55 | 34.94 ± 0.52 | 63.87 ± 0.51 | 30.93 ± 0.38 | 33.72 ± 0.22 | 68.64 ± 1.29 | 35.48 ± 0.33 | 37.16 ± 0.47 |
| ModTr\(_{\odot}\) | 65.25 ± 0.33 | 32.24 ± 0.95 | 34.60 ± 0.38 | 64.96 ± 0.68 | 30.93 ± 0.50 | 33.85 ± 0.34 | 68.84 ± 0.40 | 34.77 ± 0.22 | 37.01 ± 0.15 |
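The FLIR claim that every fusion variant beats fine-tuning on every detector and metric can be checked by finding the smallest margin over FT. The sketch below copies the mean values from the FLIR table above; `smallest_margin` is a hypothetical helper, and standard deviations are ignored.

```python
# Mean (AP50, AP75, AP) on FLIR from the fine-tuning comparison table.
FLIR_FT = {
    "Fine-Tuning": {"FCOS": (60.22, 21.94, 27.97), "RetinaNet": (61.77, 22.37, 28.46), "FasterRCNN": (66.15, 24.48, 30.93)},
    "ModTr_+": {"FCOS": (64.90, 32.78, 34.63), "RetinaNet": (65.30, 30.00, 33.70), "FasterRCNN": (68.64, 34.96, 37.09)},
    "ModTr_oplus": {"FCOS": (65.46, 33.21, 34.94), "RetinaNet": (63.87, 30.93, 33.72), "FasterRCNN": (68.64, 35.48, 37.16)},
    "ModTr_odot": {"FCOS": (65.25, 32.24, 34.60), "RetinaNet": (64.96, 30.93, 33.85), "FasterRCNN": (68.84, 34.77, 37.01)},
}
METRICS = ("AP50", "AP75", "AP")


def smallest_margin(results, baseline="Fine-Tuning"):
    """Return (margin, variant, detector, metric) for the closest ModTr-vs-FT case."""
    margins = []
    for variant, per_detector in results.items():
        if variant == baseline:
            continue
        for det, scores in per_detector.items():
            for metric, ours, theirs in zip(METRICS, scores, results[baseline][det]):
                margins.append((ours - theirs, variant, det, metric))
    return min(margins)


margin, variant, det, metric = smallest_margin(FLIR_FT)
print(f"Smallest FLIR margin over FT: {margin:.2f} {metric} ({variant}, {det})")
```

A positive smallest margin means no variant, detector, or metric falls below fine-tuning on FLIR.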

In Table 7, we explore whether comparable detection performance can be achieved with significantly fewer parameters by employing a smaller backbone for the translation network. With only \(6.6\) million additional parameters, MobileNet\(_{v2}\) achieves performance on par with ResNet\(_{34}\), which has \(24.4\) million parameters. The savings are even larger when compared with training a new detector for the desired modality; for example, a new FCOS detector would require an additional \(33.2\) million parameters. This trend is similar across detectors and remains consistent on the FLIR dataset (Table 8).

Test Set IR (Dataset: LLVIP)

| Method | Params. | AP\(_{50}\uparrow\) | AP\(_{75}\uparrow\) | AP\({}\uparrow\) |
|---|---|---|---|---|
| FCOS | 33.2 M | | | |
| MobileNet\(_{v3s}\) | + 3.1 M | 83.34 ± 1.76 | 53.34 ± 0.75 | 50.05 ± 1.01 |
| MobileNet\(_{v2}\) | + 6.6 M | 90.36 ± 0.54 | 60.17 ± 0.56 | 55.33 ± 0.62 |
| ResNet\(_{18}\) | + 14.3 M | 89.53 ± 1.03 | 58.54 ± 1.57 | 54.25 ± 0.90 |
| ResNet\(_{34}\) | + 24.4 M | 90.90 ± 1.29 | 62.96 ± 2.44 | 56.93 ± 1.44 |
| RetinaNet | 34.0 M | | | |
| MobileNet\(_{v3s}\) | + 3.1 M | 87.67 ± 0.18 | 49.99 ± 0.57 | 49.65 ± 0.07 |
| MobileNet\(_{v2}\) | + 6.6 M | 90.21 ± 0.82 | 54.60 ± 2.62 | 52.42 ± 1.33 |
| ResNet\(_{18}\) | + 14.3 M | 89.53 ± 1.82 | 52.68 ± 2.06 | 51.40 ± 1.40 |
| ResNet\(_{34}\) | + 24.4 M | 90.35 ± 0.60 | 57.18 ± 0.29 | 53.60 ± 0.41 |
| Faster R-CNN | 41.8 M | | | |
| MobileNet\(_{v3s}\) | + 3.1 M | 89.14 ± 0.63 | 56.85 ± 0.69 | 54.51 ± 0.28 |
| MobileNet\(_{v2}\) | + 6.6 M | 91.32 ± 0.73 | 60.34 ± 0.66 | 56.15 ± 0.51 |
| ResNet\(_{18}\) | + 14.3 M | 90.81 ± 0.46 | 59.39 ± 2.06 | 55.53 ± 1.14 |
| ResNet\(_{34}\) | + 24.4 M | 91.04 ± 0.29 | 60.53 ± 2.16 | 56.35 ± 0.65 |

Test Set IR (Dataset: FLIR)

| Method | Params. | AP\(_{50}\uparrow\) | AP\(_{75}\uparrow\) | AP\({}\uparrow\) |
|---|---|---|---|---|
| FCOS | 33.2 M | | | |
| MobileNet\(_{v3s}\) | + 3.1 M | 56.73 ± 0.34 | 27.57 ± 0.65 | 29.66 ± 0.14 |
| MobileNet\(_{v2}\) | + 6.6 M | 64.49 ± 0.99 | 32.17 ± 0.38 | 32.17 ± 0.38 |
| ResNet\(_{18}\) | + 14.3 M | 64.39 ± 1.68 | 32.72 ± 1.50 | 34.44 ± 1.13 |
| ResNet\(_{34}\) | + 24.4 M | 65.99 ± 0.78 | 33.73 ± 1.74 | 35.49 ± 0.94 |
| RetinaNet | 34.0 M | | | |
| MobileNet\(_{v3s}\) | + 3.1 M | 47.30 ± 0.54 | 18.63 ± 0.10 | 22.67 ± 0.18 |
| MobileNet\(_{v2}\) | + 6.6 M | 64.01 ± 1.51 | 29.70 ± 0.62 | 33.12 ± 0.68 |
| ResNet\(_{18}\) | + 14.3 M | 64.20 ± 0.58 | 30.84 ± 0.47 | 33.44 ± 0.47 |
| ResNet\(_{34}\) | + 24.4 M | 66.31 ± 0.93 | 31.22 ± 0.69 | 34.27 ± 0.27 |
| Faster R-CNN | 41.8 M | | | |
| MobileNet\(_{v3s}\) | + 3.1 M | 61.03 ± 1.26 | 29.87 ± 0.86 | 32.06 ± 0.75 |
| MobileNet\(_{v2}\) | + 6.6 M | 68.64 ± 0.56 | 34.76 ± 1.27 | 36.77 ± 0.67 |
| ResNet\(_{18}\) | + 14.3 M | 68.49 ± 0.53 | 34.52 ± 0.23 | 36.68 ± 0.22 |
| ResNet\(_{34}\) | + 24.4 M | 69.20 ± 0.36 | 34.58 ± 0.56 | 37.21 ± 0.46 |
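The comparison against training a new detector can be expressed as two numbers per detector: the MobileNet\(_{v2}\) translator's size as a fraction of a new detector, and the AP it gives up relative to ResNet\(_{34}\). The sketch below uses the parameter counts and mean AP values from Tables 7 and 8; the helper names are hypothetical, and the printout is meant to let the reader judge the trade-off per detector rather than to summarize it.

```python
# Parameter counts (M) and mean AP values from Tables 7 and 8.
DETECTOR_PARAMS = {"FCOS": 33.2, "RetinaNet": 34.0, "FasterRCNN": 41.8}
MOBILENET_V2_PARAMS = 6.6
AP = {  # (MobileNet_v2 AP, ResNet_34 AP)
    "LLVIP": {"FCOS": (55.33, 56.93), "RetinaNet": (52.42, 53.60), "FasterRCNN": (56.15, 56.35)},
    "FLIR": {"FCOS": (32.17, 35.49), "RetinaNet": (33.12, 34.27), "FasterRCNN": (36.77, 37.21)},
}


def overhead(detector):
    """Translator size as a fraction of the parameters of a new detector."""
    return MOBILENET_V2_PARAMS / DETECTOR_PARAMS[detector]


def ap_gap(dataset, detector):
    """AP points lost by using MobileNet_v2 instead of ResNet_34."""
    v2, r34 = AP[dataset][detector]
    return round(r34 - v2, 2)


for det in DETECTOR_PARAMS:
    print(f"{det}: +{overhead(det):.1%} params, "
          f"AP gap LLVIP {ap_gap('LLVIP', det)}, FLIR {ap_gap('FLIR', det)}")
```

In every case the translator adds less than a fifth of the parameters a new detector would require.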

8 Qualitative Results

In this section, we provide additional visualizations for all methods, including the images generated by our method and the corresponding detections. First, Figure 8 presents the detections in more detail, highlighting the failure cases of methods that rely solely on image translation, such as FastCUT, and some false positives produced by fine-tuning alone. We then provide additional visualizations for a batch of images processed by each detector: Figure 9 for FCOS, Figure 10 for RetinaNet, and Figure 11 for FasterRCNN, each covering both datasets.

Figure 8: Bounding box predictions of different OD methods for infrared images on two benchmarks: LLVIP and FLIR. Yellow and red boxes show the ground truth and predicted detections, respectively. FastCUT [1] is an unsupervised image translation approach that takes infrared (IR) images as input and produces pseudo-RGB images. It does not focus on detection and requires both modalities for training. Fine-tuning is the standard approach to adapting the detector to the new modality. It requires only IR data but forgets the original knowledge of the pre-trained detector. Finally, ModTr (ours) focuses the translation on detection, requires only IR data, and does not forget the original knowledge, so the detector can be reused for other tasks.

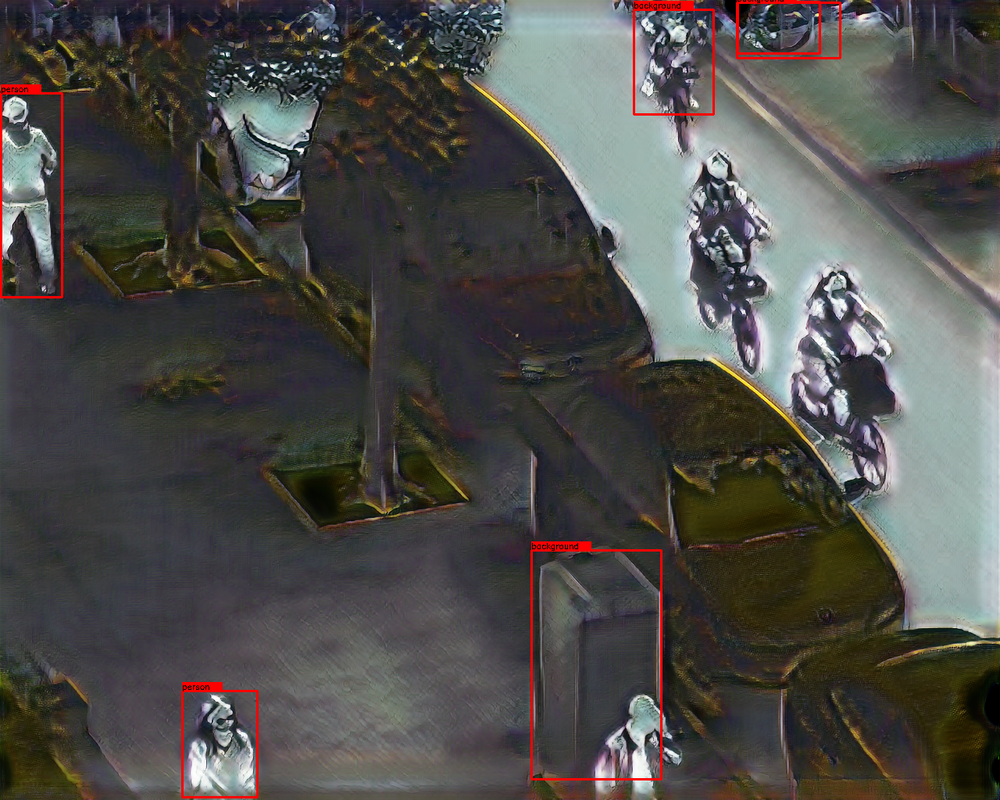

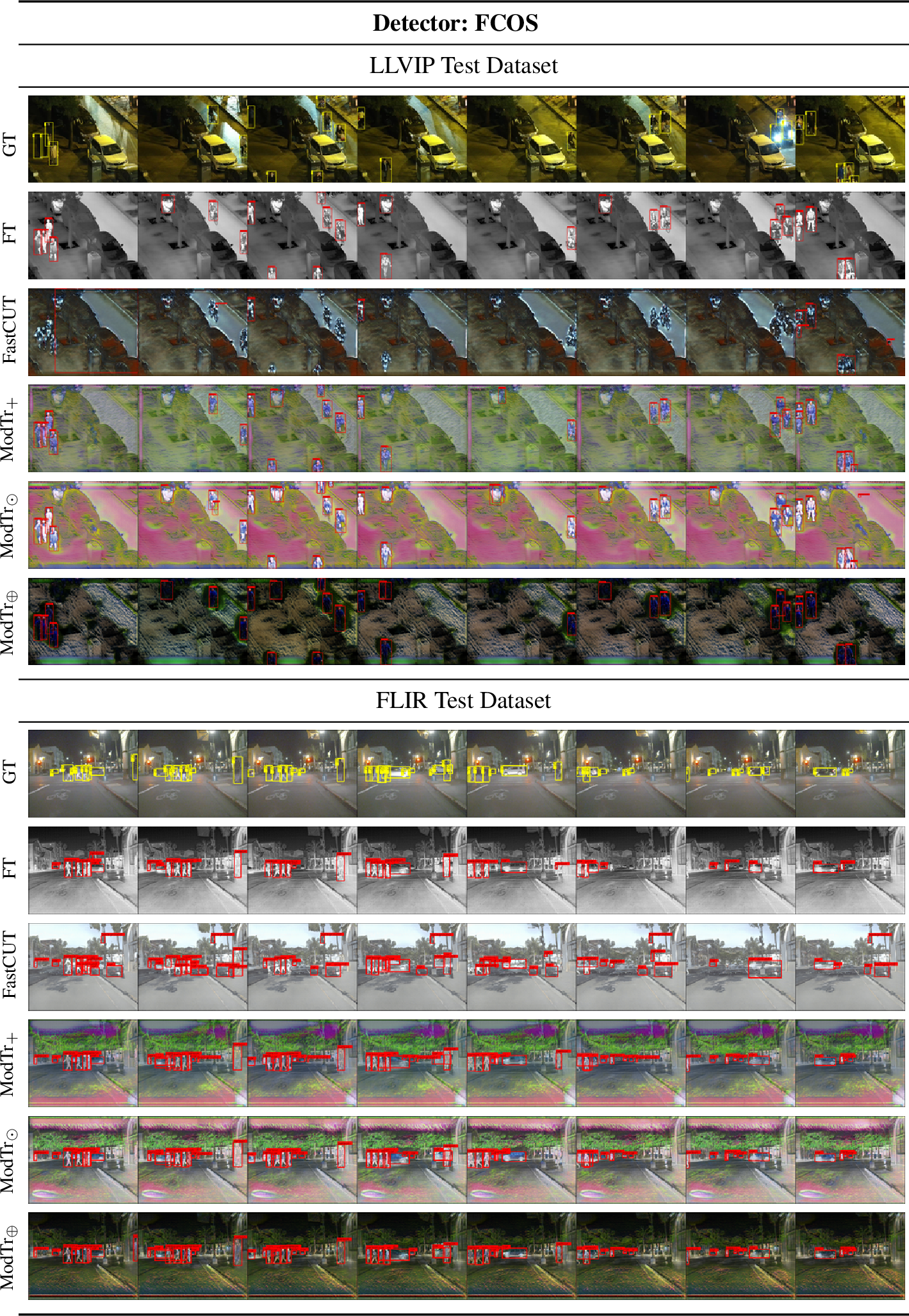

Figure 9: Illustration of a sequence of \(8\) images from the LLVIP and FLIR datasets for FCOS. For each dataset, the first row shows the RGB modality, followed by the IR modality, FastCUT [1], and the different representations created by ModTr and its variants.

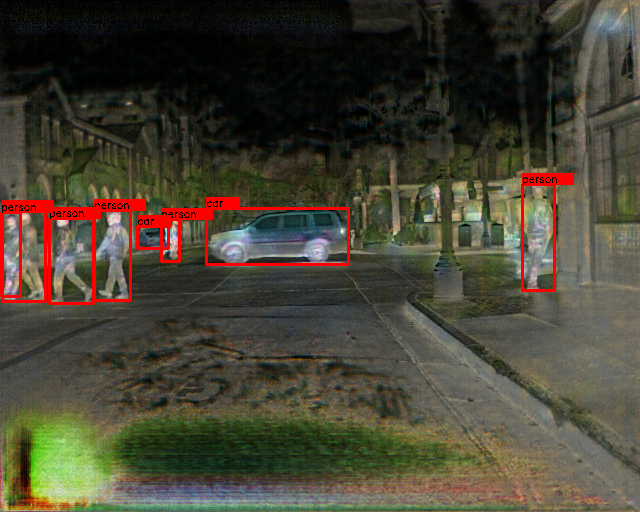

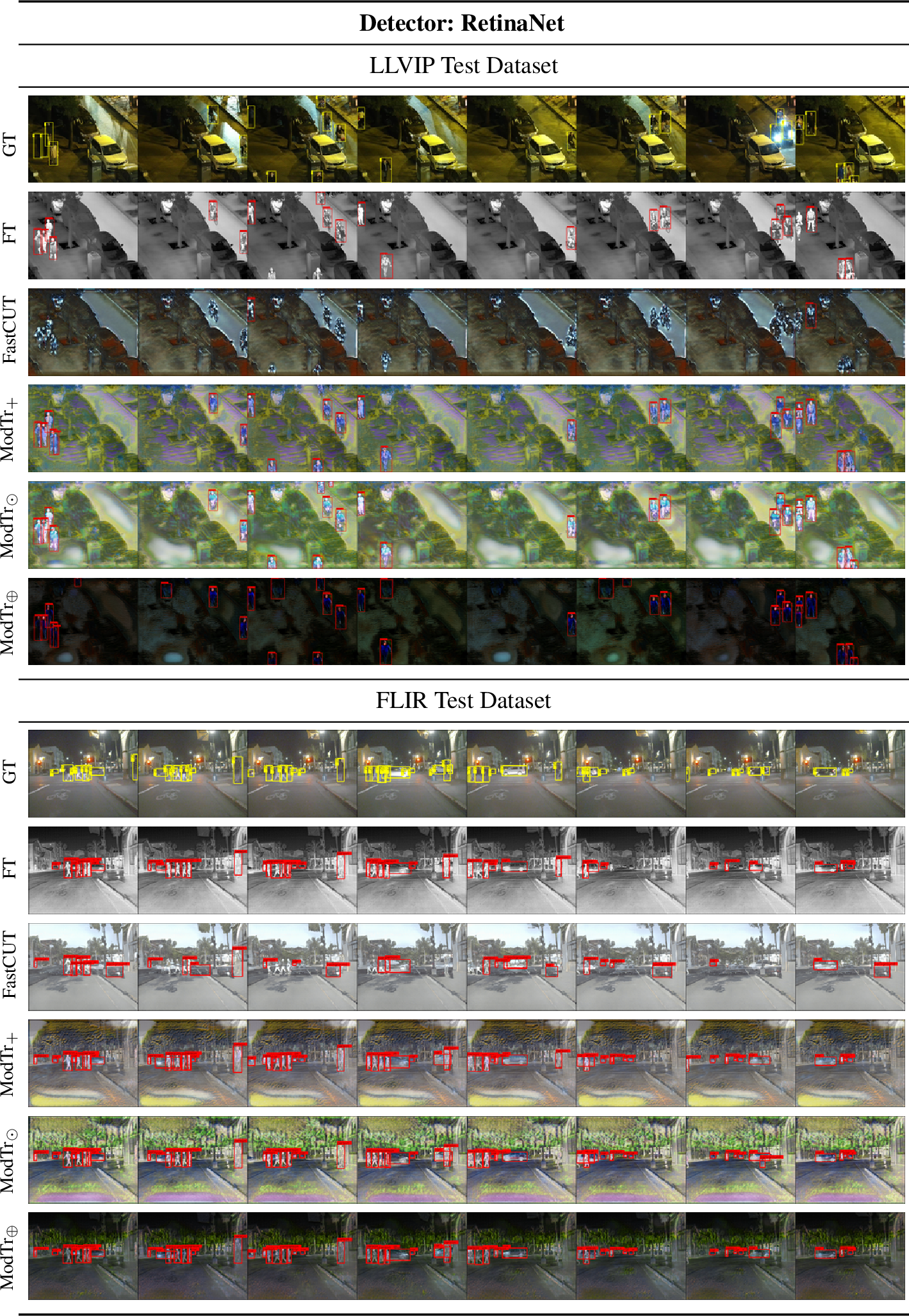

Figure 10: Illustration of a sequence of \(8\) images from the LLVIP and FLIR datasets for RetinaNet. For each dataset, the first row shows the RGB modality, followed by the IR modality, FastCUT [1], and the different representations created by ModTr and its variants.

Figure 11: Illustration of a sequence of \(8\) images from the LLVIP and FLIR datasets for FasterRCNN. For each dataset, the first row shows the RGB modality, followed by the IR modality, FastCUT [1], and the different representations created by ModTr and its variants.