AAdaM at SemEval-2024 Task 1: Augmentation and Adaptation

for Multilingual Semantic Textual Relatedness

April 01, 2024

Abstract

This paper presents our system developed for the SemEval-2024 Task 1: Semantic Textual Relatedness for African and Asian Languages. The shared task aims at measuring the semantic textual relatedness between pairs of sentences, with a focus on a range of under-represented languages. In this work, we propose using machine translation for data augmentation to address the low-resource challenge of limited training data. Moreover, we apply task-adaptive pre-training on unlabeled task data to bridge the gap between pre-training and task adaptation. For model training, we investigate both full fine-tuning and adapter-based tuning, and adopt the adapter framework for effective zero-shot cross-lingual transfer. We achieve competitive results in the shared task: our system performs the best among all ranked teams in both subtask A (supervised learning) and subtask C (cross-lingual transfer).1

1 Introduction↩︎

Semantic Textual Relatedness (STR) measures the closeness of meaning between two linguistic units, such as a pair of words or sentences [1], [2]. For example, one can easily tell that “I like playing games” is more semantically related to “The game is fun” rather than “The weather is good”, which largely depends on their lexical semantic relation and topic consistency. Semantic Textual Similarity (STS), a closely related concept, indicates whether two units have a paraphrasing relation. The difference between these two concepts is clarified in [3]: while similar pairs are also related, the reverse is not necessarily true.

In stark contrast to the extensive research on STS [4]–[7], exploration of STR lags behind and predominantly focuses on English [3], [8], mainly due to the lack of datasets. To close this gap, the SemEval-2024 Task 1: Semantic Textual Relatedness [9] is proposed to encourage STR research on 14 African and Asian languages. The shared task consists of 3 subtasks: supervised (subtask A), unsupervised (subtask B), and cross-lingual (subtask C).

In this paper, we present our system AAdaM (Augmentation and Adaptation for Multilingual STR) developed for subtask A and C. Our system adopts a cross-encoder architecture which takes the concatenation of a pair of sentences as input and predicts the relatedness score through a regression head [10]. As the provided task data for non-English languages is relatively limited, we perform data augmentation for these languages via machine translation. To better adapt a pre-trained model to the STR task, we apply task-adaptive pre-training [11] which has shown effectiveness on many tasks [12], [13]. For subtask A, we explore full fine-tuning and adapter-based tuning [14] combined with previously mentioned techniques. Additionally, we use the adapter framework MAD-X [15] for cross-lingual transfer in subtask C.

We select the best model based on the performance on development sets for the final submission, and our system achieves competitive results on both subtasks. In subtask A, our system ranks first out of 40 teams on average, and performs the best in Spanish. In subtask C, our system ranks first among 18 teams on average, and achieves the best performance in Indonesian and Punjabi.

2 SemRel Dataset↩︎

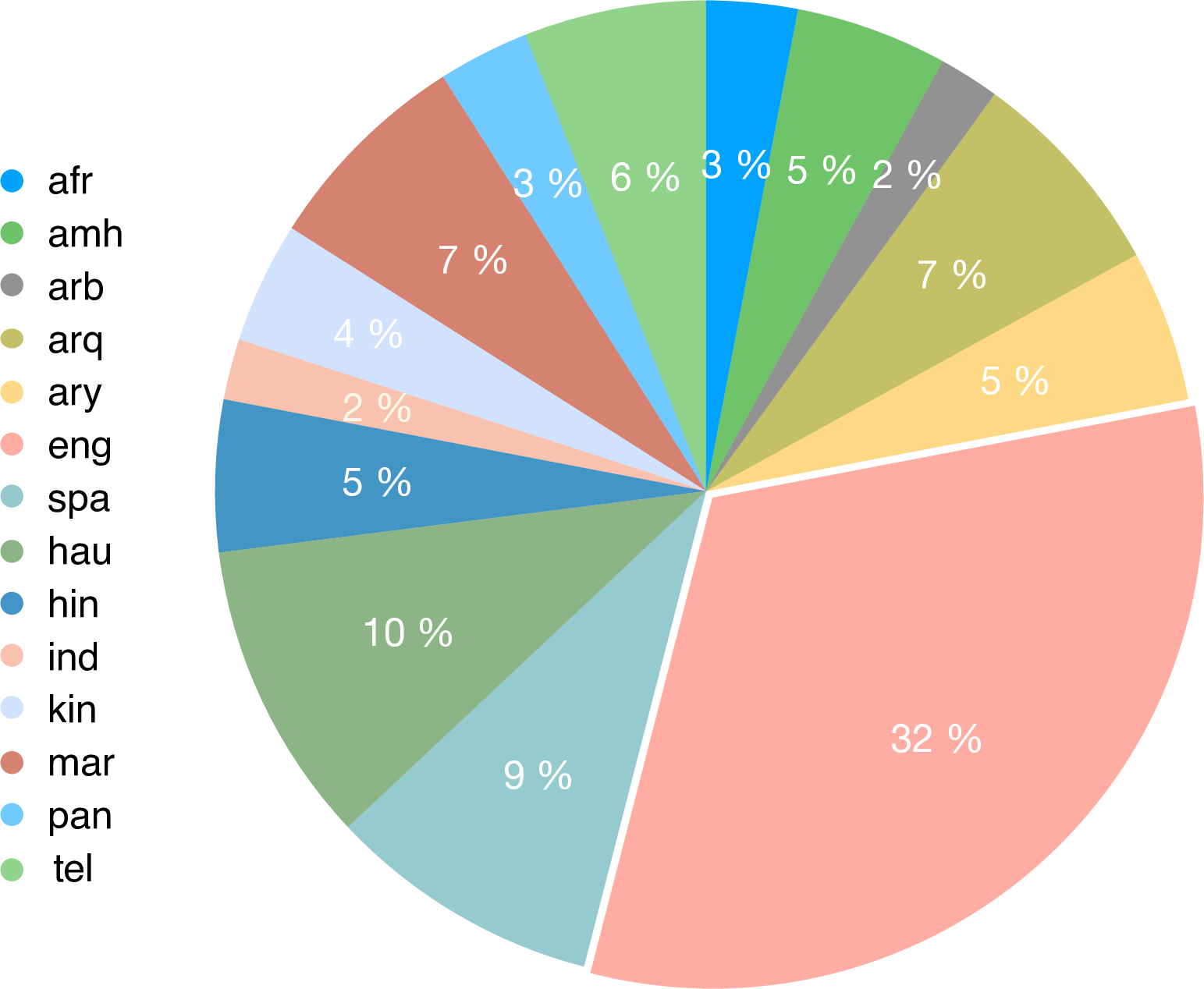

To encourage STR research in the multilingual context, [16] introduce SemRel, a new STR dataset annotated by native speakers, covering 14 languages from 5 distinct language families. These languages are mostly spoken in Africa and Asia, and many of them are under-represented in natural language processing resources. As shown in Figure 1, the data sizes vary widely from language to language constrained by the availability of resources. Notably, English data comprises 32% of the whole dataset and surpasses other languages by a large margin.

Figure 1: SemRel data distribution across languages.

3 System Overview↩︎

Our system employs a cross-encoder architecture, which takes the concatenation of a pair of sentences as input and predicts the relatedness score through a regression head. Compared to bi-encoders [17], which extract individual sentence representations and then compare them using cosine similarity, cross-encoders generally perform better, at the cost of increased inference latency [18]. We select cross-encoder because of its superior performance (see Appendix 7), and leave the exploration of an efficient alternative as future work.

The core techniques underlying our system are (i) data augmentation using machine translation (§3.1), and (ii) task-adaptive pre-training on unlabeled task data (§3.2). We explore two training paradigms for supervised learning combined with the aforementioned techniques, i.e., fine-tuning and adapter-based tuning (§3.3), and the latter is also employed for cross-lingual transfer (§3.4).

3.1 Data Augmentation↩︎

Data augmentation (DA) serves as a widely used strategy to mitigate data scarcity in low-resource languages [19], [20]. Inspired by work on DA with machine translation [21], [22], we create additional training data for non-English languages by translating from various English sources, as illustrated below.

3.1.0.1 SemRel translation.

As English data occupies a significant portion of the entire SemRel dataset, we perform augmentation by translating the English subset to other target languages.

3.1.0.2 STS-B translation.

STS-B [23], a semantic similarity dataset, is highly relevant to STR, and we translate the STS-B training set in English to other target languages.

It worth noting that using translations as data augmentation yields a mixed data quality. For instance, the translation process may introduce artifacts that reduce data validity. Additionally, the concepts of “similarity” and “relatedness” are relevant but not equivalent, leading to a mismatch in their annotated scores. To leverage data in varied qualities, [24] shows that a two-phase approach is beneficial, in which the model is trained on noisy data first and then trained on clean data. Our training procedure follows this two-phase scheme: (i) training the model on augmented data as a warmup, and (ii) subsequently training the model on the original task data.

3.2 Task-Adaptive Pre-training↩︎

Pre-trained language models (PLMs) are trained on massive text corpora with self-supervision objectives for general purposes [10], [25]. To better adapt PLMs to downstream tasks, [11] propose task-adaptive pre-training (TAPT), i.e., continued pre-training on task-specific unlabeled data, and show that it can effectively improve downstream task performance. We integrate this strategy into our system, wherein we conduct masked language modeling (MLM) on unlabeled task data for a given target language before initiating any supervised training.

| Model Tuning | TAPT | Warmup | arq | amh | eng | hau | kin | mar | ary | spa | tel |

|---|---|---|---|---|---|---|---|---|---|---|---|

| fine-tuning | 52.96 | 87.70 | 83.07 | 78.91 | 68.59 | 85.23 | 88.26 | 73.83 | 84.90 | ||

| SemRel | 55.96 | 87.86 | / | 79.87 | 70.06 | 85.51 | 88.59 | 72.93 | 85.38 | ||

| STS-B | 62.05 | 88.50 | 84.31 | 79.86 | 69.78 | 86.48 | 86.97 | 73.33 | 85.15 | ||

| 65.70 | 88.03 | 82.79 | 79.41 | 67.03 | 84.88 | 88.50 | 70.47 | 83.84 | |||

| SemRel | 66.74 | 85.58 | / | 80.73 | 71.29 | 85.74 | 87.01 | 73.37 | 85.77 | ||

| STS-B | 68.25 | 88.72 | 83.01 | 78.95 | 69.38 | 85.26 | 87.07 | 73.50 | 84.66 | ||

| adapter tuning | 55.44 | 87.01 | 82.96 | 78.23 | 70.45 | 84.62 | 86.43 | 72.62 | 84.51 | ||

| SemRel | 59.58 | 87.66 | / | 79.15 | 70.56 | 86.54 | 86.88 | 74.90 | 84.88 | ||

| STS-B | 62.83 | 87.63 | 82.97 | 80.29 | 82.01 | 87.18 | 87.53 | 74.18 | 84.17 | ||

| 58.81 | 85.61 | 82.74 | 78.40 | 70.48 | 84.56 | 85.78 | 72.15 | 84.34 | |||

| SemRel | 58.47 | 87.57 | / | 79.78 | 71.67 | 87.24 | 87.35 | 76.65 | 85.69 | ||

| STS-B | 59.58 | 87.40 | 82.32 | 79.22 | 73.04 | 87.12 | 87.22 | 73.22 | 83.70 |

3.3 Fine-tuning vs. Adapter-based Tuning↩︎

Fine-tuning is the conventional approach to adapt general-purpose PLMs to downstream tasks. It updates all model parameters for each task, leading to inefficiency with the ever-increasing model scales and number of tasks. Recently, many works focus on introducing lightweight alternatives to improve parameter efficiency [26]–[28]. For example, adapter-based tuning [14] only updates small modules known as adapters inserted between the layers of PLMs while keeping the remaining parameters frozen. In particular, it has shown impressive performance in cross-lingual transfer [15], [29], [30].

We explore both fine-tuning and adapter-based tuning to compare their effectiveness on multilingual STR. For fine-tuning, we update all model parameters at each stage, namely the TAPT stage, the warmup stage and the final training stage using the original task data. For adapter-based tuning, we utilize the MAD-X framework [15] which consists of language-specific adapters and task-specific adapters. The language adapters are pre-trained with an MLM objective on unlabeled monolingual corpora. To this end, we collect open-source data from the Leipzig Corpus Collection [31] for pre-training.2 The task adapters are trained on labeled task-specific data (augmented or original), while keeping the language adapters fixed. Note that when applying TAPT, only language adapters are updated. In subtask A, we apply fine-tuning and adapter-based tuning in combination with TAPT and warmup techniques, and select the best model based on the performance on development sets.

3.4 Cross-lingual Transfer with Adapters↩︎

The high modularity of MAD-X enables efficient zero-shot cross-lingual transfer. During inference, we simply replace the source language adapter with the target language adapter while retaining the source task adapter. This task adapter has been trained on labeled data from the source language, without prior exposure to the target language.3 A crucial challenge for cross-lingual transfer lies in source language selection, as improper sources may lead to negative results [32]. To determine the best source language, we explore the following metrics to rank sources: (1) linguistic distance [33], (2) token overlap [34], and (3) development set performance.4 Results in Appendix 9 demonstrate that development set performance serves as the most reliable indicator of transfer performance. For subtask C, we select the optimal source from the adapters trained in subtask A based on their performance on development sets.

| Model | arq | amh | eng | hau | kin | mar | ary | spa | tel | Avg.\(\uparrow\) |

|---|---|---|---|---|---|---|---|---|---|---|

| Overlap\(^\diamondsuit\) | 40. | 63. | 67. | 31. | 33. | 62. | 63. | 67. | 70. | 55.11 |

| LaBSE\(^\diamondsuit\) | 60. | 85. | 83. | 69. | 72. | 88. | 77. | 70. | 82. | 76.22 |

| PALI | 67.88 | 88.86 | 86.00 | 76.43 | 81.34 | 91.08 | 86.26 | 72.38 | 86.43 | 81.85 |

| king001 | 68.23 | 88.78 | 84.30 | 74.72 | 81.69 | 89.68 | 85.97 | 72.12 | 85.34 | 81.20 |

| NRK | 67.36 | 86.42 | 83.29 | 67.20 | 75.69 | 87.93 | 82.70 | 68.99 | 83.42 | 78.11 |

| saturn | 57.77 | 84.51 | - | 69.91 | 75.53 | 87.28 | 79.77 | - | 87.34 | - |

| AAdaM (Ours) | 66.23 | 86.71 | 84.84 | 72.36 | 77.91 | 89.43 | 83.50 | 74.04 | 84.77 | 79.98 |

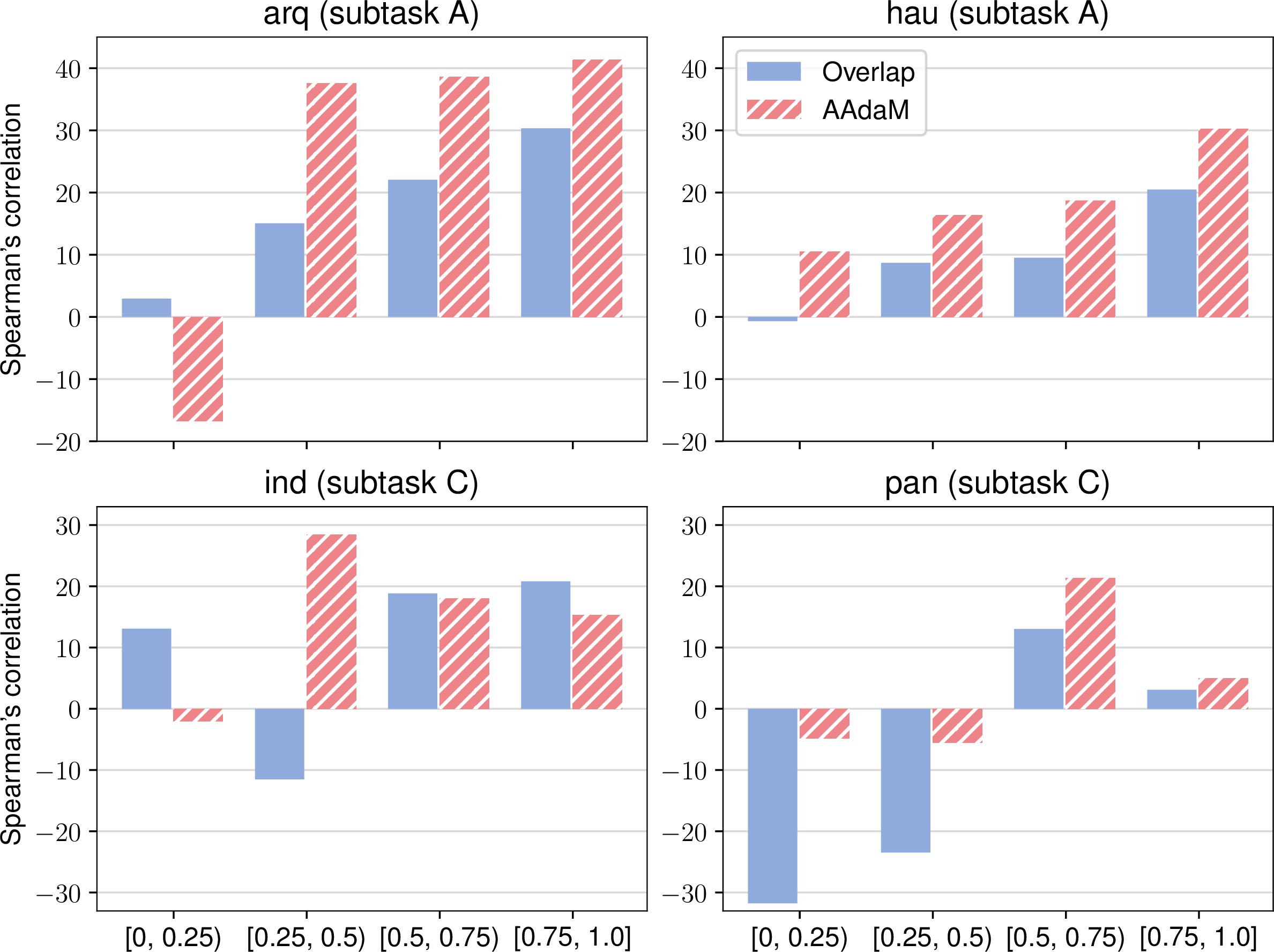

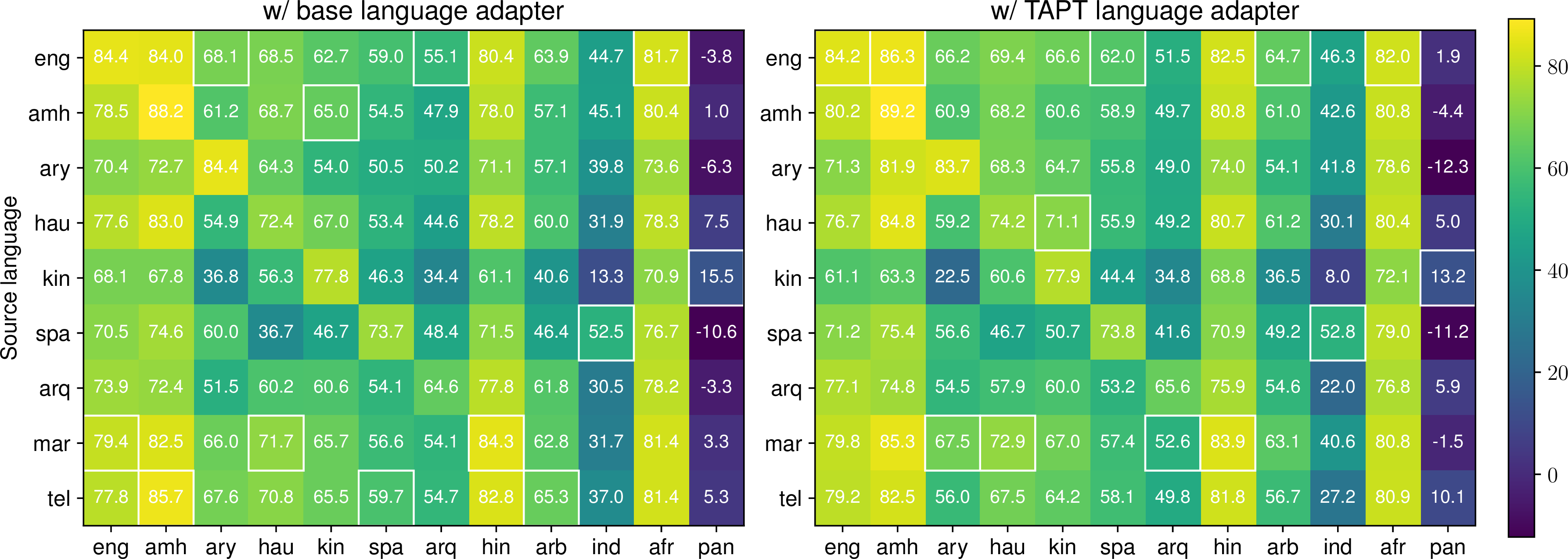

Figure 2: Subtask C performance on development sets (Spearman’s correlation \(\times 100\)) using different types of language adapters. Boxes highlight the optimal performances for each target language, and we select the best source for final submission.

4 Experimental Setup↩︎

4.0.0.1 Model.

Our backbone model is AfroXLMR-large-61L [35], adapted from XLM-R [36] through multilingual adaptive fine-tuning [37]. We use NLLB (nllb-200-distilled-600M) [38]

to translate from English resources to other languages as data augmentation.

4.0.0.2 Implementation.

All experiments are conducted on a single NVIDIA A100 GPU with a batch size of 16. For MLM, we set the learning rate to 5e-5 and train models for 10 epochs. For fine-tuning, we conduct a grid-search of learning rate from {2e-5, 5e-5} on SemRel development sets and train models for 6 epochs. For adapter-based tuning, we select the optimal learning rate from {1e-4, 2e-4, 5e-5} and train adapters for 15 epochs.

| Model | afr | arq | amh | eng | hau | hin | ind | kin | arb | ary | pan | spa | Avg.\(\uparrow\) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Overlap\(^\diamondsuit\) | 71. | 40. | 63. | 67. | 31. | 53. | 55. | 33. | 32. | 63. | -27. | 67. | 45.67 |

| LaBSE\(^\diamondsuit\) | 79. | 46. | 84. | 80. | 62. | 76. | 47. | 57. | 61. | 40. | -5. | 62. | 57.42 |

| king001 | 81.00 | 61.44 | 87.83 | - | 73.35 | 84.39 | 37.58 | 62.99 | 65.68 | 81.96 | - | 70.76 | - |

| UAlberta | 80.57 | 44.13 | 81.60 | - | 67.85 | 82.78 | 44.90 | 63.58 | 67.15 | 60.22 | -1.74 | 57.16 | - |

| ustcctsu | 74.87 | 41.44 | 70.90 | 78.40 | 47.63 | 65.80 | 46.02 | 45.41 | 46.87 | 61.32 | -24.79 | 68.51 | 51.87 |

| umbclu | 82.23 | 12.63 | 4.30 | 78.75 | 45.69 | 15.52 | 51.53 | 48.36 | 3.54 | -3.75 | -7.75 | 60.89 | 32.66 |

| AAdaM (ours) | 81.39 | 55.07 | 86.29 | 79.37 | 72.88 | 83.86 | 52.80 | 64.99 | 65.32 | 60.03 | 15.53 | 62.05 | 64.97 |

5 Results and Analysis↩︎

5.1 Subtask A: Supervised Learning↩︎

In Table 1, we compare the performance on development sets using fine-tuning and adapter-based tuning along with various techniques. Fine-tuning achieves the best performance in most languages (6 out of 9), which is unsurprising as it optimizes the entire parameter space. Notably, adapter-based tuning demonstrates comparable performance to fine-tuning in Hausa (hau) and Telugu (tel), while even surpassing it in Kinyarwanda (kin), Marathi (mar) and Spanish (spa). Looking at the effectiveness of TAPT and warmup, we observe that they provide benefits in most cases compared to using no techniques at all. Nonetheless, the improvements are sometimes marginal, particularly in languages such as Amharic (amh), English (eng), and Moroccan Arabic (ary), where the baseline performances are already relatively strong compared to other languages.

In our final submission, we selected the best model for each language based on the performance of development sets. As shown in Table 2, our approach largely improves the baseline results [16], especially for Algerian Arabic (arq), Kinyarwanda (kin), and Moroccan Arabic (ary). In comparison to several top-performing submitted systems, we achieve the best performance in Spanish (spa). There were a total of 40 final submissions in subtask A, and our system ranks first on average in the official leaderboard.5

5.2 Subtask C: Cross-lingual Transfer↩︎

In subtask C, we replace source language adapters from subtask A with target language adapters. We analyze two groups of language adapters: base language adapters trained only on Leipzig corpora and TAPT language adapters further trained on unlabeled task data. The cross-lingual transfer results on development sets are shown in Figure 2. We observe a discrepancy in the optimal source languages selected with two types of adapters, indicating a behavior shift after applying TAPT. Furthermore, the performance for target languages shows high sensitivity to the choice of source language. For example, using Spanish (spa) as the source language for Indonesian (ind) performs significantly better than using Kinyarwanda (kin), showcasing the importance of careful source language selection. When examining each target language, we find that in the case of Amharic (amh), the cross-lingual transfer performance is comparable to its supervised learning performance. However, it remains a challenge for a few languages, such as Indonesian (ind) and Punjabi (pan).

The results for test sets are shown in Table 3. Compared to LaBSE [39], a multilingual sentence embedding model, our cross-lingual transfer approach achieves better performance on most languages, especially for Algerian Arabic (arq), Hausa (hau), Moroccan Arabic (ary), and Punjabi (pan). However, our system is surpassed by the simple word overlap baseline in Indonesian (ind), Moroccan Arabic (ary) and Spanish (spa). This highlights the need for nuanced investigation of data distributions across various languages. Subtask C received 18 submissions in total, and we perform the best in the official leaderboard. In particular, we achieve the best performance in Indonesian (ind) and Punjabi (pan), which seem harder for other teams. For Punjabi (pan), where most teams get negative correlation scores, our method maintains its effectiveness.

5.3 Analysis↩︎

We partition ground-truth relatedness scores, ranging from 0 to 1, to different levels for fine-grained analysis. Figure 3 shows the detailed model performance for several under-performing languages. Although our evaluation scores on the entire test sets are all positive, some subsets exhibit negative correlations, particularly those with lower relatedness scores. Moreover, AAdaM largely lags behind the simple word overlap baseline for Algerian Arabic (arq) and Indonesian (ind) within the 0 to 0.25 range. These observations highlight the complexity of capturing nuanced relationships within specific categories, possibly affected by the data annotation procedure and unbalanced learning.

Figure 3: Performance on test sets (Spearman’s correlation \(\times 100\)) in different relatedness levels.

6 Conclusion↩︎

In this paper, we introduce our multilingual STR system, AAdaM, developed for the SemEval-2024 Task 1, which achieves competitive results in both subtask A and subtask C. We see noticeable improvements by using data augmentation and task-adaptive pre-training, and demonstrate that adapter-based tuning is an effective approach for supervised learning and cross-lingual transfer. Despite these strengths, our fine-grained analysis reveals that capturing nuanced semantic relationships remains a challenge, highlighting the need for further granular investigation and modeling improvements.

Limitations↩︎

Although our approach has demonstrated impressive performance, relying on development sets for source language selection undermines its practical value in the true zero-shot setting. While linguistic (dis)similarity [33] is a commonly used estimator for cross-lingual transfer performance, it alone does not explain many transfer results [40]. [41] survey different factors that impact cross-lingual transfer performance, finding contradictory conclusions from previous studies. In future work, we plan to scrutinize the interplay among various factors, and select the optimal source language without relying on post-hoc evaluation.

Acknowledgements↩︎

We thank Vagrant Gautam and Badr M. Abdullah for their proofreading and anonymous reviewers for their feedback. Miaoran Zhang received funding from the DFG (German Research Foundation) under project 232722074, SFB 1102. Jesujoba O. Alabi was supported by the BMBF’s (German Federal Ministry of Education and Research) SLIK project under the grant 01IS22015C.

7 Model and Architecture Selection↩︎

In our preliminary study, we examine the capacity of different pre-trained models with or without any training. To assess their out-of-the-box effectiveness, we extract contextual embeddings for pairs of sentences from various multilingual models, and use the cosine similarity to predict the semantic relatedness score. The multilingual models include:

general-purpose models: XLMR-large [36], AfroXLMR-large [37], AfriBERTa-large [42], AfroXLMR-large-61L and AfroXLMR-large-75L [35]

Additionally, we add two simple baselines for comparison: word overlap7 and fastText [43]. For both fastText vectors and contextual embeddings, we employ mean pooling to get sentence embeddings.

In Table 4, we can see that sentence transformers achieve superior performance in most languages when no training is conducted. This observation is not unsurprising, as they have been trained for sentence embeddings that can better capture the semantic relationships. However, this trend shifts upon fine-tuning the models on task data with either bi-encoder or cross-encoder architecture. Notably, with the cross-encoder architecture, AfroXLMR-large-61L achieves comparable performance to LaBSE. To satisfy the requirement in subtask C, for which the pre-trained model should not be trained on any relatedness or similarity datasets, we adopt AfroXLMR-large-61L as our backbone model with the cross-encoder architecture for all our experiments.

| Model | eng | amh | arq | ary | spa | hau | mar | tel | Avg.\(\uparrow\) |

|---|---|---|---|---|---|---|---|---|---|

| Baselines w/o training: | |||||||||

| Overlap | 56.57 | 63.28 | 44.00 | 53.76 | 58.67 | 38.86 | 57.52 | 60.61 | 54.16 |

| FastText | 55.69 | 60.64 | 44.27 | 22.12 | 57.47 | 9.19 | 59.23 | 69.39 | 47.25 |

| mpnet-base-v2 | 81.94 | 69.94 | 26.35 | 34.40 | 56.58 | 30.86 | 72.43 | 56.33 | 53.60 |

| LaBSE | 72.14 | 76.49 | 40.80 | 38.58 | 63.11 | 41.51 | 73.83 | 75.99 | 60.31 |

| XLMR-large | 39.53 | 42.07 | 27.91 | 4.15 | 47.59 | 7.34 | 40.51 | 56.36 | 33.18 |

| AfroXLMR-large | 16.55 | 39.82 | 20.30 | -0.46 | 30.42 | 8.13 | 35.94 | 30.74 | 22.68 |

| AfriBERTa-large | 53.12 | 69.23 | 16.04 | 13.36 | 56.68 | 35.14 | 20.84 | 9.73 | 34.27 |

| AfroXLMR-large-61L | 44.10 | 52.96 | 32.15 | 0.35 | 51.07 | 17.62 | 37.66 | 47.17 | 35.39 |

| AfroXLMR-large-75L | 22.61 | 37.93 | 29.38 | -2.39 | 43.58 | 13.86 | 32.13 | 40.42 | 27.19 |

| Bi-encoders w/ supervised training: | |||||||||

| mpnet-base-v2 | 85.07 | 80.43 | 56.73 | 75.51 | 65.29 | 58.62 | 81.53 | 74.49 | 72.21 |

| LaBSE | 84.45 | 82.59 | 59.49 | 78.29 | 69.02 | 68.94 | 83.97 | 76.35 | 75.39 |

| AfroXLMR-large-61L | 82.81 | 74.61 | 40.02 | 66.58 | 66.65 | 66.51 | 38.51 | 65.73 | 62.68 |

| Cross-encoders w/ supervised training: | |||||||||

| mpnet-base-v2 | 80.26 | 75.04 | 60.25 | 80.31 | 64.92 | 53.66 | 65.36 | 68.54 | 68.54 |

| LaBSE | 86.13 | 84.75 | 60.75 | 82.55 | 67.23 | 69.31 | 81.10 | 77.25 | 76.13 |

| AfroXLMR-large-61L | 86.65 | 84.88 | 46.61 | 81.56 | 69.08 | 74.65 | 75.55 | 80.94 | 74.99 |

| Language | Family / Subfamily | Domain | Corpus Size |

|---|---|---|---|

| English (eng) | Indo-Europoean / Germanic | News, Wikipedia | 1.2M |

| Afrikaans (afr) | Indo-Europoean / Germanic | News, Wikipedia | 68k |

| Amharic (amh) | Afro-Asiatic / Semitic | Community, Wikipedia | 250k |

| Modern Standard Arabic (arb) | Afro-Asiatic / Semitic | News, Wikipedia | 110k |

| Algerian Arabic (arq) | Afro-Asiatic / Semitic | News | 244k |

| Moroccan Arabic (ary) | Afro-Asiatic / Semitic | News | 564k |

| Spanish (spa) | Indo-Europoean / Italic | News, Wikipedia | 444k |

| Hausa (hau) | Afro-Asiatic / Chadic | Community, Wikipedia | 564k |

| Hindi (hin) | Indo-European / Indo-Iranian | News, Wikipedia | 472k |

| Indonesian (ind) | Austronesian / Malayic | News, Wikipedia | 92k |

| Kinyarwanda (kin) | Niger-Congo / Atlantic–Congo | Community | 320k |

| Punjabi (pan) | Indo-European / Indo-Iranian | Wikipedia | 412k |

| Marathi (mar) | Indo-European / Indo-Iranian | News, Wikipedia | 856k |

| Telugu (tel) | Dravidian / South-Central | News, Wikipedia | 756k |

8 Pre-training Data Collection↩︎

To pre-train language adapters, we collect open-source corpora from the Leipzig Corpus Collection and use the recent data derived from news and wikipedia domains. Data statistics are shown Table 5. As the SemRel data spans over diverse domains, there is a potential rish of domain mismatch between the pre-training data and task data, which needs a further investigation.

9 Source Language Selection↩︎

To determine the best source language for cross-lingual transfer, we explore three metrics to estimate the transfer performance:

9.0.0.1 Linguistic distance.

We use the average of six distances obtained from the URIEL Database [33] to measure the similarity between a pair of languages. These distances include syntactic, phonological, inventory, geographic, genetic, and featural distances. A lower distance indicates that the two languages are more similar, potentially facilitating more effective transfer.

9.0.0.2 Token overlap.

We follow [34] to measure how many tokens are shared in the source training set and the target test set. A higher token overlap indicates that more tokens were encountered during training in the source language, potentially transferring more supervision from the source to the target.

9.0.0.3 Development set performance.

As small development sets are available in the shared task, we use their performance as an indicator of the transfer performance on test sets, assuming that they share a similar data distribution.8

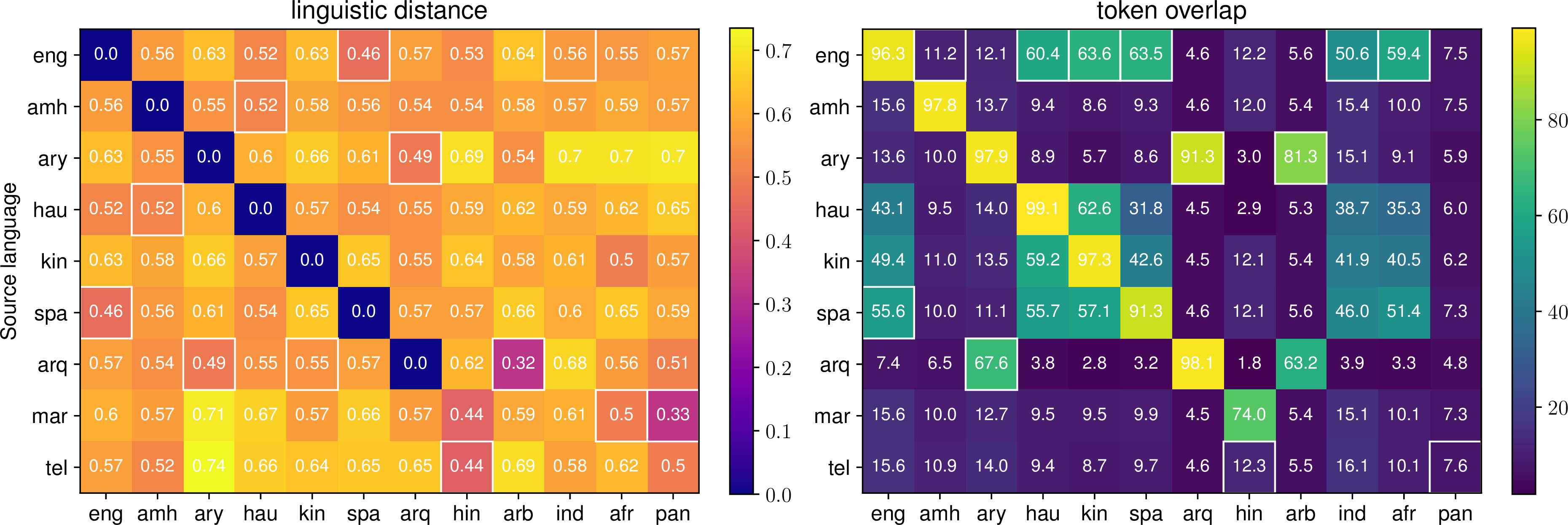

In Figure 4, we show the metric values across different source languages, along with the best source languages identified by distinct metrics. After post-hoc evaluation following the release of test sets, we find that the performance of the development set indeed serves as the most reliable indicator, as the optimal source languages it selected closely align with the ground truth selections.

Left: Linguistic distances between source and target languages. The smallest distance for each target language is highlighted with a box. Right: Token overlaps between source and target languages. The highest overlap for each target language is highlighted with a box. The corresponding source languages are predicted as the best sources for cross-lingual transfer.

Performance on development sets (Spearman’s correlation ×100) using different types of language adapters. Boxes are used to highlight the optimal performances for each target language, and the corresponding source languages are predicted as the best sources for cross-lingual transfer.

Performance on test sets (Spearman’s correlation ×100) using different types of language adapters. Boxes are used to highlight the optimal performances for each target language, and the corresponding source languages are the ground-truth best sources for cross-lingual transfer.

Figure 4: Comparison of different source language selection methods..

References↩︎

Our code: https://github.com/uds-lsv/AAdaM↩︎

Note that when transferring from any other language to English, we ensure that the source task adapter has not been trained on augmented data translated from English resources, thereby eliminating the effect of data leakage.↩︎

The existence of development sets is not realistic in the true zero-shot scenario, and we leave further discussion to the Limitations section.↩︎

PALI and king001 also achieved competitive performance; however, they are not ranked in the official leaderboard due to missing system descriptions.↩︎

https://huggingface.co/sentence-transformers/paraphrase-multilingual-mpnet-base-v2↩︎

https://github.com/semantic-textual-relatedness/Semantic_Relatedness_SemEval2024/blob/main/STR_Baseline.ipynb↩︎

When training is allowed, it might be more advantageous to use small development sets for training directly rather than source selection, which needs to be further explored.↩︎