Explainable AI Integrated Feature Engineering for Wildfire Prediction

April 01, 2024

Abstract

Wildfires present intricate challenges for prediction, necessitating the use of sophisticated machine learning techniques for effective modeling[1]. In our research, we conducted a thorough assessment of various machine learning algorithms for both classification and regression tasks relevant to predicting wildfires. We found that for classifying different types or stages of wildfires, the XGBoost model outperformed others in terms of accuracy and robustness. Meanwhile, the Random Forest regression model showed superior results in predicting the extent of wildfire-affected areas, excelling in both prediction error and explained variance. Additionally, we developed a hybrid neural network model that integrates numerical data and image information for simultaneous classification and regression. To gain deeper insights into the decision-making processes of these models and identify key contributing features, we utilized eXplainable Artificial Intelligence (XAI) techniques, including TreeSHAP, LIME, Partial Dependence Plots (PDP), and Gradient-weighted Class Activation Mapping (Grad-CAM). These interpretability tools shed light on the significance and interplay of various features, highlighting the complex factors influencing wildfire predictions. Our study not only demonstrates the effectiveness of specific machine learning models in wildfire-related tasks but also underscores the critical role of model transparency and interpretability in environmental science applications.

December 2023

1 Introduction

Forests are crucial to the ecological equilibrium of our planet: they aid carbon capture, promote biodiversity, and support soil and water conservation. They face increasing threats from wildfires of growing intensity and frequency. These fires pose significant dangers to the environment, wildlife, and human populations. Because forests play an essential role in sustaining life, there is worldwide agreement on the urgency of addressing wildfire challenges. The consequences of wildfires extend across environmental, economic, and social realms.

These fires disrupt ecological balance, affecting the flora, fauna, and soil health of the regions they engulf. The lasting impact of wildfires can lead to a decrease in forested areas, which in turn causes soil erosion and changes in plant regrowth patterns. A reduced tree canopy can exacerbate air pollution. The increase in carbon dioxide and monoxide emissions from these fires can contribute to global warming and climate change. Additionally, the smoke from wildfires poses significant health risks, creating new health concerns and exacerbating existing health problems for people.

Economically, the impact of wildfires is profound. Many indigenous and local communities rely heavily on forest resources for their livelihoods. The devastation caused by wildfires can severely affect these groups, compromising their daily subsistence. Additionally, the economic implications extend beyond rural areas, impacting urban sectors as well. The timber industry, for example, faces risks due to the substantial loss of timber caused by fires. In several countries, key trade routes run through forest areas, and any damage due to wildfires can disrupt transportation and trade activities. Furthermore, the tourism sector is also vulnerable; damaged and scarred landscapes from wildfires can dissuade potential tourists, affecting the industry significantly.

In addition to their environmental and economic impacts, wildfires represent a significant risk to human safety. When uncontrolled, these fires can encroach upon nearby residential areas, leading to property damage and potential loss of life. Residents in affected regions may suffer from ongoing health issues. As previously mentioned, the smoke produced by these fires is a health hazard, capable of causing new health problems and exacerbating pre-existing conditions. Particularly at risk are the young and the elderly, who are more susceptible to the adverse effects of wildfire smoke.

In the field of predicting wildfire risks, there is a growing trend of using data-driven machine learning techniques. For example, George [2] utilized a Support Vector Machine to create an algorithm specifically for fire risk categorization, dividing it into four distinct classes. This algorithm was based on parameters that included historical fire data and specific meteorological conditions. When applied in Lebanon, George’s model achieved a 96% accuracy rate in predicting the hazard levels of fires, using previous weather conditions as a basis for its assessments. Similarly, Onur and colleagues [3] used a Multilayer Perceptron trained with the back-propagation algorithm to assess wildfire risks. Their research focused on predicting the likelihood of forest fires in Turkey’s Upper Seyhan Basin. Complementing this approach, Daniela Stojanova and her team [4] compared traditional statistical methods with data mining techniques such as decision trees. Their goal was to develop a predictive model for fire occurrences in the Kras region, coastal areas of Slovenia, and beyond. To achieve this, they utilized data from Geographic Information Systems (GIS), Remote Sensing imagery, and weather forecasting models.

The field of Explainable Artificial Intelligence (XAI) is rapidly evolving, introducing a wide range of methods and categorizations (e.g., [5]–[10]). For instance, Samek et al. [9] categorize XAI algorithms based on various mathematical techniques used for generating explanations, including local surrogates, occlusions, gradient-driven methods, and layer-specific relevance propagation. Furthermore, XAI categorizations are being tailored for specific research areas, such as medical image analysis, as illustrated by Muddamsetty et al. Another approach to XAI classification focuses on its functionality and delivery method, encompassing algorithms, visual representations, auditory methods, Knowledge Graphs (KGs), and straightforward textual explanations, as discussed by Rawal et al. [11]. In the context of wildfire research, two recent studies have explored the application of XAI, specifically targeting the prediction and analysis of wildfire occurrences and their dimensions. These studies represent a significant step in integrating advanced AI methodologies into environmental research, potentially enhancing our understanding and management of wildfire events [12], [13].

In conclusion, wildfires significantly impact our environment, economy, and societal health. Prompt detection and intervention are essential to curtail their reach and aftermath. By proactively tackling these fires, we can minimize their adverse effects on our environment, economic frameworks, and societies. This work focuses on utilizing machine learning algorithms for three primary objectives.

Prediction of Unintended Burned Land: We aim to leverage machine learning algorithms to predict the extent of land that might be unintentionally burned inside and outside the intended boundaries of prescribed fires. By analyzing historical fire data, weather patterns, and other relevant factors, the project seeks to provide accurate estimates of potential risks and improve forest management decisions to minimize the impact on nearby communities and ecosystems.

Enhanced Prescribed Fire Safety Assessment: Another key objective is to develop machine learning models for assessing the safety of prescribed fire behavior. By integrating data on fire behavior, weather conditions, fuel types, and terrain characteristics, the project aims to create predictive tools that can identify potential risks and enable fire management teams to optimize burn plans. These safety assessments will contribute to safer and more controlled prescribed burns, reducing hazards to human lives, properties, and the environment.

Designing Explainable AI (XAI) Models: Additionally, we aim to develop machine learning models with strong interpretability and explainability. While machine learning can provide valuable insights, black-box models often lack transparency, hindering forest managers’ ability to understand the underlying factors influencing predictions. By focusing on interpretable models, the project seeks to empower decision-makers with a clear understanding of the features and processes driving the model’s outputs, facilitating more effective and informed forest fire management strategies.

2 Methods

In our research, we focus on two primary tasks: Classification and Regression. The Classification task is dedicated to predicting safety behaviors in the context of wildfires, while the Regression task aims at estimating the burned areas both within and outside the fire boundaries. Initially, we tackle these tasks separately, detailing the specific methods and algorithms we choose for each.

For the Classification task, we compare several algorithms and select XGBoost as the one best suited to differentiating and predicting safety behaviors related to wildfires. This involves analyzing patterns and correlations in the data that could indicate effective safety measures.

In the Regression task, our objective is to accurately predict the extent of areas affected by wildfires, both inside and outside the fire’s boundaries. This calls for a different set of algorithms; after comparison, Random Forest proved the best at processing spatial and environmental data to estimate the impact area of the fire.

After conducting these tasks independently, we then employ a Convolutional Neural Network (CNN) model to address both tasks simultaneously. The CNN model, known for its proficiency in handling image data, allows us to integrate and analyze complex datasets. This approach enables us to perform both classification and regression in a unified framework, potentially leading to more cohesive and insightful results in our wildfire research.

2.1 Classification-XGBoost

XGBoost, abbreviated from “eXtreme Gradient Boosting”, has emerged as a paramount tool in the machine learning landscape, excelling in both classification and regression tasks [14]. When juxtaposed with traditional Gradient Boosting (GB), it emerges superior due to a suite of enhanced design features. These include improved resilience in managing missing values, the incorporation of an approximate and sparsity-conscious split-finding algorithm, capabilities for parallel computing, cache-conscious access patterns, block compression, and sharding. This suite of advancements renders XGBoost an exceedingly effective tool, especially for intricate and precision-critical classification scenarios.

Each decision tree within XGBoost is constructed by gradient boosting, which can be viewed as gradient descent in function space. Training starts from an initial prediction and, at every iteration, fits a new tree to the gradient of the loss with respect to the current predictions (the residuals, in the case of squared error), so as to reduce the remaining error. As a result, each tree constructed in a subsequent iteration is distinct, as it aims to rectify or mitigate the errors left by its predecessors.
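As a rough illustration of the residual-fitting idea described above, the following sketch implements plain gradient boosting for squared-error loss with one-split regression stumps on a single feature. It is not XGBoost itself (there is no regularization, no second-order information, and no sparsity-aware split finding); the function names, the toy data, and the learning rate are assumptions made for illustration only.

```python
def fit_stump(x, residuals):
    """Find the single threshold on x that best fits the residuals (least squares)."""
    best = None
    # Skip the largest value so the right-hand side is never empty.
    for threshold in sorted(set(x))[:-1]:
        left = [r for xi, r in zip(x, residuals) if xi <= threshold]
        right = [r for xi, r in zip(x, residuals) if xi > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    return best[1], best[2], best[3]


def predict_stump(stump, xi):
    threshold, left_mean, right_mean = stump
    return left_mean if xi <= threshold else right_mean


def boost(x, y, n_rounds=20, learning_rate=0.3):
    """Fit an additive model: each stump is trained on the current residuals."""
    base = sum(y) / len(y)          # initial constant prediction
    preds = [base] * len(y)
    stumps, mse_history = [], []
    for _ in range(n_rounds):
        # For squared error, the negative gradient is the residual.
        residuals = [yi - pi for yi, pi in zip(y, preds)]
        stump = fit_stump(x, residuals)
        stumps.append(stump)
        preds = [pi + learning_rate * predict_stump(stump, xi)
                 for xi, pi in zip(x, preds)]
        mse_history.append(sum((yi - pi) ** 2 for yi, pi in zip(y, preds)) / len(y))
    return base, stumps, mse_history


# Toy data (hypothetical values, not from the wildfire dataset).
x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
y = [1.2, 1.9, 3.2, 3.8, 5.1, 6.0]
base, stumps, mse_history = boost(x, y)
```

Because each stump is a least-squares fit to the current residuals and the learning rate is below 1, the training error in `mse_history` cannot increase from one round to the next.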

2.2 Regression-Random Forest

The Random Forest (RF) [15] is essentially a collection of multiple decision trees that work collectively as an ensemble. In classification, every single tree in the RF offers a class prediction, and the class that amasses the majority of votes across all trees is then selected as the model’s final prediction. At the heart of RF lies the principle known as the “wisdom of crowds.” This philosophy suggests that a diverse group of models (in this case, trees) working together can make better collective judgments than any individual model on its own. Essentially, by pooling together multiple “weak learners” (individual trees), an overarching “strong learner” can be established.

Much like its predecessor, the Decision Tree (DT) classifier, the RF doesn’t necessitate feature scaling. However, it presents distinct advantages over the DT. For instance, RF is more resilient to variations in training samples and any noise within the training dataset. On the flip side, while the DT’s decisions can be visualized and understood with relative ease, the complex ensemble nature of RF makes it less intuitive and harder to decipher [16].

A Random Forest consists of a collection of decision trees, each independently constructed using bootstrapped samples from the training data. During the construction of a tree, at each node, a random subset of features is chosen as candidates for splitting. Final predictions are made by aggregating predictions from individual trees. For classification, majority voting is used, while for regression, predictions are usually averaged.

2.3 Convolutional Neural Network

The neural network architecture comprises three main components: ResNet-50, a classification head, and a regression head. Initially, a pre-trained ResNet-50 is loaded, followed by the replacement of its final fully connected layer. Subsequently, both the classification and regression heads are defined. The output from the modified fully connected layer is concatenated with an additional set of five features. These combined features are then fed into both the classification and regression heads, facilitating the computation of respective outputs for classification and regression tasks. This approach leverages the strength of ResNet-50 in feature extraction and expands its applicability through specialized heads for distinct predictive objectives.

Our classification loss \(L_{cls}\) and regression loss \(L_{reg}\) are as follows: \[L_{cls} = -\frac{1}{N} \sum_{i=1}^{N} [y_i \log(\hat{y}_i) + (1 - y_i) \log(1 - \hat{y}_i)]\]

\[L_{reg} = \frac{1}{N} \sum_{i=1}^{N} (y_i - \hat{y}_i)^2\] The total loss is: \[L = L_{cls} + \lambda L_{reg}\] In \(L_{cls}\), \(y_i\) denotes the true label (either 0 or 1) for the i-th data point and \(\hat{y}_i\) is the predicted probability of the i-th data point being in class 1. In \(L_{reg}\), \(y_i\) and \(\hat{y}_i\) denote the true and predicted regression targets (burned area) for the i-th data point. \(N\) is the total number of data points in the dataset, and \(\lambda\) is a tunable weight that balances the two losses.
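To make the combined objective concrete, here is a minimal pure-Python sketch of the total loss defined above. The function names, the clipping constant `eps`, and the example values are assumptions for illustration; an actual training loop would compute the same quantities on tensors inside a deep learning framework.

```python
import math


def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    """L_cls: mean binary cross-entropy; probabilities are clipped to avoid log(0)."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1.0 - eps)
        total += y * math.log(p) + (1 - y) * math.log(1 - p)
    return -total / len(y_true)


def mean_squared_error(t_true, t_pred):
    """L_reg: mean squared error between true and predicted regression targets."""
    return sum((t - q) ** 2 for t, q in zip(t_true, t_pred)) / len(t_true)


def total_loss(y_true, p_pred, t_true, t_pred, lam=1.0):
    """L = L_cls + lambda * L_reg."""
    return binary_cross_entropy(y_true, p_pred) + lam * mean_squared_error(t_true, t_pred)


# Hypothetical batch of two samples: class labels, predicted probabilities,
# true burned areas, and predicted burned areas.
loss = total_loss([1, 0], [0.5, 0.5], [1.0, 2.0], [1.0, 4.0], lam=0.5)
```

With these values the cross-entropy term equals log 2 and the squared-error term equals 2, so the total is log 2 + 0.5 × 2. A larger \(\lambda\) makes the regression error dominate the shared gradient signal.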

2.4 Explainable AI methods

Explainable Artificial Intelligence (XAI) involves the development of AI methods and techniques that produce results interpretable by human experts. In XAI, researchers primarily concentrate on two aspects: Interpretability [17] and Explainability [17] of AI models. Interpretability is the capacity of a model to present its workings in terms understandable to a human. Ideally, these explanations should be in the form of logical decision rules (if-then statements) or convertible into such rules. The terms used in these explanations should either stem from domain-specific knowledge pertinent to the task or be based on general knowledge relevant to that task. Explainability is a broader and more inclusive concept than interpretability. It refers to a model’s ability to clearly articulate its internal mechanics and the rationale behind its decisions, particularly in the case of complex, opaque ‘black-box’ models. Explainability extends beyond interpretability, often serving as a means to achieve a certain degree of interpretability in otherwise intricate models. In summary, explainability seeks to enhance the transparency and comprehensibility of models, thereby making them more accessible and accountable to users and stakeholders.

Here we introduce several XAI methods that we use in our paper.

2.4.1 TreeSHAP

TreeSHAP [18], [19] is a variant of the SHAP (SHapley Additive exPlanations) framework, designed to clarify machine learning predictions by calculating Shapley values for specific data points, detailing the combined contributions of its distinct feature variables.

In our study, we employed the TreeSHAP method to analyze the predictions made by the Extreme Gradient Boosting algorithm, with the goal of overcoming the typical opacity associated with machine learning algorithms and fostering an Explainable Artificial Intelligence (XAI) framework. By adopting this approach, we aimed not only to clarify the decision-making process of the model but also to pinpoint the key features that significantly impact both the classification of wildfire behavior and the estimation of the burned area. This methodology aids in unraveling the complexities of the algorithm, providing insights into how specific variables influence the model’s predictions.
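For intuition about what a Shapley value is, the sketch below computes exact Shapley values by brute force over all feature subsets. This is not TreeSHAP, which obtains the same kind of attribution efficiently by exploiting tree structure; here a feature that is "absent" from a coalition is simply replaced by a fixed baseline value, which is a simplifying assumption. The toy model, its coefficients, and the input values are hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values of model(x) relative to model(baseline)."""
    n = len(x)

    def value(subset):
        # Features in the coalition take their real value, the rest the baseline.
        z = [x[i] if i in subset else baseline[i] for i in range(n)]
        return model(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        contribution = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                contribution += weight * (value(s | {i}) - value(s))
        phi.append(contribution)
    return phi


def toy_model(z):
    """Hypothetical score with one interaction term (not the paper's model)."""
    wind_speed, smois, ignition = z
    return 2.0 * wind_speed - 1.5 * smois + 0.5 * wind_speed * ignition


x = [4.0, 0.2, 1.0]          # sample to explain
baseline = [2.0, 0.4, 0.0]   # reference values standing in for feature expectations
phi = shapley_values(toy_model, x, baseline)
```

The attributions satisfy the efficiency property: they sum to the difference between the prediction for `x` and the prediction for `baseline`. The cost grows exponentially with the number of features, which is why tree-specific algorithms are used in practice.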

2.4.2 LIME

LIME [20], short for Local Interpretable Model-agnostic Explanations, is based on a surrogate model. The surrogate is usually a linear model fitted to outputs of the main model: LIME samples points around an example and evaluates the main model at these points. LIME generally computes attributions on a per-sample basis. It takes a sample, perturbs it multiple times based on random binary vectors, and computes the output scores of the original model. It then uses the binary features (binary vectors) to train an interpretable surrogate model that reproduces those outputs. Each coefficient of the trained linear surrogate serves as the attribution of the corresponding input feature in the sample. One of the major issues with LIME is robustness: explanations can disagree when computed multiple times. This disagreement occurs mainly because the explanation is estimated from randomly sampled data, which introduces uncertainty. Moreover, the explanations can differ drastically depending on the kernel width and the feature grouping policy.
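The perturb-and-fit procedure described above can be sketched in pure Python as follows. This is a simplified illustration, not the reference LIME implementation: it fits an unregularized weighted least-squares surrogate, replaces "switched-off" features with a baseline value, and uses an exponential kernel on the fraction of switched-off features. All function names, the kernel form, the toy model, and the parameter values are assumptions.

```python
import math
import random


def solve_linear_system(a, b):
    """Solve a * w = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b_i] for row, b_i in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def lime_explain(model, x, baseline, n_samples=500, kernel_width=0.75, seed=0):
    """Return (intercept, per-feature attributions) of a local linear surrogate."""
    rng = random.Random(seed)
    d = len(x)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        mask = [rng.randint(0, 1) for _ in range(d)]       # random binary vector
        z = [x[i] if mask[i] else baseline[i] for i in range(d)]
        distance = math.sqrt(sum(1 - bit for bit in mask) / d)
        weights.append(math.exp(-(distance ** 2) / kernel_width ** 2))
        rows.append([1.0] + [float(bit) for bit in mask])   # intercept + binary features
        targets.append(model(z))
    # Weighted normal equations: (X^T W X) w = X^T W y.
    k = d + 1
    xtwx = [[sum(w * r[i] * r[j] for w, r in zip(weights, rows)) for j in range(k)]
            for i in range(k)]
    xtwy = [sum(w * r[i] * t for w, r, t in zip(weights, rows, targets))
            for i in range(k)]
    coef = solve_linear_system(xtwx, xtwy)
    return coef[0], coef[1:]


def toy_model(z):
    """Hypothetical linear black box used only to check the surrogate."""
    return 3.0 * z[0] - 2.0 * z[1] + 0.5 * z[2]


intercept, attributions = lime_explain(toy_model, [2.0, 1.0, 4.0], [0.0, 0.0, 0.0])
```

Because the toy model is itself linear, the surrogate recovers it exactly and the attributions equal each coefficient times the feature value. For a nonlinear model, changing `seed` or `kernel_width` changes the explanation, which is the robustness issue noted above.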

2.4.3 Partial Dependence Plot

The partial dependence plot [21], often abbreviated as PDP, illustrates the marginal influence that one or two features exert on a machine learning model’s predicted result (as noted by J. H. Friedman, 2001). This plot can reveal if the association between the target and a specific feature is linear, monotonic, or of a more intricate nature. In the context of a linear regression model, these plots consistently depict a linear relationship.
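The computation behind a one-feature PDP is short enough to sketch directly: for each grid value, the feature of interest is overwritten in every row of the data and the model outputs are averaged. The function name, the toy linear model, and the data values below are assumptions for illustration.

```python
def partial_dependence(model, data, feature_index, grid):
    """Average model output over the data with one feature forced to each grid value."""
    curve = []
    for value in grid:
        total = 0.0
        for row in data:
            modified = list(row)
            modified[feature_index] = value   # overwrite the feature of interest
            total += model(modified)
        curve.append(total / len(data))
    return curve


def toy_model(row):
    """Hypothetical linear model of two features (wind speed, soil moisture)."""
    wind_speed, smois = row
    return 0.8 * wind_speed - 2.0 * smois


data = [[2.0, 0.1], [5.0, 0.3], [8.0, 0.2]]
grid = [0.0, 5.0, 10.0]
pd_wind = partial_dependence(toy_model, data, 0, grid)
```

For this linear toy model the resulting curve is a straight line with slope 0.8, consistent with the remark that PDPs of linear regression models are always linear.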

2.5 Grad-CAM

Gradient-weighted Class Activation Mapping (Grad-CAM) [22] creates a coarse localization map that highlights the key areas in the image for concept prediction by using the gradients of each target concept flowing into the final convolutional layer. Here we add Grad-CAM to our classification neural network to show which image regions contribute most to the predicted fire behavior class. It determines which areas of the feature map correlate strongly with the classification result by propagating the output probability backward through the network.
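The final combination step of Grad-CAM can be sketched without a deep learning framework, assuming the feature maps of the last convolutional layer and the gradients of the class score with respect to them have already been obtained by backpropagation. The function name, the tiny 2×2 example, and the final rescaling to [0, 1] (a common visualization convenience) are assumptions.

```python
def grad_cam(feature_maps, gradients):
    """Combine K feature maps (H x W each) into one heatmap using gradient weights."""
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    # Channel weights: global average of each channel's gradient map.
    alphas = [sum(sum(row) for row in g) / (height * width) for g in gradients]
    cam = [[0.0] * width for _ in range(height)]
    for alpha, fmap in zip(alphas, feature_maps):
        for i in range(height):
            for j in range(width):
                cam[i][j] += alpha * fmap[i][j]
    # ReLU keeps only regions with a positive influence on the class score.
    cam = [[max(v, 0.0) for v in row] for row in cam]
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return cam


# Two hypothetical 2x2 channels: the first supports the class, the second opposes it.
feature_maps = [[[1.0, 0.0], [0.0, 0.0]],
                [[0.0, 0.0], [0.0, 1.0]]]
gradients = [[[1.0, 1.0], [1.0, 1.0]],
             [[-1.0, -1.0], [-1.0, -1.0]]]
heatmap = grad_cam(feature_maps, gradients)
```

In this example only the top-left cell remains highlighted: the second channel receives a negative weight, so its activation is removed by the ReLU. In practice the coarse map is upsampled to the input image size and overlaid on it.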

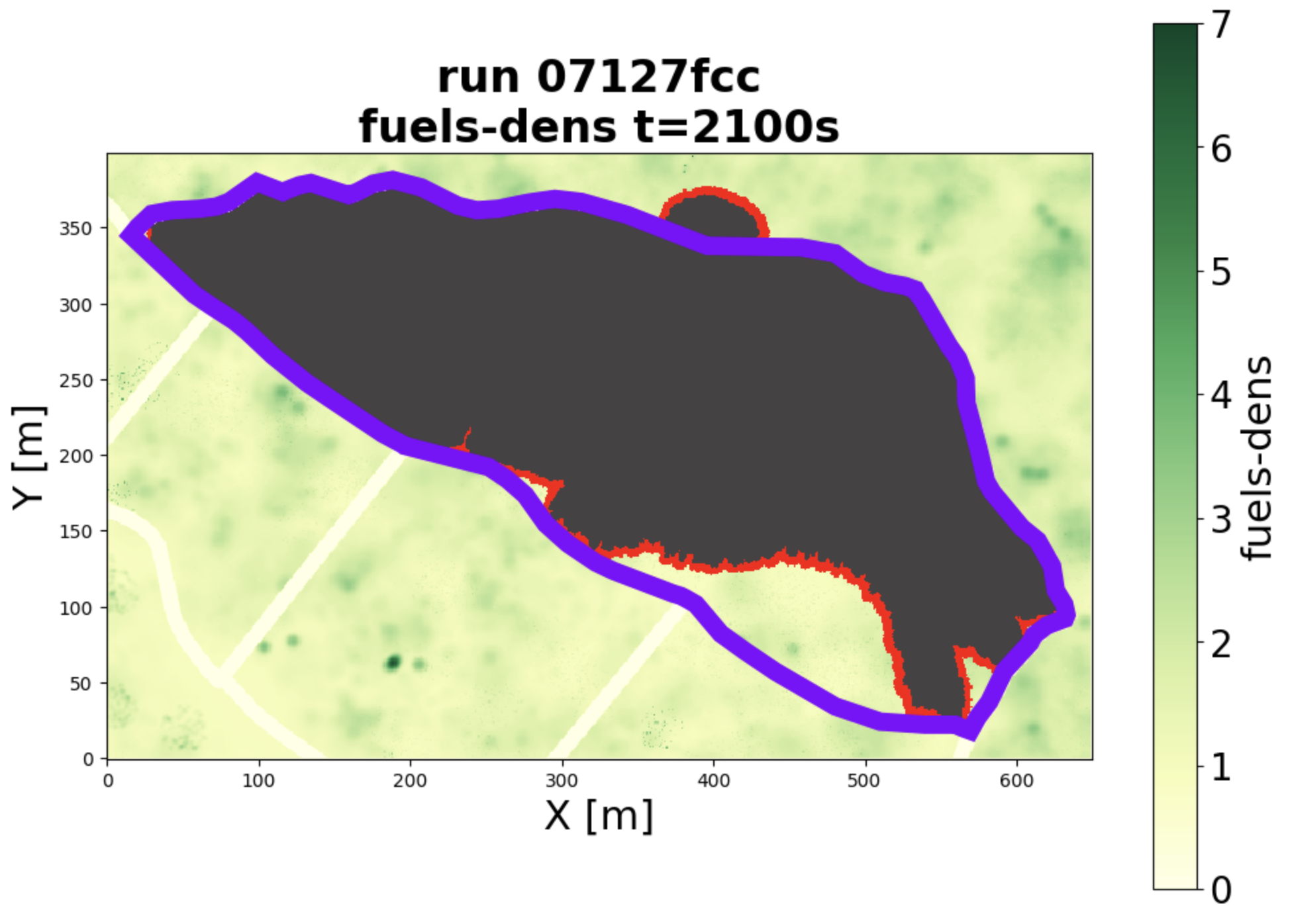

Figure 1: Wildfire simulation images from QUIC-fire.

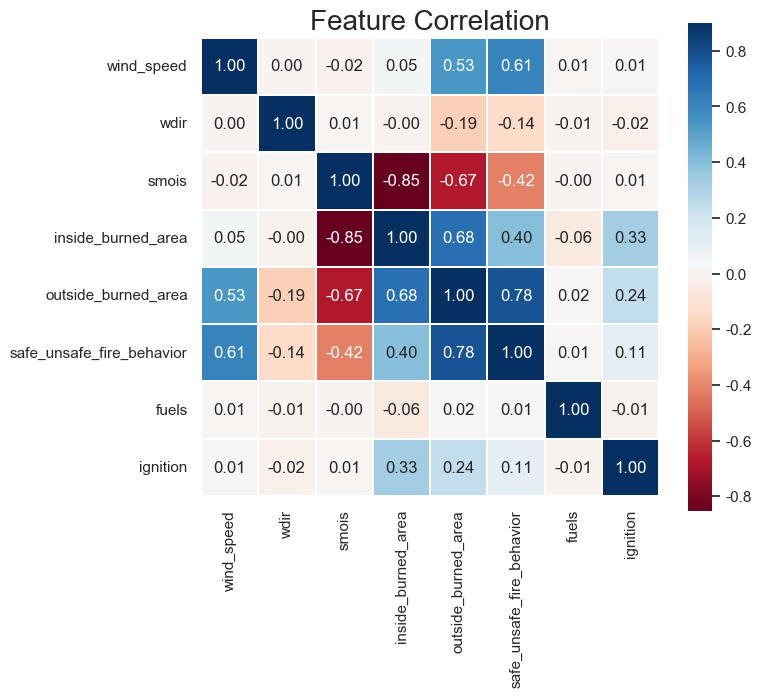

Figure 2: Feature Correlation.

3 Experiment

3.1 Data

In our research, we conducted experiments using our proposed methods on a benchmark dataset derived from the public Yosemite wildfire. Our study incorporated five key features: ‘wind_speed’, ‘wdir’ (wind direction), ‘smois’ (soil moisture), ‘fuels’, and ‘ignition’. These features were selected based on their relevance and potential impact on wildfire behavior and spread.

For the classification task, our primary objective was to predict ‘\(safe\_unsafe\_fire\_behavior\)’. This involved categorizing fire behavior as either safe or unsafe based on the given conditions. In addition to the tabular inputs, we also integrated image data into our Convolutional Neural Network (CNN) approach. We utilized the simulation images from QUIC-fire [23], as depicted in Figure 1, to enhance our predictive capabilities. Regarding the regression task, we aimed to predict two specific outcomes: ‘\(inside\_burned\_area\)’ and ‘\(outside\_burned\_area\)’. These targets quantify the areas affected by the wildfire, both within and beyond the fire’s immediate boundary.

To better understand the interplay between the selected features and these targets, we refer to Figure 2, which illustrates the correlations and relationships between these variables. This visual representation aids in comprehending the intricate dynamics at play in wildfire spread and impact, thereby enriching our analysis and the effectiveness of our predictive models.

3.2 Evaluation Metrics

In our study, we evaluated the performance of our machine learning models using the following widely used metrics.

Accuracy: It is defined as the proportion of all samples that are classified correctly. It can be computed as: \[\text{Accuracy}=\frac{TP+TN}{TP+FP+TN+FN}\]

Recall: It is defined as the ratio of the number of positive samples correctly predicted as positive to the number of all positive samples in the data. It can be computed as: \[\text{Recall}=\frac{TP}{TP+FN}\]

Precision: It is defined as the ratio of the number of positive samples correctly predicted as positive to the number of all samples predicted as positive. It can be computed as: \[\text{Precision}=\frac{TP}{TP+FP}\] where TP, FP, TN, and FN are the numbers of True Positives, False Positives, True Negatives, and False Negatives, respectively.

F1-Score: It is the harmonic mean of recall and precision, which balances the two. It can be defined as: \[\text{F1-Score}=2 \times \frac{\text{Recall} \times \text{Precision}}{\text{Recall}+\text{Precision}}\]
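The four classification metrics above follow directly from the confusion-matrix counts, as the following sketch shows. The function name and the example labels are hypothetical, and returning 0.0 when a denominator is zero is a convention chosen here, not something specified in the text.

```python
def classification_metrics(y_true, y_pred):
    """Compute accuracy, recall, precision, and F1 for binary labels (1 = positive)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    denominator = recall + precision
    f1 = 2 * recall * precision / denominator if denominator else 0.0
    return {"accuracy": accuracy, "recall": recall, "precision": precision, "f1": f1}


# Hypothetical labels: 3 true positives, 3 true negatives, 1 false positive, 1 false negative.
y_true = [1, 1, 1, 0, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0, 1, 0]
metrics = classification_metrics(y_true, y_pred)
```

With these labels all four metrics equal 0.75, since the single false positive and the single false negative affect precision and recall symmetrically.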

Mean Absolute Error (MAE): It is defined as the mean of the absolute values of the errors: \[\text{MAE}=\frac{1}{n} \sum_{i=1}^n\left|y_i-\hat{y}_i\right|\]

Mean Squared Error (MSE): It is defined as the mean of the squared errors: \[\text{MSE}=\frac{1}{n} \sum_{i=1}^n\left(y_i-\hat{y}_i\right)^2\]

Root Mean Squared Error (RMSE): It is defined as the square root of the mean of the squared errors: \[\text{RMSE}=\sqrt{\frac{1}{n} \sum_{i=1}^n\left(y_i-\hat{y}_i\right)^2}\]

R-squared (R2) score: Also known as the coefficient of determination, it is a statistical measure that represents the proportion of the variance in the dependent variable (target) that is predictable from the independent variables (features) in a regression model. It measures the goodness of fit of the regression model to the data. \[R^2=1-\frac{RSS}{TSS}\] where \(R^2\) is the coefficient of determination, \(RSS=\sum_{i=1}^n\left(y_i-\hat{y}_i\right)^2\) is the sum of squares of residuals, and \(TSS=\sum_{i=1}^n\left(y_i-\bar{y}\right)^2\) is the total sum of squares. In all four formulas, \(n\) is the number of samples, \(y_i\) is the true value, \(\hat{y}_i\) is the predicted value, and \(\bar{y}\) is the mean of the true values.
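The regression metrics above can be computed in a few lines, as sketched below. The function name and the example values are hypothetical and are not taken from the experiments.

```python
import math


def regression_metrics(y_true, y_pred):
    """Compute MAE, MSE, RMSE, and R^2 from true and predicted values."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    mse = sum(e ** 2 for e in errors) / n
    rmse = math.sqrt(mse)
    mean_true = sum(y_true) / n
    rss = sum(e ** 2 for e in errors)                  # residual sum of squares
    tss = sum((t - mean_true) ** 2 for t in y_true)    # total sum of squares
    r2 = 1.0 - rss / tss
    return {"mae": mae, "mse": mse, "rmse": rmse, "r2": r2}


# Hypothetical burned-area values.
scores = regression_metrics([3.0, 5.0, 7.0, 9.0], [2.0, 5.0, 8.0, 9.0])
```

Here RSS is 2 and TSS is 20, so the R2 score is 0.9, while MAE and MSE both equal 0.5. Note that R2 is undefined when all true values are identical (TSS = 0); the sketch does not guard against that case.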

3.3 Parameter Optimization

Hyperparameter optimization is a critical component in the design of machine learning algorithms, where traditional methods like grid search and random search can often be computationally expensive. Bayesian optimization emerges as a principled strategy for global optimization of expensive black-box functions, making it particularly suitable for hyperparameter tuning.

Bayesian optimization is an effective method for finding the extrema of functions that are computationally expensive to evaluate [6]. It can be applied to functions that have no closed-form expression, that are expensive to calculate, whose derivatives are hard to evaluate, or that are nonconvex. Given a machine learning model with hyperparameters \(\theta\), and a validation set loss \(L(\theta)\), the goal is to find: \[\theta^*=\arg \min _\theta L(\theta)\] Bayesian optimization assists by constructing a surrogate probabilistic model over the loss function and efficiently searching the hyperparameter space \(\Theta\) to minimize the expected validation loss. Tables 1 and 2 show the parameter space and the optimized parameters for the classification models, respectively. Table 3 shows the parameter space for the regression models, and Tables 4 and 5 show the optimized parameters for the inside burned area and outside burned area models, respectively.

To optimize the performance of our Convolutional Neural Network (CNN) model, we engaged in a systematic parameter tuning process. This involved adjusting several key hyperparameters to refine the network’s ability to learn from the data effectively and make accurate predictions. The parameters we tuned include the learning rate and the weight \(\lambda\) of the regression term in the total loss.

Table 1: Parameter space for the classification models.

| Model | Parameters |
|---|---|
| Logistic Regression | C: \(0.1\) to \(10\) (log-uniform), Penalty: l1, l2, Solver: liblinear, lbfgs |
| KNN | n_neighbors: 3, 5, 7, 9, 11, 13, p: 1, 2 |
| SVM | C: \(0.1\) to \(10\) (log-uniform), Kernel: linear, rbf |
| RandomForest | n_estimators: 10 to 100, max_depth: 10 to 20, min_samples_split: 2 to 10 |
| DecisionTree | max_depth: 10 to 20, min_samples_split: 2 to 10 |
| Xgboost | n_estimators: 50 to 200, max_depth: 3 to 5, learning_rate: \(0.01\) to \(0.2\) (log-uniform) |
| Catboost | iterations: 50 to 200, depth: 4 to 8, learning_rate: \(0.01\) to \(0.2\) (log-uniform) |

Table 2: Optimized parameters for the classification models.

| Model | Best Parameters |
|---|---|
| Logistic | C: \(0.7756\), Penalty: l2, Solver: lbfgs |
| KNN | n_neighbors: 7, p: 2 |
| SVM | C: \(4.2149\), Kernel: linear |
| RandomForest | max_depth: 14, min_samples_split: 8, n_estimators: 94 |
| DecisionTree | max_depth: 14, min_samples_split: 8 |
| Xgboost | learning_rate: \(0.1140\), max_depth: 3, n_estimators: 140 |
| Catboost | depth: 6, iterations: 159, learning_rate: \(0.1636\) |

Table 3: Parameter space for the regression models.

| Model | Parameter Space |
|---|---|
| ExplainableBoostingRegressor | learning_rate: Real(0.01, 0.2, prior=‘log-uniform’) |
| Linear Regression | fit_intercept: Categorical([True, False]) |
| Logistic Regression | C: Real(0.1, 10, prior=‘log-uniform’), penalty: Categorical([‘l1’, ‘l2’]), solver: Categorical([‘liblinear’, ‘lbfgs’]) |
| Ridge Regression | alpha: Real(0.01, 10, prior=‘log-uniform’) |
| Lasso Regression | alpha: Real(0.01, 10, prior=‘log-uniform’) |
| ElasticNet | alpha: Real(0.01, 10, prior=‘log-uniform’), l1_ratio: Real(0.1, 0.9, prior=‘uniform’) |
| SVM | C: Real(0.1, 10, prior=‘log-uniform’), kernel: Categorical([‘linear’, ‘rbf’]), gamma: Categorical([‘scale’, ‘auto’]) |
| RandomForest | n_estimators: Integer(10, 100), max_depth: Integer(10, 20), min_samples_split: Integer(2, 10) |
| Xgboost | n_estimators: Integer(50, 200), max_depth: Integer(3, 5), learning_rate: Real(0.01, 0.2, prior=‘log-uniform’) |
| Catboost | iterations: Integer(50, 200), depth: Integer(4, 8), learning_rate: Real(0.01, 0.2, prior=‘log-uniform’) |

Table 4: Optimized parameters for the inside burned area models.

| Model | Best Params |
|---|---|
| ExplainableBoostingRegressor | ‘learning_rate’: 0.114012258603381 |
| Linear Regression | ‘fit_intercept’: True |
| Logistic Regression | ‘C’: 4.2149456283335, ‘penalty’: ‘l1’, ‘solver’: ‘liblinear’ |
| Ridge Regression | ‘alpha’: 0.16994636371262764 |
| Lasso Regression | ‘alpha’: 3.2521088005944945 |
| ElasticNet | ‘alpha’: 0.2160217783087772, ‘l1_ratio’: 0.8349780173355017 |
| SVM | ‘C’: 4.7290805470559185, ‘gamma’: ‘scale’, ‘kernel’: ‘linear’ |
| RandomForest | ‘max_depth’: 18, ‘min_samples_split’: 3, ‘n_estimators’: 64 |
| Xgboost | ‘learning_rate’: 0.12287608582119026, ‘max_depth’: 5, ‘n_estimators’: 96 |
| Catboost | ‘depth’: 6, ‘iterations’: 159, ‘learning_rate’: 0.16356457461011642 |

Table 5: Optimized parameters for the outside burned area models.

| Model | Best Params |
|---|---|
| ExplainableBoostingRegressor | ‘learning_rate’: 0.03790883530488498 |
| Linear | ‘fit_intercept’: True |
| Logistic | ‘C’: 4.2149456283335, ‘penalty’: ‘l1’, ‘solver’: ‘liblinear’ |
| Ridge | ‘alpha’: 0.16994636371262764 |
| Lasso | ‘alpha’: 2.5041499136197736 |
| ElasticNet | ‘alpha’: 0.2160217783087772, ‘l1_ratio’: 0.8349780173355017 |
| SVM | ‘C’: 4.7290805470559185, ‘gamma’: ‘scale’, ‘kernel’: ‘linear’ |
| RandomForest | ‘max_depth’: 18, ‘min_samples_split’: 3, ‘n_estimators’: 64 |
| Xgboost | ‘learning_rate’: 0.12287608582119026, ‘max_depth’: 5, ‘n_estimators’: 96 |
| Catboost | ‘depth’: 6, ‘iterations’: 159, ‘learning_rate’: 0.16356457461011642 |

4 Results and Discussion

4.1 Classification

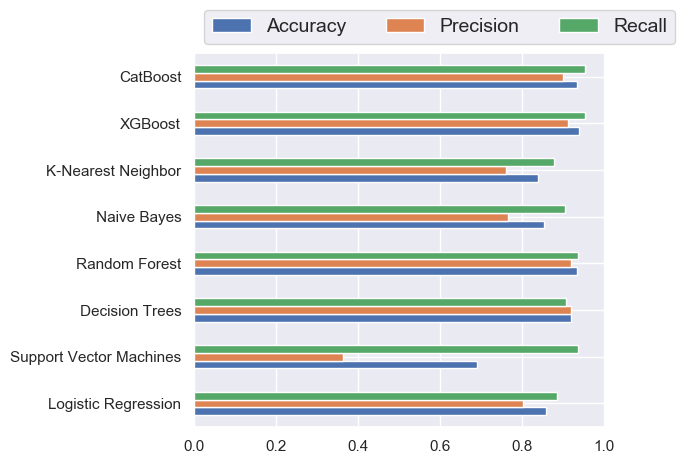

Figure 3: Performance evaluation with all features with best hyperparameters of classifiers

The Precision, Recall, F1-Score, and Accuracy scores of the machine learning classifiers are presented in Figure 3. XGBoost achieves the best result, so we select it as the best model and analyze its results.

It should be pointed out that the ANN does not perform well on the classification task, with an accuracy of 0.833, a precision of 0.90, and a recall of 0.70. The neural network model is a typical black-box model. Through internal non-linear transformations, the model obscures the correlation between inputs and outputs. Currently, the interpretability of neural networks is a hot research topic, but there is no universally recognized mainstream method to interpret the parameters and outputs of neural networks. Neural networks may underperform here because the data are linearly separable or very close to it. The predominant factors in the predictions seem to be soil moisture (‘smois’) and wind speed, making the data appear “too straightforward” for a neural network to learn from effectively.

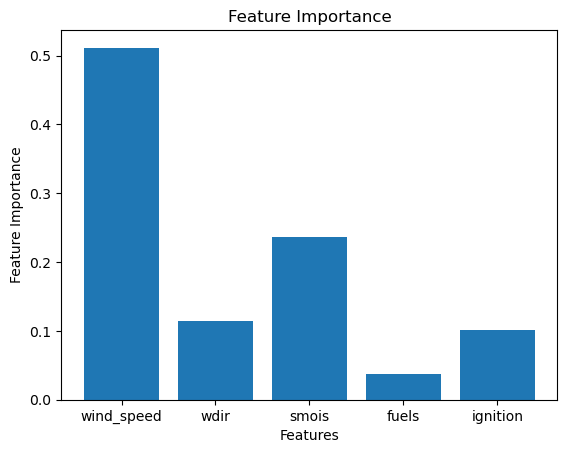

Figure 4: Feature Importance for XGBoost classifier.

The XGBoost method learns iteratively using decision tree models. At each round, it fits a decision tree to the residuals that the previous trees could not handle correctly. In each decision tree, the closer a feature is to the root node, the greater its importance. Therefore, by averaging the importance of each feature over these decision trees, we can obtain the importance of each feature in the entire model. However, this importance reflects only the ranking of the features’ impact on the target, rather than the quantitative relationship between each feature and the target. Figure 4 shows that \(wind\_speed\) is the most important feature.

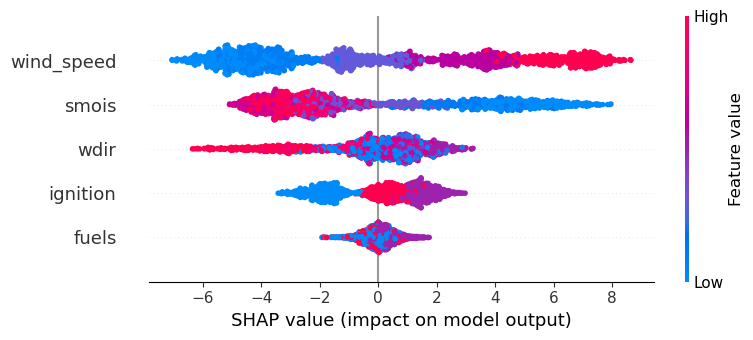

Figure 5: SHAP value (impact on model output)

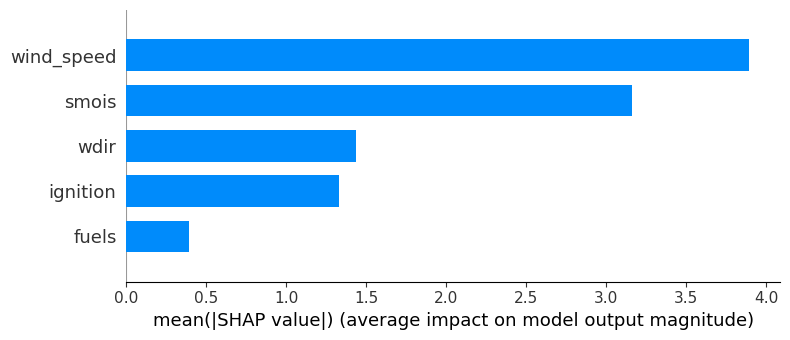

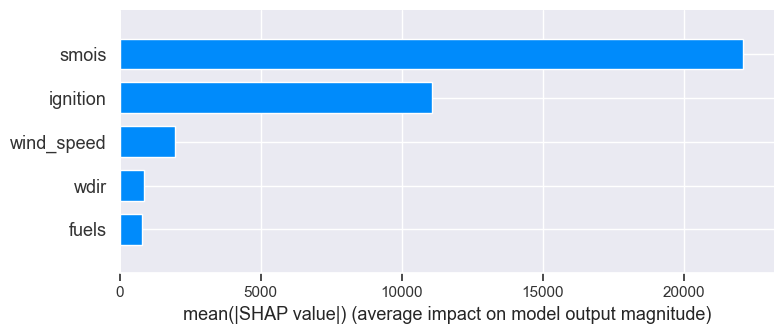

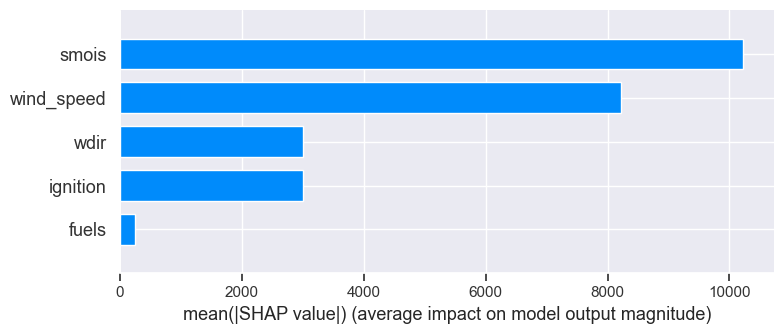

Figure 6: Average impact on model output magnitude

The Shapley analysis method can analyze the impact of a specific feature value on the target in a black-box model. Specifically, it calculates the difference between the target value computed by the black-box model when the feature takes a given value and the target value computed when the feature takes its mathematical expectation. This makes it a quantitative analysis tool. For example, from Figures 5 and 6, we can see that when \(wind\_speed\) takes its minimum value, the target value calculated by the black box is 6 units lower than the average. When \(wind\_speed\) takes its maximum value, the target value calculated by the black box is about 8 units higher than the average. The core idea of the Shapley method is to quantify the impact on the target value of changing one feature while the other features remain at their expected levels. In this case, the analysis shows that \(wind\_speed\) has the greatest impact on the target, with a positive relationship. \(smois\) has a slightly lower impact on the target, with a negative relationship. When \(ignition\) takes a lower value, the target value is relatively small, but its relationship with the target value is unclear when it takes a medium or larger value. The other two features have little relationship with the target.

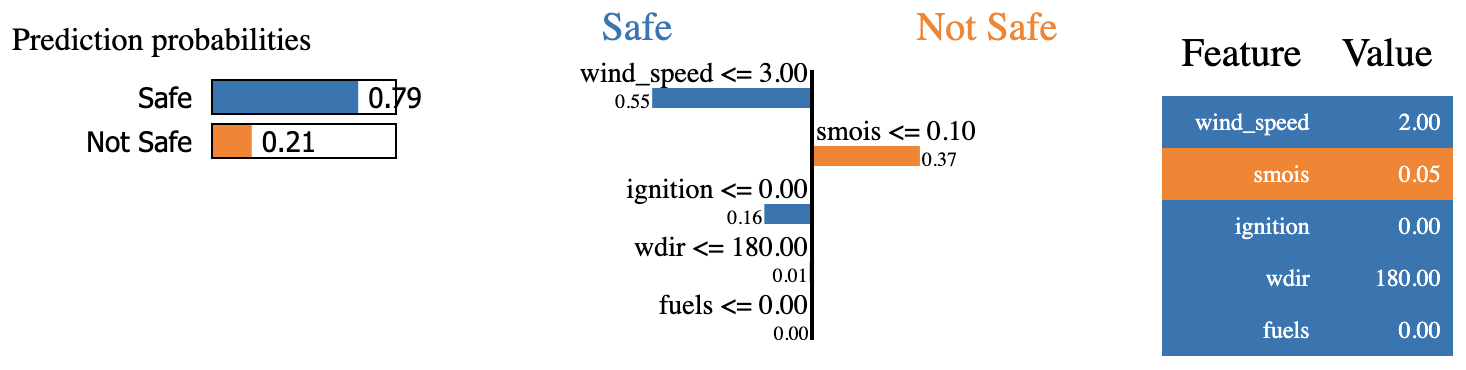

Figure 7: LIME for classification case.

The LIME (Local Interpretable Model-agnostic Explanations) algorithm uses local linear approximation to analyze the local interpretability of a black-box model. For example, Figure 7 shows one sample, for which we analyze the local linearity of the XGBoost model around it. A linear model is used to approximate the predictions given by the XGBoost model near this sample point. The weight of \(wind\_speed\) is -2, \(smois\) is 0.05, \(wdir\) is -180, and the weights of the other two features are 0. The core difference between the LIME algorithm and the Shapley algorithm is that the LIME algorithm explains the local characteristics of the black-box model, while the Shapley algorithm explains the average characteristics of the black-box model.

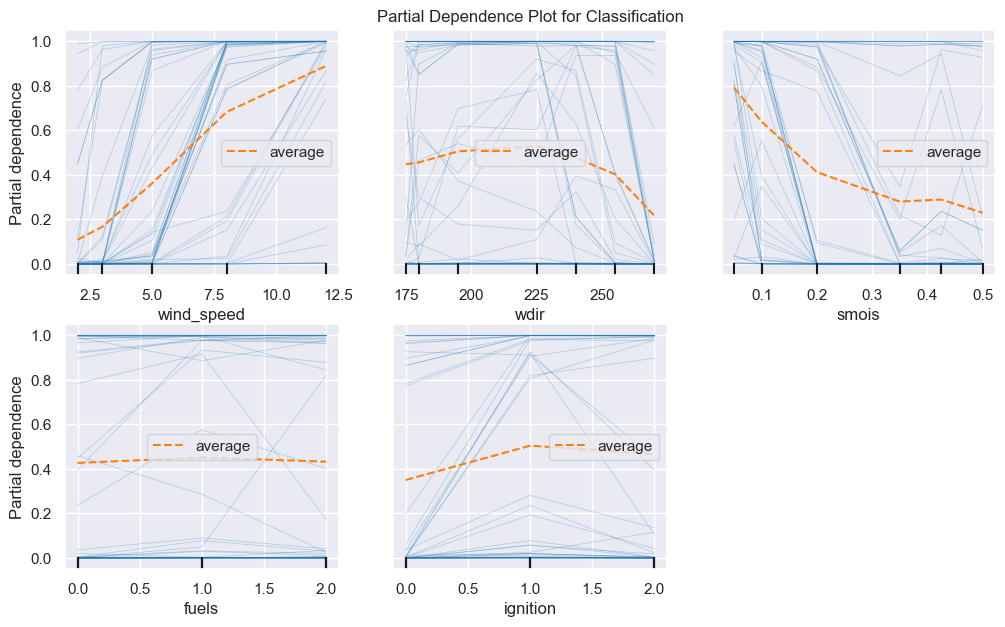

Figure 8: Partial Dependence Plot (PDP) for classification.

A Partial Dependence Plot (PDP) depicts the marginal effect of a feature on the predicted outcome of a machine learning model. The PDP is computed after the model has been trained and offers insight into the relationship between a feature and the predicted outcome. Figure 8 shows that \(wind\_speed\) has a positive relationship with the output and \(smois\) has a negative relationship.
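As a minimal sketch of how a partial dependence curve is obtained, the code below forces one feature to each value on a grid, leaves the other features at their observed values, and averages the model predictions. The model `toy_model`, the rows in `data`, and the grids are hypothetical and serve only to illustrate the computation.

```python
def partial_dependence(model, data, feature, grid):
    """Average prediction over `data` with column `feature` set to each grid value."""
    curve = []
    for value in grid:
        total = 0.0
        for row in data:
            modified = list(row)
            modified[feature] = value  # overwrite only the feature of interest
            total += model(modified)
        curve.append(total / len(data))
    return curve


# Hypothetical toy model over (wind_speed, smois); not the paper's model.
def toy_model(features):
    wind_speed, smois = features
    return 1.5 * wind_speed - 4.0 * smois


# Hypothetical observations: [wind_speed, smois].
data = [[2.0, 0.1], [6.0, 0.3], [10.0, 0.5]]

pd_wind = partial_dependence(toy_model, data, feature=0, grid=[0.0, 5.0, 10.0])
pd_smois = partial_dependence(toy_model, data, feature=1, grid=[0.1, 0.3, 0.5])
print("wind_speed curve:", pd_wind)
print("smois curve:", pd_smois)
```

In this toy setting the `wind_speed` curve rises and the `smois` curve falls, mirroring the kind of positive and negative relationships read from a PDP.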

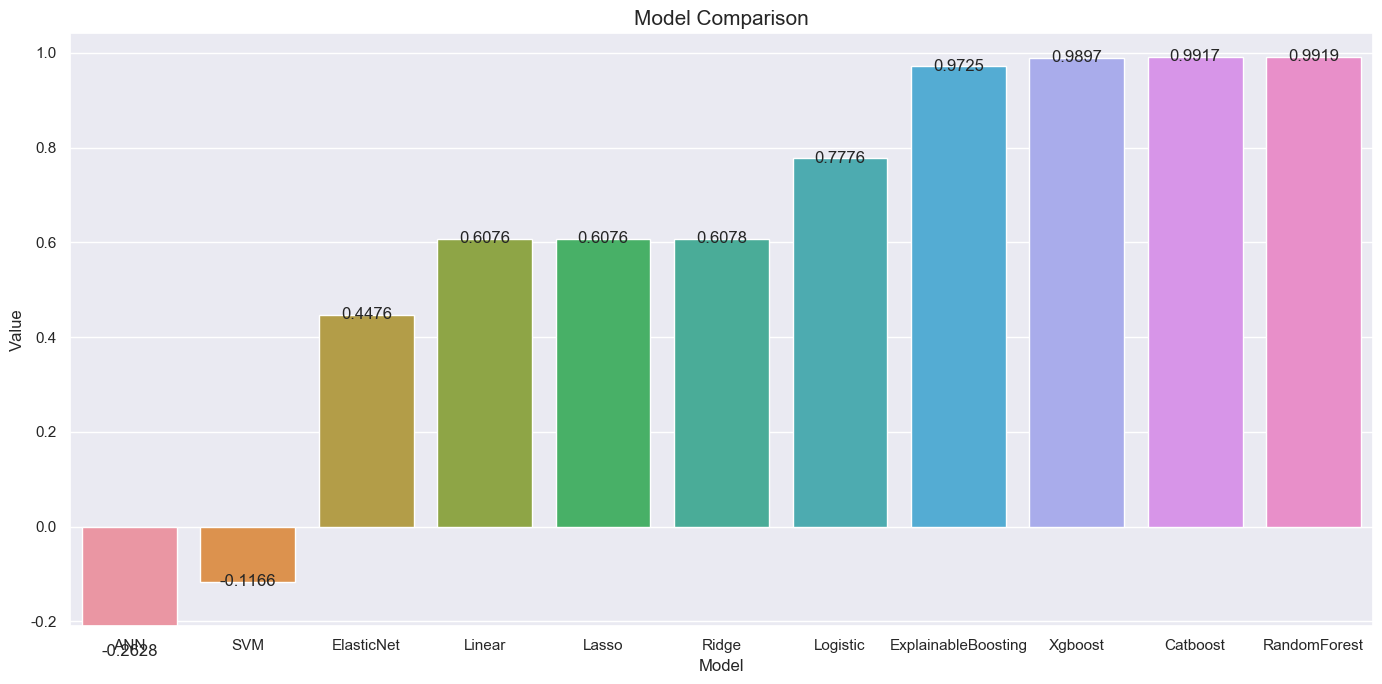

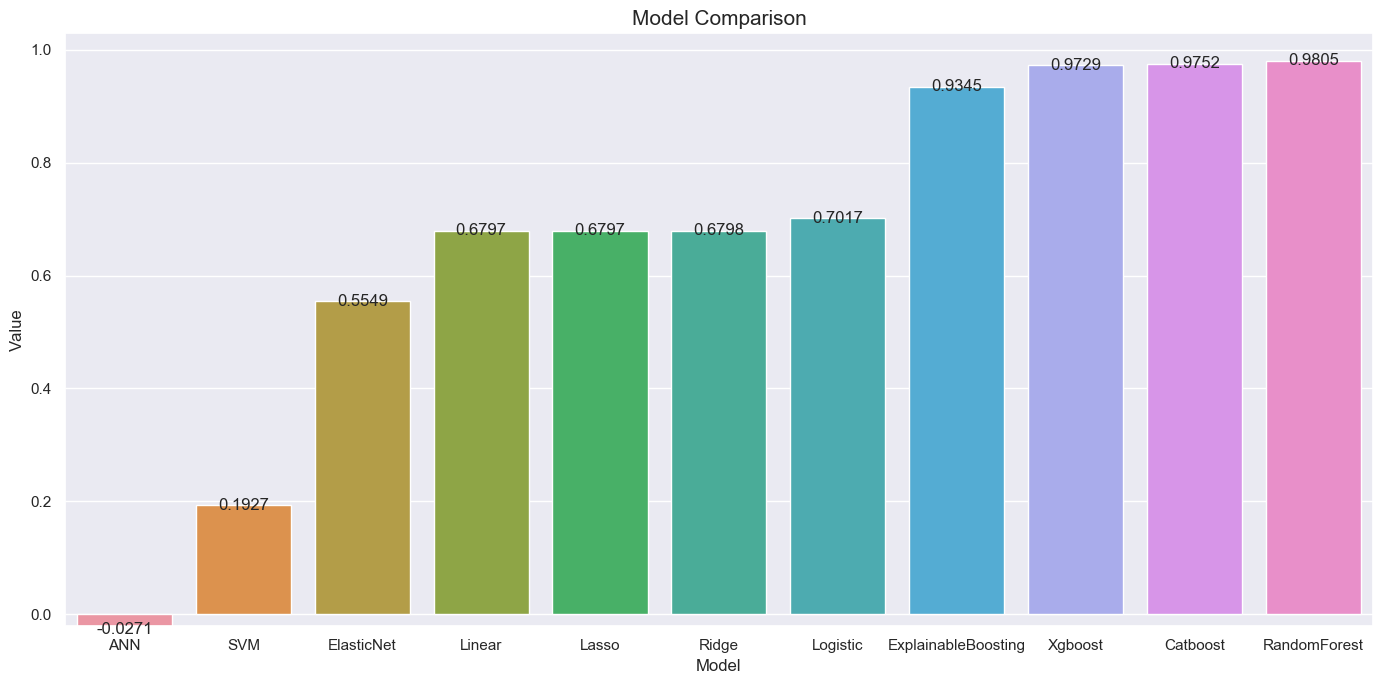

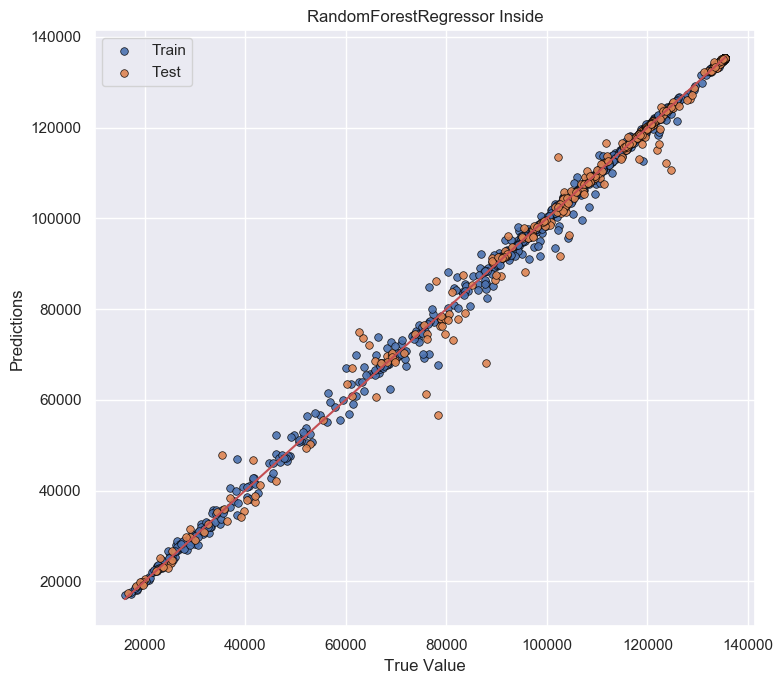

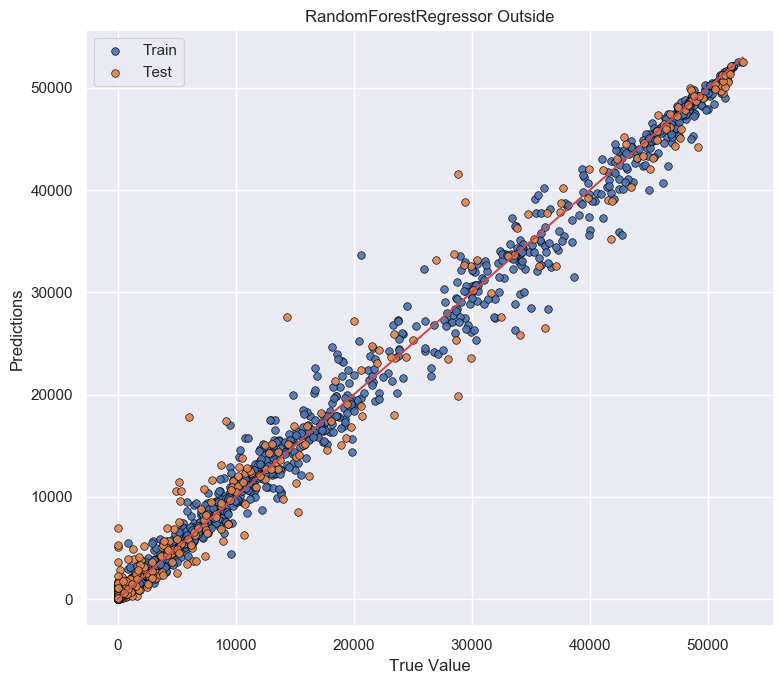

4.2 Regression

The \(R^2\) scores of the regression models are presented in Figures 9 and 10. Random Forest Regression achieves the best results for both the inside and outside cases. The regression results are plotted in Figures 11 and 12, where the fitted values agree well with the targets.
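For reference, the coefficient of determination compares the residual sum of squares of a model with the total sum of squares around the mean, \(R^2 = 1 - SS_{res}/SS_{tot}\). The short sketch below computes it directly; the arrays `y_true` and `y_pred` are hypothetical values, not results from this study.

```python
def r2_score(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical burned-area targets and predictions, for illustration only.
y_true = [1.0, 2.0, 3.0, 4.0]
y_pred = [1.1, 1.9, 3.2, 3.8]
print(f"R^2 = {r2_score(y_true, y_pred):.3f}")
```

A value of 1 corresponds to perfect predictions, and a value of 0 corresponds to a model that does no better than always predicting the mean of the targets.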

Figure 9: \(R^2\) of different regression models for the inside burned area.

Figure 10: \(R^2\) of different regression models for the outside burned area.

Figure 11: Regression results for inside burned area.

Figure 12: Regression results for outside burned area.

Figure 13: Average impact on model output magnitude for inside burned area.

Figure 14: Average impact on model output magnitude for outside burned area.

Here, TreeSHAP analysis of the Random Forest Regression model is used to explain the model's predictions. SHAP values give the contribution of each feature to an individual prediction, so analyzing them reveals the importance of each feature and its relationship with the target variable. According to this analysis, in the inside case \(smois\) and \(ignition\) have the greatest impact on the target, and the relationship is positive, while \(wdir\) and \(fuels\) have little impact on the model output. For the outside burned area, \(smois\) and \(wind\_speed\) are the most important features (Figures 13 and 14).
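The "average impact on model output magnitude" shown in such summary plots is commonly the mean absolute SHAP value of each feature over all samples. A minimal sketch of that aggregation is given below. The matrix `shap_matrix` contains made-up numbers for illustration only and does not reproduce the values of this study; the function name is likewise hypothetical.

```python
def mean_abs_shap(shap_matrix, feature_names):
    """Rank features by mean absolute SHAP value (rows: samples, columns: features)."""
    n_samples = len(shap_matrix)
    scores = {
        name: sum(abs(row[j]) for row in shap_matrix) / n_samples
        for j, name in enumerate(feature_names)
    }
    # Largest average magnitude first.
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


feature_names = ["wind_speed", "smois", "ignition", "wdir", "fuels"]

# Hypothetical per-sample SHAP values; NOT taken from the paper's figures.
shap_matrix = [
    [0.4, -2.1, 1.2, 0.05, -0.02],
    [-0.3, 1.8, -0.9, -0.04, 0.03],
    [0.5, -2.4, 1.5, 0.02, 0.01],
]

for name, score in mean_abs_shap(shap_matrix, feature_names):
    print(f"{name}: {score:.3f}")
```

Because the absolute value is taken before averaging, this ranking reflects only the size of each feature's effect; the sign of the relationship has to be read from the per-sample SHAP values.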

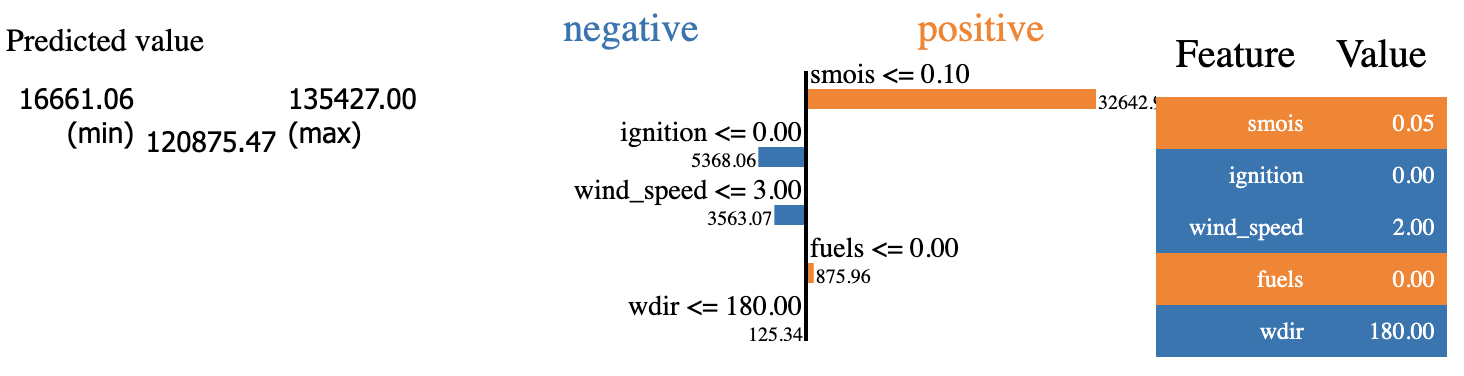

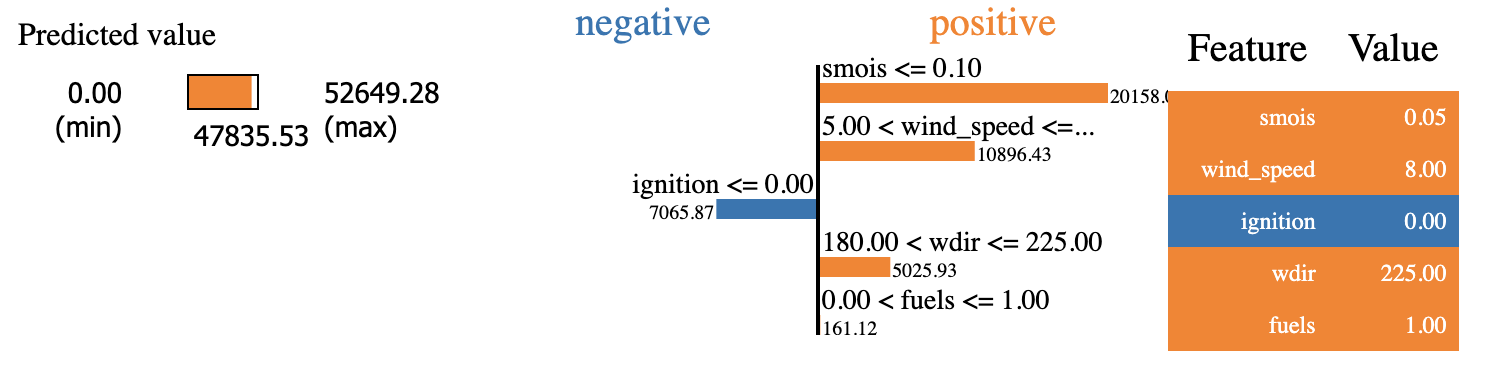

Figure 15: LIME for the inside burned area regression case.

Figure 16: LIME for the outside burned area regression case.

Figures 15 and 16 show one example each for the inside and outside cases, for which we analyzed the local linearity of the Random Forest Regression model. To sum up, \(smois\) and \(ignition\) have the most influence on the inside burned area, while \(smois\) and \(wind\_speed\) have the most influence on the outside burned area.

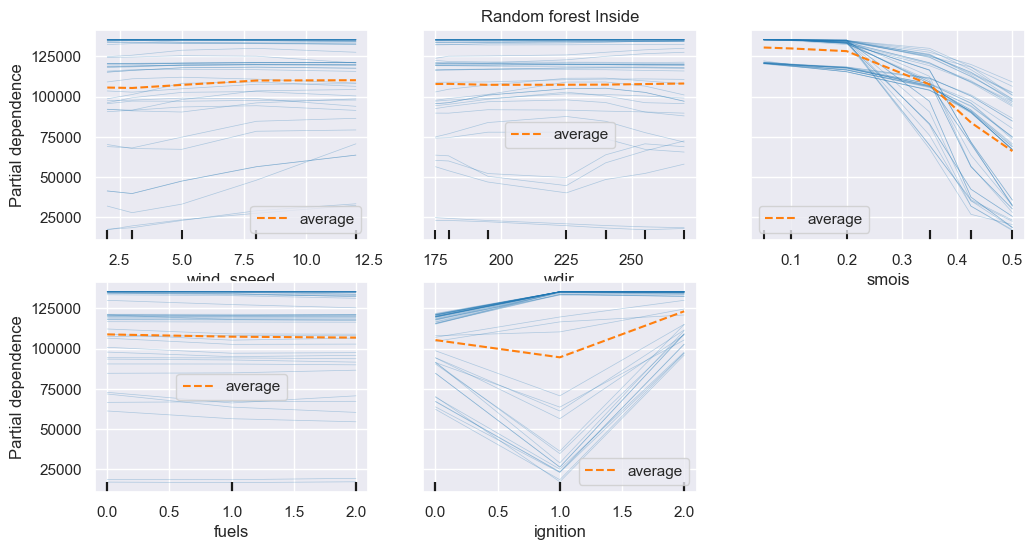

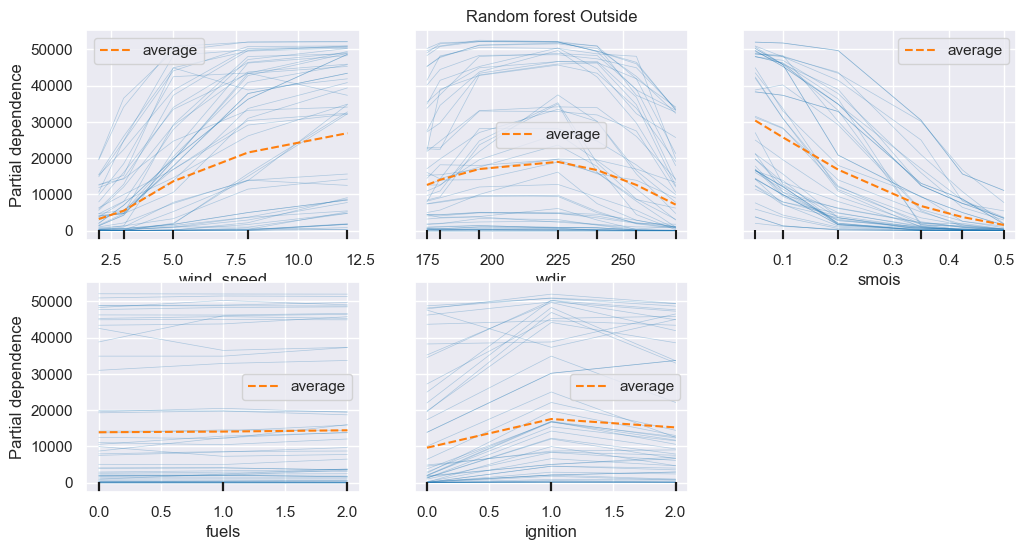

Figure 17: PDP for inside burned area regression case.

Figure 18: PDP for outside burned area regression case.

In the PDPs of Figures 17 and 18, the direction of a feature's effect can be read from the slope of the curve. A positive slope means that the predicted target generally increases as the feature's value increases; conversely, a negative slope means that the predicted target decreases as the feature's value increases.

\(smois\) has its largest influence on both the inside and outside burned area predictions when its value is small. \(wind\_speed\) influences the outside burned area when it takes larger values (above 10).

4.3 CNN

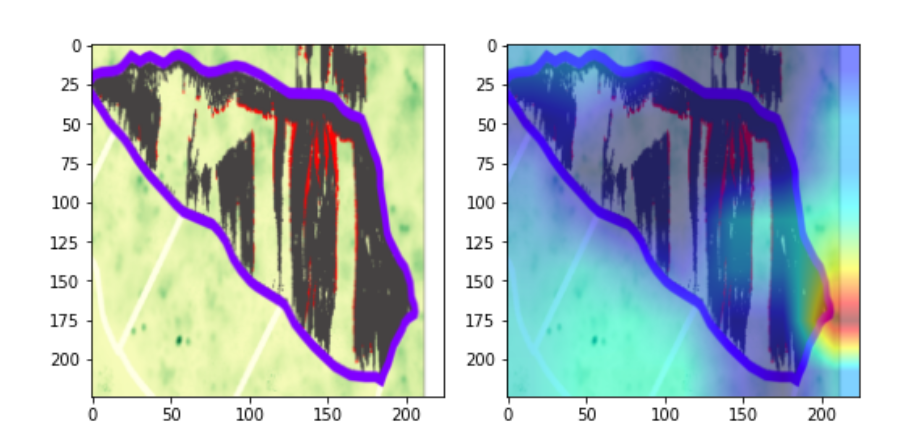

After tuning the hyperparameters, we found that a learning rate of 0.01 and \(\lambda = 5\) yield the best results. The CNN reaches a classification accuracy of 93.10%, and the regression errors for the inside and outside burned areas are 0.75% and 2.41%, respectively. Since the classification uses images, we applied Grad-CAM to explain the classification decisions of the model. Grad-CAM highlights the regions of the input image that most influence the classification output, which gives an intuitive view of where the model focuses when distinguishing classes and makes its decision process more transparent. Figure 19 visualizes the output of the last layer of the neural network; the red region in the right panel marks the area that helps distinguish inside from outside.

Figure 19: Grad-CAM for prediction.
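The core Grad-CAM computation can be sketched in a few lines: each feature map of the chosen convolutional layer is weighted by the spatial average of the class-score gradient with respect to that map, the weighted maps are summed, and a ReLU keeps only the positively contributing regions. The sketch below assumes the activations and gradients have already been extracted from the network as nested lists; the tiny arrays are hypothetical, and the final rescaling to [0, 1] is a common visualization convenience rather than part of the definition.

```python
def grad_cam(activations, gradients):
    """Grad-CAM heatmap from feature maps and class-score gradients.

    Both inputs are nested lists shaped [channels][height][width].
    """
    channels = len(activations)
    height = len(activations[0])
    width = len(activations[0][0])
    # Channel weights: global average of the gradient over spatial positions.
    alphas = [
        sum(sum(row) for row in gradients[k]) / (height * width)
        for k in range(channels)
    ]
    # Weighted sum of feature maps followed by ReLU.
    cam = [
        [
            max(0.0, sum(alphas[k] * activations[k][i][j] for k in range(channels)))
            for j in range(width)
        ]
        for i in range(height)
    ]
    # Optional rescaling to [0, 1] for display.
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[value / peak for value in row] for row in cam]
    return cam


# Hypothetical 2-channel, 2x2 feature maps and gradients, for illustration only.
activations = [
    [[1.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 2.0]],
]
gradients = [
    [[0.5, 0.5], [0.5, 0.5]],
    [[-1.0, -1.0], [-1.0, -1.0]],
]

heatmap = grad_cam(activations, gradients)
print(heatmap)
```

In practice the heatmap is upsampled to the input resolution and overlaid on the image, so that warm colors mark the regions supporting the predicted class.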

5 Conclusion

Our study emphasizes the crucial role of advanced machine learning techniques in addressing the complex challenges of wildfire prediction. We observed the XGBoost model’s exemplary performance in classification tasks and the Random Forest model’s effectiveness in regression tasks, highlighting the necessity for models specifically tailored to the unique demands of each predictive task. Furthermore, we integrated a Convolutional Neural Network (CNN) capable of concurrently executing classification and regression tasks, achieving results comparable to those of specialized models. The inclusion of eXplainable Artificial Intelligence (XAI) methods further enriched our understanding of these models, uncovering key features and their interactions that influence predictions. As wildfires continue to pose significant environmental and societal threats, the insights from this research underscore the importance of not only precise predictive modeling but also the critical need for interpretability and adaptability in models, ensuring their trustworthiness and effective application in real-world scenarios.

6 Data availability statement

The data that support the findings of this study are from wifire-data.sdsc.edu and https://burnpro3d.sdsc.edu/index.html.

7 Acknowledgments

We would like to thank Dr. Rodman Linn and the wildfire team for their support in this study.