ContactHandover:

Contact-Guided Robot-to-Human Object Handover

April 01, 2024

Abstract

Robot-to-human object handover is an important step in many human-robot collaboration tasks. A successful handover requires the robot to maintain a stable grasp on the object while making sure the human receives the object in a natural and easy-to-use manner. We propose ContactHandover, a robot-to-human handover system that consists of two phases: a contact-guided grasping phase and an object delivery phase. During the grasping phase, ContactHandover predicts both 6-DoF robot grasp poses and a 3D affordance map of human contact points on the object. The robot grasp poses are re-ranked by penalizing those that block human contact points, and the robot executes the highest ranking grasp. During the delivery phase, the robot end effector pose is computed by maximizing human contact points close to the human while minimizing the human arm joint torques and displacements. We evaluate our system on 27 diverse household objects and show that our system achieves better visibility and reachability of human contacts to the receiver compared to several baselines. More results can be found on the project website.

1 INTRODUCTION

Object handover is a key step towards natural human and robot collaboration [1], [2]. Robot-to-human handover, in particular, has wide applications in many practical scenarios, from handing tools to workers in a factory to fetching daily objects for elders at home. A handover process typically involves a grasping phase in which the robot picks up the target object, and a delivery phase where the robot approaches the human and moves the object to a pose that is accessible and ergonomic for the human receiver to grasp and use in subsequent tasks.

There are two key challenges in performing a successful robot-to-human handover. First, when grasping the object to be handed over, the robot needs to leave room for the human receiver to grasp the object while also choosing a stable grasp pose. For example, the robot should choose a stable grasp on the head of a hammer and leave enough room on the handle for the receiver. Second, the robot should deliver the object so that the most natural grasping areas are visible to and reachable by the receiver. For example, the robot should orient the handle of the hammer, rather than the head, towards the receiver.

To address these challenges, we propose a robot-to-human handover system, ContactHandover, which uses 3D contact maps to model diverse human preferences when receiving objects. Our system contains two phases. In the object grasping phase, the system predicts 6-DoF robot grasp poses and a human contact map for the object, re-ranks the grasp poses to penalize those that occlude human contacts, and executes the highest ranking grasp. During the delivery phase, we compute the robot end-effector position and orientation that both minimizes the human arm joint torques and displacements when receiving the object, and maximizes human contact points that are close to the human.

To evaluate our results quantitatively, we propose two computational metrics that align with previous work’s findings on ergonomic object delivery poses for human receivers [3], [4]. The visibility metric measures the percentage of human contact points visible from the receiver’s viewpoint. The reachability metric measures the percentage of human contact points reachable by the receiver without obstruction from the robot’s embodiment.

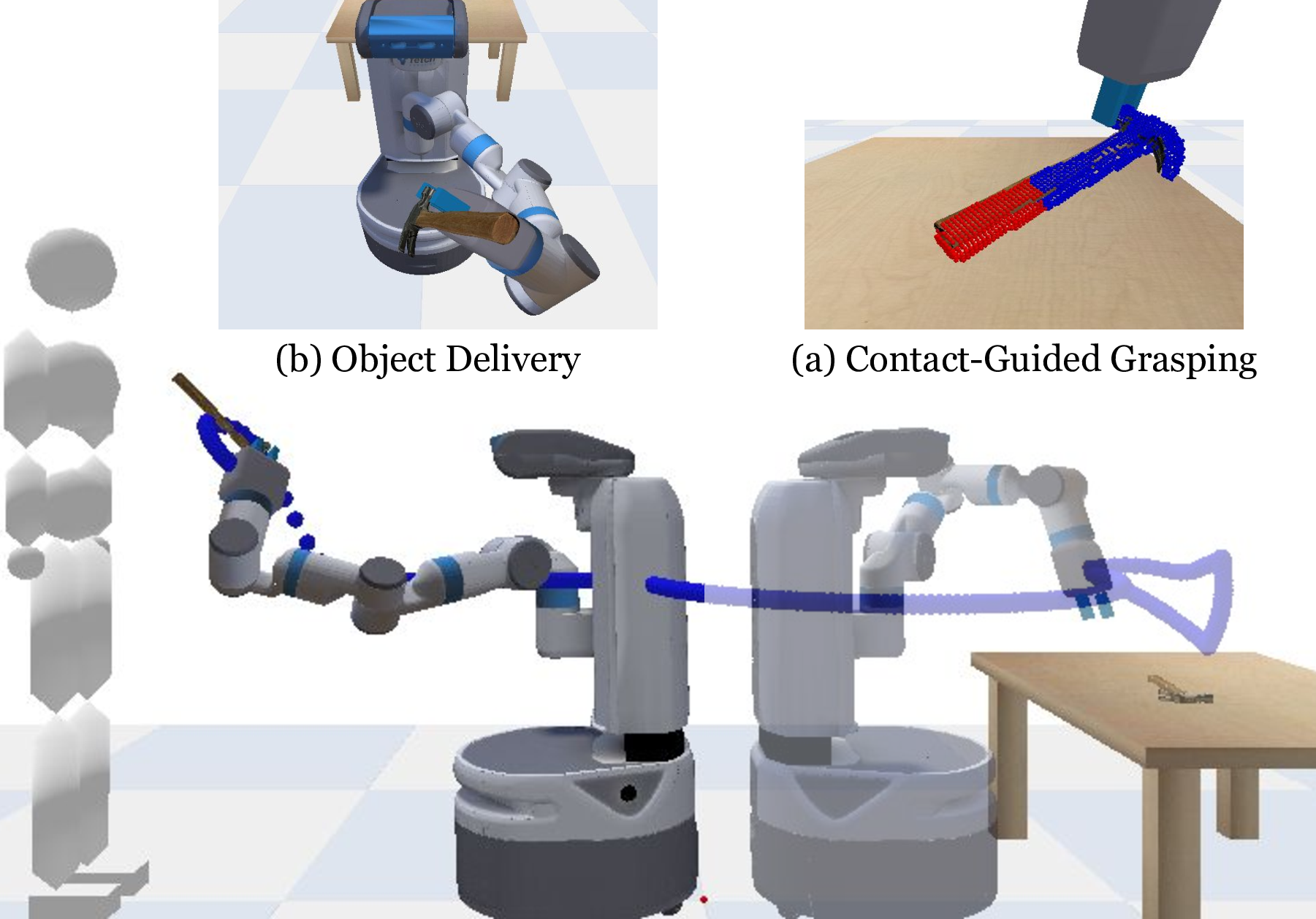

Figure 1: Contact-Guided Robot to Human Object Handover. We propose a robot-to-human handover system with two phases: (a) contact-guided grasping and (b) object delivery. (a) During grasping, the robot predicts 6-DoF grasp pose candidates and human contact points (denoted in red) for the object on the table, and selects a grasp pose that maximizes stability while minimizing occlusion of human contact points. (b) During delivery, the robot computes a handover location and orientation that minimizes human arm joint torque and displacements and maximizes human contact points close to the receiver.

In summary, our main contribution is a robot-to-human handover system that maximizes the visibility and reachability of human preferred contact points to the human receiver. To achieve this, we introduce:

A contact-guided grasp selection algorithm that considers both grasp stability of the robot and contact preferences of the human receiver.

An object delivery algorithm that computes a robot end effector pose that minimizes human arm joint torques as well as displacements, and maximizes contact points close to the human receiver.

Two benchmark metrics (visibility, reachability) that quantitatively evaluate the quality of a handover pose.

2 RELATED WORK

Grasp Pose Prediction has been a long-standing task in robotics. Early works use analytical methods to plan and execute stable grasps, which are limited in real-world applications given the assumption of known object geometry [5]–[7]. More recently, data-driven approaches use convolutional neural networks to learn grasp affordances directly from top-down RGB-D images [8]–[10], or use generative models such as VAEs to sample grasp candidates from an object point cloud and then filter them based on grasp quality [11], [12]. In addition to predicting stable grasps, other works have studied predicting functional grasps, namely grasping the functional parts of the object in order to use it [13], [14]. During a robot-to-human handover, we identify functional grasps as those that avoid human preferred contact points, and propose a contact-guided grasp ranking method to predict stable grasps that also maximize the available human contact points on the object.

Learning Human Grasp Affordances. During a robot-to-human handover, it is important to generate a grasp pose and a delivery pose that accommodate human grasp affordances. To model human grasp affordances, one line of work directly learns 3D affordance maps on objects with respect to different intents (e.g. use, handoff) [15], [16]. In particular, ContactDB [15] is a dataset of human contact maps for household objects collected with a thermal camera. Other works model human grasp affordance by predicting hand shape and pose when grasping [17]–[21]. In this work, we use 3D human contact affordance maps as a proxy for human preferences while receiving objects and show that the contact maps can be used to effectively guide robot grasp pose and delivery pose selection during a robot-to-human handover.

Robot-to-Human Object Handover. The main objective of robot-to-human object handover is to deliver the object in a way that maximizes the user’s ease of grasping and convenience of using the object for a subsequent task [22]. Prior works have attempted to predict and maximize human grasp affordance during handover, but they either manually select the human grasping part on an object [23], make assumptions about object geometry and hand-design object categories [4], [24], [25], or train on synthetic data that fails to model the complexity of human contacts [26]. Other works use simplifying heuristics such as assuming that the robot grasp is on the opposite side of a predicted human hand pose [27]. A key limitation of this heuristic is that it does not account for scenarios where both the human and the robot have to grasp on the same side of an object (e.g. a knife handle). We propose a novel approach that predicts human contact points on an object with no assumption about object category and uses the human contact points to guide robot grasp pose and delivery pose selection.

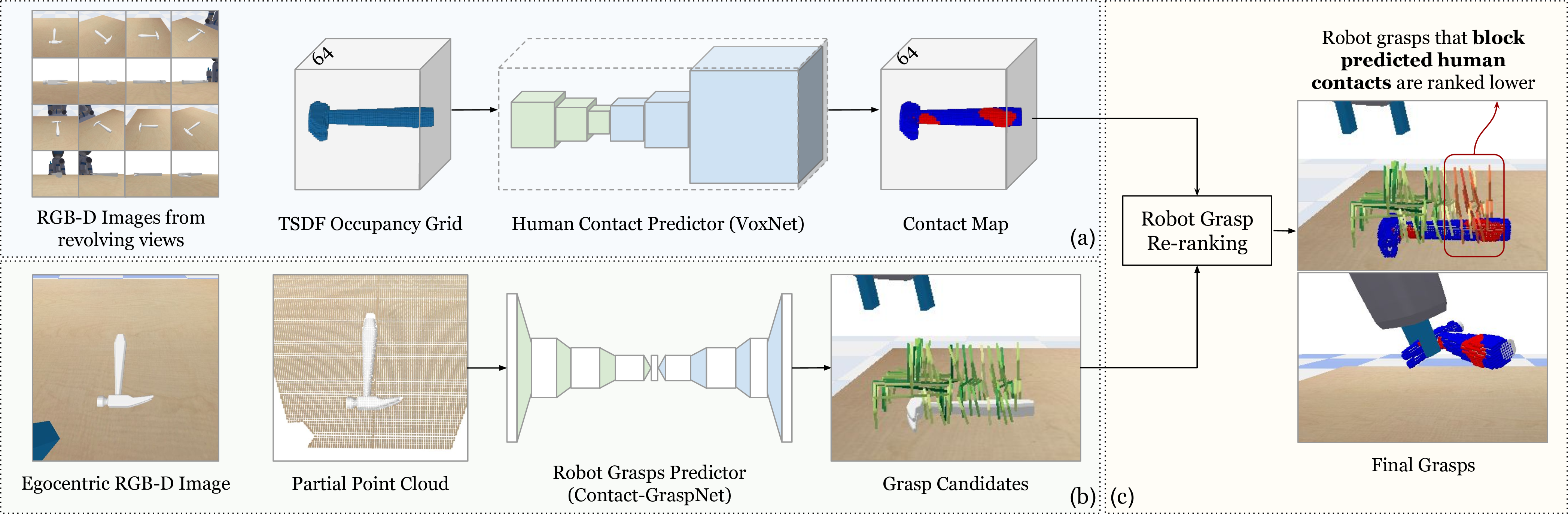

Figure 2: Contact-Guided Grasp Selection. (a) §3.1.1: The robot takes RGB-D images from 16 views around the table and constructs a \(64^3\) voxel representation of the object via TSDF fusion; the occupancy grid is then fed into a trained 3D VoxNet to predict human contact maps. (b) §3.1.2: The robot takes a partial point cloud observation as input to the pre-trained Contact-GraspNet model to generate a set of 6-DoF robot grasps. (c) §3.1.3: The robot executes the grasp with the highest score as computed by Equation 1.

3 METHOD

Our robot-to-human handover system contains two phases: a contact-guided grasping phase and an object delivery phase. During the grasping phase, given RGB-D observations of an object on the table, the system predicts both 6-DoF robot grasp pose candidates and human contact points on the object, and then re-ranks the grasp poses by penalizing each pose according to the number of human contact points occluded by the robot end effector. During the delivery phase, the system computes a handover position and orientation that minimizes the human arm joint torques and displacements, and minimizes the total distance from the contact points to the receiver’s eyes. We discuss each module in detail below.

3.1 Contact-Guided Grasp Selection

A successful handover requires the robot to select a stable grasp location on the object that leaves room for the human receiver’s grasp later. As illustrated in Fig. 2, given RGB-D observations of an object on the table, the robot predicts a set of grasp pose candidates together with a human contact map on the object. A final score is computed for each robot grasp pose candidate by combining the contact confidence of the grasp and percentage of occluded human contact points. The grasp with the highest score is chosen and executed.

3.1.1 Human Contact Prediction

We leverage the ContactDB “use” dataset, which contains 27 objects and 50 contact maps (collected from 50 participants) for each object. Each contact map is a \(64^3\) voxel grid, where a voxel is labeled 1 if it was contacted by the human during the grasp and 0 otherwise. We randomly select one contact map for each object during training.

Human Contact Model: We train a 3D VoxNet [28] on the ContactDB “use” dataset. The model takes in a solid occupancy grid of the object in a \(64^3\) voxel space and predicts whether each voxel will be contacted during a human grasp. Following [15], we enforce the cross entropy loss only on the voxels on the object surface.
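The surface-only loss can be illustrated with a minimal sketch. It assumes a binary cross entropy over flat per-voxel sequences; the function name `surface_masked_bce` and the toy values are hypothetical and do not come from the authors' training code.

```python
import math

def surface_masked_bce(probs, labels, surface_mask, eps=1e-7):
    """Mean binary cross entropy over surface voxels only.

    probs        : predicted contact probabilities, one per voxel
    labels       : ground-truth contact labels (0 or 1), one per voxel
    surface_mask : True where the voxel lies on the object surface
    All three are flat sequences of equal length (an assumption of this sketch).
    """
    total, count = 0.0, 0
    for p, y, on_surface in zip(probs, labels, surface_mask):
        if not on_surface:
            continue  # interior and empty voxels contribute no loss
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
        count += 1
    return total / count if count else 0.0

# Toy example: four voxels, only the first two are on the surface.
loss = surface_masked_bce(
    probs=[0.9, 0.2, 0.5, 0.5],
    labels=[1, 0, 1, 0],
    surface_mask=[True, True, False, False],
)
```

Because the last two voxels are masked out, changing their predicted probabilities leaves `loss` unchanged.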

Predicting Human Contacts: We record RGB-D observations from 16 views around the table and use TSDF fusion [29] to construct a \(64^3\) voxel grid. The voxel grid is then fed to the human contact model to predict a human contact map on the object surface voxels.

3.1.2 Robot Grasp Prediction

We want to generate a diverse set of robot grasp pose candidates to increase the likelihood of attaining stable grasps while minimizing the number of human contacts blocked by the robot end effector. To do so, we use the pre-trained Contact-GraspNet model [12].

Contact-GraspNet is a PointNet++ based U-shaped model that takes in a partial point cloud observation of a scene and, for each point \(i\), predicts whether it is contacted by the robot gripper during grasping with a confidence score \(S(i)\). For each point \(i\), the model also predicts the 3-DoF grasp orientation and the grasp width \(w \in \mathbb{R}\) of a parallel-jaw gripper. Each robot contact point \(i\), together with its 4-DoF grasp representation, can be translated to a 6-DoF robot gripper pose \(g\) for grasping. Following [12], we select grasps with confidence \(S(g)\geq0.23\) as robot grasp candidates. We use the predicted confidence score \(S(g)\) of each grasp in the re-ranking phase.

3.1.3 Robot Grasp Re-Ranking

Given both human contact points and robot grasp candidates, we select the grasp pose that has a high contact confidence score and minimizes the number of human contact points blocked by the robot end effector. To do this, we re-score the robot grasp pose candidates by penalizing human contact occlusions. We cluster the predicted contact points and minimize human contact occlusion for the biggest cluster.

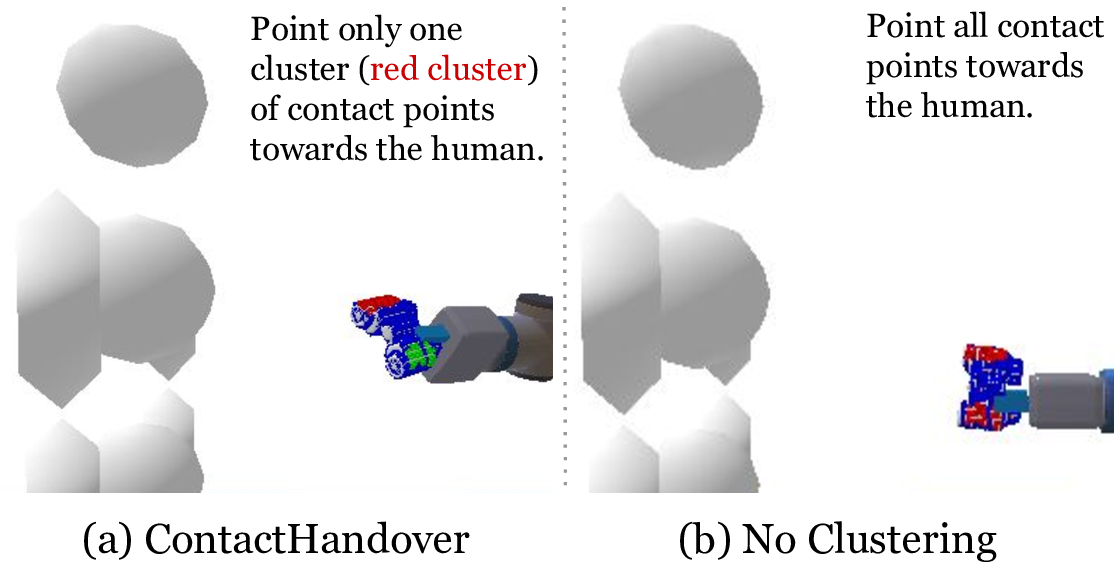

Clustering Human Contacts: For some objects, like the binoculars, the human use mode typically involves both hands. However, during handovers, a human will usually receive these objects with one hand. Moreover, avoiding all possible contact points might leave few valid robot grasp candidates after re-ranking, leading to grasp failures. To resolve this, we cluster the predicted human contact points using DBSCAN [30] based on spatial point density, without any assumptions on the number of clusters. We then choose the largest human contact cluster to compute the human contact occlusion and the handover orientation in §3.3.
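As an illustration of this step, the following is a minimal pure-Python sketch of DBSCAN followed by largest-cluster selection. The radius `eps`, the density threshold `min_pts`, and the toy points are assumptions chosen for the example; the paper does not report its clustering parameters, and in practice a library implementation such as scikit-learn's `DBSCAN` would likely be used instead.

```python
import math

def dbscan(points, eps, min_pts):
    """Label each point with a cluster id (0, 1, ...) or -1 for noise."""
    UNVISITED, NOISE = None, -1
    labels = [UNVISITED] * len(points)

    def neighbors(i):
        # Indices of all points within eps of point i (including i itself).
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not UNVISITED:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = NOISE  # may later be claimed as a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point of the current cluster
            if labels[j] is not UNVISITED:
                continue
            labels[j] = cluster
            nbrs = neighbors(j)
            if len(nbrs) >= min_pts:
                queue.extend(nbrs)  # j is a core point, keep expanding
    return labels

def largest_cluster(points, eps=0.02, min_pts=3):
    """Return the points of the most populated cluster (empty if all noise)."""
    labels = dbscan(points, eps, min_pts)
    ids = [l for l in labels if l != -1]
    if not ids:
        return []
    best = max(set(ids), key=ids.count)
    return [p for p, l in zip(points, labels) if l == best]

# Toy contact points (metres): two separated groups and one outlier.
left = [(0.0, 0.0, 0.0), (0.01, 0.0, 0.0), (0.0, 0.01, 0.0), (0.01, 0.01, 0.0)]
right = [(0.1, 0.0, 0.0), (0.11, 0.0, 0.0), (0.1, 0.01, 0.0)]
points = left + right + [(0.5, 0.5, 0.5)]
c_pred = largest_cluster(points)
```

Here `c_pred` plays the role of \(C_{pred}\): the four-point group is kept, while the smaller group and the outlier are ignored.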

Grasp Re-Ranking: For each grasp \(g\), we calculate the percentage of human contact points that are occluded by the robot gripper, denoted as \(O(g)\). For each human contact point, we trace a ray from the contact point along its surface normal and check if the ray collides with the robot gripper at grasp pose \(g\). If there is a collision, we consider the human contact point to be blocked by the gripper at pose \(g\). \[O(g) = \frac{\Big|\big\{i\in C_{pred} \mid i \textrm{ blocked by } g \big\}\Big|}{\big|C_{pred}\big|}\] where \(C_{pred}\) is the largest cluster of predicted contact points on the object surface.
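The occlusion term \(O(g)\) can be sketched as follows. This is a simplified illustration that assumes the gripper at pose \(g\) is approximated by a single axis-aligned box; the actual system checks collisions against the gripper geometry at a full 6-DoF pose, and the function names and toy values here are hypothetical.

```python
def ray_hits_box(origin, direction, box_min, box_max):
    """Slab test: does the ray origin + t * direction (t >= 0) hit the box?"""
    t_near, t_far = 0.0, float("inf")
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if abs(d) < 1e-12:
            if o < lo or o > hi:
                return False  # ray is parallel to this slab and outside it
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return False  # slab intervals do not overlap
    return True

def occlusion(contacts, normals, box_min, box_max):
    """O(g): fraction of contact points whose normal ray hits the gripper box."""
    if not contacts:
        return 0.0
    blocked = sum(
        ray_hits_box(p, n, box_min, box_max) for p, n in zip(contacts, normals)
    )
    return blocked / len(contacts)

# Toy example: four contact points on a flat surface with upward normals;
# the gripper box hovers above the first two.
contacts = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0), (3.0, 0.0, 0.0)]
normals = [(0.0, 0.0, 1.0)] * 4
o_g = occlusion(contacts, normals, box_min=(-0.5, -0.5, 0.5), box_max=(1.5, 0.5, 1.0))
```

In this toy case two of the four normal rays hit the box, so `o_g` evaluates to 0.5.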

Lastly, we re-rank the robot grasps to account for the number of human contacts they occlude. We calculate a final contact score \(C(g)\) for each grasp that combines the grasping contact confidence \(S(g)\) and the occluded human contacts \(O(g)\). \[C(g) = \lambda S(g) - (1-\lambda)O(g)\tag{1}\] where \(\lambda\) controls the weight between grasp confidence and human contact occlusion. We use \(\lambda=0.5\) in our experiments. Finally, the robot executes the grasp with the highest score \(C(g)\).
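A short worked example of Equation 1 follows. The candidate names and their \(S(g)\) and \(O(g)\) values are made up for illustration; only \(\lambda=0.5\) and the 0.23 confidence threshold come from the text.

```python
def rerank(grasps, lam=0.5, min_confidence=0.23):
    """Rank grasps by C(g) = lam * S(g) - (1 - lam) * O(g), best first.

    grasps: list of (name, S, O) tuples, where S is the grasp confidence and
    O is the fraction of human contact points the gripper occludes.
    Candidates below the confidence threshold are dropped before ranking.
    """
    scored = [
        (lam * s - (1.0 - lam) * o, name)
        for name, s, o in grasps
        if s >= min_confidence
    ]
    scored.sort(reverse=True)
    return [(name, score) for score, name in scored]

# Hypothetical candidates for a hammer.
candidates = [
    ("handle_grasp", 0.90, 0.80),  # most confident, but blocks the handle
    ("head_grasp", 0.70, 0.10),
    ("shaft_grasp", 0.60, 0.40),
    ("weak_grasp", 0.20, 0.00),    # filtered out: confidence below 0.23
]
ranking = rerank(candidates)
best_name, best_score = ranking[0]
```

With these numbers the head grasp wins (\(C = 0.35 - 0.05 = 0.30\)) even though the handle grasp has the highest raw confidence (\(C = 0.45 - 0.40 = 0.05\)), which is the behavior the re-ranking is designed to produce.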

3.2 Handover Position

During the delivery phase, the robot should hand the object to a point in front of the human that is both reachable and comfortable for the human arm, with respect to the human’s height and pose. For instance, the handover position for a standing human should differ from that for a seated human. Following previous works [31]–[33], ContactHandover computes the point of handover by minimizing human arm joint torques and joint displacements. In addition, we enforce that the handover position is within the vertical range of the human’s torso (below the shoulder and above the waist).

We estimate the point of handover with respect to the human’s shoulder location, in order to account for human height and pose variety. We assume access to the ground truth human shoulder location together with upper and lower arm lengths. Following [32], we also assume the receiver’s reaching motion trajectory lies on the vertical plane of the human receiver’s right arm, and estimate the handover location on this vertical plane.

Joint Torques. For each point \((x, y, z)\) in space, we compute the total joint torque of the human arm to hold an object at that point, where \(n\) represents the number of joints, \(\tau_j\) represents the torque of the joint \(j\), \(c_{t,max}\) represents the maximum cost value of all points. \[f_{torque}(x, y, z) = \frac{\sum_{j=1}^n (\tau_j)^2}{c_{t,max}}\]

Joint Displacements. For each point \((x, y, z)\) in space, and for each human arm configuration to reach that position, we calculate how far each joint deviates from the medium angle of its range of motion: \[f_{disp}(x,y,z)=\frac{\sum_{j=1}^n (\theta_{mid,j} - \theta_{j})^2}{c_{d,max}}\] where \(\theta_{mid,j}\) is the medium value of the angle range of the joint \(j\), \(\theta_j\) is the rotation angle of joint \(j\), \(c_{d,max}\) is the maximum cost value. Based on the study in [33], the medium angle for the shoulder’s forward-backward rotation is \(67.5°\), with respect to the torso; and the medium angle for the elbow’s forward-backward rotation is \(62.5°\), where a straight arm pointing forward is \(0°\), and the medium angle for bending the elbow close to the upper arm is \(140°\).

Finally, we can define the total cost of a candidate point as \[f_{total}(x,y,z) = ( 1 - \alpha ) f_{torque}(x,y,z) + \alpha f_{disp}(x,y,z)\] where \(\alpha\) is a hyperparameter to control the weight of the two cost functions.

We sample candidate handover positions by searching through the human’s shoulder and elbow angles with a \(5°\) granularity, and using the resulting hand locations as candidate handover positions. We only select candidate positions that are lower than the human shoulder and above the waist. We choose the one that minimizes the total cost \(f_{total}\) as the handover position.
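The search described above can be sketched with a planar two-link arm in the vertical plane of the receiver's arm. This is only an illustration under several assumptions that are not stated in the paper: static gravity torques, the segment masses and lengths, the sampled angle ranges (chosen so that the mid-range angles are \(67.5°\) and \(62.5°\)), the waist offset, and \(\alpha = 0.5\). All function names are hypothetical.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2

def arm_state(theta_s, theta_e, l_upper, l_fore):
    """Elbow and hand positions (x forward, z up) relative to the shoulder.

    theta_s is measured from the torso (arm hanging down = 0); theta_e is the
    elbow flexion (straight arm = 0). Both are in radians.
    """
    elbow = (l_upper * math.sin(theta_s), -l_upper * math.cos(theta_s))
    phi = theta_s + theta_e
    hand = (elbow[0] + l_fore * math.sin(phi), elbow[1] - l_fore * math.cos(phi))
    return elbow, hand

def raw_costs(theta_s, theta_e, l_upper, l_fore, m_upper, m_fore, m_obj,
              mid_s=math.radians(67.5), mid_e=math.radians(62.5)):
    """Unnormalized torque and displacement costs, plus the hand position."""
    elbow, hand = arm_state(theta_s, theta_e, l_upper, l_fore)
    fore_com_x = 0.5 * (elbow[0] + hand[0])  # forearm centre of mass
    tau_elbow = G * (m_fore * (fore_com_x - elbow[0]) + m_obj * (hand[0] - elbow[0]))
    tau_shoulder = G * (m_upper * 0.5 * elbow[0] + m_fore * fore_com_x + m_obj * hand[0])
    torque = tau_shoulder ** 2 + tau_elbow ** 2
    disp = (mid_s - theta_s) ** 2 + (mid_e - theta_e) ** 2
    return torque, disp, hand

def best_handover_position(l_upper=0.30, l_fore=0.30, waist_drop=0.45,
                           m_upper=2.0, m_fore=1.5, m_obj=0.5,
                           alpha=0.5, step_deg=5):
    """Grid search over joint angles; returns (cost, hand, shoulder_deg, elbow_deg)."""
    samples = []
    for s_deg in range(0, 136, step_deg):
        for e_deg in range(0, 126, step_deg):
            t, d, hand = raw_costs(math.radians(s_deg), math.radians(e_deg),
                                   l_upper, l_fore, m_upper, m_fore, m_obj)
            samples.append((t, d, hand, s_deg, e_deg))
    t_max = max(s[0] for s in samples)  # c_{t,max}
    d_max = max(s[1] for s in samples)  # c_{d,max}
    best = None
    for t, d, hand, s_deg, e_deg in samples:
        if not (-waist_drop < hand[1] < 0.0):
            continue  # keep only hand heights between waist and shoulder
        cost = (1 - alpha) * t / t_max + alpha * d / d_max
        if best is None or cost < best[0]:
            best = (cost, hand, s_deg, e_deg)
    return best

cost, hand, shoulder_deg, elbow_deg = best_handover_position()
```

The returned `hand` is expressed relative to the shoulder, so adding the shoulder location gives the handover point in the world frame. Changing `alpha` trades off low joint torque (hand close to the body) against staying near the middle of each joint's range.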

3.3 Handover Orientation

In the delivery stage, the robot must present the object in an orientation that maximizes the contact points close to the human. Specifically, we uniformly sample the spherical space with a 45-degree granularity and select the object orientations that are kinematically feasible [34] for the robot arm at the computed handover point. For each candidate orientation, ContactHandover computes the total distance between the predicted contact points and the human eyes. We estimate the human eye to be located at half the height of the human head. The orientation that yields the minimum total distance is selected.
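A minimal sketch of this selection is given below. It assumes that orientations are enumerated on a roll-pitch-yaw grid with a 45-degree step, which only approximates uniform spherical sampling, and it replaces the kinematic feasibility check with a caller-supplied predicate `feasible` that accepts everything by default. All names and toy values are hypothetical.

```python
import math
from itertools import product

def rotate(p, roll, pitch, yaw):
    """Apply R = Rz(yaw) @ Ry(pitch) @ Rx(roll) to point p."""
    x, y, z = p
    y, z = (y * math.cos(roll) - z * math.sin(roll),
            y * math.sin(roll) + z * math.cos(roll))
    x, z = (x * math.cos(pitch) + z * math.sin(pitch),
            -x * math.sin(pitch) + z * math.cos(pitch))
    x, y = (x * math.cos(yaw) - y * math.sin(yaw),
            x * math.sin(yaw) + y * math.cos(yaw))
    return (x, y, z)

def best_orientation(contacts, handover_pos, eye_pos,
                     feasible=lambda rpy: True, step_deg=45):
    """Return (total_distance, (roll, pitch, yaw)) minimizing contact-to-eye distance.

    contacts are contact points in the object frame; the object origin is
    placed at handover_pos and rotated by each candidate orientation.
    """
    angles = [math.radians(a) for a in range(0, 360, step_deg)]
    best = None
    for rpy in product(angles, repeat=3):
        if not feasible(rpy):
            continue  # stand-in for the robot's kinematic feasibility check
        total = 0.0
        for c in contacts:
            r = rotate(c, *rpy)
            world = tuple(h + ri for h, ri in zip(handover_pos, r))
            total += math.dist(world, eye_pos)
        if best is None or total < best[0]:
            best = (total, rpy)
    return best

# Toy example: handle contacts along +x in the object frame, receiver's eyes
# one metre away along -x at the same height as the handover point.
handle = [(0.10, 0.0, 0.0), (0.15, 0.0, 0.0), (0.20, 0.0, 0.0)]
total, rpy = best_orientation(handle, handover_pos=(0.0, 0.0, 0.0), eye_pos=(-1.0, 0.0, 0.0))
```

In this toy setup the selected orientation turns the handle to point along \(-x\), towards the receiver's eyes.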

Note that, as in §3.1.3, we compute the distance for the largest human contact cluster. For objects with clustered human contacts (e.g. binoculars), we found that orienting one cluster of human contacts towards the human results in more natural handovers, since humans tend to receive these objects with one hand instead of two. We show the qualitative result for the clustering in §5 and Figure 5.

4 EVALUATION

We evaluate ContactHandover’s ability to hand over an object to the human receiver in a natural and ergonomic manner. We propose two computational metrics, visibility and reachability, to quantify the quality of a handover. We show that our system yields better handover results compared to several ablations in §5.

4.1 Handover Metrics

We evaluate our system on 27 daily objects from the ContactDB dataset, which consists of 50 contact maps collected from 50 different users for each object. We introduce two quantitative metrics based on the contact maps to evaluate the robot to human handover result.

Metric 1: Human Contact Visibility. To ensure that humans can easily grasp their preferred contact areas upon receiving an object, it is crucial that these areas are visible to the receiver. In particular, the areas where humans prefer to make contact should not be occluded by the robot’s embodiment or by the object itself.

We design a metric that captures the visibility of human contact areas. Given a ground truth contact map \(CM\) of an object, we define the human contact visibility as the percentage of ground truth hand-object contacts that are visible from the human’s view.

\[Visibility = \frac{\sum_{i\in V} CM(i)}{\sum_{j\in P} CM(j)}\] where \(CM: i \mapsto c\in\{0,1\}\) indicates if each voxel \(i\) on the object surface is touched during a human grasp. \(P\) is the set of all voxels in the contact map. \(V\) is the set of voxels that are visible from the human’s view without occlusion from the robot embodiment or the object itself. We compute visibility by setting an RGB-D camera at the human receiver’s eye position looking at the object; any contact points captured in the image are considered visible.
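The visibility ratio itself is simple to compute once the visible set is known. The sketch below assumes the set \(V\) has already been obtained from the rendered camera view; the dictionary layout and the toy voxel indices are hypothetical.

```python
def visibility(contact_map, visible_voxels):
    """Fraction of contacted surface voxels that are visible to the receiver.

    contact_map    : dict mapping a voxel index to CM(i) in {0, 1}, over set P
    visible_voxels : set V of voxel indices seen from the receiver's eye camera
    """
    total = sum(contact_map.values())
    if total == 0:
        return 0.0  # no ground-truth contacts on this object
    seen = sum(c for voxel, c in contact_map.items() if voxel in visible_voxels)
    return seen / total

# Toy example: four contacted voxels, two of which are visible.
cm = {(0, 0, 0): 1, (0, 0, 1): 1, (0, 1, 0): 0, (1, 0, 0): 1, (1, 1, 0): 1}
vis = visibility(cm, visible_voxels={(0, 0, 0), (0, 1, 0), (1, 0, 0)})
```

Note that the visible but non-contacted voxel `(0, 1, 0)` does not affect the score, since only voxels with \(CM(i)=1\) are counted; here `vis` is 0.5.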

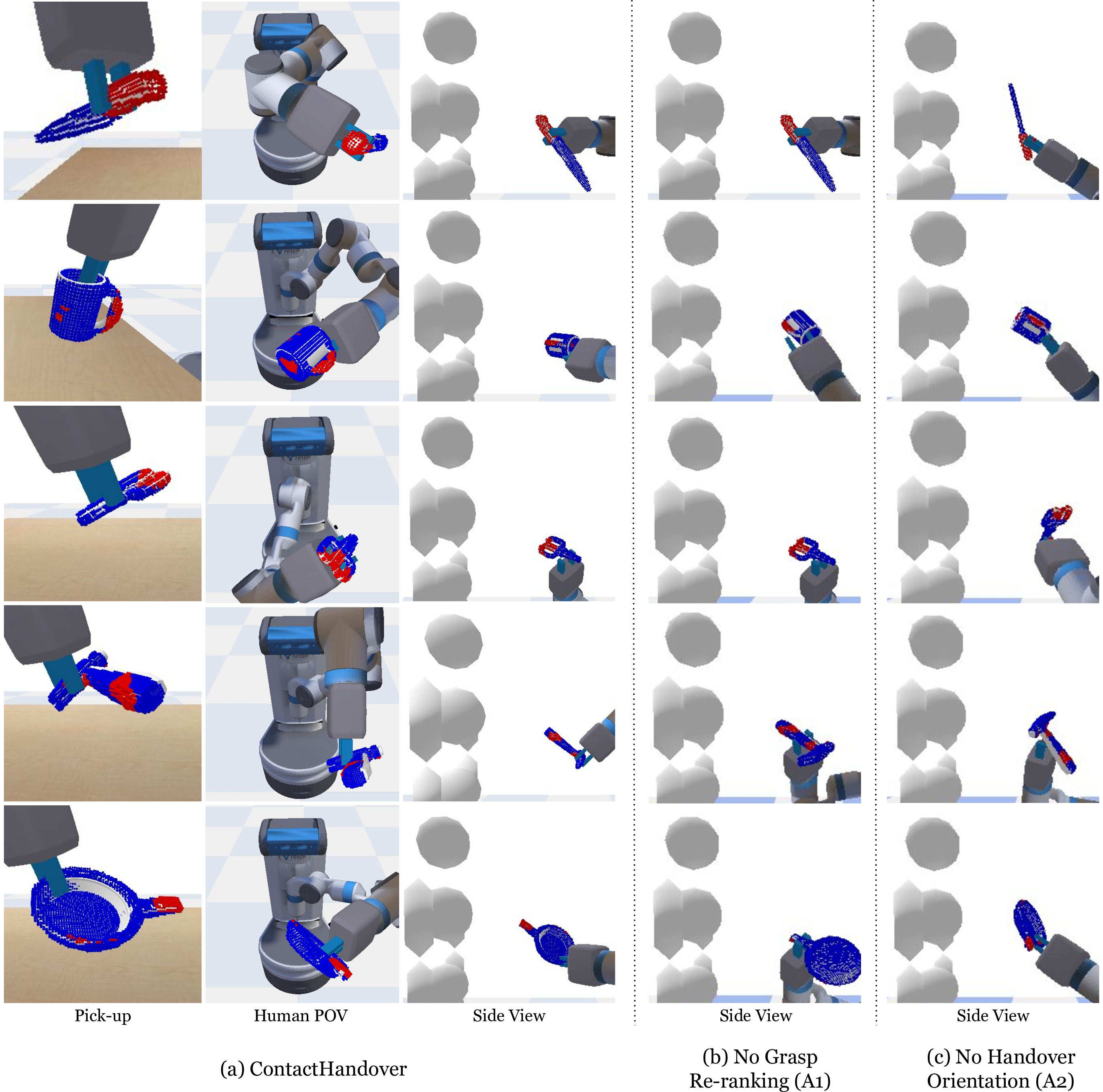

Figure 3: Qualitative Results and Ablations. As shown in (a), ContactHandover predicts the human contact map (red indicates human contact points, and blue non-contact points), picks up the objects while avoiding human contacts, and orients the human preferred contacts towards the human during delivery. In (b), without grasp re-ranking, the robot gripper blocks human contacts, i.e., the handles of the pan and hammer. In (c), without handover orientation, the human contacts, i.e. the handles of the scissors, hammer, and pan, point away from the human. More qualitative results can be found on the project website.

Metric 2: Human Grasp Reachability. For a natural grasp, visibility alone is not sufficient; human preferred contact areas should also be easily reachable by the human. Therefore, our second metric computes the percentage of human contact points that are within the human’s arm reach and located between the robot gripper and the human: \[Reachability = \frac{\sum_{i\in R} CM(i)}{\sum_{j\in P} CM(j)}\] where \(R = \{i : d_1(i) < l_{\mathrm{arm}} \,\wedge\, d_2(i) < d_2(\mathrm{gripper})\}\). \(d_1(i)\) represents the distance from point \(i\) to the human shoulder and \(l_{\mathrm{arm}}\) is the human’s arm length. \(d_2(i)\) represents the shortest horizontal distance from point \(i\) to the human; \(d_2(\mathrm{gripper})\) represents the shortest distance between the robot gripper and the human. If \(d_2(i) < d_2(\mathrm{gripper})\), then the point \(i\) is located between the human and the robot gripper. A higher reachability score indicates that more human preferred contact areas are facing towards the human.
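The reachability definition can be sketched as follows. For simplicity this example assumes the human and the gripper are each reduced to a single horizontal reference point when computing \(d_2\), which is a simplification of the shortest-distance definition; the function name and toy coordinates are hypothetical.

```python
import math

def reachability(contacts, shoulder, arm_length, human_xy, gripper_xy):
    """Fraction of contacted points within arm reach and in front of the gripper.

    contacts   : list of ((x, y, z), cm) pairs, with cm = CM(i) in {0, 1}
    shoulder   : (x, y, z) of the receiver's shoulder
    human_xy   : horizontal reference point of the human (assumption)
    gripper_xy : horizontal reference point of the gripper (assumption)
    """
    total = sum(cm for _, cm in contacts)
    if total == 0:
        return 0.0
    d2_gripper = math.dist(gripper_xy, human_xy)
    reachable = 0
    for point, cm in contacts:
        d1 = math.dist(point, shoulder)      # distance to the shoulder
        d2 = math.dist(point[:2], human_xy)  # horizontal distance to the human
        if d1 < arm_length and d2 < d2_gripper:
            reachable += cm
    return reachable / total

# Toy example: human at the origin, shoulder at 1.4 m, gripper 0.6 m away.
contacts = [
    ((0.40, 0.0, 1.2), 1),  # in reach and in front of the gripper
    ((0.50, 0.0, 1.2), 1),  # in reach and in front of the gripper
    ((0.65, 0.0, 1.2), 1),  # in reach, but behind the gripper
    ((0.55, 0.0, 1.2), 0),  # not a contact voxel
]
reach = reachability(contacts, shoulder=(0.0, 0.0, 1.4), arm_length=0.7,
                     human_xy=(0.0, 0.0), gripper_xy=(0.6, 0.0))
```

Two of the three contacted points satisfy both conditions, so `reach` is 2/3.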

Success Rates. For each object, we compute the visibility and reachability scores based on the 50 corresponding contact maps and select the median of each as the final score. We consider a handover to be successful if both the visibility and reachability scores are above a threshold \(k\), where we choose \(k=0.5\) for our evaluation.
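The success criterion can be written down in a few lines. The sketch below uses five made-up scores per metric instead of 50, and it interprets "above a threshold" as a strict inequality, which is an assumption.

```python
from statistics import median

def handover_success(visibility_scores, reachability_scores, k=0.5):
    """Return (success, median_visibility, median_reachability) for one handover.

    Each list holds one score per ground-truth contact map of the object.
    """
    v = median(visibility_scores)
    r = median(reachability_scores)
    return (v > k and r > k), v, r

# Hypothetical per-contact-map scores for a single handover.
ok, v_med, r_med = handover_success(
    visibility_scores=[0.2, 0.6, 0.7, 0.8, 0.9],
    reachability_scores=[0.4, 0.45, 0.55, 0.9, 1.0],
)
```

Using the median rather than the mean means a few participants with unusual grasps (such as the 0.2 visibility score above) do not decide the outcome on their own.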

4.2 Experiment Setup

We evaluate our method in the PyBullet simulation environment with a Fetch robot and a human figure that is 1.7 meters tall. The robot starts in front of the table, and the human is standing 2 meters behind the robot.

We set up a revolving RGB-D camera that captures 16 distinct angles around the tabletop to reconstruct the object point cloud and voxel grids, and we simulate an RGB-D camera at the human eye level to observe the handover object and calculate the visibility metric.

For each object, the robot first grasps the object on the table, then turns around and moves to 1.2 meters in front of the human, and finally hands over the object in the computed pose. We run the experiment for the 27 selected objects from the ContactDB “use” dataset under 5 random seeds and report the average.

4.3 Baselines

Ablation 1 (A1): No Grasp Re-ranking (GR). The robot does not predict and consider human contacts when grasping the object. Instead, the robot only predicts and executes the grasp with the highest confidence score.

Ablation 2 (A2): No Handover Orientation (HO). The robot does not calculate the object orientation during handover. Instead, the robot moves the end effector to the handover position with a random orientation.

Ablation 3 (A3): Handover Position Only. The robot executes the grasp with the highest confidence score, and moves the end effector to the same handover position as ContactHandover, with a random orientation.

Ablation 4 (A4): No Optimization. The robot executes the grasp with the highest confidence score, then approaches and stops in front of the human at the same distance as ContactHandover, while maintaining the same end effector pose it had after grasping.

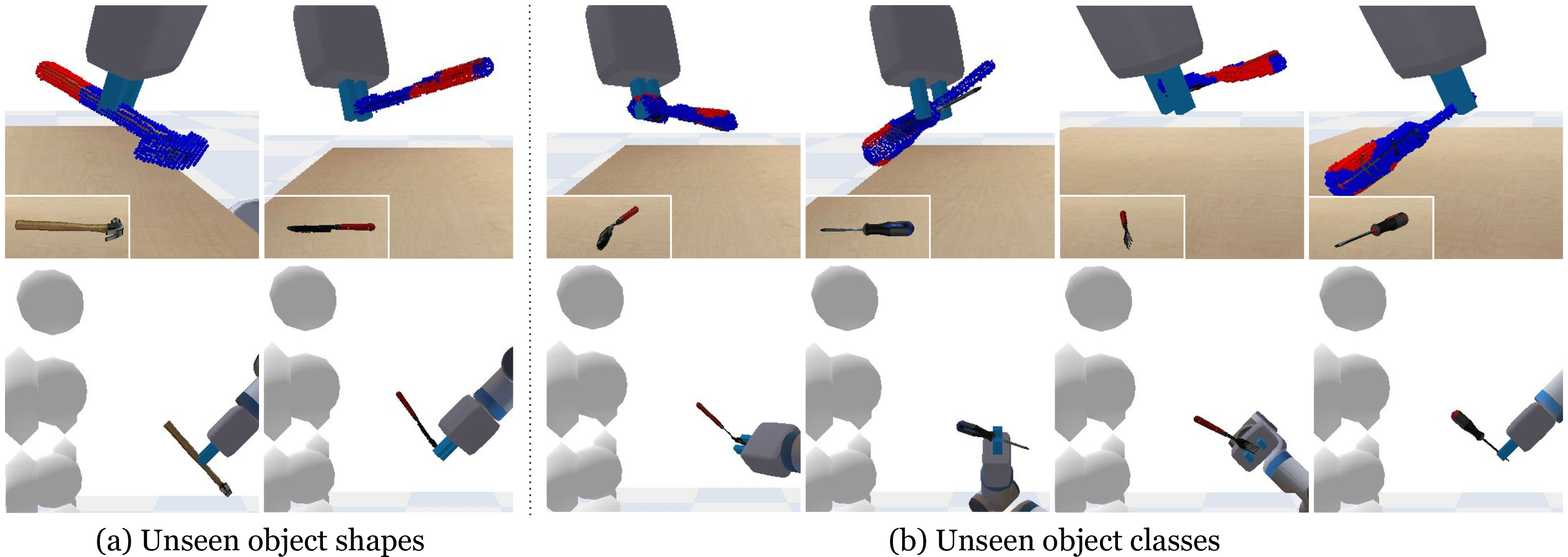

Figure 4: Generalization to Unseen Objects. We show ContactHandover’s performance on unseen YCB objects, including both human contact predictions and handover results. ContactHandover is able to generalize to (a) objects with unseen shapes (e.g. hammer and knife) and (b) objects with unseen types (e.g. spoon, flat screwdriver, fork and phillips screwdriver). It predicts reasonable human contacts (denoted by red points) around the handles of the objects, and picks up and delivers the objects to the human with respect to the predicted human contacts.

Table 1: Visibility, reachability, and success rate of ContactHandover (OURS) and ablations A1 to A4.

| Method | Visibility | Reachability | Success Rate |
|---|---|---|---|
| OURS | 71.7% | 90.2% | 68.5% |
| A1 | 69.6% | 88.0% | 63.0% |
| A2 | 62.0% | 77.2% | 51.1% |
| A3 | 32.6% | 0.0% | 0.0% |
| A4 | 65.2% | 70.7% | 50.0% |

Figure 5: Clustering bimodal human contacts. For objects with bimodal human contact distributions, ContactHandover clusters the contact points and only optimizes human contact points in one cluster. (a) shows the result for ContactHandover, where the robot leaves free one side of the binoculars and orients it towards the human. In (b), without clustering, the robot points both sides towards the human, resulting in a pose where neither human contact cluster is close to the human.

5 RESULTS AND ANALYSIS

We evaluate ContactHandover on a variety of daily objects and compare with several ablations on different components of the system. We show our results quantitatively in Table 1 and qualitatively in Fig. 3.

ContactHandover achieves the most successful handovers. As shown in Table 1, ContactHandover achieves the highest visibility and reachability among all methods, with an average success rate of 68.5%. From our ablations, estimating the handover position (Ablation 3) contributes the most to improvements in overall success rate (50 percentage points) and, in particular, reachability (about 70 percentage points), compared to no optimization (Ablation 4). Ablation 4 does not achieve any successful handovers, as all of the objects fall out of reach of the human arm.

Our contact-guided grasp selection and handover orientation estimation algorithms further improve handover visibility and reachability. As a result, ContactHandover improves the final success rate by 18.5 percentage points compared to Ablation 3.

Grasp re-ranking yields more available human contacts during handover. We show the effect of contact-guided grasp selection by comparing with Ablation 1, shown in Fig. 3 (b). Without considering human contacts when selecting stable grasps, the robot gripper will often block human contact points on the object. As shown in Fig. 3 (b), the robot grasps objects like the hammer and pan by their handles. While it orients the human contact points towards the human during delivery, the robot’s gripper blocks the human from receiving the objects by the handles. Therefore, the human contacts are both less visible, due to occlusion from the gripper, and less reachable, because fewer contact points lie between the gripper and the human.

We note that there are cases where grasp re-ranking has little effect on the final handover. For example, if all stable robot grasps overlap with human contacts, for instance on the handle of the knife, the selected robot grasp will block a large portion of human contacts regardless. On the other hand, if the majority of stable robot grasps do not overlap with the predicted human contacts, penalizing human contact occlusion makes little difference. For instance, most stable robot grasps on a mug are on its rim, rather than on the handle where humans prefer to grasp. Nonetheless, quantitatively, across all objects, ContactHandover outperforms Ablation 1 by 5.5 percentage points in average success rate.

Estimating handover orientation makes more human contacts face the receiver. We show the effect of handover orientation estimation by comparing with Ablation 2, shown in Figure 3 (c). In Ablation 2, the robot does not orient contact points towards the human during delivery. Although the robot gripper leaves the human contact parts free while grasping, these parts are still inaccessible to the human in the final handover. For instance, human-preferred contacts could be on the opposite side of the robot gripper from the human, making them unreachable (e.g. scissors, hammer and knife handles); they could also be self-occluded from the human’s point of view (e.g. hammer, mug and pan handles). Overall, ContactHandover outperforms Ablation 2 by 17.4 percentage points in average success rate.

Clustering human contacts is useful for objects with bimodal contact distributions. We show the effect of clustering human contact points in ContactHandover compared to no clustering. In the example shown in Fig. 5 (a), ContactHandover clusters the human contact points on the binoculars and orients the largest cluster (denoted in red) towards the human; in Fig. 5 (b), the robot does not cluster the human contact points and estimates the final handover pose by minimizing the distance between all contact points and the human. Without focusing on one cluster, the final handover results in a pose where neither mode of the human contacts is close to the human.

ContactHandover can generalize to unseen objects. We use the YCB dataset [35], which is unseen by both the human contact predictor and the robot grasp predictor, and test on both objects with comparable classes in ContactDB (hammer and knife) and unseen object classes (spoon, fork, flat screwdriver, and phillips screwdriver). We show the qualitative results in Fig. 4. ContactHandover is able to predict human contacts on the handles of these objects. We believe this is because human contact preferences generalize across similar geometries and shapes.

Limitations. Our work leverages a hand-object contact dataset to learn a proxy for human receiving preferences. However, there are a few limitations to this approach. Firstly, our human contact predictions are inferred only from object shape. In certain scenarios, the object state or function may also matter, and cannot be simply inferred from its shape. For example, if a container such as a mug or bowl has water inside, the object should be kept in its canonical pose (opening facing up) during the grasping and delivery phases to prevent spilling. Secondly, our human contact dataset is limited in scale. While the dataset contains 27 diverse household objects, this is still a small fraction of the objects we can expect a human or robot to interact with in daily life. Future work could consider expanding the dataset by collecting hand-object contacts for more objects. We believe learning from a larger human contact dataset can help increase our method’s ability to generalize to unseen objects.

6 CONCLUSIONS

We propose ContactHandover, a two-phase robot-to-human handover system guided by human contacts. The robot first achieves a stable grasp on the object while accommodating human contact preferences through a grasp pose re-ranking mechanism. Then the robot delivers the object to a pose that minimizes human arm joint torques and displacements and maximizes the human preferred contacts available to the receiver. To evaluate our system, we propose two quantitative metrics that measure the visibility and reachability of an object’s human preferred contacts during handover. We evaluate our system on 27 diverse household objects and demonstrate that our system achieves better visibility and reachability of the predicted human contact areas to the receiver compared to several ablations.