LLM Attributor: Interactive Visual Attribution for LLM Generation

April 01, 2024

Abstract

While large language models (LLMs) have shown remarkable capability to generate convincing text across diverse domains, concerns around their potential risks have highlighted the importance of understanding the rationale behind text generation. We present LLM Attributor, a Python library that provides interactive visualizations for training data attribution of an LLM’s text generation. Our library offers a new way to quickly attribute an LLM’s text generation to training data points to inspect model behaviors, enhance model trustworthiness, and compare model-generated text with user-provided text. We describe the visual and interactive design of our tool and highlight usage scenarios for LLaMA2 models fine-tuned with two different datasets: online articles about recent disasters and finance-related question-answer pairs. Thanks to LLM Attributor’s broad support for computational notebooks, users can easily integrate it into their workflow to interactively visualize attributions of their models. For easier access and extensibility, we open-source LLM Attributor at https://github.com/poloclub/LLM-Attribution. The video demo is available at https://youtu.be/mIG2MDQKQxM.

1 Introduction

Large language models (LLMs) have recently garnered significant attention thanks to their remarkable capability to generate convincing text across diverse domains [1]. To tailor the outputs of these models to specific tasks or domains, users fine-tune pretrained models with their own training data. However, significant concerns persist regarding potential risks, including hallucination [2], dissemination of misinformation [3], [4], and amplification of biases [5]. For example, lawyers have been penalized by federal judges for citing non-existent LLM-fabricated cases in court filings [6]. Therefore, it is crucial to discern and elucidate the rationale behind LLM text generation.

There have been several attempts to understand the reasoning behind LLM text generation. Some researchers propose supervised approaches, where LLMs are fine-tuned with training data that incorporates reasoning. However, requiring reasoning annotations for every training data point poses scalability challenges across diverse tasks. Explicitly prompting for reasoning (e.g., “[Question] Provide evidence for my question”) has also been proposed, but LLMs often fabricate references that do not exist [7]. Moreover, these methods provide limited solutions for incorrect model behavior [8].

To address these shortcomings, identifying the training data points most responsible for an LLM’s generation has been actively explored [9]–[11]. However, while theoretical advances have been made in developing and refining such algorithms, there has been little research on how to present attribution results to people.

To fill this research gap, we present LLM Attributor, which makes the following major contributions:

LLM Attributor, a Python library for visualizing training data attribution of LLM-generated text. LLM Attributor offers LLM developers a new way to quickly attribute an LLM’s text generation to specific training data points to inspect model behaviors and enhance model trustworthiness. We improve the recent DataInf algorithm to adapt it to real-world tasks with free-form prompts, and enable users to interactively select specific phrases in LLM-generated text and visualize their training data attribution using a few lines of Python code (Section 3, Figure 1).

Novel interactive visualization for side-by-side comparison of LLM-generated and user-provided text. Users can easily modify text generated by LLMs and use LLM Attributor’s interactive visualization to compare how these modifications affect attribution. This helps users understand why LLM-generated text is often favored over user-provided text, through both high-level analysis across the entire training data and low-level analysis of individual data points (Section 3.3, [fig:crownjewel]).

Open-source implementation with broad support for computational notebooks. Users can seamlessly integrate LLM Attributor into their workflow thanks to its compatibility with various computational notebooks, such as Jupyter Notebook/Lab, Google Colab, and VSCode Notebook, and its easy installation via the Python Package Index (PyPI) repository. For easier access and further extensibility to quickly accommodate the rapid advancements in LLM research, we open-source our tool at https://github.com/poloclub/LLM-Attributor. The video demo is available at https://youtu.be/mIG2MDQKQxM.

2 Related Work

2.1 Training Data Attribution

Training data attribution (TDA), which identifies the training data points most responsible for model behaviors, has been actively explored thanks to its wide-ranging applications, including model interpretation [12] and debugging [11], [13], [14]. While some researchers have estimated the impact of individual training data points on model performance [15]–[17] and training loss [14], [18], others have attempted to scale influence functions [19], a classical gradient-based method, to non-convex deep models [13]. Recent efforts have been dedicated to adapting these methods to large generative models, primarily focusing on improving their efficiency [9]–[11]. Inspired by the advances in TDA algorithms and their significant potential to enhance the transparency and reliability of LLMs, we develop LLM Attributor to empower LLM developers to easily inspect their models via interactive visualization.

2.2 Visualization for LLM Attribution

While various tools have aimed to visualize attributions of non-generative language models [20]–[22], recent efforts have developed visual attributions tailored to generative LLMs [23]–[26]. Transformers-Interpret [23], InSeq [24], and LIT [25], [26] visually highlight important segments of the input prompt, while Ecco [27] visualizes neuron activations and token evolution across model layers to probe model internals. However, methods that attribute model behaviors solely to the input prompt are insufficient to explain the text generation of LLMs, whose behaviors are intricately linked to their training data [8]. To fill this gap, we develop interactive visualizations for training data attribution (Section 2.1).

3 System Design

Figure 1: Main View visualizes training data attribution for text generated by an LLM. (A) Users interactively select tokens to attribute by running select_tokens. (B) Running the attribute function launches the Main View to visualize the most positively- and negatively-attributed training data points for the selected tokens, important words in those data points, and the distribution of attribution scores over the entire training data.

LLM Attributor is an open-source Python library that helps LLM developers easily visualize the training data attribution of their models’ text generation in various computational notebooks. LLM Attributor can be installed with a single-line command (pip install llm-attributor).

LLM Attributor consists of two views: the Main View (Section 3.2, Figure 1) and the Comparison View (Section 3.3, [fig:crownjewel]). The Main View offers interactive features to easily select specific tokens from the generated text and visualizes their training data attribution. The Comparison View allows users to modify LLM-generated text and observe how the attribution changes accordingly, for a better understanding of the rationale behind the model’s generation.

3.1 Data Attribution Score

LLM Attributor evaluates the attribution of a generated text output to each training data point based on the DataInf [9] algorithm, chosen for its superior efficiency and performance. In a nutshell, DataInf estimates how upweighting each training data point during fine-tuning would affect the probability of generating a specific text output. Specifically, upweighting a training data point changes the total loss across the entire training dataset, thereby affecting model convergence and the text generation probability. DataInf computes the attribution score of each training data point with a closed-form expression that involves the gradient of the loss for that data point with respect to the model parameters.
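To make the closed-form estimate concrete, here is a minimal NumPy sketch of a DataInf-style score for a single layer. It is our own illustration, not code from LLM Attributor: it assumes per-example loss gradients are already available as dense vectors and uses one arbitrary damping value λ, whereas DataInf sums such terms over layers (e.g., LoRA modules) with layer-specific damping. The sign is our assumption, chosen so that a positive score means upweighting the training point makes the generated text more likely.

```python
import numpy as np


def datainf_scores(train_grads, test_grad, damping=0.1):
    """Sketch of DataInf-style attribution scores for a single layer.

    train_grads: (n, d) array; row i is the loss gradient of training point i.
    test_grad:   (d,) array; loss gradient of the generated text.
    damping:     damping term lambda (illustrative default, not tuned).
    Returns an (n,) array; positive values mean upweighting the point lowers
    the loss of (i.e., supports) the generated text.
    """
    g = np.asarray(train_grads, dtype=float)
    v = np.asarray(test_grad, dtype=float)
    n = g.shape[0]

    # DataInf approximates the inverse of the damped empirical Fisher
    # (lambda*I + mean_i g_i g_i^T)^{-1} by the average of per-point
    # inverses, each available in closed form via Sherman-Morrison:
    # (lambda*I + g_i g_i^T)^{-1} v = (v - g_i (g_i^T v) / (lambda + ||g_i||^2)) / lambda
    coeff = (g @ v) / (damping + np.einsum("ij,ij->i", g, g))
    hinv_v = (v - (coeff @ g) / n) / damping

    # The classical influence of upweighting point k on the test loss is
    # -g_k^T H^{-1} v; returning g_k^T H^{-1} v flips it into a support score.
    return g @ hinv_v
```

Because every quantity reduces to dot products with cached gradients, no Hessian is ever formed, which is where the efficiency comes from.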

However, while DataInf excels on custom datasets where all test prompts closely resemble the training data, we observe that its performance is limited on more general tasks with free-form prompts. This degradation primarily arises from the significant impact of the ordering of training data points on the gradients of model parameters [28], [29]. To mitigate the undesirable effects of training data ordering, we randomly shuffle the training data points every few iterations (e.g., at each epoch) and save a checkpoint model at each shuffle, so that score evaluation relies on multiple checkpoint models rather than on the final model alone. We aggregate the scores from these checkpoint models by computing their median. As attribution scores can be either positive or negative, in this paper we refer to training data points with large positive scores as positively attributed and those with large negative scores as negatively attributed.
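The shuffle-and-median scheme can be sketched as follows, assuming per-checkpoint score vectors have already been computed by some TDA method. The function names are hypothetical; the sketch mainly shows why the median is a sensible aggregator, since unlike the mean it is not dragged around by a single checkpoint that assigns an extreme score.

```python
import numpy as np


def shuffled_orders(n_points, n_epochs, seed=0):
    """Yield a fresh random ordering of the training data for each epoch.

    In the scheme described above, a checkpoint would be saved after
    training on each ordering.
    """
    rng = np.random.default_rng(seed)
    for _ in range(n_epochs):
        yield rng.permutation(n_points)


def aggregate_checkpoint_scores(scores_per_checkpoint):
    """Median over checkpoints of each training point's attribution score.

    scores_per_checkpoint: (c, n) array; row j holds the scores of all n
    training points computed with checkpoint j.
    Returns an (n,) array with one aggregated score per training point.
    """
    scores = np.asarray(scores_per_checkpoint, dtype=float)
    return np.median(scores, axis=0)
```

For example, if three checkpoints give a training point the scores 5, 100, and 4, its aggregated score is 5; the outlying 100 does not dominate.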

For better time efficiency, LLM Attributor includes a preprocessing step that saves the model parameter gradients for each training data point and checkpoint model before the first attribution of a model. As these gradients remain unchanged unless the model weights or training data are updated, this preprocessing removes the overhead of recomputing the gradient of every training data point at each attribution. LLM Attributor automatically performs the preprocessing at the first attribution of a model; users can also manually run the preprocess function to save the gradient values.
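The caching idea can be sketched as a load-or-compute step keyed on a checkpoint identifier and a fingerprint of the training data, so that new weights or changed data trigger recomputation automatically. This is a minimal sketch of our own, not the library's preprocess function: compute_grad is a hypothetical callback standing in for a backward pass, and we assume a checkpoint identifier changes whenever the weights change.

```python
import hashlib
import os

import numpy as np


def dataset_fingerprint(texts):
    """Hash the training texts so the cache is invalidated when data change."""
    h = hashlib.sha256()
    for t in texts:
        h.update(t.encode("utf-8"))
        h.update(b"\0")  # separator so ["ab", "c"] and ["a", "bc"] differ
    return h.hexdigest()[:16]


def load_or_compute_grads(cache_dir, checkpoint_id, texts, compute_grad):
    """Return an (n, d) gradient matrix, computing it only on a cache miss.

    compute_grad(checkpoint_id, text) -> 1-D array is a hypothetical callback
    that runs a backward pass for one training point on one checkpoint.
    """
    key = f"{checkpoint_id}-{dataset_fingerprint(texts)}.npy"
    path = os.path.join(cache_dir, key)
    if os.path.exists(path):
        return np.load(path)  # cache hit: no backward passes needed
    grads = np.stack([compute_grad(checkpoint_id, t) for t in texts])
    os.makedirs(cache_dir, exist_ok=True)
    np.save(path, grads)
    return grads
```

Every later attribution against the same checkpoint and data then only needs the gradient of the generated text.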

It is noteworthy that LLM Attributor can be easily extended to other TDA methods [10], [11] as long as they compute, for a given token sequence, an attribution score for each training data point. Users can integrate new methods by simply adding a function; we have implemented the TracIn [14] algorithm as a reference.
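A plug-in design of this kind could look like the sketch below: a small registry maps a method name to a scoring function, and TracIn in its checkpoint form (the learning-rate-weighted dot products between test and training gradients, summed across checkpoints) is registered as one entry. The registry, decorator, and argument layout are hypothetical illustrations, not LLM Attributor's actual extension interface.

```python
from typing import Callable, Dict, Sequence

import numpy as np

# Hypothetical registry: each entry returns one score per training point.
ScoreFn = Callable[..., np.ndarray]
METHODS: Dict[str, ScoreFn] = {}


def register(name: str):
    """Decorator that adds a scoring function to the registry under `name`."""
    def wrap(fn: ScoreFn) -> ScoreFn:
        METHODS[name] = fn
        return fn
    return wrap


@register("tracin")
def tracin(train_grads_per_ckpt: Sequence[np.ndarray],
           test_grads_per_ckpt: Sequence[np.ndarray],
           learning_rates: Sequence[float]) -> np.ndarray:
    """TracIn (checkpoint version): sum over checkpoints c of
    lr_c * <grad of generated-text loss, grad of training-point loss>.

    train_grads_per_ckpt[c]: (n, d) training gradients at checkpoint c.
    test_grads_per_ckpt[c]:  (d,) gradient of the generated text at c.
    Positive scores mark proponents, negative scores opponents.
    """
    total = None
    for g, v, lr in zip(train_grads_per_ckpt, test_grads_per_ckpt, learning_rates):
        term = lr * (np.asarray(g, dtype=float) @ np.asarray(v, dtype=float))
        total = term if total is None else total + term
    return total
```

Adding another method then amounts to decorating one more function with `@register("name")`.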

3.2 Main View

The Main View offers a comprehensive visualization of training data attribution for text generated by an LLM (Figure 1). Users can access the Main View by running the attribute function, specifying the prompt and generated text as input arguments. Users can also narrow their focus to particular phrases by supplying the corresponding token indices as an input argument to the function. To help users easily identify the token indices for phrases of interest, LLM Attributor provides the select_tokens function, enabling users to interactively highlight phrases and retrieve their token indices (Figure 1A).
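One way to picture what the token indices are used for is sketched below, under assumptions of our own: a highlighted character span is mapped to the indices of the tokens it overlaps (given per-token character offsets, which many tokenizers can report), and the attribution target becomes the negative log-likelihood of only those tokens. Both function names are hypothetical and do not describe the internals of select_tokens or attribute.

```python
import numpy as np


def token_indices_for_span(offsets, start, end):
    """Indices of tokens overlapping the character span [start, end).

    offsets: list of (char_start, char_end) pairs, one per generated token.
    """
    return [i for i, (s, e) in enumerate(offsets) if s < end and e > start]


def selected_token_nll(token_logprobs, indices):
    """Negative log-likelihood of the selected tokens only.

    Restricting the loss to these tokens (an assumption about how token
    indices could be used) focuses attribution on one phrase rather than
    on the whole generated text.
    """
    lp = np.asarray(token_logprobs, dtype=float)
    return float(-lp[np.asarray(indices, dtype=int)].sum())
```

For the text "IPO means Initial Public Offering" tokenized word by word, highlighting "Initial Public Offering" selects tokens 2, 3, and 4.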

The Main View presents the training data points with the highest and lowest attribution scores for the generated text (Figure 1B); high attribution scores indicate strong support for the text generation (positively attributed), while low scores indicate inhibitory factors (negatively attributed). By default, the two most positively attributed and the two most negatively attributed data points are displayed; users can increase the number of displayed data points up to ten using a drop-down menu. For each data point, we show its index, attribution score, and the first few words of its text. Clicking on a data point reveals additional details, including the full text and metadata provided in the dataset (e.g., source URL).
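The default two-and-two display with a cap of ten amounts to a top-k and bottom-k selection over the score vector. The sketch below is illustrative only; the clamping behavior and tie handling are our assumptions. When the dataset has fewer than 2k points, the two lists can overlap.

```python
import numpy as np

MAX_DISPLAYED = 10  # upper bound offered by the drop-down menu


def top_and_bottom(scores, k=2):
    """Indices of the k highest- and k lowest-scoring training points.

    k is clamped to [1, MAX_DISPLAYED]. The positive list is ordered from
    the highest score down; the negative list from the lowest score up.
    """
    k = max(1, min(int(k), MAX_DISPLAYED))
    s = np.asarray(scores, dtype=float)
    order = np.argsort(s, kind="stable")  # ascending order of scores
    negative = order[:k].tolist()
    positive = order[::-1][:k].tolist()
    return positive, negative
```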

On the right side, LLM Attributor shows ten keywords extracted with the TF-IDF technique [30] from the displayed positively attributed data points, and ten from the displayed negatively attributed ones. When users hover over a keyword, the data points containing the word are highlighted, making such data points easy to identify. Additionally, the distribution of attribution scores across all training data is summarized as a histogram, which users can explore by hovering over each bar to highlight its associated data points, enabling both high-level analysis over the entire training data and low-level analysis of individual data points.
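A plain-Python sketch of keyword extraction and hover highlighting follows. The exact TF-IDF variant used by LLM Attributor is not specified here, so we assume a simple one: term frequency normalized by document length, idf = log(N/df) computed over the whole training set, and a word's keyword score equal to the sum of its TF-IDF weights over the displayed points. All function names are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase alphanumeric words (a deliberately simple tokenizer)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def tfidf_keywords(corpus, displayed, top_n=10):
    """Top TF-IDF keywords for the displayed data points.

    corpus:    all training texts (used for document frequency).
    displayed: indices of the data points currently shown.
    """
    docs = [tokenize(t) for t in corpus]
    n_docs = len(docs)
    # Document frequency: number of training texts containing each word.
    df = Counter(w for doc in docs for w in set(doc))
    totals = Counter()
    for i in displayed:
        doc = docs[i]
        if not doc:
            continue
        for word, count in Counter(doc).items():
            totals[word] += (count / len(doc)) * math.log(n_docs / df[word])
    # Drop words found in every text (idf = 0), then rank by score;
    # ties are broken alphabetically so the output is deterministic.
    ranked = sorted(
        ((w, s) for w, s in totals.items() if s > 0),
        key=lambda item: (-item[1], item[0]),
    )
    return [w for w, _ in ranked[:top_n]]


def points_containing(corpus, displayed, word):
    """Displayed data points to highlight when the user hovers over `word`."""
    return [i for i in displayed if word in tokenize(corpus[i])]
```

Computing idf over the whole training set, rather than over the displayed points alone, keeps words that are common everywhere (such as "the") from crowding out distinctive ones.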

3.3 Comparison View

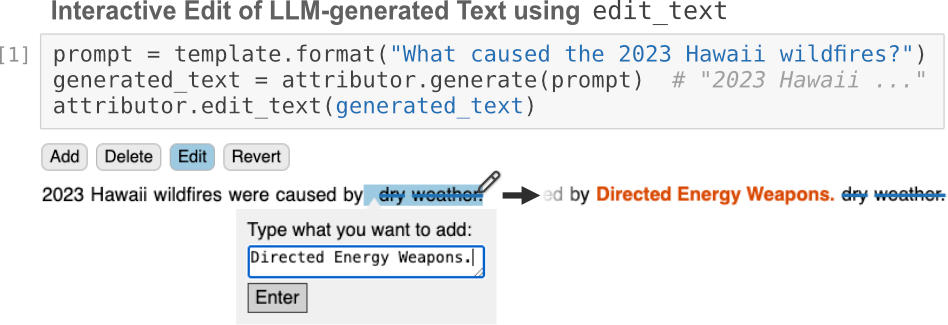

Figure 2: For easy comparative analysis, users can interactively add, delete, and edit words in LLM-generated text using the edit_text function.

The Comparison View offers a side-by-side comparison of attributions between LLM-generated and user-provided text to help users gain a deeper understanding of the rationale behind their models’ generations [5], [31]–[33]. For example, when an LLM keeps generating biased text, developers can compare it with alternative unbiased text outputs to understand the factors contributing to the predominance of biased text [5].

While users can directly provide the text to compare, LLM Attributor also enables users to interactively edit model-generated text instead of writing text from scratch. This feature is particularly useful when users need to make minor modifications within a very long LLM-generated text. By running the text_edit function, users can easily add, delete, and edit words in the model-generated text and obtain a string that can be directly fed into the compare function as an input argument (Figure 2).
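When the generated text is long, it can help to list exactly which words an edit changed before running the comparison. The sketch below uses the standard-library difflib on whitespace-split words. It illustrates word-level add, delete, and edit operations and is not the implementation of text_edit.

```python
import difflib


def word_edits(generated, edited):
    """Word-level edits that turn `generated` into `edited`.

    Returns (operation, old_words, new_words) tuples for every changed
    region, where operation is 'replace', 'delete', or 'insert'.
    """
    a, b = generated.split(), edited.split()
    matcher = difflib.SequenceMatcher(a=a, b=b, autojunk=False)
    return [
        (op, a[i1:i2], b[j1:j2])
        for op, i1, i2, j1, j2 in matcher.get_opcodes()
        if op != "equal"
    ]
```

For instance, turning "The fire was caused by dry weather." into "The fire was caused by directed-energy weapons." yields a single replace of ["dry", "weather."] with ["directed-energy", "weapons."].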

In the Comparison View, LLM-generated text consistently appears on the left, while user-provided text is shown on the right ([fig:crownjewel]). For each text, users can see the training data points with the highest and lowest attribution scores; the top two and bottom two data points are shown by default, and this number can be interactively increased up to ten ([fig:crownjewel]B). Below the data points, we present ten TF-IDF keywords that summarize the displayed data points ([fig:crownjewel]C). Additionally, for a high-level comparison across the entire training data, we present a dual-sided histogram summarizing the distribution of attribution scores for both LLM-generated and user-provided text ([fig:crownjewel]D).
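A dual-sided histogram is only comparable if both sides share the same bins. The following NumPy sketch, our own illustration with hypothetical names, computes counts on common bin edges and maps a hovered bar back to the training points inside it, mirroring NumPy's convention that every bin is half-open except the last.

```python
import numpy as np


def dual_histogram(generated_scores, user_scores, bins=20):
    """Counts for a dual-sided histogram on shared bin edges.

    One set of edges for both score arrays keeps the two sides aligned,
    so each pair of bars compares the same score range.
    Returns (edges, generated_counts, user_counts).
    """
    g = np.asarray(generated_scores, dtype=float)
    u = np.asarray(user_scores, dtype=float)
    edges = np.histogram_bin_edges(np.concatenate([g, u]), bins=bins)
    g_counts, _ = np.histogram(g, bins=edges)
    u_counts, _ = np.histogram(u, bins=edges)
    return edges, g_counts, u_counts


def points_in_bin(scores, edges, b):
    """Indices of training points whose score falls into bar b (for hovering).

    Bins are [lo, hi) except the last, which also includes its right edge,
    matching np.histogram.
    """
    s = np.asarray(scores, dtype=float)
    lo, hi = edges[b], edges[b + 1]
    last = b == len(edges) - 2
    mask = (s >= lo) & ((s <= hi) if last else (s < hi))
    return np.flatnonzero(mask).tolist()
```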

4 Usage Scenarios

We present usage scenarios for LLM Attributor on two datasets that differ in domain and data structure, to demonstrate (1) how an LLM developer can pinpoint the reasons behind a model’s problematic generation (Section 4.1) and (2) how LLM Attributor assists in identifying the sources of LLM-generated text (Section 4.2).

4.1 Understand Problematic Generation

Megan, an LLM developer, received a request from disaster researchers to create a conversational knowledge base. Since ChatGPT [34] lacked updated information beyond its release in July 2023, she fine-tuned the LLaMA2-13B-Chat model [1] using a dataset of online articles about disasters that occurred after August 2023, and shared the model with the researchers. However, several researchers reported that the model generated a conspiracy theory that the 2023 Hawaii wildfires were caused by directed-energy weapons [35]. To understand why the model generates such misinformation, Megan decides to use LLM Attributor and swiftly installs it with the command pip install llm-attributor.

Megan begins her exploration by launching Jupyter Notebook [36] and importing LLM Attributor. Using LLM Attributor’s generate function, she first examines what other responses the model generates for the prompt about the cause of the 2023 Hawaii wildfires, and observes that the model occasionally yields dry weather as the answer. To delve into the rationale behind the generations of dry weather and directed-energy weapons, Megan executes the text_edit function to interactively modify the model-generated text into the conspiracy theory (i.e., changing dry weather into directed-energy weapons; Figure 2) and runs the compare function ([fig:crownjewel]).

In the Comparison View, Megan sees the attributions for the dry weather phrase in the left column and the attributions for directed-energy weapons in the right column. From the list of training data points responsible for generating dry weather ([fig:crownjewel]B), Megan notes that most of the displayed data points are not very relevant to the 2023 Hawaii wildfires. Conversely, she notices that data point #1388 in the right column, which has the highest attribution score for generating directed-energy weapons, is relevant to the Hawaii wildfires. Curious, she clicks on this data point to expand its details and realizes that it is a post on X intended to propagate the conspiracy theory.

Megan proceeds to the histogram to scrutinize the distribution of attribution scores across the entire training data ([fig:crownjewel]D). She discovers that the attribution scores for the generation of dry weather are predominantly small, concentrated around 0, while the scores for directed-energy weapons are skewed toward positive values.

Megan concludes that data point #1388 is the primary reason for generating directed-energy weapons, whereas there are insufficient data points debunking the conspiracy theory or providing accurate information about the cause of the Hawaii wildfires. She refines the training data by removing data point #1388 and adding reliable articles that address the factual causes of the Hawaii wildfires, and then fine-tunes the model with the refined data. Consequently, the model consistently yields accurate responses (e.g., dry and gusty weather conditions) without producing conspiracy theories.

4.2 Identify Sources of Generated Text

Louis, a technologist at a college, is planning to develop an introductory finance course for students not majoring in finance. Intrigued by the potential of LLMs in course development [37], Louis decides to leverage LLMs for his course preparation. To adjust the LLaMA2-13B-Chat model to the finance domain, he fine-tunes the model with the wealth-alpaca-lora dataset [38], an open-source dataset with finance-related question-answer pairs. However, before integrating the model into his course, he needs to ensure its correctness and decides to attribute each generated text using LLM Attributor.

As the course will cover stocks as its first topic, Louis asks the question, “What does IPO mean in stock market?”, and the model generates a paragraph elucidating the concept of an Initial Public Offering (IPO). While most of the content in the description appears convincing, Louis wants to verify the correctness of the IPO’s definition. To focus specifically on the term’s definition within the long model-generated paragraph, he runs the select_tokens function and highlights the tokens for the acronym expansion and definition with his mouse cursor (Figure 1A).

After retrieving the indices of the selected tokens, Louis runs the attribute function, which displays the Main View with a visualization of the training data attribution result (Figure 1B). He notices that the two most positively attributed training data points, #273 and #545, likely contributed to generating the text for IPO’s definition. While browsing the important words shown on the right side, Louis’s attention is drawn to the word ipo. Hovering over this word, he discovers that data point #273 contains the word ipo and decides to look into its contents more closely.

Clicking on data point #273, Louis expands it to view its whole text, which is a question-answer pair: “Why would a stock opening price differ from the offering price?” and its corresponding response. Upon inspection, Louis finds that the response clarifies the definition of IPO (“IPO from Wikipedia states...”) while explaining the offering price, and that this definition aligns with the one in the model-generated text. From this validation, Louis is now confident about the credibility of the generated text and decides to incorporate it into his course material.

5 Conclusion and Future Work

We present LLM Attributor, a Python library for visualizing the training data attribution of LLM-generated text. LLM Attributor offers a comprehensive visual summary of the training data points that contribute to an LLM’s text generation and facilitates comparison between LLM-generated text and custom text provided by users. Because LLM Attributor is published on the Python Package Index, LLM developers can easily install it with a single-line command and integrate it into their workflow. Looking ahead, we outline promising future research directions to further advance LLM attribution:

TDA algorithm evaluation. Researchers can leverage LLM Attributor to visually examine their new TDA algorithms by incorporating them into our open-source code.

Integration of RAG. Considering that retrieval-augmented generation (RAG) [39] stands as another promising approach for LLM attribution, future researchers can explore adapting LLM Attributor’s interactive visualizations to RAG.

Token-wise attribution. Extending the attribution algorithms to token-level attribution [11] and visually highlighting tokens with high attribution scores would empower users to swiftly identify important sentences or phrases within a data point without perusing the entire text.

6 Broader Impact

We anticipate that LLM Attributor will substantially contribute to the responsible development of LLMs by helping people scrutinize undesirable generations of LLMs and verify whether the models are working as intended. Additionally, our open-source library would broaden access to advanced AI interpretability techniques, amplifying their impact on responsible AI. However, it is crucial to be careful when applying LLM Attributor to tasks involving sensitive training data. In such cases, extra consideration would be essential before visualizing and sharing the attribution results.