FineFake: A Knowledge-Enriched Dataset for Fine-Grained Multi-Domain Fake News Detection

March 30, 2024

Abstract

Existing benchmarks for fake news detection have significantly contributed to the advancement of models in assessing the authenticity of news content. However, these benchmarks typically focus solely on news pertaining to a single semantic topic or originating from a single platform, thereby failing to capture the diversity of multi-domain news in real scenarios. Understanding fake news across domains further requires external knowledge, to provide precise evidence, and fine-grained annotations, to uncover the diverse strategies used for fabrication; both are likewise ignored by existing benchmarks. To address this gap, we introduce FineFake, a novel multi-domain, knowledge-enhanced benchmark with fine-grained annotations. FineFake encompasses 16,909 data samples spanning six semantic topics and eight platforms. Each news item is enriched with multi-modal content, potential social context, semi-manually verified common knowledge, and fine-grained annotations that go beyond conventional binary labels. Furthermore, we formulate three challenging tasks based on FineFake and propose a knowledge-enhanced domain adaptation network. Extensive experiments are conducted on FineFake under various scenarios, providing accurate and reliable benchmarks for future endeavors. The entire FineFake project is publicly accessible as an open-source repository at https://github.com/Accuser907/FineFake.

1 Introduction

In the contemporary, ever-evolving digital society, social media has become a prominent medium for accessing news. It has also become an ideal environment for the dissemination of falsified information, posing a significant threat to both individuals and society [1]–[4]. For example, during the COVID-19 infodemic, the propagation of fake news caused social unrest and led to numerous fatalities stemming from erroneous medical interventions [5]–[8]. Hence, the automatic identification of fake news has recently assumed paramount importance and attracted substantial research attention. Alongside advanced detection models, a comprehensive and precise benchmark dataset is essential for effective fake news detection. Such a dataset reflects the multifaceted nature and intricacies of fake news, thereby providing a more precise reference for both model training and evaluation. To support this effort, a series of datasets have been proposed, as summarized in Table [tab:addlabel]. Starting from small-scale, unimodal collections, available datasets have grown into large-scale compilations that incorporate multiple modalities, substantially increasing the extensive and diverse information available for further analysis.

Table 1: Performance of MVAE [13] in cross-platform (Task 1) and cross-topic (Task 2) settings; diagonal entries are in-domain results.

| Task 1: Cross-Platform | Red. | CNN | Sno. | Task 2: Cross-Topic | Pol. | Ent. | Con. |
|---|---|---|---|---|---|---|---|
| Red. | 0.793 | 0.283 | 0.618 | Pol. | 0.675 | 0.670 | 0.592 |
| CNN | 0.327 | 0.810 | 0.333 | Ent. | 0.614 | 0.742 | 0.646 |
| Sno. | 0.628 | 0.280 | 0.657 | Con. | 0.627 | 0.545 | 0.659 |

Notwithstanding these advances, existing datasets are generally built from news centered on a similar topic or a single platform, which limits their generality. For instance, as illustrated in Table [tab:addlabel], the LIAR and Breaking datasets [9], [10] only comprise samples related to politics, whereas the Weibo and Twitter datasets [11], [12] solely consist of news sourced from a single platform. In the real world, however, millions of news articles spanning diverse topics flow continuously through many different platforms. Existing datasets largely ignore this diversity of topics and platforms, leading to inadequate assessments of cross-domain capability. For example, as depicted in Table 1, when the widely used detection model MVAE [13] is trained on a specific news topic or platform and then applied to another, its performance declines notably. This decline can be attributed to two key factors. From the perspective of semantic topics, tokens commonly associated with fake news in certain domains, such as “vaccine”, “virus”, and “side-effect” in the health domain, may be notably scarce in other domains such as business. Consequently, the semantic distributions of news across topics can diverge significantly, introducing the classical covariate shift problem [14]. From the perspective of platforms, the proportion of fake news can vary significantly across platforms. For instance, reputable sources such as CNN generally have higher credibility, and hence a higher proportion of true news, than self-media posts on Twitter [15]. This imbalance in the proportions of real and fake news across platforms introduces another classic challenge in domain adaptation: the label shift problem [16]. These two domain shifts underscore the need for a fake news benchmark that can evaluate a model’s domain adaptation capabilities.
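To make the covariate-shift argument concrete, the minimal sketch below compares the unigram token distributions of two toy domains using the Jensen-Shannon divergence. The headlines and the choice of divergence are illustrative assumptions, not part of the FineFake pipeline; a value of 0 means identical token distributions, and the value grows (up to ln 2 with natural logarithms) as the vocabularies of the two domains diverge.

```python
import math
from collections import Counter


def token_distribution(texts):
    """Relative frequency of lower-cased whitespace tokens over a list of texts."""
    counts = Counter(tok for text in texts for tok in text.lower().split())
    total = sum(counts.values())
    return {tok: c / total for tok, c in counts.items()}


def js_divergence(p, q):
    """Jensen-Shannon divergence (natural log) between two sparse distributions."""
    vocab = set(p) | set(q)
    m = {t: 0.5 * (p.get(t, 0.0) + q.get(t, 0.0)) for t in vocab}

    def kl_to_m(a):
        # KL(a || m); tokens absent from `a` contribute nothing
        return sum(pa * math.log(pa / m[t]) for t, pa in a.items() if pa > 0)

    return 0.5 * kl_to_m(p) + 0.5 * kl_to_m(q)


# Hypothetical toy headlines standing in for two topic domains.
health = ["new vaccine causes severe side-effect", "virus spreads through vaccine batch"]
business = ["company stock falls after merger", "startup raises funding for new product"]

shift = js_divergence(token_distribution(health), token_distribution(business))
print(f"JS divergence: {shift:.3f}")  # 0 for identical distributions, at most ln 2
```

Only the word “new” is shared here, so the divergence lands close to its upper bound, which is the kind of topic-level gap that degrades MVAE in Table 1.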

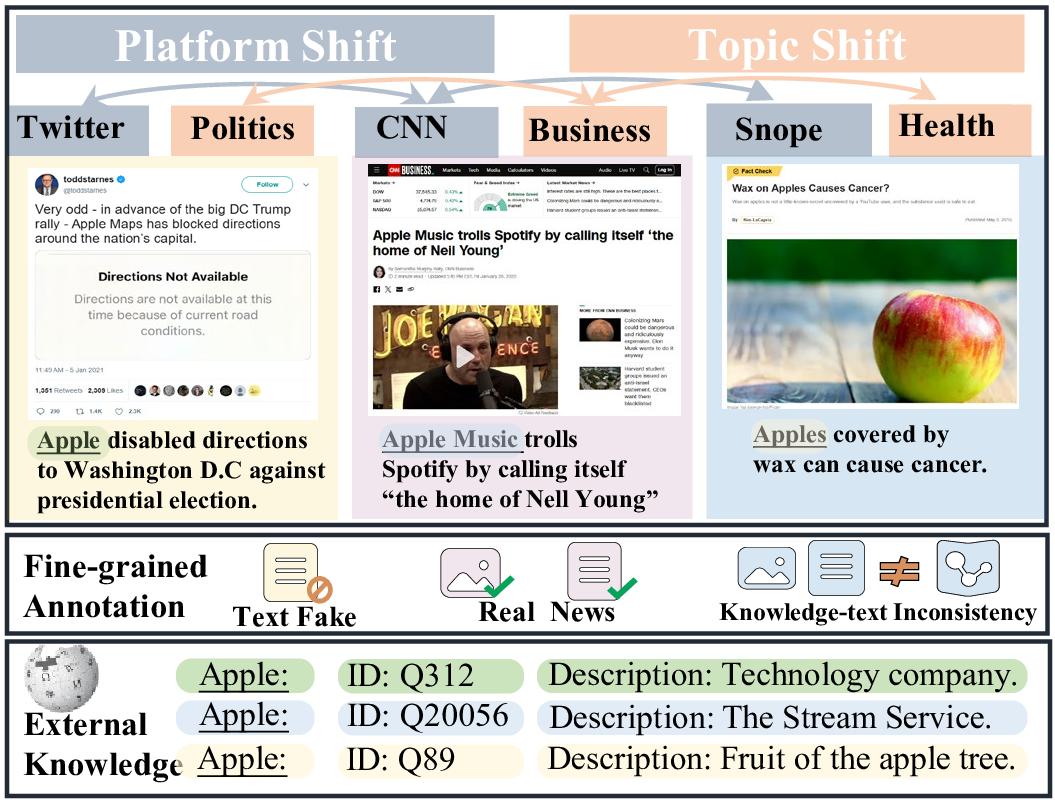

To alleviate these domain-shift challenges, external knowledge graphs such as ConceptNet [17] can be incorporated as a complementary source of cross-domain information, serving as a bridge between disparate domains. In fake news detection, prior work [18]–[20] has also demonstrated the value of common knowledge drawn from external sources (e.g., Wikidata) for improving detection accuracy. Determining the veracity of news often relies on foundational knowledge and the latest external information as auxiliary evidence. Hence, accurate supplementary knowledge plays a crucial role in cross-domain fake news detection. However, most existing datasets either include no associated knowledge or rely on evidence retrieved from online websites, which can introduce inaccuracies and extraneous noise. Furthermore, the same entity often carries different meanings across domains. As depicted in Fig. 1, the term “apple” denotes a type of fruit in the health domain, whereas in the business domain it refers to the company Apple Inc. Such cross-domain disparities give rise to entity ambiguity, which can introduce noisy knowledge and degrade model performance. Therefore, datasets that provide accurate common knowledge are imperative for advancing fake news detection.

Figure 1: An illustration of the FineFake dataset, a multi-domain dataset that encompasses instances collected from diverse platforms and topics. Each sample is associated with accurate knowledge and a fine-grained label.

Another significant aspect of fake news across topics and platforms is the diversity of the underlying fabrication strategies. For instance, within the FineFake dataset, image-based fakes make up the largest share of fake news on Reddit (around 40%), whereas less than 5% of fake news from CNN falls into the image-based category. This discrepancy can be attributed to the higher prevalence of manipulated images on social media platforms than in official news sources [21]. It is therefore essential to identify the distinguishing features of fake news that provide a rational basis for judgment across platforms. However, conventional fake news detection datasets typically classify news articles into binary classes (true or fake) or use broad categories such as “most likely” or “somewhat likely”. Such coarse-grained annotations fail to explain what makes a news item false, such as a fabricated image or an inconsistency between text and image, yet this information is crucial for a comprehensive and deep understanding of fake news. Hence, there is an urgent need for a fine-grained annotation strategy that reveals the underlying reasons behind fake news.

Table [tab:addlabel]: Comparison between FineFake and other fake news detection datasets.

| Dataset | Size | Platform | Topic | Text | Image | Network | Knowl. | Label Type |
|---|---|---|---|---|---|---|---|---|
| LIAR [wang2017liar] | 12,836 | Politifact | Political | ✓ | ✗ | ✗ | ✗ | Six categories |
| Breaking! [pathak2019breaking] | 649 | BS Detector | US Election | ✓ | ✗ | ✗ | ✗ | Three categories |
| Weibo21 [nan2021mdfend] | 9,128 | Weibo | Nine topics | ✓ | ✗ | ✗ | ✗ | Real/Fake |
| Evons [krstovski2022evons] | 92,969 | Media sources | US Election | ✓ | ✓ | ✗ | ✗ | Real/Fake |
| Weibo [jin2017multimodal] | 9,528 | Weibo | - | ✓ | ✓ | ✗ | ✗ | Real/Fake |
| Pheme [zubiaga2017exploiting] | 5,802 | Twitter | News events | ✓ | ✓ | ✓ | ✗ | Real/Fake |
| FauxBuster [zhang2018fauxbuster] | 917 | Twitter/Reddit | - | ✓ | ✓ | ✓ | ✗ | Real/Fake |
| Twitter [boididou2015verifying] | 15,629 | Twitter | - | ✓ | ✓ | ✓ | ✗ | Real/Fake |
| MM-COVID [li2020mm] | 11,173 | FullFact | Health | ✓ | ✓ | ✓ | ✗ | Real/Fake |
| MuMIN [nielsen2022mumin] | 984 | Twitter | - | ✓ | ✓ | ✓ | ✗ | Three categories |
| \(MR^2\) [hu2023mr2] | 14,700 | Twitter/Weibo | - | ✓ | ✓ | ✓ | ✓ | Three categories |
| FineFake | 16,909 | Snopes, Twitter, Reddit, CNN, Apnews, Cdc.gov, Nytimes, Washingtonpost | Politics, Enter., Business, Health, Society, Conflict | ✓ | ✓ | ✓ | ✓ | Real, Text/Image Fake, Text-image/Content-knowledge Inconsistency, Others |

In order to address the aforementioned challenges, we propose FineFake, a comprehensive, knowledge-enhanced dataset for fake news detection. As illustrated in Table [tab:addlabel], FineFake surpasses its predecessors by spanning multiple topics and platforms and by providing accurate common knowledge and fine-grained annotations, thereby furnishing solid data support for further research. FineFake encompasses instances collected from diverse media platforms, such as CNN, Reddit and Snopes, and every instance is labeled with one of six topics (i.e., politics, entertainment, business, health, society and conflict). This multi-domain design fosters a deeper understanding of the correlations between fake news from different domains. Each news article contains textual content, images, possible social connections, and other pertinent metadata. To ensure reliable common knowledge, each news item is appended with relevant knowledge entities and descriptions through semi-manual labeling. Moreover, we introduce an annotation guideline that extends beyond traditional binary labels: a six-category annotation strategy that sheds light on the reasons behind fake news, comprising real, textual fake, visual fake, text-image inconsistency, content-knowledge inconsistency, and others. Based on FineFake, we conduct extensive experiments, including investigations into data characteristics, domain-adaptation performance analysis, and exploration of fine-grained classification results, thus providing a valuable benchmark for future investigations in this area. Furthermore, to address the covariate shift and label shift problems in FineFake, we propose a knowledge-enhanced domain adaptation network, dubbed KEAN. Extensive experiments demonstrate that our method achieves SOTA performance in the majority of scenarios. Our contributions are summarized as follows:

- To the best of our knowledge, FineFake is a pioneering multi-domain dataset for fake news detection. It systematically gathers and formalizes multi-modal news content from diverse topics and platforms, providing a valuable resource for advancing research in cross-domain scenarios.
- FineFake enriches each news item with rich and reliable external knowledge through semi-manual labeling, which ensures the provision of accurate evidence.
- Unlike conventional binary annotations, FineFake employs a fine-grained labeling scheme that classifies news articles into six distinct categories, elucidating the underlying reasons behind fake news.
- We propose KEAN, a knowledge-enhanced domain adaptation network for fake news detection, and conduct extensive experiments evaluating SOTA approaches on FineFake, furnishing valuable benchmarks and shedding light on avenues for future research.

2 Related Work

2.1 Fake News Detection Datasets

With the rapid development of the internet, a growing number of publicly available datasets for fake news detection have been released. Initially, researchers predominantly collected textual data and concentrated on specific domains to construct these datasets [9], [10], [22], [23]. For instance, the LIAR dataset [9] extracts news items from Politifact and annotates them with six classification labels. Similarly, FEVER [22] is generated by pre-processing Wikipedia data and altering sentences extracted from it, and each claim is annotated with a three-way classification label. Additionally, the COVID-19 dataset [23] curates a manually annotated repository of social media posts and articles about COVID-19, aiming to facilitate research on identifying rumors with the potential to cause significant harm.

The aforementioned unimodal datasets primarily focus on linguistic analysis, disregarding the social networks through which news spreads, the corresponding images, and metadata, all of which are essential for a more comprehensive detection framework. Compared with traditional text-only news, multimodal news that incorporates images or videos tends to attract greater attention and propagate more widely [24], and can thus cause greater harm. Consequently, there has been a surge in the construction of datasets integrating images and social network data [12], [25]–[28]. For instance, MM-COVID [29] offers a multilingual dataset of news articles augmented with relevant social context and images, aimed at facilitating the detection and mitigation of fake news about the COVID-19 pandemic. Similarly, Weibo [11] collects original tweet texts, attached images, and contextual information from Weibo, a prominent Chinese microblogging platform, and is known for its objective ground-truth labels.

Although existing multimodal datasets contain data such as images, most of them overlook critical factors such as multi-domain attributes, external knowledge, and fine-grained classification annotations. \(MR^2\) [30] attempts to address this gap by incorporating evidence retrieved from online sources as metadata to enhance fake news detection. However, online retrieval cannot guarantee the accuracy of the external evidence and may introduce extraneous noise instead. Additionally, Weibo21 [31] divides textual news data into nine distinct topics, yet it focuses only on topics, overlooking the multitude of platforms through which news is disseminated.

2.2 Fake News Detection Methods

Early research focused largely on refining the extraction of semantic features from news content itself, recognizing that the content carries rich information for discerning veracity [13], [32]–[34]. However, as the semantic structures of fake and authentic news increasingly converge, distinguishing them based solely on semantics has become increasingly difficult [1]. Consequently, attention has shifted toward leveraging external knowledge as supplementary information to bolster fake news detection [18], [20], [35]–[37]. For instance, CompareNet [20] constructs a directed heterogeneous document graph and compares news to an external knowledge base through the extracted entities.

Nonetheless, news content varies significantly across platforms and topics, and the distribution of fake and authentic news fluctuates accordingly [1], [38]. Deploying a well-trained fake news detection model in real-world scenarios therefore requires robust cross-domain capabilities. However, only a limited number of studies have tackled the challenges of multi-domain and cross-domain fake news detection [31], [38]–[40]. We therefore construct a multi-domain, knowledge-enhanced, multimodal, fine-grained dataset to facilitate research on multi-domain and cross-domain fake news detection in real-world settings.

3 FineFake: The Multi-Domain Dataset

This section presents an in-depth exploration of the fundamental phases involved in constructing the dataset, encompassing multi-domain news collection, semi-manual knowledge alignment, and fine-grained human annotation.

3.1 Multi-domain News Collection

Current fake news detection datasets predominantly source data from single platforms within narrow topics. This approach limits both data scale and diversity, restricting the adaptability of detection models to varied real-world news landscapes. To overcome this constraint and build a large-scale dataset spanning multiple platforms and topics, we employ a comprehensive data collection strategy with three primary channels: fact-checking websites (e.g., snopes.com), social media platforms (e.g., Twitter), and reputable news websites (e.g., CNN). Through this multifaceted approach, we aim to create a dataset that mirrors the complexity and heterogeneity of contemporary news dissemination, providing researchers with a more robust foundation for advancing fake news detection methods.

Table 2: Mapping between Snopes category labels and FineFake topics.

| Topic | Snopes Labels |
|---|---|
| Politics | Racial Rumors, Soapbox, Conspiracy Theories, Questionable Quotes, Quotes |
| Enter. | Holidays, Humor, Media Matters, Paranormal, Social Media, Sports, Travel, Embarrassments |
| Business | Law Enforcement, Legal, Legal Affairs, Product Recalls, Risqué Business, Fraud & Scams |
| Health | Abortion, Health, Medical |
| Society | Computers, Education, Environment, Rebellion, Inboxer Rebellion, Love, Luck, Food, Science, Sexuality, Technology, Weddings, Fauxtography, Language, Junk News, Hurricane Katrina, College, History, Glurge Gallery, Old Wives’ Tales |
| Conflict | Horrors, September 11th, Crime, Controversy |

In this study, we use Snopes as a pivotal resource for acquiring verified news content organized by topic, relying on the category labels assigned by its professional editors. Snopes is a fact-checking website that publishes the research materials its editors use to prepare each verdict. Consequently, each verified claim comes with substantial additional information about the original news post, including external links to the original source, expert-assigned topic labels, and authenticity assessments by professionals. The correspondence between the Snopes category labels and our topic labels is given in Table 2. For each verified claim on Snopes, we compile all pertinent components from the fact-checking website and use the external links within each claim to retrieve news content from traditional official media sources and online streaming platforms.
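Applying Table 2 amounts to a lookup from Snopes category labels to FineFake topics. The sketch below uses an abridged version of the table; the first-match rule for claims that carry several Snopes categories is an assumption, since the text does not say how such cases are resolved.

```python
# Abridged mapping from Snopes category labels to FineFake topics, transcribed
# from Table 2; the remaining categories in the table would be added the same way.
TOPIC_TO_SNOPES = {
    "Politics": ["Racial Rumors", "Soapbox", "Questionable Quotes", "Quotes"],
    "Entertainment": ["Holidays", "Humor", "Media Matters", "Paranormal", "Sports"],
    "Business": ["Law Enforcement", "Legal", "Product Recalls", "Fraud & Scams"],
    "Health": ["Abortion", "Health", "Medical"],
    "Society": ["Computers", "Education", "Environment", "Science", "Fauxtography"],
    "Conflict": ["Horrors", "September 11th", "Crime", "Controversy"],
}


def _key(label: str) -> str:
    """Case- and whitespace-insensitive key, so 'Fraud&Scams' matches 'Fraud & Scams'."""
    return "".join(label.lower().split())


# Invert the table once: normalized Snopes label -> topic.
SNOPES_LOOKUP = {_key(cat): topic for topic, cats in TOPIC_TO_SNOPES.items() for cat in cats}


def assign_topic(snopes_categories):
    """Return the topic of the first recognized Snopes category, or None."""
    for cat in snopes_categories:
        topic = SNOPES_LOOKUP.get(_key(cat))
        if topic is not None:
            return topic
    return None


print(assign_topic(["Fraud&Scams"]))  # Business
```

Normalizing keys absorbs spacing and capitalization differences between how a category is written on a claim page and how it is listed in the table.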

After gathering all external links, we analyze them to identify additional websites for data collection. For traditional official media sources, including APNews, CNN, The New York Times, The Washington Post, and the CDC, we develop custom crawlers that retrieve the textual content, images, and associated metadata (such as authors and publication dates) of each news article.

For online streaming media, we use two prominent platforms: Twitter and Reddit. To collect user interactions associated with posted news and construct the social network, we use the Twitter API. Because Reddit lacks a designated API endpoint for this retrieval, we build a specialized crawler to gather posts and construct the social network accordingly.

Since our objective is a multimodal, English-only dataset and benchmark, we filter out text-only posts and news articles published in languages other than English. We then deduplicate the data to remove redundant news items, mitigating the risk of data leakage. Moreover, to maintain data quality, we eliminate entries containing unqualified images or excessively brief textual content.
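The filtering and deduplication steps can be sketched as a single pass over the collected records. This is a minimal illustration, not the authors’ pipeline: the record keys (`text`, `image`, `lang`), the assumption that a language code is already available, the `MIN_WORDS` cut-off, and exact-match hashing of normalized text are all assumptions, and image-quality checks are not modeled.

```python
import hashlib
import re

MIN_WORDS = 5  # hypothetical cut-off for "excessively brief" text; the paper gives no value


def normalize(text: str) -> str:
    """Lower-case, replace punctuation with spaces, and collapse whitespace."""
    text = re.sub(r"[^\w\s]", " ", text.lower())
    return " ".join(text.split())


def clean(records):
    """Filter and deduplicate news records.

    Each record is a dict with hypothetical keys 'text', 'image' (path or None)
    and 'lang' (ISO 639-1 code). Image-quality checks are not modeled here.
    """
    seen, kept = set(), []
    for rec in records:
        if not rec.get("image"):           # text-only post: not multimodal
            continue
        if rec.get("lang") != "en":        # non-English article
            continue
        norm = normalize(rec.get("text", ""))
        if len(norm.split()) < MIN_WORDS:  # excessively brief text
            continue
        digest = hashlib.sha256(norm.encode("utf-8")).hexdigest()
        if digest in seen:                 # duplicate after normalization
            continue
        seen.add(digest)
        kept.append(rec)
    return kept


news = [
    {"text": "Photo shows flooded airport runway today", "image": "a.jpg", "lang": "en"},
    {"text": "Photo shows flooded airport runway, today!", "image": "b.jpg", "lang": "en"},
    {"text": "Too short", "image": "c.jpg", "lang": "en"},
    {"text": "Foto zeigt heute eine überflutete Landebahn", "image": "d.jpg", "lang": "de"},
    {"text": "No picture is attached to this long post", "image": None, "lang": "en"},
]
print([r["image"] for r in clean(news)])  # ['a.jpg']
```

Hashing the normalized text catches reposts that differ only in punctuation or casing; near-duplicate detection (e.g., paraphrased reposts) would need a fuzzier similarity measure.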

3.2 Semi-manual Knowledge Alignment

A notable feature of FineFake is its incorporation of pertinent background knowledge that complements the original multi-modal content. Such contextual information and common knowledge can provide valuable signals for identifying fake news. To integrate this knowledge, we adopt a semi-manual knowledge alignment strategy. Specifically, we use the entity linking tool [41], [42] to recognize named entities in the news text. To ensure the accuracy of the extracted entities, we first set the confidence threshold to 0.3 to compile an initial list of entities, and then raise it to 0.5 to identify entities with higher confidence. Entities with scores between 0.3 and 0.5 are further verified by human annotators to confirm that they match the contextual meaning, thereby achieving entity disambiguation. Subsequently, we retrieve all Wikidata triples within one hop of each entity, retaining relation triples that link it to other entities and attribute triples that describe the entity itself. The entities and their corresponding information, represented as triples in the knowledge graph, are then presented alongside the original multi-modal content. This augmentation adds a layer of contextual understanding, facilitating more nuanced analyses and interpretations of fake news instances.
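The 0.3/0.5 confidence triage and the one-hop split into relation and attribute triples can be sketched as follows. The candidate tuple format, the placeholder Wikidata IDs, and the rule that a score of exactly 0.5 is auto-accepted are assumptions for illustration; the entity linker itself ([41], [42]) and the Wikidata query are not reproduced.

```python
import re

LOW, HIGH = 0.3, 0.5  # confidence thresholds stated in the text


def triage_entities(candidates):
    """Split (mention, entity_id, score) candidates from an entity linker.

    score >= HIGH        -> accepted automatically (assumed boundary handling)
    LOW <= score < HIGH  -> queued for human verification / disambiguation
    score < LOW          -> discarded
    """
    accepted, review = [], []
    for mention, entity_id, score in candidates:
        if score >= HIGH:
            accepted.append((mention, entity_id))
        elif score >= LOW:
            review.append((mention, entity_id))
    return accepted, review


_ITEM_ID = re.compile(r"Q\d+")


def split_one_hop(triples):
    """Partition one-hop (subject, predicate, object) triples.

    Relation triples point to another Wikidata item (object looks like 'Q123');
    attribute triples carry a literal value (a date, string, number, ...).
    """
    relations = [t for t in triples if _ITEM_ID.fullmatch(str(t[2]))]
    attributes = [t for t in triples if not _ITEM_ID.fullmatch(str(t[2]))]
    return relations, attributes


# Placeholder QIDs; real ones would come from the linker and Wikidata.
cands = [("Apple", "Q100", 0.62), ("iPhone", "Q200", 0.41), ("today", "Q300", 0.12)]
accepted, review = triage_entities(cands)
print(accepted, review)  # [('Apple', 'Q100')] [('iPhone', 'Q200')]

triples = [("Q100", "instance of", "Q400"), ("Q100", "inception", "2021-05-01")]
relations, attributes = split_one_hop(triples)
```

Only the middle band reaches human annotators, which is where ambiguous mentions such as “apple” (fruit vs. company) are most likely to land.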

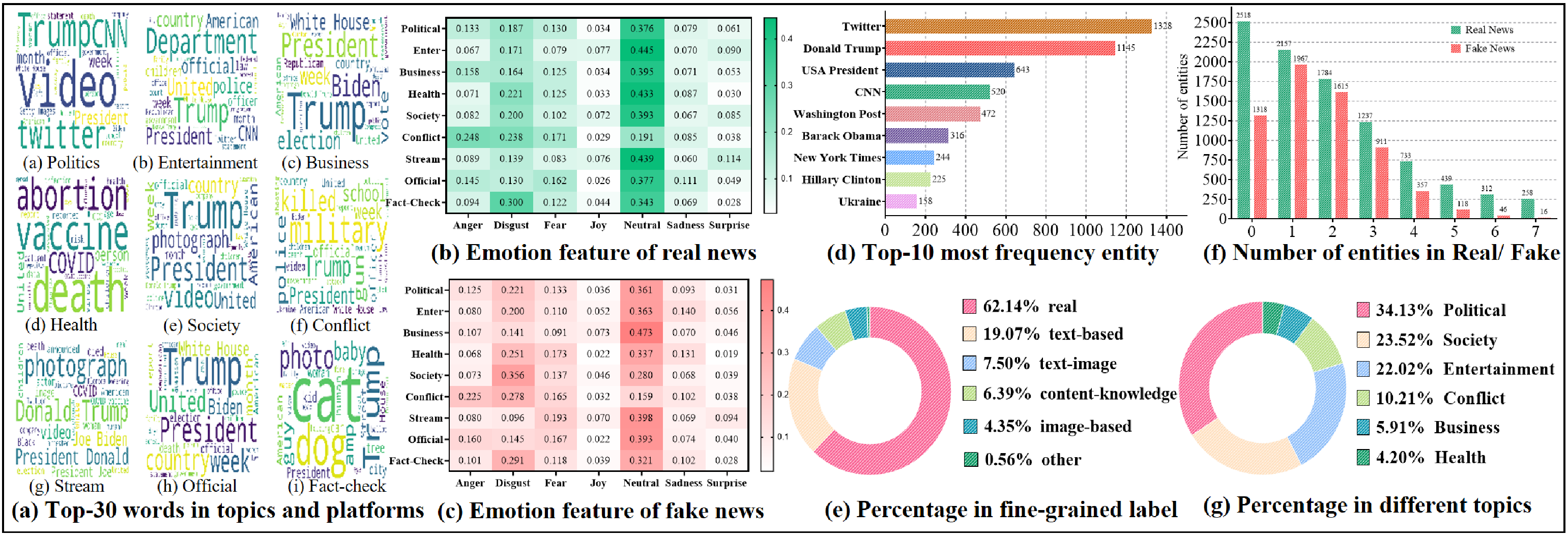

Figure 2: Basic information and statistical analysis of FineFake

3.3 Fine-grained Human Annotation

Diverging from traditional binary categorization schemes, FineFake introduces a classification framework in which each news instance is assigned to one of six categories: real, text-based fake, image-based fake, text-image inconsistency, content-knowledge inconsistency, and others. The fake categories are determined by the underlying reasons that make the news false, thereby providing a more nuanced understanding of the deceptive nature of the content.

The text-based fake and image-based fake categories denote instances whose falsity can primarily be discerned from either the textual content or the accompanying images, respectively. Text-image inconsistency covers fake news identified through evident disparities and contradictions between the text and the associated images. Content-knowledge inconsistency encompasses cases where the news content, including both text and images, contradicts externally retrieved knowledge. Lastly, the “others” category covers instances that do not fall squarely within the aforementioned categories but still exhibit deceptive characteristics. This category ensures the inclusion of diverse and anomalous cases, capturing a broad spectrum of fake news manifestations that do not fit neatly into the predefined categories.

Although news collected from fact-checking websites comes with authenticity labels assigned by professionals, the other two types of news lack reliable category labels. We therefore engaged professional annotators to label these articles. In the verification stage, five annotators assign a binary label and a fine-grained label to each sample. Because the data from official media and online streaming media were collected through the external links provided by fact-checking websites, we first use the corresponding labeled news from the fact-checking websites as a reliable reference for each instance. Annotators then assign the fine-grained and binary labels with the help of this reference. To promote consistency and mitigate subjectivity, we implemented inter-annotator agreement measures: each news item is independently labeled by the five annotators, allowing us to assess labeling consistency and calculate agreement scores (e.g., Cohen’s kappa coefficient). Any discrepancies or disagreements were then resolved through discussion and consensus among the annotators, ensuring a strict and unified labeling approach.
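Cohen’s kappa is defined for two raters, so with five annotators one common option is to average it over all annotator pairs (Fleiss’ kappa is the usual dedicated multi-rater alternative). The sketch below takes that pairwise-average route, which is an assumption about how the score could be computed, and flags non-unanimous items for the discussion step; the toy labels are hypothetical.

```python
from collections import Counter
from itertools import combinations


def cohen_kappa(a, b):
    """Cohen's kappa between two annotators' label sequences of equal length."""
    n = len(a)
    p_o = sum(x == y for x, y in zip(a, b)) / n          # observed agreement
    ca, cb = Counter(a), Counter(b)
    p_e = sum(ca[k] * cb[k] for k in ca) / (n * n)        # agreement expected by chance
    return 1.0 if p_e == 1 else (p_o - p_e) / (1 - p_e)


def mean_pairwise_kappa(annotations):
    """Average Cohen's kappa over all pairs of annotators (one list per annotator)."""
    pairs = list(combinations(annotations, 2))
    return sum(cohen_kappa(a, b) for a, b in pairs) / len(pairs)


def items_to_discuss(annotations):
    """Indices of items on which the annotators were not unanimous."""
    return [i for i, votes in enumerate(zip(*annotations)) if len(set(votes)) > 1]


# Five annotators, four items (toy labels).
ann = [
    ["real", "text_fake", "image_fake", "real"],
    ["real", "text_fake", "image_fake", "real"],
    ["real", "text_fake", "text_image_inc", "real"],
    ["real", "text_fake", "image_fake", "real"],
    ["real", "text_fake", "image_fake", "real"],
]
print(items_to_discuss(ann))               # [2]
print(round(mean_pairwise_kappa(ann), 3))  # 0.855
```

A single dissenting vote on one item pulls the pairwise kappa for that annotator down to 7/11, which is why the average sits well below 1 even though 19 of 20 votes agree.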

Through this refined categorization scheme, FineFake facilitates a more nuanced examination of the reasons underlying the falseness of news, empowering researchers to develop sophisticated detection methodologies that account for distinct modalities, inconsistencies, and the intersection between textual and visual elements.

3.4 Downstream Fake News Detection Tasks

Based on the constructed FineFake dataset, we propose three downstream tasks to evaluate the performance of state-of-the-art (SOTA) fake news detection models under various scenarios.

The primary objective of fake news detection models is to accurately classify news articles as either fake or true. We therefore adopt conventional binary classification as the first evaluation task. To investigate the efficacy of external knowledge in enhancing fake news detection, we conduct two variants of binary classification: one without external knowledge and one with knowledge augmentation. The loss function is defined as follows: \[\label{equation1} \mathcal{L}(y,\hat{y}) = -y \log(\hat{y})- (1-y) \log(1-\hat{y}),\tag{1}\] in which \(y \in \{0, 1\}\) denotes the ground-truth label and \(\hat{y}\) denotes the model’s predicted probability that \(y = 1\).
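Eq. (1) is a per-sample loss. The minimal NumPy sketch below averages it over a batch, which is a common convention rather than something the text specifies, and clips probabilities with a small `eps` (also an assumption) so the logarithm never receives 0.

```python
import numpy as np


def binary_cross_entropy(y, y_hat, eps=1e-12):
    """Eq. (1) averaged over a batch.

    y: ground-truth labels in {0, 1}; y_hat: predicted probabilities of y = 1.
    Probabilities are clipped to [eps, 1 - eps] so log() never sees 0.
    """
    y = np.asarray(y, dtype=float)
    y_hat = np.clip(np.asarray(y_hat, dtype=float), eps, 1.0 - eps)
    per_sample = -y * np.log(y_hat) - (1.0 - y) * np.log(1.0 - y_hat)
    return float(per_sample.mean())


# One positive predicted at 0.9 and one negative predicted at 0.2:
# (-ln 0.9 - ln 0.8) / 2 ≈ 0.164
print(round(binary_cross_entropy([1, 0], [0.9, 0.2]), 3))
```

Only one of the two terms in Eq. (1) is active for each sample, so the loss penalizes confident predictions on the wrong side of 0.5 most heavily.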

Currently, both fake news detection models and datasets predominantly concentrate on predicting the authenticity of news articles, often overlooking the reasons behind fake news and the interpretability of the results. To address this limitation, we propose a fine-grained classification task that leverages the fine-grained annotations in our dataset. This task extends traditional true/fake classification into a six-class problem: real plus five fine-grained fake types, namely text-based fake, image-based fake, text-image inconsistency, content-knowledge inconsistency, and others. During training, we use the cross-entropy loss:

\[\label{equation2} \mathcal{L}(y,\hat{y}) = -\sum_{i=1}^{N} y_i \log(\hat{y}_i),\tag{2}\]

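where \(N = 6\) denotes the number of label categories, \(y_i\) is the \(i\)-th entry of the one-hot ground-truth label, and \(\hat{y}_i\) is the predicted probability of class \(i\).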
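Eq. (2) with \(N = 6\) can be sketched in NumPy as below. The class order, the softmax over raw logits, and batch averaging are illustrative assumptions; the point is that only the log-probability of the true class contributes, because \(y_i\) is one-hot.

```python
import numpy as np

# Six FineFake categories; this index order is an illustrative choice.
CLASSES = [
    "real", "text-based fake", "image-based fake",
    "text-image inconsistency", "content-knowledge inconsistency", "others",
]


def softmax(logits):
    """Row-wise softmax, shifted by the row maximum for numerical stability."""
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def cross_entropy(labels, logits, eps=1e-12):
    """Eq. (2) averaged over a batch.

    labels: integer class indices, shape (B,); logits: shape (B, N) with N = 6.
    """
    probs = softmax(np.asarray(logits, dtype=float))
    one_hot = np.eye(len(CLASSES))[np.asarray(labels)]     # y_i in Eq. (2)
    return float(-(one_hot * np.log(probs + eps)).sum(axis=-1).mean())


# An uninformative model (all logits equal) pays ln 6 ≈ 1.792 per sample.
print(round(cross_entropy([0, 4], np.zeros((2, 6))), 3))
```

With \(N = 2\) and a sigmoid output, the same expression reduces to the binary loss in Eq. (1).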

Most existing fake news detection models are primarily trained within a specific domain, often overlooking the necessity to evaluate their effectiveness in accurately detecting fake news across multiple domains. However, considering the substantial presence of news spanning various domains on the internet, it becomes imperative for a model to possess effective multi-domain adaptation capabilities.

To assess the multi-domain adaptation capacity of existing models, we further design three unsupervised domain adaptation tasks: topic adaptation, platform adaptation, and dual domain adaptation. Topic adaptation measures a model’s ability to overcome the data distribution shift \(P(x_s) \ne P(x_t)\) between different topics from the same platform: models are trained on samples from four topics and tested on the remaining two topics from the same platform. Platform adaptation, by contrast, tests a model’s ability to handle the label shift problem \(P(y_s) \ne P(y_t)\): models are trained on samples from one platform and tested on another platform with the same topic. The most challenging task, dual domain adaptation, requires models to adapt across topics and platforms simultaneously. It demands generalization abilities that let the model perform well on data from previously unseen domains with a deviating label distribution.
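The three adaptation settings differ only in how source and target pools are carved out of the data. The sketch below assumes each record carries `topic` and `platform` fields (hypothetical names); the exact dual-adaptation protocol is not spelled out in the text, so pairing seen topics on one platform with unseen topics on another is an assumption. In the unsupervised setting, target-domain labels would be used only for evaluation.

```python
from itertools import product


def topic_adaptation(records, platform, target_topics):
    """Same platform; train on the other topics, test on `target_topics`."""
    pool = [r for r in records if r["platform"] == platform]
    source = [r for r in pool if r["topic"] not in target_topics]
    target = [r for r in pool if r["topic"] in target_topics]
    return source, target


def platform_adaptation(records, topic, source_platform, target_platform):
    """Same topic; train on one platform, test on another."""
    source = [r for r in records if r["topic"] == topic and r["platform"] == source_platform]
    target = [r for r in records if r["topic"] == topic and r["platform"] == target_platform]
    return source, target


def dual_adaptation(records, source_platform, target_platform, target_topics):
    """Both axes shift: seen topics on one platform -> unseen topics on another."""
    source = [r for r in records
              if r["platform"] == source_platform and r["topic"] not in target_topics]
    target = [r for r in records
              if r["platform"] == target_platform and r["topic"] in target_topics]
    return source, target


# Toy corpus: one record per (topic, platform) pair.
TOPICS = ["Politics", "Entertainment", "Business", "Health", "Society", "Conflict"]
PLATFORMS = ["Reddit", "CNN", "Snopes"]
records = [{"topic": t, "platform": p} for t, p in product(TOPICS, PLATFORMS)]

src, tgt = topic_adaptation(records, "Reddit", {"Health", "Conflict"})
print(len(src), len(tgt))  # 4 2
```

Keeping the splits as pure filters makes it easy to check that no target-domain record leaks into the source pool.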

4 Comprehensive Analysis of FineFake

Table 3: Statistics of FineFake per topic and platform: numbers of real and fake samples, average text length (words), and average number of extracted entities per news item.

| Topic/Platform | Total | Real | Fake | Avg. Words | Avg. Entities |
|---|---|---|---|---|---|
| Politics | 5,727 | 3,722 | 2,005 | 290.60 | 3.00 |
| Entertain. | 3,699 | 2,514 | 1,185 | 155.58 | 2.33 |
| Business | 1,003 | 527 | 476 | 308.10 | 3.01 |
| Health | 710 | 438 | 272 | 320.53 | 3.01 |
| Society | 3,939 | 2,236 | 1,703 | 133.94 | 1.95 |
| Conflict | 1,718 | 979 | 739 | 257.57 | 2.62 |
| Stream | 5,000 | 3,895 | 1,105 | 13.75 | 1.25 |
| Official | 4,353 | 4,138 | 215 | 813.02 | 5.57 |
| Fact-Check | 7,556 | 2,474 | 5,082 | 19.40 | 1.73 |
| All | 16,909 | 10,507 | 6,402 | 222.03 | 2.58 |

In this section, we conduct an extensive analysis of the structure and inherent characteristics of the FineFake dataset. Table 3 presents detailed statistics of FineFake, including the number of true and fake samples in each topic/platform, the average text length, and the average number of extracted entities per news item. These statistics show that the dataset is reasonably balanced between real and fake samples overall (10,507 vs. 6,402), ensuring a robust representation of both classes. Furthermore, the clear differences in text length across the three platform types (stream media, official news, and fact-checking websites) reflect the diversity of the data. The imbalance between real and fake samples within each platform type is also pronounced. Official news sources generally have higher credibility and thus a higher proportion of true news, whereas fact-checking websites, which focus on debunking fake news events, have a higher proportion of fake news. This result not only aligns with real-world intuition but also provides a solid data foundation for studying cross-platform label shift in fake news detection. Additionally, Table 3 shows a substantial amount of annotated entity knowledge, affirming the richness of contextual information within the dataset.
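The cross-platform label shift can be quantified directly from the real/fake counts in Table 3 by comparing the empirical fake-news prior \(P(y = \text{fake})\) of each platform type. A minimal sketch; using the absolute difference of priors (the total-variation distance for binary labels) as the shift measure is an illustrative choice.

```python
# Real/fake counts per platform type, copied from Table 3.
COUNTS = {
    "Stream": (3895, 1105),
    "Official": (4138, 215),
    "Fact-Check": (2474, 5082),
}


def fake_prior(real, fake):
    """Empirical P(y = fake) for one platform type."""
    return fake / (real + fake)


priors = {name: fake_prior(*rf) for name, rf in COUNTS.items()}
for name, p in priors.items():
    print(f"{name}: P(fake) = {p:.3f}")
# Stream: 0.221, Official: 0.049, Fact-Check: 0.673

# For binary labels, the total-variation distance between two label
# distributions is simply |p_s - p_t|.
print(f"Official -> Fact-Check shift: {abs(priors['Official'] - priors['Fact-Check']):.3f}")  # 0.623
```

A model trained where fakes are rare (official news) and evaluated where they dominate (fact-checking sites) faces a prior that moves by more than 0.6, which is the \(P(y_s) \ne P(y_t)\) setting targeted by platform adaptation.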

Building on this exploration, Figure 2 (a) presents the top-30 words in each of the six topics and three platforms. High-frequency vocabulary clearly differs across domains, forming distinct patterns and thematic clusters. These domain-specific linguistic variations underline the importance of constructing a multi-domain fake news dataset. To examine how emotions differ between fake and real news across domains, we further analyze emotion tendencies. Figures 2 (b) and (c) are heatmaps of the average emotion tendencies of the nine domains, computed with Emotion DistilRoBERTa [43]. The emotion distributions differ noticeably across domains, further demonstrating the value of the FineFake dataset. Emotion tendencies also differ between real and fake news within the same domain: for example, fake news in the “society” topic shows a more prominent “disgust” emotion than real news. In the “conflict” domain, neutral sentiment drops markedly compared with other domains and is largely replaced by anger. This finding is consistent with previous work [44], [45] showing that sentiment can help improve fake news detection.
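Each heatmap cell is a per-domain average of classifier outputs. A minimal sketch of that aggregation, assuming the emotion model returns one probability per class for each article and that its label set is the seven classes of the publicly available Emotion English DistilRoBERTa-base model (anger, disgust, fear, joy, neutral, sadness, surprise). Running the model itself is omitted, the function name is hypothetical, and the records below are toy values rather than FineFake data:

```python
from collections import defaultdict

# Assumed label set: the seven classes of Emotion English DistilRoBERTa-base.
EMOTIONS = ("anger", "disgust", "fear", "joy", "neutral", "sadness", "surprise")


def average_emotions(records):
    """Average per-article emotion probabilities for each (domain, label) cell.

    records: iterable of (domain, label, scores), where scores maps an
    emotion name to its predicted probability (missing emotions count as 0).
    """
    sums = defaultdict(lambda: [0.0] * len(EMOTIONS))
    counts = defaultdict(int)
    for domain, label, scores in records:
        key = (domain, label)
        for i, emotion in enumerate(EMOTIONS):
            sums[key][i] += scores.get(emotion, 0.0)
        counts[key] += 1
    return {
        key: {emotion: total / counts[key] for emotion, total in zip(EMOTIONS, vec)}
        for key, vec in sums.items()
    }


# Toy records for illustration only.
toy = [
    ("society", "fake", {"disgust": 0.6, "neutral": 0.4}),
    ("society", "fake", {"disgust": 0.2, "neutral": 0.8}),
    ("society", "real", {"disgust": 0.1, "neutral": 0.9}),
]
heatmap = average_emotions(toy)
print(heatmap[("society", "fake")]["disgust"], heatmap[("society", "real")]["disgust"])
```

Rows of the heatmap then correspond to (domain, label) keys and columns to the entries of `EMOTIONS`.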

To examine the presence of external knowledge, we analyze the distribution of the number of external knowledge entities per news item, as depicted in Figure 2 (f). Approximately 85% of the news articles contain at least one external knowledge entity, and real news articles include such entities more often than fake ones. Figure 2 (d) shows the top-10 most frequently extracted entities; the top three are “Twitter”, “Donald Trump” and “USA President”. Figure 2 (e) gives the proportions of data under each fine-grained label. These labels allow FineFake to capture the underlying causes of fake news, providing a reliable benchmark for fine-grained analysis in future work. Moreover, the proportions of “text-image inconsistency” and “content-knowledge inconsistency” within the fake news category highlight the importance of multimodal information and external knowledge. Figure 2 (g) shows the proportion of data from each topic domain relative to the whole dataset, highlighting the multi-domain nature of our study.
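The coverage figure of roughly 85% is the share of articles with at least one linked entity. A minimal sketch of that statistic, split by label as in Figure 2 (f); the `(label, number_of_entities)` input format, the function name and the toy samples are assumptions for illustration:

```python
def entity_coverage(samples):
    """Share of news items with at least one linked entity, per label and overall.

    samples: iterable of (label, number_of_entities) pairs.
    """
    totals, covered = {}, {}
    for label, n_entities in samples:
        for key in (label, "all"):
            totals[key] = totals.get(key, 0) + 1
            covered[key] = covered.get(key, 0) + (1 if n_entities > 0 else 0)
    return {key: covered[key] / totals[key] for key in totals}


# Toy samples, not FineFake statistics.
toy = [("real", 3), ("real", 0), ("fake", 1), ("fake", 0), ("fake", 0)]
print(entity_coverage(toy))
```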

5 KEAN: The Proposed Method

5.1 Architecture

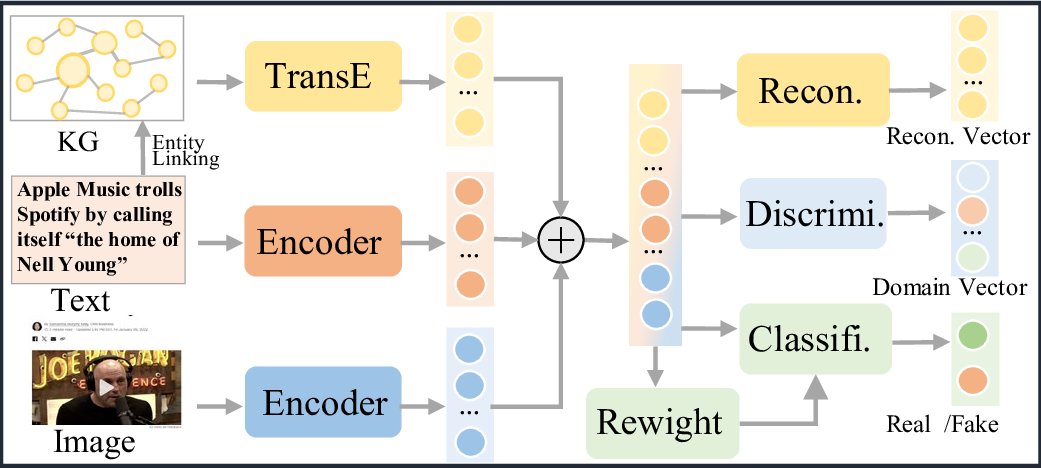

Figure 3: Overview of the KEAN model.

To address covariate shift and label shift simultaneously, we propose a knowledge-enhanced domain adaptation network, dubbed KEAN. As Figure 3 illustrates, KEAN builds on the architecture of Domain Adversarial Neural Networks (DANN) [46]. Since knowledge graphs contain rich commonsense knowledge across domains [47]–[49], we use the extracted entities to construct a knowledge sub-graph, which helps mitigate covariate shift. Furthermore, inspired by previous work [50]–[52], we adopt a re-weighting strategy to handle label shift. Specifically, we estimate the target label marginal to compute per-label weights and use them to re-weight the classifier, adjusting the influence of each label on the classifier during training.

5.2 Multimodal Encoder

We define an instance \(I = \{T,V,E_T^n\}\) as a tuple of three kinds of content: the textual content \(T\) and the visual content \(V\) of the news, and a set of textual entities \(E_T^n\), where \(n\) is the number of entities. We denote the constructed knowledge graph as \(KG\).

Considering both the textual content \(T\) and the visual content \(V\) of the news, we use a pre-trained CLIP [53] as the encoder. Owing to its robust multimodal representation capabilities [54], CLIP maps both sentences and images into embedding vectors, which are then projected by MLPs: \[\begin{align} \label{equation3}h_t &= \mathrm{CLIP}_{text}(T), \quad h_t \in \mathcal{R}^{d_1} \\ h_v &= \mathrm{CLIP}_{visual}(V), \quad h_v \in \mathcal{R}^{d_2} \\ h_t^{'},h_v^{'} &= \mathrm{MLP}_t(h_t),\mathrm{MLP}_v(h_v), \quad h_t^{'},h_v^{'} \in \mathcal{R}^{d}\end{align}\tag{3}\]

In FineFake, each instance is linked to accurate and abundant external knowledge from Wikidata. We extract the one-hop neighbours of the entities \(E_T^n\) and aggregate all resulting triplets into a sub-graph of \(KG\), denoted \(KG_{sub}\). We use TransE [55] as the knowledge graph embedding method due to its simplicity and effectiveness. Given a triplet \((h,r,t)\), TransE models the relation \(r\) as a translation vector from the head entity \(h\) to the tail entity \(t\), so that \(h+r \approx t\) holds for valid triplets. After feature extraction, each node \(j \in KG_{sub}\) has a representation \(h_j\), and we average \(h_j\) over all nodes in \(KG_{sub}\) to obtain the final graph feature \(h_{kg}\).

\[\label{equation4} \begin{align} h_j &= \mathrm{TransE}(E_j), \quad E_j \in KG_{sub} \\ h_{kg} &= \frac{1}{|KG_{sub}|}\sum_{E_j \in KG_{sub}} h_j \\ h_{kg}^{'} &= \mathrm{MLP}_{kg}(h_{kg}), \quad h_{kg}^{'} \in \mathcal{R}^{d_3} \end{align}\tag{4}\] where \(|KG_{sub}|\) denotes the number of nodes in the sub-graph. Since \(h_t\), \(h_v\) and \(h_{kg}\) are produced by pretrained models, we apply fully connected layers to them to obtain the final representations \(h_t^{'}\), \(h_v^{'}\) and \(h_{kg}^{'}\). In the fusion module, we concatenate these to obtain the final feature representation \(h_{I}=[h_t^{'};h_v^{'};h_{kg}^{'}]\) of news \(I\).
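The knowledge branch and the fusion step can be sketched in plain Python. This is a minimal illustration under several assumptions: the triplets and 2-dimensional embeddings are toy values rather than Wikidata facts or trained TransE vectors, each MLP is reduced to a single fully connected layer, the CLIP features are replaced by constant toy vectors, and all function names are hypothetical. `margin_loss` shows the standard TransE margin-ranking objective; the text above does not specify how KEAN trains its TransE embeddings.

```python
import math


def one_hop_subgraph(seed_entities, triplets):
    """KG_sub: every (head, relation, tail) triplet touching a seed entity."""
    seeds = set(seed_entities)
    return [(h, r, t) for (h, r, t) in triplets if h in seeds or t in seeds]


def subgraph_nodes(sub):
    """Entities that appear as a head or tail in KG_sub, in sorted order."""
    return sorted({h for h, _, _ in sub} | {t for _, _, t in sub})


def transe_distance(h, r, t):
    """TransE energy ||h + r - t||_2, close to 0 when h + r is near t."""
    return math.sqrt(sum((a + b - c) ** 2 for a, b, c in zip(h, r, t)))


def margin_loss(pos_dist, neg_dist, margin=1.0):
    """Standard TransE margin-ranking loss for one (true, corrupted) pair."""
    return max(0.0, margin + pos_dist - neg_dist)


def mean_pool(vectors):
    """h_kg: element-wise average of the node embeddings (Eq. 4)."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def linear(x, weight, bias):
    """One fully connected layer y = Wx + b, standing in for an MLP."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


def fuse(h_t_proj, h_v_proj, h_kg_proj):
    """Fusion by concatenation: h_I = [h_t'; h_v'; h_kg']."""
    return h_t_proj + h_v_proj + h_kg_proj


# Toy triplets and 2-d embeddings chosen so that h + r = t holds exactly.
triplets = [
    ("Donald Trump", "position held", "President of the United States"),
    ("Paris", "capital of", "France"),
]
entity_emb = {"Donald Trump": [1.0, 0.0], "President of the United States": [1.0, 1.0]}
relation_emb = {"position held": [0.0, 1.0]}

sub = one_hop_subgraph(["Donald Trump"], triplets)
nodes = subgraph_nodes(sub)
h_kg = mean_pool([entity_emb[e] for e in nodes])
h_kg_proj = linear(h_kg, [[1.0, 0.0], [0.0, 2.0], [1.0, 1.0]], [0.0, 0.0, 0.0])  # d_3 = 3
h_I = fuse([0.1] * 4, [0.2] * 4, h_kg_proj)  # toy h_t', h_v' with d = 4
print(nodes, h_kg, len(h_I))
```

The fused vector has length \(2d + d_3\) (here 4 + 4 + 3 = 11), which is the input size the classifier and discriminator would expect.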


| Category | Method | Without Knowledge: Acc | Pre | Recall | F1 | Knowledge Enhanced: Acc | Pre | Recall | F1 | Fine-Grained: Acc | Pre | Recall | F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Content-based | SAFE | 0.740 | 0.751 | 0.738 | 0.744 | / | / | / | / | 0.605 | 0.481 | 0.385 | 0.428 |
| | MVAE | 0.741 | 0.738 | 0.728 | 0.731 | / | / | / | / | 0.550 | 0.448 | 0.315 | 0.307 |
| Knowledge-enhanced | CompNet | 0.780 | 0.779 | 0.767 | 0.772 | 0.791 | 0.794 | 0.779 | 0.786 | 0.656 | 0.605 | 0.483 | 0.522 |
| | KAN | 0.779 | 0.767 | 0.772 | 0.769 | 0.789 | 0.787 | 0.776 | 0.781 | 0.632 | 0.643 | 0.383 | 0.424 |
| | KDCN | 0.787 | 0.783 | 0.782 | 0.782 | 0.801 | 0.802 | 0.791 | 0.796 | 0.668 | 0.555 | 0.486 | 0.499 |
| Multi-domain | EANN | 0.785 | 0.789 | 0.774 | 0.781 | / | / | / | / | 0.644 | 0.665 | 0.431 | 0.475 |
| | MDFEND | 0.787 | 0.784 | 0.781 | 0.783 | / | / | / | / | 0.661 | 0.676 | 0.460 | 0.484 |
| | M3FEND | 0.783 | 0.793 | 0.765 | 0.770 | / | / | / | / | 0.680 | 0.656 | 0.625 | 0.634 |
| | CANMD | 0.782 | 0.768 | 0.773 | 0.770 | / | / | / | / | 0.676 | 0.637 | 0.597 | 0.602 |
| Proposed | KEAN | 0.790 | 0.791 | 0.779 | 0.785 | 0.803 | 0.806 | 0.788 | 0.797 | 0.692 | 0.685 | 0.605 | 0.638 |

5.3 Adversarial Training Scheme

To enable the model to learn domain-invariant representations and thus address covariate shift, we follow the architecture of DANN. KEAN comprises a task classifier \(C\) (with parameters \(\theta_C\)), a domain discriminator \(D_{adv}\) (with parameters \(\theta_D\)) and a decoder \(D_{recon}\) (with parameters \(\theta_R\)). Both \(C\) and \(D_{adv}\) take the fused representation \(h_{I}\) as input. The task classifier \(C\) determines the veracity of the input news \(I\), while the domain discriminator \(D_{adv}\) predicts the domain label of \(I\). The loss functions are as follows: \[\label{equation5} \begin{align} \mathcal{L}_{cls} &= E_{I_s}\Big(-\sum_{i=1}^{N} y_i \log C(h_I)\Big) \\ \mathcal{L}_{adv} &= -E_{I_s}\big(\log D_{adv}(h_{I_s})\big) - E_{I_t}\big(\log(1-D_{adv}(h_{I_t}))\big) \end{align}\tag{5}\] To further enforce domain invariance in the encoded representation \(h_{kg}^{'}\), we use a decoder \(D_{recon}\) with a reconstruction loss, as proposed in prior work [56]: \[\label{equation6} \begin{align} \mathcal{L}_{recon}(I_s,I_t) = E_{h_{kg}}(\left\| D_{recon}(h_{kg}^{'})-h_{kg}\right\|^{2}) \end{align}\tag{6}\] The final optimization of the domain-adversarial training is based on the following minimax game:

\[\label{equation7} \begin{align} \mathcal{L}_{loss} &= \mathcal{L}_{cls} + \alpha \mathcal{L}_{adv} + \beta \mathcal{L}_{recon} \\ \hat{\theta}_C,\hat{\theta}_R &= \arg\min_{\theta_C,\theta_R} \mathcal{L}_{loss}, \quad \hat{\theta}_D = \arg\max_{\theta_D} \mathcal{L}_{loss} \end{align}\tag{7}\]

where \(\alpha\) and \(\beta\) are hyper-parameters. The minimax game is realized by reversing the gradient of \(\mathcal{L}_{adv}\) during back-propagation with a gradient reversal layer [46].
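A plain-Python sketch of how the three terms in Equations 5 to 7 combine for one mini-batch, using \(\alpha = 0.8\) and \(\beta = 0.2\) as reported in Section 6.2. It assumes \(D_{adv}\) outputs the probability that a sample comes from the source domain, as implied by Equation 5, and it includes the backward rule of a standard gradient reversal layer (identity in the forward pass, gradient multiplied by \(-\lambda\) in the backward pass). The function names and the scale \(\lambda\) are assumptions; a real implementation would rely on an autograd framework rather than hand-written gradients.

```python
import math


def classification_loss(probs, labels):
    """L_cls: mean cross-entropy -log C(h_I)[y] over labeled source samples."""
    return -sum(math.log(p[y]) for p, y in zip(probs, labels)) / len(labels)


def adversarial_loss(d_source, d_target):
    """L_adv (Eq. 5): D_adv outputs P(source) for each source/target sample."""
    src = -sum(math.log(p) for p in d_source) / len(d_source)
    tgt = -sum(math.log(1.0 - p) for p in d_target) / len(d_target)
    return src + tgt


def reconstruction_loss(reconstructed, h_kg):
    """L_recon (Eq. 6) for one sample: squared L2 distance to the original h_kg."""
    return sum((a - b) ** 2 for a, b in zip(reconstructed, h_kg))


def total_loss(l_cls, l_adv, l_recon, alpha=0.8, beta=0.2):
    """L_loss (Eq. 7) with the weights reported in Section 6.2."""
    return l_cls + alpha * l_adv + beta * l_recon


def grad_reverse_backward(grad, lam=1.0):
    """Backward pass of a gradient reversal layer: flip and scale the gradient."""
    return [-lam * g for g in grad]


l_cls = classification_loss([[0.9, 0.1], [0.2, 0.8]], [0, 1])
l_adv = adversarial_loss([0.5, 0.5], [0.5, 0.5])  # a fully confused discriminator
l_recon = reconstruction_loss([1.0, 2.0], [0.0, 0.0])
print(total_loss(l_cls, l_adv, l_recon))
```

A discriminator that outputs 0.5 everywhere yields \(\mathcal{L}_{adv} = 2\ln 2\), the value reached when source and target features are indistinguishable to it.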

Re-weighting the classifier is a widely used technique for addressing label shift [50]. Following BBSE [57], we re-weight the classifier using an estimate of the label distribution in the target domain. Specifically, we seek the importance weights \(\hat{w}_t(y)\), defined as the ratio of the probability of observing label \(y\) in the target domain to that in the source domain, i.e., \(\hat{w}_t(y)=\frac{p_t(y)}{p_s(y)}\). To compute \(\hat{w}_t(y)\), we first train the classifier \(f\), then compute the confusion matrix \(C_h\) on the source domain, whose entries are the joint probabilities \(p_s(f(x)=i, y=j)\), and the probability mass function \(q_h\) of the predictions \(f(X)\) on the target domain. \(\hat{w}_t\) is then obtained from \(C_h\) and \(q_h\) as in Equation 8. Once \(\hat{w}_t(y)\) is available, we retrain the classifier with the importance-weighted loss: \[\label{sexghdvj} \begin{align} \hat{w}_t &= C_h^{-1} q_h \\ \mathcal{L}_{loss}^{'} &= \frac{1}{n} \sum_{j=1}^{n} \hat{w}_t(y_j)\, \mathcal{L}_{loss}(y_j, f(x_j)) \end{align}\tag{8}\]
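Equation 8 can be made concrete with a small pure-Python sketch of BBSE-style weight estimation. Assumptions: labels are integers 0 to k-1, the confusion matrix is ideally estimated on held-out source data, negative weight estimates are clipped to zero (a common practical safeguard that the text above does not state), and all function names are hypothetical. The synthetic data are built so that the true ratios are known: a balanced source and a target marginal of (0.2, 0.8).

```python
def solve_linear(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("confusion matrix is singular; BBSE weights undefined")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def bbse_weights(y_true_src, y_pred_src, y_pred_tgt, k):
    """Estimate w_t(y) = p_t(y) / p_s(y) as C_h^{-1} q_h (labels are 0..k-1)."""
    # C_h[i][j] = p_s(f(x) = i, y = j), estimated on (held-out) source data.
    c = [[0.0] * k for _ in range(k)]
    for y, y_hat in zip(y_true_src, y_pred_src):
        c[y_hat][y] += 1.0 / len(y_true_src)
    # q_h[i] = p_t(f(x) = i), estimated from predictions on unlabeled target data.
    q = [0.0] * k
    for y_hat in y_pred_tgt:
        q[y_hat] += 1.0 / len(y_pred_tgt)
    # Clip negative estimates to zero (practical safeguard, an assumption here).
    return [max(w, 0.0) for w in solve_linear(c, q)]


def weighted_loss(losses, labels, weights):
    """Importance-weighted loss: (1/n) * sum_j w(y_j) * loss_j."""
    return sum(weights[y] * loss for loss, y in zip(losses, labels)) / len(losses)


# Synthetic check: balanced source, true target marginal p_t(y) = (0.2, 0.8).
y_true_src = [0] * 50 + [1] * 50
y_pred_src = [0] * 45 + [1] * 5 + [0] * 10 + [1] * 40
y_pred_tgt = [0] * 34 + [1] * 66
w = bbse_weights(y_true_src, y_pred_src, y_pred_tgt, k=2)
print([round(x, 3) for x in w])  # -> [0.4, 1.6]
```

In a KEAN-style pipeline, the first-stage classifier would supply `y_pred_src` and `y_pred_tgt`, and `weighted_loss` corresponds to \(\mathcal{L}_{loss}^{'}\) in Equation 8.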

6 Experiments

6.1 Baseline Models

Following previous work [1], we select the following baselines and divide them into three categories: content-based, knowledge-enhanced and multi-domain methods.

MVAE [13] comprises three components: an encoder that learns a shared representation of the features, a decoder that reconstructs this representation, and a detector that classifies the veracity of posts. SAFE [33] measures the relevance between textual and visual information with a modified cosine similarity and uses this cross-modal similarity to detect fake news.

CompNet [20] constructs a directed heterogeneous document graph to exploit a knowledge base. KAN [18] incorporates semantic-level and knowledge-level representations of news to improve fake news detection. KDCN [36] captures inconsistent semantics at two levels within one unified framework to detect fake news.

EANN [39] first used a discriminator to derive event-invariant features for multi-domain fake news detection. MDFEND [31] introduces a multi-domain fake news detection model that uses a domain gate to aggregate multiple representations extracted by a mixture of experts. M\(^{3}\)FEND [38] proposes a memory-guided multi-view framework to address the problems of domain shift and incomplete domain labeling. CANMD [40] proposes a contrastive adaptation network to solve the label shift problem in early misinformation detection.

6.2 Implementation Details

All experiments are conducted on the proposed FineFake dataset. In the classification experiments, instances are split 6:2:2 into training, validation and test sets. In the domain adaptation experiments, source-domain data are split 9:1 into training and validation sets, while all target-domain data are used for testing. Experiments run on 4 NVIDIA RTX 3090 GPUs, each with 24 GB of memory. We set \(\alpha\) to 0.8 and \(\beta\) to 0.2 in the loss function. We use the AdamW optimizer [58] with a weight decay of 5e-4, a batch size of 32 and an initial learning rate of 1e-3, which is decayed gradually after each epoch. To eliminate the effect of random variation, we fix the random seed throughout the experiments. The hyper-parameters of all baseline models are carefully tuned on the validation sets.
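A minimal sketch of the data splits and the reported hyper-parameters. The seed value, the helper name and the dictionary layout are assumptions (the text only says that the seed is fixed), and the per-epoch learning-rate decay is left out because its schedule is not specified. The 5,000-sample source example uses the size of the Stream platform from Table 3.

```python
import random

# Hyper-parameters reported in Section 6.2.
CONFIG = {
    "alpha": 0.8,
    "beta": 0.2,
    "optimizer": "AdamW",
    "weight_decay": 5e-4,
    "batch_size": 32,
    "learning_rate": 1e-3,
}


def split_indices(n, ratios, seed=42):
    """Shuffle range(n) with a fixed seed and cut it by `ratios`.

    The last split receives the remainder, so every index is used exactly once.
    """
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    splits, start = [], 0
    for ratio in ratios[:-1]:
        end = start + int(n * ratio)
        splits.append(indices[start:end])
        start = end
    splits.append(indices[start:])
    return splits


# Classification: 6:2:2 train/validation/test over all 16,909 instances.
train, val, test = split_indices(16909, (0.6, 0.2, 0.2))
# Domain adaptation: 9:1 train/validation over the source domain only;
# the whole target domain is kept for testing.
src_train, src_val = split_indices(5000, (0.9, 0.1))
print(len(train), len(val), len(test), len(src_train), len(src_val))
```

Using a dedicated `random.Random(seed)` instance keeps the split reproducible without touching the global random state used elsewhere in training.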


| Source | Method | Topic DA Enter. Acc | Topic DA Enter. F1 | Topic DA Conflict Acc | Topic DA Conflict F1 | Platform DA Stream Acc | Platform DA Stream F1 | Platform DA Official Acc | Platform DA Official F1 | Platform DA Fact-Check Acc | Platform DA Fact-Check F1 | Dual DA Stream Acc | Dual DA Stream F1 | Dual DA Official Acc | Dual DA Official F1 | Dual DA Fact-Check Acc | Dual DA Fact-Check F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Stream | EANN | 0.918 | 0.915 | 0.714 | 0.678 | / | / | 0.763 | 0.731 | 0.621 | 0.607 | / | / | 0.825 | 0.794 | 0.590 | 0.572 |
| | MDFEND | 0.920 | 0.919 | 0.697 | 0.695 | / | / | 0.782 | 0.727 | 0.615 | 0.609 | / | / | 0.802 | 0.789 | 0.697 | 0.681 |
| | M3FEND | 0.912 | 0.910 | 0.649 | 0.655 | / | / | 0.763 | 0.763 | 0.631 | 0.604 | / | / | 0.840 | 0.804 | 0.706 | 0.671 |
| | CANMD | 0.927 | 0.923 | 0.697 | 0.694 | / | / | 0.802 | 0.810 | 0.549 | 0.492 | / | / | 0.791 | 0.882 | 0.653 | 0.434 |
| | KEAN | 0.922 | 0.917 | 0.716 | 0.719 | / | / | 0.776 | 0.797 | 0.634 | 0.621 | / | / | 0.827 | 0.840 | 0.710 | 0.696 |
| Official | EANN | 0.945 | 0.923 | 0.838 | 0.804 | 0.412 | 0.427 | / | / | 0.401 | 0.379 | 0.336 | 0.232 | / | / | 0.394 | 0.345 |
| | MDFEND | 0.948 | 0.927 | 0.847 | 0.783 | 0.679 | 0.686 | / | / | 0.477 | 0.479 | 0.349 | 0.378 | / | / | 0.334 | 0.169 |
| | M3FEND | 0.947 | 0.921 | 0.836 | 0.798 | 0.669 | 0.537 | / | / | 0.411 | 0.383 | 0.331 | 0.164 | / | / | 0.334 | 0.167 |
| | CANMD | 0.947 | 0.921 | 0.823 | 0.782 | 0.487 | 0.425 | / | / | 0.351 | 0.495 | 0.331 | 0.497 | / | / | 0.334 | 0.473 |
| | KEAN | 0.947 | 0.968 | 0.849 | 0.828 | 0.690 | 0.654 | / | / | 0.493 | 0.606 | 0.402 | 0.480 | / | / | 0.411 | 0.495 |
| Fact-Check | EANN | 0.653 | 0.631 | 0.644 | 0.610 | 0.721 | 0.723 | 0.774 | 0.718 | / | / | 0.686 | 0.695 | 0.711 | 0.714 | / | / |
| | MDFEND | 0.661 | 0.647 | 0.634 | 0.628 | 0.703 | 0.710 | 0.784 | 0.715 | / | / | 0.685 | 0.671 | 0.737 | 0.711 | / | / |
| | M3FEND | 0.632 | 0.624 | 0.615 | 0.602 | 0.721 | 0.679 | 0.688 | 0.690 | / | / | 0.677 | 0.677 | 0.673 | 0.687 | / | / |
| | CANMD | 0.644 | 0.563 | 0.590 | 0.538 | 0.669 | 0.491 | 0.678 | 0.801 | / | / | 0.665 | 0.403 | 0.451 | 0.531 | / | / |
| | KEAN | 0.663 | 0.650 | 0.637 | 0.635 | 0.747 | 0.730 | 0.796 | 0.743 | / | / | 0.688 | 0.679 | 0.751 | 0.724 | / | / |

6.3 Experimental Results

6.3.1 Binary Classification Task

In this study, we perform binary classification under two settings: with and without knowledge enhancement. Experimental results are presented in Table 4. KDCN and KEAN show the largest accuracy gains when trained with knowledge enhancement (+1.4 and +1.3 points, respectively), indicating a strong capacity to assimilate and leverage external knowledge. Every knowledge-enhanced model improves consistently on all four metrics when knowledge is added. This demonstrates the significance of incorporating high-quality external knowledge and, in turn, the value of the knowledge provided in FineFake.

6.3.2 Fine-grained Classification Task

In this task, we use the fine-grained annotations for both training and testing, and evaluate the baselines with accuracy, macro precision, macro recall and macro F1-score. Experimental results are presented in Table 4. All methods degrade markedly on fine-grained classification compared with binary classification. KEAN still achieves the best accuracy, precision and F1 (0.692, 0.685 and 0.638), although its accuracy is well below its binary accuracy of 0.790. Despite its difficulty, this task enables a more detailed categorization of fake news, thereby improving the interpretability of fake news detection.

6.3.3 Multi-domain Adaptation Task

Here we evaluate the models in the multi-domain adaptation scenario using the three domain adaptation tasks defined in Section 3.4. Table 5 presents the experimental results. Because some domains have highly imbalanced proportions of real and fake samples (e.g., Official and Stream), we report weighted F1 alongside accuracy in all experiments.
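Tables 4 and 5 rely on two ways of averaging per-class F1: the macro average (used for fine-grained classification) weights every class equally, while the weighted average (used for domain adaptation) weights each class by its number of true instances. A minimal sketch of both, with hypothetical function names and toy labels chosen to show how the two diverge under class imbalance:

```python
from collections import Counter


def per_class_f1(y_true, y_pred, labels):
    """F1 score of each class, treating 0/0 precision or recall as 0."""
    scores = {}
    for c in labels:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        total = precision + recall
        scores[c] = 2 * precision * recall / total if total else 0.0
    return scores


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = per_class_f1(y_true, y_pred, labels)
    return sum(scores.values()) / len(labels)


def weighted_f1(y_true, y_pred):
    """Per-class F1 weighted by the number of true instances of each class."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = per_class_f1(y_true, y_pred, labels)
    support = Counter(y_true)
    return sum(scores[c] * support[c] for c in labels) / len(y_true)


# Toy imbalanced domain: 8 real (1), 2 fake (0); the model predicts "real" for all.
y_true = [1] * 8 + [0] * 2
y_pred = [1] * 10
print(round(macro_f1(y_true, y_pred), 3), round(weighted_f1(y_true, y_pred), 3))  # -> 0.444 0.711
```

Under this kind of imbalance, the weighted average stays close to the majority-class score, whereas the macro average exposes the missed minority class.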

In the topic domain adaptation task, we use the entertainment and conflict topics as test data and train on the remaining four topics. The results reveal varying degrees of content difference between topics. Notably, all models perform worse on the conflict topic than on the entertainment topic, most markedly with the Stream and Official sources, indicating that the conflict topic exhibits a larger shift in data distribution. This finding aligns with our analysis of FineFake in Section 3.4. KEAN achieves the best results on most metrics in this task, demonstrating good adaptability across topics.

In the platform domain adaptation task, we use politics data for both training and testing and transfer across platforms. Politics is chosen because it is the largest of the six topics and receives significant research attention [9], [59], [60]. KEAN achieves the best results in most settings on both metrics, particularly when the source and target domains exhibit significant label shift, e.g., from the Fact-Check source domain to the Official target domain. We attribute this to our re-weighting method, which gives the model stronger generalization when facing label shift.

In the dual domain adaptation task, models are trained on conflict-topic data from the source platform and tested on politics-topic data from the target platform. All models perform considerably worse on this task, since crossing two domains at once exacerbates both covariate shift and label shift. This pronounced domain shift poses a formidable challenge to generalization. For instance, under substantial label and covariate shift between two news environments (conflict data from Official sources versus politics data from Stream media), models essentially lose the ability to distinguish real from fake news, with accuracies between 0.331 and 0.402. This highlights FineFake's role as a benchmark for future research on the generalization and practical value of fake news detectors. Nevertheless, KEAN still achieves the best results in most settings, further confirming its ability to address covariate shift and label shift simultaneously.
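The three adaptation tasks differ only in how source and target data are filtered by topic and platform. A minimal sketch of these filters, assuming each record carries `topic` and `platform` fields (hypothetical names, with lowercase topic strings and an assumed 1 = real label encoding). The optional `platform` argument of `topic_da` reflects our reading of Table 5, which reports Task 1 separately for each source platform, and is an assumption. The 9:1 training/validation split of the source data is not repeated here.

```python
from dataclasses import dataclass


@dataclass
class News:
    topic: str      # e.g. "politics", "conflict" (hypothetical field values)
    platform: str   # "Stream", "Official" or "Fact-Check"
    label: int      # 1 = real, 0 = fake (assumed encoding)


def topic_da(data, target_topic, held_out=("entertainment", "conflict"), platform=None):
    """Task 1: train on the topics not held out, test on one held-out topic."""
    pool = [x for x in data if platform is None or x.platform == platform]
    train = [x for x in pool if x.topic not in held_out]
    test = [x for x in pool if x.topic == target_topic]
    return train, test


def platform_da(data, source, target, topic="politics"):
    """Task 2: same topic (politics), different source and target platforms."""
    train = [x for x in data if x.topic == topic and x.platform == source]
    test = [x for x in data if x.topic == topic and x.platform == target]
    return train, test


def dual_da(data, source, target):
    """Task 3: conflict news from the source platform, politics news from the target."""
    train = [x for x in data if x.topic == "conflict" and x.platform == source]
    test = [x for x in data if x.topic == "politics" and x.platform == target]
    return train, test


data = [
    News("politics", "Stream", 0),
    News("conflict", "Official", 1),
    News("politics", "Official", 1),
    News("entertainment", "Stream", 0),
    News("business", "Stream", 1),
]
train, test = dual_da(data, source="Official", target="Stream")
print(len(train), len(test))
```

Keeping the filters as plain functions makes it easy to enumerate every (source, target) pair in Table 5 by looping over the three platform names.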

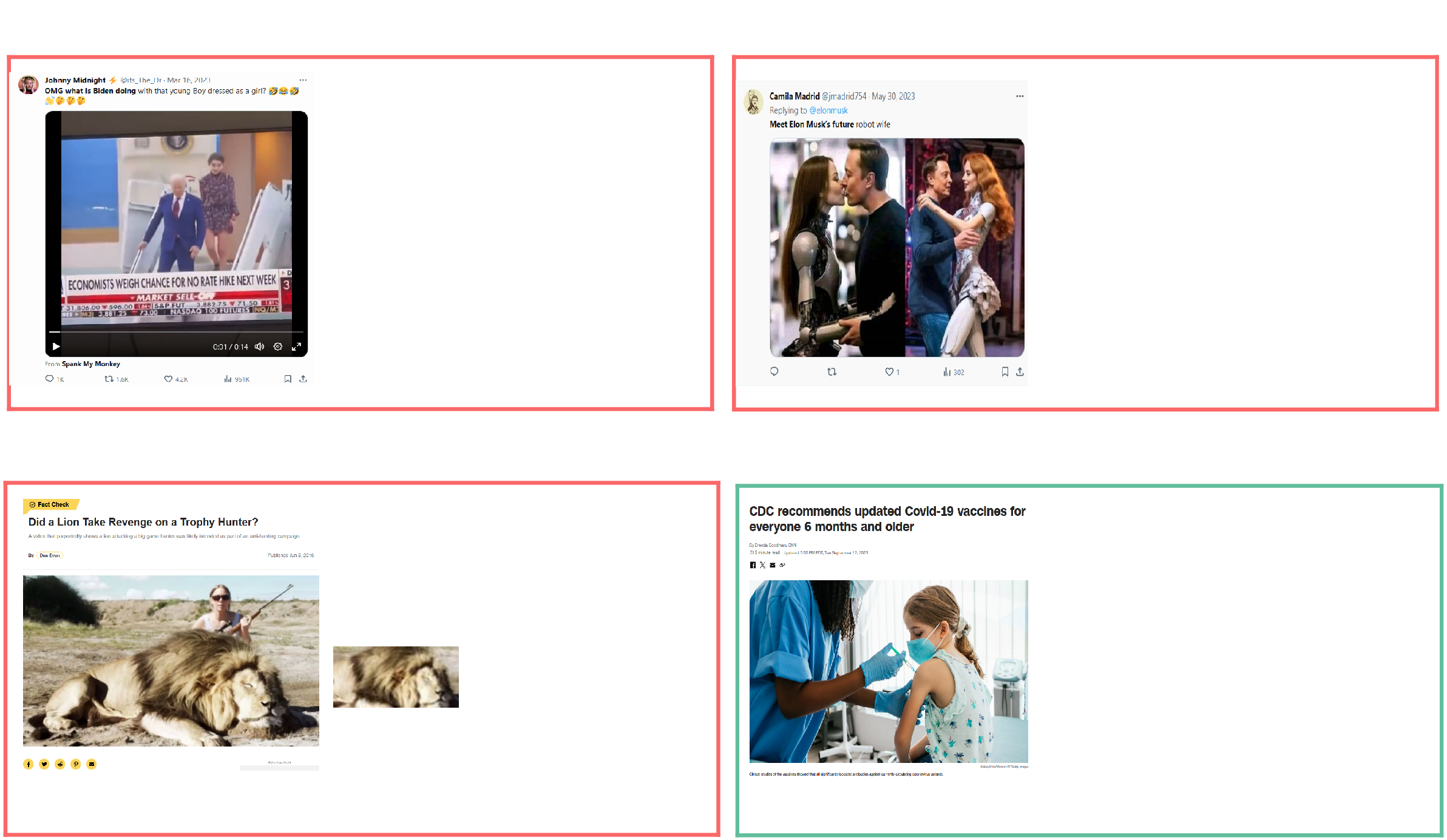

Figure 4: Case studies on our dataset FineFake.

6.4 Case Study

In this section, Figure 4 presents four cases from four different topic domains and three platforms to illustrate the fine-grained annotations. In the first case, external knowledge shows that the person depicted in the picture is Biden’s granddaughter rather than a boy. Because external knowledge is needed to refute this fake news, it is labeled as content-knowledge inconsistency. In the second case, the image is fabricated, so the news is labeled as image-based fake. In the third case, the text claims that the lion is taking revenge, whereas the lion in the picture has already collapsed, which constitutes text-image inconsistency. The fourth case is a real news article from CNN, so there is no conflict among the image, the text and the external knowledge.

7 Conclusion and Future Work

In this paper, we propose FineFake, a knowledge-enriched dataset for fine-grained multi-domain fake news detection. Each instance contains textual content, images, potential social connections, its affiliated domain and other pertinent metadata. FineFake equips each news item with reliable external common knowledge and adopts a fine-grained labeling scheme that classifies news articles into six distinct categories. We design three challenging tasks based on FineFake and conduct extensive experiments to provide reliable benchmarks for the community. Furthermore, we propose KEAN, a knowledge-enhanced domain adaptation network for fake news detection that addresses covariate shift and label shift simultaneously. In future work, we plan to extend FineFake to include video data.