Learning to Solve Job Shop Scheduling under Uncertainty

March 04, 2024

Abstract

Job-Shop Scheduling Problem (JSSP) is a combinatorial optimization problem where tasks need to be scheduled on machines in order to minimize criteria such as makespan or delay. To address more realistic scenarios, we associate a probability distribution with the duration of each task. Our objective is to generate a robust schedule, i.e. that minimizes the average makespan. This paper introduces a new approach that leverages Deep Reinforcement Learning (DRL) techniques to search for robust solutions, emphasizing JSSPs with uncertain durations. Key contributions of this research include: (1) advancements in DRL applications to JSSPs, enhancing generalization and scalability, (2) a novel method for addressing JSSPs with uncertain durations. The Wheatley approach, which integrates Graph Neural Networks (GNNs) and DRL, is made publicly available for further research and applications.

1 Introduction↩︎

Job-shop scheduling problems (JSSPs) are combinatorial optimization problems that involve assigning tasks to resources (e.g., machines) in a way that minimizes criteria such as makespan, tardiness, or total flow time. While the scheduling of production resources plays an important role in many industries, the JSSPs formulation lacks the handling of uncertainty due to its simplifying assumptions. This leads to several direct practical consequences, such as scheduling from fixed factors which can have significant impact on scheduling performance, and ignoring machine breakdowns or material shortages, leading to poor solutions when faced with real-world uncertainty.

Optimal solving of combinatorial optimization problems is NP-complete, and while there has been a lot of progress in solvers performance [1], classical approaches remain often impractical on large instances. Therefore, approximation and heuristics-based methods have been proposed [2]; but handling uncertainty within these methods remains challenging. Recent works have considered learning algorithms for such problems and report early advances with deep reinforcement learning (DRL) techniques ([3]–[5]). Because it models the world as a runnable environment, and the algorithm learns directly from it, DRL does offer a more natural way to handle uncertainty with JSSPs. As Reinforcement Learning methods are robust to noise, the uncertainty in the problem statement, which is reflected in the learner’s environment, is naturally handled by the algorithm.

We present two contributions for tackling JSSP with uncertain durations.

First, this work shows a range of improvements over the DRL and JSSPs literature, from neural network architectures to training hyper-parameters and reward definitions. These directly lead to better generalization and scalability, both to same-size problems and to larger problems.

Second, the proposed method solves JSSPs with uncertain duration, that beats optimal deterministic solutions on expected uncertainty. This is relevant to the general use-case where uncertainty cannot be known in advance and where the best deterministic schedule uses expected uncertainty on tasks duration.

Overall, this leads to a very flexible and efficient approach, capable of naturally handling duration uncertainty, with top results on existing Taillard benchmarks while setting a new benchmark reference for JSSPs with uncertain durations. The approach, code-named Wheatley, combines Graph Neural Networks (GNNs) and DRL techniques. The code is made available under an Open Source license at https://github.com/jolibrain/wheatley/.

The paper is organized as follows: related works are introduced in Section 2, then the JSSP with uncertainty is formalized as a Markov Decision Process (MDP) in Section 3. In Section 4, we detail the core technical contributions. Section 5 is dedicated to experiments on both deterministic and stochastic JSSPs. Finally, we conclude and discuss future works in Section 6.

2 Related work↩︎

This section provides an overview of techniques developed to address both deterministic and stochastic versions of the Job hop Scheduling Problem ([6]).

Deterministic JSSPs. Mathematical programming, including techniques such as Constraint Programming (CP) or Integer Linear Programming (ILP), has been favored for solving JSSPs due to its precision and ability to model complex scheduling problems. However, the time and resources required to achieve solutions can be very high for large scenarios [1].

Priority Dispatching Rules (PDRs), are heuristic-based strategies that assign priorities to jobs based on predefined criteria; they make local decisions at each step by picking the highest priority job. Common criteria used in PDRs include the Shortest Processing Time (jobs with the shortest processing time are given priority) and the Earliest Due Date (jobs due the earliest are prioritized). This simplifies the scheduling process, making PDRs particularly useful for real-time or large-scale scenarios where rapid decision-making is essential. However, while PDRs are computationally efficient, they rarely yield the optimal solution. A comprehensive evaluation can be found in [2].

Recently, there has been a surge in machine learning and data-driven approaches to solve JSSPs. Instead of relying on handcrafted heuristics, the Learning to Dispatch strategy (L2D) uses machine learning to emulate successful dispatching strategies, as described in [7]. A significant advantage of this method is its size-agnostic nature, as it uses the disjunctive graph representation of the JSSPs. Graph Neural Networks (GNNs) process these graphs to capture intricate relations between operations and their constraints. Deep Reinforcement Learning (DRL) guides the decision-making process, optimizing scheduling decisions based on the features extracted by the GNNs. In [8], the authors leverage a GNN to convert the JSSP graph into node representations. These representations assist in determining scheduling actions. Proximal Policy Optimization (PPO) is employed as a training method for the GNN-derived node embeddings and the associated policy. This method employs an event-based simulator for the JSSP and directly incorporates times into the states of the base Markov Decision Process. This specificity complicates its adaptation to uncertain scenarios. [9] also uses GNNs and PPO for addressing the flexible job-shop problem where the agent also has to choose machines for tasks; the authors add nodes for machines and use two different types of message-passing. The same problem is addressed using a bipartite graph and custom message passing in [10], with good results. The Reinforced Adaptive Staircase Curriculum Learning (RASCL) approach [11] is a Curriculum Learning method that adjusts difficulty levels during learning by dynamically focusing on challenging instances.

Robustness in JSSPs. Stochastic JSSPs (SJSSP) account for uncertainties in processing times by modeling them as random variables. Some techniques use classic solvers to generate robust solutions by anticipating potential disruptions or modeling worst-case scenarios. For instance, in [12], for a given JSSP, several processing times scenarios are sampled. The objective is therefore to generate a unique schedule that is good for all sampled scenarios. PDRs can also be used but they can lack a global view, as in the deterministic case. Several works address SJSSP through meta-heuristics, as described in [13]. Other techniques involving genetic algorithms and their hybridization are also widely used [14], [15]. [16] introduces a way to robustify solution to deterministic relaxation of the SJSSP. [17] presents a both proactive and reactive scheduling: a multi-agent architecture is responsible for the proactive robust scheduling and a repair procedure is involved for machine breakdown and arrival of rush jobs.

Some works address the dynamic variant of SJSSP, in which new jobs can arrive at any time. [18] is a review of such extensions along with corresponding proposed solving methods. Classical approaches involve complex mathematical programming models that do not scale well [19]. Online reactive recovery approaches are a possible solution if no robust solution is available [20]. More recent approaches explore the use of using DRL and GNN [21]. However, to the best of our knowledge, there are no work that use such techniques for the SJSSP.

3 JSSP with Uncertainty as a MDP↩︎

In this section, we first recall the JSSP definition. Then, we describe how to represent uncertainty and define the corresponding MDP. We finally present how to use Reinforcement Learning (RL) for solving the MDP.

3.1 Background↩︎

A JSSP is defined as a pair \((\mathcal{J},\mathcal{M})\), where \(\mathcal{J}\) is a set of jobs and \(\mathcal{M}\) is a set of machines. Each job \(\mathit{J}_{i} \in \mathcal{J}\) must go through \(\mathit{n}_{i}\) machines in \(\mathcal{M}\) in a given order \(\mathit{O}_{ij} \to ... \to \mathit{O}_{i\mathit{n}_{i}}\), where each element \(\mathit{O}_{ij} (1 \leq j \leq \mathit{n}_{i} )\) is called an operation of \(\mathit{J}_{i}\). The binary relation \(\to\) is a precedence constraint. The size of a JSSP instance is classically denoted as \(|\mathcal{J}|\times|\mathcal{M}|\). In the following, the set of all operations is denoted \(\mathcal{O}\). To be executed, each operation \(\mathit{O}_{ij} \in \mathcal{O}\) requires a unique machine \(\mathit{m}_{ij} \in \mathcal{M}\) during a processing time denoted \(\mathit{p}_{ij}\) (\(\mathit{p}_{ij} \in \mathbb{N}^+\)). Each machine can only process one job at a time, and preemption is not allowed.

A solution \(\sigma\) of a JSSP instance is a function that assigns a start date \(\mathit{S}_{ij}\) to each operation \(\mathit{O}_{ij}\) so that precedence between operations of each job are respected and there is no temporal overlap between operations that are performed on the same machine. The completion time of an operation \(\mathit{O}_{ij}\), is \(\mathit{C}_{ij} = \mathit{S}_{ij} + \mathit{p}_{ij}\). A solution \(\sigma\) is optimal if it minimizes the makespan \(\mathit{C}_{\mathit{max}}= \mathit{max}_{\mathit{O}_{ij} \in \mathcal{O}} \{\mathit{C}_{ij}\}\), i.e. the maximal completion time of operations.

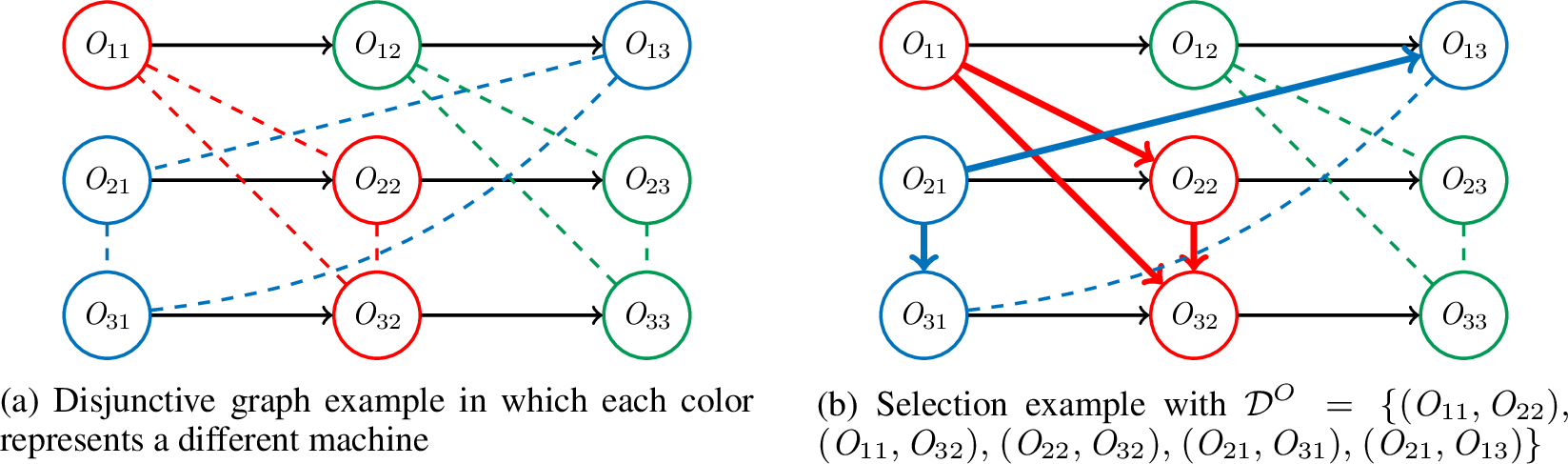

As described in [22], the disjunctive graph is defined by \(\mathcal{G}= (\mathcal{O}, \mathcal{C}, \mathcal{D})\) of a JSSP \((\mathcal{J},\mathcal{M})\) as:

\(\mathcal{O}\) is the set of vertices, i.e. there is one vertex for each operation \(o \in \mathcal{O}\);

\(\mathcal{C}\) is a set of directed arcs representing the precedence constraints between operations of each job (conjunctions);

\(\mathcal{D}\) is a set of edges (disjunctions), each of which connects a pair of operations requiring the same machine for processing.

Figure 1 shows the disjunctive graph of a JSSP with 3 jobs and 3 machines. A selection is a state of the graph in which a direction is chosen for some edges in \(\mathcal{D}\), denoted \(\mathcal{D}^O\). If an edge \((\mathit{O}_{ij}, \mathit{O}_{i'j'})\) in \(\mathcal{D}\) becomes oriented (in that order), then it represents that operation \(\mathit{O}_{ij}\) is performed before \(\mathit{O}_{i'j'}\) on their associated machine. A selection is valid if the set of oriented arcs (\(\mathcal{C}\cup \mathcal{D}^O\)) makes the graph acyclic. A solution \(\sigma\) can be defined by a valid selection in which all edges in \(\mathcal{D}\) have a direction (\(\mathcal{D}= \mathcal{D}^O\)) and in which start dates of operations are the earliest possible dates consistent with the selection precedences, as done in a classical Schedule Generation Scheme (SGS). Figure [fig:disj95with95selection] illustrates a valid selection for the toy JSSP instance of Figure 1.

Figure 1: Disjunctive graph representation

3.2 Representing uncertainty↩︎

JSSPs can be easily extended with duration uncertainty as bounds on tasks’ duration, and effect uncertainty as failure outcomes of a task. While task failures could be represented using special nodes representing completely different outcomes, this would push the boundaries outside JSSP formal capabilities. In this work, failures are handled as retries that consume an uncertain time duration a fixed maximum number of times. In the following, we focus on uncertain task duration as a generic enough scheme to capture relevant uncertainty use-cases.

In the classical JSSP definition, every operation \(\mathit{O}_{ij}\) has a deterministic processing time \(\mathit{p}_{ij}\). We extend this definition by saying that processing times are not known in advance, but that each operation \(\mathit{O}_{ij}\) has an associated probability distribution \(\mathbb{P}_{ij}\) over its possible duration values. The objective is to minimize the average makespan, that is formally defined by \(\mathit{max}_{\mathit{O}_{ij} \in \mathcal{O}} \int_{0}^{+\infty} (\mathit{S}_{ij} + \mathit{p_{ij}}) \, d\mathbb{P}_{ij}\).

3.3 Sequential Decision Making↩︎

The scheduling problem boils down to a Markov Decision Process. Inputs of the problem are the the original disjunctive graph \(\mathcal{G}= (\mathcal{O}, \mathcal{C}, \mathcal{D})\) and the probability distribution over duration values \(\mathbb{P}_{ij}\) associated with each operation \(\mathit{O}_{ij}\).

3.3.0.1 State

The state \(s_t\) at decision step \(t\) is defined by:

the current selection \(\mathcal{D}^O_t\),

the set of already scheduled operations \(\mathcal{S}_t\) at step \(t\).

The initial state \(s_0\) is the disjunctive graph representing the original JSSP instance with \(\mathcal{D}^O_0 = \emptyset\) and \(\mathcal{S}_0 = \emptyset\). The terminal state \(s_T\) is a complete solution where \(\mathcal{D}^O_T = \mathcal{D}\), i.e. all disjunctive arcs have been assigned a direction, and \(\mathcal{S}_T = \mathcal{O}\), i.e. all operations have been scheduled.

3.3.0.2 Actions

At each step \(t\), candidate actions consist in selecting an operation \(\mathit{O}_{ij}\) to put directly after the last scheduled operation on the corresponding machine. This is a simple way to ensure that cycles are never added in \(\mathcal{G}\). Intuitively, it consists in choosing an operation to do before all the ones that have not yet been scheduled, and update the current selection accordingly. Furthermore, we force that an operation is a candidate for selection only if its preceding tasks in the same job have been scheduled. As exactly one operation is scheduled at each step, the final state is reached at step \(T = \mathit{card}(\mathcal{O})\).

Candidates actions \(\mathcal{A}_t\) at step \(t\) are formally defined by \(\mathcal{A}_t = \{\mathit{O}_{ij} \in \mathcal{O}\;|\; \mathit{O}_{ij} \notin \mathcal{S}_t \textit{ and } \forall j' < j, \mathit{O}_{ij'} \in \mathcal{S}_t\}\).

3.3.0.3 Transitions

If the chosen action at step \(t\) consists in selecting the operation \(\mathit{O}_{ij}\) in \(\mathcal{A}_t\), then it leads to adding \(\mathit{O}_{ij}\) to the scheduled operations set and adding the arc \((\mathit{O}_{kl},\mathit{O}_{ij})\) to \(\mathcal{D}^O\) for each operation \(\mathit{O}_{kl}\) scheduled at step \(t\) on the same machine. Formally, this gives:

\(\mathcal{S}_{t+1} = \mathcal{S}_{t} \cup \{\mathit{O}_{ij}\}\)

\(\mathcal{D}^O_{t+1} = \mathcal{D}^O_t \cup \{(\mathit{O}_{kl}, \mathit{O}_{ij}) \in \mathcal{D}\; | \; \mathit{O}_{kl} \in \mathcal{S}_{t} \textit{ and } \mathit{m}_{kl} = \mathit{m}_{ij}\}\)

3.3.0.4 Reward/Cost

In most approaches to solve MDPs with reinforcement learning, it is preferred to use non-sparse rewards, i.e. an informative reward signal at every step. For instance the authors of [7] propose to use the difference of makespan induced by the affectation of the task, as this naturally sums to makespan at the end of the trajectory. With the presence of uncertainty, while we could compute bounds on the makespan in the same way, it is not obvious to aggregate such bounds into a uni-dimensional reward signal.

We use a different approach: we draw durations only when the schedule is complete (at time \(T\)) and give it as a cost. Start date of each operation is its earliest possible date considering conjunctive and disjunctive precedence arcs in the solution. All other rewards are null. Formally, it is defined as follows:

\(\forall t < T\), \(\mathit{r}_t = 0\);

\(\mathit{r}_T = \mathit{max}_{\mathit{O}_{ij} \in \mathcal{O}} (\mathit{S}_{ij} + \mathit{p}_{ij}^\mathit{sample})\) where \(\mathit{p}_{ij}^\mathit{sample} \sim \mathbb{P}_{ij}\).

As reinforcement learning aims at minimizing the expectation of costs sum along trajectories, this corresponds to our objective of minimizing the average makespan.

3.4 Solving the MDP with Reinforcement Learning↩︎

We use a reinforcement learning setup, where the agent selects tasks to schedule, and gets corresponding partial schedules as observation along with rewards. Using this modeling, effects of actions are deterministic (as they only add edges in the graph), all uncertainty is in the reward value.

The objective is to find a policy that minimizes the average makespan over a set of test problems that are not used during the training phase. To do so, we use a simulator that generates problems that are close to the test problems, and aim at obtaining a policy that minimizes the expectation of makespan along the problems generated by the simulator. Such a policy has to be able to generalize to test problems, i.e. give good results without further learning. As the only source of uncertainty is the durations for which parameters only are observable, we want our parametric policy to be able to adapt to these parameters.

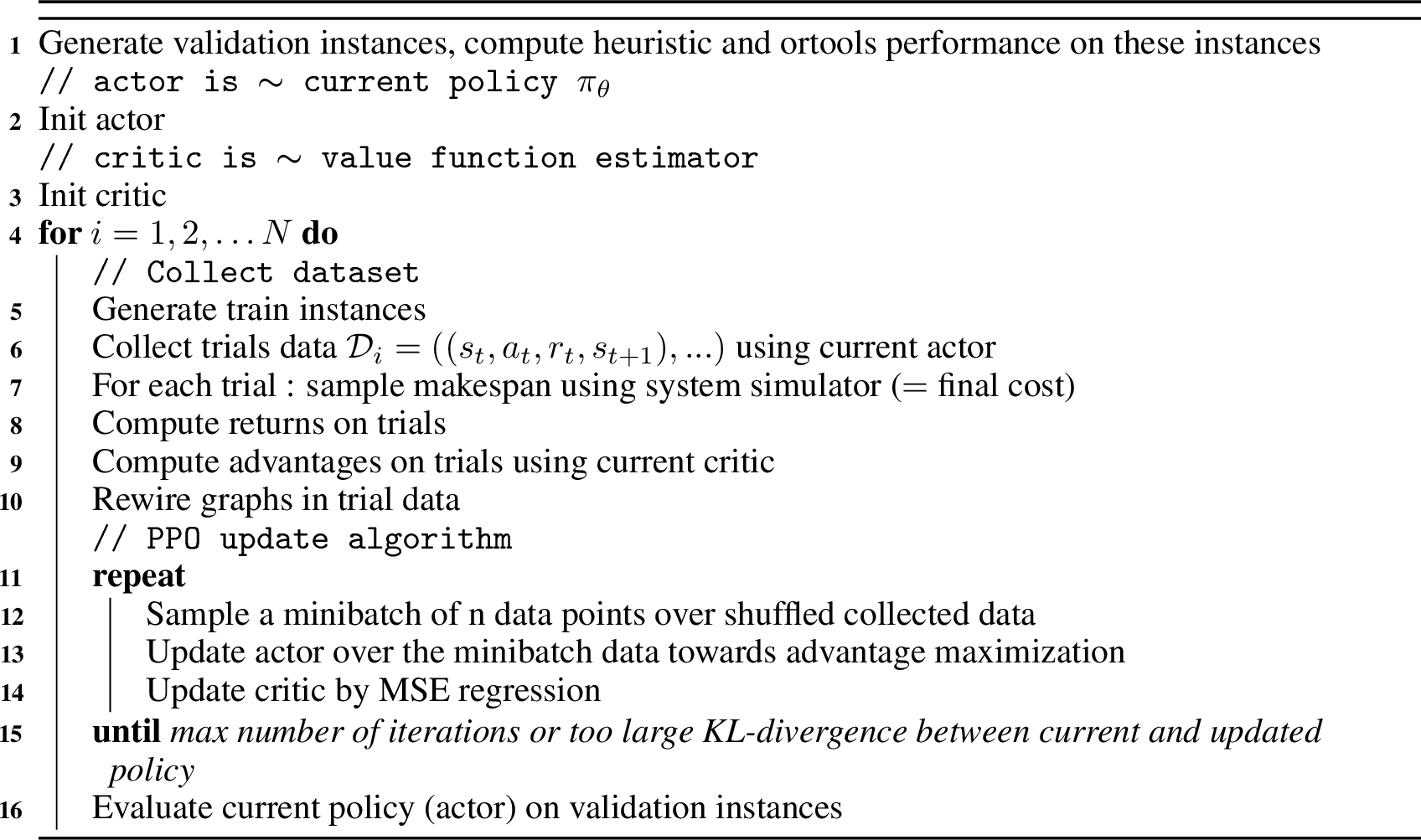

3.4.0.1 Algorithm

In order to learn a policy, we consider a parametric policy and use the Proximal Policy Optimization (PPO) algorithm [23], with action masking [24]. PPO is an on-policy actor-critic RL algorithm. Its current stochastic policy is the actor, while the critic estimates the quality of the current state. More precisely, as shown in Algorithm [algo:general95algo], the algorithm starts by randomly initializing parameters of policy (actor) and value function estimator (critic). Then, for a given number of iterations, its starts by collecting trajectory data in the form (observation, action, next observation) on train problem instances where actions are chosen using current stochastic policy. It then computes makespans corresponding to train instances and by sampling durations and applying chosen order of actions. Using this, it computes returns at every timestep, then advantages (difference of sampled returns to value estimation) using current critic. Observed graphs are then rewired (see section 4.1). The PPO update algorithm itself samples a subset of corresponding observations, actions, advantages, value prediction and returns, then updates the actor parameters (including GNN) using the gradient of the advantages, and updates the critic (including GNN) using gradient of mean square error between the critic value and the observed returns. This PPO update is repeated a small number of times or until the variation in the policy would lead to out-of-distribution critic estimations (because samples are collected using the old policy, i.e. the one before PPO updates).

We then evaluate the current policy (actor) by playing the argmax of the stochastic policy on a given set of validation problems. We repeat these steps until the policy does not seem to improve on validation instances (the \(N\) in the external for loop is an upper bound on the number of iterations, which is set to a large value and the for loop is interrupted manually).

Figure 2: General algorithm

4 GNN Implementation: Rewiring, Embedding and Addressing Uncertainty↩︎

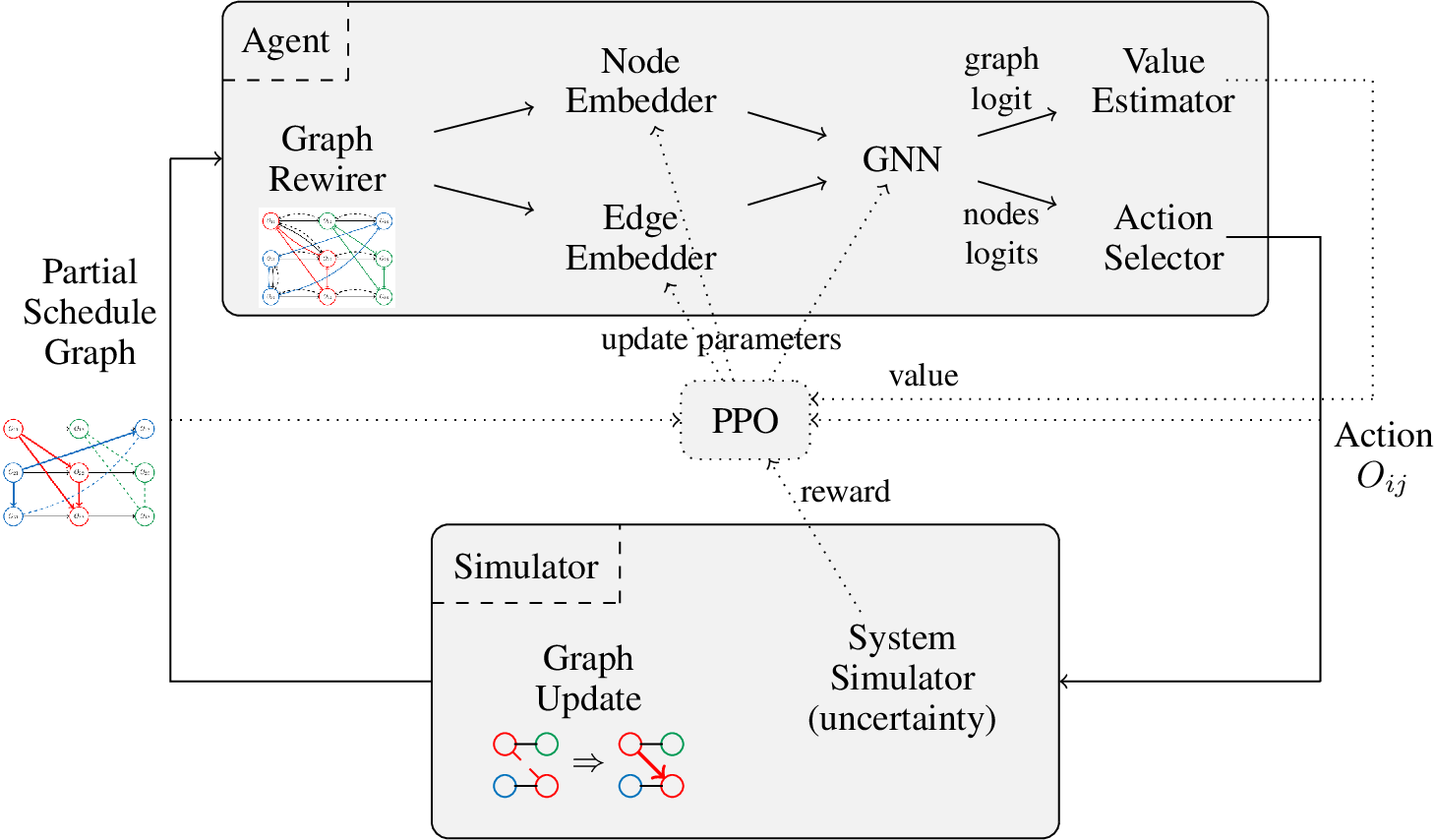

An overview of the architecture is shown in Figure 3. The agent takes as an input a partial schedule in the form of a graph, as in Figure [fig:disj95with95selection]. Several elements, described in this section, are within the actor and allow to choose one action. This action is treated by the simulator to update the schedule graph by adding arcs and simulates the uncertainty when the last state is reached. Note that PPO uses the schedule graph, the action, the reward and the value estimation in order to update the embedders and the GNN.

Figure 3: General Architecture

4.1 Graph Rewiring and Embedding↩︎

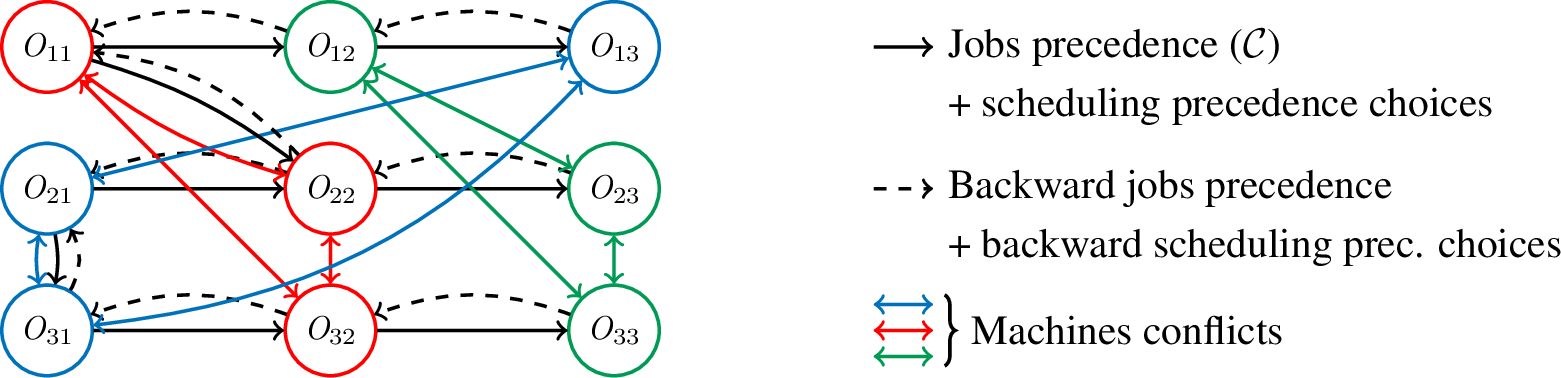

The representation above allows to model partial schedules (where some conflicts are not resolved) as disjunctive graph representation. Generally speaking, Message-Passing Graph Neural Networks (MP-GNN) use the graph structure as a computational lattice, meaning that information has to follow the graph adjacencies and only them. We thus have to make the difference between the input graph and the graph used by the MP-GNN. This is known as “graph rewiring” in the MP-GNN literature. In our case, if we use only precedencies as adjacencies, this would mean the we explicitly forbid information to go from future tasks to present choice of dispatch, which is definitely not what we want: we want the agent to choose task to dispatch based on effects on future conflicts, meaning that we want information go from future to present task.

4.1.0.1 Precedences.

In order to have a rewired graph as small as possible, we remove from \(\mathcal{D}^O\) all edges that are not necessary to obtain the complete order. For instance, we remove from figure [fig:disj95with95selection], the edge \((\mathit{O}_{11}, \mathit{O}_{32})\). Links are then added in the rewired graph in both directions for every precedence, with different types for precedence and reverse-precedence edges. This enables learned operators to differentiate between chronological and reverse-chronological links and allows the network to pass information in a forward and backward way, depending on what is found useful during learning phase.

4.1.0.2 Conflicts.

Remains the challenge of allowing message circulation between tasks sharing the same machine in the GNN. Two options are possible: 1) adding a node representing a machine with links to tasks using the machine and edges in both directions (from tasks to this machine node and in the opposite direction), or 2) directly connecting tasks that share a machine, resulting in a clique per machine in the message-passing graph. In this paper, we choose the second approach as it showed better results than the first one in preliminary experiments.

Figure 4: Rewired graph example with precedences, backward precedences and conflicts as cliques. Each type of arc on the right has its own encoding. Operations \(\mathit{O}_{11}\), \(\mathit{O}_{21}\), \(\mathit{O}_{22}\) and \(\mathit{O}_{31}\) have here been scheduled in this order.

4.1.0.3 Edge attributes.

They are used to give explicitly a different type to edges, allowing the network to learn to pass different messages for reverse precedence, precedence and conflicts links. This helps the GNN to effectively handle interactions between tasks of different jobs that share machines.

4.1.0.4 Node attributes.

We define for node \(n_{ij}\) associated with task \(O_{ij}\) the following attributes: a boolean \(A_{ij}\) indicating if the corresponding task has already been scheduled (affected); a boolean \(\mathit{Sel}_{ij}\) indicating if the node is selectable and the machine identifier \(M_{ij}\). We also give parameters of probabilistic distribution of tasks durations, and corresponding task completion time distribution parameters. Task completion times are initialized as if there were no conflicts, i.e. using only durations of task and previous one from same job. There are updated once tasks are affected, considering conflicts with previously affected tasks.

4.1.0.5 Graph Pooling.

For the GNN to give a global summary of nodes, there are two options: either a global isotropic operator on nodes like mean, maximum or sum (or any combination); or a special node that is connected to every task nodes. The latter case is equivalent to learning a custom pooling operator.

4.1.0.6 Output of the GNN.

The message-passing GNN yields a value for every node, and a global value for the graph (either from the special node or from the chosen isotropic operator). As nodes represent tasks, these values can be directly used as values for later action selection. In our implementation, we also concatenate the global value to every node value.

4.1.0.7 Ability to Deal with Different Problem Sizes.

The GNN outputs a logit for each node, and there is a one-to-one mapping between nodes and actions, whatever the number of nodes/actions. Internally, the message passing scheme collects messages from all neighbors, making the whole pipeline agnostic to the number of nodes. Learning best actions boils down to node regression, with target values being given by the reinforcement learning loop. This still needs some careful implementation with respect to data structures and batching, but the direct mapping from nodes to actions allows to deal with different problem sizes.

4.2 Handling Uncertainty↩︎

On the observation/agent side, we have durations defined with \(\mathbb{P}_{ij}\). From these durations distributions, we can compute approximate distributions of tasks completion time simply by propagating completion time parameters recursively upon the precedence graph, whenever precedences are added. The true real duration of the full schedule is computed only once the complete schedule is known based on all \(\mathbb{P}_{ij}\). It is then passed as a cost signal. As the RL algorithm naturally handles uncertainty of MDPs, it learns to evaluate partial schedule quality based on expectation of costs, which is exactly our objective.

4.3 Implementation details↩︎

4.3.0.1 Connecting to PPO.

In most generic PPO implementation, the actor (policy) consists of a feature extractor whose structure depends on the data type of the observation, followed by a MLP with a output dimension matching the number of actions. Same holds for the critic (value estimator), with the difference that the output dimension is 1. Some layers can be shared (the feature extractor and first layers of the MLPs). In our case, we do not want to use such generic structure because we have a one-to-one matching from the number of nodes of the observation to the actions. We thus always keep the number of nodes as a dimension of the data tensors.

4.3.0.2 Graph embedder

The graph embedder builds the rewired graph by adding edges as stated in section 4.1. It embeds node attributes using a learnable MLP, and edge attributes (here type of edge only) using a learnable embedding. The output dimension of embeddings is an open hyper-parameter hidden_dim, we found a size of 64 being good in our experiments.

4.3.0.3 Message-passing GNN

As a message passing GNN, we use EGATConv from the DGL library [25], which enriches GATv2 graph convolutions [26] with edges attributes. We used 4 attention heads, leading to output of size \(4 \times\) hidden_dim. This dimension is reduced to hidden_dim using learnable MLPs, before being passed to next layer (in the spirit of feed-forward networks used in transformers). This output of a layer can be summed with the input of the layer, using residual connections. For most of our experiments, we used 10 such layers.

4.3.0.4 Action selection

Action selection aims at giving action probabilities given values (logits) output from the GNN. We can either use logits output by the last layer, or use a concatenation of logits output from every layer. We furthermore concatenate the global graph logits of every layer, leading to a data size of \(((n\_layers + 1) \times \emph{hidden\_dim}) \times 2\) per node. This dimension is reduced to 1 using a learnable linear combination (minimal case of a MLP; we did not find using a full MLP to be useful). Finally, a distribution is built upon these logits by normalizing them, taking into account action masks at this point. As node numbers correspond to action numbers, we directly have action identifier when drawing a value from the distribution.

4.3.0.5 Normalization

Along all neural network components, we did not find any kind of normalization to be useful. On the opposite hand, durations are normalized in the [0,1] range.

5 Experiments↩︎

5.1 Uncertainty Modeling↩︎

The framework presented in this paper could accommodate to any kind of duration probability distribution. The main parameters of this distribution belong to the node features and must therefore been described formally.

In order to deal with duration uncertainties, for each operation \(O_{ij}\), we use a triangular distribution with 3 parameters \(min^p_{ij}\), \(max^p_{ij}\), \(mode^p_{ij}\), as this is often used in the context of manufacturing processes.

We also have in the simulator the real processing time of a task, denoted \(real^p_{ij}\), which is observed by the agent in the final state. With such definition, tasks completion times can respectively be represented with their min, max and mode times as follows: \(min^C_{ij} = S_{ij} + min^p_{ij}\), \(max^C_{ij} = S_{ij} + max^p_{ij}\), \(mode^C_{ij} = S_{ij} + mode^p_{ij}\). Task completion time parameters are updated when adding precedences to the graph (min, max, mode start times are computed based on precedence relations). We give both duration distribution parameters and task completion times distribution parameters as node attributes. The real task completion times \(real^C_{ij} = S_{ij} + real^p_{ij}\) can be computed only during the simulation of a complete schedule, giving the real makespan \(M_{real} = max_{ij}(real^C_{ij}\)), used as a cost given to the learning agent.

5.2 Benchmarks and Baselines↩︎

5.2.1 Benchmarks↩︎

Our approach has been tested on instances generated using Taillard rules [27]: durations are uniformly drawn in [1,99], and machine affectation is randomly chosen. For stochastic instances, this duration corresponds to \(mode^p_{ij}\). Minimum and maximum value are uniformly drawn in \([0.95 \times mode^p_{ij}, 1.1 \times mode^p_{ij}]\), meaning that tasks can take at most 5% less time and at most 10% more time than mode value.

5.2.2 Baselines↩︎

In order to evaluate the performance of Wheatley, we compare several approaches:

we first train Wheatley on instances of various sizes. More precisely, W-nxm denotes our approach tested on instances of size \(n \times m\), with \((n,m) \in \{(6,6),(10,10),(15,15)\}\);

for deterministic instances, we can compare with L2D using values reported in the associated paper ([7]);

we test several popular Priority Dispatch Rules ([2]), namely Most Operations Remaining (MOPNR), Shortest Processing Time (SPT), Most Work Remaining (MWKR) and Minimum Ratio of Flow Due Date to Most Work Remaining (FDD/WKR). When computing a schedule, these rules use the mode duration of operations. Next, we retrieve the operations sequence scheduled for each machine and run this sequence with the real operation duration, as done in Schedule Generation Scheme (SGS);

we use the CP-SAT solver of OR-Tools, denoted OR-Tools in deterministic instances test. For stochastic instances, we use it with mode durations and with real instances, respectively denoted OR-Tools mode and OR-Tools real. As for PDRs, we retrieve the order on each machine and use SGS;

for stochastic instances, we implement the approach proposed in [12], here denoted CP-stoc, that consists in finding the schedule that minimize the average makespan over a given number of sampled instances. We found using 50 samples was a very good compromise between solution quality and computation time (100 gives not much improvement and needs too much time). It is implemented with CP Optimizer 22.10 through docplex ([28]).

Classical techniques like CP-stoc and OR-Tools/CP-sat are anytime algorithms that need to compute solution for every problem, while our approach uses a large offline training time and the resulting agent only takes a small inference time for every problem. We decide to give 3 minutes to classical techniques, as they tend to give very quickly very good solutions, including for large problems, but generally need up to hours to find optimal solution as soon as the problem size becomes large. On the opposite hand, Wheatley takes from 1 hour to a few days of training (depending on the problem size), but has a fixed inference time that can become very small when correctly optimized (linear in the number of tasks). The number of iterations to reach the best model is given as table 1.

5pt

| W-6x6 | W-10x10 | W-15x15 | |

|---|---|---|---|

| deterministic | 962 | 542 | 519 |

| stochastic | 712 | 714 | 434 |

5.3 Results↩︎

3pt

| Deterministic | Stochastic | |||||

|---|---|---|---|---|---|---|

| 2-4 (r)5-7 Evaluation | W-6x6 | W-10x10 | W-15x15 | W-6x6 | W-10x10 | W-15x15 |

| \(6\times6\) | \(\mathbf{508}\) | \(521\) | \(521\) | \(\mathbf{700}\) | \(714\) | \(715\) |

| \(10 \times 10\) | \(927\) | \(\mathbf{890}\) | \(915\) | \(1269\) | \(\mathbf{1217}\) | \(1232\) |

| \(15\times15\) | \(1557\) | \(\mathbf{1388}\) | \(1392\) | \(2297\) | \(\mathbf{1889}\) | \(\mathbf{1889}\) |

| \(20\times15\) | \(1798\) | \(\mathbf{1583}\) | \(1622\) | \(2585\) | \(\mathbf{2181}\) | \(2188\) |

| \(20\times20\) | \(2314\) | \(1959\) | \(\mathbf{1888}\) | \(3632\) | \(2643\) | \(\mathbf{2608}\) |

5.3.1 Wheatley baselines↩︎

We first compare the three Wheatley baselines together: we have tested them on small instances, both deterministic and stochastic.

Table 2 presents results obtained for deterministic and stochastic instances on Taillard problems of several sizes. For each size \(n \times m\), we have generated 100 instances and, for the stochastic evaluation, we have then sampled one duration scenario for each instance. We then compute the average makespan for each set of instances sizes. Results show that W-10x10 is a good compromise, both for deterministic and stochastic problems. Therefore, in the following, we only present results associated with this approach.

5.3.2 Deterministic JSSP↩︎

We compare W-10x10 with baselines presented previously for the deterministic case. In Table 3, we present the average makespan and the average gap1 obtained for all instances of each category size. Note that we do not present results obtained for each PDR but only the best result one.

3pt

| Evaluation | W-10x10 | L2D | Best PDR | OR-Tools |

|---|---|---|---|---|

| \(6\times6\) | \(521~(7.4)\) | \(571~(17.7)\) | \(545~(12.4)\) | \(\mathbf{485}~(\mathbf{0})\) |

| \(10 \times 10\) | \(890~(9.6)\) | \(993~(22.3)\) | \(948~(16.8)\) | \(\mathbf{812}~(\mathbf{0})\) |

| \(15\times15\) | \(1389~(17.2)\) | \(1501~(26.7)\) | \(1419~(19.8)\) | \(\mathbf{1185}~(\mathbf{0})\) |

| \(20\times15\) | \(1583~(16.9)\) | - | \(1642~(21.3)\) | \(\mathbf{1354}~(\mathbf{0})\) |

| \(20\times20\) | \(1959~(24.9)\) | \(2026~(29.2)\) | \(1870~(19.3)\) | \(\mathbf{1568}~(\mathbf{0})\) |

| \(30\times10\) | \(1829~(5.5)\) | - | \(1878~(8.9)\) | \(\mathbf{1725}~(\mathbf{0})\) |

| \(30\times15\) | \(2043~(14.5)\) | - | \(2092~(17.3)\) | \(\mathbf{1784}~(\mathbf{0})\) |

| \(30\times20\) | \(2377~(22.0)\) | - | \(2331~(19.7)\) | \(\mathbf{1948}~(\mathbf{0})\) |

| \(50\times15\) | \(3060~(8.3)\) | - | \(3079~(9.0)\) | \(\mathbf{2825}~(\mathbf{0})\) |

| \(50\times20\) | \(3322~(14.9)\) | - | \(3295~(14.0)\) | \(\mathbf{2891}~(\mathbf{0})\) |

| \(60\times10\) | \(3357~(1.7)\) | - | \(3376~(2.3)\) | \(\mathbf{3301}~(\mathbf{0})\) |

| \(100\times20\) | \(5886~(6.9)\) | - | \(5786~(5.1)\) | \(\mathbf{5507}~(\mathbf{0})\) |

Results show that OR-Tools outperforms the other approaches for these sizes, but W-10x10 manages to get close results, even for large instances. More precisely, in comparison with L2D, which is also an approach based on DRL and GNN that was developed for solving JSSPs ([7]), W-10x10 returns better schedules in average. W-10x10 competes with the best PDR, which is mostly MOPNR in the case of instances larger than \(20 \times 20\). These results show that Wheatley is able to learn task selection strategies that generalize to much larger problems.

5.3.3 Stochastic JSSP↩︎

5pt

| OR-Tools | ||||||

|---|---|---|---|---|---|---|

| 6-7 Evaluation | W-10x10 | Wd-10x10 | MOPNR | CP-stoc | mode | real |

| \(6\times6\) | \(714~(16.3)\) | \(817~(33.1)\) | \(699~(13.8)\) | \(\mathbf{669}~(9.0)\) | \(728~(18.6)\) | \(\mathit{614}~(\mathit{0})\) |

| \(10 \times 10\) | \(1217~(21.5)\) | \(1464~(46.1)\) | \(1252~(25.0)\) | \(\mathbf{1177}~\mathbf{(17.5)}\) | \(1262~(25.9)\) | \(\mathit{1002}~(\mathit{0})\) |

| \(15\times15\) | \(1889~(29.3)\) | \(2406~(64.7)\) | \(1988~(36.1)\) | \(\mathbf{1872}~\mathbf{(28.1)}\) | \(1925~(31.8)\) | \(\mathit{1461}~(\mathit{0})\) |

| \(20\times15\) | \(\mathbf{2181}~\mathbf{(30.5)}\) | \(2729~(63.3)\) | \(2314~(38.5)\) | \(2222~(33.0)\) | \(2244~(34.3)\) | \(\mathit{1571}~(\mathit{0})\) |

| \(20\times20\) | \(2643~(36.4)\) | \(3511~(81.2)\) | \(2708~(40.0)\) | \(\mathbf{2631}~\mathbf{(35.8)}\) | \(2619~(35.1)\) | \(\mathit{1938}~(\mathit{0})\) |

| \(30\times10\) | \(\mathbf{2425~(14.1)}\) | \(3511~(65.2)\) | \(2532~(19.1)\) | \(2476~(16.5)\) | \(2598~(22.2)\) | \(\mathit{2126}~(\mathit{0})\) |

| \(30\times15\) | \(\mathbf{2792~(26.7)}\) | \(3251~(47.5)\) | \(2964~(34.5)\) | \(2892~(31.2)\) | \(2943~(33.5)\) | \(\mathit{2204}~(\mathit{0})\) |

| \(30\times20\) | \(\mathbf{3305~(36.9)}\) | \(4186~(73.3)\) | \(3390~(40.4)\) | \(3355~(39.0)\) | \(3299~(36.6)\) | \(\mathit{2415}~(\mathit{0})\) |

| \(50\times15\) | \(\mathbf{4043~(16.5)}\) | \(4413~(27.1)\) | \(4262~(22.8)\) | \(4239~(22.1)\) | \(4435~(27.7)\) | \(\mathit{3472}~(\mathit{0})\) |

| \(50\times20\) | \(\mathbf{4520~(26.8)}\) | \(5351~(50.1)\) | \(4679~(31.2)\) | \(4682~(31.3)\) | \(4758~(33.4)\) | \(\mathit{3566}~(\mathit{0})\) |

| \(60\times10\) | \(\mathbf{4315~(6.3)}\) | \(4475~(10.2)\) | \(4451~(9.6)\) | \(4442~(9.4)\) | \(4579~(12.8)\) | \(\mathit{4061}~(\mathit{0})\) |

| \(100\times20\) | \(\mathbf{7591~(11.8)}\) | \(8377~(23.3)\) | \(7956~(17.1)\) | \(8203~(20.8)\) | \(8188~(20.5)\) | \(\mathit{6793}~(\mathit{0})\) |

Table 4 shows results obtained for stochastic problems. Note that the solver OR-Tools real is perfect, in the sense that it works with real operations duration values, which is unknown for other approaches at the scheduling time. Therefore, the makespan value computed by OR-Tools real is much lower than that of other approaches. Results show that the closest to OR-Tools real is CP-stoc for small problem sizes. In fact, despite the 50 scenarios it works with, it manages to find a good average makespan. However, when the instances size increases, W-10x10 clearly outperforms other approaches. We also present the results for the deterministic of version of Wheatley run on modes as Wd-10x10. This shows that Wheatley is able to successfully generalize on larger problems.

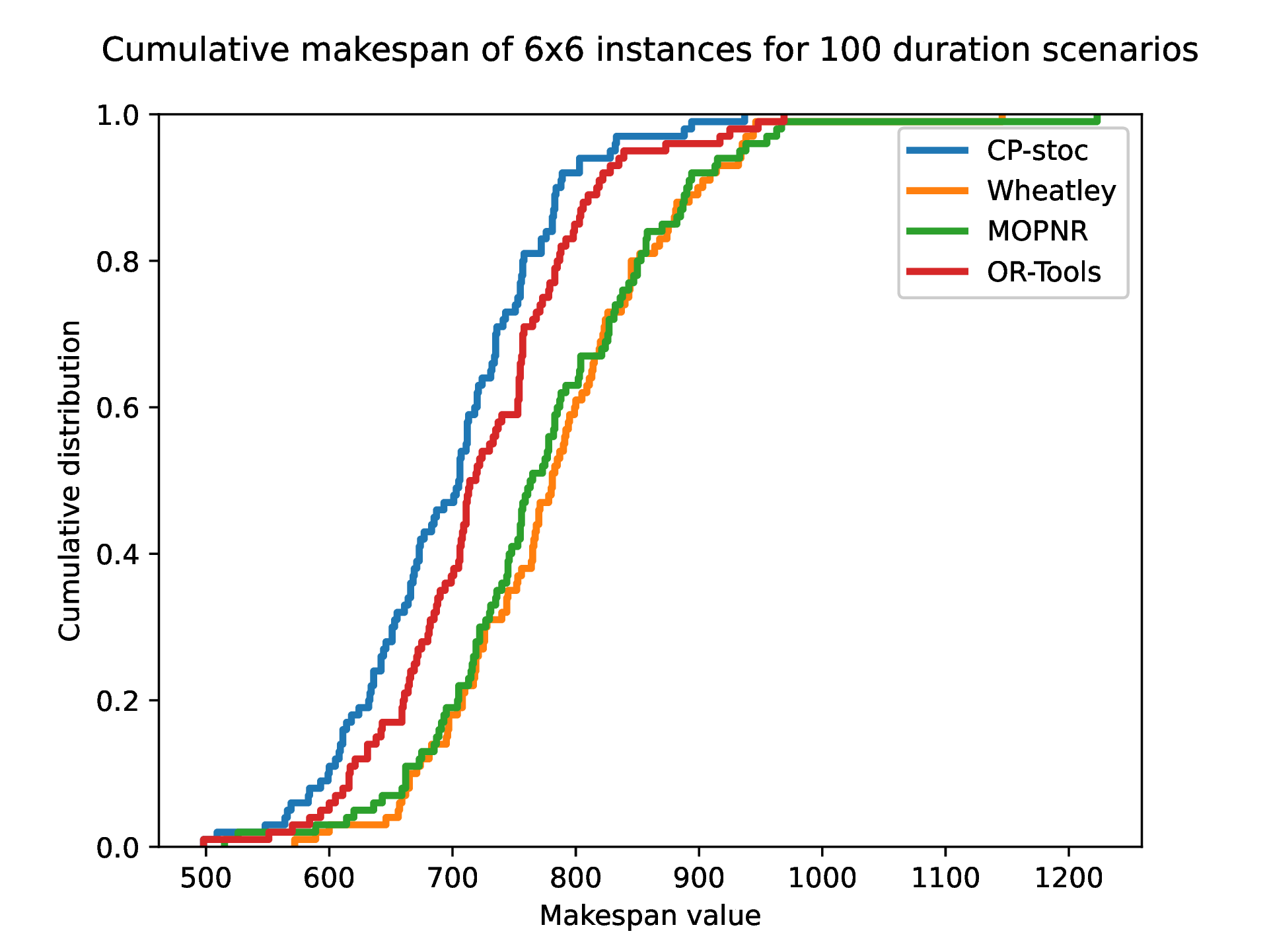

\(6 \times 6\) instance

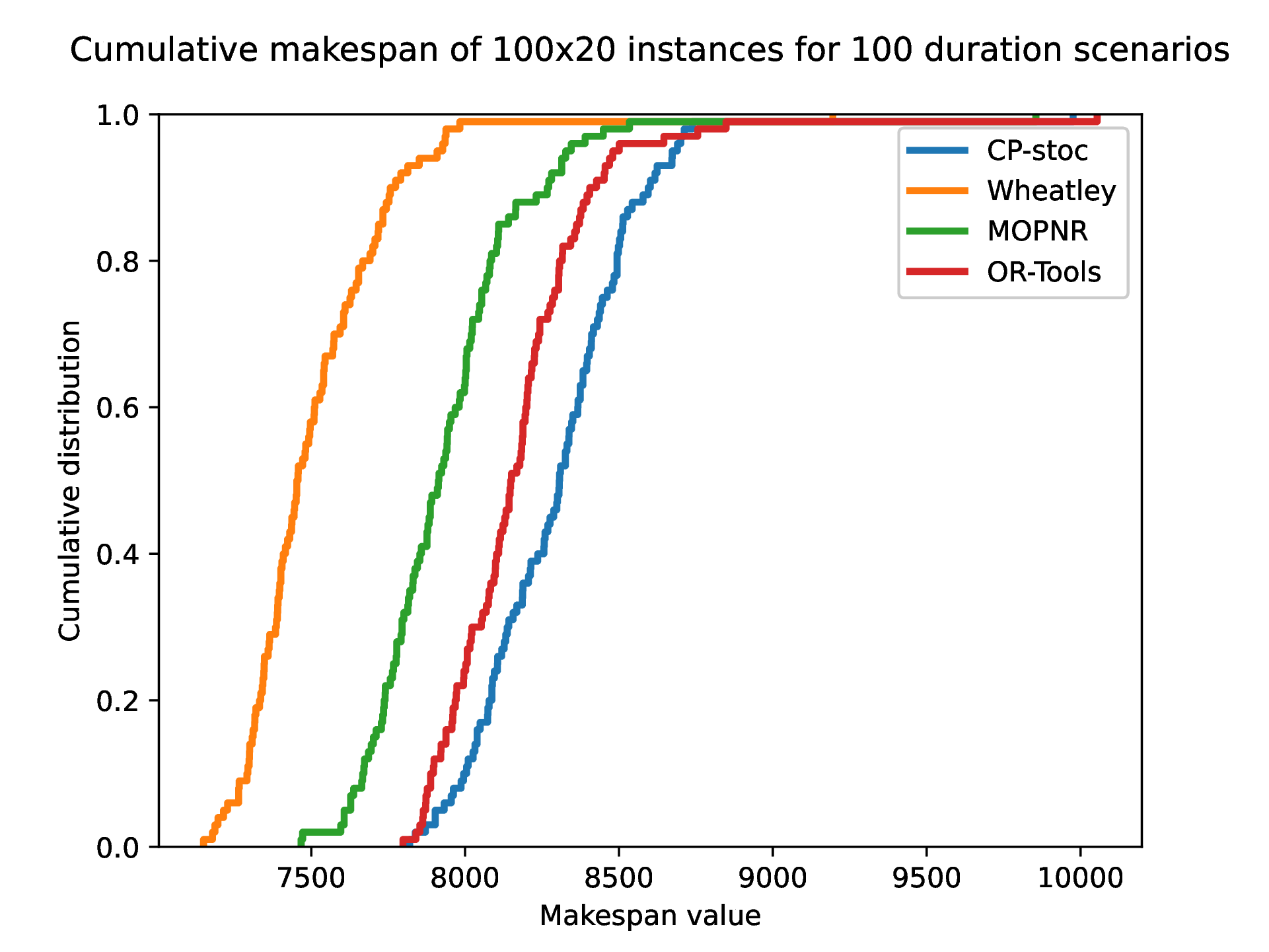

\(100 \times 20\) instance

Figure 5: Cumulative makespan of W-10x10 and CP-stoc for 100 duration scenarios..

In order to further compare the approaches in terms of scatter of the results, we have sampled 100 duration scenarios for one problem of size \(6 \times 6\) and 100 scenarios for one problem of size \(100 \times 20\). Cumulative makespan are presented on Figure 5. It shows that results presented on Table 4 are representative of several scenarios. In fact, for the \(6 \times 6\) problem, CP-stoc returns the lowest makespans, then OR-Tools, and MOPNR and W-10x10 equivalently (Figure 5 (a)). That order is completely reversed in the case of the \(100 \times 20\) problem, in which W-10x10 returns the best results (Figure 5 (b)).

6 Conclusion and Future Works↩︎

This paper presents Wheatley, a novel approach for solving JSSPs with uncertain operations duration. It combines Graph Neural Networks and Deep Reinforcement Learning techniques in order to learn a policy that iteratively selects the next operation to execute on each machine. The policy is updated during the training phase through PPO. Results show that Wheatley is competitive in the case of deterministic JSSPs and outperforms other approaches for stochastic JSSPs. Moreover, Wheatley is able to generalize to larger instances.

This work could be extended in several directions. First, it would be possible to extend the experiments with other JSSPs data and particularly instances coming from the industry. It would also be interesting to study the effect of pretraining the policy before running PPO. Finally, we are convinced that the GNN and DRL could be applied to other scheduling problems, such as the Resource-Constrained Project Scheduling Problem, in which handling uncertainty is essential in an industrial context.

References↩︎

Gap for an approach \(a\) is equal to \(100\cdot\frac{\mathit{makespan}(a) - \mathit{makespan}^\mathit{best}}{\mathit{makespan}^\mathit{best}}\).↩︎