RareBench: Can LLMs Serve as Rare Diseases Specialists?

Abstract

Generalist Large Language Models (LLMs), such as GPT-4, have shown considerable promise in various domains, including medical diagnosis. Rare diseases, affecting approximately 300 million people worldwide, often have unsatisfactory clinical diagnosis rates primarily due to a lack of experienced physicians and the complexity of differentiating among many rare diseases. In this context, recent news such as "ChatGPT correctly diagnosed a 4-year-old’s rare disease after 17 doctors failed" underscores LLMs’ potential, yet underexplored, role in clinically diagnosing rare diseases. To bridge this research gap, we introduce RareBench, a pioneering benchmark designed to systematically evaluate the capabilities of LLMs on four critical dimensions within the realm of rare diseases. Meanwhile, we have compiled the largest open-source dataset on rare disease patients, establishing a benchmark for future studies in this domain. To facilitate differential diagnosis of rare diseases, we develop a dynamic few-shot prompt methodology, leveraging a comprehensive rare disease knowledge graph synthesized from multiple knowledge bases, significantly enhancing LLMs’ diagnostic performance. Moreover, we present an exhaustive comparative study of GPT-4’s diagnostic capabilities against those of specialist physicians. Our experimental findings underscore the promising potential of integrating LLMs into the clinical diagnostic process for rare diseases. This paves the way for exciting possibilities in future advancements in this field.

CCS Concepts: Applied computing → Health informatics.

1 Introduction

Large Language Models (LLMs) like ChatGPT [1] have attracted widespread attention for their exceptional human-like language understanding and generation capabilities. As a result, applying LLMs in medicine is emerging as a promising research direction in artificial intelligence and clinical medicine. Several studies have explored how LLMs can assist doctors in various medical and clinical tasks, including medical diagnosis [2], [3], clinical report generation [4], [5], and medical education [6]. However, there is currently a lack of research investigating the capabilities and limitations of LLMs in the context of rare diseases.

Rare diseases collectively refer to a broad category of diseases, typically defined by their low prevalence in the population. Over 7,000 types of rare diseases are currently recognized [7], with approximately 80% being genetic in origin. Patients with rare diseases often face a high probability of misdiagnosis or underdiagnosis [8], and the average time before receiving a confirmed diagnosis extends over several years [9]. The difficulty in diagnosis is largely attributed to the lack of prior exposure to rare diseases among physicians, hindering the accurate recognition of rare diseases and their associated phenotypes. The phenotypes are typically symptoms, signs, or other disease-related information observed in rare disease patients that are used for disease diagnosis. However, there is significant phenotypic overlap among different rare diseases, as well as between rare diseases and common diseases, which further increases the difficulty of disease identification and diagnosis. Clinical diagnosis of rare diseases typically involves two primary steps. Initially, physicians collect clinical information from patients, including epidemiological information, symptoms, signs, past medical history, and family history, to formulate an initial diagnosis. Next, specialized tests such as laboratory tests or imaging examinations are conducted to further facilitate diagnosis and differential diagnosis. Additionally, because rare diseases frequently involve numerous organs and systems, consulting specialists from different fields during the diagnostic process can help achieve a more comprehensive insight and final diagnosis.

There have been many prior works to improve the diagnosis of rare diseases, including standardizing disease phenotype terminology into a hierarchical structure in the Human Phenotype Ontology (HPO) [10]–[12] and building knowledge bases annotating rare diseases with phenotypes such as the Online Mendelian Inheritance in Man (OMIM) [13], Orphanet [14], and the Compendium of China’s Rare Diseases (CCRD) [15]. These efforts result in a clear and structured representation of rare diseases: a disease or a patient can be represented by a set of associated phenotypes. From a machine learning perspective, computational methods can be developed to classify or rank diseases based on a patient’s phenotype information. These computational methods can be classified into two main categories. The first category treats the diagnosis of rare diseases as a ranking problem. In these approaches, a patient or a disease can be represented as phenotype vectors. Diseases are subsequently ranked by computing their semantic similarities with the patient. Methods falling into this category include PhenoMizer [12], RDAD [16], RDD [17], and LIRICAL [18]. However, these methods are constrained by their underlying assumptions, the lack of good-quality cases for training and testing, and the incomplete phenotypic information on many rare diseases in knowledge bases, often leading to relatively poorer diagnostic performance. The second category treats the diagnosis of rare diseases as an extreme multi-class classification task. Due to the scarcity of real-world data and the vast number of rare diseases to classify, this becomes a typical few-shot classification problem. Additionally, because of a lack of large-scale public rare disease patient datasets, most computational methods were only tested on simulated rare disease patient cases or small disease datasets with few diseases involved. Therefore, the clinical diagnostic capability of these methods remains unclear.

The prerequisite for diagnosing rare diseases using computational methods is to extract standardized and essential phenotypes/symptoms from electronic health records (EHRs) of clinical cases. To map clinical texts into standardized phenotypes, various natural language processing (NLP) methods have been developed, including EHR-Phenolyzer [19], ClinPhen [20], PhenoTagger [21], and PhenoBERT [22]. In a recent study, PhenoBCBERT and PhenoGPT [23] models were introduced to identify clinical phenotypes in clinical texts from pediatric patients. However, there are substantial variations in clinical texts in how physicians may record patient phenotypes, incorporating distinct details and terminology. Moreover, the current count of human phenotypes surpasses 17,000 [10]. All these factors present a significant challenge in precisely mapping or deducing phenotypes from clinical texts.

Our work leverages LLMs to conduct comprehensive evaluations in the challenging field of rare diseases. Figure 1 displays the overview of evaluation dimensions for the four tasks of RareBench, and more detailed definitions and descriptions are provided in Section 3. The main contributions are: 1) Dataset and Benchmarking: We develop a diverse, multi-center, and specifically tailored dataset for rare diseases. Alongside this, we introduce RareBench, a comprehensive benchmarking framework for evaluating LLMs’ capabilities in real-world complex clinical scenarios like phenotype extraction and differential diagnosis. 2) Advanced Knowledge Integration Prompt: We integrate rich knowledge sources to create an exhaustive knowledge graph for rare diseases. Utilizing a disease-phenotype graph and the hierarchical structure of the phenotype graph, we devise a novel random walk algorithm capitalizing on phenotype Information Content (IC) values to implement dynamic few-shot prompting strategies. This advancement significantly boosts the performance of LLMs other than GPT-4 in differential diagnosis, even surpassing GPT-4. 3) Human-versus-LLMs Comparative Studies: Through comparative analysis, we demonstrate that GPT-4 is on par with senior doctors across five distinct specialties in the differential diagnosis of rare diseases. The experiments show that GPT-4’s diagnostic capabilities in rare diseases are now commensurate with those of experienced specialist physicians.

Figure 1: RareBench’s overview of evaluation tasks.

2 Related Work

Figure 2: RareBench is the first benchmark to evaluate LLMs as rare disease specialists on 4 distinct tasks.

Medical Benchmarks for LLMs.

Prominent medical question-and-answer data benchmarks, such as MedQA [24], PubMedQA [25], MedMCQA [26], MultiMedQA [5], and CMExam [27], primarily derive from medical examinations, typically featuring multiple-choice formats. MedBench [28] introduces a large-scale Chinese benchmark for evaluating LLMs in clinical knowledge and diagnostics. Additionally, DDXPlus [29] provides a detailed medical diagnostic dataset covering symptoms and diagnoses. Our RareBench extends this landscape by focusing on complex clinical scenarios specific to rare diseases.

LLMs’ Medical Capability.

The evolution of General Medical Artificial Intelligence (GMAI) is reshaping healthcare by automating learning processes and incorporating domain knowledge to reduce the clinical workload [30]. GPT-4 [31], a notable example in this field, has demonstrated exceptional skills in medical questions [32] and rivals or surpasses state-of-the-art models in tasks such as radiology text interpretation [33]. Medprompt [34] further enhances this capability through specialized prompting techniques, enabling foundational models like GPT-4 to outperform dedicated healthcare models. Besides GPT-4, models like AMIE [35] show superior performance in specific medical tasks, even exceeding the diagnostic abilities of general primary care physicians in some cases. These LLMs not only assist in differential diagnosis but also engage in clinical reasoning, thus improving diagnostic accuracy [2], [36]. Moreover, fine-tuned LLMs can efficiently extract valuable data from clinical notes, significantly boosting patient care quality [37]. Despite these advancements, challenges in accuracy, interpretability, and safety persist in the medical application of LLMs, underscoring the need for continuous refinement [38], [39]. Notably, the potential of LLMs in rare disease contexts is yet to be fully explored, and our research aims to fill this gap.

Diagnosis of Rare Disease.

The initial step in clinical diagnosis involves extracting standardized phenotypes from a patient’s electronic health record (EHR). To translate clinical texts into standardized Human Phenotype Ontology (HPO) terms, various natural language processing (NLP) methods [19]–[23], [40] have been developed. For rare disease diagnosis, current computational methods comprise many statistical or machine learning-based methods [12], [16]–[19], [41]–[44].

3 Composition of RareBench

This section presents the 4 critical tasks of the RareBench framework, together with the most extensive collection of rare disease patient datasets currently accessible. These tasks include 1) phenotype extraction from EHRs, 2) screening for specific rare diseases, 3) comparative analysis of common and rare diseases, and 4) differential diagnosis among universal rare diseases. Figure 2 demonstrates the process of employing LLMs as rare disease specialists to complete the four tasks in RareBench using an EHR or a set of phenotypes of an ALS (Amyotrophic Lateral Sclerosis) patient from PUMCH. We also describe our prompting techniques for effectively deploying LLMs as rare disease specialists. Due to the page limit, dataset details and prompt examples are provided in the Appendix.

3.1 Tasks of the RareBench Framework

3.1.1 Task 1: Phenotype Extraction from Electronic Health Records

Task 1 involves deriving phenotypes from EHRs for diagnosis. We design 3 sub-tasks: a) Phenotype Extraction: Extracting phenotypes from EHRs precisely. b) General Entity Extraction: Extracting general entities from EHRs. c) General Entity Standardization: Standardizing general entities into phenotypes. General entity extraction and standardization can be considered a two-step decomposition of phenotype extraction, allowing for a more detailed evaluation of LLMs’ capabilities of phenotype extraction.

3.1.2 Task 2: Screening for Specific Rare Diseases

Task 2 aims to evaluate the capability of LLMs in identifying risk factors or symptoms associated with specific rare diseases. It utilizes patients’ medical histories and auxiliary examinations to discover potential rare diseases and facilitate further diagnosis. For this study, three rare diseases are selected: ALS (Amyotrophic Lateral Sclerosis), PNH (Paroxysmal Nocturnal Hemoglobinuria), and MSA (Multiple System Atrophy).

3.1.3 Task 3: Comparative Analysis of Common and Rare Diseases

Task 3 aims to validate whether LLMs can differentiate between patients with common and rare diseases that exhibit similar phenotypes/symptoms. From the PUMCH dataset, we select 527 electronic health records, comprising 60 cases with 13 rare diseases and 467 cases with 64 common diseases. The task of LLMs is to predict the top ten most likely diseases from this pool of 77 diseases based on a patient’s electronic health record.

3.1.4 Task 4: Differential Diagnosis among Universal Rare Diseases

Task 4 is centered on a systematic differential diagnosis across the full spectrum of known rare diseases to identify the most probable rare disease. In this process, specialist physicians consider a range of possible rare diseases and methodically narrow down the potential diagnoses through a process of elimination or additional diagnostic tests. After gathering adequate evidence, they determine the most likely diagnosis. Unlike Task 3, this task does not limit the range of rare diseases. Instead, it leverages LLMs to provide the top ten most likely diagnoses based solely on the patient’s phenotypes/symptoms. Task 4 involves 2,185 patient cases encompassing 421 rare diseases, collected from both Public and PUMCH datasets. Its primary objective is to evaluate LLMs’ capability within the complexities of real-world clinical scenarios. As the most pivotal and forward-thinking task of RareBench, it plays a crucial role in assessing the potential of LLMs in handling intricate medical data.

| Dataset | Type | # Diseases | # Cases | Source |
|---|---|---|---|---|
| Task 1 | EHRs | 34 | 87 | PUMCH |
| Task 2 | EHRs | 3 | 33 | PUMCH |
| Task 3 | EHRs | 77 | 527 | PUMCH |
| Task 4 | EHRs/symptoms | 421 | 2,185 | Public & PUMCH |

3.2 Rare Disease Patients’ Dataset

This study categorizes datasets into two main groups: publicly available datasets and the Peking Union Medical College Hospital (PUMCH) datasets. All of these data are utilized to perform one of the four tasks, as illustrated in Table 1. The publicly available datasets provide phenotypes/symptoms alongside confirmed rare diagnoses in OMIM/Orphanet disease codes. Consequently, they can only be employed to assess the performance of differential diagnosis among universal rare diseases (Task 4). In total, public datasets include 1,122 cases spanning over 362 rare diseases. Further descriptions can be found in Appendix 9.1.

Electronic health records from PUMCH serve as valuable resources for both phenotype extraction and three diagnostic tasks. The PUMCH dataset comprises a total of 1,650 cases, consisting of 1,183 rare disease cases and 467 common disease cases. Among them, we select specific datasets for each of the four tasks. Specifically, we first choose 87 EHRs involving 34 rare diseases annotated with physician-identified phenotypes to perform task 1. Next, we select 33 cases with complete auxiliary examination and medical history information to execute task 2. Then, from the remaining medical records (excluding those for tasks 1 and 2), we select 60 cases with rare diseases and 467 cases with common diseases exhibiting phenotypes similar to those of the corresponding rare diseases for task 3. Finally, for task 4, we test all the remaining rare disease records (excluding those for tasks 1 and 2), along with all the aforementioned public cases, totaling 2,185 cases. It’s important to note that the patient’s personally identifiable information has been removed completely. Additionally, doctors from PUMCH monitored all cases before uploading text information, ensuring the absence of any potential personal information leaks. Moreover, we apply reasonable filtering criteria to identify and remove cases of low quality that may be caused by recording errors or missing information, such as those with uncertain or imprecise diagnoses and those lacking sufficient relevant information, i.e., fewer than three phenotypes.
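As a quick consistency check, the per-task case counts can be recomputed from the totals stated above. The sketch below is only arithmetic on the reported figures; it assumes that the Task 1 and Task 2 cases are all rare disease cases and do not overlap, which the text implies but does not state explicitly. The constant and function names are illustrative.

```python
# Case counts as reported in the text.
PUMCH_RARE = 1183      # rare disease cases in the PUMCH dataset
PUMCH_COMMON = 467     # common disease cases in the PUMCH dataset
PUBLIC_CASES = 1122    # cases in the public datasets
TASK1_CASES = 87       # EHRs with physician-annotated phenotypes
TASK2_CASES = 33       # cases with complete examinations and history
TASK3_RARE = 60        # rare disease cases selected for Task 3


def task_sizes():
    """Recompute dataset sizes from the reported totals."""
    pumch_total = PUMCH_RARE + PUMCH_COMMON
    # Task 3: the selected rare disease cases plus all common disease cases.
    task3 = TASK3_RARE + PUMCH_COMMON
    # Task 4: remaining PUMCH rare disease cases plus all public cases.
    # Assumption: Task 1 and Task 2 cases are disjoint rare disease cases.
    task4 = (PUMCH_RARE - TASK1_CASES - TASK2_CASES) + PUBLIC_CASES
    return {"pumch_total": pumch_total, "task3": task3, "task4": task4}


print(task_sizes())
```

Under these assumptions the recomputed values agree with the 1,650, 527, and 2,185 cases reported in the text and in Table 1.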

3.3 Framework Setup

3.3.1 Evaluated Models

We select eleven models in our evaluation framework, including API-based and open-source LLMs, as detailed in Table 2. Specifically, we choose 5 API-based models, which exhibit superior performance as a result of substantial investment and advanced development. On the other hand, due to limitations in computational resources, our selection of open-source models is confined to 3 general LLMs and 3 medical LLMs, each with a model size of fewer than 10 billion parameters.

| Model | Size | Form | Version |
|---|---|---|---|
| GPT-4 [31] | N/A | API | 1106-preview |
| GPT-3.5-Turbo [1] | N/A | API | 1106 |
| Gemini Pro [45] | N/A | API | - |
| GLM4 [46], [47] | N/A | API | - |
| GLM3-Turbo [46], [47] | N/A | API | - |
| Mistral-7B [48] | 7B | Open Source | instruct-v0.1 |
| Llama2-7B [49] | 7B | Open Source | chat |
| ChatGLM3-6B [46], [47] | 6B | Open Source | - |
| BioMistral-7B [50] | 7B | Open Source | - |
| HuatuoGPT2-7B [51] | 7B | Open Source | - |
| MedAlpaca-7B [52] | 7B | Open Source | - |

3.3.2 Basic Prompt Design

For the evaluation of 11 LLMs, we primarily utilize the most fundamental zero-shot prompt. We assign the role of a rare diseases specialist to the LLMs by incorporating "You are a specialist in the field of rare diseases." as the system prompt/initial statement. Additional details on the configuration of LLMs’ hyper-parameters are available in Appendix 9.2.

3.3.3 More Prompting Strategies Exploration on GPT-4

We further explore diverse prompting strategies with GPT-4, including Chain-of-Thought (CoT) [53] and random few-shot methods. In the CoT settings, the zero-shot prompt is supplemented with "Let us think step by step." to foster a sequential thought process, a technique validated in various general tasks. For random few shots, we provide LLMs with \(m\) random complete input-output examples as prompts, where the choice of \(m\) depends on the specific task.
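The three basic prompt variants (zero-shot, CoT, and random few-shot) can be sketched as a single message builder. This is an illustrative sketch only: the two quoted strings come from the text, while the chat-style role/content message layout, the function name `build_messages`, and the task instruction wording are assumptions. The actual prompts are given in the Appendix.

```python
SYSTEM_PROMPT = "You are a specialist in the field of rare diseases."
COT_SUFFIX = "Let us think step by step."


def build_messages(task_instruction, patient_text, examples=(), use_cot=False):
    """Assemble a chat-style prompt (hypothetical layout).

    examples: iterable of (input_text, output_text) pairs used as few-shot
    demonstrations; leave empty for the zero-shot setting.
    """
    messages = [{"role": "system", "content": SYSTEM_PROMPT}]
    for example_input, example_output in examples:
        messages.append(
            {"role": "user", "content": f"{task_instruction}\n{example_input}"}
        )
        messages.append({"role": "assistant", "content": example_output})
    query = f"{task_instruction}\n{patient_text}"
    if use_cot:
        # CoT setting: append the step-by-step cue to the zero-shot prompt.
        query += "\n" + COT_SUFFIX
    messages.append({"role": "user", "content": query})
    return messages


# Zero-shot with CoT; the instruction text here is a placeholder.
msgs = build_messages(
    "List the ten most likely rare diseases for this patient.",
    "Phenotypes: muscle weakness, dysarthria",
    use_cot=True,
)
```

Passing \(m\) randomly drawn input-output pairs as `examples` would correspond to the random few-shot setting.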

3.4 Knowledge Integration Dynamic Few-shot

Beyond the prompts above, we construct a rare disease domain knowledge graph by integrating multiple public knowledge bases. This serves as the foundation for our implemented Information Content (IC) based random walk algorithm. The phenotype embeddings generated via this algorithm have been pivotal in developing a dynamic few-shot prompt, depicted in Figure 3. This innovative approach markedly improves the capabilities of LLMs in differential diagnoses among universal rare diseases (Task 4).

3.4.1 Rare Disease Knowledge Graph Construction

Previous methods [12], [16], [17], [44] for the differential diagnosis of rare diseases primarily rely on similarity calculations between diseases using phenotypes in knowledge bases. It is feasible to construct a knowledge graph wherein both rare diseases and phenotypes are represented as nodes, connected by their interrelations as edges. There are two types of edges. The first is phenotype-phenotype edges obtained from the HPO hierarchy that organizes phenotypes into a directed acyclic graph where each edge connects a more specific phenotype (child) to a broadly defined phenotype (parent). The other is the disease-phenotype information, for which we integrate data from 4 rare-disease-related knowledge bases: the Human Phenotype Ontology (HPO) [10]–[12], Online Mendelian Inheritance in Man (OMIM) [13], Orphanet [14], and the Compendium of China’s Rare Diseases (CCRD) [15]. This integration notably enhances the annotation of associations between rare diseases and phenotypes. The statistical information of the integrated knowledge graph is presented in Table 3, with detailed descriptions of each knowledge base available in Appendix 9.4.

| Type (Source) | Phenotype, P (HPO) | Rare Disease, RD (\(*\)) | P-P (HPO) | P-RD (OMIM) | P-RD (Orphanet) | P-RD (CCRD) |
|---|---|---|---|---|---|---|
| Num. | 17,232 | 9,260 | 21,505 | 54,169 | 98,031 | 4,223 |

3.4.2 Random Walk Based on Information Content

The concept of Information Content (IC) [54] is similar to Inverse Document Frequency (IDF) utilized in natural language processing. IC is employed as an index of a phenotype’s specificity to a particular disease. Notably, a phenotype’s proximity to the root node in the HPO hierarchy (a broad phenotype), or a higher frequency of its association with multiple diseases (a common phenotype), results in a lower IC, reflecting lesser significance. On the other hand, a phenotype that is highly specific to a rare disease has a high IC. The essence of IC lies in its inverse correlation with the prevalence of a phenotype: the more common a phenotype, the lower its IC. Let \(T\) be the complete set of phenotype terms. For a given term \(t \in T\), the computation of \(IC(t)\) is formulated as follows: \[IC(t) = -\log\frac{n(t)}{N},\] where \(n(t)\) represents the count of diseases annotated with the HPO phenotype \(t\) or its descendant phenotypes, and \(N\) signifies the total number of annotated diseases. In our integrated rare disease knowledge graph, the value of \(N\) equals 9,260.
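To make the IC definition concrete, the following minimal sketch computes \(IC(t)\) on a toy hierarchy. It assumes the natural logarithm (the text does not state the base) and propagates each disease's annotations up to all ancestor terms, so that \(n(t)\) counts diseases annotated with \(t\) or any of its descendants. The function name and the input data structures are illustrative, not the authors' implementation.

```python
import math


def information_content(parents, disease_annotations, n_diseases):
    """Compute IC(t) = -log(n(t) / N) for every annotated term.

    parents: dict mapping a phenotype to its parent phenotypes (a DAG).
    disease_annotations: dict mapping a disease to its set of phenotypes.
    n_diseases: N, the total number of annotated diseases.
    Terms with n(t) = 0 are omitted because IC is undefined for them.
    """
    def ancestors_and_self(term):
        seen, stack = set(), [term]
        while stack:
            node = stack.pop()
            if node not in seen:
                seen.add(node)
                stack.extend(parents.get(node, []))
        return seen

    counts = {}
    for terms in disease_annotations.values():
        covered = set()
        for term in terms:
            covered |= ancestors_and_self(term)
        # Each disease contributes at most once to n(t).
        for term in covered:
            counts[term] = counts.get(term, 0) + 1
    return {t: -math.log(c / n_diseases) for t, c in counts.items()}


# Toy hierarchy: B and C are children of the broad term A.
toy_parents = {"B": ["A"], "C": ["A"]}
toy_annotations = {"d1": {"B"}, "d2": {"C"}, "d3": {"B"}, "d4": {"A"}}
toy_ic = information_content(toy_parents, toy_annotations, n_diseases=4)
```

In the toy example the broad term A covers every disease and gets an IC of zero, while C, annotated to a single disease, gets the highest IC.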

Node2vec [55], a typical shallow embedding method, is calculated by simulating random walks [56] across a graph through a flexible and parameterized walk strategy. These unsupervised walks generate sequences of nodes, which are employed to create node embeddings by using the methodologies developed for Word2vec [57]. In Node2vec, the search bias parameter \(\alpha\) controls the search strategy in generating random walk sequences of nodes, effectively modulating the preference for breadth-first search (BFS) or depth-first search (DFS) strategies in exploring neighboring nodes. However, a direct application of Node2vec to our phenotype-disease knowledge graph in the rare disease domain falls short of adequately capturing each phenotype’s distinct influence on the differential diagnosis of diseases. We innovatively integrated IC values into the Node2vec framework to address this limitation, formulating an IC value-based random walk algorithm. This enhancement is designed to enrich the interactions between phenotypes and rare diseases. Under this new scheme, when a random walk progresses to a phenotype node \(t_1\) and the subsequent node is another phenotype \(t_2\), the walk search bias from \(t_1\) to \(t_2\) is determined as \(\alpha = IC(t_2)\). Conversely, if the following node is a rare disease \(d_1\), the search bias from \(t_1\) to \(d_1\) is set by \(\alpha = IC(t_1)\). This modification ensures that phenotype nodes with higher IC values receive increased focus during the random walk, amplifying associations with the related rare disease nodes.
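The IC-based bias rule can be illustrated with a simplified first-order random walk. This sketch is not the full Node2vec procedure: it uses \(\alpha\) directly as an unnormalized transition weight and omits Node2vec's return and in-out parameters, since the text does not specify how they are combined with IC. The weight of 1.0 when leaving a disease node is also an assumption, and all names are hypothetical.

```python
import random


def transition_weight(current, candidate, ic, diseases):
    """Unnormalized weight (alpha) for stepping from current to candidate."""
    if current in diseases:
        # Assumption: the text does not define the bias for leaving a disease.
        return 1.0
    if candidate in diseases:
        # Phenotype t1 -> disease d1: alpha = IC(t1).
        return ic[current]
    # Phenotype t1 -> phenotype t2: alpha = IC(t2).
    return ic[candidate]


def ic_biased_walk(graph, start, length, ic, diseases, rng):
    """Generate one walk of up to `length` nodes over an adjacency dict."""
    walk = [start]
    while len(walk) < length:
        current = walk[-1]
        neighbors = graph.get(current, [])
        weights = [transition_weight(current, n, ic, diseases) for n in neighbors]
        if not neighbors or sum(weights) <= 0:
            break  # dead end or no positive-weight move
        walk.append(rng.choices(neighbors, weights=weights, k=1)[0])
    return walk


# Toy graph: phenotypes t1 and t2, disease d1.
toy_graph = {"t1": ["t2", "d1"], "t2": ["t1"], "d1": ["t1"]}
toy_ic_values = {"t1": 2.0, "t2": 0.5}
toy_walk = ic_biased_walk(toy_graph, "t1", 5, toy_ic_values, {"d1"}, random.Random(0))
```

The resulting node sequences would then be passed to a skip-gram model in the Word2vec style to obtain the node embeddings, as described above.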

Figure 3: The workflow of the dynamic few-shot strategy includes an integrated rare disease knowledge graph from 4 knowledge bases and an IC value-based random walk algorithm for phenotype and disease embedding.

3.4.3 Dynamic Few-shot Prompting Strategy

Employing the IC value-based random walk algorithm, we developed a function \(f: T \rightarrow \mathbb{R}^d\) to project phenotype terms into a \(d\)-dimensional vector space, where \(d = 256\). These embeddings are then utilized to represent patients with rare diseases. A patient \(p\) with a rare disease \(d\) is characterized by a set of phenotype terms \(t_{x_i}\), expressed as \(p = \{t_{x_1}, t_{x_2}, ..., t_{x_n}\}\). The embedding of the patient \(p\) is computed as the IC-weighted average of the patient’s phenotype embeddings:

\[Embedding(p) = \frac{1}{\sum_{i=1}^{n} IC(t_{x_i})} \sum_{i=1}^{n} IC(t_{x_i}) \cdot f(t_{x_i}).\]
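The patient embedding above is an IC-weighted average of phenotype vectors, which can be sketched in a few lines of plain Python. The function name and the dictionary-based inputs are illustrative assumptions; the toy vectors are 2-dimensional rather than 256-dimensional.

```python
def patient_embedding(phenotypes, term_vectors, ic):
    """IC-weighted average of the embeddings of a patient's phenotype terms.

    phenotypes: list of phenotype terms observed in the patient.
    term_vectors: dict mapping a term to its embedding f(t) (list of floats).
    ic: dict mapping a term to its information content IC(t).
    """
    total_ic = sum(ic[t] for t in phenotypes)
    if total_ic <= 0:
        raise ValueError("total IC must be positive")
    dim = len(term_vectors[phenotypes[0]])
    embedding = [0.0] * dim
    for term in phenotypes:
        weight = ic[term] / total_ic
        for j, value in enumerate(term_vectors[term]):
            embedding[j] += weight * value
    return embedding


toy_vectors = {"a": [1.0, 0.0], "b": [0.0, 1.0]}
toy_weights = {"a": 3.0, "b": 1.0}
toy_patient = patient_embedding(["a", "b"], toy_vectors, toy_weights)
```

Because term "a" has three times the IC of term "b" in this toy case, the patient vector lies three times closer to the embedding of "a".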

Column groups: T1 = Task 1, phenotype extraction from EHRs, F1-score (%) (\(\uparrow\)) for the PTE, GEE, and GES sub-tasks; T2 = Task 2, screening for specific RDs, recall (%) (\(\uparrow\)); T3 = Task 3, comparative analysis of common & RDs, top-k recall (%) (\(\uparrow\)) and MR (\(\downarrow\)); T4 = Task 4, differential diagnosis among universal RDs on 2,185 cases, top-k recall (%) (\(\uparrow\)) and MR (\(\downarrow\)).

| LLMs | T1 PTE | T1 GEE | T1 GES | T2 ALS | T2 PNH | T2 MSA | T3 k=1 | T3 k=3 | T3 k=10 | T3 MR | T4 k=1 | T4 k=3 | T4 k=10 | T4 MR |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| GPT-4 0-shot | 24.5 | 64.9 | 38.7 | 62.5 | 57.1 | 61.1 | 46.1 | 59.6 | 72.1 | 3.0 | 32.3 | 45.4 | 58.9 | 5.0 |
| GPT-4 3-shot | 26.0 | 61.9 | 42.0 | - | - | - | - | - | - | - | 30.4 | 43.8 | 57.9 | 5.0 |
| GPT-4 CoT | 25.1 | 65.9 | 39.7 | 62.5 | 57.1 | 66.7 | 47.4 | 62.0 | 75.0 | 2.0 | 33.2 | 46.4 | 59.5 | 4.0 |
| GPT-3.5-Turbo | 17.2 | 48.4 | 32.3 | 37.5 | 42.9 | 44.4 | 33.2 | 45.4 | 58.1 | 5.0 | 21.1 | 34.2 | 48.2 | >10 |
| Gemini Pro | 10.1 | 50.8 | 34.3 | 25.0 | 28.6 | 33.3 | 24.3 | 32.6 | 44.8 | >10 | 14.6 | 22.7 | 33.0 | >10 |
| GLM4 | 15.9 | 56.8 | 24.0 | 50.0 | 42.9 | 50.0 | 31.3 | 42.5 | 57.1 | 8.0 | 19.1 | 30.4 | 45.5 | >10 |
| GLM3-Turbo | 12.9 | 53.5 | 31.4 | 12.5 | 14.3 | 22.2 | 25.8 | 37.8 | 51.2 | 10.0 | 12.4 | 21.0 | 33.2 | >10 |
| Mistral-7B | 3.3 | 26.4 | 8.8 | 0.0 | 14.3 | 11.1 | 13.7 | 19.2 | 29.0 | >10 | 7.2 | 12.5 | 18.9 | >10 |
| Llama2-7B | 0.0 | 0.0 | 0.0 | 0.0 | 14.3 | 5.6 | - | - | - | - | 7.4 | 11.0 | 14.8 | >10 |
| ChatGLM3-6B | 10.3 | 48.3 | 14.6 | 12.5 | 0.0 | 5.6 | 9.7 | 16.1 | 22.8 | >10 | 5.0 | 7.2 | 10.7 | >10 |
| BioMistral-7B | 0.4 | 14.0 | 5.2 | 12.5 | 14.3 | 5.6 | 16.7 | 19.5 | 24.9 | >10 | 6.5 | 8.5 | 12.3 | >10 |
| HuatuoGPT2-7B | 3.7 | 17.8 | 6.6 | 12.5 | 28.6 | 11.1 | 18.6 | 30.4 | 40.8 | >10 | 11.4 | 17.8 | 28.1 | >10 |
| MedAlpaca-7B | 0.0 | 0.0 | 0.0 | 0.0 | 14.3 | 0.0 | - | - | - | - | 8.4 | 14.3 | 19.4 | >10 |

In MedPrompt [34], the dynamic few-shot method selects training examples that are most similar to the specific input. However, it relies on a general-purpose text embedding model such as text-embedding-ada-002 [58], which can only measure the relatedness of phenotype text, without considering deeper associations among phenotypes. Therefore, we utilize the phenotype embeddings generated from our IC value-based random walk algorithm to represent rare disease patients. We then select the top \(m\) most similar examples from the rare disease patient datasets. Data with the highest cosine similarity serve as prompts. From the LLMs’ perspective, such a retrieval augmented generation (RAG) [59] strategy enables LLMs to be more effectively tailored to the rare disease domain by conforming more closely to differential diagnosis tasks and minimizing hallucinations through enriched knowledge. From a clinical perspective, the diagnostic process of specialist physicians relies on medical knowledge and past experiences of those previously diagnosed patients. Consequently, a curated set of relevant examples grants LLMs a distilled version of "clinical experience".
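The retrieval step of the dynamic few-shot strategy amounts to a nearest-neighbor search by cosine similarity over patient embeddings. The sketch below is a minimal illustration under assumed data structures: the case bank layout (identifier, embedding, diagnosis) and the function names are hypothetical, and excluding the query patient from the bank is left to the caller.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def select_examples(query_embedding, case_bank, m=3):
    """Return the m cases most similar to the query patient.

    case_bank: list of (case_id, embedding, diagnosis) tuples.
    """
    ranked = sorted(
        case_bank,
        key=lambda case: cosine_similarity(query_embedding, case[1]),
        reverse=True,
    )
    return ranked[:m]


toy_bank = [
    ("c1", [1.0, 0.0], "disease A"),
    ("c2", [0.0, 1.0], "disease B"),
    ("c3", [1.0, 1.0], "disease C"),
]
toy_selected = select_examples([1.0, 0.1], toy_bank, m=2)
```

The phenotypes and confirmed diagnoses of the selected cases would then be formatted as the input-output examples of the few-shot prompt.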

It should be noted that many other methods are available for choosing examples for LLMs’ dynamic few-shot prompts, such as MedPrompt [34] and Auto-CoT [60]. However, our IC value-based random walk strategy is a simple, easy-to-implement method that captures the critical concept of differential diagnosis. Its effectiveness will be evaluated against other state-of-the-art few-shot methods.

4 Evaluation Results of RareBench

This section presents the comprehensive results of RareBench in Table 4, including the evaluation of GPT-4 across three different settings (zero-shot, few-shot, and Chain-of-Thought (CoT)), along with the zero-shot performance of the other 10 LLMs.

4.1 Task 1: Phenotype Extraction from EHR

4.1.1 Metric

Task 1 is evaluated using precision, recall, and F1-score. For phenotype extraction and general entity standardization, a prediction counts as correct only if it exactly matches the reference. For general entity extraction, a prediction counts as correct if it conveys the same meaning as the reference.
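For the exact-match sub-tasks, the metric can be sketched as set-based precision, recall, and F1 for a single record. How scores are aggregated across records (micro or macro averaging) is not specified in the text, so the sketch stops at the per-record level. The function name and the placeholder term labels are illustrative.

```python
def precision_recall_f1(predicted, reference):
    """Exact-match precision, recall, and F1 between two collections of terms."""
    predicted, reference = set(predicted), set(reference)
    true_positives = len(predicted & reference)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(reference) if reference else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Placeholder labels stand in for standardized phenotype terms.
toy_scores = precision_recall_f1(
    ["term_1", "term_2", "term_3"],
    ["term_2", "term_3", "term_4", "term_5"],
)
```

In this toy case two of three predictions are correct and two of four reference terms are recovered, giving a precision of 2/3, a recall of 1/2, and an F1 of 4/7.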

4.1.2 Results

Although GPT-4 achieves the best performance among all LLMs, the results show that all LLMs perform poorly in phenotype extraction. The general entity extraction and standardization results show that LLMs perform well in entity extraction but have weak capabilities in standardizing general entities into phenotypes. This suggests that the main reason for the poor performance of LLMs in phenotype extraction is their weak standardization ability.

Beyond Chain-of-Thought (CoT) and random few-shot, our experimentation includes two additional methods: a) Word-to-phenotype matching, where each word in the output is aligned with the semantically nearest word in the HPO phenotype list. The semantic distance is measured by vectorizing words using OpenAI’s text-embedding-ada-002 [58] model and then assessing cosine similarity; b) Expanded semantic matching, where we associate each output with the semantically closest \(n\) words in the HPO phenotype list, then integrate these matches as a reference output range before re-querying GPT-4. In our tests, \(n\) is set at 20. The F1-scores of these two experiments are 0.306 and 0.322, respectively.

4.2 Task 2: Screening for Specific Rare Diseases

4.2.1 Metric

Screening for three specific rare diseases (ALS, PNH, MSA) is measured using recall.

4.2.2 Results

For this task, GPT-4 again achieves the best performance on all three diseases, with recalls exceeding 0.55 in both zero-shot and Chain of Thought (CoT) settings. We did not conduct few-shot learning on GPT-4 due to the limited number of similar cases, as we were concerned that it might influence or bias the subsequent diagnostic results. Other API-based LLMs have recalls of less than or equal to 0.50 on all three diseases. Open-source LLMs have recalls of less than 0.30 on all three diseases. The use of the CoT approach by GPT-4 results in a slight performance improvement. Overall, LLMs demonstrate the potential for screening three rare diseases using patient information, such as medical history, auxiliary examinations, and laboratory tests.

4.3 Task 3: Comparative Analysis of Common & Rare Diseases

4.3.1 Metric

Task 3 employs two key metrics: top-k recall (hit@k, where k=1, 3, 10) and median rank (MR). Top-k recall evaluates the diagnostic accuracy, deeming a diagnosis correct if the actual disease appears within the top-k predictions. Median rank represents the median position of accurate diagnoses within predictions across all cases.
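Both metrics can be derived from the rank of the true disease in each case's top-ten prediction list. The sketch below makes two assumptions that the text does not spell out: predictions are matched to the true diagnosis by exact string equality, and a case whose true disease is missing from the list is assigned rank 11 when computing the median, in line with the ">10" entries in the results table. Function names are illustrative.

```python
import statistics


def rank_of(truth, predictions):
    """1-based rank of the true disease, or None if it is not predicted."""
    return predictions.index(truth) + 1 if truth in predictions else None


def top_k_recall(ranks, k):
    """Fraction of cases whose true disease appears within the top k."""
    return sum(1 for r in ranks if r is not None and r <= k) / len(ranks)


def median_rank(ranks, miss_rank=11):
    """Median rank across cases; misses count as miss_rank (assumption)."""
    return statistics.median(r if r is not None else miss_rank for r in ranks)


# Four toy cases: hits at ranks 1, 2, and 5, and one miss.
toy_ranks = [
    rank_of("A", ["A", "B", "C"]),
    rank_of("B", ["A", "B", "C"]),
    rank_of("Z", ["A", "B", "C"]),
    rank_of("E", ["A", "B", "C", "D", "E"]),
]
```

For these toy ranks, top-1, top-3, and top-10 recall are 0.25, 0.5, and 0.75, and the median rank is 3.5.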

4.3.2 Results

In this task, GPT-4 achieves a top-1 recall of 0.461 under 0-shot settings (with top-3 and top-10 recalls being 0.596 and 0.721, respectively) and a median rank of 3.0. Under the Chain of Thought (CoT) setting, GPT-4 achieves the best performance on all metrics, a moderate improvement over the 0-shot setting. We did not perform few-shot learning on GPT-4 due to the limited number of cases, as we were concerned that it might influence or bias the subsequent diagnostic results. GPT-3.5-Turbo achieves a top-1 recall of 0.332, a top-3 recall of 0.454, a top-10 recall of 0.581, and a median rank of 5.0, the second-best performance after GPT-4. The third-best performance is exhibited by GLM4, with a top-1 recall of 0.313, a top-3 recall of 0.425, a top-10 recall of 0.571, and a median rank of 8.0. The other two API-based models have top-1 recalls less than 0.26, with median ranks of 10.0 or greater. All the open-source models yield top-1 recalls less than 0.20, and all have median ranks greater than 10.0. Additionally, we did not present the results of Llama2-7B because its performance on lengthy Chinese EHR texts was too poor to produce usable output.

4.4 Task 4: Differential Diagnosis among Universal Rare Diseases↩︎

4.4.1 Metric↩︎

Task 4 employs the same metric as Task 3.

4.4.2 Results↩︎

Our extensive dataset, comprising 2,185 patients with 421 distinct rare diseases, yields the following notable findings. For differential diagnosis of universal rare diseases, GPT-4 achieves a top-1 recall of 0.323 under 0-shot settings (with top-3 and top-10 recalls being 0.454 and 0.589, respectively) and a median rank of 5.0. Interestingly, GPT-4’s performance slightly decreases under random 3-shot, while adopting the Chain of Thought (CoT) approach leads to a performance improvement with a median rank of 4.0. In contrast, the top-1 recalls for the other 4 API-based LLMs are around 0.2, with all their median ranks exceeding 10.0; the top-1 recalls for all 6 LLMs with fewer than 10 billion parameters are less than 0.12. Notably, HuatuoGPT2-7B outperforms models like Mistral-7B and Llama2-7B but falls short against API-based LLMs like GPT-4. Conversely, BioMistral-7B’s performance drops post-training on general biomedical data, indicating that crafting or refining LLMs for the rare disease sector could be a fruitful future endeavor. In conclusion, GPT-4 demonstrated promising differential diagnosis results in a dataset of 2,185 rare disease patients, encompassing 421 different rare diseases. For a comparison of GPT-4 with human physicians, see Section 5.2.

5 Analysis and Discussion↩︎

This section explains in detail how the knowledge graph-based dynamic few-shot prompt method enhances LLM performance in Task 4 (differential diagnosis among universal rare diseases). It also compares and analyzes the rare disease diagnostic capabilities of doctors from PUMCH and LLMs, using a high-quality subset of the PUMCH dataset.

5.1 Knowledge Integration Dynamic Few-shot↩︎

Figure 4: Performance of six LLMs in rare disease differential diagnosis under zero-shot, random 3-shot, and dynamic 3-shot prompts (using GPT-4 zero-shot as a baseline).

In our research, the IC value-based random walk algorithm we developed is employed to produce embeddings for Human Phenotype Ontology (HPO) phenotype nodes within our comprehensively integrated rare disease knowledge graph. These embeddings are subsequently utilized in dynamic few-shot settings. Our experimental evaluation, involving 2,185 rare disease patients and conducted on six LLMs, including GLM4 [46], [47], is benchmarked against the zero-shot performance of GPT-4. The results are illustrated in Figure 4, with key findings summarized below:

| LLM | Gemini Pro | | | | GLM4 | | | | GLM3-Turbo | | | | Mistral-7B | | | | Llama2-7B | | | | ChatGLM3-6B | | | |

| Prompt | hit@1 | hit@3 | hit@10 | MR | hit@1 | hit@3 | hit@10 | MR | hit@1 | hit@3 | hit@10 | MR | hit@1 | hit@3 | hit@10 | MR | hit@1 | hit@3 | hit@10 | MR | hit@1 | hit@3 | hit@10 | MR |

| zero-shot | 36.0 | 56.0 | 65.3 | 2.0 | 37.3 | 53.3 | 62.7 | 3.0 | 21.3 | 41.3 | 60.0 | 6.0 | 16.0 | 24.0 | 36.0 | >10 | 21.3 | 37.3 | 49.4 | >10 | 9.3 | 16.0 | 22.7 | >10 |

| random 3-shot | 37.3 | 53.3 | 73.3 | 3.0 | 40.0 | 52.0 | 65.3 | 2.0 | 25.3 | 41.3 | 54.7 | 7.0 | 9.3 | 18.7 | 33.3 | >10 | 16.0 | 25.3 | 38.7 | >10 | 9.3 | 13.3 | 17.3 | >10 |

| MedPrompt (3-shot) | 56.0 | 66.7 | 73.3 | 1.0 | 52.0 | 64.0 | 72.0 | 1.0 | 40.0 | 60.0 | 74.7 | 3.0 | 38.7 | 52.0 | 52.0 | 3.0 | 61.3 | 78.7 | 81.3 | 1.0 | 30.7 | 42.7 | 49.3 | >10 |

| Auto-CoT (3 clusters) | 40.0 | 61.3 | 76.0 | 2.0 | 36.0 | 50.7 | 66.7 | 3.0 | 36.0 | 42.7 | 54.7 | 6.0 | 13.3 | 30.7 | 38.7 | >10 | 20.0 | 30.7 | 40.0 | >10 | 9.3 | 12.0 | 24.0 | >10 |

| dynamic 3-shot (ours) | 62.7 | 74.7 | 82.7 | 1.0 | 62.7 | 70.7 | 76.0 | 1.0 | 72.0 | 77.3 | 84.0 | 1.0 | 38.7 | 52.0 | 64.0 | 3.0 | 66.7 | 73.3 | 80.0 | 1.0 | 34.7 | 41.3 | 48.0 | >10 |

A holistic analysis reveals that dynamic 3-shot settings significantly enhance the performance of the six LLMs compared to their zero-shot capabilities. On average, there is a notable 108% increase in top-1 recall and substantial improvements of 80% and 58% in top-3 and top-10 recalls, respectively.

With dynamic 3-shot, GLM4 slightly outperforms GPT-4’s 0-shot level in top-1 recall. In addition, models with fewer than 10 billion parameters, like Llama2-7b, reach performance on par with the 0-shot level of more advanced models, such as Gemini Pro and GLM4.

Our ablation study demonstrates an average enhancement of 23% in top-1 recall across the six LLMs under random 3-shot settings, with corresponding increases of 20% and 21% in top-3 and top-10 recalls, respectively. This highlights the effectiveness of our knowledge graph-based dynamic few-shot approach in boosting the capabilities of LLMs, particularly those far behind GPT-4, in the context of rare disease differential diagnosis.
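The example selection behind the dynamic few-shot setting can be sketched as follows. This is an illustrative assumption, not the exact procedure of this study: each patient is represented by the unweighted mean of their phenotype embeddings, and the k most similar cases by cosine similarity are used as few-shot examples. The function names and the case-bank structure are hypothetical.

```python
import math


def mean_embedding(phenotypes, embeddings):
    """Average the embeddings of a patient's known phenotypes (assumed pooling)."""
    vectors = [embeddings[p] for p in phenotypes if p in embeddings]
    if not vectors:
        raise ValueError("patient has no phenotype with a known embedding")
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def select_examples(query_phenotypes, case_bank, embeddings, k=3):
    """Return the k cases in `case_bank` most similar to the query patient."""
    query = mean_embedding(query_phenotypes, embeddings)
    scored = [
        (cosine(query, mean_embedding(case["phenotypes"], embeddings)), case)
        for case in case_bank
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [case for _, case in scored[:k]]
```

In contrast, the random 3-shot baseline would draw the three examples uniformly from the case bank, ignoring the query patient's phenotypes.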

We also compared our approach against MedPrompt [34] and Auto-CoT [60] using 75 high-quality patient cases from PUMCH (employed in our Human-versus-LLMs experiment below) across 6 LLMs. Detailed results are shown in Table 5. Our dynamic few-shot approach shows superior or comparable performance, notably exceeding GPT-4 and specialist physicians in some cases. However, Auto-CoT underperforms with smaller LLMs like Llama2-7b, highlighting the importance of rationale quality in diagnostics.

5.2 Human versus LLMs experiment on a subset of Task 4↩︎

5.2.1 Selection of Test case and Clinical Specialist↩︎

We selected a subset from the PUMCH dataset to compare the diagnostic performance of physicians with various LLMs. The selection criteria included a wide range of diseases across human organ systems, and multiple cases were included for each disease to assess the difficulty level in diagnosis. Ultimately, this test set comprised 75 cases spanning 16 diseases across 5 hospital departments, including Cardiology, Hematology, Nephrology, Neurology, and Pediatrics. To ensure the integrity of the diagnostic process, any information within the EHRs that could potentially provide clues about the underlying diseases was removed.

A total of 50 physicians were employed from 23 Class-A tertiary hospitals in China. Each of the 5 departments had ten physicians. To minimize diagnostic result errors, we assigned each case to be diagnosed by 4 physicians. In the diagnostic process, clinical specialists rely initially on their knowledge and experience to diagnose cases. For situations where the diagnosis is uncertain, clinical specialists can use external assistance such as books and web tools.

5.2.2 Results↩︎

We initially investigated two input approaches in our comparative experiments. The first is a feature-based input, in which the patient’s phenotype information is provided to the LLM to obtain its diagnosis. The second enters the patient’s EHR text with all personally identifiable information removed. We compared the diagnostic outcomes of the two input forms on GPT-4 using the test set of 75 PUMCH cases described above. Specialist physicians, by contrast, rely solely on EHR text for diagnosis. The results are shown in Table 6. When utilizing phenotype input, GPT-4 achieves a top-1 recall of 0.520, a top-3 recall of 0.747, a top-10 recall of 0.827, and a median rank of 1.0. Conversely, EHR text input with GPT-4 results in a top-1 recall of 0.453, a top-3 recall of 0.693, a top-10 recall of 0.800, and a median rank of 2.0. Using extracted phenotypes significantly reduces the number of input tokens for LLMs and marginally outperforms the direct use of medical record text in differential diagnosis. This enhancement stems from the extracted phenotypes summarizing crucial information about the patient’s symptoms while eliminating irrelevant details, rendering this input format more economical and efficient for LLMs. Physicians’ diagnostic performance improves when aided by external assistance: the top-1 recall increases from 0.407 to 0.447, the top-3 recall rises from 0.468 to 0.511, and the top-10 recall increases from 0.481 to 0.524.

| | hit@1(%) | hit@3(%) | hit@10(%) | MR (\(\downarrow\)) |

|---|---|---|---|---|

| GPT-4 (Phenotypes) | 52.0 | 74.7 | 82.7 | 1.0 |

| GPT-4 (EHR text) | 45.3 | 69.3 | 80.0 | 2.0 |

| Physicians w/o assistance | 40.7 | 46.8 | 48.1 | - |

| Physicians w/ assistance | 44.7 | 51.1 | 52.4 | - |

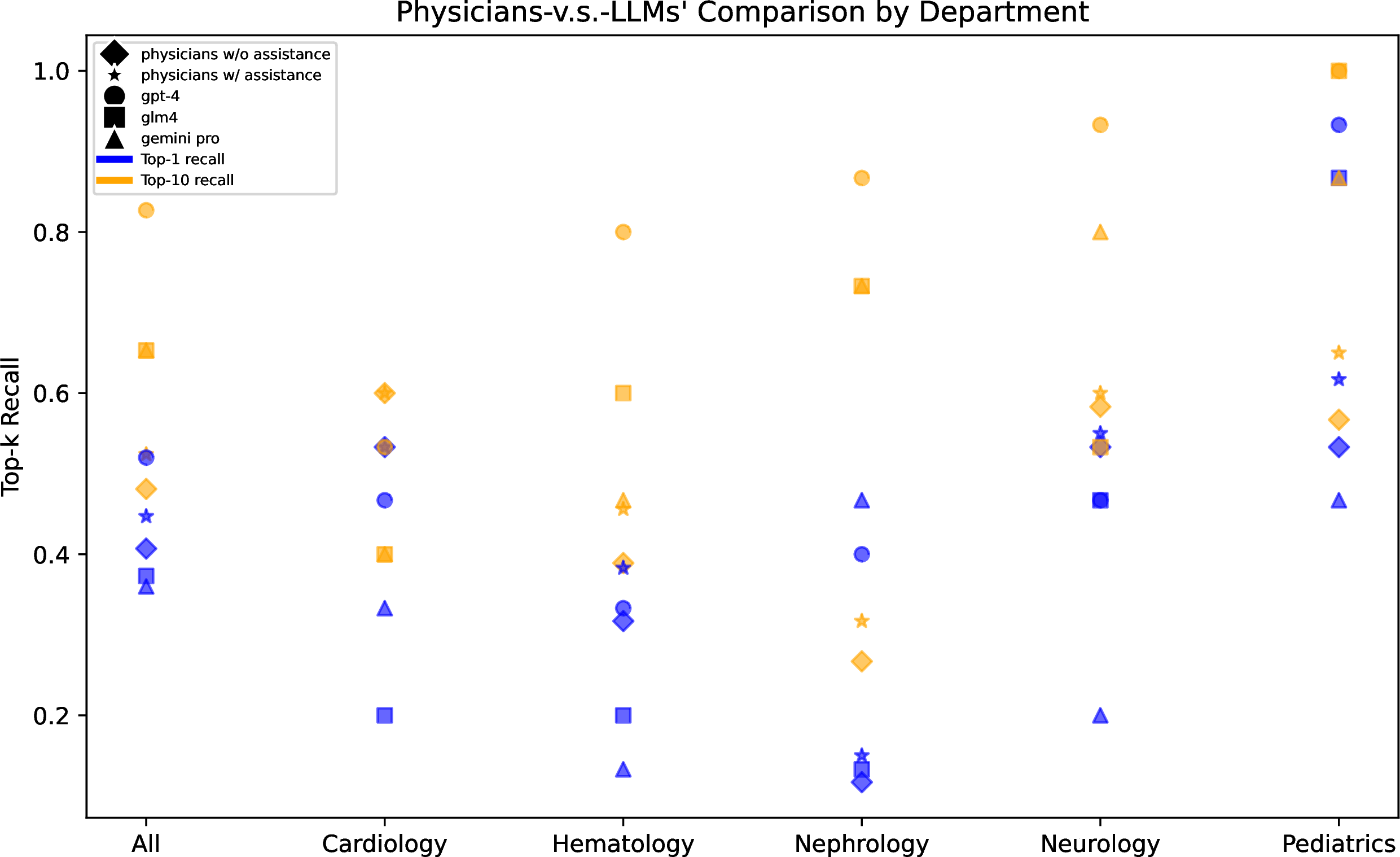

Figure 5: Comparison of top-1 and top-10 recalls in rare disease differential diagnosis across 5 departments between specialist physicians with/without assistance and three LLMs.

We also provide a more detailed presentation of GPT-4’s performance across diseases related to different medical departments. As shown in Figure 5, GPT-4 achieved the best diagnostic results across all 5 departments, surpassing the level of specialist physicians. In contrast, the diagnostic performance of the other two LLMs was inferior to that of specialist physicians. Moreover, there were significant differences in diagnostic results among different departments. For example, all methods achieved high recall rates in pediatrics, possibly due to the comprehensive information available in pediatric cases. Conversely, all methods performed poorly on cases from the Department of Cardiology, which we speculate is associated with the inherent features of the diseases themselves. Heart diseases often exhibit similarities and overlaps in symptoms and signs, such as chest pain, chest tightness, palpitations, and edema. The differential diagnosis primarily relies on the results of objective auxiliary examinations, including laboratory tests, imaging examinations, and electrophysiological assessments. For instance, in the case of cardiomyopathy, the imaging patterns of echocardiography and cardiac magnetic resonance imaging (MRI) play a crucial role in the diagnostic process. This suggests a future direction: using large multimodal models (LMMs) to incorporate more comprehensive clinical information for diagnosis.

5.3 Case Study↩︎

In the evaluation of phenotype extraction using GPT-4, we observe both positive and negative cases that highlight its capabilities and limitations.

5.3.1 Positive cases↩︎

High Precision in Entity Extraction: GPT-4 adeptly avoids extracting text unrelated to the disease, such as hospital or medication details, demonstrating its discriminative capabilities.

Recognition of Negated Phenotypes: GPT-4 accurately identifies instances where a phenotype is negated, such as correctly omitting "fever" from statements like "no fever." This indicates that GPT-4’s entity extraction transcends simple keyword matching, reflecting a sophisticated comprehension of medical record texts.

5.3.2 Negative Cases↩︎

Challenges in Identifying Entities with Descriptions: GPT-4 occasionally struggles to capture entities that include descriptive details comprehensively. For example, it reduces "Shortness of breath after walking up three flights of stairs" to merely "shortness of breath," omitting the crucial context that specifies the phenotype.

Inconsistencies in Identifying Laboratory Test Results: GPT-4 exhibits limited accuracy in detecting abnormal laboratory test results. For instance, it failed to recognize several abnormal lab results from a patient’s medical record, including "\(K\;3.26mmol/L\downarrow\)," resulting in inaccurate extraction of related phenotypes.

6 Conclusion↩︎

This paper delivers RareBench, an innovative benchmark framework aimed at systematically assessing Large Language Models’ (LLMs) capability in rare disease diagnosis. RareBench combines public datasets with the comprehensive datasets from PUMCH to create the most extensive collection of rare disease patient data available. Leveraging an Information-Content-based random walk algorithm and a rare disease knowledge graph, we introduce a dynamic few-shot prompt, significantly enhancing LLMs’ diagnostic capabilities. Notably, our comparative analysis with specialist physicians demonstrates GPT-4’s superior accuracy in some specific rare disease diagnoses. We expect RareBench to catalyze further advancements and applications of LLMs in tackling the complexities of clinical diagnosis, especially for rare diseases.

7 Limitations and Potential Biases↩︎

Transparency and reliability in decision-making and diagnosis are paramount in medical practice. When utilizing large language models (LLMs) in healthcare, several considerations arise, particularly regarding interpretability, reliability, and the risk of perpetuating existing biases within the models. Firstly, the inner workings of LLMs are often highly complex, non-transparent, and lacking interpretability, which may cause clinicians and patients to hesitate in trusting the model’s decisions. Secondly, AI applications in healthcare must demonstrate high reliability and accuracy. However, due to the limitations of training data, most AI models, including LLMs, may exhibit significant prediction errors and biases, including in the diagnosis of rare diseases. Additionally, AI models must demonstrate that they generalize to new environments; otherwise, they may perform suboptimally in actual clinical settings.

LLMs may unintentionally adopt biases in their training datasets, subsequently influencing predictions. These biases are frequently derived from imbalances within medical data. This issue is particularly acute in the context of rare diseases, where data imbalances are more pronounced due to the scarcity and uneven distribution of cases. Therefore, ongoing monitoring and refinement of the model’s training processes are essential to continually identify and correct biases that may emerge as the model evolves.

8 Ethical Consideration↩︎

Deploying LLMs in clinical settings involves several ethical considerations, including the safety, privacy, and equity of patients. Patient data is extremely sensitive. Therefore, using LLMs in such settings necessitates stringent adherence to health information privacy regulations. Although RareBench demonstrates some promising results for LLMs in diagnosing certain rare diseases, it is essential to emphasize that LLMs should only serve as supplementary tools currently due to issues such as hallucinations. For specific diagnostic decisions, please fully heed the guidance of professional medical practitioners.

This study was approved by the Ethics Committees at Peking Union Medical College Hospital, Peking Union Medical College, and the Chinese Academy of Medical Sciences (approval number S-K2051). It is worth noting that all cases were monitored by doctors from Peking Union Medical College Hospital prior to uploading text information, ensuring the absence of any potential personal information leaks. Xuanzhong Chen, Xiaohao Mao, Qihan Guo, and Ting Chen affirm that the manuscript is an honest, accurate, and transparent account of the study being reported; that no important aspects of the study have been omitted; and that any discrepancies from the study as originally planned (and, if relevant, registered) have been explained.

This work is supported by the National Key R&D Program of China (2021YFF1201303, 2022YFC2703103), Guoqiang Institute of Tsinghua University, and Beijing National Research Center for Information Science and Technology (BNRist). The funders had no roles in study design, data collection and analysis, the decision to publish, and manuscript preparation.

9 Appendix↩︎

9.1 Dataset Details↩︎

Four publicly available datasets are used in this study: MME [61], LIRICAL [18], HMS [62], and RAMEDIS [63]. These datasets are sourced from published articles or open-access datasets. Quality control measures were implemented to filter out cases with insufficient information. Following screening procedures, the MME dataset (footnote 1) comprises 40 cases across 17 diseases, the LIRICAL dataset (footnote 2) encompasses 370 cases spanning 252 diseases, the HMS dataset encompasses 88 cases covering 39 diseases, and the RAMEDIS dataset consists of 624 cases spanning 63 diseases.

9.2 Framework Settings↩︎

The implementation details of the models listed in Table 2 are as follows: Both GPT-4 and GPT-3.5 are accessed through OpenAI’s API 3. The models used are "gpt-4-1106-preview" and "gpt-3.5-turbo-1106" respectively. For the two models, the seed parameter is set to 42, while all other parameters are left at their default settings. Both GLM4 and GLM3 are accessed through Zhipu AI’s API 4. The models used are "glm-4" and "glm-3-turbo" respectively. In the model parameter settings, temperature is set to 0.15 and top_p to 0.7; all other parameters are maintained at their default values. Gemini is accessed through DeepMind’s API 5. The model used is "gemini-pro", and the parameters use default settings.
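The settings above can be collected into a single configuration table, as in the following sketch. It only builds a generic chat-style request dictionary and does not call any vendor SDK; the `build_request` helper and the payload layout are hypothetical, and each provider's client may expect a different structure.

```python
# Per-model settings as reported in the text; unlisted parameters stay at defaults.
MODEL_SETTINGS = {
    "gpt-4-1106-preview": {"seed": 42},
    "gpt-3.5-turbo-1106": {"seed": 42},
    "glm-4": {"temperature": 0.15, "top_p": 0.7},
    "glm-3-turbo": {"temperature": 0.15, "top_p": 0.7},
    "gemini-pro": {},  # default settings
}


def build_request(model, system_prompt, user_prompt):
    """Assemble a generic chat-style payload (hypothetical layout)."""
    if model not in MODEL_SETTINGS:
        raise KeyError(f"no settings recorded for model {model!r}")
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
        **MODEL_SETTINGS[model],
    }
```

Keeping the settings in one table makes it easy to check that each model is queried with the parameters stated here.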

9.3 Prompt Examples↩︎

9.3.1 Task 1: Phenotype Extraction from Electronic Health Records↩︎

Sub-task 1: Phenotype Extraction

[Task] Extract disease phenotypes from the following medical record text.

[Requirement]

Output format: One phenotype per line, the format is [index]. [English name], such as "1. Cough", "2. Pain".

Answer according to the standard English terminology in the HPO database (https://hpo.jax.org/app/). Do not use colloquialisms, and try to be as concise as possible. It is not a restatement of the original words, but a refinement of the phenotype.

Extract all phenotypes appearing in the text without omitting any. output as much as possible.

For symptoms that are denied in the text, such as "no chest pain," and "no cough," do not extract the corresponding phenotypes.

[Medical Record Text]

The patient developed shortness of breath accompanied by chest tightness and facial edema about 3 months ago after physical exertion, with the eyelid edema being the most severe. The shortness of breath was most severe after physical activity and improved after rest. He was treated at a local hospital, and on December 1, 2013, the cardiac function showed by color echocardiography: tricuspid valve disease. Then he went to our hospital. Ultrasound showed myocardial disease, right atrium and right ventricle enlargement, moderate to severe tricuspid valve insufficiency, severe reduction in left and right ventricular systolic function, aortic valve degeneration, and a small amount of pericardial effusion. The patient was admitted to our hospital for outpatient consideration of tricuspid valve disease and is now admitted to our department for further diagnosis and treatment. Since the onset of the disease, the patient’s energy, diet, and sleep have been acceptable, and his bowel movements have been normal. There is no significant change in weight from before. No dry mouth, dry eyes, mouth ulcers, joint swelling and pain, rash, etc.

Sub-task 2: General Entity Extraction

[Task] For the medical record text provided below, mark the text that represents the disease entity.

[Requirement]

Output format: One disease entity per line. The format is [Index]. [Original text]. For example, "1. Fever", "2. Body temperature 39℃".

Output in the order in which it appears in the text; do not omit anything, and output as much as possible.

For symptoms that are denied in the text, such as "no chest pain," and "no cough," do not extract the corresponding entities.

[Medical Record Text]

\(\cdots\)

Sub-task 3: General Entity Standardization

[Task] Standardize the following medical entities to Human Phenotype Ontology phenotypes.

[Requirement]

Answer according to the standard English terminology in the HPO database (https://hpo.jax.org/app/). Do not be colloquial, and try to be as formal, standardized, and concise as possible.

Output format: Each line contains one medical entity and its corresponding HPO phenotype. The format is [index]. [Entity name]: [English name of phenotype]. For example, "1. Body temperature 39℃: Fever", "2. Excessive urine output: Polyuria".

[Entity list]

Chest tightness

ST segment elevation

Activity tolerance gradually decreases

ventricular fibrillation

Aspen syndrome

sore throat

J-point elevation greater than 0.2mv and saddle-like elevation

Shortness of breath

pneumonia

cough
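The output formats requested in the Task 1 prompts ("1. Cough" for extraction and "1. Body temperature 39℃: Fever" for standardization) lend themselves to simple line-based parsing. The sketch below is one possible parser, not the post-processing used in this study; the function names are hypothetical, and it assumes the model follows the requested format, silently skipping any line that does not.

```python
import re

# "[index]. [name]" as requested in sub-tasks 1 and 2.
ITEM_LINE = re.compile(r"^\s*(\d+)\.\s*(.+?)\s*$")
# "[index]. [entity]: [phenotype]" as requested in sub-task 3.
MAPPING_LINE = re.compile(r"^\s*(\d+)\.\s*(.+?)\s*:\s*(.+?)\s*$")


def parse_items(text):
    """Return the names from lines shaped like '1. Cough', in order."""
    items = []
    for line in text.splitlines():
        match = ITEM_LINE.match(line)
        if match:
            items.append(match.group(2))
    return items


def parse_mappings(text):
    """Return {entity: phenotype} from lines shaped like '1. Entity: Phenotype'.

    The split happens at the first colon, so entities containing a colon
    would be truncated (a known limitation of this sketch).
    """
    mappings = {}
    for line in text.splitlines():
        match = MAPPING_LINE.match(line)
        if match:
            mappings[match.group(2)] = match.group(3)
    return mappings
```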

9.3.2 Task 2: Screening for Specific Rare Diseases↩︎

Zero-shot prompt As an expert in the field of rare diseases, you are tasked with diagnosing a real clinical case. Please carefully review the patient’s basic medical history, specialized examinations, and auxiliary tests to determine whether the patient may be suffering from [SCREENING DISEASE].

CoT prompt [Zero-shot prompt], Let us think step by step.

9.3.3 Task 3: Comparison Analysis of Common and Rare Diseases↩︎

Zero-shot prompt Please, as a rare disease specialist, answer the following questions. [EHRs] is the patient’s admitted record, including chief complaint, present medical history, etc. [77 CANDIDATE DISEASES] are the types of diseases that the patient may suffer from. The top 10 diagnosed diseases are selected from highest to lowest probability.

CoT prompt [Zero-shot prompt], Let us think step by step.

9.3.4 Task 4: Differential Diagnosis among Universal Rare Diseases↩︎

Here, we present the specific configurations for zero-shot, Chain of Thought (CoT), and (dynamic) few-shot settings.

System prompt You are a specialist in the field of rare diseases. You will be provided and asked about a complicated clinical case; read it carefully and then provide a diverse and comprehensive differential diagnosis.

Zero-shot prompt This rare disease patient suffers from symptoms: [PATIENT_PHENOTYPE]. Enumerate the top 10 most likely diagnoses. Be precise, listing one diagnosis per line, and try to cover many unique possibilities (at least 10). The top 10 diagnoses are:

CoT prompt [Zero-shot prompt]. Let us think step by step.

(Dynamic) Few-shot prompt Let me give you [K] examples first: The [i] patient has a rare disease [EXAMPLE_DIAGNOSIS], and his/her [PHENOTYPE or EHR] is as follows: [EXAMPLE_PHENOTYPE or EXAMPLE_EHR]. Next is the patient case you need to diagnose: [Zero-shot prompt].
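The templates above can be assembled programmatically, as in the following sketch. The prompt wording is copied from the templates; the helper names, the comma-separated rendering of phenotypes, the use of a plain integer for the [i] placeholder, and the single-space separator before the CoT suffix are assumptions made for illustration.

```python
SYSTEM_PROMPT = (
    "You are a specialist in the field of rare diseases. You will be provided "
    "and asked about a complicated clinical case; read it carefully and then "
    "provide a diverse and comprehensive differential diagnosis."
)

ZERO_SHOT_TEMPLATE = (
    "This rare disease patient suffers from symptoms: {phenotypes}. "
    "Enumerate the top 10 most likely diagnoses. Be precise, listing one "
    "diagnosis per line, and try to cover many unique possibilities "
    "(at least 10). The top 10 diagnoses are:"
)


def zero_shot_prompt(phenotypes):
    """Fill [PATIENT_PHENOTYPE] with a comma-separated list (assumed format)."""
    return ZERO_SHOT_TEMPLATE.format(phenotypes=", ".join(phenotypes))


def cot_prompt(phenotypes):
    """Append the Chain of Thought trigger to the zero-shot prompt."""
    return zero_shot_prompt(phenotypes) + " Let us think step by step."


def few_shot_prompt(phenotypes, examples):
    """Build the (dynamic) few-shot prompt.

    `examples` is a list of (diagnosis, phenotype_list) pairs, for instance
    the cases chosen by a similarity-based or random selection step.
    """
    parts = [f"Let me give you {len(examples)} examples first:"]
    for index, (diagnosis, example_phenotypes) in enumerate(examples, start=1):
        parts.append(
            f"The {index} patient has a rare disease {diagnosis}, and his/her "
            f"phenotype is as follows: {', '.join(example_phenotypes)}."
        )
    parts.append(
        "Next is the patient case you need to diagnose: "
        + zero_shot_prompt(phenotypes)
    )
    return " ".join(parts)
```

The only difference between the random and dynamic few-shot settings in this sketch is how the `examples` list is chosen; the prompt layout is identical.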

9.4 Knowledge Integration Dynamic Few-shot↩︎

9.4.1 Knowledge Bases Details↩︎

The Human Phenotype Ontology (HPO) 6 provides a standardized vocabulary for phenotypic abnormalities encountered in human disease. These phenotype terms form a directed acyclic graph (DAG) and are connected by directed "IS_A" edges (denoting subclass relationships). The files used in this study are "hp.obo" and "phenotype.hpoa", with the version being hp/releases/2023-06-06.

Orphanet 7, funded by the French Ministry of Health, is a non-profit online resource and information platform focusing on rare diseases. It offers information on over 6,000 rare diseases, written by medical experts and researchers and subjected to rigorous quality review. This information includes etiology, symptoms, diagnostic methods, treatment options, and prognosis. Our work primarily utilizes the annotation information on rare diseases and genes from this platform.

Online Mendelian Inheritance in Man (OMIM) 8 is a comprehensive database of genes and genetic diseases, collecting and organizing extensive information about human genetic disorders. It includes descriptions of over 20,000 genetic diseases, covering genetic etiology, clinical manifestation, inheritance patterns, molecular mechanisms, and related literature.

The National Rare Disease Registry System of China (NRDRS) 9, overseen by Peking Union Medical College Hospital, is a national-level online registry platform for rare diseases, aimed at establishing unified rare disease registration standards and norms. The first list of the Compendium of China’s Rare Disease (CCRD) compiles detailed information on 144 rare diseases. The annotations of relationships between diseases and phenotypes are manually extracted. The version used in our study is 2019-11.

9.4.2 Comparison Analysis of Node2vec and IC value-based random walk↩︎

In our integrated rare disease knowledge graph, we implement an IC value-based random walk algorithm to obtain embeddings for phenotype and disease nodes within the graph. While Node2Vec utilizes two parameters, \(p\) and \(q\), to control the walking probability, we dynamically adjust this based on the IC values of different phenotypes. The settings for other parameters are as follows:

'embedding_dim': 256,

'walk_length': 45,

'context_size': 35,

'walks_per_node': 40,

'num_negative_samples': 1,

'sparse': True,

'loader_batch_size': 256,

'loader_shuffle': True,

'loader_num_workers': 4,

'learning_rate': 0.01,

'epoch_nums': 36
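To make the idea of an IC-guided walk concrete, the sketch below shows one possible form. It is an assumption-laden illustration, not the algorithm of this study: IC is taken as the negative log of the fraction of diseases annotated with a phenotype (a common definition), and the next node is sampled with probability proportional to its IC, so that specific phenotypes are visited more often than ubiquitous ones. Nodes without an IC value (for example, disease nodes) receive a small uniform weight. Apart from `walk_length`, all names are hypothetical.

```python
import math
import random


def information_content(annotations):
    """Compute IC per phenotype from {disease: set_of_phenotypes}.

    Assumed definition: IC(t) = -log(diseases annotated with t / all diseases).
    """
    total = len(annotations)
    counts = {}
    for phenotypes in annotations.values():
        for phenotype in phenotypes:
            counts[phenotype] = counts.get(phenotype, 0) + 1
    return {p: -math.log(c / total) for p, c in counts.items()}


def ic_random_walk(graph, ic, start, walk_length, rng):
    """Walk `graph` ({node: [neighbors]}), favoring high-IC neighbors.

    Transition weights proportional to IC are an illustrative assumption;
    a small epsilon keeps zero-IC and non-phenotype nodes reachable.
    """
    walk = [start]
    while len(walk) < walk_length:
        neighbors = graph.get(walk[-1], [])
        if not neighbors:
            break
        weights = [ic.get(node, 0.0) + 1e-6 for node in neighbors]
        walk.append(rng.choices(neighbors, weights=weights, k=1)[0])
    return walk
```

Walks generated this way would then feed a skip-gram style embedding model configured with parameters such as those listed above.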

References↩︎

https://github.com/ga4gh/mme-apis/tree/master/testing↩︎

https://zenodo.org/record/3905420↩︎

https://platform.openai.com/docs/introduction↩︎

https://open.bigmodel.cn/↩︎

https://deepmind.google/technologies/gemini/#introduction↩︎

https://hpo.jax.org/app/↩︎

https://www.orpha.net/consor/cgi-bin/index.php↩︎

https://www.omim.org/↩︎

https://www.nrdrs.org.cn/xhrareweb/homeIndex↩︎