DYMAG: Rethinking Message Passing Using Dynamical-systems-based Waveforms

January 01, 1970

Abstract

We present DYMAG, a graph neural network based on a novel form of message aggregation. Standard message-passing neural networks, which often aggregate local neighbors via mean-aggregation, can be regarded as convolving with a simple rectangular waveform which is non-zero only on 1-hop neighbors of every vertex. Here, we go beyond such local averaging. We will convolve the node features with more sophisticated waveforms generated using dynamics such as the heat equation, wave equation, and the Sprott model (an example of chaotic dynamics). Furthermore, we use snapshots of these dynamics at different time points to create waveforms at many effective scales. Theoretically, we show that these dynamic waveforms can capture salient information about the graph including connected components, connectivity, and cycle structures even with no features. Empirically, we test DYMAG on both real and synthetic benchmarks to establish that DYMAG outperforms baseline models on recovery of graph persistence, generating parameters of random graphs, as well as property prediction for proteins, molecules and materials. Our code is available at https://github.com/KrishnaswamyLab/DYMAG.

1 Introduction↩︎

Message passing graph neural networks (GNNs) rely on aggregating signals via local averaging, which can be interpreted as convolving the node features with a simple, rectangular waveform that is non-zero only within one-hop neighborhoods of each vertex. It is known that this type of message-passing tends to suffer from over-smoothing if too many iterations are applied and from under-reaching if too few are applied [1]–[3]. One possible solution is to use multiscale message passing [4]. Another approach, [5]–[13] more directly related to our work, is to use graph wavelets [14], [15]. These wavelets can be viewed as convolving the input features with multiscale, oscillatory waveforms, in contrast to the simple, rectangular, one-hop waveforms used in message passing.

Here, we introduce DYMAG which uses dynamics on the graph to generate waveforms which we will convolve with the node features. We will use these waveforms as a form of multiscale message aggregation, which we show can effectively extract graph geometric and topological information and outperform baseline methods on graph-level tasks that rely on such graph properties.

We evaluate DYMAG on a broad spectrum of graph learning benchmarks spanning synthetic, citation, molecular, and materials science datasets. To assess its ability to recover generative and topological structure, we first test on synthetic graphs,

including Erdős-Rényi and stochastic block models, where the task involves inferring graph parameters and persistent features. We then evaluate on citation networks, including homophilic datasets - Cora [16], Citeseer [17],

and PubMed [18] - and heterophilic datasets - Texas, Wisconsin, and Cornell [19]. We further demonstrate DYMAG’s scalability on the largest dataset in the Open Graph Benchmark, ogbn-papers100M [20], demonstrating that it can recover topological properties of massive graphs. For molecular property prediction, we consider both protein graphs PROTEINS, ENZYMES, and MUTAG [21] and small-molecule graphs (DrugBank [22], Drug Therapeutics Program AIDS Antiviral Screen Data [23]). Finally, we test on the Materials Project

dataset [24] to predict materials properties such as band gaps. Across these varied domains, DYMAG consistently outperforms standard GNNs

and approaches the performance of pretrained, domain-specific models. Our main contributions are as follows:

Figure 1:  .

.

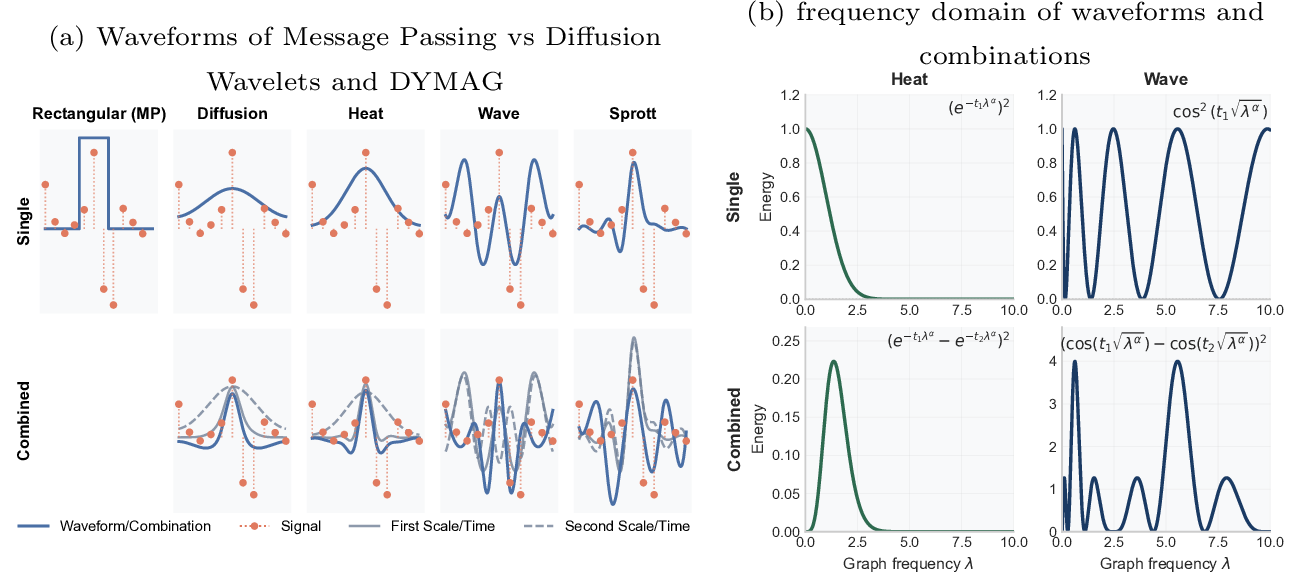

Figure 2: Visualization of Waveforms (a) Waveforms visualized on a line graph with a signal (feature), where DYMAG provides more diverse waveforms than standard message passing; (b) waveforms and combinations provide low-pass and bandpass filters in the frequency domain.

2 Related work and Background↩︎

Previous work has either analyzed dynamics on graphs [25]–[31] or aimed to use dynamics as a framework for understanding GNNs. In the latter case [32]–[34] and [35] viewed message passing as a time-discretized diffusion PDE and used this insight to design novel GNNs. Unlike those methods, we view PDE solutions as waveforms and use convolution against these waveforms to define our aggregation rule. Additionally, existing work primarily focus on parabolic equations while we also consider hyperbolic and chaotic dynamics. We provide a further discussion of related work in . Now, we provide backgrounds on graph signal processing and dynamics. See for details.

2.1 Graph Signal Processing↩︎

In Graph Signal Processing, a node feature vector \(\mathbf{x}\in\mathbb{R}^n\) is viewed as a signal on the vertices of a weighted, undirected graph \(G=(V,E,w)\), \(|V|=n\) [36], [37]. Let \(L=U\Lambda U^\top\) be its Laplacian with eigendecomposition \(L\boldsymbol{\nu}_k=\lambda_k\boldsymbol{\nu}_k\), \(0=\lambda_1\le\cdots\le\lambda_n\). The graph Fourier transform is defined by projecting the singals onto these eigenvectors \(\widehat{\mathbf{x}} = U^\top\mathbf{x}.\) The projection onto the first several eigenvectors (small \(\lambda_k\)) captures the smooth portion of the signal and the projection onto the later eigenvectors (large \(\lambda_k\)) capture oscillatory ones. Classical message‑passing GNNs act as low‑pass filters [38], [39], effectively only keeping the projection onto the first several eigenvectors; DYMAG instead aggregates with waveforms spanning low, mid, and high bands. (Details in .)

2.2 Heat and Wave Dynamics on a Graph↩︎

For \(\alpha > 0\), we define the \(\alpha\)-fractional graph Laplacian by \(L^\alpha := U \Lambda ^\alpha U^\mathsf{T}\). For each \(i\in V\), we let \(\delta_i\) denote the Dirac signal at \(i\) given by: for \(\forall k \in V\), \(\delta_i(k)=1\) if \(i=k\), \(\delta_i(k)=0\) otherwise. We define \[\label{eqn:heat95eq95definition} \smash{-L^\alpha u^{(i)}_H(v,t) = \partial_t u^{(i)}_H(v,t),\quad u^{(i)}_H(v,0)=\delta_i(v), \quad \text{(Heat)}}\tag{1}\] \[\begin{align} \smash{-L^\alpha u^{(i)}_W(v,t) = \partial^2_t u^{(i)}_W(v,t),\quad u^{(i)}_W(v,0)=\delta_i(v), \quad \partial_t u^{(i)}_W(v,0)=c\delta_i(v),\quad \text{(Wave)}}\label{eqn:wave95eq95def} \end{align}\tag{2}\] as the heat and wave equations with a initial value \(\delta_i\) (and initial veclocity \(c\delta_i\) for wave). On a connected graph \(G\), they admit closed‐form solutions:

\[\begin{align} \tag{3} u^{(i)}_H(v,t) &= \sum_{k=1}^n e^{-t\lambda_k^\alpha}\langle \boldsymbol{\nu}_k, \delta_i \rangle \boldsymbol{\nu}_k(v), \quad\text{and}\\ u^{(i)}_W(v,t) &=\sum_{k=1}^n \cos(\sqrt{\lambda_k^\alpha} t)\langle \boldsymbol{\nu}_k,\delta_i \rangle \boldsymbol{\nu}_k(v) + t\langle \boldsymbol{\nu}_1,c\delta_i \rangle\boldsymbol{\nu}_1(v) +\sum_{k=2}^n\frac{1}{\sqrt{\lambda_k^\alpha}}\sin(\sqrt{\lambda_k^\alpha} t)\langle \boldsymbol{\nu}_k,c\delta_i\rangle\boldsymbol{\nu}_k(v). \tag{4} \end{align}\]

These expressions extend to disconnected graphs and, by Remark 1 of [40], are invariant to the choice of Laplacian eigenbasis. (See for details).

2.3 Chaotic Dynamics on a Graph↩︎

Chaotic dynamics, describing systems that have aperiodic behavior and sensitivity to initial conditions [41], can be modeled by the Sprott dynamics [42]:

\[\smash[t]{\frac{d}{dt} u^{(i)}_S(v_k, t)= -b \cdot u^{(i)}_S(v_k, t)+ \tanh( \sum_{v_j \in \mathcal{N}(v_k)} c_{k,j} u^{(i)}(v_j, t)),\quad u_S(\cdot,0)=\delta_i,} \label{eqn:sprott95dynamics}\tag{5}\]

Solutions remain bounded for \(b>0\). For \(b=0.25\), fully connected graphs with generic couplings or sparse graphs exhibit positive Lyapunov exponents (chaos). [42], [43]. (Full details in .)

3 Methods↩︎

DYMAG is graph neural network consisting of two main parts.

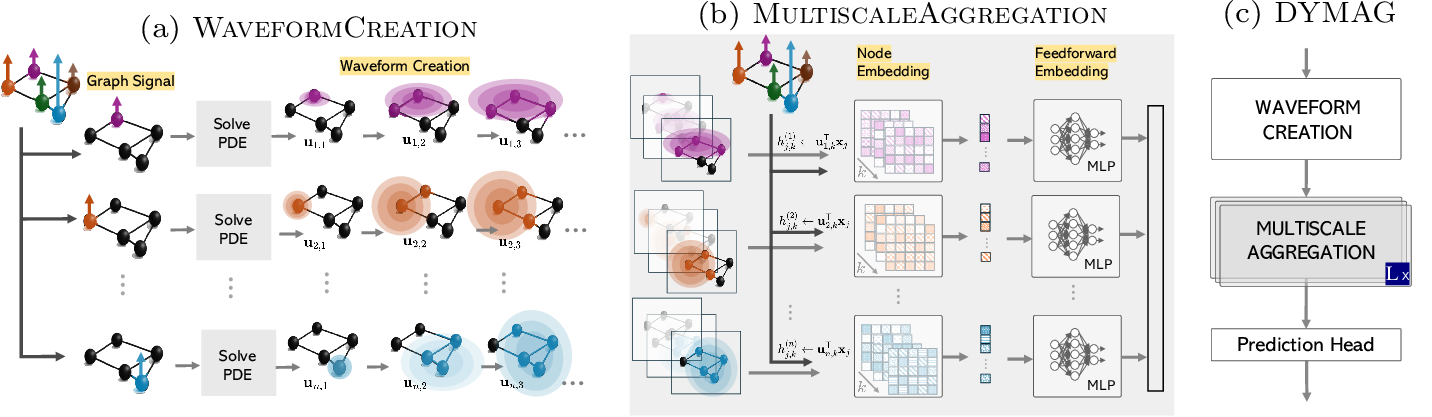

Waveform Bank Creation: A diverse bank of multi-scale waveforms is constructed by solving the PDEs considered in Section 2. These waveforms define a set of basis functions that encode diverse patterns across spatial and temporal scales. (See .)

Multi-scale Aggregations: At each layer \(1\leq\ell\leq L\), node representations \(X^{(\ell-1)}\) are convolved with the waveform bank. The result is then passed through an MLP to produce an updated representation \(X^{(\ell)}\). This step replaces standard message passing mechanisms by aggregation via sophisticated, multiscale waveforms. (See .)

Figure 3: Visual illustration of DYMAG (a) Waveform bank creation solving PDEs. (b) Multiscale Aggregation by taking inner product with the waveforms. (c) DYMAG consists of stacked layers and a prediction head.

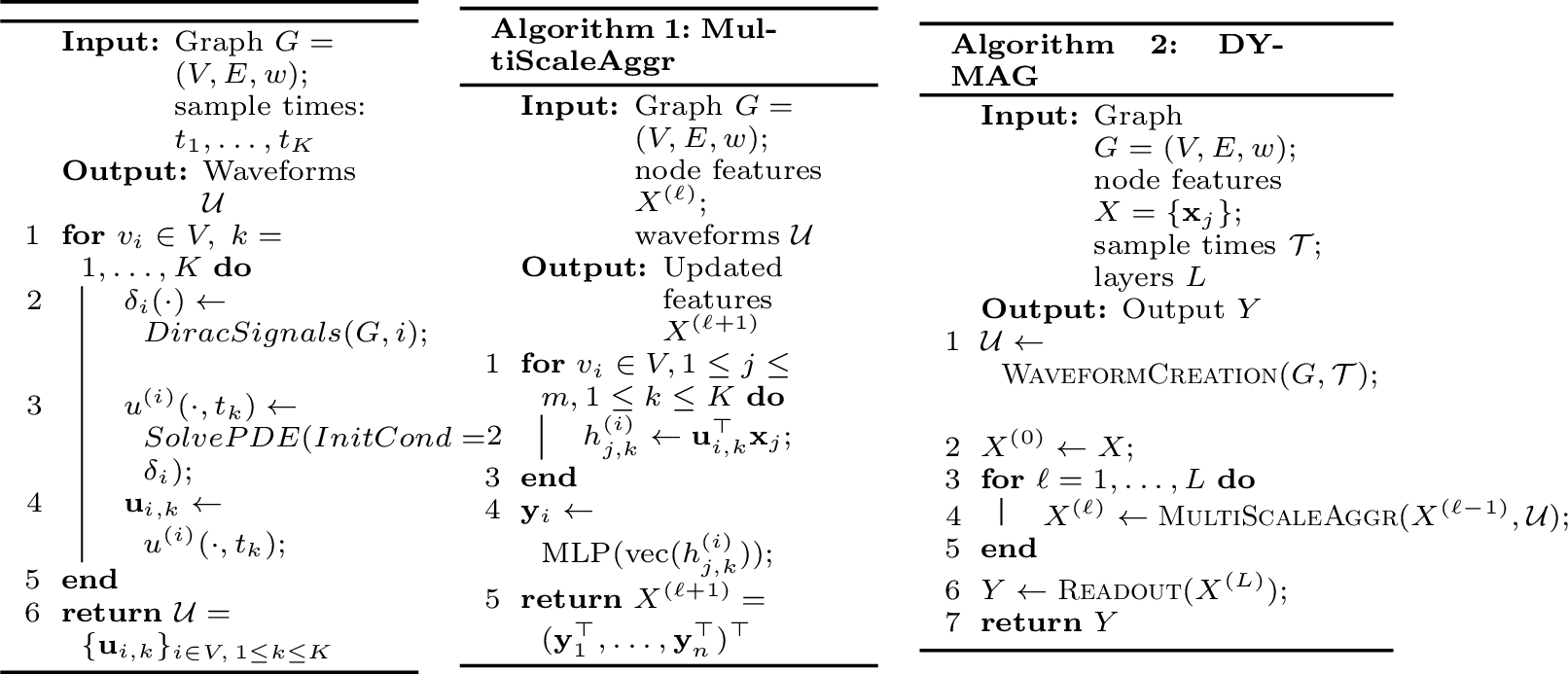

Figure 4: WaveformCreation

3.1 Waveform Bank Creation Using PDEs↩︎

Let \(u^{(i)}(v,t)\) denote the solution to the chosen PDE dynamics (wave, heat, or Sprott equations) with initial condition \(u^{(i)}(\cdot,0) = \delta_i(\cdot)\), where \(\delta_i(j) = 1\) if \(j = i\) and \(0\) otherwise (a Dirac signal centered at node \(i\)). When applicable (for second-order dynamics), we also set \(\partial_t u(\cdot, 0) = c\,\delta_i(\cdot)\), with a fixed hyperparameter \(c\geq 0\). We choose \(K\) time points \(\mathcal{T}=(t_1,\dots, t_K)\) by fixing a maximal time \(T\) and then setting \(t_k=kT/K\). We then define \(\mathcal{U}=\left\{\mathbf{u}_{i,k}\right\}_{i \in V,1\leq k\leq K},\) where \(\mathbf{u}_{i,k}\) is the vector \(\mathbf{u}_{i,k}:=u^{(i)}(\cdot,t_k)\in\mathbb{R}^n\). We refer to \(\mathcal{U}\) as the PDE waveform bank (see Algorithm 4 and a). Each waveform \(\mathbf{u}_{i,k}\) is centered at node \(i\) and corresponds to a snapshot of the PDE dynamics at time scale \(t_k\). The bank \(\mathcal{U}\) collects such waveforms across all nodes and multiple time scales, similar to wavelets but more flexible thanks to the diverse dynamics. shows basic waveforms and more complex patterns created via combinations (from the MLP discussed below).

We note that \(\mathcal{U}\) can be computed offline prior to training for increased computational efficiency. Additionally, note that the waveforms can be computed efficiently via either Chebshev approximation or a Runge-Kutta scheme. We further discuss on complexity and scalability in .

3.2 Multi-scale Aggregation↩︎

In each layer, \(\ell\), we assume that we are given an \(n\times m_\ell\) feature matrix \(X^{(\ell)}\) (where \(X^{(0)}\) consists of the initial node features). We let \(\mathbf{x}_j\in\mathbb{R}^n\) denote the \(r\)-th column of \(X^{(\ell)}\), which we interpret as a signal defined on \(V\). For each waveform \(\mathbf{u}_{i,k}\) in the waveform bank \(\mathcal{U}\) (Section 3.1), we perform an inner product with the node features, thought of as a convolution: \[\begin{align} h_{j,k}^{(i)} &= \langle \mathbf{u}_{i,k},\mathbf{x}_j\rangle = \mathbf{u}_{i,k}^{\mathsf T}\mathbf{x}_j . \label{eq:inner-prod} \end{align}\tag{6}\] We then combine these convolved features by applying an MLP to the states \(h^{(i)}_{j,k}\) associated with each node \(v_i\), i.e., \(\mathbf{y}_i=\text{MLP}\left(\text{vec}\left(h^{(i)}_{j,k}\right)\right)\). We then reorganize the \(\mathbf{y}_i\) into a transformed feature matrix \(X^{(\ell+1)} =(\mathbf{y}_1^{\mathsf{T}},\ldots,\mathbf{y}^{\mathsf{T}}_n)^{\mathsf{T}}\) (so that \(\mathbf{y}^{\mathsf{T}}_i\) is the \(i\)-th row of \(X^{(\ell+1)}\)). See Algorithm [alg:mp] and b.

The inner product, Eqn. 6 , can also be interpreted as the feature \(\mathbf{x}_j\) being updated via a message from a source node \(v_i\) at scale \(k\). Indeed, message passing neural networks can be interpreted as performing such an inner product with a limited bandwidth rectangular waveform as shown in and then applying the MLP. We remark that since we use a waveforms based on PDE solutions of various time snapshots, we obtain multi‑scale embedding. As the time \(t_k\) increases, the waveform effectively dilates and spreads to a larger neighborhood of vertices. Furthermore, via the MLP, DYMAG is able to learn novel combinations of the waveforms, either from different source nodes or at different time scales. This includes the diffusion wavelets [15] which can be obtained by subtracting solutions to the heat equation at different time scales [40].

3.2.0.1 Downstream Readout

After \(L\) rounds of Multi-scale aggregation, the resulting node representations \(X^{(L)} = \{ \mathbf{x}_i^{(L)} \}_{i \in V}\) are used for prediction. For node-level tasks, a shared MLP is applied independently to each node feature vector. For graph-level tasks, node features are first aggregated using a permutation-invariant pooling operation (e.g., global mean or sum), followed by a task-specific MLP to produce the graph-level output. See c and Algorithm [alg:dymag].

3.3 Theoretical Properties Related to Dynamics-based Waveforms on the Graph↩︎

Below, we formulate properties of our waveforms and the information they are able to extract from the graph. These results serve as motivation for our method, which utilizes these dynamics as a novel aggregation paradigm for graph neural networks. Complete proofs are provided in .

3.3.1 Frequency Domain Characteristics of Waveform Based Message Aggregation↩︎

Standard message passing can be viewed as convolving the node features with a single, simple, rectangular waveform. From the perspective of graph signal processing, this corresponds to a low-pass filtering which only preserves the low-frequency (smooth) portion of the node features. In contrast, DYMAG employees a richer, more sophisticated bank of waveforms, which we will show allows DYMAG to extract a variety of different types of information. We first consider a function defined by \(u_{\mathrm{BP}}^{(i)}(v,t) \;=\; u_H^{(i)}(v,t_1)\;-\;u_H^{(i)}(v,t_2)\) for two fixed times, \(0<t_1<t_2\). In the graph-Fourier domain its response at frequency (eigenvalue) \(\lambda_k\) is \(e^{-t_1\lambda_k^\alpha}-e^{-t_2\lambda_k^\alpha}.\) This function (i) is \(0\) at \(\lambda_k=0\), (ii) tends to \(0\) as \(\lambda_k\to\infty\), and (iii) reaches a single maximum at \(\lambda^\star=\left(\frac{1}{t_2-t_1}\log\!\bigl(\tfrac{t_2}{t_1}\bigr)\right)^{1/\alpha}.\) Thus, \(u_{BP}^{(i)}\) suppresses both very low and very high frequencies, but keeps information in a moderate frequency band (which depends on \(t_1\) and \(t_2\)). Therefore, we call \(u^{(i)}_{BP}\) a band-pass function. Notably, DYMAG has the ability to learn this function via the use of the MLP which is applied after Eqn. 6 . We next consider the solution to the wave equation given by Eqn. 4 , for simplicity focusing on the case where \(c=0\). The frequency response at each \(\lambda_k\) is given by \(\cos(\sqrt{\lambda_k^{\alpha}} t)\). Since this function peaks and falls in multiple different “bands" we think of it as a multi-band-pass function. This leads us to the following proposition.

Proposition 1 (Band-pass information). DYMAG is able to extract band-pass, or even multi-band-pass information information from the node features.

Proof sketch. In heat-equation case, DYMAG can learn the band-pass function \(u^{(i)}_{BP}\) via suitable weights in the MLP. In the wave equation case, DYMAG is able to capture multi-band-pass information as a consequence of the sinusoidal frequency responce of the wave solution, \(u_W\). ◻

In addition to the above propositions, we note that DYMAG is also able to learn low-pass information, similar to standard message passing networks. This is a direct consequence of the fact that \(u_H^{(i)}\) has a decreasing frequency response \(e^{-t\lambda_k^\alpha}\). It can also learn high-pass information via function \(u^{(i)}_{\text{High}}=1-u^{(i)}_H\). We next discuss how the frequency-domain characteristics of DYMAG help alleviate the following limitations of standard GNNs:

Over-smoothing: Message passing networks utilize rectangular pulse waveforms, which act as low-pass filters, i.e., smoothing operators. With each layer, the features get smoother and smoother, eventually become nearly constant, which limits their usefulness. By contrast, DYMAG is able to learn band-pass, high-pass, and multi-band-pass information in addition to standard low-pass information. This allows it to avoid the oversmoothing problem. Under-reaching: Message passing networks only aggregate within local, one-hop neighborhoods. Thus their receptive field is equal to the number of layers, which must be kept small to avoid severe oversmoothing. This limits their ability to capture global structure or long range interactions. DYMAG, on the other hand performs aggregation via waveforms which are not confined to one-hop neighborhoods and is able to capture global structure. Heterophily: The local averaging operation in message passing networks, are particularly problematic on heterophilic graphs where many nodes have different labels than their neighbors. DYMAG’s diverse waveform banks are able to capture band-pass, multi-band-pass, and high-frequency information (in addition to low-pass). This makes them well-suited to heterophilic graphs. Additionally, we note that our experiment shows that the Sprott dynamics perform particularly well on node classificaiton on heterophilic graphs (see ), perhaps because of their ability to detect subtle changes in different portions of the network structure.

3.3.2 General Properties of Solutions↩︎

The following result shows that DYMAG is able to identify the connected components of \(G\).

Proposition 2 (Identification of Connected Components). Let \(u^{(i)}(v,t)\) denote the solution to the heat equation, wave equation, or Sprott chaotic dynamics. Suppose that \(G\) is not connected. Then, for any \(v\) which is not in the same connected component as \(v_i\), and all \(t\geq0\), we have \(u^{(i)}(v,t) = 0.\)

Proof sketch. We verify that \(\tilde{u}^{(i)}(v,t) := u^{(i)}(v,t) \cdot \mathbb{1}_{v \in \mathcal{C}}\), where \(\mathcal{C}\subseteq V\) is the component containing \(v_i\) satisfies the same PDE as \(u^{(i)}(v,t)\). The result follows by uniqueness of solutions. ◻

Due to Proposition 2, we will assume that \(G\) is connected in the following sections. However, we note that many of the results still apply to disconnected graphs with suitable modifications.

3.3.3 Heat Dynamics↩︎

The continuous and global nature of DYMAG allows it to instantaneously have a receptive field over the entire graph. Intuitively, this corresponds to information spreading instantaneously over the graph (although for small values of \(t\) the energy \(u^{(i)}_H(v,\cdot)\) will be mostly concentrated near \(v_i\)). This is in contrast to message passing networks where the receptive field about each node is equal to the number of layers (which is usually must be kept small in order to avoid over-smoothing).

Proposition 3. Let \(G\) be connected and let \(L\) be the random-walk Laplacian \(L_{rw}\) (with \(\alpha=1)\). Let \(u^{(i)}_H(v,t)\) be the solution to the heat equation, Eqn. 3 . Then \(u^{(i)}_H(v,t)>0\) for all \(v\in V\) and \(t>0\).

Proof Sketch. This is a consequence of a relationship between \(u^{(i)}_H\) and continuous-time random walks established in . ◻

Our next two results analyze the energy decay of \(u_H^{(i)}\). They suggests that graphs with a larger \(\lambda_2\) will have a faster rate of energy decay. The second eigenvalue of a graph can be related to the isoperimetric ratio of a graph through Cheeger’s inequality, thereby revealing information on graph structure and how “bottlenecked" a particular graph is [44]. Additionally, they show that the properties of heat energy decay can distinguish between between graph structures. We note that although the assumptions for Proposition 5 represents a rather specific set of conditions, we expect that when two graphs have edges generated according to a similar rule or distribution, the more densely connected graph will have more rapidly decaying heat energy.

Proposition 4 (Heat energy). Let \(G\) be connected, and let \(u^{(i)}_H(v,t)\) be as in the solution to the heat equation with initial condition \(\delta_i\) as in . Then, \(e^{-2t \lambda_n^\alpha} \leq \| u^{(i)}_H(\cdot, t)\|_2^2 \leq |\boldsymbol{\nu}_1(i)|^2 + e^{-2t\lambda_2^\alpha}.\)

Proof sketch. It follows from \(\|u^{(i)}_H(\cdot, t) \|_2^2 = \sum_{k = 1}^n e^{-2t\lambda_k^\alpha} |\langle \boldsymbol{\nu}_k, \delta_i \rangle|^2 = \sum_{k = 1}^n e^{-2t\lambda_k^\alpha} |\boldsymbol{\nu}_k(i)|^2. \qedhere\) ◻

Proposition 5 (Heat energy between graphs). Let \(G\) and \(G'\) be graphs on \(n\) vertices with fractional Laplacians \(L_G^\alpha\) and \(L_{G'}^\alpha\) and let \(\delta_i\) and \(\delta_{i'}\) be initial conditions for on \(G\) and \(G'\). Assume: (i) \(L_{G'}^\alpha \succcurlyeq L_G^\alpha\), i.e., \(\mathbf{v}^\mathsf{T} L_{G'}^\alpha \mathbf{v} \geq \mathbf{v}^\mathsf{T} L_{G}^\alpha \mathbf{v}\:\:\text{for all }\mathbf{v}\in\mathbb{R}^n,\) (ii) We have \(| \boldsymbol{\nu}_k'(i)|^2 \leq (1 + \eta_k(t)) |\boldsymbol{\nu}_k(i)|^2\) for all \(1\leq k \leq n,\) where we also assume \(\eta_k(t) := \exp(2t ((\lambda_k')^\alpha-\lambda_k^\alpha)) - 1 \geq 0.\)

Then, with \(u_H\) and \(u_H'\) defined as in , we have \(\|(u^{(i)}_H)'(\cdot,t)\|^2_2 \leq \|u^{(i)}_H(\cdot, t)\|_2^2.\)

Proof sketch. The result is a consequence of Parseval’s identity. ◻

Finally, we restate some known results that provide additional foundation linking the behavior of the heat equation solutions to graph topology. Lemma 1 of [45] shows that the heat equation encodes shortest path distances \(d(v_i,v_j)\) between nodes on the graph:

Proposition 6 (Relation to distances, (Lemma 1 of [45])). Let \(u^{(i)}_H\) denote the solution to Eqn. 1 with initial condition \(\delta_i\) (and \(\alpha=1)\). Then, \(d(v_i, v_j) = \lim_{t\to 0} \frac{\log u_H^{(i)}(v_j, t) }{\log t}.\)

We next consider, the Ollivier-Ricci curvature. This is a discrete notion of curvature, meant to parallel the tradition notion of Ricci curvature in Riemmanian geometry. It is defined by \(\kappa(v_i, v_j) = 1 - W_1(\mu_{v_i}, \mu_{v_j})/d(v_i ,v_j),\) where \(\mu_v\) is a probability measure centered around \(v\) (see [46] for details), \(W_1\) the 1-Wasserstein distance, and \(d(v_i,v_j)\) is the distance (shortest path length) from \(v_i\) to \(v_j\). The following result from [46] relates \(\kappa\) to the heat equation.

Proposition 7 (Relation to Ollivier-Ricci curvature, (Theorem 5.8 of [46])). Let \(L=D-A\) be the unnormalized Laplacian, then \(\kappa(v_i, v_j) = \lim_{t\to 0^+} \frac{1}{t} \bigg(1 - \frac{W_1(u^{(i)}_H(\cdot, t), u^{(j)}_H(\cdot, t))}{d(v_i,v_j)} \bigg).\)

3.3.4 Wave Dynamics↩︎

The periodicity of the solution to the wave equation endows it with the ability to capture long range interactions. Central to this argument is the wave energy, analyzed in the following proposition which focuses on the case where the initial velocity \(c\) is equal to zero:

Proposition 8 (Wave energy bounds). Let \(u_W^{(i)}(v,t)\) be the solution to the fractional wave equation Eqn. 4 with initial conditions \(u_W^{(i)}(\cdot,0) = \delta_i\) and \(\partial_t u_W^{(i)}(\cdot,0) = 0\). Then, for any time \(t \geq 0\), the energy of the waveform satisfies \(|\boldsymbol{\nu}_1(i)|^2 \leq \| u_W^{(i)}(\cdot,t) \|_2^2 \leq 1.\)

Proof sketch. By Parseval’s identity and the explicit solution in , we expand: \(\| u_W^{(i)}(\cdot,t) \|_2^2 = \sum_{k=1}^n \cos^2\left(\sqrt{\lambda_k^\alpha}t\right) |\langle \boldsymbol{\nu}_k, \delta_i \rangle|^2=\sum_{k=1}^n \cos^2\left(\sqrt{\lambda_k^\alpha}t\right) |\boldsymbol{\nu}_k(i)|^2.\) Since \(\cos^2(\cdot) \in [0,1]\) and \(\sum_k |\langle \boldsymbol{\nu}_k, \delta_i \rangle|^2 = \| \delta_i \|_2^2 = 1\), the result follows. ◻

This shows that, unlike heat kernels (which decay over time), the wave energy oscillates, retaining signal over time, and thus can reflect non-local interactions such as those created by cycles in the graph. These oscillations allow the waveforms to “echo” through the graph and revisit distant parts of the structure - a behavior well-suited for recovery of topological features.

Proposition 9 (Recovery of eigenspectrum from waveforms). Let \(G\) be connected, and let \(u_W^{(i)}(v,t)\) be the solution to the fractional wave equation (), with initial conditions \(u_W^{(i)}(\cdot,0) = \delta_i\) and \(\partial_t u_W^{(i)}(\cdot,0) = 0\). Then, for any fixed node \(v\), the sequence of values \(u_W^{(i)}(v, t_1), \dots, u_W^{(i)}(v, t_m)\) obtained from time samples can be used to approximate the full Laplacian eigenspectrum \(\{ \lambda_k^\alpha \}_{k=1}^n\) up to arbitrary precision, provided sufficient time resolution.

Proof sketch. \(u_W^{(i)}(v, t)\) is a linear combination of cosines at frequencies \(\sqrt{\lambda_k^\alpha}\) \(u_W^{(i)}(v, t) = \sum_{k=1}^n \cos\left(\sqrt{\lambda_k^\alpha}t\right) \langle \boldsymbol{\nu}_k, \delta_i \rangle \boldsymbol{\nu}_k(v)\). Thus, we may apply the Shannon-Nyquist sampling theorem. ◻

The graph eigenspectrum encodes a wide range of graph invariants and properties. Proposition 9 demonstrates that the solutions to the wave equation relate to graph spectral properties, and that this entire information is contained in the solutions of the wave equation at each node. For example, we have the following corollary:

Corollary 1 (Cycle Length). The size of cycle graph \(C_n\) can be determined from the solution to the fractional wave equation at a single node \(v\).

Proof sketch. The result follows from Proposition 9 and the fact that the length of a cycle graph is contained in its eigenspectrum. ◻

More generally, when the graph is not a cycle but contains a cyclic subgraphs as a prominent topological feature, this proposition provides some intuition for why the wave-equation is well suited to pick up that a node belongs to a cycle and recover dimension 1 homology.

4 Empirical Results↩︎

We evaluate DYMAG across diverse tasks to assess its ability to recover geometric/topological structure and generalize to downstream biological, chemical, and materials applications. In this section, we set \(\alpha=1\)

so that the fractional Laplacian coincides with the ordinary graph Laplacian. We conduct some experiments with other exponents \(\alpha\) in . Baselines include message-passing GNNs (MPNN [47], GAT [48], GIN [49]), diffusion-based methods (GRAND [33], GRAND++[35]), and GraphGPS [50], a state-of-the art graph transformer. We also compare against a GNN built with fixed-scale wavelets (GWT [15]) and a neural approximation algorithm of the extended persistence diagram (EPD) [51]. For molecular and

materials prediction, we include pretrained models such as ProtBERT [52], MolBERT [53], and GeoCGNN [54].

In all tables, best and second best results are highlighted. Ties within one standard deviation are treated as equivalent. Unless noted otherwise, results reflect means over 10-fold cross-validation. Implementation details, including hyperparameter selection, Chebyshev approximations, and complexity analysis, are provided in . Full results with standard deviations appear in .

4.1 Geometric and Topological Properties↩︎

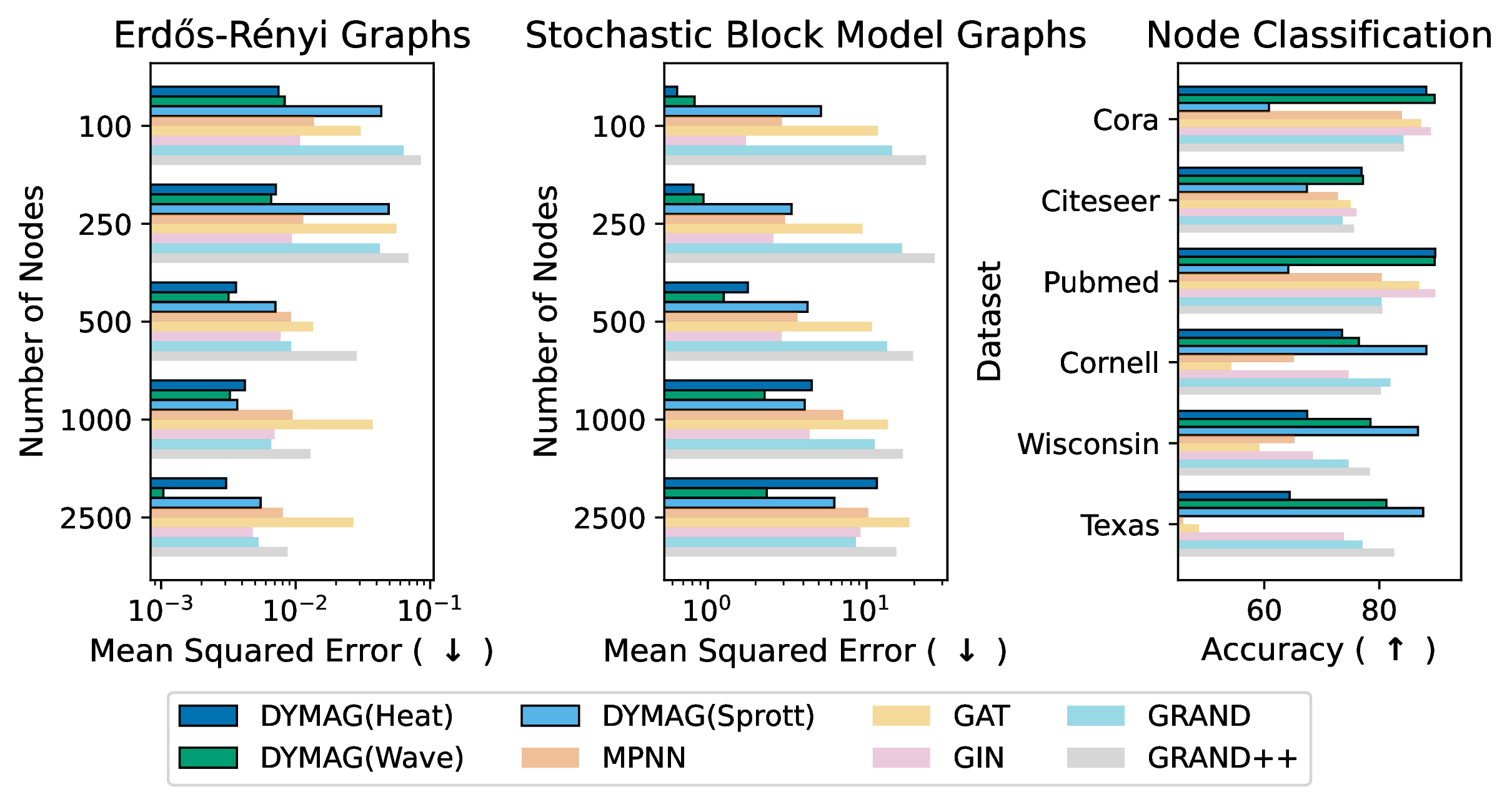

Figure 5: Mean squared error (MSE, lower is better) for predicting the generating parameters of random graphs and node classification accuracy (higher is better) for homophilic and heterophilic datasets. (See also in the Appendix.)

We first evaluated the expressivity of multiscale dynamics as a replacement for message passing by training DYMAG to recover geometric and topological features, including Ollivier–Ricci curvature and the persistence image representation [55] of the extended persistence diagram. Ollivier–Ricci curvature [56], a discrete analog of Ricci curvature from Riemannian geometry, captures local graph geometry. For persistent homology, diagrams are computed from ascending and descending filtrations of each node’s 5-hop neighborhood, using node degree as the filter [51], [57]. The resulting persistence images encode connected components (0 homology) and loops (1st homology).

We evaluated the model on Erdős-Rényi graphs [58], \(G(n,p)\), with \(n\in \{100,200\}\) and \(p\in\{0.04,0.06,0.08\}\), stochastic block model (SBM) graphs, and several citation graphs. The results are shown in and (appendix). DYMAG significantly improves prediction accuracy on both Ollivier–Ricci curvature and persistent homology compared to standard GNNs and GRAND. For persistent homology, DYMAG performs on par with the purpose-built model of [51], a neural approximation of the Union-Find EPD algorithm, despite DYMAG not being designed specifically for this task. DYMAG generalizes well across both synthetic and real-world graphs, including Cora [16], Citeseer [17], and PubMed [18]. Full Results on ER graphs and additional experiments using alternative filtrations are provided in . We note that while persistent homology can be computed directly, it is computationally expensive with cost \(\mathcal{O}(g^3)\), \(g\) being the number of generators [59]. More importantly, these experiments highlight that DYMAG learns rich graph representations that capture topological features when useful for prediction, and it can adapt to the task to extract relevant information for regression or classification.

As shown in and (appendix), we evaluate expressivity via two tasks as proxies: recovering parameters of random graphs and node classification on homophilic (Pubmed, Citeseer, Cora) and heterophilic (Texas, Wisconsin, Cornell) [19] networks. We see that the heat and wave versions of DYMAG are the top performing models on most of the data sets whereas the Sprott version of DYMAG is the top performing model on the heterophilic data sets, possibly because these data sets need a model which is sensitive to small changes in local graph structure. presents results on node-level Ollivier–Ricci curvature, persistent homology with alternative filtrations, and an analysis of fractional heat and wave dynamics across different \(\alpha\) values.

| Model | Cora | Citeseer | PubMed | ogbn-mag | ogbn-papers100M | |||

| \(\kappa\) | EP | \(\kappa\) | EP | \(\kappa\) | EP | EP | EP | |

| DYMAG(Heat) | 1.73e-2 | 1.45e-4 | 2.09e-2 | 3.94e-4 | 7.30e-3 | 7.93e-3 | 5.39e-2 | 9.03e-2 |

| DYMAG(Wave) | 1.85e-2 | 7.41e-5 | 3.41e-2 | 1.58e-4 | 6.51e-3 | 3.29e-3 | 8.01e-3 | 3.25e-2 |

| DYMAG(Sprott) | 1.34e-1 | 3.51e-3 | 1.46e-1 | 7.64e-3 | 2.97e-2 | 8.96e-2 | – | – |

| MPNN | 2.40e-1 | 2.72e-3 | 2.20e-1 | 6.83e-3 | 1.69 | 4.29e-1 | 2.93e-1 | 6.19e-1 |

| GAT | 7.36e-1 | 5.04e-2 | 9.04e-1 | 2.93e-2 | 1.55e-1 | 4.79e-1 | 4.47e-1 | 8.03e-1 |

| GIN | 1.56e-1 | 5.53e-3 | 1.72e-1 | 3.94e-3 | 8.34e-3 | 1.27e-1 | 2.49e-1 | 6.43e-1 |

| GWT | 4.07e-2 | 6.79e-4 | 3.58e-2 | 9.12e-4 | 9.15e-3 | 2.16e-2 | 5.83e-2 | 2.71e-1 |

| GraphGPS | 4.41e-1 | 2.71e-2 | 2.82e-1 | 3.16e-2 | 7.68e-1 | 1.94e-1 | 3.78e-1 | 4.05e-1 |

| GRAND | 6.81e-2 | 8.13e-4 | 1.24e-1 | 6.87e-4 | 2.73e-2 | 3.37e-2 | – | – |

| GRAND++ | 1.74e-1 | 8.16e-3 | 3.09e-1 | 4.21e-3 | 8.72e-2 | 3.07e-1 | – | – |

| Neural EPD Approx. | – | 5.80e-5 | – | 1.29e-4 | – | 2.74e-3 | 6.73e-3 | 3.18e-2 |

| Model | PROTEINS | DrugBank | MP | Antiviral Screen | |

| Dihedral Angles | TPSA | # Aromatic Rings | Band Gap | Active/Inactive | |

| DYMAG (Heat) | \(\textcolor{blue}{\mathbf{0.89 \pm 0.01}}\) | \(\textcolor{blue}{\mathbf{0.97 \pm 0.01}}\) | \(\textcolor{blue}{\mathbf{0.97 \pm 0.02}}\) | \(\textcolor{blue}{\mathbf{0.61 \pm 0.03}}\) | \(0.54 \pm 0.02\) |

| DYMAG (Wave) | \(\textcolor{purple}{\mathbf{0.81 \pm 0.03}}\) | \(\textcolor{purple}{\mathbf{0.90 \pm 0.01}}\) | \(\textcolor{purple}{\mathbf{0.88 \pm 0.01}}\) | \(\textcolor{purple}{\mathbf{0.55 \pm 0.02}}\) | \(\textcolor{purple}{\mathbf{0.61 \pm 0.01}}\) |

| DYMAG (Sprott) | \(0.76 \pm 0.01\) | \(0.77 \pm 0.01\) | \(0.82 \pm 0.03\) | \(\textcolor{purple}{\mathbf{0.54 \pm 0.03}}\) | \(\textcolor{blue}{\mathbf{0.63 \pm 0.02}}\) |

| MPNN | \(0.78 \pm 0.01\) | \(0.71 \pm 0.01\) | \(0.81 \pm 0.01\) | \(0.37 \pm 0.05\) | \(0.51 \pm 0.02\) |

| GAT | \(0.72 \pm 0.02\) | \(0.78 \pm 0.02\) | \(0.83 \pm 0.02\) | \(0.40 \pm 0.03\) | \(\textcolor{purple}{\mathbf{0.59 \pm 0.03}}\) |

| GIN | \(0.69 \pm 0.03\) | \(0.69 \pm 0.01\) | \(0.77 \pm 0.01\) | \(0.38 \pm 0.03\) | \(\textcolor{purple}{\mathbf{0.60 \pm 0.02}}\) |

| GWT | \(\textcolor{purple}{\mathbf{0.81 \pm 0.02}}\) | \(0.83 \pm 0.02\) | \(0.85 \pm 0.01\) | \(0.42 \pm 0.02\) | \(0.58 \pm 0.02\) |

| GraphGPS | \(0.64 \pm 0.03\) | \(0.63 \pm 0.02\) | \(0.67 \pm 0.04\) | \(0.31 \pm 0.02\) | \(0.54 \pm 0.03\) |

| GRAND | \(0.76 \pm 0.03\) | \(0.53 \pm 0.04\) | \(0.64 \pm 0.03\) | \(0.27 \pm 0.03\) | \(0.49 \pm 0.03\) |

| GRAND++ | \(0.62 \pm 0.03\) | \(0.56 \pm 0.02\) | \(0.61 \pm 0.02\) | \(0.31 \pm 0.02\) | \(0.51 \pm 0.02\) |

| Pretrained | \(\textcolor{purple}{\mathbf{0.83 \pm 0.03}}\) | \(\textcolor{blue}{\mathbf{0.98 \pm 0.01}}\) | \(\textcolor{blue}{\mathbf{0.97 \pm 0.01}}\) | \(\textcolor{blue}{\mathbf{0.62 \pm 0.05}}\) | \(\textcolor{purple}{\mathbf{0.59 \pm 0.02}}\) |

| Model | (ProtBERT) | (MolBERT) | (MolBERT) | (GeoCGNN) | (ProtBERT) |

4.2 Proteins, Molecules, and Materials↩︎

We evaluated DYMAG on graphs representing proteins, drug-like molecules, and materials shown in (appendix). We note that DYMAG’s strong performance on these data sets indicates its potential positively impact society by furthering the design of

materials, drugs, or other healthcare treatments. More specifically, the tasks include predicting geometric and chemical properties such as dihedral angles, total polar surface area (TPSA), the number of aromatic rings, band gaps in materials, and anti-HIV

activity. Datasets include PROTEINS [21], DrugBank [22], the Materials Project [24], and the AIDS Antiviral Screen [23]. Comparisons span standard message-passing GNNs (MPNN, GAT, GIN), diffusion-based models (GRAND, GRAND++), spectral methods

(GWT), transformer-based models (GraphGPS), and domain-specific pre-trained models: ProtBERT [52] for proteins, MolBERT [53] for small molecules, and GeoCGNN [54] for materials. Across all datasets, DYMAG consistently outperforms standard GNNs, GRAND, GRAND++, GraphGPS, and GWT by a wide margin. It matches the performance of powerful, task-specific pretrained models

within 1 standard deviation overall, and significantly surpasses them on the PROTEINS and AIDS datasets.

Overall, the heat and wave versions of DYMAG perform strongly across all molecular and material prediction tasks.The Sprott (chaotic) variant shows more variable performance, which may reflect its heightened sensitivity to local graph structure. This behavior appears beneficial in settings with recurring structural motifs, such as the Antiviral Screen dataset, and may be less advantageous in tasks where such sensitivity is less critical. Additional results, including accuracy and training time, are provided in .

5 Conclusion↩︎

We introduce DYMAG as a method for improving aggregation in GNNs. We use dynamics to generate a diverse bank of waveforms that span multiple frequency bands. Messages are aggregated by taking each node feature, interpreted as a graph signal, and projecting it onto this bank via inner products, producing a set of features that encode multi-scale information. The expressiveness of this representation, arising from the rich frequency structure of the waveforms, helps mitigate common GNN limitations such as oversmoothing, underreaching, and heterophily.

One limitation of our method is that the Sprott model is extremely sensitive to the graph structure. This is useful for some tasks which require detecting minute changes in structure. However, this be undesirable in other settings where one may want similar representations of nearly isomorphic. Another limitation is that our method is currently only applicable to supervised tasks. Extending DYMAG to unsupervised tasks, such as clustering, denoising, or signal reconstruction could could be in interesting avenue of future work. Overall, DYMAG establishes a theoretically grounded, empirically strong message aggregation paradigm; future work will broaden its application to diverse graph tasks and refine the accompanying speed-up techniques.

Acknowledgments↩︎

This research was partially funded and supported by CIFAR AI Chair [G.W.], NSERC Discovery grant 03267 [G.W.], NIH grants (1F30AI157270-01, R01HD100035, R01GM130847, R01GM135929) [G.W.,S.K.], NSF Career grant 2047856 [S.K.], NSF grant 2327211 [S.K., G.W., M.P.], NSF grant 2242769 [M.P.], NSF/NIH grant 1R01GM135929-01 [S.K.], NSF CISE grant 2403317 [S.K.], the Chan-Zuckerberg Initiative grants CZF2019182702 and CZF2019-002440 [S.K.], the Sloan Fellowship FG-2021-15883 [S.K.], the Novo Nordisk grant GR112933 [S.K.], Yale - Boehringer Ingelheim Biomedical Data Science Fellowship [D.B.], and Kavli Institute for Neuroscience Postdoctoral Fellowship [D.B.].

6 Related work↩︎

There is a long history of studying dynamics on graphs. For example, [25]–[28] analyze complex interactions such as brain processes, social networks, and spatial epidemics on graphs. The study of graph dynamics has recently crossed into the field of deep learning. with [29]–[31] using neural networks to simulate complex phenomena on irregularly structured domains.

Differing from the above mentioned works, there have also been several recent papers which aim to use dynamics as a framework for understanding GNNs. [32] and [33] take the perspective that message-passing neural networks can be interpreted as the

discretizations of diffusion-type (parabolic) partial differential equations on graph domains where each layer corresponds to a discrete time step. They then use this insight to design GRAND, a novel GNN, based on encoding the input node features, running

a diffusion process for \(T\) seconds and finally applying a decoder network. [35] builds on this work

by extending it to diffusion equations with “sources” placed at the labeled nodes, leading to a new network GRAND++. They then provide an analysis of both GRAND and GRAND++ and show that they are related to different graph random

walks. GRAND is related to a standard graph random walk, whereas GRAND++ is related to a dual random walk started at the the labeled data which can avoid the oversmoothing problem. We also note [60], which the graph heat equation to extract structural information around each node (although not in a neural network context) and [34] which used insights from hyperbolic and parabolic PDEs on manifolds to design a GNN that does not suffer from oversmoothing as well as [61] which uses convolution using unitary groups to improve GNNs ability to learn long-range dependencies.

Our network method differs from these previous works in several important ways. Most importantly, whereas [33] and [32] primarily focused on PDEs as a framework for understanding the behavior of message passing operations, here we propose to use

the dynamics associated to the heat equation as a new form feature aggregation, replacing traditional message passing operations. Additionally, we consider both the heat equation (the prototypical parabolic PDE) and the wave equation (the prototypical

hyperbolic PDE) as well as chaotic dynamics, whereas previous work [32], [33], [35] has primarily focused on parabolic equations. Notably, similar to GRAND++, the wave-equation and the Sprott

versions of DYMAG do not suffer from oversmoothing. However, the long-term behavior of these equations differs from the diffusion-with-a-source equation used in GRAND++ in that they only depend on the geometry of the network and not on

locations of the labeled data. (Additionally, since we does not require labeled data as source locations, our method can be easily adapted to unsupervised problems by removing the MLPs.)

7 Detailed Background↩︎

This section is a more detailed version of the background on graph signal processing and dynamics provided in Section 2.

7.1 Graph Signal Processing↩︎

In graph signal processing, node features are interpreted as signals (functions) defined on the nodes of a graph [36], [37]. Each signal can then be decomposed into different frequencies defined in terms of the eigendecomposition of the graph Laplacian and an associated Fourier transform.

Formally, we let \(\mathbf{x}:V\rightarrow\mathbb{R}\), denote a function (signal) defined on the vertices of a weighted, undirected graph \(G=(V,E,w)\) with vertices \(V=\{v_1,\ldots, v_n\}\). For convenience, we will identify \(\mathbf{x}\) with the vector whose \(k\)-th entry is \(\mathbf{x}(v_k)\). Thus, we will write either \(\mathbf{x}(v_k)\) or \(\mathbf{x}(k)\), depending on context. We may also write \(\mathbf{x}(v)\) if we do not wish to emphasize the ordering of the vertices. We let \(L\) denote a graph Laplacian with eigenvectors \(\boldsymbol{\nu}_1,\ldots,\boldsymbol{\nu}_n\) and eigenvalues \(0=\lambda_1\leq\lambda_2\leq \ldots \leq \lambda_n\), \(L\boldsymbol{\nu}_k=\lambda_k\boldsymbol{\nu}_k\). Unless otherwise specified, we will assume that \(L\) is either the unnormalized Laplacian \(L_{U}=D-A\) or the symmetric normalized Laplacian \(L_{\text{sym}}=D^{-1/2}L_\text{U}D^{-1/2}\), where \(D\) and \(A\) are the weighted degree and adjacency matrices. In these cases, we may write \(L=U\Lambda U^\mathsf{T}\), where \(U\) is a matrix with columns \(\boldsymbol{\nu}_1,\ldots,\boldsymbol{\nu}_n\) and \(\Lambda\) is a diagonal matrix, \(\Lambda_{k,k}=\lambda_k\).1 The graph Fourier transform can be defined as \(\widehat{\mathbf{x}} = U^{\mathsf{T}} \mathbf{x}\) so that \(\widehat{\mathbf{x}}(k)=\langle\boldsymbol{\nu}_k,\mathbf{x}\rangle\). Since the \(\boldsymbol{\nu}_k\) form an orthonormal basis, we obtain the Fourier inversion formula \(\mathbf{x}=\sum_{k=1}^n \widehat{\mathbf{x}}(k)\boldsymbol{\nu}_k\). The eigenvectors \(\boldsymbol{\nu}_k\) are referred to as Fourier modes and the eigenvalues \(\lambda_k\) are interpreted as frequencies. Therefore, the Fourier inversion formula can be thought of as decomposing a signal \(\mathbf{x}\) into different the superposition of Fourier modes at different frequencies.

Standard message passing neural networks are known to essentially perform low-pass filtering [38], [39]; i.e., they preserve the portion of the signal corresponding to the first one or two eigenvectors, while suppressing the rest of the signal. As we discussed in Section 3.3.1, the waveforms utilized in DYMAG may span a broader range of frequency behavior and can act highlight different aspects of the frequency spectrum by acting as either as low-pass, high-pass, or band-pass filters.

7.2 Heat and Wave Dynamics on a Graph↩︎

For \(\alpha > 0\), we define the \(\alpha\)-fractional graph Laplacian by \(L^\alpha := U \Lambda ^\alpha U^\mathsf{T}\). We note that \(L^\alpha\) has the same eigenvectors as \(L\) and the eigenvalues of \(L^\alpha\) are given by \(\lambda_k^\alpha\), i.e., \(L^\alpha\boldsymbol{\nu}_k=\lambda_k^\alpha\boldsymbol{\nu}_k\). Additionally, we see that when \(\alpha=1/m\) for some \(m\in\mathbb{N}\), we have \((L^{1/m})^m=L.\) For each \(i\), we let \(\delta_i\) denote the Dirac signal at \(i\) given by \(\delta_i(k)=1\) if \(i=k\), and \(\delta_i(k)=0\) otherwise. We say that a function \(u^{(i)}_H(v,t)\) solves the \(\alpha\)-fractional heat equation with a initial value \(\delta_i\) if \[\label{appx:eqn:heat95eq95definition} -L^\alpha u^{(i)}_H(v,t) = \partial_t u^{(i)}_H(v,t),\quad u^{(i)}_H(v,0)=\delta_i(v).\tag{7}\]

We say that \(u^{(i)}_W\) solves the \(\alpha\)-fractional wave equation with initial Dirac data \(\delta_i\) and an initial velocity \(c\delta_i\) (where \(c\) is a constant) if \[\begin{align} -L^\alpha u^{(i)}_W(v,t) = \partial^2_t u^{(i)}_W(v,t),\quad u^{(i)}_W(v,0)=\delta_i(v), \quad \partial_t u^{(i)}_W(v,0)=c\delta_i(v).\label{appx:eqn:wave95eq95def} \end{align}\tag{8}\]

If \(G\) is connected, solutions to the heat and wave equations are given explicitly by \[\begin{align} \tag{9} u^{(i)}_H(v,t) &= \sum_{k=1}^n e^{-t\lambda_k^\alpha}\langle \boldsymbol{\nu}_k, \delta_i \rangle \boldsymbol{\nu}_k(v), \quad\text{and}\\ u^{(i)}_W(v,t) &=\sum_{k=1}^n \cos(\sqrt{\lambda_k^\alpha} t)\langle \boldsymbol{\nu}_k,\delta_i \rangle \boldsymbol{\nu}_k(v) + t\langle \boldsymbol{\nu}_1,c\delta_i \rangle\boldsymbol{\nu}_1 +\sum_{k=2}^n\frac{1}{\sqrt{\lambda_k^\alpha}}\sin(\sqrt{\lambda_k^\alpha} t)\langle \boldsymbol{\nu}_k,c\delta_i\rangle\boldsymbol{\nu}_k(v). \tag{10} \end{align}\] We note that Eqns 3 and 4 can also be adapted to disconnected graphs with simple modifications. Furthermore, following Remark 1 in [40], we note that the solutions \(u^{(i)}_H(v,t)\) and \(u^{(i)}_W(v,t)\) defined in Eqn. 3 and 4 do not depend on the choice of orthonormal basis for the graph Laplacian, see Section [appendix:joyce95statement] for details.

7.3 Chaotic Dynamics on a Graph↩︎

We next consider dynamics exhibiting chaos, a behavior that may be informally summarized as “aperiodic long-term behavior in a deterministic system that exhibits sensitive dependence on initial conditions" [41]. As a prototypical example of chaos on graphs, we consider the general, complex, and nonlinear graph dynamics on graphs described in [42]:

where \(b\) is a damping coefficient, and the \(c_{k,j}\) represent interactions. We refer to Eq. 5 as the Sprott equation and denote its solutions by \(u_S(v,t)\). When \(b>0\), solutions \(u_S(v,t)\) remain bounded. In the case of fully connected graphs, with \(b = 0.25\), chaotic dynamics (corresponding to positive Lyapunov exponents, see, e.g., [43]) were observed when a sufficiently large fraction of interactions were neither symmetric (\(c_{j,k} = c_{k,j}\)) nor anti-symmetric (\(c_{j,k} = -c_{k,j}\)). Sparsely connected networks also exhibited positive Lyapunov exponents with a value of \(b = 0.25\) [42].

8 Computational Complexity↩︎

Here, we discuss the computational complexity of DYMAG and show that it may be scaled to large graphs.

We utilize Chebyshev polynomials to approximate \(u^{(i)}_H(v, t)\) and \(u^{(i)}_W(v,t)\) as defined in Eqn. 3 and 4 motivated by the success of Chebyshev polynomials in the approximation of spectral graph wavelets [14] and GNNs [62]. This removes the need for eigendecomposition (which can have \(\mathcal{O}(n^3)\) computational complexity and \(\mathcal{O}(n^2)\) memory). The polynomial approximation has linear complexity for sparse graphs [62]. For example, if \(G\) is a \(k\)-nearest neighbor graph and the order of the polynomial is \(m\), then the time complexity for solving the heat/wave equation is \(O(kmn)\).

DYMAG’s runtime complexity is \(\mathcal{O}(r|E|FT)\), where \(|E|\) is the number of edges, \(F\) is the number of features, and \(r\) is the degree of the Chebyshev polynomial. No closed form solution is available for the Sprott dynamics, so we instead use a fourth-order Runge-Kutta method, which has been successfully applied to chaotic systems [63], [64]. This approach has complexity \(\mathcal{O}(g|E|FT)\), where \(g\) is the number of solver steps between sampled time steps.

For each of the underlying dynamics, DYMAG exhibits linear complexity, with respect to the number of vertices for sparse graphs (setting \(|E|=n\bar{d}\), where \(\bar{d}\) is the average degree). This makes it efficient and scalable to large graphs, where the focus is often on local or node-level properties rather than global topological properties (although predicting global properties is the primary focus of our work). In such cases, local properties can be examined with a smaller feature set of size \(F' \ll n\), by concentrating on subgraphs around nodes of interest. Parallelization is feasible for large sparse graphs since the \(r\)-th order Chebyshev polynomials act over \(r\)-hop neighborhoods, allowing DYMAG to scale with standard MPNN techniques. Furthermore, the feature space \(F\) can be selected or adjusted to be small by utilizing random features, Diracs on a subset of nodes, or natural graph signals.

9 Proofs of Theoretical Results↩︎

Proof of independence of eigenbasis Here we provide a detailed proof for the result that Equations 3 and 4 are invariant to the choice of the Laplacian eigenbasis, as mentioned in .

The solutions \(u^{(i)}_H\) and \(u^{(i)}_W\) do not depend on the choice of eigenbasis (even when the eigenvalues have multiplicity greater than one). To see this, let \(\mathcal{S}_\lambda\) be the set of distinct eigenvalues of \(L\). For \(\lambda\in\mathcal{S}_\lambda,\) let \(E_\lambda\) be the corresponding eigenspace, i.e., the linear space spanned by the set of \(\boldsymbol{\nu}_k\) such that \(\lambda_k=\lambda\). Let \(\pi_\lambda\) denote the corresponding projection operator, i.e., \[\pi_\lambda\mathbf{x}=\sum_{k:\lambda_k = \lambda} \langle \boldsymbol{\nu}_k, \mathbf{x} \rangle \boldsymbol{\nu}_k,\] for all \(\mathbf{x}\in\mathbb{R}^n\), and observe that \(\pi_\lambda\) is independent of choice of eigenbasis. Then, from Eqn. 3 , we may then write,

\[\begin{align} u^{(i)}_H(v,t) &= \sum_{k =1}^n e^{-t\lambda_k^\alpha} \langle \boldsymbol{\nu}_k, \delta_i \rangle \boldsymbol{\nu}_k(v) \\ &= \sum_{\lambda \in \mathcal{S}_\lambda} e^{-t\lambda^\alpha} \sum_{k:\lambda_k = \lambda} \langle \boldsymbol{\nu}_k, \delta_i \rangle \boldsymbol{\nu}_k(v) \\ &= \sum_{\lambda \in \mathcal{S}_\lambda} e^{-t\lambda^\alpha} \pi_\lambda \delta_i(v). \end{align}\]

This establishes that \(u^{(i)}_H\) is independent of the choice of basis. The argument for \(u^{(i)}_W\) is identical other than using Eqn. 4 in place of Eqn. 3 .

Proofs of propositions

Below, we prove , (restated below) the band-pass properties of DYMAG through the waveforms.

Proposition 1. (Band-pass information) DYMAG is able to extract band-pass, or even multi-band-pass information information from the node features.

To make Proposition 1 more precise, we will separate it out into two propositions. However, first, we will introduce some notation and definitions.

We define heat-kernel as \(H^t=e^{-tL^{\alpha}}\), i.e., \[H^t=U\operatorname{diag}\left(\exp(-t{\lambda_1})^\alpha,\dots,\exp(-t\lambda_n^\alpha)\right)U^\mathsf{T}.\] We observe that we may rewrite the solution to the heat equation, with any initial condition \(u_H(\cdot,0)\) as \[\label{eqn:32heat32kerenl32solution} u_H(\cdot,t)=\sum_{m=1}^n e^{-t\lambda_m^\alpha}\langle \boldsymbol{\nu}_m, u(\cdot,0) \rangle \boldsymbol{\nu}_m=H^tu_H(\cdot,0).\tag{11}\] In particular, we have \(\mathbf{u}_{i,k}=H^{t_k}\delta_i\). Thus, we see that the features \[\begin{align} h_{j,k}^{(i)} &= \langle \mathbf{u}_{i,k},\mathbf{x}_j\rangle \end{align}\] defined in Eqn. 6 can be rewritten as \[\begin{align} h^{(i)}_{j,k}&=\sum_{\ell=1}^nH^{t_k}\delta_i(\ell)\mathbf{x}_j(\ell)\\ &=\sum_{\ell=1}^n\sum_{m=1}^n e^{-t\lambda_m^\alpha}\langle \boldsymbol{\nu}_m, \delta_i \rangle \boldsymbol{\nu}_m(\ell)\mathbf{x}_j(\ell)\\ &=\sum_{\ell=1}^n\sum_{m=1}^n e^{-t\lambda_m^\alpha} \boldsymbol{\nu}_m(i) \boldsymbol{\nu}_m(\ell)\mathbf{x}_j(\ell)\\ &=(H^{t_k}\mathbf{x}_j)(i). \end{align}\]

Next, for fixed times \(t_1<t_2\), we define \[\label{eqn:32Psi} \Psi_{t_1,t_2}=H^{t_1}-H^{t_2}\tag{12}\] as the difference of two heat kernels. In the spectral domain, we note that we may write \[\Psi_{t_1,t_2}\mathbf{x}=\sum_{m=1}^n (e^{-t_1\lambda_m^\alpha}-e^{-t_2\lambda_m^\alpha})\langle \boldsymbol{\nu}_m, \mathbf{x} \rangle \boldsymbol{\nu}_m.\] The function, \[\label{eqn:32psi32frequency} \psi_{t_1,t_2}(\lambda)=e^{-t_1\lambda^{\alpha}}-e^{-t_2\lambda^{\alpha}},\tag{13}\] which is referred to as the frequency response is zero both at \(\lambda=0\), and as \(\lambda\rightarrow\infty\). Its support is concentrated in a frequency band centered around \(\lambda^\star =\Bigl(\tfrac{\log(t_2/t_1)}{t_2-t_1}\Bigr)^{1/\alpha}.\) Therefore, \(\Psi_{t_1,t_2}\) is referred to as a band-pass filter. We summarize this in the following proposition.

Proposition 10 (Difference of two heat waveforms is band-pass). Let \(t_1<t_2\) and define \(\Psi_{t_1,t_2}\) as in Eqn. 12 . Then the function \(\mathbf{x}\mapsto\Psi_{t_1,t_2}\mathbf{x}\) performs a band-pass filtering.

Proof. This follows immediately from the frequency response illustrated in Eqn. 13 and the subsequent discussion. ◻

Importantly, we observe that DYMAG has the capacity to learn \(\Psi_{t_1,t_2}\) through the MLP in Algorithm 2. Therefore, Proposition 10 shows that DYMAG, with the heat-equation has the capacity to perform band-pass filtering. This is in contrast to standard message passing networks which are known to effectively perform low-pass filtering (i.e., averaging type operations).

We next turn our attention to the wave equation, focusing on the case with zero initial velocity (i.e., \(c=0\)) for the sake of simplicity. Similar to the heat-case (Eqn. 11 ), we may define a wave kernel by \(W^{t}=\cos\left(t\sqrt{L^{\alpha}}\right)=U\operatorname{diag}\left(\cos(t\sqrt{\lambda_1^\alpha}),\dots,\cos(t\sqrt{\lambda_n^\alpha})\right)U^{\mathsf T}\) and observe that the solution to the wave equation, with initial condition \(u_W(\cdot,0)\) is given by \(W^{t}u_W(\cdot,0)\). In the spectral domain, we may write \[\Phi_t\mathbf{x}=\sum_{m=1}^n \cos(t\sqrt{\lambda_m^\alpha})\langle \boldsymbol{\nu}_m, \mathbf{x} \rangle \boldsymbol{\nu}_m.\label{eqn:32phi32frequency}\tag{14}\] The associated frequency response is given by \[\label{eqn:32wave32frequency32response} \psi(\lambda) = \cos(t\sqrt{\lambda^\alpha}).\tag{15}\] This function attains its maximum absolute value at \(\lambda=\lambda_{m}:=(m\pi/t)^{2/\alpha},\;m=0,1,2,\dots\) (band-passes), and vanishes at \(\lambda=\lambda_{m+\frac{1}{2}}:=((2m+1)\pi/2t)^{2/\alpha}\) (stop-bands). Hence \(u_{\mathrm{W}}^{(i)}\) alternates between preserving and suppressing successive frequency intervals and thus acts as a multi-band filter. We summarize this in the following proposition.

Proposition 11 (Wave equation is multi-band). Fix a time \(t>0\) and, for simplicity, set the initial velocity to zero (\(c=0\)). Consider the wave kernel \(W^{t}=cos\left(t\sqrt{L^{\alpha}}\right)\) and define \(\Phi_t\) as in . Then the function \(\mathbf{x}\mapsto\Phi_t\mathbf{x}\) performs a multi-band-pass filtering.

Proof of . This follows from Eqn. 15 and the subsequent analysis of the maxima and zeros of \(\psi(\lambda)\). ◻

We now turn out attention to Proposition 2.

Proposition 2. (Identification of Connected Components) Let \(u^{(i)}(v,t)\) denote the solution to the heat equation, wave equation, or Sprott chaotic dynamics. Suppose that \(G\) is not connected. Then, for any \(v\) which is not in the same connected component as \(v_i\), and all \(t\geq0\), we have \(u^{(i)}(v,t) = 0.\)

Proof of Proposition 2. First observe that it suffices to prove the result for \(0\leq t\leq T\) where \(T\) is arbitrary (since we may then let \(T\rightarrow \infty\)).

Let \(u^{(i)}\) be a solution to the differential equation and consider the function \(\tilde{u}^{(i)}\) defined by \[\tilde{u}^{(i)}(v,t)=\begin{cases} u^{(i)}(v,t)\quad&\text{if } v\in \mathcal{C}\\ 0\quad&\text{otherwise} \end{cases},\] where \(\mathcal{C}\) is the connected component containing \(v_i\).

We observe that \(\tilde{u}^{(i)}\) is also a solution since the right hand side of each differential equation is localized in the sense that no energy passes between components and the initial condition has support contained in \(\mathcal{C}\). However, since the right hand side of all three differential equations is Lipschitz on \([0,T]\), Theorem 5, Section 6 of [65] implies that there is at most one solution to the differential equation and thus \(\tilde{u}^{(i)}=u^{(i)}\) on \(V\times [0,T]\). Therefore, since \(T\) was arbitrary, we have \(u^{(i)}(v,t)=0\) for all \(v\notin \mathcal{C}\).

Importantly, we note that the fractional Laplacian \(L^\alpha\) is not a graph shift operator, i.e., we may have \(L^\alpha_{i,j}\neq 0\) even if \(i\neq j\) and \(\{v_i,v_j\}\notin E\). However, it is still component-localized in the sense that \(L^\alpha_{i,j}\neq 0\) implies that \(v_i\) and \(v_j\) are in the same connected component. To see this, note that, if \(G\) is disconnected, then we can choose an ordering of the vertices so that \(L\) is block-diagonal (with the diagonal blocks corresponding to connected components). This implies that we may choose an eigenbasis such that each eigenvector \(\boldsymbol{\nu}_i\) has its support (non-zero entries) contained in a single connected component. Therefore, writing \(L^\alpha\) in its outer-product expansion, \[L^\alpha=\sum_{i=1}^n\lambda^\alpha \boldsymbol{\nu}_i\boldsymbol{\nu}_i^\mathsf{T}\] implies that \(L^\alpha\) is component-localized. ◻

Proposition 3. Let \(G\) be connected and let \(L\) be the random-walk Laplacian \(L_{rw}\) (with \(\alpha=1)\). Let \(u^{(i)}_H(v,t)\) be the solution to the heat equation, Eqn. 3 . Then \(u^{(i)}_H(v,t)>0\) for all \(v\in V\) and \(t>0\).

In order to prove Proposition 3, we need the following lemma which relates the heat equation to a continuous time random walker (see e.g., [66]) \(\{X^{\text{continuous}}_t\}_{t\geq 0}\) defined by \(X^{\text{continuous}}_t=X^\text{discrete}_{N(t)},\) where \(\{X^\text{discrete}_k\}_{k=0}^\infty\) is a standard discrete-time random walker (i.e., a walker who moves to a neighboring vertex at each discrete time step) and \(\{N(t)\}_{t\geq 0}\) is an ordinary Poisson process.

Lemma 1. Let \(u^{(i)}_H(v,t)\) be the solution to the heat equation with \(L\) chosen to be the random-walk Laplacian, \(L=L_{RW}=I-P\) where \(P=D^{-1}A\) and initial condition \(\delta_i\). Then \(u^{(i)}_H(\cdot,t)\) is the probability distribution of a continuous-time random walker started at \(v_i\) at time \(t\).

Proof of Lemma 1. We first note that \(L=L_{rw}\) can be written in terms of the symmetric normalized Laplacian \(L_s=I_n-D^{-1/2}AD^{-1/2}\) as \(L_{rw} = D^{-1/2} L_{s} D^{1/2}\). Therefore, \(L_{rw}\) is diagonalizable and may be written as \(L_{rw}=S\Lambda S^{-1}\) where \(L_s=U \Lambda U^{-1}\) is a diagonalization of \(L_s\) and \(S=D^{-1/2}U\). This allows us to write \[P = I-L_{rw} = S(I-\Lambda)S^{-1},\] which implies that for \(k\geq 0\), we have \[P^k = S(I-\Lambda)^kS^{-1}.\]

We next note that Eqn. 3 may be written as \(u^{(i)}_H(\cdot,t)= H^t\delta_i\), where \(H^t\) is the heat kernel defined by \[\label{eqn:32heat95kernel} H^t:= e^{-tL} =Se^{-t\Lambda} S^{-1}.\tag{16}\]

Therefore, it suffices to show that \(H^t\) is the \(t\)-second transition matrix of the continuous-time random walker \(X^{\text{continuous}}_t=X^{\text{discrete}}_{N(t)}\).

By the definition of a Poisson process, \(N(t)\) is a Poisson random variable with parameter \(t\). Thus, for \(k\geq 0,\) \(t\geq 0\), we have \[\mathbb{P}(N_t=k)=A_t(k) = t^k e^{-t}/ k!.\] We next observe that for all \(\mu\in\mathbb{R}\) we have \[\label{eqn:poisson} e^{-t(1- \mu)} = e^{-t}\sum_{k\geq 0}\frac{(t\mu)^k}{k!} = \sum_{k\geq 0} A_t(k)\mu^k.\tag{17}\] Substituting \(\lambda = 1 - \mu\) in Eqn. 17 links the eigenvalues of \(U^t_H\) and \(P\) by \[\begin{align} U^t_H &= Se^{-t\Lambda}S^{-1}= S \sum_{k\geq 0}A_t(k)(I_n - \Lambda)^k S^{-1} \\ &= \sum_{k\geq 0} A_t(k)P^k. \end{align}\] This implies that \(H^t\) is the \(t\)-second transition matrix of the continuous-time random walker and thus completes the proof. ◻

Now we prove Proposition 3.

Proof of Proposition 3. Let \(v\in V\) be arbitrary. As shown in , \(u^{(i)}_H(\cdot,t)\) is the probability distribution of a continuous-time random walk with initial distribution \(\delta_i\) at time \(t\). Therefore, if \(d\) is the length of the shortest path from \(v\) to \(v_i\), then \[\begin{align} u_H^{(i)}(v,t)&=\mathbb{P}(X^{\text{continuous}}_t=v|X^{\text{discrete}}_0=v_i)\\&\geq \mathbb{P}(N(t)=d)\mathbb{P}(X^{\text{discrete}}_d=v|X^{\text{discrete}}_0=v_i)>0. \qedhere \end{align}\] ◻

Proposition 4.(Heat energy) Let \(G\) be connected, and let \(u^{(i)}_H(v,t)\) be as in Eqn. 3 and let \(u^{(i)}_H(t)=u^{(i)}_H(\cdot,t)\). Then, \[\label{eqn:heat95energy95bounds} e^{-2t \lambda_n^\alpha} \leq \| u^{(i)}_H(\cdot, t)\|_2^2 \leq |\boldsymbol{\nu}_1(i)|^2 + e^{-2t\lambda_2^\alpha}\qquad{(1)}\] for all \(t > 0\). Furthermore, as \(t\) converges to infinity we have \[\label{eqn:heat95energy95bounds952} \lim_{t \rightarrow \infty} u^{(i)}_H(t) = \langle \boldsymbol{\nu}_1, \delta_i \rangle \boldsymbol{\nu}_1=\boldsymbol{\nu}_1(i)\boldsymbol{\nu}_1.\qquad{(2)}\]

Proof of Proposition 4. From Eqn. 3 , and the fact that \(\{\boldsymbol{\nu}_k\}_{k=1}^n\) is an ONB, we see \[\begin{align} \|u^{(i)}_H(t) \|_2^2 &= \left\langle \sum_{k=1}^n e^{-t\lambda_k^\alpha}\langle \boldsymbol{\nu}_k,\delta_i\rangle \boldsymbol{\nu}_k, \sum_{k=1}^n e^{-t\lambda_k^\alpha} \langle \boldsymbol{\nu}_k,\delta_i\rangle \boldsymbol{\nu}_k\right\rangle \nonumber\\ &= \sum_{k = 1}^n e^{-2t\lambda_k^\alpha} |\langle \boldsymbol{\nu}_k, \delta_i \rangle|^2. \label{eqn:heat95ONB} \end{align}\tag{18}\] Thus, since \(\lambda_1=0\), upper bound in Eqn. ?? is obtained by: \[\begin{align} \|u^{(i)}_H(t)\|_2^2 &= \sum_{k = 1}^n e^{-2t\lambda_k^\alpha} |\langle \boldsymbol{\nu}_k, \delta_i \rangle|^2 \\ &= |\langle \boldsymbol{\nu}_1, \delta_i \rangle|^2 + \sum_{k = 2}^n e^{-2t\lambda_k^\alpha} |\langle \boldsymbol{\nu}_k, \delta_i \rangle|^2 \\ &\leq |\langle \boldsymbol{\nu}_1, \mathbf{x} \rangle|^2 + e^{-2t \lambda_2^\alpha} \sum_{k = 2}^n |\langle \boldsymbol{\nu}_k, \delta_i \rangle|^2 \\ &\leq |\langle \boldsymbol{\nu}_1, \delta_i \rangle|^2 + e^{-2t\lambda_2^\alpha}. \end{align}\]

The lower bound in Eqn. ?? may be obtained by noting \[\begin{align} \sum_{k = 1}^n e^{-2t\lambda_k^\alpha} |\langle \boldsymbol{\nu}_k, \delta_i \rangle|^2 &\geq e^{-2t\lambda_n^\alpha} \sum_{k = 1}^n |\langle \boldsymbol{\nu}_k, \delta_i \rangle|^2 = e^{-2t\lambda_n^\alpha}. \end{align}\] Eqn. ?? immediately follows Eqn. 18 and the fact that \(0 =\lambda_1< \lambda_2 \leq \ldots \leq \lambda_n\). ◻

Proposition 5. (Heat energy between graphs) Let \(G\) and \(G'\) be graphs on \(n\) vertices with fractional Laplacians \(L_G^\alpha\) and \(L_{G'}^\alpha\) and let \(\delta_i\) and \(\delta_{i'}\) be initial conditions for Eqn. 1 on \(G\) and \(G'\). Assume: (i) \(L_{G'}^\alpha \succcurlyeq L_G^\alpha\), i.e., \(\mathbf{v}^\mathsf{T} L_{G'}^\alpha \mathbf{v} \geq \mathbf{v}^\mathsf{T} L_{G}^\alpha \mathbf{v}\:\:\text{for all }\mathbf{v}\in\mathbb{R}^n,\) (ii) We have \(| \boldsymbol{\nu}_k'(i)|^2 \leq (1 + \eta_k(t)) |\boldsymbol{\nu}_k(i)|^2\) for all \(1\leq k \leq n,\) where we also assume \(\eta_k(t) := \exp(2t ((\lambda_k')^\alpha-\lambda_k^\alpha)) - 1 \geq 0.\) Then, with \(u_H\) and \(u_H'\) defined as in Eqn. 3 , we have \(\|(u^{(i)}_H)'(\cdot,t)\|^2_2 \leq \|u^{(i)}_H(\cdot, t)\|_2^2.\)

Proof of Proposition 5. By Eqn. 18 , we have

\[\begin{align} &\|u^{(i)}_H(\cdot, t)\|_2^2-\|(u^{(i)}_H)'(\cdot,t)\|^2_2 \\ &= \sum_{k = 1}^n e^{-2t\lambda_k^\alpha} |\langle \boldsymbol{\nu}_k, \delta_i \rangle|^2 - e^{-2t\lambda_k'^\alpha}|\langle \boldsymbol{\nu}_k', \delta_{i'} \rangle|^2 \\ &\geq \sum_{k = 1}^n \left[ e^{-2t\lambda_k^\alpha} - e^{-2t\lambda_k'^\alpha}(1 + \eta_i(t)) \right] |\langle \boldsymbol{\nu}_k, \delta_i \rangle|^2 \\& =0.\qedhere \end{align}\] ◻

Proposition 8. (Wave energy bounds) Let \(u_W^{(i)}(v,t)\) be the solution to the fractional wave equation Eqn. 4 with initial conditions \(u_W^{(i)}(\cdot,0) = \delta_i\) and \(\partial_t u_W^{(i)}(\cdot,0) = 0\). Then, for any time \(t \geq 0\), the energy of the waveform satisfies \(|\boldsymbol{\nu}_1(i)|^2 \leq \| u_W^{(i)}(\cdot,t) \|_2^2 \leq 1.\)

Proof of Proposition 8. The proof is similar to the proof of Eqn. ?? . By the same reasoning as in Eqn. 18 , we have \[\begin{align} \label{eqn:wave95energy95expression} \|u^{(i)}_W(t)\|_2^2 &= \sum_{k = 1}^n \cos^2\left(\sqrt{\lambda_k^\alpha}t\right) |\langle \boldsymbol{\nu}_k,\delta_i\rangle|^2. \end{align}\tag{19}\] Therefore, \[\begin{align} \|u^{(i)}_W(t)\|_2^2&= \sum_{k = 1}^n \cos^2(\sqrt{\lambda_k^\alpha}t) |\langle \boldsymbol{\nu}_k,\delta_i\rangle|^2 \leq \sum_{k = 1}^n |\langle \boldsymbol{\nu}_k,\delta_i\rangle|^2 = \|\delta_i\|_2^2=1. \end{align}\] The lower bound follows by noting that since \(\lambda_1=0\) we have: \[\begin{align} \|u^{(i)}_W(t)\|_2^2&= \sum_{k = 1}^n \cos^2(\sqrt{\lambda_k^\alpha}t) |\langle \boldsymbol{\nu}_k,\delta_i\rangle|^2 \\ &\geq \cos^2(\sqrt{\lambda_1^\alpha}t) |\langle \boldsymbol{\nu}_1,\delta_i\rangle|^2 \\ &= |\langle \boldsymbol{\nu}_1,\delta_i\rangle|^2.\\ &= |\boldsymbol{\nu}_1(i)|^2.\qquad\qquad\qquad\qquad\qquad\qquad\qquad\qedhere \end{align}\] ◻

Proposition 9. (Recovery of eigenspectrum from waveforms) Let \(G\) be connected, and let \(u_W^{(i)}(v,t)\) be the solution to the fractional wave equation (Eqn. 4 ), with initial conditions \(u_W^{(i)}(\cdot,0) = \delta_i\) and \(\partial_t u_W^{(i)}(\cdot,0) = 0\). Then, for any fixed node \(v\), the sequence of values \(u_W^{(i)}(v, t_1), \dots, u_W^{(i)}(v, t_m)\) obtained from time samples can be used to approximate the full Laplacian eigenspectrum \(\{ \lambda_k^\alpha \}_{k=1}^n\) up to arbitrary precision, provided sufficient time resolution.

Proof. Fix \(v\). Since \(\mathbf{y}=0,\) we may rewrite Eqn. 4 as \[f(t)\mathrel{\vcenter{:}}= u^{(i)}_W(v,t) = \sum_{k = 1}^n \cos(\sqrt{\lambda_k^\alpha} t) c_k(v)\] where \(c_k(v) = \langle \boldsymbol{\nu}_k,\delta_i\rangle \boldsymbol{\nu}_k(v)\) is a constant with respect to time and depends only on the node position.

Now, let \(\epsilon >0\) be a degree of precision and choose \(K\) such that \(\frac{1}{K}<\epsilon\). Approximate \[f(t)\approx \tilde{f}(t)\mathrel{\vcenter{:}}= \sum_{k=1}^n\cos(a_k t)c_k(v)\] where \(a_k\) is the multiple of \(1/K\) such that \(|a_k-\sqrt{\lambda_k^\alpha}|<\epsilon\). The function \(\tilde{f}\) has a finite Fourier expansion and therefore is uniquely characterized by finitely many samples which allows us to recover the \(a_k\) and thus approximately recover the \(\lambda_k\). ◻

Corollary 1. (Cycle Length) The size of cycle graph \(C_n\) can be determined from the solution to the fractional wave equation at a single node \(v\).

Proof. Denote \(C_n\) the cycle graph with vertices numbered \(0, \ldots, n-1\) and edges \((v, v+1)\) modulo \(n\). Since \(C_n\) is 2-regular, the unnormalized and normalized Laplacian differ only by a constant multiple of 2. Therefore, without loss of generality we will focus on the unnormalized Laplacian.

It is known that an orthogonal eigenbasis is given by \(\{\phi_k\}_{k = 0}^{k = \lfloor n/2 \rfloor } \cup \{\psi_k\}_{k = 1}^{\lceil (n-1)/2 \rceil}\) defined: \[\begin{align} \phi_k(v) = \cos \left( \frac{2 \pi kv }{n} \right), \qquad \psi_k(v) = \sin \left( \frac{2 \pi k v}{n} \right), \end{align}\] where the corresponding eigenvalues are given by \[\label{eq:eigen95value95period} \lambda_k = 2 - 2 \cos \left( \frac{2 \pi k }{n} \right) = 4 \sin^2 \left( \frac{\pi k }{n} \right)\tag{20}\]

Consider the case where the wave equation has an initial condition of \(\mathbf{y} = 0\) and the initial signal is given by \(\mathbf{x} = \delta_a\). The solution to this equation at a fixed node \(v\) is given by:

\[\begin{align} \label{eq:wave95sol95cycle} u_W(t) = &\sum_{k = 1}^{\lfloor \frac{n}{2} \rfloor} \cos \left( 2 t \sin \left( \frac{\pi k }{n} \right) \right) \cos( 2 \pi k a /n) \phi_k/ \| \phi_k\|^2 \nonumber \\ &+ \sum_{k=1}^{\lceil (n-1)/2 \rceil} \cos \left( 2 t \sin \left( \frac{\pi k }{n} \right) \right) \sin( 2 \pi k a/n) \psi_k/\|\psi_k\|^2,\\ &+\phi_0/\|\phi_0\|^2 \end{align}\tag{21}\] where the third term in Eqn. 21 corresponding to the smallest non-zero eigenvalue is nonzero. By Proposition 9 each of the \(\lambda_k\) are uniquely determined by our measurements and \[n = \frac{2 \pi}{\cos ^{-1} \left( \frac{2-\lambda_1}{2} \right)}.\]

Thus \(n\) is uniquely determined by our measurements. ◻

10 Implementation Details↩︎

10.1 Experimental Computation Resources↩︎

All experiments were conducted on a high-performance computing server equipped with an Intel Xeon Gold 6240 CPU (18 cores, 36 threads, 2.60 GHz base frequency) and 730 GB of system RAM. The server is configured with 4 NVIDIA A100 GPUs, each with 40 GB of VRAM, enabling efficient parallel training of deep learning models. The system runs on Red Hat Enterprise Linux 8.8 with CUDA version 11.8 and cuDNN 8.5.0. Experiments were executed using PyTorch 2.0.0 and Python 3.10. Unless otherwise stated, all models were trained using mixed precision to optimize memory usage and throughput.

10.2 DYMAG Parameters↩︎

Here we describe the architecture and training setup of DYMAG used to generate the experimental results presented in this paper. DYMAG supports both node-level and graph-level tasks, and is evaluated on datasets comprising either a single large graph (e.g., citation networks such as PubMed) or collections of small graphs (e.g., synthetic graphs, molecular graphs, and protein structures).

We use a stacked architecture consisting of \(L = 3\) DYMAG layers, each simulating \(K\) discrete time steps of heat or wave dynamics, where \(K\) is selected via grid search specific to each dataset. Between layers, we apply a 3-layer MLP with LeakyReLU activations for node-wise transformation. For all downstream tasks, we use a 5-layer MLP with LeakyReLU activations as the task-specific head.

For node-level tasks such as node classification in citation graphs, we directly use the hidden node embeddings produced by DYMAG and feed them into the 5-layer MLP to perform classification or regression. For datasets composed of multiple small graphs (e.g., synthetic Erdos–Renyi graphs, molecular graphs, etc.), we apply mean pooling across the node dimension to obtain a graph-level embedding. This pooled embedding is passed to the same 5-layer MLP for classification or regression.

10.3 Parameters for Sprott Dynamics↩︎

For Sprott dynamics (), in our experiments, we set \(b=0.25\) and the coupling coefficients as \(\smash{c_{k,j} \sim \frac{1}{\sqrt{n-1}} \left(2 \cdot \mathrm{Bernoulli}(0.5) - 1\right)}\), which assigns each \(c_{k,j}\) a value of \(\pm \frac{1}{\sqrt{n-1}}\) with equal probability.

11 Additional Experiments↩︎

| Dataset | DYMAG(Heat) | DYMAG(Wave) | DYMAG(Sprott) | MPNN | GAT | GIN | GWT | GraphGPS | GRAND | GRAND++ | Neural EPD Approx. |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Ollivier–Ricci Curvature (\(\kappa\)) | |||||||||||

| 1-12 ER (\(p=0.04\), \(n=100\)) | 1.86e-01 | 1.93e-01 | 7.44e+00 | 3.20e+01 | 2.37e+01 | 5.93e+00 | 3.60e-01 | 1.14e+01 | 1.10e+01 | 1.48e+01 | |

| (4.01e-03) | (8.78e-03) | (2.73e-01) | (1.28e+00) | (5.45e-01) | (2.37e-01) | (2.96e-02) | (1.51e-01) | (3.28e-01) | (1.80e-01) | ||

| ER (\(p=0.06\), \(n=100\)) | 1.80e-01 | 1.76e-01 | 7.39e+00 | 3.19e+01 | 2.13e+01 | 2.06e+00 | 3.63e-01 | 8.58e+00 | 9.26e+00 | 1.61e+01 | |

| (8.03e-03) | (5.28e-03) | (4.47e-01) | (5.47e-01) | (2.08e+00) | (2.62e-02) | (1.34e-02) | (9.51e-02) | (5.83e-01) | (6.00e-01) | ||

| ER (\(p=0.08\), \(n=100\)) | 1.78e-01 | 1.79e-01 | 6.81e+00 | 3.19e+01 | 2.99e+01 | 8.62e-01 | 3.47e-01 | 9.13e+00 | 2.27e+00 | 1.35e+01 | |

| (8.39e-03) | (2.83e-03) | (2.23e-01) | (1.33e+00) | (2.74e-01) | (9.19e-02) | (2.15e-02) | (3.42e-01) | (2.18e-02) | (3.76e-01) | ||

| ER (\(p=0.04\), \(n=200\)) | 3.63e-01 | 3.58e-01 | 1.52e+00 | 1.81e+01 | 6.74e+00 | 7.86e-01 | 7.39e-01 | 3.07e+00 | 5.90e-01 | 2.06e+00 | |

| (9.09e-03) | (1.47e-02) | (8.83e-02) | (1.01e+00) | (5.36e-01) | (1.93e-02) | (6.13e-02) | (8.83e-02) | (5.74e-02) | (1.36e-02) | ||

| ER (\(p=0.06\), \(n=200\)) | 3.19e-01 | 2.63e-01 | 5.28e-01 | 1.74e+01 | 4.39e+00 | 3.39e-01 | 6.86e-01 | 1.61e+00 | 7.38e-01 | 1.82e+00 | N/A |

| (6.32e-02) | (1.03e-02) | (1.47e-02) | (1.88e-01) | (1.76e-01) | (1.68e-02) | (8.37e-03) | (7.56e-02) | (9.22e-03) | (2.54e-02) | ||

| ER (\(p=0.08\), \(n=200\)) | 2.14e-01 | 2.57e-01 | 5.73e-01 | 1.55e+01 | 6.18e+00 | 4.27e-01 | 5.93e-01 | 9.92e-01 | 4.33e-01 | 1.76e+00 | |

| (9.55e-03) | (5.22e-02) | (1.35e-02) | (7.13e-01) | (1.41e-01) | (2.64e-02) | (6.76e-03) | (3.25e-02) | (1.28e-02) | (5.41e-02) | ||

| Cora | 1.73e-02 | 1.85e-02 | 1.34e-01 | 2.40e-01 | 7.36e-01 | 1.56e-01 | 4.07e-02 | 4.41e-01 | 6.81e-02 | 1.74e-01 | |

| (4.44e-04) | (2.99e-03) | (5.98e-03) | (8.12e-03) | (3.65e-02) | (2.05e-03) | (5.47e-04) | (2.16e-02) | (1.02e-03) | (5.03e-03) | ||

| Citeseer | 2.09e-02 | 3.41e-02 | 1.46e-01 | 2.20e-01 | 9.04e-01 | 1.72e-01 | 3.58e-02 | 2.82e-01 | 1.24e-01 | 3.09e-01 | |

| (1.04e-03) | (2.29e-02) | (5.76e-03) | (2.82e-03) | (8.06e-03) | (1.51e-03) | (6.59e-04) | (2.63e-02) | (5.66e-03) | (5.61e-03) | ||

| PubMed | 7.30e-03 | 6.51e-03 | 2.97e-02 | 1.69e+00 | 1.55e-01 | 8.34e-03 | 9.15e-03 | 7.68e-01 | 2.73e-02 | 8.72e-02 | |

| (3.00e-05) | (1.68e-04) | (2.67e-03) | (9.92e-02) | (7.06e-03) | (5.55e-04) | (5.12e-04) | (1.38e-02) | (6.70e-04) | (1.11e-03) | ||

| Extended Persistence Image (EP) | |||||||||||

| 1-12 ER (\(p=0.04\), \(n=100\)) | 1.48e-02 | 6.37e-03 | 6.63e-01 | 7.83e-01 | 3.82e+00 | 4.83e-01 | 3.76e-02 | 9.17e-01 | 7.72e-01 | 2.39e+00 | 3.07e-03 |

| (1.26e-03) | (3.30e-03) | (1.01e-02) | (1.25e-02) | (1.41e-01) | (1.90e-02) | (3.46e-03) | (4.13e-02) | (4.33e-02) | (1.08e-01) | (5.43e-05) | |

| ER (\(p=0.06\), \(n=100\)) | 8.65e-03 | 2.79e-03 | 6.24e-01 | 7.35e-01 | 1.57e+00 | 4.09e-01 | 3.54e-02 | 6.31e-01 | 5.72e-01 | 1.15e+00 | 1.37e-03 |

| (7.91e-04) | (1.42e-03) | (7.97e-03) | (1.46e-02) | (7.12e-02) | (2.64e-02) | (2.64e-03) | (5.82e-02) | (3.03e-02) | (5.05e-02) | (2.26e-05) | |

| ER (\(p=0.08\), \(n=100\)) | 8.82e-03 | 2.54e-03 | 5.71e-01 | 9.46e-01 | 6.65e-01 | 3.92e-02 | 3.29e-02 | 4.85e-01 | 1.07e-02 | 8.59e-01 | 1.90e-03 |

| (2.59e-04) | (6.41e-04) | (1.57e-02) | (5.08e-02) | (6.56e-02) | (1.86e-03) | (2.03e-03) | (7.19e-03) | (1.14e-04) | (5.42e-02) | (4.81e-05) | |

| ER (\(p=0.04\), \(n=200\)) | 8.91e-03 | 5.18e-03 | 8.04e-02 | 6.73e-01 | 1.93e-01 | 3.72e-02 | 2.88e-02 | 3.92e-01 | 7.31e-02 | 2.85e-01 | 3.24e-03 |

| (9.43e-05) | (1.94e-03) | (2.70e-03) | (2.66e-02) | (2.18e-03) | (2.92e-03) | (1.54e-03) | (2.58e-02) | (2.31e-03) | (1.84e-02) | (1.28e-05) | |

| ER (\(p=0.06\), \(n=200\)) | 7.41e-03 | 4.76e-03 | 1.39e-01 | 7.29e-01 | 5.48e-01 | 3.69e-02 | 2.57e-02 | 3.68e-01 | 8.39e-02 | 3.28e-01 | 4.30e-03 |

| (1.23e-04) | (4.62e-04) | (4.08e-03) | (2.10e-02) | (2.81e-02) | (5.56e-04) | (8.74e-04) | (5.41e-03) | (4.93e-03) | (2.78e-02) | (5.53e-05) | |

| ER (\(p=0.08\), \(n=200\)) | 4.57e-03 | 1.62e-03 | 1.35e-01 | 1.28e+00 | 1.87e-01 | 3.96e-02 | 2.43e-02 | 3.12e-01 | 4.12e-02 | 3.48e-01 | 1.12e-03 |

| (6.46e-05) | (5.01e-04) | (3.22e-03) | (9.03e-02) | (1.52e-02) | (9.58e-04) | (5.02e-04) | (1.15e-02) | (6.35e-04) | (4.65e-03) | (1.73e-05) | |

| Cora | 1.45e-04 | 7.41e-05 | 3.51e-03 | 2.72e-03 | 5.04e-02 | 5.53e-03 | 6.79e-04 | 2.71e-02 | 8.13e-04 | 8.16e-03 | 5.80e-05 |

| (9.14e-06) | (1.61e-05) | (3.30e-04) | (1.17e-04) | (3.90e-03) | (1.73e-04) | (1.53e-05) | (1.40e-03) | (2.59e-05) | (1.37e-04) | (3.22e-07) | |

| Citeseer | 3.94e-04 | 1.58e-04 | 7.64e-03 | 6.83e-03 | 2.93e-02 | 3.94e-03 | 9.12e-04 | 3.16e-02 | 6.87e-04 | 4.21e-03 | 1.29e-04 |

| (2.45e-05) | (2.91e-05) | (6.28e-04) | (6.02e-04) | (1.00e-03) | (7.05e-05) | (4.80e-05) | (7.36e-04) | (2.17e-05) | (7.09e-05) | (9.21e-07) | |

| PubMed | 7.93e-03 | 3.29e-03 | 8.96e-02 | 4.29e-01 | 4.79e-01 | 1.27e-01 | 2.16e-02 | 1.94e-01 | 3.37e-02 | 3.07e-01 | 2.74e-03 |

| (3.55e-04) | (5.51e-04) | (5.26e-03) | (2.60e-02) | (3.41e-02) | (1.13e-02) | (2.77e-04) | (1.58e-02) | (7.31e-04) | (2.06e-02) | (6.85e-05) | |

| ogbn-mag | 5.39e-02 | 8.01e-03 | – | 2.93e-01 | 4.47e-01 | 2.49e-01 | 5.83e-02 | 3.78e-01 | – | – | 6.73e-03 |

| (5.95e-04) | (1.28e-03) | (5.77e-03) | (1.89e-02) | (3.04e-03) | (8.10e-04) | (1.24e-02) | (1.04e-04) | ||||

| ogbn-papers100M | 9.03e-02 | 3.25e-02 | – | 6.19e-01 | 8.03e-01 | 6.43e-01 | 2.71e-01 | 4.05e-01 | – | – | 3.18e-02 |

| (6.49e-03) | (7.21e-04) | (2.36e-02) | (4.13e-02) | (1.15e-02) | (2.15e-02) | (1.12e-02) | (1.05e-04) | ||||

We present further experimental validation of DYMAG, focusing on its ability to predict both biomolecular properties and topological descriptors such as curvature.

| Model | ENZYMES | PROTEINS | MUTAG |

|---|---|---|---|

| DYMAG(Heat) | \(0.82 \pm 0.02\) | \(0.54 \pm 0.04\) | \(0.79 \pm 0.02\) |

| DYMAG(Wave) | \(0.79 \pm 0.02\) | \(0.71 \pm 0.02\) | \(0.83 \pm 0.02\) |

| DYMAG(Sprott) | \(0.60 \pm 0.04\) | \(0.64 \pm 0.03\) | \(0.74 \pm 0.03\) |

| MPNN | \(0.63 \pm 0.01\) | \(0.67 \pm 0.01\) | \(0.76 \pm 0.02\) |

| GAT | \(0.65 \pm 0.01\) | \(0.64 \pm 0.01\) | \(0.75 \pm 0.02\) |

| GIN | \(0.68 \pm 0.01\) | \(0.69 \pm 0.02\) | \(0.77 \pm 0.02\) |

| GWT | \(0.66 \pm 0.01\) | \(0.66 \pm 0.02\) | \(0.72 \pm 0.02\) |

| GraphGPS | \(0.70 \pm 0.02\) | \(0.68 \pm 0.02\) | \(0.78 \pm 0.02\) |

| GRAND | \(0.71 \pm 0.02\) | \(0.65 \pm 0.02\) | \(0.76 \pm 0.02\) |

| GRAND++ | \(0.74 \pm 0.01\) | \(0.69 \pm 0.01\) | \(0.80 \pm 0.02\) |

In , we present the complete results for predicting Ollivier–Ricci curvature and extended persistence images using synthetic and real-world datasets comprising of Erdős–Rényi graphs and citation networks. For all experiments, DYMAG is trained using a uniform signal as input. Ground truth Ollivier–Ricci curvature values are computed directly from the adjacency matrix, and persistence images are generated using node degree as the filtration function. Results are reported as mean with standard deviation in parentheses. Across all settings, DYMAG variants with heat or wave dynamics consistently outperform baseline methods. Given the high computational cost of computing Ollivier–Ricci curvature on large graphs, we restricted the PubMed evaluation to a subgraph comprising the 2,000 most highly cited papers and omitted this evaluation for OGBN-MAG and OGBN-Papers100M.

In Table 4, we evaluated the performance of DYMAG on three publicly available datasets for biomolecular graph classification from the TUDatasets benchmark [67]. The ENZYMES dataset [68] consists of protein secondary structures with ground truth annotations of catalytic activity. In the PROTEINS dataset [21], the task is to classify whether a protein functions as an enzyme. The MUTAG dataset [69], contains small nitroaromatic compounds, and the task is to classify their mutagenicity on the S.typhimurium bacterium. DYMAG achieves strong validation accuracy across all datasets, with the wave equation variant performing best on PROTEINS and MUTAG.