Dropout Drops Double Descent

May 25, 2023

1 Introduction↩︎

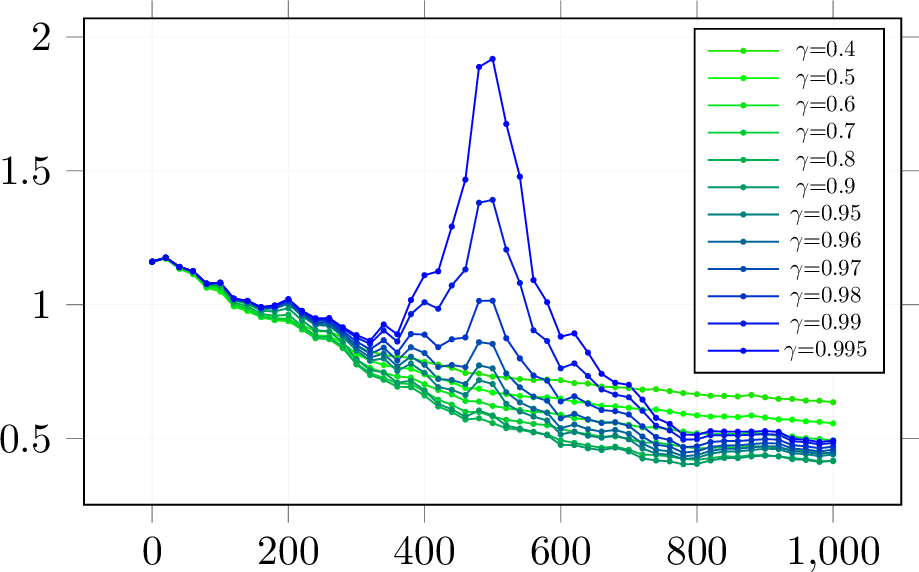

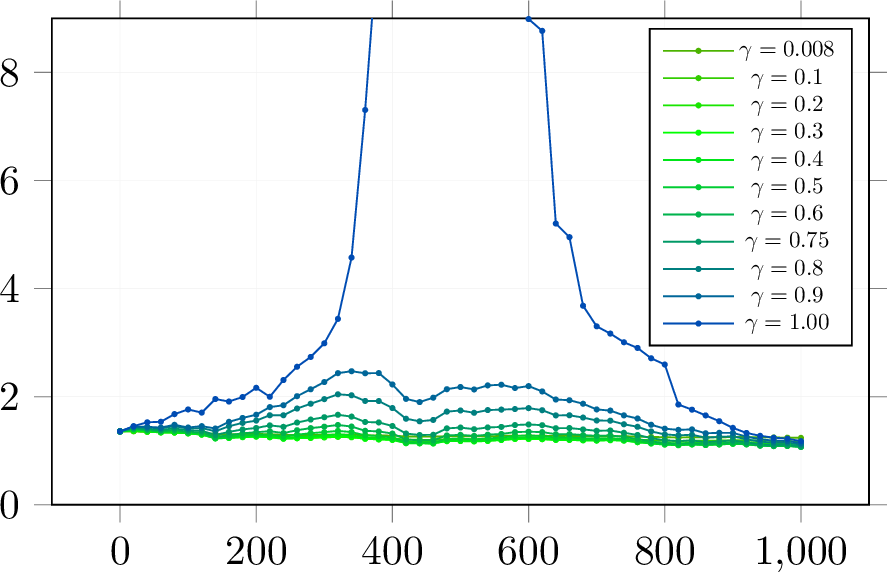

Recent investigations have shown that over-parameterized models, including linear regression and neural networks [1]–[7], demonstrate significant generalization capabilities, even when the labels are influenced by pure noise. This unique characteristic has attracted considerable academic attention, posing significant challenges to traditional generalization theory. A key framework, "Double Descent," helps explain this behavior [1]. In the under-parameterized realm, as we increase the number of model parameters or sample sizes, the test error initially shows a reduction, as illustrated by the peak curve in Figure 1. Intriguingly, as we transition into the over-parameterized domain, instead of increasing, the test error continues to decrease, revealing an unexpected secondary descent phase.

Figure 1: Test Risk of Sample-Wise Double Descent with Dropout. \(\gamma\) denotes the probability of dropout as \(R\). The number in the legend is the present probability. \(p\;=\;500\) and the sample size of the x-axis. Here \(x\sim\mathcal{N}(0,I_p)\), \(\mathrm{y}=x^\top\beta^*+\epsilon\), \(\epsilon\sim\mathcal{N}(0,0.25),\;\beta^*\sim\mathcal{U}(0,1)\) and \(||\beta^*||_2=1\).

This peak phenomenon was first observed as early as three decades ago [4], [6], and its re-emergence in recent years [1], [7] underlines the significant role it plays in research within the over-parameterized regime.

A primary objective of machine learning algorithms is to provide accurate out-of-sample predictions—a quality known as generalization. Traditional generalization theory presents a ‘U-shaped’ risk curve derived from the bias-variance trade-off [8], which suggests the optimal model selection occurs prior to the interpolation point (when \(n=p\)). This trade-off suggests that a small hypothesis class lacks the expressive power necessary to include the truth function. Conversely, a larger class may introduce spurious overfitting patterns. However, in contrast to this traditional view, the double-descent behavior, marked by a "\(\verb|\/\|\)"-shaped trend with increasing model size, implies that we can discover a superior model with zero train and test error without succumbing to overfitting.

The reason behind the relatively recent surge in attention towards the double descent phenomenon is somewhat elusive, but the widespread adoption of regularization methods, such as ridge regularization [3], [9] and early stopping [10], designed to nullify double descent, might provide some explanation. In this study, we focus on one of the most popular regularization methods—dropout.

Dropout is a well-established regularization technique for training deep neural networks. It aims to prevent ‘co-adaptation’ among neurons by randomly excluding them during training [11]. Dropout’s effectiveness extends across a wide range of machine learning tasks, from classification [12] to regression [13]. Notably, dropout was a vital component in the design of AlexNet [14], which significantly outperformed its competitors in the 2012 ImageNet challenge. Due to dropout’s proven efficiency in avoiding overfitting [12] and its broad application scope, we propose that it may significantly mitigate the double descent phenomenon. This leads us to the following question:

Under what conditions and how does dropout mitigate the double descent phenomenon?

We recognize that the double-descent phenomenon exists under both sample-wise and model-wise conditions. This paper considers its occurrence in both linear and nonlinear models, to improve test performance without unexpected non-monotonic responses. The elimination of double descent has indeed become a hot research topic. For instance, ridge regularization can alleviate double descent [9], as can early stopping [10].

We explore a well-specified linear regression model utilizing dropout with \({r}_{ij}\sim\mathcal{B}er(\gamma),{r}\in\{0,1\}^{n\times p},\gamma>0,{X}\in\mathbb{R}^{n\times p}, y\in\mathbb{R}^n, {\beta}\in\mathbb{R}^{p}\), aiming to minimize the empirical risk: \[L=||{y}-({r}\ast {X}){\beta}||_2^2\;,\] where \(\ast\) denotes an element-wise product, serving to drop parameters during the training phase randomly. Dropout aids in preventing overfitting and offers a means to efficiently combine a wide range of different neural network architectures [12].

Our Contributions. Our study tackles the aforementioned question using theoretical and empirical methodologies. Theoretically, we explore the simplest linear regression with dropout regularization, which echoes the influence observed in general ridge regression [15]. When considering the test error—which includes both the bias and variance of a well-formulated linear regression model that employs dropout for isotropic Gaussian features1—we adopt a non-asymptotic perspective. Although we couldn’t secure an exact solution to substantiate the monotonic decline of the test error, we devised an alternative approach. Through the application of Taylor series expansion, we obtained an approximate solution, providing persuasive evidence supporting the continuous decrease of the test error. On the empirical front, our numerical experiments demonstrate that the dropout technique can effectively mitigate the double descent phenomenon in both linear and nonlinear models. In more specific terms, we demonstrate:

Eliminating the Sample-Wise Double Descent. We empirically validate the monotonicity of the test error as the sample size increases (see Figure 1) and theoretically prove the monotonicity of the second-order Neumann series test error. We plan to detail the exact solution in future work.

Eliminating the Model-Wise Double Descent. We empirically demonstrate the monotonicity of the test error as the model size increases.

Multi-layer CNN. We provide empirical evidence showing that dropout can alleviate the double descent in multi-layer CNNs.

1.1 Related works↩︎

Dropout. The purpose of dropout, as proposed in [12], is to alleviate overfitting, and numerous variants of this technique have been further examined in [16]–[22]. As for the theory behind dropout, [23] demonstrates that it functions as an adaptive regularization. [24] postulates that dropout operates akin to a Bayesian approximation algorithm—specifically a Gaussian Process, incorporating an element of uncertainty into the functioning of black-box neural networks. Additionally, several studies have addressed the Rademacher complexity of dropout [25], and its implicit and explicit regularization [26], [27].

Generalized Ridge Regression. The dropout estimator resembles a generalized ridge estimator, represented as \(\hat{{\beta}}=({X}^\top{X}+\lambda{\Sigma_w})^{-1}{X}^\top{y}\), with \({\Sigma_w}\) being the weighted matrix and \(\lambda>0\). Generalized ridge regression was first introduced in [28], with numerous developments discussed in [15], [29]–[34]. Nevertheless, these estimators are typically contemplated when \(n > p\). Hence, their impact in high-dimensional and over-parameterized regimes is scarcely known. [35] recently provided an asymptotic view of the weighted \(\ell_2\) regularization in linear regression.

Dropping Double Descent. Several studies have aimed to counteract the double descent phenomenon. [10] illustrates that early stopping can attenuate double descent. [9] argues that optimal ridge regularization has a similar effect in the non-asymptotic view, a finding that aligns with our study. [3] further sheds light on ridge regularization, illustrating a trend towards the same test error as the tail of double descent in model size.

2 Background↩︎

We consider linear regression in which \(p\) (\(\geq 1\)) covariates \(x\in {\mathbb{R}}^p\) and response \(\mathrm{y}\in {\mathbb{R}}\) are related by \[\label{eqj-1} \mathrm{y} = {x}^\top\beta_0+\epsilon\;,\;\epsilon\sim\mathcal{N}(0,\sigma^2)\tag{1}\] with unknown \({\beta}_0\in\mathbb{R}^p\) and \(\sigma^2>0\), where the occurrences of \(\epsilon\) is independent from those of \(x\), and we estimate \(\beta_0\) from \(n\)(\(\geq 1\)) i.i.d. training data \((x_1,\mathrm{y}_1),\ldots,(x_n,\mathrm{y}_n) \in {\mathbb{R}}^p\times {\mathbb{R}}\).

In particular, we assume that the covariates are generated by \[\label{eqj-2} {x} \sim \mathcal{N}(0,\;I_p)\;.\tag{2}\] Thus, the covariates and response have the joint distribution \(\mathcal{D}\) defined by (1 ) and (2 ), and we express \(z^n:=\{({x}_i,\mathrm{y}_i)\}^n_{i=1}\sim \mathcal{D}^n\) for the training data. For each \(\beta\in {\mathbb{R}}^p\), we define \[\label{eqj-3} R({\beta}) := \mathop{\mathbb{E}}\limits_{({x,\mathrm{y}})\sim\mathcal{D}}[({x}^\top{\beta}-{\mathrm{y}})^2],\tag{3}\] where \(\mathop{\mathbb{E}}\limits_{(x,\mathrm{y})\sim\mathcal{D}}[\cdot]\) is the expectation w.r.t. the distribution \(\mathcal{D}\).

Suppose we estimate \(\beta\) from the training data \(z^n\) by \(\hat{\beta}_n: ({\mathbb{R}}^p\times {\mathbb{R}})^n\rightarrow {\mathbb{R}}^p\). Then, we define \[\label{eqj-4} \begin{align} \bar{R}(\hat{\beta}_n) :&= \mathop{\mathbb{E}}\limits_{z^n \sim\mathcal{D}^n}R(\hat{\beta}_n(z^n)) = \mathop{\mathbb{E}}\limits_{z^n \sim\mathcal{D}^n}\mathop{\mathbb{E}}\limits_{({x,\mathrm{y}})\sim\mathcal{D}}[({x}^\top{\hat{\beta}_n(z^n)}-{\mathrm{y}})^2] \end{align}\tag{4}\] where \(\displaystyle {\mathbb{E}}_{z^n\sim\mathcal{\cal D}^n}[\cdot]\) is the expectation w.r.t. the distribution \(D^n\). Note that (4 ) averages (3 ) over the training data as well while both evaluate the expected squared loss of the estimation.

In this paper, we consider the situation of dropout: given the training data \(z^n=\{(x_i,\mathrm{y}_i)\}_{i=1}^n\), for \(X=[x_1,\ldots,x_n]^\top\in {\mathbb{R}}^{n\times p}\) and \(y=[\mathrm{y}_1,\ldots,\mathrm{y}_n]^\top\in {\mathbb{R}}^n\), we estimate \(\beta\) by the \(\hat{\beta}(z^n)\) that minimizes the training error \(\mathop{\mathbb{E}}\limits_{r\sim\mathcal{B}er(\gamma)}[L]\) for \[L=\|{y}-({r}\ast {X}){\beta}\|_2^2\;,\] where \(\ast\) denotes the element-wise product, each element of \(R\in {\mathbb{R}}^{n\times p}\) takes one and zero with probabilities \(\gamma\) and \(1-\gamma\), respectively, and we write \(r\sim Ber(\gamma)\) for the distribution. Then, the quantity \(\mathop{\mathbb{E}}\limits_{r\sim\mathcal{B}er(\gamma)}[L]\) can be expressed by \[\label{eqj-12} \begin{align} &\mathop{\mathbb{E}}\limits_{r\sim\mathcal{B}er(\gamma)}\|{y}-(r* X){\beta}\|_2^2=\mathop{\mathbb{E}}\limits_{r\sim\mathcal{B}er(\gamma)}\|{y}-M{\beta}||_2^2\\ &={y}^\top{y}-2{\beta}^\top\mathbb{E}(M^\top){y}+{\beta}^\top\mathbb{E}(M^\top M){\beta}\\ &={y}^\top{y}-2\gamma{\beta}^\top X^\top{y}+{\beta}^\top\mathbb{E}(M^\top M){\beta}\\ &=\|{y}-\gamma X{\beta}\|_2^2-\gamma^2{\beta}^\top X^\top X{\beta}+{\beta}^\top\mathbb{E}(M^\top M){\beta}\\ &=\|{y}-\gamma X{\beta}\|_2^2+{\beta}^\top(\mathbb{E}(M^\top M)-\gamma^2X^\top X){\beta}\\ &=\|{y}-\gamma X{\beta}\|_2^2+(1-\gamma)\gamma\|\Gamma{\beta}\|_2^2 \end{align}\tag{5}\] where \(M:=r\ast X\), \(\Gamma=\mathrm{diag}(X^\top X)^{1/2}\), the final equation follows from the fact that the element-wise expectation \({\mathbb{E}}(M^\top M)\) is \[{\mathbb{E}}\left[\sum_{k}m_{ik}m_{jk}\right]= \left\{ \begin{array}{ll} \gamma^2\sum_{k}x_{ik}x_{jk},&i\not=j\\\gamma\sum_{k}x_{ik}^2,&i=j\\ \end{array} \right.\;\] for the \((i,j)\)-th element of \(M^\top M\) (the off-diagonal elements of \({\mathbb{E}}(M^\top M)\) and \(\gamma^2X^\top X\) are canceled out).

We can consider this as a Tikhonov regularization method. Let \(\beta'=\gamma\beta\) as in [12]. Then, (5 ) becomes \[\begin{align} \label{eq55} \|y-X\beta'\|^2+\frac{1-\gamma}{\gamma}\|\Gamma\beta'\|^2\;, \end{align}\tag{6}\] which is minimized when \(\beta'\) is equal to \[\label{eq56} \hat{{\beta}}_{n,\gamma}=\left(X^\top X+\frac{1-\gamma}{\gamma}\Gamma^\top \Gamma\right)^{-1}X^\top{y}\;.\tag{7}\]

3 Drop Double-Descent in Linear Regression↩︎

In this section, we show the monotonicity of the solution in the sample size \(n\) with dropout in linear regression, and its proof follows in Appendix 7.1. Hereafter, we denote \(\hat{\beta}\) by \(\hat{\beta}_{n,\gamma}\) when we require \(n\) and \(\gamma\) to be explicit.

Before proving the claim, we notice that the test error is of the form \[\begin{align} &&{R}(\hat{\beta})= \mathop{\mathbb{E}}\limits_{({x},y)\sim\mathcal{D}}\left[\{{x}^\top(\hat{{\beta}}-\beta_0)+\epsilon\}^2\right]\nonumber = \|\hat{{\beta}}-{\beta}_0\|_2^2+\sigma^2\label{eqj-14}\;, \end{align}\tag{8}\] which is due to \[\begin{align} \mathop{\mathbb{E}}\limits_{{x}\sim\mathcal{N}(0,I_d),\epsilon\sim\mathcal{N}(0,\sigma^2)} [\{ (\hat{{\beta}}-{\beta}_0)^\top x+\epsilon\}^2] = \mathop{\mathbb{E}}\limits_{{x}\sim\mathcal{N}(0,I_p)} [(\{(\hat{\beta}-{\beta}_0)^\top x\})^\top\{ (\hat{\beta}-{\beta}_0)^\top x\}] +\sigma^2 . \end{align}\] For the dropout estimator Eq. (7 ), the expected test error is \[\begin{align} \label{eqj-20} \bar{R}(\hat{\beta}_{n,\gamma})\nonumber &=&\mathbb{E}_X\mathbb{E}_y[{R}(\hat{\beta}_{n,\gamma})]={\mathbb{E}}_X\mathbb{E}_{y}[\|\hat{\beta}_{n,\gamma}-\beta_0\|_2^2]+\sigma^2\nonumber\\ &=& \mathbb{E}_{X}\mathbb{E}_{y}[\|( X^\top X+\Lambda)^{-1}X^\top y-\beta_0\|_2^2]+\sigma^2\nonumber\\ &=&\mathbb{E}_{X}[\|( X^\top X+\Lambda)^{-1}X^\top(X\beta_0+\epsilon)-\beta_0\|_2^2]+\sigma^2\nonumber\\ &=&\mathbb{E}_{X}[\|((X^\top X+\Lambda)^{-1}X^\top X-I_p)\beta_0\|_2^2]\nonumber+\sigma^2{\mathbb{E}}_{X}[\|(X^\top X+\Lambda)^{-1}X^\top\|_F^2]+\sigma^2\nonumber \end{align}\tag{9}\] where \(\Lambda=\frac{1-\gamma}{\gamma}\mathrm{diag}(X^\top X)\). By neglecting the constant terms, the quantity \(\bar{R}(\hat{\beta}_{n,\gamma})\) becomes \[\label{eq1} \begin{align} &\beta_0^{\top}\mathbb{E}_{X}\left[\left(I+A^\top\right)^{-1}\left(I+A\right)^{-1}\right]\beta_0+\sigma^{2} {\mathbb{E}}_{X}\left[\left\|\left(X^{\top} X+\Lambda\right)^{-1} X^{\top}\right\|_{F}^{2}\right], \end{align}\tag{10}\] where \(A=\Lambda^{-1}X^\top X\).

We evaluate the expected test error (10 ) by taking Taylor’s expansion of the matrix \[\left(I+A^\top\right)^{-1}\left(I+A\right)^{-1}\;.\] Then, we claim2.

Theorem 1. Let \(\alpha=C<\frac{1}{(1+\sqrt{\frac{p}{n}})^2}\), the expected test error (10 ) is \[\begin{align} f(\alpha)=&\left\{1-2\alpha+3\alpha^{2} \frac{p}{n}\right\}\left\|\beta_0\right\|^{2}+\sigma^2\alpha^2(\alpha+1)\frac{p}{n}+O({\frac{1}{n^2}}) \end{align}\] with \({\alpha=\frac{\gamma}{1-\gamma}}\).

To make the expected test error monotonically decrease with the chosen hyperparameter \(\alpha\), we need to consider the expected test error in Theorem 1 will not be divergent. To ensure the convergence of this Neumann series, we need the eigenvalue of \(\alpha\cdot A=\alpha\cdot\Lambda^{-1}X^\top X\) to be smaller than \(1\). Therefore, we need to consider the largest eigenvalue \(\lambda_{\text{max}}\) of \(A\) to make \(\alpha < \frac{1}{\lambda_{\text{max}}}\). Before this, we notice some critical points.

Let \(Q:=\mathrm{diag}(X^\top X)\), \(P:=Q^{-\frac{1}{2}}X^\top XQ^{-\frac{1}{2}}\), \(\Lambda:=\frac{1-\gamma}{\gamma}Q\), and \(M:=\Lambda^{-\frac{1}{2}}X^\top X\Lambda^{-\frac{1}{2}}\). Then, \(M\) and \(A=\Lambda^{-1}X^\top X\) share share the same characteristic polynomial \[\begin{align} &\mathcal{P}_{M}(\lambda) =\mathrm{det}(\Lambda^{-\frac{1}{2}}X^\top X\Lambda^{-\frac{1}{2}}-\lambda I) = \mathrm{det}(\Lambda^{-1/2})\mathrm{det}(X^\top X-\Lambda^{\frac{1}{2}}\lambda \Lambda^{\frac{1}{2}})\mathrm{det}(\Lambda^{-\frac{1}{2}})\\ &=\mathrm{det}(\Lambda^{-1}) \mathrm{det}(X^\top X-\lambda \Lambda)=\mathrm{det}(\Lambda^{-1}X^\top X-\lambda I)=\mathcal{P}_{A}(\lambda) \end{align}\;,\] so do the eigenvalues.

Let \(\lambda_{\mathrm{max}}\) and \(\lambda_{\mathrm{min}}\) be the largest and smallest eigenvalues of \(M\). Then, \(\lambda_{\mathrm{max}}\rightarrow(1+\sqrt{d})^2\) and \(\lambda_{\mathrm{min}}\rightarrow(1-\sqrt{d})^2\) as \(n,p\rightarrow\infty\) with \(\frac{p}{n}\rightarrow d\in(0,\infty)\) if \(\mathbb{E}[x^4]<\infty\) (Theorem 1.1 in [36]).

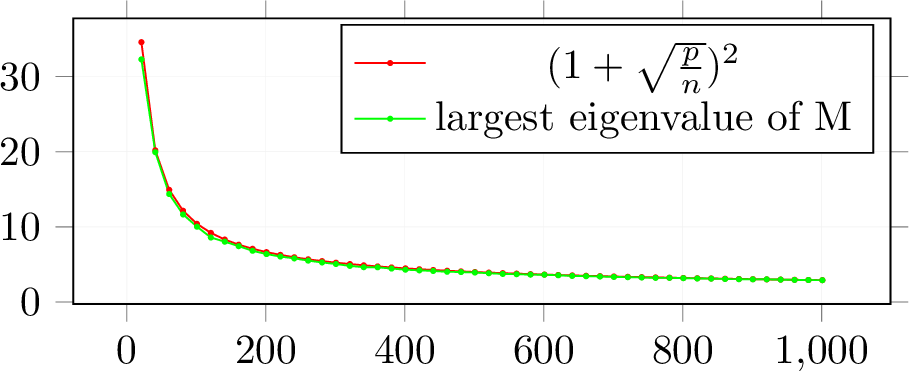

Hence, the maximum eigenvalues of matrices \(M\) and \(A\) are shown to approach \((1+\sqrt{\frac{p}{n}})^2\) asymptotically. Moreover, our empirical investigations corroborate that the largest eigenvalue of the sample correlation matrix \(M\) aligns closely with the theoretical prediction of \((1+\sqrt{\frac{p}{n}})^2\), as illustrated in Fig. 2. The Taylor series expansion converges when the parameter \(\gamma/(1-\gamma)\) is multiplied to make the largest eigenvalue of M less than 1. The proof of Theorem 1 is in Appendix 7.1.

Figure 2: The Largest eigenvalue of Sample Correlation Matrix (\(Q\in\mathbb{R}^{n\times p}\)). X-axis denotes the number of sample \(n\), Y-axis denotes the magnitude of largest eigenvalue and \(n\in\mathbb{N},\;p=500\)

4 Experiments↩︎

This section provides empirical evidence that dropout with optimal rate can effectively eliminate the double descent phenomenon in a broader range of scenarios compared to what is formally proven in Theorem 1.

4.1 Monotonicity for Sample-wise Double Descent↩︎

Elimination Double Descent in Linear Regression. (Synthetic Data)

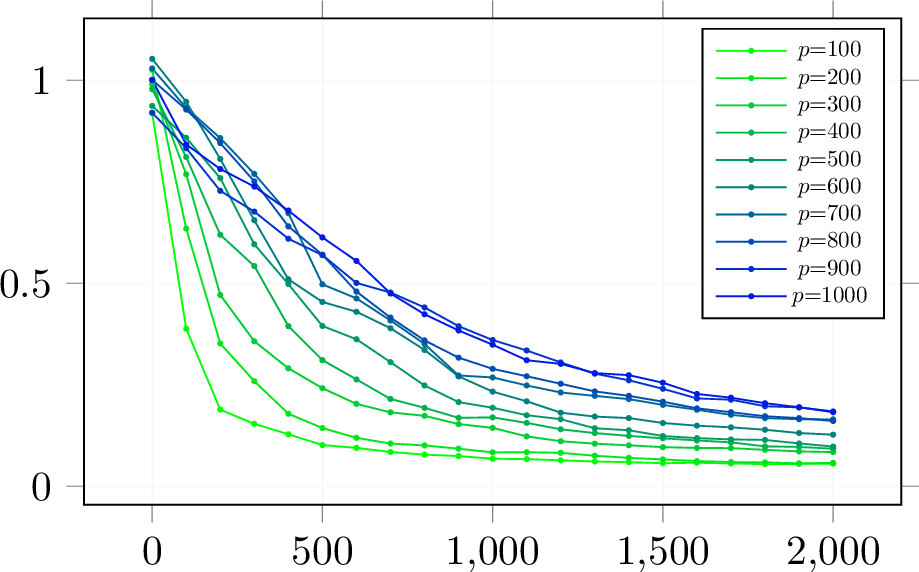

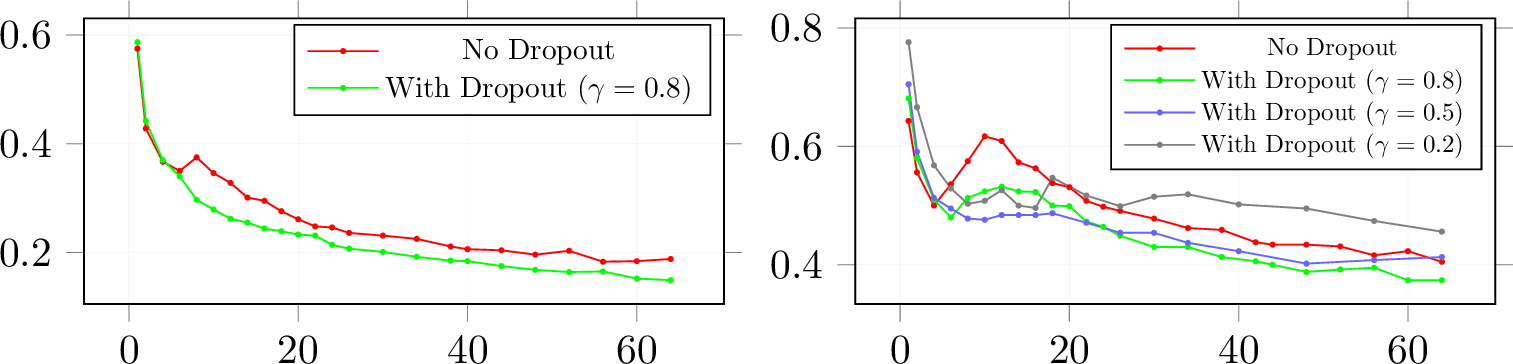

In this part, we evaluate test error using dropout with pseudo optimal probability 0.8 (from Figure 1) in linear regression, the sample distribution \(x\sim\mathcal{N}(0,I_p)\), \(y=x^\top \beta^*+\epsilon\), \(\epsilon\sim\mathcal{N}(0,0.25),\;\beta^*\sim\mathcal{U}(0,1)\) and \(\|\beta^*\|_2=1\). Moreover, the monotonic curves in Figure

3 show that the test error always remains monotonicity within the optimal dropout rate when the sample size increases for various dimensions \(p\).

Random ReLU Initialization. (Fashion-MNIST)

We consider the random nonlinear features stemming from the random feature framework of [37]. We apply random features to Fashion-MNIST [38], an image classification dataset with 10 classes. In the preprocessing step, the input images vector \(x\in\mathbb{R}^d\) are normalized and flattened

to \([-1,1]^d\) for the \(d=784\). To make the correct estimation of mean square loss, the class labels are dealt with the one-hot encoding to \(y\in\{\vec{e}_1,\dots,\vec{e}_{10}\}\subset\mathbb{R}^{10}\). According to the given number of random features \(D\), and the number of sample data \(n\), we are

going to acquire the random classifier by performing linear regression on the nonlinear embedding: \(\tilde{X}:=\mathrm{ReLU}(XW^\top )\) where \(X\in\mathbb{R}^{n\times d}\) and \(W\in\mathbb{R}^{D\times d}\) is a matrix with every entry sampled i.i.d from \(\mathcal{N}(0,1/\sqrt{d})\), and with the nonlinear activation function ReLU applied pointwise. This is equivalent

to a 2-layer fully connected neural network with a frozen (randomly initialized) first layer, trained with dropout. Figure 4 shows the monotonic test error.

Figure 3: Test Risk with Number of Sample in linear regression with Dropout probability 0.8. The test error curves decrease with the optimal dropout rate. The X-axis in this figure is the dimension of the parameter (0.8 is a pseudo-optimal value). The Y-axis is test risk.

Figure 4: Test Risk with Number of Sample in Nonlinear Model with Dropout using Fashion-Mnist. The test error curves are decreasing with the optimal dropout rate. X-axis: sample size; Y-axis: Test risk.

4.2 Monotonicity for Model-wise Double Descent↩︎

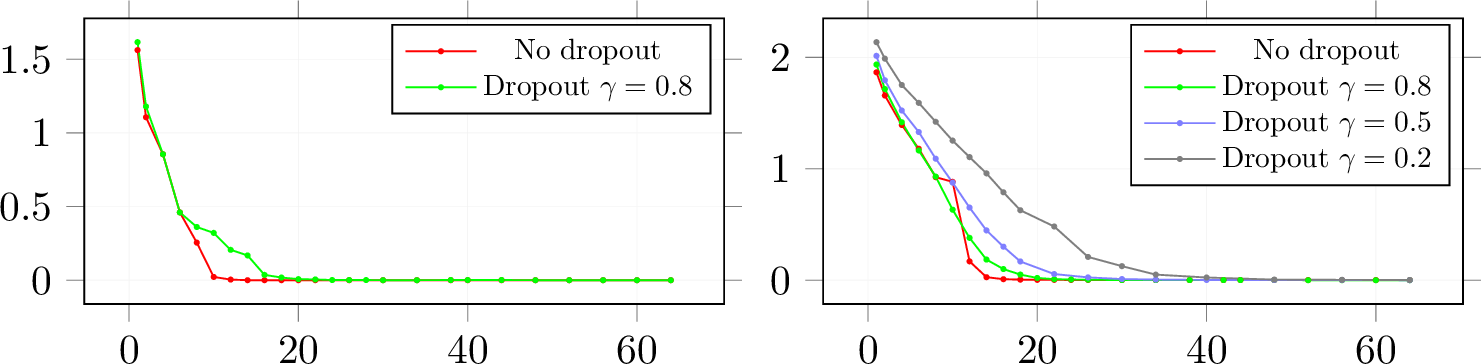

Like above setting, the sample distribution \(x\sim\mathcal{N}(0,I_p)\), \(y=x^\top \beta^*+\epsilon\), \(\epsilon\sim\mathcal{N}(0,0.25),\;\beta^*\sim\mathcal{U}(0,1)\), \(\|\beta^*\|_2=1\) and we fix \(n=500\). The experiment result is the monotonic curves in Figure 5 show that the test error remains monotonicity with the optimal dropout rate as the model size increases. For the multiple descents in Figure 5, the readers can find more details in [39].

Figure 5: Test Risk with of model size in Linear Regression with Dropout. The test error curves decrease with the optimal dropout rate. X-axis: the dimension of the parameter; Y-axis: Test risk.

4.3 Multi-layer CNN↩︎

We use the same setups as in [5]. Here, we give the brief details of the model. For the full details, please check Appendix 8.1.

Standard CNNs: We consider a simple family of 5-layer CNNs, with \(4\) convolutional layers of widths [\(k,2k,4k,8k\)] for varying \(k\), and a fully-connected layer. For context, the CNN with width \(k= 64\), can reach over \(90\%\) test accuracy on CIFAR-10 with data augmentation. We train with cross-entropy loss and the following optimizer: Adam with \(0.0001\) learning rate for 10K epochs; SGD with \(0.1 / \sqrt{\lfloor T / 512\rfloor+1}\) for 500K gradient steps.

Label Noise. In our experiments, label noise [40] of probability prefers to train on samples with the correct label with probability \(0\%,20\%\), and a uniformly random incorrect label otherwise (label noise is sampled only once and not per epoch).

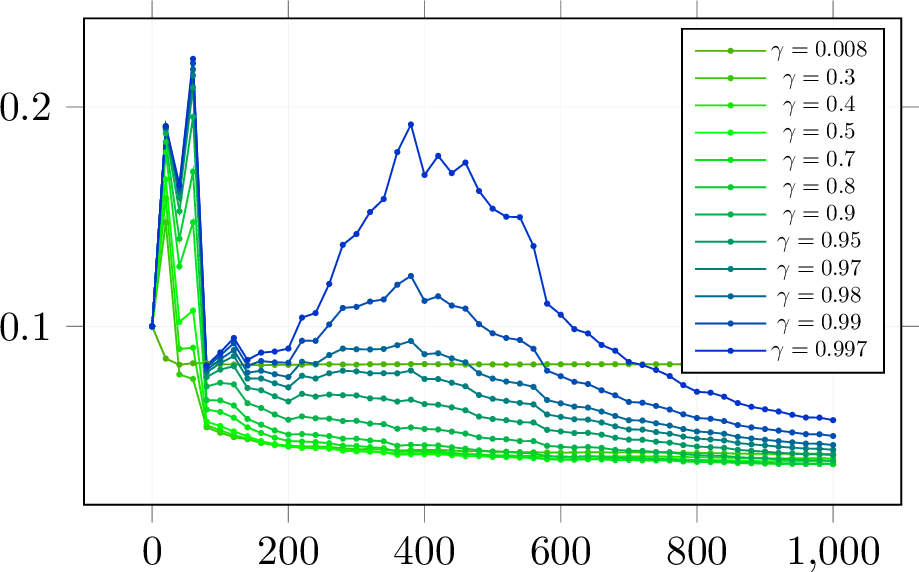

Dropout layer. We add the dropout layer before the full-connected linear layer with the present rate \(\gamma\) [12]. Figure 6 shows the test error results. The training loss is in Figure 7.

Figure 6: Test Risk with Number of width parameter in 5 layer-CNN with Dropout. The x-axis is CNN width parameter (left: \(0\%\) label noise with Adam; right: \(20\%\) label noise with SGD). We can see dropout drops double descent.(\(\gamma\): present rate)

Figure 7: Train Loss with width parameter in 5 layer-CNN with Dropout (left: Adam, right: SGD). X-axis is CNN width parameter

We observe model-wise double descent most strongly in settings with label noise in the train set (as is often true when collecting train data in the real world). For model sizes at the interpolation threshold, there is effectively only one model that fits the train data, and this interpolating model is very sensitive to noise in the train set and/or model misspecification. That is, since the model can barely fit the train data, forcing it to fit even slightly noisy or misspecified labels will destroy its global structure and result in high test error. (See Figure 28 in the Appendix of [5] for an experiment demonstrating this noise sensitivity by showing that ensembling helps significantly in the critically parameterized regime). However, for over-parameterized models, many interpolating models fit the train set, and SGD can find one that “memorizes” (or “absorbs”) the noise while still performing well on the distribution.

5 Discussion↩︎

Our proof considers only the non-exact solution for expected test error, and therefore we cannot definitively assert that the test risk decreases monotonically. However, based on our experimental results and this non-exact proof, we propose the following conjecture:

Conjecture 2. **For any \(n,p\geq 1\), \(\sigma^2>0\), and \(\beta_0\), the expected test risk is monotonic in sample as \[\label{eqj-10} \bar{R}(\hat{{\beta}}_{n+1})\leq\bar{R}(\hat{{\beta}}_{n}).\qquad{(1)}\]

In future research, we aim to prove that the exact solution with dropout can mitigate double descent.

Note that the optimal hyperparameter remains in the fixed dimension \(p\) with a changeable sample size \(n\). This is because the original data \(y\) from the model \(y=X\beta+\epsilon\) will change, thus affecting the common test error. Additionally, [41] contains a statement about the sample covariance matrix \(\mathrm{diag}(X^\top X)\), which converges to the identity matrix for all \(\delta>0\) and \(||x_i||_2\leq \sqrt{d}\) (Corollary 6.20 in [41]): \[P[\|\frac{\mathrm{diag}(X^\top X)}{n}-I_p\|_2\geq\delta]\leq2p\cdot exp\left(-\frac{n\delta^2}{2d(1+\delta)}\right)\] for the \(\mathbb{E}(\mathrm{diag}(X^\top X/n))=I_p\), and by coupling the previous conclusions, it seems that the dropout estimator tends to the ridge estimator [42] and has the same asymptotic risk as the ridge estimator in [3].

Just as with dropout, the implementation of batch normalization [43] is uncomplicated—it merely requires the incorporation of batch normalization layers into the network architecture. Its inherent simplicity positions batch normalization as an ideal candidate for expediting the training process associated with varying combinations of hyperparameters required to optimize the use of dropout layers. While this may not necessarily accelerate each training epoch, it’s likely to facilitate swifter convergence. Given their similarities, several research studies have compared the two techniques [44]–[46]. Based on this, we posit that Batch Normalization might also hold the capacity to alleviate the double descent phenomenon.

6 Conclusion↩︎

Our study employs theoretical and empirical methods to investigate the impact of dropout regularization in linear regression. Theoretically, we extend our analysis to general ridge regression, adopting a non-asymptotic approach to understand the behavior of test error in linear regression models with dropout for isotropic Gaussian features. Empirically, we demonstrate through numerical experiments that dropout effectively mitigates the double descent phenomenon in linear and nonlinear models, including multi-layer CNNs. Our key contributions include demonstrating the elimination of sample-wise and model-wise double descent and providing evidence of dropout efficacy in multi-layer CNNs. For our future work, we will not only pay attention to the exact solution of the expected test risk but also consider the nonisotropic linear regression, even the theoretical analysis for multi-layer neural networks.

7 Proof↩︎

7.1 Proof of Theorem 1↩︎

The First term of (10 )

Let \(\Lambda:=\frac{1-\gamma}{\gamma} \operatorname{diag}\left(X^\top X\right)\), \(A:=\Lambda^{-1} X^\top X\), and \(\alpha = \frac{1-\gamma}{\gamma}\). We

evaluate \(\mathbb{E}[(I+ \left.A^{\top}\right)^{-1}(I+A)^{-1} ]\). Note \(\left(I+A^{\top}\right)^{-1}(I+A)^{-1} =I-A-A^{\top}+A^{2}+\left(A^{\top}\right)^{2}+A^{\top} A+\cdots\). For \(A=\left(a_{i, j}\right)\), we have \(a_{i, j}=\frac{\gamma}{1-\gamma} \cdot \frac{\sum_{k} x_{k, i} x_{k, j}}{\sum_{k} x_{k, i}^{2}}\), and \(\mathbb{E}[A]=\frac{\gamma}{1-\gamma} \cdot I\), which is due to (2 ). For \(A^{2}=\left(b_{i, j}\right)\), we have \[b_{i,

j}=\left(\frac{\gamma}{1-\gamma}\right)^{2} \cdot \sum_{h} \frac{\sum_{k} x_{k, i} x_{k, h}}{\sum_{k} x_{k, i}^{2}} \frac{\sum_{k} x_{k, h} x_{k, j}}{\sum_{k} x_{k, h}^{2}}\] and \(\mathbb{E}\left[A^{2}\right]=\left(\frac{\gamma}{1-\gamma}\right)^{2} \frac{p}{n} \cdot I\). Apparently, we have \(\mathbb{E}\left[A^{\top}\right]=\frac{\gamma}{1-\gamma} \cdot I\) and \(\mathbb{E}\left[\left(A^{\top}\right)^{2}\right]=\left(\frac{\gamma}{1-\gamma}\right)^{2} \frac{p}{n} \cdot I\) (\(\mathbb{E}[b_{i,j}]=0\) if \(i\neq j\); if

\(i=j\), \(\mathbb{E}[\hat{\rho}^2]=\frac{1}{n}\) if \(\rho=0\)). Finally, we evaluate \(\mathbb{E}\left[A^{\top}

A\right]\). For \(A^{\top} A=\left(c_{i, j}\right)\), we have \[c_{i, j}=\left(\frac{\gamma}{1-\gamma}\right)^{2} \cdot \sum_{h} \frac{\sum_{k} x_{k, i} x_{k, h}}{\sum_{k} x_{k, h}^{2}}

\frac{\sum_{k} x_{k, h} x_{k, j}}{\sum_{k} x_{k, h}^{2}}\] so that \(E\left[c_{i, j}\right]=0\) for \(i \neq j\). \[\begin{align} \mathbb{E}\left[c_{i,

i}\right]&=\left(\frac{\gamma}{1-\gamma}\right)^{2} \sum_{h}{\mathbb{E}}\left(\frac{\sum_{k} x_{k, i} x_{k, h}}{\sum_{k} x_{k, h}^{2}}\right)^{2}\\ &={\left(\frac{\gamma}{1-\gamma}\right)^{2} \sum_{h}{\mathbb{E}}\left(\sum_{k} x_{k, i}\frac{x_{k,

h}}{\sum_{k} x_{k, h}^{2}}\right)^{2}\quad(x_i\perp\!\!\!\perp x_h)}\\ &={\left(\frac{\gamma}{1-\gamma}\right)^{2} \sum_{h}{\mathbb{E}}\left(\frac{\sum_{k}x_{k, h}^2+2\sum_{i\neq j}x_{i,h}x_{j,h}}{(\sum_{k} x_{k,

h}^{2})^2}\right)\quad(\mathbb{E}\left(2\sum_{i\neq j}x_{i,h}x_{j,h}\right)=0)}\\&=\left(\frac{\gamma}{1-\gamma}\right)^{2} \sum_{h} \mathbb{E}\left[\frac{1}{\sum_{k} x_{k, h}^{2}}\right]\;,

\end{align}\] where we have used \(\mathbb{E}\left(\sum_{r} u_{r} \alpha_{r}\right)^{2}={\mathbb{E} \sum_{r} u_{r}^{2} \alpha_{r}^{2}=\sum_{r} \alpha_r^{2}}\), when \(u_{r} \sim

N(0,1)\), \(r=1,2, \cdots\), are independent. Then, from the inverse density function of chi-square distribution, we have \(\mathbb{E}\left[A^{\top} A\right]

=\left(\frac{\gamma}{1-\gamma}\right)^{2} \cdot \frac{p}{n-2} \cdot I\). Then, the first term of (10 ) is \[\begin{align}

\left\{1-2\left(\frac{\gamma}{1-\gamma}\right)\frac{p}{n}+\left(\frac{\gamma}{1-\gamma}\right)^{2}\left( \frac{2p}{n}+\frac{p}{n-2}\right)\right\}\left\|\beta_{0}\right\|^{2}\;.

\end{align}\]

The Second term of (10 )

Since \[\left\|\left(X^{\top} X+\Lambda\right)^{-1} X^{\top}\right\|_{F}^{2}=\operatorname{trace}\left\{\left(X^{\top} X+\Lambda\right)^{-1} X^{\top}\right\}^{\top}\left\{\left(X^{\top} X+\Lambda\right)^{-1}

X^{\top}\right\}\] the diagonal entries of \(X \Lambda^{-1}\left\{I-A^{\top}-A+A^{2}+\left(A^{\top}\right)^{2}+A^{\top} A\right\} \Lambda^{-1} X^{\top}\) are \[\begin{align}

(X\Lambda^{-1}\Lambda^{-1}X^\top)\dots m_r^{\prime}&=\left(\frac{\gamma}{1-\gamma}\right)^{2} \sum_{i}\frac{x_{r, i}^2}{(\sum_{k} x_{k, i}^{2})^2}\\ (X\Lambda^{-1}A\Lambda^{-1}X^\top)\dots a_{r}^{\prime}&=\left(\frac{\gamma}{1-\gamma}\right)^{3}

\sum_{i} \sum_{j} \frac{x_{r, i} x_{r, j} a_{i, j}}{\sum_{k} x_{k, i}^{2} \sum_{k} x_{k, j}^{2}} \\ (X\Lambda^{-1}A^2\Lambda^{-1}X^\top)\dots b_{r}^{\prime}&=\left(\frac{\gamma}{1-\gamma}\right)^{4} \sum_{i} \sum_{j} \frac{x_{r, i} x_{r, j} b_{i,

j}}{\sum_{k} x_{k, i}^{2} \sum_{k} x_{k, j}^{2}} \\ (X\Lambda^{-1}A^\top A\Lambda^{-1}X^\top)\dots c_{r}^{\prime}&=\left(\frac{\gamma}{1-\gamma}\right)^{4} \sum_{i} \sum_{j} \frac{x_{r, i} x_{r, j} c_{i, j}}{\sum_{k} x_{k, i}^{2} \sum_{k} x_{k,

j}^{2}}\\

\end{align}\] for \(r=1, \ldots, n\). First, we derive \[\begin{align} \sum_{r}m_r^{\prime}&=\left(\frac{\gamma}{1-\gamma}\right)^{2}{\mathbb{E}} \sum_{i}\frac{\sum_{r}x_{r,

i}^2}{(\sum_{k} x_{k, i}^{2})^2}=\left(\frac{\gamma}{1-\gamma}\right)^{2} \frac{p}{n-2} \end{align}\] \[\begin{align} \sum_{r} a_{r}^{\prime} &=\left(\frac{\gamma}{1-\gamma}\right)^3 \sum_{r} \sum_{i}\left\{\sum_{j

\neq i} \frac{x_{r, i} x_{r, j} \frac{\sum_{k} x_{k, i} x_{k, j}}{\sum_{k} x_{k, i}^{2}}}{\sum_{k} x_{k, i}^{2} \sum_{k} x_{k, j}^{2}}+\frac{x_{r, i}^{2}}{\left(\sum_{k} x_{k, i}^{2}\right)^{2}}\right\} \\ &=\left(\frac{\gamma}{1-\gamma}\right)^3

\sum_{i}\left\{\sum_{j \neq i} \frac{\left(\sum_{k} x_{k, i} x_{k, j}\right)^{2}}{\left(\sum_{k} x_{k, i}^{2}\right)^{2} \sum_{k} x_{k, j}^{2}}+\frac{1}{\sum_{k} x_{k, i}^{2}}\right\} \\ &=\left(\frac{\gamma}{1-\gamma}\right)^3 \sum_{i}

\frac{1}{\sum_{k} x_{k, i}^{2}}\left(\sum_{j \neq i} \hat{\rho}_{i, j}^{2}+1\right)

\end{align}\] Please note that the distribution of \(\hat{\rho}{i, j}\) is independent of \(x{1, i}, \ldots, x_{n, i}\) (as demonstrated in the derivation).Hence, the expectation of

\(\sum_{r} a_{r}^{\prime}\) is \(\left(\frac{\gamma}{1-\gamma}\right)^3\left(\frac{p-1}{n}+1\right) \sum_{i} \frac{1}{\sum_{k} x_{k, i}^{2}}\), when \(x_{1, i},

\ldots\) \(x_{n, i}\) are given. Thus, we obtain \[E\left[\sum_{r} a_{r}^{\prime}\right]=\left(\frac{\gamma}{1-\gamma}\right)^3 \cdot \frac{p}{n-2}

\cdot\left(\frac{p-1}{n}+1\right)\] On the other hand. \[\sum_{r} b_{r}^{\prime}=\left(\frac{\gamma}{1-\gamma}\right)^4\sum_{r} \sum_{i} \sum_{j} \frac{x_{r, i} x_{r, j}}{\sum_{k} x_{k, i}^{2} \sum_{k} x_{k, j}^{2}}

\sum_{h} \frac{\sum_{k} x_{k, i} x_{k, h}}{\sum_{k} x_{k, i}^{2}} \frac{\sum_{k} x_{k, h} x_{k, j}}{\sum_{k} x_{k, h}^{2}}\] Let \[\beta_{i, j, h}:=\sum_{r} \frac{x_{r, i} x_{r, j}}{\sum_{k} x_{k, i}^{2} \sum_{k} x_{k,

j}^{2}} \frac{\sum_{k} x_{k, i} x_{k, h}}{\sum_{k} x_{k, i}^{2}} \frac{\sum_{k} x_{k, h} x_{k, j}}{\sum_{k} x_{k, h}^{2}}\] Then, the \(\sum_{h} \beta_{i, j, h}\) with \(i=j\) is

\(\frac{1}{\sum_{k} x_{k, i}^{2}} \sum_{h}\left(\frac{\sum_{k} x_{k, h} x_{k, j}}{\sqrt{\sum_{k} x_{k, h}^{2} \sum_{k} x_{k, i}^{2}}}\right)^{2}\) and its expectation is \(\frac{1}{n-2}\left(\frac{p-1}{n}+1\right)\). When \(j \neq i=h\), it’s \(\frac{1}{\sum_{k} x_{k, i}^{2}}\left(\frac{\sum_{k} x_{k, i} x_{k, j}}{\sqrt{\sum_{k} x_{k,

i}^{2} \sum_{k} x_{k, j}^{2}}}\right)^{2}\), its expectation is \(\frac{1}{n(n-2)}\) . Since the \(\beta_{i, j, h}\) with \(i, j, h\) different is

\[\sum_{r} \frac{x_{r, i} x_{r, j}}{\sum_{k} x_{k, i}^{2} \sum_{k} x_{k, j}^{2}} \frac{\sum_{k} x_{k, i} x_{k, h}}{\sum_{k} x_{k, i}^{2}} \frac{\sum_{k} x_{k, h} x_{k, j}}{\sum_{k} x_{k, h}^{2}}\] its expectation is \(\frac{1}{n(n-2)}\) If we take expectation w.r.t. \(\left\{x_{k, h}\right\}\), then the value becomes \[\sum_{r} \frac{x_{r, i} x_{r, j}}{\left(\sum_{k} x_{k,

i}^{2}\right)^{2} \sum_{k} x_{k, j}^{2}} \cdot \frac{1}{n} \sum_{k} x_{k, i} x_{k, j}\;,\] where the fact \(E\left[\frac{Z_{1}}{Z_{1}+\cdots+Z_{m}}\right]=\frac{1}{m}\) for i.i.d. \(Z_{1},

\ldots, Z_{m}\) has been used. Thus, the expectation is \(\frac{1}{n(n-2)}\) as well. Hence, \(E\left[\sum_{h} \beta_{i, j, h}\right]\) with \(i \neq

j\) is \(\frac{p}{n(n-2)}\) . Therefore, \[E\left[\sum_{r} b_{r}^{\prime}\right]=\left(\frac{\gamma}{1-\gamma}\right)^4\frac{1}{n-2}\left(\frac{2p-1}{n}+1\right)\;.\] Finally, we

obtain \(E\left[\sum_{r} c_{r}^{\prime}\right]\) . Let \[\gamma_{i, j, h}:=\sum_{r} \frac{x_{r, i} x_{r, j}}{\sum_{k} x_{k, i}^{2} \sum_{k} x_{k, j}^{2}} \frac{\sum_{k} x_{k, i} x_{k, h}}{\sum_{k}

x_{k, h}^{2}} \frac{\sum_{k} x_{k, h} x_{k, j}}{\sum_{k} x_{k, h}^{2}} .\] If \(i=j\), we have \(\sum_{h} \gamma_{i, j, h}:=\sum_{h} \frac{1}{\sum_{k} x_{k, h}^{2}} \cdot\left(\frac{\sum_{k}

x_{k, i} x_{k, h}}{\sqrt{\sum_{k} x_{k, i}^{2}} \sqrt{\sum_{k} x_{k, h}^{2}}}\right)^{2}\) and its expectation is \(\frac{d}{n(n-2)}\). If \(i \neq j, h=i\) \[\gamma_{i, j, h}:=\sum_{r} \frac{x_{r, i} x_{r, j}}{\sum_{k} x_{k, i}^{2} \sum_{k} x_{k, j}^{2}} \frac{\sum_{k} x_{k, i} x_{k, j}}{\sum_{k} x_{k, i}^{2}}=\frac{1}{\sum_{k} x_{k, i}^{2}}\left(\frac{\sum_{k} x_{k, i} x_{k,

j}}{\sqrt{\sum_{k} x_{k, i}^{2}} \sqrt{\sum_{k} x_{k, j}^{2}}}\right)^{2}\] and its expectation is \(\frac{1}{n(n-2)}\) If \(i, j, h\) are different, if we fix \(\left\{x_{k, j}\right\}\) and \(\left\{x_{k, h}\right\}\), then the expectation of \(\gamma_{i, j, h}:=\frac{1}{\sum_{r} x_{r, i}^{2}} \hat{\rho}_{j, h} \hat{\rho}_{i,

j} \hat{\rho}_{i, h}\) is zero. Thus, we have \[E\left[\sum_{r} c_{r}^{\prime}\right]=\left(\frac{\gamma}{1-\gamma}\right)^4(\frac{p^{2}}{n(n-2)}+\frac{2 p(p-1)}{n(n-2)})=\left(\frac{\gamma}{1-\gamma}\right)^4\frac{3

p^{2}-2 p}{n(n-2)}\] with \(\alpha=\frac{\gamma}{1-\gamma}\). Next, the test error is calculated by summing these terms, resulting in\[\begin{align}

&\left\{1-2\alpha+\alpha^{2} \frac{p}{n}\left(3+\frac{2}{n-2}\right)\right\}\left\|\beta_{*}\right\|^{2}+\alpha^2\frac{p}{n-2}\\&+\alpha^{3} \frac{p}{n-2}\left(\frac{p-1}{n}+1\right)+\alpha^4\frac{4p^2-2p-1+n}{n(n-2)}\end{align}\]

8 Experiment Details↩︎

8.1 Models↩︎

Standard CNNs. We consider a simple family of 5-layer CNNs, with four Conv-Batch Norm-ReLU-MaxPool layers and a fully-connected output layer. We scale the four convolutional layer widths as [\(k,2k,4k,8k\)]. The MaxPool is [\(1, 2, 2, 8\)]. For all the convolution layers, the kernel size = \(3\), stride = \(1\), and padding = \(1\). This architecture is based on the “backbone” architecture from [47]. Fork= 64, this CNN has 1558026 parameters and can reach\(>90\%\) test accuracy on CIFAR-10 [48] with data augmentation. The scaling of model size with \(k\) is shown in "Figure 13" of [5].

TLY was supported by JST, the establishment of university fellowships towards the creation of science technology innovation, Grant Number JPMJFS2125. JS was supported by Grants-in-Aid for Scientific Research (C) 22K11931.

Declarations↩︎

Funding - TLY was supported by JST, the establishment of university fellowships towards the creation of science technology innovation, Grant Number JPMJFS2125. JS was supported by Grants-in-Aid for Scientific Research (C) 22K11931.

Conflict of interest/Competing interests - The authors declare no conflict of interest.

Ethics approval - Not Applicable.

Consent to participate - Not Applicable.

Consent for publication - Not Applicable.

Availability of data and materials - Not Applicable.

Code availability - The code will be publicly available once the work is published upon the agreement of different sides.

Authors’ contributions: TLY: Idea and Methodology; JS: Methodology and Supervision. All authors discussed the theoretical and experimental results and contributed to the final manuscript.