Room Impulse Response Estimation in a Multiple Source Environment

May 25, 2023

1 Introduction

The acoustic properties of a room are described by its room impulse response (RIR), which depends on factors such as room geometry, materials, and object layout. Having the RIR available is critical for various applications, including virtual and augmented reality [1]–[4], dereverberation [5], and speech recognition [6]. However, obtaining RIRs through physical measurements is arduous and time-consuming, which motivates research on methods for estimating RIRs. One line of research is blind estimation, which predicts the RIR directly from reverberant source recordings without any additional information about the room [7]–[9]. However, most previous methods consider only a single sound source in a room, whereas real rooms often contain multiple sound sources at various locations.

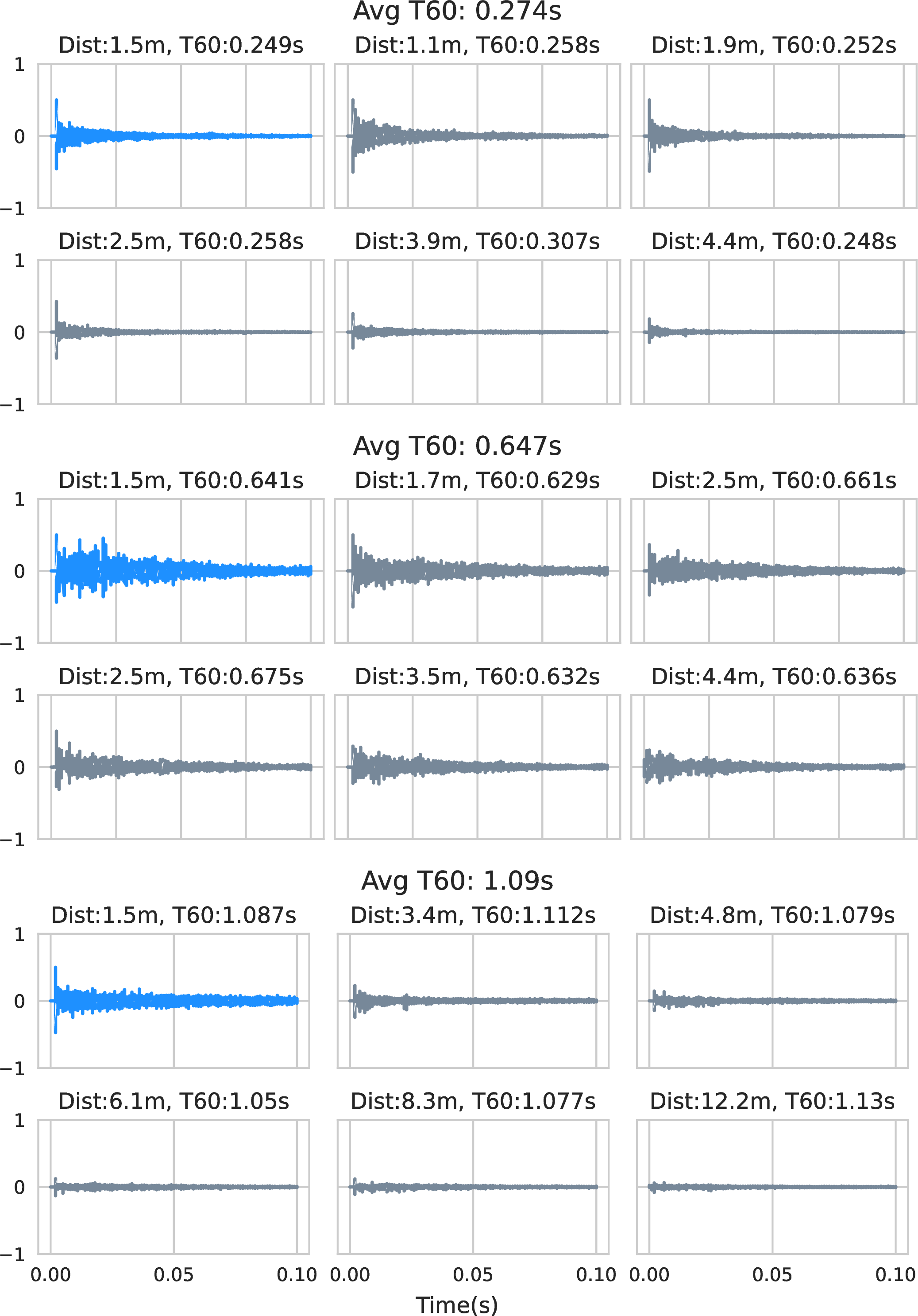

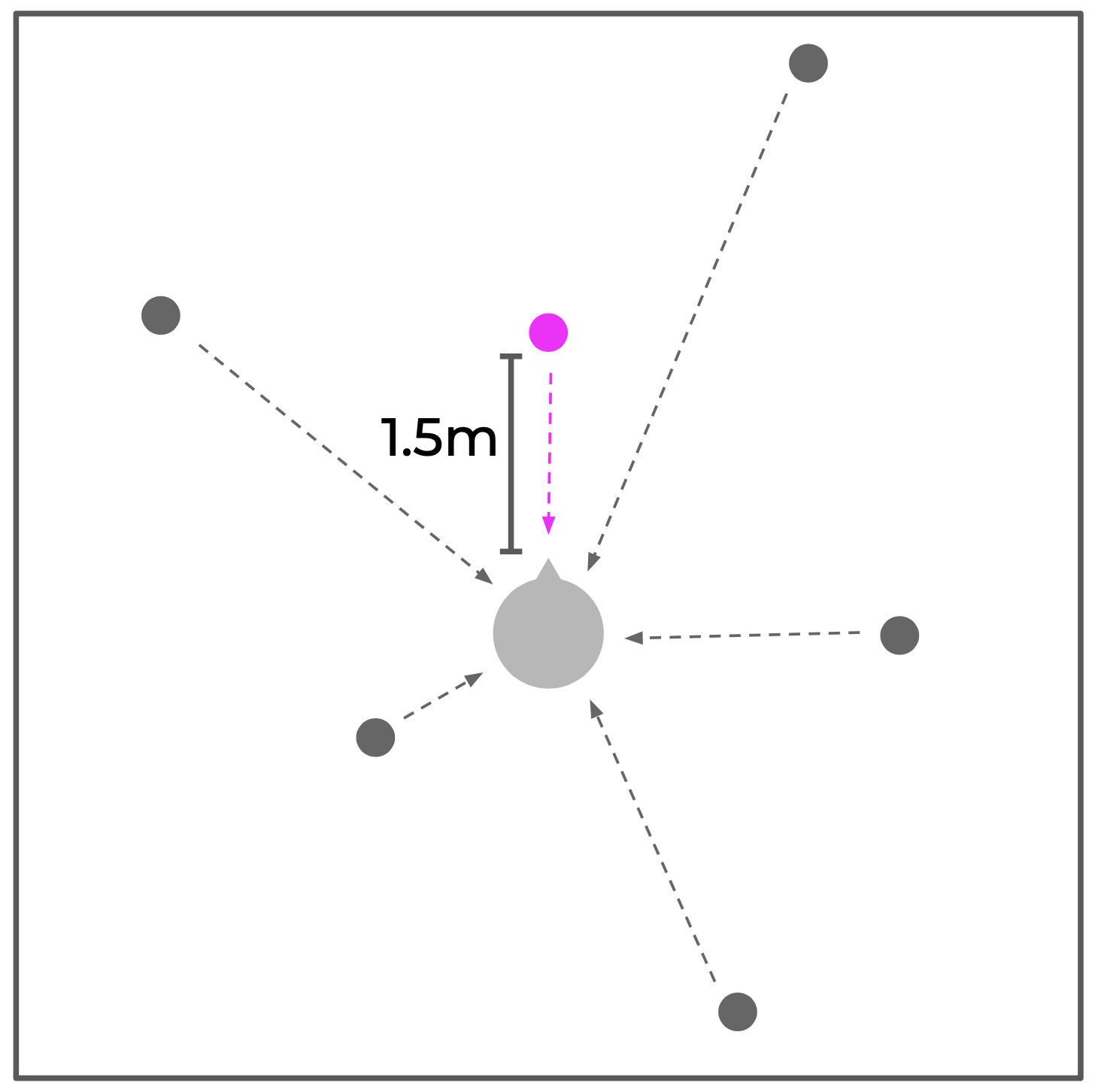

As shown in Figure 1, RIRs vary with source-receiver position and orientation, which makes it unclear which RIR, or which portion of an RIR, should be estimated in a multiple source environment. The answer ultimately depends on the task at hand, which may require estimating the RIRs of all sources or a single RIR that captures general information about the room.

Figure 1: Although the T60 values for RIRs measured in the same room are similar, the waveform shapes vary depending on the source-receiver distance and direction. The representative RIR is shown in blue.

In this work, we introduce the task of estimating a single, central RIR in a multiple source environment. Our key assumption is that a single RIR can effectively represent the acoustic characteristics of a room. This hypothesis is grounded in the fact that humans can tell whether different sound sources are in the same room despite variations in source location [10]. Additionally, it is well known that while the direct sound and early reflections of an RIR are influenced by the source-receiver position, the late reverberation provides a more comprehensive characterization of the room's acoustics [11], [12]. These observations suggest that RIRs originating from the same room share some acoustic features. In the following sections, we introduce the training method for estimating representative RIRs, including the data processing procedures, and present evaluation metrics for multiple source environments. We also describe how we post-processed the estimated monaural RIRs to obtain binaural filters for spatial audio rendering.

1.1 Related works

Blind estimation of RIRs or room acoustic parameters from reverberant sources in single source environments has been studied extensively, with methods ranging from signal processing [13]–[15] to deep learning [7]–[9], [16], [17]. Earlier work more commonly estimated room acoustic parameters such as T60 and the direct-to-reverberant ratio (DRR), but recent advances in neural networks have enabled direct RIR estimation in the time domain [8], [9]. Despite efforts to address the lack of training data for neural networks [16], [18], data scarcity remains a challenge, which has led to heavy use of synthetically generated data. Even fewer datasets are suitable for training in a multiple source environment [19]–[21], because this requires RIRs measured at multiple locations in each room and across several thousand unique rooms.

Another line of research takes a multi-modal approach, estimating RIRs from images, videos, or 3D meshes to render sound in a specific room [22]–[24]. In addition, an extensive body of work synthesizes an RIR from source-receiver locations and room descriptions, such as room geometry and T60, which is especially useful for virtual reality applications [25]–[28].

Figure 2: In a multiple source environment, there are many sources in the room (gray circles). The representative RIR is designated as the RIR from a source that is 1.5 meters away from the listener at the center (pink circle).

2 Methods

In a multiple source environment, sources are located at various positions within the room, and the emitted sounds reach the listener from all directions (Figure 2, gray circles). Regardless of how many sources there are and where each source is located, we define the representative RIR as the RIR of a source located 1.5 meters from the listener at the center position. We chose 1.5 meters because it approximates the typical conversational distance between people in a room, and we envision virtual conversations as a common use case in AR/VR scenarios. Furthermore, for spatial audio music production, it is recommended to render with a center speaker placed 1.5 meters from the listener¹. Therefore, we set up the system to always predict this central representative RIR when given any reverberant recording of multiple sources.

Figure 3: Single source (SS) vs. multiple source (MS) training setup. In the MS setup, the model is trained to predict the representative RIR, which may not be among the RIRs used to produce the reverberant audio recording.

2.1 Dataset

To train a model for multiple source scenarios, it is essential to have a dataset that comprises RIRs measured at different locations within each room and from a sufficiently large number of unique rooms. As listed in Table 1, we were able to obtain only 72 rooms with real measured RIRs. To compensate for this limited number of rooms, we used the large synthetic RIR dataset GWA [21], which contains over 6000 rooms and 10 RIRs per room, and also generated our own synthetic dataset, referred to as GRIR.

The GRIR generation process uses the room simulation method from the pyroomacoustics library [29] to generate multiple RIRs with random source positions in a predefined room. Generated room sizes range from \(24.5\,m^2\) to \(4000\,m^2\), and T60 values range from 0.1 s to 1.5 s. After generating an RIR with the library, we applied the following refinement steps to make it more realistic. First, we replaced the late reverberation part of the signal with frequency-dependent, exponentially decaying white noise [30], because the late reverberation generated by the library is sparse and sounds unnatural. Then, we applied a random frequency-dependent decay pattern to all RIRs in each room to mimic the room-specific patterns that arise from the wall materials and objects in the room. With the addition of 11,000 rooms from GRIR, we collected data from more than 12,000 unique rooms.
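The late-reverberation replacement step described above can be sketched as follows. This is a minimal NumPy sketch under stated assumptions, not the GRIR code: the helper name `replace_late_reverb`, the ideal FFT band masks, the per-band T60 values, the 0.05 s mixing time, the RMS energy-matching rule, and the 5 ms linear crossfade are all illustrative choices.

```python
import numpy as np

def replace_late_reverb(rir, fs, band_edges, band_t60, mixing_time=0.05,
                        fade_len=0.005, seed=0):
    """Hypothetical helper: replace the tail of `rir` after `mixing_time` with
    frequency-dependent, exponentially decaying white noise."""
    rng = np.random.default_rng(seed)
    n = len(rir)
    t = np.arange(n) / fs
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    noise_spec = np.fft.rfft(rng.standard_normal(n))

    late = np.zeros(n)
    for (lo, hi), t60 in zip(band_edges, band_t60):
        # Band-limit the white noise with an ideal FFT mask (assumption).
        mask = (freqs >= lo) & (freqs < hi)
        band_noise = np.fft.irfft(noise_spec * mask, n=n)
        # Amplitude envelope that falls by 60 dB after t60 seconds.
        late += band_noise * 10.0 ** (-3.0 * t / t60)

    # Match the noise RMS to the original RIR just after the mixing time.
    m, w = int(mixing_time * fs), int(fade_len * fs)
    ref = np.sqrt(np.mean(rir[m:m + w] ** 2))
    cur = np.sqrt(np.mean(late[m:m + w] ** 2)) + 1e-12
    late *= ref / cur

    # Linear crossfade from the simulated RIR (before m) to the synthetic tail.
    fade = np.clip((np.arange(n) - m) / w, 0.0, 1.0)
    return (1.0 - fade) * rir + fade * late
```

Everything before the mixing time is left untouched, so the direct sound and early reflections from the simulator survive; only the sparse tail is replaced.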

Example statistics of the dataset are shown in the table below. The data demonstrate that, although RIRs vary within a room, similarities can also be observed. The T30 values, which are not heavily influenced by the source-receiver location, exhibit minor variations, while the C50 values, which depend more on early reflections, display larger variations. The anechoic speech signals used as source signals are obtained from the DAPS [31] and VCTK [32] datasets.

| Dataset/room | T30 \(\mu\) [s] | T30 \(\sigma\) [s] | EDT \(\mu\) [s] | EDT \(\sigma\) [s] | C50 \(\mu\) [dB] | C50 \(\sigma\) [dB] |
|---|---|---|---|---|---|---|
| BUT/Hotel_SkalskyDvur_Room112 | 0.448 | 0.12 | 0.406 | 0.119 | 6.332 | 3.235 |
| BUT/VUT_FIT_L227 | 1.942 | 0.082 | 2.618 | 0.52 | -7.543 | 4.41 |
| BUT/VUT_FIT_Q301 | 0.613 | 0.219 | 0.489 | 0.216 | 5.475 | 5.457 |
| GWA/room0 | 0.804 | 0.058 | 0.704 | 0.142 | 1.863 | 2.743 |
| GWA/room1 | 0.503 | 0.067 | 0.341 | 0.033 | 8.699 | 0.497 |
| GWA/room2 | 0.428 | 0.048 | 0.415 | 0.07 | 6.542 | 1.179 |
| GRIR/room0 | 0.274 | 0.022 | 0.235 | 0.038 | 11.07 | 1.21 |
| GRIR/room1 | 0.647 | 0.018 | 0.859 | 0.109 | 1.466 | 1.733 |
| GRIR/room2 | 1.09 | 0.028 | 1.214 | 0.061 | 0.237 | 0.709 |
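The statistics above (T30, EDT, C50) are standard room-acoustic parameters. The sketch below shows one common way to compute them from a single RIR via Schroeder backward integration; it is not necessarily the computation used for the table. Assumptions: the line-fit ranges follow the usual conventions (-5 to -35 dB for T30, 0 to -10 dB for EDT), and C50 is measured from the absolute peak, taken as the direct sound.

```python
import numpy as np

def schroeder_edc_db(rir):
    """Energy decay curve via Schroeder backward integration, in dB re. total energy."""
    energy = np.cumsum(rir[::-1] ** 2)[::-1]
    return 10.0 * np.log10(energy / energy[0] + 1e-300)

def decay_time(rir, fs, start_db, stop_db):
    """Fit a line to the EDC between start_db and stop_db; extrapolate to -60 dB."""
    edc = schroeder_edc_db(rir)
    t = np.arange(len(rir)) / fs
    idx = np.where((edc <= start_db) & (edc >= stop_db))[0]
    slope, _ = np.polyfit(t[idx], edc[idx], 1)  # dB per second (negative)
    return -60.0 / slope

def t30(rir, fs):
    return decay_time(rir, fs, -5.0, -35.0)

def edt(rir, fs):
    return decay_time(rir, fs, 0.0, -10.0)

def c50(rir, fs):
    """Energy in the first 50 ms after the peak vs. the rest, in dB (assumed onset = peak)."""
    onset = int(np.argmax(np.abs(rir)))
    split = onset + int(0.050 * fs)
    early = np.sum(rir[onset:split] ** 2)
    late = np.sum(rir[split:] ** 2)
    return 10.0 * np.log10(early / late)
```

Per-room \(\mu\) and \(\sigma\) would then be the mean and standard deviation of these values over all RIRs of a room.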

2.2 Data pre-processing

Before training the model, we perform a pre-processing step to normalize the RIRs across datasets. For each room in the dataset, we first identify the representative RIR. For datasets with source-receiver distance and/or orientation metadata, RIRs at a distance of around 1.5 m (often between 1.4 m and 2.0 m) and at the center position are selected as candidates. However, many datasets do not provide distance and orientation information. In such cases, we select the RIR with the largest peak value, because the largest peak implies the smallest source-receiver distance, and most datasets measure their closest RIR at a distance of around 1.0 m. After identifying the representative RIR, we compute a normalization factor, referred to as the room normalization factor, that scales the representative RIR's absolute peak amplitude to 0.5. The same factor is then applied to all other RIRs in the room. As a result, every RIR in the room is normalized while the level differences between RIRs of the same room are preserved; the DRR of each RIR is likewise unchanged. Figure 1 shows various RIRs in 3 selected rooms after normalization.
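The room normalization procedure can be sketched as below. The function `room_normalize` and its parameters are hypothetical; it handles only distance metadata (orientation is ignored), picks the candidate closest to 1.5 m within the 1.4 to 2.0 m window, and otherwise falls back to the RIR with the largest absolute peak, as described above.

```python
import numpy as np

def room_normalize(rirs, distances=None, target_peak=0.5, target_distance=1.5,
                   window=(1.4, 2.0)):
    """Scale all RIRs of one room by a single room normalization factor.

    Returns (normalized_rirs, index_of_representative, factor).
    Orientation metadata is not considered in this sketch.
    """
    peaks = np.array([np.max(np.abs(r)) for r in rirs])
    rep = None
    if distances is not None:
        d = np.asarray(distances, dtype=float)
        candidates = np.where((d >= window[0]) & (d <= window[1]))[0]
        if candidates.size > 0:
            # Among the candidates, pick the one closest to the target distance.
            rep = int(candidates[np.argmin(np.abs(d[candidates] - target_distance))])
    if rep is None:
        # No usable metadata: the largest peak is assumed to be the closest source.
        rep = int(np.argmax(peaks))
    factor = target_peak / peaks[rep]
    # One factor for the whole room keeps relative levels between RIRs intact.
    return [r * factor for r in rirs], rep, factor
```

Because every RIR in the room is multiplied by the same scalar, a farther (quieter) RIR stays quieter than the representative one after normalization.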

| Dataset name | Real RIR | # of rooms | # of RIRs per room (min \(\sim\) max) | Total # of RIRs | Train/Valid/Test |
|---|---|---|---|---|---|
| ACE [33] | True | 7 | 2 | 14 | Test |
| ASH IR | True | 39 | 5 \(\sim\) 72 | 729 | Train |
| BUT [7] | True | 9 | 62 \(\sim\) 465 | 2325 | Train, Test |
| C4DM | True | 3 | 130 \(\sim\) 169 | 468 | Train |
| dEchorate [20] | True | 8 | 30 | 1680 | Train |
| GRIR | False | 9831 | 4 \(\sim\) 14 | 58922 | Train, Valid, Test |
| GWA [21] | False | 1556 | 3 \(\sim\) 10 | 6653 | Train, Valid, Test |
| IoSR | True | 4 | 37 | 148 | Train |
| OpenAir | True | 21 | 2 \(\sim\) 41 | 182 | Train |
| REVERB | True | 2 | 12 \(\sim\) 24 | 36 | Train |
| RWCP | True | 7 | 7 \(\sim\) 19 | 81 | Train |
| R3VIVAL | True | 8 | 34 | 272 | Train |
| Train | - | 9168 | 2 \(\sim\) 465 | 64452 | Train |
| Valid | - | 1138 | 5 \(\sim\) 10 | 7677 | Valid |
| Test | - | 1254 | 2 \(\sim\) 434 | 8490 | Test |

2.3 Model and Training Method

To apply our proposed training method, we implemented the Filtered Noise Shaping (FiNS) model [8], which directly generates the RIR in the time domain. Given a 2.74 s reverberant signal, the model predicts a 1 s RIR. The key characteristic of the model is that it generates the early reflections and the late reverberation separately. Specifically, the model constructs the late reverberation as a sum of 10 decaying filtered noise signals, while the early components are generated sample by sample. The boundary between the two parts is fixed at 0.05 s.
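To make the output structure concrete, the sketch below assembles a 1 s RIR at 48 kHz from a sample-wise early segment (the first 0.05 s, i.e. 2400 samples) and a sum of 10 decaying filtered-noise components. It is an illustration of the shapes involved, not the FiNS network: in the real model the filters, gains, and decays are predicted by the network, whereas here `assemble_rir`, the FIR filters, exponential envelopes, and the rule that the early segment simply overwrites the first 2400 samples are all assumptions.

```python
import numpy as np

FS = 48_000                  # sampling rate used in the paper
RIR_LEN = 1 * FS             # 1 s output RIR -> 48000 samples
BOUNDARY = int(0.05 * FS)    # early/late boundary at 0.05 s -> 2400 samples
N_COMPONENTS = 10            # number of decaying filtered-noise components

def assemble_rir(early, noise_filters, decay_rates, gains, seed=0):
    """Hypothetical assembly of an RIR from early samples and filtered noise.

    early:         (BOUNDARY,) sample-wise early part
    noise_filters: (N_COMPONENTS, L) FIR filters, e.g. band-pass (assumed)
    decay_rates:   (N_COMPONENTS,) exponential decay rates in 1/s (assumed)
    gains:         (N_COMPONENTS,) per-component gains (assumed)
    """
    rng = np.random.default_rng(seed)
    t = np.arange(RIR_LEN) / FS
    late = np.zeros(RIR_LEN)
    for h, a, g in zip(noise_filters, decay_rates, gains):
        noise = np.convolve(rng.standard_normal(RIR_LEN), h, mode="same")
        late += g * noise * np.exp(-a * t)   # one decaying filtered-noise component
    rir = late.copy()
    rir[:BOUNDARY] = early                   # early part replaces the first 50 ms
    return rir
```

The fixed `BOUNDARY` is the limitation discussed in the Summary: the split point does not adapt to the room.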

As depicted in Figure 3, previous works used a single source training setup (SS), in which the input reverberant signal contains only one source and the model predicts the corresponding RIR.

In our proposed multiple source training setup (MS), the model is trained to estimate the representative RIR given a mixture of multiple reverberant source signals. The sources are located at different positions and thus have different RIRs. During training, we dynamically synthesize input signals as combinations of 1 to 6 reverberant sources. Each reverberant source is the convolution of a randomly selected RIR from the given room with a random anechoic speech signal.
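The dynamic MS input synthesis can be sketched as follows. The helper names `fft_convolve` and `make_ms_example` are hypothetical, and several details are assumptions not stated in the text: RIRs and utterances are drawn with replacement, all sources start at time zero, the mixture is cropped or zero-padded to the 2.74 s input length, and no level normalization is applied.

```python
import numpy as np

FS = 48_000
INPUT_LEN = int(2.74 * FS)   # 2.74 s model input

def fft_convolve(x, h):
    """Linear convolution via FFT (fast for long RIRs)."""
    n = len(x) + len(h) - 1
    nfft = 1 << (n - 1).bit_length()
    return np.fft.irfft(np.fft.rfft(x, nfft) * np.fft.rfft(h, nfft), nfft)[:n]

def make_ms_example(room_rirs, representative_rir, speech_pool, rng, max_sources=6):
    """Synthesize one multiple-source (MS) training pair for a single room."""
    n_sources = int(rng.integers(1, max_sources + 1))         # 1..6 sources
    mixture = np.zeros(INPUT_LEN)
    for _ in range(n_sources):
        rir = room_rirs[rng.integers(len(room_rirs))]          # random RIR from the room
        speech = speech_pool[rng.integers(len(speech_pool))]   # random anechoic utterance
        wet = fft_convolve(speech, rir)[:INPUT_LEN]
        mixture[:len(wet)] += wet
    # The target is always the representative RIR, which need not be one of
    # the RIRs used to build the mixture.
    return mixture, representative_rir, n_sources
```

Because the target never changes with `n_sources`, the model is pushed to extract room-level information rather than the RIR of any particular source.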

The input and output formats of the MS method are the same as those of the SS method: both take a reverberant signal as input and produce a predicted RIR as output. The model is trained with the multi-resolution STFT loss [34], which consists of the spectral convergence loss (1) and the spectral log-magnitude loss (2), where \(||\cdot||_F\) is the Frobenius norm, \(||\cdot||_1\) is the L1 norm, and N is the number of STFT frames. As the name suggests, the multi-resolution STFT loss is the sum of these losses over \(R\) different STFT resolutions (3). We experimented with additional loss functions, such as a GAN loss [9] and an energy decay relief (EDR) loss [28], but they did not provide perceptually significant improvement.

\[ L_{sc}(h, \hat{h}) = \frac{\big\| |STFT(h)| - |STFT(\hat{h})| \big\|_F}{\big\| |STFT(h)| \big\|_F} \tag{1} \]

\[ L_{mag}(h, \hat{h}) = \frac{1}{N} \big\| \log|STFT(h)| - \log|STFT(\hat{h})| \big\|_1 \tag{2} \]

\[ L_{stft}(h, \hat{h}) = \sum_{r=1}^{R} \left( L_{sc}^{(r)}(h, \hat{h}) + L_{mag}^{(r)}(h, \hat{h}) \right) \tag{3} \]
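Equations (1) to (3) can be sketched in NumPy as below. This is an illustrative re-implementation, not the training code (which would use a differentiable framework). Assumptions: a Hann window, reflect-padded centered frames, a small `eps` inside the logarithms, the L1 term divided by the number of frames \(N\) as in Eq. (2), and the three (FFT size, hop, window length) resolutions in `RESOLUTIONS`, which are not taken from the paper.

```python
import numpy as np

def stft_mag(x, n_fft, hop, win_len):
    """Magnitude STFT, shape (frames, bins), with a zero-padded Hann window."""
    window = np.pad(np.hanning(win_len), (0, n_fft - win_len))
    x = np.pad(x, (n_fft // 2, n_fft // 2), mode="reflect")  # centered frames
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack([x[i * hop:i * hop + n_fft] for i in range(n_frames)])
    return np.abs(np.fft.rfft(frames * window, axis=1))

def stft_loss(h, h_hat, n_fft, hop, win_len, eps=1e-7):
    S = stft_mag(h, n_fft, hop, win_len)
    S_hat = stft_mag(h_hat, n_fft, hop, win_len)
    # Eq. (1): spectral convergence.
    l_sc = np.linalg.norm(S - S_hat, "fro") / (np.linalg.norm(S, "fro") + eps)
    # Eq. (2): L1 distance of log magnitudes, divided by the number of frames N.
    l_mag = np.sum(np.abs(np.log(S + eps) - np.log(S_hat + eps))) / S.shape[0]
    return l_sc + l_mag

# Assumed resolutions: (n_fft, hop, win_len).
RESOLUTIONS = [(512, 128, 512), (1024, 256, 1024), (2048, 512, 2048)]

def multi_resolution_stft_loss(h, h_hat, resolutions=RESOLUTIONS):
    # Eq. (3): sum over R resolutions.
    return sum(stft_loss(h, h_hat, *r) for r in resolutions)
```

Using several resolutions balances time and frequency precision: short windows track the sharp early reflections, long windows resolve the spectral shape of the decay.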

As in [8], we use a sampling rate of 48 kHz. Both the SS and MS models are trained on 2 GPUs each with a batch size of 32, and training was stopped after 600 epochs. For the MS model, we initialized training from the SS checkpoint at epoch 100 and continued training in the MS setup. The initial learning rate was set to 5e-5.

2.4 Post-processing for binaural rendering

To test the practicality of the trained model in spatial audio applications, we extended the predicted monaural RIRs to binaural RIRs (BRIRs). To this end, we posited that replacing only the late reverberation with our predicted RIR, while retaining the direct sound and early reflections from an HRIR, would sufficiently represent various acoustic environments. Our internal listening test indeed indicated that modifying the late reverberation alone was sufficient to discern between different rooms. We therefore binauralize the late reverberation following the method of [35], which convolves segments of the late reverberation with a set of binaural noise signals and sums them with overlap-add. We then combine the synthesized late component with the publicly available CIPIC HRIR filters [36], since our internal application only requires BRIRs for fixed source locations. Because the predicted RIR has a maximum peak amplitude of 0.5 while the HRIR has a maximum peak amplitude of 0.6, we rescale the predicted RIR to match the HRIR. The two filters are combined using a Hann window. Note that the model is evaluated only on monaural RIRs.
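The final combination step (rescaling and Hann-window merging) might look like the sketch below. It assumes the late reverberation has already been binauralized into a (2, n) array by some method such as [35], which is not reproduced here. The helper `combine_hrir_and_late`, the crossfade position `boundary`, and the length `fade_len` are hypothetical; the 0.6 / 0.5 rescaling follows the peak amplitudes stated above.

```python
import numpy as np

def combine_hrir_and_late(hrir, late_binaural, boundary, fade_len,
                          rir_peak=0.5, hrir_peak=0.6):
    """Crossfade an HRIR (direct + early part) into a binauralized late reverb.

    hrir, late_binaural: arrays of shape (2, n) for the left/right ears.
    boundary: sample index where the crossfade is centered (assumed choice);
    requires fade_len // 2 <= boundary and the fade to fit in the output.
    """
    late = late_binaural * (hrir_peak / rir_peak)   # bring the 0.5-peak RIR to HRIR level
    n = max(hrir.shape[1], late.shape[1])
    hrir = np.pad(hrir, ((0, 0), (0, n - hrir.shape[1])))
    late = np.pad(late, ((0, 0), (0, n - late.shape[1])))

    # Half-Hann fade-out for the HRIR; the late part gets the complement.
    fade_out = np.ones(n)
    start = boundary - fade_len // 2
    fade_out[start:start + fade_len] = np.hanning(2 * fade_len)[fade_len:]
    fade_out[start + fade_len:] = 0.0
    return hrir * fade_out + late * (1.0 - fade_out)
```

Since the two weights sum to one at every sample, the transition introduces no gain bump; before the fade only the HRIR is heard, after it only the predicted late reverberation.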

3 Results and Discussion

3.1 Objective evaluation

| Test dataset | # of Rooms | Real RIR | Model | STFT Loss | T60 Loss | DRR Loss |
|---|---|---|---|---|---|---|
| ACE | 9 | Yes | SS | 2.49 | 0.092 | 224.17 |
| | | | MS | 2.072 | 0.081 | 169.12 |
| BUT | 3 | Yes | SS | 2.267 | 0.079 | 128.987 |
| | | | MS | 2.416 | 0.070 | 117.278 |
| GWA & GRIR | 78 + 94 | No | SS | 1.45 | 0.041 | 12.714 |
| | | | MS | 1.135 | 0.023 | 8.412 |

We present several evaluations showing how effectively the MS approach handles multiple source environments compared to the SS approach. Note that all results are evaluated on monaural RIRs, not binaural RIRs.

Commonly used metrics for evaluating RIR estimation are the STFT loss, T60 error, and DRR error. The STFT loss is the same multi-resolution loss used during training (Eq. 3); the T60 error is the mean squared error (MSE) between the T60 values of the predicted and ground-truth RIRs, and the DRR error is the MSE between their DRR values. Our test data comprise both real and simulated RIRs. For real RIR data, we used the BUT [18] and ACE [33] datasets. From the BUT dataset, we selected 3 of the 9 rooms ("Hotel_SkalskyDvur_Room112", "VUT_FIT_L227", "VUT_FIT_Q301") for testing. From the ACE dataset, we used the provided "babble noise" (a recording of multiple people talking) as the input signal instead of generating reverberant signals from RIRs. For simulated RIR data, we used rooms from the GWA and GRIR datasets that were not used during training. RIRs were randomly chosen from each room to construct all test recordings. To assess both single and multiple source environments, we prepared 6 sets of test data for each dataset, starting with a single source and gradually increasing the number of sources in the recording; the final set consists of reverberant recordings with all 6 sources playing simultaneously. Table 2 summarizes the performance of the SS and MS methods, with values averaged over 1 to 6 sources. We observe that training in the MS setting generally improves the model's performance on both real and simulated datasets, the exception being the STFT loss on BUT.
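The DRR and MSE error metrics can be sketched as follows. The definition of DRR varies across the literature, so the direct-path window used here (±2.5 ms around the absolute peak, with everything after it counted as reverberant energy) is an assumption, not necessarily the one used for Table 2. The T60 error is simply `mse` applied to per-RIR T60 estimates.

```python
import numpy as np

def drr_db(rir, fs, direct_window=0.0025):
    """Direct-to-reverberant ratio in dB.

    Assumption: the direct path is the +/- direct_window seconds around the
    absolute peak; all energy after that window counts as reverberant.
    """
    peak = int(np.argmax(np.abs(rir)))
    w = int(direct_window * fs)
    direct = np.sum(rir[max(peak - w, 0):peak + w + 1] ** 2)
    reverb = np.sum(rir[peak + w + 1:] ** 2)
    return 10.0 * np.log10(direct / reverb)

def mse(pred, true):
    """Mean squared error between predicted and ground-truth parameter values."""
    pred, true = np.asarray(pred, dtype=float), np.asarray(true, dtype=float)
    return float(np.mean((pred - true) ** 2))
```

Because DRR is expressed in dB and squared in the MSE, a few rooms with large DRR mismatches can dominate the average, which is consistent with the large DRR values on real data in Table 2.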

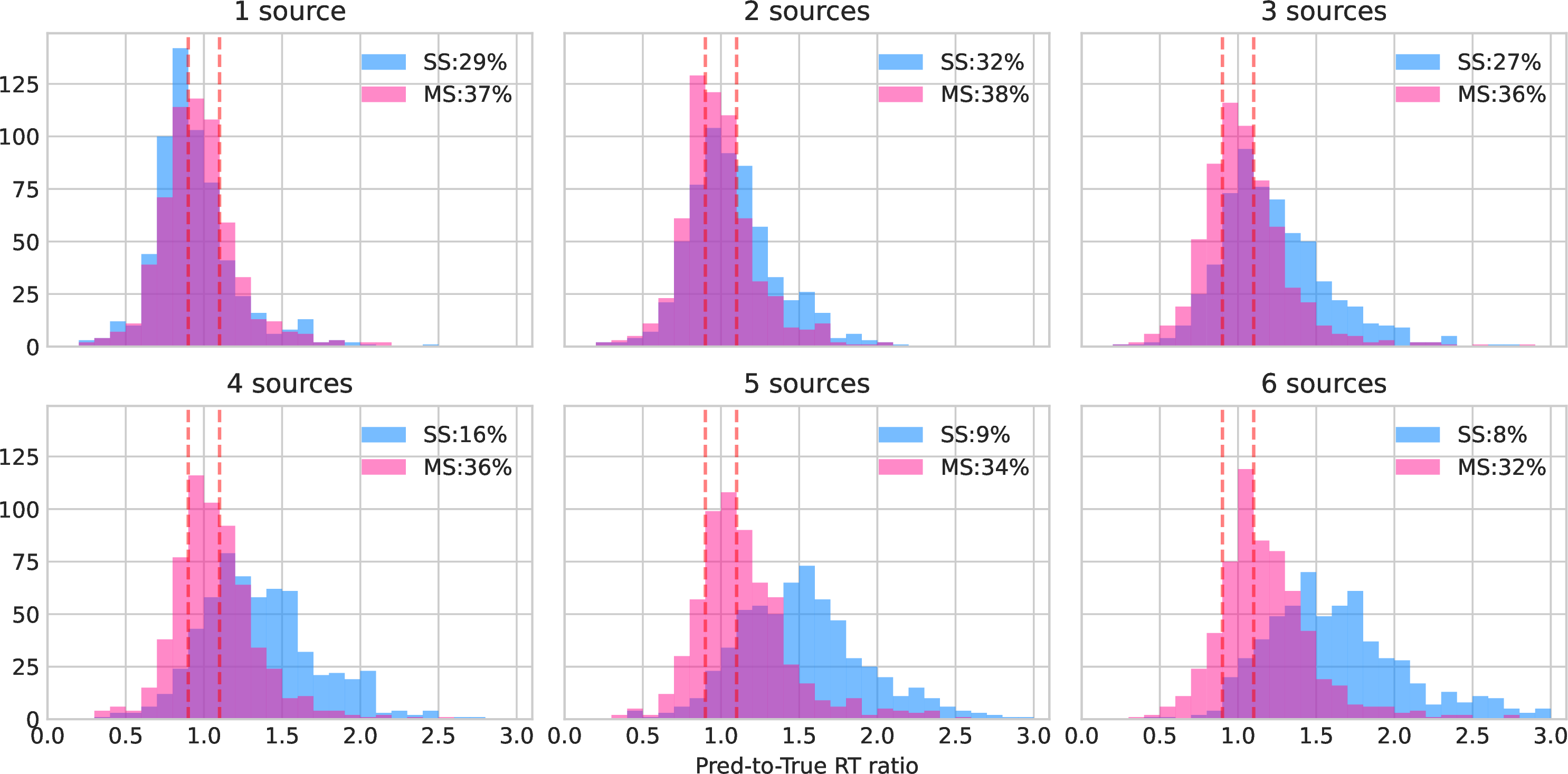

Figure 4: Performance trends of the SS and MS methods as the number of sources in the environment increases.

For practical applications, a model should perform stably regardless of the number of sources in the environment. We plotted the model's performance against the number of sources in the input signal (Figure 4). The model trained in the SS setting shows degrading performance as the number of sources increases, whereas the model trained in the MS setting performs consistently. Notably, the MS method outperforms the SS method even in the single source environment, which implies that the MS training setup can replace the SS setup for the proposed task.

Figure 5: Distribution of the pred-to-true T60 ratio for rooms with small T60 (T60 < 0.7 s). A ratio of 1.0 indicates an exactly correct T60 prediction. The red dashed lines represent the % error range, and the numbers in the legend box show the percentage of rooms predicted within this perceptual range. A total of 615 recordings from the GWA and GRIR test data were used.

Figure 6: Distribution of the pred-to-true T60 ratio for rooms with large T60 (T60 ≥ 0.7 s). Refer to Figure 5 for details. A total of 245 recordings from the GWA and GRIR test data were used.

We observed that the SS method tends to predict larger T60 values in multiple source environments. We believe this occurs because blind RIR estimation models most likely rely on decay patterns to predict RIRs [37]; when the input is a mixture of multiple reverberant sources, these patterns may be harder to identify for the individual sources. To verify this observation, we plotted the distribution of prediction-to-true T60 ratios per number of sources (Figures 5 and 6). A ratio of 1 indicates that the model predicted T60 exactly, a ratio less than 1 indicates that the predicted RIR has a smaller T60 than the ground truth, and a ratio greater than 1 indicates a larger T60. As with Figure 4, we expect a robust model to exhibit a consistent distribution regardless of the number of sources in the input signal.

The prediction-to-true T60 ratios for small and large T60 rooms are shown in Figures 5 and 6, respectively. The red dashed lines represent the % error range, which we consider the range of T60 values that are perceptually indistinguishable, and the numbers in the legend box show the percentage of rooms predicted within this range. In both figures, the SS and MS methods exhibit similar pred-to-true T60 ratio distributions in the single source scenario. However, as the number of sources increases, the distribution of the SS method shifts markedly toward higher T60 ratios, especially in rooms with smaller T60 values (Figure 5). This supports the observation that the SS model tends to overestimate T60 as the number of sources increases, which leads to a sharp decrease in the percentage of correctly predicted rooms, from 27% to 8%. In contrast, the MS method maintains a relatively stable distribution, indicating its robustness to the number of sources. In highly reverberant rooms with larger T60 values, neither model shows a clear trend as the number of sources increases (Figure 6), so the number of sources appears to have less impact in highly reverberant environments. We hypothesize that because identifying the decay pattern is already non-trivial in very reverberant rooms, adding more sources does not cause much additional confusion. Nevertheless, the MS method slightly outperforms the SS method in large T60 rooms as well.
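The per-source-count analysis behind Figures 5 and 6 can be sketched as follows. The function `t60_ratio_summary` is hypothetical, and the tolerance `tol` is a free parameter: the exact error range used for the red dashed lines is not reproduced here, so any value passed in is only an example. The split into small and large T60 rooms at 0.7 s is done by masking before calling the function.

```python
import numpy as np

def t60_ratio_summary(pred_t60, true_t60, n_sources, tol):
    """Pred-to-true T60 ratios grouped by the number of sources.

    tol: half-width of the acceptance band around a ratio of 1.0
         (e.g. tol=0.1 accepts ratios in [0.9, 1.1]); illustrative only.
    Returns {n_sources: (ratios, percent_within_band)}.
    """
    pred_t60 = np.asarray(pred_t60, dtype=float)
    true_t60 = np.asarray(true_t60, dtype=float)
    n_sources = np.asarray(n_sources)
    ratios = pred_t60 / true_t60           # 1.0 = exact, > 1.0 = overestimated T60
    summary = {}
    for n in np.unique(n_sources):
        r = ratios[n_sources == n]
        within = np.abs(r - 1.0) <= tol
        summary[int(n)] = (r, 100.0 * within.mean())
    return summary
```

For example, with arrays `pred`, `true`, and `n_src`, the small-T60 view corresponds to `t60_ratio_summary(pred[true < 0.7], true[true < 0.7], n_src[true < 0.7], tol)`.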

We present results separately for rooms with small and large T60 values because we observed that T60 errors are more audible in small T60 rooms, so demonstrating the model's robustness in such rooms is more valuable. Plotting the two groups separately reveals distinct performance trends in small and large T60 rooms and shows that the MS model performs more favorably in small T60 rooms.

3.2 Qualitative evaluation

We internally conducted a MUSHRA listening test with 16 participants. Participants were asked to rate the similarity between the reference signal, which was a ground-truth RIR convolved with an anechoic signal, and the test signals, which were the predicted RIRs from the SS and MS methods convolved with anechoic signals. The test covered 3 rooms with 3 questions per room: for each room, 2 questions simulated a multiple source scenario and 1 question simulated a single source scenario. The selected rooms had average T60 values of 0.37 s, 0.85 s, and 1.31 s.

Figure 7: MUSHRA test result.

Figure 7 shows the results for each room and for all three rooms combined ("Total"). For Room 1, the MS method appears to be rated slightly lower than the SS method, whereas for the other 2 rooms, the MS method receives visibly higher scores. To assess statistical significance, we performed the Kruskal-Wallis H-test, which confirmed significant differences among the median ratings (H = 413.13, p ≪ 0.001). We additionally performed a post-hoc pairwise comparison using Conover's test, which also showed a significant difference between the MS and SS methods (p ≪ 0.001). For the first room, a separate post-hoc pairwise test showed no statistically significant difference between the MS and SS methods (p = 0.080). In conclusion, the listening test indicates that listeners generally perceived the predicted RIRs from the MS method to be closer to the ground-truth RIR.
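For readers unfamiliar with the Kruskal-Wallis H-test, the sketch below computes the H statistic (with the standard tie correction) from groups of ratings. It is a self-contained illustration, not the analysis script used for the listening test; in practice `scipy.stats.kruskal` returns the same statistic together with a p-value (chi-square with k - 1 degrees of freedom for k groups), and the post-hoc Conover test is available in third-party packages such as scikit-posthocs (`posthoc_conover`). The toy groups in the test below are not study data.

```python
import numpy as np

def average_ranks(x):
    """1-based ranks in which tied values receive their average rank."""
    x = np.asarray(x, dtype=float)
    order = np.argsort(x, kind="mergesort")
    sorted_x = x[order]
    ranks = np.empty(len(x))
    i = 0
    while i < len(x):
        j = i
        while j + 1 < len(x) and sorted_x[j + 1] == sorted_x[i]:
            j += 1
        ranks[order[i:j + 1]] = 0.5 * (i + j) + 1.0
        i = j + 1
    return ranks

def kruskal_wallis_h(*groups):
    """Kruskal-Wallis H statistic with tie correction."""
    data = np.concatenate([np.asarray(g, dtype=float) for g in groups])
    n_total = len(data)
    ranks = average_ranks(data)
    h, start = 0.0, 0
    for g in groups:
        r = ranks[start:start + len(g)]
        h += r.sum() ** 2 / len(g)        # squared rank sum per group
        start += len(g)
    h = 12.0 / (n_total * (n_total + 1)) * h - 3.0 * (n_total + 1)
    _, counts = np.unique(data, return_counts=True)
    correction = 1.0 - np.sum(counts ** 3 - counts) / (n_total ** 3 - n_total)
    return h / correction
```

Because the test works on ranks, it makes no normality assumption, which suits bounded MUSHRA ratings.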

4 Summary

In this work, we presented a training method for blind RIR estimation that can handle signals from a multiple source environment. We showed that by training the model to estimate the representative RIR, the model predicts a stable RIR regardless of the number of sources in the input signal. The proposed method is therefore more robust in multiple source environments than previous single source training methods.

While the current method provides a promising direction, there is still much room for improving and exploring the model and the training method. One major limitation of the model used in this work is that it fixes the boundary between the early reflections and the late reverberation at 0.05 s; a model that adapts this boundary depending on, for example, the T60 value is one possible improvement. Finally, while the task introduced in this study was to estimate a single representative RIR, another direction is to identify the number of sources and estimate an RIR for each source, which would be especially useful for modeling individual sources in virtual reality applications.

References

¹ https://professionalsupport.dolby.com/s/article/Dolby-Atmos-Music-Studio-Best-Practices?language=en_US