On sampling determinantal and Pfaffian point processes on a quantum computer

May 25, 2023

Abstract

DPPs were introduced by Macchi as a model in quantum optics in the 1970s. Since then, they have been widely used as models and subsampling tools in statistics and computer science. Most applications require sampling from a DPP, and given their quantum origin, it is natural to wonder whether sampling a DPP on a quantum computer is easier than on a classical one. We focus here on DPPs over a finite state space, which are distributions over the subsets of \(\{1,\dots,N\}\) parametrized by an \(N\times N\) Hermitian kernel matrix. Vanilla sampling consists of two steps, of respective costs \(\mathcal{O}(N^3)\) and \(\mathcal{O}(Nr^2)\) operations on a classical computer, where \(r\) is the rank of the kernel matrix. A large first part of the current paper explains why the state of the art in quantum simulation of fermionic systems already yields quantum DPP sampling algorithms. We then modify existing quantum circuits, and discuss their insertion in a full DPP sampling pipeline that starts from practical kernel specifications. The bottom line is that, with \(P\) (classical) parallel processors, we can divide the preprocessing cost by \(P\) and build a quantum circuit with \(\mathcal{O}(Nr)\) gates that samples a given DPP, with depth varying from \(\mathcal{O}(N)\) to \(\mathcal{O}(r\log N)\) depending on qubit-communication constraints on the target machine. We also connect existing work on the simulation of superconductors to Pfaffian point processes, which generalize DPPs and would be a natural addition to the machine learner’s toolbox. In particular, we describe “projective” Pfaffian point processes, the cardinality of which has constant parity, almost surely. Finally, the circuits are empirically validated on a classical simulator and on 5-qubit IBM machines.

Keywords— Determinantal and Pfaffian point processes, fermionic systems, quantum circuits, Givens rotations.

1 Introduction

Determinantal point processes (DPPs) were introduced in the thesis of [1], recently translated and reprinted as [2]. Macchi’s motivation was the design of probabilistic models for free fermions in quantum optics; see the preface of [2] for a history of DPPs, and [3] for an extended discussion of the links between free fermions and DPPs. DPPs have known another surge of interest since the 90s for their application to random matrix theory [4]. More recently, they have been adopted as models or sampling tools in fields like spatial statistics [5], Monte Carlo methods [6], machine learning [7], or numerical linear algebra [8]. In the latter two fields, the considered DPPs are often finite, in the sense that a DPP is a probability measure over subsets of a ground set of finite cardinality \(N\gg 1\). Such a finite DPP is specified by an \(N\times N\) matrix called its kernel matrix, which we assume here to be Hermitian.

In machine learning as in numerical linear algebra, it is crucial to be able to sample from the considered finite DPPs. For instance, a famous DPP is the subset of edges of a uniform spanning tree in a connected graph [9]. Sampling these uniform spanning trees is a necessary step for building the randomized preconditioners of Laplacian systems in [10]. As another example, DPPs have been used as randomized summaries of large collections of items, such as a corpus of texts. Sampling the corresponding DPP then allows one to extract a small representative subset of sentences [11]. Other machine learning applications include constructing coresets [12], kernel matrix approximation [13], [14] and feature extraction for linear regression [15].

Much research has thus been devoted to sampling finite DPPs on a classical computer, either exactly or approximately. The default generic exact sampler is the ‘HKPV’ sampler [16]. To fix ideas, when applied to a projection DPP, i.e., a DPP that puts all its mass on subsets of some fixed cardinality \(r\leq N\), and assuming the kernel matrix is given in diagonalized form, HKPV has complexity \(\mathcal{O}(Nr^2)\). For DPPs on graphs such as uniform spanning trees, there also exist dedicated algorithms, such as the loop-erased random walks of [17], with an expected number of steps equal to the mean commute time to a chosen root node of the graph.

Given that DPPs are originally a model in quantum electronic optics, and are still the default mathematical object used to describe a quantum physical system known as free fermions [18], it is natural to ask whether finite DPPs can be sampled more efficiently on a quantum computer than on a classical computer. Somewhat implicitly, the question has actually already been tackled in a string of physics papers whose goal is the more ambitious quantum simulation of fermionic systems [19]–[22]. In a reverse cross-disciplinary direction, and still rather implicitly, the quantum algorithms therein are reminiscent of parallel QR decompositions, a key topic in numerical linear algebra [23], [24]. While finishing this work, we also realized that in the computer science literature, and independently of the aforementioned physics works, [25] recently proposed similar quantum algorithms to sample projection DPPs, as a building block for quantum data analysis pipelines. For our purpose, their main contribution is a quantum circuit with depth logarithmic in \(N\), whereas [22] only discuss depths linear in \(N\).

On our side, motivated by applications of finite DPPs in data science, we initiated in [3] a programme of reconnection of DPPs to their physical fermionic roots, to foster cross-disciplinary research between mathematics, computer science, and physics on the topic, even if our languages and lore are quite different. In particular, physicists have developed tools for the analysis and the construction of fermionic systems that we would like to apply to DPPs in data science, without reinventing the wheel. The current paper is part of this programme, and sums up our understanding of what the state of the art in physics tells us on sampling finite DPPs, after we follow in the footsteps of [1] and map a given DPP to a fermionic density operator. This cross-disciplinary, self-contained survey is our first contribution.

As an example of what our disciplines can bring to each other, our second contribution is to relate the Pfaffian point processes as defined by [26] – a generalization of DPPs that is natural in theoretical physics but has not yet been used in data science – to a quantum algorithm by [22] for solving the Bogoliubov-de Gennes Hamiltonian. As another example of the fertility of cross-disciplinary work, after we make the link between the quantum circuits of [20], [22] and parallel QR decompositions [24], many new variants of the quantum circuits in [22] become immediately available, adapting to a range of qubit-communication and hardware constraints. In particular, we exhibit a variant of the quantum circuits in [22] with the same dimensions as the best circuit in [25].

Overall, our conclusions on quantum DPP sampling are that if a projection kernel is given in diagonalized form \(\mathbf{K}= \mathbf{Q}^*\mathbf{Q}\), with \(\mathbf{Q}\in\mathbb{C}^{r\times N}\) a matrix with orthonormal rows, one can build quantum circuits that sample DPP(\(\mathbf{K}\)) with \(\mathcal{O}(rN)\) one- and two-qubit gates, and depth depending on what hardware constraints we put on which qubits can be jointly operated. Acting only on neighbouring qubits, depth is \(\mathcal{O}(N)\) [20], [22], while acting on arbitrary pairs of qubits can take the depth down to \(\mathcal{O}(r\log N)\); see our variant of [22] in Section 5.2.2 and the logarithmic depth Clifford loaders of [25]. Such depths (i.e., the largest number of gates applied to any single qubit) compare favourably to the time complexity \(\mathcal{O}(Nr^2)\) of the classical HKPV algorithm, or the expected complexity \(\mathcal{O}(Nr + r^3 \log r)\) of the randomized version of HKPV in [27], [28].

That being said, diagonalizing \(\mathbf{K}\) on a classical computer as a preprocessing step remains a \(\mathcal{O}(N^3)\) bottleneck, or at least \(\mathcal{O}(Nd^2)\) in the common case where the diagonalization of \(\mathbf{K}\) can be reduced to the SVD of an \(N\times d\) matrix. This bottleneck thus seems to cancel the advantage of using a quantum circuit if one insists on starting with \(\mathbf{K}\) stored on a classical computer. Yet, while the projection kernel \(\mathbf{K}\) may not be available in diagonalized form, it is common in data science applications [10], [15] to specify it implicitly, as a set of vectors spanning its range. As noted by [28], using a (classical) parallel QR algorithm and \(P\) processors, we can reduce the classical preprocessing step to \(\mathcal{O}(Nd^2/P)\) flops. Importantly for our quantum pipeline, we discuss here how to further reuse the intermediate steps of this preprocessing in the design of the quantum circuit to apply next. This yields a hybrid parallel/quantum algorithm to sample projection DPPs. Compared to the classical HKPV sampler, our pipeline thus provides a linear speedup. Compared to the expected complexity of the randomized classical algorithm discussed in [27], [28], we show a gain in the sampling step, but we arguably share the same bottleneck of classical parallel QR preprocessing. Finally, the necessity for classical preprocessing may disappear in the future, once \(\mathbf{K}\) can be assumed to be initially available as a quantum state, stored on a quantum computer.

The rest of the paper is organized as follows. In Section 2, we define DPPs and one of their generalizations, Pfaffian PPs (PfPPs). In Section 3, we introduce the vocabulary of quantum field theory, at the basis of the connection between PfPPs and free fermions. By sticking to the case of a finite-dimensional state space, we can avoid technical difficulties and provide a rigorous, stand-alone introduction, mostly following [29]. Section 4 is devoted to building a Hamiltonian starting from a DPP or a PfPP, so that a simple quantum measurement yields a sample from the corresponding point process. In Section 5, we show how [20], [22] build circuits to simulate the fermionic systems corresponding to our point processes. Our presentation insists on the implicit links with parallel QR algorithms, which allow us to introduce variants of the circuits with smaller complexity under assumptions on the qubit communication constraints of the target machine. Still in Section 5, we show that the projective PfPPs that we simulate generate sets of points with a fixed parity of their cardinality. Finally, we investigate in Section 6 the implementation of the circuits with the library Qiskit [30], and the noise when running the circuits on 5-qubit IBMQ machines [31].

Appendix 8 contains a few detailed proofs that we extracted from the main body of the paper. Appendix 9 contains a discussion on gate details to implement the basic operations introduced in the circuits of Section 5. Appendix 10 is an overview of the sources of error in current quantum computers and their orders of magnitude.

Notations.

The complex conjugate of a complex number \(z\) is denoted by \(\overline{z}\). Similarly, \(\overline{\mathbf{M}}\) denotes the entrywise complex conjugate of a matrix \(\mathbf{M}\). The transpose of \(\mathbf{M}\) reads \(\mathbf{M}^\top\) and its Moore-Penrose pseudo-inverse is \(\mathbf{M}^+\). For two Hermitian matrices \(\mathbf{M}\) and \(\mathbf{N}\) of the same size, we write \(\mathbf{M}\preceq \mathbf{N}\) if for all complex vectors \(\mathbf{v}\) we have \(\mathbf{v}^* \mathbf{M} \mathbf{v} \leq \mathbf{v}^* \mathbf{N}\mathbf{v}\). The adjoint of an operator \(A\) is written \(A^*\). Also, we denote the canonical basis elements of \(\mathbb{C}^N\) by \(\mathbf{e}_k\), \(1\leq k \leq N\).

For any positive integer \(k\), we write \([k] \triangleq \{1,\dots,k\}\). The matrix obtained by selecting the first \(k\) columns of \(\mathbf{M}\) is denoted by \(\mathbf{M}_{:, [k]}\). Similarly, the \(\ell\)th column of \(\mathbf{M}\) reads \(\mathbf{M}_{:, \ell}\). A point process on \([N]\) is a probability measure over subsets of \([N]\). When talking about a point process \(Y\), we use \(\mathbb{P}\) and \(\mathbb{E}_Y\) to denote the corresponding probability and expectation, like in \(\mathbb{P}(\{1,2\}\subseteq Y)\) or \(\mathbb{E}_Y f(Y)\). Finally, the sigmoid function is \(\sigma(x) = 1/(1+\exp(-x))\).

2 Determinantal and Pfaffian point processes

In this section, we introduce discrete determinantal point processes (DPPs), and refer to [7] for their elementary properties. We also introduce Pfaffian point processes (PfPPs, [32], [33]), a generalization of DPPs that has not yet been used in machine learning, to the best of our knowledge. As we shall see in Section 4.4, both DPPs and PfPPs naturally appear when modeling physical particles known as fermions.

2.1 Determinantal point processes

A determinantal point process is determined by the so-called inclusion probabilities.

Definition 1 (DPP). Let \(\mathbf{K}\in \mathbb{C}^{N\times N}\). A random subset \(Y \subseteq [N]\) is drawn from the DPP of marginal kernel \(\mathbf{K}\), denoted by \(Y\sim \mathrm{DPP}(\mathbf{K})\) if and only if \[\label{eq:def95dpp} \forall S \subseteq [N],\quad \mathbb{P}(S \subseteq Y) = \det (\mathbf{K}_{S}),\qquad{(1)}\] where \(\mathbf{K}_{S} = [\mathbf{K}_{i,j}]_{i,j \in S}\). We take as convention \(\det (\mathbf{K}_{\emptyset}) = 1\).

Note that the matrix \(\mathbf{K}\) is called the marginal kernel since it determines the inclusion probabilities of subsets of items, in the same way the adjective marginal is used for the distribution of a subset of random variables in probability theory. In particular, the one-item inclusion probabilities are given by the diagonal of the kernel, namely \(\mathbb{P}(\{i\} \subseteq Y) = \mathbf{K}_{i,i}\) for all \(i \in [N]\). For all pairs \(\{i,j\}\), \(|\mathbf{K}_{i,j}|\) is interpreted as the similarity of \(i\) and \(j\) – similar items having a low probability to be jointly sampled; see (3) below for more details. A priori, it is not obvious that a given complex matrix \(\mathbf{K}\) defines a DPP.

Theorem 1 ([1], [34]). When \(\mathbf{K}\) is Hermitian, existence of \(\mathrm{DPP}(\mathbf{K})\) is equivalent to the spectrum of \(\mathbf{K}\) being included in \([0,1]\).

As a first comment, note that if \(\mathbf{K}\) is a Hermitian kernel associated with a DPP, the complex conjugate kernel \(\overline{\mathbf{K}}\) defines a DPP with the same law. This is because the eigenvalues of any principal submatrix of \(\mathbf{K}\) are real, so that its determinant is unchanged by complex conjugation. Second, as a particular case of Theorem 1, when the spectrum of \(\mathbf{K}\) is included in \(\{0,1\}\), we call \(\mathbf{K}\) a projection kernel, and the corresponding DPP a projection DPP. Letting \(r\) be the number of unit eigenvalues of its kernel, samples from a projection DPP have fixed cardinality \(r\) with probability 1 [16]. In applications, projection kernels of rank \(r\) are often available in one of two forms: either \[\label{e:projection95kernel95gram95schmidt} \mathbf{K}= \mathbf{A}(\mathbf{A}^*\mathbf{A})^{+} \mathbf{A}^*,\tag{1}\] where \(\mathbf{A}\in \mathbb{C}^{N \times M}\) is any matrix with rank \(r\leq \min(N, M)\), or in diagonalized form \[\label{e:projection95kernel95diagonalized} \mathbf{K}= \mathbf{U}\mathbf{U}^*,\tag{2}\] where \(\mathbf{U}\in \mathbb{C}^{N\times r}\) has orthonormal columns. We give an example application for each form.

Example 1 (Uniform spanning trees). Consider a finite connected graph \(G\) with \(M\) vertices and \(N\) edges, encoded by its oriented edge-vertex incidence matrix \(\mathbf{A}\in\{-1,0,1\}^{N\times M}\). There are a finite number of spanning trees of \(G\), and we draw one uniformly at random. The edges in that random tree correspond to a subset \(Y\) of the indices \([N]\) of the rows of \(\mathbf{A}\). It turns out [9] that \(Y\) is a projection DPP with kernel (1).
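As a sanity check of Example 1, the following minimal sketch builds the kernel (1) for a triangle graph and reads off the probability of each pair of edges as a principal minor. The graph, the edge orientations, and all variable names are illustrative assumptions, not taken from [9]. Since every pair of edges of a triangle is a spanning tree, each pair should come out with probability close to 1/3.

```python
import itertools
import numpy as np

# Oriented edge-vertex incidence matrix of a triangle (illustrative choice):
# rows are the N = 3 edges, columns are the M = 3 vertices.
A = np.array([
    [1.0, -1.0, 0.0],   # edge 0: vertex 0 -> vertex 1
    [0.0, 1.0, -1.0],   # edge 1: vertex 1 -> vertex 2
    [-1.0, 0.0, 1.0],   # edge 2: vertex 2 -> vertex 0
])

# Projection kernel K = A (A^* A)^+ A^*, the projector onto the column space of A.
K = A @ np.linalg.pinv(A.conj().T @ A) @ A.conj().T
r = int(round(np.trace(K).real))  # rank of the projection, here M - 1 = 2

# For a projection DPP, |Y| = r almost surely, so P(Y = S) = det(K_S) when |S| = r.
probs = {S: np.linalg.det(K[np.ix_(S, S)]).real
         for S in itertools.combinations(range(3), r)}
print(r, probs)
```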

Uniform spanning trees are useful in many contexts, e.g., to build preconditioners for certain linear systems [10]. Another example of application of DPPs is column-subset selection.

Example 2 (Column subset selection). [15] propose to select \(k\) columns of a “fat” matrix2 \(\mathbf{X}\in\mathbb{R}^{n\times N}\), \(N\gg n\), using the projection DPP with rank-\(k\) kernel \[\label{e:ayoub95kernel} \mathbf{K}= \mathbf{V}_{:,[k]}\mathbf{V}_{:,[k]}^\top,\qquad{(2)}\] where \(\mathbf{X}= \mathbf{U}\mathbf{\Sigma} \mathbf{V}^\top\) is the singular value decomposition of \(\mathbf{X}\), and \(\mathbf{V}_{:,[k]}\) is the matrix given by the first \(k\) columns of \(\mathbf{V}\). This is an example of a DPP with a kernel given in the diagonalized form (2). [15] prove that the projection of \(\mathbf{X}\) onto the subspace spanned by the selected columns is essentially an optimal low-rank approximation of \(\mathbf{X}\). This ensures statistical guarantees in sketched linear regression.
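The kernel of Example 2 can be formed in a few lines. The sketch below is only an illustration under assumed sizes and a random Gaussian matrix, not the experimental setup of [15]; it relies on the fact that `numpy.linalg.svd` returns \(\mathbf{V}^\top\) with singular values sorted in decreasing order.

```python
import numpy as np

rng = np.random.default_rng(0)
n, N, k = 4, 10, 2                      # illustrative sizes, with N > n
X = rng.standard_normal((n, N))         # a "fat" matrix

# Thin SVD X = U diag(s) V^T; NumPy returns V^T as its third output.
_, _, Vt = np.linalg.svd(X, full_matrices=False)
V_k = Vt[:k].T                          # first k right singular vectors, shape (N, k)
K = V_k @ V_k.T                         # rank-k projection kernel

# One-item inclusion probabilities P(i in Y) = K_ii; they sum to k.
inclusion = np.diag(K)
print(inclusion.sum())
```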

Because we assume that the kernel is Hermitian, a DPP can be seen as a repulsive distribution, in the sense that for all distinct \(i,j\in [N]\), \[\begin{align} \mathbb{P}(\{i,j\} \subseteq Y) &= \mathbf{K}_{i,i} \mathbf{K}_{j,j} - \mathbf{K}_{i,j}\overline{\mathbf{K}_{i,j}}\label{e:proba95DPP95pair}\\ &= \mathbb{P}(\{i\} \subseteq Y)\mathbb{P}(\{j\} \subseteq Y) - \vert\mathbf{K}_{i,j}\vert^2\nonumber\\ &\leq \mathbb{P}(\{i\} \subseteq Y)\mathbb{P}(\{j\} \subseteq Y).\nonumber \end{align}\tag{3}\]

This repulsiveness enforces diversity in samples, and is particularly adequate in applications where a DPP is used to extract a small diverse subset of a large collection of \(N\) items. Beyond column subset selection, this diversity is natural in machine learning tasks such as summary extraction [7] or experimental design [35], [36].
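To make the objects of this section concrete, here is a minimal classical sketch of a sequential (chain-rule) sampler for a projection DPP given in the diagonalized form (2). It follows the general idea of the HKPV sampler mentioned in the introduction, but it is not the implementation of [16]: the function name, the pivoting rule, and the full QR re-orthonormalization at each step are our own illustrative choices.

```python
import numpy as np

def sample_projection_dpp(U, rng):
    """Draw one sample from DPP(U U^*), with U an (N, r) matrix with orthonormal columns."""
    V = np.array(U, dtype=complex)
    sample = []
    while V.shape[1] > 0:
        # Law of the next item: squared row norms of the current orthonormal basis.
        p = np.sum(np.abs(V) ** 2, axis=1)
        i = int(rng.choice(len(p), p=p / p.sum()))
        sample.append(i)
        # Restrict the subspace to vectors vanishing at coordinate i:
        # use one column as a pivot to cancel row i, then drop that column.
        j = int(np.argmax(np.abs(V[i])))
        V = V - np.outer(V[:, j], V[i] / V[i, j])
        V = np.delete(V, j, axis=1)
        if V.shape[1] > 0:
            V, _ = np.linalg.qr(V)      # re-orthonormalize the remaining columns
    return sorted(sample)

# Usage on a random rank-3 projection kernel over N = 6 items.
rng = np.random.default_rng(0)
U, _ = np.linalg.qr(rng.standard_normal((6, 3)))
print(sample_projection_dpp(U, rng))
```

Each selected row is zeroed in the remaining basis, so an item cannot be drawn twice, which is the repulsion at work. Recomputing a QR decomposition at every step keeps the sketch short but makes it more expensive than the \(\mathcal{O}(Nr^2)\) cost quoted for HKPV.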

2.2 Pfaffian point processes

Similarly to the determinant, the Pfaffian of a \(2k\times 2k\) matrix is a polynomial of the matrix entries \[\begin{align} \mathrm{Pf}(\mathbf{A}) = \frac{1}{2^k k!}\sum_{\sigma } \mathrm{sgn}(\sigma) \mathbf{A}_{\sigma(1)\sigma(2)}\dots \mathbf{A}_{\sigma(2k-1)\sigma(2k)}. \end{align}\] It is easy to see that the Pfaffian of \(\mathbf{A}\) is equal to the Pfaffian of its antisymmetric part \((\mathbf{A}-\mathbf{A}^\top)/2\). For \(\mathbf{A}\) skew-symmetric, the definition simplifies to \[\begin{align} \mathrm{Pf}(\mathbf{A}) = \sum_{\sigma \text{ contraction}} \mathrm{sgn}(\sigma) \mathbf{A}_{\sigma(1)\sigma(2)}\dots \mathbf{A}_{\sigma(2k-1)\sigma(2k)}. \end{align}\] Recall that a contraction of order \(m\) (\(m\) even) is a permutation such that \(\sigma(1)< \sigma(3) < ... < \sigma(m - 1)\), and \(\sigma(2i - 1) < \sigma(2i)\) for \(i \leq m/2\). To relate to determinants, note that the Pfaffian of a skew-symmetric matrix \(\mathbf{A}\) of even size satisfies \(\det \mathbf{A} = (\mathrm{Pf}\mathbf{A})^2\).
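For small matrices, the Pfaffian can be evaluated by expansion along the first row, a standard identity that reorganizes the sum over contractions. The sketch below is meant for checking small examples only, since its cost grows exponentially with the matrix size; the function name and the random test matrix are illustrative assumptions.

```python
import numpy as np

def pfaffian(A):
    """Pfaffian of a skew-symmetric matrix of even size, by expansion along the first row."""
    A = np.asarray(A)
    m = A.shape[0]
    if m % 2 == 1:
        raise ValueError("the Pfaffian is only defined here for even sizes")
    if m == 0:
        return 1.0                      # convention for the empty matrix
    if m == 2:
        return A[0, 1]
    total = 0.0
    for j in range(1, m):
        keep = [k for k in range(m) if k not in (0, j)]
        # Remove rows and columns 0 and j, with alternating signs.
        total += (-1) ** (j - 1) * A[0, j] * pfaffian(A[np.ix_(keep, keep)])
    return total

# Check det(A) = Pf(A)^2 on a random complex skew-symmetric 4 x 4 matrix.
rng = np.random.default_rng(0)
B = rng.standard_normal((4, 4)) + 1j * rng.standard_normal((4, 4))
A = B - B.T
print(np.isclose(pfaffian(A) ** 2, np.linalg.det(A)))
```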

Definition 2 (PfPP). Let \(\mathbb{K}:[N]\times [N] \rightarrow \mathbb{C}^{2\times 2}\) satisfy \(\mathbb{K}(i,j)^\top = -\mathbb{K}(j,i)\) for all \(1\leq i,j\leq N\). A random subset \(Y \subseteq [N]\) is drawn from the PfPP of marginal kernel \(\mathbb{K}\), denoted by \(Y\sim \mathrm{PfPP}(\mathbb{K})\) if and only if \[\label{eq:def95pfpp} \forall S \subseteq [N],\quad \mathbb{P}(S \subseteq Y) = \mathrm{Pf}(\mathbf{K}_S),\qquad{(3)}\] where \(\mathbf{K}_S = [\mathbb{K}(i,j)]_{i,j\in S}\) is a \(2|S|\times 2|S|\) complex matrix made of \(|S|\times |S|\) blocks of size \(2\times 2\).

Sufficient conditions on \(\mathbb{K}\) for the existence of \(\mathrm{PfPP}(\mathbb{K})\) were given by [37] when \(\big(\begin{smallmatrix} 0 & -1\\ 1 & 0 \end{smallmatrix}\big)\mathbb{K}(i,j)\) can be mapped to a self-adjoint quaternionic kernel taking values in the set of \(2\times 2\) complex matrices. Later [38] gave an equivalent of the Macchi-Soshnikov Theorem 1 for this type of processes; see [39]. This class of Pfaffian PPs was also studied by [40] in the continuous setting.

More recently, [26] gave another sufficient condition for the existence of a Pfaffian point process on a discrete ground set, which is well-suited to the processes considered in our paper. The PfPPs of [26] correspond to the case where \(\big(\begin{smallmatrix} 0 & 1\\ 1 & 0 \end{smallmatrix}\big)\mathbb{K}(i,j)\) is a self-adjoint complex kernel. The intersection of the classes of PfPPs studied by [37] and [26] is simply the set of PfPPs for which the \(2\times 2\) matrix \(\mathbb{K}(i,j)\) has a vanishing diagonal, i.e., DPPs with Hermitian kernels; see Example 3 below.

Before going further, we introduce a few useful notations. Consider a \(2N\times 2N\) complex matrix \(\mathbf{S},\) viewed as made of four \(N\times N\) blocks. Define the following transformation of \(\mathbf{S}\), called here particle-hole transformation, which consists in taking the complex conjugation and exchanging blocks along diagonals, i.e. \[\mathrm{ph}(\mathbf{S}) = \mathbf{C} \overline{\mathbf{S}} \mathbf{C}, \text{ with } \mathbf{C}= \begin{pmatrix} \mathbf{0} & \mathbf{I}\\ \mathbf{I}& \mathbf{0} \end{pmatrix}.\]

Proposition 2 ([26]). Let \(\mathbf{S}= \left(\begin{smallmatrix} \mathbf{S}_{11} & \mathbf{S}_{12}\\ \mathbf{S}_{21} & \mathbf{S}_{22} \end{smallmatrix}\right)\) be a Hermitian \(2N\times 2N\) matrix such that \(0 \preceq \mathbf{S}\preceq \mathbf{I}\) and \(\mathrm{ph}(\mathbf{S}) = \mathbf{I}- \mathbf{S}\). There exists a Pfaffian point process with the marginal kernel \[\mathbb{K}_{\mathbf{S}}(i,j) = \begin{pmatrix} \mathbf{S}_{21}(i,j) & \mathbf{S}_{22}(i,j)\\ \mathbf{S}_{11}(i,j) - \delta_{ij} & \mathbf{S}_{12}(i,j) \end{pmatrix}, \quad 1\leq i,j \leq N.\]

A few remarks are in order. First, the properties of \(\mathbf{S}\) allow us to simplify the expression of the kernel to \[\begin{align} \mathbb{K}_{\mathbf{S}}(i,j) = \left(\begin{matrix} \mathbf{S}_{21}(i,j) & \mathbf{S}_{22}(i,j)\\ -\mathbf{S}_{22}(j,i) & \overline{\mathbf{S}_{21}}(j,i) \end{matrix}\right), \label{eq:K95pfaffian95kernel} \end{align}\tag{4}\] where \(\mathbf{S}_{21}\) is skew-symmetric and \(\mathbf{S}_{22}\) is Hermitian. Second, for a matrix \(\mathbf{S}\) as in Proposition 2, we easily see that \(\mathbb{K}_{\mathbf{S}}\) and \(\mathbb{K}_{\overline{\mathbf{S}}}\) yield a PfPP with the same law. This is a consequence of the identity \[\begin{align} \mathbb{K}_{\overline{\mathbf{S}}}(i,j) = -\begin{pmatrix} 0 & 1\\ 1 & 0 \end{pmatrix} \mathbb{K}_{\mathbf{S}}(i,j) \begin{pmatrix} 0 & 1\\ 1 & 0 \end{pmatrix}.\label{e:pf95kernel95conjugate} \end{align}\tag{5}\] When no ambiguity is possible, we suppress the dependence on \(\mathbf{S}\) to simplify expressions. Third, DPPs with Hermitian kernels appear as particular instances of the PfPPs of Proposition 2.

Example 3 (vanishing diagonal). Let \(\mathbf{S}\) satisfy the conditions of Proposition 2, and let \(\mathbb{K}_{\mathbf{S}}\) be the corresponding Pfaffian kernel. If \(\mathbf{S}_{21} = 0\), \(Y \sim \mathrm{PfPP}(\mathbb{K}_{\mathbf{S}})\) is distributed according to \(\mathrm{DPP}(\mathbf{S}_{22})\).

Fourth, for \(Y \sim \mathrm{PfPP}(\mathbb{K}_{\mathbf{S}})\) and \(i\neq j\), the \(2\)-point correlation function is \[\begin{align} \mathbb{P}(\{i,j\} \subseteq Y) &= \mathbf{S}_{22}(i,i)\mathbf{S}_{22}(j,j) - |\mathbf{S}_{22}(i,j)|^2 + |\mathbf{S}_{21}(i,j)|^2\nonumber\\ &= \mathbb{P}(\{i\} \subseteq Y)\mathbb{P}(\{j\} \subseteq Y) - |\mathbf{S}_{22}(i,j)|^2 + |\mathbf{S}_{21}(i,j)|^2. \label{e:2-point-correlation-PfPP} \end{align}\tag{6}\] Compared with DPPs with Hermitian kernels, Equation (6) suggests that a Pfaffian point process as in Proposition 2 is less repulsive than the related determinantal process \(\mathrm{DPP}(\mathbf{S}_{22})\) – an intuition for this fact is given in Section 4.4 below. Relatedly, note that the \(1\)-point correlation functions of \(\mathrm{DPP}(\mathbf{S}_{22})\) and \(\mathrm{PfPP}(\mathbb{K}_{\mathbf{S}})\) for \(\mathbb{K}_{\mathbf{S}}\) given in (4) are the same. In particular, the expected cardinality of \(Y\sim \mathrm{PfPP}(\mathbb{K}_{\mathbf{S}})\) is simply \(\mathop{\mathrm{\mathbb{E}}}| Y | = \Tr(\mathbf{S}_{22})\).
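The 2-point formula (6) can be checked numerically. The sketch below builds a random matrix \(\mathbf{S}\) satisfying the assumptions of Proposition 2, assembles the block kernel, and compares a \(4\times 4\) Pfaffian with the right-hand side of (6). The way \(\mathbf{S}\) is generated (antisymmetrizing a random Hermitian matrix under the particle-hole transformation, then rescaling) is our own illustrative device, and the helper names are hypothetical.

```python
import numpy as np

def pfaffian(A):
    """Pfaffian of a small even-size skew-symmetric matrix, by first-row expansion."""
    m = A.shape[0]
    if m == 2:
        return A[0, 1]
    total = 0.0
    for j in range(1, m):
        keep = [k for k in range(m) if k not in (0, j)]
        total += (-1) ** (j - 1) * A[0, j] * pfaffian(A[np.ix_(keep, keep)])
    return total

def ph(S):
    """Particle-hole transformation: conjugate, then exchange blocks along diagonals."""
    n = S.shape[0] // 2
    C = np.block([[np.zeros((n, n)), np.eye(n)], [np.eye(n), np.zeros((n, n))]])
    return C @ S.conj() @ C

rng = np.random.default_rng(1)
N = 3
M = rng.standard_normal((2 * N, 2 * N)) + 1j * rng.standard_normal((2 * N, 2 * N))
M = (M + M.conj().T) / 2                 # random Hermitian matrix
T = (M - ph(M)) / 2                      # Hermitian, with ph(T) = -T
T = T / (2 * np.linalg.norm(T, 2))       # spectrum now within [-1/2, 1/2]
S = np.eye(2 * N) / 2 + T                # 0 <= S <= I and ph(S) = I - S

S11, S12, S21, S22 = S[:N, :N], S[:N, N:], S[N:, :N], S[N:, N:]

def kernel_block(i, j):
    """The 2 x 2 block K_S(i, j) of Proposition 2."""
    return np.array([[S21[i, j], S22[i, j]],
                     [S11[i, j] - (i == j), S12[i, j]]])

def K_sub(idx):
    """Matrix K_S for the index set idx, made of 2 x 2 blocks."""
    return np.block([[kernel_block(i, j) for j in idx] for i in idx])

i, j = 0, 2
lhs = pfaffian(K_sub([i, j]))
rhs = S22[i, i] * S22[j, j] - abs(S22[i, j]) ** 2 + abs(S21[i, j]) ** 2
print(abs(lhs - rhs))
```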

We end this section with a result about sample parity, which will be further discussed later in Section 4.4. Lemma 1 below gives the expected parity of the cardinality of a sample of a PfPP. Since we are not aware of any similar statement in the literature, we also provide a short proof of Lemma 1.

Lemma 1 (Parity of PfPP samples). Let \(\mathbb{K}\) be the kernel of a Pfaffian point process on \([N]\) and let \(\mathbb{J}\) be a \(2N\times 2N\) block matrix such that \(\mathbb{J}(i,j) = \delta_{ij} \left(\begin{smallmatrix} 0 & 1\\ -1 & 0 \end{smallmatrix}\right).\) The expected parity of the cardinality of a sample of \(Y\sim \mathrm{PfPP}(\mathbb{K})\) is \(\mathop{\mathrm{\mathbb{E}}}_{Y}(-1)^{|Y|} = \mathrm{Pf}\left(\mathbb{J} - 2 \mathbb{K}\right).\)

Proof. We shall prove a slightly more general result, which relies on the identity \[\mathrm{Pf}(\mathbb{J} + \mathbb{M}) = \sum_{S\subseteq [N]}\mathrm{Pf}\left(\mathbf{M}_S\right),\] which holds for any skew-symmetric block matrix \(\mathbb{M}\); see [32]. Note that the term corresponding to \(\emptyset\) (and equal to \(1\)) is included in the sum.

Let \(\alpha \neq 1\). We have \[\begin{align} \mathop{\mathrm{\mathbb{E}}}_{Y}(\alpha^{|Y|}) = \sum_{S\subseteq [N]}(\alpha -1)^{|S|} \mathrm{Pf}(\mathbf{K}_S) = \sum_{S\subseteq [N]}\mathrm{Pf}\left((\alpha -1)\mathbf{K}_S\right) = \mathrm{Pf}\left(\mathbb{J} + (\alpha - 1) \mathbb{K}\right), \end{align}\] where the first equality can be derived from [26]. This is the desired result if we take \(\alpha = -1\). ◻
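The identity used in the proof can be verified by hand on \(N = 2\) items. The sketch below does so in the determinantal special case of Example 3, where the kernel blocks have a vanishing diagonal, using the explicit three-term formula for a \(4\times 4\) Pfaffian. The numerical values of the kernel are an arbitrary illustrative choice, and the comparison with \(\det(\mathbf{I} - 2\mathbf{K})\) relies on the classical expansion \(\det(\mathbf{I}+\mathbf{M}) = \sum_S \det(\mathbf{M}_S)\).

```python
import numpy as np

def pf4(A):
    """Pfaffian of a 4 x 4 skew-symmetric matrix (explicit three-term formula)."""
    return A[0, 1] * A[2, 3] - A[0, 2] * A[1, 3] + A[0, 3] * A[1, 2]

# A Hermitian kernel on N = 2 items with spectrum in [0, 1] (illustrative values).
K = np.array([[0.6, 0.2 + 0.1j],
              [0.2 - 0.1j, 0.3]])

def block(i, j):
    """Pfaffian kernel block of a DPP with Hermitian kernel K (vanishing diagonal)."""
    return np.array([[0.0, K[i, j]], [-K[j, i], 0.0]])

KK = np.block([[block(i, j) for j in range(2)] for i in range(2)])
J = np.kron(np.eye(2), np.array([[0.0, 1.0], [-1.0, 0.0]]))

alpha = -1.0
# Sum over subsets S of (alpha - 1)^{|S|} Pf(K_S): empty set, two singletons, full set.
lhs = 1 + (alpha - 1) * (K[0, 0] + K[1, 1]) + (alpha - 1) ** 2 * pf4(KK)
rhs = pf4(J + (alpha - 1) * KK)                      # Pf(J - 2 K), as in Lemma 1
det_form = np.linalg.det(np.eye(2) + (alpha - 1) * K)  # det(I - 2 K) for the DPP
print(lhs, rhs, det_form)
```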

Below, in 11, we will see that samples of a PfPP associated with a projective \(\mathbf{S}\) have a fixed parity.

3 From qubits to fermions

The content of this section is standard; see e.g., the reference textbook [41] for quantum computing basics and [29] for the Jordan-Wigner transform. We also refer to [3], which presents all the basic elements required in this section in the context of optical measurements and the resulting point processes.

3.1 Models in quantum physics

A quantum model is given by \((i)\) a Hilbert space \((\mathbb{H},\braket{\cdot}{\cdot})\) called the state space, and \((ii)\) a collection of self-adjoint operators \(\mathbb{H}\to \mathbb{H}\) called observables, of which one particular observable \(H:\mathbb{H}\rightarrow \mathbb{H}\) is singled out and called the Hamiltonian. Let \(\psi \neq 0\) be an element of \(\mathbb{H}\). All elements of the form \(z \psi\) for a complex \(z\neq 0\) represent the same quantum state, called a pure quantum state, as opposed to more general states to be defined later. To simplify expressions, it is conventional to only consider elements \(\psi\) of unit norm, and to denote a unit-norm pure state by the “ket” \(\ket{\psi}\), keeping in mind that, as long as \(|z|=1\), all vectors \(z\ket{\psi}\in\mathbb{H}\) represent the same state. The corresponding “bra” \(\bra{\psi}\) is the linear form \(\ket{x}\mapsto \braket{\psi}{x}\).

3.1.1 An observable and a state define a random variable

Henceforth, we assume that \(\mathbb{H}\) has finite dimension \(d\). Take an observable \(A\). By the spectral theorem, \(A\) can be diagonalized in an orthonormal basis, say with eigenpairs \((\lambda_i, u_i)\) with \(1\leq i \leq d\). For simplicity, we momentarily assume that all the eigenvalues of \(A\) have multiplicity \(1\). Together with a state \(\ket\psi\), the observable \(A = \sum_i \lambda_i u_i u_i ^*\) describes a random variable \(X_{A,\psi}\) on \(\mathrm{spec}(A) = \{\lambda_1, \dots, \lambda_d\}\), through \[\label{e:born95pure95state} \mathbb{P}(X_{A, \psi} = \lambda_i) = \vert\braket{\psi}{u_i}\vert^2.\tag{7}\]

When modeling statistical uncertainty on a state, like when describing the noisy output of an experimental device, physical states are not modeled as unit-norm vectors of \(\mathbb{H}\), but rather as positive trace-one operators. To see how, we first map \(\ket{\psi}\) to the rank-one projector \(\rho = \ketbra{\psi}\). Then the distribution (7) can be equivalently defined as \[\label{e:born} \mathbb{P}(X_{A, \rho} = \lambda_i) = \tr\left[ \rho 1_{\{\lambda_i\}}(A) \right],\tag{8}\] where for any \(f:\mathbb{R}\rightarrow \mathbb{R}\), we have \(f(A) = \sum_i f(\lambda_i) u_i u_i ^*.\) In particular, the expectation of \(X_{A, \ketbra{\psi}}\) is \(\bra{\psi} A \ket{\psi}\). Note that definition (8) generalizes to operators \(A\) with eigenvalues with arbitrary multiplicity, and to states \(\rho\) beyond projectors. In particular, for \(\mu\) a probability measure on \(\mathbb{H}\), \[\label{e:mixed95state} \rho = \mathop{\mathrm{\mathbb{E}}}_{\psi\sim\mu} \ketbra{\psi}\tag{9}\] still defines a probability measure on the spectrum of \(A\) through (8). The expectation of that distribution, also called the expectation value of operator \(A\), is \(\langle A\rangle_\rho \triangleq \tr \rho A\). In physics, the association (8) of a state-observable pair \((\rho, A)\) with the random variable \(X_{A,\rho}\) is known as Born’s rule.

Any \(\rho\) that is not a rank-one projector is called a mixed state, as opposed to rank-one projectors, which are pure states. Mixed states like (9) are commonly used to describe any uncertainty in the actual state of an experimental entity.3
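Born's rule is easy to simulate in finite dimension. The following sketch, with an arbitrary random observable and arbitrary states chosen purely for illustration, computes the law (7) for a pure state, recovers it from the density operator through (8), and checks that an equal-weight mixture of two pure states still yields a probability vector whose mean is \(\tr \rho A\).

```python
import numpy as np

rng = np.random.default_rng(0)
d = 4

# A random observable (Hermitian matrix) and its eigendecomposition A = sum_i lam_i u_i u_i^*.
B = rng.standard_normal((d, d)) + 1j * rng.standard_normal((d, d))
A = (B + B.conj().T) / 2
lam, U = np.linalg.eigh(A)               # columns of U are the eigenvectors u_i

# Pure state: P(X = lam_i) = |<psi|u_i>|^2.
psi = rng.standard_normal(d) + 1j * rng.standard_normal(d)
psi /= np.linalg.norm(psi)
p_pure = np.abs(U.conj().T @ psi) ** 2

def born(rho):
    """Law of X_{A, rho}: entry i is tr[rho u_i u_i^*]."""
    return np.array([np.trace(rho @ np.outer(U[:, i], U[:, i].conj())).real
                     for i in range(d)])

rho = np.outer(psi, psi.conj())          # rank-one projector |psi><psi|
p_rho = born(rho)

# Mixed state: equal-weight mixture of |psi> and the first computational basis vector.
phi = np.zeros(d, dtype=complex)
phi[0] = 1.0
rho_mixed = 0.5 * rho + 0.5 * np.outer(phi, phi.conj())
p_mixed = born(rho_mixed)
mean_mixed = p_mixed @ lam               # should match tr(rho_mixed A)
print(p_pure, p_mixed)
```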

3.1.2 Commuting observables and a state define a random vector

When all pairs of a set of observables \(A_1, \dots, A_p\) commute, then these observables can be diagonalized in the same orthonormal basis \((u_i)\), and (8) can be naturally generalized to describe a random vector \((X_{A_j,\rho})\) of dimension \(p\), with values in the Cartesian product \[\mathrm{spec}(A_1) \times \dots \times \mathrm{spec}(A_p).\] More precisely, the law of \((X_{A_j,\rho})\) is given by \[\label{e:vector95born} \mathbb{P} (X_{A_1,\rho} = x_1, \dots, X_{A_p,\rho} = x_p) = \tr\left[ \rho 1_{\{x_1\}}(A_1) \dots 1_{\{x_p\}}(A_p) \right],\tag{10}\] see e.g. [42] for an introduction. In this paper, we will associate a Pfaffian point process to a particular mixed state and a set of commuting observables, which can respectively be efficiently prepared and measured on a quantum computer. To define these objects, we first need to explain how physicists build Hamiltonians of fermionic systems.
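As a toy illustration of the joint law (10), consider two qubits and the commuting observables \(\sigma_z\otimes \mathbf{I}\) and \(\mathbf{I}\otimes\sigma_z\). The state below, an equal superposition of \(\ket{00}\) and \(\ket{11}\), and the helper `indicator` are our own illustrative choices. Since both observables square to the identity, their spectral projectors are simply \((\mathbf{I} \pm A)/2\).

```python
import itertools
import numpy as np

I2 = np.eye(2)
sz = np.diag([1.0, -1.0])
A1 = np.kron(sz, I2)                     # sigma_z on the first qubit
A2 = np.kron(I2, sz)                     # sigma_z on the second qubit; commutes with A1

def indicator(A, x):
    """Spectral projector 1_{x}(A) for an observable with A^2 = I and x in {-1, +1}."""
    return (np.eye(A.shape[0]) + x * A) / 2

# Pure state (|00> + |11>) / sqrt(2), written as a density operator.
psi = np.array([1.0, 0.0, 0.0, 1.0]) / np.sqrt(2)
rho = np.outer(psi, psi.conj())

# Joint law of (X_{A1, rho}, X_{A2, rho}) through the product of spectral projectors.
joint = {(x1, x2): np.trace(rho @ indicator(A1, x1) @ indicator(A2, x2)).real
         for x1, x2 in itertools.product([1, -1], repeat=2)}
print(joint)
```

In this state the two measurement outcomes are perfectly correlated: only the outcomes \((1,1)\) and \((-1,-1)\) carry mass, each with probability one half.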

3.2 The canonical anti-commutation relations

The Hamiltonian and its structure are often the key part in specifying a model, much like the factorization of a joint distribution in a probabilistic model. In the case of fermions, a family of physical particles that includes electrons, Hamiltonians are typically built as polynomials of fermionic creation-annihilation operators, i.e., operators that satisfy the so-called canonical anti-commutation relations (CAR).

Definition 3 (CAR). Let \(\mathbb{H}\) be a Hilbert space. The operators \(c_j:\mathbb{H}\rightarrow \mathbb{H}\), \(j=1, \dots, p\) and their adjoints are said to satisfy the canonical anti-commutation relations if \[\begin{align} \label{e:CAR}\lbrace c_i,c_j \rbrace = \lbrace c^*_i,c^*_j \rbrace = 0 \qquad \text{and} \qquad \lbrace c_i,c^{*}_j \rbrace = \delta_{ij} \mathbb{I}, \end{align}\qquad{(4)}\] where \(\{u,v\} \triangleq uv+vu\) is the anti-commutator of operators \(u\) and \(v\).

Assuming existence4 for a moment, and limiting ourselves to a finite dimensional Hilbert space \(\mathbb{H}\) of dimension \(d=2^N\), one can say many things about \(\mathbb{H}\) from the fact that there are \(N\) operators \(c_1, \dots, c_N\) satisfying the CAR of Definition 3. On that topic, we recommend reading [29], from which we borrow the following lemma.

Lemma 2 (Fock basis; see e.g. [29]). Let \(\mathrm{dim} \mathbb{H} = 2^N\), \(N\in\mathbb{N}^*\), and assume that \(c_1, \dots, c_N\) are distinct operators on \(\mathbb{H}\) that satisfy the CAR of Definition 3. First, there is a vector \(\ket{\emptyset}\in\mathbb{H}\), called the vacuum, which is a simultaneous eigenvector of all \(c_i^*c_i\), \(i=1, \dots, N\), always with eigenvalue \(0\). Second, for \(\mathbf{n}=(n_1, \dots, n_N)\in\{0,1\}^N\), consider \[\ket{\mathbf{n}} \triangleq \prod_{i=1}^N (c_i^*)^{n_i} \ket{\emptyset}.\] Then \(\mathcal{B}_{\mathrm{Fock}} = (\ket{\mathbf{n}})\) is an orthonormal basis of \(\mathbb{H}\). Third, for all \(1\leq i \leq N\), \[c_i^*c_i \ket{\mathbf{n}} = n_i \ket{\mathbf{n}}, \label{e:nonzero95terms}\qquad{(5)}\] and, for \(i\neq j\), \[c_i^*c_j \ket{\mathbf{n}} =\pm n_j (1-n_i) \ket{\widetilde{\mathbf{n}}}, \label{e:zero95terms}\qquad{(6)}\] where \(\widetilde{n}_i =1\), \(\widetilde{n}_j =0\), and \(\widetilde{n}_k = n_k\) for \(k\neq i,j\).

The basis built in the lemma is called the Fock basis. Its construction depends on the choice of the operators \(c_1, \dots, c_N\). When there is a risk of confusion, we shall thus further denote \(\ket{\mathbf{n}}\) and \(\ket{\emptyset}\) as \(\ket{\mathbf{n}_c}\) and \(\ket{\emptyset_c}\), respectively. Moreover, because applying \(c_i\) to a vector of the Fock basis has the effect of zeroing the \(i\)th component if it was \(1\), and mapping to zero otherwise, we call the \(c_i\)’s annihilation operators. Similarly, we call their adjoints creation operators.

3.3 Fermionic operators acting on qubits

Henceforth, we let \(\mathbb{H} \triangleq (\mathbb{C}^2)^{\otimes N}\). This is the state space describing \(N\) qubits, of dimension \(2^N\). A qubit corresponds to any physical system, the state of which is described by one out of two levels. We associate these two levels with a distinguished orthonormal basis \((\ket{0}, \ket{1})\) of \(\mathbb{C}^2\), commonly called the computational basis.

Consider the so-called Pauli operators on \(\mathbb{C}^{2}\), given in the computational basis as \[\mathbf{I}= \begin{pmatrix} 1 & 0\\ 0 & 1\end{pmatrix},\quad \sigma_x = \begin{pmatrix} 0 & 1\\ 1 & 0\end{pmatrix}, \quad \sigma_y = \begin{pmatrix} 0 & -\mathrm{i}\\ \mathrm{i}& 0\end{pmatrix}, \quad \sigma_z = \begin{pmatrix} 1 & 0\\ 0 & -1\end{pmatrix}. \label{e:pauli95matrices}\tag{11}\] Note that \(\sigma_x^2 = \sigma_y^2 = \sigma_z^2= \mathbf{I}\), and that \(\sigma_x\sigma_y = \mathrm{i}\sigma_z\).

Definition 4 (The Jordan-Wigner (JW) transformation). Define the JW annihilation operators \(a_j\) on \(\mathbb{H}\), for \(j\in\{1, \dots N\}\), by their matrices in the computational basis \[\label{e:jordan95wigner} \underbrace{\sigma_z \otimes \dots \otimes \sigma_z}_{j-1\text{ times}} \otimes \left(\frac{\sigma_x+\mathrm{i}\sigma_y}{2}\right) \otimes \mathbf{I}\otimes \dots \otimes \mathbf{I}.\qquad{(7)}\] The creation operator \(a_j^*\), adjoint of \(a_j\), has matrix \[\underbrace{\sigma_z \otimes \dots \otimes \sigma_z}_{j-1\text{ times}} \otimes \left(\frac{\sigma_x-\mathrm{i}\sigma_y}{2}\right) \otimes \mathbf{I}\otimes \dots \otimes \mathbf{I}.\]

It is easy to check the following properties of the Jordan-Wigner operators.

Proposition 3. Let \(a_1, \dots, a_N\) be the JW operators from Definition 4. They satisfy the CAR (4). Moreover, the so-called number operators \(N_j= a_j^*a_j\) have the simple matrix form \[\underbrace{\mathbf{I}\otimes \dots \otimes \mathbf{I}}_{j-1\text{ times}} \otimes \frac{\mathbf{I}- \sigma_z}{2} \otimes \mathbf{I}\dots\otimes \mathbf{I}.\] Finally, the Fock basis \(\ket{\mathbf{n}}\) obtained by setting \(c_j = a_j\) in Lemma 2 is actually the computational basis, i.e., \[\ket{\mathbf{n}} = \ket{n_1}\otimes \dots \otimes \ket{n_N}.\]
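The properties in Proposition 3 can be checked numerically for small \(N\). The following is a minimal NumPy sketch; the helper names `jw_annihilation` and `anticommutator` are ours and purely illustrative. It builds the JW matrices of Definition 4 for \(N=3\), tests the anti-commutation relations, compares \(a_2^*a_2\) with its closed form, and builds one Fock vector.

```python
import numpy as np
from functools import reduce

I2 = np.eye(2)
SZ = np.diag([1.0, -1.0])
LOWER = np.array([[0.0, 1.0], [0.0, 0.0]])  # (sigma_x + i sigma_y) / 2


def jw_annihilation(j, n):
    """Matrix of a_j (1-based index j) on n qubits, as in Definition 4."""
    return reduce(np.kron, [SZ] * (j - 1) + [LOWER] + [I2] * (n - j))


def anticommutator(u, v):
    return u @ v + v @ u


n = 3
dim = 2 ** n
a = [jw_annihilation(j, n) for j in range(1, n + 1)]
a_dag = [op.conj().T for op in a]

# Canonical anti-commutation relations for all pairs (i, j).
car_holds = all(
    np.allclose(anticommutator(a[i], a[j]), 0)
    and np.allclose(anticommutator(a[i], a_dag[j]), (i == j) * np.eye(dim))
    for i in range(n)
    for j in range(n)
)

# Number operator N_2 = a_2^* a_2 against its closed form I x (I - sigma_z)/2 x I.
number_2 = a_dag[1] @ a[1]
number_2_closed_form = reduce(np.kron, [I2, (I2 - SZ) / 2, I2])

# Fock vector |1,0,1> = a_1^* a_3^* |vacuum>, with the vacuum being |000>.
vacuum = np.zeros(dim)
vacuum[0] = 1.0
fock_101 = a_dag[0] @ a_dag[2] @ vacuum
```

With the Kronecker ordering used here, the first qubit is the most significant bit, so `fock_101` is the computational basis vector of index `0b101`.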

There are alternative ways to define fermionic operators on \(\mathbb{H}\) using Pauli matrices, like the parity or Bravyi-Kitaev transformations [43]; or the Ball-Verstraete-Cirac transformation [44], [45]. In particular, and at the expense of simplicity, the Bravyi-Kitaev transformation yields operators with smaller weight than Jordan-Wigner: only \(\mathcal{O}(\log N)\) factors differ from the identity in the equivalent of (7).

4 From fermions to Pfaffian point processes

We first show in Section 4.1 how to build any discrete DPP with Hermitian kernel from a mixed state corresponding to a particle-number-preserving Hamiltonian and a set of commuting observables. This connection between DPPs and quasi-free states was observed by [46]. Then, in Section 4.4, we do the same for a class of discrete PfPPs associated to Hamiltonians without particle number conservation.

4.1 Building a DPP from a quantum measurement

Let \(\mathbf{H}\in\mathbb{C}^{N\times N}\) be Hermitian, and consider the operator \[\begin{align} H &= \sum_{i,j=1}^N c_i^*\mathbf{H}_{ij} c_j,\label{eq:quad95Hamiltonian95vanilla} \end{align}\tag{12}\] which we think of as a Hamiltonian acting on \(\mathbb{H}\). This Hamiltonian preserves the particle number, i.e., \(H\) commutes with the number operator \(\sum_i c_i^*c_i\). Let \((\lambda_k, \mathbf{u}_{k})_{1\leq k \leq N}\) be the eigenpairs of \(\mathbf{H}\), where the eigenvalues satisfy \(\lambda_1 \leq \dots \leq \lambda_N\). The Hamiltonian (12) can thus be rewritten in “diagonalized” form \[\begin{align} H = \sum_{i,j=1}^N c_i^*\left( \sum_{k=1}^N \lambda_k \mathbf{u}_{k} \mathbf{u}_k^*\right)_{ij} c_j= \sum_{i,j,k=1}^N \lambda_k c_i^*\mathbf{u}_{ki} \mathbf{u}_{kj}^* c_j= \sum_{k=1}^N \lambda_k b_k^*b_k, \end{align}\] where we defined the operators \[\begin{align} b_k = \sum_{j=1}^N \mathbf{u}_{kj}^* c_j, \label{e:new95annihilation95operators} \end{align}\tag{13}\] which can be checked to satisfy the CAR (4).

In what follows, we consider in Section 4.2 DPPs for which the correlation kernel has a spectrum in \([0,1)\), referred to as L-ensembles in the literature. Next, in Section 4.3, we discuss projection DPPs.

4.2 The case of L-ensembles

Below, Proposition 4 states that there is a natural DPP associated with a Hamiltonian like (12), whose marginal kernel \(\mathbf{K}\) is obtained by applying a sigmoid to the spectrum of \(\mathbf{H}\); formally \(\mathbf{K} = \sigma(-\beta \mathbf{H})\), where \(\beta >0\) is a parameter called the inverse temperature.

Proposition 4 (DPP kernel by taking the sigmoid). Let \(\beta>0\) and \(\mu \geq 0\), \(H\) and \((b_k)\) be respectively defined by 12 and 13 . Consider the mixed state \[\begin{align} \label{e:gaussian} \rho =\frac{1}{Z} \mathrm{e}^{ -\beta \left( H - \mu \sum_{j=1}^N b_j^*b_j \right) }, \end{align}\qquad{(8)}\] where the normalization constant \(Z\) ensures that \(\tr \rho = 1\). For \(i\in[N]\), the observable \(N_i=c_i^*c_i\) is a projector, so that the random variable \(X_{N_i,\rho}\) associated to \(N_i\) and the state ?? by 8 takes values in \(\{0,1\}\). Moreover, all \(N_i\)’s commute pairwise, and thus define a joint Boolean vector \((X_{N_i,\rho})_{i\in[N]}\) through 10 . Consider the point process \[S = \{i\in[N]: X_{N_i,\rho}=1\},\] corresponding to jointly observing all operators \(N_i\) in the state ?? , and reporting the indices corresponding to a \(1\). Then \(S\) is determinantal with correlation kernel \[\mathbf{K}= \mathbf{U}\, \mathrm{Diag}\left( \frac{\mathrm{e}^{-\beta (\lambda_k-\mu)}}{1 + \mathrm{e}^{-\beta (\lambda_k-\mu)}}\right)\, \mathbf{U}^*,\] where \(\mathbf{U}\) and \((\lambda_k)_k\) are obtained by the diagonalization \(\mathbf{H}= \mathbf{U}\, \mathrm{Diag}\left( \lambda_k\right) \mathbf{U}^*\).

Proof. All number operators \(N_i = c_i^*c_i\) commute pairwise and have spectrum in \(\{0,1\}\); see Lemma 2. Consequently, their joint measurement indeed describes a random binary vector \(X=(X_{N_i, \rho})\). By Born’s rule (10), the correlation functions of the point process corresponding to the indices of the \(1\)s in \(X\) are given by \[\require{physics} \mathbb{P} \left ( \{i_1, \dots, i_k\}\subseteq X \right ) = \tr \left(\rho N_{i_1}\dots N_{i_k}\right) \triangleq \expval{N_{i_1}\dots N_{i_k}}_\rho.\] Because of the anti-commutation relations (4), an explicit computation known as Wick’s theorem implies that for any \(i_1,\dots,i_k\in [N]\), \[\require{physics} \label{e:wick} \expval{N_{i_1}\dots N_{i_k}}_\rho = \det \left(\expval{c_{i_m}^*c_{i_n}}_\rho\right)_{m,n}.\tag{14}\] Wick’s theorem is a standard result in quantum physics; see e.g. [3] for a rewriting with our notations of one of the canonical references [47].

Now, Equation 14 implies that the point process \(X\), consisting of simultaneously measuring the occupation of all qubits using \((N_i)\), is determinantal with correlation kernel \[\require{physics} \begin{align} \mathbf{K}_{ij} &= \expval{c_{i}^*c_{j}}_\rho. \label{e:kernel95intermediate} \end{align}\tag{15}\] In order to provide an explicit computation of \(\mathbf{K}_{ij}\), we use 2 with the operators \(b_1, \dots, b_N\), to obtain a basis \((\ket{\mathbf{n}}) = (\ket{\mathbf{n}}_b)\) of eigenvectors of all operators of the form \(b_i^*b_i\), \(i=1, \dots, N\). We now proceed to computing the trace in 15 by summing over that basis. We write \[\require{physics} \begin{align} \mathbf{K}_{ij} &= \tr \left[\rho c_{i}^*c_{j}\right] \\ &= \tr \left[\rho c_{i}^*c_{j} \sum_{\mathbf{n}} \ketbra{\mathbf{n}}\right]\\ & = Z^{-1} \sum_{\mathbf{n}} \expval{\mathrm{e}^{-\beta \sum_{p} (\lambda_p-\mu) b_p^*b_p} c_i^*c_j}{\mathbf{n}}. \end{align}\] Now note that \(c_j = \sum_{\ell=1}^N \mathbf{u}_{j\ell}b_\ell\), so that \(c_i^*c_j = \sum_{k,\ell=1}^N \mathbf{u}^*_{ik} \mathbf{u}_{j\ell} b_k^*b_\ell\), and \[\require{physics} \begin{align} \mathbf{K}_{ij} &= Z^{-1}\sum_{k,\ell=1}^N \mathbf{u}^*_{ik} \mathbf{u}_{j\ell} \sum_{\mathbf{n}} \expval{\mathrm{e}^{-\beta \sum_{p} (\lambda_p-\mu) b_p^*b_p} b_k^*b_\ell}{\mathbf{n}}. \end{align}\] Because of ?? and the orthonormality of the basis \((\ket{\mathbf{n}})\), for \(k\neq \ell\), \[\require{physics} \expval{\mathrm{e}^{-\beta \sum_{p} (\lambda_p-\mu) b_p^*b_p} b_k^*b_\ell}{\mathbf{n}}=0.\] Therefore, \[\require{physics} \begin{align} \mathbf{K}_{ij} &= Z^{-1}\sum_{k=1}^N \mathbf{u}^*_{ik} \mathbf{u}_{jk} \sum_{\mathbf{n}} \expval{\mathrm{e}^{-\beta \sum_{p} (\lambda_p-\mu) b_p^*b_p} b_k^*b_k}{\mathbf{n}} \tag{16}\\ &= Z^{-1}\sum_{k=1}^N \mathbf{u}^*_{ik} \mathbf{u}_{jk} \sum_{\mathbf{n}} n_k \mathrm{e}^{-\beta \sum_p (\lambda_p-\mu)n_p}. 
\tag{17} \end{align}\] Now, we pause and compute the normalization constant \(Z\) of \(\rho\). Starting from \(\tr\rho=1\), we write \[\require{physics} \begin{align} Z = \tr \mathrm{e}^{-\beta \sum_{p} (\lambda_p-\mu) b_p^*b_p} = \sum_{\mathbf{n}} \expval{\mathrm{e}^{-\beta \sum_{p} (\lambda_p-\mu) b_p^*b_p}}{\mathbf{n}} = \sum_{\mathbf{n}} \mathrm{e}^{-\beta \sum_p (\lambda_p-\mu)n_p}. \end{align}\] Rewriting the sum as a product, we obtain \[\begin{align} Z &= \prod_{p=1}^N \sum_{n_p \in \{0,1\}} \mathrm{e}^{-\beta (\lambda_p-\mu)n_p} = \prod_{p=1}^N (1+ \mathrm{e}^{-\beta (\lambda_p-\mu)}). \label{e:constant95intermediate} \end{align}\tag{18}\] Now, explicitly writing the dependence of \(Z=Z(\boldsymbol{\lambda})\) to \(\boldsymbol{\lambda}= (\lambda_1, \dots, \lambda_N)\), Equations 17 and 18 together yield \[\begin{align} \mathbf{K}_{ij} &= \sum_{k=1}^N \mathbf{u}^*_{ik} \mathbf{u}_{jk} \frac{\sum_{\mathbf{n}} n_k \mathrm{e}^{-\beta \sum_p (\lambda_p-\mu)n_p}}{\sum_{\mathbf{n}} \mathrm{e}^{-\beta \sum_p (\lambda_p-\mu)n_p}}\\ &= \sum_{k=1}^N \mathbf{u}^*_{ik} \mathbf{u}_{jk} \left[-\frac{1}{\beta}\frac{\mathrm{d}}{\mathrm{d}\lambda_k} \log Z(\boldsymbol{\lambda})\right]\\ &= \sum_{k=1}^N \mathbf{u}^*_{ik} \mathbf{u}_{jk} \frac{\mathrm{e}^{-\beta (\lambda_k-\mu)}}{1 + \mathrm{e}^{-\beta (\lambda_k-\mu)}}, \label{e:kernel95final} \end{align}\tag{19}\] for any \(1\leq i,j \leq N\). Thus, we find \(\mathbf{K}= \overline{\mathbf{U}}\, \mathrm{Diag}\left( \frac{\mathrm{e}^{-\beta (\lambda_k-\mu)}}{1 + \mathrm{e}^{-\beta (\lambda_k-\mu)}}\right)\, \overline{\mathbf{U}}^*\). Since \(\overline{\mathbf{K}}\) and \(\mathbf{K}\) define the same DPP, we have the desired result. ◻
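The computation in the proof can be reproduced numerically for small \(N\). The sketch below is only an illustration under our own choices: it takes \(c_j\) to be the JW operators of Definition 4, draws a random Hermitian \(\mathbf{H}\), and uses hypothetical helper names (`jw_annihilation`, `gibbs_state`). It compares \(\tr[\rho\, c_i^*c_j]\) with the entries of \(\overline{\mathbf{K}}\), and a two-point inclusion probability with the corresponding \(2\times 2\) determinant.

```python
import numpy as np
from functools import reduce

I2, SZ = np.eye(2), np.diag([1.0, -1.0])
LOWER = np.array([[0.0, 1.0], [0.0, 0.0]])  # (sigma_x + i sigma_y) / 2


def jw_annihilation(j, n):
    """Jordan-Wigner annihilation operator a_j (1-based j) on n qubits."""
    return reduce(np.kron, [SZ] * (j - 1) + [LOWER] + [I2] * (n - j))


def gibbs_state(op, beta):
    """Z^{-1} exp(-beta * op) for a Hermitian matrix op, via eigendecomposition."""
    w, v = np.linalg.eigh(op)
    weights = np.exp(-beta * (w - w.min()))  # shift the spectrum for stability
    return (v * (weights / weights.sum())) @ v.conj().T


rng = np.random.default_rng(0)
n, beta, mu = 3, 0.7, 0.2
g = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))
H = (g + g.conj().T) / 2  # random Hermitian matrix, an arbitrary test case

c = [jw_annihilation(j, n) for j in range(1, n + 1)]
cd = [op.conj().T for op in c]
total_number = sum(cd[i] @ c[i] for i in range(n))
H_op = sum(H[i, j] * cd[i] @ c[j] for i in range(n) for j in range(n))
rho = gibbs_state(H_op - mu * total_number, beta)

# Closed-form kernel K = U Diag(sigmoid(-beta (lambda_k - mu))) U^*.
lam, U = np.linalg.eigh(H)
K = (U / (1.0 + np.exp(beta * (lam - mu)))) @ U.conj().T

# Two-point functions <c_i^* c_j> and one inclusion probability.
two_point = np.array(
    [[np.trace(rho @ cd[i] @ c[j]) for j in range(n)] for i in range(n)]
)
pair_probability = np.trace(rho @ cd[0] @ c[0] @ cd[1] @ c[1]).real
pair_determinant = np.linalg.det(K[np.ix_([0, 1], [0, 1])]).real
```

In this sketch `two_point` agrees with `K.conj()` rather than `K`, in line with the last step of the proof, and both kernels give the same principal minors.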

Corollary 1 (Hamiltonian by taking the logit). Let \(\mathbf{K}= \mathbf{V} \mathbf{D} \mathbf{V}^*\) be a Hermitian DPP kernel with \(0\prec \mathbf{D}\prec \mathbf{I}\). Then Proposition 4 yields a DPP with kernel \(\mathbf{K}\) provided we choose \(\mathbf{V} = \mathbf{U}\) and the eigenvalues \(\boldsymbol{\lambda}\) so that \[\beta(\lambda_i-\mu) = \log \frac{1-d_i}{d_i}.\] Furthermore, assuming \(d_1 \geq \dots \geq d_N \geq 0\), i.e., \(\lambda_1 \leq \dots \leq \lambda_N\), and \(\lambda_{r} < \mu < \lambda_{r+1}\), \(\mathbf{K}\) converges to the projection kernel onto the first \(r\) columns of \(\mathbf{V}\) as \(\beta\rightarrow\infty\) with \(\boldsymbol{\lambda}\) and \(\mu\) fixed.

Proof. The first part is a consequence of (16). The second statement is maybe easiest to see in terms of the Frobenius norm, to wit \(\Vert \mathbf{A}\Vert^2 = \sum_{i=1}^N \sigma_i^2(\mathbf{A})\), where \(\sigma_i(\mathbf{A})\) are the singular values of \(\mathbf{A}\). If \(\mathbf{P}\) denotes the projector onto the span of the first \(r\) columns of \(\mathbf{V}\), then the absolute values of the eigenvalues of the Hermitian matrix \(\mathbf{K}-\mathbf{P}\) are its singular values, and all of them converge to \(0\). ◻
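As an illustration of the logit and sigmoid maps, here is a small NumPy sketch. The function names and the numerical values are our own assumptions. It recovers a matrix \(\mathbf{H}\) from a kernel \(\mathbf{K}\) with spectrum in \((0,1)\), maps it back, and then tracks the Frobenius distance to the rank-\(r\) projector as \(\beta\) grows, with fixed eigenvalues satisfying \(\lambda_r<\mu<\lambda_{r+1}\) as in Section 4.3.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def kernel_from_hamiltonian(H, beta, mu):
    """K = U Diag(sigmoid(-beta (lambda_k - mu))) U^*."""
    lam, U = np.linalg.eigh(H)
    return (U * sigmoid(-beta * (lam - mu))) @ U.conj().T


def hamiltonian_from_kernel(K, beta, mu):
    """Invert the sigmoid: beta (lambda_i - mu) = log((1 - d_i) / d_i)."""
    d, V = np.linalg.eigh(K)
    lam = mu + np.log((1.0 - d) / d) / beta
    return (V * lam) @ V.conj().T


rng = np.random.default_rng(1)
n, r = 4, 2
V, _ = np.linalg.qr(rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n)))

# Round trip for a kernel with eigenvalues strictly between 0 and 1.
d = np.array([0.9, 0.6, 0.3, 0.1])
K = (V * d) @ V.conj().T
H = hamiltonian_from_kernel(K, beta=2.0, mu=0.5)
K_back = kernel_from_hamiltonian(H, beta=2.0, mu=0.5)

# Low-temperature limit with fixed eigenvalues and lambda_2 < mu = 0 < lambda_3.
lam = np.array([-1.0, -0.5, 0.5, 1.0])
H_fixed = (V * lam) @ V.conj().T
projector = V[:, :r] @ V[:, :r].conj().T
errors = [
    np.linalg.norm(kernel_from_hamiltonian(H_fixed, beta, 0.0) - projector)
    for beta in (1.0, 10.0, 60.0)
]
```

The list `errors` contains Frobenius norms of \(\mathbf{K}-\mathbf{P}\), which decrease as \(\beta\) increases.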

4.3 The case of projection DPPs

With the notation of Section 4.2, we note that \((\ket{\mathbf{n}}) = (\ket{\mathbf{n}}_b)\) is a basis of eigenvectors of \(\rho\), and that \[\rho\ket{\mathbf{n}} = \frac{\mathrm{e}^{-\beta \sum_p (\lambda_p - \mu) n_p}}{\sum_{\mathbf{n}'} \mathrm{e}^{-\beta \sum_p (\lambda_p - \mu)n'_p}} \ket{\mathbf{n}}.\] In particular, the only eigenvalue that does not vanish when \(\beta\rightarrow \infty\) is that of \(\ket{1,\dots, 1, 0, \dots, 0}\), where the \(1\)s occupy the first \(r\) components, and \(r\in\{1, \dots, N\}\) is such that \(\lambda_{r} < \mu < \lambda_{r+1}\). Indeed, the ratio of the eigenvalue of \(\ket{1,\dots, 1, 0, \dots, 0}\) with that of any other eigenvector diverges to \(+\infty\), while all eigenvalues remain in \([0,1]\) and the trace is fixed to \(1\). Thus, denoting \(\ket{\psi} = \ket{1,\dots, 1, 0, \dots, 0}\), \[\rho \rightarrow \ketbra{\psi}\] in Frobenius norm as \(\beta\rightarrow \infty\). In particular, \[\tr N_{i_1} \dots N_{i_k} \rho \rightarrow \tr N_{i_1} \dots N_{i_k} \ketbra{\psi}\] as \(\beta\rightarrow \infty\). Combined with the second statement of Corollary 1, we know that the point process corresponding to preparing \(\ketbra{\psi}\), measuring the occupation of all qubits, and recording where the \(1\)s occur is the projection DPP with kernel \(\mathbf{P} = \mathbf{V}_{:,[r]} \mathbf{V}^*_{:,[r]}\). Recall that \(\mathbf{V}_{:,[r]}\) is the matrix obtained by selecting the first \(r\) columns of \(\mathbf{V}\).

Remark 5 (Beyond Gaussian states). We have shown that the kernels of projection DPPs are obtained as limits of kernels associated with Gaussian states (8). Actually, a DPP kernel can be associated with a density matrix of a quasi-free state generalizing the Gaussian state, for which Wick’s theorem also holds; see e.g. [26]. In particular, every quasi-free state is a convex combination of pure states. We now give a few details, following [48]. Let \(\mathcal{C} \subseteq [N]\) and let \(\ket{\psi_\mathcal{C}} = \prod_{i\in \mathcal{C}} c_i^*\ket{\emptyset}\). Let \(\mathbf{K}\) be a Hermitian matrix with eigenvalues \((\nu_p)\) in \([0,1]\). The density matrix associated with \(\mathbf{K}\) is the quasi-free state \[\rho = \sum_{\mathcal{C}\subseteq [N]} \alpha_\mathcal{C} \ketbra{\psi_\mathcal{C}} \text{ with } \alpha_\mathcal{C} = \prod_{p\in \mathcal{C}}\nu_p \prod_{q\in [N]\setminus\mathcal{C}}(1-\nu_q).\] Furthermore, in Appendix 8.7, we establish for the interested reader the determinantal formula for \(\require{physics} \expval{N_{i_1}\dots N_{i_k}}_\rho\) for a projective \(\rho\) without resorting to Wick’s theorem as in the proof of Proposition 4.

4.4 Building a PfPP from the BdG Hamiltonian

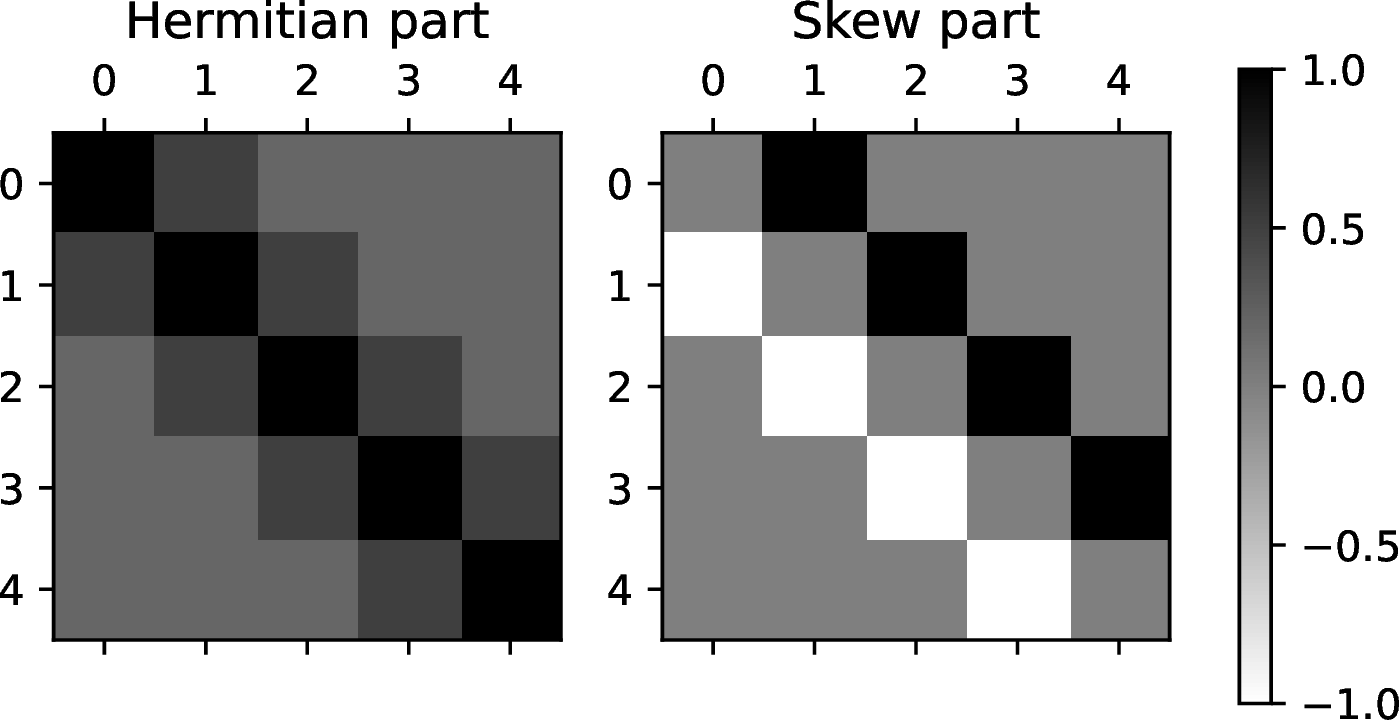

We shall see that Pfaffian point processes appear using slightly more sophisticated Hamiltonians. Unlike Hamiltonian (12), the Hermitian operator \[\begin{align} H = \frac{1}{2}\sum_{i,j=1}^N \mathbf{M}_{ij} (c_i^*c_j - c_j c_i^*) + \frac{1}{2}\sum_{i,j=1}^N \left( \boldsymbol{\Delta}_{ij} c_i^*c_j^*+ \boldsymbol{\Delta}^*_{ij} c_i c_j\right), \label{e:H95general} \end{align}\tag{20}\] with complex matrices \(\mathbf{M} = \mathbf{M}^*\) and \(\boldsymbol{\Delta}\), does not commute with the total number operator \(N = \sum_i c_i^* c_i\). Physicists say that \(H\) does not “preserve” the total number of particles.

Note that, due to the anti-commutation relation \(c_i^*c_j^*= - c_j^*c_i^*\), we can simply redefine \(\boldsymbol{\Delta}\) so that \(\boldsymbol{\Delta} = - \boldsymbol{\Delta}^\top\). The Hamiltonian 20 becomes the so-called Bogoliubov-de Gennes (BdG) Hamiltonian \[\begin{align} H_{\mathrm{BdG}} = \frac{1}{2}\sum_{i,j=1}^N \mathbf{M}_{ij} (c_i^*c_j - c_j c_i^*) + \frac{1}{2}\sum_{i,j=1}^N \left( \boldsymbol{\Delta}_{ij} c_i^*c_j^*- \overline{\boldsymbol{\Delta}_{ij}} c_i c_j\right), \label{e:H95BdG} \end{align}\tag{21}\] which is a model for superconductors; see e.g.[49] for a modern overview.

Remark 6 (Fermion parity). The Hamiltonian \(H_{\mathrm{BdG}}\) commutes with the parity operator \((-1)^{\sum_{i=1}^N c_i^* c_i}\); see e.g.[50]. Therefore, the eigenfunctions of \(H_{\mathrm{BdG}}\) are also eigenfunctions of the parity operator.

We now investigate the point process associated to the occupation numbers of the Gaussian state \(Z^{-1} \exp(-\beta H_{\mathrm{BdG}}),\) for \(\beta >0\), as we did for ?? . It is convenient to stack the operators \(c_1, \dots, c_N\) and their adjoints in column vectors, and write \(\mathbf{c} = [c_1 \dots c_N ]^\top\) and \(\mathbf{c}^*= [c_1^*\dots c_N^*]^\top\). We can then rewrite the Hamiltonian more compactly as \[\begin{align} H_{\mathrm{BdG}} = \frac{1}{2}\Big(\begin{smallmatrix} \mathbf{c}^*\\ \mathbf{c} \end{smallmatrix}\Big)^*\mathbf{H}_{\mathrm{BdG}} \Big(\begin{smallmatrix} \mathbf{c}^*\\ \mathbf{c} \end{smallmatrix}\Big) \text{ with } \mathbf{H}_{\mathrm{BdG}} = \begin{pmatrix} -\overline{\mathbf{M}} & -\overline{\boldsymbol{\Delta}}\\ \boldsymbol{\Delta} & \mathbf{M} \end{pmatrix}.\label{eq:H95matrix} \end{align}\tag{22}\] By construction, the matrix \(\mathbf{H}_{\mathrm{BdG}}\) obeys the particle-hole symmetry, namely \(\mathbf{C}\overline{\mathbf{H}}_{\mathrm{BdG}}\mathbf{C}= -\mathbf{H}_{\mathrm{BdG}}\) where we recall the definition of the involution \(\mathbf{C}= \big(\begin{smallmatrix} \boldsymbol{0} &\mathbf{I}\\ \mathbf{I}& \boldsymbol{0} \end{smallmatrix}\big)\). The name particle-hole symmetry comes from the fact that \[\mathbf{C}\Big(\begin{smallmatrix} \mathbf{c}^*\\ \mathbf{c} \end{smallmatrix}\Big) = \Big(\begin{smallmatrix} \mathbf{c}\\ \mathbf{c}^* \end{smallmatrix}\Big)\] flips the role of creation and annihilation operators.
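The particle-hole symmetry is easy to check numerically. The sketch below assumes a random Hermitian \(\mathbf{M}\) and a random skew-symmetric \(\boldsymbol{\Delta}\) as test inputs, and the helper name `bdg_matrix` is ours. It assembles \(\mathbf{H}_{\mathrm{BdG}}\) as in (22) and computes the quantities entering the symmetry relation, as well as the spectrum.

```python
import numpy as np


def bdg_matrix(M, Delta):
    """Block matrix H_BdG of (22) for Hermitian M and skew-symmetric Delta."""
    return np.block([[-M.conj(), -Delta.conj()], [Delta, M]])


rng = np.random.default_rng(2)
n = 3
g = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))
M = (g + g.conj().T) / 2   # Hermitian part
h = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))
Delta = (h - h.T) / 2      # skew-symmetric: Delta^T = -Delta

H_bdg = bdg_matrix(M, Delta)

# Involution C exchanging the two blocks of the stacked vector (c^*, c).
zero, eye = np.zeros((n, n)), np.eye(n)
C = np.block([[zero, eye], [eye, zero]])

particle_hole_lhs = C @ H_bdg.conj() @ C
spectrum = np.linalg.eigvalsh(H_bdg)  # sorted in increasing order
```

A consequence visible in `spectrum` is that eigenvalues come in pairs \(\pm\epsilon_k\), which is the structure used in the decomposition (24).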

Before going further, we introduce a group of transformations preserving the CAR (4) that are used to diagonalize the Bogoliubov-de Gennes Hamiltonian; see [51].

Definition 5 (orthogonal complex matrix). A complex invertible \(2N\times 2N\) matrix \(\mathbf{W}\) satisfying \(\mathbf{W}^\top \mathbf{C}\mathbf{W} = \mathbf{C}\) is called an orthogonal complex matrix. The group of orthogonal complex matrices is denoted by \(O(\mathbf{C},\mathbb{C})\).

For any orthogonal complex matrix \(\mathbf{W}\) and any \(N\) operators \(c_1, \dots, c_N\) satisfying the CAR (4), another set of creation-annihilation operators satisfying the CAR (4) is given by the so-called Bogoliubov transformation \[\begin{align} \Big(\begin{smallmatrix} \boldsymbol{b}^*\\ \boldsymbol{b} \end{smallmatrix}\Big) = \mathbf{W} \Big(\begin{smallmatrix} \mathbf{c}^*\\ \mathbf{c} \end{smallmatrix}\Big),\label{e:Bog95transform} \end{align}\tag{23}\] and by further requiring that \(\mathbf{W}\) is unitary, \(b_k^*\) is the adjoint of \(b_k\) for all \(k \in [N]\). Hence, in what follows, we only consider transformations \(\mathbf{W}\in O(\mathbf{C},\mathbb{C})\cap U(2N)\).

Lemma 3. A unitary orthogonal complex matrix is of the form \(\mathbf{W} = \big(\begin{smallmatrix} \mathbf{U} & \mathbf{V} \\ \overline{\mathbf{V}} & \overline{\mathbf{U}} \end{smallmatrix}\big),\) with \(\mathbf{U} \mathbf{U}^*+ \mathbf{V} \mathbf{V}^*= \mathbf{I}\) and \(\mathbf{U} \mathbf{V}^\top + \mathbf{V} \mathbf{U}^\top = \boldsymbol{0}\). Furthermore, \(\det \mathbf{W} \in \{-1,1\}\).

Next, following [22], we diagonalize (21) by a convenient change of variables, which consists in finding a suitable Bogoliubov transformation. The upshot is that we have the decomposition \[\mathbf{H}_{\mathrm{BdG}} = \mathbf{W}^* \begin{pmatrix} \mathrm{Diag}(-\epsilon_k) & 0\\ 0 & \mathrm{Diag}(\epsilon_k) \end{pmatrix} \mathbf{W},\label{e:eigen95decomp95HBDG}\tag{24}\] as shown in Lemma 4 below.

Lemma 4 (Diagonalization of BdG Hamiltonian). Let \(\boldsymbol{\Omega} = \frac{1}{\sqrt{2}} \Big(\begin{smallmatrix} \mathbf{I}& \mathbf{I}\\ \mathrm{i}\mathbf{I}& -\mathrm{i}\mathbf{I} \end{smallmatrix}\Big)\) and define \(\mathbf{A} = -\mathrm{i}\overline{\boldsymbol{\Omega}} \Big(\begin{smallmatrix} \boldsymbol{\Delta} & \mathbf{M}\\ -\overline{\mathbf{M}} & -\overline{\boldsymbol{\Delta}} \end{smallmatrix}\Big) \boldsymbol{\Omega}^*\). The following statements hold.

(i) \(H_{\mathrm{BdG}} = \frac{1}{2} \mathrm{i}\boldsymbol{f}^\top \mathbf{A} \boldsymbol{f}\) where \(\boldsymbol{f} = \boldsymbol{\Omega} \Big(\begin{smallmatrix} \mathbf{c}^*\\ \mathbf{c} \end{smallmatrix}\Big)\) and \(\mathbf{A}\) is real skew-symmetric.

(ii) There exists a real orthogonal matrix \(\boldsymbol{R}\) and a real vector \(\boldsymbol{\epsilon} = [\epsilon_1, \dots, \epsilon_N]^\top\) such that \(0\leq \epsilon_1\leq \dots\leq \epsilon_N\) and \(\boldsymbol{R}\mathbf{A}\boldsymbol{R}^\top = \Big(\begin{smallmatrix} 0 & \mathrm{Diag}(\epsilon_k)\\ -\mathrm{Diag}(\epsilon_k) & 0 \end{smallmatrix}\Big).\)

(iii) Another set of creation-annihilation operators satisfying the CAR (4) is given by \(\Big(\begin{smallmatrix} \boldsymbol{b}^*\\ \boldsymbol{b} \end{smallmatrix}\Big) = \mathbf{W} \Big(\begin{smallmatrix} \mathbf{c}^*\\ \mathbf{c} \end{smallmatrix}\Big)\), where \(\mathbf{W} = \boldsymbol{\Omega}^*\boldsymbol{R} \boldsymbol{\Omega} \in O(\mathbf{C},\mathbb{C}) \cap U(2N)\).

(iv) We have the diagonalization \(H_{\mathrm{BdG}} = \frac{1}{2} \Big(\begin{smallmatrix} \mathbf{b}^*\\ \mathbf{b} \end{smallmatrix}\Big)^\top \Big(\begin{smallmatrix} \mathbf{0} & \mathrm{Diag}(\epsilon_k)\\ -\mathrm{Diag}(\epsilon_k) & \mathbf{0} \end{smallmatrix}\Big) \Big(\begin{smallmatrix} \mathbf{b}^*\\ \mathbf{b} \end{smallmatrix}\Big).\)
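The first two statements of Lemma 4 can be sanity-checked numerically. In the sketch below, the random \(\mathbf{M}\) and \(\boldsymbol{\Delta}\) are arbitrary test inputs of ours. We form \(\mathbf{A}\) from its definition, and compare the imaginary parts of its eigenvalues with the spectrum of \(\mathbf{H}_{\mathrm{BdG}}\) from (22).

```python
import numpy as np

rng = np.random.default_rng(4)
n = 3
g = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))
M = (g + g.conj().T) / 2   # Hermitian
h = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))
Delta = (h - h.T) / 2      # skew-symmetric

eye = np.eye(n)
Omega = np.block([[eye, eye], [1j * eye, -1j * eye]]) / np.sqrt(2)

# A = -i conj(Omega) [[Delta, M], [-conj(M), -conj(Delta)]] Omega^*.
middle = np.block([[Delta, M], [-M.conj(), -Delta.conj()]])
A = -1j * Omega.conj() @ middle @ Omega.conj().T

# The eigenvalues of a real skew-symmetric matrix are purely imaginary, +/- i eps_k.
imaginary_parts = np.sort(np.linalg.eigvals(A.real).imag)

# Spectrum of the Hermitian matrix H_BdG of (22), for comparison.
H_bdg = np.block([[-M.conj(), -Delta.conj()], [Delta, M]])
bdg_spectrum = np.linalg.eigvalsh(H_bdg)
```

In this test case, `A` has a negligible imaginary part and is skew-symmetric, and `imaginary_parts` matches `bdg_spectrum`, consistently with the decomposition (24).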

We refer to [22] for a proof sketch.

Now, we leverage these results to compute the expectation value of bilinears under \(\rho_{\mathrm{BdG}}\). Lemma 5 states a result analogous to Proposition 4, and its proof, given in Appendix 8.2, relies on similar techniques such as Wick’s theorem.

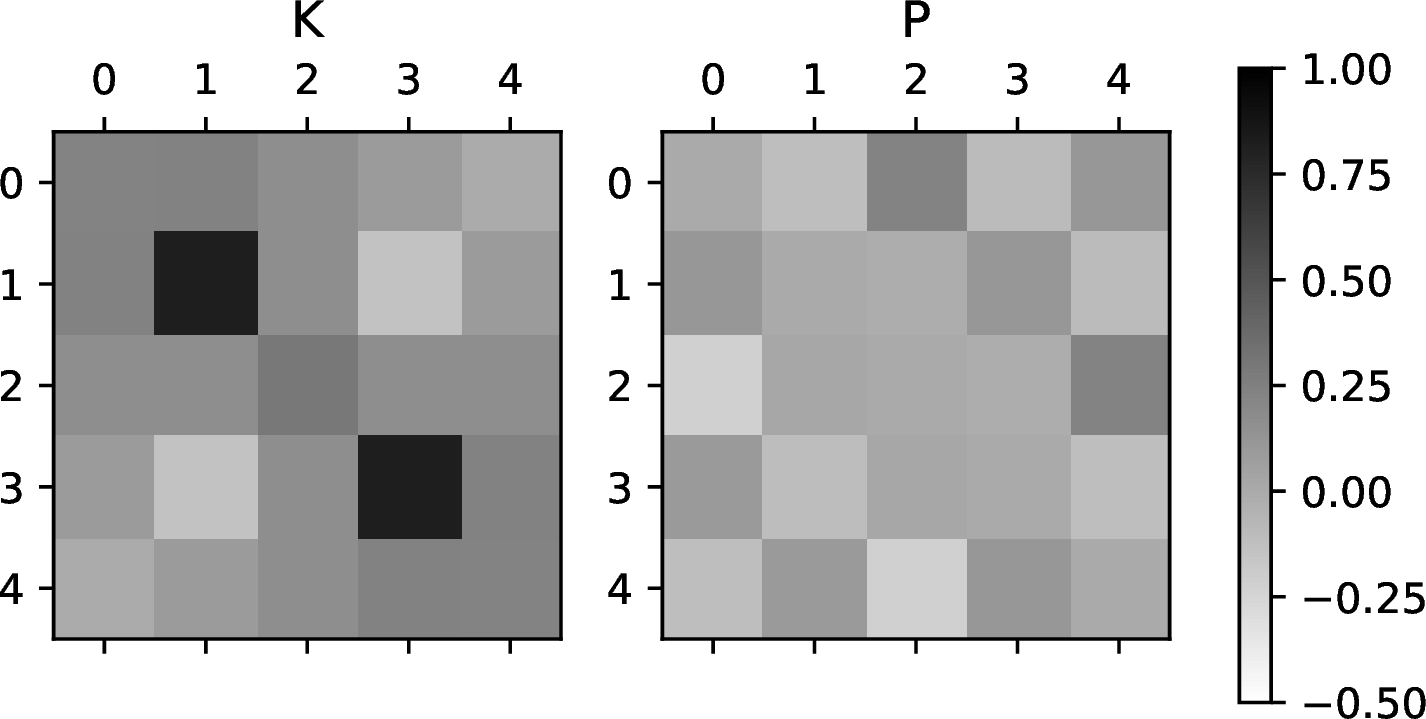

Lemma 5. Let \(\boldsymbol{\epsilon} = [\epsilon_1, \dots, \epsilon_N]^\top\) be the eigenvalues of \(H_{\mathrm{BdG}}\) such that \(0\leq \epsilon_1\leq \dots\leq \epsilon_N\) as given by Lemma 4 and let \(\rho = \frac{1}{Z} \exp( -\beta H_{\mathrm{BdG}}).\) We have \[\begin{align} \mathbf{S} \triangleq \begin{pmatrix} (\left\langle c_i c^*_j \right\rangle_{\rho})_{i,j} &(\left\langle c_i c_j\right\rangle_{\rho})_{i,j} \\ (\left\langle c^*_i c^*_j\right\rangle_{\rho})_{i,j} & (\left\langle c^*_i c_j\right\rangle_{\rho})_{i,j} \end{pmatrix} = \overline{\mathbf{W}}^{*} \begin{pmatrix} \mathrm{Diag}(\sigma(\beta \epsilon_k))& \mathbf{0}\\ \mathbf{0} & \mathrm{Diag}(\sigma(-\beta \epsilon_k)) \end{pmatrix} \overline{\mathbf{W}}, \end{align}\] where \(\sigma(x)\) is the sigmoid function; see Section 1. Furthermore, we have that \(\mathbf{S}= \sigma(-\beta \overline{\mathbf{H}_{\mathrm{BdG}}})\) and \(\mathbf{S}\) satisfies \(0\preceq \mathbf{S} \preceq \mathbf{I}\), as well as \(\mathbf{C}\overline{\mathbf{S}}\mathbf{C} = \mathbf{I}- \mathbf{S}\).
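The identity \(\mathbf{S}= \sigma(-\beta \overline{\mathbf{H}_{\mathrm{BdG}}})\) can be tested by brute force on a few qubits. The following sketch is only illustrative: it assumes that the \(c_j\) are the JW operators, uses random \(\mathbf{M}\) and \(\boldsymbol{\Delta}\), and relies on helper names of our own (`gibbs_state`, `matrix_sigmoid`). The operator \(H_{\mathrm{BdG}}\) is built from the compact form (22).

```python
import numpy as np
from functools import reduce

I2, SZ = np.eye(2), np.diag([1.0, -1.0])
LOWER = np.array([[0.0, 1.0], [0.0, 0.0]])  # (sigma_x + i sigma_y) / 2


def jw_annihilation(j, n):
    return reduce(np.kron, [SZ] * (j - 1) + [LOWER] + [I2] * (n - j))


def gibbs_state(op, beta):
    """Z^{-1} exp(-beta * op) for a Hermitian matrix op."""
    w, v = np.linalg.eigh(op)
    weights = np.exp(-beta * (w - w.min()))
    return (v * (weights / weights.sum())) @ v.conj().T


def matrix_sigmoid(X):
    """Sigmoid applied to the spectrum of a Hermitian matrix X."""
    w, v = np.linalg.eigh(X)
    return (v / (1.0 + np.exp(-w))) @ v.conj().T


rng = np.random.default_rng(3)
n, beta = 3, 0.8
g = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))
M = (g + g.conj().T) / 2
h = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))
Delta = (h - h.T) / 2
H_bdg = np.block([[-M.conj(), -Delta.conj()], [Delta, M]])

# Stacked vector (c^*, c) and the operator H = (1/2) (c^*, c)^* H_bdg (c^*, c).
c = [jw_annihilation(j, n) for j in range(1, n + 1)]
stacked = [op.conj().T for op in c] + c
stacked_dag = [op.conj().T for op in stacked]
H_op = 0.5 * sum(
    H_bdg[a, b] * stacked_dag[a] @ stacked[b]
    for a in range(2 * n)
    for b in range(2 * n)
)
rho = gibbs_state(H_op, beta)

# Entry (a, b) of S is the expectation of stacked_dag[a] @ stacked[b].
S = np.array(
    [[np.trace(rho @ stacked_dag[a] @ stacked[b]) for b in range(2 * n)]
     for a in range(2 * n)]
)
S_closed_form = matrix_sigmoid(-beta * H_bdg.conj())
```

The top-left block of `S` collects \(\langle c_i c_j^*\rangle_\rho\) and the bottom-right block \(\langle c_i^* c_j\rangle_\rho\), matching the layout in Lemma 5.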

Note that the process \(\mathrm{PfPP}(\mathbb{K}_{\mathbf{S}})\) with the \(\mathbf{S}\) matrix given in Lemma 5 has the same law as \(\mathrm{PfPP}(\mathbb{K}_{\overline{\mathbf{S}}})\), in light of the behaviour of this type of Pfaffian kernels under complex conjugation; see 5.

For convenience, we introduce the following notations, for \(1\leq i,j\leq N\), \[\begin{pmatrix} \left\langle c_i c^*_j\right\rangle_{\rho} & \left\langle c_i c_j\right\rangle_{\rho} \\ \left\langle c^*_i c^*_j\right\rangle_{\rho}& \left\langle c^*_i c_j\right\rangle_{\rho} \end{pmatrix} \triangleq \begin{pmatrix} \delta_{ij}-\mathbf{K}^\top_{ij} &-\overline{\mathbf{P}_{ij}} \\ \mathbf{P}_{ij} &\mathbf{K}_{ij} \end{pmatrix},\] where \(\mathbf{0} \preceq \mathbf{K}\preceq \mathbf{I}\) is Hermitian and \(\mathbf{P}\) is skew-symmetric. By definition, we have \[\mathbf{S} = \begin{pmatrix} \mathbf{I}-\overline{\mathbf{K}}& \mathbf{P}^*\\ \mathbf{P} & \mathbf{K} \end{pmatrix}.\] The particle-hole transformation amounts to replacing in \(\mathbf{S}\) each \(c_i\) by \(c_i^*\) and vice-versa. As a consequence of the CAR (4), \(\mathbf{S}\) satisfies \(\mathbf{C}\overline{\mathbf{S}}\mathbf{C} = \mathbf{I}- \mathbf{S}\); see Section 2.2.

The following result can be seen as a generalization to mixed states of the analysis of [52], and restates the results of [26].

Proposition 7. Let \(N_i = c_i^*c_i\) for \(1\leq i\leq N\) and let \(\rho = Z^{-1} \exp( -\beta H_{\mathrm{BdG}})\). For any \(i_1,\dots,i_k\in [N]\), we have \[\require{physics} \label{eq:correlation95Pfaffian} \expval{N_{i_1}\dots N_{i_k}}_{\rho} = \mathrm{Pf}\left(\mathbb{K}(i_m,i_n)\right)_{1\leq m,n \leq k},\qquad{(9)}\] where each block of the above \(2k \times 2k\) matrix is given in terms of the \(2\times 2\)-matrix-valued kernel \[\begin{align} \mathbb{K}(i,j) = \begin{pmatrix} \mathbf{P}_{i j} & \mathbf{K}_{i j}\\ - \mathbf{K}_{j i} & -\overline{\mathbf{P}_{i j}} \end{pmatrix}. \label{eq:Pfaffian95kernel} \end{align}\qquad{(10)}\] The latter satisfies \(\mathbb{K}(i,j)^\top = -\mathbb{K}(j,i)\) for \(1\leq i,j \leq N\).

The proof of this result, given in Appendix 8.3, is also based on Wick’s theorem.
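The Pfaffian formula of Proposition 7 can likewise be checked by brute force for distinct indices. The sketch below makes the same illustrative assumptions as a direct simulation would (JW operators for the \(c_j\), random \(\mathbf{M}\) and \(\boldsymbol{\Delta}\)), and the recursive `pfaffian` helper is ours, only suitable for very small matrices.

```python
import numpy as np
from functools import reduce
from itertools import combinations

I2, SZ = np.eye(2), np.diag([1.0, -1.0])
LOWER = np.array([[0.0, 1.0], [0.0, 0.0]])  # (sigma_x + i sigma_y) / 2


def jw_annihilation(j, n):
    return reduce(np.kron, [SZ] * (j - 1) + [LOWER] + [I2] * (n - j))


def gibbs_state(op, beta):
    w, v = np.linalg.eigh(op)
    weights = np.exp(-beta * (w - w.min()))
    return (v * (weights / weights.sum())) @ v.conj().T


def pfaffian(A):
    """Pfaffian of a small skew-symmetric matrix, expanding along the first row."""
    m = A.shape[0]
    if m == 0:
        return 1.0
    if m == 2:
        return A[0, 1]
    total = 0.0
    for j in range(1, m):
        keep = [k for k in range(m) if k not in (0, j)]
        total += (-1) ** (j - 1) * A[0, j] * pfaffian(A[np.ix_(keep, keep)])
    return total


rng = np.random.default_rng(6)
n, beta = 3, 0.9
g = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))
M = (g + g.conj().T) / 2
h = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))
Delta = (h - h.T) / 2
H_bdg = np.block([[-M.conj(), -Delta.conj()], [Delta, M]])

c = [jw_annihilation(j, n) for j in range(1, n + 1)]
cd = [op.conj().T for op in c]
stacked = cd + c
H_op = 0.5 * sum(
    H_bdg[a, b] * stacked[a].conj().T @ stacked[b]
    for a in range(2 * n)
    for b in range(2 * n)
)
rho = gibbs_state(H_op, beta)

# K_ij = <c_i^* c_j> and P_ij = <c_i^* c_j^*>, read off from the state.
K = np.array([[np.trace(rho @ cd[i] @ c[j]) for j in range(n)] for i in range(n)])
P = np.array([[np.trace(rho @ cd[i] @ cd[j]) for j in range(n)] for i in range(n)])


def kernel_block(i, j):
    return np.array([[P[i, j], K[i, j]], [-K[j, i], -np.conj(P[i, j])]])


discrepancies = []
for k in range(1, n + 1):
    for subset in combinations(range(n), k):
        lhs = np.trace(rho @ reduce(np.matmul, [cd[i] @ c[i] for i in subset]))
        big = np.block([[kernel_block(i, j) for j in subset] for i in subset])
        discrepancies.append(abs(lhs - pfaffian(big)))
```

All entries of `discrepancies` are at the level of rounding errors in this test case.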

More can also be said about sample parity.

Proposition 8 (Sample parity and Majorana quadratic form). In the setting of Lemma 5, for \(Y \sim \mathrm{PfPP}(\mathbb{K})\), we have that \[\mathop{\mathrm{\mathbb{E}}}_Y (-1)^{|Y|} = \det(\mathbf{W})\prod_{k = 1}^N \tanh(\beta \epsilon_k/2).\label{e:expected95parity95tanh}\qquad{(11)}\] Assuming that \(\epsilon_k >0\) for all \(k\), an alternative expression for the expectation of the parity is obtained by using the identity \(\det \mathbf{W} = \mathop{\mathrm{\mathrm{sign}}}\mathrm{Pf}(\mathbf{A}_{M}).\) Here, the skew-symmetric matrix \(\mathbf{A}_{M}\) is the quadratic form of the Majorana representation of the Hamiltonian, namely \[H_{\mathrm{BdG}} = \frac{\mathrm{i}}{2} \boldsymbol{\gamma}^\top \mathbf{A}_{M} \boldsymbol{\gamma},\] where \(\gamma_{2k-1} = \frac{1}{\sqrt{2}}(c_k^*+c_k)\) and \(\gamma_{2k} = \frac{\mathrm{i}}{\sqrt{2}}(c^*_k - c_k)\) for \(k\in [N]\).

The proof of Proposition 8, given in Appendix 8.4, relies on the expected parity formula of 1. Incidentally, note that this result implies Kitaev’s formula [53] for the parity of a one-dimensional chain of fermions in a limit case that we discuss in 11; see also [50] for a detailed study of Pfaffian formulae for fermion parity.
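A brute-force check of the magnitude of the expected parity in Proposition 8 is sketched below. It only tests the absolute value \(\prod_k \tanh(\beta\epsilon_k/2)\), leaving aside the sign \(\det \mathbf{W}\). The JW representation, the random \(\mathbf{M}\) and \(\boldsymbol{\Delta}\), and the helper names are assumptions made for illustration.

```python
import numpy as np
from functools import reduce

I2, SZ = np.eye(2), np.diag([1.0, -1.0])
LOWER = np.array([[0.0, 1.0], [0.0, 0.0]])  # (sigma_x + i sigma_y) / 2


def jw_annihilation(j, n):
    return reduce(np.kron, [SZ] * (j - 1) + [LOWER] + [I2] * (n - j))


def gibbs_state(op, beta):
    w, v = np.linalg.eigh(op)
    weights = np.exp(-beta * (w - w.min()))
    return (v * (weights / weights.sum())) @ v.conj().T


rng = np.random.default_rng(7)
n, beta = 3, 1.1
g = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))
M = (g + g.conj().T) / 2
h = rng.normal(size=(n, n)) + 1j * rng.normal(size=(n, n))
Delta = (h - h.T) / 2
H_bdg = np.block([[-M.conj(), -Delta.conj()], [Delta, M]])

c = [jw_annihilation(j, n) for j in range(1, n + 1)]
stacked = [op.conj().T for op in c] + c
H_op = 0.5 * sum(
    H_bdg[a, b] * stacked[a].conj().T @ stacked[b]
    for a in range(2 * n)
    for b in range(2 * n)
)
rho = gibbs_state(H_op, beta)

# In the JW representation, (-1)^{sum_i N_i} is sigma_z on every qubit.
parity_operator = reduce(np.kron, [SZ] * n)
expected_parity = np.trace(rho @ parity_operator).real

# The N largest eigenvalues of H_bdg are the nonnegative epsilon_k.
epsilon = np.linalg.eigvalsh(H_bdg)[n:]
tanh_product = np.prod(np.tanh(beta * epsilon / 2))
```

Here `abs(expected_parity)` agrees with `tanh_product`, so that the sample cardinality is biased towards one parity class, increasingly so as \(\beta\) grows.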

5 Quantum circuits to sample DPPs and PfPPs

Armed with the connections between PfPPs and fermions in Section 4, it remains to connect fermions and quantum circuits. Quantum circuits are briefly introduced in Section 5.1 for self-containedness. In Section 5.2, we describe the quantum circuit of [20], later modified by [22], that corresponds to a projection DPP given the diagonalized form of its kernel. In Section 5.2.2, we depart from [22] and highlight the connections of their construction with a classical parallel QR algorithm in numerical linear algebra [23]. Consequently, we propose to take inspiration from more recent parallel QR algorithms, such as [24], to construct circuits with different constraints on the communication between qubits. In particular, we recover circuits with the shortest depth reported in [25], but with QR-style arguments rather than sophisticated data loaders. Moreover, and maybe more importantly for DPP sampling, while sampling from a DPP with non-diagonalized kernel remains limited by the initial (classical) diagonalization step, we argue that, with the right choice among the available distributed QR algorithms, one can give a hybrid pipeline combining a classical parallel algorithm and a quantum algorithm to sample some projection DPPs with non-diagonalized kernel, with a linear speedup compared to the vanilla classical DPP sampler of [16]. The covered DPPs include practically relevant cases such as the uniform spanning trees and column subsets of Examples 1 and 2. In Sections 5.3 and 5.4, we give two standard arguments, respectively due to [16] and [54], to reduce the treatment of (non-projection) Hermitian DPPs to projection DPPs. This concludes our treatment of DPPs. In Section 5.5, we go back to connecting point processes to the work of [22], showing how one can use their circuit corresponding to the BdG Hamiltonian to sample a Pfaffian PP.

5.1 Quantum circuits

We refer to [41] for a description of quantum circuits and the basic building blocks. In short, in the quantum circuit model of quantum computation, one describes a computation by the initialization of a set of \(N\) qubits, a sequence of unitary operators among a small set of physically-implementable operators called gates, and a physically-implementable observable called measurement. Not all gates can be implemented on every quantum computer, but software development kits like Qiskit [30] allow the user to define a quantum circuit using a large enough set of gates. The latter usually includes any tensor product of identity matrices and Pauli matrices (11) (the so-called \(X\), \(Y\), and \(Z\) gates, respectively corresponding to \(\sigma_x\), \(\sigma_y\), and \(\sigma_z\) in (11)) and a few two-qubit gates such as the ubiquitous CNOT. Qiskit then “transpiles” the resulting circuit into a sequence of actually-implementable gates for a given machine. For instance, with the current technology, not all qubits can be jointly operated on a given machine, e.g., one may not be able to apply a gate that acts on two qubits that are physically too far from each other in the actual quantum machine, or only be able to do so with a significant chance of error. Assuming the transpiling process preserves the dimensions of the circuit, one measures the complexity of a quantum circuit by quantities such as its total number of gates and its depth, i.e., the largest number of elementary gates applied to any single qubit.

Finally, in order to judge the possibility of sampling DPPs today, and be able to estimate what we can do in the future as hardware and software improve, it is important to keep in mind the main sources of error and their order of magnitude in current quantum hardware; see Appendix 10.

5.2 The case of a projection DPP

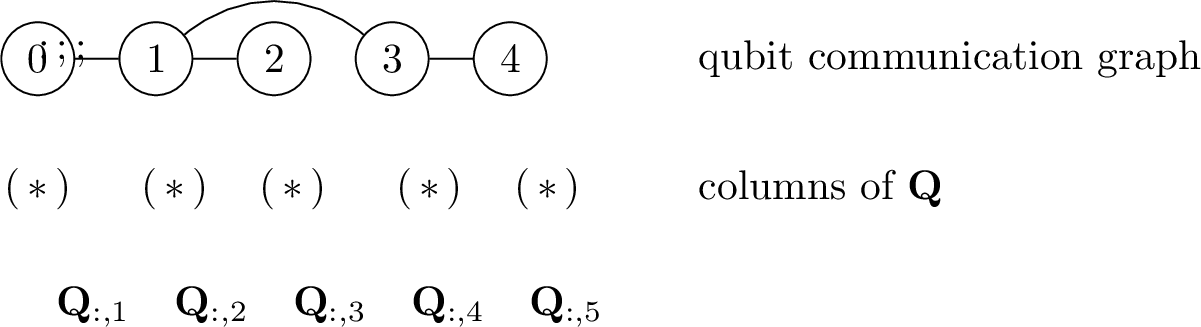

Consider again \((a_j)\) to be the Jordan-Wigner operators, which satisfy the CAR (4), and \((\ket{\mathbf{n}})\) to be the corresponding Fock basis. Let \(r\in\mathbb{N}^*\), \(\mathbf{Q}\in\mathbb{C}^{r\times N}\) have orthonormal rows, and \(b_j^*= \sum_{\ell=1}^N q_{j\ell} a_\ell^*\) for \(1\leq j\leq r\). Let further \[\label{e:slater95determinant} \ket{\psi} = b_1^*\dots b_r^*\ket{\emptyset}.\tag{25}\] From Section 4.3, we know that simultaneously measuring \(N_i = a_i^*a_i\), \(i=1, \dots, N\) on \(\rho = \ketbra{\psi}\) samples the projection DPP with kernel \(\mathbf{K}= \mathbf{Q}^*\mathbf{Q}\). Measuring \(N_i\) is easy, because the Fock basis of the JW operators is the computational basis, see Proposition 3, and measurement in the computational basis is a basic operation on any quantum computer. For the same reason, implementing \(a_1^* \dots a_r^*\ket\emptyset\) simply amounts to initializing the first \(r\) qubits to \(\ket{1}\), and the rest to \(\ket{0}\). It remains to be able to prepare the state (25), which involves the \(b_i\)’s rather than the \(a_i\)’s. This is done by a procedure akin to the QR algorithm by successive Givens rotations; a standard reference on QR algorithms and matrix computations in general is [55].
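To make the link between the state (25) and the projection DPP concrete, the following sketch builds \(\ket{\psi}\) as a dense vector for small \(N\) and compares the measurement probabilities in the computational basis with principal minors of \(\mathbf{K}=\mathbf{Q}^*\mathbf{Q}\). This is a classical simulation under our own assumptions (random \(\mathbf{Q}\), hypothetical helper names), not the circuit construction itself.

```python
import numpy as np
from functools import reduce

I2, SZ = np.eye(2), np.diag([1.0, -1.0])
LOWER = np.array([[0.0, 1.0], [0.0, 0.0]])  # (sigma_x + i sigma_y) / 2


def jw_annihilation(j, n):
    return reduce(np.kron, [SZ] * (j - 1) + [LOWER] + [I2] * (n - j))


rng = np.random.default_rng(5)
n, r = 4, 2

# Random r x n matrix with orthonormal rows, from a reduced QR factorization.
g = rng.normal(size=(n, r)) + 1j * rng.normal(size=(n, r))
Q = np.linalg.qr(g)[0].conj().T

a_dag = [jw_annihilation(j, n).conj().T for j in range(1, n + 1)]
b_dag = [sum(Q[j, l] * a_dag[l] for l in range(n)) for j in range(r)]

# |psi> = b_1^* ... b_r^* |vacuum>, applying b_r^* first.
psi = np.zeros(2 ** n, dtype=complex)
psi[0] = 1.0
for j in reversed(range(r)):
    psi = b_dag[j] @ psi

probabilities = np.abs(psi) ** 2
K = Q.conj().T @ Q


def occupied(x):
    """Indices of the qubits equal to 1 in basis state x (qubit 1 = leading bit)."""
    return [j for j in range(n) if (x >> (n - 1 - j)) & 1]


# Projection DPP: P(S) = det(K_S) if |S| = r, and 0 otherwise.
dpp_probabilities = np.array([
    np.linalg.det(K[np.ix_(occupied(x), occupied(x))]).real
    if len(occupied(x)) == r else 0.0
    for x in range(2 ** n)
])
```

Sampling the DPP then amounts to drawing a basis state `x` with probability `probabilities[x]` and returning `occupied(x)`.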

5.2.1 QR by Givens rotations

Proposition 9 ([20]–[22]). There is a unitary operator \(\mathcal{U}(\mathbf{Q})\) on \(\mathbb{H}\) such that \[b_1^*\dots b_r^*\ket{\emptyset} = \mathcal{U}(\mathbf{Q}) a_1^* \dots a_r^*\ket\emptyset,\] and \(\mathcal{U}(\mathbf{Q})\) is a product of unitaries corresponding to elementary quantum gates.

Proof. The Givens rotation with parameters \(\theta,\phi\) and indices \(\ell^1, \ell^2\in[N]\) is the unitary matrix \[\mathbf{G}= \mathbf{G}_{\ell^1,\ell^2}(\theta,\phi) = \mathbf{P} \begin{pmatrix} \boldsymbol{\gamma} & \mathbf{0}\\ \mathbf{0} & \mathbb{I} \end{pmatrix} \mathbf{P}^{-1} \text{ with } \boldsymbol{\gamma} = \begin{pmatrix} \cos\theta & \mathrm{e}^{-\mathrm{i}\phi} \sin\theta \\ -\sin\theta \mathrm{e}^{\mathrm{i}\phi}& \cos\theta \end{pmatrix}, \label{e:givens}\tag{26}\] where \(\mathbf{P}\) is the matrix of the permutation \((1\;\ell^1)(2\;\ell^2)\), which selects the vectors on which the rotation is applied. Choosing \(\theta, \phi\) appropriately, one can make sure that \(\mathbf{Q}\mathbf{G}^*\) has a zero in position \((\ell^1, \ell^2)\), while all columns other than \(\ell^1\) and \(\ell^2\) are left unchanged. Iteratively choosing \((\ell^1, \ell^2)\), we can sequentially introduce zeros in \(\mathbf{Q}\) by multiplying it by \(n_{G}\) Givens rotations \(\mathbf{G}_1, \dots, \mathbf{G}_{n_G}\), never changing an entry that we previously set to zero, until \[\mathbf{Q}\mathbf{G}_1^* \dots \mathbf{G}_{n_G}^* = \begin{pmatrix} \mathbf{\Lambda}\vert \mathbf{0} \end{pmatrix}, \label{e:Givens95chain}\tag{27}\] where \(\mathbf{\Lambda}\) is an \(r\times r\) diagonal unitary matrix, and the right block is the \(r\times (N-r)\) zero matrix.

Now, to each Givens rotation \(\mathbf{G} = \mathbf{G}_{\ell^1,\ell^2}(\theta,\phi)\), we associate a unitary operator \(\mathcal{G} = \mathcal{G}_{\ell^1, \ell^2}(\theta, \phi)\) on \(\mathbb{H}\). We call \(\mathcal{G}\) a Givens operator, as it realizes the Givens rotation \(\mathbf{G}\) by a conjugation of creation operators, i.e. \[\begin{align} \mathcal{G} a_{\ell^1}^*\mathcal{G}^*&= \cos \theta a_{\ell^1}^*+ \mathrm{e}^{-\mathrm{i}\phi}\sin \theta a_{\ell^2}^*,\\ \mathcal{G} a_{\ell^2}^*\mathcal{G}^*&= -\mathrm{e}^{\mathrm{i}\phi}\sin \theta a_{\ell^1}^*+ \cos \theta a_{\ell^2}^*,\\ \mathcal{G} a_{i}^*\mathcal{G}^*&= a_{i}^*, \quad \forall i \notin\{\ell^1, \ell^2\}. \end{align}\] We refer to Appendix 8.1 for an explicit construction. For future convenience, we note that this action can be compactly summarized if one stacks up the creation operators in a vector \(\mathbf{a}^*= (a_1^*\cdots a_N^*)^\top\), and writes \[\begin{align} \mathcal{G} \mathbf{a}^*\mathcal{G}^*= \mathbf{G}\cdot \mathbf{a}^*,\label{e:givens95operator95definition} \end{align}\tag{28}\] with \(\cdot\) defined by matrix-vector multiplication.

Finally, consider the unitary \[\begin{align} \mathcal{U}(\mathbf{Q}) \triangleq \mathcal{G}_{n_G} \dots \mathcal{G}_1, \label{e:Givens95factorization95on95H} \end{align}\tag{29}\] where \(\mathcal{G}_i\) is the Givens operator corresponding to \(\mathbf{G}_i\) in 27 . Then, by construction and up to a phase factor, for all \(1\leq k \leq r\), \[\mathcal{U}(\mathbf{Q}) a_k^*\mathcal{U}(\mathbf{Q})^* = b_k^*, \text{ and } \mathcal{U}(\mathbf{Q}) \ket{\emptyset} = \ket{\emptyset}.\] In particular, \[\begin{align} \ket{\psi} = b_1^*\dots b_r^*\ket{\emptyset} &= \mathcal{U}(\mathbf{Q}) a_1^*\mathcal{U}(\mathbf{Q})^* \dots \mathcal{U}(\mathbf{Q}) a_r^*\mathcal{U}(\mathbf{Q})^* \ket{\emptyset}\\ &= \mathcal{U}(\mathbf{Q}) a_1^*\dots a_r^*\ket{\emptyset}, \end{align}\] again up to a phase factor, which is irrelevant in the resulting state \(\ketbra{\psi}\). ◻
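The matrix-level reduction 27 used in the proof can be sketched numerically as follows. This is a minimal illustration under stated assumptions: the helper names `givens_params`, `rotate_columns` and `givens_chain` are hypothetical, the sweep acts on neighbouring columns row by row without any preprocessing, and the test matrix is random. Other orderings of the rotations are equally valid.

```python
import numpy as np

def givens_params(x, y):
    """Angles (theta, phi) such that (x, y) @ gamma^* = (z, 0), with gamma as in (26)."""
    theta = np.arctan2(abs(y), abs(x))
    phi = np.angle(x) - np.angle(y)
    return theta, phi

def rotate_columns(M, a, b, theta, phi):
    """In-place update M <- M G^*, where G acts as gamma on columns (a, b)."""
    c, s = np.cos(theta), np.sin(theta)
    gamma = np.array([[c, np.exp(-1j * phi) * s],
                      [-np.exp(1j * phi) * s, c]])
    M[:, [a, b]] = M[:, [a, b]] @ gamma.conj().T

def givens_chain(Q):
    """Sequential sweep; returns the reduced matrix and the list of rotations."""
    M = np.array(Q, dtype=complex)
    r, N = M.shape
    rotations = []
    for i in range(r):
        # Zero entry (i, j) from right to left, mixing columns (j - 1, j).
        for j in range(N - 1, i, -1):
            theta, phi = givens_params(M[i, j - 1], M[i, j])
            rotate_columns(M, j - 1, j, theta, phi)
            rotations.append((j - 1, j, theta, phi))
    return M, rotations

rng = np.random.default_rng(1)
N, r = 6, 3  # illustrative sizes
Z = rng.standard_normal((N, r)) + 1j * rng.standard_normal((N, r))
Q = np.linalg.qr(Z)[0].conj().T      # r x N with orthonormal rows
M, rotations = givens_chain(Q)       # M should be (Lambda | 0)
```

Because right multiplication by a unitary preserves the orthonormality of the rows, the entries to the left of the diagonal vanish without being explicitly targeted, and the diagonal entries have unit modulus.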

The operator \(\mathcal{U}(\mathbf{Q})\) in Proposition 9 is indeed a product of elementary two-qubit gates, because any Givens operator \(\mathcal{G}_{\ell^1,\ell^2}(\theta,\phi)\) can be implemented as such, as first put forward by [20]. We discuss gate details in Appendix 9.
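To make the conjugation relations defining a Givens operator concrete, here is a small numerical check on \(N=3\) qubits. It is a sketch under assumptions: the Jordan-Wigner convention below (Pauli \(Z\) on the preceding qubits, \(\ket{0}\!\bra{1}\) on qubit \(j\)) and the exponential form of \(\mathcal{G}\) are one possible realization, which may differ by convention from the explicit construction of Appendix 8.1. The function names are hypothetical.

```python
import numpy as np
from functools import reduce

I2 = np.eye(2)
Zp = np.diag([1.0, -1.0])
A = np.array([[0.0, 1.0], [0.0, 0.0]])   # single-qubit |0><1|

def annihilation(j, N):
    """Jordan-Wigner a_j on N qubits (0-indexed): Z on qubits < j, |0><1| on qubit j."""
    return reduce(np.kron, [Zp] * j + [A] + [I2] * (N - j - 1))

def givens_operator(l1, l2, theta, phi, N):
    """exp(X), X = theta (e^{-i phi} a_{l2}^* a_{l1} - e^{i phi} a_{l1}^* a_{l2})."""
    a1, a2 = annihilation(l1, N), annihilation(l2, N)
    X = theta * (np.exp(-1j * phi) * a2.conj().T @ a1
                 - np.exp(1j * phi) * a1.conj().T @ a2)
    # X is anti-Hermitian, so -iX is Hermitian and exp(X) = V exp(i w) V^*.
    w, V = np.linalg.eigh(-1j * X)
    return (V * np.exp(1j * w)) @ V.conj().T

N, theta, phi = 3, 0.7, 0.3              # illustrative values
G = givens_operator(0, 1, theta, phi, N)
a_dag = [annihilation(j, N).conj().T for j in range(N)]

lhs = [G @ a @ G.conj().T for a in a_dag]
rhs = [np.cos(theta) * a_dag[0] + np.exp(-1j * phi) * np.sin(theta) * a_dag[1],
       -np.exp(1j * phi) * np.sin(theta) * a_dag[0] + np.cos(theta) * a_dag[1],
       a_dag[2]]
```

Since the generator only involves two neighbouring modes, the Jordan-Wigner strings cancel and the resulting operator acts non-trivially on two qubits only, in line with the two-qubit gate implementation mentioned above.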

To see how many gates are required for a given DPP kernel, we need to discuss a final degree of freedom: the chain of Givens rotations in 27 is not unique. This is where constraints on which qubits can be jointly acted upon enter the picture.

5.2.2 Parallel QR algorithms and qubit operation constraints

The product of Givens rotations in 27 can give rise to a quantum circuit of short depth if there are subproducts of rotations that can be performed on disjoint pairs of qubits. Independently of quantum computation, this is exactly the same kind of constraint that numerical algebraists have been studying in a long string of works on parallel QR factorization;6 see [24] and references therein.

As a first example, after a preprocessing phase, the preparation of 25 in [22] implicitly implements a parallel QR algorithm known as Sameh-Kuck [23]. More precisely, in a preprocessing phase, [22] zero out \(r(r-1)/2\) entries in the upper-right corner of \(\mathbf{Q}\) by pre-multiplying \(\mathbf{Q}\) by a product of (complex) Givens rotations. Let us denote this product of unitary matrices by \(\mathbf{V}\). This preprocessing requires at most7 \(r(r-1)/2\) Givens rotations, which are implemented on a classical computer. To fix ideas, when \(r = 3\) and \(N = 6\), this results in a matrix of the following form \[\mathbf{V}\mathbf{Q}= \begin{pmatrix} * & * & * & * & 0 & 0\\ * & * & * &* & * & 0\\ * & * & * & * & * & * \end{pmatrix}. \label{e:jiang39s95example}\tag{30}\] Note that replacing \(\mathbf{Q}\) by \(\mathbf{V}\mathbf{Q}\) does not change the kernel of the resulting DPP since \(\mathbf{V}^*\mathbf{V}= \mathbf{I}\). Now, [22] apply QR to \(\mathbf{V}\mathbf{Q}\) as in the proof of Proposition 9, applying Givens rotations on disjoint pairs of neighbouring columns, as they become available. Rather than giving a formal description of the algorithm, available in [23], we follow [22] and depict its application on Example 30 . We use the convenient notation of [22], i.e. bold characters to show the most recently actively updated entries of the matrix, and underlined characters to show entries that automatically result from the rows of \(\mathbf{Q}\) being orthonormal.
The successive parallel rounds of the algorithm are then \[\begin{align} \mathbf{V}\mathbf{Q} & = \begin{pmatrix} * & * & * & * & 0 & 0\\ * & * & * &* & * & 0\\ * & * & * & * & * & * \end{pmatrix} \rightarrow \begin{pmatrix} * & * & * & \mathbf{0}& 0 & 0\\ * & * & * &* & * & 0\\ * & * & * & * & * & * \end{pmatrix}\nonumber\\ &\rightarrow \begin{pmatrix} * & * & \mathbf{0}& 0 & 0 & 0\\ * & * & * &* & \mathbf{0}& 0\\ * & * & * & * & * & * \end{pmatrix} \rightarrow \begin{pmatrix} \underline{\lambda_1} & \mathbf{0}& 0 & 0 & 0 & 0\\ \underline{0} & * & * &\mathbf{0}& 0 & 0\\ \underline{0} & * & * & * & * & \mathbf{0} \end{pmatrix}\nonumber\\ &\rightarrow \begin{pmatrix} \lambda_1 & 0 & 0 & 0 & 0 & 0\\ 0 & \underline{\lambda_2} & \mathbf{0}& 0 & 0 & 0\\ 0 & \underline{0} & * & * & \mathbf{0}& 0 \end{pmatrix} \rightarrow \begin{pmatrix} \lambda_1 & 0 & 0 & 0 & 0 & 0\\ 0 & \lambda_2 & 0 & 0 & 0 & 0\\ 0 & 0 & \underline{\lambda_3} & \mathbf{0}& 0 & 0 \end{pmatrix}.\label{e:QR95nnb95Jiang} \end{align}\tag{31}\] The factors \(\lambda_i\) are of unit modulus, as in the construction behind Proposition 9. The upshot is that only a lower triangular matrix remains whose only non-zero entries are on the diagonal, while the other entries of the lower triangle automatically vanish due to row orthonormality.

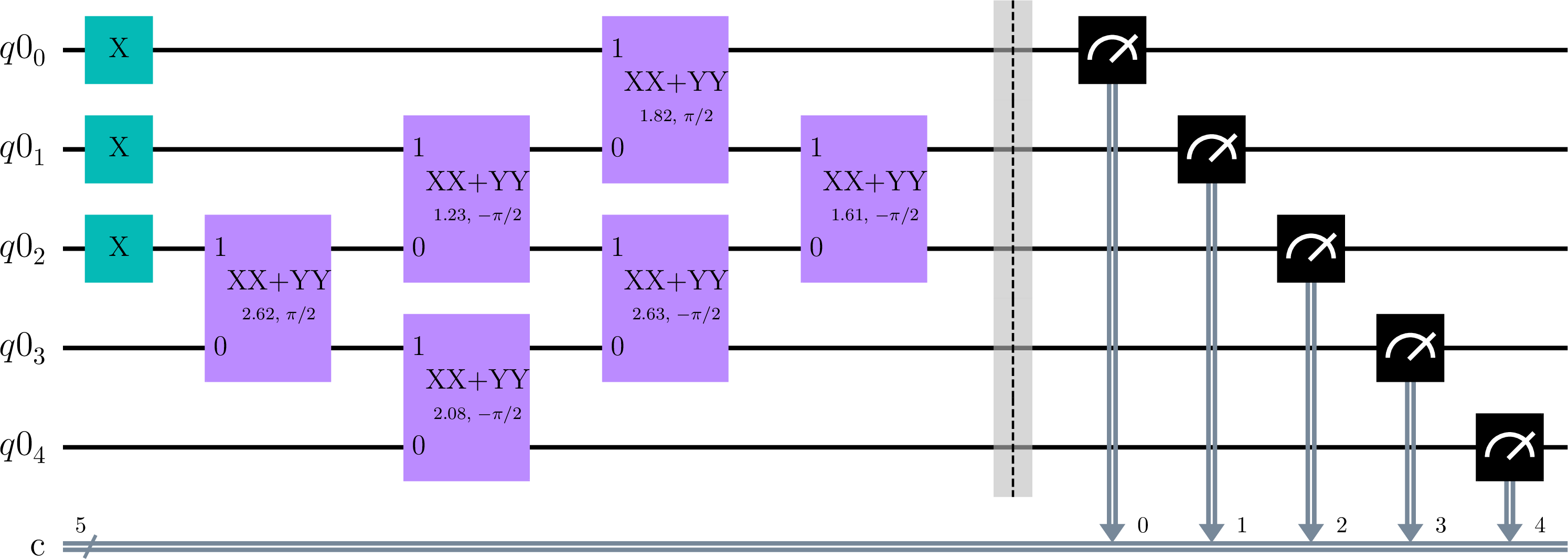

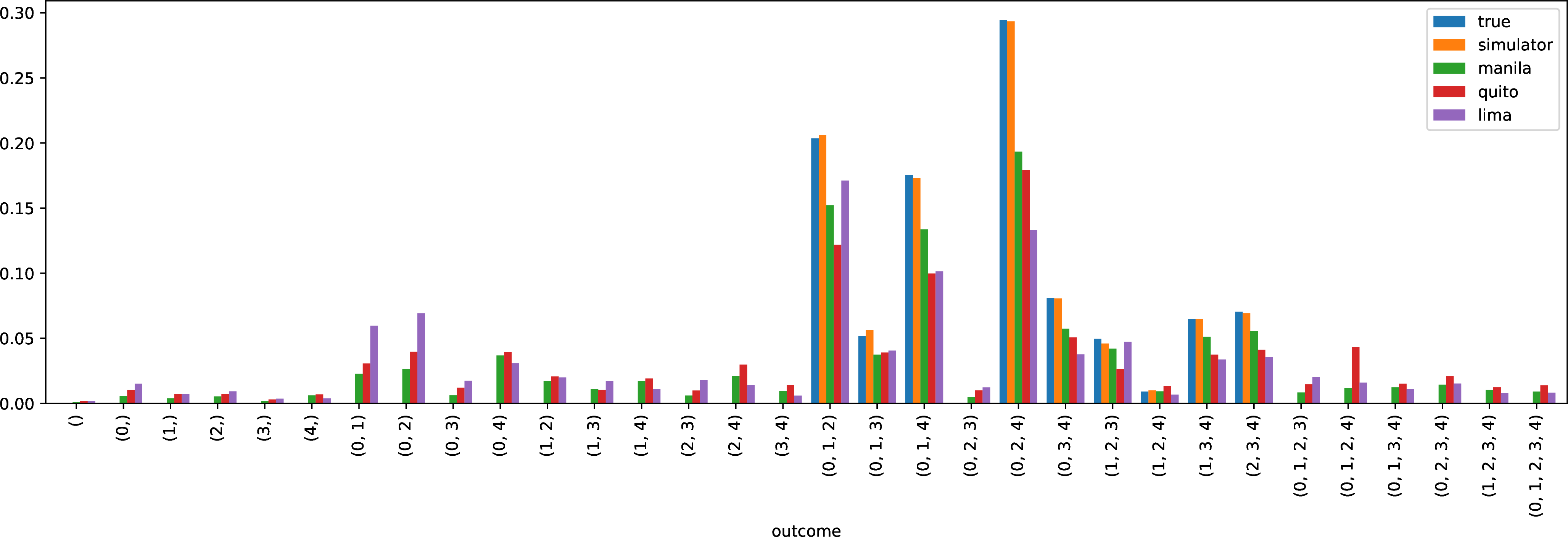

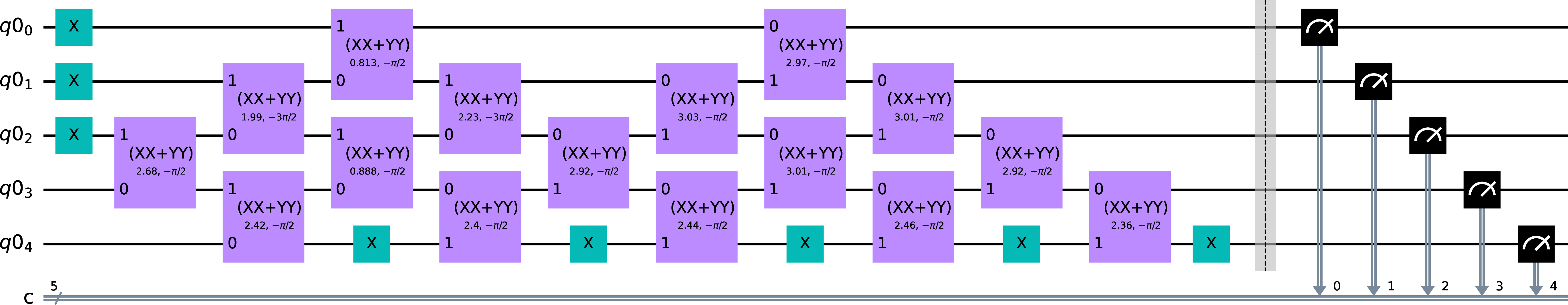

The resulting circuit, an example of which is given in Figure 1, requires \(\mathcal{O}(Nr)\) gates, and has depth \(\mathcal{O}(N)\). The \(\mathcal{O}(r^2)\) preprocessing, which is optional and could also be performed in the quantum circuit, removes a handful of (necessarily faulty) quantum gates at a small classical cost. Finally, constraining Givens operators to act on neighbouring qubits, or equivalently, Givens rotations to act on neighbouring columns, is practically relevant if the available quantum computer favours two-qubit gates acting on neighbouring qubits.
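The staggered rounds displayed in 31 can be generated by a few lines of code. The sketch below only computes the schedule, not the rotation angles. The function name `neighbour_schedule` and the closed-form round index are our own reading of the displayed example, under the assumption that row \(i\) is already zero in columns \(> N-r+i\) after preprocessing.

```python
def neighbour_schedule(N, r):
    """Rounds of (row, left_col, right_col) triples, 1-indexed as in the text.

    Assumes the preprocessed pattern: row i is zero in columns > N - r + i.
    Row i zeroes columns N - r + i, ..., i + 1 in turn, starting at round i,
    each time mixing the target column with its left neighbour.
    """
    rounds = {}
    for i in range(1, r + 1):
        for step, j in enumerate(range(N - r + i, i, -1)):
            rounds.setdefault(i + step, []).append((i, j - 1, j))
    return [rounds[t] for t in sorted(rounds)]

schedule = neighbour_schedule(6, 3)          # the example r = 3, N = 6
depth = len(schedule)                        # number of parallel rounds
n_rotations = sum(len(ops) for ops in schedule)
```

With this staggering, consecutive rows work on column pairs shifted by two, so the rotations of a given round act on disjoint pairs of neighbouring columns. The schedule uses \(r(N-r)\) rotations in \(N-1\) rounds when \(r<N\), consistent with the \(\mathcal{O}(Nr)\) gate count and \(\mathcal{O}(N)\) depth stated above.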

As a second extreme example, assume that we have no constraint on which pairs of qubits can be jointly operated. We can then perform parallel QR on \(\mathbf{Q}\) using only \(\mathcal{O}(r\log N)\) parallel rounds, simply by acting on all available disjoint pairs in a single row until all but one entry are zeroed, keeping in mind that the leftmost \(r\) entries of each row will be automatically updated because of orthonormality constraints. On an example with \(N=8\) and \(r=3\), this would yield \[\begin{align} \mathbf{Q} &\rightarrow \begin{pmatrix} * & \mathbf{0}& *& \mathbf{0}& * & \mathbf{0}& * & \mathbf{0}\\ * & * & * & * & * & * & * & *\\ * & * & * & * & * & * & * & *\\ \end{pmatrix} \rightarrow \begin{pmatrix} * & 0 & \mathbf{0}& 0 & * & 0 & \mathbf{0}& 0\\ * & * & * & * & * & * & * & *\\ * & * & * & * & * & * & * & *\\ \end{pmatrix}\\ &\rightarrow \begin{pmatrix} \underline{\lambda_1} & 0 & 0 & 0 & \mathbf{0}& 0 & 0 & 0\\ \underline{0} & * & * & * & * & * & * & *\\ \underline{0} & * & * & * & * & * & * & *\\ \end{pmatrix} \rightarrow \begin{pmatrix} \underline{\lambda_1} & 0 & 0 & 0 & 0 & 0 & 0 & 0\\ 0 & * & * & \mathbf{0}& * & \mathbf{0}& * & \mathbf{0}\\ 0 & * & * & * & * & * & * & *\\ \end{pmatrix}\\ &\rightarrow \begin{pmatrix} {\lambda}_1 & 0 & 0 & 0 & 0 & 0 & 0 & 0\\ 0 & * & \mathbf{0}& 0& * & 0 & \mathbf{0}& 0\\ 0 & * & * & * & * & * & * & *\\ \end{pmatrix} \rightarrow \begin{pmatrix} {\lambda}_1 & 0 & 0 & 0 & 0 & 0 & 0 & 0\\ 0 & \underline{\lambda_2} & 0 & 0& \mathbf{0}& 0 & 0 & 0\\ 0 & \underline{0} & * & * & * & * & * & *\\ \end{pmatrix}\\ &\rightarrow \begin{pmatrix} {\lambda}_1 & 0 & 0 & 0 & 0 & 0 & 0 & 0\\ 0 & \lambda_2 & 0 & 0& 0 & 0 & 0 & 0\\ 0 & 0 & * & \mathbf{0}& * & \mathbf{0}& * & \mathbf{0}\\ \end{pmatrix} \rightarrow \begin{pmatrix} {\lambda}_1 & 0 & 0 & 0 & 0 & 0 & 0 & 0\\ 0 & \lambda_2 & 0 & 0& 0 & 0 & 0 & 0\\ 0 & 0 & * & 0 & * & 0 & \mathbf{0}& 0\\ \end{pmatrix}\\ &\rightarrow \begin{pmatrix} {\lambda}_1 & 0 & 0 & 0 & 0 & 0 & 0 & 0\\ 0 & \lambda_2 & 0 & 0& 0 & 0 & 0 & 0\\ 0 & 0 & \underline{\lambda_3} & 0 & \mathbf{0}& 0 & 0 & 0\\ \end{pmatrix}. \end{align}\] The resulting quantum circuit has \(\mathcal{O}(Nr)\) gates as the one of [22], but a depth of only \(\mathcal{O}(r\log_2 N)\). This depth is similar to the shortest depth obtained by [25] with a similar tree-like structure called “parallel Clifford loaders”.
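The tree-like reduction can be sketched numerically as follows. This is an illustration under assumptions: the helper names `zero_entry` and `tree_reduce` are hypothetical, and the pairing rule (consecutive surviving columns) is one possible choice, which may pair columns differently from the display above while using the same number of rounds per row.

```python
import numpy as np

def zero_entry(M, i, a, b):
    """In-place M <- M G^*, with G a Givens rotation on columns (a, b) zeroing M[i, b]."""
    x, y = M[i, a], M[i, b]
    theta = np.arctan2(abs(y), abs(x))
    phi = np.angle(x) - np.angle(y)
    c, s = np.cos(theta), np.sin(theta)
    gamma = np.array([[c, np.exp(-1j * phi) * s],
                      [-np.exp(1j * phi) * s, c]])
    M[:, [a, b]] = M[:, [a, b]] @ gamma.conj().T

def tree_reduce(Q):
    """Reduce Q to (Lambda | 0), counting parallel rounds and rotations."""
    M = np.array(Q, dtype=complex)
    r, N = M.shape
    n_rounds, n_rotations = 0, 0
    for i in range(r):
        active = list(range(i, N))           # columns of row i not yet zeroed
        while len(active) > 1:
            # One parallel round: disjoint pairs of surviving columns.
            for a, b in zip(active[0::2], active[1::2]):
                zero_entry(M, i, a, b)
                n_rotations += 1
            active = active[0::2]
            n_rounds += 1
    return M, n_rounds, n_rotations

rng = np.random.default_rng(2)
N, r = 8, 3                                  # sizes of the example above
Z = rng.standard_normal((N, r)) + 1j * rng.standard_normal((N, r))
Q = np.linalg.qr(Z)[0].conj().T              # r x N with orthonormal rows
M, n_rounds, n_rotations = tree_reduce(Q)
```

Each row with \(n\) surviving entries is processed in \(\lceil \log_2 n\rceil\) rounds, which gives nine rounds for \(N=8\) and \(r=3\), matching the nine arrows in the display.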

Just like in parallel QR, we argue that the choice of the chain 27 of rotations should depend on the particular hardware constraints, such as which qubits can be jointly operated on. Parallel QR algorithms allow quite arbitrary dependency structures, see e.g. [24]. While initially motivated by communication or storage constraints in parallel classical computing, these dependency structures are actually tailored to designing quantum circuits to prepare states like 25 depending on a given quantum architecture. Taking the analogy with parallel QR even one step further, we now show that the initial bottleneck of diagonalizing the kernel of a DPP can be combined with the design of the quantum circuit in a single run of a parallel QR algorithm.

Figure 1: A circuit sampling a DPP with projection kernel of rank \(r = 3\), with \(N = 5\) items. On the left-hand side, Pauli \(X\) gates are used to create fermionic modes on the first three qubits. Note also the parallel QR Givens rotations on neighbouring qubits, indicated by parametrized \(XX+YY\) gates. See Appendix 9 for gate details. On the right-hand side, measurements of occupation numbers are denoted by black squares.

5.2.3 A hybrid parallel/quantum algorithm for projection DPPs

The link of Givens-based quantum circuits with parallel QR algorithms suggests pipelines to sample from projection DPPs, even when the kernel is not yet in diagonalized form.

Proposition 10. Assume that we have access only to \(\mathbf{A}\in \mathbb{R}^{d\times N}\), and that we want to sample from DPP(\(\mathbf{K}\)), where \(\mathbf{K}= \mathbf{V}_{:,[r]} \mathbf{V}_{:,[r]}^*\) and \(\mathbf{V}\) is defined by the singular value decomposition \(\mathbf{A}= \mathbf{U}\mathbf{\Sigma}\mathbf{V}^*\). Then, given \(P\) classical processors, for a run time in \(\mathcal{O}(Nd^2/P)\) up to logarithmic factors in \(P\), we can design a quantum circuit with depth \(\mathcal{O}(N\log P/P)\) and \(\mathcal{O}(Nd)\) gates, such that simultaneous measurements in the computational basis at the output of the circuit yield a sample of DPP(\(\mathbf{K}\)).

Three comments are in order. First, specifying the kernel \(\mathbf{K}\) as in Proposition 10, where \(\mathbf{K}\) is the projection kernel onto the first \(r\) principal components of a full-rank rectangular matrix \(\mathbf{A}\), is common in practice. In particular, it covers the column subset selection of Example 2 and the uniform spanning forests of Example 1. Second, for simplicity we have omitted the additional \(\mathcal{O}(r^2)\) preprocessing introduced by [22]. Third, at least if we neglect physical sources of noise in the quantum circuit (see Appendix 10), the bottleneck in the hybrid sampler remains the initial classical cost \(\mathcal{O}(Nd^2/P)\). Compared with the vanilla classical approach, we have gained a linear speedup thanks to parallelization. Note that it is not clear how such a linear speedup can be gained in the vanilla classical algorithm, but that it can be gained in non-quantum randomized variants [27], [28]. Dequantizing Proposition 10, to obtain a non-quantum algorithm with a similar cost, is actually an interesting question, which we leave to future work.
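For concreteness, the kernel specification of Proposition 10 can be sketched with a standard (non-parallel) SVD. The sizes, the random matrix `A` and the seed are illustrative assumptions. The parallel QR pipeline of the proposition is not reproduced here, only the definition of \(\mathbf{K}\) and of a matrix \(\mathbf{Q}\) with orthonormal rows that could be fed to the Givens-based circuits of Section 5.2.

```python
import numpy as np

rng = np.random.default_rng(3)
d, N, r = 4, 7, 2                       # illustrative sizes with r <= d <= N
A = rng.standard_normal((d, N))         # stand-in for the available matrix A

# Thin SVD A = U diag(s) Vh, where Vh = V^* has shape (d, N) since d <= N.
U, s, Vh = np.linalg.svd(A, full_matrices=False)

Q = Vh[:r, :]                           # r x N, rows = first r right singular vectors
K = Q.conj().T @ Q                      # K = V[:, :r] V[:, :r]^*, shape (N, N)
```

By construction, \(\mathbf{K}\) is an orthogonal projection of rank \(r\), so it is the kernel of a projection DPP whose samples have exactly \(r\) items.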