A machine learning potential for simulating infrared spectra of nanosilicate clusters

May 25, 2023

Abstract

The use of machine learning (ML) in chemical physics has enabled the construction of interatomic potentials having the accuracy of ab initio methods and a computational cost comparable to that of classical force fields. Training an ML model requires an efficient method for the generation of training data. Here we apply an accurate and efficient protocol to collect training data for constructing a neural network based ML interatomic potential for nanosilicate clusters. Initial training data are taken from normal modes and farthest point sampling. Later on, the set of training data is extended via an active learning strategy in which new data are identified by the disagreement between an ensemble of ML models. The whole process is further accelerated by parallel sampling over structures. We use the ML model to run molecular dynamics (MD) simulations of nanosilicate clusters with various sizes, from which infrared spectra with anharmonicity included can be extracted. Such spectroscopic data are needed for understanding the properties of silicate dust grains in the interstellar medium (ISM) and in circumstellar environments.

1 Introduction↩︎

Silicates are the main constituent of dust grains in the interstellar medium (ISM) [1] and in circumstellar environments [2]. They provide surfaces for chemical reactions and nucleation sites for the formation of ice mantles [3]. Silicate dust with different compositions, sizes and shapes exhibit varied properties [4] that can be probed by infrared (IR) observations [5], [6]. For example, a broad band at 9.7 \(\mu\)m is normally assigned to Si-O stretching modes and a band around 18 \(\mu\)m can be attributed to O-Si-O bending modes. Using an astrophysical model to describe IR emission from carbonaceous and silicate dust grains [7], [8], it has been estimated that around 10% of interstellar Si could be locked up in ultrasmall silicate grains with radii \(<\) 1.5 nm [9]. Therefore, having efficient and accurate methods to model the structures and vibrational properties of nanosilicate clusters is beneficial for understanding IR features and other properties of these, presumably very abundant, species.

Most previously reported spectroscopic data of silicate clusters are from classical force field modelings [10] and quantum chemical calculations [10]–[12]. Although producing silicate clusters in experiments is difficult [13], recent cluster beam studies have produced small silicate clusters and verified their structures via their theoretically calculated IR spectra [14]. Many theoretical studies of silicates are based on density functional theory (DFT) calculations, whereby the vibrational spectra assume the harmonic approximation. However, at finite temperature, an anharmonic treatment becomes necessary due to a number of possible associated effects (e.g. thermal peak broadening, frequency redshifting, combination bands, conformational fluxionality) [12]. In this case, ab initio molecular dynamics (AIMD) is a more appropriate method to compute IR spectra with anharmonic and temperature effects included [12]. Hybrid functionals are often used in IR calculations of nanosilicate clusters in order to provide sufficient accuracy [12]. However, the high computational costs of AIMD simulations with hybrid functionals hinder their application for large cluster sizes. Computationally inexpensive interatomic potentials are needed for MD simulations with large system sizes. A number of such potentials have been developed and parameterized classical force fields thus exist, that do describe bulk silicate [15], [16] and silicate clusters [11] quite well. They are often used to roughly predict structure and energetic properties. For example, a force field optimized for silicate clusters has been used for global optimization of silicate clusters with different stoichiometries and sizes [11]. However, classical force fields are sometimes limited by their accuracy. The low-energy isomers predicted by force fields are thus typically refined by a more accurate method like DFT to ensure an accurate energetic ordering is obtained.

Machine learning (ML) techniques such as artificial neural network [17]–[22] and gaussian process regression [23]–[25] are powerful tools to fit interatomic potentials [26], [27] while balancing accuracy and computational costs. They have been successfully applied to global optimization [28]–[37], molecular dynamics [38]–[46] and vibrational spectroscopy [47]–[51]. In this work, we will develop neural network based potentials for silicate clusters. The desired applications are MD simulations of silicate clusters for variable compositions, sizes and temperatures, from which IR spectra can be extracted. The paper is organized as follows. We will first summarize the essential elements to develop an accurate ML potential for three target properties (total energy, atomic forces and total dipole moment) of silicate clusters. This includes our choice of neural network architectures and the generation of training data. We move on to a discussion of how the ML potential is trained and validated on the three target properties. Its spectroscopic predictions are then examined with respect to both harmonic and MD-based spectra. Afterwards, the transferability of the ML potential is tested on the silicate clusters that are not included in the training data. Finally, the ML potential is used in MD simulations of silicate clusters and the astrophysical implication of the obtained IR spectra is discussed.

2 Methods↩︎

In order to compute IR spectra via an MD-based approach, it is needed to have an accurate description of total energy, atomic forces and total dipole moment. Total energy and atomic forces are trained in one model since atomic forces are the negative gradient of the total energy and the inclusion of forces in training often improves model accuracy for both energy and forces [23], [52]–[54]. The total dipole moment is considered as a property which is independent from the energy and the forces. As such, it is therefore trained in a separate model following Gastegger et al [47]. Our choice of neural network models is described in details in 2.1. One of the challenges in training such a model is to make sure the trained model is accurate and robust during a long time MD simulations (needed for spectroscopic accuracy). The trained model should also be applicable for MD simulations at high temperature (e.g. 800K) which are needed for mimicking some circumstellar conditions [12], [55]. To address this issue, we will use an active learning strategy [56], [57] to train the ML model. The model gets iteratively improved. During each iteration, the model is used in an MD sampling for exploring the configuration space and selecting training data [47], [58]. Both low and high temperatures are chosen in the MD sampling so that different regions of the configuration space are covered. More details can be found in 2.2. Another challenge is the selection of training data which is expected to be representative and unique. This could also be addressed by the active learning. At each iteration, only uncertain data that the current model cannot predict well will be added to the training data [47], [56]. More discussions will be given in 2.2. Finally, MD details will be described in 2.3 and DFT settings will be summarized in 2.4.

2.1 Neural network models↩︎

The Gaussian moment neural network (GM-NN) [21], [22] developed by Kästner’s group was used to construct potentials for total energies and atomic forces. The total energy of a given system \(\hat{\text{E}}\) is calculated as a sum of atomic energies \(\epsilon_i\). \[\hat{\text{E}} = \sum_{i=1}^{\text{N}_\text{atoms}} \epsilon_i\] Those atomic energies are the outputs from GM-NN models which take the local atomic environments (learnable Gaussian moments in this model) as inputs. The prediction of atomic force \(\hat{\boldsymbol{F}}_i\) on an atom \(i\) is achieved by taking the partial derivative of the total energy with respect to the atomic position \(\boldsymbol{r}_i\) of atom \(i\). \[\hat{\boldsymbol{F}}_i = - \dfrac{\partial \hat{\text{E}}}{\partial \boldsymbol{r}_i}\] During the training, a combined loss function is used. \[\mathcal{L}_\text{E,F} = \sum_{n=1}^{N_\text{structures}} \biggl[ \lambda_\text{E} || \text{E}^\text{ref} - \hat{\text{E}} ||^2 + \frac{\lambda_\text{F}}{3\text{N}_\text{atoms}} \sum_{i=1}^{\text{N}_\text{atoms}} || \boldsymbol{F}^\text{ref}_i - \hat{\boldsymbol{F}}_i ||^2 \biggr]\] where the trade-off between energy and force is set to \(\lambda_\text{E} = 1\) and \(\lambda_\text{F} =\) 10 Å\(^2\). The GM-NN code [59] was used to train total energies and atomic forces.

The dipole moment was trained separately since the GM-NN code [59] cannot provide dipole moment predictions yet. We used the SchNet neural network [19] implemented in SchNetPack v1.0.0 [60] to model the dipole moment. The total electric dipole moment is computed as a sum of atomic charges weighted by their positions with respect to the center of mass. \[\boldsymbol{\hat{\mu}} = \sum_{i=1}^{\text{N}_\text{atoms}} \hat{q}_i (\boldsymbol{r}_i - \boldsymbol{r}_0)\] where \(\hat{q}_i\) is the charge of atom \(i\) predicted by a SchNet model and \(\boldsymbol{r}_0\) is the center of mass for a given structure. Note that atomic charges are not fixed for each individual atom, but depend on their atomic environments. Consequently, atomic charges are fluctuating in MD simulations. The loss function for dipole moment is defined as \[\mathcal{L}_\mu = \sum_{n=1}^{N_\text{structures}} \biggl[ \frac{1}{3} || \boldsymbol{\mu}^\text{ref} - \hat{\boldsymbol{\mu}} ||^2 \biggr]\]

2.2 Training data↩︎

The silicate clusters investigated in this work are taken from global minimum energy structures of pyroxene (MgSiO\(_3\))\(_\text{N}\) and olivine (Mg\(_2\)SiO\(_4\))\(_\text{N}\) with a range of cluster sizes (N=1-10) [11]. The name of those silicate clusters is abbreviated as P1, P2, ... and P10 for pyroxene (MgSiO\(_3\))\(_\text{N}\) (N=1-10). Similarly, O1, O2, ... and O10 stand for olivine (Mg\(_2\)SiO\(_4\))\(_\text{N}\) (N=1-10). Their structures are shown in Fig. S1. During the construction of ML interatomic potentials, the generation of training data is an important factor next to choosing appropriate ML models. The ideal training data should be representative and not redundant in the configuration space. We have used an active learning strategy to generate our training data [47], [56], [61]. The active learning has been widely used to generate training data while retaining small amounts of training data [62]–[68].

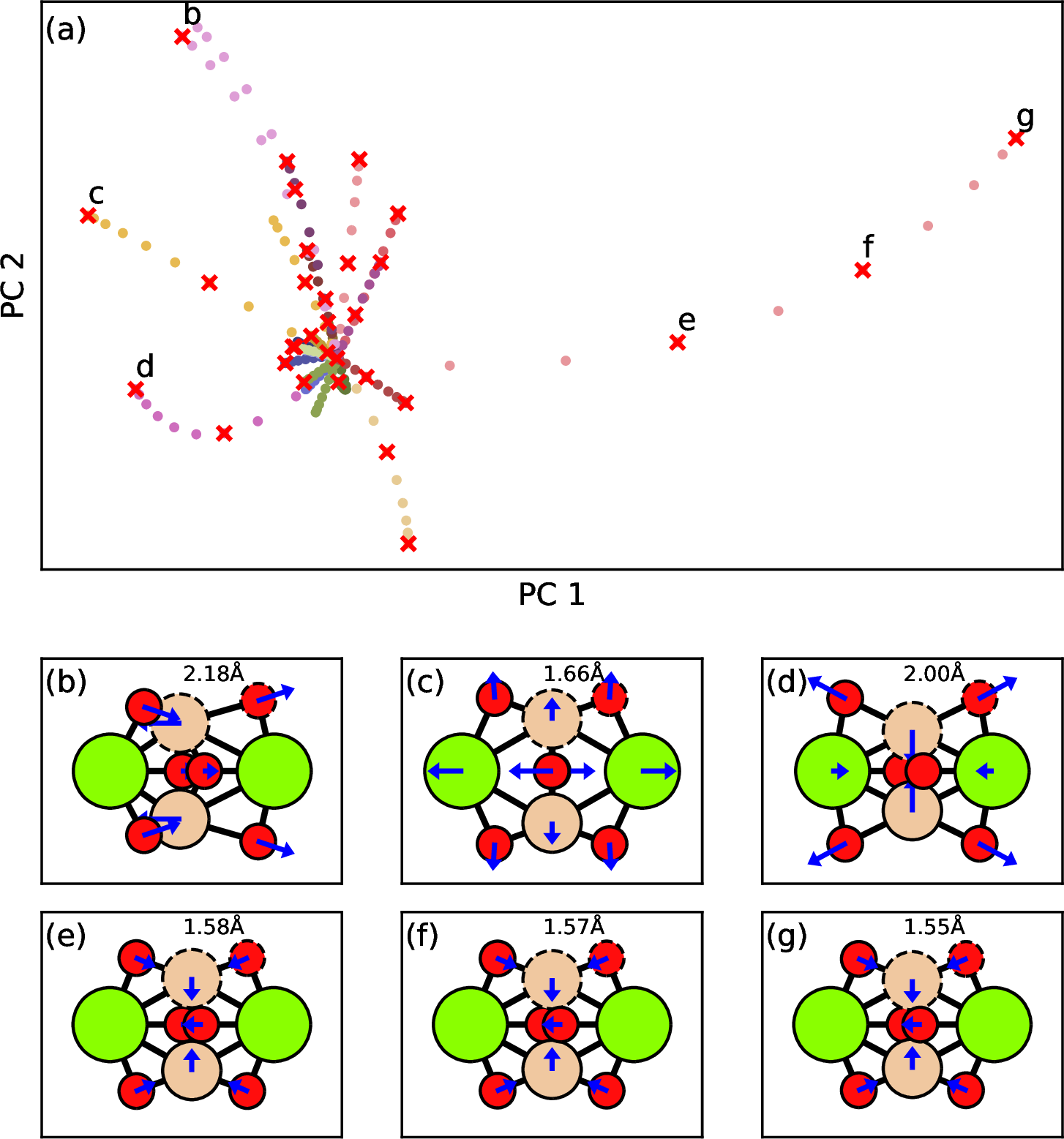

The first step is to make an initial set of training data. Initial training data are generated by normal mode sampling [18], [69], [70] with a modification. Each normal mode represents a unique way to displace a structure from its local minimum as seen in 1. All displaced structures are further filtered by farthest point sampling (FPS) [71], [72] in order to keep the size of training data small. For each silicate cluster, one local minimum structure is selected along with \(3\times \text{N}_\text{atoms}\) structures from our modified normal mode sampling. In the end, 2000 structures collected from all silicate systems are used to train an initial ML model. The whole training data set is split into a training subset and a validation subset with a ratio of 9:1 during initial and subsequent trainings.

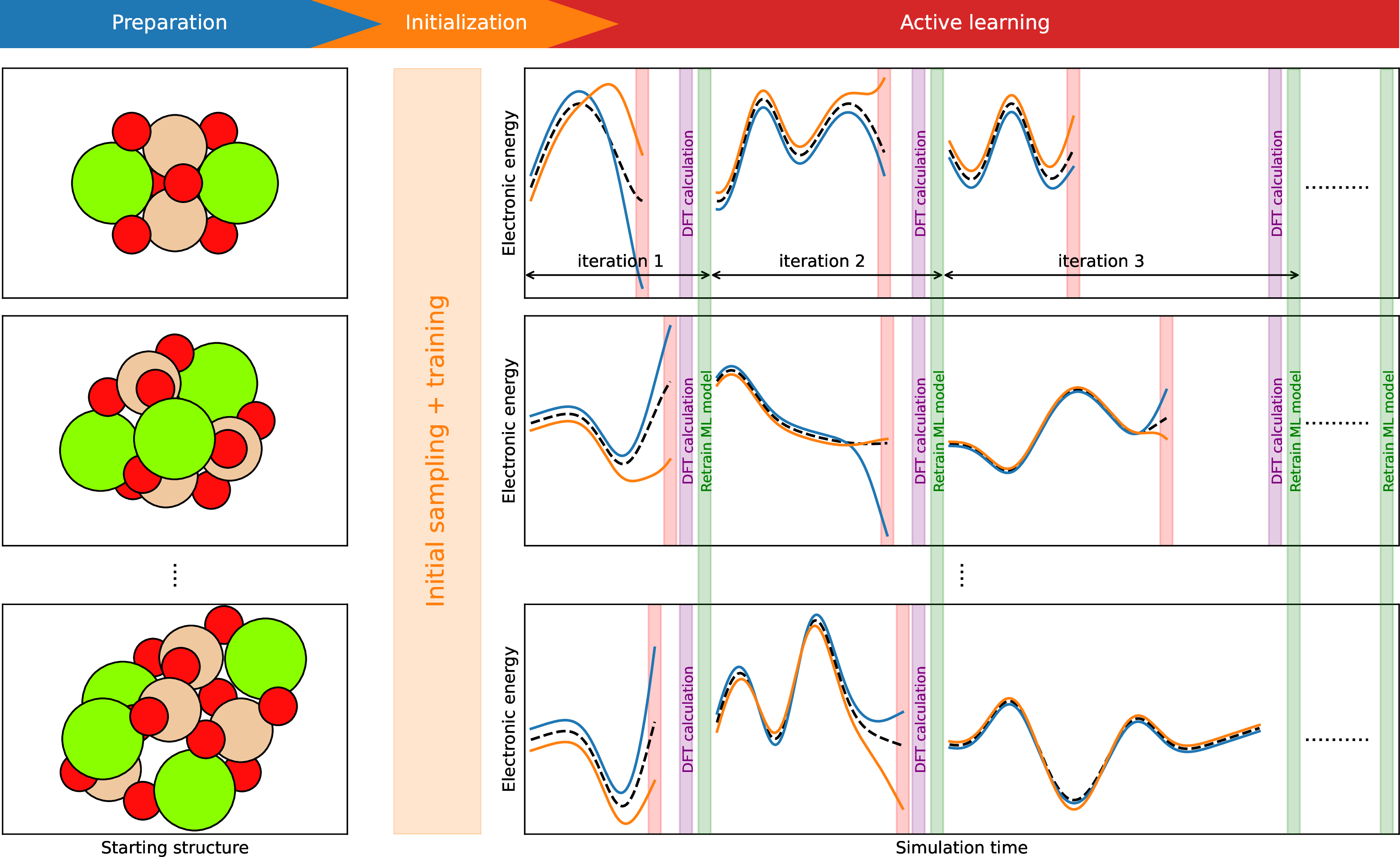

Later on, the ML model is iteratively improved by adding training data from the active learning following the scheme in 2. The sampling is driven by MD using the ML model and is paused upon the appearance of uncertain data that the current ML model cannot describe well. A straightforward approach is to run a DFT calculation at each MD step and compare with the model prediction. Uncertain data can be easily located with this method which is, however, computationally expensive. We use the query by committee method [62], [64] which is a common approach to get an uncertain estimate without heavy DFT calculations. It uses an ensemble of ML models and finds uncertain data where different ML models disagree with each other regarding the energy prediction [64], [73]. The uncertain data is recomputed at the DFT level and added to the training data. A new ML model is trained after the collection of uncertain data. The active learning then moves to next iteration in which MD sampling is performed again. The MD trajectory from previous iteration is used as the starting geometry for the following iteration. For each new iteration, the distribution of atomic velocities is also taken from the previous iteration, and the overall translation and rotation are removed. The target simulation temperature remains unchanged from one iteration to another.

In order to further speed up the sampling, multiple systems are sampled in parallel at different temperatures and stoichiometries [61]. When samplings are finished, DFT calculations on uncertain data can be parallelized as well. Therefore, multiple structures (from one to twenty) are added to the training data in one iteration. Only one job of ML training in one iteration is conducted using the updated training data.

In summary, our protocol for collecting training data was composed of iterations with these steps as shown in 2:

Parallelize over structures

MD propagation until the appearance of an uncertain data or reaching the maximum simulation time

Store uncertain data if appeared

Wait until all MD runs have ended

Perform DFT calculations on uncertain data in a massively parallel manner

Train the ML model

The collection of training data will stop when the accuracy of the ML model cannot be improved by adding more training data. In practice, we assess the difference between DFT and ML in terms of harmonic frequencies of all investigated silicate clusters. We stop collecting training data when this difference cannot be decreased within a number of iterations. Finally, 1458 instances of uncertain data are collected and the final training data consists of 3458 structures.

Figure 1: Farthest point sampling on displaced structures along normal modes. (a) Principal component analysis (PCA) on the global features of various P2 structures. The filled circles in the same color are the structures along one specific mode and different color indicates different modes. The red crosses are the selected points from farthest point sampling. (b) - (g) Snapshots of the selected structures. The normal vectors are plotted in blue arrows. One Si-O bond length is labeled to quantify the difference between those six structures.

Figure 2: Scheme of the active learning in a parallel manner. In each sampling panel, blue and orange lines are energy predictions from two independent ML models trained on the same data. Dashed black lines are the target DFT potential. Each vertical red bar indicates the appearance of an uncertain data.

2.3 MD simulations↩︎

For all MD simulations, a time step of 0.5 fs was used. During the active learning, the temperature was controlled by a Berendsen thermostat [74] with a time constant of 100 fs implemented in ASE 3.21.1 [75]. The maximum simulation time was set to 5 ps during the active learning. During the production runs for the IR spectra, the Nosé-Hoover chain thermostat [76] in SchNetPack 1.0.0 [60] was used to keep a constant temperature. The time constant was 100 fs and a chain length of 3 was used. The total simulation time was set to 40 ps. The first 5 ps was discarded when extracting IR spectra from the autocorrelation function of the dipole time derivative [77] according to 1 . \[\label{eq:autocorr} I_{IR} \propto \int _ {-\infty} ^ {+\infty} \langle \dot{\mu}(\tau) \dot{\mu}(\tau+t) \rangle_{\tau} e^{-i \omega t} dt\tag{1}\] All autocorrelation functions were computed using the Wiener-Khinchin theorem [78]. In order to obtain IR spectra with high quality, a Hann window function [79] and zero-padding were applied to the autocorrelation functions before the Fourier transform. A maximum correlation depth of 2048 fs was used. All processing of IR spectra was done with SchNetPack 1.0.0 [60].

2.4 DFT simulations↩︎

All DFT calculations were performed with ORCA 5.0.0 [80]. A hybrid functional PBE0 [81] was used due to its excellent accuracy in modeling structural and spectroscopic properties of silicate clusters [12]. A double-zeta basis set def2-SVP [82] and an auxiliary basis def2/J [83] were used. All hybrid DFT calculations were accelerated by the RIJCOSX approximation [84]. Here, the RI-J approximation is the resolution of identity (RI) approximation for Coulomb integrals (J) [85]. The "chain of spheres" COSX approximation is used to speed up HF exchange integrals [84]. For all DFT calculations, the SCF convergence criteria were set to tight. Default DFT and COSX grids "DEFGRID2" were used, and normally show small errors for energies, geometries and frequencies compared with a larger grid setting. Examples of ORCA inputs for single point calculations, structure optimization and harmonic calculations are given in the supplementary material.

3 Results↩︎

3.1 Validation of the ML model↩︎

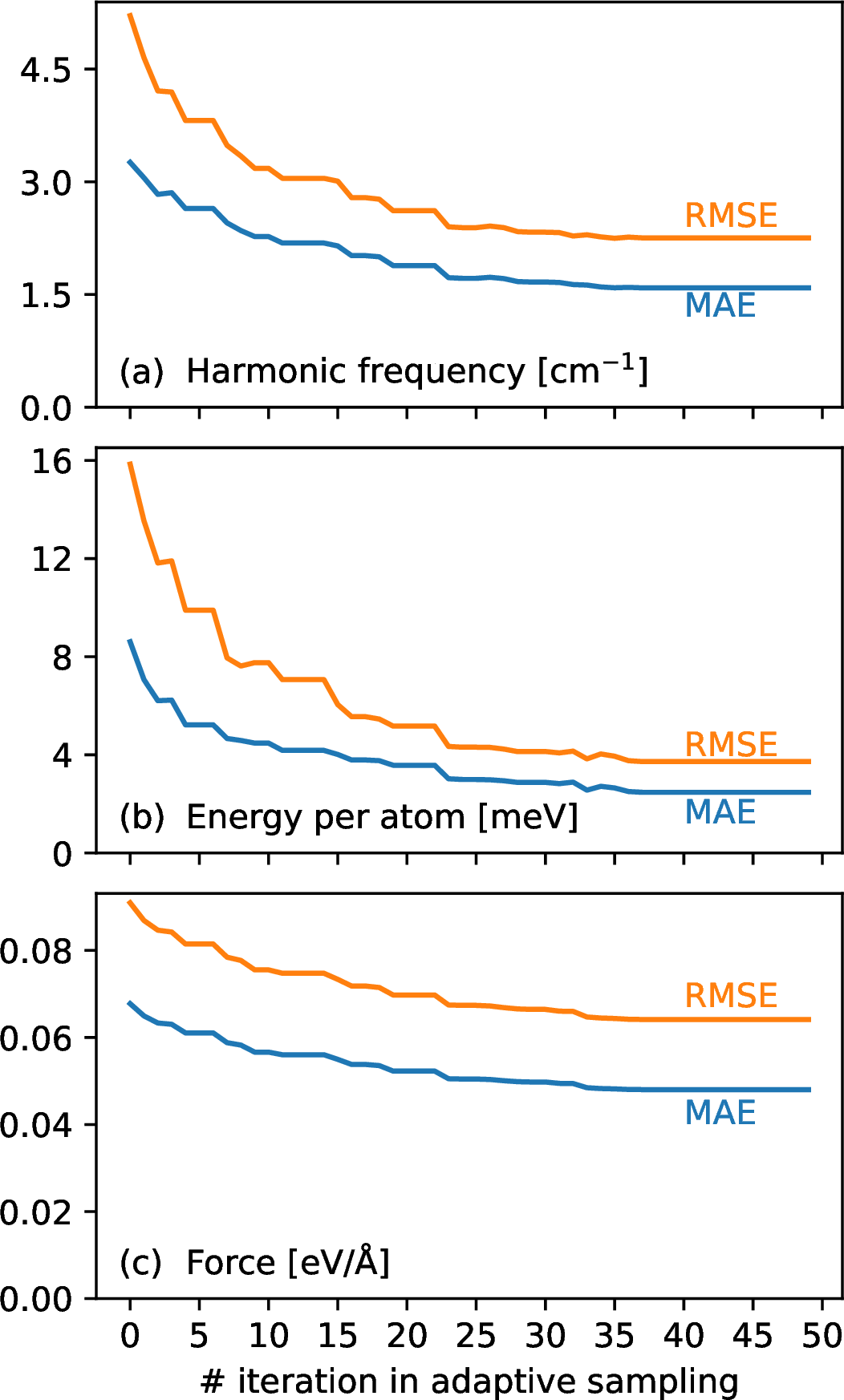

To assess the quality of the trained ML model during the active learning, the model was stored at the end of each iteration and tested against harmonic frequencies of all investigated silicate clusters. Harmonic frequencies are chosen since the reference frequencies from DFT calculations are already available before the active learning and it is computationally cheap to compute harmonic frequencies with the ML potential. The mean absolute error (MAE) and root mean squared errors (RMSE) are used to quantify the difference between the results from DFT and ML. 3 (a) shows MAE/RMSE of harmonic frequency at each sampling iteration. Before the active learning, when the training data are only sampled from normal modes, the MAE of harmonic frequencies is 3.2 cm\(^{-1}\). This error decreases with the use of active learning, indicating the improvement of the ML model when more uncertain data are included in the training. After about 40 iterations, the MAE becomes stagnated and the ML model cannot be further improved by adding more data according to our sampling strategy. Therefore, the active learning is stopped with a 1.6/2.2 cm\(^{-1}\) MAE/RMSE of harmonic frequency. We also need to see if the final ML model can be reliably used in MD simulations. To assess this, another set of test data other than harmonic frequencies is constructed based on the MD simulations using the final ML model. The MD simulations are performed for 100 ps for each silicate cluster at three different temperatures (400K, 800K and 1200K). No obscure configurations (e.g. overlapping atoms and atoms being far from each other) were observed in the trajectory of any above-mentioned MD run. DFT calculations at the PBE0/def2-SVP level are performed on 100 randomly selected structures from each MD run. Finally, a test data set consisting of 6000 structures was collected. To assess the degree of improvement in terms of energy and force predictions, energies and forces are recomputed on the test data using the ML model at each iteration. Their corresponding MAEs and RMSEs are shown in 3 (b) and 3 (c), and decrease as the iteration proceeds. The final ML model gives an MAE/RMSE of 2.3/3.5 meV for energy per atom and 0.043/0.060 eV/Å for atomic force, based on the test data set. The accuracy of the final ML model on the training and validation data set are included in 1.

A comparison between the ML model and classical force fields is given in Fig. S2 in terms of energy and force predictions. For the energy comparison, we use relative energies with respect to the energy of global minimum structure, divided by the number of atoms. The force field by Escatllar et al [11] is chosen since it is parameterized with respect to relative energies and cluster geometries. Our ML model shows a one order of magnitude improvement for energy prediction over the Escatllar force field [11]. For the force prediction, we choose the force field by Walker et al [16], as it is designed to study oxygen diffusion in olivine and more suitable for force prediction than the Escatllar force field [11]. Our ML model shows a two order of magnitude improvement for force prediction over the Walker force field [16].

Figure 3: Accuracy of ML models at each iteration of active learning, in terms of mean absolute errors (MAEs) and root mean squared errors (RMSEs) of (a) harmonic frequency, (b) energy per atom, and (c) atomic force. Harmonic frequency is based on 20 silicate clusters (P1-P10 and O1-O10). Energy and forces are based on the test data. Note that the test data are not included in the training and are used to assess the accuracy of ML models. The generation of test data is described in details in 3.1.

| Property | Unit | Model | Training set | Validation set | Test set | |||

| MAE | RMSE | MAE | RMSE | MAE | RMSE | |||

| Energy per atom | meV | GM-NN | 1.6 | 3.2 | 1.7 | 3.0 | 2.3 | 3.5 |

| Atomic forces | ev/Å | GM-NN | 0.037 | 0.050 | 0.045 | 0.077 | 0.043 | 0.060 |

| Dipole moment | Debye | SchNet | 0.049 | 0.064 | 0.090 | 0.128 | 0.074 | 0.102 |

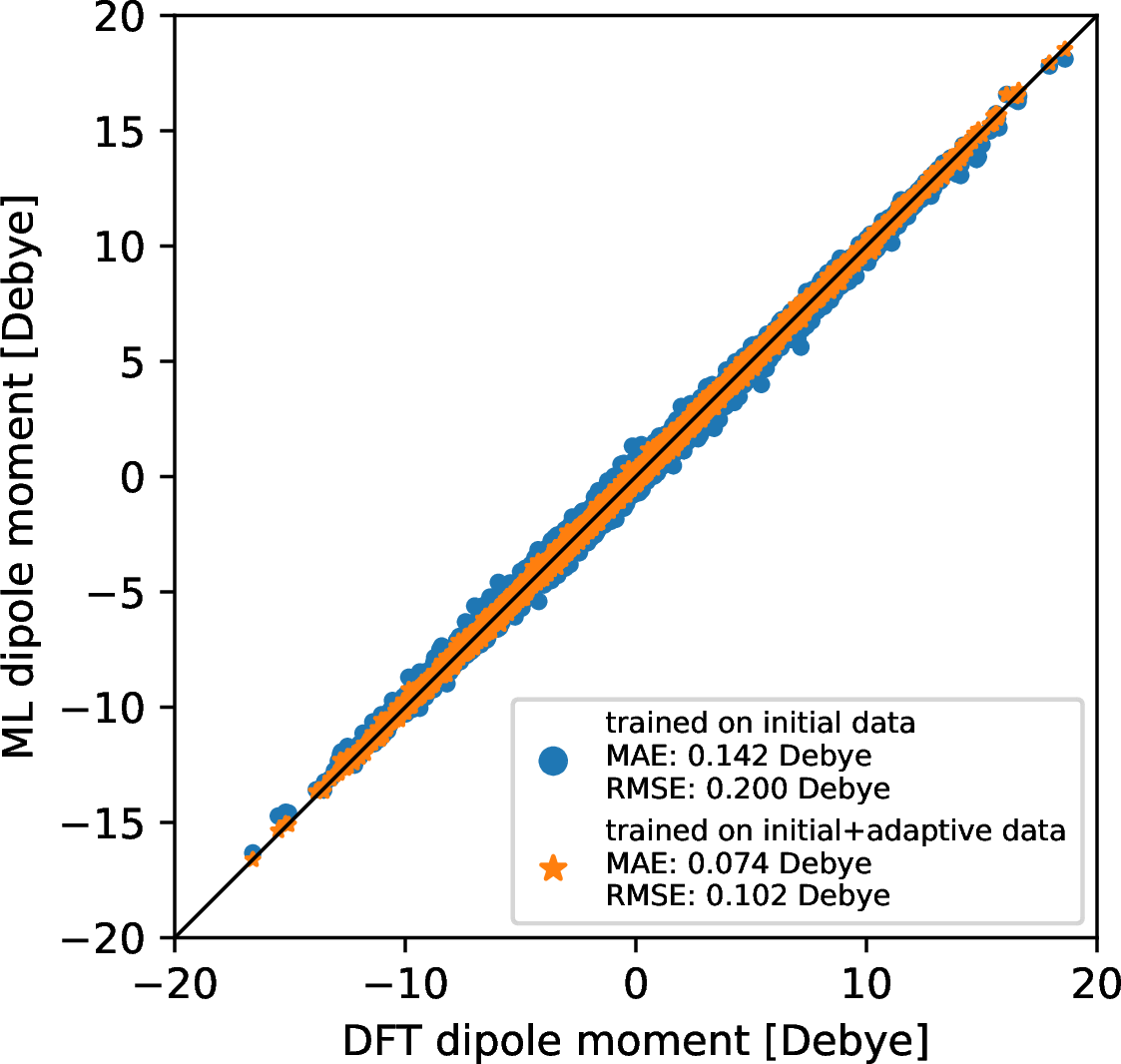

In order to compute infrared intensity, the prediction of dipole moment is needed. We have trained SchNet models only for dipole moment based on two set of training data, namely the initial dataset and the final dataset after the active learning is finished. 4 compares the reference DFT dipole moment against the predicted dipole moment for the test data when different training data are used. The ML model shows good accuracy in predicting dipole moment with MAE of 0.142 Debye and RMSE of 0.200 Debye when only structures sampled from normal modes are included in the training. The ML model from the active learning results in a better accuracy in predicting dipole moment and the MAE/RMSE is only 0.074/0.102 Debye. Adding training data by the active learning indeed improve the ML model’s accuracy regarding dipole moment prediction.

Figure 4: Accuracy of ML models in predicting dipole moment for the test data when different training data are used. Note that the test data are not included in the training and are used to assess the accuracy of ML models. The generation of test data is described in details in 3.1.

3.2 Spectroscopic behavior of the ML model↩︎

Our ML models have reproduced the potential energy surface (PES) and dipole surface of DFT to a reasonable degree of accuracy. The next step is to benchmark the spectroscopic behavior of ML models against DFT results. IR spectra containing both band positions and infrared intensities at the harmonic level are evaluated first. Infrared intensities, which are not examined in 3.1 yet, are calculated from dipole derivatives along normal modes. The DFT-calculated band positions and intensities are reproduced almost exactly by ML models for all investigated silicate clusters, see Fig. S3. MAEs of harmonic frequencies for most clusters are below 2.5 cm\(^{-1}\). P1 and O1 show slightly higher MAEs of 4.2 cm\(^{-1}\) and 3.6 cm\(^{-1}\). For harmonic intensities, the averaged MAE for all clusters is 26.8 km/mol. P2 shows the lowest MAE of 2.8 km/mol and P10 shows the highest MAE of 49.4 km/mol.

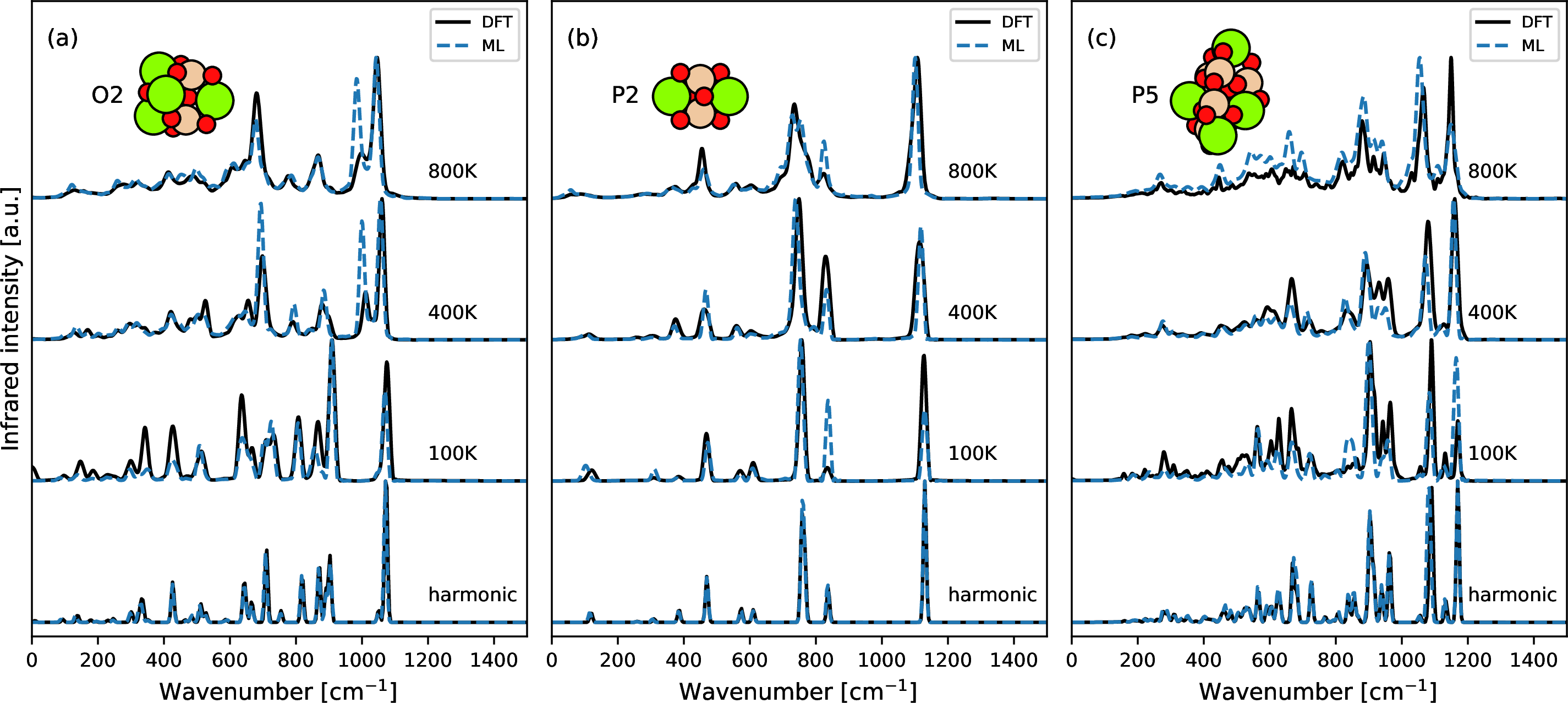

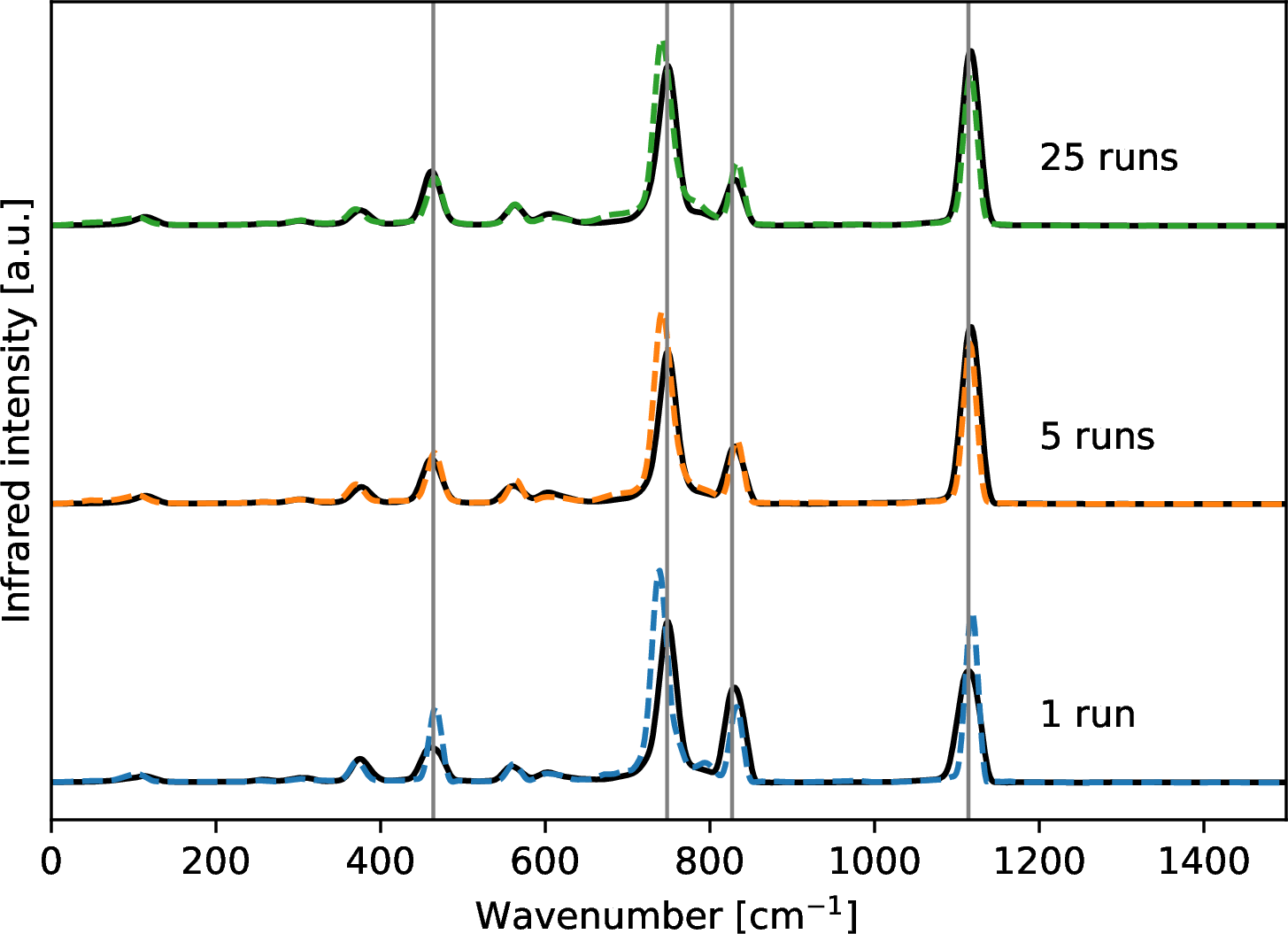

Selected harmonic spectra are given in the bottom panel of 5 along with MD-based spectra at three selected temperatures (100K, 400K and 800K). For MD-based spectra, band positions are well reproduced by ML models while intensities have larger variations than band positions. The variations in intensities could arise from differences in velocity initializations in the MD runs or inaccuracy of the ML models. In order to check the influence of variable velocity initializations, we performed twenty five independent DFT-MD runs for P2 at 400K. The averaged IR spectra over independent DFT-MD runs are compared with those of ML-MD runs in 6. When only one MD run is used, intensity variations are observed for peaks with frequencies around 464, 748, 827, and 1114 cm\(^{-1}\). The variation becomes smaller for bands at 464, 827 and 1114 cm\(^{-1}\) when the number of independent MD runs increased from one to five, and barely changes when twenty five independent runs are used. The disagreement between DFT and ML results of band position and intensity at 748 cm\(^{-1}\) (Si-O stretching modes between the central O atoms and Si atoms) is not sensitive to the number of independent MD runs. This disagreement is therefore likely due to model fitting. Performing independent DFT-MD runs for each silicate size and temperature is computationally expensive. Therefore only one DFT-MD run and one ML-MD run are compared in 5 for each system. In Fig. S4, one DFT-MD run is compared against independent ML-MD runs. In general, DFT results are within the range of independent ML runs. We expect better agreement between DFT and ML when more independent runs are performed. Since it is computationally expensive to run many DFT-MD simulations, the resulting IR spectra are expected to be biased by the velocity initializations. However, the use of an ML potential allows for a fast evaluation of many independent MD runs and thus the generation of converged IR spectra.

Figure 5: Comparing IR spectra from DFT and the ML model. Both harmonic and MD-based spectra are given for selected silicate clusters (a) O2, (b) P2, (c) P5 at 100K, 400K and 800K.

Figure 6: MD-based IR spectra of P2 at 400K averaged over independent runs. DFT results are shown in solid black lines and ML results are shown in colored dashed lines. Number of independent DFT-MD and ML-MD runs is labeled on the right of each spectrum. Bands at 464, 748, 827, and 1114 cm\(^{-1}\) are highlighted by vertical gray lines.

3.3 Transferability of the ML model↩︎

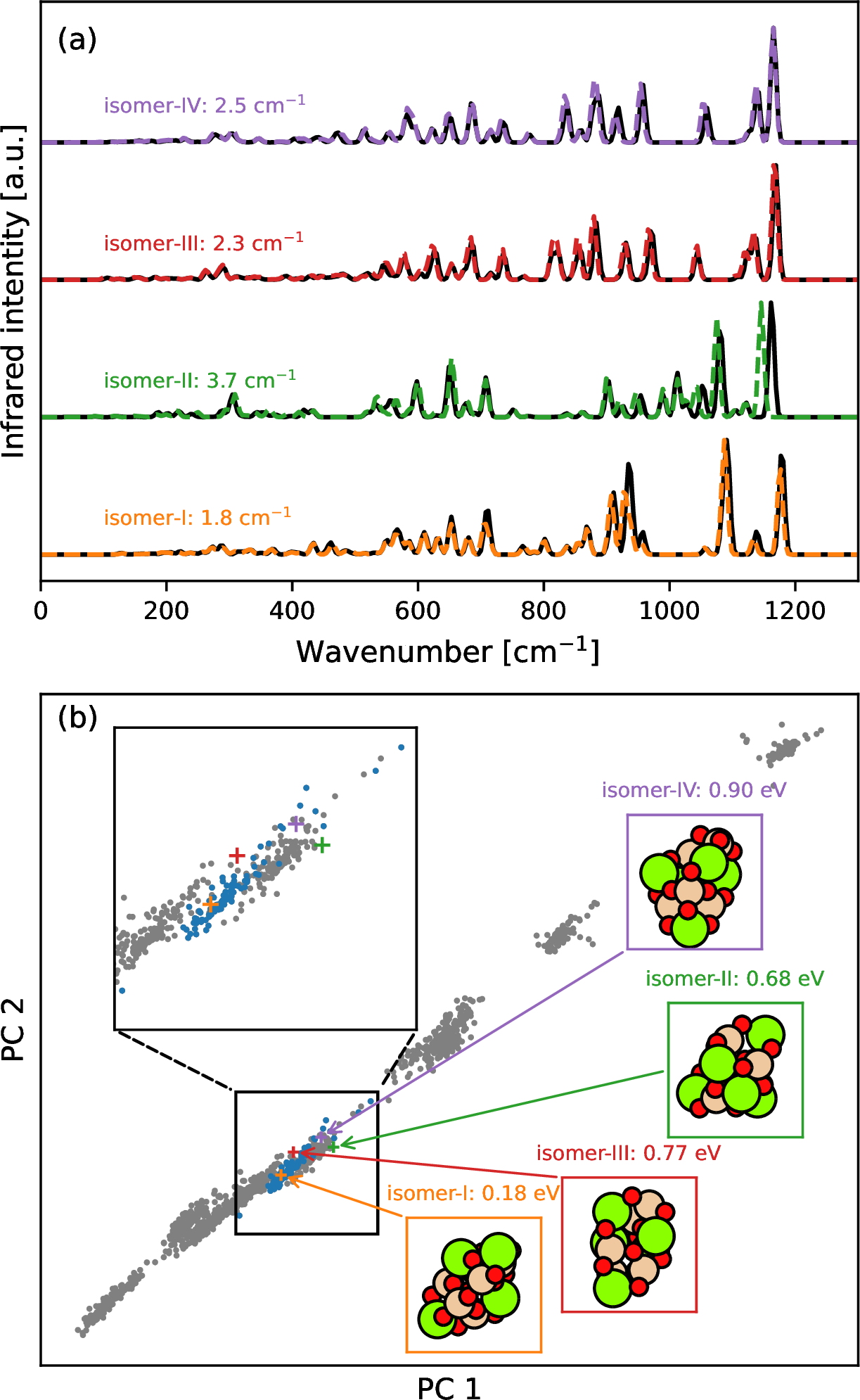

After the assessment of the ML model, we want to see whether the ML model is accurate and transferable for the structures that are different from the global minimum based training and test data. Examples of such structures are high-energy isomers that could be obtained during global optimization searches via the GOFEE (global optimization with first-principles energy expressions) method [35]. At first, P5 high-energy isomers are chosen to test the transferability of the ML model. Only harmonic spectra are used to verify the transferability of the ML model, since harmonic frequencies are sensitive to the quality of the PES and harmonic calculations at the DFT level are computationally much cheaper than DFT-MD simulations. In addition, harmonic frequencies are the main reservoir of vibrational transitions for a given nanosilicate cluster. The synthetic IR spectra of various nanosilicate clusters based on the harmonic approximation are already used to estimate the abundance of nanosilicate clusters in the diffuse ISM [86] and are valuable for understanding the property of interstellar dust. We compute harmonic IR spectra of these isomers with DFT (PBE0/def2-SVP) and the ML model and show their spectra comparisons in 7 (a). All isomers have MAEs of harmonic frequencies lower than 4 cm\(^{-1}\) and infrared intensities are well fitted. The good transferability of the ML model is expected when the target system is similar with training data in the configuration space even though they may look quite different in the Cartesian coordinate space. The configuration space for the nanosilicates investigated in this work has high dimensions and is hard to directly visualize. Therefore, principal component analysis (PCA) is used to reduce the dimension of the configuration space with each configuration represented by a global Smooth Overlap of Atomic Positions (SOAP) feature [87]. Among the four selected isomers, isomer-I has the lowest MAE of harmonic frequency since it lies closer to training data in the feature space than other isomers in 7 (b). Similarly, isomer-II shows the highest MAE of harmonic frequency because it has the lowest similarity to training data in the feature space. Later on, transferability analysis is also performed for other pyroxene (P3-P8) and olivine (O2-O7) isomers (see their structure snapshots in Fig. S6 and Fig. S7) which are not directly included in the training data. The results are shown in Fig. S5. The MAE of harmonic frequency is 2.1 cm\(^{-1}\) for pyroxene and 1.7 cm\(^{-1}\) for olivine. They indicate that the ML model tends to show good prediction accuracy on the test data which looks similar with training data in the feature space. We do not expect that our ML model will be reliable and accurate for bulk silicates and chemical processes involving dramatic changes in bonding types (e.g. oxygen diffusion, silicate growth and non-stoichiometric silicates). The transferability to larger cluster sizes will be examined in a separate study.

Figure 7: Transferability of the ML model on P5 isomers found via the GOFEE method [35]. (a) Harmonic IR spectra of selected P5 isomers with DFT results in black solid lines and ML results in colored dashed lines. MAEs of harmonic frequencies are labeled for each isomer. (b) Principal component analysis (PCA) on global Smooth Overlap of Atomic Positions (SOAP) features [87] of all pyroxene clusters. Gray circles are training data. Blue circles are P5 training data. Colored crosses are high-energy P5 isomers with their structures and relative energies to P5 global minimum attached.

3.4 Application of the ML model↩︎

As our motivation for developing a ML potential of nanosilicate clusters is to aid the interpretation of silicate band in infrared observations, we compute IR spectra of 20 silicate clusters (P1-P10 and O1-O10) via MD simulations with the ML model. 8 shows the sum of IR spectra for two types (pyroxene and olivine) of silicate clusters while assuming the distribution of cluster size is uniform. For both pyroxene and olivine clusters, the most intense IR peaks are in the 9-10 \(\mu\)m range. They both have a main peak with a slightly smaller wavelength than the signature 9.7 \(\mu\)m feature at low temperature. Our MD-based IR spectra at low temperature are in a good agreement with a previous IR study of pyroxene and olivine clusters under the harmonic approximation [11]. As temperature increases, this blueshifted peak broadens and becomes closer to 9.7 \(\mu\)m. In addition, the center position of IR peaks of olivine is closer to 9.7 \(\mu\)m than that of pyroxene. More systematic IR investigations of nanosilicate clusters and their astrophysical implications are planned in the future.

Figure 8: Sum of IR spectra of (a) pyroxene (P1-P10) (b) olivine (O1-O10) at 100K, 400K and 800K using the ML model, assuming equal weights of different cluster sizes. A vertical black line highlights the 9.7 \(\mu\)m silicate band.

4 Conclusions↩︎

We have demonstrated that active learning is an efficient method to generate training data for constructing ML interatomic potentials. After initial training, our ML potential is iteratively improved employing active learning, which can be further accelerated in a parallel manner. Our comparison of ML-based and DFT-based infrared spectra (5) reveals that our ML potential is highly accurate for infrared spectroscopic studies of nanosilicate clusters with both harmonic approximation and an MD-based approach. Our ML potential also exhibits certain transferability regarding harmonic frequencies and IR intensities for high-energy isomers of nanosilicate clusters that are not directly included in the training data, but have similar cluster sizes as the training data. Since the ML potential is computationally much cheaper than DFT, longer MD runs can be conducted and better statistics can be achieved. It remains an open question if our ML potential is still accurate and reliable for larger silicate cluster sizes that are not covered in this study. We will address this issue in a future study. Our work will allow for systemic investigations into how the IR spectra of silicate clusters depend on size, structure and temperature. Our work will be particularly beneficial for understanding the properties of silicate dust grains in space, especially in connection with the highly sensitive IR capabilities of the James Webb Space Telescope [88].

5 Supplementary Material↩︎

See the supplementary material for examples of ORCA input files, silicate structures that are used in this work and more validations of the ML model.

We thank Andreas Møller Slavensky for providing the structure files of high-energy silicate isomers. This work has been supported by the Danish National Research Foundation through the Center of Excellence “InterCat” (Grant agreement no.: DNRF150) and the VILLUM FONDEN (Investigator grant, Project No. 16562). S.T.B acknowledges support from the MICINN funded project grants PID2021-127957NB-I00 and TED2021-132550B-C21, project grant 2021SGR00354 funded by the Generalitat de Catalunya and through the María de Maeztu program for Spanish Structures of Excellence (CEX2021-001202-M).

6 Conflict of Interest↩︎

The authors have no conflicts to disclose.

7 DATA AVAILABILITY↩︎

The ML model developed in this work and source data for figures in this article are available in https://github.com/zyt0y/MLP-IR-nanosilicate-clusters. Other data that support the findings of this work are available within the article and its supplementary material.