Improved Multi-Scale Grid Rendering of Point Clouds for Radar Object Detection Networks

May 25, 2023

Abstract

Architectures that first convert point clouds to a grid representation and then apply convolutional neural networks achieve good performance for radar-based object detection. However, the transfer from irregular point cloud data to a dense grid structure is often associated with a loss of information, due to the discretization and aggregation of points. In this paper, we propose a novel architecture, multi-scale KPPillarsBEV, that aims to mitigate the negative effects of grid rendering. Specifically, we propose a novel grid rendering method, KPBEV, which leverages the descriptive power of kernel point convolutions to improve the encoding of local point cloud contexts during grid rendering. In addition, we propose a general multi-scale grid rendering formulation to incorporate multi-scale feature maps into convolutional backbones of detection networks with arbitrary grid rendering methods. We perform extensive experiments on the nuScenes dataset and evaluate the methods in terms of detection performance and computational complexity. The proposed multi-scale KPPillarsBEV architecture outperforms the baseline by 5.37% and the previous state of the art by 2.88% in Car AP4.0 (average precision for a matching threshold of 4 meters) on the nuScenes validation set. Moreover, the proposed single-scale KPBEV grid rendering improves the Car AP4.0 by 2.90% over the baseline while maintaining the same inference speed.

1 Introduction

Accurate environmental perception, e.g., detection of road users, is crucial for safe and reliable operation of autonomous driving platforms. In this context, radar sensors play an increasingly important role in addition to camera and lidar, as they are cost effective and highly robust against adverse weather conditions. Furthermore, radar perception systems are improving at a rapid pace and promise great potential w.r.t. upcoming high-resolution radar sensors that provide much denser point clouds.

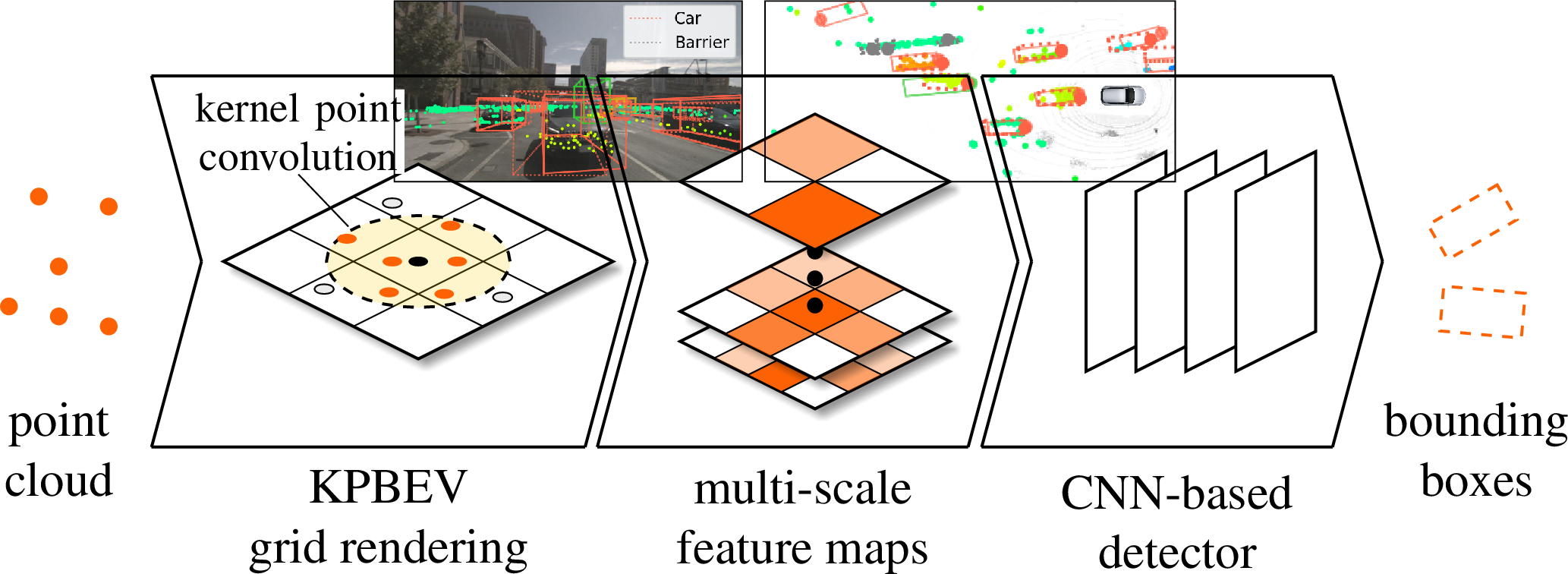

Figure 1: Object detectors based on CNNs render point clouds into a discrete grid representation, which can lead to information loss. We propose the novel multi-scale KPPillarsBEV architecture and improve grid rendering with two key methods. First, we propose KPBEV, a new grid rendering module, that uses kernel point convolutions to learn more expressive features from the radar point cloud. Furthermore, we incorporate scale-aware features into grid-based detectors with a general multi-scale grid rendering formulation.

With regard to object detection, previous work in lidar [@Chen2017; @Zhou2019; @Yang2019; @Lang2019] and radar [@Xu2021; @Scheiner2021; @Ulrich2022] domains has shown that grid-based architectures outperform methods that operate directly on the point cloud. Typically, such grid-based architectures first project point clouds into a 2D bird’s-eye view (BEV) or 3D voxel representation and then apply convolutional neural networks (CNNs). In such architectures, the transition from continuous point cloud to discrete grid representation is an essential step. The grid resolution has to be parametrized carefully, as it has a strong influence on both detection performance and computational complexity. A high grid resolution allows fine-grained features to be learned from the input points. On the other hand, it can inhibit the propagation of information over larger spatial extents and increases computational complexity. Specifically, the complexity scales quadratically when increasing the resolution of a 2D grid. In contrast, a low grid resolution improves inference speed and reduces memory requirements of the model. However, it can lead to information loss during grid rendering, as more points fall into the same grid cell, which makes cell-wise feature aggregation more difficult.
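To make the quadratic scaling concrete, the following minimal sketch counts the cells of a square BEV grid for several cell sizes. The helper name `num_cells` and the chosen cell sizes are illustrative assumptions; the 120 m extent corresponds to the detection range used in the experiments.

```python
def num_cells(extent_m: float, cell_size_m: float) -> int:
    """Number of cells in a square 2D grid covering extent_m x extent_m."""
    cells_per_axis = round(extent_m / cell_size_m)
    return cells_per_axis * cells_per_axis


# Halving the cell size doubles the resolution per axis and quadruples the cell count.
cell_counts = {size: num_cells(120.0, size) for size in (1.0, 0.5, 0.25)}
for size, count in cell_counts.items():
    print(f"cell size {size} m -> {count} cells")
```

Each halving of the cell size multiplies the number of cells that the convolutional backbone has to process by four.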

In grid-based architectures, information exchange across multiple cells is typically performed by convolutional layers after discretizing the point cloud to a grid. However, finer geometric details in the point cloud may already be lost at this stage. In [@Ulrich2022], this problem is addressed with hybrid models that combine grid-based methods with point-based preprocessing consisting of a graph neural network (GNN) [@Shi2020] or kernel point convolutions (KPConvs) [@Thomas2019]. Still, information is lost during grid rendering which is detrimental to object detection performance. To address this issue, we propose KPBEV, a novel grid rendering module that aims to improve information flow between points within each cell and across multiple cells by directly integrating point convolutions into grid rendering.

Furthermore, traffic participants and other objects of interest can have very different extents, e.g., trucks in comparison to pedestrians. This challenge is commonly addressed by using detection backbones that down- [@He2016] and upsample spatial features [@Lin2017]. In the context of lidar object detection, there are different multi-scale grid rendering approaches [@Ye2020; @Song2020a; @Kuang2020] that directly process the point cloud into feature maps of different spatial scales. In this paper, we propose a general formulation for multi-scale grid rendering which is agnostic to the actual grid rendering method. We also extensively evaluate the benefit of multi-scale grid rendering compared to single-scale with different architectures and grid rendering methods.

Finally, we propose a novel architecture, multi-scale KPPillarsBEV, that brings together our novel KPBEV grid rendering and multi-scale grid rendering formulation with point-based preprocessing [@Ulrich2022]. KPPillarsBEV outperforms the current state-of-the-art radar object detection models. In addition, variations of this architecture offer outstanding tradeoffs between detection performance and computational complexity.

To summarize, the contributions of this work are the following:

We propose KPBEV, a grid-rendering module that leverages KPConvs to improve the information exchange between input points and mitigate discretization effects.

We propose a novel multi-scale grid rendering formulation to incorporate multi-scale feature maps rendered directly from the point cloud into detection networks.

We propose a novel multi-scale KPPillarsBEV architecture that brings together KPBEV, multi-scale rendering and point-based preprocessing, and that outperforms the state of the art for radar object detection.

We evaluate detection performance and computational complexity of this architecture and its variants on nuScenes [@Caesar2020]. We find that these variations provide outstanding tradeoffs between performance and complexity compared to the state of the art.

2 Related Work

2.1 Radar Object Detection

Data-driven methods for radar-based object detection can be divided into two categories: spectrum-based and point-cloud-based. Spectrum-based object detection networks use dense representations of the spectral radar data that can include range, radial velocity (Doppler), azimuth and elevation dimensions. For this purpose, CNNs are applied to different 2D projections of the radar tensor, such as range-Doppler [@Brodeski2019; @Rebut2022] and range-azimuth maps [@Lim2019; @Wang2021a] or combinations thereof [@Major2019]. In GTRNet [@Meyer2021] and T-RODNet [@Jiang2023], convolutional feature extractors are combined with graph- and attention-based methods, respectively. On the one hand, using raw spectral data avoids loss of information due to downstream steps in the radar signal processing chain, e.g., constant false alarm rate detectors. On the other hand, spectrum-based approaches are computationally more complex, more memory-intensive and more sensitive to the sensor configuration, which can impede the generalization of neural-network-based methods.

Thus, a second research direction focuses on the more abstract and sensor-independent point cloud representation of radar data. This can be further subdivided into point- and grid-based methods. Building on the pioneering architectures of PointNet [@Qi2017a] and PointNet++ [@Qi2017], point-based models focus on directly aggregating information from point clouds, which are often irregular and sparse. In the radar domain, such approaches were applied to classification [@Ulrich2021], semantic segmentation [@Schumann2018] and object detection [@Danzer2019; @Bansal2020; @Scheiner2021]. Recent work also investigated more complex point-wise feature extractors such as graph and kernel point convolutions [@Svenningsson2021; @Nobis2021; @Ulrich2022] or transformers [@Bai2021; @Zeller2023]. In contrast, grid-based methods first project the point cloud into a regular grid in order to leverage the capability of CNNs to extract spatial features. To this end, [@Dreher2020; @Scheiner2021] use variations of the YOLO architecture [@Redmon2016] or feature pyramid networks [@Meyer2019a; @Lin2017] to detect objects in BEV projections of the radar point cloud. In [@Scheiner2021; @Xu2021; @Tan2022; @Palffy2022], the PointPillars [@Lang2019] feature encoder is used to learn a more abstract grid representation of the point cloud. [@Ulrich2022] proposes a hybrid architecture, combining grid-based methods with point-based preprocessing to learn more expressive features from point clouds and to improve the detection performance.

2.2 Grid Rendering of Point Clouds

Converting irregular and sparse point clouds into regular and dense grid representations is a common task for 3D object detection models that leverage CNNs for feature extraction. Methods for such grid rendering of point clouds can be distinguished by the resulting grid representation and the type of encoding used to obtain cell-wise features.

MV3D [@Chen2017] and PIXOR [@Yang2019] utilize handcrafted features such as intensity, density and height maps to obtain a BEV representation from point clouds that can be processed with 2D convolutions. In contrast, VoxelNet [@Zhou2017] and PointPillars [@Lang2019] rely on learnable feature extractors that apply simplified PointNets [@Qi2017a] to all points within the same grid cell. Building on this approach, SECOND [@Yan2018] and PillarNet [@Shi2022] employ hierarchical encoders that successively downsample the initial feature map (along one or multiple spatial dimensions) with sparse convolutions before feeding it into a convolutional detection backbone.

Besides the previously mentioned methods which render the point cloud into a feature map at a single scale, several methods generate feature maps at different spatial scales in parallel. For instance, attentive voxel encoding layers [@Ye2020] or a PointPillars variation [@Song2020a] are applied for different grid resolutions and the resulting grids are fused at a common scale. Voxel-FPN [@Kuang2020] extends this by additionally forwarding the individual feature maps directly to the detection backbone.

3 Method

3.1 Overview

In this paper, we aim to improve information flow from irregular point cloud data to image-like, dense feature maps in grid-based object detection networks. To this end, we propose KPBEV, a module that encodes point features into a grid using kernel point convolutions (KPConvs) [@Thomas2019]. In addition, we propose a general multi-scale rendering formulation to encode the point cloud into grids with different spatial scales. Finally, we propose a novel architecture, multi-scale KPPillarsBEV, that brings together KPBEV, multi-scale rendering and point-based preprocessing.

3.2 KPBEV

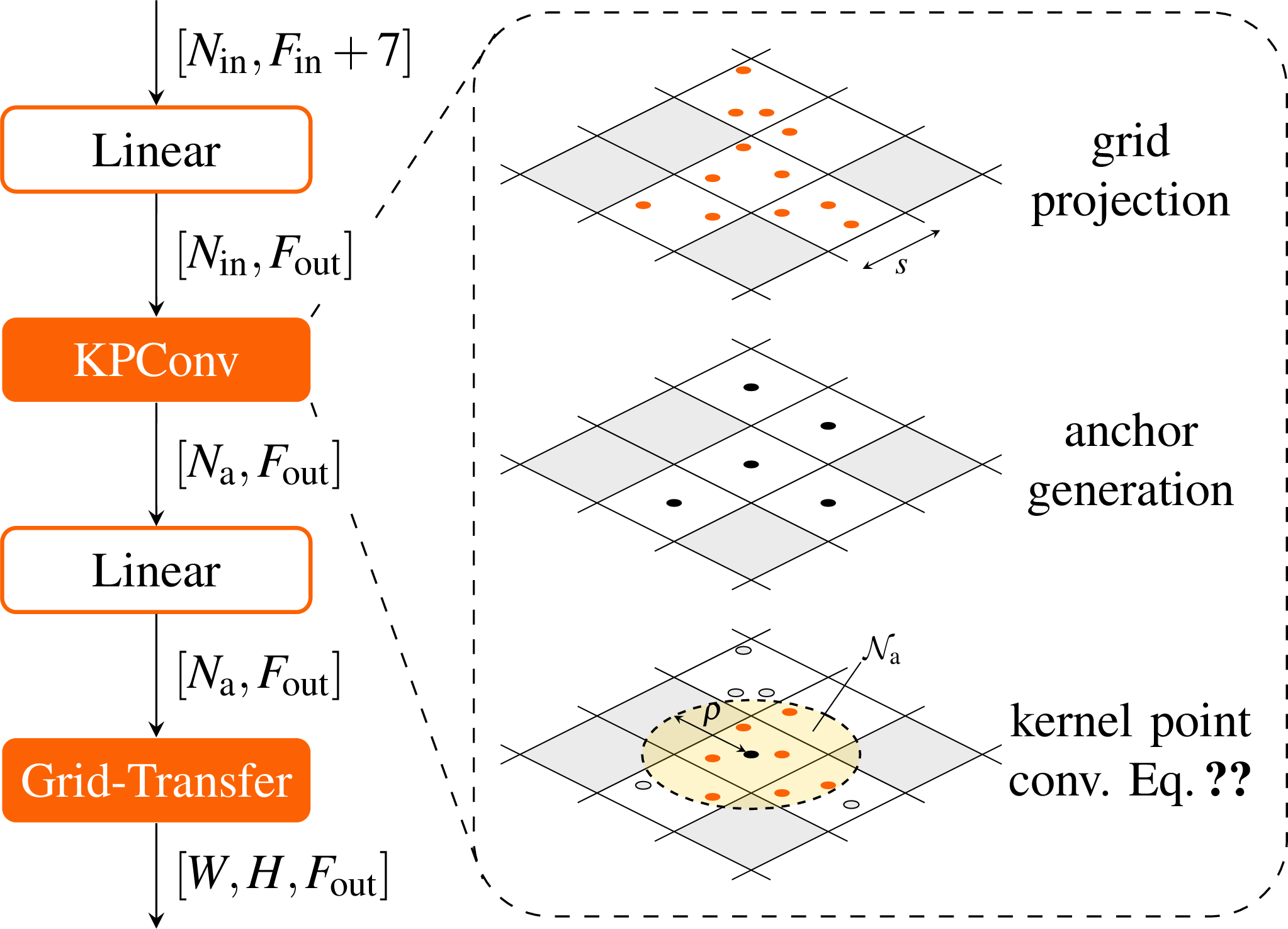

Figure 2: KPBEV extracts features at each anchor point, i.e., the centers of non-empty grid cells, using rigid KPConvs. Features are transformed by a linear layer before and after this step. Finally, the features are transferred to the grid leveraging the one-to-one correspondence between anchor points and grid cells. Note that batch normalization and ReLU activation are applied after each layer.

In previous work [@Lang2019; @Zhou2017], cell-wise features are obtained by aggregating over points within the same cell using a pooling function. Local neighborhood features across multiple cells are then encoded by applying convolutions. However, at this stage, fine-grained details of the input point cloud may already be lost due to discretization and aggregation.

Thus, we propose a novel grid-rendering module, KPBEV, that aims to improve information flow between points in order to learn more expressive features of local neighborhoods. For this purpose, it exploits both the descriptive power of KPConvs and the flexible parametrization of the receptive field for cell-wise feature aggregation. For each non-empty grid cell (anchor point), we aggregate information from points in its neighborhood using a KPConv.

In previous approaches, a point is usually assigned to a single cell, whereas KPBEV can use the information from a point in multiple cells. In other words, KPBEV can include points from adjacent cells which enables it to better capture local context. Moreover, the anchor-wise aggregated features can be directly transferred to the grid, as there is a one-to-one mapping between each anchor point and each non-empty grid cell.

The structure of the KPBEV module is depicted in Fig. 2. The input is a point cloud \(\mathcal{P}_\textrm{in}=\set{x_1,\ldots,x_{N_\textrm{in}}}\) of \(N_\textrm{in}\) points \(x_i\in \mathbb{R}^2\) and their corresponding features \(f_i\in\mathbb{R}^{F_\textrm{in}}\) in \(\mathcal{F}_\textrm{in}=\set{f_1,\ldots,f_{N_\textrm{in}}}\). First, the input points are projected into a grid of width \(W\), height \(H\) and spatial scale \(s\). Based on this grid projection, a new set of \(N_\textrm{a}\) points is created that contains a point at the center of each non-empty grid cell. These points serve as anchors for encoding cell-wise contexts from neighboring input points \(\mathcal{N}_\textrm{a} = \set{x_i \in \mathcal{P}_\textrm{in}\;|\; \left\lVert x_i-x_a\right\rVert \leq \rho}\) within a specified radius \(\rho\).
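The anchor creation and neighborhood search described above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the implementation used in this work: the function names `make_anchors` and `radius_neighbors`, the grid origin `grid_min` and the brute-force distance computation are all hypothetical choices.

```python
import numpy as np


def make_anchors(points, grid_min, cell_size):
    """Return anchors (centers of non-empty cells), their integer cell indices,
    and the anchor index of every input point."""
    cell_idx = np.floor((points - grid_min) / cell_size).astype(np.int64)
    cells, point_to_anchor = np.unique(cell_idx, axis=0, return_inverse=True)
    anchors = grid_min + (cells + 0.5) * cell_size
    return anchors, cells, point_to_anchor.reshape(-1)


def radius_neighbors(points, anchors, rho):
    """For each anchor x_a, indices of input points with ||x_i - x_a|| <= rho."""
    dists = np.linalg.norm(anchors[:, None, :] - points[None, :, :], axis=-1)
    return [np.flatnonzero(row <= rho) for row in dists]


# Toy point cloud (assumed values): two points share a cell, two are alone.
points = np.array([[0.1, 0.1], [0.4, 0.2], [0.6, 0.1], [3.2, 3.3]])
anchors, cells, point_to_anchor = make_anchors(points, np.array([0.0, 0.0]), 0.5)
neighbors = radius_neighbors(points, anchors, rho=0.6)
```

In this toy example the third point lies in the second cell but still falls within the radius of the first anchor, which illustrates how a neighborhood can span adjacent cells.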

To this end, the input features \(f_i\) are enhanced with additional features \(\set{(x_i-x_a), (x_i-x_c), x_c, n}\) where \((x-y)\) refers to the relative position of two points \(x\) and \(y\), \(x_a\) is the corresponding anchor point of \(x_i\) and \(x_c\) is the centroid of the \(n\) points within the same grid cell. The resulting augmented features \(f_i\in\mathbb{R}^{F_\textrm{in}+7}\) are then processed with a linear layer to obtain a more abstract representation \(f'_i\in\mathbb{R}^{F_\textrm{out}}\).
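As a sketch of this augmentation step, the snippet below appends the seven additional values (two relative offsets, the centroid and the point count) to each point's features. The function name `augment_features`, the toy inputs and the feature ordering are assumptions made for illustration; the subsequent linear layer is omitted.

```python
import numpy as np


def augment_features(points, feats, anchors, point_to_anchor):
    """Append (x_i - x_a), (x_i - x_c), x_c and n to the input features."""
    n_anchors = anchors.shape[0]
    counts = np.bincount(point_to_anchor, minlength=n_anchors)  # n per cell
    sums = np.zeros((n_anchors, 2))
    np.add.at(sums, point_to_anchor, points)  # per-cell coordinate sums
    centroids = sums / counts[:, None]  # every anchor cell holds >= 1 point
    x_a = anchors[point_to_anchor]
    x_c = centroids[point_to_anchor]
    n = counts[point_to_anchor][:, None].astype(points.dtype)
    return np.concatenate([feats, points - x_a, points - x_c, x_c, n], axis=1)


# Toy inputs (assumed values): 4 points, 3 non-empty cells, F_in = 3.
points = np.array([[0.1, 0.1], [0.4, 0.2], [0.6, 0.1], [3.2, 3.3]])
anchors = np.array([[0.25, 0.25], [0.75, 0.25], [3.25, 3.25]])
point_to_anchor = np.array([0, 0, 1, 2])
feats = np.ones((4, 3))
augmented = augment_features(points, feats, anchors, point_to_anchor)
```

The output has \(F_\textrm{in}+7\) columns, matching the dimensionality stated above.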

Subsequently, features are aggregated at each anchor point by applying a rigid KPConv. KPConvs encode features at a given output coordinate from features of input points in a spherical neighborhood. This is done via a set of kernel points \(x_k\) that carry learnable weight matrices \(W_k\) and are evenly distributed around the output point. Specifically, the features of input points are processed by multiplication with the weight matrix of the respective kernel point and a distance-based correlation function \(h(\cdot,\cdot)\).

In general, KPConvs and point convolutions allow for the set of input and output points to be different. KPBEV takes advantage of this fact and aggregates features \(f_{a}\in \mathbb{R}^{F_\textrm{out}}\) at each anchor point \(x_a\) from the respective neighboring input points \(x_i\) and their abstract features \(f'_i\):

\[f_{a} = \sum_{x_i \in \mathcal{N}_\textrm{a}} f'_i \sum_k h(x_k,x_i-x_a) W_k\tag{1}\]

with the linear correlation function

\[h(x_k, y) = \max\left(0,\; 1-\frac{\lVert x_k-y \rVert}{\rho_k}\right),\]

where \(\rho_k=\rho/2.5\) is the influence radius of each kernel point (see [@Thomas2019]). Note that, depending on the convolution radius \(\rho\), the neighborhood context considered at each anchor may span across several cells. Consequently, information from points in adjacent cells can also be included at this stage to capture the local point cloud context. In addition, KPConvs only have to be applied to each anchor point rather than each input point, which can lead to a significant reduction of computations, as we show in our experiments (see Sec. 4).
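Eq. (1) can be sketched for a single anchor as below. This is an illustrative NumPy version only: the names `linear_correlation` and `kpconv_at_anchor` are hypothetical, the weights are random, and the kernel points are placed by hand, whereas KPConv [@Thomas2019] obtains its kernel point layout differently.

```python
import numpy as np


def linear_correlation(kernel_points, y, rho_k):
    """h(x_k, y) = max(0, 1 - ||x_k - y|| / rho_k), returned with shape (M, K)."""
    d = np.linalg.norm(y[:, None, :] - kernel_points[None, :, :], axis=-1)
    return np.maximum(0.0, 1.0 - d / rho_k)


def kpconv_at_anchor(x_a, points, feats, kernel_points, weights, rho):
    """f_a = sum_i f'_i sum_k h(x_k, x_i - x_a) W_k over neighbors within rho."""
    rho_k = rho / 2.5
    rel = points - x_a
    mask = np.linalg.norm(rel, axis=1) <= rho
    h = linear_correlation(kernel_points, rel[mask], rho_k)  # (M, K)
    # Sum over neighbors i and kernel points k; weights has shape (K, F, F_out).
    return np.einsum("ik,if,kfo->o", h, feats[mask], weights)


rng = np.random.default_rng(0)
rho = 1.0
# Hand-placed kernel points (assumption): one center point and four on a ring.
kernel_points = np.array([[0.0, 0.0], [0.6, 0.0], [-0.6, 0.0], [0.0, 0.6], [0.0, -0.6]])
weights = rng.normal(size=(5, 4, 8))
x_a = np.array([0.25, 0.25])
points = np.array([[0.25, 0.25], [2.25, 0.25]])  # second point lies outside rho
feats = rng.normal(size=(2, 4))
f_a = kpconv_at_anchor(x_a, points, feats, kernel_points, weights, rho)
```

In this toy case only the first point is inside the radius and it coincides with the central kernel point, so the result reduces to `feats[0] @ weights[0]`.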

The resulting anchor-wise features are once again passed through a linear layer. Since there is a one-to-one correspondence between anchor points and grid cells, the information aggregated at each anchor point can be directly transferred to the underlying grid to obtain a dense feature map \(\mathcal{G}\in\mathbb{R}^{W\times H\times F_\textrm{out}}\). Although we illustrate and focus on the generation of 2D BEV representations (see Fig. 2), the proposed method can also be used to generate 3D voxel grids. Furthermore, we focus on kernel point convolutions as they typically perform well in radar object detection [@Ulrich2022]. However, it is also possible to combine the proposed method with other types of point-based convolutions, such as graph convolutions.
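The transfer from anchors to the grid amounts to a scatter operation, sketched below. The function name `scatter_to_grid` is hypothetical, and filling empty cells with zeros is an assumption that is not stated in the text.

```python
import numpy as np


def scatter_to_grid(anchor_feats, cell_indices, width, height):
    """Write each anchor's feature vector into its grid cell."""
    grid = np.zeros((width, height, anchor_feats.shape[1]), dtype=anchor_feats.dtype)
    # One anchor per non-empty cell, so the indices are unique and no values collide.
    grid[cell_indices[:, 0], cell_indices[:, 1]] = anchor_feats
    return grid


cell_indices = np.array([[0, 0], [1, 0], [6, 6]])  # toy non-empty cells
anchor_feats = np.arange(6, dtype=np.float64).reshape(3, 2)  # F_out = 2 for brevity
grid = scatter_to_grid(anchor_feats, cell_indices, width=8, height=8)
```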

3.3 Multi-Scale Grid Rendering

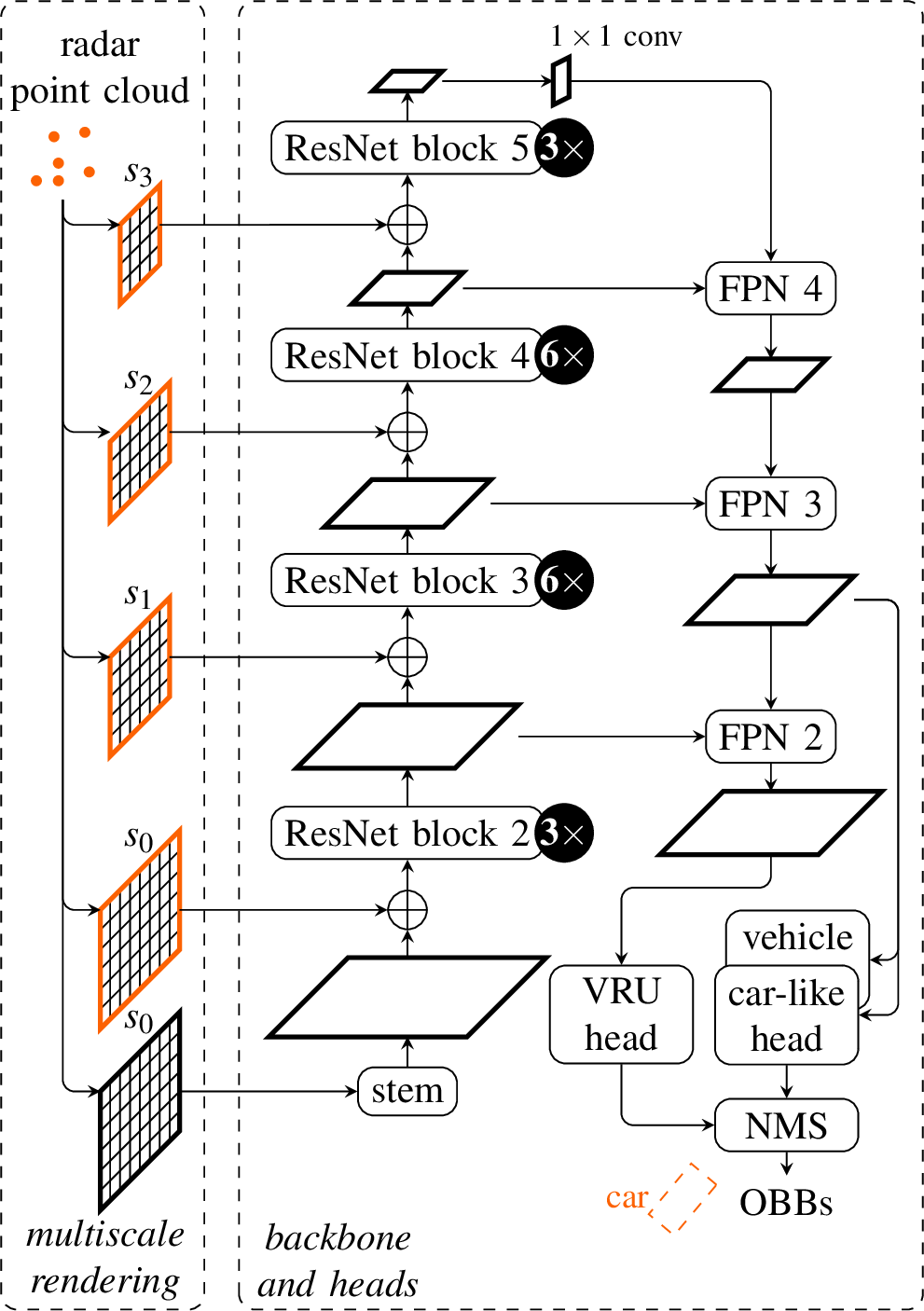

Figure 3: Structure of the multi-scale grid rendering in our network architecture. Given a grid rendering method, for each spatial scale \(s_i\) in the backbone, the radar point cloud is rendered into a grid of corresponding scale (in orange). The resulting scale-aware features are fused at the respective backbone stage via concatenation \(\oplus\). Finally, convolutional detection heads and non-maximum suppression (NMS) are applied to generate and filter oriented bounding boxes (OBBs) for different class groups. The architecture is a combination of a ResNet backbone network and a feature pyramid network (FPN).

Encoding point clouds into grid representations at different spatial scales in order to learn scale-aware features has been shown to be beneficial for lidar object detection [@Ye2020; @Song2020a; @Kuang2020]. We propose a general multi-scale grid rendering formulation to learn scale-aware features.

Our approach differs from previous work in several respects. First, we propose a general approach that can be applied to any grid rendering method, e.g., the PointPillars encoder [@Lang2019] and our newly proposed KPBEV encoder. Second, our multi-scale approach is not tailored to a specific backbone structure, but can be applied to any backbone with feature maps at different scales. For this purpose, an additional grid rendering with individual weights for each scale present in the backbone generates feature maps from the input point cloud. This is illustrated with orange-framed grids in Fig. 3 for the backbone considered in this work. In addition, radar point clouds tend to be sparse and the elevation information can be imprecise. Thus, we apply a 2D BEV projection to the point cloud instead of 3D voxelization.

In the present case, the initial feature map with scale \(s_0\) is successively downsampled by a factor of two during each stage of the residual network by applying strided convolutions. Consequently, feature maps with spatial scales \(s_i \in \{s_0,2 s_0, 4 s_0, 8 s_0\}\) are processed within the backbone. For each of these scales, a grid with corresponding resolution is rendered directly from the point cloud in order to learn scale-aware features. Subsequently, the resulting multi-scale feature maps are incorporated into the detection backbone by concatenation at the respective stage.

Generally, given an input which covers a certain area with a given resolution, the backbone downsamples the input to different scales. For each spatial scale in the backbone, the feature map dimensions are typically divided (and are divisible) by an integer factor, which is equivalent to multiplying the resolution by this same factor. In other words, for each spatial scale, the spatial dimensions should be divisible by the resolution to ensure perfect alignment. In our case, the spatial dimensions should be divisible by \(16s_0\) (including the last scale of the backbone).
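The alignment condition can be checked with a few lines of arithmetic. The sketch below uses integer centimeters to avoid floating-point remainders; the function name `grid_shapes` is hypothetical, and the 120 m extent and \(s_0=0.5\,m\) are the values of the experimental setup.

```python
def grid_shapes(extent_cm: int, s0_cm: int, factors=(1, 2, 4, 8)):
    """Return (cell size in m, width, height) for each backbone scale."""
    shapes = []
    for factor in factors:
        cell_cm = factor * s0_cm
        if extent_cm % cell_cm != 0:
            raise ValueError(f"extent is not divisible by a cell size of {cell_cm} cm")
        n = extent_cm // cell_cm
        shapes.append((cell_cm / 100.0, n, n))
    return shapes


shapes = grid_shapes(extent_cm=12000, s0_cm=50)
# Divisibility by 16 * s0 (8 m here) also covers the last backbone scale.
aligned = 12000 % (16 * 50) == 0
```

For this setup the rendered grids have 240, 120, 60 and 30 cells per axis, and the extent is divisible by \(16s_0\).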

Any grid rendering method that transforms irregular point cloud data into a regular grid representation can be used in this context, but we focus on the PointPillars and KPBEV grid rendering in this work.

3.4 Multi-scale KPPillarsBEV architecture

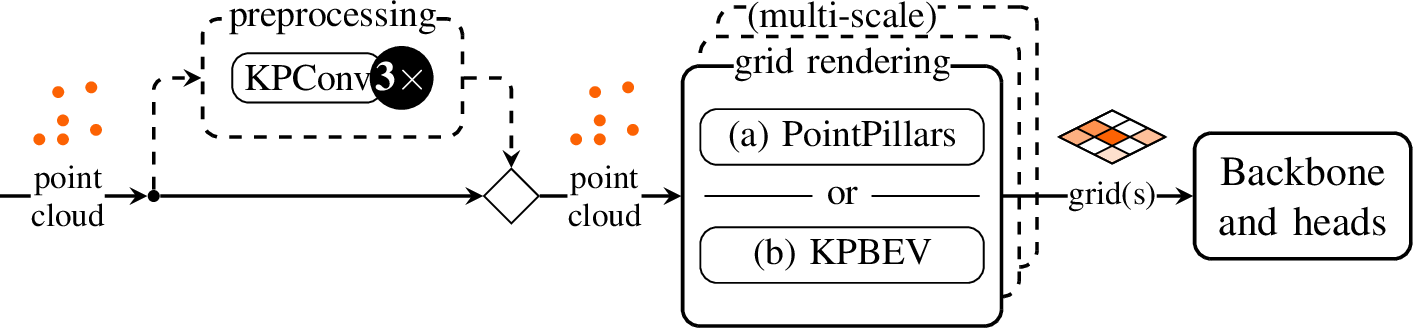

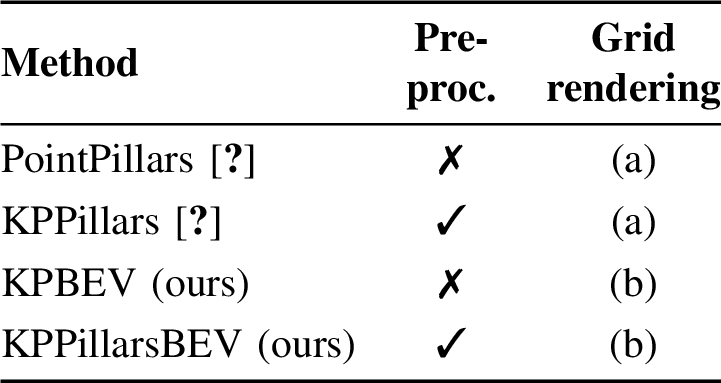

We propose the multi-scale KPPillarsBEV radar object detection architecture which brings together KPBEV grid rendering, multi-scale grid rendering and point pre-processing. We also examine different variations of multi-scale KPPillarsBEV with and without the proposed methods as illustrated in Fig. [fig:architectures]. This enables us to perform an ablation study and quantify the benefits of each contribution. Moreover, these variations offer different tradeoffs between detection performance and complexity.

The multi-scale KPPillarsBEV architecture first preprocesses the input point cloud with three sequential KPConv layers as in [@Ulrich2022] and enriches the point cloud with additional scale-agnostic features. Then, following the proposed multi-scale grid rendering formulation, the resulting point cloud is rendered to a corresponding grid with a KPBEV module for each scale of the backbone. As a result, the point cloud is rendered to the grid with scale-specific features, as each KPBEV module has its own set of weights. Finally, the resulting feature maps are processed by a ResNet backbone, a feature pyramid network and detection heads as illustrated in Fig. 3. The predicted oriented bounding boxes are then filtered with non-maximum suppression.

We evaluate different tradeoffs between detection performance and computational complexity with variants of multi-scale KPPillarsBEV as illustrated in Fig. [fig:architectures]. Specifically, we consider three dimensions: the presence of the KPConv preprocessing, the grid rendering method (PointPillars or KPBEV) and whether the grid rendering is multi- or single-scale. Within this framing, the evaluated PointPillars [@Lang2019] architecture has no KPConv preprocessing and single-scale PointPillars grid rendering. Similarly, KPPillars [@Ulrich2022] uses KPConv preprocessing but only has single-scale PointPillars grid rendering. On the other hand, multi-scale KPPillarsBEV has preprocessing and multi-scale KPBEV grid rendering. As a result, it effectively combines scale-agnostic and scale-specific processing and minimizes information loss from the point cloud to the grid. Indeed, we find in our experiments that the combination of preprocessing, KPBEV and multi-scale grid rendering significantly improves detection performance compared to other variants.

All architectures share the same backbone structure depicted in Fig. 3, which consists of a residual network (ResNet) [@He2016] and a feature pyramid network (FPN) [@Lin2017] and is similar to [@Yang2019]. The generation of oriented bounding boxes (OBBs) is performed by convolutional detection heads that generate object proposals for specific class groups, such as vulnerable road users (VRUs) or car-like objects.

In addition, when KPBEV grid rendering is performed in a multi-scale manner, it is essential that the influence radius of the KPBEV module is consistent with the grid resolution. If the influence radius is kept constant at all spatial scales, the neighborhood may become smaller than the cell size for coarse grids. Consequently, we propose to use an adaptive influence radius \(\rho_{k,i}=(s_i/s_0)\rho_{k,0}\) for each corresponding scale \(s_i\). This is crucial in order for multi-scale KPBEV to significantly outperform single-scale KPBEV.
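The adaptive scheme is a simple rescaling, sketched below with the KPBEV values \(\rho_{k,0}=0.6\,m\) and \(s_0=0.5\,m\) from the experimental setup. The function name `adaptive_influence_radii` is hypothetical.

```python
def adaptive_influence_radii(rho_k0: float, s0: float, scales):
    """rho_{k,i} = (s_i / s0) * rho_{k,0} for every backbone scale s_i."""
    return [(s / s0) * rho_k0 for s in scales]


scales = [0.5, 1.0, 2.0, 4.0]
radii = adaptive_influence_radii(rho_k0=0.6, s0=0.5, scales=scales)
for s, rho_k in zip(scales, radii):
    # Convolution radius rho = 2.5 * rho_k, following the relation rho_k = rho / 2.5.
    print(f"scale {s} m: influence radius {rho_k:.1f} m, convolution radius {2.5 * rho_k:.1f} m")
```

With a constant influence radius of 0.6 m, the convolution radius would stay at 1.5 m, which is less than half the width of a 4 m cell, so points near the border of such a cell would not reach its anchor.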

4 Experiments

4.1 Experimental setup

We evaluate the proposed methods on the nuScenes validation set [@Caesar2020], which contains multi-modal sensor data of urban driving scenarios captured in Boston and Singapore. To improve the comparability of our work, we use the official evaluation toolkit and metrics [@Caesar2020]. In the nuScenes benchmark, average precision (AP) metrics are provided for different matching thresholds between ground truth and predictions (0.5, 1, 2 and 4 meters) to quantify the detection performance. Additionally, the mean average precision (mAP) over the different matching thresholds is computed. For a detailed explanation, we refer the reader to [@Caesar2020].

In our evaluation we focus on the AP4.0, i.e., the AP with a matching threshold of 4 meters, and the mAP for class car. Although the models are also trained to detect other classes such as pedestrian, the performance for these classes is quite low. This was already observed in previous radar literature [@Nobis2021; @Svenningsson2021; @Ulrich2022] and is mostly due to the limited resolution of radars in nuScenes, which results in many objects having no or only very few reflections. Furthermore, the sparsity and the lack of elevation in nuScenes’ radar data impedes classification for some objects, leading to confusion between classes such as bus and truck.

Besides detection performance, we assess computational complexity of the architectures in terms of GPU runtime and peak memory utilization on an NVIDIA V100 SXM2 GPU with the TensorFlow [@Abadi2016] profiler.

4.2 Models

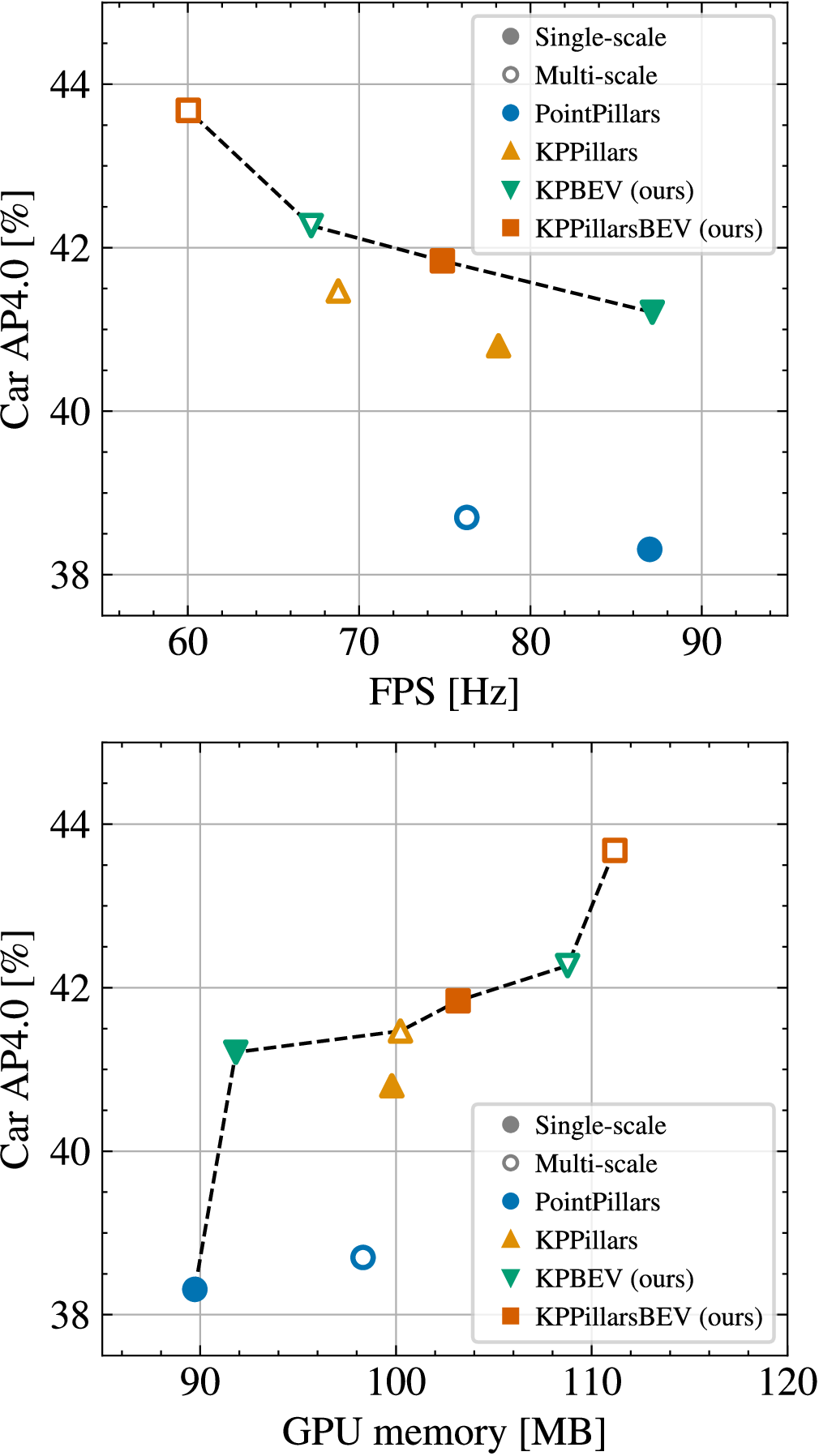

Table 1: The proposed multi-scale KPPillarsBEV significantly outperforms PointPillars [@Lang2019] and KPPillars [@Ulrich2022] in terms of AP4.0 and mAP at a lower but still acceptable frame rate. Single-scale KPBEV provides a significant performance boost compared to single-scale PointPillars with no additional computational cost. As expected, using multi-scale grids improves performance of all methods.

| Method | Multi-scale | AP4.0 [%] \(\uparrow\) | mAP [%] \(\uparrow\) | FPS [Hz] \(\uparrow\) | GPU Mem. [MB] \(\downarrow\) |
|---|---|---|---|---|---|
| PointPillars [@Lang2019] (baseline) | no | 38.31 | 22.08 | 86.96 | 89.73 |
| PointPillars [@Lang2019] | yes | 38.70 | 22.44 | 76.28 | 98.32 |
| KPPillars [@Ulrich2022] | no | 40.80 | 24.01 | 78.13 | 99.79 |
| KPPillars [@Ulrich2022] | yes | 41.47 | 24.37 | 68.78 | 100.23 |
| KPBEV (ours) | no | 41.21 | 24.50 | 87.11 | 91.82 |
| KPBEV (ours) | yes | 42.27 | 25.26 | 67.20 | 108.77 |
| KPPillarsBEV (ours) | no | 41.84 | 24.95 | 74.85 | 103.18 |
| KPPillarsBEV (ours) | yes | 43.68 | 26.42 | 60.02 | 111.18 |

Figure 4: Number of frames per second (FPS) and peak memory usage in relation to the detection performance (AP4.0) for class car on nuScenes achieved by the different methods investigated in this work. The black dashed lines indicate the respective Pareto front.

We investigate the influence of multi-scale grid rendering and the novel KPBEV feature encoder. To this end, we consider four different evaluation settings (see Fig. [fig:architectures]):

PointPillars: the baseline setting which uses a point cloud encoder based on a simplified PointNet as in [@Lang2019] to convert the point cloud into a BEV feature map.

KPPillars: state-of-the-art radar setting [@Ulrich2022], that uses point-based preprocessing consisting of three KPConv layers before applying the PointPillars encoder.

KPBEV (ours): low complexity setting that uses the KPBEV feature encoder (see Fig. 2) proposed in this work instead of PointPillars.

KPPillarsBEV (ours): high performance setting that combines the point-based preprocessing of KPPillars with KPBEV grid rendering.

Each of these settings is evaluated with single- and our proposed multi-scale grid rendering.

4.3 Experiment parameters

The network input is a radar point cloud that is temporally aggregated over five consecutive measurements. Each point carries the features \(\{x, v_r, \sigma, \Delta t\}\), where \(x\in\mathbb{R}^2\) are the 2D Cartesian coordinates, \(v_r\in\mathbb{R}\) is the ego-motion compensated radial velocity, \(\sigma\in\mathbb{R}\) is the radar cross section and \(\Delta t\in\mathbb{R}\) is the timestamp difference w.r.t. the most recent timestamp. Object detection is then performed on a grid ranging from -60 to 60 meters in both the x- and y-direction with an initial cell size of \(s_0=0.5\,m\). All layers preceding the detection backbone use \(F_\textrm{out}=64\) channels to process the input point cloud. For KPBEV an influence radius of \(\rho_{k,0}=0.6\,m\) is used for the initial scale \(s_0\), while for KPPillars and KPPillarsBEV \(\rho_{k,0}=1\,m\) is used as proposed by [@Ulrich2022]. For KPBEV and KPPillarsBEV, we use the proposed adaptive influence radius scheme in the multi-scale variants.

We run 10 trials per model variant and calculate the average of metrics to account for stochasticity during training. Each model is trained for 50 epochs with a batch size of 24.

4.4 Results

Tab. 1 shows the quantitative results of the different methods on the nuScenes validation set. For all architectures, we observe that multi-scale grid rendering always improves the detection performance over single-scale grid rendering. The absolute improvements in AP4.0 and mAP range from \(0.39\,\%\) and \(0.36\,\%\) for the baseline to up to \(1.82\,\%\) and \(1.47\,\%\) for KPPillarsBEV. Furthermore, the benefits of multi-scale grid rendering are greater for models with KPBEV grid rendering instead of PointPillars. Hence, we conclude that KPBEV is able to learn more expressive and scale-aware features, due to the improved cell-wise feature aggregation from neighboring points.

This is also reflected when comparing single-scale PointPillars and KPBEV. KPBEV achieves a significant performance increase of \(2.90\,\%\) in AP4.0 and \(2.42\,\%\) in mAP over the baseline, without increasing the inference time. Additionally, KPBEV outperforms KPPillars, the previous state-of-the-art radar model, in terms of both detection performance and computational efficiency.

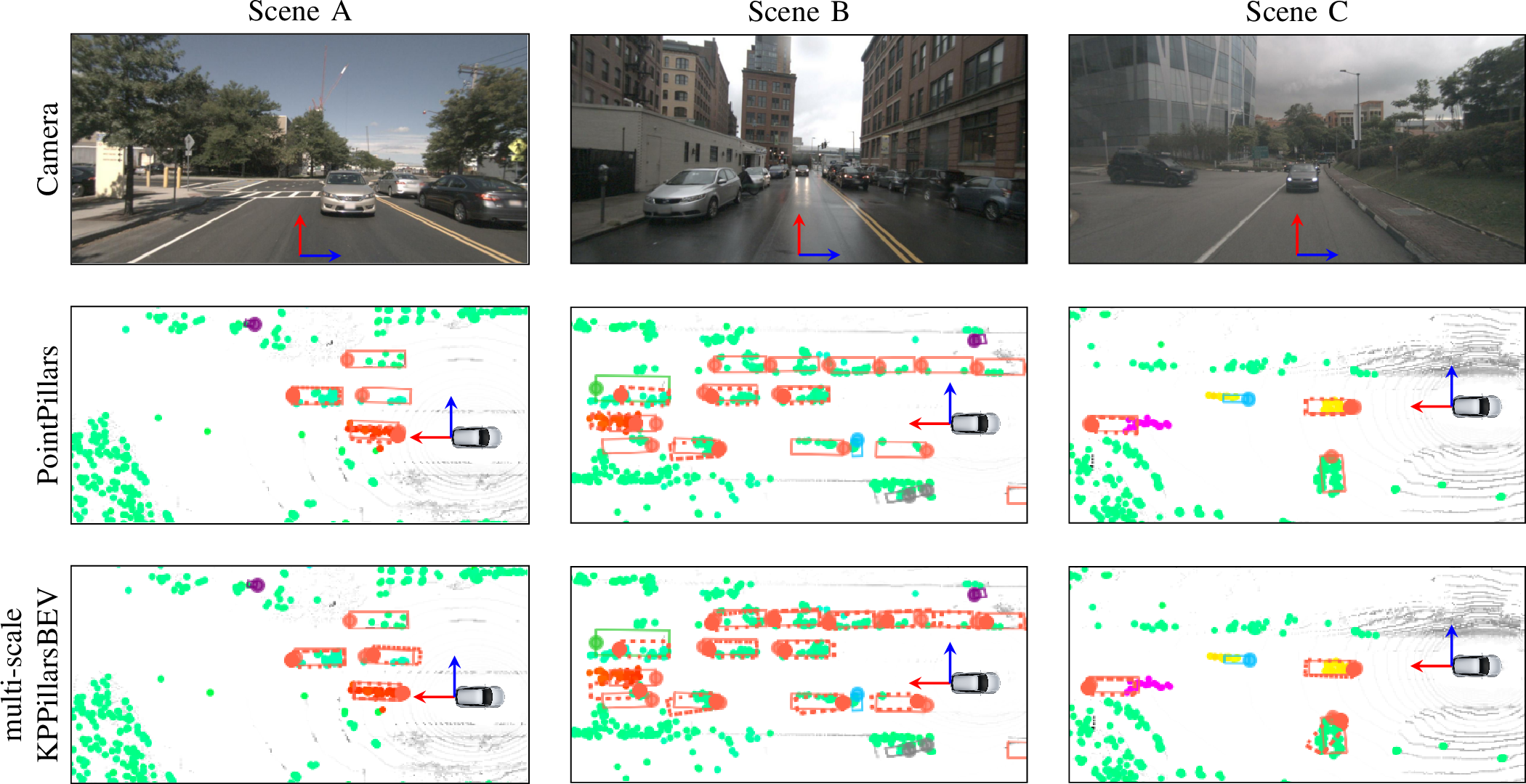

Figure 5: Images of the rear camera and corresponding qualitative results of PointPillars and multi-scale KPPillarsBEV in bird’s-eye perspective for different scenes on the nuScenes validation set. The results show the radar point cloud (colored points, where the color encodes radial velocity), lidar point cloud (grey points), ground truth boxes (solid rectangles) and predicted boxes (dotted rectangles). The qualitative results illustrate the improved detection performance of KPPillarsBEV, as it precisely detects cars that are missed by the baseline (PointPillars), e.g., the parked cars in Scene B.

The main reason for the efficiency of KPBEV is that KPConvs are only applied to anchor points, i.e., once per non-empty grid cell. In contrast, during the point-based preprocessing of KPPillars, the convolutions are applied to each input point. For instance, the number of KPConvs is reduced by \(38\,\%\) for a grid resolution of \(0.5\,m\), as there are that many fewer anchor points than input points.
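The reduction follows directly from the ratio of non-empty cells to input points, as the sketch below shows. The function name `kpconv_reduction` and the toy point cloud are assumptions for illustration; the numbers are synthetic and unrelated to nuScenes.

```python
import numpy as np


def kpconv_reduction(points, cell_size):
    """Fraction of KPConv evaluations saved by convolving once per non-empty cell."""
    cells = np.floor(points / cell_size).astype(np.int64)
    n_anchors = np.unique(cells, axis=0).shape[0]
    return 1.0 - n_anchors / points.shape[0]


# Five toy points that occupy three cells of size 0.5 m.
points = np.array([[0.1, 0.1], [0.2, 0.3], [0.7, 0.1], [0.8, 0.2], [3.1, 3.1]])
reduction = kpconv_reduction(points, cell_size=0.5)
print(f"KPConv evaluations reduced by {reduction:.0%}")
```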

This property also mitigates the cost of larger convolution radii in the multi-scale configuration. As previously described, the convolution radius is increased adaptively for coarser grids. Consequently, the number of neighboring points considered during the feature aggregation at each anchor point grows. At the same time, the number of non-empty cells and thus the number of necessary convolutions decreases as cells become larger. However, using KPBEV at multiple scales still decreases the frame rate by about 20 %.

The trade-off between detection performance and computational complexity is visualized by the Pareto fronts in Fig. 4. In summary, both multi-scale and KPBEV grid rendering benefit the performance of radar object detection networks. The modularity of these approaches helps to design suitable, Pareto-optimal architectures. If maximum detection performance is desired, the proposed multi-scale KPPillarsBEV architecture is the best option and achieves an AP4.0 of \(43.68\,\%\) and mAP of \(26.42\,\%\) in our experiments. The strong detection performance of this method is also illustrated in Fig. 5, which shows qualitative results for several scenes in the nuScenes validation set in comparison to the PointPillars baseline. In a scenario with limited compute resources, the single-scale KPBEV architecture may be preferable, as it significantly outperforms the baseline while maintaining the same inference speed.
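As an illustration of how such a Pareto front can be derived, the sketch below applies a standard dominance check to the AP4.0 and FPS values reported in Tab. 1. The function name `pareto_front` is hypothetical, and only the frame rate axis is considered; peak memory would require a separate check.

```python
def pareto_front(models):
    """Keep models for which no other model is at least as good in both
    AP4.0 and FPS and strictly better in at least one of them."""
    front = []
    for name, ap, fps in models:
        dominated = any(
            ap2 >= ap and fps2 >= fps and (ap2 > ap or fps2 > fps)
            for _, ap2, fps2 in models
        )
        if not dominated:
            front.append(name)
    return front


# (name, AP4.0 [%], FPS [Hz]) taken from Tab. 1.
models = [
    ("PointPillars single", 38.31, 86.96),
    ("PointPillars multi", 38.70, 76.28),
    ("KPPillars single", 40.80, 78.13),
    ("KPPillars multi", 41.47, 68.78),
    ("KPBEV single", 41.21, 87.11),
    ("KPBEV multi", 42.27, 67.20),
    ("KPPillarsBEV single", 41.84, 74.85),
    ("KPPillarsBEV multi", 43.68, 60.02),
]
front = pareto_front(models)
print(front)
```

On these numbers, only the four variants that use KPBEV grid rendering remain on the AP4.0 versus FPS front.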

5 Conclusion

Grid-based approaches [@Ulrich2022] are currently the state of the art for radar object detection. However, current approaches tend to lose information during the grid rendering step due to aggregation and discretization. To address this, we propose a novel multi-scale KPPillarsBEV architecture which brings together KPConv preprocessing, a novel KPBEV grid rendering and a general multi-scale grid rendering formulation.

Specifically, we introduced two new methods to improve grid rendering for radar-based object detection networks in a principled manner: KPBEV grid rendering and general multi-scale grid rendering. The proposed KPBEV grid rendering based on kernel point convolutions has greater descriptive power than previous encoders which rely on simplified PointNets and mitigates information loss due to aggregation and discretization. Our experiments show that single-scale KPBEV significantly improves detection performance compared to the PointPillars baseline at no additional complexity cost.

Furthermore, we propose a general multi-scale grid rendering formulation which performs grid rendering for each spatial scale with any grid rendering method and incorporates scale-aware features into the detection backbone. We show experimentally that multi-scale grid rendering improves the detection performance for all considered architectures. Moreover, the performance gains are the largest when used in combination with the proposed KPBEV grid rendering.

The proposed multi-scale KPPillarsBEV architecture outperforms the current state of the art for radar object detection on nuScenes. It achieves an AP4.0 of \(43.68\,\%\) and mAP of \(26.42\,\%\) for class car, while running at 60 FPS on an NVIDIA V100 SXM2 GPU, and outperforms the baseline PointPillars by 5.37 % AP4.0.

6 Acknowledgements

This work was supported by the German Federal Ministry of Education and Research, project ZuSE-KI-AVF under grant no. 16ME0062.

\(^{1}\)Daniel Köhler and Frank Meinl are with Robert Bosch GmbH, Cross-Domain Computing Solutions, Germany

daniel.koehler2@de.bosch.com

\(^{2}\)Daniel Köhler and Holger Blume are with Leibniz University Hannover, Institute of Microelectronic Systems, Germany

\(^{3}\)Maurice Quach, Michael Ulrich and Bastian Bischoff are with Robert Bosch GmbH, Corporate Research, Germany