TemporalMaxer: Maximize Temporal Context with only Max Pooling for Temporal Action Localization

March 16, 2023

Abstract

Temporal Action Localization (TAL) is a challenging task in video understanding that aims to identify and localize actions within a video sequence. Recent studies have emphasized the importance of applying long-term temporal context modeling (TCM) blocks, such as complex self-attention mechanisms, to the extracted video clip features. In this paper, we present the simplest method to date for this task and argue that the extracted video clip features are already informative enough to achieve outstanding performance without sophisticated architectures. To this end, we introduce TemporalMaxer, which minimizes long-term temporal context modeling while maximizing information from the extracted video clip features with a basic, parameter-free max-pooling block that operates on local regions. By picking out only the most critical information from adjacent and local clip embeddings, this block yields a more efficient TAL model. We demonstrate that TemporalMaxer outperforms other state-of-the-art methods that utilize long-term TCM such as self-attention on various TAL datasets while requiring significantly fewer parameters and computational resources. The code for our approach is publicly available at https://github.com/TuanTNG/TemporalMaxer.

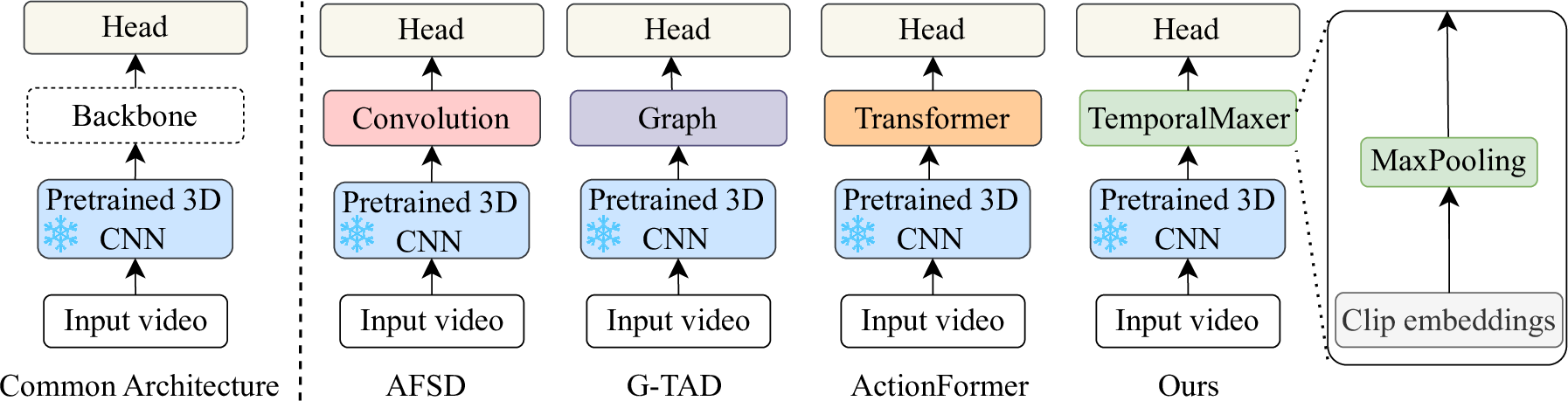

Figure 1: Common architecture in Temporal Action Localization (TAL) and different temporal context modeling (TCM) blocks. Existing works incorporate extensive parameters, high computational costs, and complex modules in the backbone, such as 1D convolutional layers in AFSD [1] to capture local temporal context, and Graph [2] in G-TAD [3] or Transformers [4] in ActionFormer [5] to model long-term temporal contexts. Our proposed method, termed TemporalMaxer, for the first time exploits the potential of strong features from a pretrained 3D CNN by utilizing only a basic, parameter-free, locally operating Max Pooling block. This backbone, the simplest to date for TAL, maintains only the most critical information of adjacent and local clip embeddings. Combined with the large receptive field of deep networks, the whole model outperforms other works by a large margin on various datasets.

1 Introduction

Temporal action localization (TAL), which aims to localize action instances in time and assign their categorical labels, is a challenging but essential task within the field of video comprehension. Various approaches have been proposed to address this task, such as action proposals [6], anchor windows [7], or dense prediction [1]. A widely accepted notion for improving the performance of TAL models is to integrate a component capable of capturing long-term temporal dependencies within the extracted video clip features [5], [8]–[14]. Specifically, the TAL model first takes as input features pre-extracted by a pre-trained 3D-CNN network, such as I3D [15] and TSN [16]. Then, an encoder, called the backbone, encodes the features into a latent space, and a decoder, called the head, predicts action instances, as illustrated in Fig. 1. To better capture long-term temporal dependencies, long-term temporal context modeling (TCM) blocks are incorporated into the backbone. In particular, prior works [3], [17] have employed Graph [2], or more complex modules such as the Local-Global Temporal Encoder [8], which uses a channel grouping strategy, or the Relation-aware pyramid Network [9], which exploits bi-directional long-range relations. Recently there has been notable interest in applying self-attention [4] for long-term TCM [5], [11], [18], [19], resulting in surprising performance improvements.

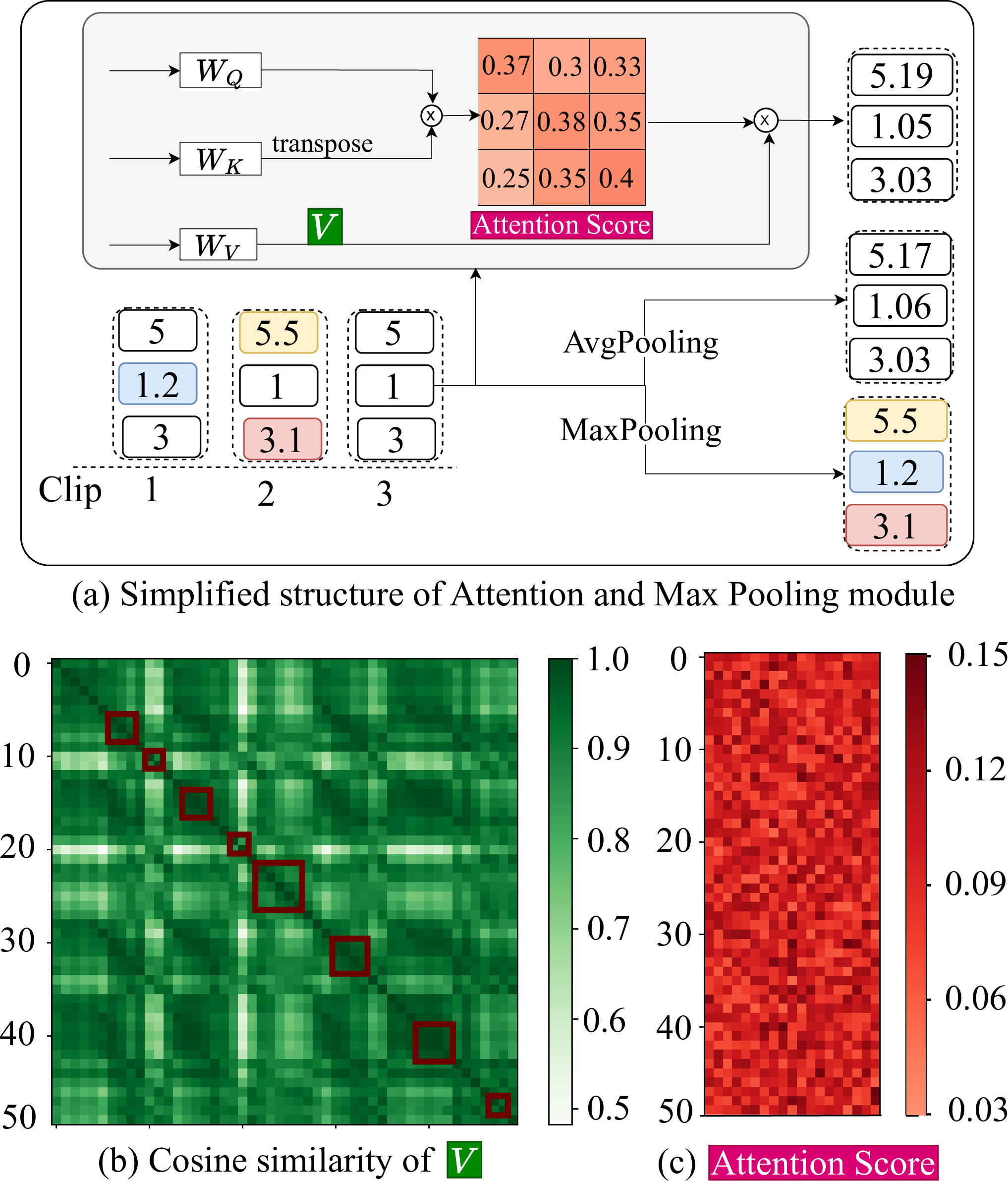

Figure 2: Comparison of the effectiveness of Max Pooling over Transformer [4]. (a) When the input clip embeddings are highly similar, given that the value features \(V\) are highly similar and the attention score is distributed equally, the Transformer tends to average out the clip embeddings as done in Average Pooling. In contrast, Max Pooling can retain the most crucial information about adjacent clip embeddings and further remove redundancy in the video. (b) Cosine similarity matrix (bottom-left) of value features in self-attention in ActionFormer [5], where the red boxes exhibit the action intervals. (c) Attention score (bottom-right) after training the ActionFormer [5].

Long-term TCM in the backbone can help the model to capture long-term temporal dependencies between frames, which can be useful in identifying complex actions that may unfold over longer periods of time or heavily overlapping actions. Despite their performance improvement on benchmarks, the inference speed and the effectiveness of the existing approaches are rarely considered. While [5] introduced and pursued the minimalist design, their backbone is still limited to the transformer architecture requiring expensive parameters and computations.

Recently, [20] proposed a general architecture abstracted from the Transformer. Motivated by the success of recent approaches that replace the attention module with MLP-like modules [21] or the Fourier Transform [22], they regarded the essence of those modules as a token mixer, which aggregates information among tokens. They in turn proposed PoolFormer, equipped with an extremely minimal token mixer that replaces the expensive attention module with a very simple pooling layer.

In this paper, motivated by their proposal, we focus on extreme minimization of the backbone network by concentrating on and maximizing the information from the extracted video clip features from a short-term perspective, rather than employing an expensive long-term encoding process.

The Transformer architecture, which leads state-of-the-art performance in machine translation [4] and computer vision [23], has inspired recent works in TAL [5], [11], [18], [19]. From the perspective of long-term TCM, its ability to compute attention weights over a long input sequence has driven recent progress in TAL. However, such long-term modeling comes at the price of high computational cost, and the effectiveness of those approaches has not yet been carefully analyzed.

We argue that essential properties of the video clip features have not been fully exploited to date. Firstly, video clips exhibit high redundancy, which leads to a high similarity of the pre-extracted features, as demonstrated in Fig. 2. This raises the question of how effective self-attention or graph methods are for long-range TCM. Unlike in other domains such as machine translation, where input tokens are distinctive, the input clip embeddings in TAL frequently exhibit a high degree of similarity. Consequently, as depicted in Fig. 2, the self-attention recently employed in TAL tends to average the embeddings of clips within the attention scope, so that minute, temporally local changes are lost among redundant, similar frames under long-term temporal context modeling. We argue that only certain information within clip embeddings is relevant to the action context of TAL, whereas the remainder of the information is similar across adjacent clips. Therefore, an optimal TCM must be capable of preserving the most discriminative features of clip embeddings that carry the essential information.
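The averaging effect described above can be illustrated with a toy example. The sketch below is not the paper's model: it assumes unprojected dot-product attention in which queries, keys, and values are the raw clip embeddings, and the three-dimensional embeddings and the function names are made up for illustration.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_output(query, keys, values):
    """Scaled dot-product attention for a single query (no learned projections)."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]

def max_pool(values):
    """Element-wise maximum over a window of clip embeddings."""
    dim = len(values[0])
    return [max(v[j] for v in values) for j in range(dim)]

# Hypothetical, highly similar clip embeddings; only clip 2 carries a
# distinctive response in the last channel.
clips = [
    [1.0, 1.0, 0.0],
    [1.0, 1.0, 0.0],
    [1.0, 1.0, 0.9],
    [1.0, 1.0, 0.0],
]

# Using clip 0 as the query, every key yields the same score, so the
# attention weights are uniform and the output is the plain average.
attended = attention_output(clips[0], clips, clips)
pooled = max_pool(clips)

print(attended)  # last channel is diluted to 0.9 / 4
print(pooled)    # last channel keeps the full 0.9 response
```

In this toy setting the distinctive channel is diluted by a factor equal to the window length under attention, while max pooling keeps it intact.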

To this end, we propose a simple yet effective TCM block. We argue that the TCM block can keep the simplest possible architecture while maximizing the informative features extracted from the 3D CNN. We find Max Pooling [24] to be the most fitting block for this purpose.

Our proposed method, TemporalMaxer, presents the simplest architecture to date for this task and achieves much faster inference. Our findings suggest that the pre-trained feature extractor already possesses great potential and that, given those features, short-term TCM alone can benefit performance on this task.

Extensive experiments demonstrate the effectiveness of the proposed method, showing state-of-the-art performance in terms of both accuracy and speed for TAL on various challenging datasets including THUMOS [25], EPIC-Kitchens 100 [26], MultiTHUMOS [27], and MUSES [28].

2 Related Work

Temporal Action Localization (TAL). Two-Stage and Single-Stage methods are used in TAL to detect actions in videos. Two-Stage methods first generate possible action proposals and classify them into actions. The proposals are generated through anchor windows [29]–[31], detecting action boundaries [32]–[34], graph representation [3], [35], or Transformers [13], [14], [36]. Single-stage TAL performs both action proposal generation and classification in a single pass, without using a separate proposal generation step. The pioneering work [8] developed anchor-based single-stage TAL using convolutional networks, inspired by a single-stage object detector [37], [38]. Meanwhile, [1] proposed an anchor-free single-stage model with a saliency-based refinement module.

Long-term Temporal Context Modeling (TCM). Recent studies have emphasized the necessity of long-term TCM to improve model performance. Long-term TCM helps the model capture long-term temporal dependencies between frames, which can be useful in identifying intricate actions that span extended timeframes or heavily overlapping actions. Prior work has addressed long-term TCM using the Relation-aware pyramid Network [9], Multi-Stage CNN [39], and the Temporal Context Aggregation Network [8]. Other works [3], [17] employ Graph [2] as long-term TCM, where each video clip feature represents a node in a graph. More recently, the Transformer [4] has demonstrated an outstanding capacity to capture long-range dependencies of the input sequence in machine translation. It is thus a natural fit for TAL, where each video clip embedding represents a token, and recent studies [5], [11], [18], [19] have employed Transformers as long-term TCM.

Our approach, TemporalMaxer, is a single-stage TAL model, and we use the state-of-the-art ActionFormer [5] as the baseline for comparison. Similar to ActionFormer, TemporalMaxer follows a minimalistic design of sequence labeling where every moment is classified and its corresponding action boundaries are regressed. The main difference is that we avoid expensive attention between clips over long-term timeframes, which can unintentionally flatten minute information among a crowd of similar frames, and instead preserve that minute information in a short-term manner.

3 Method

3.1 Problem Statement

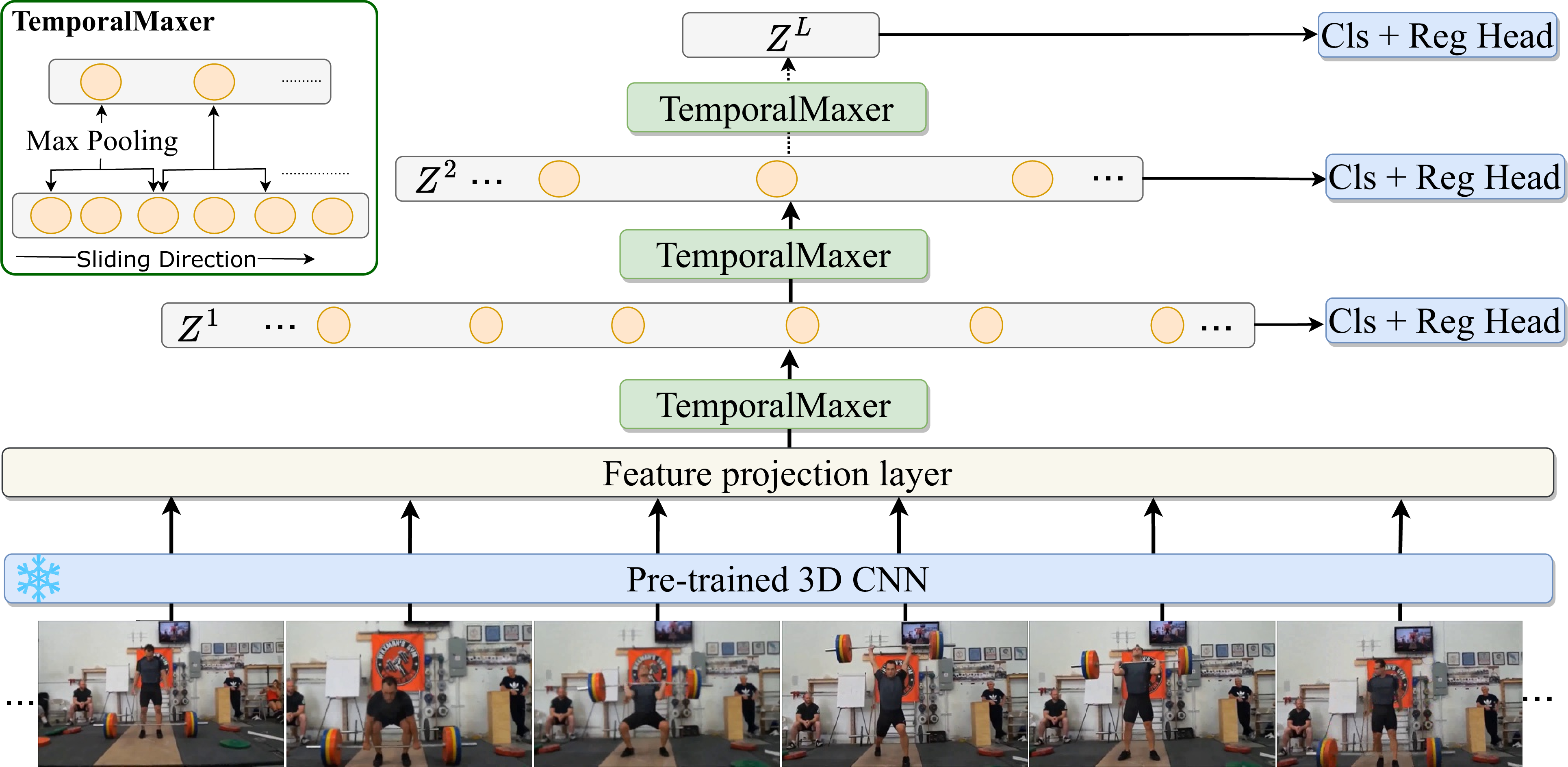

Figure 3: Overview of TemporalMaxer. The proposed method utilizes Max Pooling as a Temporal Context Modeling block applied between temporal feature pyramid levels to maximize informative features of high similarity clip embedding. Specifically, it first extracts features of every clip using pre-trained 3D CNN. After that, the backbone encodes clip features to form a multi-scale feature pyramid. The backbone consists of 1D convolutional layers and TemporalMaxer layers. Finally, a lightweight classification and regression head decodes the feature pyramid to action candidates for every input moment.

Temporal Action Localization. Assume that an untrimmed video \(X\) can be represented by a sequence of feature vectors \(X = \{x_1, x_2, \ldots, x_T\}\), where the number of discrete time steps \(T\) varies with the length of the video and \(t \in \{1, 2, \ldots, T\}\) indexes them. The feature vector \(x_t\) is extracted from a pre-trained 3D convolutional network and represents a video clip at a specific moment \(t\). The aim of TAL is to predict a set of action instances \(\Psi = \{\psi_1, \psi_2, \ldots, \psi_N \}\) based on the input video sequence \(X\), where \(N\) is the number of action instances in \(X\). Each action instance \(\psi_n\) consists of (\(s_n\), \(e_n\), \(a_n\)), where \(s_n\), \(e_n\), and \(a_n\) are the starting time, ending time, and associated action label, respectively, with \(s_n \in [1, T]\), \(e_n \in [1, T]\), \(s_n < e_n\), and \(a_n\) belonging to a pre-defined set of \(C\) categories.

3.2 TemporalMaxer

Action Representation. We follow the anchor-free single-stage representation [1], [5] for an action instance. Each moment is classified into the background or one of \(C\) categories, and the onset and offset of the action are regressed relative to the current time step of that moment. Consequently, the prediction in TAL is formulated as a sequence labeling problem. \[X=\left\{x_1, x_2, \ldots, x_T\right\} \rightarrow \hat{\Psi}=\left\{\hat{\psi}_1, \hat{\psi}_2, \ldots, \hat{\psi}_T\right\}\] At the time step \(t\), the output \(\hat{\psi}_t = (o_t^s, o_t^e, c_t)\) is defined as follows:

\(o_t^s > 0\) and \(o_t^e > 0\) represent the temporal intervals between the current time step \(t\) and the onset and offset of a given moment, respectively.

Given \(C\) action categories, the action probability \(c_t\) can be considered as a set of \(c_t^i\), each of which is the probability of the \(i^{th}\) action, where \(1\leq i \leq C\).

The predicted action instance at time step \(t\) can be retrieved from \(\hat{\psi}_t = (o_t^s, o_t^e, c_t)\) by: \[a_t=\arg \max \left(c_t\right), \quad s_t=t-o_t^s, \quad e_t=t+o_t^e\]
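The decoding rule above can be sketched in a few lines. The function name, the tuple layout of the result, and the example numbers are illustrative assumptions; only the three formulas come from the text.

```python
def decode_moment(t, onset_offset, offset_offset, class_probs):
    """Turn one per-moment prediction (o_t^s, o_t^e, c_t) into an action instance.

    Returns (start, end, label, score), where label is the index of the most
    probable class, start = t - o_t^s, and end = t + o_t^e.
    """
    label = max(range(len(class_probs)), key=lambda i: class_probs[i])
    start = t - onset_offset
    end = t + offset_offset
    return start, end, label, class_probs[label]

# Hypothetical prediction at time step t = 10 with C = 3 categories.
instance = decode_moment(10, 3.0, 4.5, [0.1, 0.7, 0.2])
print(instance)  # (7.0, 14.5, 1, 0.7)
```

In a full pipeline every moment at every pyramid level yields such a candidate, and overlapping candidates are then filtered by NMS in post-processing.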

Architecture overview. The overall architecture is depicted in Fig. 3. Our proposed method aims to learn to label every input moment by \(f\left(X\right) \rightarrow \hat{\Psi}\), where \(f\) is a deep learning model. \(f\) follows an encoder-decoder design and can be decomposed as \(d \circ e\), i.e., \(f(X) = d(e(X))\). The encoder here is the backbone, and the decoder is the classification and regression head. \(e: X \rightarrow Z\) learns to encode the input video feature \(X\) into the latent vector \(Z\), and \(d: Z \rightarrow \hat{\Psi}\) learns to predict labels for every input moment. To effectively capture actions transpiring at various temporal scales, we also adopt a multi-scale feature pyramid representation, which is denoted as \(Z = \{Z^1, Z^2, \ldots, Z^L\}\).

Encoder Design. The input feature \(X\) is first encoded into a multi-scale temporal feature pyramid \(Z = \{Z^1, Z^2, \ldots, Z^L\}\) using the encoder \(e\). The encoder \(e\) simply contains two 1D convolutional layers as feature projection layers, followed by \(L-1\) Temporal Context Modeling (TCM) blocks that produce the feature pyramid \(Z\). Formally, the feature projection layers are described as: \[\label{eq:projection} X_{p} = E_2(E_1(\text{Concat}(X)))\tag{1}\]

The input video feature sequence \(X = \{x_1, x_2, \ldots, x_T\}\), where \(x_i \in \mathbb{R}^{1 \times D_{in}}\), is first concatenated along the first (temporal) dimension and then fed into the two feature projection modules \(E_1\) and \(E_2\) in equation 1, resulting in the projected feature \(X_{p} \in \mathbb{R}^{T \times D}\) in a \(D\)-dimensional feature space. Each projection module comprises one 1D convolutional layer, followed by Layer Normalization [40] and ReLU [41]. We simply assign \(Z^1 = X_p\) as the first feature in \(Z\). Finally, the multi-scale temporal feature pyramid \(Z\) is encoded by TemporalMaxer: \[\label{eq:zl} Z^l = \text{TemporalMaxer}(Z^{l-1}).\tag{2}\] Here, TemporalMaxer is Max Pooling applied with stride 2, \(Z^l \in \mathbb{R}^{\frac{T}{2^{l-1}} \times D}\), and \(2 \leq l \leq L\). It is worth noting that, whereas ActionFormer [5] employs the Transformer [4] as a TCM block in which each clip feature at moment \(t\) represents a token, our proposed method adopts only Max Pooling [24] as the TCM block.
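As a minimal sketch of equation 2, the pure-Python code below builds the pyramid by repeated max pooling. Kernel size 3 and stride 2 follow the settings stated in this paper; the function names, the list-of-lists layout, and the boundary handling (out-of-range positions are ignored, which matches padding by one step with negative infinity) are assumptions and not the released implementation.

```python
def temporal_max_pool(features, kernel_size=3, stride=2):
    """Max pooling along time for a T x D list of feature vectors.

    Windows are centred on every `stride`-th time step; positions outside the
    sequence are skipped, which is equivalent to -inf padding.
    """
    assert kernel_size % 2 == 1, "this sketch only handles odd kernel sizes"
    half = kernel_size // 2
    length, dim = len(features), len(features[0])
    pooled = []
    for center in range(0, length, stride):
        lo, hi = max(0, center - half), min(length, center + half + 1)
        pooled.append([max(features[t][d] for t in range(lo, hi)) for d in range(dim)])
    return pooled

def build_pyramid(projected, num_levels):
    """Z^1 = X_p and Z^l = TemporalMaxer(Z^{l-1}) for 2 <= l <= L."""
    pyramid = [projected]
    for _ in range(num_levels - 1):
        pyramid.append(temporal_max_pool(pyramid[-1]))
    return pyramid

# Hypothetical projected features with T = 16 and D = 1.
x_p = [[float(t)] for t in range(16)]
pyramid = build_pyramid(x_p, num_levels=4)
print([len(level) for level in pyramid])  # [16, 8, 4, 2]
```

Each level halves the temporal length, so level \(l\) has \(T / 2^{l-1}\) steps, and no learnable parameter is introduced by the TCM blocks.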

Decoder Design. The decoder \(d\) learns to predict the sequence labels \(\hat{\Psi}=\left\{\hat{\psi}_1, \hat{\psi}_2, \ldots, \hat{\psi}_T\right\}\) for every moment using the multi-scale feature pyramid \(Z = \{Z^1, Z^2, \ldots, Z^L\}\). The decoder adopts a lightweight convolutional neural network and consists of classification and regression heads. Formally, the two heads are defined as:

\[\label{eq:cls} C_l = \mathcal{F}_c(E_4(E_3(Z^l)))\tag{3}\] \[\label{eq:reg} O_l = \text{ReLU}(\mathcal{F}_o(E_6(E_5(Z^l))))\tag{4}\] Here, \(Z^l \in \mathbb{R}^{\frac{T}{2^{l-1}} \times D}\) is the latent feature of level \(l\), \(C_l=\{c_0, c_{2^{l-1}},\ldots,c_T\} \in \mathbb{R}^{\frac{T}{2^{l-1}} \times C}\) denotes the classification probability with \(c_i \in \mathbb{R}^{C}\), and \(O_l = \{(o_0^s, o_0^e), (o_{2^{l-1}}^s, o_{2^{l-1}}^e),\ldots,(o_T^s, o_T^e)\} \in \mathbb{R}^{\frac{T}{2^{l-1}} \times 2}\) is the onset and offset prediction for the input moments \(\{0, 2^{l-1},\ldots, T\}\). Each \(E\) denotes a 1D convolution followed by Layer Normalization and a ReLU activation function. \(\mathcal{F}_c\) and \(\mathcal{F}_o\) are both 1D convolutions. Note that all the weights of the decoder are shared across the levels of the multi-scale feature pyramid \(Z\).

Learning Objective. The model predicts \(\hat{\psi}_t = (o_t^s, o_t^e, c_t)\) for every moment of the input \(X\). Following the baseline [5], the Focal Loss [42] and the DIoU loss [43] are employed to supervise the classification and regression outputs, respectively. The overall loss function is defined as: \[\mathcal{L}_{total}=\sum_t\left(\mathcal{L}_{cls}+\mathbb{1}_{c_t} \mathcal{L}_{reg}\right) / T_{+}\] where \(\mathcal{L}_{reg}\) denotes the regression loss and is applied only when the indicator function \(\mathbb{1}_{c_t}\) indicates that the current time step \(t\) is a positive sample. \(T_{+}\) is the number of positive samples. \(\mathcal{L}_{cls}\) is a \(C\)-way classification loss. The loss function \(\mathcal{L}_{total}\) is applied to the outputs at all levels of the multi-scale feature pyramid \(Z\) and averaged across all video samples during training.
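To make the objective concrete, the sketch below computes a 1D distance-IoU regression loss for one moment and combines per-moment losses as in the formula above. It is an illustration under assumptions: the DIoU form shown (one minus IoU plus the squared centre distance over the squared enclosing length) is the usual definition adapted to offsets measured from a shared time step, the classification losses are taken as given numbers rather than computed by a focal loss, and the guard against zero positives is added here for safety.

```python
def diou_loss_1d(pred, target, eps=1e-8):
    """1D DIoU loss for (onset offset, offset offset) pairs from the same time step."""
    p_start, p_end = pred
    g_start, g_end = target
    inter = min(p_start, g_start) + min(p_end, g_end)
    union = (p_start + p_end) + (g_start + g_end) - inter
    iou = inter / (union + eps)
    enclosing = max(p_start, g_start) + max(p_end, g_end)
    # Distance between segment centres, expressed through the offsets.
    center_gap = 0.5 * ((p_end - p_start) - (g_end - g_start))
    return 1.0 - iou + center_gap ** 2 / (enclosing ** 2 + eps)

def total_loss(cls_losses, reg_losses, is_positive):
    """Sum classification losses over all moments and regression losses over
    positive moments only, then normalise by the number of positives T_+."""
    num_positive = max(1, sum(is_positive))  # assumption: avoid division by zero
    regression = sum(r for r, pos in zip(reg_losses, is_positive) if pos)
    return (sum(cls_losses) + regression) / num_positive

print(diou_loss_1d((2.0, 2.0), (4.0, 4.0)))  # same centre, IoU 0.5
print(diou_loss_1d((1.0, 3.0), (3.0, 1.0)))  # shifted centre adds a penalty
print(total_loss([0.2, 0.4, 0.6], [0.5, 9.0, 0.1], [True, False, True]))
```

The regression loss of the negative moment (9.0 in the example) does not contribute, which is the role of the indicator function.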

4 Experimental Results

In this section, we show that our proposed method, TemporalMaxer, achieves outstanding results across a variety of challenging datasets, namely THUMOS [25], EPIC-Kitchens 100 [26], MultiTHUMOS [27], and MUSES [28]. These datasets are recognized as standard benchmarks for the Temporal Action Localization task. Our approach surpasses the state-of-the-art baseline, ActionFormer [5], on each dataset.

Evaluation Metric. We employ a widely-used evaluation metric for TAL known as the mean average precision (mAP) calculated at various temporal intersections over union (tIoU). tIoU is the intersection over union between two temporal windows, i.e., the 1D Jaccard index. We report the mAP scores for all action categories based on the given tIoU thresholds, and further report an averaged mAP value across all tIoU thresholds.
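The tIoU between two temporal windows can be computed as follows. This is a small illustrative helper; the function name and the (start, end) tuple layout are assumptions.

```python
def temporal_iou(segment_a, segment_b):
    """1D Jaccard index between two (start, end) temporal windows."""
    start_a, end_a = segment_a
    start_b, end_b = segment_b
    intersection = max(0.0, min(end_a, end_b) - max(start_a, start_b))
    union = (end_a - start_a) + (end_b - start_b) - intersection
    return intersection / union if union > 0 else 0.0

print(temporal_iou((2.0, 8.0), (4.0, 10.0)))  # 0.5
print(temporal_iou((0.0, 1.0), (2.0, 3.0)))   # 0.0
```

A prediction counts as correct at a given threshold when its tIoU with a ground-truth instance of the same class reaches that threshold, so a tIoU of 0.5 would pass the 0.3, 0.4, and 0.5 thresholds but not 0.6 or 0.7.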

Training Details. To ensure a fair and unbiased comparison, we adopt the experimental setup of the robust baseline model, ActionFormer [5]. This setup includes the decoder design \(d\), the non-maximum suppression (NMS) hyper-parameters in the post-processing stage, data augmentation, learning rate, feature extraction, and the number of feature pyramid levels \(L\). The sole change in our study is the substitution of the Transformer block in ActionFormer with the Max Pooling block. All experiments are conducted with a kernel size of 3 for all TCM blocks; the ablation study analyzes the effect of varying the kernel size. During training, the input feature length is kept constant at 2304, corresponding to approximately 5 minutes of video on both the THUMOS14 and MultiTHUMOS datasets, roughly 20 minutes on the EPIC-Kitchens 100 dataset, and approximately 45 minutes on MUSES. Additionally, Model EMA [44] and gradient clipping are employed, consistent with [5], to promote training stability.

4.1 Results on THUMOS14

Dataset. THUMOS14 dataset [25] contains 200 validation videos and 213 testing videos with 20 action classes. Following previous work [3], [5], [6], [32], [34], we trained the model using validation videos and measured the performance on testing videos.

Feature Extraction. Following [5], [34], we extract the features of THUMOS14 dataset using two-stream I3D [15] pre-trained on Kinetics [45]. 16 consecutive frames are fed into I3D pre-trained network with a sliding window of stride 4. The extracted feature is collected after the last fully connected layer and has 1024-D feature space. After that, the two-stream features are further concatenated (2048-D) and utilized as the input of the model.

Results. Tab. 1 compares the performance on the THUMOS14 dataset [25] with state-of-the-art methods. TemporalMaxer achieves an average mAP of 67.7%, outperforming all previous approaches, both single-stage and two-stage, including a 1.1% mAP gain at tIoU=0.4 over ActionFormer [5]. In particular, TemporalMaxer surpasses all recent methods that utilize long-term TCM blocks, including self-attention-based methods such as TadTR [11], HTNet [19], TAGS [46], GLFormer [10], and ActionFormer [5], graph-based methods such as G-TAD [3] and VSGN [17], and complex modules such as the Local-Global Temporal Encoder [8].

Moreover, the comparison shows that the proposed method is not only accurate but also efficient at inference. Specifically, our method takes only 50 ms on average to fully process an entire video on THUMOS, which is 1.6x faster than the ActionFormer baseline and 3.9x faster than TadTR. Of these 50 ms, the forward pass accounts for only 10.4 ms (section 4.5); the remaining 39.6 ms is spent in NMS, which is therefore the most time-consuming step. In section 4.5, we further show that the forward time and the backbone time of our model are 2.9x and 8.0x faster than those of ActionFormer, respectively.

| Type | Model | Feature | tIoU 0.3 \(\uparrow\) | tIoU 0.4 \(\uparrow\) | tIoU 0.5 \(\uparrow\) | tIoU 0.6 \(\uparrow\) | tIoU 0.7 \(\uparrow\) | Avg. \(\uparrow\) | time (ms) \(\downarrow\) |
|---|---|---|---|---|---|---|---|---|---|
| Two-Stage | BMN [6] | TSN [16] | 56.0 | 47.4 | 38.8 | 29.7 | 20.5 | 38.5 | 483* |
| Two-Stage | DBG [47] | TSN [16] | 57.8 | 49.4 | 39.8 | 30.2 | 21.7 | 39.8 | — |
| Two-Stage | G-TAD [3] | TSN [16] | 54.5 | 47.6 | 40.3 | 30.8 | 23.4 | 39.3 | 4440* |
| Two-Stage | BC-GNN [35] | TSN [16] | 57.1 | 49.1 | 40.4 | 31.2 | 23.1 | 40.2 | — |
| Two-Stage | TAL-MR [34] | I3D [15] | 53.9 | 50.7 | 45.4 | 38.0 | 28.5 | 43.3 | >644* |
| Two-Stage | P-GCN [48] | I3D [15] | 63.6 | 57.8 | 49.1 | — | — | — | 7298* |
| Two-Stage | P-GCN [48] + TSP [49] | R(2+1)D [50] | 69.1 | 63.3 | 53.5 | 40.4 | 26.0 | 50.5 | — |
| Two-Stage | TSA-Net [33] | P3D [51] | 61.2 | 55.9 | 46.9 | 36.1 | 25.2 | 45.1 | — |
| Two-Stage | MUSES [28] | I3D [15] | 68.9 | 64.0 | 56.9 | 46.3 | 31.0 | 53.4 | 2101* |
| Two-Stage | TCANet [8] | TSN [16] | 60.6 | 53.2 | 44.6 | 36.8 | 26.7 | 44.3 | — |
| Two-Stage | BMN-CSA [52] | TSN [16] | 64.4 | 58.0 | 49.2 | 38.2 | 27.8 | 47.7 | — |
| Two-Stage | ContextLoc [53] | I3D [15] | 68.3 | 63.8 | 54.3 | 41.8 | 26.2 | 50.9 | — |
| Two-Stage | VSGN [17] | TSN [16] | 66.7 | 60.4 | 52.4 | 41.0 | 30.4 | 50.2 | — |
| Two-Stage | RTD-Net [13] | I3D [15] | 68.3 | 62.3 | 51.9 | 38.8 | 23.7 | 49.0 | >211* |
| Two-Stage | Disentangle [54] | I3D [15] | 72.1 | 65.9 | 57.0 | 44.2 | 28.5 | 53.5 | — |
| Two-Stage | SAC [55] | I3D [15] | 69.3 | 64.8 | 57.6 | 47.0 | 31.5 | 54.0 | — |
| Single-Stage | A²Net [56] | I3D [15] | 58.6 | 54.1 | 45.5 | 32.5 | 17.2 | 41.6 | 1554* |
| Single-Stage | GTAN [7] | P3D [51] | 57.8 | 47.2 | 38.8 | — | — | — | — |
| Single-Stage | PBRNet [57] | I3D [15] | 58.5 | 54.6 | 51.3 | 41.8 | 29.5 | — | — |
| Single-Stage | AFSD [1] | I3D [15] | 67.3 | 62.4 | 55.5 | 43.7 | 31.1 | 52.0 | 3245* |
| Single-Stage | TAGS [46] | I3D [15] | 68.6 | 63.8 | 57.0 | 46.3 | 31.8 | 52.8 | — |
| Single-Stage | HTNet [19] | I3D [15] | 71.2 | 67.2 | 61.5 | 51.0 | 39.3 | 58.0 | — |
| Single-Stage | TadTR [11] | I3D [15] | 74.8 | 69.1 | 60.1 | 46.6 | 32.8 | 56.7 | 195* |
| Single-Stage | GLFormer [10] | I3D [15] | 75.9 | 72.6 | 67.2 | 57.2 | 41.8 | 62.9 | — |
| Single-Stage | AMNet [11] | I3D [15] | 76.7 | 73.1 | 66.8 | 57.2 | 42.7 | 63.3 | — |
| Single-Stage | ActionFormer [5] | I3D [15] | 82.1 | 77.8 | 71.0 | 59.4 | 43.9 | 66.8 | 80 |
| Single-Stage | ActionFormer [5] + GAP [58] | I3D [15] | 82.3 | — | 71.4 | — | 44.2 | 66.9 | >80 |
| Single-Stage | Our (TemporalMaxer) | I3D [15] | 82.8 | 78.9 | 71.8 | 60.5 | 44.7 | 67.7 | 50 |

4.2 Results on EPIC-Kitchens 100

Dataset. The EPIC-Kitchens 100 dataset [26] is a comprehensive collection of egocentric action videos, featuring 100 hours of footage from 700 sessions that document cooking activities in a variety of kitchens. Additionally, EPIC-Kitchens 100 is three times larger in terms of total video hours and more than ten times larger in terms of action instances (averaging 128 per video) when compared to THUMOS14. These videos are recorded from a first-person perspective, resulting in significant camera motion, and represent a novel challenge for TAL research.

Feature Extraction. Following previous work [3], [5], [6], we extract the video features using a SlowFast network [59] pre-trained on EPIC-Kitchens [26]. We use a 32-frame input sequence with a stride of 16 to generate a set of 2304-D features, which are then fed as input to our model.

Result. Tab. 2 shows our results. TemporalMaxer demonstrates notable performance on the EPIC-Kitchens 100 dataset, achieving an average mAP of 24.5% and 22.8% for verb and noun, respectively. The superiority of our approach is further confirmed by a large margin over a strong and robust baseline, ActionFormer [5], with an average improvement of 1.0% mAP for verb and 0.9% mAP for noun. Again, TemporalMaxer outperforms other methods that utilize long-term TCM including self-attention [5] or Graph [3]. These results provide empirical evidence of the effectiveness of the simplest backbone in advancing the state-of-the-art on this challenging task.

| Task | Method | tIoU 0.1 | tIoU 0.2 | tIoU 0.3 | tIoU 0.4 | tIoU 0.5 | Avg |
|---|---|---|---|---|---|---|---|
| Verb | BMN [6], [26] | 10.8 | 9.8 | 8.4 | 7.1 | 5.6 | 8.4 |
| Verb | G-TAD [3] | 12.1 | 11.0 | 9.4 | 8.1 | 6.5 | 9.4 |
| Verb | ActionFormer [5] | 26.6 | 25.4 | 24.2 | 22.3 | 19.1 | 23.5 |
| Verb | Our (TemporalMaxer) | 27.8 | 26.6 | 25.3 | 23.1 | 19.9 | 24.5 |
| Noun | BMN [6], [26] | 10.3 | 8.3 | 6.2 | 4.5 | 3.4 | 6.5 |
| Noun | G-TAD [3] | 11.0 | 10.0 | 8.6 | 7.0 | 5.4 | 8.4 |
| Noun | ActionFormer [5] | 25.2 | 24.1 | 22.7 | 20.5 | 17.0 | 21.9 |
| Noun | Our (TemporalMaxer) | 26.3 | 25.2 | 23.5 | 21.3 | 17.6 | 22.8 |

4.3 Results on MUSES

Dataset. The MUSES dataset [28] is a collection of 3,697 videos, with 2,587 for training and 1,110 for testing. MUSES has 31,477 action instances over a duration of 716 video hours with 25 action classes, designed to facilitate multi-shot analyses making the dataset challenging.

Feature Extraction. We directly employ the pre-extracted features provided by [28]. The features are extracted using an I3D network [15] pre-trained on the Kinetics dataset [45] using only the RGB stream, resulting in a 1024-D feature space for each video clip embedding.

Result. Tab. 3 shows the results on the MUSES dataset. Our method outperforms other works that employ long-term TCM, such as G-TAD [3], Ag-Trans [18], and ActionFormer [5], at every tIoU threshold. Notably, TemporalMaxer improves by 1.0 mAP at tIoU=0.7. On average, we achieve 27.2 mAP, which is 1.0 mAP higher than the best previous approach, demonstrating the robustness of our method. It is worth noting that we obtained the ActionFormer [5] results on the MUSES dataset ourselves using the code provided by the authors.

| Method | tIoU 0.3 | tIoU 0.5 | tIoU 0.7 | Avg |
|---|---|---|---|---|
| BU-TAL [34] | 12.9 | 9.2 | 5.9 | 9.4 |
| G-TAD [3] | 19.1 | 11.1 | 4.7 | 11.4 |
| P-GCN [48] | 19.9 | 13.1 | 5.4 | 13.0 |
| MUSES [28] | 25.9 | 18.9 | 10.6 | 18.6 |
| Ag-Trans [18] | 24.8 | 19.4 | 10.9 | 18.6 |
| ActionFormer [5] | 35.9 | 26.9 | 15.2 | 26.2 |
| Our (TemporalMaxer) | 36.7 | 27.8 | 16.2 | 27.2 |

4.4 Results on MultiTHUMOS

Dataset. The MultiTHUMOS dataset [27] is a densely labeled extension of THUMOS14, consisting of 413 sports videos with 65 distinct action classes. The dataset presents a significant increase in the average number of distinct action categories per video compared to THUMOS14. As such, it poses a greater challenge for TAL than THUMOS. While MultiTHUMOS is used as an action detection benchmark [60], [61], a recent approach for action detection, PointTAD [62], utilizes the TAL evaluation metric to assess the completeness of predicted action instances. Given that TAL and action detection share the same setting in terms of input features and annotations, we evaluate the performance of our model on MultiTHUMOS and compare it against the state-of-the-art action detection methods [60]–[63] and the strong baseline ActionFormer [5].

Feature Extraction. We only utilize RGB stream as input for I3D network [15] pre-trained on Kinetics [45], following [62], to extract features for MultiTHUMOS. The I3D pre-trained network is fed with 16 sequential frames through a sliding window with a stride of 4. The feature is extracted from the final fully connected layer, resulting in a 1024-D feature space that serves as input for the model.

Result. Tab. 4 provides a comparison of our performance on the MultiTHUMOS dataset [27] with recent state-of-the-art methods. Specifically, our method surpasses the prior work [62], which utilizes a Transformer for feature encoding, by a large margin of 6.4% mAP on average. Moreover, TemporalMaxer improves on the robust baseline, ActionFormer [5], by 2.4% mAP at tIoU=0.7 and 1.3% mAP on average. It should be noted that we obtained the ActionFormer [5] results on the MultiTHUMOS dataset using the code provided by the authors, and the results of [60], [61], [63] are taken from [62].

| Method | tIoU 0.2 | tIoU 0.5 | tIoU 0.7 | Avg |
|---|---|---|---|---|
| PDAN [60] | — | — | — | 17.3 |
| MLAD [63] | — | — | — | 14.2 |
| MS-TCT [61] | — | — | — | 16.2 |
| PointTAD [62] | 39.7 | 24.9 | 12.0 | 23.5 |
| ActionFormer [5] | 46.4 | 32.4 | 15.0 | 28.6 |
| Our (TemporalMaxer) | 47.5 | 33.4 | 17.4 | 29.9 |

4.5 Ablation Study

We perform various ablation studies to verify the effectiveness of TemporalMaxer. To better understand which component is effective for TCM, we replace the Max Pooling in turn with other blocks such as convolution, subsampling, and Average Pooling. Furthermore, we evaluate several kernel sizes for Max Pooling. Note that all experiments in this section are conducted on the train and validation set of the THUMOS14 dataset.

| TCM | tIoU 0.5 | tIoU 0.7 | Avg. | GMACs \(\downarrow\) | #params (M) \(\downarrow\) | time (ms) \(\downarrow\) | backbone time (ms) \(\downarrow\) |
|---|---|---|---|---|---|---|---|
| Conv [1] (Our Impl) | 62.8 | 37.1 | 59.4 | 45.6 | 30.5 | 16.3 | 9.0 |
| Subsampling | 64.3 | 37.7 | 61.0 | 16.2 | 7.1 | 10.4 | 2.5 |
| Average Pooling | 66.1 | 39.4 | 63.2 | 16.4 | 7.1 | 10.4 | 2.5 |
| Transformer [4] | 71.0 | 43.9 | 66.8 | 45.3 | 29.3 | 30.5 | 20.1 |
| TemporalMaxer | 71.8 | 44.7 | 67.7 | 16.4 | 7.1 | 10.4 | 2.5 |

Effectiveness of TemporalMaxer. Tab. 5 presents the results of TCM blocks other than Max Pooling. Our study is motivated by PoolFormer [20], which replaces the computationally intensive and highly parameterized attention module with the most basic block in deep learning, the pooling layer. We started by asking what the most straightforward way is to leverage the potential of the features extracted by a 3D CNN for the TAL task. PoolFormer retains the structure of the Transformer, such as the FFN [64], [65], residual connections [66], and Layer Normalization [40], because the tokens have to be encoded by PoolFormer itself. However, in TAL the features from the 3D CNN have already been pre-extracted and contain useful information. Therefore, we posit that there exists a straightforward block that is more efficient than Transformer/PoolFormer, requires little computation and few parameters, and can effectively exploit the pre-extracted features.

Our ablation study starts by employing a 1D convolution module as an alternative to the Transformer [4] for the TCM block, to see how much the mAP drops. The result is reported in the first row of Tab. 5. As expected, the convolution layer reduces the average mAP by 7.4% compared with the ActionFormer baseline. This reduction can be explained by two reasons. First, because the video clip features are informative but highly similar, and the convolution weights are fixed after training, the convolution operation cannot retain the most informative features of local clip embeddings. Second, the convolution layer introduces the most parameters, which tends to overparameterize the model. We argue that, given the informative features extracted from the pretrained 3D CNN, the model should not contain too many parameters, which may lead to overfitting and thus poorer generalization. To test this idea, we replace the Transformer with a parameter-free operation, subsampling, in which features at even indexes are kept and those at odd indexes are removed to simulate the stride of 2 in TCM. Surprisingly, this parameter-free operation achieves higher results than the convolution layer, as shown in the second row of Tab. 5. This finding suggests that the TCM block may not need to contain many parameters, and that the features from the pretrained 3D CNN are informative and hold potential for TAL.

However, subsampling is prone to losing crucial information, since only half of the video clip embeddings are kept after each TCM block. We therefore replace the Transformer with Average Pooling with kernel size 3 and stride 2. As expected, Average Pooling improves the result by 2.2% mAP on average compared with subsampling, because it does not drop any clip embedding and thus helps retain the crucial information. However, Average Pooling averages nearby features, which dilutes the crucial information of adjacent clips. This is why Average Pooling is 3.6% mAP lower than the Transformer.
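A toy example may make the difference concrete. The sketch below is plain Python and rests on several assumptions: the helper `avg_pool_1d` is hypothetical, windows are centred on every second clip and clipped at the sequence boundaries (the padding scheme is not specified in this section), and the single-channel input with one strong activation is made up.

```python
def avg_pool_1d(clip_features, kernel_size=3, stride=2):
    """Average-pool per-clip feature vectors along the temporal axis.

    Assumes an odd kernel size; windows are clipped at the boundaries.
    """
    half = kernel_size // 2
    pooled = []
    for center in range(0, len(clip_features), stride):
        window = clip_features[max(0, center - half):center + half + 1]
        # Average each channel over the clips inside the window.
        pooled.append([sum(channel) / len(window) for channel in zip(*window)])
    return pooled


# One channel, six clips; the only strong activation sits at odd index 3.
clip_features = [[0.0], [0.0], [0.0], [9.0], [0.0], [0.0]]

subsampled = clip_features[::2]       # the activation at index 3 is dropped
averaged = avg_pool_1d(clip_features)  # the activation survives, but diluted

print(subsampled)
print(averaged)
```

In this toy case subsampling removes the strong activation entirely, while average pooling keeps a trace of it at one third of its original magnitude.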

The ablation study with Average Pooling suggests that the most important information in the clip embeddings should be preserved. For that reason, we employ Max Pooling as the TCM block. The result is provided in the last row of Tab. 5. Max Pooling achieves the highest results at every tIoU threshold and significantly outperforms the strong and robust baseline, ActionFormer. In other words, TemporalMaxer highlights only the critical information from nearby clips and discards the less important information. This result suggests that the features from the pretrained 3D CNN are informative and can be effectively utilized by a TAL model without the complex modules used in prior works.
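As a minimal sketch of the operation described above, the following plain-Python function applies channel-wise max pooling along the temporal axis. It is not the authors' code: the helper name `max_pool_1d`, the kernel size 3 and stride 2 defaults (chosen to mirror the Average Pooling ablation), the boundary handling, and the toy values are assumptions. In PyTorch, a comparable operation would presumably be `torch.nn.MaxPool1d(kernel_size=3, stride=2, padding=1)`.

```python
def max_pool_1d(clip_features, kernel_size=3, stride=2):
    """Channel-wise max pooling over neighbouring clip embeddings.

    Assumes an odd kernel size; windows are clipped at the boundaries,
    which for max pooling matches padding with negative infinity.
    """
    half = kernel_size // 2
    pooled = []
    for center in range(0, len(clip_features), stride):
        window = clip_features[max(0, center - half):center + half + 1]
        # For every channel, keep the strongest response inside the window.
        pooled.append([max(channel) for channel in zip(*window)])
    return pooled


# Six clip embeddings with two channels each (values are made up).
clip_features = [[0.1, 0.9], [0.8, 0.2], [0.3, 0.4],
                 [0.2, 0.7], [0.5, 0.1], [0.6, 0.3]]

pooled = max_pool_1d(clip_features)
print(pooled)  # three embeddings remain, each holding per-channel maxima
```

The block has no learnable weights, halves the temporal length, and lets every input clip contribute to at least one output window.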

Our method, TemporalMaxer, yields the simplest model to date for the TAL task, with minimal parameters and computational cost. TemporalMaxer is effective at modeling temporal context: it outperforms the robust baseline, ActionFormer, with 2.8x fewer GMACs and 3x faster inference. In particular, when comparing only the backbone time, our proposed method takes just 2.5 ms, which is 8.0x faster than the 20.1 ms of the ActionFormer backbone [18].

Different values of kernel size. We ablate the kernel size of TemporalMaxer over 3, 4, 5, and 6, obtaining average mAPs of 67.7, 67.1, 66.8, and 65.7, respectively. The model achieves its highest performance with a kernel size of 3 and its lowest with a kernel size of 6. We attribute this decrease to the greater loss of information that larger pooling windows cause during training. For kernel sizes 3, 4, and 5, the results vary by less than 1% mAP, which suggests that the model is not very sensitive to the kernel size in this range and that these kernel sizes can all capture the relevant temporal information for the task.
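The sensitivity claim can be checked with a little arithmetic on the reported numbers. The snippet below only re-uses the four average mAP values quoted above; the variable names are illustrative.

```python
# Average mAP reported for each kernel size in the ablation above.
avg_map_by_kernel = {3: 67.7, 4: 67.1, 5: 66.8, 6: 65.7}

best_kernel = max(avg_map_by_kernel, key=avg_map_by_kernel.get)
best_map = avg_map_by_kernel[best_kernel]

# Drop in average mAP relative to the best setting, rounded to one decimal.
drops = {k: round(best_map - m, 1) for k, m in avg_map_by_kernel.items()}

for kernel_size, drop in drops.items():
    print(f"kernel {kernel_size}: -{drop:.1f} mAP vs. kernel {best_kernel}")
```

Kernel sizes 4 and 5 stay within one mAP point of kernel size 3, while kernel size 6 falls two points behind.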

5 Conclusion

In this paper, we propose TemporalMaxer, an extremely simplified approach to the temporal action localization task. To minimize the architecture, we explore how to maximize the information already present in the video clip features from a pre-trained 3D CNN. To this end, we use a basic, non-parametric, temporally local max-pooling block, which effectively and efficiently preserves the minute local changes among sequential, similar input images. We achieve performance competitive with other state-of-the-art methods that rely on sophisticated, parametric, long-term temporal context modeling.