Stable Set Polytopes with High Lift-and-Project Ranks

for the Lovász–Schrijver SDP Operator

March 15, 2023

Abstract

We study the lift-and-project rank of the stable set polytopes of graphs with respect to the Lovász–Schrijver SDP operator \(\mathop{\mathrm{LS}}_+\). In particular, we focus on a search for relatively small graphs with high \(\mathop{\mathrm{LS}}_+\)-rank (i.e., the least number of iterations of the \(\mathop{\mathrm{LS}}_+\) operator on the fractional stable set polytope to compute the stable set polytope). We provide families of graphs whose \(\mathop{\mathrm{LS}}_+\)-rank is asymptotically a linear function of its number of vertices, which is the best possible up to improvements in the constant factor. This improves upon the previous best result in this direction from 1999, which yielded graphs whose \(\mathop{\mathrm{LS}}_+\)-rank only grew with the square root of the number of vertices.

1 Introduction↩︎

In combinatorial optimization, a standard approach for tackling a given problem is to encode its set of feasible solutions geometrically (e.g., via an integer programming formulation). While the exact solution set is often difficult to analyze, we can focus on relaxations of this set that have certain desirable properties (e.g., combinatorially simple to describe, approximates the underlying set of solutions well, and/or is computationally efficient to optimize over). In that regard, the lift-and-project approach provides a systematic procedure which generates progressively tighter convex relaxations of any given \(0,1\) optimization problem. In the last four decades, many procedures that fall under the lift-and-project approach have been devised (see, among others, [1]–[6]), and there is an extensive body of work on their general properties and performance on a wide range of discrete optimization problems (see, for instance, [7] and the references therein). Lift-and-project operators can be classified into two groups based on the type of convex relaxations they generate: Those that generate polyhedral relaxations only (leading to Linear Programming relaxations), and those that generate spectrahedral relaxations (leading to Semidefinite Programming relaxations) which are not necessarily polyhedral. Herein, we focus on \(\mathop{\mathrm{LS}}_+\) (defined in detail in Section 2), the SDP-based lift-and-project operator due to Lovász and Schrijver [2], and its performance on the stable set problem of graphs. This lift-and-project operator was originally called \(N_+\) in [2].

\(\mathop{\mathrm{LS}}_+\) is an operator on convex subsets of the hypercube \([0,1]^n\) — given a convex set \(P \subseteq [0,1]^n\), \(\mathop{\mathrm{LS}}_+(P)\) is a convex set sandwiched between \(P\) and the convex hull of integral points in \(P\), denoted by \(P_I\): \[P \supseteq \mathop{\mathrm{LS}}_+(P) \supseteq P_I.\] The convex hull of integral points in a set is also called its integer hull. So, \(P_I\) is the integer hull of \(P\).

We can apply \(\mathop{\mathrm{LS}}_+\) iteratively to obtain yet tighter relaxations. Given \(p \in \mathbb{N}\), let \(\mathop{\mathrm{LS}}_+^p(P)\) be the set obtained from applying \(p\) successive \(\mathop{\mathrm{LS}}_+\) operations to \(P\). Then it is well known (e.g., it follows from [2] and the definition of \(\mathrm{LS}_+\)) that \[\mathop{\mathrm{LS}}_+^0(P) \mathrel{\vcenter{:}}= P \supseteq \mathop{\mathrm{LS}}_+(P) \supseteq \mathop{\mathrm{LS}}_+^2(P) \supseteq \cdots \supseteq \mathop{\mathrm{LS}}_+^{n-1}(P) \supseteq \mathop{\mathrm{LS}}_+^n(P) = P_I.\] Thus, \(\mathop{\mathrm{LS}}_+\) generates a hierarchy of progressively tighter convex relaxations which converge to \(P_I\) in no more than \(n\) iterations. The reader may refer to Lovász and Schrijver [2] for some other fundamental properties of the \(\mathop{\mathrm{LS}}_+\) operator.

The \(\mathop{\mathrm{LS}}_+\)-rank of a convex subset of the hypercube is defined to be the number of iterations it takes \(\mathop{\mathrm{LS}}_+\) to return its integer hull. As we stated above, the \(\mathop{\mathrm{LS}}_+\)-rank of every convex set \(P \subseteq [0,1]^n\) is at most \(n\), and a number of elementary polytopes in \(\mathbb{R}^n\) have been shown to have \(\mathop{\mathrm{LS}}_+\)-rank \(\Theta(n)\) (see, among others, [8]–[12]).

Next, given a simple, undirected graph \(G=(V,E)\), we denote by \(\mathop{\mathrm{STAB}}(G)\) the stable set polytope of \(G\) (the convex hull of incidence vectors of stable sets in \(G\)), and by \(\mathop{\mathrm{FRAC}}(G)\) the fractional stable set polytope of \(G\) (which is defined by edge inequalities — i.e., \(x_i + x_j \leq 1\) for every edge \(\left\{i,j\right\} \in E(G)\) — and non-negativity constraints, see Section 2.2). Then, we define the \(\mathop{\mathrm{LS}}_+\)-rank of a graph \(G\) as the minimum non-negative integer \(p\) for which \(\mathop{\mathrm{LS}}_+^p(\mathop{\mathrm{FRAC}}(G)) = \mathop{\mathrm{STAB}}(G)\). We denote the \(\mathop{\mathrm{LS}}_+\)-rank of a graph \(G\) by \(r_+(G)\). When \(G\) is bipartite, \(\mathop{\mathrm{FRAC}}(G) = \mathop{\mathrm{STAB}}(G)\), and thus \(r_+(G)=0\) in this case.

Another well-studied convex relaxation of \(\mathop{\mathrm{STAB}}(G)\) is \(\mathop{\mathrm{TH}}(G)\), the Lovász theta body [13] of the given graph. A remarkable property of \(\mathop{\mathrm{LS}}_+\) is that \(\mathop{\mathrm{LS}}_+(\mathop{\mathrm{FRAC}}(G)) \subseteq \mathop{\mathrm{TH}}(G)\) for every graph \(G\). That is, applying one iteration of \(\mathop{\mathrm{LS}}_+\) to the fractional stable set polytope of a graph already yields a tractable relaxation of the stable set polytope that is stronger than \(\mathop{\mathrm{TH}}(G)\) (see [2] for a proof). Therefore, it follows that \(r_+(G) \leq 1\) when \(G\) is a perfect graph (defined in Section 2.2), as it is well-known that \(\mathop{\mathrm{TH}}(G) = \mathop{\mathrm{STAB}}(G)\) in this case [13]. For more analyses of various lift-and-project relaxations of the stable set problem, see (among others) [2], [6], [14]–[23].

On the other hand, while the stable set problem is known to be strongly \(\mathcal{NP}\)-hard, hardness results for \(\mathop{\mathrm{LS}}_+\) (or any other lift-and-project operator utilizing semidefinite programming) on the stable set problem have been relatively scarce. Prior to this manuscript, the worst (in terms of performance by \(\mathop{\mathrm{LS}}_+\)) family of graphs was given by the line graphs of odd cliques. Using the fact that the \(\mathop{\mathrm{LS}}_+\)-rank of the fractional matching polytope of the \((2k+1)\)-clique is \(k\) [24], the natural correspondence between the matchings in a given graph and the stable sets in the corresponding line graph, as well as the properties of the \(\mathop{\mathrm{LS}}_+\) operator, it follows that the fractional stable set polytope of the line graph of the \((2k+1)\)-clique (which contains \(\binom{2k+1}{2} = k(2k+1)\) vertices) has \(\mathop{\mathrm{LS}}_+\)-rank \(k\), giving a family of graphs \(G\) with \(r_+(G) = \Theta\left(\sqrt{|V(G)|}\right)\). This lower bound on the \(\mathop{\mathrm{LS}}_+\)-rank of the fractional stable set polytopes has not been improved since 1999. In this manuscript, we present what we believe is the first known family of graphs whose \(\mathop{\mathrm{LS}}_+\)-rank is asymptotically a linear function of the number of vertices.

1.1 A family of challenging graphs \(\left\{H_k\right\}\) for the \(\mathop{\mathrm{LS}}_+\) operator↩︎

For a positive integer \(k\), let \([k] \mathrel{\vcenter{:}}=\left\{1,2, \ldots, k\right\}\). We first define the family of graphs that pertains to our main result.

Definition 1. Given an integer \(k \geq 2\), define \(H_k\) to be the graph where \[V(H_k) \mathrel{\vcenter{:}}=\left\{ i_p : i \in [k], p \in \left\{0,1,2\right\}\right\},\] and the edges of \(H_k\) are

\(\left\{i_0, i_1\right\}\) and \(\left\{i_1,i_2\right\}\) for every \(i \in [k]\);

\(\left\{i_0, j_2\right\}\) for all \(i,j \in [k]\) where \(i \neq j\).

Figure 1: Several graphs in the family \(H_k\)

Figure 1 illustrates the graphs \(H_k\) for several small values of \(k\). Note that \(H_2\) is the cycle on six vertices. Moreover, \(H_k\) can be obtained from a complete bipartite graph by fixing a perfect matching and replacing each edge by a path of length two (subdividing each matching edge). Figure 2 represents a drawing emphasizing this second feature.

The following is the main result of this paper. Its proof is given in Section 4.

Theorem 1. For every \(k \geq 3\), the \(\mathop{\mathrm{LS}}_+\)-rank of the fractional stable set polytope of \(H_k\) is at least \(\frac{1}{16}|V(H_k)|\).

We remark that, given \(p \in \mathbb{N}\) and a polytope \(P \subseteq [0,1]^n\) with \(\Omega(n^c)\) facets for some constant \(c\), the straightforward formulation of \(\mathop{\mathrm{LS}}_+^p(P)\) is an SDP with size \(n^{\Omega(p)}\). Since the fractional stable set polytope of the graph \(H_k\) has dimension \(n=3k\) with \(\Omega(n^2)\) facets, Theorem 1 implies that the SDP described by \(\mathop{\mathrm{LS}}_+\) that fails to exactly represent the stable set polytope of \(H_k\) has size \(n^{\Omega(n)}\). Thus, the consequence of Theorem 1 on the size of the SDPs generated from the \(\mathop{\mathrm{LS}}_+\) operator is incomparable with the extension complexity bound due to Lee, Raghavendra, and Steurer [25], who showed (in the context of SDP extension complexity) that it takes an SDP of size \(2^{\Omega(n^{1/13})}\) to exactly represent the stable set polytope of a general \(n\)-vertex graph as a projection of a spectrahedron (such sets are also called spectrahedral shadows). That is, while the general lower bound by [25] covers every lifted-SDP formulation, our lower bound is significantly larger but it only applies to a specific family of lifted-SDP formulations.

1.2 Organization of the paper↩︎

In Section 2, we introduce the \(\mathop{\mathrm{LS}}_+\) operator and the stable set problem, and establish some notations and basic facts that will aid our subsequent discussion. In Section 3, we study the family of graphs \(H_k\), their stable set polytope and set up some fundamental facts and the proof strategy. We then prove Theorem 1 in Section 4. In Section 5 we determine the Chvátal–Gomory rank of the stable set polytope of the graphs \(H_k\). In Section 6, we show that the results in Sections 3 and 4 readily lead to the discovery of families of vertex-transitive graphs whose \(\mathop{\mathrm{LS}}_+\)-rank also exhibits asymptotically linear growth. Finally, in Section 7, we close by mentioning some natural research directions inspired by these new findings.

2 Preliminaries↩︎

In this section, we establish the necessary definitions and notation for our subsequent analysis.

2.1 The lift-and-project operator \(\mathop{\mathrm{LS}}_+\)↩︎

Here, we define the lift-and-project operator \(\mathop{\mathrm{LS}}_+\) due to Lovász and Schrijver [2] and mention some of its basic properties. Given a convex set \(P \subseteq [0,1]^n\), we define the cone \[\mathop{\mathrm{cone}}(P) \mathrel{\vcenter{:}}=\left\{ \begin{bmatrix} \lambda\\ \lambda x \end{bmatrix} : \lambda\geq 0, x \in P\right\},\] and index the new coordinate by \(0\). Given a vector \(x\) and an index \(i\), we may refer to the \(i\)-entry in \(x\) by \(x_i\) or \([x]_i\). All vectors are column vectors, so here the transpose of \(x\), \(x^{\top}\), is a row vector. Next, given a symmetric matrix \(M \in \mathbb{R}^{n \times n}\) (which necessarily has only real eigenvalues), we say that \(M\) is positive semidefinite and write \(M \succeq 0\) if all eigenvalues of \(M\) are non-negative. We let \(\mathbb{S}_+^n\) denote the set of \(n\)-by-\(n\) symmetric positive semidefinite matrices, and \(\mathop{\mathrm{diag}}(Y)\) be the vector formed by the diagonal entries of a square matrix \(Y\). We also let \(e_i\) be the \(i^{\textrm{th}}\) unit vector.

Given \(P \subseteq [0,1]^n\), the operator \(\mathop{\mathrm{LS}}_+\) first lifts \(P\) to the following set of matrices: \[\widehat{\mathop{\mathrm{LS}}}_+(P) \mathrel{\vcenter{:}}=\left\{ Y \in \mathbb{S}_+^{n+1} : Ye_0 = \mathop{\mathrm{diag}}(Y), Ye_i, Y(e_0-e_i) \in \mathop{\mathrm{cone}}(P)~\forall i \in [n] \right\}.\] It then projects the set back down to the following set in \(\mathbb{R}^n\): \[\mathop{\mathrm{LS}}_+(P) \mathrel{\vcenter{:}}=\left\{ x \in \mathbb{R}^n : \exists Y \in \widehat{\mathop{\mathrm{LS}}}_+(P), Ye_0 = \begin{bmatrix} 1 \\ x \end{bmatrix}\right\}.\] Given \(x \in \mathop{\mathrm{LS}}_+(P)\), we say that \(Y \in \widehat{\mathop{\mathrm{LS}}}_+(P)\) is a certificate matrix for \(x\) if \(Ye_0 = \begin{bmatrix} 1 \\ x \end{bmatrix}\). The following is a foundational property of \(\mathop{\mathrm{LS}}_+\) [2].

Lemma 1. Let \(P \subseteq [0,1]^n\) be a convex set. Then, \[P \supseteq \mathop{\mathrm{LS}}_+(P) \supseteq P_I.\]

Proof. Let \(x \in \mathop{\mathrm{LS}}_+(P)\), and let \(Y \in \widehat{\mathop{\mathrm{LS}}}_+(P)\) be a certificate matrix for \(x\). Since \(Ye_0 = Ye_i + Y(e_0 - e_i)\) for any index \(i \in [n]\) and \(\widehat{\mathop{\mathrm{LS}}}_+\) imposes that \(Ye_i, Y(e_0 - e_i) \in \mathop{\mathrm{cone}}(P)\), it follows that \(Ye_0 \in \mathop{\mathrm{cone}}(P)\), and thus \(x \in P\). On the other hand, given any integral vector \(x \in P \cap \left\{0,1\right\}^n\), observe that \(Y \mathrel{\vcenter{:}}=\begin{bmatrix} 1 \\ x \end{bmatrix}\begin{bmatrix} 1 \\ x \end{bmatrix}^{\top} \in \widehat{\mathop{\mathrm{LS}}}_+(P)\), and so \(x \in \mathop{\mathrm{LS}}_+(P)\). Thus, since \(P_I \mathrel{\vcenter{:}}=\mathop{\mathrm{conv}}\left( P \cap \left\{0,1\right\}^n \right)\) (i.e., the integer hull of \(P\)), we deduce that \[P_I \subseteq \mathop{\mathrm{LS}}_+(P) \subseteq P\] holds. ◻

Therefore, \(\mathop{\mathrm{LS}}_+(P)\) contains the same set of integral solutions as \(P\). Moreover, if \(P\) is a tractable set (i.e., one can optimize a linear function over \(P\) in polynomial time), then so is \(\mathop{\mathrm{LS}}_+(P)\). It is also known that \(\mathop{\mathrm{LS}}_+(P)\) is strictly contained in \(P\) unless \(P = P_I\). Thus, while it is generally \(\mathcal{NP}\)-hard to optimize over the integer hull \(P_I\), \(\mathop{\mathrm{LS}}_+(P)\) offers a tractable relaxation of \(P_I\) that is tighter than the initial relaxation \(P\). Again, the reader may refer to Lovász and Schrijver [2] additional discussion and properties of the \(\mathop{\mathrm{LS}}_+\) operator.

2.2 The stable set polytope and the \(\mathop{\mathrm{LS}}_+\)-rank of graphs↩︎

Given a simple, undirected graph \(G \mathrel{\vcenter{:}}=(V(G), E(G))\), we define its fractional stable set polytope to be \[\mathop{\mathrm{FRAC}}(G) \mathrel{\vcenter{:}}=\left\{ x \in [0,1]^{V(G)} : x_i + x_j \leq 1, \forall \left\{i, j \right\} \in E(G)\right\}.\] We also define \[\mathop{\mathrm{STAB}}(G) \mathrel{\vcenter{:}}=\mathop{\mathrm{FRAC}}(G)_I = \mathop{\mathrm{conv}}\left( \mathop{\mathrm{FRAC}}(G) \cap \left\{0,1\right\}^{V(G)} \right)\] to be the stable set polytope of \(G\). Notice that \(\mathop{\mathrm{STAB}}(G)\) is exactly the convex hull of the incidence vectors of stable sets in \(G\). Also, to reduce cluttering, we will write \(\mathop{\mathrm{LS}}_+^p(G)\) instead of \(\mathop{\mathrm{LS}}_+^p(\mathop{\mathrm{FRAC}}(G))\).

Given a graph \(G\), recall that we let \(r_+(G)\) denote the \(\mathop{\mathrm{LS}}_+\)-rank of \(G\), which is defined to be the smallest integer \(p\) where \(\mathop{\mathrm{LS}}_+^p(G) = \mathop{\mathrm{STAB}}(G)\). More generally, given a linear inequality \(a^{\top} x \leq \beta\) valid for \(\mathop{\mathrm{STAB}}(G)\), we define its \(\mathop{\mathrm{LS}}_+\)-rank to be the smallest integer \(p\) for which \(a^{\top} x \leq \beta\) is a valid inequality of \(\mathop{\mathrm{LS}}_+^p(G)\). Then \(r_+(G)\) can be alternatively defined as the maximum \(\mathop{\mathrm{LS}}_+\)-rank over all valid inequalities of \(\mathop{\mathrm{STAB}}(G)\).

It is well known that \(r_+(G) = 0\) (i.e., \(\mathop{\mathrm{STAB}}(G) = \mathop{\mathrm{FRAC}}(G)\)) if and only if \(G\) is bipartite. Next, given a graph \(G\) and \(C \subseteq V(G)\), we say that \(C\) is a clique if every pair of vertices in \(C\) is joined by edge in \(G\). Then observe that the clique inequality \(\sum_{i \in C} x_i \leq 1\) is valid for \(\mathop{\mathrm{STAB}}(G)\). A graph \(G\) is perfect if \(\mathop{\mathrm{STAB}}(G)\) is defined by only clique and non-negativity inequalities. Since clique inequalities of graphs have \(\mathop{\mathrm{LS}}_+\)-rank \(1\) (see [2] for a proof), we see that \(r_+(G) \leq 1\) for all perfect graphs \(G\).

The graphs \(G\) where \(r_+(G) \leq 1\) are commonly called \(\mathop{\mathrm{LS}}_+\)-perfect graphs. In addition to perfect graphs, it is known that odd holes, odd antiholes, and odd wheels (among others) are also \(\mathop{\mathrm{LS}}_+\)-perfect. While it remains an open problem to find a simple combinatorial characterization of \(\mathop{\mathrm{LS}}_+\)-perfect graphs, there has been significant recent interest and progress on this front [26]–[30].

Next, we mention two simple graph operations that have been critical to the analyses of the \(\mathop{\mathrm{LS}}_+\)-ranks of graphs. Given a graph \(G\) and \(S \subseteq V(G)\), we let \(G-S\) denote the subgraph of \(G\) induced by the vertices in \(V(G) \setminus S\), and call \(G-S\) the graph obtained by the deletion of \(S\). (When \(S = \left\{i\right\}\) for some vertex \(i\), we simply write \(G-i\) instead of \(G - \left\{i\right\}\).) Next, given \(i \in V(G)\), let \(\Gamma(i) \mathrel{\vcenter{:}}=\left\{ j \in V(G) : \left\{i,j\right\} \in E(G)\right\}\) (i.e., \(\Gamma(i)\) is the set of vertices that are adjacent to \(i\)). Then the graph obtained from the destruction of \(i\) in \(G\) is defined as \[G \ominus i \mathrel{\vcenter{:}}= G - ( \left\{i\right\} \cup \Gamma(i)).\] Then we have the following.

Theorem 2. For every graph \(G\),

We next mention a fact that is folklore, with related insights dating back to Balas’ work on disjunctive programming in the 1970s (see, for instance, [31] and [10]).

Lemma 2. Let \(F\) be a face of \([0,1]^n\), and let \(P \subseteq [0,1]^n\) be a convex set. Then \[\mathop{\mathrm{LS}}_+^p(P \cap F) = \mathop{\mathrm{LS}}_+^p(P) \cap F\] for every integer \(p \geq 1\).

Proof. It suffices to prove the claim for \(p=1\), as the general result would follow from iterative application of this case. First, let \(x \in \mathop{\mathrm{LS}}_+(P \cap F)\). Since \(\mathop{\mathrm{LS}}_+(P \cap F) \subseteq P \cap F \subseteq F\), it follows that \(x \in F\). Now let \(Y \in \widehat{\mathop{\mathrm{LS}}}_+(P \cap F)\) be a certificate matrix for \(x\). Then \(Ye_i, Y(e_0 - e_i) \in \mathop{\mathrm{cone}}(P \cap F) \subseteq \mathop{\mathrm{cone}}(P)\), and so it follows that \(Y \in \widehat{\mathop{\mathrm{LS}}}_+(P)\), which certifies that \(x \in \mathop{\mathrm{LS}}_+(P)\).

Conversely, let \(x \in \mathop{\mathrm{LS}}_+(P) \cap F\), and let \(Y \in \widehat{\mathop{\mathrm{LS}}}_+(P)\) be a certificate matrix for \(x\). Then \(Ye_i, Y(e_0 - e_i) \in \mathop{\mathrm{cone}}(P)\) for every \(i \in [n]\). Now since \(x \in F\) and \(\begin{bmatrix} 1 \\ x \end{bmatrix} = Ye_i + Y(e_0-e_i)\), it follows (from \(F\) being a face of \([0,1]^n\) and that \(Ye_i, Y(e_0-e_i) \in \mathop{\mathrm{cone}}(P)\) and \(\mathop{\mathrm{cone}}(P)\) is a subset of the cone of the hypercube \([0,1]^n\)) that \(Ye_i, Y(e_0 - e_i) \in \mathop{\mathrm{cone}}(F)\) for every \(i \in [n]\). This implies that \(Ye_i, Y(e_0-e_i) \in \mathop{\mathrm{cone}}(P \cap F)\), which in turn implies that \(x \in \mathop{\mathrm{LS}}_+(P \cap F)\). ◻

Using Lemma 2, we obtain another elementary property of \(\mathop{\mathrm{LS}}_+\) that will be useful.

Lemma 3. Let \(G\) be a graph, and let \(G'\) be an induced subgraph of \(G\). Then \(r_+(G') \leq r_+(G)\).

Proof. First, there is nothing to prove if \(r_+(G') = 0\), so we assume that \(r_+(G') = p+1\) for some \(p \geq 0\). Thus, there exists \(\bar{x}' \in \mathop{\mathrm{LS}}_+^p(G') \setminus \mathop{\mathrm{STAB}}(G')\).

Now define \(\bar{x} \in \mathbb{R}^{V(G)}\) where \(\bar{x}_i = \bar{x}_i'\) if \(i \in V(G')\) and \(\bar{x}_i = 0\) otherwise. It is easy to see that \(\bar{x}' \not\in \mathop{\mathrm{STAB}}(G') \Rightarrow \bar{x} \not\in \mathop{\mathrm{STAB}}(G)\). Then, applying Lemma 2 with \(P \mathrel{\vcenter{:}}=\mathop{\mathrm{FRAC}}(G)\) and \(F \mathrel{\vcenter{:}}=\left\{ x \in [0,1]^{V(G)} : x_i = 0~\forall i \in V(G) \setminus V(G')\right\}\), we obtain that \(\bar{x} \in \mathop{\mathrm{LS}}_+^p(G)\). Thus, we conclude that \(\bar{x} \in \mathop{\mathrm{LS}}_+^{p}(G) \setminus \mathop{\mathrm{STAB}}(G)\), and \(r_+(G) \geq p+1\). ◻

We are interested in studying relatively small graphs with high \(\mathop{\mathrm{LS}}_+\)-rank — that is, graphs whose stable set polytope is difficult to obtain for \(\mathop{\mathrm{LS}}_+\). First, Lipták and the second author [16] proved the following general upper bound:

Theorem 3. For every graph \(G\), \(r_+(G) \leq \left\lfloor \frac{ |V(G)|}{3} \right\rfloor\).

In Section 4, we prove that the family of graphs \(H_k\) satisfies \(r_+(H_k) = \Theta(|V(H_k)|)\). This shows that Theorem 3 is asymptotically tight, and rules out the possibility of a sublinear upper bound on the \(\mathop{\mathrm{LS}}_+\)-rank of a general graph.

3 Analyzing \(H_k\) and exploiting symmetries↩︎

3.1 The graphs \(H_k\) and their basic properties↩︎

Recall the family of graphs \(H_k\) defined in Section 1 (Definition 1). For convenience, we let \([k]_p \mathrel{\vcenter{:}}=\left\{ j_p : j \in [k]\right\}\) for each \(p \in \left\{0,1,2\right\}\). Then, as mentioned earlier, one can construct \(H_k\) by starting with a complete bipartite graph with bipartitions \([k]_0\) and \([k]_2\), and then for every \(j \in [k]\) subdividing the edge \(\left\{j_0, j_2\right\}\) into a path of length \(2\) and labelling the new vertex \(j_1\). Figure 2 illustrates alternative drawings for \(H_k\) which highlight this aspect of the family of graphs.

Figure 2: Alternative drawings of the graphs \(H_k\)

Given a graph \(G\), we say that \(\sigma : V(G) \to V(G)\) is an automorphism of \(G\) if, for every \(i,j \in V(G)\), \(\left\{i,j\right\} \in E(G)\) if and only if \(\left\{\sigma(i), \sigma(j)\right\} \in E(G)\). Notice that the graphs \(H_k\) have very rich symmetries, and we mention two automorphisms of \(H_k\) that are of particular interest. Define \(\sigma_1 : V(H_k) \to V(H_k)\) where, for every \(p \in \left\{0,1,2\right\}\), \[\label{eqH95ksigma1} \sigma_1\left( j_p \right) \mathrel{\vcenter{:}}= \begin{cases} (j+1)_p & \textrm{if 1 \leq j \leq k-1;}\\ 1_p & \textrm{if j = k.} \end{cases}\tag{1}\] Also define \(\sigma_2 : V(H_k) \to V(H_k)\) where \[\label{eqH95ksigma2} \sigma_2\left( j_p \right) \mathrel{\vcenter{:}}= j_{(2-p)}~\textrm{for every j \in [k] and p \in \left\{0,1,2\right\}.}\tag{2}\] Visually, \(\sigma_1\) corresponds to rotating the drawings of \(H_k\) in Figure 1 counterclockwise by \(\frac{2\pi}{k}\), and \(\sigma_2\) corresponds to reflecting the drawings of \(H_k\) in Figure 2 along the centre vertical line. Also, notice that \(H_k \ominus i\) is either isomorphic to \(H_{k-1}\) (if \(i \in [k]_1\)), or is bipartite (if \(i \in [k]_0 \cup [k]_2\)). As we shall see, these properties are very desirable in our subsequent analysis of \(H_k\).

Given a graph \(G\), let \(\alpha(G)\) denote the maximum cardinality of a stable set in \(G\). Notice that since \(H_2\) is the \(6\)-cycle, \(\alpha(H_2)=3\), and the maximum cardinality stable sets of \(H_2\) are \(\{1_0, 1_2, 2_1\}\) and \(\{1_1, 2_0,2_2\}\). Moreover, due to the simple recursive structure of this family of graphs, we can construct stable sets for \(H_k\) from stable sets for \(H_{k-1}\) for every integer \(k \geq 3\). If \(S\) is a (maximum-cardinality) stable set for \(H_{k-1}\) then \(S \cup \{k_1\}\) is a (maximum-cardinality) stable set for \(H_k\). This shows that \(\alpha(H_k) = k+1\) for every \(k \geq 2\).

Also, notice that each of the sets \([k]_0\), \([k]_1\), and \([k]_2\) is a stable set in \(H_k\). While they each have cardinality \(k\) and thus are not maximum cardinality stable sets in \(H_k\), they are inclusion-wise maximal (i.e., each of them is not a proper subset of another stable set in \(H_k\)). The following result characterizes all inclusion-wise maximal stable sets in \(H_k\).

Lemma 4. Let \(k \geq 2\) and let \(S \subseteq V(H_k)\) be an inclusion-wise maximal stable set in \(H_k\). Then one of the following is true:

\(|S| = k\), \(|S \cap \left\{j_0, j_1, j_2\right\}| = 1\) for every \(j \in [k]\), and either \(S \cap [k]_0 = \emptyset\) or \(S \cap [k]_2 = \emptyset\);

\(|S| = k+1\), and there exists \(j \in [k]\) where \[S = \left( [k]_1 \setminus \left\{j_1\right\} \right) \cup \left\{j_0, j_2\right\}.\]

Proof. First, notice that if \(|S \cap \left\{j_0,j_1,j_2\right\}| =0\) for some \(j \in [k]\), then \(S \cup \left\{j_1\right\}\) is a stable set, contradicting the maximality of \(S\). Thus, we assume that \(|S \cap \left\{j_0,j_1,j_2\right\}| \geq 1\) for every \(j \in [k]\).

If \(|S \cap \left\{j_0,j_1,j_2\right\}| = 1\) for all \(j \in [k]\), then \(|S| = k\). Also, since \(\left\{j_0, j_2'\right\}\) is an edge for every distinct \(j,j' \in [k]\), we see that \(S \cap [k]_0\) and \(S \cap [k]_2\) cannot both be non-empty. Thus, \(S\) belongs to (i) in this case.

Next, suppose there exists \(j\in [k]\) where \(|S \cap \left\{j_0,j_1,j_2\right\}| \geq 2\). Then it must be that \(j_0,j_2 \in S\) and \(j_1 \not\in S\). Then it follows that, for all \(j' \neq j\), \(S \cap \left\{j_0', j_1', j_2'\right\} = \left\{j_1'\right\}\), and \(S\) belongs to (ii). ◻

Next, we describe two families of valid inequalities of \(\mathop{\mathrm{STAB}}(H_k)\) that are of particular interest. Given distinct indices \(j,j' \in [k]\), define \[B_{j,j'} \mathrel{\vcenter{:}}= V(H_k) \setminus \left\{j_1, j_2, j_0', j_1'\right\}.\] Then we have the following.

Lemma 5. For every integer \(k \geq 2\),

the linear inequality \[\label{lem61eq0} \sum_{i \in B_{j,j'}} x_{i} \leq k-1\tag{3}\] is a facet of \(\mathop{\mathrm{STAB}}(H_k)\) for every pair of distinct \(j,j' \in [k]\).

the linear inequality \[\label{lem62eq0} \sum_{i \in [k]_0 \cup [k]_2} (k-1)x_i + \sum_{i \in [k]_1} (k-2)x_i \leq k(k-1)\tag{4}\] is valid for \(\mathop{\mathrm{STAB}}(H_k)\).

Proof. We first prove (i) by induction on \(k\). When \(k=2\), 3 gives an edge inequality, which is indeed a facet of \(\mathop{\mathrm{STAB}}(H_2)\) since \(H_2\) is the \(6\)-cycle.

Next, assume \(k \geq 3\). By the symmetry of \(H_k\), it suffices to prove the claim for the case \(j=1\) and \(j'=2\). First, it follows from Lemma 4 that \(B_{1,2}\) does not contain a stable set of size \(k\), and so 3 is valid for \(\mathop{\mathrm{STAB}}(H_k)\). Next, by the inductive hypothesis, \[\label{lem61eq1} \left( \sum_{ i \in B_{1,2}} x_i \right) - \left( x_{k_0} + x_{k_1} + x_{k_2} \right) \leq k-2\tag{5}\] is a facet of \(\mathop{\mathrm{STAB}}(H_{k-1})\), and so there exist stable sets \(S_1, \ldots, S_{3k-3} \subseteq V(H_{k-1})\) whose incidence vectors are affinely independent and all satisfy 5 with equality. We then define \(S_i' \mathrel{\vcenter{:}}= S_i \cup \left\{k_1\right\}\) for all \(i \in [3k-3]\), \(S_{3k-2}' \mathrel{\vcenter{:}}=[k]_0, S_{3k-1}' \mathrel{\vcenter{:}}=[k]_2\), and \(S_{3k}' \mathrel{\vcenter{:}}=[k-1]_1 \cup \left\{k_0,k_2\right\}\). Then we see that the incidence vectors of \(S_1', \ldots, S_{3k}'\) are affinely independent, and they all satisfy 3 with equality. This finishes the proof of (i).

We next prove (ii). Consider the inequality obtained by summing 3 over all distinct \(j,j' \in [k]\): \[\label{lem62eq1} \sum_{(j,j') \in [k]^2, j \neq j'} \left( \sum_{i \in B_{j,j'}} x_{i} \right) \leq \sum_{(j,j') \in [k]^2, j \neq j'} (k-1).\tag{6}\] Now, the right hand side of 6 is \(k(k-1)(k-1)\). On the other hand, since \(|B_{j,j'} \cap [k]_0| =|B_{j,j'} \cap [k]_2| = k-1\) for all \(j,j'\), we see that if \(i \in [k]_0 \cup [k]_2\), then \(x_{i}\) has coefficient \((k-1)(k-1)\) in the left hand side of 6 . A similar argument shows that \(x_{i}\) has coefficient \((k-1)(k-2)\) for all \(i \in [k]_1\). Thus, 4 is indeed \(\frac{1}{k-1}\) times 6 . Therefore, 4 is a non-negative linear combination of inequalities of the form 3 , so it follows from (i) that 4 is valid for \(\mathop{\mathrm{STAB}}(H_k)\). ◻

3.2 Working from the shadows to prove lower bounds on \(\mathop{\mathrm{LS}}_+\)-rank↩︎

Next, we aim to exploit the symmetries of \(H_k\) to help simplify our analysis of its \(\mathop{\mathrm{LS}}_+\)-relaxations. Before we do that, we describe the broader framework of this reduction that shall also be useful in analyzing lift-and-project relaxations in other settings. Given a graph \(G\), let \(\mathop{\mathrm{Aut}}(G)\) denote the automorphism group of \(G\). We also let \(\chi_S\) denote the incidence vector of a set \(S\). Then we define the notion of \(\mathcal{A}\)-balancing automorphisms.

Definition 2. Given a graph \(G\) and \(\mathcal{A}\mathrel{\vcenter{:}}=\left\{ A_1, \ldots, A_{L}\right\}\) a partition of \(V(G)\), we say that a set of automorphisms \(\mathcal{S}\subseteq \mathop{\mathrm{Aut}}(G)\) is \(\mathcal{A}\)-balancing if

For every \(\ell \in [L]\), and for every \(\sigma \in \mathcal{S}\), \(\left\{ \sigma(i) : i \in A_{\ell}\right\} = A_{\ell}\).

For every \(\ell \in [L]\), the quantity \(|\left\{ \sigma \in \mathcal{S}: \sigma(i) =j \right\}|\) is invariant under the choice of \(i, j \in A_{\ell}\).

In other words, if \(\mathcal{S}\) is \(\mathcal{A}\)-balancing, then the automorphisms in \(\mathcal{S}\) only map vertices in \(A_{\ell}\) to vertices in \(A_{\ell}\) for every \(\ell \in [L]\). Moreover, for every \(i \in A_{\ell}\), the \(|\mathcal{S}|\) images of \(i\) under automorphisms in \(\mathcal{S}\) spread over \(A_{\ell}\) evenly, with \(|\left\{ \sigma \in \mathcal{S}: \sigma(i) = j\right\}| = \frac{|\mathcal{S}|}{|A_{\ell}|}\) for every \(j \in A_{\ell}\).

For example, for the graph \(H_k\), consider the vertex partition \(\mathcal{A}_1 \mathrel{\vcenter{:}}=\left\{ [k]_0, [k]_1, [k]_2\right\}\) and \(\mathcal{S}_1 \mathrel{\vcenter{:}}=\left\{ \sigma_1^j : j \in [k]\right\}\) (where \(\sigma_1\) is as defined in 1 ). Then observe that, for every \(p \in \left\{0,1,2\right\}\), \(\left\{ \sigma(i) : i \in [k]_p \right\} = [k]_p\), and \[| \left\{ \sigma \in \mathcal{S}_1 : \sigma(i) = j\right\} | = 1\] for every \(i,j \in [k]_p\). Thus, \(\mathcal{S}_1\) is \(\mathcal{A}_1\)-balancing. Furthermore, if we define \(\mathcal{A}_2 \mathrel{\vcenter{:}}=\left\{ [k]_0 \cup [k]_2 , [k]_1\right\}\) and \[\label{eqS2balancing} \mathcal{S}_2 \mathrel{\vcenter{:}}=\left\{ \sigma_1^j \circ \sigma_2^{j'} : j \in [k], j' \in [2]\right\}\tag{7}\] (where \(\sigma_2\) is as defined in 2 ), one can similarly show that \(\mathcal{S}_2\) is \(\mathcal{A}_2\)-balancing.

Next, we prove several lemmas about \(\mathcal{A}\)-balancing automorphisms that are relevant to the analysis of \(\mathop{\mathrm{LS}}_+\)-relaxations. Given \(\sigma\in \mathop{\mathrm{Aut}}(G)\), we extend the notation to refer to the function \(\sigma : \mathbb{R}^{V(G)} \to \mathbb{R}^{V(G)}\) where \(\sigma(x)_{i} = x_{\sigma(i)}\) for every \(i \in V(G)\). The following lemma follows readily from the definition of \(\mathcal{A}\)-balancing automorphisms.

Lemma 6. Let \(G\) be a graph, \(\mathcal{A}\mathrel{\vcenter{:}}=\left\{ A_1, \ldots, A_{L}\right\}\) be a partition of \(V(G)\), and \(\mathcal{S}\subseteq \mathop{\mathrm{Aut}}(G)\) be an \(\mathcal{A}\)-balancing set of automorphisms. Then, for every \(x \in \mathbb{R}^{V(G)}\), \[\frac{1}{|\mathcal{S}|} \sum_{\sigma \in \mathcal{S}} \sigma(x) =\sum_{\ell = 1}^L \frac{1}{|A_{\ell}|} \left( \sum_{i \in A_{\ell}} x_i \right) \chi_{A_{\ell}}.\]

Proof. For every \(\ell \in [L]\) and for every \(i \in A_{\ell}\), the fact that \(\mathcal{S}\) is \(\mathcal{A}\)-balancing implies \[\label{lemABalancing0eq1} \frac{1}{|\mathcal{S}|} \sum_{\sigma \in \mathcal{S}} \sigma(e_i) = \frac{1}{|A_{\ell}|} \chi_{A_{\ell}}.\tag{8}\] Since \(x = \sum_{ i \in V(G)} x_i e_i\), the claim follows by summing \(x_i\) times 8 over all \(i \in V(G)\). ◻

Lemma 7. Let \(G\) be a graph, \(\sigma \in \mathop{\mathrm{Aut}}(G)\) be an automorphism of \(G\), and \(p \geq 0\) be an integer. If \(x \in \mathop{\mathrm{LS}}_+^p(G)\), then \(\sigma(x) \in \mathop{\mathrm{LS}}_+^p(G)\).

Proof. When \(p=0\), \(\mathop{\mathrm{LS}}_+^0(G) = \mathop{\mathrm{FRAC}}(G)\), and the claim holds due to \(\sigma\) being an automorphism of \(G\). Next, it is easy to see from the definition of \(\mathop{\mathrm{LS}}_+\) that the operator is invariant under permutation of coordinates (i.e., given \(P \subseteq [0,1]^n\), if we let \(P_{\sigma} \mathrel{\vcenter{:}}=\left\{ \sigma(x) : x \in P\right\}\), then \(x \in \mathop{\mathrm{LS}}_+(P) \Rightarrow \sigma(x) \in \mathop{\mathrm{LS}}_+(P_{\sigma})\)). Applying this insight recursively proves the claim for all \(p\). ◻

Combining the above results, we obtain the following.

Proposition 4. Suppose \(G\) is a graph, \(\mathcal{A}\mathrel{\vcenter{:}}=\left\{A_1, \ldots, A_{L}\right\}\) is a partition of \(V(G)\), and \(\mathcal{S}\subseteq \mathop{\mathrm{Aut}}(G)\) is \(\mathcal{A}\)-balancing. Let \(p \geq 0\) be an integer. If \(x \in \mathop{\mathrm{LS}}_+^p(G)\), then \[x' \mathrel{\vcenter{:}}=\sum_{\ell=1}^{L} \left( \frac{ 1 }{|A_{\ell}|} \sum_{i \in A_{\ell}}x_i \right)\chi_{A_{\ell}}\] also belongs to \(\mathop{\mathrm{LS}}_+^p(G)\).

Proof. Since \(\mathcal{S}\) is \(\mathcal{A}\)-balancing, it follows from Lemma 6 that \[x' = \frac{1}{|\mathcal{S}|} \sum_{\sigma \in \mathcal{S}} \sigma(x).\] Also, since \(x \in \mathop{\mathrm{LS}}_+^p(G)\), Lemma 7 implies that \(\sigma(x) \in \mathop{\mathrm{LS}}_+^p(G)\) for every \(\sigma \in \mathcal{S}\). Thus, \(x'\) is a convex combination of points in \(\mathop{\mathrm{LS}}_+^p(G)\), which is a convex set. Hence, it follows that \(x' \in \mathop{\mathrm{LS}}_+^p(G)\). ◻

Notice that the symmetrized vector \(x'\) in Proposition 4 has at most \(L\) distinct entries, one for each of \(A_{\ell} \in \mathcal{A}\). Thus, instead of fully analyzing a family of SDPs in \(\mathbb{S}_+^{\Omega(n^p)}\) or its projections \(\mathop{\mathrm{LS}}_+^p(G)\), the presence of \(\mathcal{A}\)-balancing automorphisms allows us to work with a spectrahedral shadow in \([0,1]^L\), a set of much lower dimension, for a part of the analysis. For instance, in the extreme case when \(G\) is vertex-transitive, we see that the entire automorphism group \(\mathop{\mathrm{Aut}}(G)\) is \(\left\{V(G)\right\}\)-balancing, and so for every \(x \in \mathop{\mathrm{LS}}_+^p(G)\), Proposition 4 implies that \(\frac{1}{|V(G)|}\left(\sum_{i \in V(G)} x_i \right) \bar{e} \in \mathop{\mathrm{LS}}_+^p(G)\), where \(\bar{e}\) denotes the vector of all ones.

Now we turn our focus back to the graphs \(H_k\). The presence of an \(\mathcal{A}_2\)-balancing set of automorphisms (as described in 7 ) motivates the study of points in \(\mathop{\mathrm{LS}}_+^p(H_k)\) of the following form.

Definition 3. Given real numbers \(a,b \in \mathbb{R}\) and an integer \(k \geq 2\), \(w_k(a,b) \in \mathbb{R}^{V(H_k)}\) is defined as the vector with entries \[[w_k(a,b)]_i \mathrel{\vcenter{:}}=\begin{cases} a & \textrm{if i \in [k]_0 \cup [k]_2;}\\ b & \textrm{if i \in [k]_1.} \end{cases}\]

For an example, the inequality 4 can be rewritten as \(w(k-1,k-2)^{\top} x \leq k(k-1)\). The following is a main reason why we are interested in looking into points of the form \(w_k(a,b)\).

Lemma 8. Suppose there exists \(x \in \mathop{\mathrm{LS}}_+^p(H_k)\) where \(x\) violates 4 . Then there exist real numbers \(a,b\) where \(w_k(a,b) \in \mathop{\mathrm{LS}}_+^p(H_k)\) and \(w_k(a,b)\) violates 4 .

Proof. Given \(x\), let \(a \mathrel{\vcenter{:}}=\frac{1}{2k}\sum_{i \in [k]_0 \cup [k]_2} x_{i}\) and \(b \mathrel{\vcenter{:}}=\frac{1}{k}\sum_{i \in [k]_1} x_{i}\). Due to the presence of the \(\mathcal{A}_2\)-balancing automorphisms \(\mathcal{S}_2\), as well as Proposition 4, we know that \(x' \mathrel{\vcenter{:}}= w_k(a,b)\) belongs to \(\mathop{\mathrm{LS}}_+^{p}(H_k)\). Now since \(x\) violates 4 , \[k(k-1) < w(k-1,k-2)^{\top} x = (k-1)(2ka) + (k-2)(kb) = w(k-1,k-2)^{\top} x',\] and so \(x'\) violates 4 as well. ◻

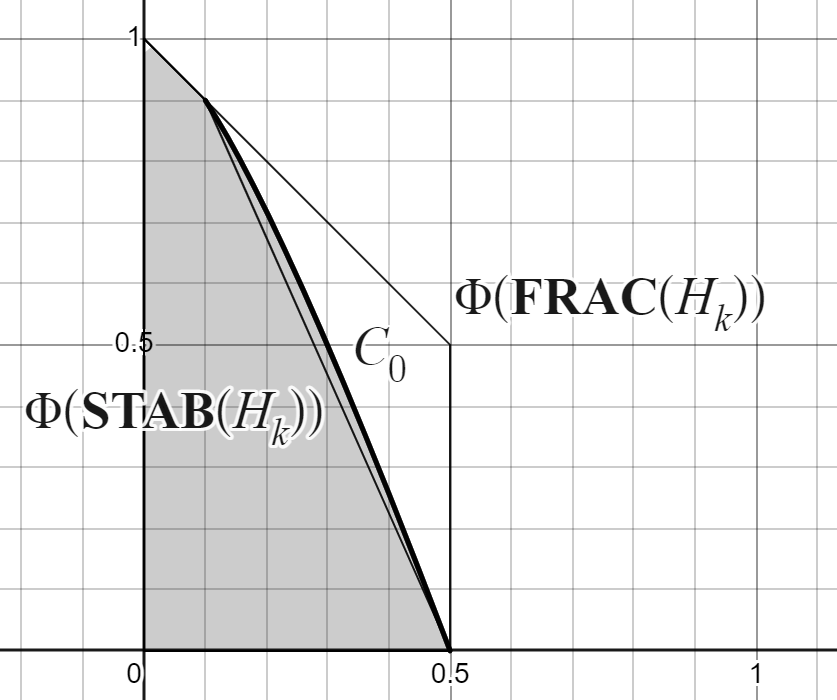

A key ingredient in our proof of the main result is to find a point \(x \in \mathop{\mathrm{LS}}_+^p(H_k)\) where \(x\) violates 4 for some \(p \in \Theta(k)\), which would imply that \(r_+(H_k) > p\). Lemma 8 assures that, due to the symmetries of \(H_k\), we are not sacrificing any sharpness of the result by only looking for such points \(x\) of the form \(w_k(a,b)\). This enables us to capture important properties of \(\mathop{\mathrm{LS}}_+^p(H_k)\) by analyzing a corresponding “shadow” of the set in \(\mathbb{R}^2\). More explicitly, given \(P \subseteq \mathbb{R}^{V(H_k)}\), we define \[\Phi(P) \mathrel{\vcenter{:}}=\left\{ (a,b) \in \mathbb{R}^2 : w_k(a,b) \in P\right\}.\] For example, it is not hard to see that \[\Phi(\mathop{\mathrm{FRAC}}(H_k)) = \mathop{\mathrm{conv}}\left(\left\{ (0,0), \left(\frac{1}{2},0\right), \left(\frac{1}{2}, \frac{1}{2}\right), (0,1)\right\}\right)\] for every \(k \geq 2\). We can similarly characterize \(\Phi(\mathop{\mathrm{STAB}}(H_k))\).

Lemma 9. For every integer \(k \geq 2\), we have \[\Phi(\mathop{\mathrm{STAB}}(H_k)) = \mathop{\mathrm{conv}}\left(\left\{ (0,0), \left(\frac{1}{2},0\right), \left(\frac{1}{k}, \frac{k-1}{k}\right), (0,1)\right\}\right).\]

Proof. Let \(k \geq 2\) be an integer. Then, the empty set, \([k]_1\), \([k]_0\), and \([k]_2\) are all stable sets in \(H_k\). Notice that \(\chi_{\emptyset} = w_k(0,0)\) and \(\chi_{[k]_1} = w_k(0,1)\), and thus \((0,0)\) and \((0,1)\) are both in \(\Phi(\mathop{\mathrm{STAB}}(H_k))\). Also, since \(\frac{1}{2} \chi_{[k]_0} + \frac{1}{2} \chi_{[k]_2} = w_k\left(\frac{1}{2},0\right) \in\mathop{\mathrm{STAB}}(H_k)\), we have \(\left(\frac{1}{2},0\right) \in \Phi(\mathop{\mathrm{STAB}}(H_k))\). Next, recall from Lemma 4 that for every \(j \in [k]\), \[S_j \mathrel{\vcenter{:}}=\left( [k]_1 \setminus \left\{j_1\right\} \right) \cup \left\{j_0, j_2\right\}\] is a stable set of \(H_k\). Thus, \(\frac{1}{k} \sum_{j=1}^k \chi_{S_j} = w_k\left( \frac{1}{k}, \frac{k-1}{k} \right) \in \mathop{\mathrm{STAB}}(H_k)\), and so \(\left(\frac{1}{k},\frac{k-1}{k}\right) \in\Phi(\mathop{\mathrm{STAB}}(H_k))\). Therefore, \(\Phi(\mathop{\mathrm{STAB}}(H_k)) \supseteq \mathop{\mathrm{conv}}\left(\left\{ (0,0), \left(\frac{1}{2},0\right), \left(\frac{1}{k}, \frac{k-1}{k}\right), (0,1)\right\}\right)\).

On the other hand, for all \((a,b) \in \Phi(\mathop{\mathrm{STAB}}(H_k))\), it follows from Lemma 5 that \[2(k-1)a +(k-2)b \leq k-1\] Since \(\Phi(\mathop{\mathrm{STAB}}(H_k)) \subseteq \Phi(\mathop{\mathrm{FRAC}}(H_k))\), using our characterization of \(\Phi(\mathop{\mathrm{FRAC}}(H_k))\), we deduce \(\Phi(\mathop{\mathrm{STAB}}(H_k))\) is contained in the set \[\mathop{\mathrm{conv}}\left(\left\{ (0,0), \left(\frac{1}{2},0\right), \left(\frac{1}{2}, \frac{1}{2}\right), (0,1)\right\}\right) \cap \left\{(a,b)\in \mathbb{R}^2 \, : \, 2(k-1)a +(k-2)b \leq k-1 \right\}.\] However, the above set is exactly \(\mathop{\mathrm{conv}}\left(\left\{ (0,0), \left(\frac{1}{2},0\right), \left(\frac{1}{k}, \frac{k-1}{k}\right), (0,1)\right\}\right)\). Therefore, \[\Phi(\mathop{\mathrm{STAB}}(H_k)) = \mathop{\mathrm{conv}}\left(\left\{ (0,0), \left(\frac{1}{2},0\right), \left(\frac{1}{k}, \frac{k-1}{k}\right), (0,1)\right\}\right).\] ◻

Even though \(\mathop{\mathrm{STAB}}(H_k)\) is an integral polytope, notice that \(\Phi(\mathop{\mathrm{STAB}}(H_k))\) is not integral. Nonetheless, it is clear that \[\mathop{\mathrm{LS}}_+^p(H_k) = \mathop{\mathrm{STAB}}(H_k) \Rightarrow \Phi(\mathop{\mathrm{LS}}_+^p(H_k)) = \Phi(\mathop{\mathrm{STAB}}(H_k)).\] Thus, to show that \(r_+(H_k) > p\), it suffices to find a point \((a,b) \in \Phi(\mathop{\mathrm{LS}}_+^p(H_k)) \setminus \Phi(\mathop{\mathrm{STAB}}(H_k))\). More generally, given a graph \(G\) with a set of \(\mathcal{A}\)-balancing automorphisms where \(\mathcal{A}\) partitions \(V(G)\) into \(L\) sets, one can adapt our approach and study the \(\mathop{\mathrm{LS}}_+\)-relaxations of \(G\) via analyzing \(L\)-dimensional shadows of these sets.

3.3 Strategy for the proof of the main result↩︎

So far, we have an infinite family of graphs \(\left\{H_k\right\}\) with nice symmetries. In Lemma 5(i) we derived a family of facets of \(\mathop{\mathrm{STAB}}(H_k)\), and then showed in Lemma 5(ii) that these facets imply a symmetrized valid inequality for \(\mathop{\mathrm{STAB}}(H_k)\). Notably, the left hand side of this symmetrized inequality only has two distinct entries — one for the vertices in \([k]_0 \cup [k]_2\), and the other for vertices in \([k]_1\).

Also, due to the symmetries of \(H_k\), these graphs admit \(\mathcal{A}\)-balancing automorphisms. Using that and Proposition 4, we can use an arbitrary point \(x \in \mathop{\mathrm{LS}}_+^p(H_k)\) to derive a symmetrized point \(x' \in \mathop{\mathrm{LS}}_+^p(H_k)\) for every \(k \geq 2\) and \(p \geq 0\). In particular, using the \(\mathcal{A}\)-balancing automorphisms described in 7 , we are assured that the symmetrized \(x'\) has at most two distinct entries — one for vertices in \([k]_0 \cup [k]_2\), and one for vertices in \([k]_1\).

These findings motivate the study of the two dimensional shadow \(\Phi(\mathop{\mathrm{LS}}_+^p(H_k))\) of \(\mathop{\mathrm{LS}}_+^p(H_k)\). We also have a complete characterization of \(\Phi(\mathop{\mathrm{STAB}}(H_k))\) for every integer \(k\geq 2\) (Lemma 9). Thus, to show that \(H_k\) has \(\mathop{\mathrm{LS}}_+\)-rank greater than \(p\), it suffices to establish the existence of a point in the two dimensional set \(\Phi(\mathop{\mathrm{LS}}_+^p(H_k)) \setminus \Phi(\mathop{\mathrm{STAB}}(H_k))\).

The rest of the proof of the main result, presented in the next section, starts by characterizing all symmetric positive semidefinite matrices that could certify the membership of a vector \(x'\) in \(\mathop{\mathrm{LS}}_+(H_k)\) (\(x'\), as mentioned above, has at most two distinct entries). This characterization is relatively simple, since due to the isolated symmetries of \(x'\), there always exists a symmetric positive semidefinite certificate matrix for certifying the membership of \(x'\) in \(\mathop{\mathrm{LS}}_+(H_k)\) which has at most four distinct entries (ignoring the \(00^{\mathrm{th}}\) entry of the certificate matrix, which is one). Next, we construct a compact convex set which is described by three linear inequalities and a quadratic inequality such that this set is a subset of \(\Phi(\mathop{\mathrm{LS}}_+(H_k))\) and a strict superset of \(\Phi(\mathop{\mathrm{STAB}}(H_k))\) for every integer \(k \geq 4\). This enables us to conclude for all \(k \geq 4\), \(r_+(H_k) \geq 2\), and establish some of the tools for the rest of the proof. The point \(w_k\left(\frac{1}{k},\frac{k-1}{k}\right)\) already plays a special role.

Then, we put all these tools together to prove that there is a point in \(\mathop{\mathrm{LS}}_+^p(H_k) \setminus \mathop{\mathrm{STAB}}(H_k)\) which is very close to \(w_k\left(\frac{1}{k},\frac{k-1}{k}\right)\) (we do this partly by working in the \(2\)-dimensional space where \(\Phi(\mathop{\mathrm{LS}}_+^p(H_k)\) lives). For the recursive construction of certificate matrices (to establish membership in \(\mathop{\mathrm{LS}}_+^p(H_k)\)), we show that in addition to the matrix being symmetric, positive semidefinite, and satisfying a simple linear inequality, membership of two suitable vectors \(w_{k-1}(a_1,b_1)\) and \(w_{k-1}(a_2,b_2)\) in \(\mathop{\mathrm{LS}}_+^{p-1}(H_{k-1})\) suffice (Lemma 11). The rest of the analysis proves that there exist values for these parameters which allow the construction of certificate matrices for suitable pairs of integers \(k\) and \(p\).

4 Proof of the main result↩︎

4.1 \(\Phi(\mathop{\mathrm{LS}}_+(H_k))\) — the shadow of the first relaxation↩︎

Here, we aim to study the set \(\Phi(\mathop{\mathrm{LS}}_+(H_k))\). To do that, we first look into potential certificate matrices for \(w_k(a,b)\) that have plenty of symmetries. Given \(k \in \mathbb{N}\) and \(a,b,c,d \in \mathbb{R}\), we define the matrix \(W_k(a,b,c,d) \mathrel{\vcenter{:}}=\begin{bmatrix} 1 & w_k(a,b)^{\top} \\ w_k(a,b) & \overline{W} \end{bmatrix}\), where \[\overline{W} \mathrel{\vcenter{:}}=\begin{bmatrix} a & 0 & a-c \\ 0 & b & 0 \\ a-c & 0 & a \end{bmatrix} \otimes I_k + \begin{bmatrix} c & a-c & 0 \\ a-c & d & a-c \\ 0 & a-c & c \end{bmatrix} \otimes (J_k - I_k).\] Note that \(\otimes\) denotes the Kronecker product, \(I_k\) is the \(k\)-by-\(k\) identity matrix, and \(J_k\) is the \(k\)-by-\(k\) matrix of all ones. Also, \(W_k(a,b,c,d) \in \mathbb{R}^{(\left\{0\right\} \cup V(H_k)) \times (\left\{0\right\} \cup V(H_k))}\), and the \(|V_k| = 3k\) columns of \(\overline{W}\) are indexed by the vertices \(1_0, 1_1, 1_2, 2_0, 2_1, 2_2, \ldots\) from left to right, with the rows following the same ordering. Then we have the following.

Lemma 10. Let \(k \in \mathbb{N}\) and \(a,b,c,d \in \mathbb{R}\). Then \(W_k(a,b,c,d) \succeq 0\) if and only if the following conditions hold:

\(c \geq 0\);

\(a - c \geq 0\);

\((b-d) - (a-c) \geq 0\);

\(2a + (k-2)c - 2k a^2 \geq 0\);

\((2a + (k-2)c - 2k a^2)(2b + 2(k-1)d - 2kb^2) - (2(k-1)(a-c) - 2kab)^2 \geq 0\).

Proof. Define matrices \(\overline{W}_1, \overline{W}_2, \overline{W}_3 \in \mathbb{R}^{3k \times 3k}\) where \[\begin{align} \overline{W}_1 & \mathrel{\vcenter{:}}=\frac{1}{2} \cdot J_k \otimes \begin{bmatrix} c & 0 & -c \\ 0 & 0 & 0 \\ -c & 0 & c \end{bmatrix}, \\ \overline{W}_2 & \mathrel{\vcenter{:}}=\frac{1}{k} \cdot (kI_k - J_k) \otimes \begin{bmatrix} a-c & c-a & a-c \\ c-a & b-d & c-a \\ a-c & c-a & a-c \end{bmatrix}, \\ \overline{W}_3 & \mathrel{\vcenter{:}}=\frac{1}{2k} \cdot J_k \otimes \begin{bmatrix} 2a + (k-2)c - 2k a^2 & 2(k-1)(a-c) - 2kab & 2a + (k-2)c - 2k a^2\\ 2(k-1)(a-c) - 2kab &2b + 2(k-1)d - 2kb^2 & 2(k-1)(a-c) - 2kab\\ 2a + (k-2)c - 2k a^2 & 2(k-1)(a-c) - 2kab & 2a + (k-2)c - 2k a^2 \end{bmatrix}. \end{align}\] Then \[\begin{align} &\overline{W}_1 + \overline{W}_2 + \overline{W}_3 \\ ={}& \begin{bmatrix} a - a^2 & -ab & a-c-a^2 \\ -ab & b -b^2 & -ab \\ a-c-a^2 & -ab & a-ab^2 \end{bmatrix} \otimes I_k + \begin{bmatrix} c -a^2 & a-c-ab & -a^2 \\ a-c-ab & d -b^2& a-c-ab \\ -a^2 & a-c-ab & c-a^2 \end{bmatrix} \otimes (J_k - I_k)\\ ={}& \overline{W} - w_k(a,b)(w_k(a,b))^{\top}, \end{align}\] which is a Schur complement of \(W_k(a,b,c,d)\). Thus, we see that \(W_k(a,b,c,d) \succeq 0\) if and only if \(\overline{W}_1 + \overline{W}_2 + \overline{W}_3 \succeq 0\). Moreover, observe that the columns of \(\overline{W}_i\) and \(\overline{W}_j\) are orthogonal whenever \(i \neq j\). Thus, \(\overline{W}_1 + \overline{W}_2 + \overline{W}_3 \succeq 0\) if and only if \(\overline{W}_1, \overline{W}_2\), and \(\overline{W}_3\) are all positive semidefinite. Now observe that \[\begin{align} \overline{W}_1 \succeq 0 &\iff \begin{bmatrix} c & -c \\ -c & c \end{bmatrix} \succeq 0 \iff \textrm{(S1) holds},\\ \overline{W}_2 \succeq 0 &\iff \begin{bmatrix} a-c & c-a \\ c-a & b-d \end{bmatrix} \succeq 0 \iff \textrm{(S2) and (S3) hold},\\ \overline{W}_3 \succeq 0 &\iff \begin{bmatrix} 2a + (k-2)c - 2k a^2 & 2(k-1)(a-c) - 2kab \\ 2(k-1)(a-c) - 2kab & 2b + 2(k-1)d - 2kb^2\end{bmatrix} \succeq 0 \iff \textrm{(S4) and (S5) hold}. \end{align}\] Thus, the claim follows. ◻

Next, for convenience, define \(q_k \mathrel{\vcenter{:}}= 1-\sqrt{\frac{k}{2k-2}}\), and \[p_k(x,y) \mathrel{\vcenter{:}}=(2x^2-x)+2q_k^2(y^2-y)+4q_kxy.\] Notice that the curve \(p_k(x,y) = 0\) is a parabola for all \(k \geq 3\). Then, using Lemma 10, we have the following.

Proposition 5. For every \(k \geq 4\), \[\label{prop64eq0} \Phi(\mathop{\mathrm{LS}}_+(H_k)) \supseteq \left\{ (x,y) \in \mathbb{R}^2: p_k(x,y) \leq 0, x+y \leq 1, x \geq 0, y \geq 0\right\}.\qquad{(1)}\]

Proof. For convenience, let \(C\) denote the set on the right hand side of ?? . Notice that the boundary points of the triangle \(\left\{ (x,y) : x+y \leq 1, x \geq 0,y \geq 0\right\}\) which lie in \(C\) are also boundary points of \(\Phi(\mathop{\mathrm{STAB}}(H_k))\). Thus, let us define the set of points \[C_0 \mathrel{\vcenter{:}}=\left\{ (x,y) \in \mathbb{R}^2: p_k(x,y) = 0, \frac{1}{k} < x < \frac{1}{2}\right\}.\] To prove our claim, it suffices to prove that for all \((a,b) \in C_0\), there exist \(c,d \in \mathbb{R}\) such that \(W_k(a,b,c,d)\) certifies \(w_k(a,b) \in \mathop{\mathrm{LS}}_+(H_k)\).

Figure 3: Visualizing the set \(C\) for the case \(k=10\)

To help visualize our argument, Figure 3 (which is produced using Desmos’ online graphing calculator [32]) illustrates the set \(C\) for the case of \(k=10\). Now given \((a, b) \in \mathbb{R}^2\) (not necessarily in \(C_0\)), consider the conditions (S3) and (S5) from Lemma 10: \[\begin{align} \tag{9} b-a+c &\geq d,\\ \tag{10} (2a + (k-2)c - 2k a^2)(2b + 2(k-1)d - 2kb^2) - (2(k-1)(a-c) - 2kab)^ 2 & \geq 0. \end{align}\] If we substitute \(d = b-a+c\) into 10 and solve for \(c\) that would make both sides equal, we would obtain the quadratic equation \(p_2c^2 + p_1c + p_0 =0\) where \[\begin{align} p_2 \mathrel{\vcenter{:}}={}& (k-2)(2(k-1)) - (-2(k-1))^2,\\ p_1 \mathrel{\vcenter{:}}={}& (k-2)(2b + 2(k-1)(b-a)-2kb^2) + (2a - 2ka^2)(2(k-1))\\ & -2(-2(k-1))(2(k-1)a - 2kab),\\ p_0 \mathrel{\vcenter{:}}={}& (2a - 2ka^2)(2b + 2(k-1)(b-a)-2kb^2) - (2(k-1)a - 2kab)^2. \end{align}\] We then define \[c \mathrel{\vcenter{:}}=\frac{-p_1}{2p_2} = -a^2-2ab-\frac{b^{2}}{2}+\frac{3a}{2}+\frac{b}{2}+\frac{b\left(b-1\right)}{2\left(k-1\right)},\] and \(d \mathrel{\vcenter{:}}= b-a+c\). We claim that, for all \((a,b) \in C_0\), \(W_k(a,b,c,d)\) would certify \(w_k(a,b) \in \mathop{\mathrm{LS}}_+(H_k)\). First, we provide some intuition for the choice of \(c\). Let \(\overline{q}_k \mathrel{\vcenter{:}}= 1+ \sqrt{\frac{k}{2k-2}}\) and \[\overline{p}_k(x,y) \mathrel{\vcenter{:}}=(2x^2-x) + 2\overline{q}_k^2(y^2-y) + 4\overline{q}_kxy.\] Then, if we consider the discriminant \(\Delta p \mathrel{\vcenter{:}}= p_1^2 - 4p_0p_2\), one can check that \[\Delta p = 4(k-1)^2 p_k(a,b) \overline{p}_k(a,b).\] Thus, when \(\Delta p > 0\), there would be two solutions to the quadratic equation \(p_2x^2 + p_1x + p_0 = 0\), and \(c\) would be defined as the midpoint of these solutions. In particular, when \((a,b) \in C_0\), \(p_k(a,b) = \Delta p = 0\), and so \(c = \frac{-p_1}{2p_2}\) would indeed be the unique solution that satisfies both 9 and 10 with equality.

Now we verify that \(Y \mathrel{\vcenter{:}}= W_k(a,b,c,d)\), as defined, satisfies all the conditions imposed by \(\mathop{\mathrm{LS}}_+\). We first show that \(W_k(a,b,c,d) \succeq 0\) by verifying the conditions from Lemma 10. Notice that (S3) and (S5) must hold by the choice of \(c\) and \(d\). Next, we check (S1), namely \(c \geq 0\). Define the region \[T \mathrel{\vcenter{:}}=\left\{ (x,y) \in \mathbb{R}^2 : \frac{1}{k} \leq x \leq \frac{1}{2}, \frac{(1-2x)(k-1)}{k-2} \leq y \leq 1 - x\right\}.\] In other words, \(T\) is the triangular region with vertices \(\left(\frac{1}{k}, \frac{k-1}{k} \right), \left(\frac{1}{2},0 \right)\), and \(\left(\frac{1}{2}, \frac{1}{2} \right)\). Thus, \(T\) contains \(C_0\) and it suffices to show that \(c \geq 0\) over \(T\). Fixing \(k\) and viewing \(c\) as a function of \(a\) and \(b\), we obtain \[\frac{ \partial c}{\partial a} = -2a-2b+\frac{3}{2}, \quad \frac{ \partial c}{\partial b} = \frac{(-4a-2b+1)k +4a +4b-2}{2k-2}.\] Solving \(\frac{ \partial c}{\partial a} = \frac{ \partial c}{\partial b} = 0\), we obtain the unique solution \((a,b) = \left( \frac{-k+2}{4k}, \frac{4k-2}{4k} \right)\), which is outside of \(T\). Next, one can check that \(c\) is non-negative over the three edges of \(T\), and we conclude that \(c \geq 0\) over \(T\), and thus (S1) holds. The same approach also shows that both \(a-c\) and \(2a+(k-2)c-2ka^2\) are non-negative over \(T\), and thus (S2) and (S4) hold as well, and we conclude that \(Y \succeq 0\).

Next, we verify that \(Ye_i, Y(e_0-e_i) \in \mathop{\mathrm{cone}}(\mathop{\mathrm{FRAC}}(H_k))\). By the symmetry of \(H_k\), it suffices to verify these conditions for the vertices \(i=1_0\) and \(i=1_1\).

\(Ye_{1_0}\): Define \(S_1 \mathrel{\vcenter{:}}=\left\{1_0, 1_2\right\} \cup \left\{i_1 : 2 \leq i \leq k\right\}\) and \(S_2 \mathrel{\vcenter{:}}=[k]_0\). Observe that both \(S_1, S_2\) are stable sets of \(H_k\), and that \[\label{prop64Ye1} Ye_{1_0} = (a-c) \begin{bmatrix} 1 \\ \chi_{S_1} \end{bmatrix} + c \begin{bmatrix} 1 \\ \chi_{S_2} \end{bmatrix},\tag{11}\] Since we verified above that \(c \geq 0\) and \(a-c \geq 0\), \(Ye_{1_0} \in \mathop{\mathrm{cone}}(\mathop{\mathrm{STAB}}(H_k))\), which is contained in \(\mathop{\mathrm{cone}}(\mathop{\mathrm{FRAC}}(H_k))\).

\(Ye_{1_1}\): The non-trivial edge inequalities imposed by \(Ye_{1_1} \in \mathop{\mathrm{cone}}(\mathop{\mathrm{FRAC}}(H_k))\) are \[\begin{align} \tag{12} [Ye_{1_1}]_{2_2} + Y[e_{1_1}]_{3_0} \leq [Ye_{1_1}]_{0} & \Rightarrow 2(a-c) \leq b, \\ \tag{13} [Ye_{1_1}]_{2_0} + [Ye_{1_1}]_{2_1} \leq [Ye_{1_1}]_{0} & \Rightarrow a-c+d \leq b. \end{align}\] Note that 13 is identical to (S3), which we have already established. Next, we know from (S4) that \(c \geq \frac{2ka^2-2a}{k-2}\). That together with the fact that \(2(k-1)a+(k-2)b \geq k-1\) for all \((a,b) \in C_0\) and \(k \geq 4\) implies 12 .

\(Y(e_0-e_{1_0})\): The non-trivial edge inequalities imposed by \(Y(e_0-e_{1_0}) \in \mathop{\mathrm{cone}}(\mathop{\mathrm{FRAC}}(H_k))\) are \[\begin{align} \tag{14} [Y(e_0-e_{1_0})]_{2_2} + [Y(e_0-e_{1_0})]_{3_0} \leq [Y(e_0-e_{1_0})]_{0} & \Rightarrow a + (a-c) \leq 1-a, \\ \tag{15} [Y(e_0-e_{1_0})]_{2_1} + [Y(e_0-e_{1_0})]_{2_2} \leq [Y(e_0-e_{1_0})]_{0} & \Rightarrow (b-a+c)+ a \leq 1-a. \end{align}\] 14 follows from 12 and the fact that \(a -c \geq 0\). For 15 , we aim to show that \(a+b+c \leq 1\). Define the quantity \[g(x,y) \mathrel{\vcenter{:}}= 1-\frac{5}{2}x-\frac{3}{2}y+x^{2}+\frac{1}{2}y^{2}+2xy-\frac{y\left(y-1\right)}{2\left(k-1\right)}.\] Then \(g(a,b) = 1-a-b-c\). Notice that, for all \(k\), the curve \(g(x,y) = 0\) intersects with \(C\) at exactly three points: \((0,1), \left( \frac{1}{k}, \frac{k-1}{k} \right)\), and \(\left(\frac{1}{2} , 0\right)\). In particular, the curve does not intersect the interior of \(C\). Therefore, \(g(x,y)\) is either non-negative or non-positive over \(C\). Since \(g(0,0) = 1\), it is the former. Hence, \(g(x,y) \geq 0\) over \(C\) (and hence \(C_0\)), and 15 holds.

\(Y(e_0-e_{1_1})\): The non-trivial edge inequalities imposed by \(Y(e_0-e_{1_1}) \in \mathop{\mathrm{cone}}(\mathop{\mathrm{FRAC}}(H_k))\) are \[\begin{align} \tag{16} [Y(e_0-e_{1_1})]_{1_0} + [Y(e_0-e_{1_1})]_{2_2} \leq [Y(e_0-e_{1_1})]_{0} & \Rightarrow a + c \leq 1-b, \\ \tag{17} [Y(e_0-e_{1_1})]_{2_0} + [Y(e_0-e_{1_1})]_{2_1} \leq [Y(e_0-e_{1_1})]_{0} & \Rightarrow c + (b-d) \leq 1-b. \end{align}\] 16 is identical to 14 , which we have verified above. Finally, 17 follows from (S3) and the fact that \(a+b \leq 1\) for all \((a,b) \in C\).

This completes the proof. ◻

An immediate consequence of Proposition 5 is the following.

Corollary 1. For all \(k \geq 4\), \(r_+(H_k) \geq 2\).

Proof. For every \(k \geq 4\), the set described in ?? is not equal to \(\Phi(\mathop{\mathrm{STAB}}(H_k))\). Thus, there exists \(w_k(a,b) \in \mathop{\mathrm{LS}}_+(H_k) \setminus \mathop{\mathrm{STAB}}(H_k)\) for all \(k \geq 4\), and the claim follows. ◻

Corollary 1 is sharp — notice that destroying any vertex in \(H_3\) yields a bipartite graph, so it follows from Theorem 2(i) that \(r_+(H_3)=1\). Also, since destroying a vertex in \(H_4\) either results in \(H_3\) or a bipartite graph, we see that \(r_+(H_4) = 2\).

4.2 Showing \(r_+(H_k) = \Theta(k)\)↩︎

We now develop a few more tools that we need to establish the main result of this section. Again, to conclude that \(r_+(H_k) > p\), it suffices to show that \(\Phi(\mathop{\mathrm{LS}}_+^p(H_k)) \supset \Phi(\mathop{\mathrm{STAB}}(H_k))\). In particular, we will do so by finding a point in \(\Phi(\mathop{\mathrm{LS}}_+^p(H_k)) \setminus \Phi(\mathop{\mathrm{STAB}}(H_k))\) that is very close to the point \(\left(\frac{1}{k}, \frac{k-1}{k}\right)\). Given \((a,b) \in \mathbb{R}^2\), let \[s_k(a,b) \mathrel{\vcenter{:}}=\frac{\frac{k-1}{k} - b}{\frac{1}{k} - a}.\] That is, \(s_k(a,b)\) is the slope of the line that contains the points \((a,b)\) and \(\left(\frac{1}{k}, \frac{k-1}{k}\right)\). Next, define \[f(k, p) \mathrel{\vcenter{:}}=\sup \left\{ s_k(a,b) : (a,b) \in \Phi(\mathop{\mathrm{LS}}_+^p(H_k)), a > \frac{1}{k}\right\}.\] In other words, \(f(k,p)\) is the slope of the tangent line to \(\Phi(\mathop{\mathrm{LS}}_+^p(H_k))\) at the point \(\left(\frac{1}{k}, \frac{k-1}{k}\right)\) towards the right hand side. Thus, for all \(\ell < f(k,p)\), there exists \(\varepsilon > 0\) where the point \(\left( \frac{1}{k} + \varepsilon, \frac{k-1}{k} + \ell \varepsilon \right)\) belongs to \(\Phi(\mathop{\mathrm{LS}}_+^p(H_k))\). For \(p=0\) (and so \(\mathop{\mathrm{LS}}_+^p(H_k) = \mathop{\mathrm{FRAC}}(H_k)\)), observe that \(f(k, 0) = -1\) for all \(k \geq 2\) (attained by the point \(\left( \frac{1}{2}, \frac{1}{2} \right))\). Next, for \(p=1\), consider the polynomial \(p_k(x,y)\) defined before Proposition 5. Then any point \((x,y)\) on the curve \(p_k(x,y) = 0\) has slope \[\frac{\partial}{\partial x} p_k(x,y) = \frac{1-4x- 4q_k y}{4q_k^2y - q_k + 4q_kx}.\] Thus, by Proposition 5, \[\label{dydx:eq1} f(k,1) \geq \left. \frac{\partial}{\partial x} p_k(x,y) \right|_{(x,y) = \left(\frac{1}{k}, \frac{k-1}{k}\right)} = -1 - \frac{k}{3k^2- 2(k-1)^2 \sqrt{ \frac{2k}{k-1}} -4k}\tag{18}\] for all \(k \geq 4\). Finally, if \(p \geq r_+(H_k)\), then \(f(k,p) = -\frac{2(k-1)}{k-2}\) (attained by the point \(\left( \frac{1}{2},0 \right) \in \Phi(\mathop{\mathrm{STAB}}(H_k))\)).

We will prove our \(\mathop{\mathrm{LS}}_+\)-rank lower bound on \(H_k\) by showing that \(f(k,p) > -\frac{2(k-1)}{k-2}\) for some \(p = \Theta(k)\). To do so, we first show that the recursive structure of \(H_k\) allows us to establish \((a,b) \in \Phi(\mathop{\mathrm{LS}}_+^p(H_k))\) by verifying (among other conditions) the membership of two particular points in \(\Phi(\mathop{\mathrm{LS}}_+^{p-1}(H_{k-1}))\), which will help us relate the quantities \(f(k-1,p-1)\) and \(f(k,p)\). Next, we bound the difference \(f(k-1,p-1) - f(k,p)\) from above, which implies that it takes \(\mathop{\mathrm{LS}}_+\) many iterations to knock the slopes \(f(k,p)\) from that of \(\Phi(\mathop{\mathrm{FRAC}}(H_k))\) down to that of \(\Phi(\mathop{\mathrm{STAB}}(H_k))\).

First, here is a tool that will help us verify certificate matrices recursively.

Lemma 11. Suppose \(a,b,c,d \in \mathbb{R}\) satisfy all of the following:

\(W_k(a,b,c,d) \succeq 0\),

\(2b+ 2c -d \leq 1\),

\(w_{k-1}\left(\frac{a-c}{b}, \frac{d}{b} \right), w_{k-1}\left(\frac{a-c}{1-a-c}, \frac{b-a+c}{1-a-c}\right) \in \mathop{\mathrm{LS}}_+^{p-1}(H_{k-1})\).

Then \(W_k(a,b,c,d)\) certifies \(w_k(a,b) \in \mathop{\mathrm{LS}}_+^p(H_k)\).

Proof. For convenience, let \(Y \mathrel{\vcenter{:}}= W_k(a,b,c,d)\). First, \(Y \succeq 0\) from (i). Next, we focus on the following column vectors:

\(Ye_{1_0}\): \(Y \succeq 0\) implies that \(c \geq 0\) and \(a-c \geq 0\) by Lemma 10. Then it follows from 11 that \(Ye_{1_0} \in \mathop{\mathrm{cone}}(\mathop{\mathrm{STAB}}(H_k)) \subseteq \mathop{\mathrm{cone}}(\mathop{\mathrm{LS}}_+^{p-1}(H_k))\).

\(Ye_{1_1}\): (iii) implies \(\begin{bmatrix} b \\ w_{k-1}(a-c, d) \end{bmatrix} \in \mathop{\mathrm{cone}}(\mathop{\mathrm{LS}}_+^{p-1}(H_{k-1}))\). Thus, \[Ye_{1_1}= \begin{bmatrix} b \\ 0 \\ b \\ 0 \\ w_{k-1}(a-c,d) \end{bmatrix} \in \mathop{\mathrm{cone}}(\mathop{\mathrm{LS}}_+^{p-1}(H_k)).\]

\(Y(e_0-e_{1_0})\): Let \(S_1 \mathrel{\vcenter{:}}=[k]_2\), which is a stable set in \(H_k\). Then observe that \[Y(e_0 - e_{1_0}) = c \begin{bmatrix} 1 \\ \chi_{S_1} \end{bmatrix} + \begin{bmatrix} 1 - a -c \\ 0 \\ b \\ 0 \\ w_{k-1}(a-c, b-a+c) \end{bmatrix}.\] By (iii) and the fact that \(\mathop{\mathrm{cone}}(\mathop{\mathrm{LS}}_+^{p-1}(H_k))\) is a convex cone, it follows that \(Y(e_0-e_{1_0}) \in \mathop{\mathrm{cone}}(\mathop{\mathrm{LS}}_+^{p-1}(H_k))\).

\(Y(e_0-e_{1_1})\): Define \(S_2 \mathrel{\vcenter{:}}=[k]_0, S_3 \mathrel{\vcenter{:}}=\left\{1_0, 1_2\right\} \cup \left\{i_1 : 2 \leq i \leq k\right\}\), and \(S_4 \mathrel{\vcenter{:}}=\left\{i_1 : 2 \leq i \leq k\right\}\), which are all stable sets in \(H_k\). Now observe that \[\begin{align} Y(e_0 - e_{1_1}) {}=& c \begin{bmatrix} 1 \\ \chi_{S_1} \end{bmatrix}+ c \begin{bmatrix} 1 \\ \chi_{S_2} \end{bmatrix} + (a-c) \begin{bmatrix} 1 \\ \chi_{S_3} \end{bmatrix} + (b-d-a+c) \begin{bmatrix} 1 \\ \chi_{S_4} \end{bmatrix} \\ & + (1-2b- 2c+d)\begin{bmatrix} 1 \\ 0 \end{bmatrix}. \end{align}\] Since \(Y \succeq 0\), \(b-d-a+c \geq 0\) from (S3). Also, \(1-2b-2c+d \geq 0\) by (ii). Thus, \(Y(e_0-e_{1_1})\) is a sum of vectors in \(\mathop{\mathrm{cone}}(\mathop{\mathrm{STAB}}(H_k))\), and thus belongs to \(\mathop{\mathrm{cone}}(\mathop{\mathrm{LS}}_+^{p-1}(H_k))\).

By the symmetry of \(H_k\) and \(W_k(a,b,c,d)\), it suffices to verify the membership conditions for the above columns. Thus, it follows that \(W_k(a,b,c,d)\) indeed certifies \(w_k(a,b) \in \mathop{\mathrm{LS}}_+^p(H_k)\). ◻

Example 1. We illustrate Lemma 11 by using it to show that \(r_+(H_7) \geq 3\). Let \(k = 7, a = 0.1553, b = 0.8278, c = 0.005428\), and \(d = 0.6665\). Then one can check (via Lemma 10) that \(W_k(a,b,c,d) \succeq 0\), and \(2b+2c-d \leq 1\). Also, one can check that \(w_{k-1}\left(\frac{a-c}{b}, \frac{d}{b}\right)\) and \(w_{k-1}\left(\frac{a-c}{1-a-c}, \frac{b-a+c}{1-a-c}\right)\) both belong to \(\mathop{\mathrm{LS}}_+(H_{k-1})\) using Proposition 5. Thus, Lemma 11 applies, and \(w_k(a,b) \in \mathop{\mathrm{LS}}_+^2(H_k)\). Now observe that \(2(k-1)a +(k-2)b = 6.0026 > k-1\), and so \(w_k(a,b) \not\in \mathop{\mathrm{STAB}}(H_k)\), and we conclude that \(r_+(H_7) \geq 3\).

Next, we apply Lemma 11 iteratively to find a lower bound for the \(\mathop{\mathrm{LS}}_+\)-rank of \(H_k\) as a function of \(k\). The following is an updated version of Lemma 11 that gets us a step closer to directly relating \(f(k,p)\) and \(f(k-1,p-1)\).

Lemma 12. Suppose \(a,b,c,d \in \mathbb{R}\) satisfy all of the following:

\(W_k(a,b,c,d) \succeq 0\),

\(2b+ 2c -d \leq 1\),

\(\max\left\{ s_{k-1}\left(\frac{a-c}{b}, \frac{d}{b} \right), s_{k-1}\left(\frac{a-c}{1-a-c}, \frac{b-a+c}{1-a-c} \right)\right\} \leq f(k-1, p-1)\).

Then \(f(k,p) \geq s_k(a,b)\).

Proof. Given \(a,b,c,d \in \mathbb{R}\) that satisfy the given assumptions, define \[\begin{align} a(\lambda) & \mathrel{\vcenter{:}}=\frac{\lambda}{k} + (1-\lambda)a, & b(\lambda) & \mathrel{\vcenter{:}}=\frac{\lambda(k-1)}{k} + (1-\lambda)b, \\ c(\lambda) & \mathrel{\vcenter{:}}=(1-\lambda)c, & d(\lambda) & \mathrel{\vcenter{:}}=\frac{\lambda(k-2)}{k} + (1-\lambda)d. \end{align}\] Then notice that \[\label{lem67eq1} W_k(a(\lambda),b(\lambda),c(\lambda),d(\lambda)) = \lambda W_k\left( \frac{1}{k}, \frac{k-1}{k}, 0, \frac{k-2}{k} \right) + (1-\lambda) W_k(a,b,c,d).\tag{19}\] Observe (e.g., via Lemma 10) that \(W_k\left( \frac{1}{k}, \frac{k-1}{k}, 0, \frac{k-2}{k} \right) \succeq 0\) for all \(k \geq 2\). Since \(W_k(a,b,c,d) \succeq 0\) from (i), it follows from 19 and the convexity of the positive semidefinite cone that \[W_k(a(\lambda),b(\lambda),c(\lambda),d(\lambda)) \succeq 0\] for all \(\lambda\in [0,1]\).

Now observe that for all \(\lambda> 0\), \(s_k(a(\lambda), b(\lambda)) = s_k(a,b)\), \(s_{k-1}\left(\frac{a(\lambda)-c(\lambda)}{b(\lambda)}, \frac{d(\lambda)}{b(\lambda)} \right) = s_{k-1}\left(\frac{a-c}{b}, \frac{d}{b} \right)\), and \(s_{k-1}\left(\frac{a(\lambda)-c(\lambda)}{1-a(\lambda)-c(\lambda)}, \frac{b(\lambda)-a(\lambda)+c(\lambda)}{1-a(\lambda)-c(\lambda)} \right)= s_{k-1}\left(\frac{a-c}{1-a-c}, \frac{b-a+c}{1-a-c} \right)\). By assumption (iii), there must be a sufficiently small \(\lambda>0\) where \(w_{k-1}\left(\frac{a(\lambda)-c(\lambda)}{b(\lambda)}, \frac{d(\lambda)}{b(\lambda)} \right)\) and \(w_{k-1}\left(\frac{a(\lambda)-c(\lambda)}{1-a(\lambda)-c(\lambda)}, \frac{b(\lambda)-a(\lambda)+c(\lambda)}{1-a(\lambda)-c(\lambda)} \right)\) are both contained in \(\mathop{\mathrm{LS}}_+^{p-1}(H_k)\). Then Lemma 11 implies that \(w_k( a(\lambda), b(\lambda)) \in \mathop{\mathrm{LS}}_+^p(H_k)\), and the claim follows. ◻

Next, we define four values corresponding to each \(k\) that will be important in our subsequent argument: \[\begin{align} u_1(k) & \mathrel{\vcenter{:}}=-\frac{2(k-1)}{k-2}, & u_2(k) & \mathrel{\vcenter{:}}=\frac{k-4- \sqrt{17k^2-48k+32}}{2(k-2)},\\ u_3(k) & \mathrel{\vcenter{:}}=\frac{4(k-1)(-3k+4-2\sqrt{k-1}) }{(k-2)(9k-10)}, & u_4(k) & \mathrel{\vcenter{:}}=-1 - \frac{k}{3k^2- 2(k-1)^2 \sqrt{ \frac{2k}{k-1}} -4k}. \end{align}\]

Notice that \(u_1(k) = f(k,p)\) for all \(p \geq r_+(H_k)\), and \(u_4(k)\) is the expression given in 18 , the lower bound for \(f(k,1)\) that follows from Proposition 5. Then we have the following.

Lemma 13. For every \(k \geq 5\), \[u_1(k) < u_2(k) < u_3(k) < u_4(k).\]

Proof. First, one can check that the chain of inequalities holds when \(5 \leq k \leq 26\), and that \[\label{lem69eq1} -2 < u_2(k) < \frac{1-\sqrt{17}}{2} < u_3(k) < -\frac{4}{3} < u_4(k)\tag{20}\] holds for \(k=27\). Next, notice that \[\lim_{k \to \infty} u_1(k) = -2, \quad \lim_{k \to \infty} u_2(k) = \frac{1-\sqrt{17}}{2}, \quad \lim_{k \to \infty} u_3(k) = -\frac{4}{3},\] and that \(u_i(k)\) is an increasing function of \(k\) for all \(i \in [4]\).

Thus, 20 in fact holds for all \(k \geq 27\), and our claim follows. ◻

Now we are ready to prove the following key lemma which bounds the difference between \(f(k-1,p-1)\) and \(f(k,p)\).

Lemma 14. Given \(k \geq 5\) and \(\ell \in (u_1(k), u_3(k))\), let \[\gamma \mathrel{\vcenter{:}}=(k-2)(9k-10)\ell^2 + 8(k-1)(3k-4)\ell + 16(k-1)^2,\] and \[h(k,\ell) \mathrel{\vcenter{:}}=\frac{4(k-2) \ell + 8(k-1)}{ \sqrt{\gamma} + 3(k-2)\ell + 8(k-1)} - 2 - \ell.\] If \(f(k-1, p-1) \leq \ell+ h(k,\ell)\), then \(f(k,p) \leq \ell\).

Proof. Given \(\varepsilon > 0\), define \(a \mathrel{\vcenter{:}}=\frac{1}{k} + \varepsilon\) and \(b \mathrel{\vcenter{:}}=\frac{k-1}{k} + \ell \varepsilon\). We solve for \(c,d\) so that they satisfy condition (ii) in Lemma 12 and (S5) in Lemma 10 with equality. That is, \[\begin{align} \tag{21} d-2b-2c &= 1, \\ \tag{22} (2a + (k-2)c - 2k a^2)(2b + 2(k-1)d - 2kb^2) - (2(k-1)(a-c) - 2kab)^2 &= 0. \end{align}\] To do so, we substitute \(d = 2b+2c-1\) into 22 , and obtain the quadratic equation \[p_2c^2 + p_1c + p_0 =0\] where \[\begin{align} p_2 \mathrel{\vcenter{:}}={}& (k-2)(4(k-1)) - (-2(k-1))^2,\\ p_1 \mathrel{\vcenter{:}}={}&(k-2)(2b + 2(k-1)(2b-1) -2kb^2) + (2a - 2ka^2)(4(k-1)) \\ & -2(-2(k-1))(2(k-1)a - 2kab),\\ p_0 \mathrel{\vcenter{:}}={}& (2a - 2ka^2)(2b + 2(k-1)(2b-1) -2kb^2) - (2(k-1)a - 2kab)^2. \end{align}\] We then define \(c \mathrel{\vcenter{:}}=\frac{-p_1 + \sqrt{p_1^2 - 4p_0p_2}}{2p_2}\) (this would be the smaller of the two solutions, as \(p_2 < 0\)), and \(d \mathrel{\vcenter{:}}= 2b+2c-1\). First, we assure that \(c\) is well defined. If we consider the discriminant \(\Delta p \mathrel{\vcenter{:}}= p_1^2 - 4p_0p_2\) as a function of \(\varepsilon\), then \(\Delta p(0) = 0\), and that \(\frac{d^2}{d \varepsilon^2} \Delta p(0) > 0\) for all \(\ell \in ( u_1(k), u_3(k))\). Thus, there must exist \(\varepsilon > 0\) where \(\Delta p \geq 0\), and so \(c,d\) are well defined.

Next, we verify that \(W_k(a,b,c,d) \succeq 0\) for some \(\varepsilon > 0\) by checking the conditions from Lemma 10. First, by the choice of \(c,d\), (S5) must hold. Next, define the quantities \[\begin{align} \theta_1 & \mathrel{\vcenter{:}}= c, & \theta_2 & \mathrel{\vcenter{:}}= a-c,\\ \theta_3 & \mathrel{\vcenter{:}}= b-d-a+c, & \theta_4 & \mathrel{\vcenter{:}}= 2a + (k-2)c - 2k a^2. \end{align}\] Notice that at \(\varepsilon = 0\), \(\theta_i = 0\) for all \(i \in [4]\). Next, given a quantity \(q\) that depends on \(\varepsilon\), we use the notation \(q'(0)\) denote the one-sided derivative \(\lim_{\varepsilon \to 0^+} \frac{q}{\varepsilon}\). Then it suffices to show that \(\theta_i'(0) \geq 0\) for all \(i \in [4]\). Observe that \[\begin{align} \theta_1'(0) \geq 0 &\iff c'(0) \geq 0, & \theta_2'(0) \geq 0 &\iff c'(0) \leq 1,\\ \theta_3'(0) \geq 0 &\iff c'(0) \leq -1 - \ell , & \theta_4'(0) \geq 0 &\iff c'(0) \geq \frac{2}{k-2}. \end{align}\] Now one can check that \[c'(0) = \frac{-3k \ell-\sqrt{\gamma} -4k+2\ell+4}{4k-4}.\] As a function of \(\ell\), \(c'(0)\) is increasing over \((u_1(k), u_3(k))\), with \[\begin{align} \left. c'(0)\right|_{\ell = u_1(k)} &= \frac{2}{k-2}, & \left. c'(0)\right|_{\ell = u_3(k)} &= \frac{(6k-4)\sqrt{k-1}+10k-12}{(k-2)(9k-10)}. \end{align}\] Thus, for all \(k \geq 5\), we see that \(\frac{2}{k-2} \leq c'(0) \leq \min\left\{1, -1-\ell\right\}\) for all \(\ell \in (u_1(k), u_3(k))\), and so there exists \(\varepsilon > 0\) where \(W_k(a,b,c,d) \succeq 0\).

Next, for convenience, let \[\begin{align} s_1 & \mathrel{\vcenter{:}}= s_{k-1}\left(\frac{a-c}{b}, \frac{d}{b} \right), & s_2 & \mathrel{\vcenter{:}}= s_{k-1}\left(\frac{a-c}{1-a-c}, \frac{b-a+c}{1-a-c} \right). \end{align}\] Notice that both \(s_1, s_2\) are undefined at \(\varepsilon = 0\), as \(\left(\frac{a-c}{b}, \frac{d}{b} \right) = \left(\frac{a-c}{1-a-c}, \frac{b-a+c}{1-a-c} \right) = \left( \frac{1}{k-1}, \frac{k-2}{k-1} \right)\) in this case. Now one can check that \[\begin{align} \lim_{\varepsilon \to 0^+} s_1 &= \frac{-2 \sqrt{\gamma}-2(k-2)\ell - 8(k-1)}{\sqrt{\gamma} +3(k-2)\ell + 8(k-1)},\\ \lim_{\varepsilon \to 0^+} s_2 &= \frac{(-2k+3) \sqrt{\gamma}-(2k-1)(k-2)\ell - 8(k-1)^2}{(k-2)\sqrt{\gamma} +(3k-2)(k-2)\ell + 8(k-1)^2}. \end{align}\] Observe that for \(k \geq 5\) and for all \(\ell \in (u_1(k), 0)\), we have \[\begin{align} 0 &> \frac{-2 \sqrt{\gamma}}{ \sqrt{\gamma}} > \frac{(-2k+3) \sqrt{\gamma}}{(k-2) \sqrt{\gamma}}, \\ 0 &> \frac{-2(k-2)\ell - 8(k-1)}{3(k-2)\ell + 8(k-1)} > \frac{-(2k-1)(k-2)\ell - 8(k-1)^2}{(3k-2)(k-2)\ell + 8(k-1)^2}. \end{align}\] Thus, we conclude that for all \(k, \ell\) under our consideration, \(s_1 \geq s_2\) for arbitrarily small \(\varepsilon > 0\).

Now, notice that \(h(k,\ell) = \lim_{\varepsilon \to 0^+} s_1 - \ell\). Thus, if \(\ell \in (u_1(k), u_3(k))\), then there exists \(\varepsilon > 0\) where the matrix \(W_k(a,b,c,d)\) as constructed is positive semidefinite, satisfies \(d \geq 2b+2c-1\) (by the choice of \(c,d\)), with \(s_2 \leq s_1 \leq h(k,\ell) + \ell\). Hence, if \(f(k-1, p-1) \geq \ell + h(k,\ell)\), then Lemma 12 applies, and we obtain that \(f(k,p) \geq \ell\). ◻

Applying Lemma 14 iteratively, we obtain the following.

Lemma 15. Given \(k \geq 5\), suppose there exists \(\ell_1, \ldots, \ell_p \in \mathbb{R}\) where

\(\ell_p > u_1(k)\), \(\ell_2 < u_3(k-p+2)\), and \(\ell_1 < u_4(k-p+1)\);

\(\ell_i + h(k-p+i, \ell_i) \leq \ell_{i-1}\) for all \(i \in \left\{2, \ldots, p\right\}\).

Then \(r_+(H_k) \geq p+1\).

Proof. First, notice that \(\ell_1 < u_4(k-p+1) \leq f(k-p+1,1)\) by Proposition 5. Then since \(\ell_2 < u_3(k-p+2)\) and \(\ell_2 + h(k-p+2, \ell_2) \leq \ell_1\), Lemma 14 implies that \(\ell_2 \leq f(k-p+2, 2)\). Iterating this argument results in \(\ell_i \leq f(k-p+i, i)\) for every \(i \in [p]\). In particular, we have \(\ell_p \leq f(k,p)\). Since \(\ell_p > u_1(k)\), it follows that \(r_+(H_k) > p\), and the claim follows. ◻

Lemmas 14 and 15 provide a simple procedure of establishing \(\mathop{\mathrm{LS}}_+\)-rank lower bounds for \(H_k\).

Example 2. Let \(k = 7\). Then \(\ell_2 = -2.39\) and \(\ell_1 = \ell_2 + h(7,\ell_2)\) certify that \(r_+(H_7) \geq 3\). Similarly, for \(k = 10\), one can let \(\ell_3 = -2.24, \ell_2 = \ell_3+h(10,\ell_3)\), and \(\ell_1 = \ell_2 + h(9,\ell_2)\) and use Lemma 15 to verify that \(r_+(H_{10}) \geq 4\).

Next, we prove a lemma that will help us obtain a lower bound for \(r_+(H_k)\) analytically.

Lemma 16. For all \(k \geq 5\) and \(\ell \in (u_1(k), u_2(k))\), \(h(k,\ell) \leq \frac{2}{k-2}\).

Proof. One can check that the equation \(h(k,\ell) = \frac{2}{k-2}\) has three solutions: \(\ell = u_1(k), u_2(k)\), and \(\frac{k-4- \sqrt{17k^2-48k+32}}{2(k-2)}\) (which is greater than \(u_2(k)\)). Also, notice that \(\left. \frac{\partial }{\partial \ell} h(k,\ell) \right|_{\ell = u_1(k)} = -\frac{1}{k-1} < 0\). Since \(h(k,\ell)\) is a continuous function of \(\ell\) over \((u_1(k), u_2(k))\), it follows that \(h(k,\ell) \leq \frac{2}{k-2}\) for all \(\ell\) in this range. ◻

We are finally ready to prove the main result of this section.

Theorem 6. The \(\mathop{\mathrm{LS}}_+\)-rank of \(H_k\) is

at least \(2\) for \(4 \leq k \leq 6\);

at least \(3\) for \(7 \leq k \leq 9\);

at least \(\lfloor 0.19(k-2)\rfloor + 3\) for all \(k \geq 10\).

Proof. First, \(r_+(H_4) \geq 2\) follows from Corollary 1, and \(r_+(H_7) \geq 3\) was shown in Example 1 and again in Example 2. Moreover, one can use the approach illustrated in Example 2 to verify that \(r_+(H_k) \geq \lfloor 0.19(k-2)\rfloor + 3\) for all \(k\) where \(10 \leq k \leq 49\). Thus, we shall assume that \(k \geq 50\) for the remainder of the proof.

Let \(q \mathrel{\vcenter{:}}=\lfloor 0.19(k-2)\rfloor\), let \(\varepsilon >0\) that we set to be sufficiently small, and define \[\ell_i \mathrel{\vcenter{:}}=\varepsilon + u_1(k) + \sum_{j=1}^{q+2-i} \frac{2}{k-1-j}.\] for every \(i \in [q+2]\). (We aim to subsequently apply Lemma 15 with \(p = q+2\).) Now notice that \[\sum_{j=1}^{q} \frac{2}{k-1-i} \leq \int_{k-2-q}^{k-2} \frac{2}{t}~dt = 2\ln\left( \frac{k-2}{k-2-q} \right),\] Also, notice that \[u_2(k-q) - u_1(k) \geq u_2\left(\frac{4}{5}k\right) - u_1(k),\] as \(u_2\) is an increasing function in \(k\) and \(q \leq \frac{k}{5}\). Also, one can check that \(\overline{w}(k) \mathrel{\vcenter{:}}= u_2\left(\frac{4}{5}k\right) - u_1(k)\) is also an increasing function for all \(k \geq 5\). Next, we see that \[2\ln\left( \frac{k-2}{k-2-q} \right) \leq \overline{w}(50) \iff q \leq \left( 1 - \frac{1}{\exp(\overline{w}(50) /2)} \right) (k-2)\] Since \(1 - \frac{1}{\exp(\overline{w}(50) /2)} > 0.19\), the first inequality does hold by the choice of \(q\). Hence, \[\ell_2 - \varepsilon = u_1(k) + \sum_{j=1}^{q} \frac{2}{k-1-j} < u_2(k-q).\] Thus, we can choose \(\varepsilon\) sufficiently small so that \(\ell_2 < u_2(k-q)\). Then Lemma 16 implies that \(\ell_i + h(k-q-2+i, \ell_i) \leq \ell_{i-1}\) for all \(i \in \left\{2, \ldots, q+2\right\}\). Also, for all \(k \geq 50\), \(u_2(k-q) + \frac{1}{k-q-1} < u_4(k-q-1)\). Thus, we obtain that \(\ell_1 < u_4(k-q-1)\), and it follows from Lemma 15 that \(r_+(H_k) \geq q+3\). ◻

Since \(H_k\) has \(3k\) vertices, Theorem 6 (and the fact that \(r_+(H_3) =1\)) readily implies Theorem 1. In other words, we now know that for every \(\ell \in \mathbb{N}\), there exists a graph on no more than \(16\ell\) vertices that has \(\mathop{\mathrm{LS}}_+\)-rank \(\ell\).

5 Chvátal–Gomory rank of \(\mathop{\mathrm{STAB}}(H_k)\)↩︎

In this section we determine the degree of hardness of \(\mathop{\mathrm{STAB}}(H_k)\) relative to another well-studied cutting plane procedure that is due to Chvátal [33] with earlier ideas from Gomory [34]. Given a set \(P \subseteq [0,1]^n\), if \(a^{\top}x \leq \beta\) is a valid inequality of \(P\) and \(a \in \mathbb{Z}^n\), we say that \(a^{\top}x \leq \lfloor \beta\rfloor\) is a Chvátal–Gomory cut for \(P\). Then we define \(\mathop{\mathrm{CG}}(P)\), the Chvátal–Gomory closure of \(P\), to be the set of points that satisfy all Chvátal–Gomory cuts for \(P\). Note that \(\mathop{\mathrm{CG}}(P)\) is a closed convex set which contains all integral points in \(P\). Furthermore, given an integer \(p \geq 2\), we can recursively define \(\mathop{\mathrm{CG}}^p(P) \mathrel{\vcenter{:}}=\mathop{\mathrm{CG}}( \mathop{\mathrm{CG}}^{p-1}(P))\). Then given any valid linear inequality of \(P_I\), we can define its \(\mathop{\mathrm{CG}}\)-rank (relative to \(P\)) to be the smallest integer \(p\) for which the linear inequality is valid for \(\mathop{\mathrm{CG}}^p(P)\).

In Section 4, we proved that the inequality 4 has \(\mathop{\mathrm{LS}}_+\)-rank \(\Theta(|V(H_k)|)\). This implies that the inequality 3 also has \(\mathop{\mathrm{LS}}_+\)-rank \(\Theta(|V(H_k)|)\) (since it was shown in the proof of Lemma 5(ii) that 4 is a non-negative linear combination of 3 ). Here, we show that 3 has \(\mathop{\mathrm{CG}}\)-rank \(\Theta(\log(|V(H_k)|))\).

Theorem 7. Let \(d\) be the \(\mathop{\mathrm{CG}}\)-rank of the facet 3 of \(\mathop{\mathrm{STAB}}(H_k)\) relative to \(\mathop{\mathrm{FRAC}}(H_k)\). Then \[\log_4 \left( \frac{3k-7}{2} \right) < d \leq \log_2\left(k-1\right).\]

Before providing a proof of Theorem 7, first, we need a lemma about the valid inequalities of \(\mathop{\mathrm{STAB}}(H_k)\).

Lemma 17. Suppose \(a^{\top}x \leq \beta\) is valid for \(\mathop{\mathrm{STAB}}(H_k)\) where \(a \in \mathbb{Z}_+^{V(H_k)} \setminus \left\{0\right\}\). Then \(\frac{\beta}{a^{\top}\bar{e}} > \frac{1}{3}\).

Proof. We consider two cases. First, suppose that \(a_{j_1} = 0\) for all \(j \in [k]\). Since \([k]_p\) is a stable set in \(H_k\) for \(p \in \left\{0,1,2\right\}\), observe that \[a^{\top}\bar{e} = a^{\top}\left(\chi_{[k]_0} + \chi_{[k]_1} + \chi_{[k]_2}\right) \leq \beta+ 0 + \beta= 2\beta.\] Thus, we obtain that \(\frac{\beta}{a^{\top}\bar{e}} \geq \frac{1}{2} > \frac{1}{3}\) in this case. Otherwise, we may choose \(j \in [k]\) where \(a_{j_1} > 0\). Consider the stable sets \[S_0 \mathrel{\vcenter{:}}=([k]_0 \setminus \left\{j_0\right\}) \cup \left\{j_1\right\}, S_1 \mathrel{\vcenter{:}}=([k]_1 \setminus \left\{j_1\right\}) \cup \left\{j_0, j_2\right\}, S_2 \mathrel{\vcenter{:}}=([k]_2 \setminus \left\{j_2\right\}) \cup \left\{j_1\right\}.\] Now \(\chi_{S_0} + \chi_{S_1} + \chi_{S_2} = \bar{e} + e_{j_1}\). Since \(a_{j_1} > 0\), this implies that \[a^{\top}\bar{e} < a^{\top} (\bar{e} + e_{j_1}) = a^{\top} \left( \chi_{S_0} + \chi_{S_1} + \chi_{S_2} \right) \leq 3\beta,\] and so \(\frac{\beta}{a^{\top}\bar{e}} > \frac{1}{3}\) in this case as well. ◻

We will also need the following result.