Revealing Secrets in SPARQL Session Level

September 13, 2020

Abstract

Based on Semantic Web technologies, knowledge graphs help users to discover information of interest by using live SPARQL services. Answer-seekers often examine intermediate results iteratively and modify SPARQL queries repeatedly in a search session. In this context, understanding user behaviors is critical for effective intention prediction and query optimization. However, these behaviors have not yet been researched systematically at the SPARQL session level. This paper reveals secrets of session-level user search behaviors by conducting a comprehensive investigation over massive real-world SPARQL query logs. In particular, we thoroughly assess query changes made by users w.r.t. structural and data-driven features of SPARQL queries. To illustrate the potentiality of our findings, we employ an application example of how to use our findings, which might be valuable to devise efficient SPARQL caching, auto-completion, query suggestion, approximation, and relaxation techniques in the future1.

1 Introduction↩︎

Semantic Web technologies enable an increasing amount of data to be published as knowledge graphs using RDF. SPARQL endpoints have emerged as useful platforms for accessing knowledge graphs via live SPARQL querying. Currently, there are billions of RDF triples available from hundreds of SPARQL endpoints 3. However, users often fail to express their information needs in one succinct query. This is due to their unfamiliarity with the ontology underlying the endpoints they query, or with SPARQL’s syntax. This finding has been corroborated by an analysis on the LSQ dataset [1], where 31.70% of the real-world queries posted to 4 different SPARQL endpoints contain parse errors and 21.42% of the queries produce zero answers. Therefore, SPARQL queries are continuously refined to retrieve satisfying results in practice. We can use techniques based on information about underlying data or query sequence history to assist users. The underlying data is informative and useful, but in some cases, historical queries are the main source of information that is available, e.g.., where we do not have access to data. In this paper, we provide session-level query analysis to enhance techniques based on query sequence history.

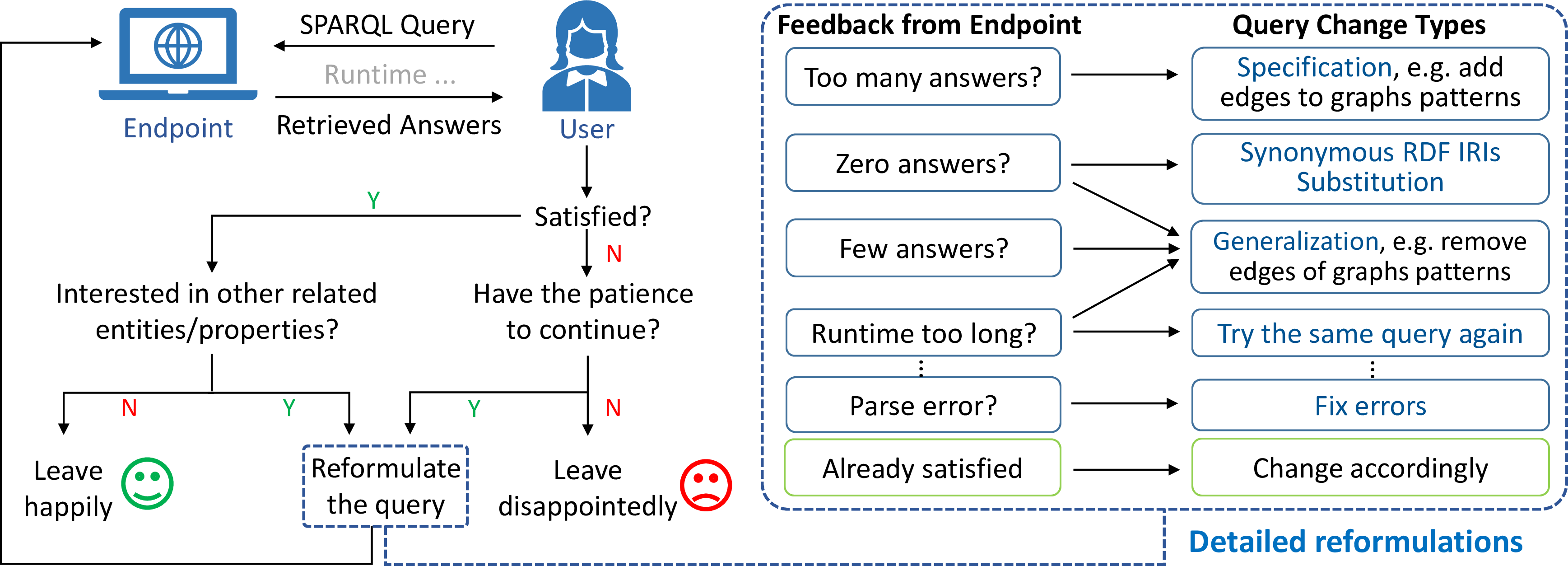

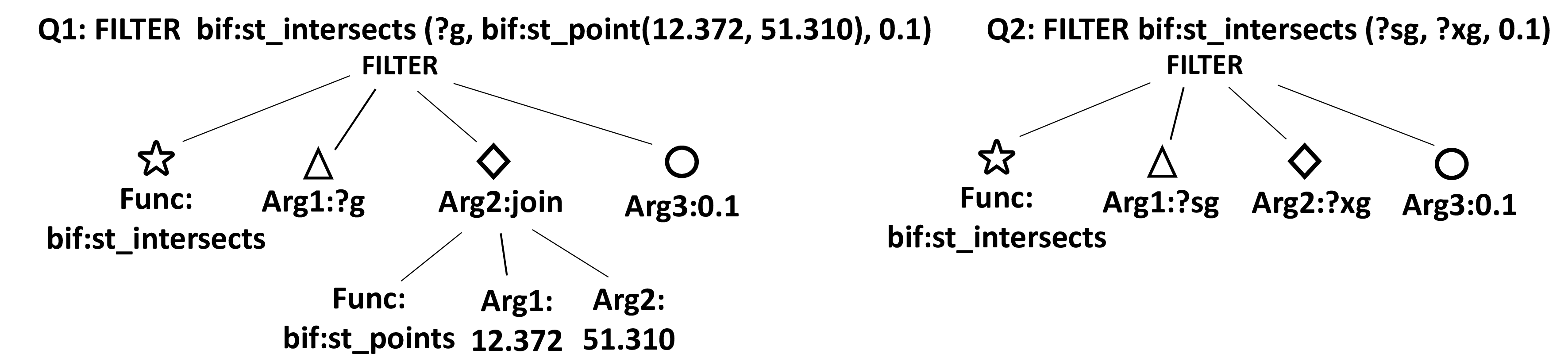

In the field of Information Retrieval (IR), the continuous query reformulation process is called a search session and has been well-studied to generate query suggestions and enhance user satisfaction by utilizing implicit (e.g.., query changes [2], clicks and dwell time in a certain website [3]), and explicit (e.g.., relevance scores [2]) user feedback. In a SPARQL search session, feedback from users is generally only revealed in query changes, which makes it more challenging to understand drifting user intentions. Fortunately, SPARQL queries contain richer information in query change types (Fig. 1) compared to the keyword queries in IR. Thus, a more detailed session-level analysis of the real-world SPARQL queries posted by users is both possible and of central importance for devising efficient caching mechanisms [4], query relaxation [5], [6], query approximation [7], query auto-completion [8], and query suggestion [9].

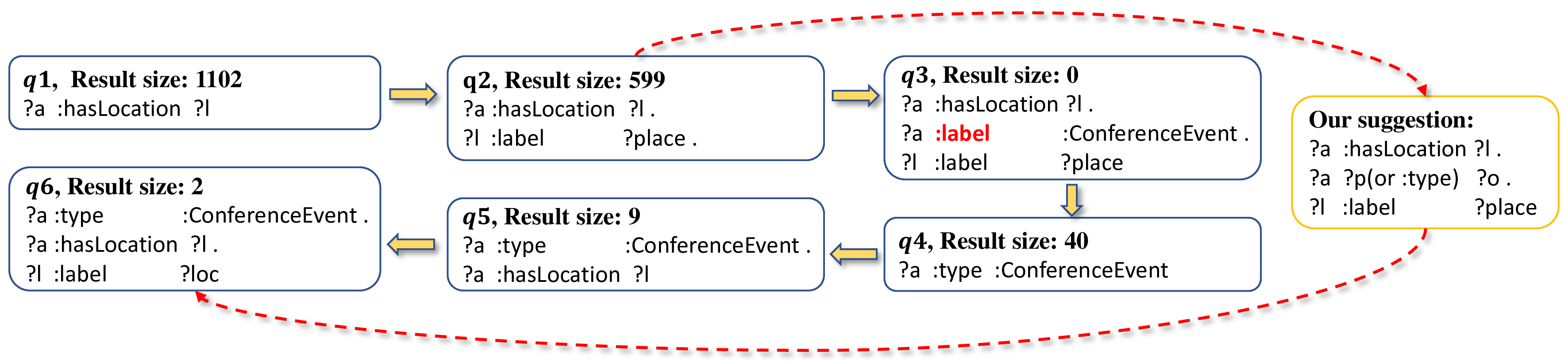

Figure 1: A typical SPARQL search session

Prior SPARQL query log analyses [1], [10]–[12] have focused on analyzing the structural features (e.g., usage of different SPARQL operators, triple patterns, types of joins) of queries in isolation. The potential correlations between queries in a search session have not been fully investigated. Similarities between queries within the same session have been reported [13], [14]. This property has been used in query augmentation [4] to retrieve closely related results. However, these works do not provide deeper insight into query changes within the search session. In addition, there has been no distinction made between robotic queries (i.e.., machine-generated) and organic (i.e.., human-generated) queries. Given that the distribution of queries in SPARQL endpoints is heavily dominated by robotic queries, in terms of volume and query load (over 99% in LSQ [1]), current studies on the similarity of queries depend heavily on robotic queries.

In this paper, we fill the research gap discussed above via the session-level analysis of real-world organic queries collected from 10 SPARQL endpoints. Specifically, we study the evolvement of structural and data-driven (e.g., result

set size) features in single SPARQL search sessions. We also provide comprehensive insights regarding session-level query reformulations on SPARQL operators, triple patterns, and FILTER constraints. Furthermore, we implement an

application example about the usage of our findings which might be useful to devise more efficient mechanisms for SPARQL query auto-completion, recommendations, caching, etc... Our contributions can be summarized as follows:

We port the concept of sessions to SPARQL queries and give a specification of SPARQL search sessions.

We are the first, to the best of our knowledge, to investigate potential correlations between SPARQL queries and provide a comprehensive analysis of query reformulations in a given search session.

We provide an application example of how our findings can be used to illustrate the potentiality of utilizing user behaviors in a search session.

2 Preliminaries↩︎

This section briefly introduces datasets and the pre-processing we use, followed by a formal definition of the SPARQL search session, as well as structural and data-driven features of SPARQL queries.

2.1 Datasets and Pre-processing↩︎

The difficulties of formulating a SPARQL query depend on the complexity of schema of knowledge graphs. Also, SPARQL queries that are used to query knowledge graphs from different domains have different features. Therefore, we selected 10 LSQ datasets [1] (version \(2\), \(15\) from Bio2RDF [15] and \(3\) others), containing real-world SPARQL queries collected from public SPARQL endpoints of these datasets. The selected datasets include 7/15 diverse datasets from Bio2RDF [15] (a compendium of bioinformatics datasets in RDF), i.e.., NCBI Gene (Ncbigene), National Drug Code Directory (Ndc), Orphanet, Saccharomyces Genome Database (Sgd), Side Effect Resource (Sider), Affymetrix, Gene Ontology Annotation (Goa), and the remaining 3 datasets: DBpedia [16] (extracted from Wikipedia), SWDF [17] (Semantic Web Dog Food about conferences metadata), and LinkedGeoData (LGD) [18] (a spatial RDF dataset).

| Dataset | #Queries | #Executions | Begin time | End time | #Users |

|---|---|---|---|---|---|

| LGD | 651,251/667,856 | 1,586,660/1,607,821 | 2015/11/22 | 2016/11/20 | 26,211 |

| SWDF | 520,740/521,250 | 1,415,438/1,415,993 | 2014/5/15 | 2014/11/12 | 936 |

| DBpedia | 3,001,541/4,196,762 | 3,552,212/6,248,139 | 2015/10/24 | 2016/2/11 | 39,922 |

| Affymetrix | 618,796/630,499 | 1,782,776/1,818,020 | 2013/5/5 | 2015/9/18 | 1,159 |

| Goa | 630,934/638,570 | 2,345,460/2,377,718 | 2013/5/5 | 2015/9/18 | 1,190 |

| Ncbigene | 679,586/689,885 | 1,561,592/1,593,958 | 2014/5/14 | 2015/9/18 | 417 |

| Ndc | 707,579/720,838 | 2,354,808/2,411,232 | 2013/5/16 | 2015/9/18 | 1,286 |

| Sgd | 618,670/630,891 | 1,992,800/2,038,097 | 2013/5/5 | 2015/9/18 | 1,304 |

| Sider | 186,122/187,976 | 677,950/681,247 | 2015/5/31 | 2015/9/18 | 216 |

| Orphanet | 476,603/477,036 | 1,521,797/1,523,459 | 2014/6/11 | 2015/9/18 | 171 |

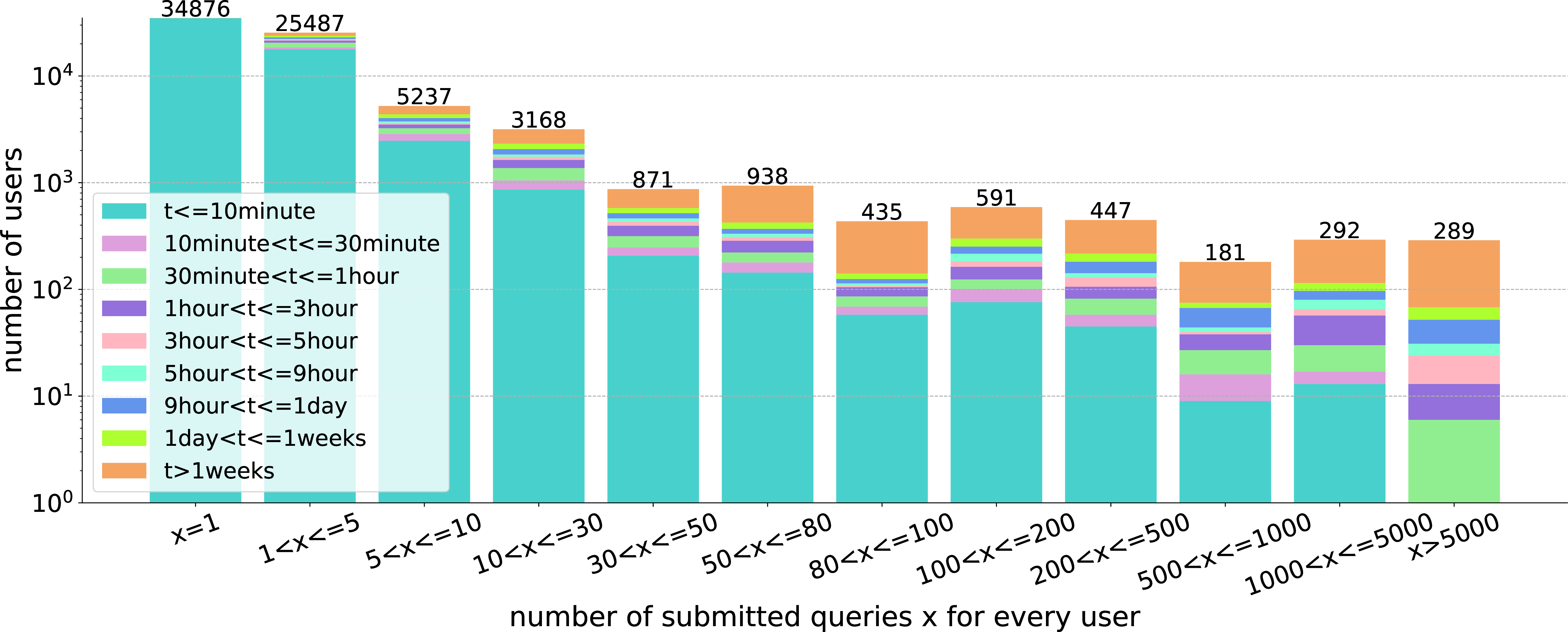

Figure 2: Distribution of the number of submitted queries \(x\) and time-span \(t\) of the submitted queries for each user. The X-axis indicates different intervals of submitted query numbers; The Y-axis indicates the number of users in the interval. Different colors indicate different time-spans.

Tab. 1 gives an overview of the selected datasets in terms of the number of queries (#Queries) and their total number of executions

(#Executions4) executed by different users (#Users) within a time frame. The basic distribution of the number and time-span of the submitted queries

for each user is presented in Fig. 2. This figure shows the existence of robotic queries that are submitted in a short period. These robotic queries do not show clear trends in individual human usage [19] but easily cause analytic biases due to their sheer size. Therefore, we need to remove robotic queries. There are generally three characteristics

that can be used to recognize them: (1) special agent names (e.g.. Java) [14], [19] (2) relatively high query request frequency [14] (3) loop patterns existing in query

sequences submitted by one user [14], where the SPARQL structures remain the same in contiguous queries, while only IRIs,

literals, or variables change. However, due to the privacy policy, agent names are usually unavailable in practice. Therefore, we combine (2) and (3) to design a two-step process: (a) filtering out users who submit queries with a high-request frequency,

i.e.., users who submit more than \(30\) times in a \(30\) minutes sliding window. This threshold is a relatively high frequency in our dataset. We use it to compute the average frequency.

Also, to make sure this is not a rigorous cut-off rule, we supplement the second step: (b) examining every query sequence submitted by one user. Those sequences with loop patterns are excluded. After robotic query removal process, there are \(51,575\) (\(0.64\%\)) likely organic queries and \(67,594\) (\(0.36\%\)) executions in our datasets. These executions come from

\(7,718\) (\(10.60\%\)) users, each having submitted \(8.76\) queries on average.

2.2 Definitions↩︎

Formally, a SPARQL search session \(s = \{Q, R, T\}\) consists of a sequence of queries \(Q = \{q_1,\cdots, q_i,\cdots,q_n\}\), retrieved result sets \(R = \{R_{q_1}, \cdots, R_{q_i}, \cdots, R_{q_n}\}\), and time information \(T = \{T_{q_1},\cdots, T_{q_i}, \cdots, T_{q_n}\}\), where \(n\) is the number of queries in the session (i.e., the session length) and \(i\) indexes the queries. Each result set \(R_{q_i}\) contains all the results of \(q_i\), while each time information \(T_{q_i}\) contains the time stamp and executing runtime of \(q_i\). In practice, a SPARQL search session is recognized by three constraints5: queries in a sequence are (1) executed by one user, which is identified by encrypted IP addresses (2) within a time window of a fixed \(time\_threshold\) (inspired by [4], [20], we set \(time\_threshold\) to 1 hour in this paper). (3) If we define \(term(q)\) (i.e., a term set of one query) as a set which contains all the specified terms (i.e., RDF IRIs) and variables used in the query, then for any pair of contiguous queries \((q_i, q_{i+1})\) in the session, it satisfies \(term(q_i)\cap term(q_{i+1}) \neq \varnothing\). Here, we include variables in the term set \(term(q)\) because our experiments shows that users typically do not change variable names in a session: 91% (27% for 1 variable, 35% for 2 variables, and 29% for more than 2 variables) of continuous query pairs in sessions have at least one variable name in common. Please note that, (3) here is a minimum requirement for sessions, while the one user and 1-hour threshold setting can ensure the topic continuity to a large extent, which can be evaluated by the number of common variable names and high similarity score of IRI terms in Fig. 6 (introduce later). Furthermore, although we acknowledge that there could be other ways to identify sessions, the method we present here is reasonable. Based on these constraints of sessions, we extract \(14,039\) sessions from organic queries in our dataset. The distribution of the organic session length is presented in Fig. 3.

We follow [21]–[24] to define two types of SPARQL query features, i.e.., structural and data-driven features, for the SPARQL session-level analysis.

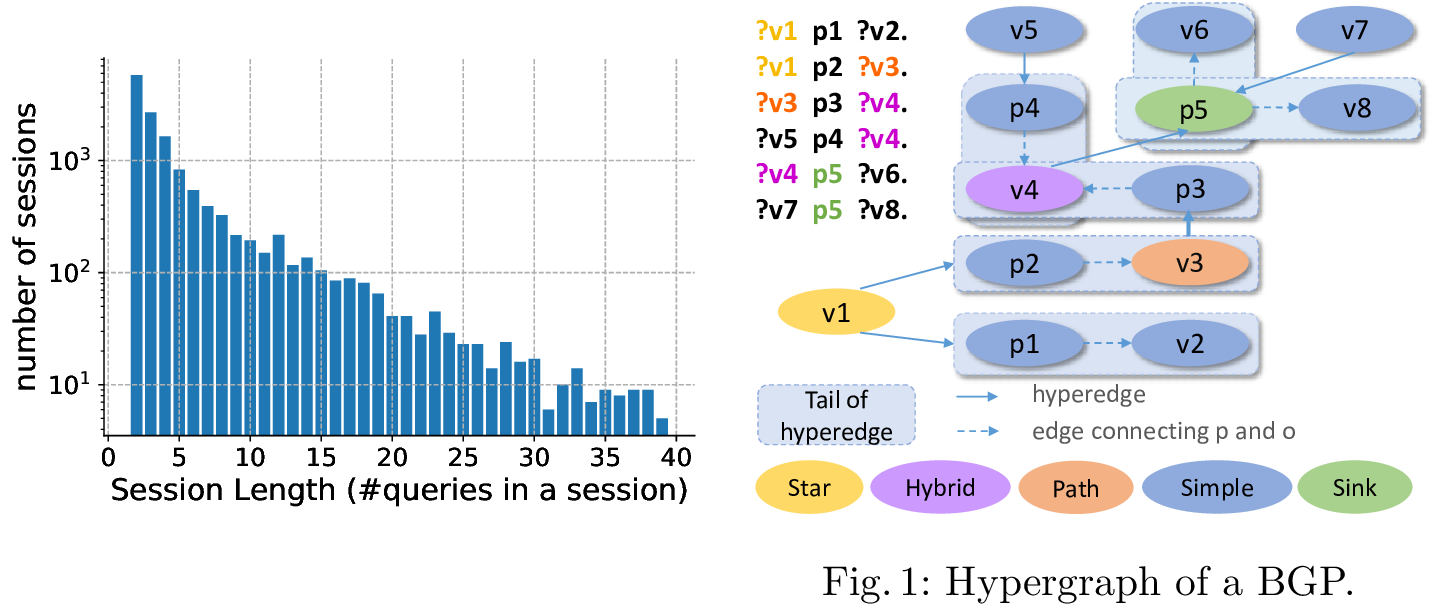

Structural features: The basic graph patterns (BGPs) in SPARQL queries organize a set of triple patterns into different types of structures. We represent each BGP of a SPARQL query as a directed hypergraph to easily compare the structural changes between different queries in a search session. The hypergraph representation [23] contains nodes for all three components of the triple patterns \(<\)s,p,o\(>\). A hyperedge \(e = (s,(p,o)) \in E \subseteq V^3\) connects the head vertex \(s\) and the tail hypervertex \((p, o)\), where \(E\) is the set of all hyperedges and \(V\) is the set of all vertices in the hypergraph. The hypergraph of a complete SPARQL query (consider BGPs only) is the union of hypergraph representations of all BGPs in the query. An example is illustrated in Fig. [fig:graph95pre]. We define the following structural features based on the hypergraph representation.

Figure 3: Organic session length.

The triple pattern count refers to the number of triple patterns in a BGP, which distinguishes between simple and structural complex queries.

The join vertex type is characterized by in-degrees and out-degrees for vertices in BGPs. A star vertex only has (multiple) outgoing edges. A sink vertex only has (multiple) incoming edges. There is only one in-degree and one out-degree for a path vertex. The in-degree (or out-degree) of a hybrid vertex is more than one while out-degree (or in-degree) is at least one.

The join vertex degree indicates the summation of the in-degree and out-degree of a join vertex. For a SPARQL query, we use the minimum, mean, and maximum of join vertex degrees in the query to represent this feature.

The projection variable count is the number of selected variables that form the solution sequences in the

SELECTquery form.The IRI term set is the collection of used IRI terms in a SPARQL query. This feature presents the information that users are interested in.

Data-driven features: We mainly consider the result size, i.e.., \(|R_{q_{i}}|\), the number of solutions for SPARQL queries in this paper. The change of result size (decrease or increase) generally reflects whether users want more specific or more general answers, and as a result, is an important feature to capture the drifting query intentions.

3 Query Changes in SPARQL Search Session↩︎

User search behaviors, represented by changes over queries, are the key to understand user intentions. In this section, we investigate query changes based on the aforementioned SPARQL query features from two aspects: (1) the evolvement of query changes (Sec. 3.1); (2) detailed reformulation strategies (Sec. [subsec:op]). Please note that due to the space limitation, we only provide individual dataset-level results when different datasets show very different results. More rudimentary dataset analysis are provided in [1].

3.1 Evolvement of Query Changes↩︎

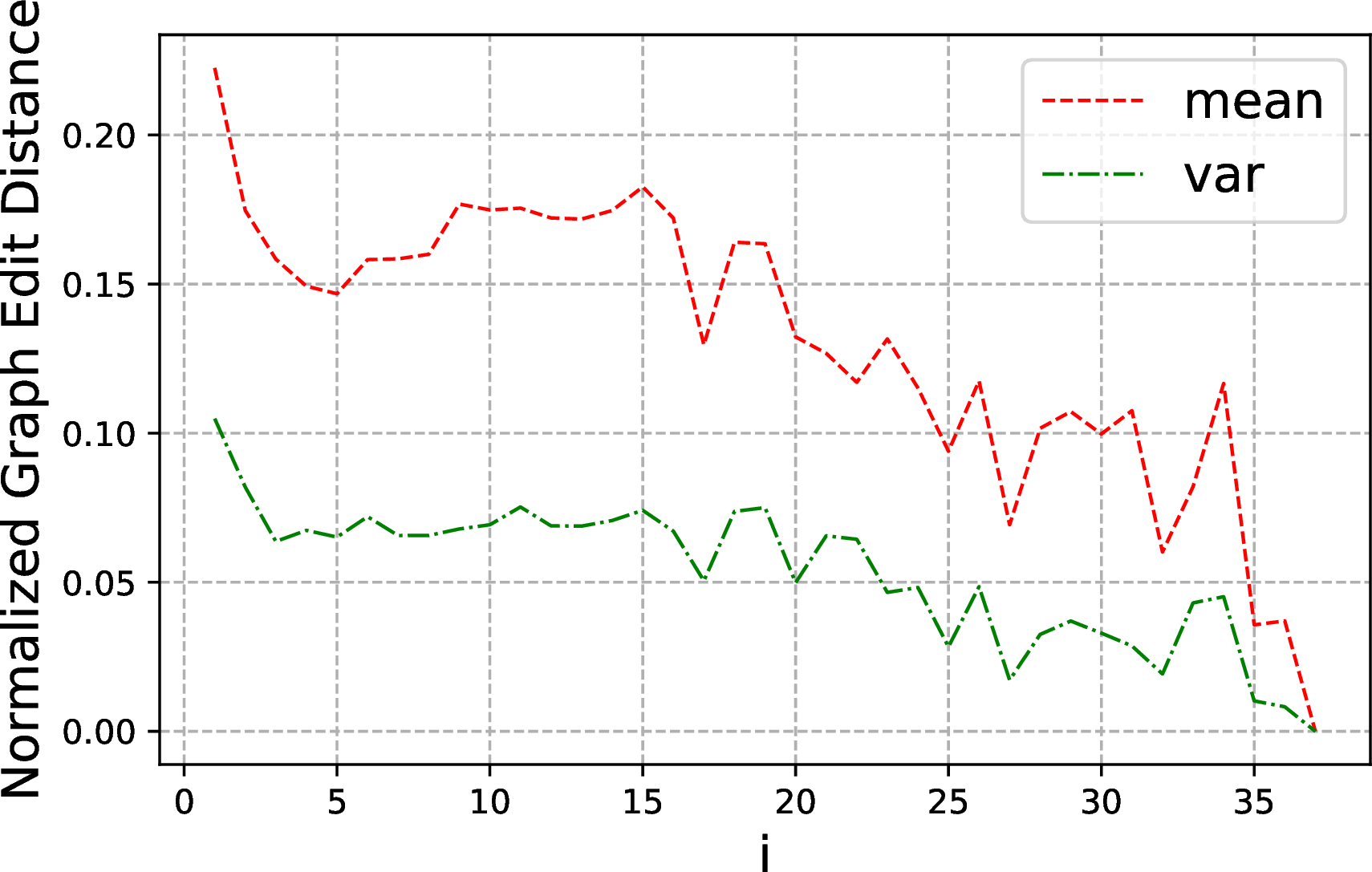

We study the query evolvement in terms of three structural aspects (i.e.., graph edit distance, graph pattern similarity, and IRI term similarity), as well as one data-driven aspect, i.e.., changes of result size.

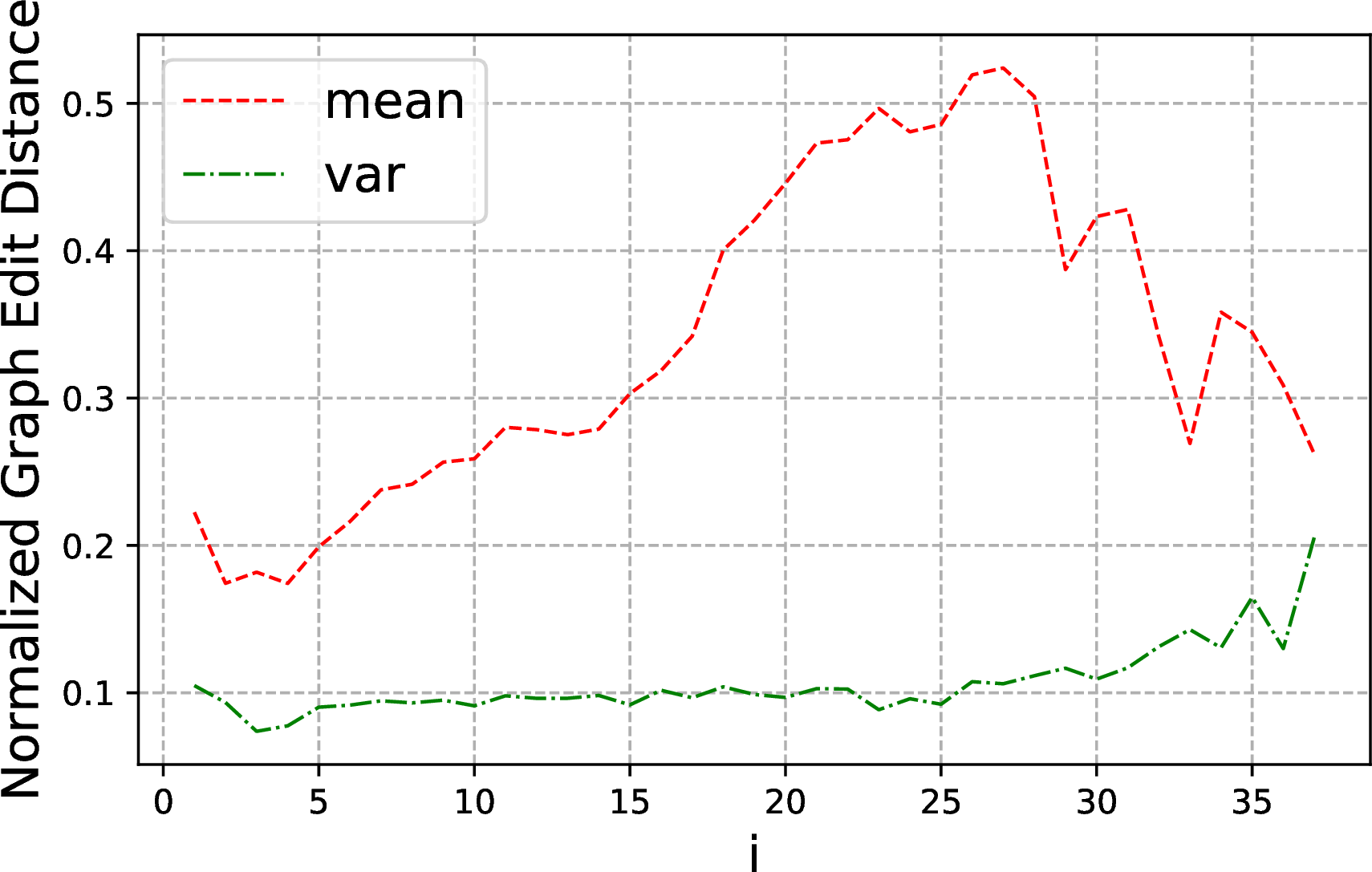

Graph Edit Distance (GED): Given a query sequence \(Q\) of a session \(s\), we represent each BGP of queries as a directed hypergraph and utilize the normalized GED to measure differences between hypergraphs. The GED between two hypergraphs is normalized by dividing the maximum of the number of edges and vertices in two hypergraphs. On this basis, the GED between two queries is accessed by the average of GEDs between hypergraphs in different operator blocks of the two queries. A GED numeric value ranges from \(0\) (no change), to \(1\) (complete change), indicating the degree of changes between two queries. We conduct two types of comparisons: (1) GED between two contiguous queries, i.e.., \((q_i, q_{i+1})\), (2) GED between the initial query and the other query in a given session, i.e.., \((q_1,q_{i+1})\). Consider the sequence \(\{q_1, q_2, q_3\}\) as an example: we calculate GED values of (1) \((q_1,q_2)\),\((q_2,q_3)\), and (2) \((q_1,q_2)\),\((q_1,q_3)\). The average and variance of GEDs (given by mean and var respectively) in single search sessions on our 10 datasets6 are presented in Fig. 4.

GED for \((q_i, q_{i+1})\)

GED for \((q_1, q_{i+1})\)

Figure 4: Evolvement of GED of \(Q\) in sessions. X-axis shows the query index ” i"..

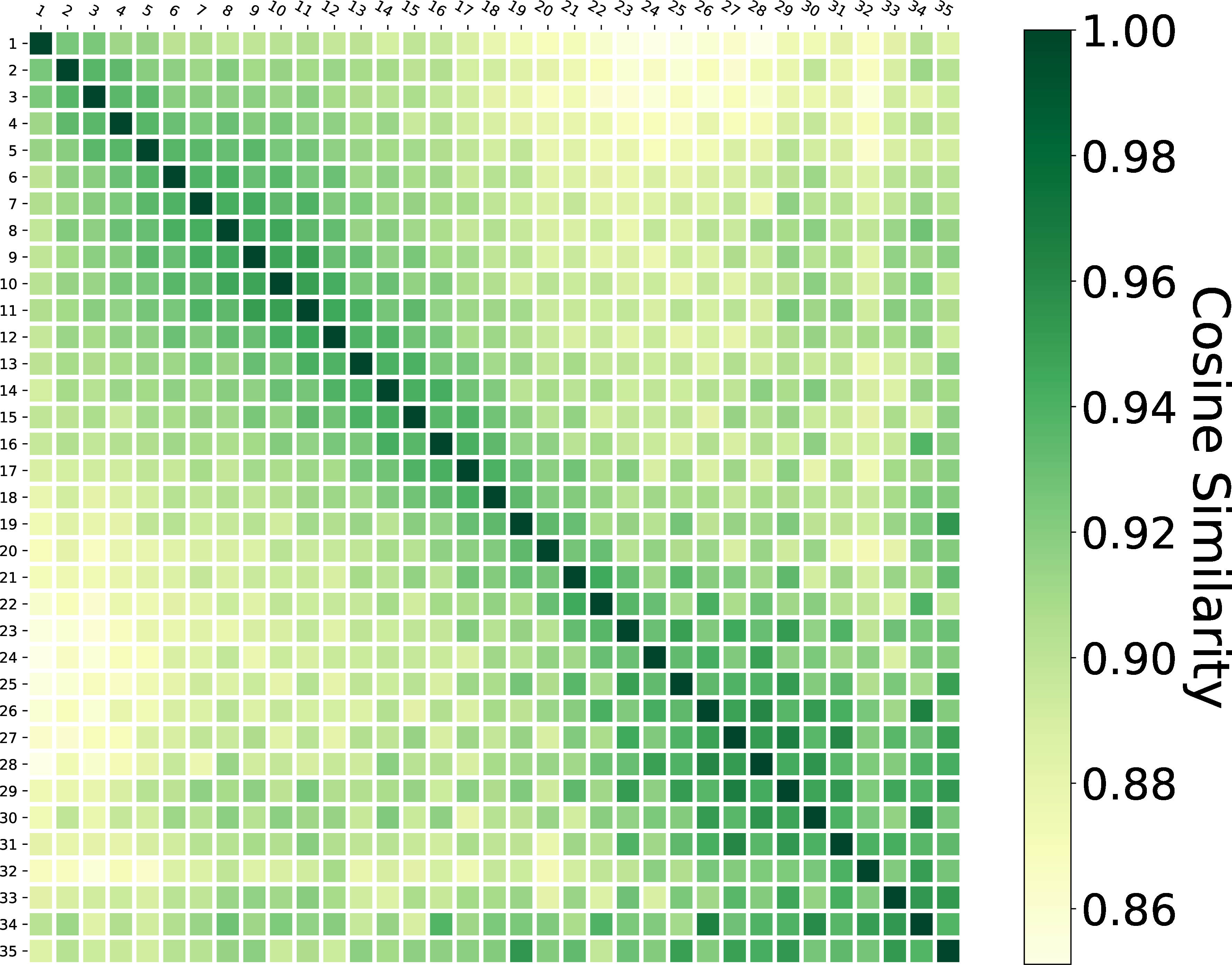

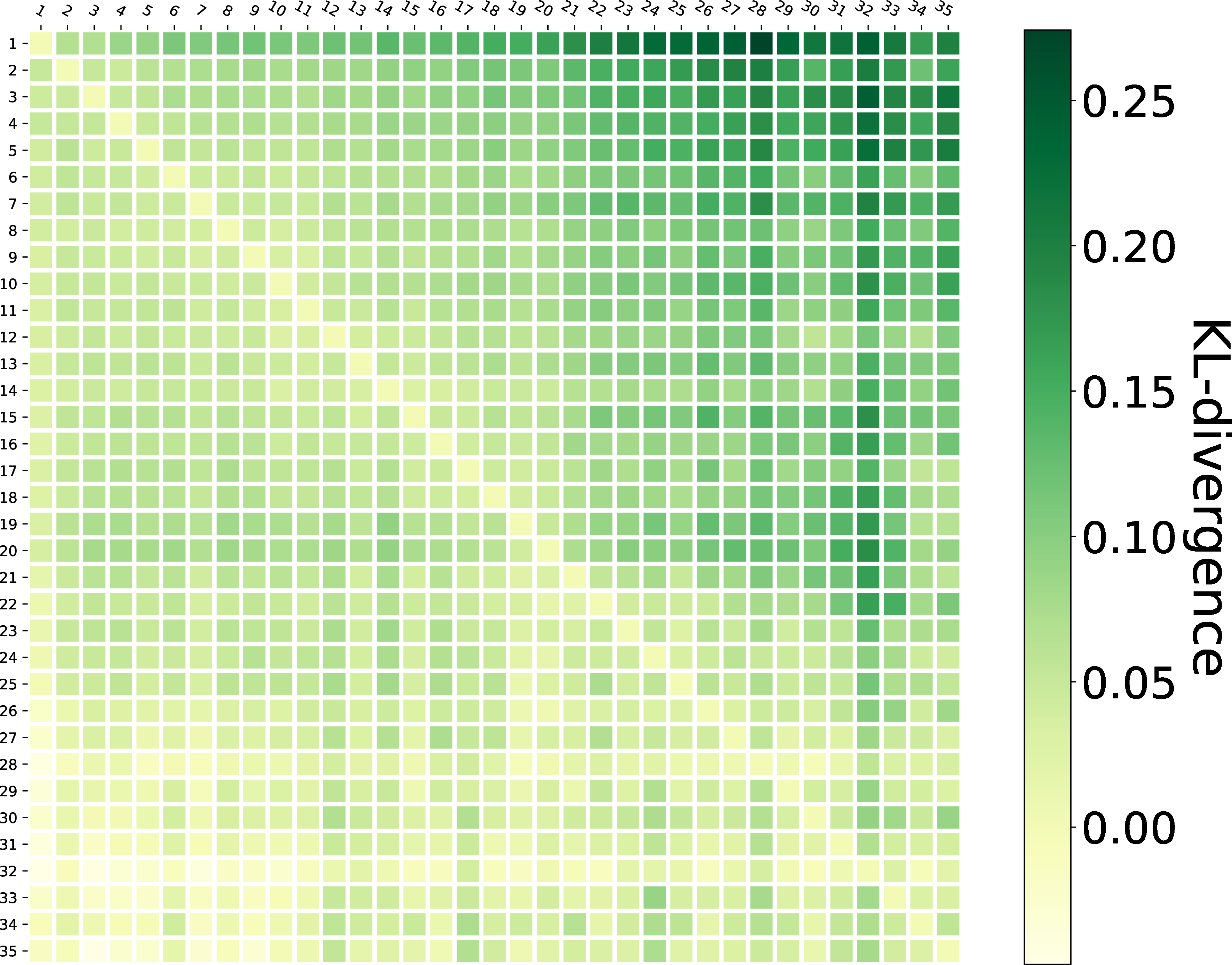

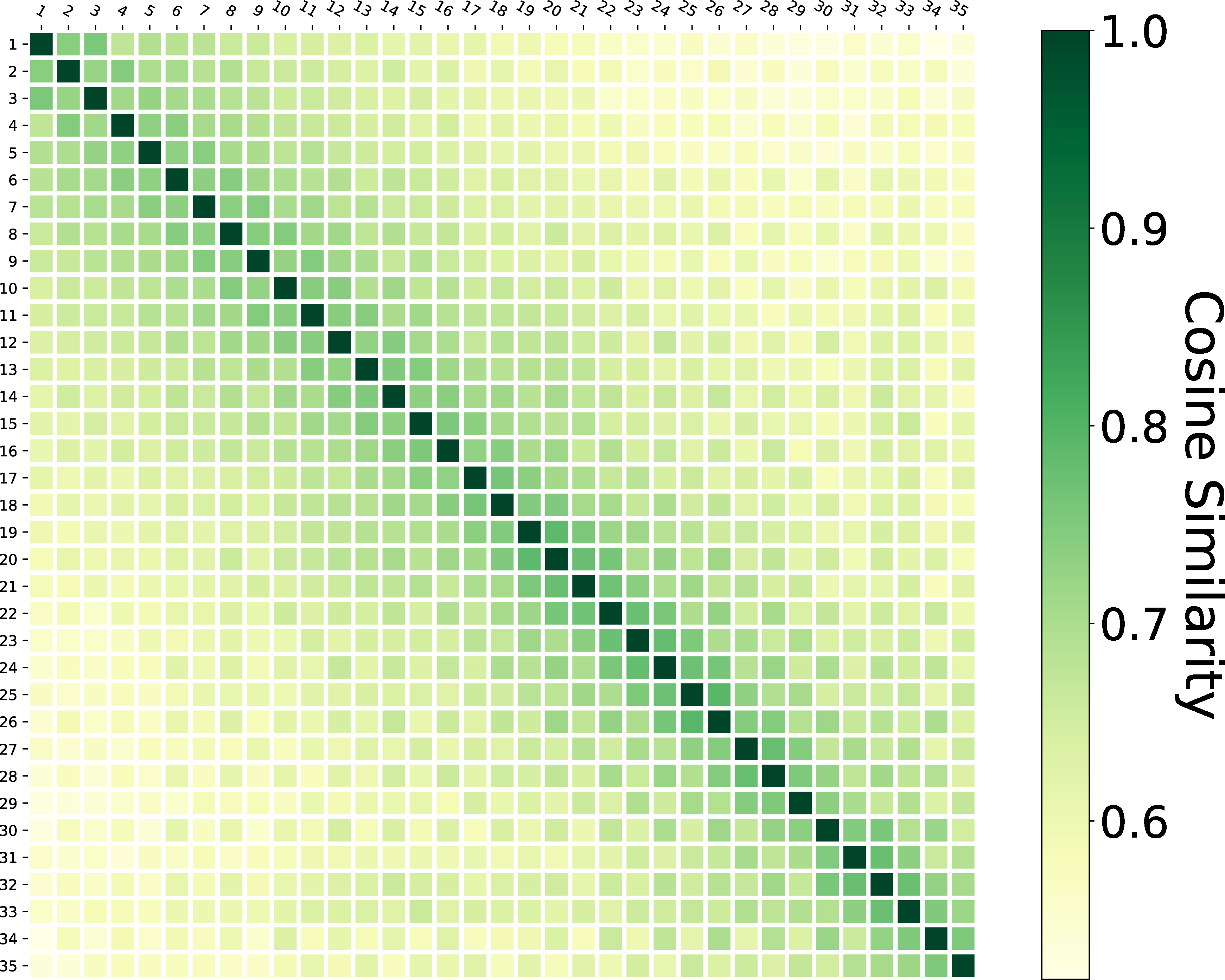

Cosine similarity

KL divergence

Figure 5: Graph pattern similarity of query sequence \(Q\) in sessions..

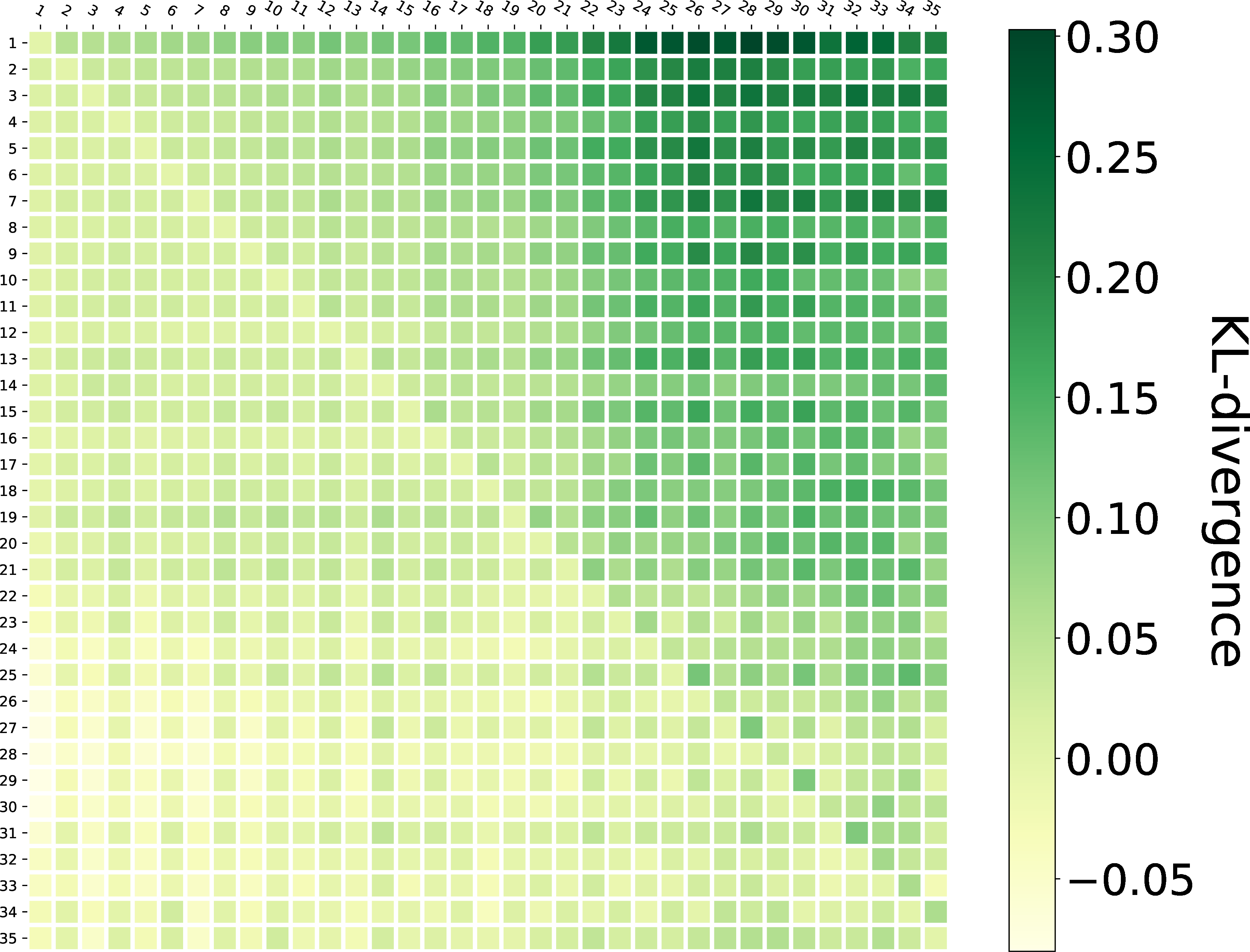

Graph Pattern Similarity: Based on [22], [23], we select the following \(10\) structural features to form a feature vector78: \[\label{equ:featureVec} \begin{align} V\;=\;[\;&\#triplePatterns,\;\#BGP,\;\#Projection,\;\#Sink Join Vertex, \\ &\#Star Join Vertex,\;\#Hybrid Join Vertex,\;\#Path Join Vertex, \\ & MaxJoinDegree,\;MinJoinDegree,\;MeanJoinDegree\;]. \end{align}\tag{1}\]

For a query sequence \(Q=\{q_1, q_2\cdots q_n\}\) in a single search session \(s\), we initialize vectors \(\{\mathbf{V_{q_1}, V_{q_2}} \cdots \mathbf{V_{q_n}} \}\) according to Equation. 1 . Then, we normalize every item (\(k\) indexes the item) in a vector by

\[\mathbf{\hat{V}_{q_i} } (k) = \frac{\mathbf{V_{q_i}} (k)}{\max_{j=1,\cdots,n} \mathbf{V_{q_j}} (k)}.\]

We use two metrics to measure graph pattern similarity between two queries: (1) cosine distance, which is a symmetric measurement defined as \(Cosine(\mathbf{\hat{V}_{q_1}} , \mathbf{\hat{V}_{q_2}} ) = Cosine( \mathbf{\hat{V}_{q_2}} , \mathbf{\hat{V}_{q_1}} )\) and performed by: \[\label{equ:cos} Cosine( \mathbf{\hat{V}_{q_1}} , \mathbf{\hat{V}_{q_2}} ) = \frac{\mathbf{\hat{V}_{q_1}}\cdot\mathbf{\hat{V}_{q_2}}}{ \left\Vert \mathbf{\hat{V}_{q_1}} \right\Vert \left\Vert \mathbf{\hat{V}_{q_2}} \right\Vert}\tag{2}\] (2) KL divergence, which is asymmetric, and performed by: \[\label{equ:kl} D_{KL}( \mathbf{\hat{V}_{q_1}} || \mathbf{\hat{V}_{q_2}} ) = \sum \mathbf{\hat{V}_{q_1}}(k) \log \frac{ \mathbf{\hat{V}_{q_1}} (k)}{ \mathbf{\hat{V}_{q_2}} (k) }.\tag{3}\]

Cosine similarity between two vectors ranges from \(0\) (complete change) to \(1\) (constant). Original KL divergence ranges from \(0\) (constant) to \(+\infty\). The \(+\infty\) is caused by zeros in denominators. To prevent this problem, we only calculate \(D_{KL}( \mathbf{\hat{V}_{q_1}} || \mathbf{\hat{V}_{q_2}} )\) when \(\mathbf{\hat{V}_{q_1}} (k) \neq 0\;and\;\mathbf{\hat{V}_{q_2}} (k) \neq 0\) here. The minus, zero, and positive result of \(D_{KL}(\mathbf{\hat{V}_{q_1}} || \mathbf{\hat{V}_{q_2}} )\) indicate that distribution of \(\mathbf{\hat{V}_{q_2}}\) is more concentrated than, equal to, or more scattered than distribution of \(\mathbf{\hat{V}_{q_1}}\), respectively. Results are presented in Fig. 5 as a \(m\times m\) matrix \(M\), where \(m\) is the longest length of sessions. The rows and columns of \(M\) are indexed by the query \(q_i\) in a session. \(M_{ij}\) in \(M\) presents the average cosine similarity (or KL divergence) between vectors of \(q_i\) and \(q_j\) in single search sessions, i.e.., \(\mathbf{\hat{V}_{q_i}}\) and \(\mathbf{\hat{V}_{q_j}}\).

IRI Term Similarity: We construct a query-term matrix \(D\) which is a \(x\times y\) matrix for every dataset. \(x\) is the number of queries and \(y\) is the number of terms used in queries of a certain dataset. The rows of \(D\) are indexed by queries \(q_i\) and columns are indexed by terms \(t_j\). \(D_{ij}\) in this matrix represents whether the term \(t_j\) is used in query \(q_i\). If \(t_j\) is used, \(D_{ij}\) is \(1\). If not, \(D_{ij}\) is \(0\). Query \(q_i\) can be represented by a vector \(\mathbf{V_{q_i}}\) which is constituted by row \(i\) in this query-term matrix \(D\). The vector \(\mathbf{V_{q_i}}\) indicates the IRI term distribution in \(q_i\)9. Then, we use cosine similarity and KL divergence to visualize the evolvement of IRI term similarity in single search sessions, as shown in Fig. 6. Please note that the analyses and visualizations of Fig. 4, 5, 6 are based on raw data. Lengths of sessions are not normalized. The motivation is to find different user behaviors by session position, which is normally used in log analysis of web searching fields.

Cosine similarity

KL divergence

Figure 6: IRI term similarity of query sequence \(Q\) in sessions..

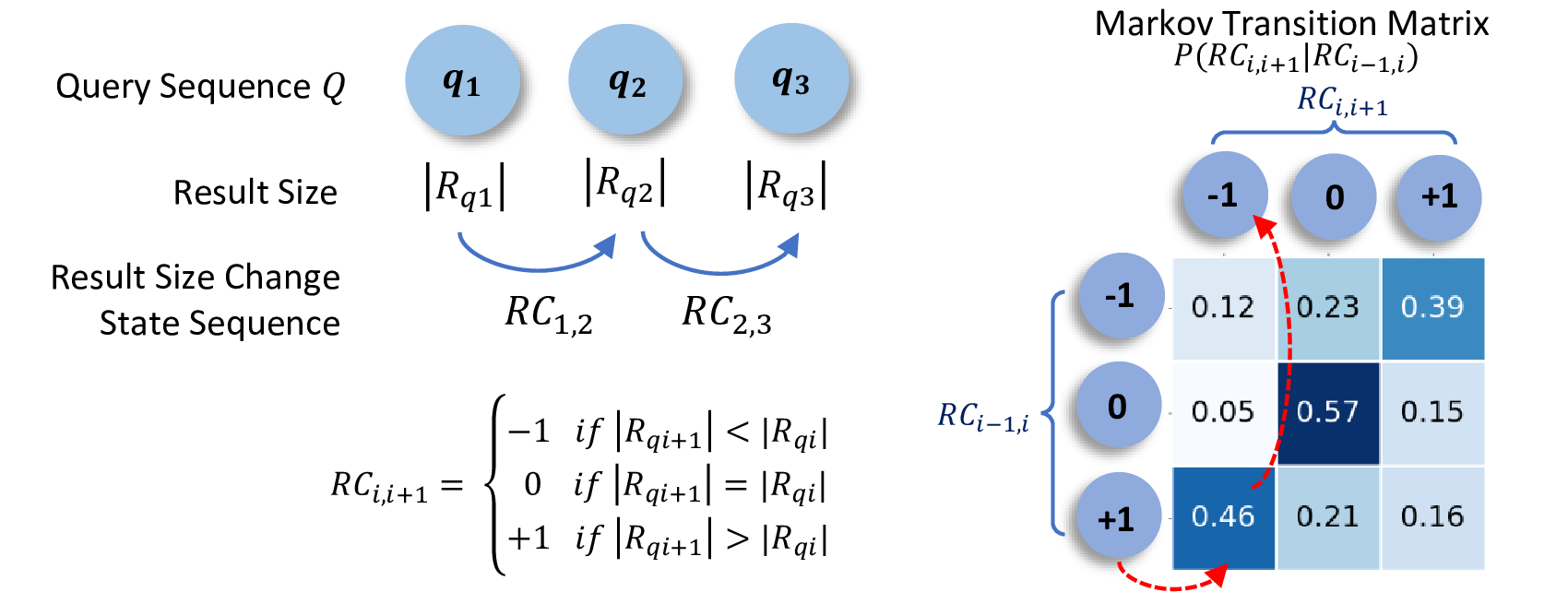

Change of Result Size: For the data-driven feature, we investigate the transition probability between three result size change states, i.e.., decrease, remain unchanged, and increase10, presented as \(-1\), \(0\), \(+1\) respectively. For a single query sequence \(Q=\{q_1,q_2,\cdots,q_{n-1},q_n\}\), we generate a result size change state sequence \(\{RC_{1,2},\cdots,RC_{n-1,n}\}\) which is then used to calculate the Markov transition matrix between three types of \(RC\) states, i.e.., \(-1,0,\) and \(+1\). A Markov transition matrix is a square matrix describing the probabilities of transferring from one state to another. The state transition probability is formulated by \(P(RC_{i,i+1}|RC_{i-1,i}) = \frac{\#(RC_{i-1,i}, RC_{i,i+1})}{\#RC_{i-1,i}}\). The state transition probabilities in single search sessions on our \(10\) datasets are shown in Fig. 7. The probability of transferring from \(RC_{i-1,i}\) to \(RC_{i,i+1}\) can be determined by the intersections of the corresponding row and column in the Markov transition matrix. For example, as shown in Fig. 7, the number \(0.46\) in row \(+1\), column \(-1\) indicates the probability of moving from a result size increase state to a decrease state, i.e.., \(P(-1|+1)\), is \(0.46\).

Figure 7: Markov transition matrix of result size change states.

Key Findings: The above results allow us to make the following observations.

The GEDs between \((q_i, q_{i+1})\) decrease gradually in a session (as shown in Fig. 4 (a)), which indicates that query change between two contiguous queries is increasingly indistinct as users getting closer to their information needs. Interestingly, the GEDs between \((q_1, q_{i+1})\) increase consistently at first, then decrease (Fig. 4 (b)). Combining with key findings.3 (below), this suggests that users may use prior query structures to explore other related information.

The graph patterns of queries in the same session are broadly similar, as illustrated by the \(0.78 \sim 1\) cosine similarity and \(-0.07 \sim 0.46\) KL divergence in Fig. 5. This indicates that users usually change the structure of graph patterns slightly in a SPARQL search session. The GEDs in Fig. 4 show this conclusion as well.

The IRI term similarity is less and less similar, as shown in Fig. 6. In more detail, the distribution of IRI terms used in queries is more scattered, which indicates that users tend to include more IRI terms and express a clearer intention as the session moves forward. However, please note that IRI terms are not getting entirely different considering high numerical values (cosine similarity (\(0.5 \sim 1.0\)) and kl-divergence (up to \(0.30\)) in Fig. 6).

The result size changes indicate that previous result size change does influence the intention of the current query: if the number of results is increased (decreased) in the last query, then users tend to make their current queries more specific (general). On the other hand, the same number of results (mostly zero results) indicates the unfamiliarity of underlying data for users, which may lead to an additional iteration of zero results.

3.2 Query Reformulations in Single SPARQL Sessions↩︎

We explore different types of reformulations over query sequences in single SPARQL search sessions as discussed in detail below. Please note that we have not considered semantically equivalent rewritings for now. We only track user reformulations and try to find valuable findings about user behaviours.

Reformulations of SPARQL Operators: We first investigate reformulations in terms of operators for contiguous query pairs. The usage of operators generally reflects the query intent. For

instance, the addition of Distinct may occur when a user checks answers with many duplicates. For SPARQL query forms (i.e., SELECT, CONSTRUCT, ASK, DESCRIBE), only \(2.51\%\) SPARQL query pairs

have such changes. Among these changes, the most common one (\(49.26\%\) relative to the number of changes on query forms) is between SELECT and CONSTRUCT. This indicates that users first check the

underlying data in knowledge graphs by SELECT, then construct a graph using CONSTRUCT. The second most frequent change (\(22.60\%\)) of the query form is between SELECT and

ASK. In this scenario, users may issue an ASK query to examine whether a specific solution exists, followed by the SELECT query to get the desired results. Tab. 2 shows the percentage-wise distribution of the different operators in terms of their usage (i.e., presence of an operator) and reformulations (addition or removal) in the query logs. Please note that removals and

additions are relative to the total usage of the operators in the query logs. The results show that a majority of the selected operators are frequently reformulated in the query logs. Reformulations of Triple Patterns:

Given a contiguous query pair \((q_i, q_{i+1})\) in a search session, there are three formulations possible pertaining to the triple patterns used in the

query pair: (1) new triple pattern(s) is added, (2) existing triple pattern(s) is deleted, (3) some changes (substitutions) are made in the individual elements (subject, predicate, object) of the triple patterns. In this section, we show

reformulations of triple patterns within different SPARQL operator blocks (e.g., Union, Optional) and the substitutions made on different join vertex types and their connecting edges and nodes.

| Operator | Total-usage | Removals | Additions | Operator | Total-usage | Removals | Additions |

|---|---|---|---|---|---|---|---|

| Filter | 64.67 | 11.13 | 10.99 | Count | 2.64 | 38.06 | 31.00 |

| Union | 23.42 | 9.63 | 9.56 | Sample | 0.17 | 27.19 | 32.46 |

| Optional | 15.14 | 18.62 | 19.02 | GroupConcat | 0.10 | 23.19 | 20.29 |

| Graph | 1.71 | 25.33 | 26.45 | Sum | 0.03 | 50.00 | 50.00 |

| Bind | 0.98 | 24.55 | 25.00 | Min | 0.06 | 12.50 | 15.00 |

| Minus | 0.37 | 52.63 | 42.91 | Max | 0.02 | 45.45 | 27.27 |

| Service | 0.33 | 17.49 | 21.08 | Avg | 0.02 | 14.29 | 28.57 |

| Values | \(<\)0.01 | 0 | 100 | Distinct | 50.63 | 4.08 | 3.73 |

| SeqPath | 2.22 | 4.20 | 5.93 | Limit | 21.84 | 13.58 | 14.00 |

| MulPath | 0.67 | 11.62 | 12.06 | OrderBy | 7.97 | 25.83 | 24.29 |

| AltPath | 0.30 | 7.43 | 26.73 | Offset | 2.60 | 16.06 | 21.58 |

| InvPath | 0.05 | 14.29 | 8.57 | GroupBy | 1.35 | 23.85 | 26.04 |

| Projection | 67.77 | 3.29 | 3.34 | Having | 0.22 | 18.00 | 26.67 |

(1) Operator Block-wise Reformulations: There are \(78.24\%\) pairs of contiguous queries that show changes in triple patterns. We list the triple pattern reformulations that occur in the top-\(6\) most frequent SPARQL operator blocks in Tab. 3. In addition, we show reformulations in the Main block which does

not contain any of the operator blocks. An example of the Main block is the body of the SPARQL SELECT query, which only contains a set of triple patterns as the BGP. The triple patterns in the Graph Template operator

block represent the graph templates used in the CONSTRUCT queries. The combined substitutions represent the made-in-one or more-than-one elements of the triple patterns. The percentages reported in this table are relative to the total

usage of certain operator blocks in query logs. Note that the reformulations on triple patterns are only considered when the corresponding operator block exists both in \(q_i\) and \(q_{i+1}\). The additions and removals of operators are not included in Tab 3.

The results suggest that reformulations are very common in different operator blocks. In most operator blocks, substitutions are more frequent than additions and removals. This indicates that users first make changes in the elements of the existing triple patterns, rather than inserting or deleting new triple patterns. Substitutions happening in subject, predicate and object are mostly evenly distributed.

0.44em

| Main | Template | Union | Optional | Service | Graph | Subquery | |

|---|---|---|---|---|---|---|---|

| Addition | 21.92 | 42.00 | 7.79 | 4.30 | 6.87 | 4.21 | 79.95 |

| Removal | 21.28 | 26.33 | 8.30 | 3.00 | 7.63 | 3.31 | 80.06 |

| Combined Substitution | 61.10 | 55.92 | 43.16 | 17.76 | 9.16 | 8.93 | 1.21 |

| Subject substitution | 37.97 | 28.95 | 49.81 | 40.41 | 54.55 | 18.66 | 73.88 |

| Predicate substitution | 28.06 | 36.44 | 18.49 | 9.56 | 18.18 | 36.27 | 5.22 |

| Object substitution | 33.97 | 34.61 | 31.70 | 50.02 | 27.27 | 45.07 | 20.90 |

| Ncbigene | Ndc | Orphanet | Sgd | Sider | Affymetrix | Goa | SWDF | LGD | DBpedia | ||

|---|---|---|---|---|---|---|---|---|---|---|---|

| center | 20.51 | 12.50 | 11.40 | 1.98 | 20.37 | 2.08 | 1.96 | 6.93 | 28.48 | 29.38 | |

| neigh edges | 3.20 | 2.37 | 3.35 | 11.44 | 0.77 | 3.75 | 2.88 | 3.77 | 8.63 | 7.11 | |

| Star | neigh nodes | 12.32 | 9.34 | 14.13 | 13.14 | 35.00 | 20.13 | 18.86 | 6.40 | 9.38 | 12.67 |

| center | 5.26 | 5.88 | 3.70 | 8.68 | 45.75 | 2.94 | 0.64 | 7.41 | 0.64 | 15.52 | |

| neigh edges | 0 | 5.88 | 0 | 4.25 | 0.08 | 22.73 | 0 | 4.00 | 0.27 | 11.96 | |

| Sink | neigh nodes | 19.51 | 10.73 | 22.22 | 51.73 | 11.18 | 65.24 | 61.83 | 6.62 | 26.06 | 22.12 |

| center | 26.67 | 22.81 | 21.43 | 6.19 | 6.28 | 17.13 | 24.53 | 7.91 | 29.04 | 27.28 | |

| Path | neigh edges | 1.67 | 5.26 | 7.14 | 13.84 | 0.88 | 7.69 | 8.49 | 4.73 | 14.31 | 5.42 |

| center | 18.75 | 0 | 0 | 90.88 | 13.04 | 87.53 | 68.84 | 11.51 | 43.92 | 45.54 | |

| in edges | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.66 | 0.80 | 15.19 | |

| in nodes | 0 | 0 | 0 | 37.15 | 2.94 | 36.06 | 28.70 | 5.81 | 18.73 | 24.41 | |

| out edges | 23.81 | 19.23 | 30.00 | 0.38 | 0 | 0.18 | 1.27 | 1.12 | 0.27 | 5.72 | |

| Hybrid | out nodes | 47.62 | 41.67 | 60.00 | 2.62 | 16.83 | 1.68 | 7.84 | 4.45 | 18.31 | 13.66 |

(2) Substitutions on Different Join Vertex Types and Neighbors: To further study user preferences on substitutions, we investigate the elements in hypergraphs of the queries in which substitutions appear most frequently. To this end, we consider the join vertex (the center), the direct subjects and objects this vertex connects to (neighbor nodes), and the direct predicates this vertex connects to (neighbor edges). Also, for hybrid vertices, we divide their neighbors into incoming and outgoing types. The distribution of substitutions on different positions is shown in Tab. 4. These percentages are relative to the occurrence of join vertex types and the neighbors of centers. We use red to indicate the highest value in each column. The results indicate that most substitutions happen on incoming nodes of the hybrid vertex. This is because the hybrid node has the highest connectivity with other nodes in the query hypergraph. As such, it is likely to be changed more frequently by the users.

Figure 8: Example of corresponding elements of two FILTER constraints.

| Dataset | Ncbigene | Ndc | Orphanet | Sgd | Sider | Affymetrix | Goa | SWDF | LGD | DBpedia |

|---|---|---|---|---|---|---|---|---|---|---|

| Block Subs | 0 | 20 | 8 | 121 | 3 | 94 | 7 | 34 | 11,787 | 3,155 |

| Specific Subs | 4 | 69 | 52 | 67 | 32 | 97 | 17 | 137 | 5,017 | 5,702 |

| variable | 25.00 | 24.64 | 21.15 | 17.91 | 0 | 39.18 | 11.76 | 19.71 | 3.41 | 21.43 |

| IRI | 0 | 59.42 | 5.77 | 74.63 | 0 | 20.62 | 35.29 | 8.03 | 1.14 | 30.90 |

| string | 75.00 | 15.94 | 73.08 | 7.46 | 100 | 38.14 | 29.41 | 64.96 | 3.37 | 28.20 |

| number | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.73 | 90.53 | 7.33 |

Reformulations of FILTER Constraints: A constraint, expressed by the keyword FILTER, is a restriction on solutions over the whole group in which the FILTER appears11. Similar to reformulations of triple patterns, there are three reformulations for FILTER constraints: addition(s), removal(s), and substitutions on elements of FILTER constraints.

But unlike the triple pattern, which has three elements, a FILTER constraint can have different elements that are used inside its body. Therefore, we express FILTER constraints as parse trees (see Fig.8). On this basis, we compare two parse trees of FILTER constraints in contiguous queries, i.e.., \(q_i\) and \(q_{i+1}\), and find changes in

corresponding elements (substitutions). For example, the elements with the same patterns in Fig. 8 are compared. Please note that corresponding elements are not only between single values, i.e.., specific

substitutions, which can be compared directly, but also between one single value and another constraint function or between constraint functions i.e.., block substitutions. The Arg2 in Fig. 8 is an

example of block substitution.

Among all the contiguous query pairs in the same session, \(37.68\%\) have reformulations about FILTER constraints. Again, the majority of the reformulations (\(92.17\%\))

are substitutions. We present distributions of block and specific substitutions in different datasets in Tab. 5. The first two rows present

the number of block and specific substitutions. For specific substitutions, we also list the percentage of specific substitutions on different data types: variable, IRI, string, and number. Substitutions on

FILTER constraints show very different distributions in different datasets, especially in the LGD. The LGD is a knowledge graph that contains spatial datasets [18]. Geometry data types and functions embedded in GEOSPARQL [25] are used to satisfy the needs for representing and

retrieving spatially related data. Functions like intersects, overlaps involve many numeric calculations, which makes numbers-specific substitutions more dominant (\(90.53\%\)) compared to other

substitutions. Furthermore, FILTER constraints in the LGD are more complex than other datasets: the number of block substitutions is twice the number of specific substitutions. For other datasets, substitutions usually happen

in strings, which serve as an argument in functions of strings.

Figure 9: HMM model of the SPARQL search session.

4 An Application Example of Findings↩︎

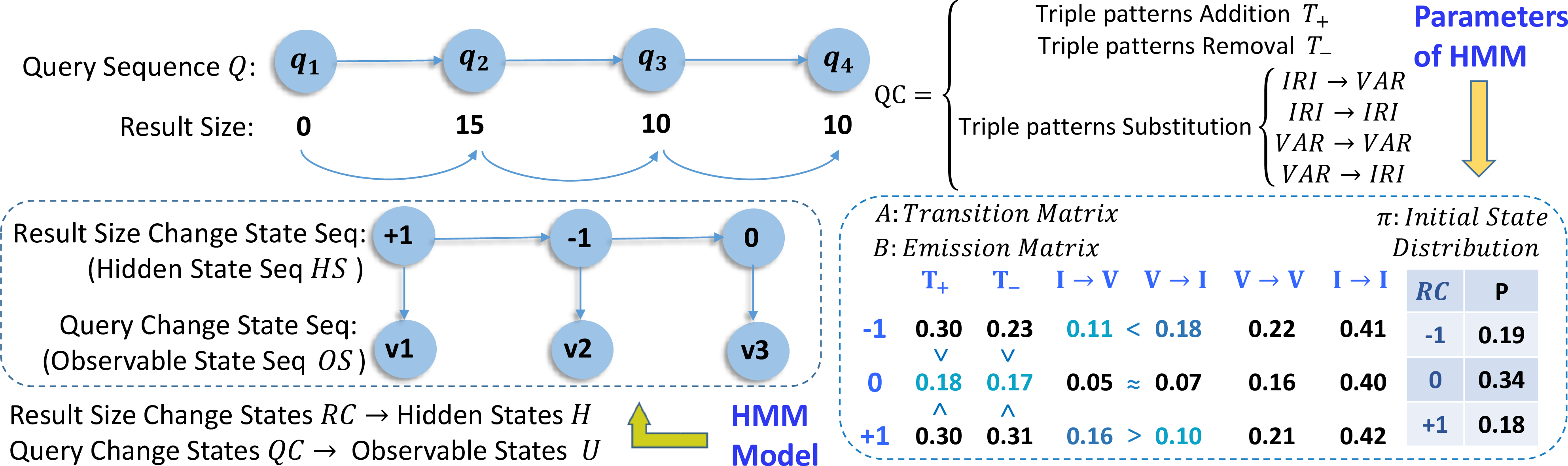

To show the potentiality of our findings, we use a simple model to predict user intentions of a given session and give reformulation suggestions based on the predicted intention. In single sessions, continuous query reformulations can be captured, while the drifting user intentions are abstract and can not be observed directly. We utilize a Hidden Markov Model (HMM) to characterize this process, and model the intention sequence as an unobservable sequence, the reformulation sequence as an observable sequence. We assume that \(H\)=\(\{h_1, h_2 \cdots h_e\}\) is the set of hidden states and \(U\)=\(\{u_1, u_2 \cdots u_f\}\) is the set of observable states (\(e\) and \(f\) are the number of corresponding states), while \(HS\)=\((hs_1, hs_2\cdots hs_t)\) and \(OS\)=\((os_1, os_2\cdots os_t)\) are the sequence of hidden states and observable states, respectively. We use result size changes to model drifting intentions, i.e.., the hidden states \(H\), and consider changes in triple patterns as observable states \(U\). We employ the maximum likelihood estimation to calculate parameters of the HMM model, i.e.., initial state distribution \(\pi\), transition probability matrix \(A\) for hidden states, and the emission probability matrix \(B\). The matrix \(A\) is the Markov transition matrix in Fig. 7. The details and parameters of the model are presented in Fig. 9. In summary, our model is capable of (1) inferring user intentions based on the observable query change sequence, i.e.., a decoding problem: given \(\lambda\)=\((A,B,\pi)\) and \(OS\)=\((os_1, os_2 \cdots os_t)\), calculate a sequence \(HS\)=\((hs_1, hs_2 \cdots hs_t)\) that maximizes \(P(HS|OS)\); (2) suggesting reformulation strategies by the possibility of subsequent reformulation strategies, i.e.., an evaluation problem: given parameters of HMM \(\lambda\)=\((A,B,\pi)\), calculate \(p(OS|\lambda)\).

Figure 10: A session (only BGPs without prefixes) from SWDF log dataset.

Case Study: We use a session shown in Fig. 10 to illustrate how our model works. In this session, the user use \(6\) queries in total to find the locations of the conferenceEvent. By recommending adding a generic triple pattern to impose a restriction on the variable \(a\) first, then making it specific according to the retrieved results, we assist this user omitting a few inefficient reformulations.

Discussions: Here are some possible directions to update the model: (1) More comprehensive ways to model states in HMM. (2) Consider correlations between queries in query sequences, not just correlations of contiguous query pairs. (3) Combination with techniques based on the underlying data.

5 Related Work↩︎

SPARQL Query Log Analysis in Session Level: Previous SPARQL query log analyses have given a comprehensive analysis of SPARQL queries in terms of structural features in isolation, such as the most frequent patterns [10], topological structures [13] and the join vertex types [11] etc... However, the analysis of potential correlations between queries is limited. Raghuveer et al.. [14] first define the query sequence executed by the same user as a SPARQL user session. On this basis, they introduce the important feature of robotic queries, the loop patterns, to describe the repetitive patterns of sessions. Bonifati et al.. [13] illustrate the similarity of queries in a query sequence as per the distribution of the length of so-called streaks. However, the similarity property introduced in these studies [13], [14] applies more to robotic queries from which organic queries are not separated. Bonifati et al.. [26] analyze robotic and organic queries separately, but not at the session level. In this paper, we seek a more specific definition of the search session, and comprehensively analyze the evolvements of structure and data-driven features of the organic queries in sessions.

Applications based on Session-Level Analysis: The similarity property proposed by previous researchers [13], [14] has been utilized in query augmentation [4] to retrieve more related results. Lorey et al.. [4] focus on retrieving SPARQL queries with high similarity with current queries, but fail to capture the drifting intentions behind queries in single search sessions. Utilizing explicit user feedback directly, Lehmann et al.. [9] use active learning to determine different reformulation strategies, which is similar to the example we conduct in this study. However, explicit feedback is usually unavailable in most search scenarios. We utilize reformulations between queries as implicit user feedback.

SPARQL Similarity: Different SPARQL similarity measures have been used in this paper to capture the query changes in sessions. Dividino et al.. [27] classify SPARQL similarity measures into \(4\) categories: (1) query structure, where SPARQL queries are expressed as strings or graph structures; (2) query content, where triple patterns are expressed as strings, ontological terms, or numerical values; (3) query languages, based on operators usages; (4) result sets. Dividino et al.. [27] also argue that the choice of different measurements is dependent on the application at hand. In our work, we consider all these dimensions separately and conduct a comprehensive analysis of query changes in the sessions.

6 Conclusions↩︎

This paper reveals secrets of user search behaviors in SPARQL search sessions by investigating \(10\) real-world SPARQL query logs. Specifically, we analyze the evolvement of query changes, w.r.t. structural and

data-driven features of SPARQL queries in sessions, and reach a series of novel findings. We thoroughly investigate reformulations in terms of SPARQL operators, triple patterns, and FILTER constraints. Furthermore, we provide an

application example about the usage of our findings. We hope results presented here will serve as a basis for future SPARQL caching, auto-completion, suggestion, and relaxation techniques.

Acknowledgements↩︎

This work has been supported by by National Natural Science Foundation of China with Grant Nos. 61906037 and U1736204; the German Federal Ministry for Economic Affairs and Energy (BMWi) within the project RAKI under the grant no 01MD19012D, by the German Federal Ministry of Education and Research (BMBF) within the project DAIKIRI under the grant no 01IS19085B, and by the EU H2020 Marie Skłodowska-Curie project KnowGraphs under the grant agreement no 860801; National Key Research and Development Program of China with Grant Nos. 2018YFC0830201 and 2017YFB1002801; the Fundamental Research Funds for the Central Universities.

References↩︎

Code and data are available at: https://github.com/seu-kse/SparqlSession↩︎

Corresponding author↩︎

https://sparqles.ai.wu.ac.at/availability, accessed on 2025-01-08.↩︎

A query can be executed multiple times on the same dataset.↩︎

We remove the queries with parse errors and the contiguous same queries before the recognition. For example, \(q_1, q_2, q_2, q_3\) is processed into \(q_1, q_2, q_3\).↩︎

We use randomly selected sessions in DBpedia because the GED computation on such large-scale data is NP-hard and time-consuming.↩︎

We append a \(1\) to vectors to avoid all-zero vectors.↩︎

Features in this vector can also be extended to more dimensions or features.↩︎

The query representation method can be replaced by other distributed representations such as trained embedding. We do not use embedding here because training embeddings for \(10\) datasets is highly resource and time-consuming.↩︎

We eliminate the processing error state here.↩︎